a3e823b97814da6a62c87ac36f279dfb.ppt

- Number of slides: 19

ZFS and NetBackup ● Chris Street, TSYS Europe ● “Dedupe for misers”

W3 ● What – disk-based deduplicated storage pools ● Where – remote offices, test labs, invariant data ● Why – PureDisk and appliances cost money

ZFS ● File system from Solaris ● Robust self healing storage ● Disk level encryption ● Disk level compression ● Deduplication

ZFS ● OpenZFS project is bringing the filesystem to Linux ● Most mature and robust implementations are on BSD ● FreeNAS provides a useful appliance offering ZFS, iSCSI, CIFS and NFS targets

Benefits of ZFS ● Actively assumes discs are failing ● All writes are checksummed to ensure they are written correctly ● Offers useful data management tools ● FreeNAS can perform as a small-scale SAN despite its name

Security ● Discs are encrypted on the fly – poor performance unless processor with AES extensions is used ● Keys are stored on the boot drive! ● It is intended to protect the disc if it is RMA'd without being wiped. ● Will not protect against system theft.

Performance ● A small commodity server will accept data at gigabit speed ● A server with 32 GB RAM and 8 spindles will take 2 gigabits (bonded) while running dedupe or compression ● Don't use RAID-Z as write performance is horrifically slow ● Mirrored stripes are much faster and just as secure

Performance 2 ● FreeNAS performance is heavily dependent on RAM ● Disc writes to async targets are not committed immediately ● Disc writes to sync targets are, using the ZFS Intent Log (ZIL) ● This harms performance considerably ● Poorly deduping data is very slow

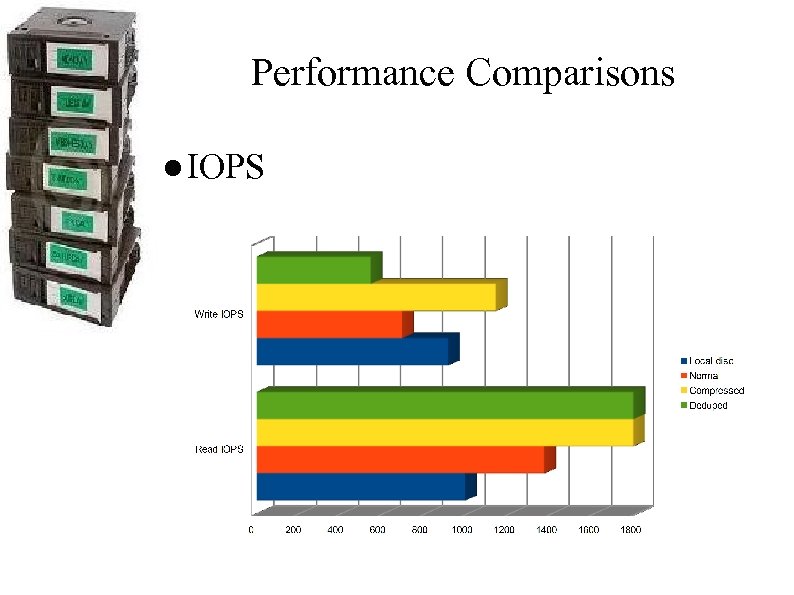

Performance Comparisons ● IOPS

Performance Comparisons ● Transfer Speeds MB/sec

RAM management ● ZFS uses most of its RAM to provide the adaptive replacement cache (ARC), which replaces the LRU ● Second-level caching occurs in dedicated SSD ZIL drives if fitted ● For bulk writing this is not the preferred solution

Forget reliability ● Data integrity is not the same as reliability ● If a system fails during the backup, so what? Rerun the backup ● Use async targets and provide a UPS which enforces shutdown on low battery ● Remember – data committed to disc is safe and uncorrupted

Dedupe ● Memory demand is huge – the ARC and the dedupe tables compete for RAM ● Once ARC drops to 25% of RAM, dedupe tables swap to disc and performance suffers immensely ● 20 GB of RAM for the first TB of physical dedupe space, plus 5 GB for each extra TB ● Have multiple small dedupe zvols
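The RAM rule of thumb on this slide can be turned into a quick sizing helper. This is a minimal sketch, not a ZFS tool: the function name `dedupe_ram_gb` is hypothetical, and treating any pool of 1 TB or less as needing the full 20 GB is an assumption the slide does not state.

```python
def dedupe_ram_gb(physical_tb: float) -> float:
    """Estimate RAM for dedupe using the slide's rule of thumb:
    20 GB for the first TB of physical dedupe space, plus 5 GB per extra TB.

    Assumption (not from the slide): anything up to 1 TB still needs 20 GB.
    """
    if physical_tb <= 0:
        return 0.0
    extra_tb = max(physical_tb - 1.0, 0.0)
    return 20.0 + 5.0 * extra_tb


if __name__ == "__main__":
    for tb in (1, 2, 4, 8):
        print(f"{tb} TB physical dedupe space: about {dedupe_ram_gb(tb):.0f} GB RAM")
```

For a 4 TB pool the rule gives 35 GB, slightly under the 40 GB quoted on the Cost slide.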

Real world ● User data is good – 80-90% reduction is not uncommon ● Repeated full database backups can also be good candidates (75-96%) ● Lab environments where small incremental tweaks are made ● Backups of many systems on the same OS

Compression ● Choice of LZJB or GZIP ● Much less demanding on RAM ● More demanding on processor ● Can be nearly as good as dedupe – experiment first ● Easier to recover if moved to system with less RAM ● Robust and mature enough for main
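One way to "experiment first" is to measure how well a sample of the data compresses before committing to a setting. The sketch below uses Python's `zlib` module (DEFLATE, the same algorithm family as GZIP) as a rough proxy. The function name `reduction_percent` and the sample data are illustrative assumptions, and ZFS compresses per record, so real pool figures will differ.

```python
import os
import zlib


def reduction_percent(data: bytes, level: int = 6) -> float:
    """Return the space saved by DEFLATE compression, as a percentage.

    A negative value means the data grew (typical for random or
    already-compressed input).
    """
    if not data:
        return 0.0
    compressed = zlib.compress(data, level)
    return 100.0 * (1.0 - len(compressed) / len(data))


if __name__ == "__main__":
    # Hypothetical samples: highly repetitive text versus random bytes.
    repetitive = b"backup block of invariant data\n" * 4096
    random_like = os.urandom(len(repetitive))
    print(f"repetitive sample: {reduction_percent(repetitive):.1f}% saved")
    print(f"random sample:     {reduction_percent(random_like):.1f}% saved")
```

In practice the samples would be read from representative backup files rather than generated in memory.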

Cost ● RAM cost vs disc cost ● For a 4 TB system, 40 GB RAM needed ● Assuming 80% dedupe rates, 40 GB RAM “buys” 20 TB of storage ● 40 TB (mirrored) disc lists at £1652 ● 40 GB ECC RAM + 8 TB disc lists at £660 ● Data that offers 75% reduction with
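The arithmetic behind this slide can be checked with a short sketch. The prices and the 4 TB / 80% figures come from the slide; the function and constant names are hypothetical, and the formula (logical capacity = physical capacity / (1 - reduction)) is an assumption about how the 20 TB figure was derived.

```python
# List prices quoted on the slide (GBP).
PLAIN_MIRRORED_COST_GBP = 1652  # 40 TB of mirrored disc, no dedupe
DEDUPE_SYSTEM_COST_GBP = 660    # 40 GB ECC RAM + 8 TB of disc


def logical_capacity_tb(physical_tb: float, reduction: float) -> float:
    """Logical data that fits in physical_tb at a given reduction (0 <= r < 1)."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return physical_tb / (1.0 - reduction)


def mirrored_raw_tb(usable_tb: float) -> float:
    """Raw disc needed to hold usable_tb on two-way mirrors."""
    return 2.0 * usable_tb


if __name__ == "__main__":
    physical_tb = 4.0
    for reduction in (0.75, 0.80):
        logical = logical_capacity_tb(physical_tb, reduction)
        print(f"{reduction:.0%} reduction: {physical_tb:.0f} TB physical holds "
              f"{logical:.0f} TB logical; without dedupe that needs "
              f"{mirrored_raw_tb(logical):.0f} TB of mirrored disc")
    saving = PLAIN_MIRRORED_COST_GBP - DEDUPE_SYSTEM_COST_GBP
    print(f"Saving at list price: GBP {saving} "
          f"({saving / PLAIN_MIRRORED_COST_GBP:.0%} of the plain-disc cost)")
```

At 80% reduction the numbers match the slide: 4 TB of physical space (8 TB of mirrored disc) stands in for 20 TB of logical data (40 TB mirrored).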

Conclusion ● Probably not suited to mainstream backups yet ● A niche solution where more risk can be tolerated for the lower cost ● Ideal to replace remote automated tape libraries in smaller offices ● Very useful in lab or development

Questions?
