f863cb1114f57d1f117a5bd294093f0d.ppt

- Number of slides: 67

Zettabyte File System (File System Married to Volume Manager) Dusan Baljevic Sydney, Australia

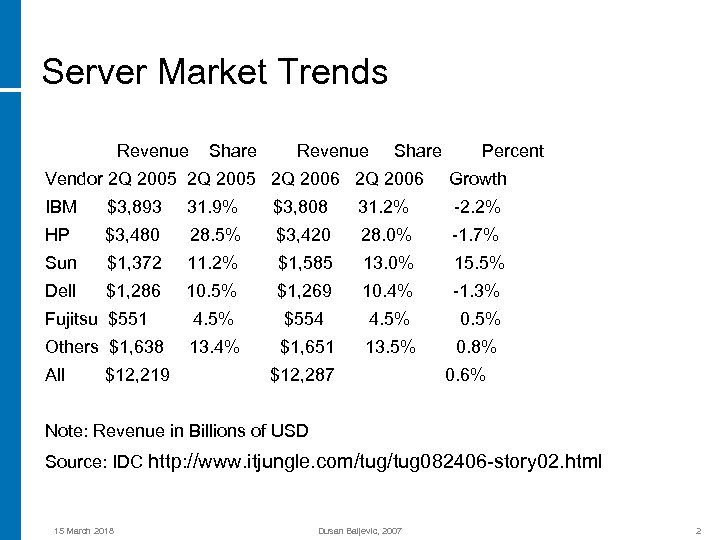

Server Market Trends

| Vendor | 2Q 2005 revenue | 2Q 2005 share | 2Q 2006 revenue | 2Q 2006 share | Growth |
|---|---|---|---|---|---|
| IBM | $3,893 | 31.9% | $3,808 | 31.2% | -2.2% |
| HP | $3,480 | 28.5% | $3,420 | 28.0% | -1.7% |
| Sun | $1,372 | 11.2% | $1,585 | 13.0% | 15.5% |
| Dell | $1,286 | 10.5% | $1,269 | 10.4% | -1.3% |
| Fujitsu | $551 | 4.5% | $554 | 4.5% | 0.5% |
| Others | $1,638 | 13.4% | $1,651 | 13.5% | 0.8% |
| All | $12,219 | | $12,287 | | 0.6% |

Note: revenue in millions of USD. Source: IDC, http://www.itjungle.com/tug082406-story02.html

15 March 2018 Dusan Baljevic, 2007 2

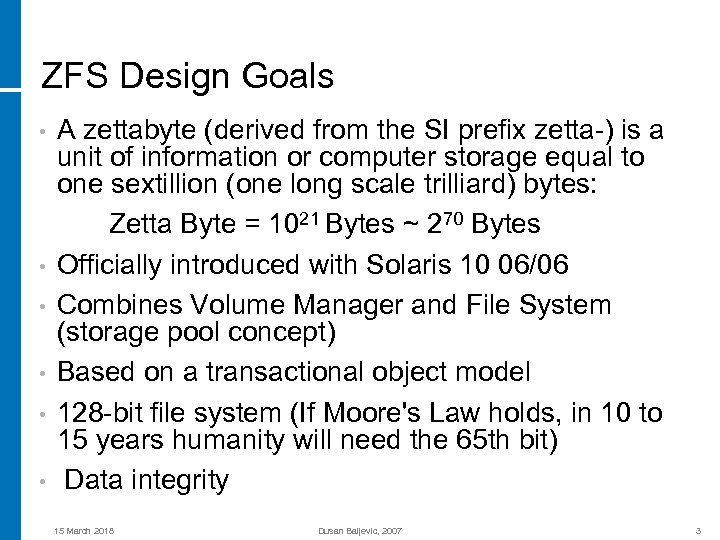

ZFS Design Goals

- A zettabyte (derived from the SI prefix zetta-) is a unit of information or computer storage equal to one sextillion (long scale: one trilliard) bytes: 1 zettabyte = $10^{21}$ bytes ≈ $2^{70}$ bytes
- Officially introduced with Solaris 10 06/06
- Combines volume manager and file system (storage pool concept)
- Based on a transactional object model
- 128-bit file system (if Moore's Law holds, in 10 to 15 years humanity will need the 65th bit)
- Data integrity

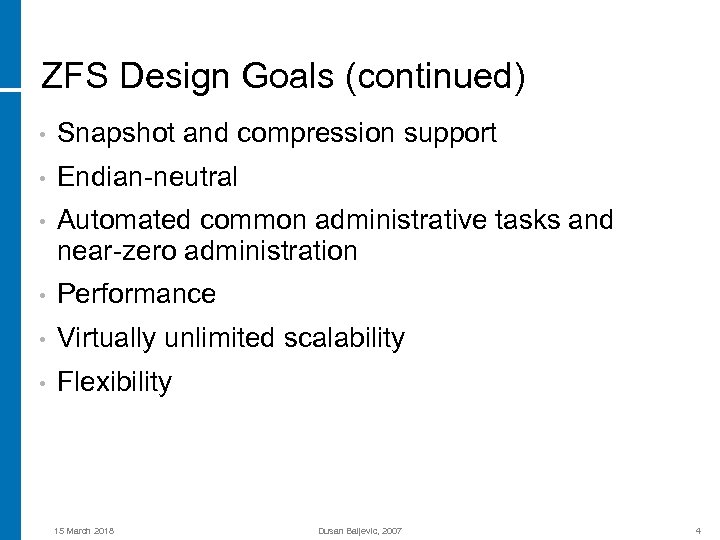

ZFS Design Goals (continued)

- Snapshot and compression support
- Endian-neutral
- Automated common administrative tasks and near-zero administration
- Performance
- Virtually unlimited scalability
- Flexibility

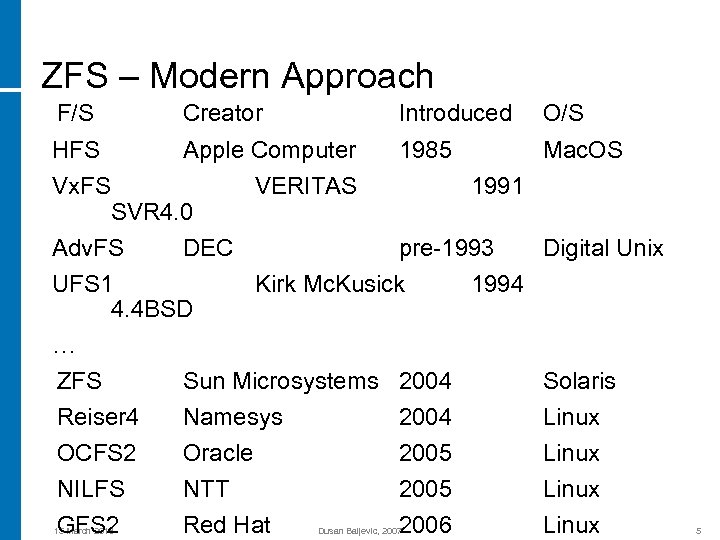

ZFS – Modern Approach

| F/S | Creator | Introduced | O/S |
|---|---|---|---|
| HFS | Apple Computer | 1985 | Mac OS |
| VxFS | VERITAS | 1991 | SVR4.0 |
| AdvFS | DEC | pre-1993 | Digital Unix |
| UFS1 | Kirk McKusick | 1994 | 4.4BSD |
| … | | | |
| ZFS | Sun Microsystems | 2004 | Solaris |
| Reiser4 | Namesys | 2004 | Linux |
| OCFS2 | Oracle | 2005 | Linux |
| NILFS | NTT | 2005 | Linux |
| GFS2 | Red Hat | 2006 | Linux |

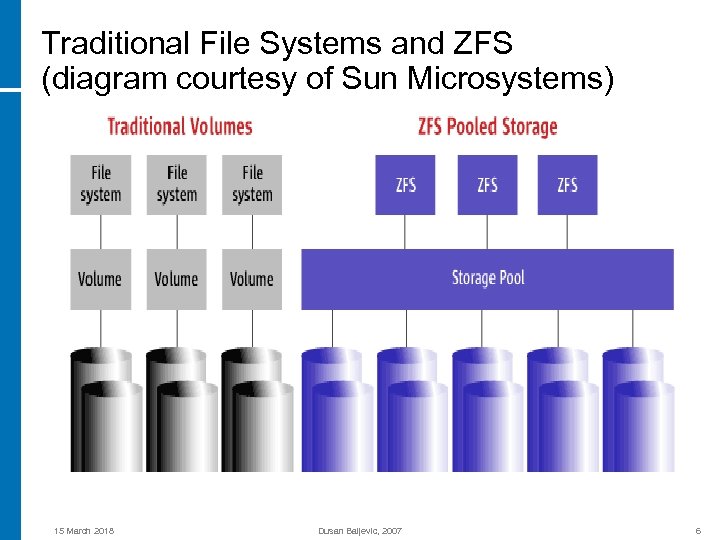

Traditional File Systems and ZFS (diagram courtesy of Sun Microsystems)

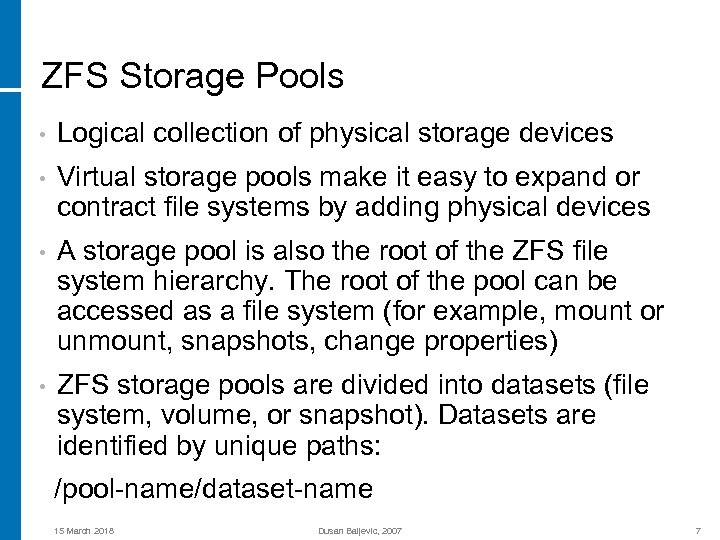

ZFS Storage Pools

- A storage pool is a logical collection of physical storage devices
- Virtual storage pools make it easy to grow file systems: add physical devices to the pool, and the new space becomes available to every file system in it. File systems also grow and shrink within the pool's shared space without being resized individually
- A storage pool is also the root of the ZFS file system hierarchy. The root of the pool can be accessed as a file system (for example, mounted or unmounted, snapshotted, or given different properties)
- ZFS storage pools are divided into datasets (file systems, volumes, or snapshots). Datasets are identified by unique names of the form pool-name/dataset-name; file systems are mounted by default at /pool-name/dataset-name

ZFS Administration Tools

- The standard command-line tools are `zpool` and `zfs`
- Most tasks can also be run through a web interface at https://hostname:6789/zfs (the ZFS Administration GUI and the SAM FS Manager use the same underlying web console packages). Prerequisite: start and enable the web console with `/usr/sbin/smcwebserver start` and `/usr/sbin/smcwebserver enable`
- The `zdb` (ZFS debugger) command is intended for support engineers

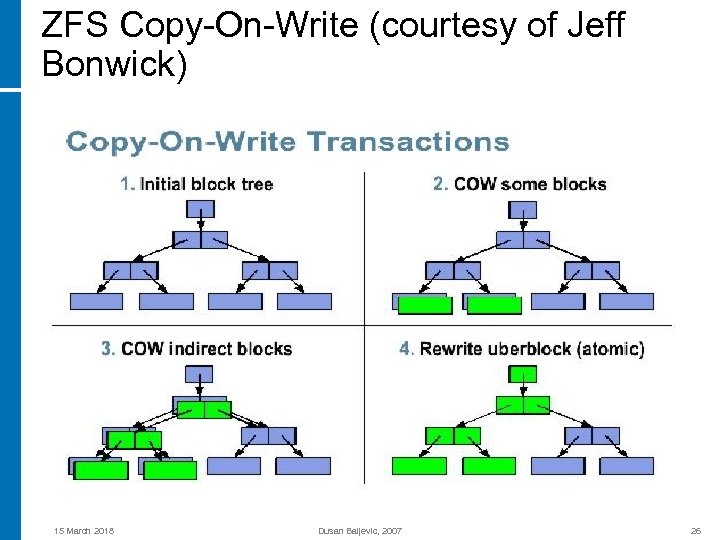

ZFS Transactional Object Model

- All operations are copy-on-write (COW): live data is never overwritten
- ZFS writes new data to a new block before changing the data pointers and committing the write. Copy-on-write provides several benefits:
  - Always-valid on-disk state
  - Consistent, reliable backups
  - Data rollback to a known point in time
- Time-consuming recovery procedures such as fsck are not required after an unclean shutdown

ZFS Snapshot versus Clone

- A snapshot is a read-only copy of a file system (or volume) that initially consumes no additional space. It cannot be mounted as a file system. ZFS snapshots are immutable. This feature is critical for supporting legal compliance requirements, such as Sarbanes-Oxley, where businesses have to demonstrate that the view of the data at a given point in time is correct
- A clone is a writable "snapshot". It can only be created from a snapshot, and it can be mounted
- Snapshot properties are inherited at creation time and cannot be changed

ZFS Data Integrity

- Sun describes Solaris 10 with ZFS as the only operating system designed to provide end-to-end checksums for all data
- All data is protected by 256-bit checksums
- ZFS verifies the checksum of every block it reads, and `zpool scrub` verifies every block in a pool. If it detects an error in a mirrored or RAID-Z pool, it automatically repairs the corrupt data from a good copy

ZFS Data Integrity (continued)

- Checksums are stored in the parent (indirect) block that points to the data, not in the data block itself
- The result is a self-validating, self-authenticating checksum tree
- Detects phantom writes, misdirected reads and writes, and common administrative errors (for example, swapping on an active ZFS disk)
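The checksum tree described above can be illustrated with a small shell toy. It is only a sketch under stated assumptions: POSIX `cksum` (a CRC) stands in for the Fletcher or SHA-256 checksums ZFS actually uses, there is a single level of indirection, and the function names are hypothetical. It shows why storing a block's checksum in its parent works: a damaged block no longer matches what the parent recorded, and any change below propagates up to the root checksum.

```shell
#!/bin/bash
# Toy two-level checksum tree. Assumption: POSIX cksum (a CRC) stands in
# for the Fletcher or SHA-256 checksums ZFS really uses; the function
# names are hypothetical.

# Checksum of one block (a string), printed as a decimal number.
block_sum() {
    printf '%s' "$1" | cksum | awk '{ print $1 }'
}

# An indirect block stores the checksums of its children, one per line.
indirect_block() {
    for child in "$@"; do
        block_sum "$child"
    done
}

# The root checksum is computed over the indirect block, so it covers
# every data block underneath it.
root_sum() {
    block_sum "$(indirect_block "$@")"
}

# Check one data block against the checksum stored in its parent.
verify_block() {
    data=$1
    expected=$2
    if [ "$(block_sum "$data")" = "$expected" ]; then
        echo "ok"
    else
        echo "checksum mismatch"
    fi
}
```

For example, `verify_block "blk" "$(block_sum "blk")"` prints `ok`, while passing a block with one character changed prints `checksum mismatch`; likewise `root_sum a b c` and `root_sum a X c` give different root checksums.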

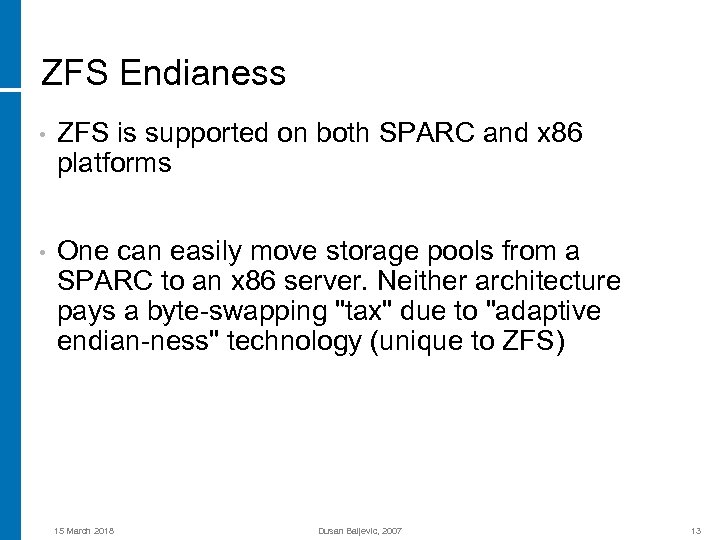

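ZFS Endianness

- ZFS is supported on both SPARC and x86 platforms
- Storage pools can easily be moved from a SPARC to an x86 server. ZFS writes blocks in the host's native byte order, records that order with each block, and byte-swaps only when it reads a block written by the other architecture. With this "adaptive endianness" technology (unique to ZFS), neither architecture pays a byte-swapping "tax" in normal operation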

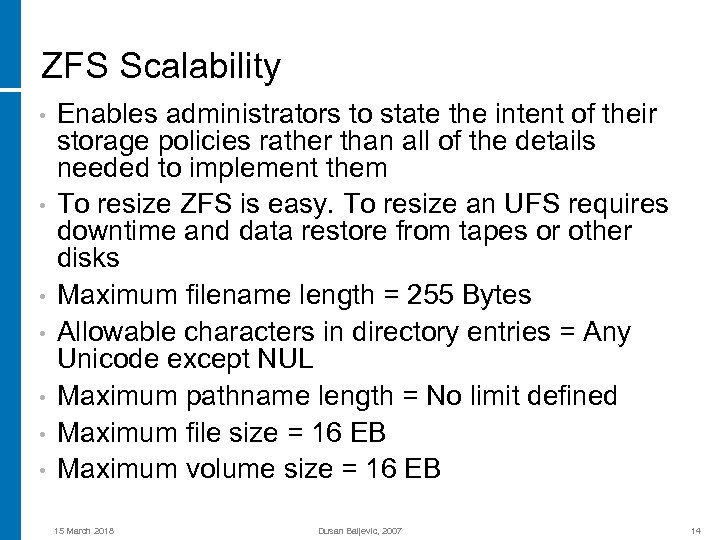

ZFS Scalability

- Enables administrators to state the intent of their storage policies rather than all of the details needed to implement them
- Growing a ZFS file system is easy; resizing a UFS file system can require downtime and a data restore from tape or other disks

| Limit | Value |
|---|---|
| Maximum filename length | 255 bytes |
| Allowable characters in directory entries | Any Unicode except NUL |
| Maximum pathname length | No limit defined |
| Maximum file size | 16 EB |
| Maximum volume size | 16 EB |

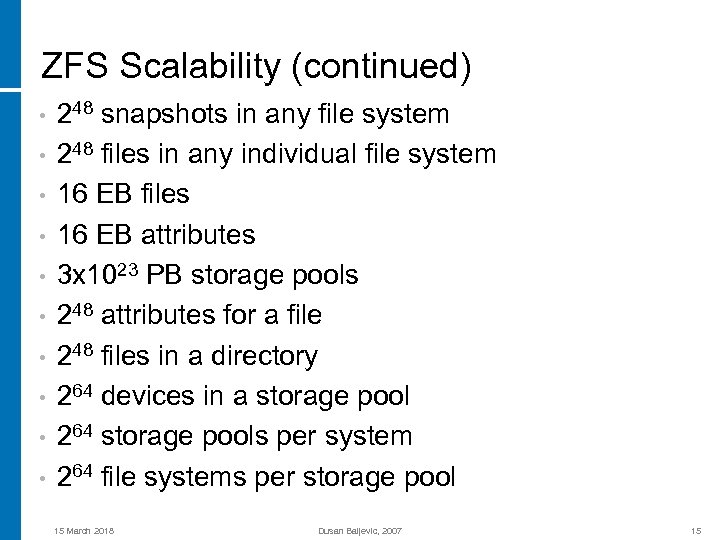

ZFS Scalability (continued)

- $2^{48}$ snapshots in any file system
- $2^{48}$ files in any individual file system
- Files up to 16 EB
- Attributes up to 16 EB
- Storage pools up to $3 \times 10^{23}$ PB
- $2^{48}$ attributes for a file
- $2^{48}$ files in a directory
- $2^{64}$ devices in a storage pool
- $2^{64}$ storage pools per system
- $2^{64}$ file systems per storage pool

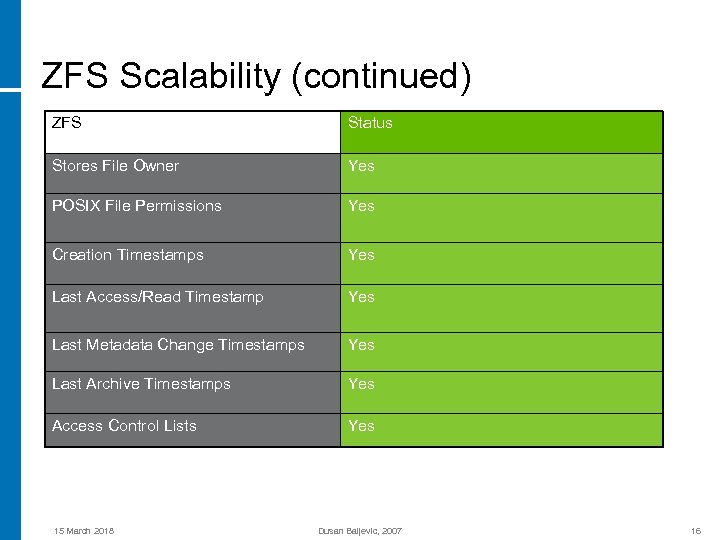

ZFS Scalability (continued)

| Feature | ZFS Status |
|---|---|
| Stores File Owner | Yes |
| POSIX File Permissions | Yes |
| Creation Timestamps | Yes |
| Last Access/Read Timestamp | Yes |
| Last Metadata Change Timestamps | Yes |
| Last Archive Timestamps | Yes |
| Access Control Lists | Yes |

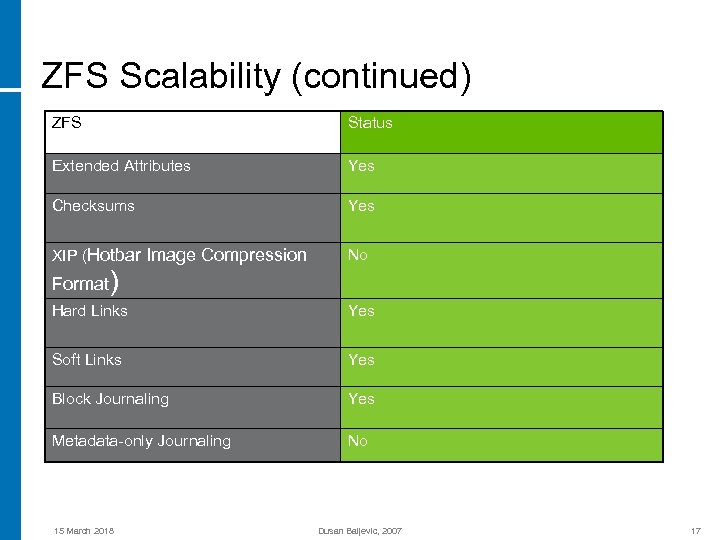

ZFS Scalability (continued)

| Feature | ZFS Status |
|---|---|
| Extended Attributes | Yes |
| Checksums | Yes |
| XIP (Execute In Place) | No |
| Hard Links | Yes |
| Soft Links | Yes |
| Block Journaling | Yes |
| Metadata-only Journaling | No |

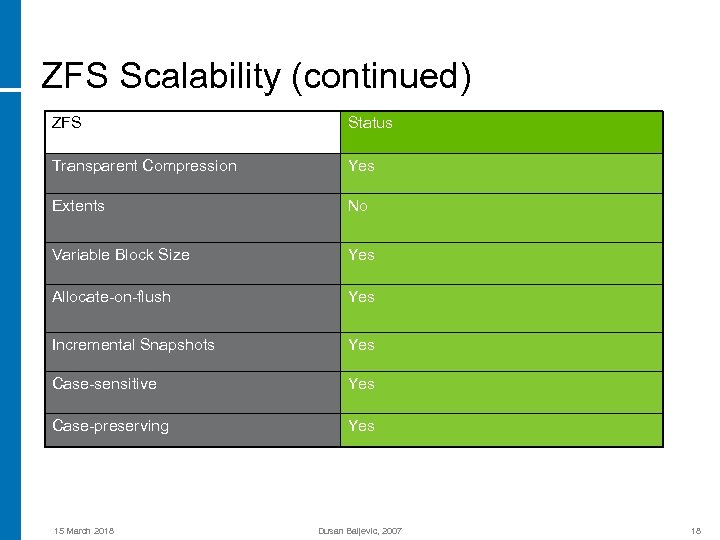

ZFS Scalability (continued)

| Feature | ZFS Status |
|---|---|
| Transparent Compression | Yes |
| Extents | No |
| Variable Block Size | Yes |
| Allocate-on-flush | Yes |
| Incremental Snapshots | Yes |
| Case-sensitive | Yes |
| Case-preserving | Yes |

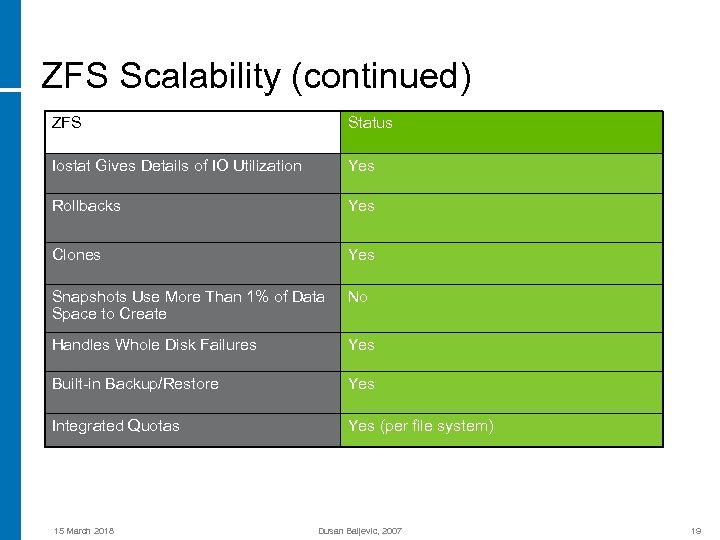

ZFS Scalability (continued)

| Feature | ZFS Status |
|---|---|
| Iostat Gives Details of I/O Utilization | Yes |
| Rollbacks | Yes |
| Clones | Yes |
| Snapshots Use More Than 1% of Data Space to Create | No |
| Handles Whole Disk Failures | Yes |
| Built-in Backup/Restore | Yes |
| Integrated Quotas | Yes (per file system) |

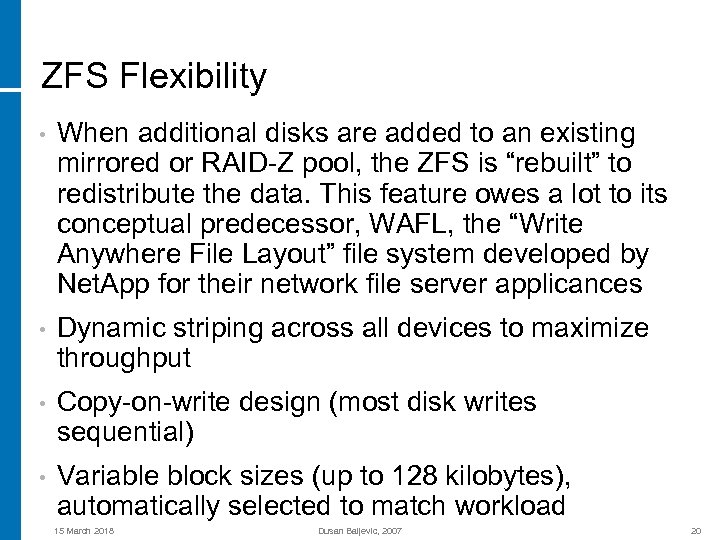

ZFS Flexibility

- When disks are added to an existing mirrored or RAID-Z pool, new writes are spread across all devices, including the new ones (existing data stays where it is). The design owes a lot to its conceptual predecessor, WAFL, the "Write Anywhere File Layout" file system developed by NetApp for their network file server appliances
- Dynamic striping across all devices to maximize throughput
- Copy-on-write design (most disk writes are sequential)
- Variable block sizes (up to 128 KB), automatically selected to match the workload

ZFS Flexibility (continued)

- Globally optimal I/O sorting and aggregation
- Multiple independent prefetch streams with automatic length and stride detection
- Unlimited, instantaneous snapshots (read-only) and clones (read/write)
- Parallel, constant-time directory operations
- Explicit I/O priority with deadline scheduling

ZFS Simplifies NFS

To share file systems via NFS, no entries in /etc/dfs/dfstab are required:

- Sharing is handled automatically by ZFS when the property `sharenfs=on` is set
- File systems can also be shared and unshared with `zfs share` and `zfs unshare`

ZFS RAID Levels

- ZFS file systems automatically stripe across all top-level disk devices
- Mirrors and RAID-Z devices are considered to be top-level devices
- It is not recommended to mix RAID types in a pool (zpool tries to prevent this, but it can be forced with the -f flag)

ZFS RAID Levels (continued)

The following RAID levels are supported:

- RAID-0 (striping)
- RAID-1 (mirroring)
- RAID-Z (similar to RAID-5, but with variable-width stripes to avoid the RAID-5 write hole)
- RAID-Z2 (double parity, similar to RAID-6)

ZFS RAID Levels (continued)

- A RAID-Z configuration with N disks of size X and P parity disks can hold approximately $(N-P) \times X$ bytes and can withstand P devices failing
- Start a single-parity RAID-Z configuration at 3 disks (2+1)
- Start a double-parity RAID-Z2 configuration at 5 disks (3+2)
- Preferred group widths are D+P disks, where D (data disks) is 2, 4, or 8 and P = 1 (RAID-Z) or 2 (RAID-Z2)
- The recommended number of disks per group is between 3 and 9 (use multiple groups if you have more disks)
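The capacity formula and group-size rules above can be turned into a quick planning aid. This is a minimal sketch, not a Sun tool: the helper names `raidz_usable` and `raidz_check` are hypothetical, sizes must be whole units (for example GB) because shell arithmetic is integer-only, and the result ignores metadata overhead.

```shell
#!/bin/bash
# Sketch of the RAID-Z sizing rules above. Helper names are hypothetical;
# sizes are whole units (e.g. GB) because shell arithmetic is integer-only.

# Approximate usable space: (N - P) * X for N disks of size X, P parity disks.
raidz_usable() {
    n=$1
    p=$2
    x=$3
    if [ "$p" -ne 1 ] && [ "$p" -ne 2 ]; then
        echo "parity must be 1 (raidz) or 2 (raidz2)" >&2
        return 1
    fi
    if [ "$n" -le "$p" ]; then
        echo "need more disks than parity devices" >&2
        return 1
    fi
    echo $(( (n - p) * x ))
}

# Compare a planned group with the recommended sizes: at least 3 disks
# for raidz, 5 for raidz2, and no more than 9 disks per group.
raidz_check() {
    n=$1
    p=$2
    if [ "$p" -eq 2 ]; then
        label=raidz2
        min=5
    else
        label=raidz
        min=3
    fi
    if [ "$n" -lt "$min" ]; then
        echo "$label: start with at least $min disks"
    elif [ "$n" -gt 9 ]; then
        echo "$label: more than 9 disks, split into several groups"
    else
        echo "$label: ok"
    fi
}
```

For example, `raidz_usable 5 1 73` prints `292` (roughly 292 GB from five 73 GB disks), and `raidz_check 4 2` warns that a RAID-Z2 group should start at 5 disks.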

ZFS Copy-On-Write (courtesy of Jeff Bonwick)

ZFS and Disk Arrays with Own Cache

- ZFS does not "trust" that anything it writes to the ZFS Intent Log (ZIL) has reached stable storage until it flushes the storage cache
- After every write to the ZIL, ZFS issues a cache-flush command (SCSI SYNCHRONIZE CACHE) to make the storage write its cache to disk. ZFS does not consider a write operation done until both the ZIL write and the flush have completed
- Problems can occur when ZFS is layered over an intelligent storage array with a battery-backed cache: some arrays honor every flush request by writing their cache to disk, even though the nonvolatile cache already protects the data, which can severely hurt performance

ZFS and Disk Arrays with Own Cache (continued)

- Initial tests show that HP EVA and Hitachi/HP XP SAN arrays are not affected and work well with ZFS
- Lab tests will provide more comprehensive data for HP EVA and XP SAN arrays

ZFS and Disk Arrays with Own Cache (continued)

Two possible solutions for affected SAN arrays:

- Disable the ZIL. The ZIL is how ZFS maintains consistency until it can get the blocks written to their final place on the disk. BAD OPTION!
- Configure the disk array to ignore ZFS flush commands. Quite safe and beneficial, provided the array cache is battery-backed (nonvolatile)

Configure Disk Array to Ignore ZFS Flush

For Engenio arrays (Sun StorageTek FlexLine 200/300 series, Sun StorEdge 6130, Sun StorageTek 6140/6540, IBM DS4x00, many SGI InfiniteStorage arrays):

- Shut down the server or, at minimum, export the ZFS pools before running this
- Cut and paste the following into the script editor of the "Enterprise Management Window" of the SANtricity management GUI:

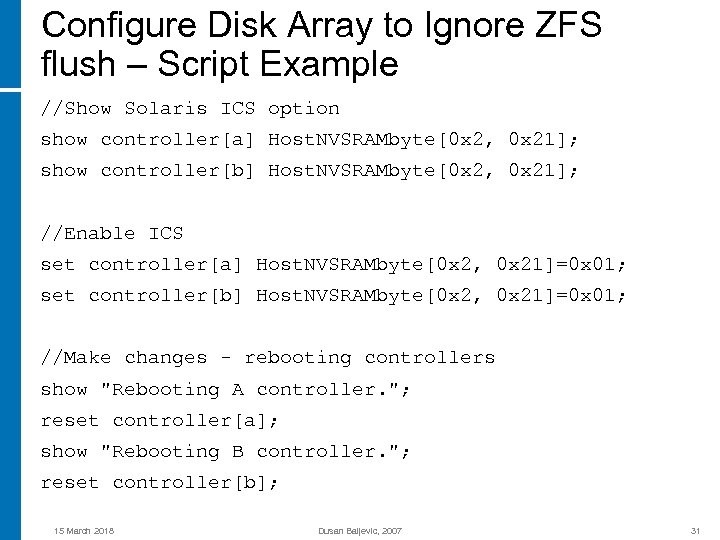

Configure Disk Array to Ignore ZFS Flush: Script Example

    //Show Solaris ICS option
    show controller[a] HostNVSRAMbyte[0x2, 0x21];
    show controller[b] HostNVSRAMbyte[0x2, 0x21];

    //Enable ICS
    set controller[a] HostNVSRAMbyte[0x2, 0x21]=0x01;
    set controller[b] HostNVSRAMbyte[0x2, 0x21]=0x01;

    //Make changes - rebooting controllers
    show "Rebooting A controller.";
    reset controller[a];
    show "Rebooting B controller.";
    reset controller[b];

Why ZFS Now?

- ZFS is positioned to support more file systems, snapshots, and files in a file system than can possibly be created in the foreseeable future
- Complicated storage administration concepts are automated and consolidated into straightforward commands; Sun claims this reduces administrative overhead by up to 80 percent
- Unlike traditional file systems that require a separate volume manager, ZFS integrates volume management functions. The storage pool model breaks the "one-to-one mapping between the file system and its associated volumes" limitation: when capacity is no longer required by one file system in the pool, it becomes available to the others

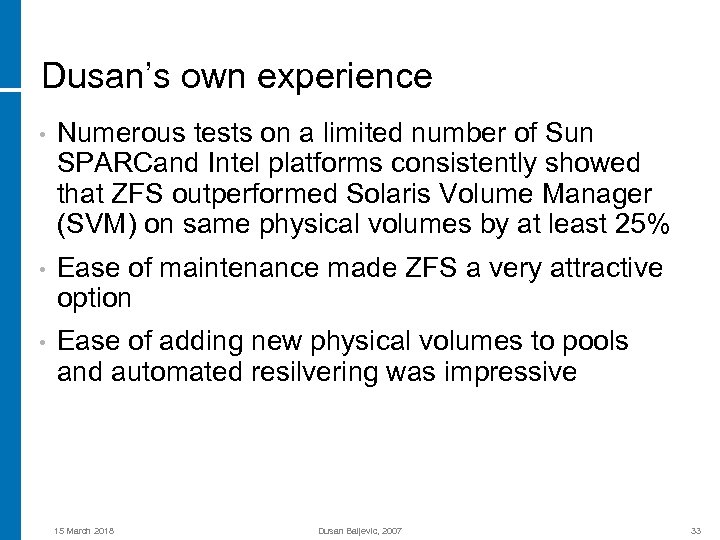

Dusan's own experience

- Numerous tests on a limited number of Sun SPARC and Intel platforms consistently showed that ZFS outperformed Solaris Volume Manager (SVM) on the same physical volumes by at least 25%
- Ease of maintenance made ZFS a very attractive option
- Adding new physical volumes to pools was impressively easy, and so was the automated resilvering

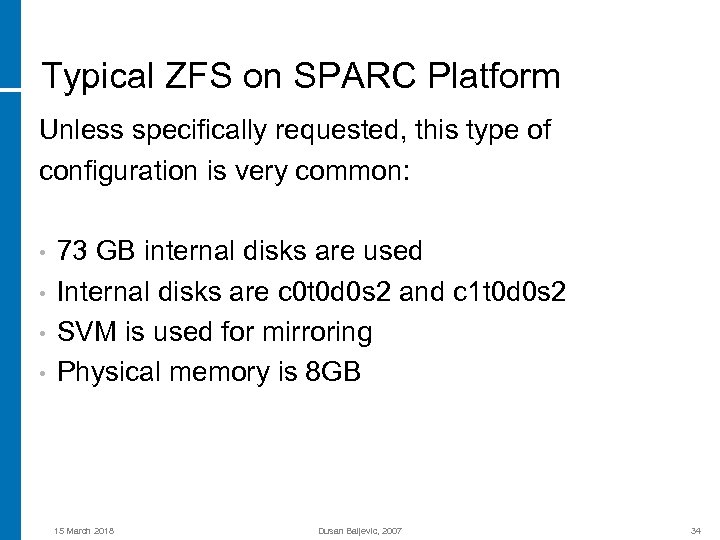

Typical ZFS on SPARC Platform

Unless a different layout is specifically requested, the following configuration is very common:

- 73 GB internal disks are used
- Internal disks are c0t0d0s2 and c1t0d0s2
- SVM is used for mirroring
- Physical memory is 8 GB

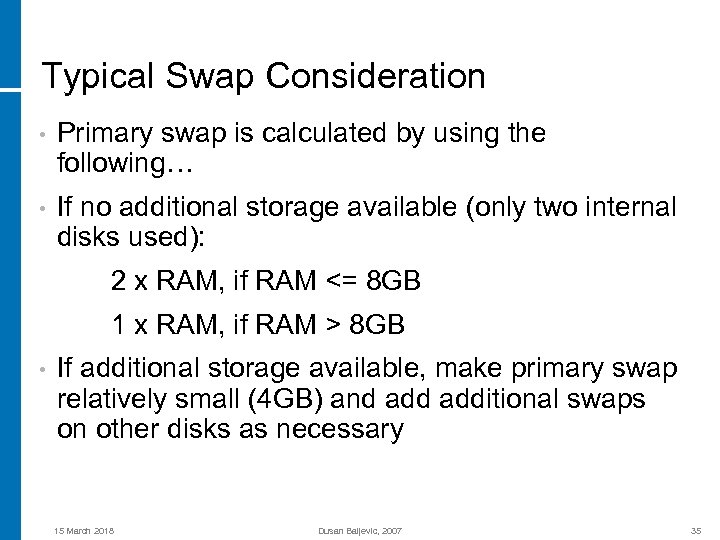

Typical Swap Consideration

- Primary swap is calculated as follows:
  - If no additional storage is available (only the two internal disks are used): 2 × RAM if RAM <= 8 GB, 1 × RAM if RAM > 8 GB
  - If additional storage is available, make primary swap relatively small (4 GB) and add further swap areas on other disks as necessary
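The swap rule of thumb above is simple enough to script, for example in a build checklist. A minimal sketch, assuming sizes in whole GB and a yes/no flag for storage beyond the two internal disks; `primary_swap_gb` is a hypothetical name.

```shell
#!/bin/bash
# Sketch of the primary-swap rule of thumb. Assumptions: sizes are whole GB,
# the second argument says whether storage beyond the two internal disks
# exists, and "primary_swap_gb" is a hypothetical helper name.
primary_swap_gb() {
    ram_gb=$1
    extra_storage=$2
    if [ "$extra_storage" = yes ]; then
        echo 4                      # small primary swap, more swap elsewhere
    elif [ "$ram_gb" -le 8 ]; then
        echo $(( 2 * ram_gb ))      # 2 x RAM when RAM <= 8 GB
    else
        echo "$ram_gb"              # 1 x RAM when RAM > 8 GB
    fi
}
```

On Solaris, `/usr/sbin/prtconf` prints a "Memory size: ... Megabytes" line; dividing that figure by 1024 gives the first argument. For the typical 8 GB server with only internal disks, `primary_swap_gb 8 no` prints `16`.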

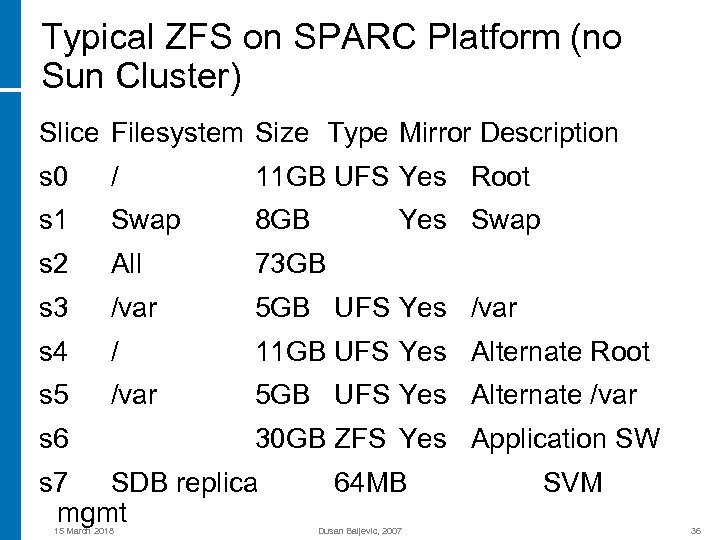

Typical ZFS on SPARC Platform (no Sun Cluster)

| Slice | Filesystem | Size | Type | Mirror | Description |
|---|---|---|---|---|---|
| s0 | / | 11 GB | UFS | Yes | Root |
| s1 | Swap | 8 GB | | Yes | Swap |
| s2 | All | 73 GB | | | |
| s3 | /var | 5 GB | UFS | Yes | /var |
| s4 | / | 11 GB | UFS | Yes | Alternate Root |
| s5 | /var | 5 GB | UFS | Yes | Alternate /var |
| s6 | | 30 GB | ZFS | Yes | Application SW |
| s7 | SDB replica | 64 MB | SVM | | mgmt |

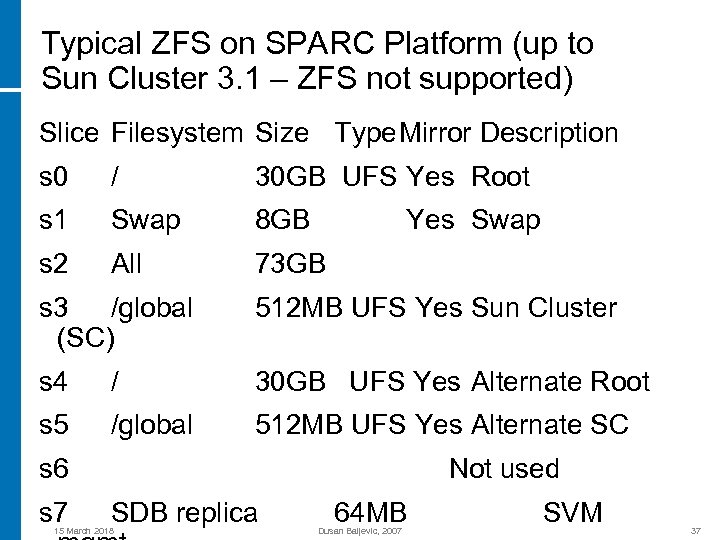

Typical ZFS on SPARC Platform (up to Sun Cluster 3.1, ZFS not supported)

| Slice | Filesystem | Size | Type | Mirror | Description |
|---|---|---|---|---|---|
| s0 | / | 30 GB | UFS | Yes | Root |
| s1 | Swap | 8 GB | | Yes | Swap |
| s2 | All | 73 GB | | | |
| s3 | /global (SC) | 512 MB | UFS | Yes | Sun Cluster |
| s4 | / | 30 GB | UFS | Yes | Alternate Root |
| s5 | /global | 512 MB | UFS | Yes | Alternate SC |
| s6 | Not used | | | | |
| s7 | SDB replica | 64 MB | SVM | | |

Support for ZFS in Sun Cluster 3.2

- ZFS is supported as a highly available local file system in the Sun Cluster 3.2 release
- ZFS with Sun Cluster offers a file system solution combining high availability, data integrity, performance, and scalability, covering the needs of the most demanding environments

ZFS Best Practices

- Run ZFS on servers that run a 64-bit kernel
- One GB or more of RAM is recommended
- Because ZFS caches data in kernel-addressable memory, the kernel will probably be larger than with other file systems. Use the size of physical memory as an upper bound for the extra swap space that might be required
- Do not use slices on the same disk for both swap space and ZFS file systems. Keep the swap areas separate from the ZFS file systems
- Set up one storage pool per system, using whole disks

ZFS Best Practices (continued)

- Set up a replicated pool (raidz, raidz2, or mirror configuration) for all production environments
- Do not use disk slices for storage pools intended for production use
- Set up hot spares to speed up healing in the face of hardware failures
- For replicated pools, spread the devices across multiple controllers to reduce the impact of controller failures and improve performance

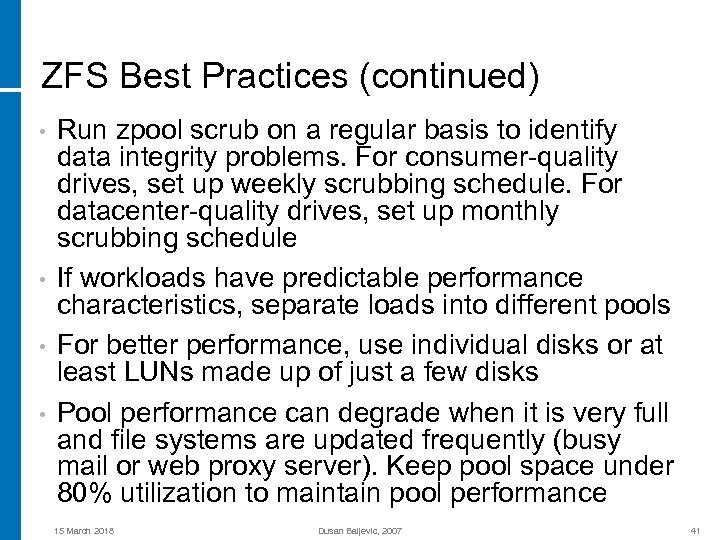

ZFS Best Practices (continued)

- Run `zpool scrub` on a regular basis to identify data integrity problems. For consumer-quality drives, set up a weekly scrubbing schedule; for datacenter-quality drives, a monthly one
- If workloads have predictable performance characteristics, separate them into different pools
- For better performance, use individual disks or at least LUNs made up of just a few disks
- Pool performance can degrade when a pool is very full and its file systems are updated frequently (for example, a busy mail or web proxy server). Keep pool utilization under 80% to maintain performance
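Two of the practices above, the 80% utilization limit and the scrub schedule, lend themselves to small scripts. This is a sketch under assumptions: the helper names are hypothetical, `zpool` is assumed to live in /usr/sbin, and the 02:00 start time is an arbitrary choice. The capacity check reads the tab-separated output of `zpool list -H -o name,capacity` from standard input, so it can be tried without a live pool.

```shell
#!/bin/bash
# Sketch of two practices above. Helper names are hypothetical, and the
# scrub time (02:00, Sunday or the 1st of the month) is an arbitrary choice.

# Reads "name capacity%" lines, as printed by
#   zpool list -H -o name,capacity
# and warns about every pool at or above the threshold (default 80%).
check_pool_capacity() {
    threshold=${1:-80}
    while read -r pool cap; do
        pct=${cap%\%}                   # strip the trailing percent sign
        case $pct in
            ''|*[!0-9]*) continue ;;    # skip "-" or malformed values
        esac
        if [ "$pct" -ge "$threshold" ]; then
            echo "WARNING: $pool is ${pct}% full"
        fi
    done
}

# Print a root crontab entry: weekly scrubs for consumer-quality drives,
# monthly scrubs for datacenter-quality drives.
scrub_cron_line() {
    pool=$1
    drive_class=$2
    case $drive_class in
        consumer)   echo "0 2 * * 0 /usr/sbin/zpool scrub $pool" ;;
        datacenter) echo "0 2 1 * * /usr/sbin/zpool scrub $pool" ;;
        *)          echo "unknown drive class: $drive_class" >&2; return 1 ;;
    esac
}
```

Typical use: `zpool list -H -o name,capacity | check_pool_capacity 80` from a monitoring job, and `scrub_cron_line custpool datacenter` to produce the line added with `crontab -e`.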

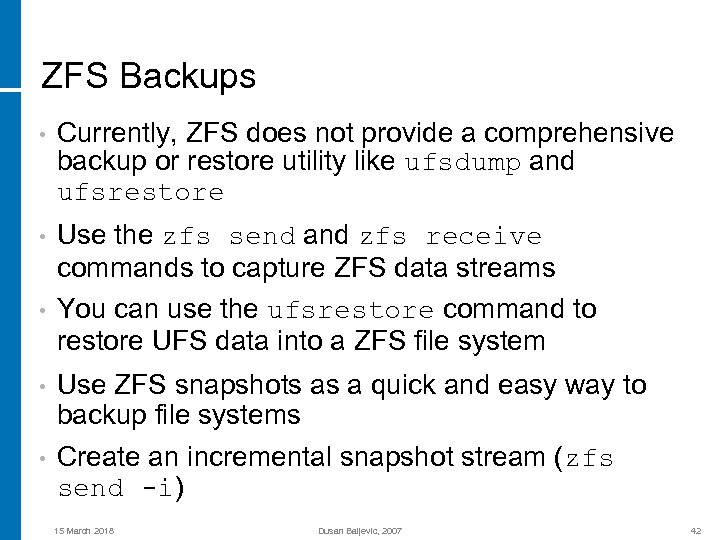

ZFS Backups

- Currently, ZFS does not provide a comprehensive backup or restore utility like ufsdump and ufsrestore
- Use the `zfs send` and `zfs receive` commands to capture ZFS data streams
- You can use the `ufsrestore` command to restore UFS data into a ZFS file system
- Use ZFS snapshots as a quick and easy way to back up file systems
- Create incremental snapshot streams with `zfs send -i`

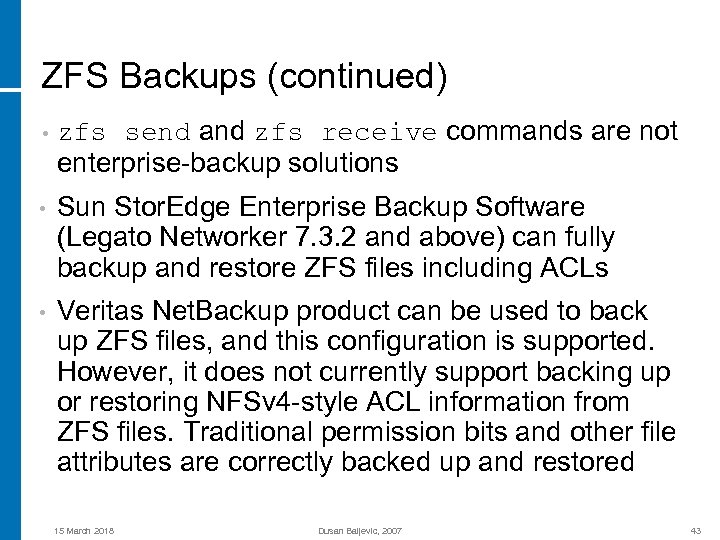

ZFS Backups (continued)

- The `zfs send` and `zfs receive` commands are not enterprise backup solutions
- Sun StorEdge Enterprise Backup Software (Legato NetWorker 7.3.2 and above) can fully back up and restore ZFS files, including ACLs
- The Veritas NetBackup product can be used to back up ZFS files, and this configuration is supported. However, it does not currently support backing up or restoring NFSv4-style ACL information from ZFS files. Traditional permission bits and other file attributes are correctly backed up and restored

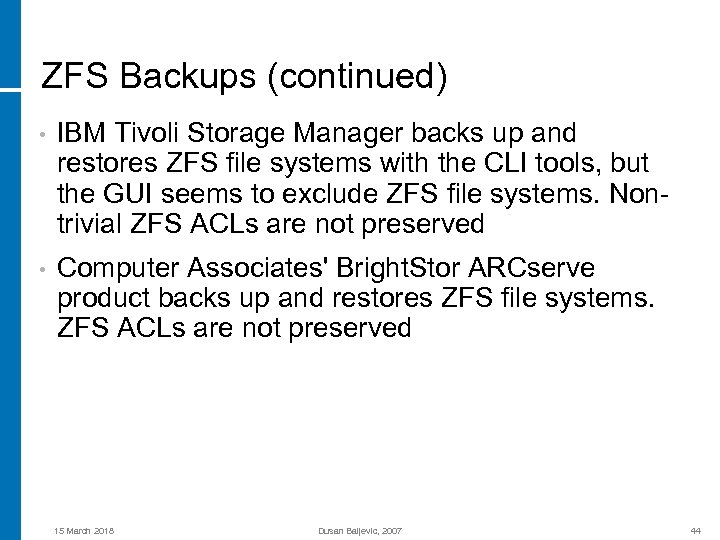

ZFS Backups (continued)

- IBM Tivoli Storage Manager backs up and restores ZFS file systems with the CLI tools, but the GUI seems to exclude ZFS file systems. Nontrivial ZFS ACLs are not preserved
- Computer Associates' BrightStor ARCserve product backs up and restores ZFS file systems. ZFS ACLs are not preserved

ZFS and Data Protector

- According to "HP OpenView Storage Data Protector Planned Enhancements to Platforms, Integrations, Clusters and Zero Downtime Backups version 4.2", dated 12 January 2007, Backup Agent (Disk Agent) support for Solaris 10 ZFS will be released in Data Protector 6 in March 2007: http://storage.corp.hp.com/Application/View/ProdCenter.asp?OID=326828&rdoType=filter&inpCriteria1=QuickLink&inpCriteria2=nothing&inpCriteriaChild1=3#QuickLink

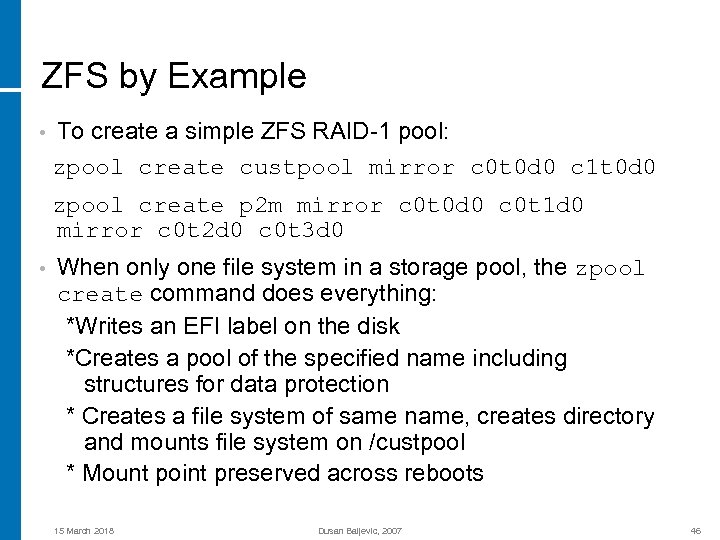

ZFS by Example

- To create a simple ZFS RAID-1 (mirrored) pool: `zpool create custpool mirror c0t0d0 c1t0d0`
- To create a pool striped across two mirrors: `zpool create p2m mirror c0t0d0 c0t1d0 mirror c0t2d0 c0t3d0`
- When a storage pool holds only one file system, the `zpool create` command does everything:
  - Writes an EFI label on each whole disk
  - Creates a pool of the specified name, including structures for data protection
  - Creates a file system of the same name, creates the directory, and mounts the file system on /custpool
  - Preserves the mount point across reboots

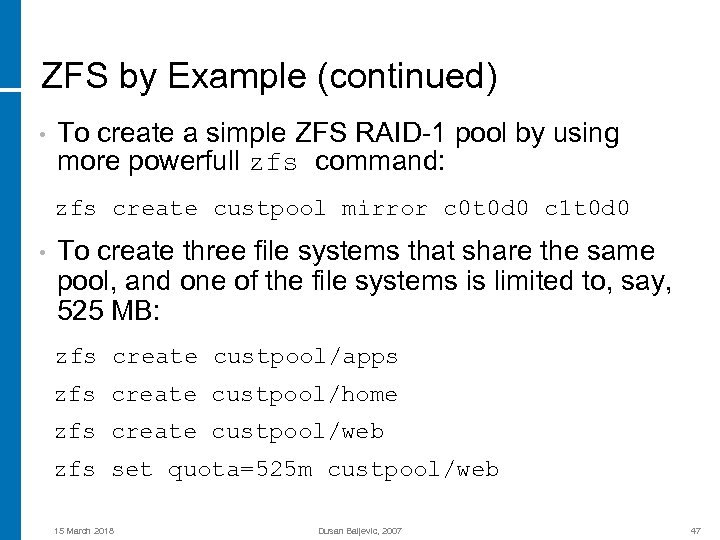

ZFS by Example (continued)

- Pools are created only with `zpool create`; `zfs create` makes file systems and volumes inside an existing pool and does not take device arguments
- To create three file systems that share the same pool, with one of them limited to, say, 525 MB: `zfs create custpool/apps`, `zfs create custpool/home`, `zfs create custpool/web`, `zfs set quota=525m custpool/web`

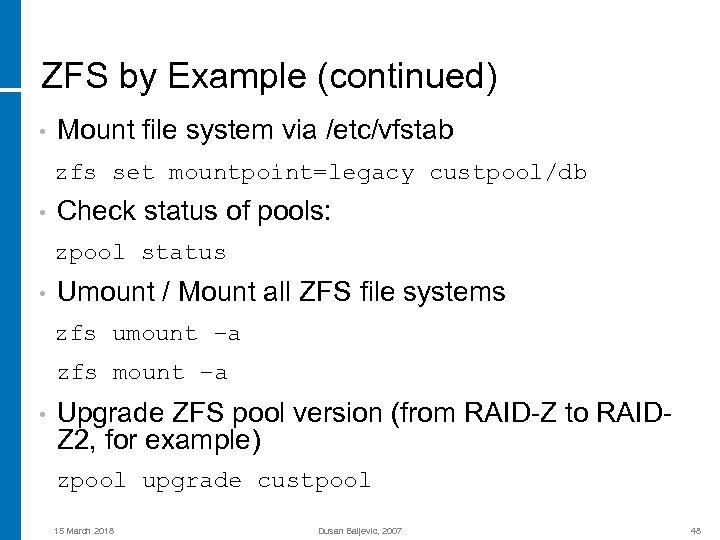

ZFS by Example (continued)

- To mount a file system via /etc/vfstab instead of automatically, set its mount point to legacy: `zfs set mountpoint=legacy custpool/db`
- Check the status of pools: `zpool status`
- Unmount / mount all ZFS file systems: `zfs umount -a`, `zfs mount -a`
- Upgrade a pool to the current on-disk version, which enables newer features such as RAID-Z2 and hot spares (existing RAID-Z groups are not converted to RAID-Z2): `zpool upgrade custpool`
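Once `mountpoint=legacy` is set, the file system needs an /etc/vfstab entry. The sketch below only builds that line; the helper name is hypothetical and the /db mount point used in the example is an assumption. It follows the seven vfstab fields (device to mount, device to fsck, mount point, FS type, fsck pass, mount at boot, mount options), with "-" for the fsck fields because ZFS is never fsck'ed.

```shell
#!/bin/bash
# Sketch: build the /etc/vfstab entry for a ZFS dataset whose mountpoint
# property is "legacy". Hypothetical helper name. The seven vfstab fields
# are: device to mount, device to fsck, mount point, FS type, fsck pass,
# mount at boot, mount options.
vfstab_zfs_line() {
    dataset=$1
    mountpoint=$2
    case $mountpoint in
        /*) ;;
        *) echo "mount point must be an absolute path" >&2; return 1 ;;
    esac
    # ZFS datasets are never fsck'ed, so fields 2 and 5 are "-".
    printf '%s\t-\t%s\tzfs\t-\tyes\t-\n' "$dataset" "$mountpoint"
}
```

After reviewing the output of `vfstab_zfs_line custpool/db /db`, append it to /etc/vfstab and mount with `mount /db` (or directly with `mount -F zfs custpool/db /db`).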

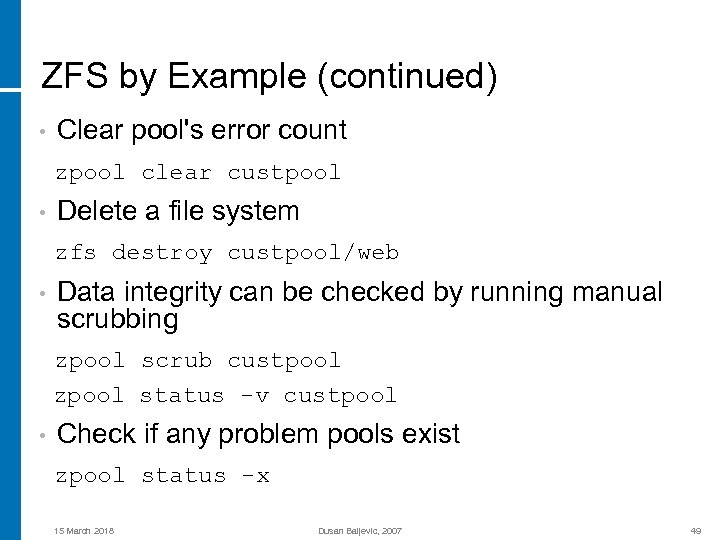

ZFS by Example (continued)

- Clear a pool's error count: `zpool clear custpool`
- Delete a file system: `zfs destroy custpool/web`
- Check data integrity by running a manual scrub, then review the result: `zpool scrub custpool`, `zpool status -v custpool`
- Check whether any problem pools exist: `zpool status -x`

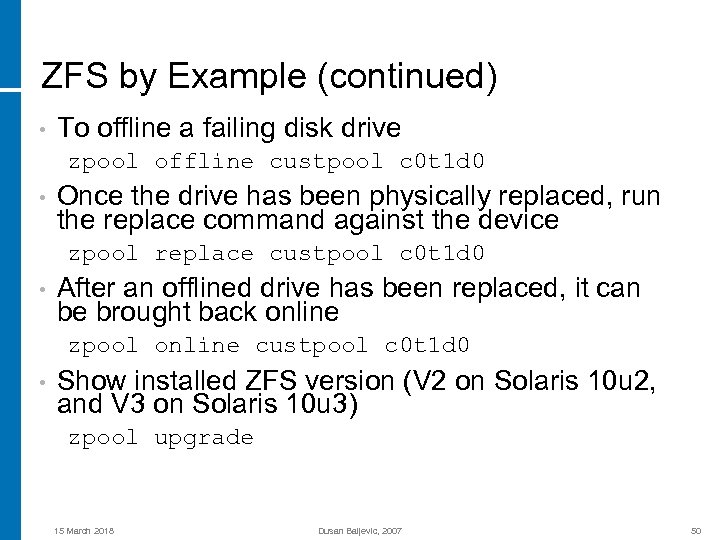

ZFS by Example (continued)

- To take a failing disk drive offline: `zpool offline custpool c0t1d0`
- Once the drive has been physically replaced, run the replace command against the device: `zpool replace custpool c0t1d0`
- After an offlined drive has been replaced, it can be brought back online: `zpool online custpool c0t1d0`
- Show the installed ZFS version (V2 on Solaris 10 u2, V3 on Solaris 10 u3) and any pools that can be upgraded: `zpool upgrade` (without arguments)

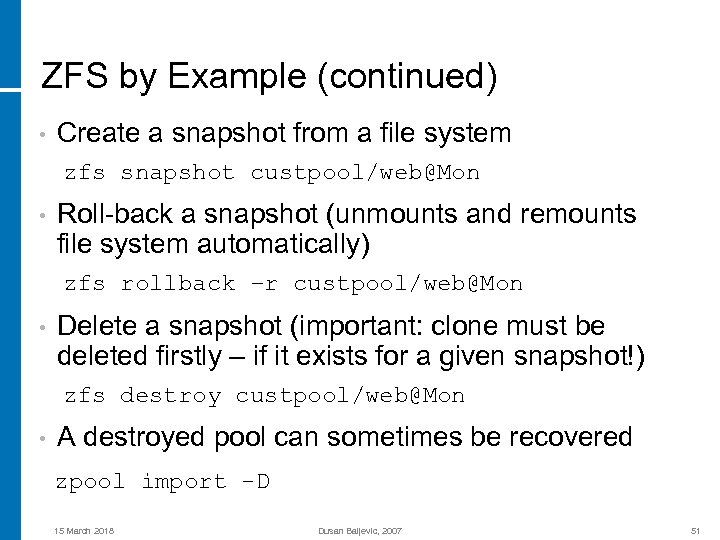

ZFS by Example (continued)

- Create a snapshot of a file system: `zfs snapshot custpool/web@Mon`
- Roll back to a snapshot (the file system is unmounted and remounted automatically; `-r` also destroys any snapshots newer than the one named): `zfs rollback -r custpool/web@Mon`
- Delete a snapshot (important: any clone of the snapshot must be destroyed first): `zfs destroy custpool/web@Mon`
- A destroyed pool can sometimes be recovered: `zpool import -D`
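The day-of-week snapshot names used above (custpool/web@Mon) suggest a simple weekly rotation: each day, destroy last week's snapshot of the same name and take a new one. A minimal sketch with a hypothetical helper name; it only prints the commands, so the plan can be reviewed before being piped to `sh`. It assumes the C locale's English day abbreviations.

```shell
#!/bin/bash
# Sketch of a weekly rotation that reuses day-of-week snapshot names such
# as custpool/web@Mon. Hypothetical helper: it only PRINTS the commands,
# so the plan can be reviewed before it is piped to sh.
snapshot_rotation_plan() {
    fs=$1
    day=${2:-$(LC_ALL=C date +%a)}      # Mon, Tue, ... in the C locale
    snap="$fs@$day"
    # Remove last week's snapshot of the same name if it exists.
    # zfs destroy refuses if a clone still depends on it.
    echo "zfs list -H -o name $snap >/dev/null 2>&1 && zfs destroy $snap"
    echo "zfs snapshot $snap"
}
```

A daily cron job could then run `snapshot_rotation_plan custpool/web | sh`, keeping at most seven snapshots of the file system.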

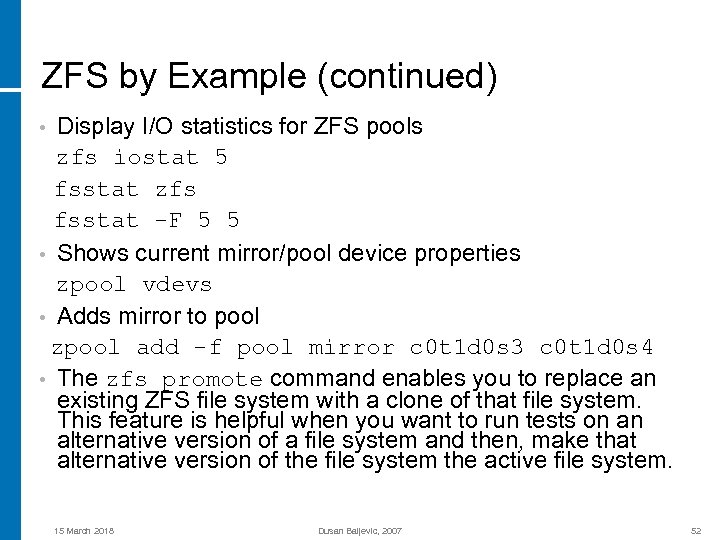

ZFS by Example (continued)

- Display I/O statistics for ZFS pools every 5 seconds: `zpool iostat 5`
- Display file system operation statistics: `fsstat zfs` for ZFS only, or `fsstat -F 5 5` for all file system types (5-second interval, 5 reports)
- Show current mirror/pool device properties: `zpool vdevs`
- Add a mirror to a pool (both slices here are on the same disk, which illustrates the syntax but gives no protection against a disk failure): `zpool add -f pool mirror c0t1d0s3 c0t1d0s4`
- The `zfs promote` command replaces an existing ZFS file system with a clone of that file system. This is helpful when you want to run tests on an alternative version of a file system and then make that alternative version the active file system

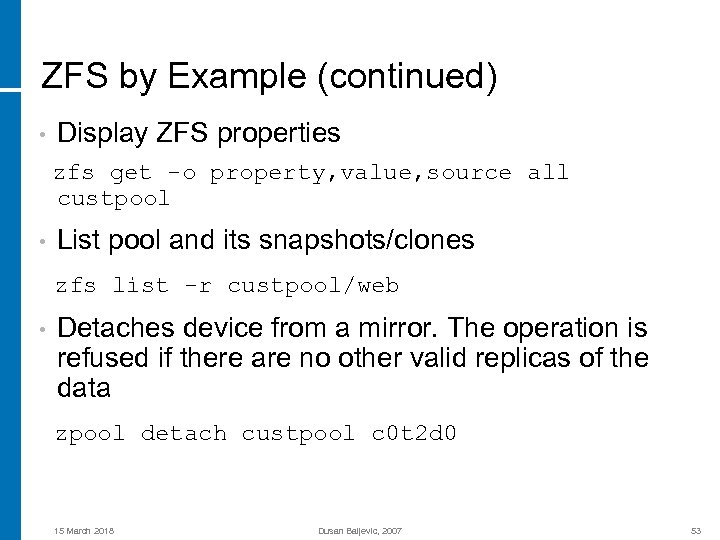

ZFS by Example (continued)

- Display ZFS properties: `zfs get -o property,value,source all custpool`
- List a file system together with its snapshots and descendant file systems: `zfs list -r custpool/web`
- Detach a device from a mirror (the operation is refused if there are no other valid replicas of the data): `zpool detach custpool c0t2d0`

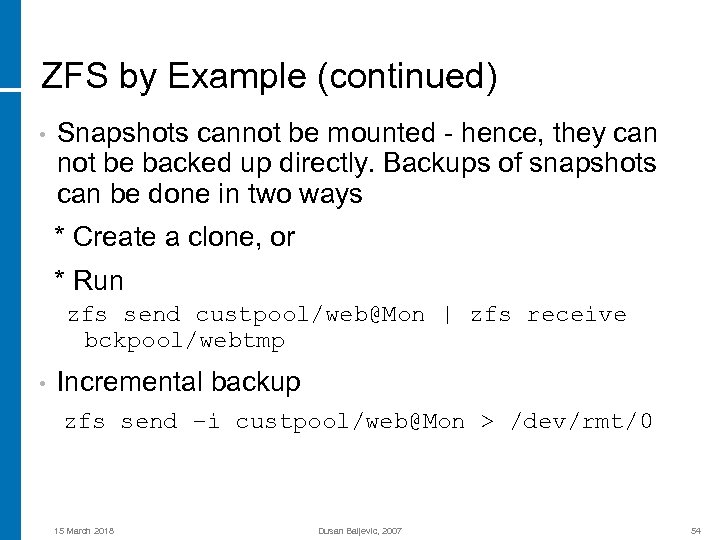

ZFS by Example (continued)

- Snapshots cannot be mounted, so they cannot be backed up directly. Backups of snapshots can be done in two ways:
  - Create a clone, or
  - Run `zfs send custpool/web@Mon | zfs receive bckpool/webtmp`
- An incremental backup needs both the earlier and the later snapshot (here custpool/web@Tue stands for any snapshot taken after @Mon): `zfs send -i custpool/web@Mon custpool/web@Tue > /dev/rmt/0`

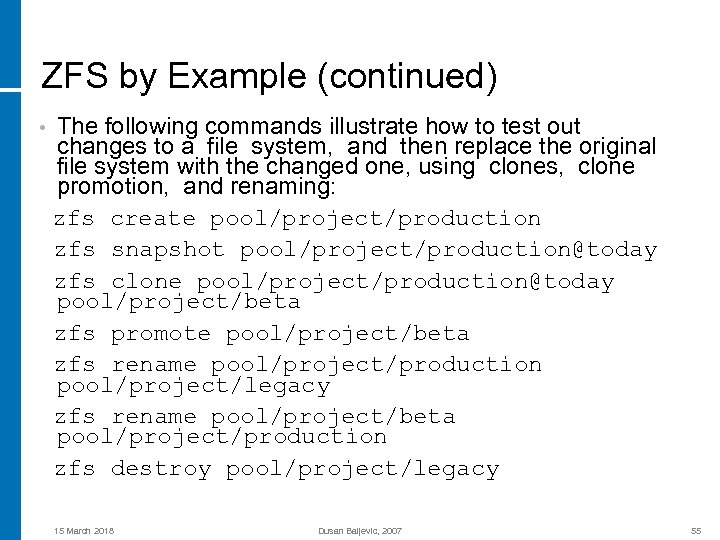

ZFS by Example (continued)

The following commands illustrate how to test changes to a file system, and then replace the original file system with the changed one, using clones, clone promotion, and renaming:

    zfs create pool/project/production
    zfs snapshot pool/project/production@today
    zfs clone pool/project/production@today pool/project/beta
    zfs promote pool/project/beta
    zfs rename pool/project/production pool/project/legacy
    zfs rename pool/project/beta pool/project/production
    zfs destroy pool/project/legacy

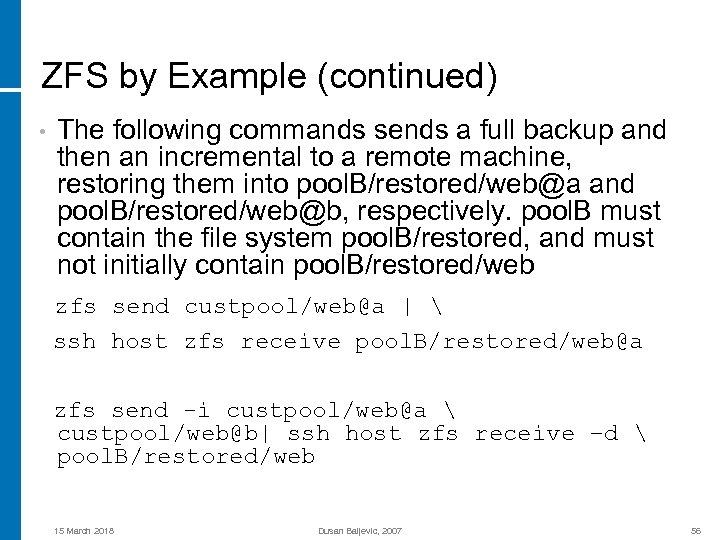

ZFS by Example (continued)

The following commands send a full backup and then an incremental one to a remote machine, restoring them into poolB/restored/web@a and poolB/restored/web@b respectively. poolB must contain the file system poolB/restored, and must not initially contain poolB/restored/web:

    zfs send custpool/web@a | ssh host zfs receive poolB/restored/web@a
    zfs send -i custpool/web@a custpool/web@b | ssh host zfs receive poolB/restored/web
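The full-plus-incremental pattern above generalizes to any file system, snapshot pair, remote host, and target. A minimal sketch with a hypothetical helper name: it prints the two pipelines rather than running them, so they can be checked and then executed with `| sh`, and it assumes ssh access to the remote host is already set up.

```shell
#!/bin/bash
# Sketch: print the full + incremental send/receive pipelines for any
# file system, snapshot pair, host and target. Hypothetical helper; review
# the output, then run it with "| sh".
remote_backup_plan() {
    fs=$1
    first=$2
    second=$3
    host=$4
    target=$5
    if [ "$first" = "$second" ]; then
        echo "the two snapshots must differ" >&2
        return 1
    fi
    # Full stream: creates the target file system and its first snapshot.
    echo "zfs send $fs@$first | ssh $host zfs receive $target@$first"
    # Incremental stream: only the blocks changed between the two snapshots.
    echo "zfs send -i $fs@$first $fs@$second | ssh $host zfs receive $target"
}
```

For the example above: `remote_backup_plan custpool/web a b host poolB/restored/web`. Later runs only need the incremental line, with the previous "second" snapshot as the new "first".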

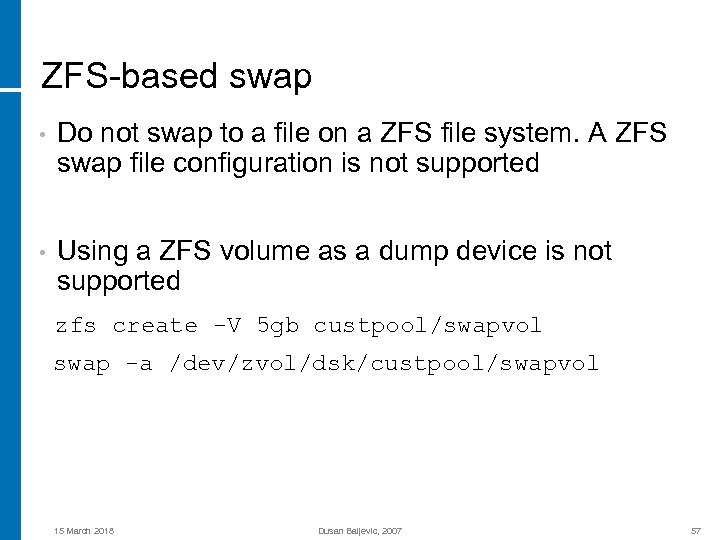

ZFS-based swap

- Do not swap to a file on a ZFS file system; a ZFS swap file configuration is not supported
- Using a ZFS volume as a dump device is not supported
- A ZFS volume can be used as a swap device, for example: `zfs create -V 5gb custpool/swapvol` followed by `swap -a /dev/zvol/dsk/custpool/swapvol`

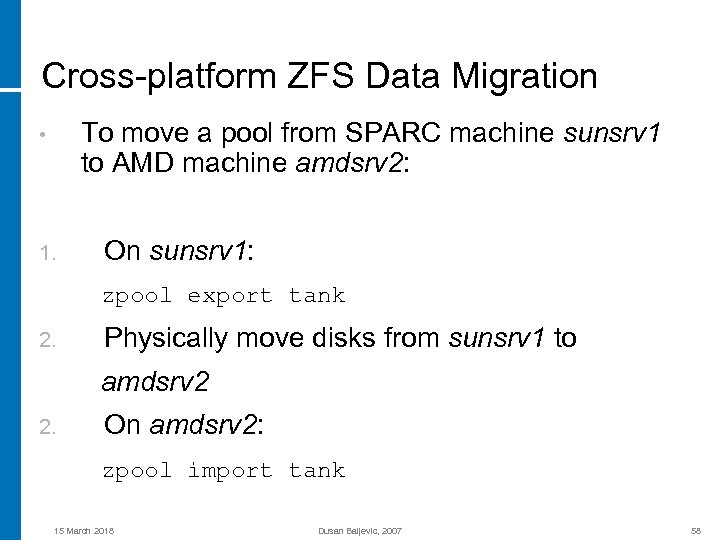

Cross-platform ZFS Data Migration

To move a pool from the SPARC machine sunsrv1 to the AMD machine amdsrv2:

1. On sunsrv1: `zpool export tank`
2. Physically move the disks from sunsrv1 to amdsrv2
3. On amdsrv2: `zpool import tank`

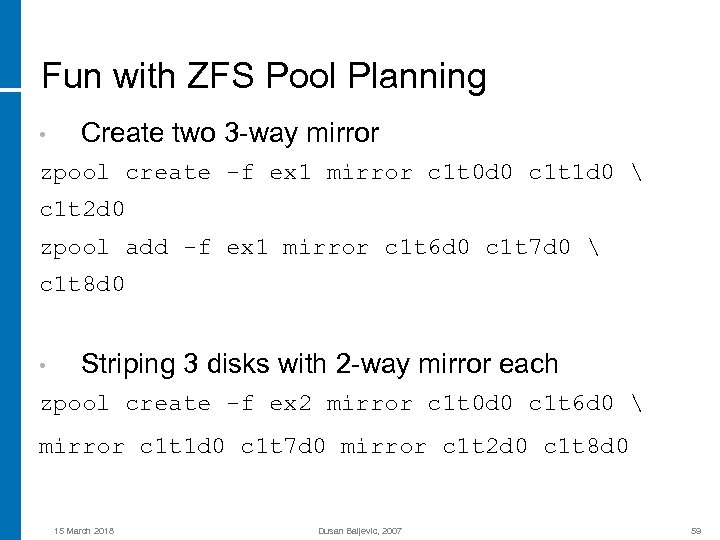

Fun with ZFS Pool Planning

- Create a pool striped across two 3-way mirrors: `zpool create -f ex1 mirror c1t0d0 c1t1d0 c1t2d0`, then `zpool add -f ex1 mirror c1t6d0 c1t7d0 c1t8d0`
- Stripe across three 2-way mirrors: `zpool create -f ex2 mirror c1t0d0 c1t6d0 mirror c1t1d0 c1t7d0 mirror c1t2d0 c1t8d0`
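Typing long striped-mirror layouts such as ex2 by hand is error-prone. The sketch below builds the vdev arguments from a disk list, pairing disks in order (1st with 2nd, 3rd with 4th, and so on); `mirror_pairs` is a hypothetical helper, and ordering the list so each pair spans two controllers is left to the administrator.

```shell
#!/bin/bash
# Sketch: turn a disk list into the vdev arguments for a pool striped
# across 2-way mirrors. Disks are paired in order: 1st with 2nd, 3rd with
# 4th, and so on. Hypothetical helper name.
mirror_pairs() {
    if [ $(( $# % 2 )) -ne 0 ]; then
        echo "need an even number of disks" >&2
        return 1
    fi
    vdevs=""
    while [ $# -gt 0 ]; do
        vdevs="$vdevs mirror $1 $2"
        shift 2
    done
    echo "${vdevs# }"                   # drop the leading space
}
```

`zpool create -f ex2 $(mirror_pairs c1t0d0 c1t6d0 c1t1d0 c1t7d0 c1t2d0 c1t8d0)` reproduces the ex2 command from the slide.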

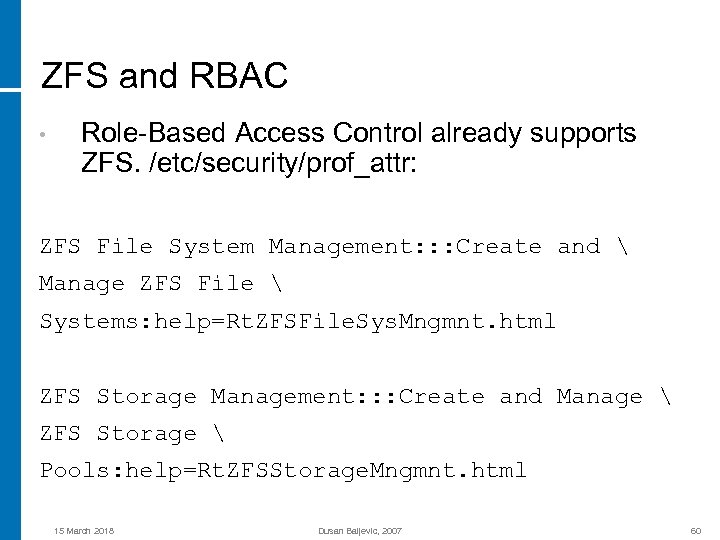

ZFS and RBAC

Role-Based Access Control already supports ZFS. From /etc/security/prof_attr:

    ZFS File System Management:::Create and Manage ZFS File Systems:help=RtZFSFileSysMngmnt.html
    ZFS Storage Management:::Create and Manage ZFS Storage Pools:help=RtZFSStorageMngmnt.html

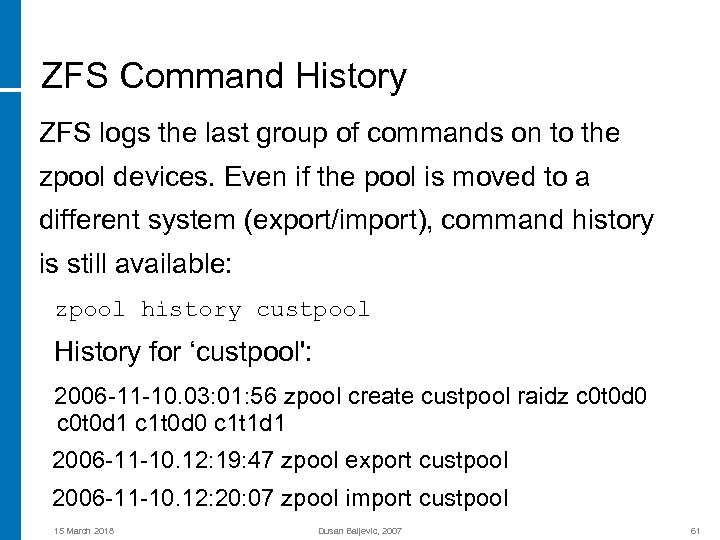

ZFS Command History

ZFS records recent commands that modify a pool on the pool devices themselves. Even if the pool is moved to a different system (export/import), the command history is still available:

    zpool history custpool
    History for 'custpool':
    2006-11-10.03:01:56 zpool create custpool raidz c0t0d0 c0t0d1 c1t0d0 c1t1d1
    2006-11-10.12:19:47 zpool export custpool
    2006-11-10.12:20:07 zpool import custpool

ZFS Boot Recovery

- If a panic-reboot loop is caused by a ZFS software programming error, the server can be instructed to boot without the ZFS file systems: `boot -m milestone=none`
- When the system is up, remount the root file system read-write (`mount -o remount,rw /`) and remove the file /etc/zfs/zpool.cache. The remainder of the boot can then proceed with `svcadm milestone all`
- At that point, import the good pools. The damaged pools may need to be re-initialized

ZFS and Containers

- Solaris 10 06/06 supports the use of ZFS file systems in zones (containers)
- It is possible to install a zone into a ZFS file system, but the installer/upgrader program does not understand ZFS well enough to upgrade zones that reside on a ZFS file system
- Upgrading a server that has a zone installed on a ZFS file system is not yet supported

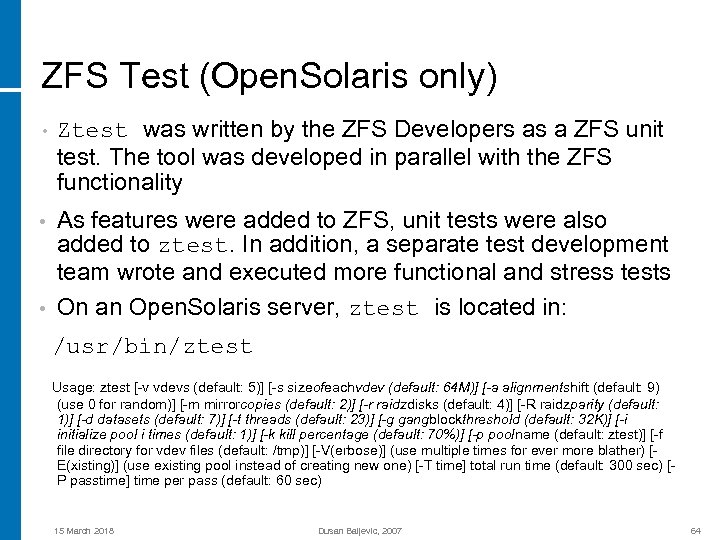

ZFS Test (OpenSolaris only)

- ztest was written by the ZFS developers as a ZFS unit test. The tool was developed in parallel with the ZFS functionality
- As features were added to ZFS, unit tests were also added to ztest. In addition, a separate test development team wrote and executed more functional and stress tests
- On an OpenSolaris server, ztest is located in /usr/bin/ztest

Usage:

    ztest [-v vdevs (default: 5)]
          [-s sizeofeachvdev (default: 64M)]
          [-a alignmentshift (default: 9) (use 0 for random)]
          [-m mirrorcopies (default: 2)]
          [-r raidzdisks (default: 4)]
          [-R raidzparity (default: 1)]
          [-d datasets (default: 7)]
          [-t threads (default: 23)]
          [-g gangblockthreshold (default: 32K)]
          [-i initialize pool i times (default: 1)]
          [-k kill percentage (default: 70%)]
          [-p poolname (default: ztest)]
          [-f file directory for vdev files (default: /tmp)]
          [-V(erbose)] (use multiple times for ever more blather)
          [-E(xisting)] (use existing pool instead of creating new one)
          [-T time] total run time (default: 300 sec)
          [-P passtime] time per pass (default: 60 sec)

ZFS Current Status

- Solaris 10 Update 3 (11/06) has been released. It brings many features that have been in OpenSolaris into the fully supported release, including ZFS command improvements and changes: RAID-Z2, hot spares, recursive snapshots, promotion of clones, compact NFSv4 ACLs, recovery of destroyed pools, error clearing, ZFS integration with FMA, and more
- As of OpenSolaris Build 53, a powerful new feature is available: ZFS and iSCSI integration
- One of the missing features: a device detached from a mirror (a "submirror" in SVM terms) cannot be mounted as a separate file system

ZFS Current Status (continued)

- NFS over ZFS complies with all NFS semantics, even with disk write caches enabled. Disabling the ZIL (setting zil_disable to 1 using mdb and then mounting the file system) is one way to produce an incorrect NFS service: with the ZIL disabled, NFS commit requests are ignored, and clients' view of the data can be corrupted after a server crash
- There is no support for reducing a pool's size by removing devices

ZFS Mountroot (currently x86 only)

- ZFS Mountroot provides the capability of configuring a ZFS root file system. It is not a complete boot solution: it relies on the existence of a small UFS boot environment
- ZFS Mountroot was integrated in Solaris Nevada Build 37 (OpenSolaris release, disabled by default)
- ZFS Mountroot does not currently work on SPARC
- ACLs are not fully preserved when copying files from a UFS root to a ZFS root. ZFS converts UFS-style ACLs to the new NFSv4 ACLs, but they may not be entirely identical
