4ad48ae62fd4468c5d43d6b12d59da09.ppt

- Number of slides: 46

xCAT+Moab Cloud
Egan Ford
Team Lead, ATS STaCC (Scientific, Technical, and Cloud Computing)
Project Leader, xCAT
egan@us.ibm.com
© 2008 IBM Corporation

Agenda
§ Objectives
§ Stateless/Statelite
§ xCAT
§ Moab
§ xCAT+Moab
© 2009 IBM Corporation

Objectives
§ Increase ROI
  – Increase utilization.
  – Reduce management overhead.
  – Reduce downtime/Increase availability.
    • Installation
    • Maintenance
  – Rapid data & application-based provisioning.
  – Better cross-departmental use of computing resources.
    • Grid, On-Demand, Utility Computing, Cloud
§ Reduce Investment
§ Reduce Footprint
§ Reduce Power Usage

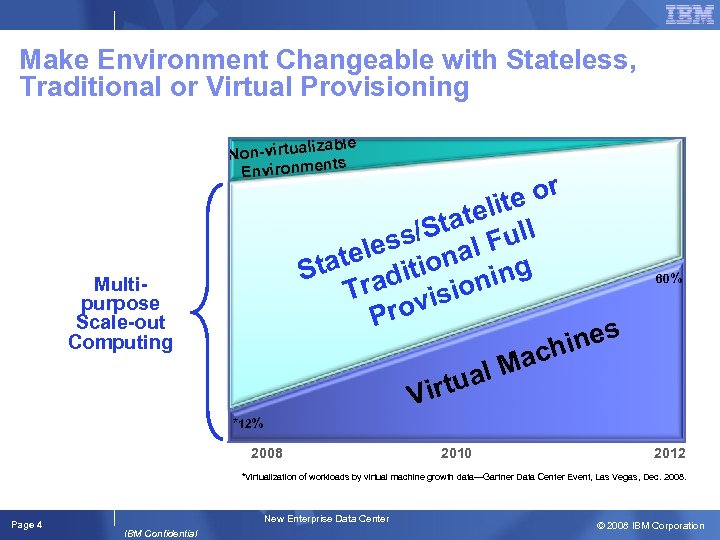

Make Environment Changeable with Stateless, Traditional or Virtual Provisioning
[Figure: Multipurpose Scale-out Computing. Segments: Non-virtualizable Environments; Stateless/Statelite or Full Traditional Provisioning; Virtual Machines. Chart values: *12% and 60%, over the years 2008, 2010, 2012.]
*Virtualization of workloads by virtual machine growth data, Gartner Data Center Event, Las Vegas, Dec. 2008.

What is stateless?
§ Stateless is not a new concept.
  – The processors and memory (RAM) subsystems in modern machines do not maintain any state between reboots, i.e., they have no information about what occurred previously.
§ Stateless provisioning takes this concept to the next level and removes the need to store the operating system and the operating system state locally.
  – Bproc/Beoproc
  – BlueGene/L
§ SAN/iSCSI provisioning and NFS-root-RW are not stateless.
  – State is maintained remotely (disk-elsewhere).

What is stateless provisioning?
§ Stateless provisioning loads the OS over the network into memory without the need to install to direct-attached disk.
§ OS state is not maintained locally or remotely after a reboot. For example, booting an OS from CD (e.g. Live CD).
  – The initial start state will always be the same for any nodes using the same stateless image, as if reinstalling between reboots.
  – SAN-boot, iSCSI-boot, and NFS-root-RW are not stateless.
§ Think of your nodes/servers as preprogrammed appliances that serve a fixed or limited purpose.
  – E.g. DVD player, toaster, etc.
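
The "same start state after every reboot" property can be illustrated with a toy model. This is only a sketch: the `StatelessNode` class and the dictionary standing in for an OS image are hypothetical and are not part of xCAT.

```python
from copy import deepcopy


class StatelessNode:
    """Toy model of a node whose root file system lives only in RAM."""

    def __init__(self, image):
        self._image = image   # shared boot image (hypothetical: path -> content)
        self.root = {}        # in-memory root file system
        self.boot()

    def boot(self):
        # Every boot reloads the image into memory and discards
        # whatever the previous run wrote.
        self.root = deepcopy(self._image)


image = {"/etc/motd": "compute image v1"}
a = StatelessNode(image)
b = StatelessNode(image)

a.root["/etc/motd"] = "hand-edited on node a"   # drift introduced at run time
a.root["/tmp/scratch"] = "job output"
drifted = a.root != b.root                      # nodes differ while running

a.boot()                                        # reboot: no state survives
identical_after_reboot = a.root == b.root == image
```

Persistent data such as application output would, in this model, have to live outside `root`, which matches the slide's point that the OS state itself is never kept.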

Stateless is not diskless, diskfree, or disk-elsewhere
§ Stateless provisioning can leverage local disk, SAN, or iSCSI for /tmp, /var/tmp, scratch, application data, and swap.
§ If possible, diskfree is recommended.
  – Reduced power
  – Reduced cooling
  – Reduced downtime (Increased system MTBF)
  – Reduced space
    • Future diskfree-only nodes, e.g. BlueGene.
§ Stateless does not change the way applications access data.
  – NFS, SAN, GPFS, local disk, etc. are supported.

Why stateless provisioning?
§ Less (horizontal) software to maintain.
  – No inconsistencies over time.
§ Less (vertical) software to maintain.
  – Small fixed-purpose images vs. large general-purpose images.
    • Less risk of a software component having a security hole.
    • Reduced complexity.
§ Greater security.
  – No locally stored authentication data.
§ Initial large installations and upgrades can be reduced to minutes of boot time versus hours or days of operating system installation time.
  – Reprovisioning/repurposing a large number of machines can be accomplished in a few minutes.

Why stateless provisioning?
§ Provides a framework for automated per-application provisioning using intelligent application schedulers.
  – Change server function as needed (On-Demand/Utility/Cloud computing).
  – Increase node utilization.
§ A stateless image can be easily shared across the enterprise, enabling the promise of grid computing.
  – This can be automated with grid scheduling solutions.
§ The migration of a running virtual machine between physical machines has no large OS image to migrate.

Stateless Customer Set Limitations
§ Operating System must boot and operate as OS vendor intended and must not require modification.
  – Support
    • Vendor
    • Application
    • Administrator
    • Community
§ Images must be easy to create and maintain.
  – RPM/YUM/YAST
  – A real file system layout for manual configuration.
§ Must support a system or method of per-node unique configuration.
  – IP Addresses, NFS mounts, etc.
  – License Files
  – Authentication configuration and credentials (must not be in image).
§ Avoid reengineering existing or adding new networks.
§ Predictive Performance
§ Untethered (e.g. No SAN)
  – May be unavoidable

What is xCAT?
§ Extreme Cluster (Cloud) Administration Toolkit
  – Open Source Linux/AIX/Windows Scale-out Cluster Management Solution
§ Design Principles
  – Build upon the work of others
    • Leverage best practices
  – Scripts only (no compiled code)
    • Portable
    • Source
  – Vox Populi -- Voice of the People
    • Community requirements driven
    • Do not assume anything

What does xCAT do?
§ Remote Hardware Control
  – Power, Reset, Vitals, Inventory, Event Logs, SNMP alert processing
  – xCAT can even tell you which light path LEDs are lit up remotely
§ Remote Console Management
  – Serial Console, SOL, Logging / Video Console (no logging)
§ Remote Destiny Control
  – Local/SAN Boot, Network Boot, iSCSI Boot
§ Remote Automated Unattended Network Installation
  – Auto-Discovery
    • MAC Address Collection
    • Service Processor Programming
    • Remote Flashing
  – Kickstart, Autoyast, Imaging, Stateless/Diskless, iSCSI
§ Scales! Think 100,000 nodes.
§ xCAT will make you lazy. No need to walk to the datacenter again.

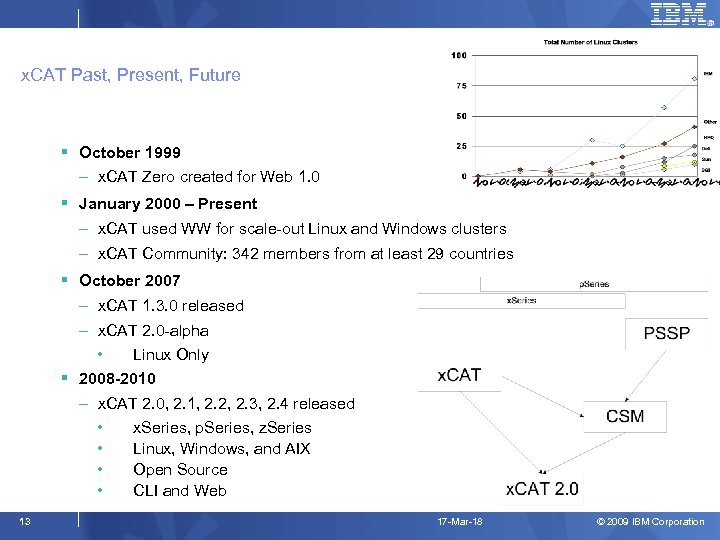

xCAT Past, Present, Future
§ October 1999
  – xCAT Zero created for Web 1.0
§ January 2000 – Present
  – xCAT used worldwide for scale-out Linux and Windows clusters
  – xCAT Community: 342 members from at least 29 countries
§ October 2007
  – xCAT 1.3.0 released
  – xCAT 2.0-alpha
    • Linux Only
§ 2008-2010
  – xCAT 2.0, 2.1, 2.2, 2.3, 2.4 released
    • xSeries, pSeries, zSeries
    • Linux, Windows, and AIX
    • Open Source
    • CLI and Web

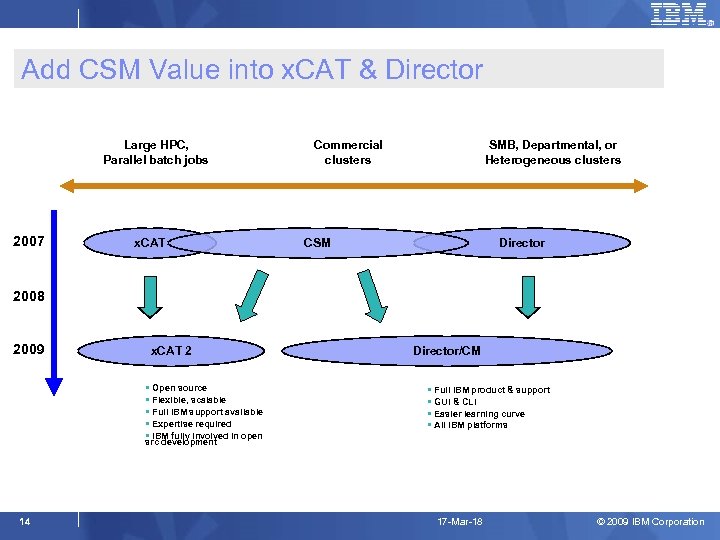

Add CSM Value into xCAT & Director
[Diagram: timeline 2007, 2008, 2009. 2007 products: xCAT, CSM, Director. Market segments: Large HPC, parallel batch jobs; Commercial clusters; SMB, departmental, or heterogeneous clusters.]
xCAT 2
§ Open source
§ Flexible, scalable
§ Full IBM support available
§ Expertise required
§ IBM fully involved in open src development
Director/CM
§ Full IBM product & support
§ GUI & CLI
§ Easier learning curve
§ All IBM platforms

Where is xCAT in use today?
§ NSF Teragrid (teragrid.org)
  – ~1500 IA64 nodes (2x proc/node), 4 sites, Myrinet
§ A Bank in America
  – n clouds @ 252 – 1008 iDPX nodes each, multi-site, rollout on-going, 10GE
§ University of Toronto (SciNet)
  – Hybrid 3864 iDPX/Linux (30,912 cores) and 104 P6/AIX (3,328 cores)
§ Weta Digital (xCAT -- one tool to rule them all)
  – 1200 Xeon blades (2x proc/node), Gigabit Ethernet
§ LANL Roadrunner
  – Phase 1: 2016 Opteron Nodes (8 core/node), IB, Stateless
  – Phase 3: 3240 LS21, 6480 QS22, IB, Stateless
§ Anonymous Petroleum Customer
  – 30,000 nodes, 20,000 in largest single cluster. Was Windows, now Linux.
§ IBM On-Demand Center
§ IBM GTS
  – "They can have my xCAT when they pry it from my cold dead hands." -- Douglas Myers, IBM GS Special Events

xCAT 2 Support Requirements
Attributes of support offering
§ 24x7 support
§ Worldwide
§ Close to traditional L1/L2/L3 model
§ Identical support mechanism for System x and p
§ Begins with xCAT 2.0 (9/2008)

xCAT 2 Team Members & Responsibilities
§ Egan Ford (architecture, scaling expert, customer input, marketing, cloud, etc.)
§ Jarrod Johnson (architecture & development, System x HW control, 1350 test, ESX, Xen, KVM, Linux Containers)
§ Bruce Potter (architecture, GUI)
§ Linda Mellor (development, HPC integration)
§ Lissa Valletta (documentation, general management functions)
§ Norm Nott (AIX deployment, AIX porting & open source)
§ Ling Gao (PERCS, monitoring, scaling)
§ Scot Sakolish (System p HW control)
§ Shujun Zhou (Roadrunner cluster setup/admin)
§ Jay Urbanski (Open source approval process)
§ Adaptive Computing (Hyper-V, Moab, cloud)
§ Sumavi
§ Other IBMers, BPs, and customers

xCAT Tech

xCAT 2.x Architecture
§ Everything is a node
  – Physical Nodes
  – Virtual Machines/LPARs/zVM
    • Xen, KVM, ESXi, ScaleMP
      – rpower, live migration, console logging, Linux and Windows guests.
  – Infrastructure
    • Terminal Servers
    • Switches
    • Storage
    • HMC

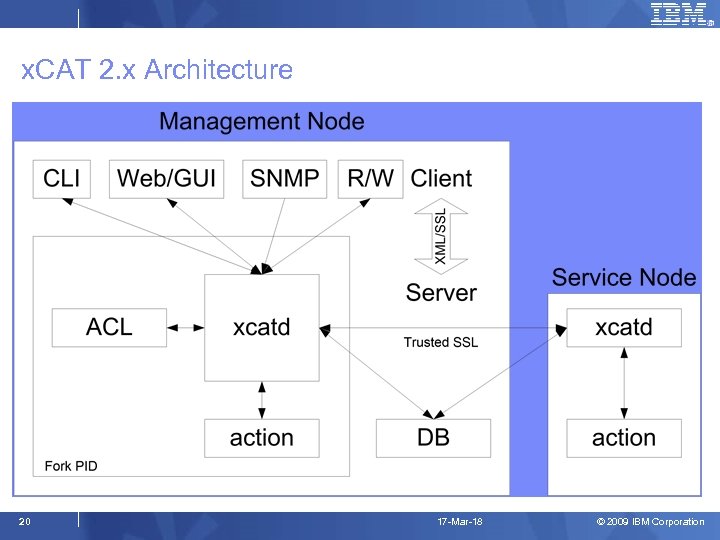

xCAT 2.x Architecture

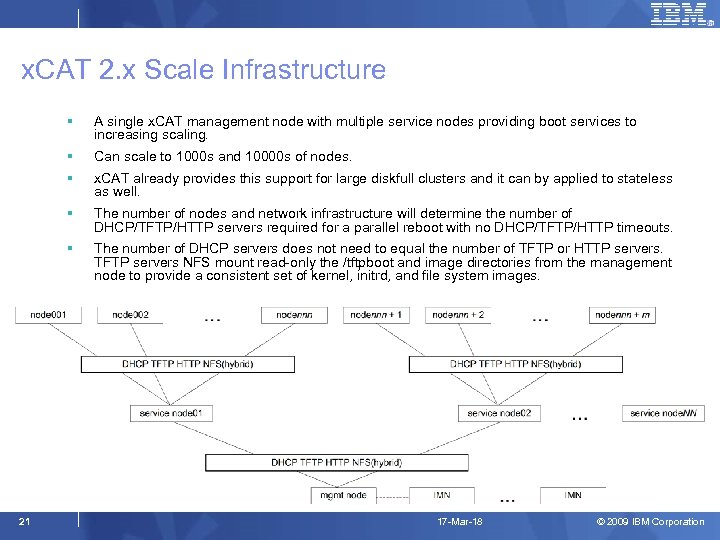

xCAT 2.x Scale Infrastructure
§ A single xCAT management node with multiple service nodes providing boot services to increase scaling.
§ Can scale to 1000s and 10,000s of nodes.
§ xCAT already provides this support for large diskful clusters, and it can be applied to stateless as well.
§ The number of nodes and the network infrastructure will determine the number of DHCP/TFTP/HTTP servers required for a parallel reboot with no DHCP/TFTP/HTTP timeouts.
§ The number of DHCP servers does not need to equal the number of TFTP or HTTP servers. TFTP servers NFS-mount the /tftpboot and image directories read-only from the management node to provide a consistent set of kernel, initrd, and file system images.
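
The sizing argument above can be sketched as simple arithmetic. The per-server capacities below are made-up illustrative numbers, not measured xCAT limits, and the function names are hypothetical; the point is only that DHCP and TFTP/HTTP server counts are sized independently.

```python
def servers_needed(node_count, nodes_per_server):
    """Smallest server count such that no server handles more than nodes_per_server nodes."""
    return -(-node_count // nodes_per_server)   # integer ceiling division


def assign_nodes(nodes, service_nodes):
    """Spread nodes round-robin across service nodes."""
    plan = {sn: [] for sn in service_nodes}
    for i, node in enumerate(nodes):
        plan[service_nodes[i % len(service_nodes)]].append(node)
    return plan


# Hypothetical capacities for a 4000-node parallel reboot.
dhcp_servers = servers_needed(4000, 1000)
tftp_servers = servers_needed(4000, 250)

nodes = [f"n{i:03d}" for i in range(1, 11)]
plan = assign_nodes(nodes, ["sn1", "sn2", "sn3"])
```

With these assumed capacities the two counts differ, which is the situation the last bullet describes.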

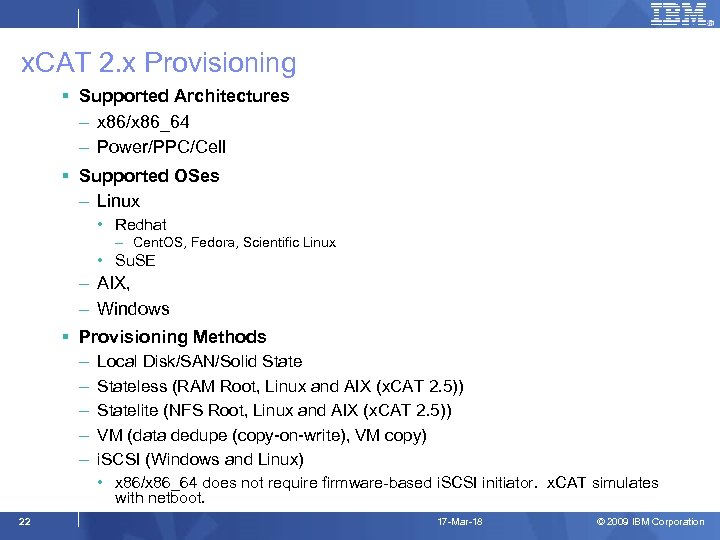

xCAT 2.x Provisioning
§ Supported Architectures
  – x86/x86_64
  – Power/PPC/Cell
§ Supported OSes
  – Linux
    • Red Hat
      – CentOS, Fedora, Scientific Linux
    • SuSE
  – AIX
  – Windows
§ Provisioning Methods
  – Local Disk/SAN/Solid State
  – Stateless (RAM Root, Linux and AIX (xCAT 2.5))
  – Statelite (NFS Root, Linux and AIX (xCAT 2.5))
  – VM (data dedupe (copy-on-write), VM copy)
  – iSCSI (Windows and Linux)
    • x86/x86_64 does not require firmware-based iSCSI initiator. xCAT simulates with netboot.

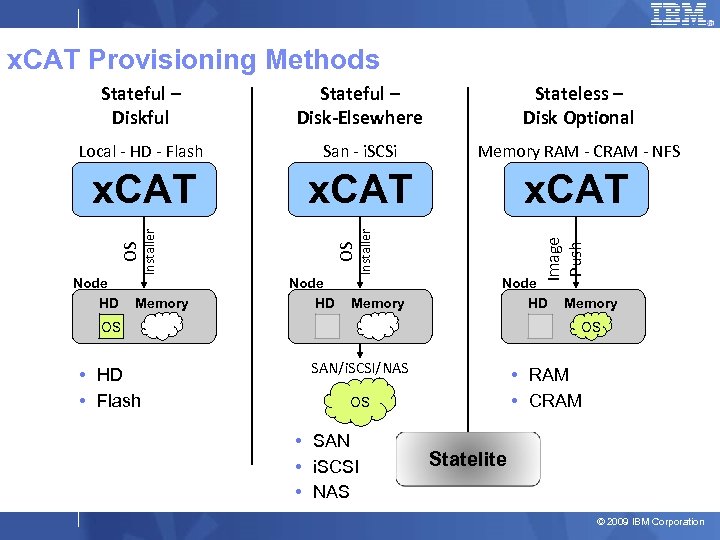

xCAT Provisioning Methods
[Diagram: four provisioning paths from xCAT to a node.
Stateless – Disk Optional: xCAT pushes the image; OS in memory (RAM, CRAM, NFS); optional local disk (HD, Flash) or SAN (iSCSI).
Stateful – Diskful: installer places the OS on the node's HD or Flash.
Stateful – Disk-Elsewhere: installer places the OS on SAN/iSCSI/NAS.
Statelite.]

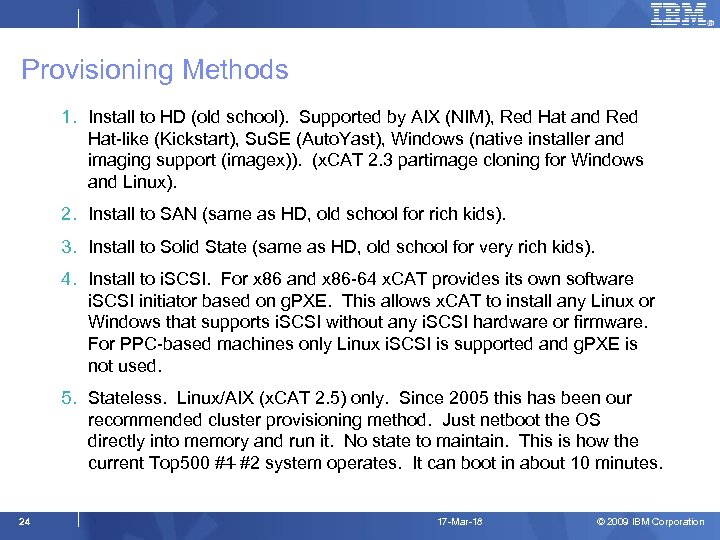

Provisioning Methods
1. Install to HD (old school). Supported by AIX (NIM), Red Hat and Red Hat-like (Kickstart), SuSE (AutoYaST), Windows (native installer and imaging support (imagex)). (xCAT 2.3 partimage cloning for Windows and Linux.)
2. Install to SAN (same as HD, old school for rich kids).
3. Install to Solid State (same as HD, old school for very rich kids).
4. Install to iSCSI. For x86 and x86-64, xCAT provides its own software iSCSI initiator based on gPXE. This allows xCAT to install any Linux or Windows that supports iSCSI without any iSCSI hardware or firmware. For PPC-based machines only Linux iSCSI is supported and gPXE is not used.
5. Stateless. Linux/AIX (xCAT 2.5) only. Since 2005 this has been our recommended cluster provisioning method. Just netboot the OS directly into memory and run it. No state to maintain. This is how the current Top 500 #1 #2 system operates. It can boot in about 10 minutes.
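
As a rough illustration of method 5, the following sketch only builds the command lines an administrator might issue to netboot a set of nodes into a stateless image. It assumes the xCAT 2.x `nodeset` and `rpower` commands accept the `netboot` and `boot` arguments as shown; verify this against the installed release. The noderange `n001-n004` and the helper names are hypothetical.

```python
import shlex
import subprocess


def stateless_reboot_plan(noderange):
    """Return the commands as argv lists; nothing is executed here."""
    return [
        # Assumed usage: point the nodes at their netboot (stateless) image.
        ["nodeset", noderange, "netboot"],
        # Assumed usage: (re)boot the nodes so they load the image over the network.
        ["rpower", noderange, "boot"],
    ]


def run_plan(plan, execute=False):
    """Return the shell form of each command; run them only when execute=True."""
    lines = [shlex.join(argv) for argv in plan]
    if execute:
        for argv in plan:
            subprocess.run(argv, check=True)
    return lines


plan = stateless_reboot_plan("n001-n004")   # hypothetical noderange
dry_run = run_plan(plan)                    # dry run: no commands are executed
```

Keeping the dry run as the default means the sketch can be inspected on a machine without xCAT installed.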

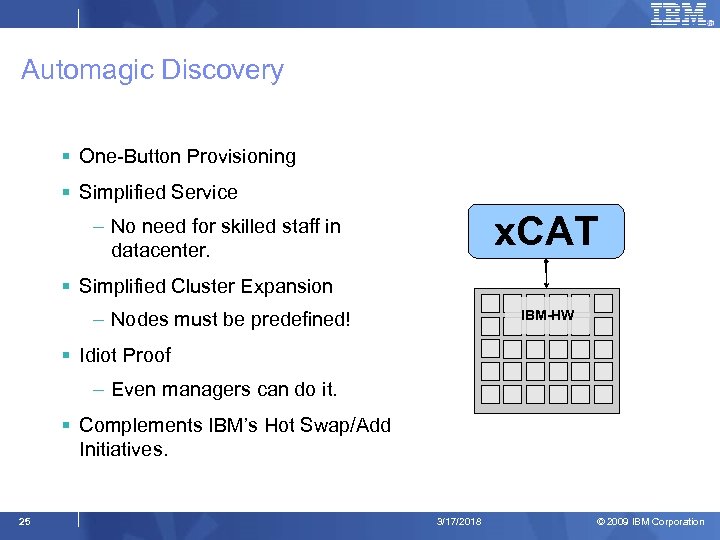

Automagic Discovery
§ One-Button Provisioning
§ Simplified Service
  – No need for skilled staff in datacenter.
§ Simplified Cluster Expansion
  – Nodes must be predefined!
§ Idiot Proof
  – Even managers can do it.
§ Complements IBM's Hot Swap/Add Initiatives.
[Diagram labels: xCAT, IBM-HW]

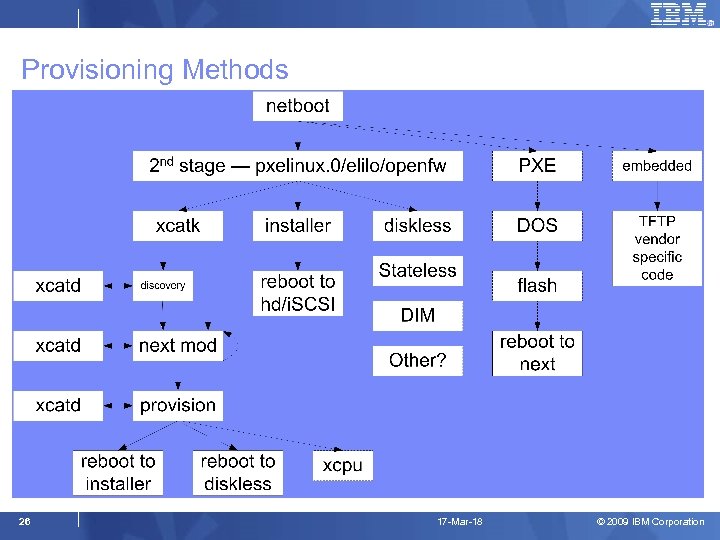

Provisioning Methods

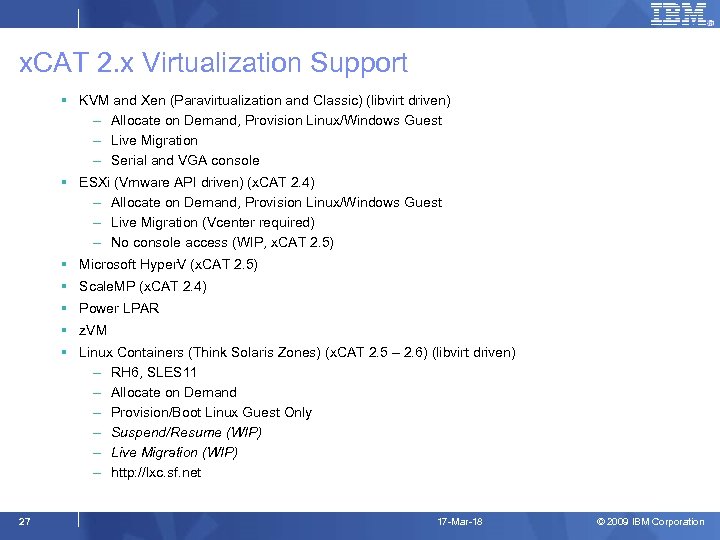

xCAT 2.x Virtualization Support
§ KVM and Xen (Paravirtualization and Classic) (libvirt driven)
  – Allocate on Demand, Provision Linux/Windows Guest
  – Live Migration
  – Serial and VGA console
§ ESXi (VMware API driven) (xCAT 2.4)
  – Allocate on Demand, Provision Linux/Windows Guest
  – Live Migration (vCenter required)
  – No console access (WIP, xCAT 2.5)
§ Microsoft Hyper-V (xCAT 2.5)
§ ScaleMP (xCAT 2.4)
§ Power LPAR
§ zVM
§ Linux Containers (Think Solaris Zones) (xCAT 2.5 – 2.6) (libvirt driven)
  – RH 6, SLES 11
  – Allocate on Demand
  – Provision/Boot Linux Guest Only
  – Suspend/Resume (WIP)
  – Live Migration (WIP)
  – http://lxc.sf.net

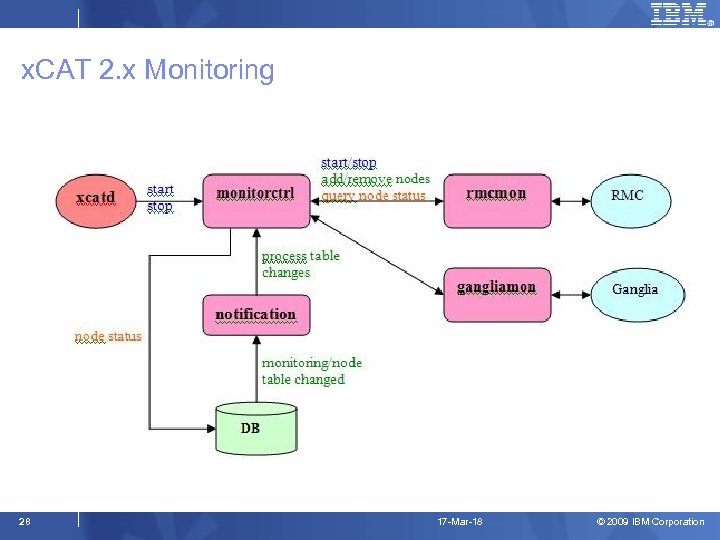

xCAT 2.x Monitoring

What is Moab?
§ The Brain: Intelligent Management and Automation Engine.
§ Policy and Service Level Enforcer.
§ Provides simple Web-based job management, graphical cluster administration, and management reporting tools.

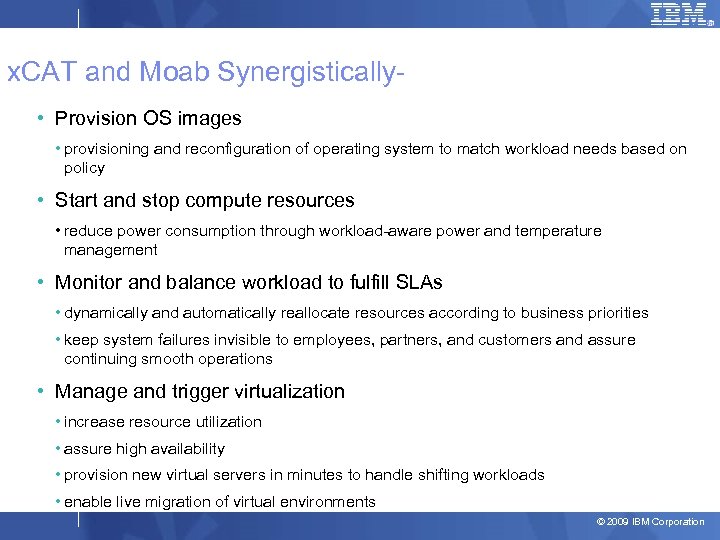

xCAT and Moab Synergistically
• Provision OS images
  • Provisioning and reconfiguration of the operating system to match workload needs based on policy
• Start and stop compute resources
  • Reduce power consumption through workload-aware power and temperature management
• Monitor and balance workload to fulfill SLAs
  • Dynamically and automatically reallocate resources according to business priorities
  • Keep system failures invisible to employees, partners, and customers and assure continuing smooth operations
• Manage and trigger virtualization
  • Increase resource utilization
  • Assure high availability
  • Provision new virtual servers in minutes to handle shifting workloads
  • Enable live migration of virtual environments

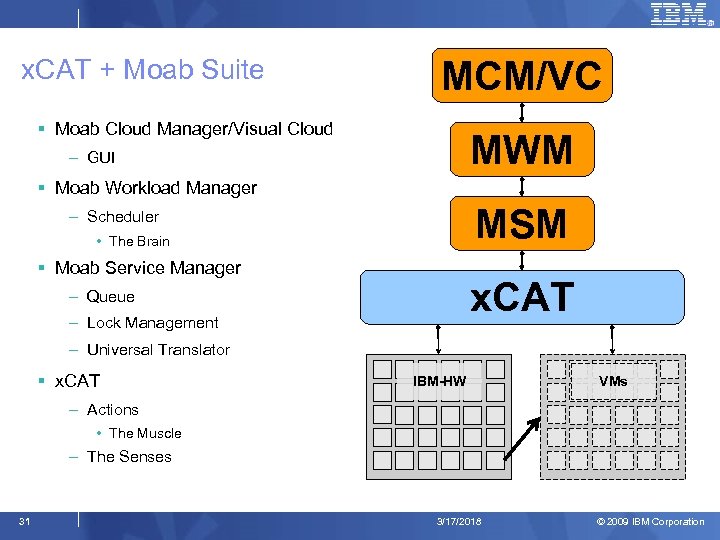

xCAT + Moab Suite
§ Moab Cloud Manager/Visual Cloud (MCM/VC)
  – GUI
§ Moab Workload Manager (MWM)
  – Scheduler
    • The Brain
§ Moab Service Manager (MSM)
  – Queue
  – Lock Management
  – Universal Translator
§ xCAT
  – Actions
    • The Muscle
  – The Senses
[Diagram stack, top to bottom: MCM/VC, MWM, MSM, xCAT, IBM-HW and VMs]

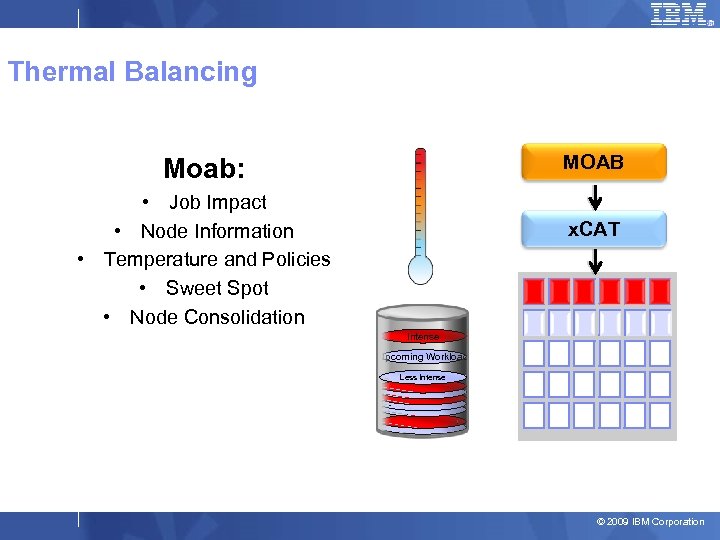

Thermal Balancing
Moab:
• Job Impact
• Node Information
• Temperature and Policies
• Sweet Spot
• Node Consolidation
[Diagram labels: Moab, xCAT, Upcoming Workload, Intense, Less Intense]

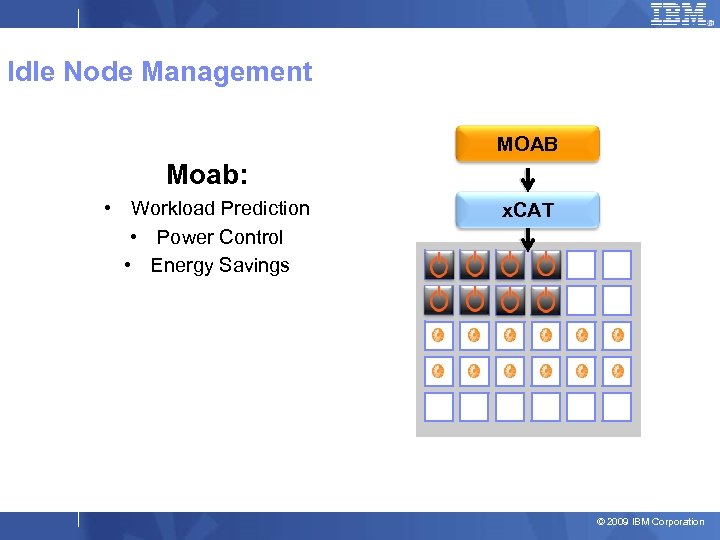

Idle Node Management
Moab:
• Workload Prediction
• Power Control
• Energy Savings
[Diagram labels: Moab, xCAT]
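
A minimal sketch of the idea behind this slide, assuming a policy that is not stated in the deck: keep enough idle nodes powered on to cover the predicted demand plus a small reserve, and mark the rest for power-off. The function name, the `reserve` parameter, and the node names are hypothetical, and Moab's actual policy engine is not shown.

```python
def nodes_to_power_off(idle_nodes, predicted_demand, reserve=1):
    """Return the idle nodes that can be powered off.

    Keeps predicted_demand + reserve idle nodes powered on (hypothetical policy).
    """
    keep = max(predicted_demand, 0) + max(reserve, 0)
    ordered = sorted(idle_nodes)      # deterministic choice of which nodes stay on
    return ordered[keep:]


idle = ["n04", "n01", "n06", "n02", "n05", "n03"]
power_off = nodes_to_power_off(idle, predicted_demand=2, reserve=1)
```

The resulting list would then be handed to whatever performs the power control; in the deck that role belongs to xCAT.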

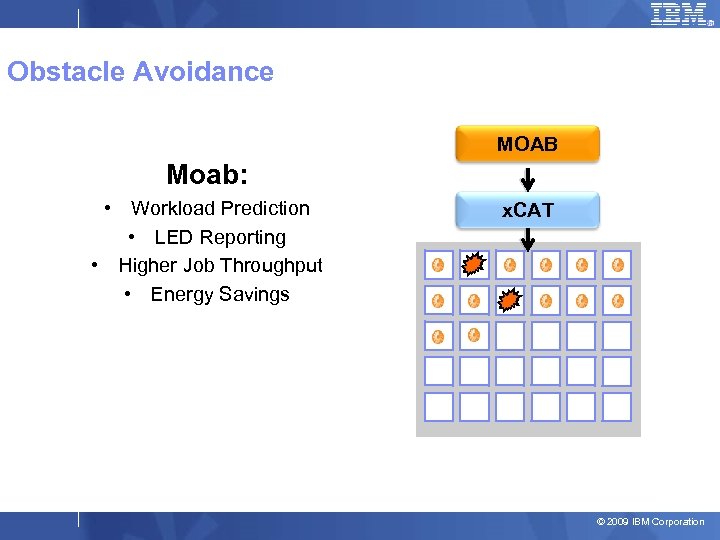

Obstacle Avoidance
Moab:
• Workload Prediction
• LED Reporting
• Higher Job Throughput
• Energy Savings
[Diagram labels: Moab, xCAT]

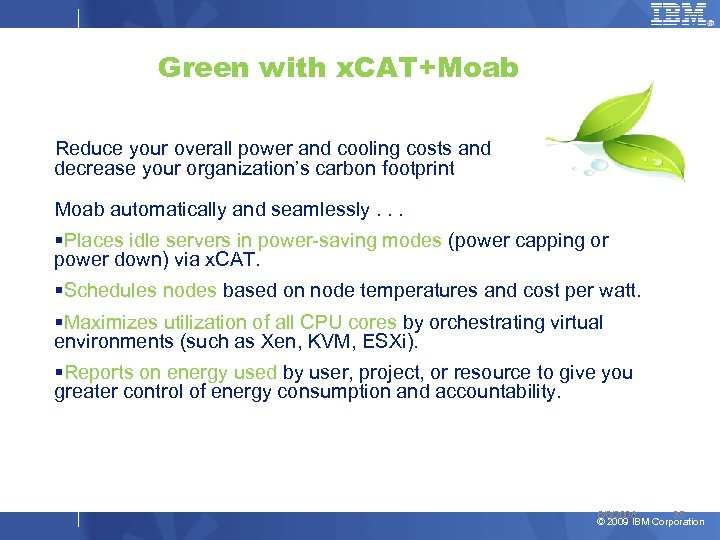

Green with xCAT+Moab
Reduce your overall power and cooling costs and decrease your organization's carbon footprint.
Moab automatically and seamlessly...
§ Places idle servers in power-saving modes (power capping or power down) via xCAT.
§ Schedules nodes based on node temperatures and cost per watt.
§ Maximizes utilization of all CPU cores by orchestrating virtual environments (such as Xen, KVM, ESXi).
§ Reports on energy used by user, project, or resource to give you greater control of energy consumption and accountability.
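
Scheduling "based on node temperatures and cost per watt" could look roughly like the sketch below. The ranking rule (cheapest power first, then coolest), the temperature ceiling, and all names and numbers are assumptions for illustration; the deck does not describe Moab's actual algorithm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    temp_c: float          # current node temperature in Celsius
    cost_per_watt: float   # relative energy cost at the node's site


def pick_nodes(nodes, count, max_temp_c=75.0):
    """Pick up to `count` nodes, skipping hot nodes and preferring cheap, cool ones."""
    eligible = [n for n in nodes if n.temp_c < max_temp_c]
    ranked = sorted(eligible, key=lambda n: (n.cost_per_watt, n.temp_c, n.name))
    return [n.name for n in ranked[:count]]


nodes = [
    Node("a", 60.0, 0.10),
    Node("b", 80.0, 0.05),   # cheapest, but above the temperature ceiling
    Node("c", 55.0, 0.10),
    Node("d", 70.0, 0.08),
]
chosen = pick_nodes(nodes, count=2)
```

A real policy would likely weigh the two criteria rather than sort lexicographically; the tuple key is simply the shortest way to show both inputs being used.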

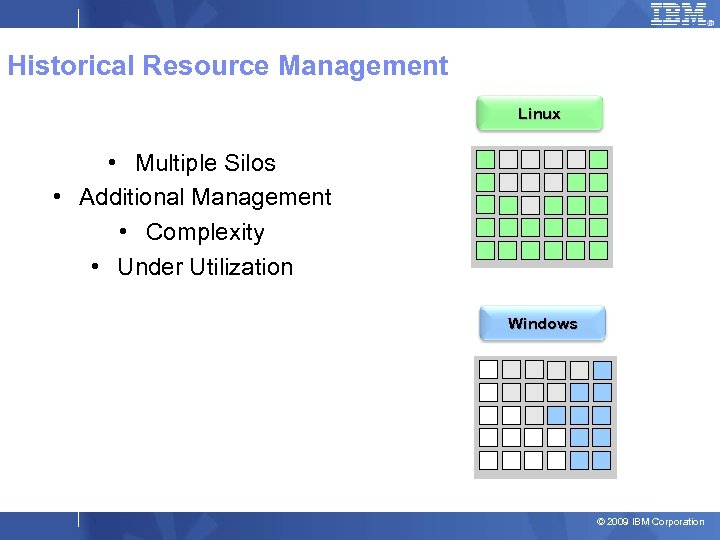

Historical Resource Management
• Multiple Silos
• Additional Management
• Complexity
• Under Utilization
[Diagram labels: Linux, Windows]

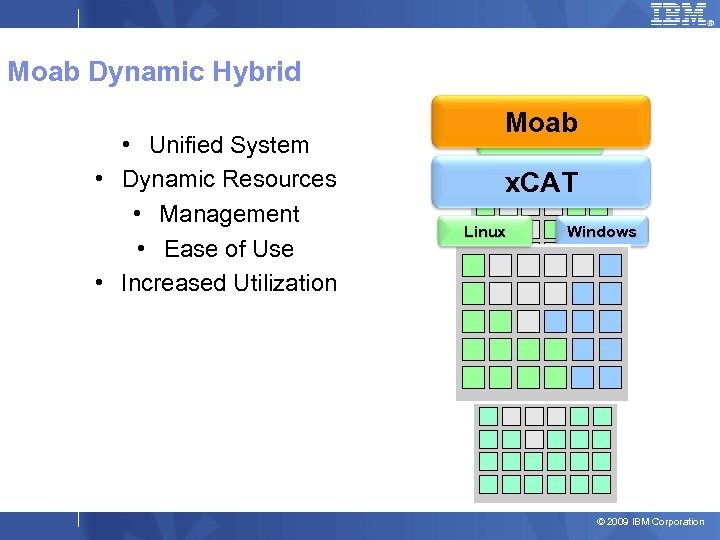

Moab Dynamic Hybrid
• Unified System
• Dynamic Resources
• Management
• Ease of Use
• Increased Utilization
[Diagram labels: Moab, xCAT, Linux, Linux, Windows]

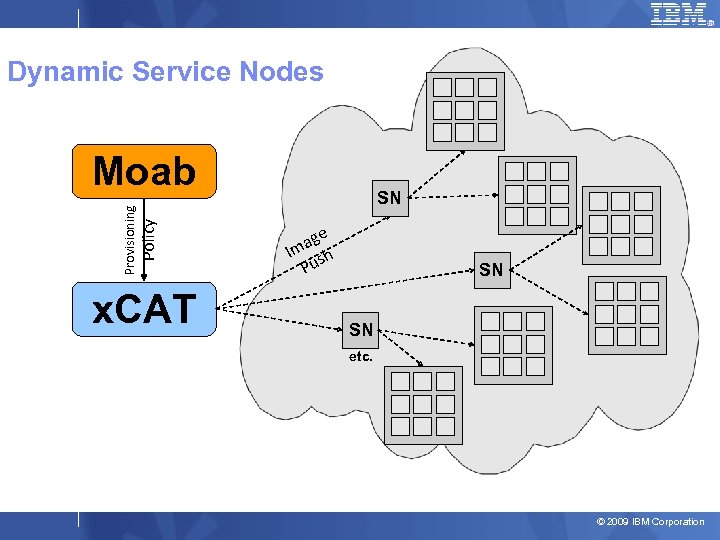

Dynamic Service Nodes
[Diagram labels: Policy, Provisioning, Moab, xCAT, Push Image, SN, SN, SN, etc.]

Virtual Machine Automation
§ Create/Delete VMs
§ Dynamic Add/Remove
§ Live Migration (KVM, Xen, ESXi)
§ Stateless Hypervisor (KVM, Xen, ESXi, ScaleMP)
  – Multiple HV Support
§ Balancing
§ Consolidation
§ Route Around Current/Future Problems
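
Consolidation can be viewed as a bin-packing problem. The sketch below uses first-fit decreasing on memory alone, which is a swapped-in textbook heuristic chosen for illustration and not a description of how Moab or xCAT place VMs. VM names and sizes are hypothetical.

```python
def consolidate(vm_mem_gb, host_capacity_gb):
    """Pack VMs onto as few equal-sized hosts as first-fit decreasing finds.

    vm_mem_gb: dict of VM name -> memory in GB.
    Returns a list of hosts, each a list of VM names.
    """
    hosts = []   # each entry: {"free": remaining GB, "vms": [names]}
    for name, mem in sorted(vm_mem_gb.items(), key=lambda kv: (-kv[1], kv[0])):
        if mem > host_capacity_gb:
            raise ValueError(f"{name} does not fit on any host")
        for host in hosts:
            if host["free"] >= mem:          # first host with enough room
                host["free"] -= mem
                host["vms"].append(name)
                break
        else:                                # no existing host fits: open a new one
            hosts.append({"free": host_capacity_gb - mem, "vms": [name]})
    return [h["vms"] for h in hosts]


vms = {"web": 4, "db": 8, "cache": 2, "batch": 6, "mail": 4}
placement = consolidate(vms, host_capacity_gb=12)
```

Hosts left empty after such a pass are the candidates for power-down, and live migration is what would move the running VMs to their new hosts.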

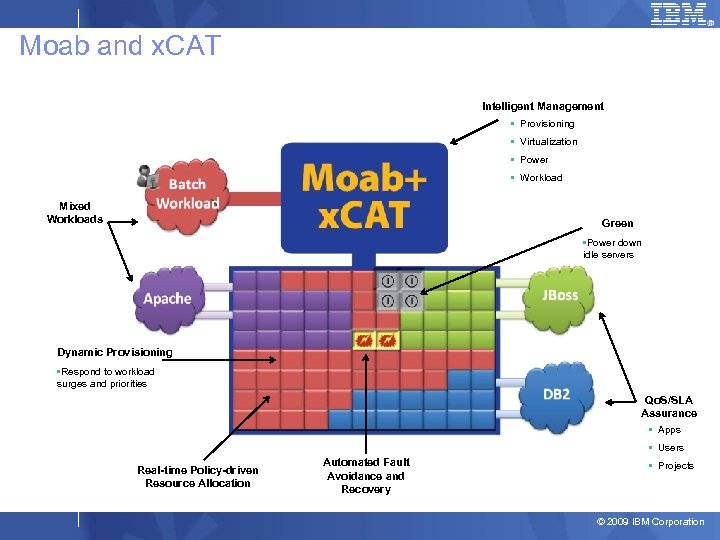

Moab and xCAT
Intelligent Management
• Provisioning
• Virtualization
• Power
• Workload
Mixed Workloads
Green
• Power down idle servers
Dynamic Provisioning
• Respond to workload surges and priorities
QoS/SLA Assurance
• Apps
• Users
• Projects
Real-time Policy-driven Resource Allocation
Automated Fault Avoidance and Recovery

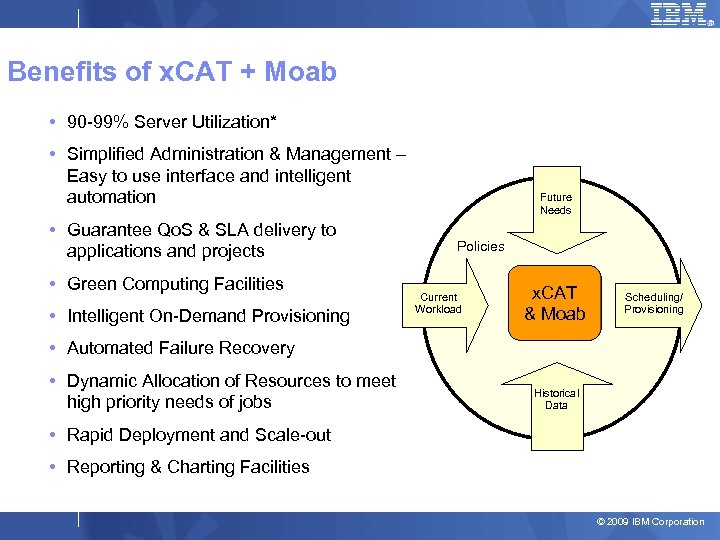

Benefits of xCAT + Moab
• 90-99% Server Utilization*
• Simplified Administration & Management
  – Easy to use interface and intelligent automation
• Guarantee QoS & SLA delivery to applications and projects
• Green Computing Facilities
• Intelligent On-Demand Provisioning
• Automated Failure Recovery
• Dynamic Allocation of Resources to meet high priority needs of jobs
• Rapid Deployment and Scale-out
• Reporting & Charting Facilities
[Diagram: Future Needs, Policies, Current Workload, and Historical Data feed xCAT & Moab Scheduling/Provisioning]

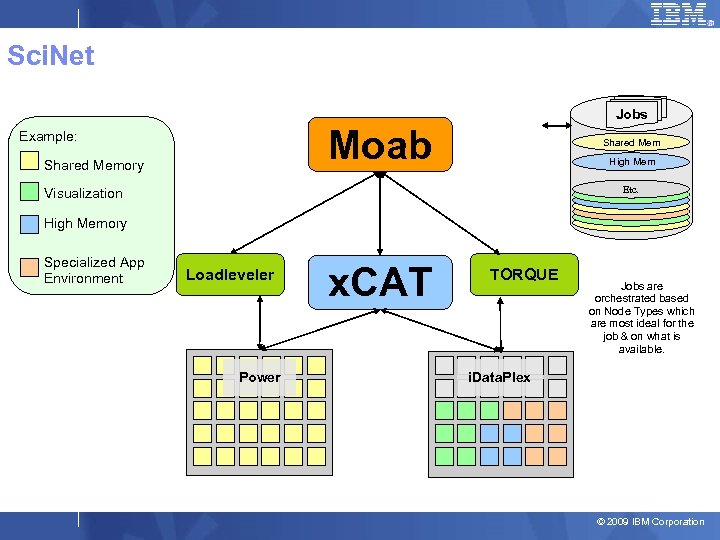

Case Studies: IBM Systems w/Moab
• SciNet
  • Moab provides key adaptive scheduling of xCAT's on-demand environment provisioning
  • Cluster Resources and IBM Canada jointly worked to respond to the RFP, present to the customer, and secure this win
• Roadrunner
  • 1-petaFLOP System
  • Cell-based processors
• IBM's VLP
  • Moab enables IBM to host resources for software partners to enable testing

SciNet
Jobs are orchestrated based on Node Types which are most ideal for the job & on what is available.
[Diagram: Jobs flow into Moab. Example node types: Shared Memory, High Memory, Visualization, Specialized App Environment, etc. Resource managers and platforms: LoadLeveler on Power, TORQUE on iDataPlex, with xCAT.]
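
Placing a job on "the most ideal node type that is available" can be sketched as a preference list with fallback. The node type names, counts, and function below are hypothetical and only illustrate the idea; they do not reflect SciNet's actual configuration.

```python
def place_job(preferences, free_nodes):
    """Place a job on the first preferred node type that still has a free node.

    preferences: node types in order of suitability for the job.
    free_nodes: dict of node type -> free node count (updated in place).
    Returns the chosen node type, or None if the job must wait.
    """
    for node_type in preferences:
        if free_nodes.get(node_type, 0) > 0:
            free_nodes[node_type] -= 1
            return node_type
    return None


free = {"shared_memory": 1, "high_memory": 0, "standard": 2}
first = place_job(["high_memory", "shared_memory"], free)    # ideal type is full
second = place_job(["shared_memory", "standard"], free)      # shared_memory now used up
third = place_job(["high_memory"], free)                     # nothing suitable is free
```

In a setup with on-demand provisioning, a `None` result is also the point where a scheduler could ask for a free node to be reprovisioned into the wanted type instead of leaving the job queued.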

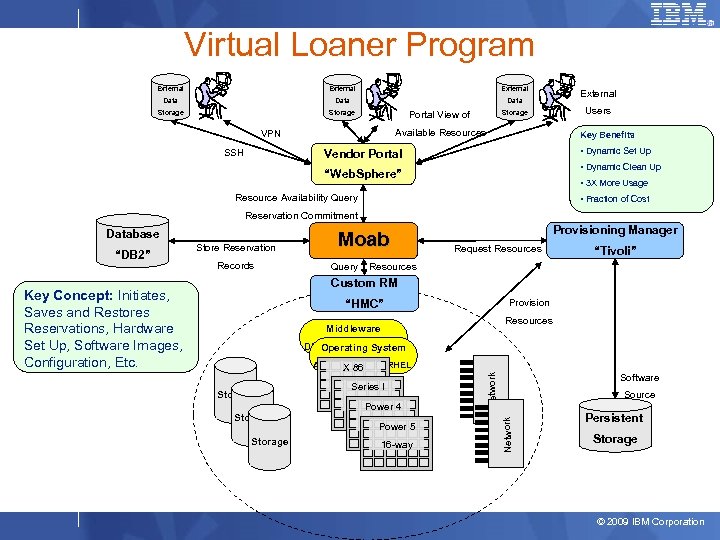

Virtual Loaner Program
Key Benefits
• Dynamic Set Up
• Dynamic Clean Up
• 3X More Usage
• Fraction of Cost
Key Concept: Initiates, Saves and Restores Reservations, Hardware Set Up, Software Images, Configuration, Etc.
[Diagram labels: External Users (VPN, SSH); Portal View of Available Resources; Vendor Portal ("WebSphere"); Resource Availability Query; Reservation Commitment; Database ("DB2"), Store Reservation Records; Provisioning Manager ("Tivoli"), Request Resources, Query Resources; Custom RM ("HMC"), Provision Resources; Moab; Middleware: DB2, WebSphere, ...; Operating System: AIX, i5 OS, SLES, RHEL; Hardware: Series X, Series I, Power 4 32-way, Power 5 16-way, X86; Storage, External Data Storage, Persistent Storage; Network; Software Source]

Summary
§ Available Today
  – xCAT 2.3
  – Moab (xSeries Part Number)
§ Available Soon
  – xCAT 2.4 (April 30 2010)
§ Available not-so-soon
  – xCAT 2.5 (October 31 2010)

Who's responsible for this stuff?
§ Blame me:
  – Egan Ford
  – egan@us.ibm.com
  – egan@sense.net (email both for faster service)
