b4d74726f42caa43438b362d92859568.ppt

- Number of slides: 22

WWW Robots & Robots Exclusion Standard
Babulal K.R
Email: Babulal.K@gcisolutions.in

WWW Robots
• Who am I?
  – WWW robots (also called wanderers or spiders) are programs that traverse many pages in the World Wide Web by recursively retrieving linked pages.
• Who gave birth to me?
  – The robots.txt protocol was created by consensus in June 1994 by members of the robots mailing list (robots-request@nexor.co.uk).
• Nobody rules me!
  – There is no official standards body or RFC for the protocol.

About Me
• A program or automated script that browses the World Wide Web in a methodical, automated manner.
• Less frequently known as crawlers, ants, automatic indexers, bots, and worms.
• I am not a normal web browser: browsers are operated by a human and don't automatically retrieve referenced documents.
• I am good if I am well designed, professionally operated, and provide a valuable service.

I am Bad!!!
• I can swamp servers with rapid-fire requests.
• I can overload networks and servers.
• The result: refused connections, high load, performance slowdowns, or, in extreme cases, a system crash.
Get rid of me >>

How do I keep a robot off my server?

Robots Exclusion Standard or robots.txt Protocol, Ver 1.0.0
Well, I said nobody rules me. Then what is this???

Let me explain
• It is not an official standard backed by a standards body, nor is it owned by any commercial organization.
• It is not enforced by anybody, and there is no guarantee that all current and future robots will use it.
Rather:
• The robots.txt protocol was created by consensus in June 1994 by members of the robots mailing list.
• The protocol is purely advisory.
• It is offered by the WWW community to protect WWW servers against unwanted access by robots.

Method
• Create a file on the server that specifies an access policy for robots.
• This file must be accessible via HTTP at the local URL "/robots.txt".
Why robots.txt? Why not foo.txt? The name was chosen considering:
1. File naming restrictions of all common operating systems.
2. It should not require extra server configuration.
3. It should indicate the purpose of the file.
4. It should be easy to remember.
5. The likelihood of clashing with existing files should be minimal.
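Because the policy always lives at the local URL "/robots.txt", a robot can derive it from any page URL on the same server. A minimal sketch, assuming a hypothetical helper named `robots_url` that keeps the scheme and host (including any port) and replaces everything else:

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the /robots.txt URL that governs page_url (hypothetical helper)."""
    parts = urlsplit(page_url)
    # Keep scheme and host (with port, if any); drop path, query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("http://www.example.com/a/b.html?x=1#top"))
```

Note that the file is per server: a page on a different host or port is governed by that host's own "/robots.txt".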

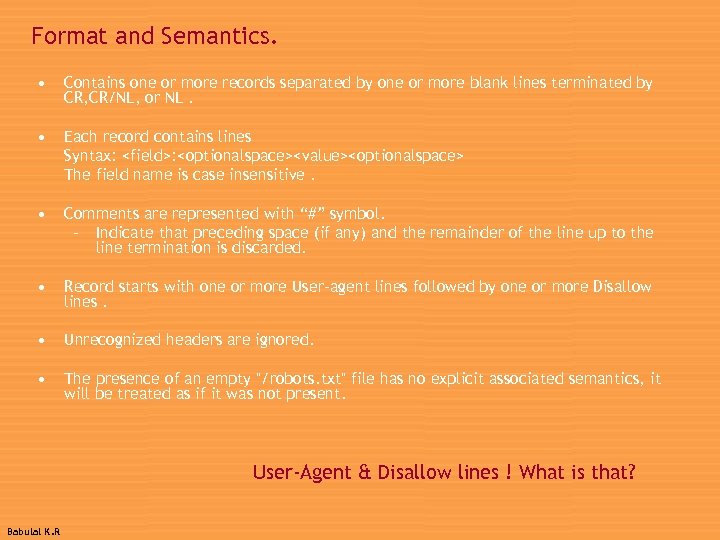

Format and Semantics
• The file contains one or more records separated by one or more blank lines; lines are terminated by CR, CR/NL, or NL.
• Each record contains lines of the form:
    <field>:<optionalspace><value><optionalspace>
  The field name is case insensitive.
• Comments are introduced with the "#" character.
  – The "#", any whitespace preceding it, and the remainder of the line up to the line terminator are discarded.
• A record starts with one or more User-agent lines, followed by one or more Disallow lines.
• Unrecognized headers are ignored.
• An empty "/robots.txt" file has no explicit associated semantics; it is treated as if it were not present, i.e. all robots will consider themselves welcome.
User-agent & Disallow lines! What is that?
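The format rules above are simple enough to parse in a few lines. This is a minimal sketch, not a reference implementation: the function name `parse_records` and the dictionary shape it returns are assumptions for illustration. It accepts all three line terminators, strips comments, treats field names case-insensitively, ends a record at a blank line, and ignores unrecognized headers.

```python
import re


def parse_records(text):
    """Split a robots.txt body into records (sketch of the 1.0 format rules)."""
    records, current = [], None
    # Accept CR/NL, CR, or NL as line terminators.
    for raw in re.split(r"\r\n|\r|\n", text):
        if not raw.strip():
            current = None  # a blank line ends the current record
            continue
        # '#' discards preceding whitespace and the rest of the line.
        line = raw.split("#", 1)[0].strip()
        if ":" not in line:
            continue  # comment-only or malformed line
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()  # case-insensitive names
        if field == "user-agent":
            # A User-agent line after Disallow lines starts a new record.
            if current is None or current["disallow"]:
                current = {"user-agent": [], "disallow": []}
                records.append(current)
            current["user-agent"].append(value)
        elif field == "disallow" and current is not None:
            current["disallow"].append(value)
        # Any other field is an unrecognized header and is ignored.
    return records


print(parse_records("User-agent: *\nDisallow: /tmp/ # scratch\n\nUser-agent: gci\nDisallow:\n"))
```

An empty file yields an empty list of records, which matches the "treated as if it were not present" rule.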

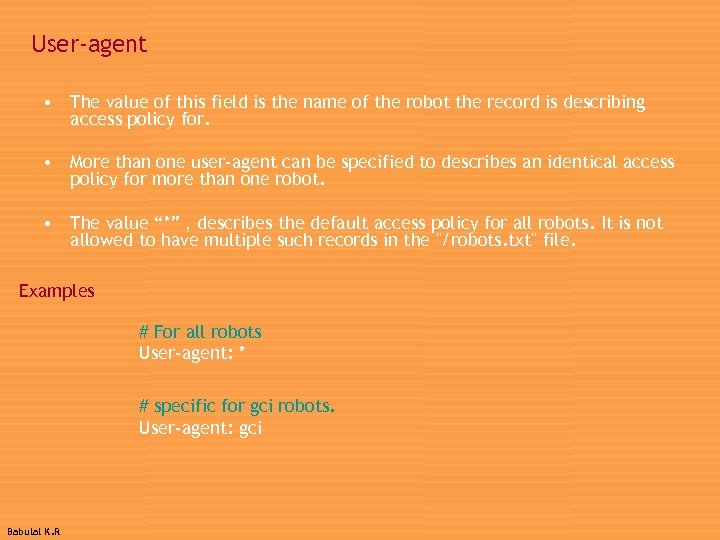

User-agent
• The value of this field is the name of the robot whose access policy the record describes.
• More than one User-agent line can be given to apply an identical access policy to more than one robot.
• The value "*" describes the default access policy for any robot that has not matched any of the other records. Multiple such records are not allowed in the "/robots.txt" file.
Examples:

    # For all robots
    User-agent: *
    # Specific to gci robots
    User-agent: gci
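The 1.0 document recommends that robots be liberal in interpreting this field, using a case-insensitive substring match of the name without version information. The sketch below shows that selection logic with "*" as the fallback; the helper name `select_record` and the `(user_agents, disallow_values)` tuple shape are hypothetical.

```python
def select_record(records, robot_name):
    """Return the Disallow values that apply to robot_name, or None.

    records: list of (user_agents, disallow_values) tuples (hypothetical shape).
    """
    # Drop version information ("gci/2.1" -> "gci"); compare case-insensitively.
    name = robot_name.split("/", 1)[0].lower()
    default = None
    for agents, disallows in records:
        if "*" in agents:
            # Remember the default record, but keep looking for a specific match.
            if default is None:
                default = disallows
            continue
        if any(agent.lower() in name for agent in agents):
            return disallows
    return default  # None means no record applies, so nothing is disallowed


rules = [(["*"], ["/private/map/"]), (["gci"], [""])]
print(select_record(rules, "GCI/1.0"), select_record(rules, "otherbot"))
```

A specific record wins even when the "*" record appears first in the file, because "*" only describes robots that matched nothing else.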

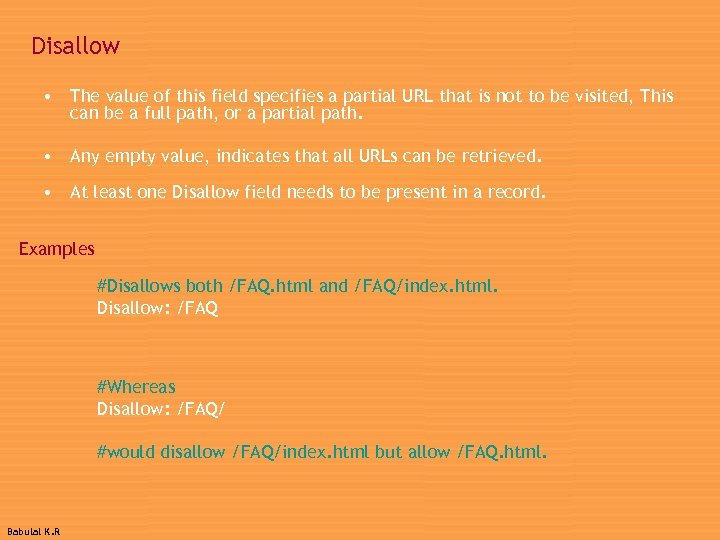

Disallow
• The value of this field specifies a partial URL that is not to be visited. This can be a full path or a partial path; any URL that starts with this value will not be retrieved.
• An empty value indicates that all URLs can be retrieved.
• At least one Disallow field must be present in a record.
Examples:

    # Disallows both /FAQ.html and /FAQ/index.html
    Disallow: /FAQ
    # Whereas this would disallow /FAQ/index.html but allow /FAQ.html
    Disallow: /FAQ/
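Disallow matching in 1.0 is a plain prefix test, which is exactly why "/FAQ" and "/FAQ/" behave differently. A minimal sketch; `is_allowed` is a hypothetical helper name:

```python
def is_allowed(path, disallow_values):
    """1.0 semantics: a path is blocked if it starts with any non-empty Disallow value."""
    for value in disallow_values:
        # An empty Disallow value blocks nothing.
        if value and path.startswith(value):
            return False
    return True


for rule in ("/FAQ", "/FAQ/"):
    for path in ("/FAQ.html", "/FAQ/index.html"):
        print(rule, path, is_allowed(path, [rule]))
```

Because it is a prefix test, "/FAQ" also blocks unrelated paths such as "/FAQs-old.html"; the trailing slash is how an administrator limits a rule to one directory.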

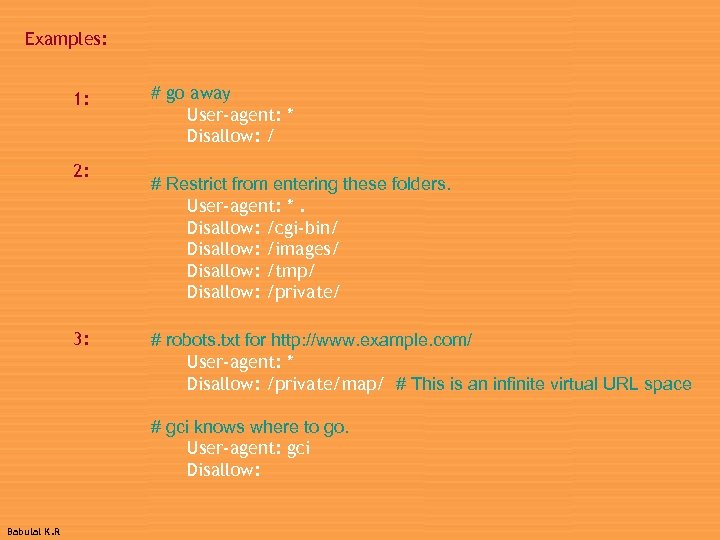

Examples:
1:

    # go away
    User-agent: *
    Disallow: /

2:

    # Restrict from entering these folders.
    User-agent: *
    Disallow: /cgi-bin/
    Disallow: /images/
    Disallow: /tmp/
    Disallow: /private/

3:

    # robots.txt for http://www.example.com/
    User-agent: *
    Disallow: /private/map/ # This is an infinite virtual URL space

    # gci knows where to go.
    User-agent: gci
    Disallow:
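Python's standard library ships a 1.0-style parser, `urllib.robotparser.RobotFileParser`, which can check example 3 without any network access: `parse()` takes the file's lines and `can_fetch()` answers per robot and URL. The robot name "otherbot" is made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Example 3 from the slide, verbatim.
EXAMPLE_3 = """\
# robots.txt for http://www.example.com/
User-agent: *
Disallow: /private/map/ # This is an infinite virtual URL space

# gci knows where to go.
User-agent: gci
Disallow:
"""

parser = RobotFileParser()
parser.parse(EXAMPLE_3.splitlines())

for agent, url in [
    ("gci", "http://www.example.com/private/map/area1"),
    ("otherbot", "http://www.example.com/private/map/area1"),
    ("otherbot", "http://www.example.com/index.html"),
]:
    print(agent, url, parser.can_fetch(agent, url))
```

The empty `Disallow:` in the gci record lets gci go everywhere, while every other robot falls through to the "*" record.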

An Extended Standard for Robot Exclusion, Ver 2.0
More standards? No jokes, please!!!

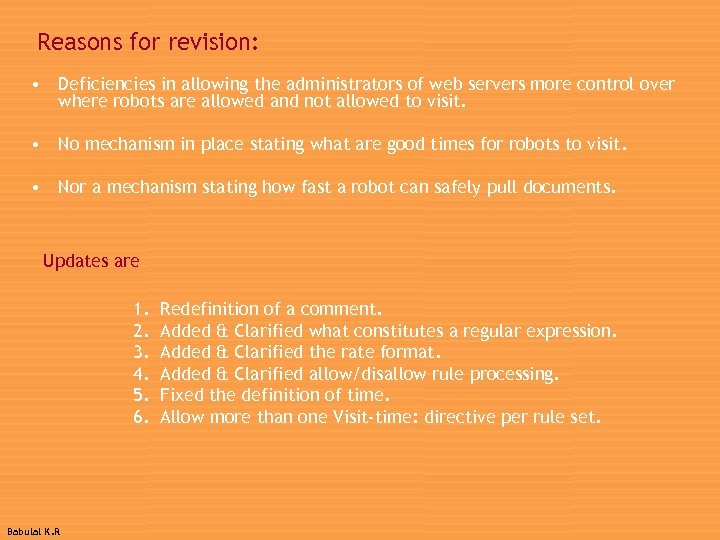

Reasons for revision:
• The 1.0 standard gives web server administrators too little control over where robots are and are not allowed to visit.
• There is no mechanism for stating good times for robots to visit.
• Nor is there a mechanism for stating how fast a robot can safely retrieve documents.
Updates are:
1. Redefinition of a comment.
2. Added and clarified what constitutes a regular expression.
3. Added and clarified the rate format.
4. Added and clarified allow/disallow rule processing.
5. Fixed the definition of time.
6. Allowed more than one Visit-time: directive per rule set.


Directives
Syntax: <directive> ':' [<whitespace>] <data> [<whitespace>] [<comment>] <end-of-line>
Robot-version:
1. The version is a two-part number, separated by a period.
2. The first number indicates major revisions.
3. The second number represents clarifications or fixes.
4. This directive follows the User-agent: header. (If it does not immediately follow, or is missing, the robot is to assume the rule set follows the Version 1.0 standard.)
5. Only one Robot-version: header per rule set is allowed.
Syntax: Robot-version: <version>
Example:

    Robot-version: 2.0 # uses 2.0 spec
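Rule 4 means a robot has to look at position, not just presence: a Robot-version: line only counts when it comes right after the User-agent: lines. A sketch of that check for one rule set's lines; the helper name `rule_set_version` is hypothetical, and it assumes "#" comments are stripped as in 1.0.

```python
def rule_set_version(lines):
    """Return (major, minor) for one rule set's lines (sketch of the 2.0 rule)."""
    fields = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments (assumed 1.0-style)
        if ":" in line:
            name, value = line.split(":", 1)
            fields.append((name.strip().lower(), value.strip()))
    i = 0
    # Skip the User-agent: lines that open the rule set.
    while i < len(fields) and fields[i][0] == "user-agent":
        i += 1
    # Robot-version: counts only if it immediately follows the User-agent: lines.
    if i < len(fields) and fields[i][0] == "robot-version":
        major, minor = fields[i][1].split(".", 1)
        return int(major), int(minor)
    return (1, 0)  # missing or misplaced: assume the Version 1.0 standard


print(rule_set_version(["User-agent: gci", "Robot-version: 2.0 # uses 2.0 spec", "Allow: /"]))
```

A malformed version such as "2" raises `ValueError` here; a real robot might prefer to fall back to 1.0 instead.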

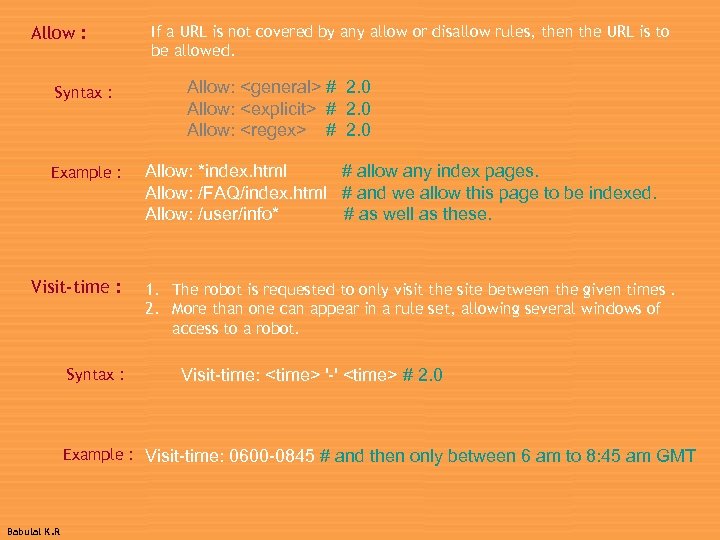

Allow:
• If a URL is not covered by any Allow or Disallow rule, then the URL is to be allowed.
Syntax:

    Allow: <general> # 2.0
    Allow: <explicit> # 2.0
    Allow: <regex> # 2.0

Example:

    Allow: *index.html # allow any index pages.
    Allow: /FAQ/index.html # and we allow this page to be indexed.
    Allow: /user/info* # as well as these.

Visit-time:
1. The robot is requested to visit the site only between the given times.
2. More than one can appear in a rule set, allowing several windows of access to a robot.
Syntax:

    Visit-time: <time> '-' <time> # 2.0

Example:

    Visit-time: 0600-0845 # and then only between 6 am and 8:45 am GMT
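Checking Visit-time: windows is a small time-of-day comparison, with one subtlety: a window such as 1700-0459 wraps past midnight. A minimal sketch, assuming HHMM values in GMT, inclusive at both ends at minute precision, and that a rule set with no Visit-time: line places no time restriction; all function names are hypothetical.

```python
from datetime import time


def parse_hhmm(text):
    """Parse an 'HHMM' string such as '0845' (hypothetical helper)."""
    text = text.strip()
    return time(int(text[:2]), int(text[2:]))


def in_window(now, start, end):
    """True if now lies in [start, end]; handles windows that wrap past midnight."""
    if start <= end:
        return start <= now <= end
    return now >= start or now <= end


def may_visit(now, visit_times):
    """Check a GMT time of day against a rule set's Visit-time: values."""
    if not visit_times:
        return True  # no Visit-time: directive, no restriction (assumed)
    for spec in visit_times:
        start_text, end_text = spec.split("-")
        if in_window(now, parse_hhmm(start_text), parse_hhmm(end_text)):
            return True
    return False


print(may_visit(time(7, 30), ["0600-0845"]), may_visit(time(9, 0), ["0600-0845"]))
```

Accepting a list of windows reflects rule 2: several Visit-time: lines simply give the robot several chances to match.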

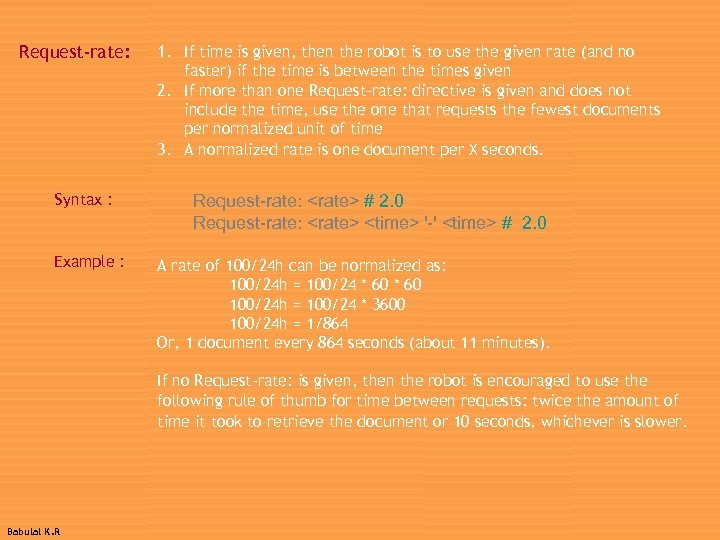

Request-rate:
1. If a time window is given, the robot is to use the given rate (and no faster) when the current time is within that window.
2. If more than one Request-rate: directive is given without a time window, use the one that requests the fewest documents per normalized unit of time.
3. A normalized rate is one document per X seconds.
Syntax:

    Request-rate: <rate> # 2.0
    Request-rate: <rate> <time> '-' <time> # 2.0

Example: a rate of 100/24h can be normalized as:

$$\frac{100}{24\,\mathrm{h}} = \frac{100}{24 \times 60\,\mathrm{min}} = \frac{1}{14.4\,\mathrm{min}} = \frac{100}{24 \times 3600\,\mathrm{s}} = \frac{1}{864\,\mathrm{s}}$$

That is, 1 document every 864 seconds (about 14.4 minutes).
If no Request-rate: is given, the robot is encouraged to use the following rule of thumb for the time between requests: twice the time it took to retrieve the document, or 10 seconds, whichever is slower (i.e. longer).
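The normalization and the two selection rules translate directly into code. A sketch under stated assumptions: rates look like "<documents>/<number><unit>" with the unit letters s, m, or h (the number defaults to 1), and the function names are hypothetical.

```python
import re

# Assumed unit letters for the time part of a rate: seconds, minutes, hours.
UNIT_SECONDS = {"s": 1, "m": 60, "h": 3600}
RATE = re.compile(r"\s*(\d+)\s*/\s*(\d*)\s*([smh])\s*")


def seconds_per_document(rate):
    """Normalize a rate such as '100/24h' to X in 'one document per X seconds'."""
    match = RATE.fullmatch(rate)
    if match is None:
        raise ValueError(f"unrecognized rate: {rate!r}")
    documents = int(match.group(1))
    span = int(match.group(2) or 1) * UNIT_SECONDS[match.group(3)]
    return span / documents


def pick_rate(rates):
    """Among untimed rates, choose the one requesting the fewest documents per unit time."""
    # Fewest documents per unit time is the same as the most seconds per document.
    return max(rates, key=seconds_per_document)


def default_delay(fetch_seconds):
    """Rule of thumb: twice the retrieval time or 10 seconds, whichever is longer."""
    return max(2 * fetch_seconds, 10.0)


print(seconds_per_document("100/24h"), pick_rate(["1/10m", "1/20m", "5/1m"]))
```

Comparing normalized seconds-per-document values avoids having to compare rates expressed in different units directly.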

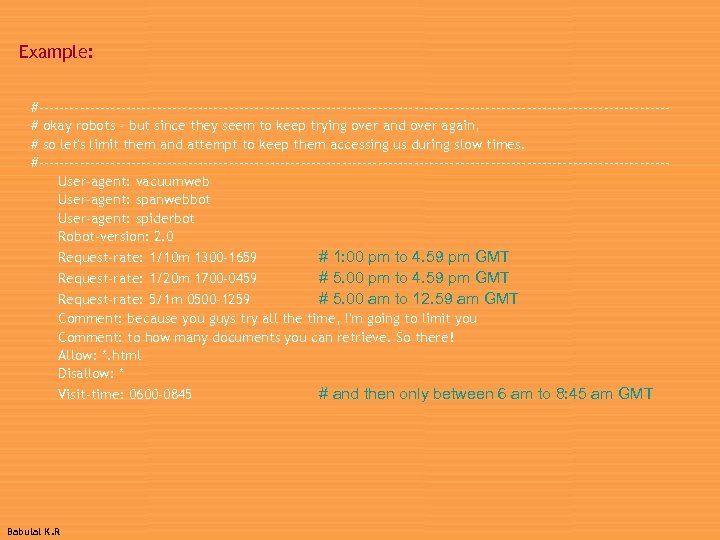

Example:

    #-------------------------------------------------------------
    # okay robots - but since they seem to keep trying over and over again,
    # let's limit them and attempt to keep them accessing us during slow times.
    #-------------------------------------------------------------
    User-agent: vacuumweb
    User-agent: spanwebbot
    User-agent: spiderbot
    Robot-version: 2.0
    Request-rate: 1/10m 1300-1659 # 1:00 pm to 4:59 pm GMT
    Request-rate: 1/20m 1700-0459 # 5:00 pm to 4:59 am GMT
    # 5:00 am to 12:59 pm GMT
    Request-rate: 5/1m 0500-1259
    Comment: because you guys try all the time, I'm going to limit you
    Comment: to how many documents you can retrieve. So there!
    Allow: *.html
    Disallow: *
    Visit-time: 0600-0845 # and then only between 6 am and 8:45 am GMT
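The three timed Request-rate: lines in this example together cover the whole day, so a robot needs to find which window contains the current GMT time (rule 1 of Request-rate:). A sketch of that lookup; `EXAMPLE_RATES` copies the example's values, the helper names are hypothetical, and minute precision with inclusive window ends is an assumption.

```python
from datetime import time

# The example's timed Request-rate: lines, as (rate, window) pairs.
EXAMPLE_RATES = [
    ("1/10m", "1300-1659"),
    ("1/20m", "1700-0459"),
    ("5/1m", "0500-1259"),
]


def _hhmm(text):
    text = text.strip()
    return time(int(text[:2]), int(text[2:]))


def _in_window(now, window):
    start_text, end_text = window.split("-")
    start, end = _hhmm(start_text), _hhmm(end_text)
    if start <= end:
        return start <= now <= end
    return now >= start or now <= end  # window wraps past midnight


def active_rate(now, rates=EXAMPLE_RATES):
    """Return the rate whose window contains `now` (GMT), or None if none does."""
    now = now.replace(second=0, microsecond=0)  # compare at minute precision
    for rate, window in rates:
        if _in_window(now, window):
            return rate
    return None


print(active_rate(time(14, 0)), active_rate(time(23, 0)), active_rate(time(6, 0)))
```

Truncating to the minute keeps a time like 16:59:30 inside the 1300-1659 window instead of falling into the gap before 1700.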

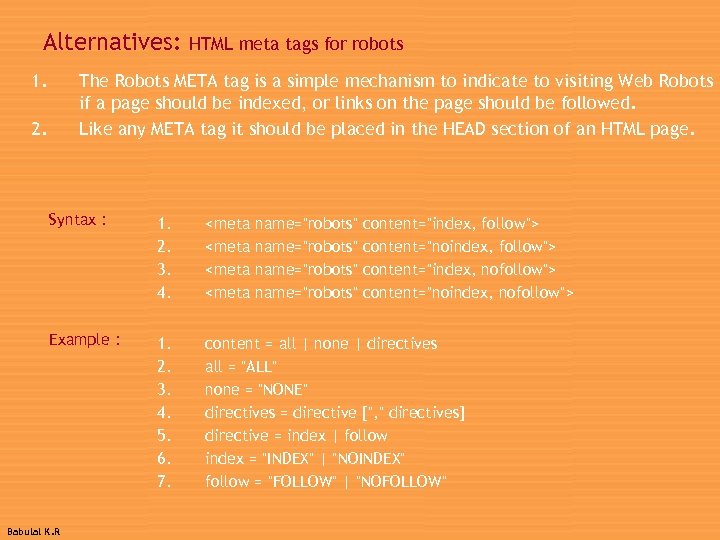

Alternatives:
1. HTML meta tags for robots
The Robots META tag is a simple mechanism to indicate to visiting web robots whether a page should be indexed, or whether links on the page should be followed. Like any META tag, it should be placed in the HEAD section of an HTML page.
Syntax:

    content    = all | none | directives
    all        = "ALL"
    none       = "NONE"
    directives = directive ["," directives]
    directive  = index | follow
    index      = "INDEX" | "NOINDEX"
    follow     = "FOLLOW" | "NOFOLLOW"

Examples:

    <meta name="robots" content="index, follow">
    <meta name="robots" content="noindex, follow">
    <meta name="robots" content="index, nofollow">
    <meta name="robots" content="noindex, nofollow">
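On the robot side, honoring the META tag means finding `<meta name="robots">` in the page and applying the content grammar. A sketch using the standard library's `html.parser.HTMLParser`; the class and function names are hypothetical, and the defaults (index and follow when nothing is said) and case-insensitive values are assumptions.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect the content of <meta name="robots" ...> tags (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.contents = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, but not attribute values.
        values = dict(attrs)
        if tag == "meta" and (values.get("name") or "").lower() == "robots":
            self.contents.append(values.get("content") or "")


def parse_robots_meta(content):
    """Apply the content grammar; returns (index, follow)."""
    index, follow = True, True  # assumed defaults when nothing is said
    for token in content.split(","):
        token = token.strip().upper()  # values treated case-insensitively (assumed)
        if token == "ALL":
            index, follow = True, True
        elif token == "NONE":
            index, follow = False, False
        elif token in ("INDEX", "NOINDEX"):
            index = token == "INDEX"
        elif token in ("FOLLOW", "NOFOLLOW"):
            follow = token == "FOLLOW"
    return index, follow


finder = RobotsMetaFinder()
finder.feed('<html><head><meta name="robots" content="noindex, follow"></head></html>')
print(parse_robots_meta(finder.contents[0]))
```

Unlike robots.txt, this works per page and needs no server configuration, but the robot must fetch the page before it can read the instructions.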

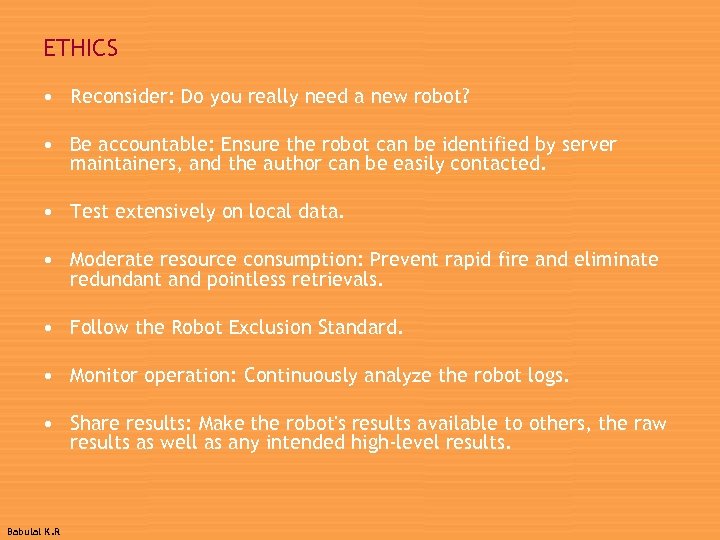

ETHICS
• Reconsider: do you really need a new robot?
• Be accountable: ensure the robot can be identified by server maintainers and that its author can be easily contacted.
• Test extensively on local data.
• Moderate resource consumption: prevent rapid-fire requests and eliminate redundant and pointless retrievals.
• Follow the Robot Exclusion Standard.
• Monitor operation: continuously analyze the robot's logs.
• Share results: make the robot's results available to others, both the raw results and any intended high-level results.


Why this? Because it annoys me to see people cause other people unnecessary hassle, when the whole discussion could be so much gentler.
Thank You.
