
- Number of slides: 32

WMO AMDAR Panel: Data Quality Control and Quality Monitoring

Jitze van der Meulen, 2013-09-11

Workshop on Aircraft Observing System Data Management (2012)

- Recognize comparisons of aircraft observations with NWP model background fields as a critical component of Aircraft Observations Quality Control (AO QC). Consider whether or not such comparisons should be done before AO data are exchanged on the GTS.
- Semi-automatic, near-real-time monitoring information, such as data counts, missing data and higher-than-normal rejects by the assimilation system, should be exchanged regularly (monthly, or more frequently as required and agreed) between designated centres and data managers (and/or producers). This could include an Alarm/Event system.
- Consideration should be given to designating centres to carry out international QC of Aircraft Observations (WMO and ICAO), possibly before insertion on the GTS, to flag the data.
- Distribution of ICAO automated aircraft observations on the GTS should be done using the WMO-approved format (BUFR), with an appropriate template (similar to the AMDAR ones) that clearly identifies the source of the data (ADS, Mode-S, aircraft ID, etc.).

2013-09-11 Data Quality Control and Quality Monitoring 2
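The exchange of monitoring information described in this recommendation (data counts, higher-than-normal rejects, an Alarm/Event system) can be sketched as a per-aircraft reject-rate check over one monitoring period. This is a minimal sketch under stated assumptions: the input layout, the function name `reject_alarms`, the fixed 10% threshold and the 50-report minimum are all hypothetical and stand in for whatever "normal" is agreed between the designated centres.

```python
from collections import Counter


def reject_alarms(reports, threshold=0.10, min_reports=50):
    """Flag aircraft with a higher-than-normal reject fraction (hypothetical rule).

    reports: iterable of (aircraft_id, rejected) pairs for one monitoring
    period, where rejected is True if the assimilation system rejected the
    report. Returns {aircraft_id: reject_fraction} for aircraft with at least
    min_reports reports and a reject fraction strictly above threshold.
    """
    totals, rejects = Counter(), Counter()
    for aircraft_id, rejected in reports:
        totals[aircraft_id] += 1
        if rejected:
            rejects[aircraft_id] += 1
    return {
        aircraft_id: rejects[aircraft_id] / n
        for aircraft_id, n in totals.items()
        if n >= min_reports and rejects[aircraft_id] / n > threshold
    }


# Hypothetical month of reports for two aircraft.
period = [("EU0001", False)] * 95 + [("EU0001", True)] * 5
period += [("EU0002", True)] * 30 + [("EU0002", False)] * 70
print(reject_alarms(period))
```

A fixed threshold is the simplest stand-in; comparing each aircraft against its own history would follow the "higher than normal" wording more closely.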

The AMDAR Panel has identified 20 key aspects for further developing Aircraft Observations Quality Control (AO QC):

- Data display
- Data access
- Data transfer
- Archiving (data and metadata)
- Delivery (level II data; also profile data for local use), relation to time/place resolution
- Timeliness (taking into account QC processes)
- Data checking, filtering, flagging (relation with rules, Manual on the GDPFS)
- Excluding aircraft (how to manage)
- Quality control: monitoring techniques (NWP), stages (real time, off-line); flagging principles; archiving; logistics; feedback
- Data format (incl. resolution)
- 3rd-party data
- ADS (ICAO), other new data sources (Mode-S)
- Typical data: atmospheric composition data
- Phenomena: icing, turbulence; use of data (e.g. direct input, verification)
- Optimization of observations, data targeting (additional, for applications)
- Data coverage (global), provision (e.g. Africa); programme extensions (availability)
- Developing countries, special constraints (data communication issues)

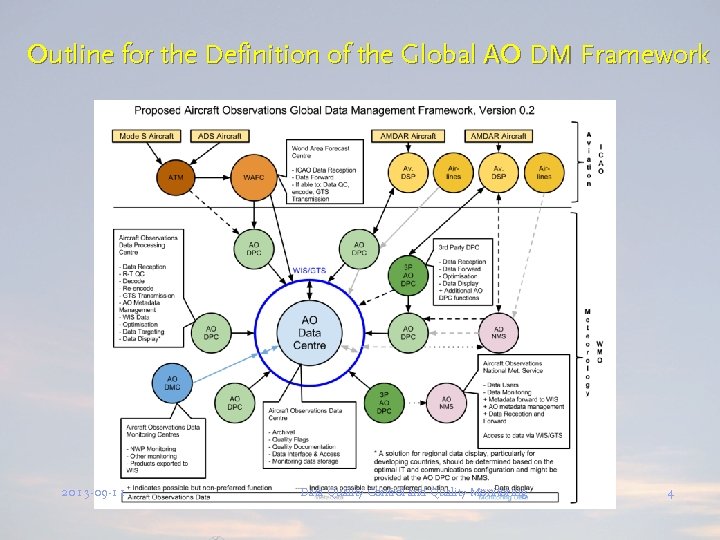

Outline for the Definition of the Global AO DM Framework

Use of NWP

- NWP model forecast background fields are regarded as the most appropriate references for (near) real-time quality control. For operational practice it will be necessary to evaluate these references in order to define the most appropriate choices (update intervals, forecast interval) and the time and space interpolation techniques or algorithms.
- NWP background fields used as references require sufficient information on their uncertainties. Traceability to objective observations is required, providing information on these uncertainties (time- and place-related) and on possible seasonal variations or daily characteristics (daytime/night-time). Altitude-related bias behaviour is particularly relevant.
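The slide leaves the space interpolation technique open. As one illustration of the vertical part, a background profile can be interpolated to the observation's pressure linearly in ln(p), a common choice for pressure-level fields. This is a sketch under assumptions: `interp_log_p` is a hypothetical helper, the profile is taken as already interpolated horizontally to the observation position, and levels are sorted by increasing pressure.

```python
import bisect
import math


def interp_log_p(p_obs, levels_hpa, values):
    """Interpolate a background profile to the observation pressure, linearly in ln(p).

    levels_hpa: background pressure levels in hPa, sorted in increasing order.
    values: background values (e.g. temperature in K) on those levels.
    No extrapolation: raises ValueError if p_obs lies outside the profile.
    """
    if not levels_hpa[0] <= p_obs <= levels_hpa[-1]:
        raise ValueError("observation pressure outside the background profile")
    i = bisect.bisect_left(levels_hpa, p_obs)
    if levels_hpa[i] == p_obs:
        return values[i]  # observation exactly on a model level
    # Weight of the higher-pressure neighbour, measured in ln(p).
    x0, x1 = math.log(levels_hpa[i - 1]), math.log(levels_hpa[i])
    w = (math.log(p_obs) - x0) / (x1 - x0)
    return values[i - 1] + w * (values[i] - values[i - 1])


# Hypothetical three-level temperature profile (hPa, K).
levels = [250.0, 500.0, 1000.0]
temps = [220.0, 250.0, 290.0]
print(interp_log_p(700.0, levels, temps))
```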

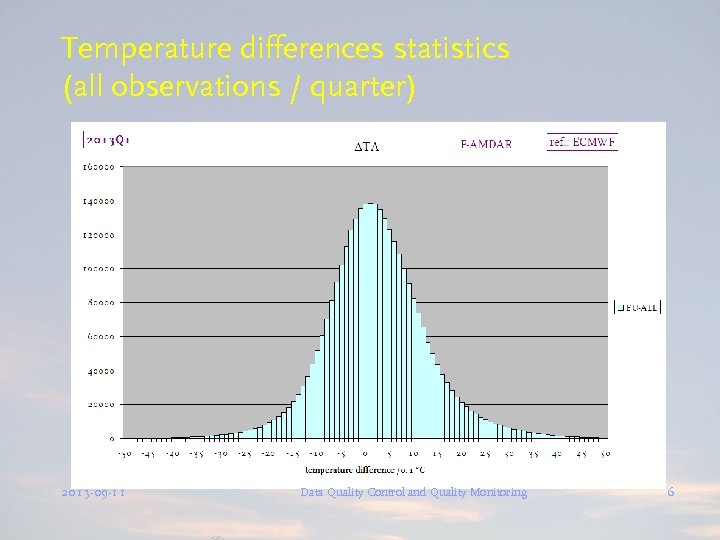

Temperature difference statistics (all observations / quarter)

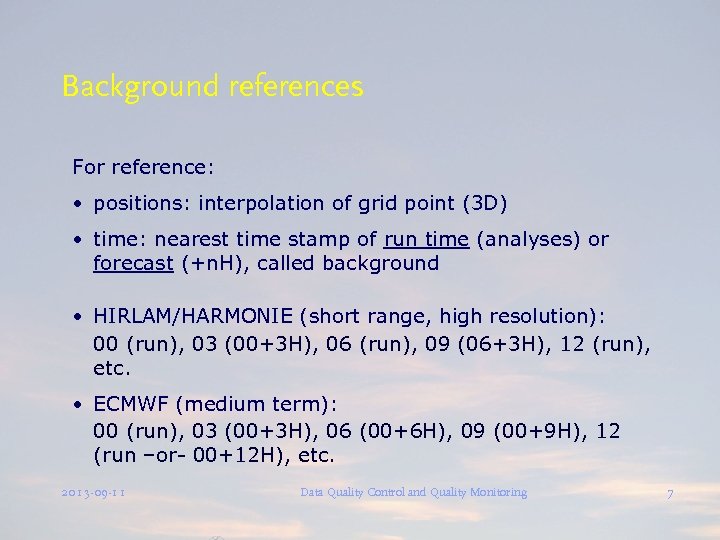

Background references

For reference:
- Positions: interpolation of grid points (3D).
- Time: nearest time stamp of the run time (analysis) or forecast (+n h), called the background.
- HIRLAM/HARMONIE (short range, high resolution): 00 (run), 03 (00+3 h), 06 (run), 09 (06+3 h), 12 (run), etc.
- ECMWF (medium range): 00 (run), 03 (00+3 h), 06 (00+6 h), 09 (00+9 h), 12 (run, or 00+12 h), etc.
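The nearest-time-stamp rule above can be sketched as a small function that maps an observation time to a (run, lead time) pair. Assumptions: backgrounds every 3 h, a run every 6 h for the HIRLAM/HARMONIE-like schedule and every 12 h for the ECMWF-like schedule (as read from the slide), no data-cutoff or availability delays, and `select_background` is a hypothetical name.

```python
import math
from datetime import datetime, timedelta


def select_background(obs_time, run_interval_h, step_h=3):
    """Return (run_time, lead_h) of the background nearest in time to obs_time.

    Assumes a background every step_h hours: an analysis (lead 0) when a run
    starts, otherwise a +lead_h forecast from the most recent run. Both
    intervals must divide 24 h.
    """
    midnight = obs_time.replace(hour=0, minute=0, second=0, microsecond=0)
    hours = (obs_time - midnight).total_seconds() / 3600.0
    # Nearest background time stamp in whole hours after midnight; a time
    # exactly halfway between two stamps goes to the later one.
    stamp_h = math.floor(hours / step_h + 0.5) * step_h
    # Most recent run at or before that stamp; the remainder is the lead time.
    run_h = (stamp_h // run_interval_h) * run_interval_h
    return midnight + timedelta(hours=run_h), stamp_h - run_h


obs = datetime(2013, 9, 11, 10, 20)
print(select_background(obs, run_interval_h=6))   # HIRLAM/HARMONIE-like schedule
print(select_background(obs, run_interval_h=12))  # ECMWF-like schedule
```

An observation at 10:20 maps to the 09 background in both cases: 06+3 h for the 6-hourly schedule and 00+9 h for the 12-hourly one. At 12 UTC the sketch always picks the run itself, which is one of the two options the slide lists for ECMWF.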

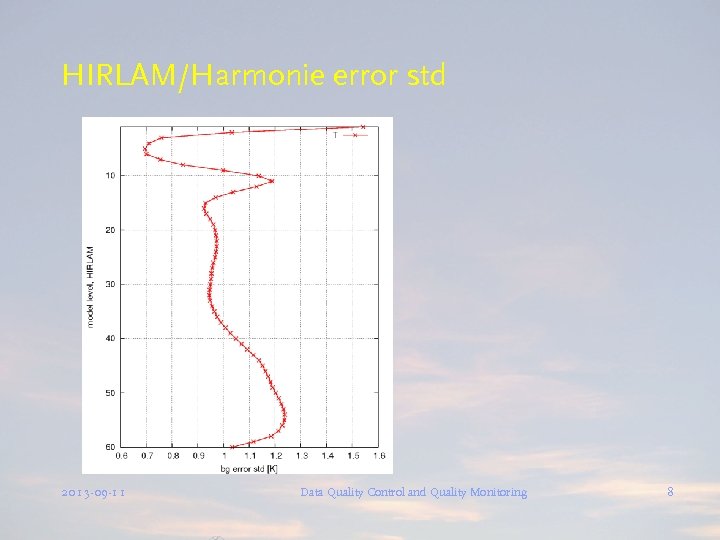

HIRLAM/Harmonie error std

Interpretation of differences

- Should be based on rules defined by international metrological organizations (ICSU, BIPM).
- Statistical analyses first, then definition of the parameter used for further interpretation and requirements.
- Expressed in terms of uncertainty (not standard deviation or RMSE), preferably as a 95% confidence interval.
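One way to express observation-minus-background (O-B) differences as an uncertainty rather than a standard deviation is to take the central 95% interval of the empirical distribution and report its half-width U together with the median bias. This is a sketch of that approach, not necessarily the procedure used for the figures here; `difference_summary` is a hypothetical name.

```python
from statistics import median, quantiles


def difference_summary(diffs):
    """Summarise O-B differences as (median bias, lower, upper, U).

    lower/upper are the 2.5th and 97.5th percentiles, i.e. the bounds of the
    central 95 % interval of the empirical distribution; U is its half-width.
    """
    cuts = quantiles(diffs, n=40, method="inclusive")  # 39 cut points, 2.5 % apart
    lower, upper = cuts[0], cuts[-1]
    return median(diffs), lower, upper, (upper - lower) / 2.0


# Hypothetical sample of temperature differences in K.
print(difference_summary([-1.6, -0.9, -0.3, 0.0, 0.1, 0.2, 0.4, 0.8, 1.3]))
```

Reporting the lower and upper bounds alongside U keeps any asymmetry visible. For a roughly normal distribution this U is close to two standard deviations.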

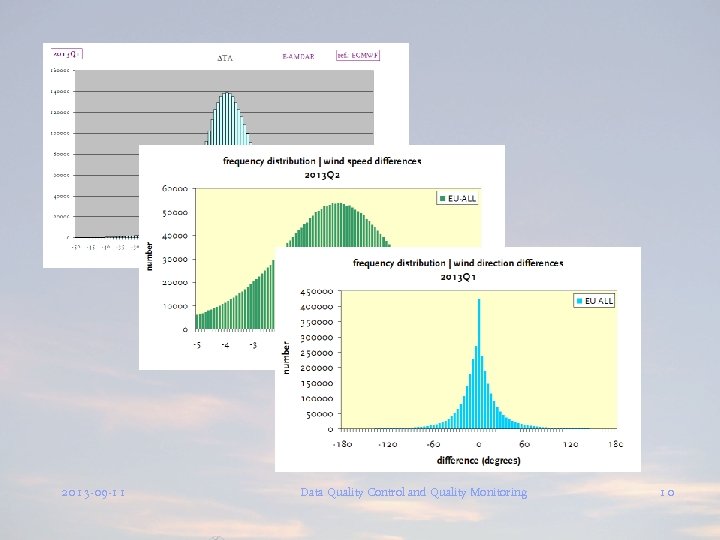


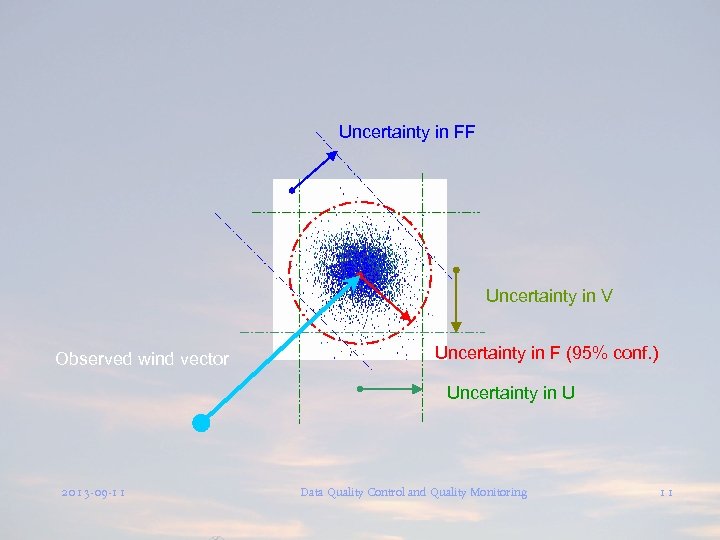

Figure labels: observed wind vector; uncertainty in U; uncertainty in V; uncertainty in FF; uncertainty in F (95% conf.)

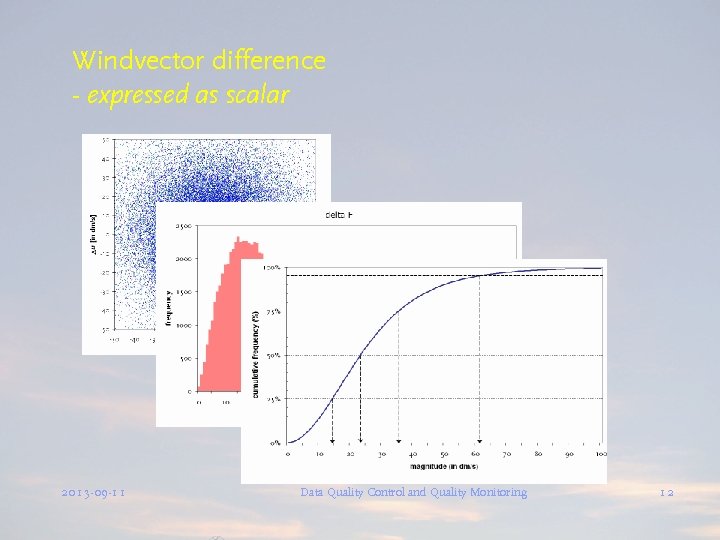

Wind vector difference, expressed as a scalar
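Expressing the wind difference as a single scalar means converting both the observed and the background wind to (u, v) components and taking the magnitude of their difference. A minimal sketch, assuming the meteorological convention that the direction is where the wind blows from, measured clockwise from north; the function names are hypothetical.

```python
import math


def wind_components(dd_deg, ff):
    """(u, v) from direction dd_deg (degrees the wind blows FROM, clockwise
    from north) and speed ff; u points east, v points north."""
    rad = math.radians(dd_deg)
    return -ff * math.sin(rad), -ff * math.cos(rad)


def vector_difference(dd_obs, ff_obs, dd_bg, ff_bg):
    """Scalar magnitude of the observed-minus-background wind vector."""
    u_o, v_o = wind_components(dd_obs, ff_obs)
    u_b, v_b = wind_components(dd_bg, ff_bg)
    return math.hypot(u_o - u_b, v_o - v_b)


# Hypothetical pair: observed 046 deg / 12 kt against a background of 030 deg / 15 kt.
print(round(vector_difference(46, 12, 30, 15), 2))
```

A single vector magnitude combines direction and speed errors and stays well defined at low wind speeds, where a direction difference on its own becomes unstable.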

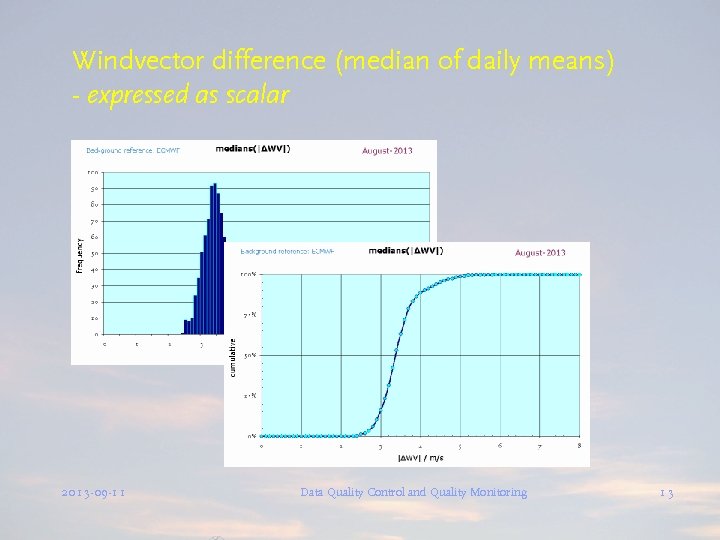

Wind vector difference (median of daily means), expressed as a scalar
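The "median of daily means" statistic can be sketched as averaging the differences per calendar day and then taking the median over days. Assumptions: each sample carries a UTC datetime, days are calendar dates of that datetime, and `median_of_daily_means` is a hypothetical name.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean, median


def median_of_daily_means(samples):
    """samples: iterable of (obs_time: datetime, value), e.g. wind vector
    differences. Averages the values per calendar day of obs_time, then
    returns the median of those daily means."""
    by_day = defaultdict(list)
    for obs_time, value in samples:
        by_day[obs_time.date()].append(value)
    daily_means = [mean(values) for values in by_day.values()]
    return median(daily_means)


# Hypothetical differences (m/s) over three days.
data = [(datetime(2013, 9, 1, 6), 1.0), (datetime(2013, 9, 2, 12), 4.0)]
print(median_of_daily_means(data))
```

Averaging per day first keeps days with many reports from dominating, and the median then limits the influence of a few anomalous days.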

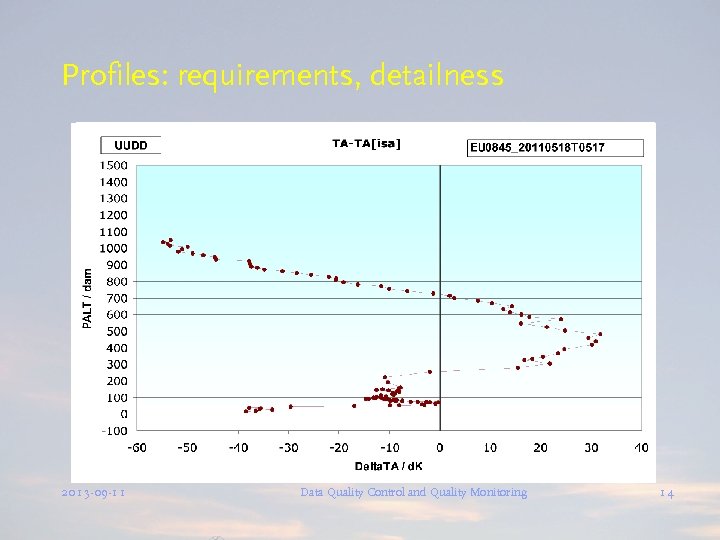

Profiles: requirements, level of detail

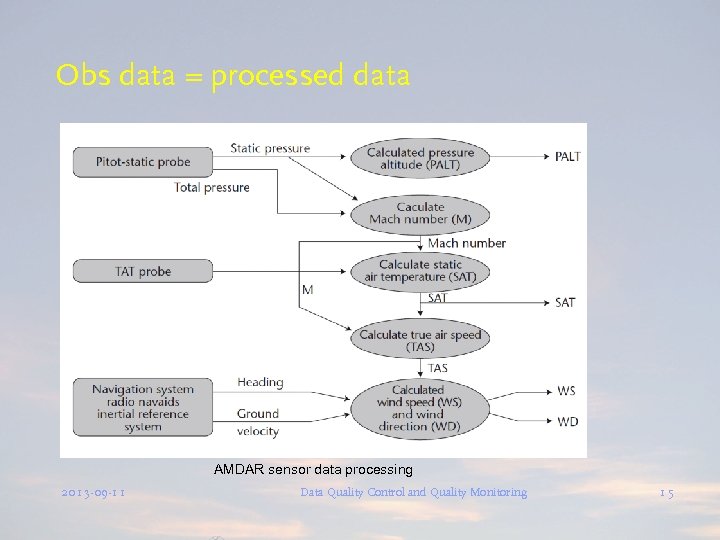

Obs data = processed data: AMDAR sensor data processing

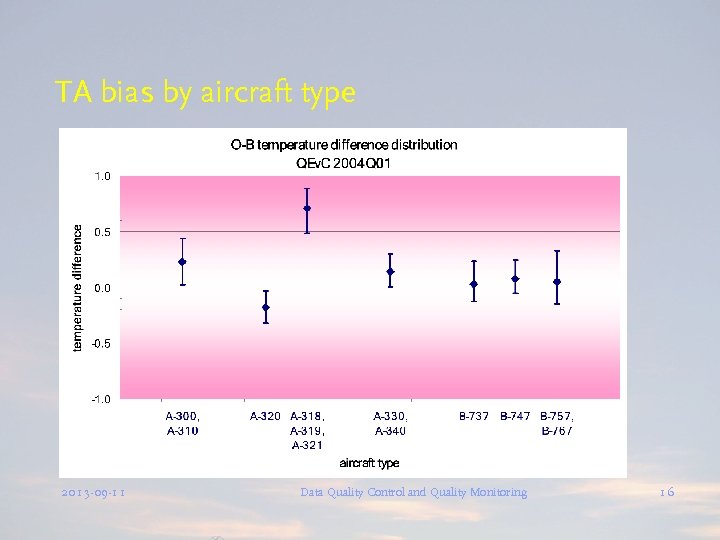

TA bias by aircraft type

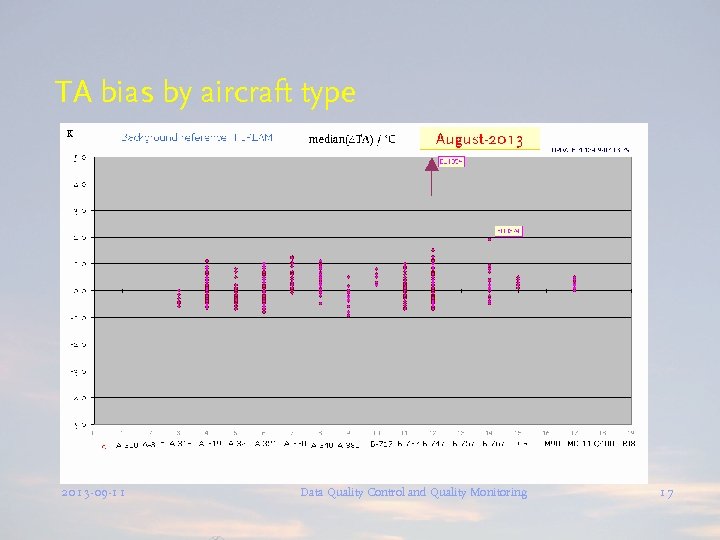

TA bias by aircraft type

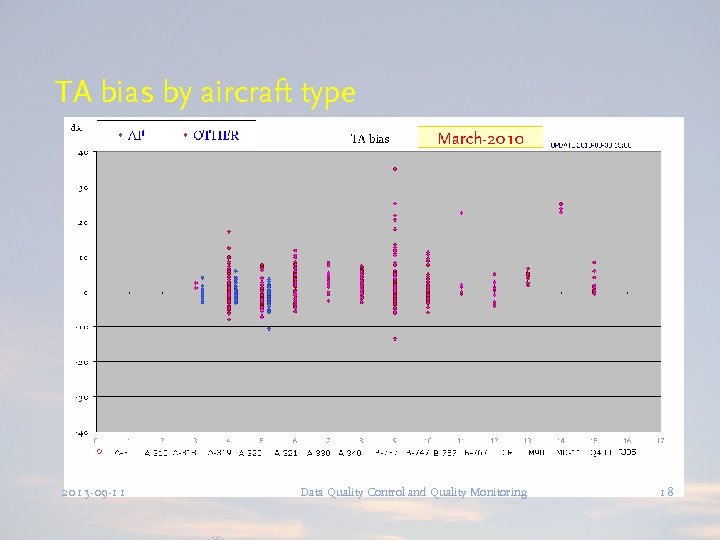

TA bias by aircraft type

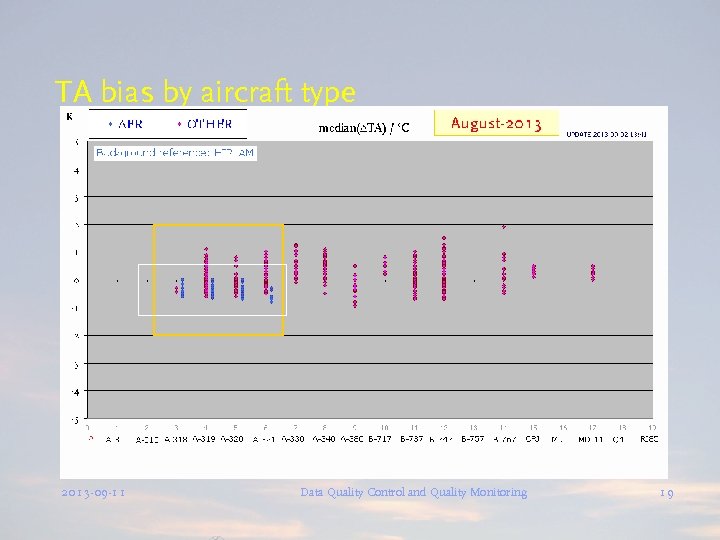

TA bias by aircraft type

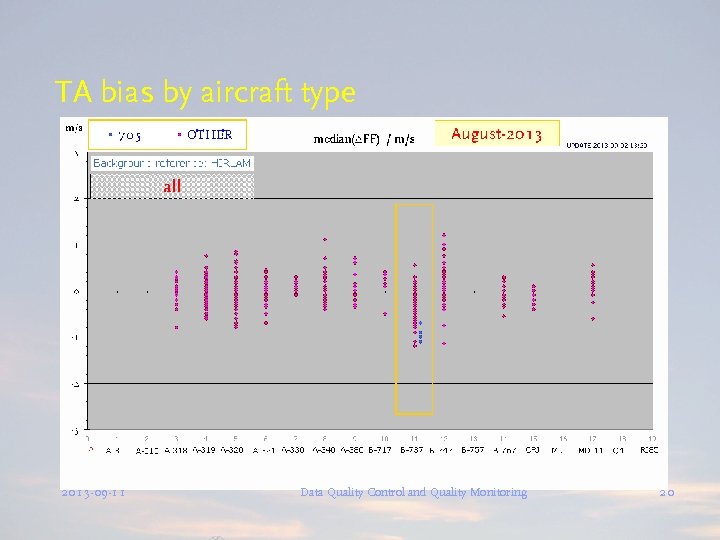

TA bias by aircraft type
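A per-type air temperature (TA) bias like the one in these plots can be computed by grouping O-B differences by aircraft type. This is a sketch under assumptions: the record layout, the use of the median (as on the pressure-altitude slide), the minimum report count and the name `ta_bias_by_type` are hypothetical.

```python
from collections import defaultdict
from statistics import median


def ta_bias_by_type(records, min_count=10):
    """records: iterable of (aircraft_type, ta_obs_K, ta_background_K).

    Returns {aircraft_type: (report_count, median O-B difference in K)},
    keeping only types with at least min_count reports.
    """
    diffs = defaultdict(list)
    for ac_type, ta_obs, ta_bg in records:
        diffs[ac_type].append(ta_obs - ta_bg)
    return {
        ac_type: (len(d), median(d))
        for ac_type, d in sorted(diffs.items())
        if len(d) >= min_count
    }


# Hypothetical reports for two aircraft types.
sample = [("B737", 250.5, 250.0)] * 12 + [("A320", 260.0, 260.2)] * 3
print(ta_bias_by_type(sample))
```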

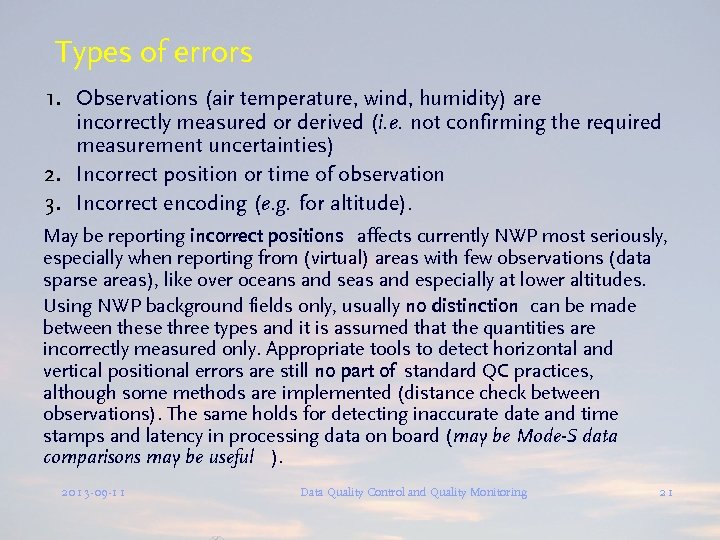

Types of errors

1. Observations (air temperature, wind, humidity) are incorrectly measured or derived (i.e. not conforming to the required measurement uncertainties).
2. Incorrect position or time of observation.
3. Incorrect encoding (e.g. of altitude).

Reporting incorrect positions may currently affect NWP most seriously, especially when reports fall in (virtual) areas with few observations (data-sparse areas), such as over oceans and seas, and especially at lower altitudes. Using NWP background fields only, usually no distinction can be made between these three types, and it is assumed that the quantities are incorrectly measured only. Appropriate tools to detect horizontal and vertical positional errors are still not part of standard QC practice, although some methods are implemented (distance checks between observations). The same holds for detecting inaccurate date and time stamps and latency in on-board data processing (comparisons with Mode-S data may be useful here).
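A distance check between observations can be sketched as a ground-speed plausibility test on consecutive reports from the same aircraft: if the great-circle distance implies an impossible speed, the position or the time stamp is suspect. Assumptions: reports are already grouped per aircraft and time-ordered, a spherical Earth (haversine) is adequate, and the 1200 km/h limit and function names are hypothetical. The check cannot tell which of the two reports is wrong.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance in km between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def flag_implausible_positions(reports, max_speed_kmh=1200.0):
    """reports: time-ordered list of (time, lat, lon) from ONE aircraft.

    Returns indices of reports whose implied ground speed from the previous
    report exceeds max_speed_kmh, or that moved without time advancing.
    """
    flagged = []
    for i in range(1, len(reports)):
        t0, lat0, lon0 = reports[i - 1]
        t1, lat1, lon1 = reports[i]
        dist = great_circle_km(lat0, lon0, lat1, lon1)
        hours = (t1 - t0).total_seconds() / 3600.0
        if hours <= 0:
            if dist > 0:
                flagged.append(i)  # same (or earlier) time stamp, different place
        elif dist / hours > max_speed_kmh:
            flagged.append(i)
    return flagged


# Hypothetical track: the third report jumps thousands of km in 10 minutes.
track = [
    (datetime(2013, 9, 11, 10, 0), 52.0, 4.0),
    (datetime(2013, 9, 11, 10, 10), 52.2, 4.3),
    (datetime(2013, 9, 11, 10, 20), 35.5, 139.8),
]
print(flag_implausible_positions(track))
```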

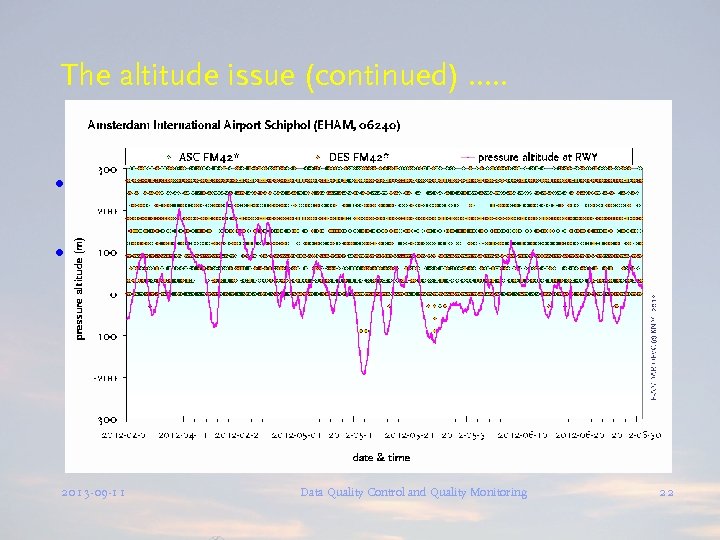

The altitude issue (continued)

- Typically, some FM 42 and BUFR reports show PALT, FL < pressure altitude[RWY].
- Typically, some reports show only FL > altitude[RWY], or only FL > 0.
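Detecting reports with PALT or FL below the runway's pressure altitude needs both on the same scale. A minimal sketch, assuming the ICAO Standard Atmosphere troposphere (T0 = 288.15 K, lapse rate 0.0065 K/m, p0 = 1013.25 hPa, giving the constants 44330.77 m and 0.190263), that the runway pressure (QFE) is available to derive pressure altitude[RWY], and a hypothetical 30 m tolerance. The function names are illustrative only.

```python
FT_TO_M = 0.3048  # international foot


def pressure_altitude_m(p_hpa):
    """ICAO Standard Atmosphere pressure altitude in metres for a static
    pressure in hPa (troposphere only, i.e. below 11 km)."""
    return 44330.77 * (1.0 - (p_hpa / 1013.25) ** 0.190263)


def flight_level_to_m(fl):
    """Flight level (hundreds of feet of pressure altitude) to metres."""
    return fl * 100.0 * FT_TO_M


def below_runway(report_palt_m, runway_qfe_hpa, tolerance_m=30.0):
    """True if a reported pressure altitude lies more than tolerance_m below
    the runway's pressure altitude, derived from the runway pressure (QFE)."""
    return report_palt_m < pressure_altitude_m(runway_qfe_hpa) - tolerance_m


# Hypothetical high-elevation airport (QFE 900 hPa) and a report at FL005.
print(below_runway(flight_level_to_m(5), runway_qfe_hpa=900.0))
```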

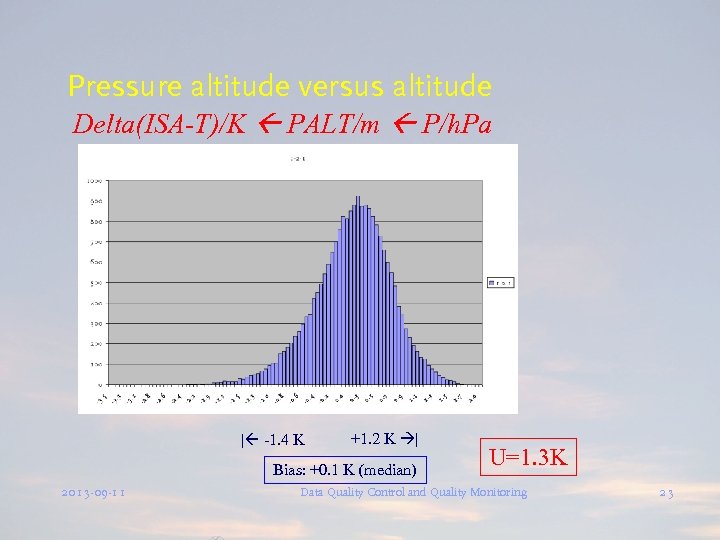

Pressure altitude versus altitude

Figure axes: Delta(ISA-T)/K, PALT/m, P/hPa. Annotations: -1.4 K to +1.2 K; bias +0.1 K (median); U = 1.3 K.
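One reading of these annotations reproduces the quoted U. It assumes, since the slide does not say so, that -1.4 K and +1.2 K bound the central 95% interval of the differences and that U is the half-width of that interval:

```latex
U = \frac{(+1.2\,\mathrm{K}) - (-1.4\,\mathrm{K})}{2} = \frac{2.6\,\mathrm{K}}{2} = 1.3\,\mathrm{K}
```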

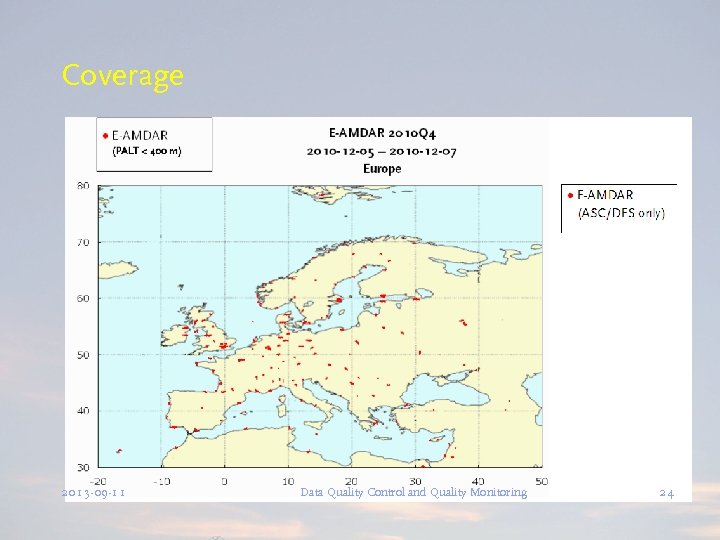

Coverage

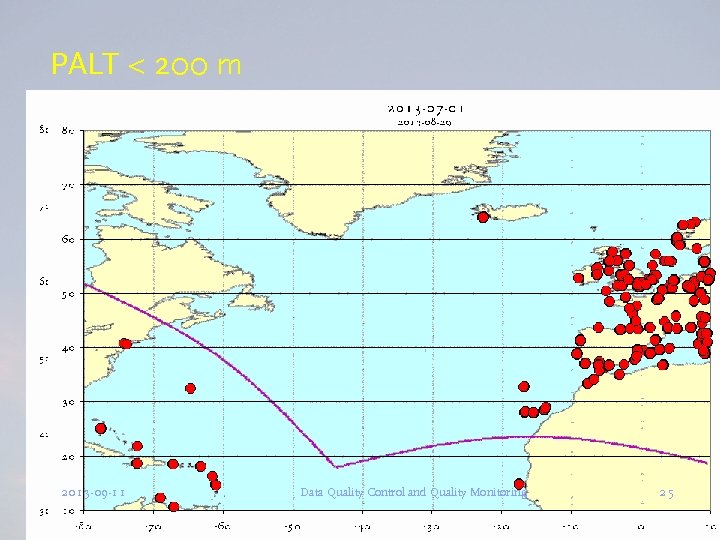

PALT < 200 m

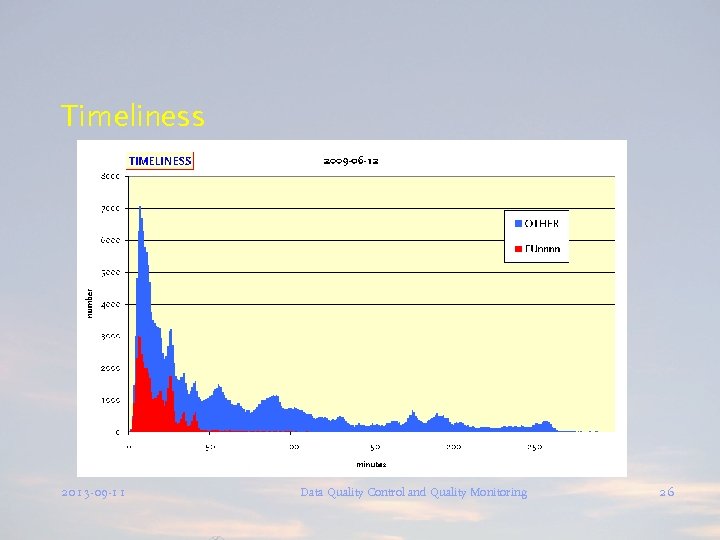

Timeliness

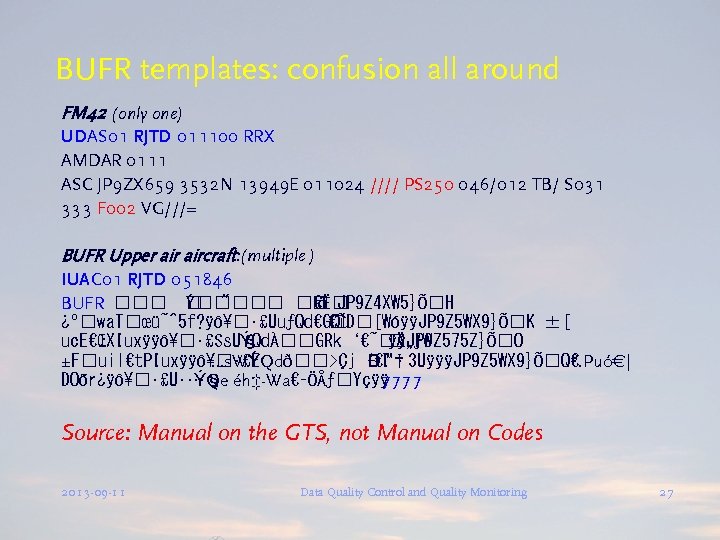

BUFR templates: confusion all around

- FM 42 (only one): `UDAS01 RJTD 011100 RRX AMDAR 0111 ASC JP9ZX659 3532N 13949E 011024 //// PS250 046/012 TB/ S031 333 F002 VG///=`
- BUFR upper aircraft (multiple): `IUAC01 RJTD 051846`, followed by the binary BUFR message (not reproducible as text).

Source: Manual on the GTS, not Manual on Codes
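Both bulletins above start with a GTS abbreviated heading (TTAAii CCCC YYGGgg, optionally followed by BBB). The sketch below splits such a heading into its fields. It is simplified: the regular expression, the name `parse_heading` and the single rule "T1 = I means BUFR observational data" are assumptions for illustration, and the full T1T2A1A2 tables in the Manual on the GTS are not reproduced.

```python
import re

# Abbreviated heading: T1T2A1A2ii CCCC YYGGgg, optionally followed by BBB.
HEADING = re.compile(r"([A-Z]{4}\d{2}) ([A-Z]{4}) (\d{2})(\d{2})(\d{2})(?: ([A-Z]{3}))?")


def parse_heading(line):
    """Split a GTS abbreviated heading into its fields (simplified sketch)."""
    m = HEADING.fullmatch(line.strip())
    if m is None:
        raise ValueError(f"not an abbreviated heading: {line!r}")
    ttaaii, cccc, day, hour, minute, bbb = m.groups()
    return {
        "TTAAii": ttaaii,
        "CCCC": cccc,  # originating centre, e.g. RJTD
        "day": int(day),
        "hour": int(hour),
        "minute": int(minute),
        "BBB": bbb,  # e.g. RRx (delayed), CCx (correction), AAx (amendment)
        # Assumption: T1 = 'I' marks observational data in BUFR.
        "bufr": ttaaii[0] == "I",
    }


for heading in ("UDAS01 RJTD 011100 RRX", "IUAC01 RJTD 051846"):
    print(parse_heading(heading))
```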

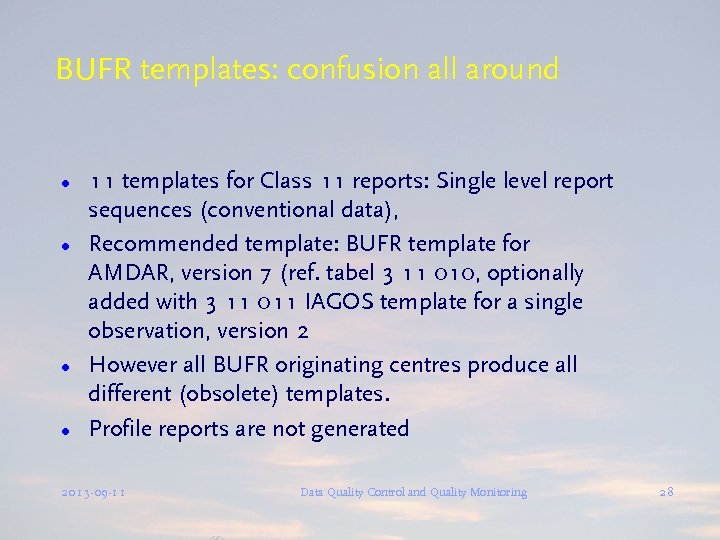

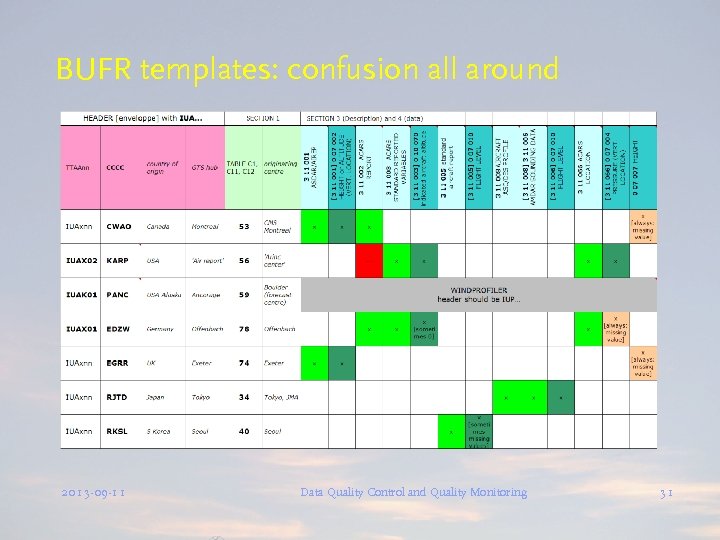

BUFR templates: confusion all around

- 11 templates for Class 11 reports: single-level report sequences (conventional data).
- Recommended template: BUFR template for AMDAR, version 7 (ref. table 3 11 010), optionally supplemented with 3 11 011, IAGOS template for a single observation, version 2.

However, the BUFR-originating centres all produce different (obsolete) templates, and profile reports are not generated.
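Template identifiers such as 3 11 010 are BUFR descriptors in F XX YYY notation: F = 3 marks a Table D sequence, X = 11 is the class, and Y is the entry within the class. In a BUFR message each descriptor occupies 16 bits (F: 2 bits, X: 6 bits, Y: 8 bits). The sketch below shows only that packing; expanding a template needs the Table D contents, which are not reproduced here. The function names are hypothetical.

```python
def pack_fxy(f, x, y):
    """Pack BUFR descriptor F XX YYY into 16 bits: F (2 bits), X (6), Y (8)."""
    if not (0 <= f < 4 and 0 <= x < 64 and 0 <= y < 256):
        raise ValueError("descriptor field out of range")
    return (f << 14) | (x << 8) | y


def unpack_fxy(code):
    """Inverse of pack_fxy: 16-bit descriptor -> (F, X, Y)."""
    return (code >> 14) & 0x3, (code >> 8) & 0x3F, code & 0xFF


def fxy_str(f, x, y):
    """Conventional 'F XX YYY' notation, e.g. 3 11 010."""
    return f"{f} {x:02d} {y:03d}"


for entry in (10, 11):  # the two sequences named on this slide
    code = pack_fxy(3, 11, entry)
    print(fxy_str(*unpack_fxy(code)), code)
```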

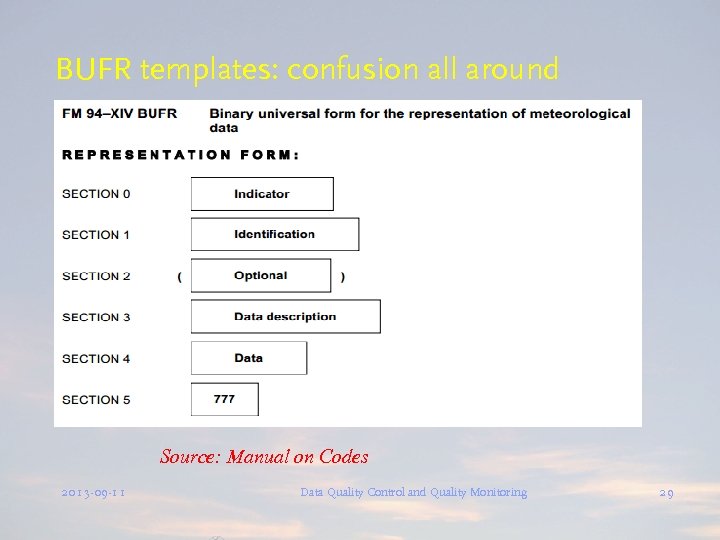

BUFR templates: confusion all around

Source: Manual on Codes

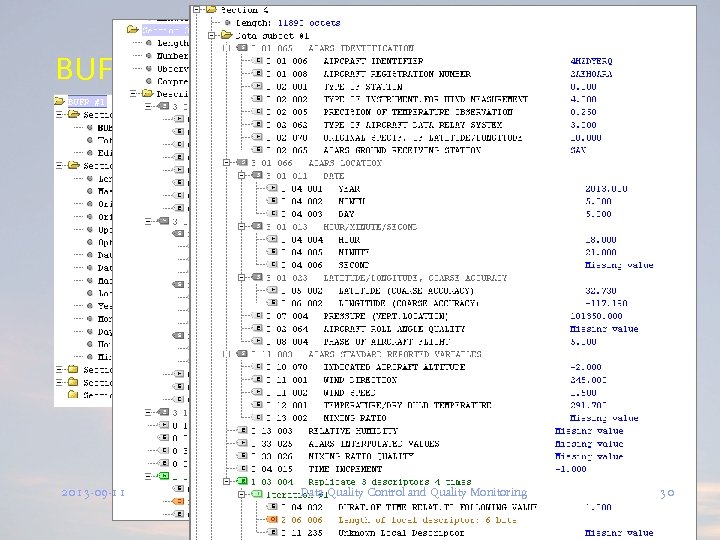

BUFR templates: confusion all around

BUFR templates: confusion all around

Q