33a02b1e5d366722cabc3d79d32aa67e.ppt

- Number of slides: 59

Windows NT Scalability

Jim Gray, Microsoft Research, Gray@Microsoft.com, http://www.research.Microsoft.com/~Gray/talks/

Outline
- Scalability: What & Why?
- Scale UP: NT SMP scalability
- Scale OUT: NT Cluster scalability
- Key message:
  - NT can do the most demanding apps today.
  - Tomorrow will be even better.

Diagram: Scale Up, Scale Out, Scale Down.

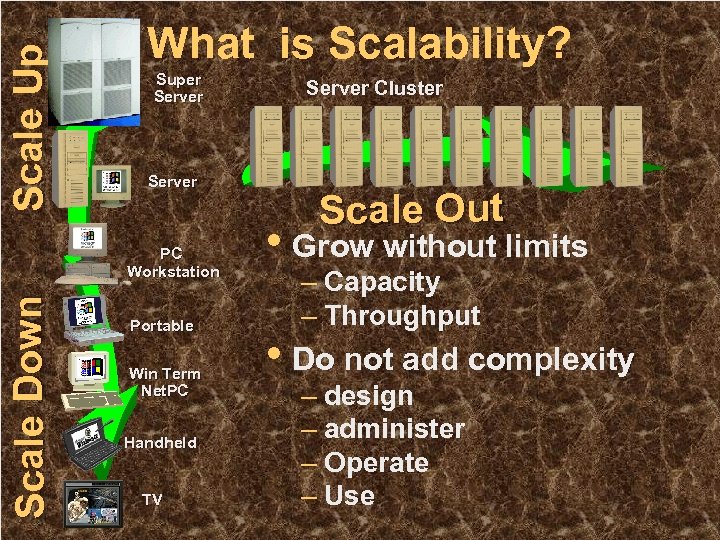

What is Scalability?
- Grow without limits
  - Capacity
  - Throughput
- Do not add complexity
  - Design
  - Administer
  - Operate
  - Use

Diagram: scale up, scale out, and scale down, spanning TV, handheld, NetPC, Win Term, portable, PC, workstation, server, super server, and cluster.

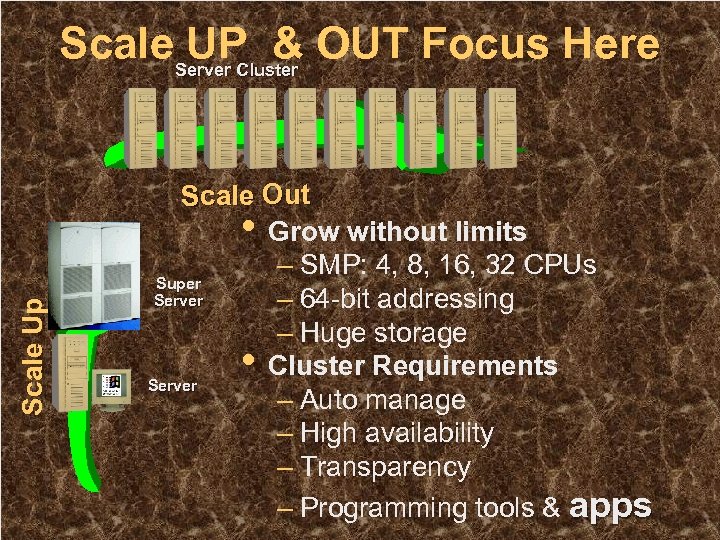

Scale Up & Scale Out (Focus Here)
- Grow without limits
  - SMP: 4, 8, 16, 32 CPUs
  - 64-bit addressing
  - Huge storage
- Cluster requirements
  - Auto manage
  - High availability
  - Transparency
  - Programming tools & apps

Diagram: Server, Super Server (scale up), Server Cluster (scale out).

Scalability is Important
- Automation benefits growing
  - ROI of 1 month
- Slice price going to zero
  - CyberBrick costs 5 k$
- Design, implement & manage cost going down
  - DCOM & Viper make it easy!
  - NT Clusters are easy!
- Billions of clients imply millions of HUGE servers.
- Thin clients imply huge servers.

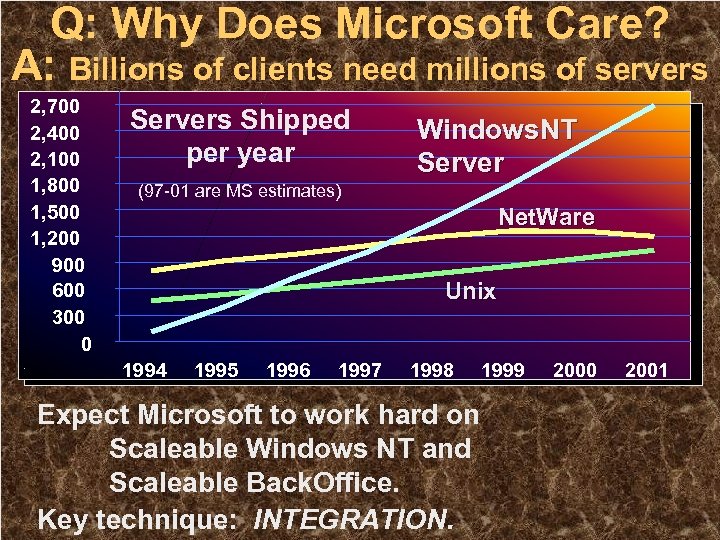

Q: Why Does Microsoft Care?

A: Billions of clients need millions of servers.

Chart: servers shipped per year (y-axis 0 to 2,700), 1994 to 2001, for Windows NT Server (97-01 are MS estimates), NetWare, and Unix.

Expect Microsoft to work hard on Scaleable Windows NT and Scaleable BackOffice. Key technique: INTEGRATION.

How Scaleable is NT? The Single Node Story
- 64-bit file system in NT 1, 2, 3, 4, 5
- 8-way SMP in NT 4.0 Enterprise Edition (32-way in OEM kits)
- 64-bit addressing in NT 5
- 1 terabyte SQL databases (petabyte capable)
- 10,000 users (TPC-C benchmark)
- 100 million web hits per day (IIS)
- 50 GB Exchange mail store; next release designed for 16 TB
- 50,000 POP3 users on Exchange (1.8 M messages/day)
- And more coming…

Windows NT Server Enterprise Edition
- Scalability
  - 8x SMP support (32x in OEM kit)
  - Larger process memory (3 GB on Intel)
  - Unlimited virtual roots in IIS (web)
- Transactions
  - DCOM transactions (Viper TP monitor)
  - Message Queuing (Falcon)
- Availability
  - Clustering (Wolfpack)
  - Web, file, print, DB … servers fail over

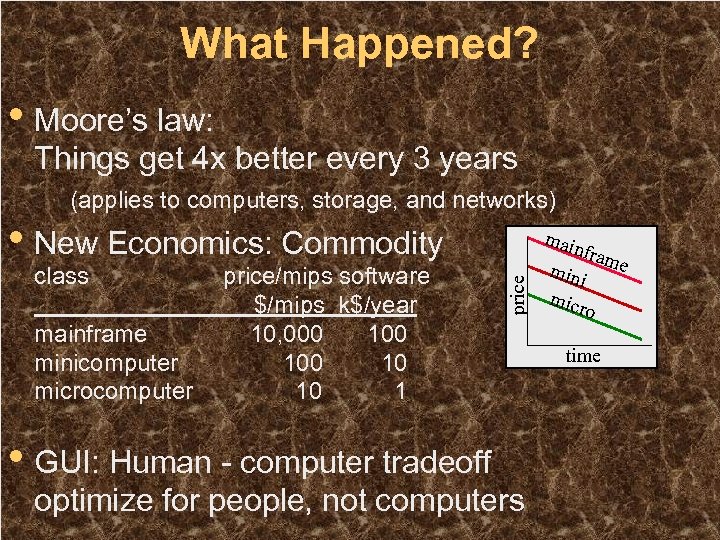

What Happened?
- Moore's law: things get 4x better every 3 years (applies to computers, storage, and networks)
- New economics: commodity
- GUI: human-computer tradeoff; optimize for people, not computers

Chart: price/MIPS and software $/MIPS (k$/year) for mainframe, minicomputer, and microcomputer (values shown: 10,000; 100; 10; 10; 1), and price class (mainframe, mini, micro) over time.

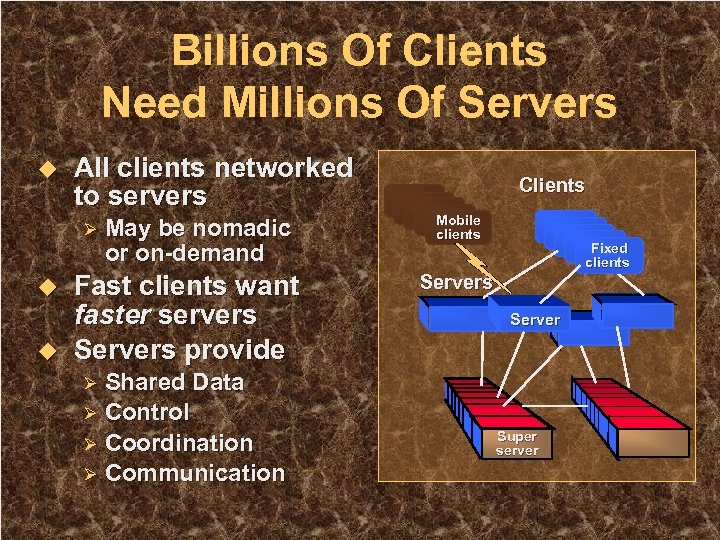
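"4x better every 3 years" compounds geometrically, so it is easy to restate as a yearly or per-decade rate. A minimal sketch of that arithmetic, assuming smooth exponential growth between the 3-year steps (the helper name `improvement` is ours, for illustration):

```python
def improvement(years, factor=4.0, period_years=3.0):
    """Improvement after `years` if things get `factor`x better every `period_years` years.

    Assumes smooth exponential growth between the discrete 3-year steps.
    """
    return factor ** (years / period_years)


per_year = improvement(1)     # 4 ** (1/3), about 1.59x per year
per_decade = improvement(10)  # 4 ** (10/3), about 102x per decade
print(f"{per_year:.2f}x per year, {per_decade:.0f}x per decade")
```

So the slide's rule of thumb is roughly 60% improvement per year, or about two orders of magnitude per decade.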

Billions Of Clients Need Millions Of Servers
- All clients networked to servers
  - May be nomadic or on-demand
- Fast clients want faster servers
- Servers provide
  - Shared data
  - Control
  - Coordination
  - Communication

Diagram: mobile clients and fixed clients connected to a server and a super server.

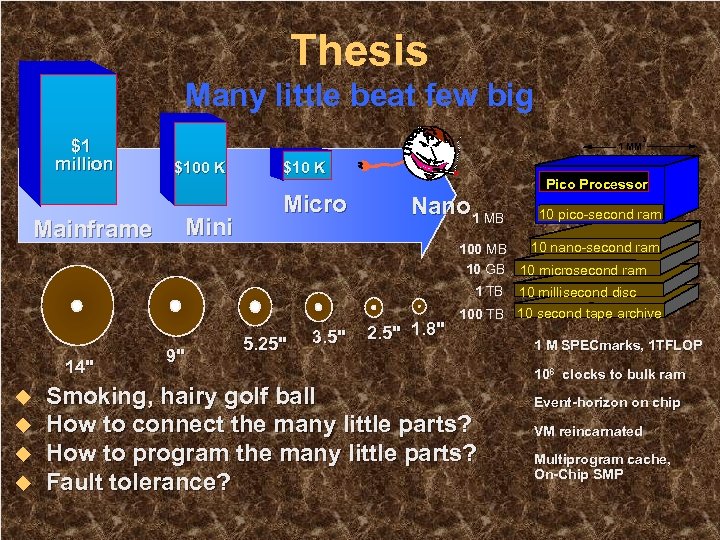

Thesis: Many Little Beat Few Big

Chart: processors by price ($1 million mainframe, $100 K mini, $10 K micro, nano, pico processor); disc form factors 14", 9", 5.25", 3.5", 2.5", 1.8"; storage hierarchy: 10 picosecond RAM (1 MB), 10 nanosecond RAM (100 MB), 10 microsecond RAM (10 GB), 10 millisecond disc (1 TB), 10 second tape archive (100 TB).

- Smoking, hairy golf ball
- How to connect the many little parts?
- How to program the many little parts?
- Fault tolerance?
- 1 M SPECmarks, 1 TFLOP
- 10^6 clocks to bulk RAM
- Event horizon on chip
- VM reincarnated
- Multiprogram cache, on-chip SMP

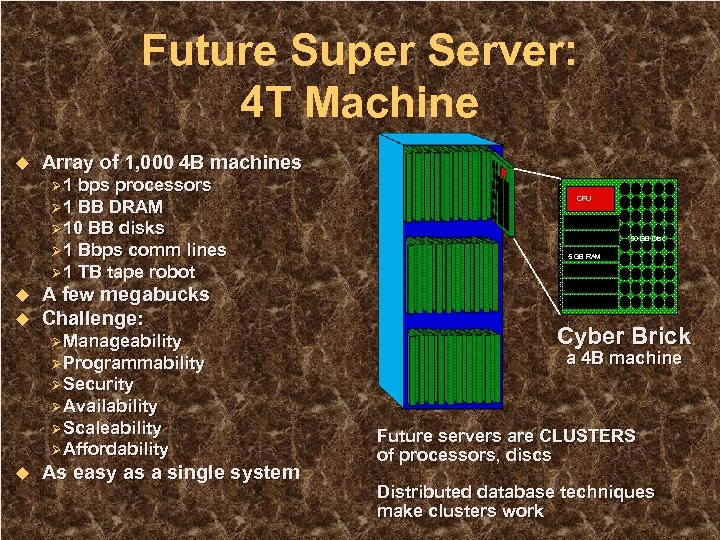

Future Super Server: 4T Machine
- Array of 1,000 4B machines
  - 1 Bips processors
  - 1 BB DRAM
  - 10 BB disks
  - 1 Bbps comm lines
  - 1 TB tape robot
- A few megabucks
- Challenge:
  - Manageability
  - Programmability
  - Security
  - Availability
  - Scaleability
  - Affordability
- As easy as a single system
- Future servers are CLUSTERS of processors, discs
- Distributed database techniques make clusters work

Diagram: a CyberBrick, a 4B machine (CPU, 5 GB RAM, 50 GB disc).
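Multiplying the per-node 4B figures by 1,000 gives the cluster-wide totals. A back-of-the-envelope sketch, assuming resources simply add up across nodes (an assumption; making that true in practice is exactly the Challenge list above). The 1 TB tape robot is left out because it reads as one shared device rather than a per-node resource; that reading is ours.

```python
# Per-node figures for one "4B machine", taken from the slide.
PER_NODE = {
    "instructions per second": 1e9,  # 1 Bips processor
    "DRAM bytes": 1e9,               # 1 BB DRAM
    "disk bytes": 10e9,              # 10 BB disks
    "comm bits per second": 1e9,     # 1 Bbps comm lines
}
N_NODES = 1_000  # "array of 1,000 4B machines"


def aggregate(per_node, n_nodes):
    """Cluster-wide totals, assuming each resource adds linearly across nodes."""
    return {resource: amount * n_nodes for resource, amount in per_node.items()}


for resource, total in aggregate(PER_NODE, N_NODES).items():
    print(f"{resource}: {total:.0e}")
```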

The Hardware Is In Place… And then a miracle occurs?
- SNAP: scaleable network and platforms
- Commodity-distributed OS built on:
  - Commodity platforms
  - Commodity network interconnect
- Enables parallel applications

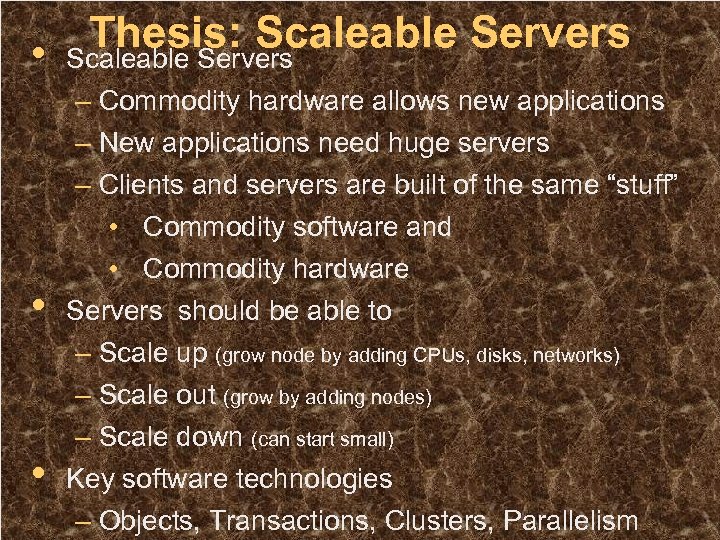

Thesis: Scaleable Servers
- Commodity hardware allows new applications
- New applications need huge servers
- Clients and servers are built of the same "stuff"
  - Commodity software and
  - Commodity hardware
- Servers should be able to
  - Scale up (grow node by adding CPUs, disks, networks)
  - Scale out (grow by adding nodes)
  - Scale down (can start small)
- Key software technologies
  - Objects, transactions, clusters, parallelism

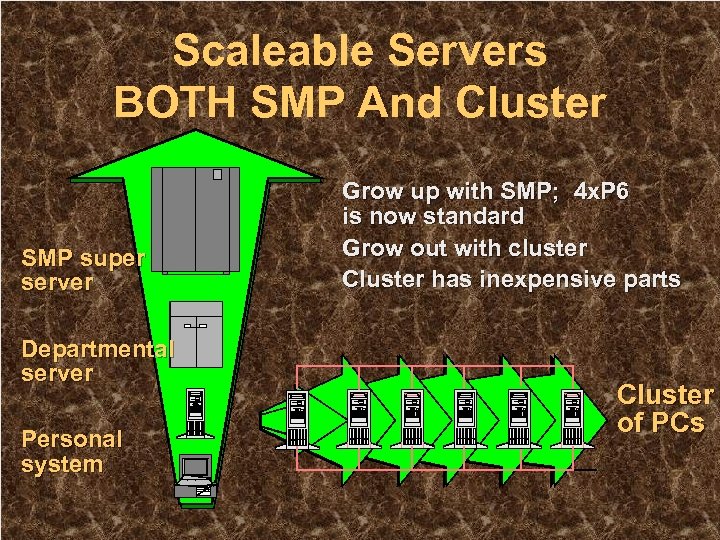

Scaleable Servers: BOTH SMP And Cluster
- Grow up with SMP; 4x P6 is now standard
- Grow out with cluster
- Cluster has inexpensive parts

Diagram: personal system, departmental server, SMP super server, cluster of PCs.

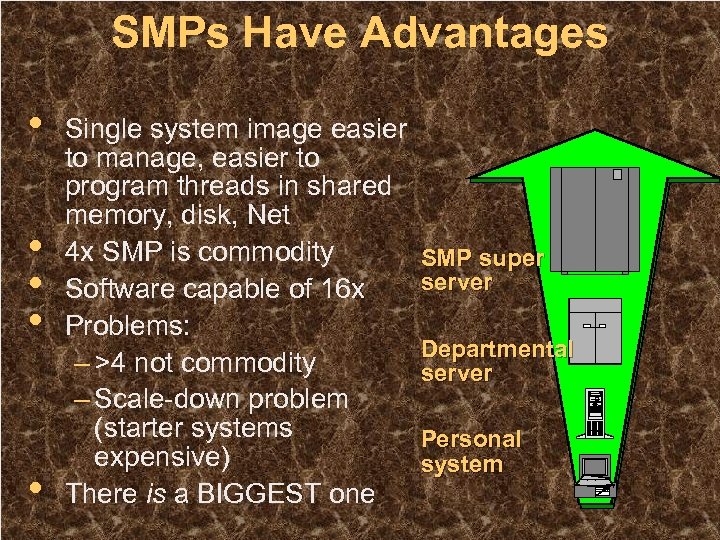

SMPs Have Advantages
- Single system image: easier to manage, easier to program (threads in shared memory, disk, net)
- 4x SMP is commodity
- Software capable of 16x
- Problems:
  - More than 4 CPUs is not commodity
  - Scale-down problem (starter systems expensive)
  - There is a BIGGEST one

Diagram: personal system, departmental server, SMP super server.

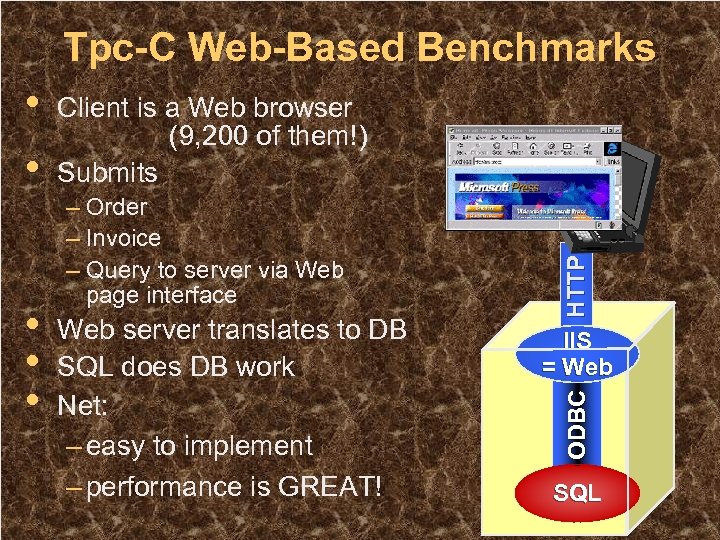

TPC-C Web-Based Benchmarks
- Client is a Web browser (9,200 of them!)
- Submits Order, Invoice, and Query to server via Web page interface
- Web server translates to DB
- SQL does DB work
- Net:
  - Easy to implement
  - Performance is GREAT!

Diagram: browser, HTTP, IIS (Web), ODBC, SQL.

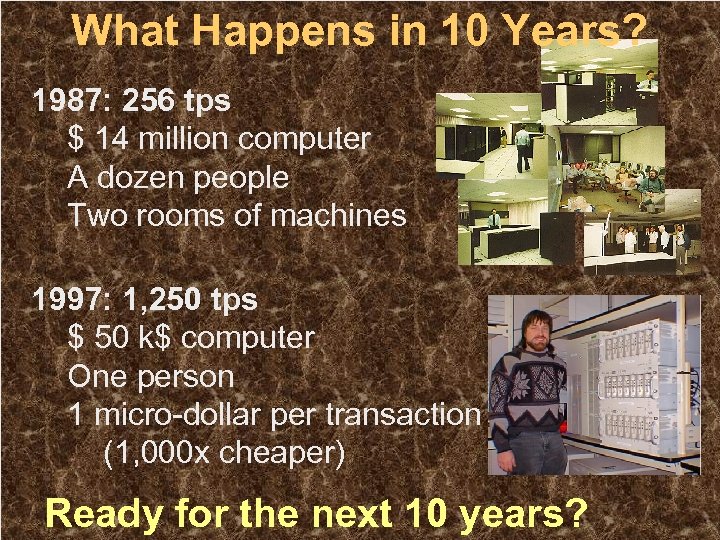

What Happens in 10 Years?
- 1987: 256 tps
  - $14 million computer
  - A dozen people
  - Two rooms of machines
- 1997: 1,250 tps
  - $50 K computer
  - One person
  - 1 micro-dollar per transaction (1,000x cheaper)
- Ready for the next 10 years?

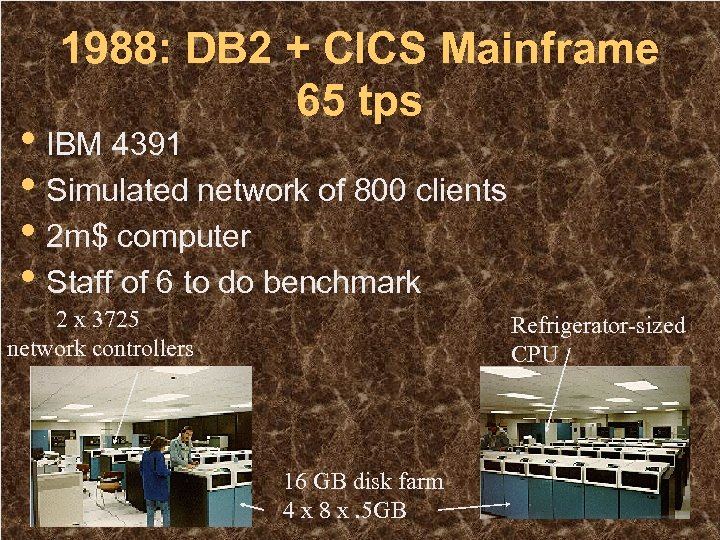
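The 1,000x figure can be sanity-checked from the numbers on the slide by dividing system price by throughput. This is a simplified price/performance metric (the helper name is ours); it ignores staff and floor space, and a true per-transaction cost would also need the depreciation period, which the slide does not give.

```python
def dollars_per_tps(system_price, tps):
    """System price divided by throughput: a simple price/performance metric."""
    return system_price / tps


price_1987 = dollars_per_tps(14_000_000, 256)  # $14 million, 256 tps
price_1997 = dollars_per_tps(50_000, 1_250)    # $50 K, 1,250 tps
print(price_1987, price_1997, round(price_1987 / price_1997))  # 54687.5 40.0 1367
```

That is roughly three orders of magnitude, consistent with the slide's 1,000x.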

1988: DB2 + CICS Mainframe, 65 tps
- IBM 4391
- Simulated network of 800 clients
- 2 M$ computer
- Staff of 6 to do benchmark

Diagram: 2x 3725 network controllers, refrigerator-sized CPU, 16 GB disk farm (4 x 8 x 0.5 GB).

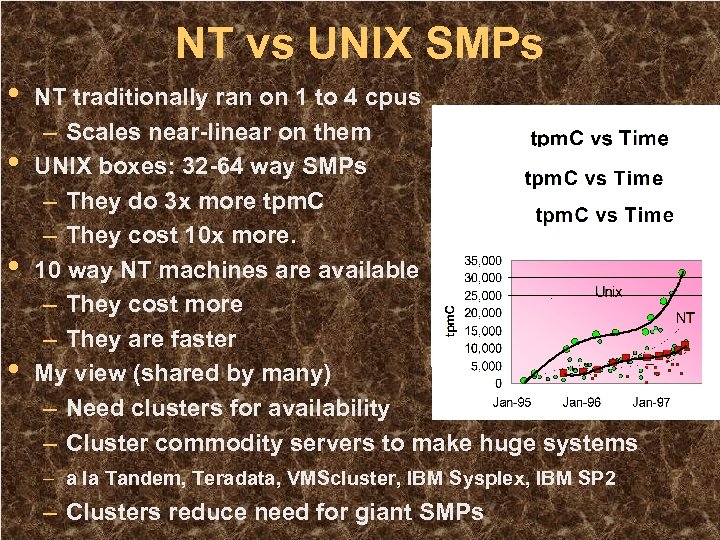

NT vs UNIX SMPs
- NT traditionally ran on 1 to 4 CPUs
  - Scales near-linearly on them
- UNIX boxes: 32- to 64-way SMPs
  - They do 3x more tpmC
  - They cost 10x more
- 10-way NT machines are available
  - They cost more
  - They are faster
- My view (shared by many)
  - Need clusters for availability
  - Cluster commodity servers to make huge systems, à la Tandem, Teradata, VMScluster, IBM Sysplex, IBM SP2
  - Clusters reduce the need for giant SMPs

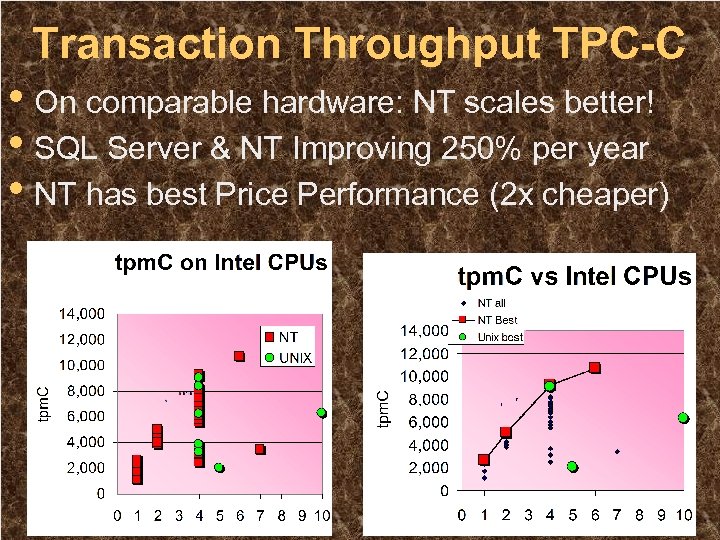

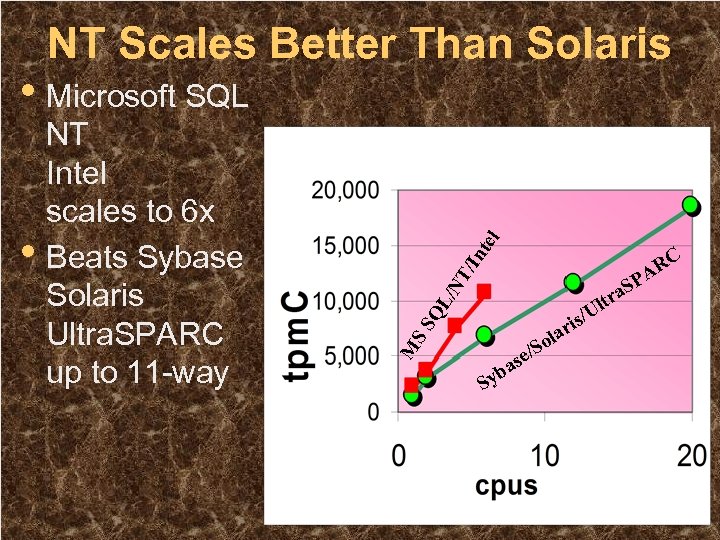

Transaction Throughput TPC-C
- On comparable hardware: NT scales better!
- SQL Server & NT improving 250% per year
- NT has best price/performance (2x cheaper)

NT Scales Better Than Solaris
- NT/Intel scales to 6x
- Beats Sybase/Solaris/UltraSPARC up to 11-way

Chart: Microsoft SQL Server/NT/Intel vs. Sybase/Solaris/UltraSPARC.

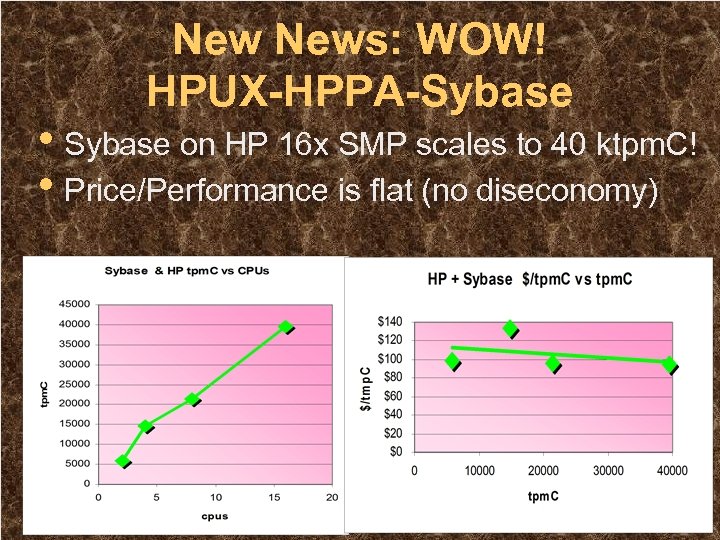

New News: WOW! HP-UX/HPPA/Sybase
- Sybase on HP 16x SMP scales to 40 ktpmC!
- Price/performance is flat (no diseconomy)

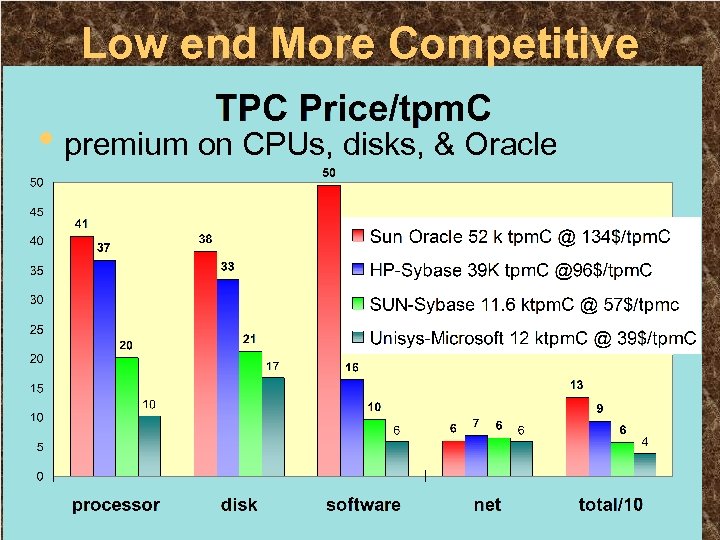

Low End More Competitive
- Premium on CPUs, disks, & Oracle

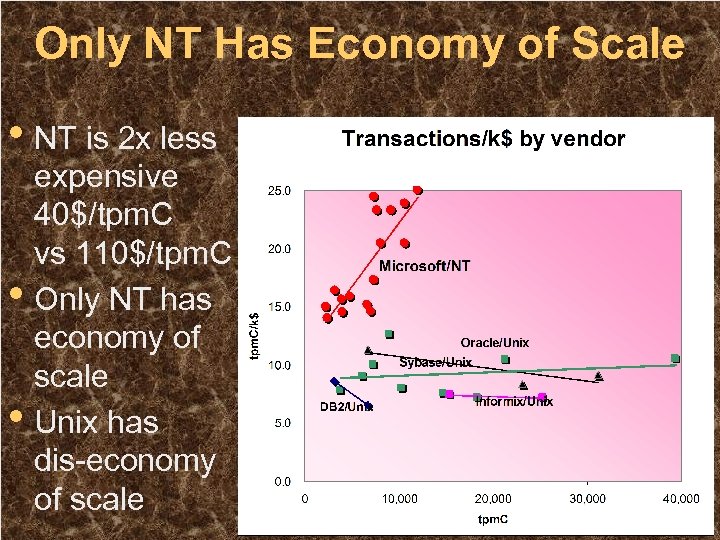

Only NT Has Economy of Scale
- NT is 2x less expensive: 40 $/tpmC vs. 110 $/tpmC
- Only NT has economy of scale
- Unix has diseconomy of scale

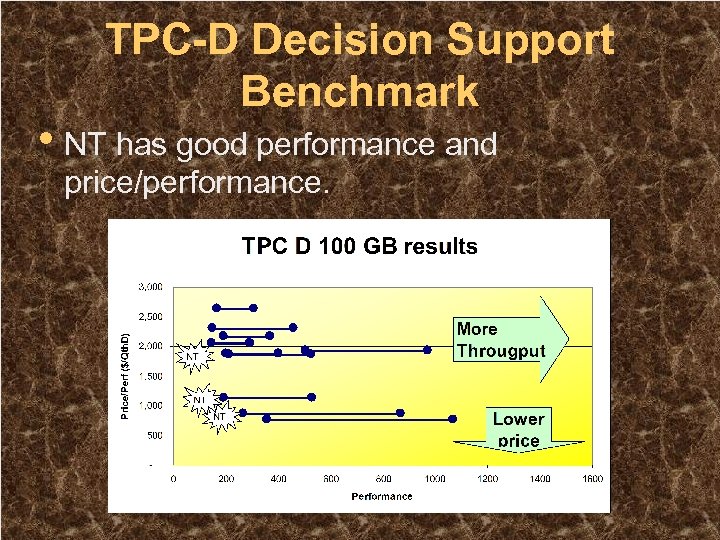

TPC-D Decision Support Benchmark
- NT has good performance and price/performance.

Scaleup To Big Databases?
- NT 4 and SQL Server 6.5
  - DBs up to 1 billion records, 100 GB
  - Covers most (80%) data warehouses
- SQL Server 7.0
  - Designed for terabytes
  - Hundreds of disks per server
  - SMP parallel search
- Data mining and multimedia
  - TerraServer is a good MM example

Chart: database sizes, from satellite photos of Earth (1 TB), Dayton-Hudson sales records (300 GB), Human Genome (3 GB), and Manhattan phone book (15 MB) down to an Excel spreadsheet.

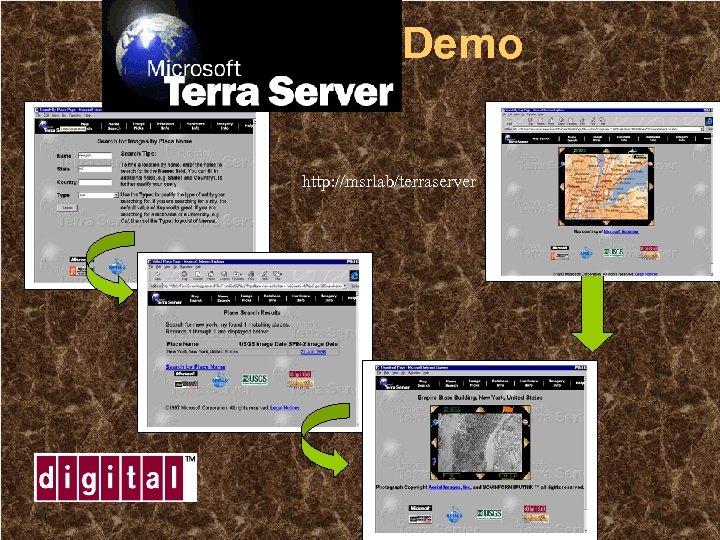

Database Scaleup: TerraServer™
- Demo NT and SQL Server scalability
- Stress test SQL Server 7.0
- Requirements
  - 1 TB
  - Unencumbered (put on www)
  - Interesting to everyone everywhere
  - And not offensive to anyone anywhere
- Loaded
  - 1.1 M place names from Encarta World Atlas
  - 1 M sq km from USGS (1-meter resolution)
  - 2 M sq km from Russian Space Agency (2 m)
- Will be on web (world's largest atlas)
- Sell images with Commerce Server
- USGS CRDA: 3 TB more coming

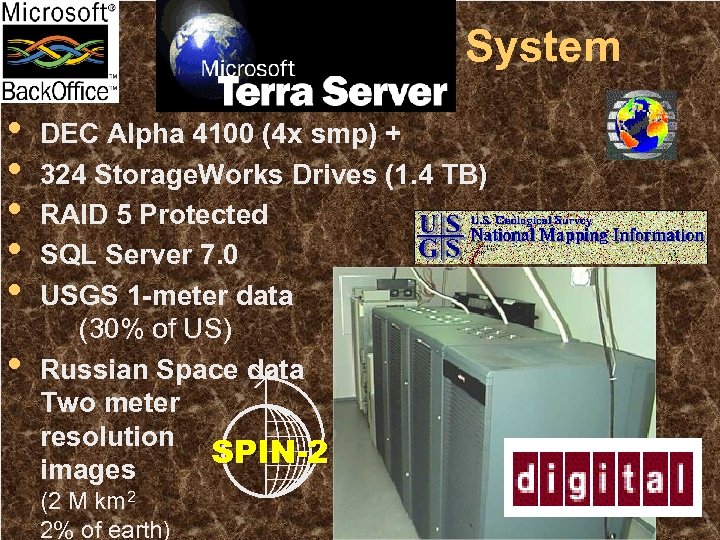

TerraServer System
- DEC Alpha 4100 (4x SMP) + 324 StorageWorks drives (1.4 TB)
- RAID 5 protected
- SQL Server 7.0
- USGS 1-meter data (30% of US)
- Russian space data: two-meter resolution SPIN-2 images (2 M km², 2% of Earth)

Demo: http://msrlab/terraserver

Manageability: Windows NT 5.0 and Windows 98
- Active Directory tracks all objects in net
- Integration with IE 4
  - Web-centric user interface
- Management Console
  - Component architecture
- Zero Admin Kit and Systems Management Server
- Plug and Play, Instant On, Remote Boot, …
- Hydra and IntelliMirror

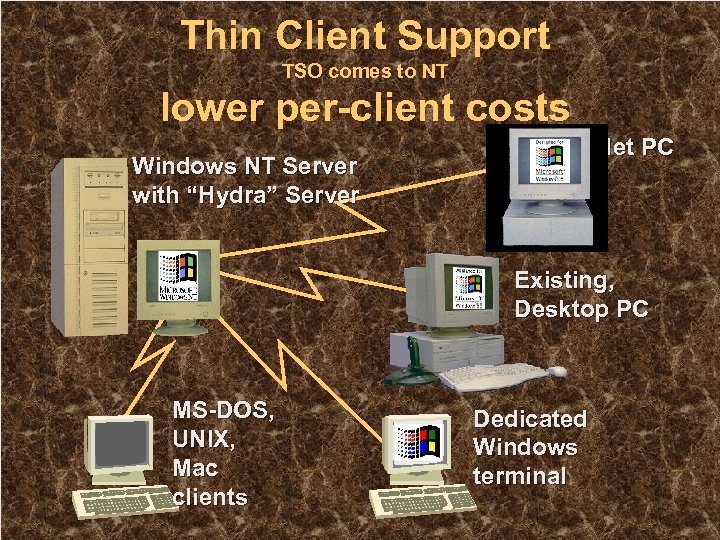

Thin Client Support: TSO comes to NT
- Lower per-client costs

Diagram: Windows NT Server with "Hydra"; clients: NetPC, existing desktop PC, MS-DOS, UNIX, and Mac clients, dedicated Windows terminal.

Windows NT 5.0 IntelliMirror™
- Extends CMU Coda File System ideas
- Files and settings mirrored on client and server
- Great for disconnected users
- Facilitates roaming
- Easy to replace PCs
- Optimizes network performance
- Best of PC and centralized computing advantages

Outline
- Scalability: What & Why?
- Scale UP: NT SMP scalability
- Scale OUT: NT Cluster scalability
- Key message:
  - NT can do the most demanding apps today.
  - Tomorrow will be even better.

Diagram: Scale Up, Scale Out, Scale Down.

Scale OUT: Clusters Have Advantages
- Fault tolerance:
  - Spare modules mask failures
- Modular growth without limits
  - Grow by adding small modules
- Parallel data search
  - Use multiple processors and disks
- Clients and servers made from the same stuff
  - Inexpensive: built with commodity CyberBricks

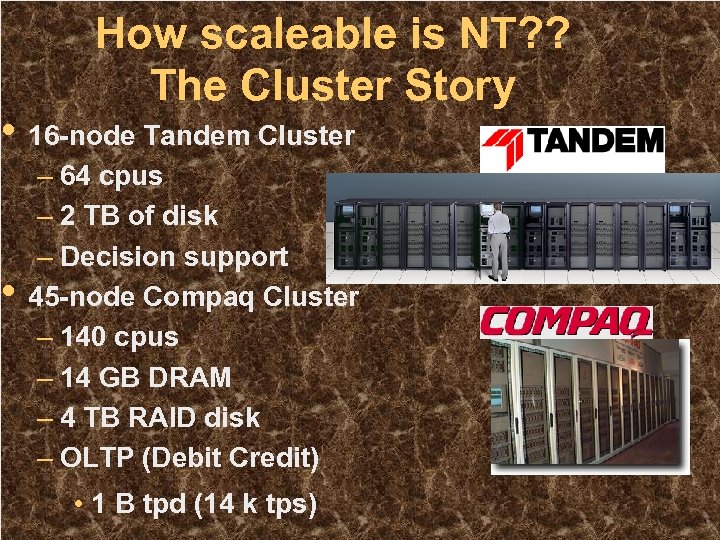

How Scaleable is NT? The Cluster Story
- 16-node Tandem cluster
  - 64 CPUs
  - 2 TB of disk
  - Decision support
- 45-node Compaq cluster
  - 140 CPUs
  - 14 GB DRAM
  - 4 TB RAID disk
  - OLTP (DebitCredit)
  - 1 B tpd (14 k tps)

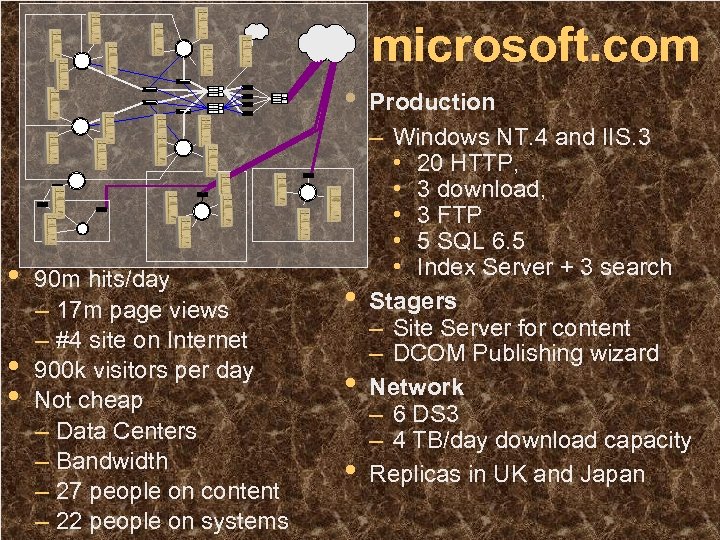

microsoft.com
- 90 M hits/day
  - 17 M page views
  - #4 site on Internet
- 900 K visitors per day
- Not cheap
  - Data centers
  - Bandwidth
  - 27 people on content
  - 22 people on systems
- Production
  - Windows NT 4 and IIS 3
  - 20 HTTP, 3 download, 3 FTP, 5 SQL 6.5
  - Index Server + 3 search
- Stagers
  - Site Server for content
  - DCOM publishing wizard
- Network
  - 6 DS3
  - 4 TB/day download capacity
- Replicas in UK and Japan

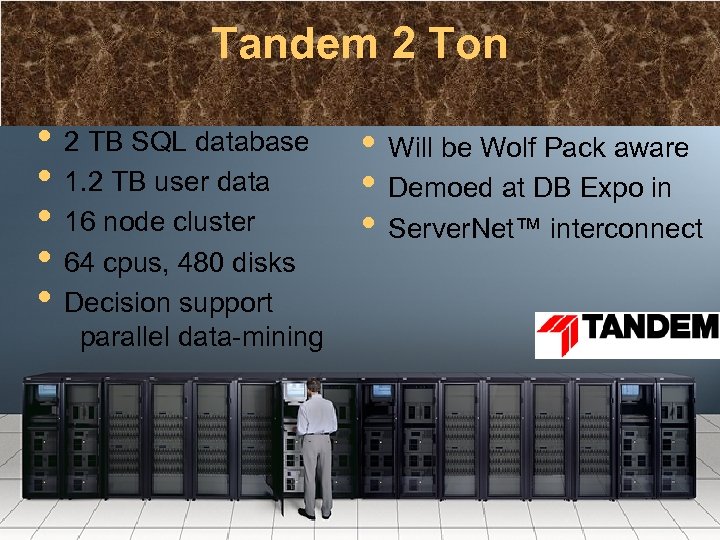

Tandem 2 Ton
- 2 TB SQL database
- 1.2 TB user data
- 16-node cluster
- 64 CPUs, 480 disks
- Decision support, parallel data mining
- Will be Wolfpack aware
- Demoed at DB Expo in
- ServerNet™ interconnect

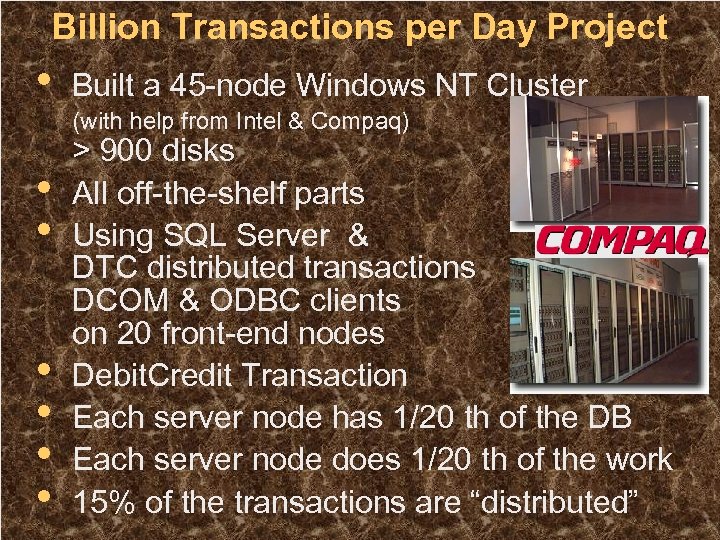

Billion Transactions per Day Project
- Built a 45-node Windows NT cluster (with help from Intel & Compaq)
  - More than 900 disks
  - All off-the-shelf parts
- Using SQL Server & DTC distributed transactions
- DCOM & ODBC clients on 20 front-end nodes
- DebitCredit transaction
  - Each server node has 1/20th of the DB
  - Each server node does 1/20th of the work
  - 15% of the transactions are "distributed"

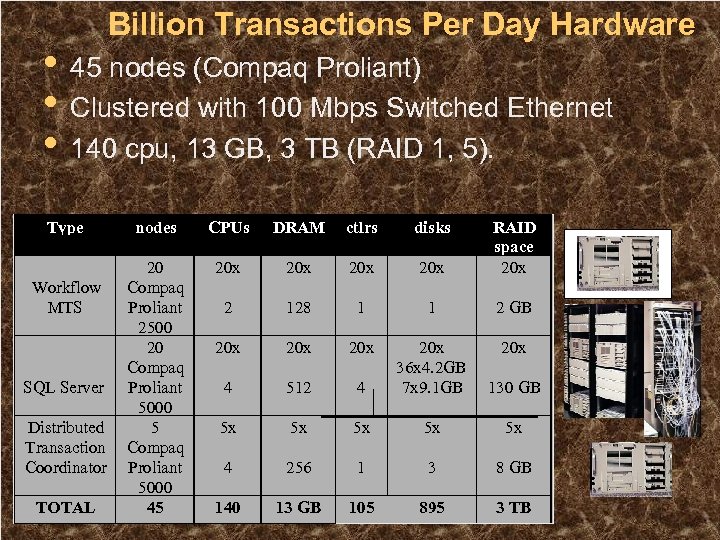
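Splitting the database 20 ways means each transaction must be routed to the node that owns its data, and a transaction whose account lives on another node becomes distributed. A minimal simulation sketch, assuming range partitioning of accounts and a uniformly chosen teller node (both assumptions; the partition size and all names are hypothetical), with the 20 nodes and the 15% distributed fraction taken from the slide:

```python
import random

N_SERVER_NODES = 20          # "each server node has 1/20th of the DB"
ACCOUNTS_PER_NODE = 100_000  # hypothetical partition size
DISTRIBUTED_FRACTION = 0.15  # "15% of the transactions are distributed"


def home_node(account_id):
    """Range partitioning (an assumption): node k owns one contiguous block of accounts."""
    return account_id // ACCOUNTS_PER_NODE


def make_transaction(rng):
    """Pick a teller node uniformly, then an account that is remote 15% of the time."""
    teller_node = rng.randrange(N_SERVER_NODES)
    if rng.random() < DISTRIBUTED_FRACTION:
        account_node = rng.choice([n for n in range(N_SERVER_NODES) if n != teller_node])
    else:
        account_node = teller_node
    account_id = account_node * ACCOUNTS_PER_NODE + rng.randrange(ACCOUNTS_PER_NODE)
    return teller_node, account_id


def simulate(n_transactions, seed=0):
    """Return the fraction of distributed transactions and the transactions started per node."""
    rng = random.Random(seed)
    per_node = [0] * N_SERVER_NODES
    distributed = 0
    for _ in range(n_transactions):
        teller_node, account_id = make_transaction(rng)
        per_node[teller_node] += 1
        if home_node(account_id) != teller_node:
            distributed += 1  # touches a second node: a distributed (DTC) transaction
    return distributed / n_transactions, per_node


fraction, per_node = simulate(100_000)
print(f"distributed: {fraction:.3f}; busiest node share: {max(per_node) / sum(per_node):.3f}")
```

With uniform routing each node starts about 1/20th of the work; the remote account updates add some load on other nodes, which this sketch does not count.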

Billion Transactions Per Day Hardware
- 45 nodes (Compaq Proliant)
- Clustered with 100 Mbps switched Ethernet
- 140 CPUs, 13 GB, 3 TB (RAID 1, 5)

| Type | Nodes | CPUs | DRAM | Controllers | Disks | RAID space |
|---|---|---|---|---|---|---|
| Workflow MTS | 20 Compaq Proliant 2500 | 20 x 2 | 20 x 128 MB | 20 x 1 | 20 x 1 | 20 x 2 GB |
| SQL Server | 20 Compaq Proliant 5000 | 20 x 4 | 20 x 512 MB | 20 x 4 | 20 x (36 x 4.2 GB + 7 x 9.1 GB) | 20 x 130 GB |
| Distributed Transaction Coordinator | 5 Compaq Proliant 5000 | 5 x 4 | 5 x 256 MB | 5 x 1 | 5 x 3 | 5 x 8 GB |
| TOTAL | 45 | 140 | 13 GB | 105 | 895 | 3 TB |

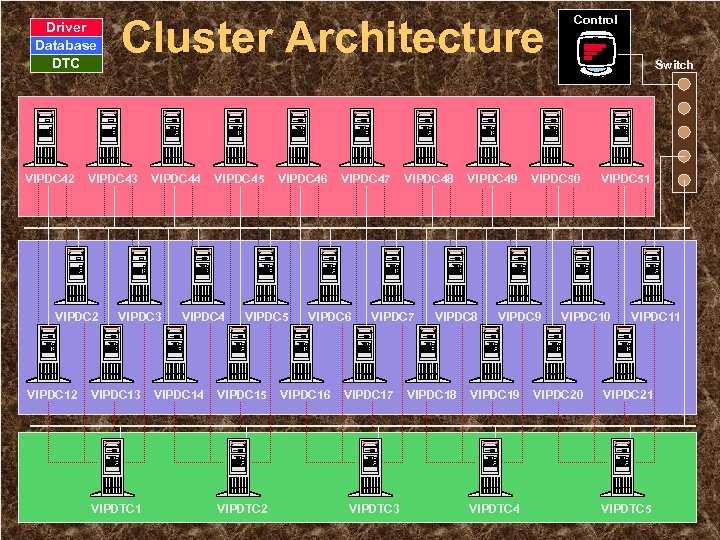

Cluster Architecture

Diagram: Driver, Database, and DTC tiers connected through a switch, plus a Control node. Node labels: VIPDC 2 to VIPDC 21, VIPDC 42 to VIPDC 51, VIPDTC 1 to VIPDTC 5.

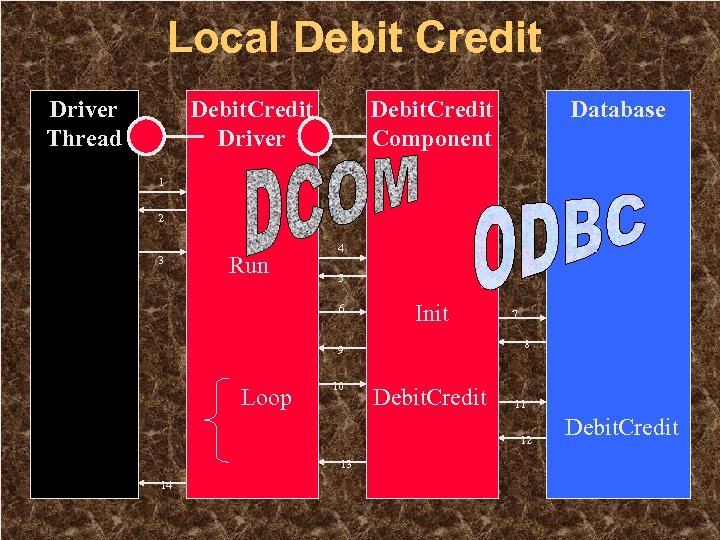

Local DebitCredit

Diagram: driver thread (DebitCredit driver: Run, Init, Loop), DebitCredit component, and database; steps numbered 1 to 14.

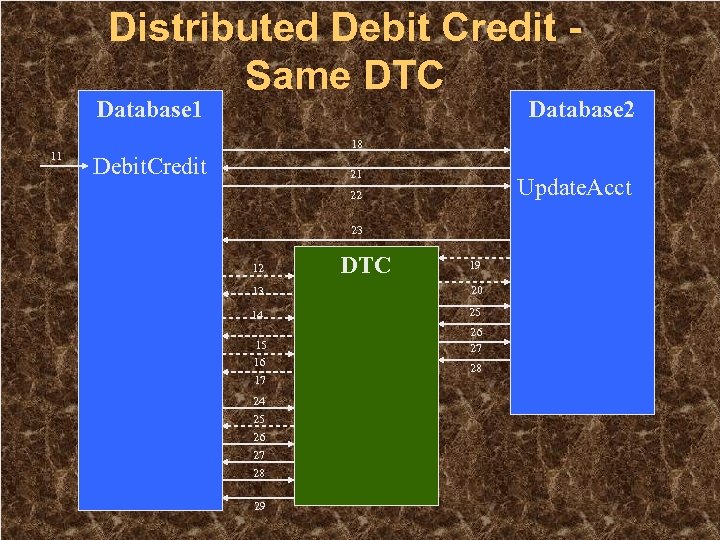
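The classic DebitCredit transaction updates an account, a teller, and a branch balance and appends a history record, all atomically. A single-node sketch of the Run/Init/Loop driver in the diagram, using Python's built-in `sqlite3` as a stand-in for SQL Server (an assumption; the table layout, sizes, and function names are hypothetical):

```python
import random
import sqlite3

SCHEMA = """
CREATE TABLE branch  (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL);
CREATE TABLE teller  (id INTEGER PRIMARY KEY, branch_id INTEGER NOT NULL, balance INTEGER NOT NULL);
CREATE TABLE account (id INTEGER PRIMARY KEY, branch_id INTEGER NOT NULL, balance INTEGER NOT NULL);
CREATE TABLE history (account_id INTEGER, teller_id INTEGER, branch_id INTEGER, delta INTEGER);
"""

N_TELLERS, N_ACCOUNTS = 10, 1_000  # hypothetical sizes for a single branch


def init(conn):
    """Init step: create and populate one branch with its tellers and accounts."""
    conn.executescript(SCHEMA)
    with conn:
        conn.execute("INSERT INTO branch VALUES (1, 0)")
        conn.executemany("INSERT INTO teller VALUES (?, 1, 0)",
                         [(t,) for t in range(1, N_TELLERS + 1)])
        conn.executemany("INSERT INTO account VALUES (?, 1, 0)",
                         [(a,) for a in range(1, N_ACCOUNTS + 1)])


def debit_credit(conn, account_id, teller_id, branch_id, delta):
    """One DebitCredit transaction; the `with` block commits, or rolls back on error."""
    with conn:
        conn.execute("UPDATE account SET balance = balance + ? WHERE id = ?", (delta, account_id))
        conn.execute("INSERT INTO history VALUES (?, ?, ?, ?)",
                     (account_id, teller_id, branch_id, delta))
        conn.execute("UPDATE teller SET balance = balance + ? WHERE id = ?", (delta, teller_id))
        conn.execute("UPDATE branch SET balance = balance + ? WHERE id = ?", (delta, branch_id))
        row = conn.execute("SELECT balance FROM account WHERE id = ?", (account_id,)).fetchone()
    return row[0]


def run(n_transactions=500, seed=42):
    """Driver thread: Init once, then Loop issuing DebitCredit transactions."""
    rng = random.Random(seed)
    conn = sqlite3.connect(":memory:")
    init(conn)
    for _ in range(n_transactions):
        debit_credit(conn,
                     account_id=rng.randint(1, N_ACCOUNTS),
                     teller_id=rng.randint(1, N_TELLERS),
                     branch_id=1,
                     delta=rng.randint(-1_000, 1_000))
    return conn


conn = run()
# Each transaction adds the same delta to all three balances and to history,
# so the four totals agree when every transaction was applied atomically.
totals = [conn.execute(f"SELECT SUM({col}) FROM {table}").fetchone()[0]
          for table, col in [("account", "balance"), ("teller", "balance"),
                             ("branch", "balance"), ("history", "delta")]]
print(totals)
```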

Distributed DebitCredit, Same DTC

Diagram: Database 1, Database 2, the DebitCredit and UpdateAcct components, and a single DTC; steps numbered 11 to 29.

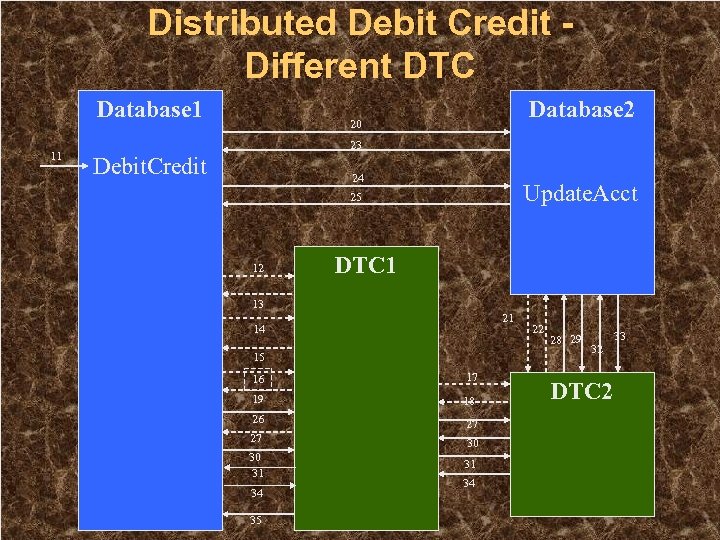

Distributed DebitCredit, Different DTC

Diagram: Database 1, Database 2, the DebitCredit and UpdateAcct components, and two coordinators (DTC 1 and DTC 2); steps numbered 11 to 35.

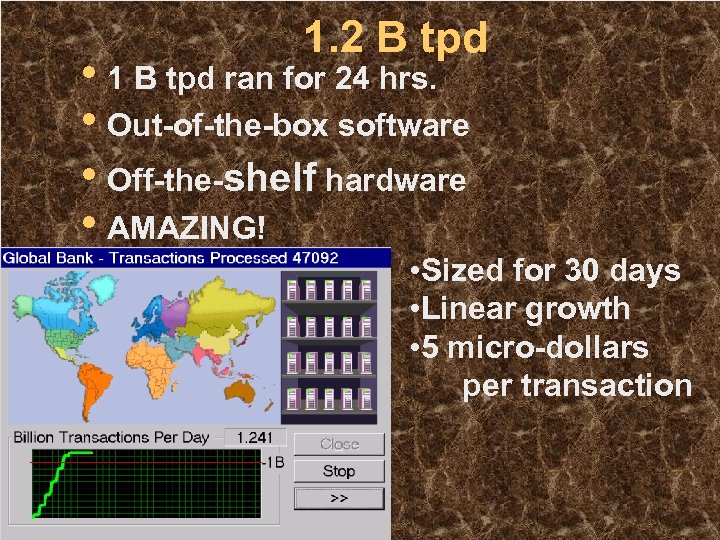
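When a DebitCredit touches two databases, a coordinator has to make both commit or both abort, which is the job of two-phase commit; with different DTCs, one coordinator acts as a participant of the other. A toy sketch of that tree-shaped protocol (the class names and the `can_commit` switch are hypothetical, and this is not the DTC API; it also omits the forced log writes and recovery a real transaction manager needs):

```python
class Participant:
    """A resource manager (for example, one database) in two-phase commit."""

    def __init__(self, name, can_commit=True):
        self.name = name
        self.can_commit = can_commit  # hypothetical switch to simulate a "no" vote
        self.state = "active"

    def prepare(self):
        """Phase 1: vote yes (and become prepared) or vote no (and abort)."""
        self.state = "prepared" if self.can_commit else "aborted"
        return self.can_commit

    def commit(self):
        """Phase 2, commit decision."""
        self.state = "committed"

    def abort(self):
        """Phase 2, abort decision."""
        self.state = "aborted"


class Coordinator(Participant):
    """A DTC-like coordinator. Because it is also a Participant,
    one coordinator can enlist under another (DTC 2 under DTC 1)."""

    def __init__(self, name):
        super().__init__(name)
        self.enlisted = []

    def enlist(self, participant):
        self.enlisted.append(participant)

    def prepare(self):
        # Ask every subordinate to prepare; any "no" vote means abort.
        votes = [p.prepare() for p in self.enlisted]
        self.can_commit = all(votes)
        return super().prepare()

    def commit(self):
        for p in self.enlisted:
            p.commit()
        super().commit()

    def abort(self):
        for p in self.enlisted:
            p.abort()
        super().abort()

    def run(self):
        """Root coordinator: phase 1 (prepare), then phase 2 (commit or abort)."""
        if self.prepare():
            self.commit()
        else:
            self.abort()
        return self.state


def build(db2_can_commit=True):
    """DTC 1 coordinates Database 1 directly and Database 2 through DTC 2."""
    dtc1, dtc2 = Coordinator("DTC 1"), Coordinator("DTC 2")
    db1 = Participant("Database 1")
    db2 = Participant("Database 2", can_commit=db2_can_commit)
    dtc1.enlist(db1)
    dtc1.enlist(dtc2)
    dtc2.enlist(db2)
    return dtc1, db1, db2


root, db1, db2 = build()
print(root.run(), db1.state, db2.state)  # committed committed committed
```

With `build(db2_can_commit=False)`, the "no" vote from Database 2 propagates up through DTC 2, and the abort decision propagates back down to both databases.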

1.2 B tpd
- 1 B tpd ran for 24 hrs.
- Out-of-the-box software
- Off-the-shelf hardware
- AMAZING!
- Sized for 30 days
- Linear growth
- 5 micro-dollars per transaction

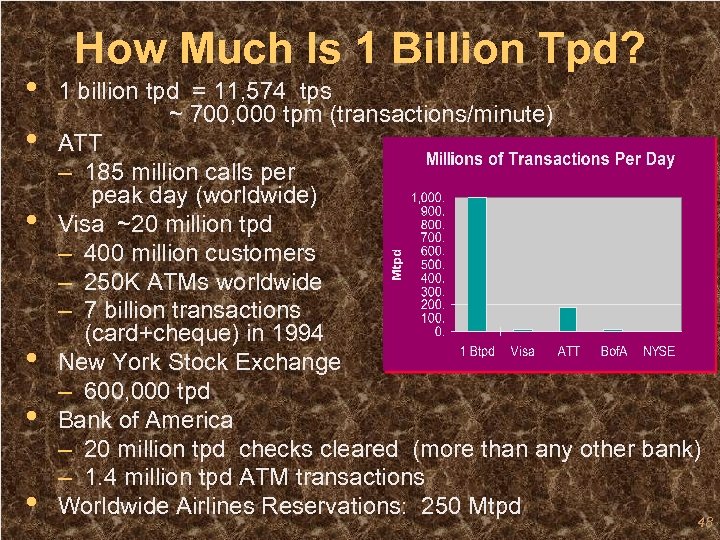

How Much Is 1 Billion tpd?
- 1 billion tpd = 11,574 tps ≈ 700,000 tpm (transactions/minute)
- AT&T
  - 185 million calls per peak day (worldwide)
- Visa ~20 million tpd
  - 400 million customers
  - 250 K ATMs worldwide
  - 7 billion transactions (card + cheque) in 1994
- New York Stock Exchange
  - 600,000 tpd
- Bank of America
  - 20 million tpd checks cleared (more than any other bank)
  - 1.4 million tpd ATM transactions
- Worldwide airline reservations: 250 Mtpd
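The per-second and per-minute figures are plain unit conversions over a 24-hour day. A small sketch of that arithmetic, assuming load spread evenly over the day (real peaks run higher than these averages; the helper names are ours):

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400
MINUTES_PER_DAY = 24 * 60       # 1,440


def per_second(per_day):
    """Average rate, assuming load is spread evenly over the day."""
    return per_day / SECONDS_PER_DAY


def per_minute(per_day):
    """Average rate per minute under the same assumption."""
    return per_day / MINUTES_PER_DAY


print(round(per_second(1e9)))    # 11574 tps
print(round(per_minute(1e9)))    # 694444 tpm, i.e. roughly 700,000
print(round(per_second(185e6)))  # AT&T peak day: about 2141 calls/s on average
```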

1 B tpd: So What?
- Shows what is possible, easy to build
  - Grows without limits
- Shows scaleup of DTC, MTS, SQL…
- Shows (again) that shared-nothing clusters scale
- Next task: make it easy
  - Auto partition data
  - Auto partition application
  - Auto manage & operate

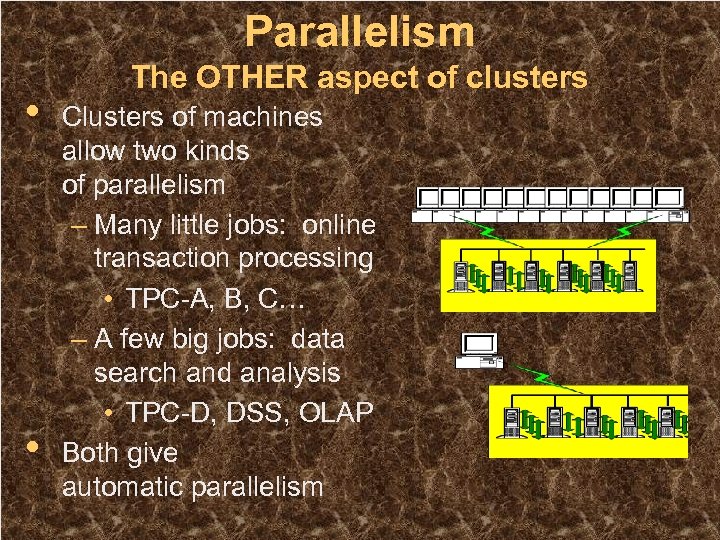

Parallelism: The OTHER Aspect of Clusters
- Clusters of machines allow two kinds of parallelism
  - Many little jobs: online transaction processing (TPC-A, B, C…)
  - A few big jobs: data search and analysis (TPC-D, DSS, OLAP)
- Both give automatic parallelism

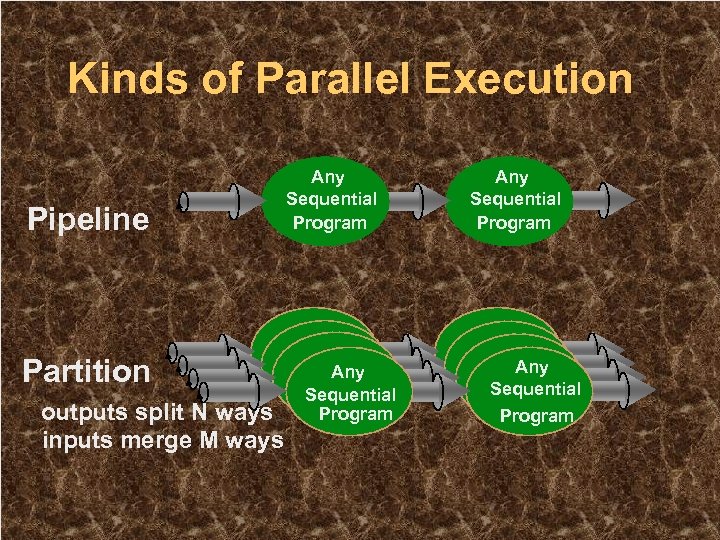

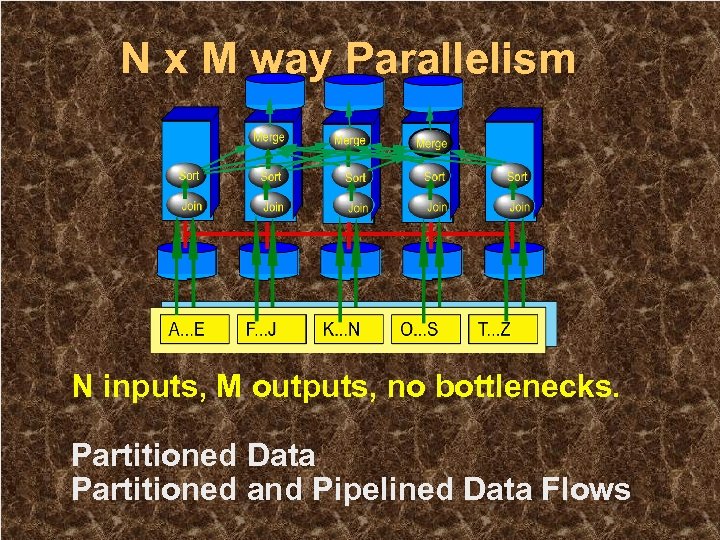

Kinds of Parallel Execution
- Pipeline
- Partition: outputs split N ways, inputs merge M ways

Diagram: any sequential program, used as a pipeline stage or replicated across partitions.

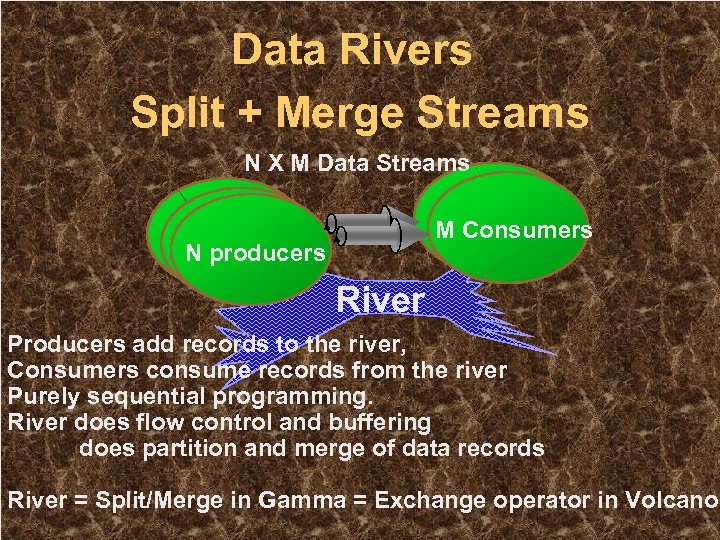

Data Rivers: Split + Merge Streams
- N producers, M consumers, N x M data streams
- Producers add records to the river; consumers consume records from the river
- Purely sequential programming
- River does flow control and buffering, and does partition and merge of data records
- River = Split/Merge in Gamma = Exchange operator in Volcano

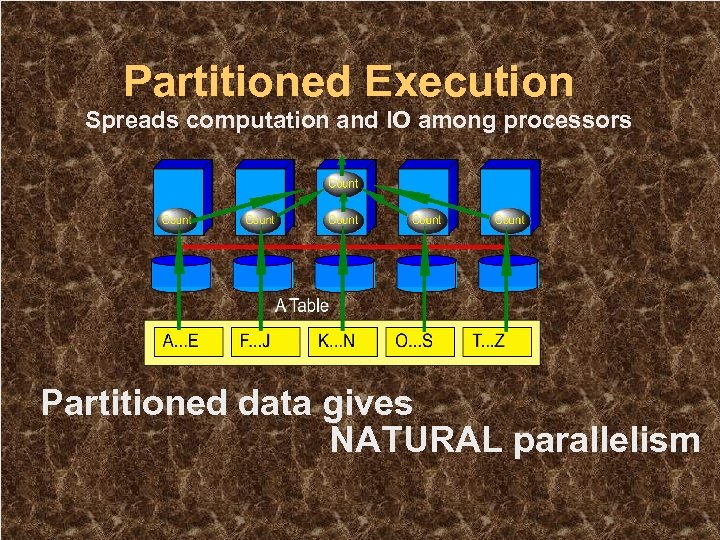
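A minimal sketch of the river idea using Python threads and bounded queues: producers and consumers stay purely sequential loops, while the river hash-partitions records (split), merges each consumer's input from all producers, and blocks fast producers when a queue is full (flow control). The class and function names are hypothetical; a real river, like Gamma's split/merge or Volcano's exchange operator, moves records between processes or machines rather than threads.

```python
import queue
import threading
from collections import defaultdict


class River:
    """A minimal in-process river: N producers feed M consumers through M bounded queues."""

    _END = object()  # end-of-stream marker, one per producer per consumer

    def __init__(self, n_producers, n_consumers, capacity=64):
        self.n_producers = n_producers
        # Bounded queues provide flow control: put() blocks while a consumer's queue is full.
        self.queues = [queue.Queue(maxsize=capacity) for _ in range(n_consumers)]

    def put(self, key, record):
        """Split: hash-partition each record to exactly one consumer."""
        self.queues[hash(key) % len(self.queues)].put((key, record))

    def close(self):
        """Called once by each producer when it has no more records."""
        for q in self.queues:
            q.put(self._END)

    def records(self, consumer_id):
        """Merge: yield this consumer's records from all producers until all have closed."""
        q = self.queues[consumer_id]
        open_producers = self.n_producers
        while open_producers:
            item = q.get()
            if item is self._END:
                open_producers -= 1
            else:
                yield item


def count_by_key(partitions, n_consumers=3):
    """N producers (one per input partition) and M consumers running plain sequential loops."""
    river = River(len(partitions), n_consumers)
    counts = [defaultdict(int) for _ in range(n_consumers)]

    def producer(rows):
        for key, value in rows:
            river.put(key, value)
        river.close()

    def consumer(consumer_id):
        for key, value in river.records(consumer_id):
            counts[consumer_id][key] += value

    threads = [threading.Thread(target=producer, args=(rows,)) for rows in partitions]
    threads += [threading.Thread(target=consumer, args=(c,)) for c in range(n_consumers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

    result = {}
    for partial in counts:
        result.update(partial)  # hash partitioning sends each key to one consumer only
    return result


partitions = [[(k % 5, 1) for k in range(100)] for _ in range(4)]  # N = 4 producers
print(count_by_key(partitions))
```

Because every record with a given key lands at the same consumer, each consumer's partial counts are final for its keys, and the results can simply be concatenated.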

Partitioned Execution
- Spreads computation and IO among processors
- Partitioned data gives NATURAL parallelism

N x M Way Parallelism
- N inputs, M outputs, no bottlenecks

Diagram: partitioned data; partitioned and pipelined data flows.

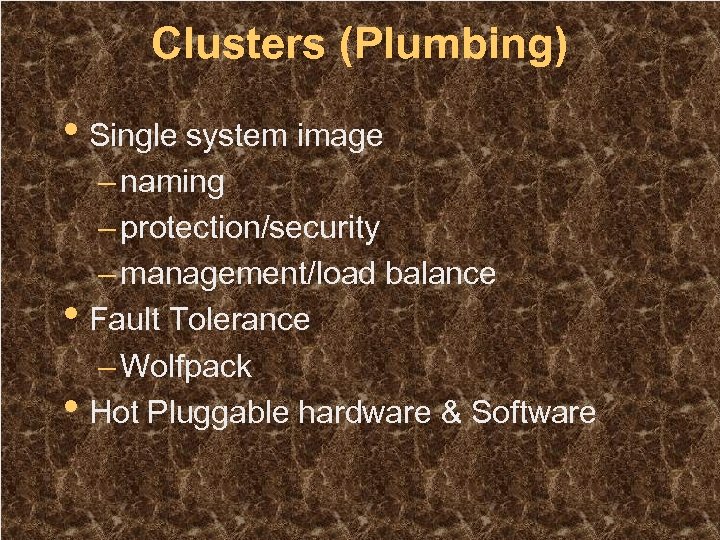
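Partitioned execution runs the same sequential program on each partition and then merges the partial results. A minimal sketch using `concurrent.futures` (round-robin partitioning and the function names are illustrative assumptions). In CPython, threads only show the structure here: the GIL prevents a CPU speedup for pure-Python work, so a real system would use processes or, as in the talk, separate cluster nodes.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(records, n):
    """Round-robin partitioning into n disjoint partitions (an illustrative choice)."""
    return [records[i::n] for i in range(n)]


def scan(rows, predicate):
    """The unchanged sequential program: filter one partition and return a partial aggregate."""
    count = total = 0
    for row in rows:
        if predicate(row):
            count += 1
            total += row
    return count, total


def partitioned_scan(records, predicate, n_partitions=4):
    """Run `scan` on every partition in parallel, then merge the partial results."""
    parts = partition(records, n_partitions)
    with ThreadPoolExecutor(max_workers=n_partitions) as pool:
        partials = list(pool.map(scan, parts, [predicate] * n_partitions))
    return sum(c for c, _ in partials), sum(t for _, t in partials)


def is_multiple_of_3(x):
    return x % 3 == 0


records = list(range(1_000))
print(partitioned_scan(records, is_multiple_of_3))  # (334, 166833)
print(scan(records, is_multiple_of_3))              # same answer from one sequential scan
```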

Clusters (Plumbing)
- Single system image
  - Naming
  - Protection/security
  - Management/load balance
- Fault tolerance
  - Wolfpack
- Hot-pluggable hardware & software

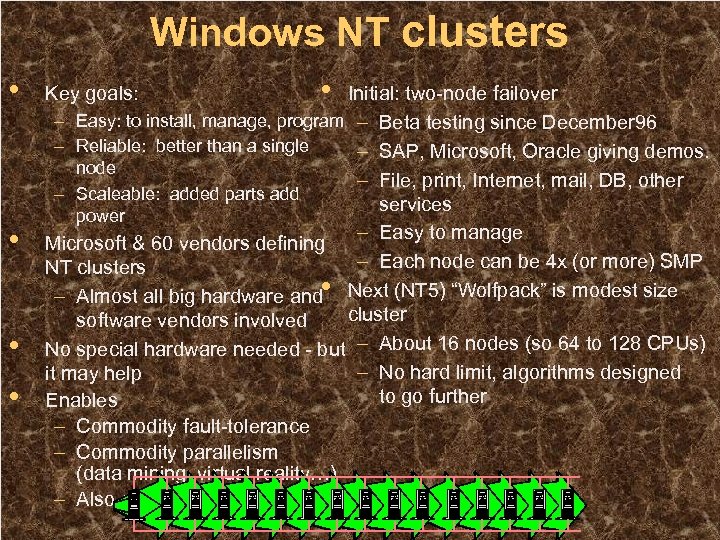

Windows NT Clusters
- Key goals:
  - Easy: to install, manage, program
  - Reliable: better than a single node
  - Scaleable: added parts add power
- Microsoft & 60 vendors defining NT clusters
  - Almost all big hardware and software vendors involved
- No special hardware needed, but it may help
- Enables
  - Commodity fault tolerance
  - Commodity parallelism (data mining, virtual reality…)
  - Also great for workgroups!
- Initial: two-node failover
  - Beta testing since December 96
  - SAP, Microsoft, Oracle giving demos
  - File, print, Internet, mail, DB, other services
  - Easy to manage
  - Each node can be 4x (or more) SMP
- Next (NT 5) "Wolfpack" is a modest-size cluster
  - About 16 nodes (so 64 to 128 CPUs)
  - No hard limit; algorithms designed to go further

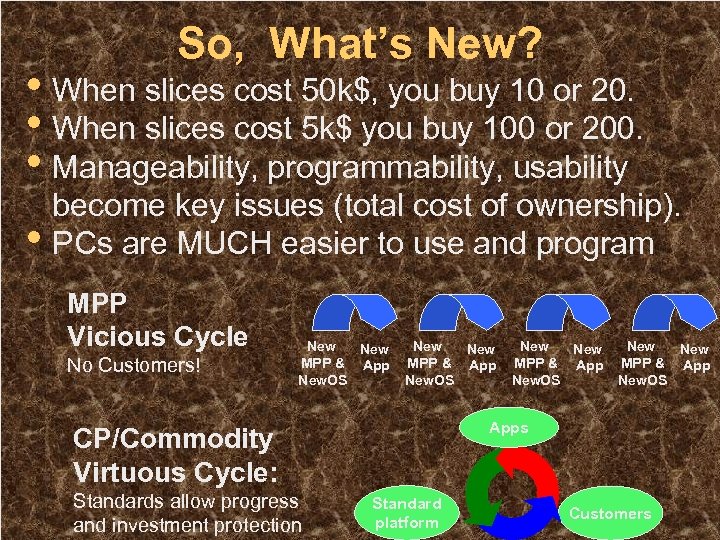

So, What's New?
- When slices cost 50 k$, you buy 10 or 20.
- When slices cost 5 k$, you buy 100 or 200.
- Manageability, programmability, and usability become key issues (total cost of ownership).
- PCs are MUCH easier to use and program.

Diagram: MPP vicious cycle (new MPP & new OS, new apps, no customers!) vs. commodity virtuous cycle (standard platform, apps, customers). Standards allow progress and investment protection.

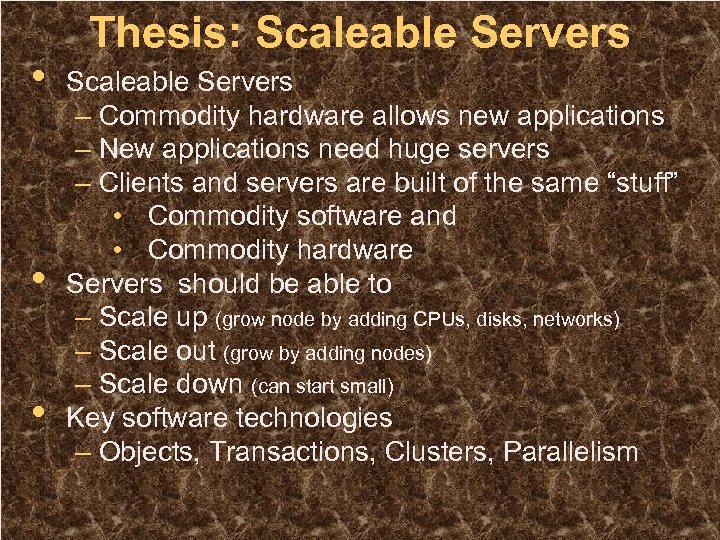

Thesis: Scaleable Servers
- Commodity hardware allows new applications
- New applications need huge servers
- Clients and servers are built of the same "stuff"
  - Commodity software and
  - Commodity hardware
- Servers should be able to
  - Scale up (grow node by adding CPUs, disks, networks)
  - Scale out (grow by adding nodes)
  - Scale down (can start small)
- Key software technologies
  - Objects, transactions, clusters, parallelism

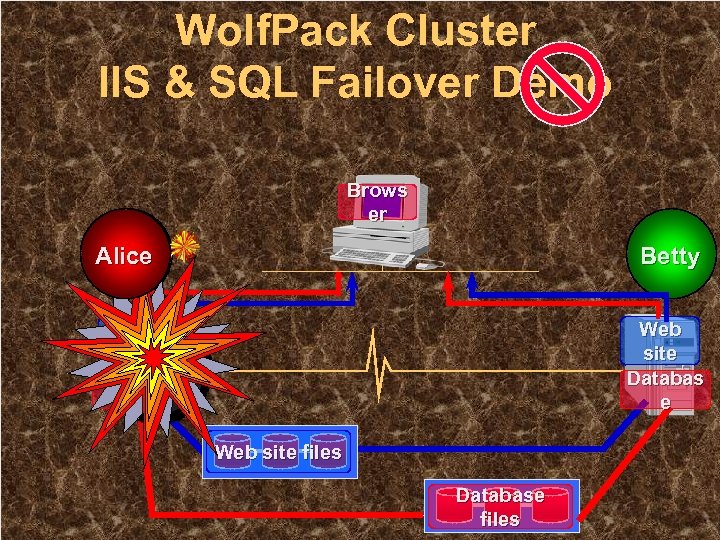

Wolfpack Cluster IIS & SQL Failover Demo

Diagram: a browser connects to a two-node cluster (Alice and Betty) running the web site and the database, with web site files and database files.
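The failover in this demo can be modeled as a heartbeat check: when a node stays silent past a timeout, its resource groups (here the web site and the database) move to a surviving node. This is a toy model only. The class names, the 5-second timeout, and least-loaded placement are assumptions, it is not the Wolfpack API, and real failover must also restart the services and hand over their disks, which this omits.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    last_heartbeat: float = 0.0
    groups: set = field(default_factory=set)  # resource groups this node currently owns


class Cluster:
    """Hypothetical heartbeat-based failover; not the Wolfpack/MSCS API."""

    def __init__(self, nodes, timeout=5.0):
        self.nodes = {n.name: n for n in nodes}
        self.timeout = timeout  # seconds of silence before a node is presumed failed

    def heartbeat(self, name, now):
        self.nodes[name].last_heartbeat = now

    def check(self, now):
        """Move every resource group off nodes whose last heartbeat is too old."""
        alive = [n for n in self.nodes.values() if now - n.last_heartbeat <= self.timeout]
        failed = [n for n in self.nodes.values() if now - n.last_heartbeat > self.timeout]
        moves = []
        if not alive:
            return moves  # nobody left to take over
        for node in failed:
            for group in sorted(node.groups):
                target = min(alive, key=lambda n: len(n.groups))  # least-loaded survivor
                target.groups.add(group)
                moves.append((group, node.name, target.name))
            node.groups.clear()
        return moves


alice = Node("Alice", groups={"web site"})
betty = Node("Betty", groups={"database"})
cluster = Cluster([alice, betty])
cluster.heartbeat("Alice", now=0.0)
cluster.heartbeat("Betty", now=0.0)
cluster.heartbeat("Alice", now=6.0)  # Betty goes silent after t = 0
print(cluster.check(now=8.0))        # [('database', 'Betty', 'Alice')]
print(sorted(alice.groups))          # ['database', 'web site']
```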

Summary
- SMP scale UP: OK but limited
- Cluster scale OUT: OK and unlimited
- Manageability:
  - Fault tolerance OK & easy!
  - More needed
- CyberBricks work
- Manual federation now
- Automatic in future

Diagram: Scale Up, Scale Out, Scale Down.

Scalability Research Problems
- Automatic everything
- Scaleable applications
  - Parallel programming with clusters
  - Harvesting cluster resources
- Data and process placement
  - Auto load balance
  - Dealing with scale (thousands of nodes)
- High-performance DCOM
  - Active messages meet ORBs?
- Process pairs, other FT concepts?
- Real time: instant failover
- Geographic (WAN) failover
