23370ca8e8348abdc88af93e06a3026c.ppt

- Number of slides: 58

Why Statistics?
Statistical literacy is a necessary precondition for an educated citizenship in a technological democracy. Understanding risks and asking critical questions can also shape the emotional climate in a society so that hopes and anxieties are no longer as easily manipulated from outside and citizens can develop a better-informed and more relaxed attitude toward their health.
Gigerenzer, G., Gaissmaier, W., Kurz-Milcke, E., Schwartz, L. M. and Woloshin, S. (2007) “Helping doctors and patients to make sense of health statistics”. Psychological Science in the Public Interest, 8, 53–96.
19 March 2018, 3:57 PM

Why Statistics?
Our nine-point guide to spotting a dodgy statistic - The Guardian - 17 July 2016
David Spiegelhalter is the Winton Professor of the Public Understanding of Risk at the University of Cambridge and president of the Royal Statistical Society 2017 and 2018.
1. Use a real number, but change its meaning
2. Make the number look big (but not too big)
3. Casually imply causation from correlation
4. Choose your definitions carefully
5. Use total numbers rather than proportions (or whichever way suits your argument)
6. Don’t provide any relevant context
7. Exaggerate the importance of a possibly illusory change
8. Prematurely announce the success of a policy initiative using unofficial selected data
9. If all else fails, just make the numbers up

Why Statistics?
“If I had my way no man guilty of golf would be eligible to any office of trust or profit under the United States” - H. L. Mencken, A Mencken Chrestomathy
The Donald comes under fire for golfing every weekend in October, spending one out of every three days since taking office at a Trump golf property.

Why Statistics?
Best (2013) has provided a guide to spotting dubious statistics. Be suspicious when you encounter:
1. Numbers that seem inconsistent with basic, familiar facts
2. Correlations that are used to suggest causation
3. Extreme examples that are presented as typical instances
4. Large, round numbers that reflect possible guesses
5. Unclear or very broad definitions of phenomena that may include irrelevant cases
6. Inconsistent measurement techniques that may increase the number of extreme cases
7. Omission of data or cases that could provide counter-arguments
Best, J. (2013) Stat-Spotting: A Field Guide to Identifying Dubious Data. Berkeley: University of California Press. ISBN: 9780520279988
Ushakov, N. G. and Ushakov, V. G. (2017) Statistical analysis of rounded data: Recovering of information lost due to rounding. Journal of the Korean Statistical Society. DOI: 10.1016/j.jkss.2017.01.003
We consider situations when data for statistical analysis are given in a rounded form, and the rounding errors (the discretization step) are comparable or even greater than the measurement errors. We study possibilities to achieve accuracy much higher than the discretization step and to recover information lost due to rounding. The main tool for solving this problem is the use of additional measurement errors.

Why Statistics?
Statistical literacy guide - House of Commons - 2010
The guide details some common ways in which statistics are used inappropriately or spun and some tips to help spot this. The tips are given in detail at the end of the note, but the three essential questions to ask yourself when looking at statistics are:
- Compared to what?
- Since when?
- Says who?

Why Statistics?
Politics and the English Language - George Orwell – 1946
An essay in which he complains that people had begun to speak and write without clarity. He criticises the "ugly and inaccurate" written English of his time. The essay focuses on political language, which "is designed to make lies sound truthful and murder respectable, and to give an appearance of solidity to pure wind."
1. Never use a metaphor, simile or other figure of speech which you are used to seeing in print.
2. Never use a long word where a short one will do.
3. If it is possible to cut a word out, always cut it out.
4. Never use the passive where you can use the active.
5. Never use a foreign phrase, a scientific word or a jargon word if you can think of an everyday English equivalent.
6. Break any of these rules sooner than say anything barbarous.

Ten Simple Rules for Effective Statistical Practice
In particular rule 6 (Kass et al., 2016): KISS - Keep It Simple, Stupid.
Kass R. E., Caffo B. S., Davidian M., Meng X.-L., Yu B., Reid N. (2016) Ten Simple Rules for Effective Statistical Practice. PLoS Comput Biol 12(6): e1004961. DOI: 10.1371/journal.pcbi.1004961
Ten simple rules to use statistics effectively - ScienceDaily - 20 June 2016

Ten Simple Rules for Effective Statistical Practice (Kass et al., 2016)
1. Statistical Methods Should Enable Data to Answer Scientific Questions
2. Signals Always Come With Noise
3. Plan Ahead, Really Ahead
4. Worry About Data Quality
5. Statistical Analysis Is More Than a Set of Computations
6. Keep it Simple
7. Provide Assessments of Variability
8. Check Your Assumptions
9. When Possible, Replicate!
10. Make Your Analysis Reproducible

Which Approach?
There is a divide in contemporary research between qualitative and quantitative approaches. Qualitative methods are to be found almost exclusively in social sciences. The quantitative approach is especially developed in natural sciences, with sophisticated methodology developed around hypothesis testing and inferential statistics. The middle ground is represented by the historical approach, combining verbal description with descriptive statistics. The latter offers amazing options towards data visualisation, yet carries relatively little weight in terms of academic success. It is argued that a more even spread of the three broad types of methodologies would benefit research across fields.
Procheş, Ş. (2016) Descriptive statistics in research and teaching: are we losing the middle ground? Quality & Quantity 50, 2165-2174. DOI: 10.1007/s11135-015-0256-3

Can You Trust Papers?
This is what happened when psychologists tried to replicate 100 previously published findings (BPS)
After some high-profile and at times acrimonious failures to replicate past landmark findings, psychology as a discipline and scientific community has led the way in trying to find out more about why some scientific findings reproduce and others don't, including instituting reporting practices to improve the reliability of future results.
Open Science Collaboration (2015) Estimating the reproducibility of psychological science
The tally of retractions in MEDLINE, one of the world’s largest databases of scientific abstracts, for 2016 has just been released, and the number is: 664. The site is dedicated to monitoring retracted papers!

Can You Trust Papers?
Replication success correlates with researcher expertise (but not for the reasons you might think) – BPS
To see if there really is any link between researcher expertise and the chances of replication success, Bench and his colleagues have analysed the results of the recent “Reproducibility Project” in which 270 psychologists attempted to replicate 100 previous studies, managing a success rate of less than 40 per cent. Bench’s team found that the expertise of the replicating team, as measured by the first and senior authors’ numbers of prior publications, was indeed correlated with the size of effect obtained in the replication attempt, but there’s more to the story.
Bench, S. W., Rivera, G. N., Schlegel, R. J., Hicks, J. A. and Lench, H. C. (2017) Does expertise matter in replication? An examination of the reproducibility project: Psychology. Journal of Experimental Social Psychology 68, 181–184. DOI: 10.1016/j.jesp.2016.07.003

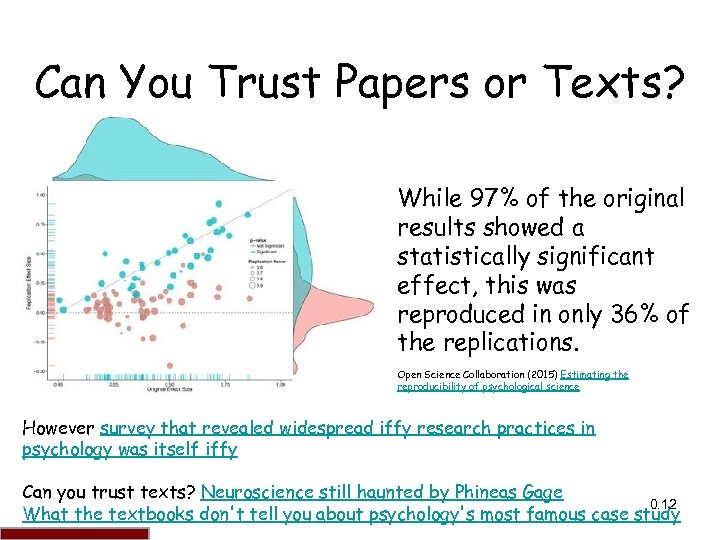

Can You Trust Papers or Texts?
While 97% of the original results showed a statistically significant effect, this was reproduced in only 36% of the replications.
Open Science Collaboration (2015) Estimating the reproducibility of psychological science
However, a survey that revealed widespread iffy research practices in psychology was itself iffy.
Can you trust texts?
Neuroscience still haunted by Phineas Gage
What the textbooks don't tell you about psychology's most famous case study

Can You Trust Papers?
Researchers have found more than half of the public datasets provided with scientific papers are incomplete, which prevents reproducibility tests and follow-up studies.
Roche, D. G., Kruuk, L. E. B., Lanfear, R. and Binning, S. A. (2015) Public Data Archiving in Ecology and Evolution: How Well Are We Doing? PLOS Biology 13(11): e1002295. DOI: 10.1371/journal.pbio.1002295
Simple errors limit scientific scrutiny - ScienceDaily - 11 November 2015

Can You Trust Papers?
Allison, D. B., Brown, A. W., George, B. J. and Kaiser, K. A. (2016) Reproducibility: A tragedy of errors. Nature 530, 27–29. DOI: 10.1038/530027a
“In the course of assembling weekly lists of articles in our field, we began noticing more peer-reviewed articles containing what we call substantial or invalidating errors. These involve factual mistakes or veer substantially from clearly accepted procedures in ways that, if corrected, might alter a paper's conclusions.”
“After attempting to address more than 25 of these errors with letters to authors or journals, and identifying at least a dozen more, we had to stop - the work took too much of our time. Our efforts revealed invalidating practices that occur repeatedly (see ‘Three common errors’) and showed how journals and authors react when faced with mistakes that need correction.”
Reproducibility should be at science’s heart. It isn’t. But that may soon change.
Let’s just try that again - The Economist - 6 Feb 2016

Can You Trust Papers?
Gilmore, R. O., Diaz, M. T., Wyble, B. A. and Yarkoni, T. (2017) Progress toward openness, transparency, and reproducibility in cognitive neuroscience. Annals of the New York Academy of Sciences. DOI: 10.1111/nyas.13325
Criticism that researchers in the psychological and brain sciences are failing to reproduce studies - a key step in the scientific method - may have more to do with the complexity of managing data than with an attempt to hide methods and results.
Data sharing can offer help in science's reproducibility crisis – ScienceDaily – 18 May 2017

Can You Trust Papers?
Can We Trust Psychological Studies? – BPS Research Digest – 7 Nov 2016
This is Episode 8 of PsychCrunch, the podcast from the British Psychological Society’s Research Digest, sponsored by Routledge Psychology.

Are you statistically challenged?
1. What percentage of drivers are better than average?
Calculated Risks: How to Know When Numbers Deceive You, by Gerd Gigerenzer, Simon & Schuster, New York.

Are you statistically challenged?
1. What percentage of drivers are better than average?
Around 63%, when “average” is determined by number of accidents. This is so because the distribution of accidents is asymmetrical; bad drivers account for more accidents than good ones, so most drivers have fewer than the average number of accidents.
(mean/median/mode - normality)
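
The effect of a skewed distribution on "better than average" can be sketched in a few lines. The accident counts below are hypothetical and chosen only to illustrate the idea; they are not data from the book and will not reproduce the 63% figure.

```python
from statistics import mean, median

# Hypothetical accident counts for ten drivers (illustrative only).
# One very bad driver pulls the mean well above the typical value.
accidents = [0, 0, 0, 0, 1, 1, 1, 2, 3, 12]

avg = mean(accidents)      # arithmetic mean of the counts
mid = median(accidents)    # middle value of the sorted counts

# A driver is "better than average" here if they have fewer accidents than the mean.
better = sum(1 for a in accidents if a < avg)
share_better = better / len(accidents)

print(f"mean = {avg}, median = {mid}, share better than the mean = {share_better:.0%}")
```

With a right-skewed distribution the mean sits above the median, so well over half of the drivers can have fewer accidents than the mean.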

Are you statistically challenged?
2. If men with high cholesterol have a 50% higher risk of heart attack than men with normal cholesterol, should you panic if your cholesterol level is high?

Are you statistically challenged?
2. If men with high cholesterol have a 50% higher risk of heart attack than men with normal cholesterol, should you panic if your cholesterol level is high?
Probably not. Although 50% sounds frightening, it is only because it is given in relative terms: 6 out of 100 men with high cholesterol will have a heart attack in 10 years, versus 4 out of 100 for men with normal levels. In absolute terms, the increased risk is only 2 out of 100 – or 2 percentage points. Look at it this way: even in the high cholesterol category, 94% of the men won’t have heart attacks.
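
The relative versus absolute framing above is simple arithmetic, shown here as a minimal sketch. The function name `risk_comparison` is a hypothetical helper introduced for illustration; the inputs are the 6-in-100 and 4-in-100 figures from the slide.

```python
def risk_comparison(exposed_cases, baseline_cases, group_size):
    """Return (relative increase, absolute increase) in risk between two groups."""
    exposed = exposed_cases / group_size      # risk in the high-cholesterol group
    baseline = baseline_cases / group_size    # risk in the normal-cholesterol group
    relative_increase = (exposed - baseline) / baseline
    absolute_increase = exposed - baseline
    return relative_increase, absolute_increase

# Figures from the slide: 6 vs 4 heart attacks per 100 men over 10 years.
relative, absolute = risk_comparison(6, 4, 100)
print(f"relative increase: {relative:.0%}, absolute increase: {absolute:.0%} of men")
```

The same pair of numbers yields a large relative figure and a small absolute one, which is why it matters which of the two a headline reports.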

Are you statistically challenged?
3. HIV tests are 99.9% accurate. You test positive for HIV, although you have no known risk factors. What is the likelihood that you have AIDS, if 0.01% of men with no known risk behaviour are infected? Estimate your risk.

Are you statistically challenged?
3. HIV tests are 99.9% accurate. You test positive for HIV, although you have no known risk factors. What is the likelihood that you have AIDS, if 0.01% of men with no known risk behaviour are infected?
Fifty-fifty. Most people assume the possibility is much higher, an illustration of the “illusion of certainty.” The correct answer is clear if the problem is framed in frequencies: Take 10,000 men with no known risk factors. 1 of these men has AIDS; he will almost certainly test positive. Of the remaining 9,999 men, 1 will also test positive. Thus, the likelihood that you have AIDS given a positive test is 1 out of 2. A positive AIDS test, although cause for concern, is far from a death sentence.
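
The frequency argument above is Bayes' rule in disguise. The sketch below assumes a sensitivity of 99.9% and a false-positive rate of 0.01% (1 in 10,000); the latter is an assumption read off the slide's frequency count of 1 false positive among 9,999 uninfected men, not a figure the slide states directly. The function name is a hypothetical helper.

```python
def positive_predictive_value(prevalence, sensitivity, false_positive_rate):
    """P(infected | positive test), computed with Bayes' rule."""
    true_positives = prevalence * sensitivity
    false_positives = (1 - prevalence) * false_positive_rate
    return true_positives / (true_positives + false_positives)

# Prevalence of 0.01% from the slide; the other two values are assumptions (see above).
ppv = positive_predictive_value(
    prevalence=0.0001, sensitivity=0.999, false_positive_rate=0.0001
)

# Alternative reading: "99.9% accurate" taken as a 0.1% false-positive rate.
ppv_alt = positive_predictive_value(
    prevalence=0.0001, sensitivity=0.999, false_positive_rate=0.001
)

print(f"P(infected | positive) = {ppv:.3f}; with 0.1% false positives: {ppv_alt:.3f}")
```

Under the first set of assumptions the result is close to one half, matching the slide. Under the alternative reading it is far lower, which shows how sensitive the answer is to the false-positive rate when the condition is rare.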

Are you statistically challenged?
4. The blood found under the fingernails of a murdered woman matches the defendant’s blood type, which only 17.3% of the population shares. The blood on the defendant’s shoes matches the victim’s type, which only 15.7% of the population shares. An expert witness at trial testified that multiplying these two probabilities together gives a joint probability of 2.7% (17.3% × 15.7%) that these two matches would occur by chance – and that there was, therefore, a 97.3% chance that the defendant is the murderer. What is the flaw in the expert’s reasoning?

Are you statistically challenged?
4. This is an example of the “prosecutor’s fallacy” – namely, the erroneous assumption that the random match probability equals the probability of guilt. The actual probability that the defendant is the murderer based solely on these two matches is very small. Frequency analysis again shows why: Assume that any of the 100,000 men in the city where the murder took place could be the murderer. One of them, the murderer, will show both matches with practical certainty. Of the remaining 99,999 residents, we can expect that about 2,700 (2.7%) show the same matches. Thus, the probability that a man with both matches is the murderer is about 1 in 2,700 - less than one-tenth of 1%.
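
The frequency analysis above can be reproduced with a short calculation. This sketch makes the same assumptions as the slide: the two blood-type matches are independent, every one of the 100,000 men is equally likely a priori to be the murderer, and the murderer matches with certainty.

```python
population = 100_000          # men in the city who could be the murderer (from the slide)
p_match = 0.173 * 0.157       # chance that a random man shows both matches, about 2.7%

# Expected number of innocent men who show both matches purely by chance.
innocent_matches = (population - 1) * p_match

# The murderer is one of (1 + innocent_matches) men who show both matches.
p_guilty_given_match = 1 / (1 + innocent_matches)

print(f"random match probability: {p_match:.1%}")
print(f"expected innocent matches: {innocent_matches:.0f}")
print(f"P(murderer | both matches): {p_guilty_given_match:.4%}")
```

The expert's 97.3% is the probability that a random innocent man would not match, which is a different quantity from the probability that a matching man is guilty.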

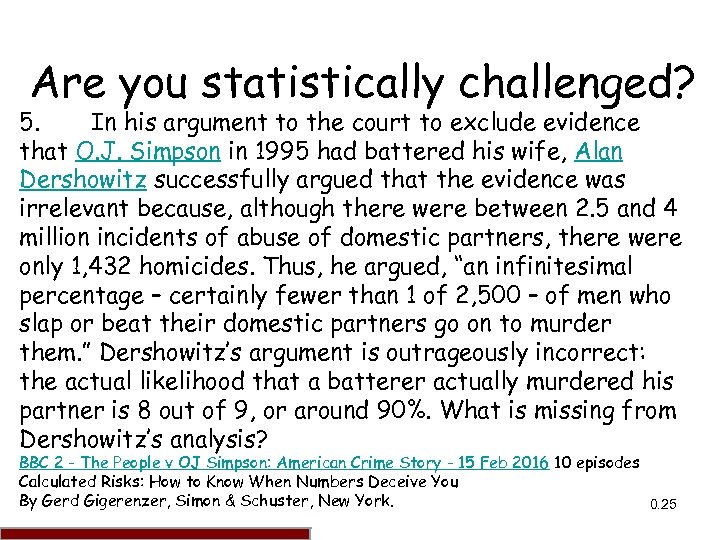

Are you statistically challenged?
5. In his 1995 argument to the court to exclude evidence that O. J. Simpson had battered his wife, Alan Dershowitz successfully argued that the evidence was irrelevant because, although there were between 2.5 and 4 million incidents of abuse of domestic partners, there were only 1,432 homicides. Thus, he argued, “an infinitesimal percentage – certainly fewer than 1 of 2,500 – of men who slap or beat their domestic partners go on to murder them.” Dershowitz’s argument is outrageously incorrect: the actual likelihood that a batterer murdered his partner is 8 out of 9, or around 90%. What is missing from Dershowitz’s analysis?
BBC 2 - The People v OJ Simpson: American Crime Story - 15 Feb 2016 - 10 episodes

Are you statistically challenged? 5. In his argument to the court to exclude evidence that O. J. Simpson in 1995 had battered his wife, Alan Dershowitz successfully argued that the evidence was irrelevant because, although there were between 2. 5 and 4 million incidents of abuse of domestic partners, there were only 1, 432 homicides. Thus, he argued, “an infinitesimal percentage – certainly fewer than 1 of 2, 500 – of men who slap or beat their domestic partners go on to murder them. ” Dershowitz’s argument is outrageously incorrect: the actual likelihood that a batterer actually murdered his partner is 8 out of 9, or around 90%. What is missing from Dershowitz’s analysis? BBC 2 - The People v OJ Simpson: American Crime Story - 15 Feb 2016 10 episodes Calculated Risks: How to Know When Numbers Deceive You By Gerd Gigerenzer, Simon & Schuster, New York. 0. 25 25

Are you statistically challenged? 5. Either Dershowitz was confused, or he purposely hoodwinked the court, in much the same way that the tobacco industry seeks to obscure the risks of smoking. His analysis omits a key element: what proportion of battered women are killed each year by someone other than their partners? The answer is around 0.005%. Now, think of 100,000 battered women. 40 will be murdered this year by their partners. 5 will be murdered by someone else. Thus, 40 of the 45 battered women who are murdered will have been killed by their batterers; in only 1 case in 9 is the murderer someone other than the batterer. Calculated Risks: How to Know When Numbers Deceive You, by Gerd Gigerenzer, Simon & Schuster, New York.
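The two quantities being confused can be set side by side. This is a minimal sketch using only the counts quoted on the slide (40 and 5 per 100,000 battered women); the variable and function names are introduced here for illustration.

```python
def share_killed_by_batterer(by_partner: int, by_other: int) -> float:
    """Among murdered battered women, the fraction killed by the batterer."""
    return by_partner / (by_partner + by_other)


BATTERED_WOMEN = 100_000
killed_by_partner = 40   # per 100,000 battered women per year (slide figure)
killed_by_other = 5      # per 100,000 battered women per year (slide figure)

# What Dershowitz quoted: P(murdered by partner | battered)
dershowitz_rate = killed_by_partner / BATTERED_WOMEN

# What the court needed: P(batterer is the murderer | battered AND murdered)
share = share_killed_by_batterer(killed_by_partner, killed_by_other)

print(f"P(murdered by partner | battered)        = {dershowitz_rate:.4%}")
print(f"P(batterer did it | battered and murdered) = {share:.1%}")
```

The first figure is the "1 of 2,500" from the argument; the second is the 8 out of 9 that is relevant once a murder has actually occurred.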

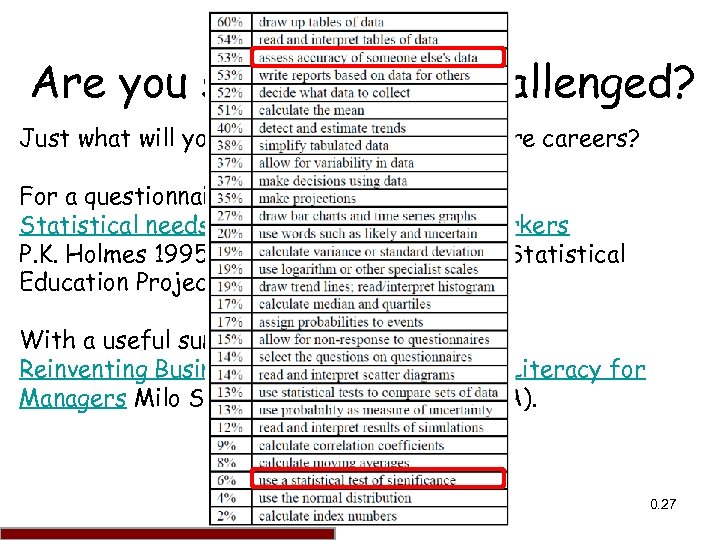

Are you statistically challenged? Just what will you need to know in your future careers? For a questionnaire investigating this, see Statistical Needs of Non-Specialist Young Workers, P. K. Holmes, 1995, Royal Statistical Society, Statistical Education Project 16-19, with a useful summary in Reinventing Business Statistics: Statistical Literacy for Managers, Milo Schield, 2013 (see Appendix A).

How To Open An Excel Worksheet in SPSS: SPSS is primarily designed to analyse questionnaires. Each column is a variable and each row is a case, essentially one row for each individual.

How To Open An Excel Worksheet in SPSS Data preparation in Excel. The data type and width for each variable are determined by the data type (text, numeric, etc.) and width in the Excel file.

Blank Cells For numeric variables, blank cells are converted to the system-missing value, indicated by a period.

Variable Names The name must begin with a letter. The remaining characters can be any letter, any digit, a period, or the symbols @, #, _ or $.

Variable Names Variable names can be defined with any mixture of uppercase and lowercase characters, and case is preserved for display purposes.

Variable Names Variable names cannot end with a period.

Variable Names The length of the name cannot exceed 64 characters.

Variable Names Blanks and special characters (for example !, ?, ' and *) cannot be used.

Variable Names Reserved keywords such as ALL, AND, BY, EQ, GE, GT, LE, LT, NE, NOT, OR, TO, WITH cannot be used as variable names.
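The naming rules above can be checked on Excel column headings before the file is opened in SPSS. The following Python sketch covers only the rules listed on these slides. It is an ASCII-only approximation, the function name `name_problems` is introduced here, and treating keywords as case-insensitive is an assumption; SPSS itself remains the authority on what it accepts.

```python
import re

# Keywords listed on the slide; matched case-insensitively (an assumption).
RESERVED_KEYWORDS = {
    "ALL", "AND", "BY", "EQ", "GE", "GT", "LE",
    "LT", "NE", "NOT", "OR", "TO", "WITH",
}
FIRST_CHAR = re.compile(r"[A-Za-z]")
ALLOWED_CHARS = re.compile(r"[A-Za-z0-9.@#_$]*")


def name_problems(name: str) -> list[str]:
    """Return the slide rules that a proposed variable name breaks."""
    problems = []
    if not name or not FIRST_CHAR.match(name):
        problems.append("must begin with a letter")
    if not ALLOWED_CHARS.fullmatch(name):
        problems.append("contains a blank or a disallowed character")
    if name.endswith("."):
        problems.append("cannot end with a period")
    if len(name) > 64:
        problems.append("longer than 64 characters")
    if name.upper() in RESERVED_KEYWORDS:
        problems.append("is a reserved keyword")
    return problems


# Hypothetical column headings, for illustration only.
for candidate in ["Age", "job.cat", "2ndScore", "total score", "score.", "with"]:
    print(candidate, "->", name_problems(candidate) or "ok")
```

Returning a list of problems rather than a single True/False makes it easier to fix several headings in one pass.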

Data Entry For simplicity enter your data into Excel with the first cell in each column containing the variable name.

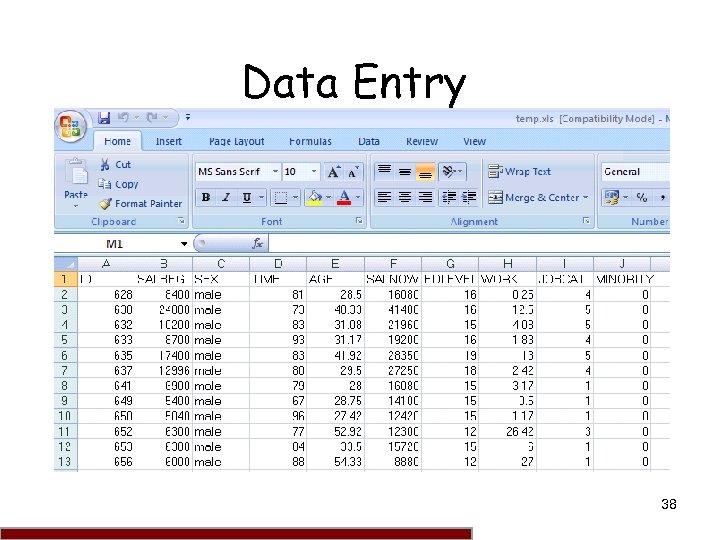

Data Entry

Data Entry It is essential that all column widths are stretched to accommodate their data. You may perform all subsidiary calculations, such as collating responses to form a score and any negative coding, within Excel.

Data Entry It is important that you “proof” your data. For instance, check the minimum and maximum value in each column. You can do this either in Excel (not forgetting to delete these extra rows afterwards) or in SPSS (not forgetting to make the corrections in the Excel version).
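The minimum and maximum check can also be done outside Excel and SPSS. The sketch below is a minimal Python illustration on made-up questionnaire rows: the column names `age` and `q1`, the values, and the function name `column_ranges` are all hypothetical. Blank cells are represented as `None` and skipped.

```python
def column_ranges(rows):
    """Return {column: (min, max)} over the non-missing values in each column."""
    ranges = {}
    for row in rows:
        for column, value in row.items():
            if value is None:  # blank cell, treated as missing
                continue
            low, high = ranges.get(column, (value, value))
            ranges[column] = (min(low, value), max(high, value))
    return ranges


# Hypothetical data: one dict per case (row), one key per variable (column).
rows = [
    {"age": 34, "q1": 4},
    {"age": 29, "q1": 2},
    {"age": 340, "q1": None},  # 340 is an implausible age, probably a typo
    {"age": 41, "q1": 5},
]

ranges = column_ranges(rows)
for column, (low, high) in ranges.items():
    print(f"{column}: min={low}, max={high}")
```

An out-of-range maximum such as the age of 340 here is exactly the kind of entry error this proofing step is meant to catch.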

Caveat Emptor Excel is fantastic for data entry, basic calculations and some graphics; however, see On the accuracy of statistical procedures in Microsoft Excel 2010, Melard, G., Computational Statistics, 2014, 29(5), 1095-1128, DOI: 10.1007/s00180-014-0482-5. All previous versions of Microsoft Excel until Excel 2007 have been criticized by statisticians for several reasons, including the accuracy of statistical functions, the properties of the random number generator, the quality of statistical add-ins, the weakness of the Solver for nonlinear regression, and the data graphical representation. Until recently Microsoft did not make an attempt to fix all the errors in Excel and was still marketing a product that contained known errors. We provide an update of these studies given the recent release of Excel 2010 and we have added OpenOffice.org Calc 3.3 and Gnumeric 1.10.16 to the analysis, for the purpose of comparison. The conclusion is that the stream of papers, mainly in Computational Statistics and Data Analysis, has started to pay off: Microsoft has partially improved the statistical aspects of Excel, essentially the statistical functions and the random number generator.

Caveat Emptor Also see Statistical Accuracy of Spreadsheet Software, Keeling, Kellie B.; Pavur, Robert J., American Statistician, 2011, 65(4), 265-273, DOI: 10.1198/tas.2011.09076. On the Numerical Accuracy of Spreadsheets, Almiron, Marcelo G.; Lopes, Bruno; Oliveira, Alyson L. C.; Medeiros, A. C.; Frery, A. C., Journal of Statistical Software, 2010, 34(4), 1-29, DOI: 10.18637/jss.v034.i04.

Caveat Emptor On the accuracy of statistical procedures in Microsoft Excel 2007, McCullough, B. D. and Heiser, D. A., Computational Statistics & Data Analysis, 2008, 52(10), 4570-4578, DOI: 10.1016/j.csda.2008.03.004. Excel 2007, like its predecessors, fails a standard set of intermediate-level accuracy tests in three areas: statistical distributions, random number generation, and estimation. Additional errors in specific Excel procedures are discussed. Microsoft's continuing inability to correctly fix errors is discussed. No statistical procedure in Excel should be used until Microsoft documents that the procedure is correct; it is not safe to assume that Microsoft Excel's statistical procedures give the correct answer. Persons who wish to conduct statistical analyses should use some other package. See also Errors, Faults and Fixes for Excel Statistical Functions and Routines.
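To see why the numerical accuracy of a statistical routine matters at all, consider the sample variance. The Python sketch below is a generic illustration of floating-point cancellation. It does not reproduce any test from the papers cited above and makes no claim about how any version of Excel computes variance. The data set and function names are chosen here purely for the example.

```python
import statistics


def naive_variance(data):
    """One-pass 'calculator' formula: (sum(x^2) - n * mean^2) / (n - 1)."""
    n = len(data)
    mean = sum(data) / n
    return (sum(x * x for x in data) - n * mean * mean) / (n - 1)


def two_pass_variance(data):
    """Subtract the mean first, then square: far less prone to cancellation."""
    n = len(data)
    mean = sum(data) / n
    return sum((x - mean) ** 2 for x in data) / (n - 1)


# Large values with a small spread; the exact sample variance is 30.
data = [1e9 + 4, 1e9 + 7, 1e9 + 13, 1e9 + 16]

print("naive    :", naive_variance(data))
print("two-pass :", two_pass_variance(data))
print("library  :", statistics.variance(data))
```

Both formulas are algebraically identical, yet the one-pass version subtracts two numbers of order 10^18 and loses the answer entirely, which is why the choice of algorithm inside a package deserves scrutiny.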

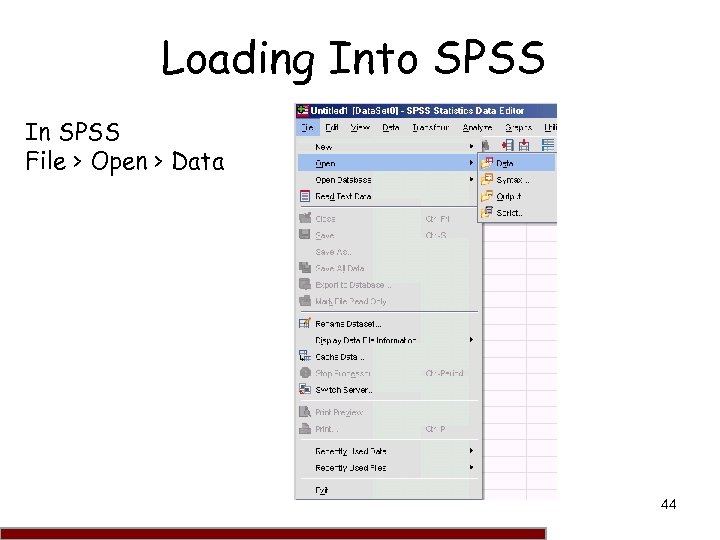

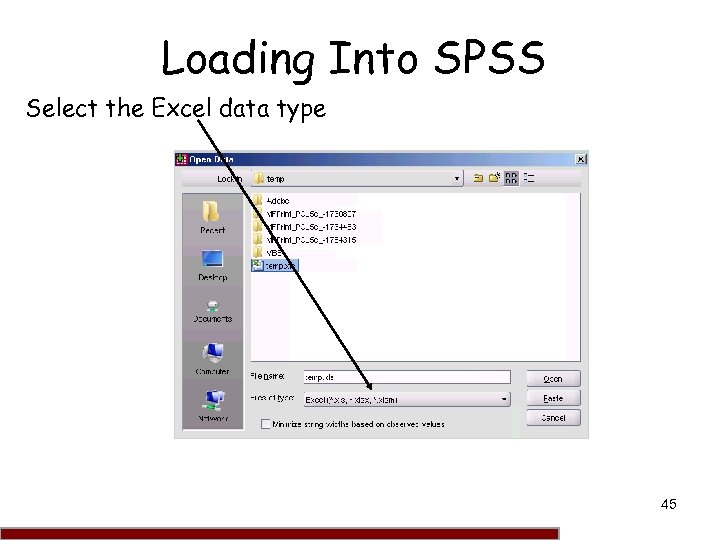

Loading Into SPSS In SPSS, choose File > Open > Data.

Loading Into SPSS Select the Excel data type

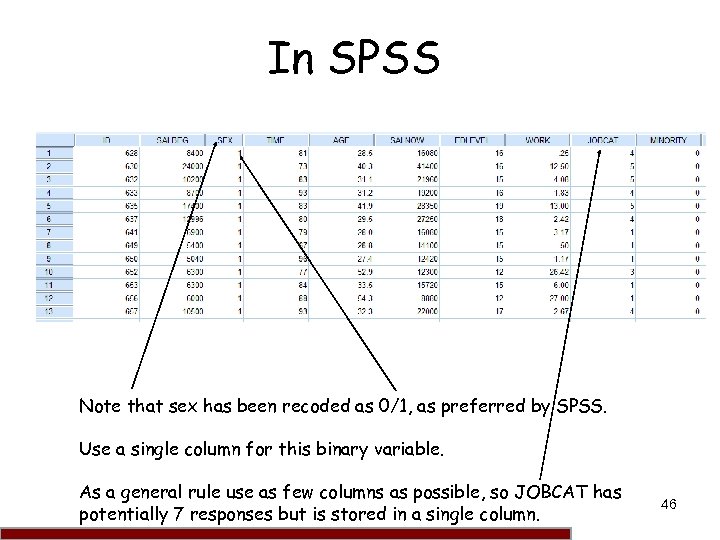

In SPSS Note that sex has been recoded as 0/1, as preferred by SPSS. Use a single column for this binary variable. As a general rule, use as few columns as possible: JOBCAT has potentially 7 responses but is stored in a single column.
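Recoding a text response into a single 0/1 column can be sketched as follows. The slide does not say which category receives which code, so the mapping below (0 for female, 1 for male) is an assumption, as are the function name `recode_sex` and the sample responses.

```python
# Assumed coding, not stated on the slide: 0 = female, 1 = male.
SEX_CODES = {"female": 0, "male": 1}


def recode_sex(label):
    """Map a text label to its 0/1 code; blank or unknown labels become None."""
    if label is None:
        return None
    return SEX_CODES.get(label.strip().lower())


# Hypothetical raw responses as they might be typed into a spreadsheet.
raw = ["Male", "female", " FEMALE ", "", None]
coded = [recode_sex(value) for value in raw]
print(coded)
```

Unrecognised or blank entries are left as `None` rather than guessed, so they can be treated as missing values after import.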

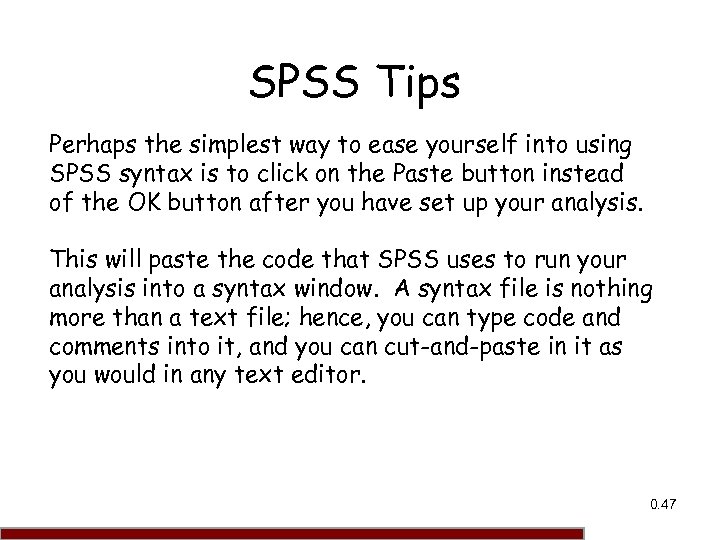

SPSS Tips Perhaps the simplest way to ease yourself into using SPSS syntax is to click on the Paste button instead of the OK button after you have set up your analysis. This will paste the code that SPSS uses to run your analysis into a syntax window. A syntax file is nothing more than a text file; hence, you can type code and comments into it, and you can cut-and-paste in it as you would in any text editor.

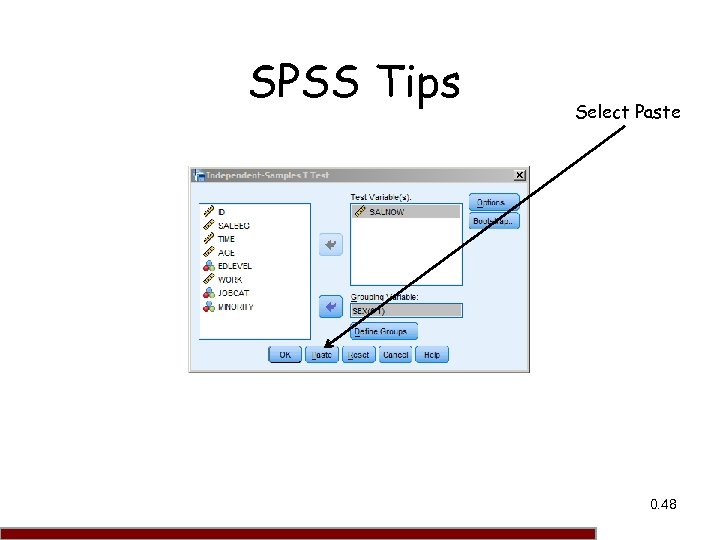

SPSS Tips Select Paste

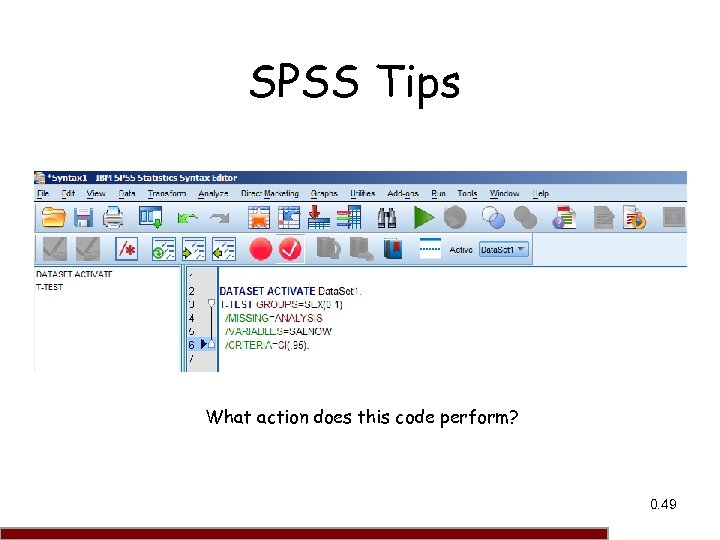

SPSS Tips What action does this code perform?

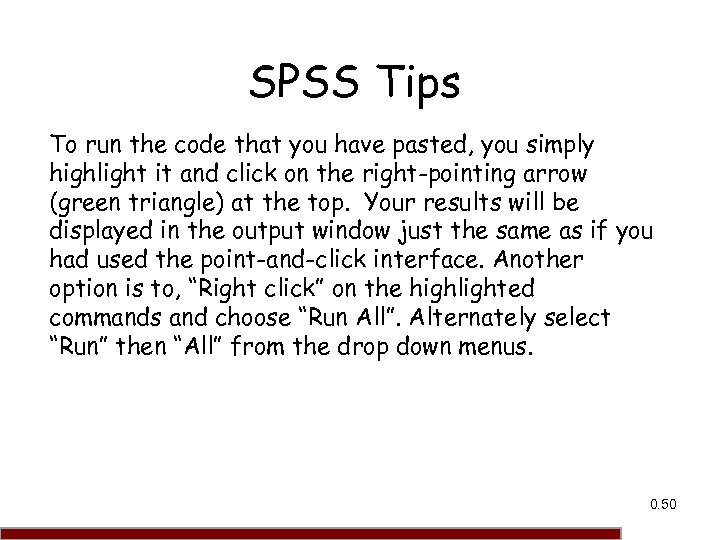

SPSS Tips To run the code that you have pasted, simply highlight it and click on the right-pointing arrow (green triangle) at the top. Your results will be displayed in the output window just as if you had used the point-and-click interface. Another option is to right-click on the highlighted commands and choose “Run All”. Alternatively, select “Run” then “All” from the drop-down menus.

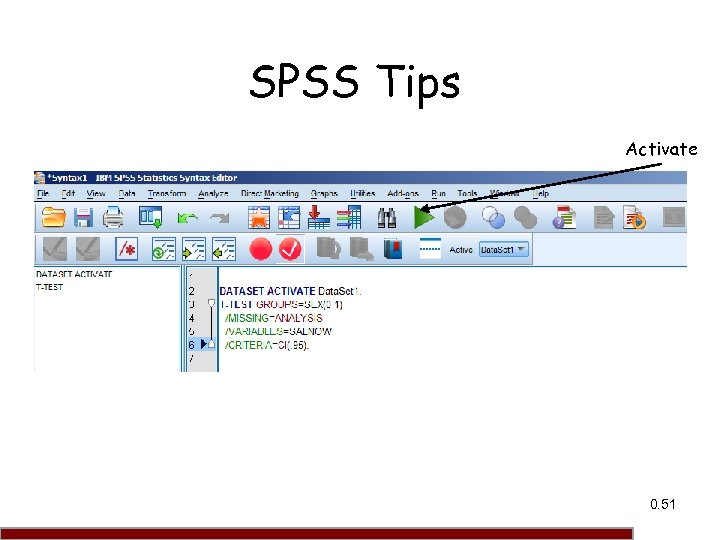

SPSS Tips Activate

SPSS Tips Alternatively, to activate, return via the drop-down menus to your intended command. Your previous selections will be intact, so you can now select OK as usual. You have, however, preserved your syntax for use on future occasions, for instance when running a full analysis after a pilot study.

SPSS Tips The OUTPUT EXPORT command exports output from an open Viewer document to an external file format, such as Word. By default, the contents of the designated Viewer document are exported, but a different Viewer document can be specified by name. The target file and format may be selected. Export can also be started by right-clicking within the output shown in the Statistics Viewer and selecting Export.

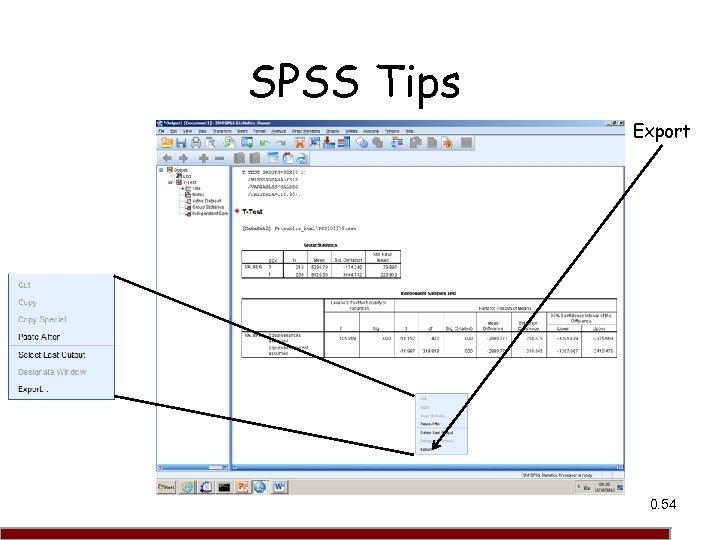

SPSS Tips Export

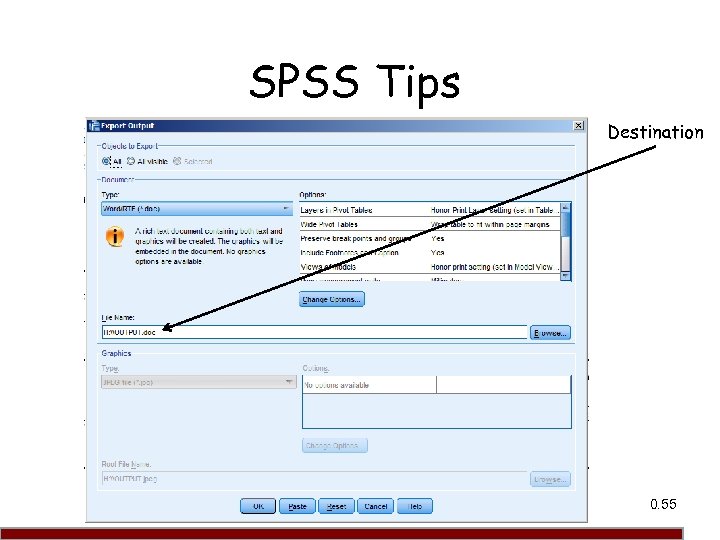

SPSS Tips Destination

SPSS Tips Now you should go and try for yourself. Each week a cluster is booked to follow this session. This will enable you to come and go as you please. Obviously other timetabled sessions take precedence.

For the Complex and Important Topics of Power and Sample Size Refer to the Module Webpage
