
- Number of slides: 92

Why Consider Single-Case Design for Intervention Research: Reasons and Rationales

Tom Kratochwill, February 12, 2016

Wisconsin Center for Education Research, School Psychology Program, University of Wisconsin-Madison, Wisconsin

- Recommended text for a general overview of single-case design: Kazdin (2011)
- Recommended text for advanced information on design and data analysis: Kratochwill and Levin (2014)
- Institute of Education Sciences 2015 Single-Case Design Institute web site: http://www.nccsite.com/events/SCDInstitute2015/

Rationale, Reasons, Logic, and Foundations of Single-Case Intervention Research

- Purposes and Fundamental Assumptions of Single-Case Intervention Research Methods
  - Defining features of SCDs
  - Core design types
  - Internal validity and the role of replication
- Characteristics of Scientifically Credible Single-Case Intervention Studies
  - “True” Single-Case Applications and the WWC Standards (design and evidence credibility)
  - Classroom-Based Applications (design and evidence credibility)

Features of Single-Case Research Methods

Experimental single-case research will have four features:

- Independent variable
- Dependent variable
- Focus on a functional relation (causal effect)
- Dimension(s) of predicted change over time (e.g., level, trend, variability, score overlap)

- Operational definition of dependent variable (DV)
  - Measure of DV is valid, reliable, and addresses the dimension(s) of concern
- Repeated measurement of an outcome before, during, and/or after active manipulation of independent variable
- Operational definition of independent variable (IV)
  - Core features of IV are defined and measured to document fidelity
- Unit of IV implementation
  - Group versus individual unit (an important distinction; WWC Standards only for individual unit of analysis)

Types of Research Questions that Can be Answered with Single-Case Design Types

- Evaluate Intervention Effects Relative to Baseline
  - Does a forgiveness intervention reduce the level of bullying behaviors for students in a high school setting?
- Compare Relative Effectiveness of Interventions
  - Is “function-based behavior support” more effective than “non-function-based support” at reducing the level and variability of problem behavior for this participant?
- Compare Single- and Multi-Component Interventions
  - Does adding performance feedback to basic teacher training improve the fidelity with which instructional skills are used by new teachers in the classroom?

- Is a certain teaching procedure functionally related to an increase in the level of social initiations by young children with autism?
- Is time delay prompting or least-to-most prompting more effective in increasing the level of self-help skills performed by young children with severe intellectual disabilities?
- Is the pacing of reading instruction functionally related to increased level and slope of reading performance (as measured by ORF) for third graders?
- Is Adderall (at clinically prescribed dosage) functionally related to increased level of attention performance for elementary age students with Attention Deficit Disorder?

- Like RCTs, the purpose is to document causal relationships
- Control for major threats to internal validity through replication
- Document effects for specific individuals/settings
- Replication across participants is required to enhance external validity
- Can be distinguished from case studies

- Ambiguous Temporal Precedence
- Selection
- History
- Maturation
- Testing
- Instrumentation
- Additive and Interactive Effects of Threats

See Shadish, Cook, and Campbell (2002)

- Often characterized by narrative description of case, treatment, and outcome variables
- Typically lack a formal design with replication but can involve a basic design format (e.g., A/B)
- Methods have been suggested to improve drawing valid inferences from case study research [e.g., Kazdin, 1982; Kratochwill, 1985; Kazdin, A. E. (2011). Single-case research designs: Methods for clinical and applied settings (2nd ed.). New York: Oxford University Press]

Defining Features of Single-Case Intervention Design


Hammond and Gast (2011) reviewed 196 randomly identified journal issues (from 1983-2007) containing 1,936 articles (a total of 556 single-case designs were coded). Multiple baseline designs were reported more often than withdrawal designs and these were more often reported across individuals and groups.

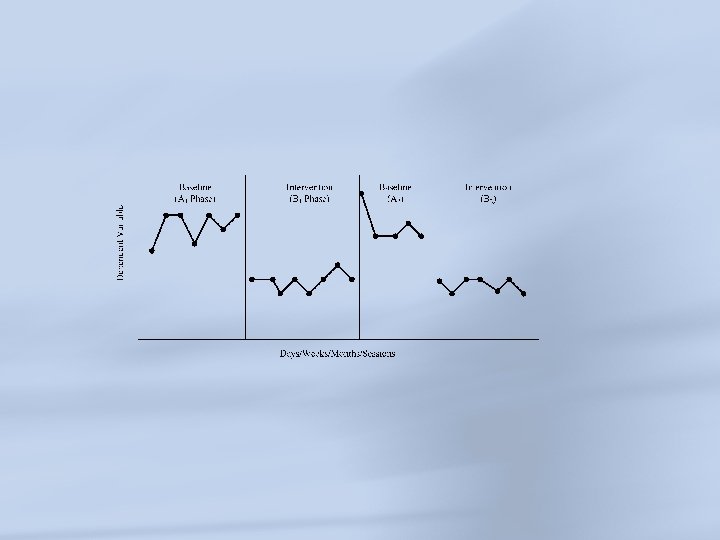

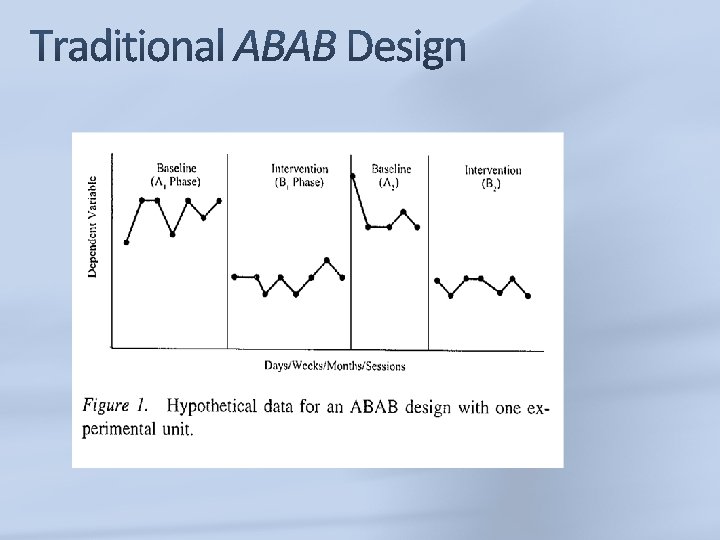

![Simple phase change designs](https://present5.com/presentation/1cbfabb245795bbf8d4ce26552640bba/image-15.jpg)

Simple phase change designs [e.g., ABAB; BCBC design]. (In the literature, ABAB designs are sometimes referred to as withdrawal designs, intrasubject replication designs, within-series designs, operant designs, or reversal designs.)

ABAB Reversal/Withdrawal Designs

In these designs, estimates of level, trend, and variability within a data series are assessed under similar conditions; the manipulated variable is introduced and concomitant changes in the outcome measure(s) are assessed in the level, trend, and variability between phases of the series, with special attention to the degree of overlap, immediacy of effect, and similarity of data patterns across similar phases (e.g., all baseline phases).

ABAB Reversal/Withdrawal Designs

Some example design limitations:

- Behavior must be reversible in the ABAB… series (e.g., return to baseline).
- There may be ethical issues involved in reversing behavior back to baseline (A2).
- The study may become complex when multiple conditions need to be compared.
- There may be order effects in the design.

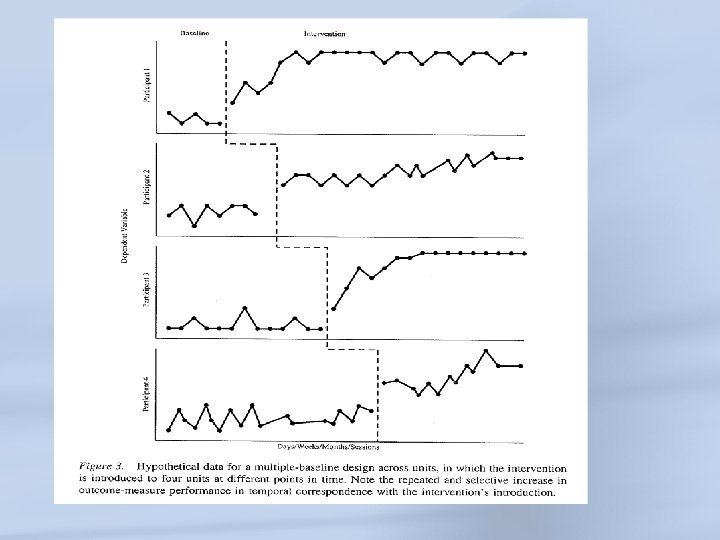

Multiple baseline design. The design can be applied across units (participants), across behaviors, and across situations.

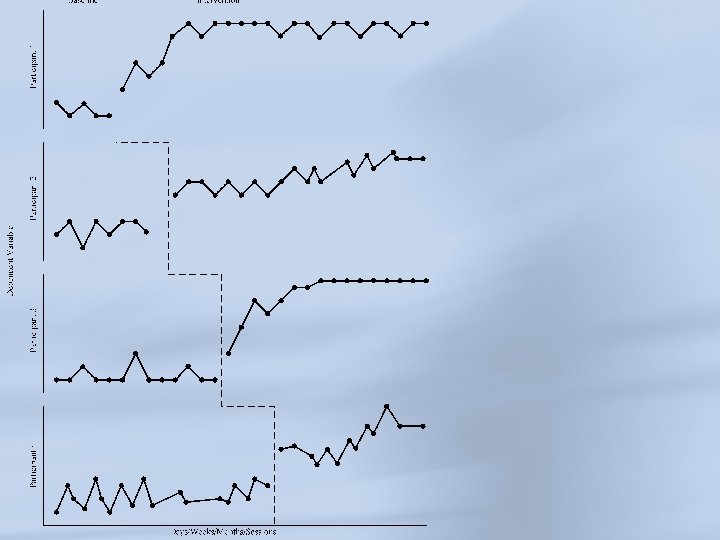

Multiple Baseline Designs

In these designs, multiple AB data series are compared and introduction of the intervention is staggered across time. Comparisons are made both between and within a data series. Repetitions of a single simple phase change are scheduled, each with a new series and in which both the length and timing of the phase change differ across replications.

Some example design limitations:

- The design is generally limited to demonstrating the effect of one independent variable on some outcome.
- The design depends on the “independence” of the multiple baselines (across units, settings, and behaviors).
- There can be practical as well as ethical issues in keeping individuals on baseline for long periods of time (as in the last series).

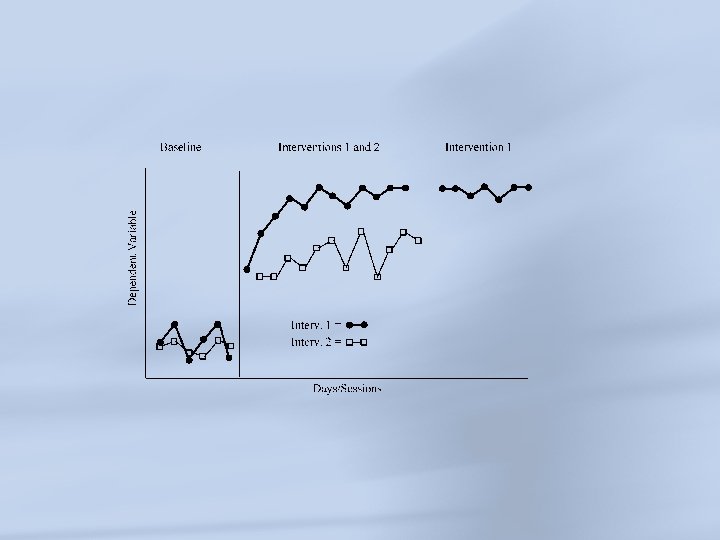

Alternating Treatment Designs

Alternating treatments (in the behavior analysis literature, alternating treatment designs are sometimes referred to as part of a class of multielement designs).

In these designs, estimates of level, trend, and variability in a data series are assessed on measures within specific conditions and across time. Changes/differences in the outcome measure(s) are assessed by comparing the series associated with different conditions.


Some example design limitations:

- Behavior must be reversed during alternation of the intervention.
- There is the possibility of interaction/carryover effects as conditions are alternated.
- Comparing more than three treatments is very challenging in terms of balancing conditions.

Single-case researchers have a number of conceptual and methodological standards to guide their synthesis work. These standards, alternatively referred to as “guidelines,” have been developed by a number of professional organizations and authors interested primarily in providing guidance for reviewing the literature in a particular content domain (e.g., Smith, 2012; Wendt & Miller, 2012). The development of these standards has also provided researchers who are designing their own intervention studies with a protocol that is capable of meeting or exceeding the proposed standards.

Wendt and Miller (2012) identified seven “quality appraisal tools” and compared these standards to the single-case research criteria advanced by Horner et al. (2005). Smith (2012) reviewed research design and various methodological characteristics of single-case designs in peer-reviewed journals, primarily from the psychological literature (over the years 2000-2010). Based on his review, six standards for appraisal of the literature were identified (some of which overlap with the Wendt and Miller review).

- National Reading Panel
- American Psychological Association (APA) Division 12/53
- American Psychological Association (APA) Division 16
- What Works Clearinghouse (WWC)
- Consolidated Standards of Reporting Trials (CONSORT) Guidelines for N-of-1 Trials (the CONSORT Extension for N-of-1 Trials [CENT])

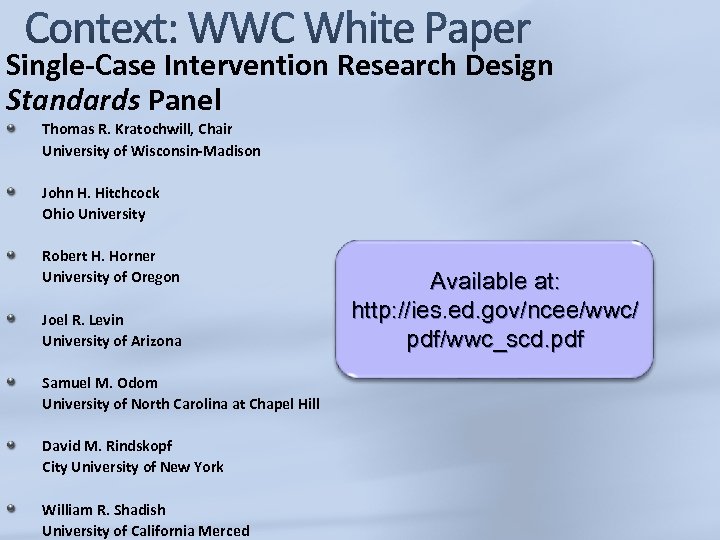

Single-Case Intervention Research Design Standards Panel

- Thomas R. Kratochwill, Chair, University of Wisconsin-Madison
- John H. Hitchcock, Ohio University
- Robert H. Horner, University of Oregon
- Joel R. Levin, University of Arizona
- Samuel M. Odom, University of North Carolina at Chapel Hill
- David M. Rindskopf, City University of New York
- William R. Shadish, University of California Merced

Available at: http://ies.ed.gov/ncee/wwc/pdf/wwc_scd.pdf

What Works Clearinghouse Standards

- Design Standards
- Evidence Criteria
- Effect Size and Social Validity

WWC Design Standards

Sullivan and Shadish (2011) assessed the WWC pilot Standards related to implementation of the intervention, acceptable levels of observer agreement/reliability, opportunities to demonstrate a treatment effect, and acceptable numbers of data points in a phase. In published studies in 21 journals in 2008, they found that nearly 45% of the research met the strictest WWC standards of design and 30% met with some reservations. So, it can be concluded that around 75% of the published research during a sampling year of major journals that publish single-case intervention research would meet (or meet with reservations) the WWC design standards.


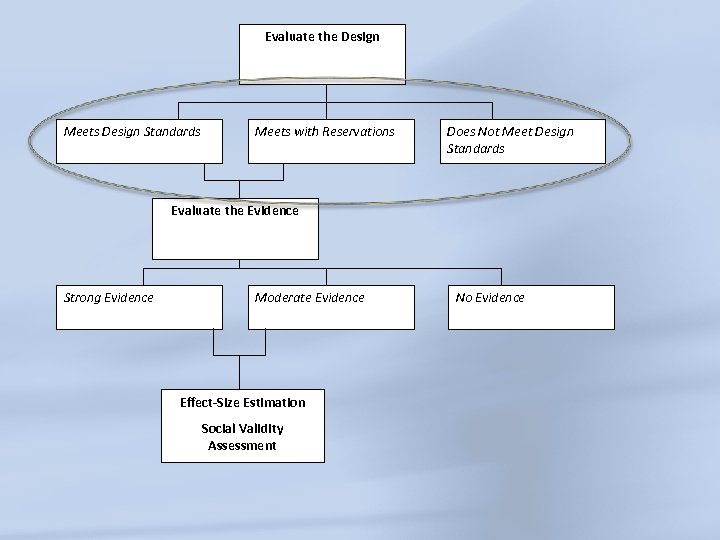

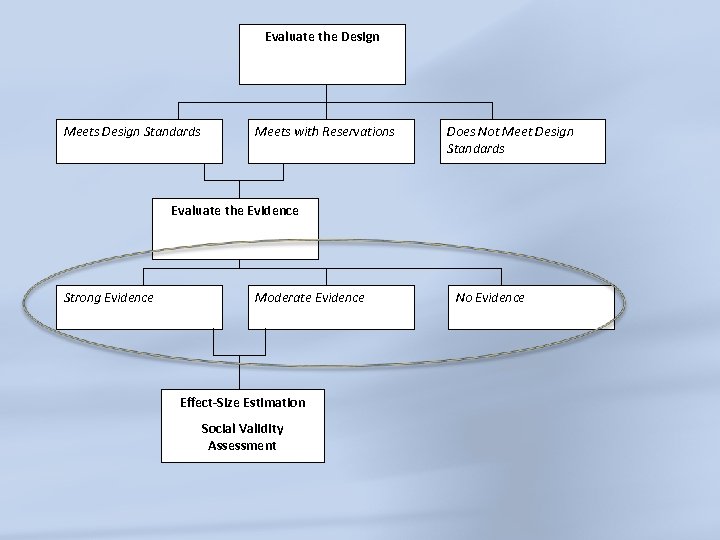

- Evaluate the Design
  - Meets Design Standards
  - Meets with Reservations
  - Does Not Meet Design Standards
- Evaluate the Evidence
  - Strong Evidence
  - Moderate Evidence
  - No Evidence
- Effect-Size Estimation
- Social Validity Assessment

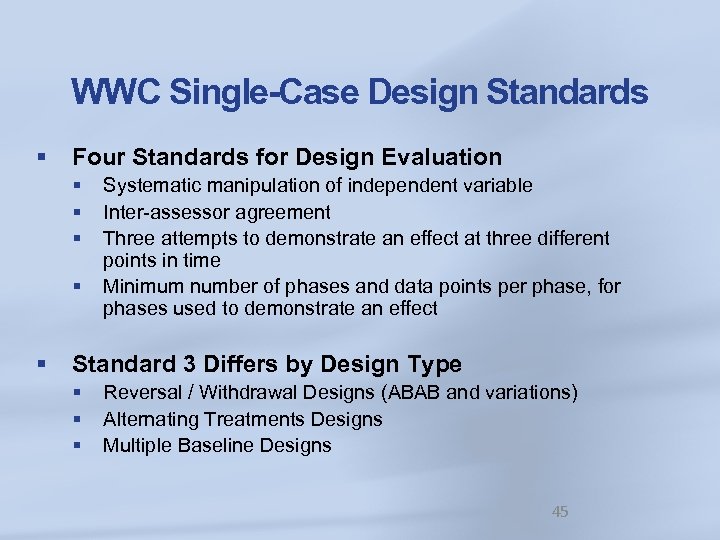

WWC Single-Case Design Standards

- Four Standards for Design Evaluation
  - Systematic manipulation of independent variable
  - Inter-assessor agreement
  - Three attempts to demonstrate an effect at three different points in time
  - Minimum number of phases and data points per phase, for phases used to demonstrate an effect
- Standard 3 Differs by Design Type
  - Reversal/Withdrawal Designs (ABAB and variations)
  - Alternating Treatments Designs
  - Multiple Baseline Designs

Standard 1: Systematic Manipulation of the Independent Variable

- Researcher Must Determine When and How the Independent Variable Conditions Change.
- If Standard Is Not Met, Study Does Not Meet Design Standards.

Examples of Manipulation that is Not Systematic

- Teacher/Consultee Begins to Implement an Intervention Prematurely Because of Parent Pressure.
- Researcher Looks Retrospectively at Data Collected during an Intervention Program.

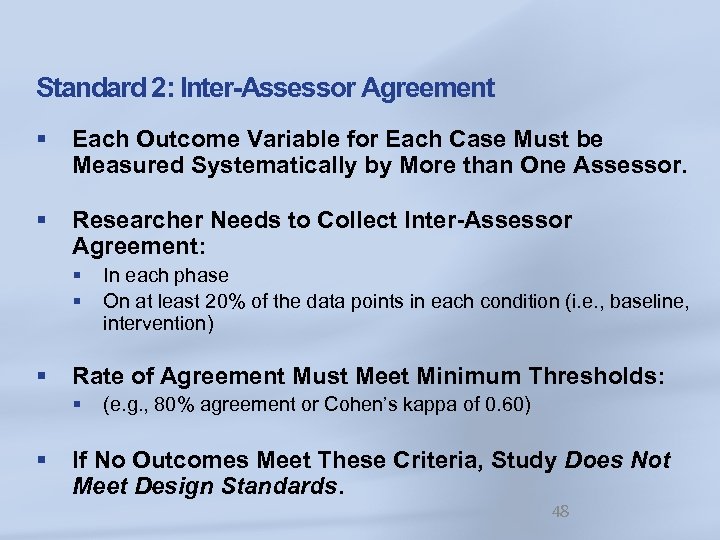

Standard 2: Inter-Assessor Agreement

- Each Outcome Variable for Each Case Must be Measured Systematically by More than One Assessor.
- Researcher Needs to Collect Inter-Assessor Agreement:
  - In each phase
  - On at least 20% of the data points in each condition (i.e., baseline, intervention)
- Rate of Agreement Must Meet Minimum Thresholds:
  - (e.g., 80% agreement or Cohen’s kappa of 0.60)
- If No Outcomes Meet These Criteria, Study Does Not Meet Design Standards.
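As an illustration of the thresholds on this slide, the following is a minimal sketch of how percent agreement and Cohen's kappa could be computed for two assessors who coded the same intervals. The function names, the interval-by-interval coding scheme, and the sample data are assumptions made for this example; they are not taken from the WWC Standards.

```python
from collections import Counter


def percent_agreement(obs_a, obs_b):
    """Proportion of intervals on which two assessors recorded the same code."""
    if len(obs_a) != len(obs_b) or not obs_a:
        raise ValueError("need two non-empty code sequences of equal length")
    return sum(a == b for a, b in zip(obs_a, obs_b)) / len(obs_a)


def cohens_kappa(obs_a, obs_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(obs_a)
    p_observed = percent_agreement(obs_a, obs_b)
    count_a, count_b = Counter(obs_a), Counter(obs_b)
    codes = set(obs_a) | set(obs_b)
    p_chance = sum(count_a[c] * count_b[c] for c in codes) / (n * n)
    if p_chance == 1.0:
        return 1.0  # both assessors used one identical code throughout
    return (p_observed - p_chance) / (1 - p_chance)


def coverage_ok(n_double_coded, n_total, minimum=0.20):
    """True if agreement was collected on at least `minimum` of the data points."""
    return n_total > 0 and n_double_coded / n_total >= minimum


# Hypothetical interval codes (1 = behavior observed, 0 = not observed).
assessor_1 = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
assessor_2 = [1, 1, 0, 0, 1, 0, 1, 0, 0, 0]

agreement = percent_agreement(assessor_1, assessor_2)
kappa = cohens_kappa(assessor_1, assessor_2)
print(agreement >= 0.80, kappa >= 0.60)
```

In this sketch the two example thresholds (80% agreement, kappa of 0.60) are checked separately, and `coverage_ok` would be applied once per condition (baseline, intervention).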

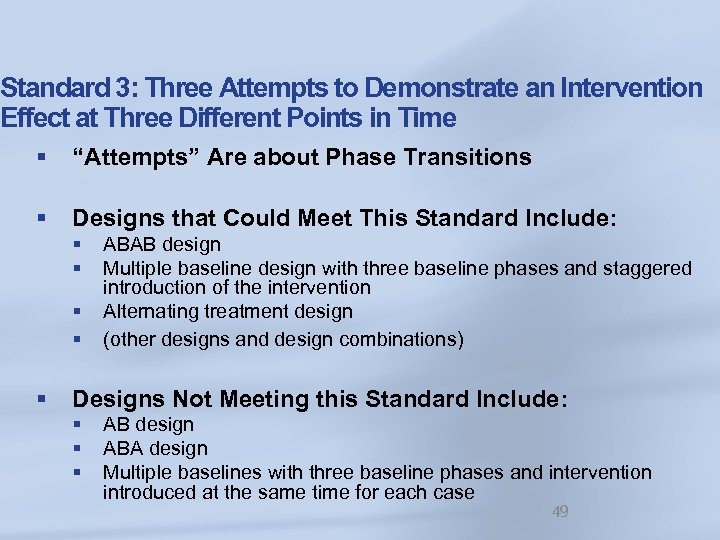

Standard 3: Three Attempts to Demonstrate an Intervention Effect at Three Different Points in Time

- “Attempts” Are about Phase Transitions
- Designs that Could Meet This Standard Include:
  - ABAB design
  - Multiple baseline design with three baseline phases and staggered introduction of the intervention
  - Alternating treatment design
  - (other designs and design combinations)
- Designs Not Meeting this Standard Include:
  - AB design
  - ABA design
  - Multiple baselines with three baseline phases and intervention introduced at the same time for each case
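Because “attempts” are about phase transitions, the examples on this slide can be checked by counting them. The sketch below is only an illustration under simplifying assumptions: a phase-change design is written as a string of phase labels, a multiple baseline design is summarized by the session at which the intervention starts in each series, and alternating treatment designs are not covered. The function names are hypothetical.

```python
def phase_change_attempts(phases):
    """Count phase transitions in one data series, e.g. "ABAB" has three."""
    return sum(1 for prev, nxt in zip(phases, phases[1:]) if prev != nxt)


def multiple_baseline_attempts(start_sessions):
    """Count distinct points in time at which the intervention is introduced."""
    return len(set(start_sessions))


def could_meet_standard_3(attempts, required=3):
    """True if the design offers at least `required` attempts."""
    return attempts >= required


for design in ("AB", "ABA", "ABAB"):
    attempts = phase_change_attempts(design)
    print(design, attempts, could_meet_standard_3(attempts))

# Staggered versus simultaneous introduction across three series.
print(could_meet_standard_3(multiple_baseline_attempts([5, 9, 13])))
print(could_meet_standard_3(multiple_baseline_attempts([5, 5, 5])))
```

Counting transitions only shows that a design provides the opportunity for three demonstrations; whether an effect is actually demonstrated is a question for the evidence criteria.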

- Basic Effect: Change in the pattern of responding after manipulation of the independent variable (level, trend, variability).
- Experimental Control: At least three demonstrations of basic effect, each at a different point in time.

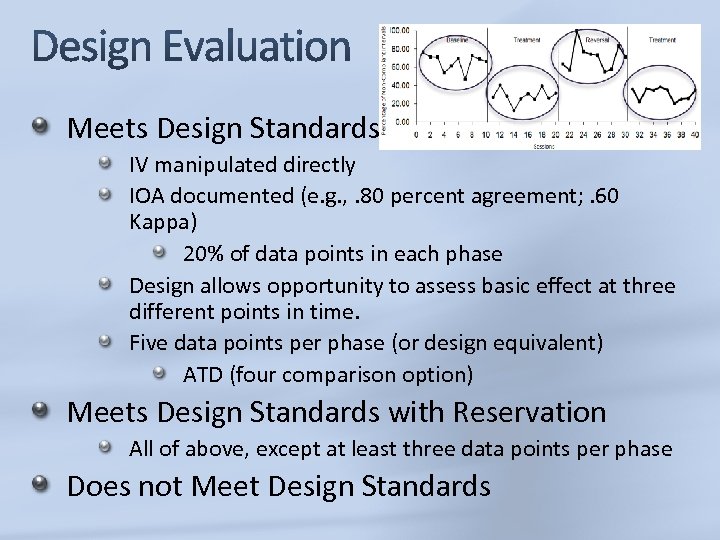

Meets Design Standards

- IV manipulated directly
- IOA documented (e.g., 80 percent agreement; .60 kappa)
  - 20% of data points in each phase
- Design allows opportunity to assess basic effect at three different points in time
- Five data points per phase (or design equivalent)
  - ATD (four comparison option)

Meets Design Standards with Reservations

- All of above, except at least three data points per phase

Does Not Meet Design Standards
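The three ratings on this slide can be read as a simple decision rule. The following sketch encodes that reading; the function name and parameters are hypothetical, the alternating treatment design option is left out, and the actual WWC review protocol contains more detail than this slide shows.

```python
def rate_design(iv_manipulated, ioa_documented, attempts, min_points_per_phase):
    """Return a design rating from the criteria summarized on the slide.

    iv_manipulated:        researcher directly manipulated the IV
    ioa_documented:        agreement thresholds and 20% coverage were met
    attempts:              opportunities to show a basic effect at different times
    min_points_per_phase:  smallest number of data points in any relevant phase
    """
    if not (iv_manipulated and ioa_documented and attempts >= 3):
        return "Does Not Meet Design Standards"
    if min_points_per_phase >= 5:
        return "Meets Design Standards"
    if min_points_per_phase >= 3:
        return "Meets Design Standards with Reservations"
    return "Does Not Meet Design Standards"


print(rate_design(True, True, attempts=3, min_points_per_phase=5))
print(rate_design(True, True, attempts=3, min_points_per_phase=3))
print(rate_design(True, True, attempts=2, min_points_per_phase=6))
```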

Random Thoughts On Enhancing the Scientific Credibility of Single-Case Intervention Research: Randomization to the Rescue for Designs (Kratochwill & Levin, Psychological Methods, 2010)

- Internal Validity: Promotes the status of single-case research by increasing the scientific credibility of its methodology; the tradition has been replication, and with the use of randomization these procedures can rival randomized clinical trial studies.
- Statistical-Conclusion Validity: Legitimizes the conduct of various statistical tests and one’s interpretation of results; the tradition has been visual analysis.
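One kind of statistical test that a randomized design can support is a randomization test. The sketch below is an illustration and is not taken from Kratochwill and Levin (2010): it assumes a single AB series in which the intervention start session was drawn at random from a set of permissible sessions, and it uses the difference in phase means as the test statistic. All names and data are hypothetical.

```python
from statistics import mean


def mean_difference(data, start):
    """Mean of the intervention phase minus mean of the baseline phase."""
    return mean(data[start:]) - mean(data[:start])


def randomization_p_value(data, actual_start, permissible_starts):
    """One-sided p-value for an increase after the intervention.

    The observed statistic is compared with the statistic that each
    permissible start point would have produced on the same data.
    """
    if actual_start not in permissible_starts:
        raise ValueError("actual_start must be one of the permissible starts")
    observed = mean_difference(data, actual_start)
    statistics_ = [mean_difference(data, s) for s in permissible_starts]
    at_least_as_large = sum(1 for value in statistics_ if value >= observed)
    return at_least_as_large / len(statistics_)


# Hypothetical series: ten sessions, intervention actually began at index 5.
series = [2, 3, 2, 3, 2, 7, 8, 7, 8, 7]
p = randomization_p_value(series, actual_start=5, permissible_starts=[3, 4, 5, 6, 7])
print(p)
```

With only five permissible start points the smallest attainable p-value is 1/5, which is one reason a randomized single-case design needs enough possible assignments before a test like this is informative.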

WWC Standards

- Evaluate the Design
  - Meets Design Standards
  - Meets with Reservations
  - Does Not Meet Design Standards
- Evaluate the Evidence
  - Strong Evidence
  - Moderate Evidence
  - No Evidence
- Effect-Size Estimation
- Social Validity Assessment

Visual Analysis of Single-Case Evidence

- Traditional Method of Data Evaluation for SCDs
  - Determine whether evidence of a causal relation exists
  - Characterize the strength or magnitude of that relation
  - Singular approach used by WWC for rating SCD evidence
- Methods for Effect-Size Estimation
  - Several methods proposed
  - SCD WWC Panel members among those developing these methods, but methods are still being tested and some are now comparable with group-comparison studies
  - WWC standards for effect size are being assessed as field reaches greater consensus on appropriate statistical approaches

Goal, Rationale, Advantages, and Limitations of Visual Analysis

- Goal is to Identify Intervention Effects
  - A basic effect is a change in the dependent variable in response to researcher manipulation of the independent variable.
  - “Subjective” determination of evidence, but practice and common framework for applying visual analysis can help to improve agreement rate.
  - Evidence criteria are met by examining effects that are replicated at different points.
- Encourages Focus on Interventions with Strong Effects
  - Strong effects are generally desired by applied researchers and clinicians.
  - Weak results are filtered out because effects should be clear from looking at data (viewed as an advantage).
  - Statistical evaluation can be more sensitive than visual analysis in detecting intervention effects.

Goal, Rationale, Advantages, Limitations (cont’d)

- Statistical Evaluation and Visual Analysis are Not Fundamentally Different in terms of Controlling Errors (Kazdin, 2011)
  - Both attempt to avoid Type I and Type II errors
    - Type I: Concluding the intervention produced an effect when it did not
    - Type II: Concluding the intervention did not produce an effect when it did
- Possible Limitations of Visual Analysis
  - Lack of concrete decision-making rules (e.g., in contrast to p < 0.05 used in statistical analysis)
  - Multiple influences need to be analyzed simultaneously

Multiple Influences in Applying Visual Analysis

- Level: Mean of the data series within a phase
- Trend: Slope of the best-fit line within a phase
- Variability: Deviation of data around the best-fit line
- Data Overlap: Percentage of data from an intervention phase that enters the range of data from the previous phase
- Immediacy: Magnitude of change between the last 3 data points in one phase and the first 3 in the next
- Consistency: Extent to which data patterns are similar in similar phases
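The definitions above are concrete enough to compute. The following is a minimal sketch of five of them for one pair of adjacent phases; it is meant as a supplement to, not a replacement for, inspecting the graph. The choices that go beyond the slide are assumptions: sessions are indexed 0, 1, 2, …, the best-fit line is an ordinary least-squares line, variability is summarized as the root mean squared residual, and immediacy is the difference between the means of the two sets of three points. The function names and data are hypothetical.

```python
from math import sqrt
from statistics import mean


def level(ys):
    """Mean of the data series within a phase."""
    return mean(ys)


def trend(ys):
    """Least-squares slope within a phase (needs at least two points)."""
    xs = range(len(ys))
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx


def variability(ys):
    """Root mean squared deviation of the data around the best-fit line."""
    slope = trend(ys)
    intercept = mean(ys) - slope * mean(range(len(ys)))
    residuals = [y - (intercept + slope * x) for x, y in enumerate(ys)]
    return sqrt(sum(r * r for r in residuals) / len(ys))


def overlap(previous, current):
    """Percentage of current-phase points inside the previous phase's range."""
    low, high = min(previous), max(previous)
    inside = sum(1 for y in current if low <= y <= high)
    return 100.0 * inside / len(current)


def immediacy(previous, current):
    """Mean of the first 3 points of a phase minus mean of the last 3 before it."""
    return mean(current[:3]) - mean(previous[-3:])


# Hypothetical baseline and intervention phases for a behavior to be reduced.
baseline = [8, 9, 7, 8, 9]
intervention = [4, 3, 3, 2, 2]

print(level(baseline), level(intervention))
print(trend(baseline), trend(intervention))
print(variability(baseline))
print(overlap(baseline, intervention))
print(immediacy(baseline, intervention))
```

Consistency is not computed here because it compares patterns across all similar phases in a design, for example both baseline phases of an ABAB series, rather than a single pair of adjacent phases.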

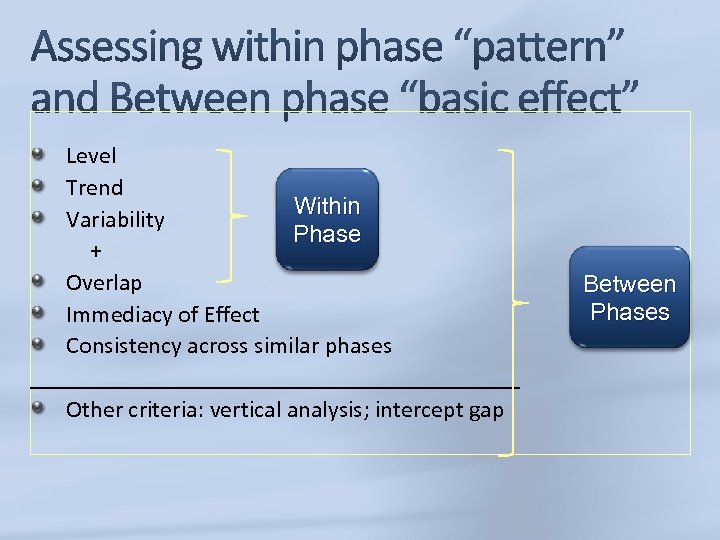

Documenting Experimental Control
- Three demonstrations of a “basic effect” at three different points in time.
- A “basic effect” is a predicted change in the dependent variable when the independent variable is actively manipulated.
- To assess a “basic effect,” Visual Analysis includes simultaneous assessment of: Level, Trend, Variability, Immediacy of Effect, Overlap across Adjacent Phases, Consistency of Data Pattern in Similar Phases. (Parsonson & Baer, 1978; Kratochwill & Levin, 1992)

- Within Phase: Level, Trend, Variability
- Between Phases: Overlap, Immediacy of Effect, Consistency across similar phases
- Other criteria: vertical analysis; intercept gap

Define protocol for teaching
- Discriminate Acceptable Designs
- Visual Analysis of Data
- Resource: http://www.wcer.wisc.edu/publications/workingPapers/Working_Paper_No_2010_13.pdf
- Resource: www.singlecase.org (for training, for research)
- Resource: https://foxylearning.com/tutorials/va (for training, for research)

Anyone, any time may use the website to assess their visual analysis acumen.
- For Instructors:
  - Contact Rob Horner with course code and dates needed
  - Set up “coordinator”
  - Students enter code and name
  - You can download their scores, number of sessions, time
- For Researchers:
  - Inter-rater agreement with visual analysis
  - Training protocols for teaching visual analysis

Visual Analysis of Single Case Designs
- Determine viability of design first
- Assess baseline
- Assess the within-phase pattern of each phase
- Assess the basic effects with each phase comparison
- Assess the extent to which the overall design documents experimental control (e.g., three demonstrations of basic effect, each at a different point in time)
- Assess effect size
- Assess social validity

Historical Overview
Three Considerations (after Levin, Marascuilo, & Hubert, 1978) (the WWWs):
1. Why an investigator would want, or feel the need, to conduct a formal statistical analysis
   - scholarly research vs. client-centered focus
   - investigator’s purpose and desired inferences
   - generalizations from a single-case study

Levin, J. R., Marascuilo, L. A., & Hubert, L. J. (1978). N = nonparametric randomization tests. In T. R. Kratochwill (Ed.), Single subject research: Strategies for evaluating change (pp. 167-196). New York: Academic Press.

Historical Overview
2. Whether any statistical analysis should be conducted
   - predefined outcome criteria
   - visual analysis options
   - validity issues
     - external: generalization, sampling
     - internal: design features, experimental control

Historical Overview
3. Which statistical procedure, of many potentially available, is the most appropriate one to use for the particular study/design?
   - distributional assumptions
   - statistical properties
   - the most important criteria to be satisfied

The Statistical Elephant in the Room: The Independence Assumption
- Of tea tastes (Fisher, 1935) and t tests
- From Shine (Shine & Bower, 1971) to Gentile, Roden, & Klein (1972) and beyond (e.g., Kratochwill et al., 1974)
- As reflected by the series autocorrelation (AC) coefficient, or the degree of serial dependence in the data

Fisher, R. A. (1935). The design of experiments. New York: Hafner.

Gentile, J. R., Roden, A. H., & Klein, R. D. (1972). An analysis of variance model for the intrasubject replication design. Journal of Applied Behavior Analysis, 5, 193-198.

Kratochwill, T. R., Alden, K., Demuth, D., Dawson, O., Panicucci, C., Arntson, P., McMurray, N., Hempstead, J., & Levin, J. (1974). A further consideration in the application of an analysis-of-variance model for the intrasubject replication design. Journal of Applied Behavior Analysis, 7, 629-633.

Shine, L. C., & Bower, S. M. (1971). A one-way analysis of variance for single-subject designs. Educational and Psychological Measurement, 31, 105-113.
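The series autocorrelation named on this slide can be estimated directly from a data series. Below is a minimal Python sketch, assuming the common lag-1 estimator (sum of cross-products of adjacent deviations from the series mean, divided by the sum of squared deviations). The function name `lag1_autocorrelation` and the example series are illustrative and do not come from the presentation.

```python
from statistics import mean


def lag1_autocorrelation(y):
    """Lag-1 autocorrelation: how strongly each observation tracks the one before it."""
    y_bar = mean(y)
    dev = [v - y_bar for v in y]
    numerator = sum(dev[t] * dev[t + 1] for t in range(len(y) - 1))
    denominator = sum(d * d for d in dev)
    return numerator / denominator


# A steadily rising series shows positive serial dependence ...
print(lag1_autocorrelation([1, 2, 3, 4, 5]))    # 0.4
# ... while a strictly alternating series shows negative serial dependence.
print(lag1_autocorrelation([1, -1, 1, -1]))     # -0.75
```

A value far from zero signals that successive observations are not independent, which is the assumption that t tests and ANOVA rely on.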

- t tests
- ANOVA
- Some time-series analysis tests

Statistical Randomization Techniques for Single-Case Intervention Data

Statistical-Conclusion Validity
- Each of the randomized single-case designs described in Kratochwill and Levin (2010) can be associated with a valid “randomization” inferential statistical test.
- Investigating the statistical properties (viz., Type I error and power) of several such tests was one of the foci of the Kratochwill/Levin IES grant.*

*Special thanks to our research collaborators Venessa Lall and

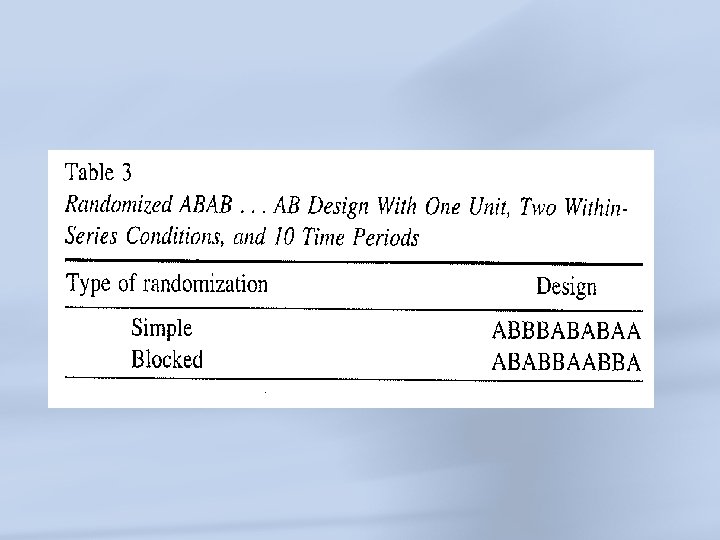

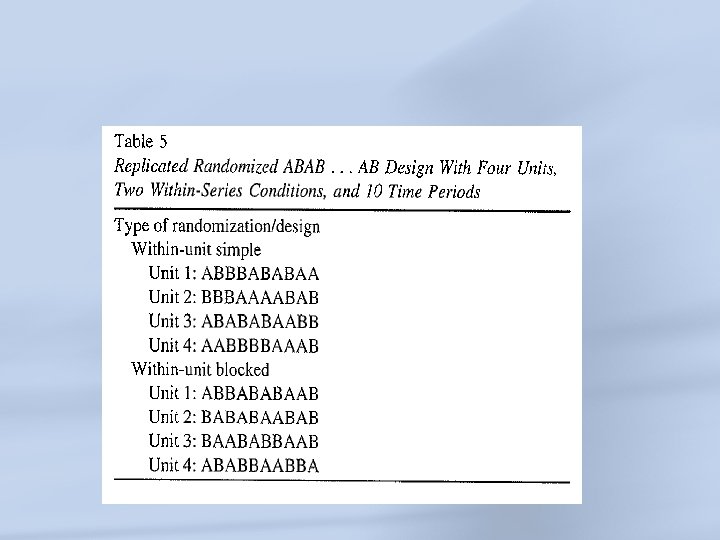

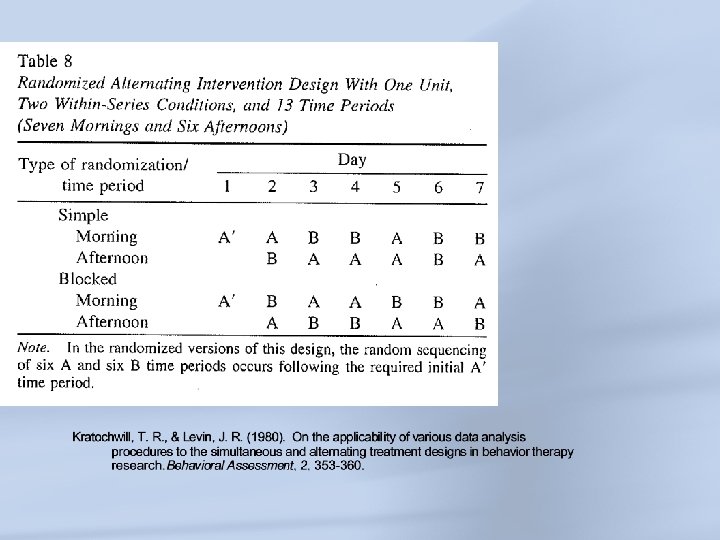

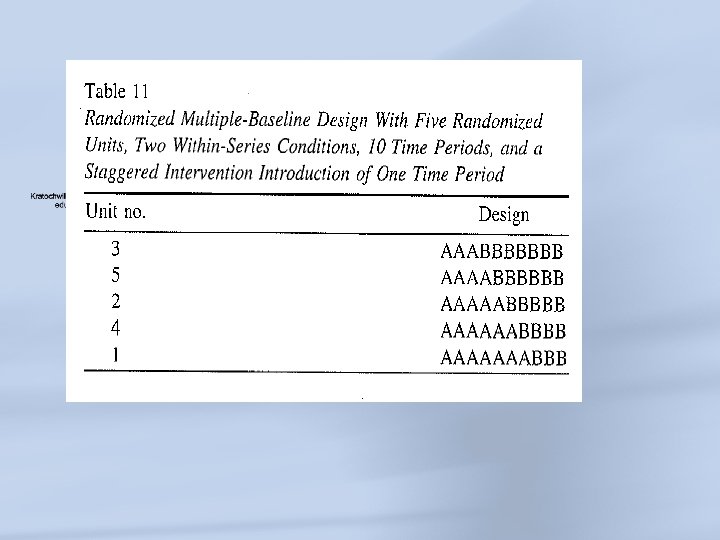

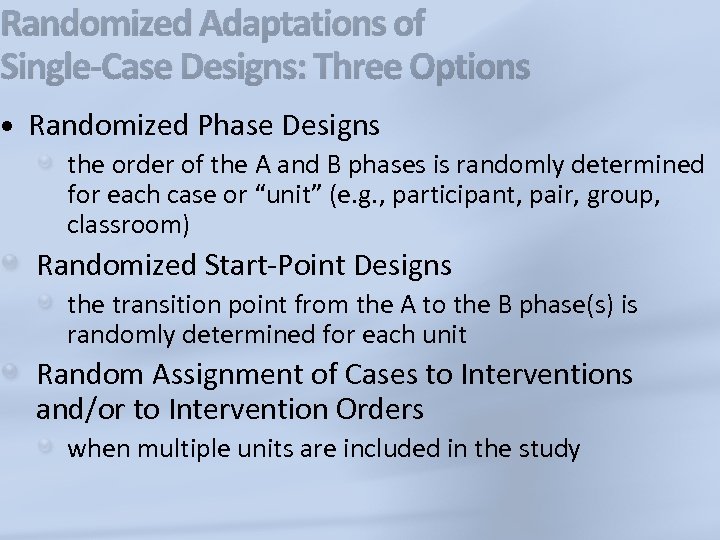

Randomized Adaptations of Single-Case Designs: Three Options
- Randomized Phase Designs: the order of the A and B phases is randomly determined for each case or “unit” (e.g., participant, pair, group, classroom)
- Randomized Start-Point Designs: the transition point from the A to the B phase(s) is randomly determined for each unit
- Random Assignment of Cases to Interventions and/or to Intervention Orders: when multiple units are included in the study
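To illustrate how a randomized start-point design supports a randomization test, here is a minimal Python sketch for a single AB series. It assumes the intervention start point was drawn at random from a pre-specified set of permissible start points and uses the B-minus-A mean difference as the test statistic. The function name, the statistic, and the data are illustrative assumptions, not the procedure of any specific program mentioned in this presentation.

```python
from statistics import mean


def start_point_randomization_test(y, actual_start, possible_starts):
    """One-sided randomization test for an AB design with a random start point.

    y               : outcome series in session order
    actual_start    : index of the first intervention (B) observation actually used
    possible_starts : all start indices that could have been selected at random
    """
    def statistic(start):
        # Mean of the B phase minus mean of the A phase for a given split.
        return mean(y[start:]) - mean(y[:start])

    observed = statistic(actual_start)
    distribution = [statistic(s) for s in possible_starts]
    # p = proportion of permissible splits at least as extreme as the observed one.
    p_value = sum(d >= observed for d in distribution) / len(distribution)
    return observed, p_value


# Hypothetical series: 12 sessions, start point drawn from sessions 3..9 (0-based).
series = [2, 3, 2, 3, 2, 3, 7, 8, 7, 8, 7, 8]
observed, p = start_point_randomization_test(series, actual_start=6,
                                             possible_starts=range(3, 10))
print(observed, p)  # the observed split is the most extreme of 7, so p = 1/7
```

With only seven permissible start points the smallest attainable p-value is 1/7, which is one reason such tests are often combined across several cases or phases.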

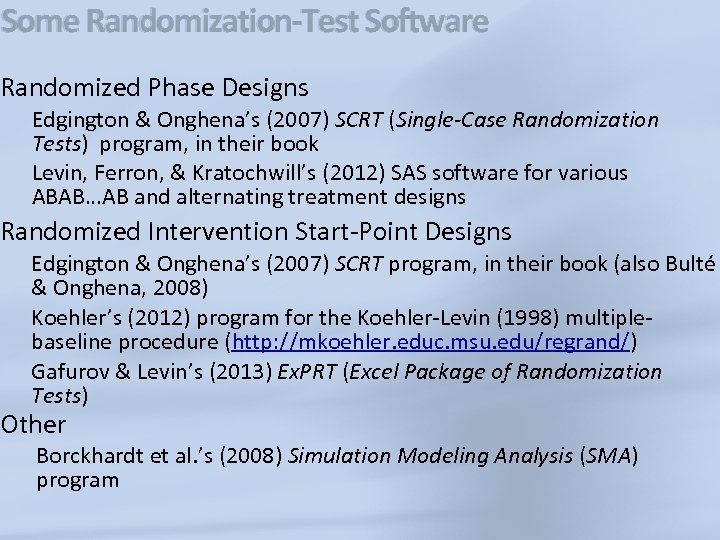

Some Randomization-Test Software
- Randomized Phase Designs
  - Edgington & Onghena’s (2007) SCRT (Single-Case Randomization Tests) program, in their book
  - Levin, Ferron, & Kratochwill’s (2012) SAS software for various ABAB…AB and alternating treatment designs
- Randomized Intervention Start-Point Designs
  - Edgington & Onghena’s (2007) SCRT program, in their book (also Bulté & Onghena, 2008)
  - Koehler’s (2012) program for the Koehler-Levin (1998) multiple-baseline procedure (http://mkoehler.educ.msu.edu/regrand/)
  - Gafurov & Levin’s (2013) ExPRT (Excel Package of Randomization Tests)
- Other
  - Borckardt et al.’s (2008) Simulation Modeling Analysis (SMA) program

Applications of the WWC Standards in Literature Reviews

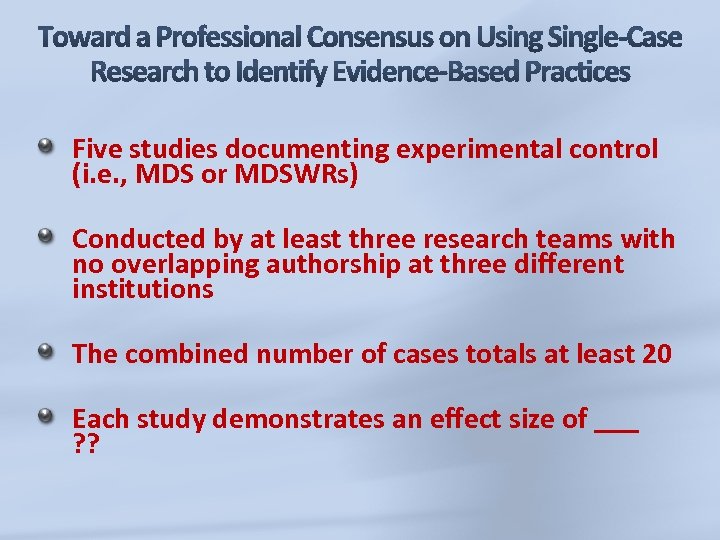

- Five studies documenting experimental control (i.e., MDS or MDSWRs)
- Conducted by at least three research teams with no overlapping authorship at three different institutions
- The combined number of cases totals at least 20
- Each study demonstrates an effect size of ___ ? ?
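The first three thresholds on this slide can be expressed as a simple check over a set of reviewed studies. The Python sketch below is hypothetical: the `Study` record, its field names, and the simplification that each study carries a single team label (standing in for "no overlapping authorship") are assumptions made for illustration. The effect-size criterion is left out because the slide leaves it unspecified.

```python
from dataclasses import dataclass


@dataclass
class Study:
    team: str         # hypothetical label for a research team with no overlapping authorship
    institution: str  # institution where the study was conducted
    n_cases: int      # number of cases (participants or units) in the study
    rating: str       # "MDS", "MDSWR", or any other string for studies not meeting standards


def meets_replication_thresholds(studies):
    """Check the study-count, team/institution, and case-count thresholds."""
    qualifying = [s for s in studies if s.rating in ("MDS", "MDSWR")]
    return (
        len(qualifying) >= 5
        and len({s.team for s in qualifying}) >= 3
        and len({s.institution for s in qualifying}) >= 3
        and sum(s.n_cases for s in qualifying) >= 20
    )


# Hypothetical literature base: five qualifying studies, three teams, 20 cases.
literature = [
    Study("Team A", "Univ 1", 4, "MDS"),
    Study("Team A", "Univ 1", 4, "MDS"),
    Study("Team B", "Univ 2", 4, "MDSWR"),
    Study("Team C", "Univ 3", 4, "MDS"),
    Study("Team C", "Univ 3", 4, "MDS"),
]
print(meets_replication_thresholds(literature))  # True
```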

An application of the What Works Clearinghouse Standards for evaluating single-subject research: Synthesis of the self-management literature base (Maggin, Briesch, & Chafouleas, 2013).
- Studies documenting experimental control [n = 37 (MDS) / n = 31 (MDSWR)]
- At least three settings/scholars (Yes)
- At least 20 participants (Yes)
- EVIDENCE CRITERIA:
  - Strong evidence (n = 25)
  - Moderate evidence (n = 30)
  - No evidence (n = 13)

Sensory interventions have gained popularity and are particularly common with children with autism, for whom they are the most frequently requested intervention despite having limited empirical support (Green, Pituch, Itchon, Choi, O’Reilly, & Sigafoos, 2006; Olson & Moulton, 2004). The driving principle for the use of sensory interventions is to improve sensory processing and increase adaptive functioning in individuals with sensory dysfunction (Ayres, 1979). This principle is based on sensory integration theory, which purports that controlled sensory experiences will help children appropriately respond to sensory input (Ayres, 1972).

- Weighted vests are frequently requested for children with autism and related disorders
- No protocols exist for their use
- Different uses observed across practitioners
- Conceptually systematic?
- Seem to be aversive & reinforcing
- Passive intervention for reducing challenging behavior
- Typically not used as part of a Behavior Support Plan
- Evidence is unknown?

Researchers have conducted a systematic review of sensory-based treatments and found them to be unsupported by research. The review covered both single-case research and randomized experimental group research (Barton, Reichow, Schnitz, Smith, & Sherlock, 2015). Lang et al. (2012) conducted a sensory integration (SI) review and concluded there was no support.

Given the high placebo effects that have been seen in interventions for children with developmental disabilities (Sandler, 2005) and the prevalence of a culture of unproven treatments for individuals with autism (Offit, 2008), use of therapeutic vests must proceed with caution (if at all) until practice guidelines based on empirical evidence can be established.

Barton, E. E., Reichow, B., Schnitz, A., Smith, I. C., & Sherlock, D. (2015). A systematic review of sensory-based treatments for children with disabilities. Research in Developmental Disabilities, 37, 64-80.

Cox, A. L., Gast, D. L., Luscre, D., & Ayres, K. M. (2009). The effects of weighted vests on appropriate in-seat behaviors of elementary-age students with autism and severe to profound intellectual disabilities. Focus on Autism and Other Developmental Disabilities, 24, 17-26.

Reichow, B. R., Barton, E. E., Neely, J., Good, L., & Wolery, M. (2010). Effects of weighted vests on the engagement of children with developmental delays and autism. Focus on Autism and Other Developmental Disabilities, 25, 3-11.

Reichow, B. R., Barton, E. E., Good, L., & Wolery, M. (2009). Effects of pressure vest usage on engagement and problem behaviors of a young child with developmental delays. Journal of Autism and Developmental Disorders, 27, 333-339.

- Non-overlap methods
- Meta-analysis options

There Are a Number of Methods, and Many Have Problems:
1. PND
2. PEM
3. PEM-T (ECL)
4. PAND
5. R-IRD
6. NAP
7. Tau-U
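Two of the listed indices are simple enough to sketch in a few lines. The Python example below assumes the usual definitions for a behavior expected to increase: PND as the percentage of intervention points above the highest baseline point, and NAP as the proportion of all baseline-intervention pairs in which the intervention point is higher, with ties counted as half. Function names and data are illustrative, not from the presentation.

```python
def pnd(baseline, intervention):
    """Percentage of Non-overlapping Data, for an expected increase."""
    ceiling = max(baseline)
    return 100 * sum(v > ceiling for v in intervention) / len(intervention)


def nap(baseline, intervention):
    """Non-overlap of All Pairs, for an expected increase (ties count 0.5)."""
    score = 0.0
    for a in baseline:
        for b in intervention:
            if b > a:
                score += 1.0
            elif b == a:
                score += 0.5
    return score / (len(baseline) * len(intervention))


# Hypothetical phases: one intervention point ties the baseline maximum.
baseline = [2, 3, 2, 3, 2]
intervention = [3, 6, 7, 8, 9]
print(pnd(baseline, intervention))  # 80.0
print(nap(baseline, intervention))  # 0.96
```

Because PND depends on a single extreme baseline value, one outlying baseline point can drive it to zero, which is one of the problems the slide alludes to.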

The Tau-U score is not affected by the ceiling effect present in other non-overlap methods, and performs well in the presence of autocorrelation.

- Many different synthesis metrics
  - Each has significant limitations and flaws
  - None are completely satisfactory
  - PND has been quite popular (maybe most flawed), but increasing popularity of PAND is evident
- Common problems
  - Variability, trends, and “known” data patterns (extinction bursts, learning curves, delayed effects)
  - Failure to measure magnitude
  - Ignoring the replication logic of Single-Case Design

Options include those that are just for summarizing single-case design studies:
- Shadish, W. R., Hedges, L. V., & Pustejovsky, J. E. (2014). Analysis and Meta-Analysis of Single-Case Designs With a Standardized Mean Difference Statistic: A Primer and Applications. Journal of School Psychology, 52, 123-147.
- ExPRT provides an effect size based on Busk and Serlin (1992)

Some options for summarizing single-case design and group research designs:
- Shadish, Hedges, Horner, and Odom (2015) summarize methods for between-case effect size in single-case design. Download the free report at the following web site: http://ies.ed.gov/ncser/pubs/2015002/
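As a rough illustration of a within-case standardized mean difference, the Python sketch below divides the difference between phase means by the baseline standard deviation. This is one variant commonly attributed to Busk and Serlin (1992); whether ExPRT computes exactly this form is an assumption, and the function name and data are hypothetical. It is not the between-case statistic described by Shadish and colleagues.

```python
from statistics import mean, stdev


def within_case_smd(baseline, intervention):
    """(mean of B - mean of A) / sample SD of A; needs at least 2 baseline points."""
    return (mean(intervention) - mean(baseline)) / stdev(baseline)


# Hypothetical baseline (A) and intervention (B) phases for one case.
baseline = [2, 3, 2, 3, 2]
intervention = [6, 7, 8, 7, 9]
print(round(within_case_smd(baseline, intervention), 2))
```

Values from this within-case index are not on the same scale as group-design effect sizes, which is why the between-case methods cited above were developed.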

- Single-case methods are an effective and efficient approach for documenting experimental effects in intervention research.
- A number of randomized experimental designs are now available for intervention research.
- A need exists for adopting standards for training and using visual analysis, and combinations of visual analysis with statistical analysis.
- There are encouraging (but still emerging) approaches for statistical analysis that will improve literature summary options.
- There is now more precision in reviews of the intervention research literature with use of the WWC Standards.

Single-Case Intervention Research: New Developments in Methodology and Data Analysis
- University of Wisconsin-Madison
- June 27 - July 1, 2016
- Application deadline: April 11, 2016
- Web site: http://www.apa.org/science/about/psa/2015/12/advanced-training-institutes.aspx

Thomas R. Kratochwill, Ph.D.
Sears Bascom Professor and Director, School Psychology Program
Wisconsin Center for Education Research
University of Wisconsin-Madison, Wisconsin 53706
Phone: (608) 262-5912
E-Mail: tomkat@education.wisc.edu
