- Number of slides: 74

What is Digital Image Processing?

• An image is defined as a two-dimensional function f(x, y), where x and y are spatial (plane) coordinates and the amplitude of f at any pair of coordinates (x, y) is called the intensity of the image at that point.
• When x, y and the amplitude values of f are all finite, discrete quantities, we call the image a digital image.
• The field of digital image processing refers to processing digital images by means of a computer.
• A digital image consists of a finite number of elements, each of which has a particular location and value.
• These elements are referred to as picture elements, image elements, pels or pixels.
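
The definition above can be made concrete with a very small example. The following is only a sketch: the 3-by-4 matrix and the variable names are made up for illustration, and it shows a digital image as a finite grid of pixels, each with a location and a discrete intensity value.

```matlab
% A tiny 3-by-4 digital image: finite size, discrete 8-bit intensities.
% (Hypothetical values, chosen only for illustration.)
f = uint8([  0  64 128 255;
            32  96 160 224;
            16  80 144 208]);

[numRows, numCols] = size(f);   % finite number of rows and columns
numPixels = numel(f);           % total number of picture elements (pixels)

% Each pixel has a location (row, column) and a value (intensity).
row = 2; col = 3;
intensity = f(row, col);

fprintf('%d x %d image, %d pixels, f(%d,%d) = %d\n', ...
        numRows, numCols, numPixels, row, col, intensity);
```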

Machines vs humans

• Among the five senses, vision is considered the most important for a human being.
• However, a human being can perceive only the visible band of the electromagnetic spectrum.
• Imaging machines, in contrast, cover almost the entire electromagnetic spectrum, from gamma rays to radio waves.

Computer vision vs Image analysis

• Image processing is a discipline in which both the input and the output of a process are images.
• The goal of computer vision is to use computers to emulate human vision, including learning, making inferences and taking actions.
• Image analysis lies between image processing and computer vision.

Types of Processes in Image Processing

• There are three types of computerized processes: low-, mid- and high-level processes.
• Low-level processes involve primitive operations such as image preprocessing to reduce noise, contrast enhancement and image sharpening. Both the input and the output are images.
• Mid-level processes involve segmentation (partitioning an image into regions or objects), description of those objects to reduce them to a form suitable for computer processing, and classification (recognition) of individual objects. The inputs are images, but the outputs are attributes extracted from those images.
• In high-level processing, we ‘make sense’ of a collection of recognized objects.
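
The difference between low-level and mid-level processes can be sketched on a synthetic image. The code below is an illustration under stated assumptions, not a prescribed pipeline: the test image, the 3-by-3 mean filter and the threshold of 110 are all arbitrary choices made for this example. The low-level step returns an image, while the mid-level step returns attributes (area and centroid).

```matlab
% Synthetic 64-by-64 test image: dark background with one bright square.
% (Hypothetical data, chosen only for illustration.)
img = 20 * ones(64, 64);
img(21:40, 25:44) = 200;              % 20-by-20 bright object

% Low-level process (image in, image out): 3-by-3 mean filter.
kernel   = ones(3, 3) / 9;
smoothed = conv2(img, kernel, 'same');

% Mid-level process (image in, attributes out): segment by thresholding,
% then describe the segmented region by its area and centroid.
mask = smoothed > 110;                % assumed threshold between 20 and 200
[r, c] = find(mask);
objectArea     = numel(r);            % number of object pixels
objectCentroid = [mean(r), mean(c)];  % [row, column]

fprintf('area = %d pixels, centroid = (%.1f, %.1f)\n', ...
        objectArea, objectCentroid(1), objectCentroid(2));
```

Note that smoothing rounds off the corners of the square, so the measured area is slightly smaller than the 400 pixels that were drawn.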

Example of DIP and image analysis

• Acquiring an image of a page of text, preprocessing it, extracting (segmenting) the individual characters, describing the characters in a form suitable for computer processing and recognizing those characters are all within the scope of digital image processing.
• Making sense of the content of the page (text) is viewed as the domain of image analysis and computer vision.

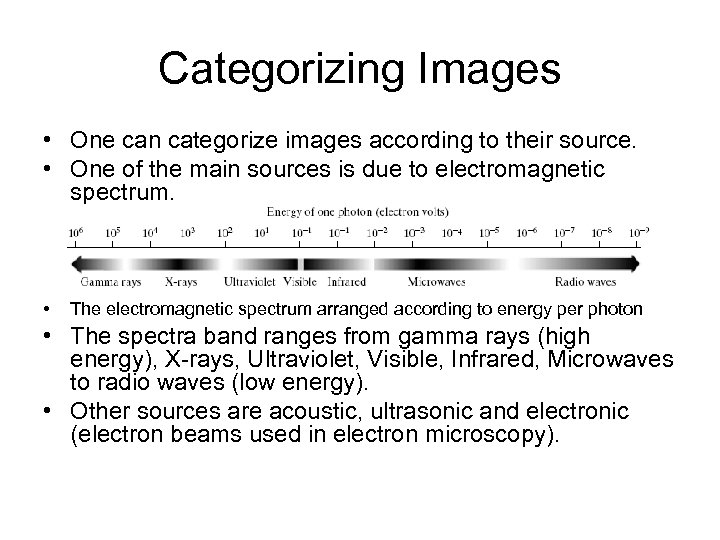

Categorizing Images

• One can categorize images according to their source.
• The principal energy source for images is the electromagnetic (EM) spectrum.
• The electromagnetic spectrum can be arranged according to energy per photon.
• The spectral bands range from gamma rays (highest energy) through X-rays, ultraviolet, visible, infrared and microwaves to radio waves (lowest energy).
• Other sources are acoustic, ultrasonic and electronic (electron beams used in electron microscopy).

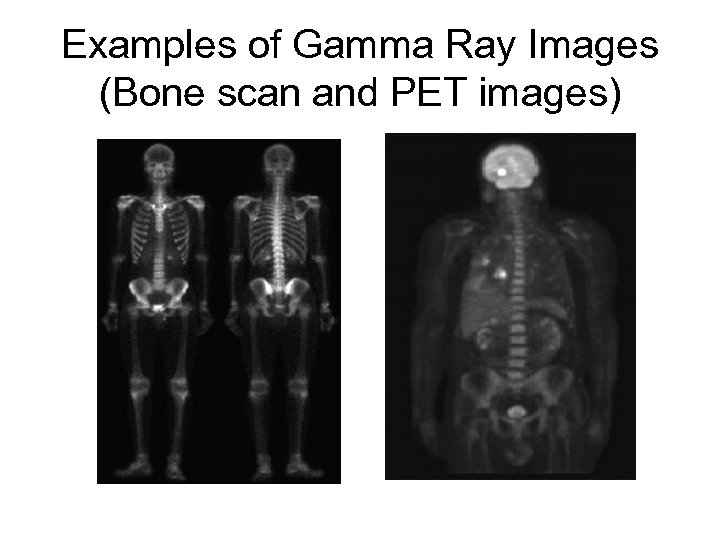

Gamma Ray Imaging

• Gamma rays are used in nuclear medicine and astronomical observations.
• In medicine, gamma-ray imaging is used for complete bone scans.
• Nuclear imaging is also used in positron emission tomography (PET).
• PET can render a 3-D image of the patient.
• It can detect tumors in the brain and lungs.
• Images of stars that exploded about 15,000 years ago can be captured using gamma rays.

Examples of Gamma Ray Images (Bone scan and PET images)

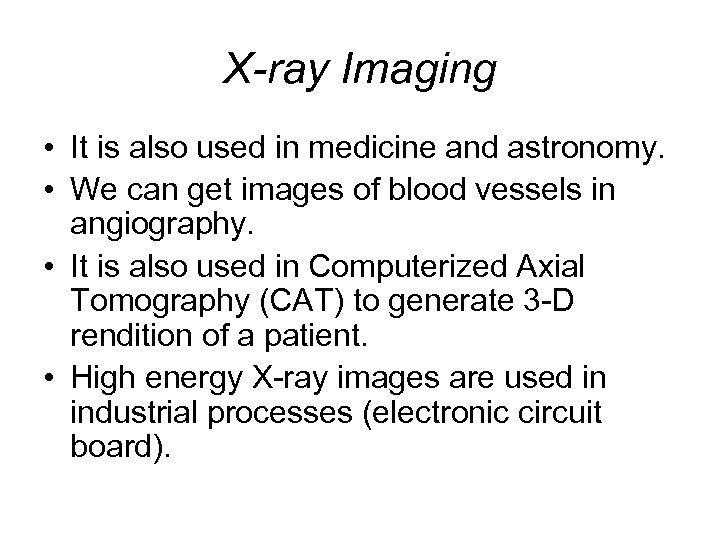

X-ray Imaging

• X-rays are also used in medicine and astronomy.
• Angiography produces images of blood vessels.
• X-rays are also used in computerized axial tomography (CAT) to generate a 3-D rendition of a patient.
• High-energy X-ray images are used in industrial processes, for example to inspect electronic circuit boards.

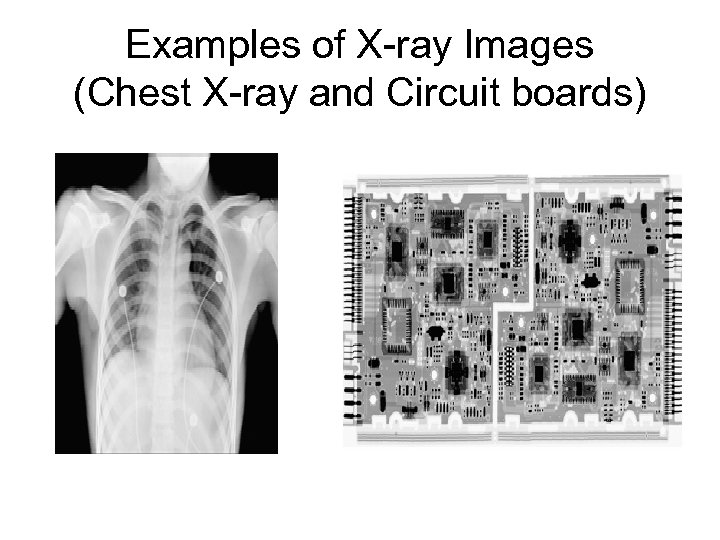

Examples of X-ray Images (Chest X-ray and Circuit boards)

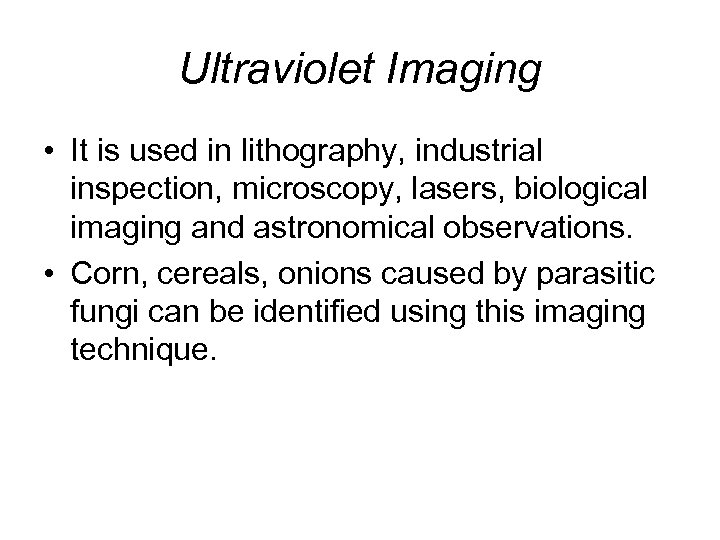

Ultraviolet Imaging

• Ultraviolet light is used in lithography, industrial inspection, microscopy, lasers, biological imaging and astronomical observations.
• Diseases of corn, cereals and onions caused by parasitic fungi (smut) can be identified using this imaging technique.

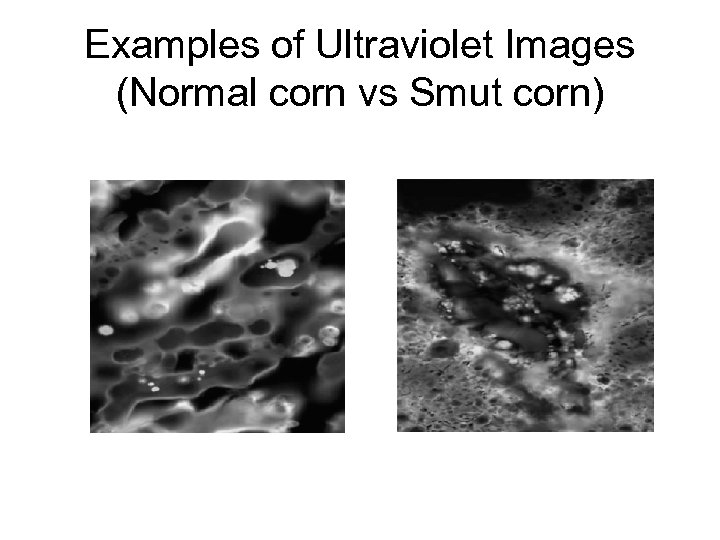

Examples of Ultraviolet Images (Normal corn vs Smut corn)

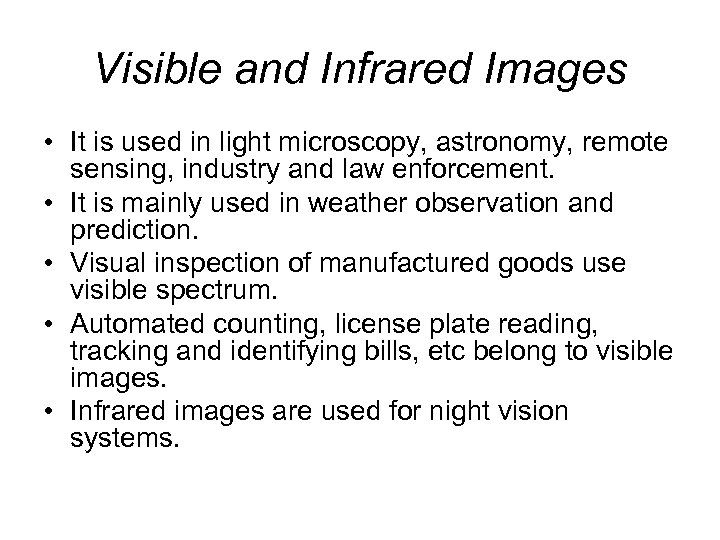

Visible and Infrared Images

• These bands are used in light microscopy, astronomy, remote sensing, industry and law enforcement.
• A major application is weather observation and prediction.
• Visual inspection of manufactured goods uses the visible spectrum.
• Automated counting, license plate reading, and tracking and identifying bills are further applications of visible-band imaging.
• Infrared images are used in night vision systems.

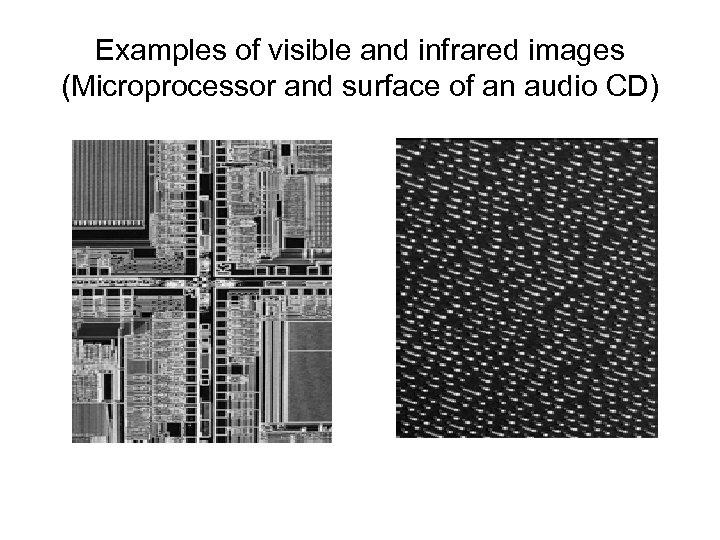

Examples of visible and infrared images (Microprocessor and surface of an audio CD)

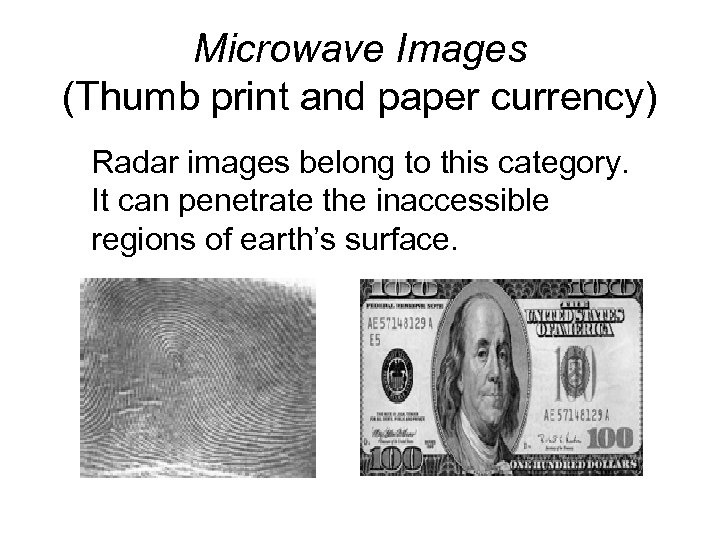

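Microwave Images (Thumb print and paper currency)

Radar images belong to this category. Radar waves can penetrate clouds and, under certain conditions, vegetation, ice and dry sand, so radar can image otherwise inaccessible regions of the Earth’s surface.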

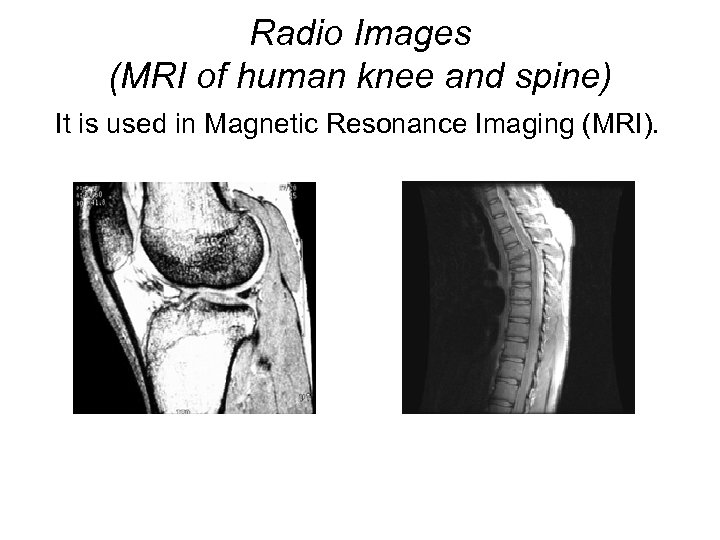

Radio Images (MRI of human knee and spine)

Radio waves are used in magnetic resonance imaging (MRI).

Acoustic imaging

• These images are formed using sound energy.
• They are used in geological exploration, industry and medicine.
• In geology, they are used in mineral and oil exploration.
• Ultrasonic images are used in obstetrics to assess the health of unborn babies and to determine the sex of the baby.

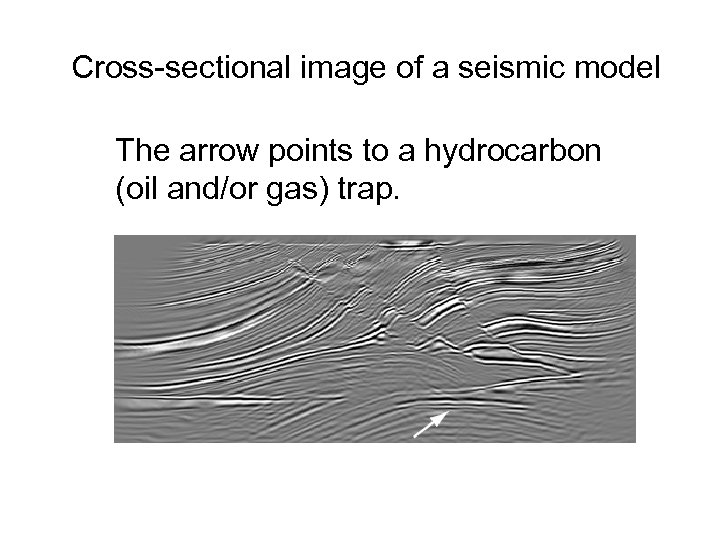

Cross-sectional image of a seismic model. The arrow points to a hydrocarbon (oil and/or gas) trap.

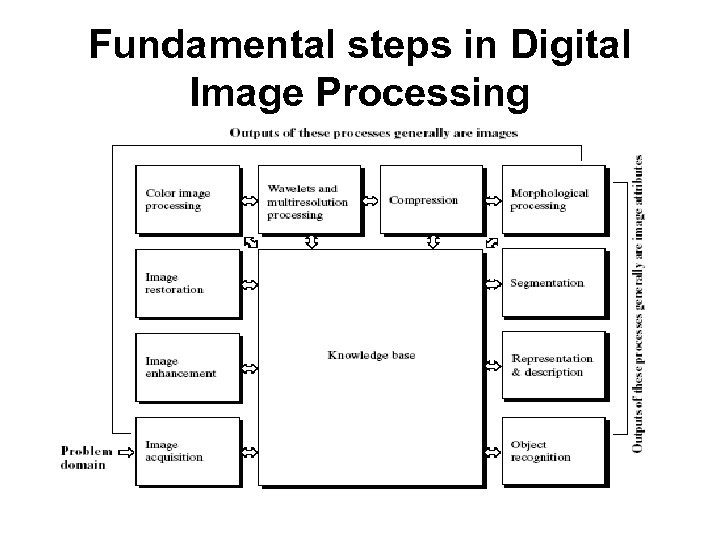

Fundamental steps in Digital Image Processing

Fundamental steps of DIP

• Image acquisition – This stage generally involves preprocessing, such as scaling.
• Image enhancement – Here we bring out detail that is obscured or highlight features of interest in an image, e.g., by increasing the contrast of an image.
• Image restoration – This also improves the appearance of an image. Unlike enhancement, which is subjective, restoration is objective: it is based on mathematical or probabilistic models of image degradation.
• Color image processing – This area is gaining importance because of the growing use of digital images over the Internet. The various color models are worth knowing.
• Wavelets – These are the foundation for representing images at various degrees of resolution.

Fundamental steps of DIP

• Compression – Techniques for reducing the storage required to save an image or the bandwidth needed to transmit it.
• Morphological processing – Tools for extracting image components that are useful in the representation and description of shape.
• Segmentation – Procedures that partition an image into its constituent parts or objects.
• Representation and description – This stage follows the output of a segmentation stage. Representation uses either the boundary of a region or all the points in the region itself. Description (also called feature selection) deals with extracting attributes that yield quantitative information of interest or are basic for differentiating one class of objects from another.
• Recognition – The process that assigns a label (e.g., vehicle) to an object based on its descriptors.

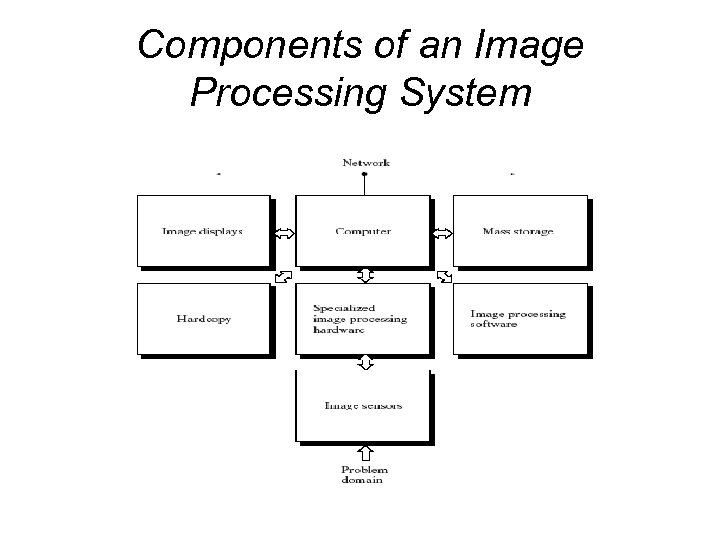

Components of an Image Processing System

Basic components of a general-purpose system used for digital image processing

• Image sensors – Two elements are needed to acquire digital images. The first is a physical device that is sensitive to the energy radiated by the object we wish to image. The second, called the digitizer, is a device for converting the output of the physical sensing device into digital form. For example, in a digital video camera the sensors produce an electrical output proportional to light intensity, and the digitizer converts this output to digital data.

Basic components of a general-purpose system used for digital image processing

• Specialized image processing hardware – This consists of the digitizer plus hardware that performs other primitive operations, such as an arithmetic logic unit (ALU) that performs arithmetic and logical operations on entire images. This type of hardware is also called a front-end subsystem, and its distinguishing characteristic is speed: it performs functions that require fast data throughput that the main computer cannot handle.
• Computer – In an image processing system this is a general-purpose computer.
• Software – This consists of specialized modules that perform specific tasks (e.g., MATLAB).

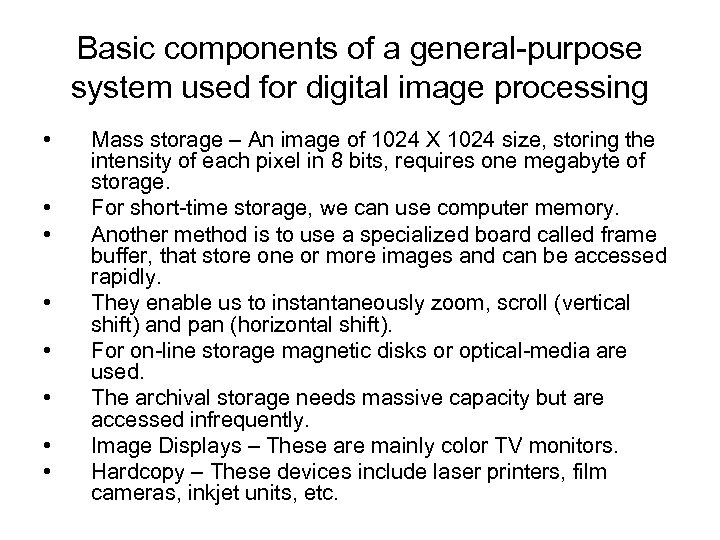

Basic components of a general-purpose system used for digital image processing

• Mass storage – An image of size 1024 × 1024 pixels, with the intensity of each pixel stored in 8 bits, requires one megabyte of storage if it is not compressed. For short-term storage, we can use computer memory. Another option is a specialized board called a frame buffer, which stores one or more images and can be accessed rapidly. Frame buffers enable virtually instantaneous zoom, scroll (vertical shift) and pan (horizontal shift). For on-line storage, magnetic disks or optical media are used. Archival storage requires massive capacity but is accessed infrequently.
• Image displays – These are mainly color TV monitors.
• Hardcopy – These devices include laser printers, film cameras, inkjet units, etc.

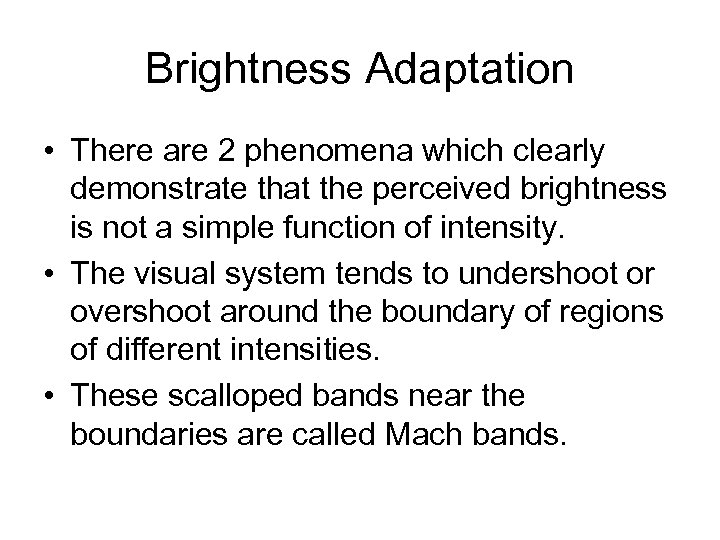

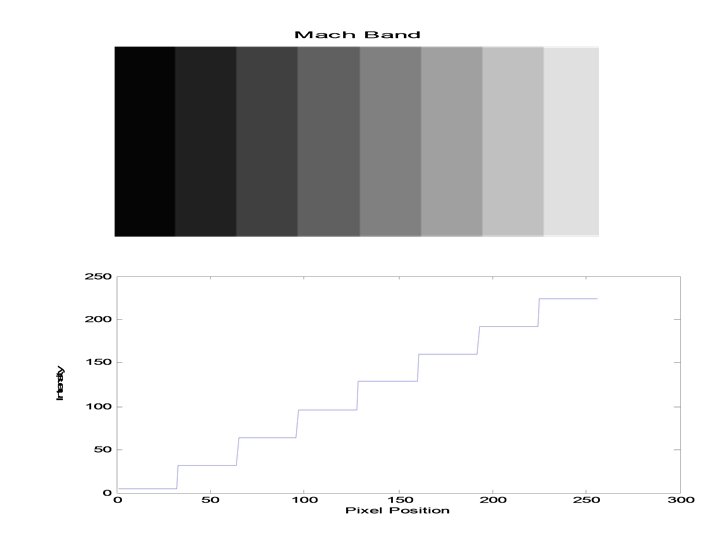

Brightness Adaptation

• Two phenomena clearly demonstrate that perceived brightness is not a simple function of intensity.
• The visual system tends to undershoot or overshoot around the boundary between regions of different intensities.
• The scalloped bands perceived near such boundaries are called Mach bands.

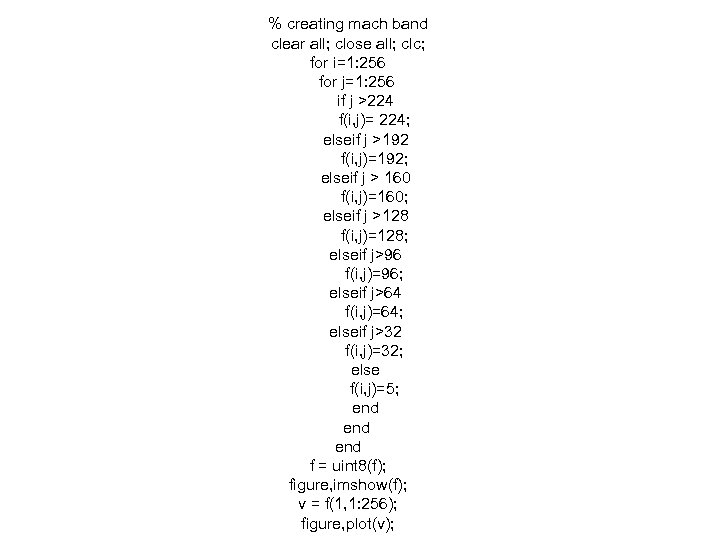

    % Creating Mach bands: eight vertical stripes of constant intensity.
    clear all; close all; clc;
    f = zeros(256, 256);
    for i = 1:256
        for j = 1:256
            if j > 224
                f(i, j) = 224;
            elseif j > 192
                f(i, j) = 192;
            elseif j > 160
                f(i, j) = 160;
            elseif j > 128
                f(i, j) = 128;
            elseif j > 96
                f(i, j) = 96;
            elseif j > 64
                f(i, j) = 64;
            elseif j > 32
                f(i, j) = 32;
            else
                f(i, j) = 5;
            end
        end
    end
    f = uint8(f);
    figure, imshow(f);
    v = f(1, 1:256);        % intensity profile along the first row
    figure, plot(v);

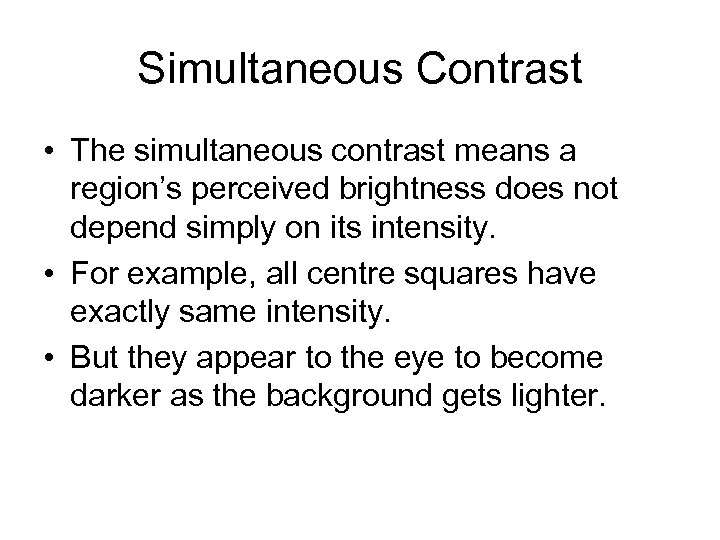

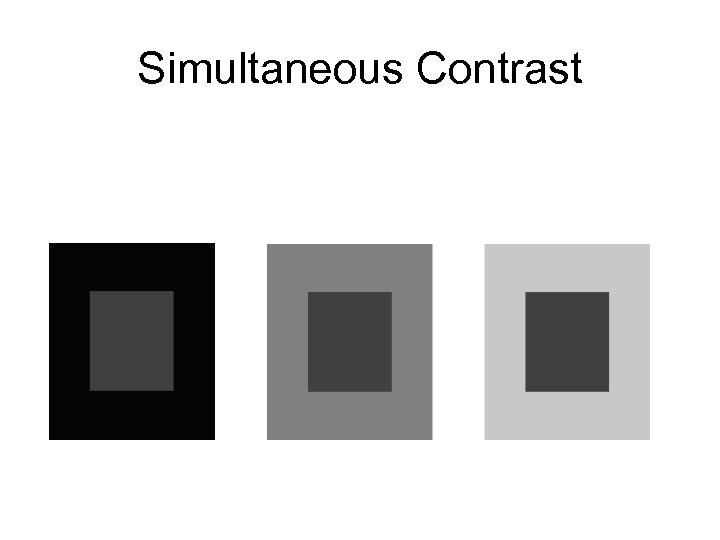

Simultaneous Contrast

• Simultaneous contrast means that a region’s perceived brightness does not depend simply on its intensity.
• For example, all the centre squares have exactly the same intensity.
• However, they appear to the eye to become darker as the background gets lighter.

Simultaneous Contrast

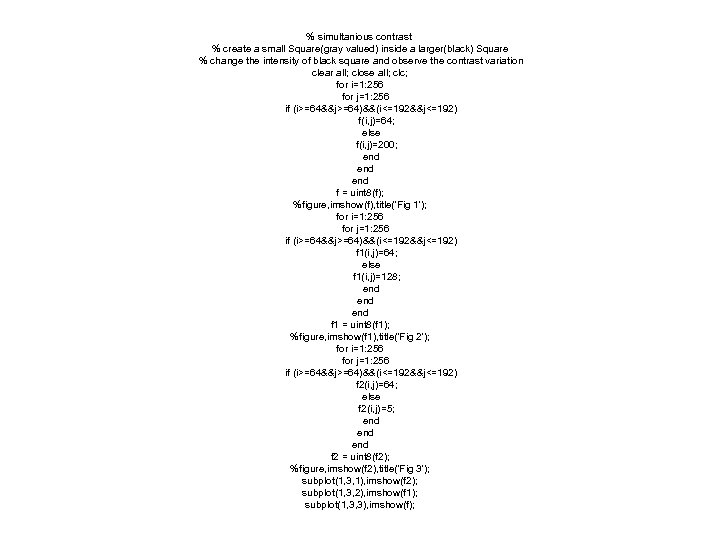

    % Simultaneous contrast
    % Create a small gray square inside a larger square, then change the
    % intensity of the larger (background) square and observe how the
    % perceived brightness of the inner square varies.
    clear all; close all; clc;
    backgrounds = [5 128 200];          % background intensities, dark to light
    for k = 1:3
        f = backgrounds(k) * ones(256, 256);
        f(64:192, 64:192) = 64;         % same centre intensity in every image
        subplot(1, 3, k), imshow(uint8(f));
    end

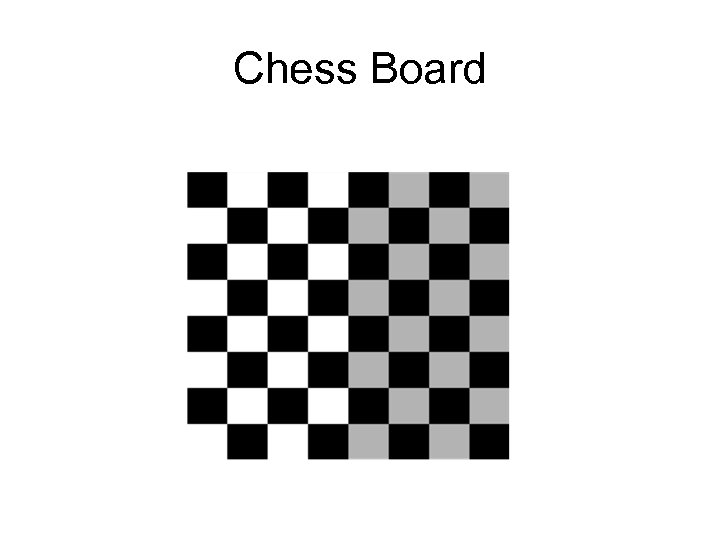

Chess Board

Chess board creation

    clear all; close all; clc;
    f = checkerboard(8);            % 64-by-64 board made of 8-pixel squares
    f = imresize(f, [256, 256]);    % enlarge to 256-by-256
    imshow(f);

Exercises

• Write MATLAB code to create Mach bands and observe the transition of intensities at various pixel positions across the bands.
• Write MATLAB code to simulate simultaneous contrast: create a small gray square inside a larger black square, change the intensity of the larger square and observe the contrast variation.
• Write MATLAB code to generate a chessboard consisting of eight alternating black and white squares.
• Write MATLAB code to generate the 1-D barcodes (EAN-13) that are used for ISBNs.
• Write MATLAB code to generate 2-D barcodes.
• Write MATLAB code to generate the visual code for a given ID (83 bits).

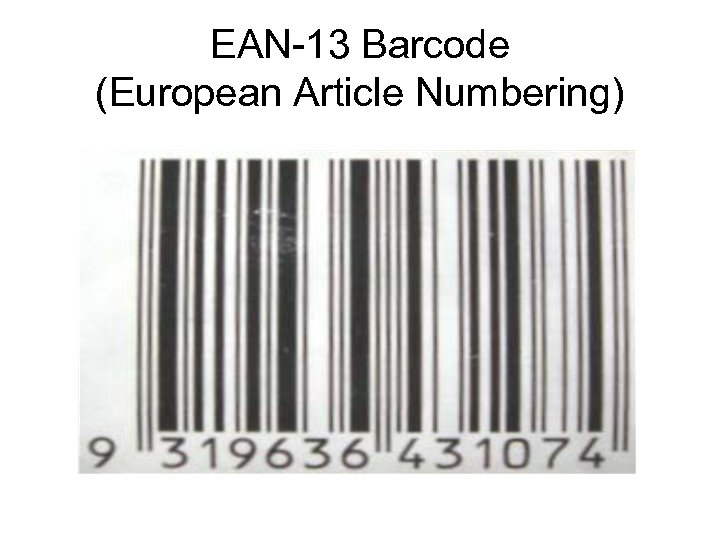

EAN-13 Barcode (European Article Numbering)
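
Before the bars of an EAN-13 symbol can be drawn, the 13th digit (the check digit) has to be computed from the first twelve. The sketch below shows only that step, using the standard rule of alternating weights 1 and 3. The 12-digit prefix is an arbitrary ISBN-style example and the variable names are illustrative, and encoding the digits into bar patterns is not covered here.

```matlab
% First 12 digits of an EAN-13 number (an ISBN-13 style prefix,
% used purely as an example).
digits12 = [9 7 8 0 3 0 6 4 0 6 1 5];

% Weights alternate 1, 3, 1, 3, ... starting from the leftmost digit.
weights     = repmat([1 3], 1, 6);
weightedSum = sum(digits12 .* weights);

% The check digit brings the weighted sum up to a multiple of 10.
checkDigit = mod(10 - mod(weightedSum, 10), 10);

ean13 = [digits12 checkDigit];
fprintf('EAN-13: %s\n', sprintf('%d', ean13));
```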

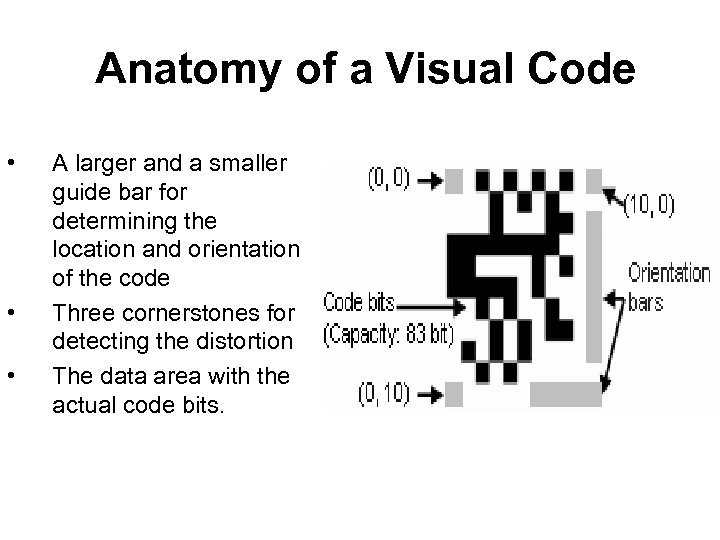

Anatomy of a Visual Code

• A larger and a smaller guide bar for determining the location and orientation of the code.
• Three cornerstones for detecting distortion.
• The data area with the actual code bits.

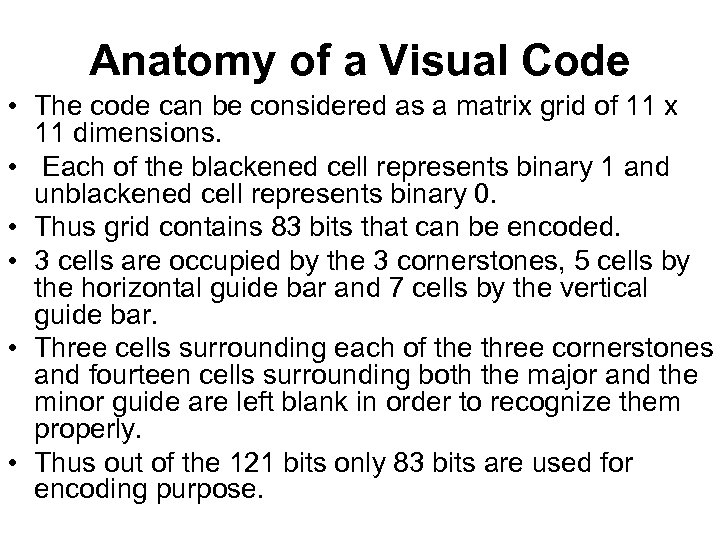

Anatomy of a Visual Code

• The code can be considered as an 11 × 11 grid of cells.
• Each blackened cell represents binary 1 and each unblackened cell represents binary 0.
• The grid provides 83 bits that can be used for encoding.
• 3 cells are occupied by the three cornerstones, 5 cells by the horizontal guide bar and 7 cells by the vertical guide bar.
• Three cells surrounding each of the three cornerstones (9 in total) and fourteen cells surrounding the major and minor guide bars are left blank so that these features can be recognized properly.
• Thus, out of the 121 cells, only 83 are used for encoding.
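
The count of 83 data bits follows directly from the cell budget given above. As a quick check, using illustrative variable names:

```matlab
totalCells       = 11 * 11;  % 121 cells in the grid
cornerstoneCells = 3;        % one cell per cornerstone
horizontalBar    = 5;        % cells in the horizontal guide bar
verticalBar      = 7;        % cells in the vertical guide bar
cornerstoneGuard = 3 * 3;    % three blank cells around each cornerstone
guideBarGuard    = 14;       % blank cells around both guide bars

dataBits = totalCells - cornerstoneCells - horizontalBar - verticalBar ...
           - cornerstoneGuard - guideBarGuard;
fprintf('data bits = %d\n', dataBits);
```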

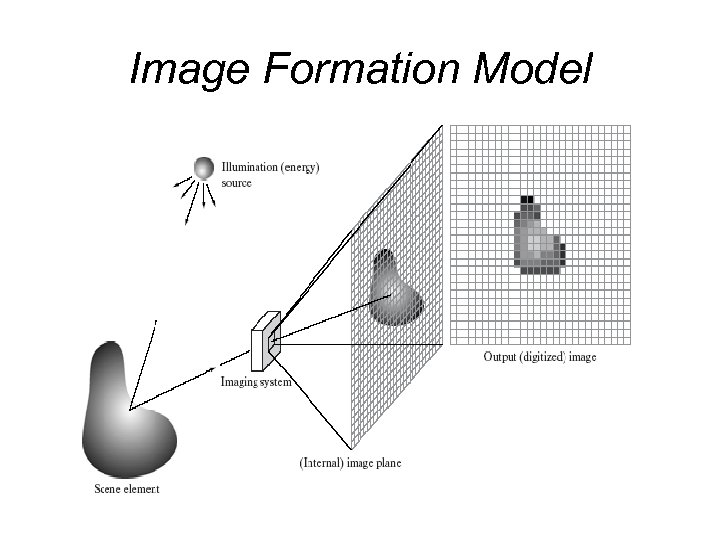

Image Formation Model

Image Formation Model

• When an image is generated from a physical process, its values are proportional to the energy radiated by a physical source (e.g., electromagnetic waves).
• Hence f(x, y) must be nonzero and finite, that is, $0 < f(x, y) < \infty$.
• The function f(x, y) is characterized by two components:
• 1) The amount of source illumination incident on the scene being viewed, called the illumination component and denoted i(x, y).
• 2) The amount of illumination reflected by the objects in the scene, called the reflectance component and denoted r(x, y).

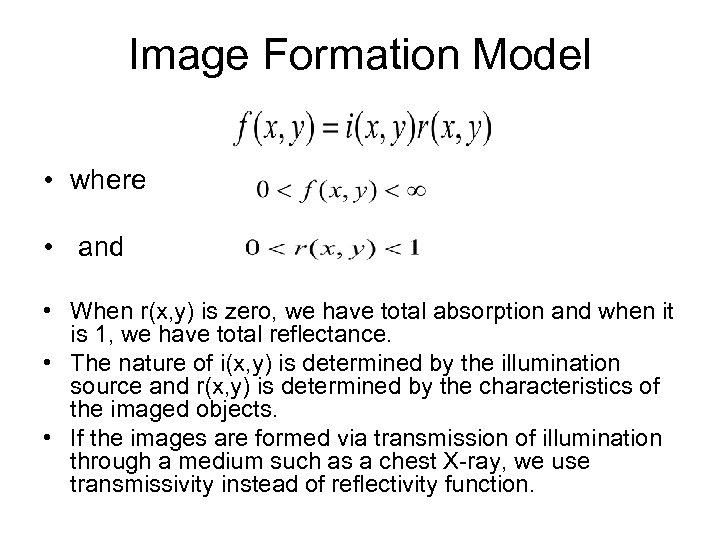

Image Formation Model

• The illumination and reflectance components combine as a product to form the image: $f(x, y) = i(x, y)\, r(x, y)$, where $0 < i(x, y) < \infty$ and $0 < r(x, y) < 1$.
• When r(x, y) is zero we have total absorption, and when it is 1 we have total reflectance.
• The nature of i(x, y) is determined by the illumination source, and r(x, y) is determined by the characteristics of the imaged objects.
• If an image is formed by transmission of the illumination through a medium, as in a chest X-ray, we use a transmissivity function instead of a reflectivity function.

Illumination values of objects

• On a clear day, the sun produces about 90,000 lm/m² of illumination on the surface of the Earth. On a cloudy day it is about 10,000 lm/m².
• On a clear evening, a full moon gives about 0.1 lm/m² of illumination.
• The typical illumination of an office is about 1000 lm/m².
• Typical values of r(x, y) are 0.01 for black velvet, 0.65 for stainless steel, 0.80 for white wall paint, 0.90 for silver-plated metal and 0.93 for snow.
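
To get a feel for these numbers, they can be combined under the usual product model, f = i · r (illumination times reflectance). The sketch below assumes that model and simply tabulates f for every pair of values quoted above. The variable names are illustrative.

```matlab
% Illumination levels i (lm/m^2) and reflectances r quoted in the slide.
illumination = [90000 10000 1000 0.1];      % clear day, cloudy day, office, full moon
reflectance  = [0.01 0.65 0.80 0.90 0.93];  % velvet, steel, wall paint, silver, snow

% Product model f = i * r for every (reflectance, illumination) pair:
% rows follow reflectance, columns follow illumination.
f = reflectance(:) * illumination;          % 5-by-4 outer product

% Example: range of f under office lighting (third column).
officeColumn = f(:, 3);
fprintf('office: f ranges from %g to %g\n', ...
        min(officeColumn), max(officeColumn));
```

Under office lighting the values span roughly two orders of magnitude, from black velvet to snow, which is the kind of range a sensor and quantizer must accommodate.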

Image Sampling

• The output of many sensors is a continuous voltage waveform.
• To create a digital image, we need to convert this continuous signal into digital form.
• This involves two processes: sampling and quantization.
• An image is continuous with respect to the x and y coordinates and also in amplitude.
• Digitizing the coordinate values is called sampling.
• Digitizing the amplitude values is called quantization.

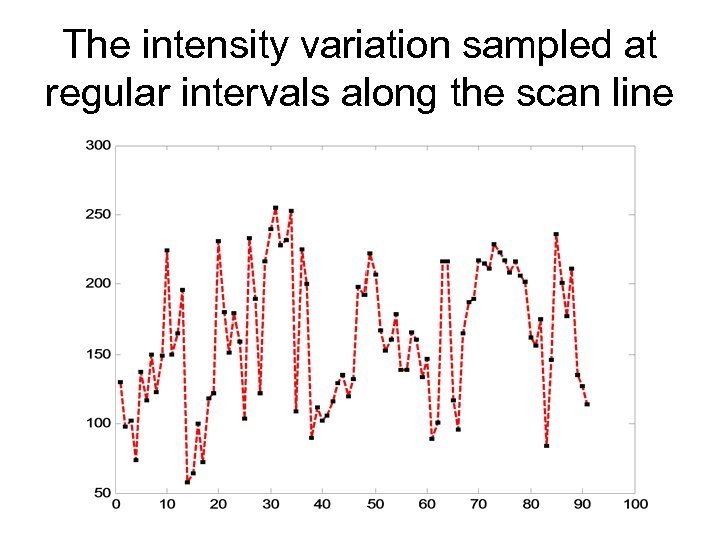

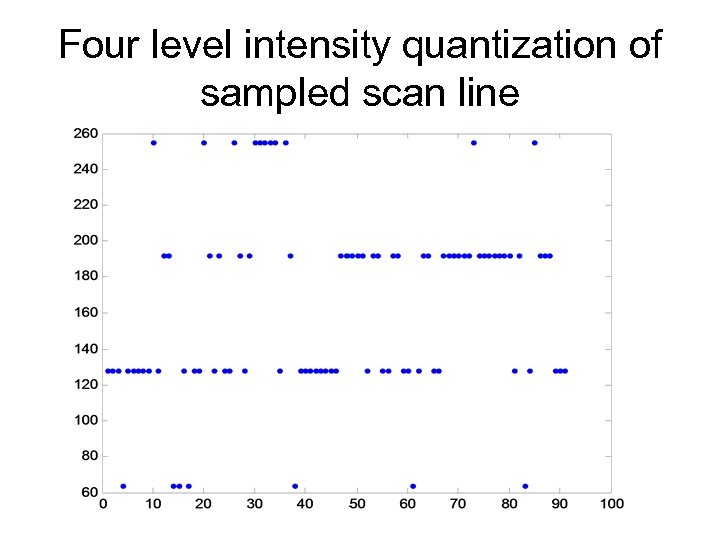

Sampling and Quantization

• Consider a gray-scale image.
• We can plot the intensity values along a particular line (scan line) of the image.
• To sample this function, we take a few equally spaced points (discrete locations) along the line and record the intensity at each of these sampling points.
• The sampled amplitude values are still continuous.
• The gray-level values must therefore also be converted (quantized) into discrete quantities; this is called quantization.
• In the example, the gray-level range has been divided into 4 levels.
• Each sample is assigned the closest of the 4 discrete gray levels.
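
The two steps can be tried without an image file. The following is a minimal sketch on a made-up scan line: the sinusoidal profile, the sampling step of 16 and the four equally spaced levels are assumptions chosen for illustration. It samples the line at regular intervals and then assigns each sample the closest of the four levels.

```matlab
% Hypothetical scan line: 256 finely spaced intensity values in [0, 255].
x = 0:255;
scanLine = 127.5 + 100 * sin(2 * pi * x / 128);

% Sampling: keep every 16th point along the line.
step    = 16;
sampleX = x(1:step:end);
samples = scanLine(1:step:end);

% Quantization: assign each sample the closest of 4 equally spaced levels.
levels = linspace(0, 255, 4);                       % 0, 85, 170, 255
dist   = abs(bsxfun(@minus, samples(:), levels));   % sample-to-level distances
[~, idx]  = min(dist, [], 2);                       % index of the nearest level
quantized = levels(idx);

maxError = max(abs(quantized(:) - samples(:)));
fprintf('%d samples, max quantization error = %.1f\n', numel(samples), maxError);
```

Plotting `scanLine` against `x` together with `quantized` against `sampleX` shows the staircase effect of coarse quantization.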

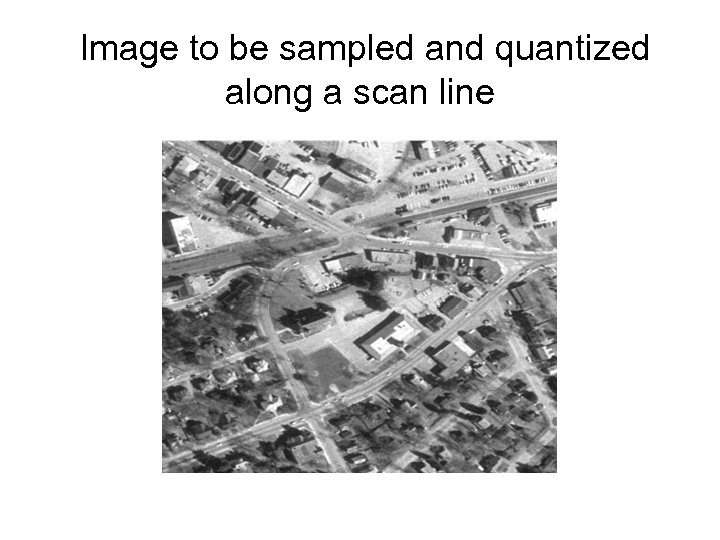

Image to be sampled and quantized along a scan line

The intensity variation sampled at regular intervals along the scan line

Four level intensity quantization of sampled scan line

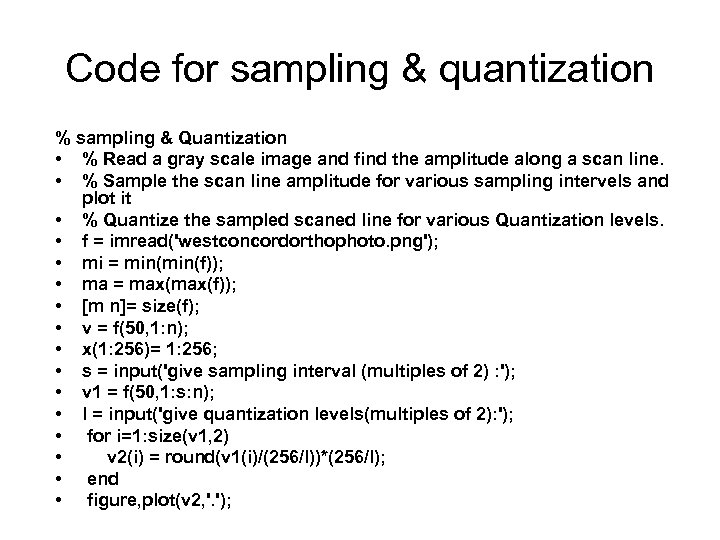

Code for sampling & quantization

    % Sampling and quantization
    % Read a gray-scale image and take the amplitude along a scan line.
    % Sample the scan line at a chosen sampling interval and plot it.
    % Quantize the sampled scan line to a chosen number of levels.
    f = imread('westconcordorthophoto.png');
    mi = min(f(:));
    ma = max(f(:));
    [m, n] = size(f);
    v = f(50, 1:n);                      % full scan line (row 50)
    s = input('give sampling interval (multiples of 2) : ');
    v1 = double(f(50, 1:s:n));           % sampled scan line, as double
    l = input('give quantization levels (multiples of 2): ');
    q = 256 / l;                         % width of one quantization bin
    v2 = floor(v1 / q) * q;              % floor keeps exactly l levels
    figure, plot(v2, '.');

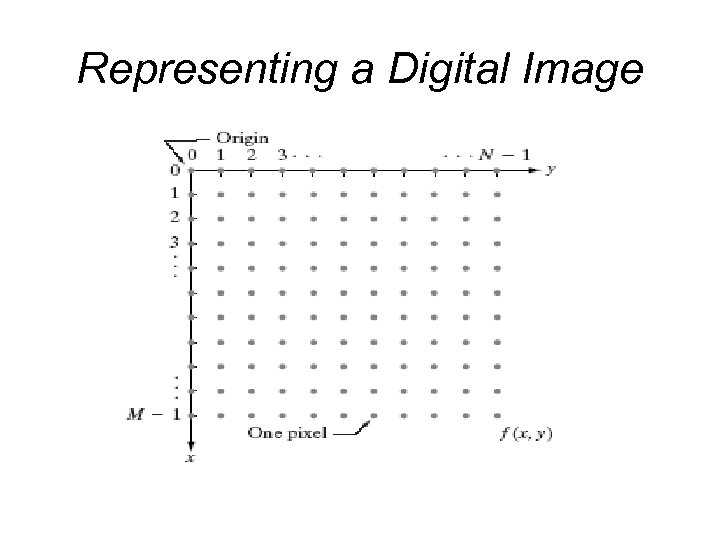

Representing a Digital Image

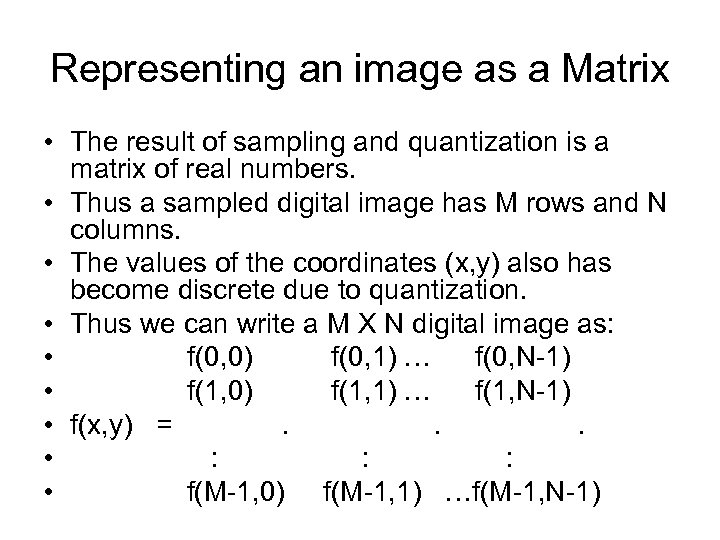

Representing an image as a Matrix

• The result of sampling and quantization is a matrix of real numbers.
• A sampled digital image has M rows and N columns.
• The values of the coordinates (x, y) have become discrete quantities as a result of sampling.
• Thus we can write an M × N digital image as:

$$
f(x, y) =
\begin{bmatrix}
f(0, 0) & f(0, 1) & \cdots & f(0, N-1) \\
f(1, 0) & f(1, 1) & \cdots & f(1, N-1) \\
\vdots & \vdots & \ddots & \vdots \\
f(M-1, 0) & f(M-1, 1) & \cdots & f(M-1, N-1)
\end{bmatrix}
$$
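
One practical point when moving from this notation to MATLAB: the slide's coordinates start at (0, 0), whereas MATLAB indices start at 1, so f(x, y) corresponds to element (x+1, y+1) of the stored matrix. A small sketch, with a made-up 3-by-4 image and an illustrative anonymous function `f`:

```matlab
% A hypothetical 3-by-4 digital image (M = 3 rows, N = 4 columns).
A = uint8([10  20  30  40;
           50  60  70  80;
           90 100 110 120]);
[M, N] = size(A);

% Zero-based accessor matching the notation f(x, y),
% with x = 0..M-1 (row) and y = 0..N-1 (column).
f = @(x, y) A(x + 1, y + 1);

topLeft     = f(0, 0);          % same element as A(1, 1)
bottomRight = f(M - 1, N - 1);  % same element as A(M, N)

fprintf('f(0,0) = %d, f(M-1,N-1) = %d\n', topLeft, bottomRight);
```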

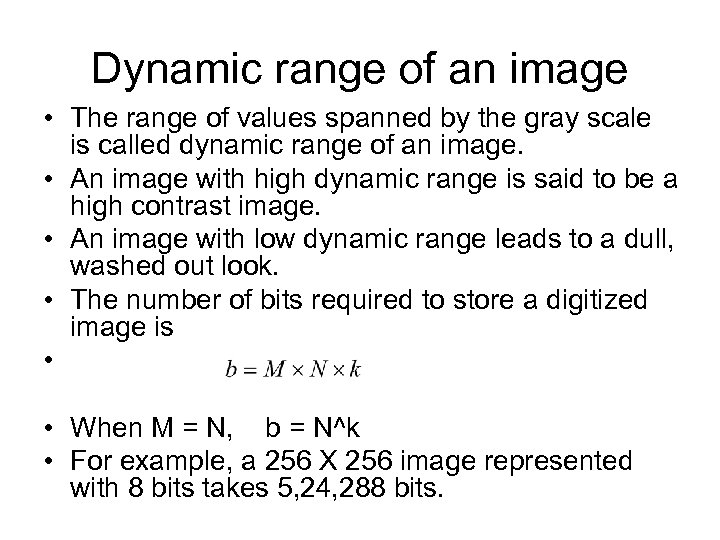

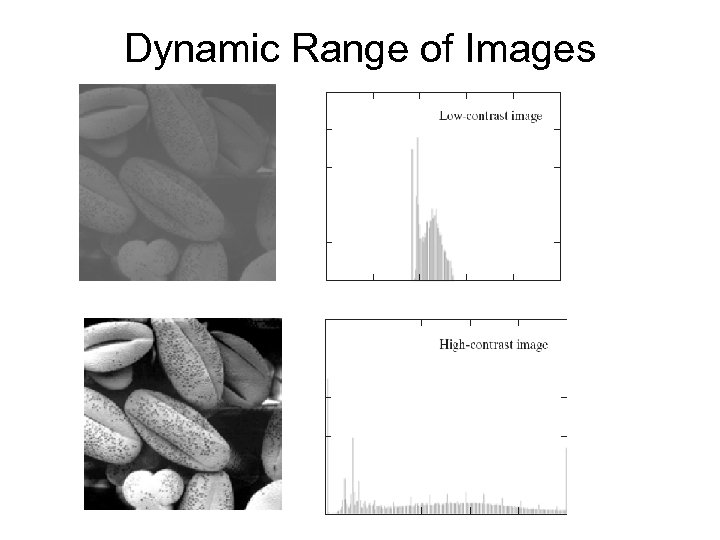

Dynamic range of an image

• The range of values spanned by the gray scale is called the dynamic range of an image.
• An image with high dynamic range is said to be a high-contrast image.
• An image with low dynamic range has a dull, washed-out look.
• The number of bits required to store a digitized M × N image with k bits per pixel (L = 2^k gray levels) is b = M × N × k.
• When M = N, b = N^2 k.
• For example, a 256 × 256 image represented with 8 bits per pixel takes 524,288 bits.
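
As a quick check of the storage example, the following sketch assumes the standard relation b = M × N × k, with L = 2^k gray levels. The variable names are chosen here for illustration.

```matlab
% Storage needed for an M x N image with k bits per pixel.
M = 256;            % number of rows
N = 256;            % number of columns
k = 8;              % bits per pixel
L = 2^k;            % number of gray levels
b = M * N * k;      % total number of bits
fprintf('%d gray levels, %d bits (%d bytes)\n', L, b, b / 8);
```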

Dynamic Range of Images

Spatial and Gray-Level Resolution

• Sampling determines the spatial resolution of an image.
• Spatial resolution is the smallest discernible detail in an image; it is commonly measured as the largest number of discernible line pairs per unit distance.
• Gray-level resolution is the smallest discernible change in gray level.

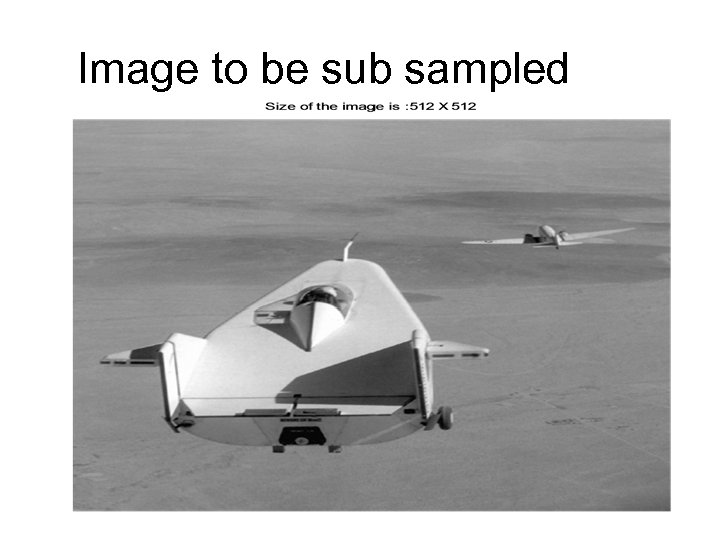

Image to be subsampled

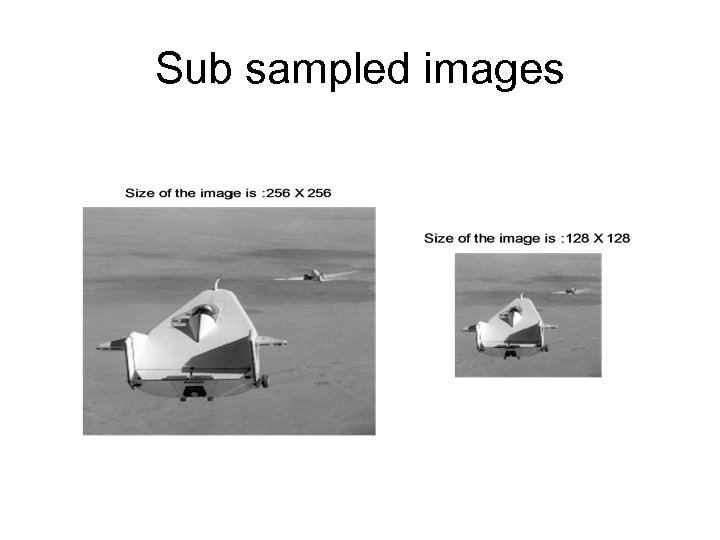

Subsampled images

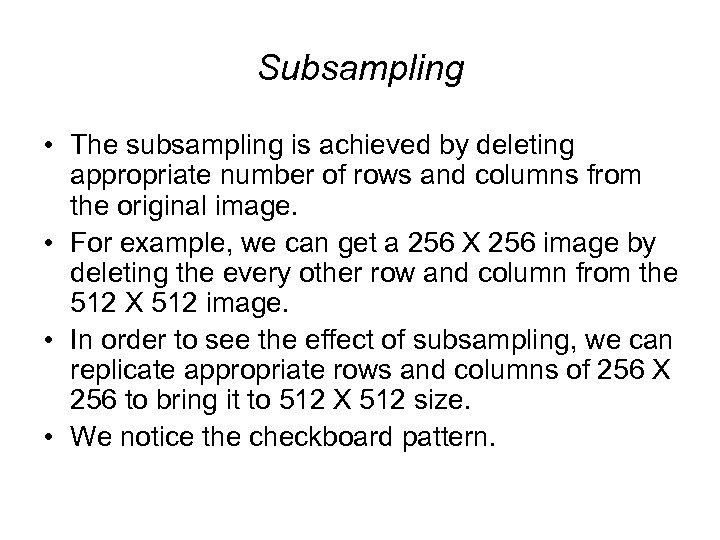

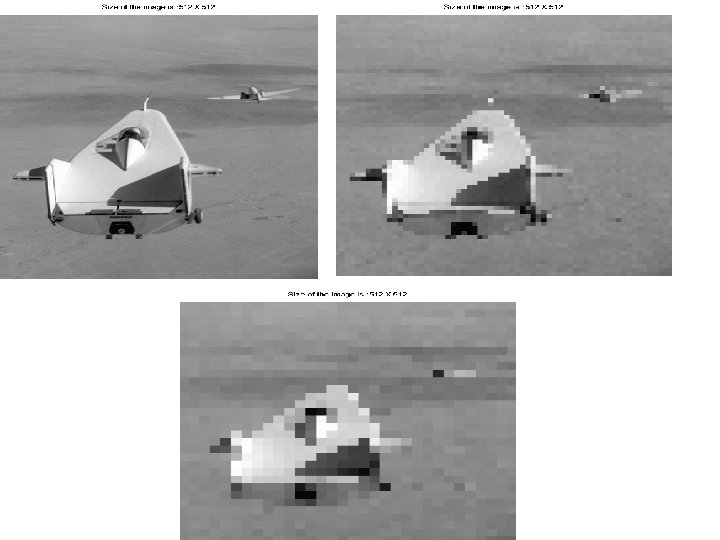

Subsampling

• Subsampling is achieved by deleting an appropriate number of rows and columns from the original image.
• For example, we can get a 256 × 256 image by deleting every other row and column from a 512 × 512 image.
• To see the effect of subsampling, we can replicate rows and columns of the 256 × 256 image to bring it back to 512 × 512.
• The enlarged image then shows a checkerboard pattern, which becomes more pronounced as the sampling gets coarser.
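
The delete-then-replicate procedure can be sketched on a small matrix. Here `magic(8)` is only a stand-in for an image, and `kron` is one possible way to replicate pixels; neither comes from the slides.

```matlab
% Subsample by 2, then replicate pixels to return to the original size.
f = magic(8);                 % stand-in for an 8 x 8 image
g = f(1:2:end, 1:2:end);      % delete every other row and column -> 4 x 4
h = kron(g, ones(2));         % replicate every pixel into a 2 x 2 block -> 8 x 8
disp(size(g));
disp(size(h));
```

Each 2 × 2 block of `h` holds a single value from `g`, which is what produces the blocky checkerboard look on a real image.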

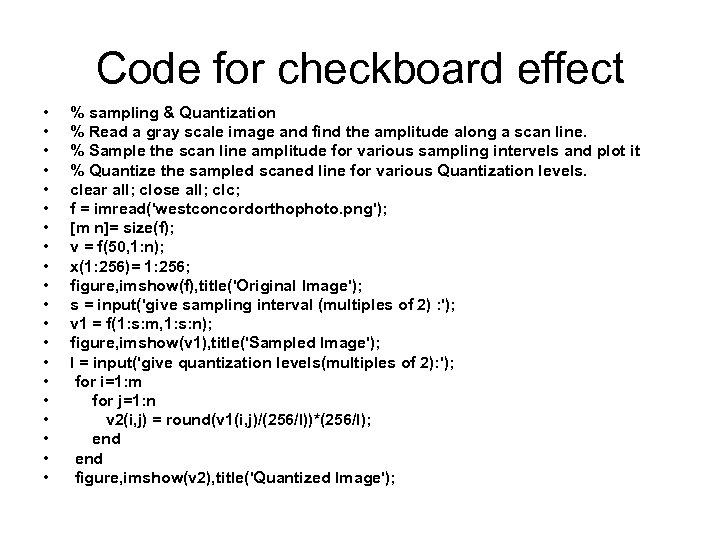

Code for checkerboard effect

    % Subsample a grayscale image, then quantize the subsampled result.
    clear all; close all; clc;
    f = imread('westconcordorthophoto.png');
    [m, n] = size(f);
    figure, imshow(f), title('Original Image');
    s = input('give sampling interval (multiples of 2): ');
    v1 = f(1:s:m, 1:s:n);                         % keep every s-th row and column
    figure, imshow(v1), title('Sampled Image');
    l = input('give quantization levels (multiples of 2): ');
    step = 256 / l;                               % width of one quantization bin
    v2 = uint8(floor(double(v1) / step) * step);  % quantize in double, store as uint8
    figure, imshow(v2), title('Quantized Image');

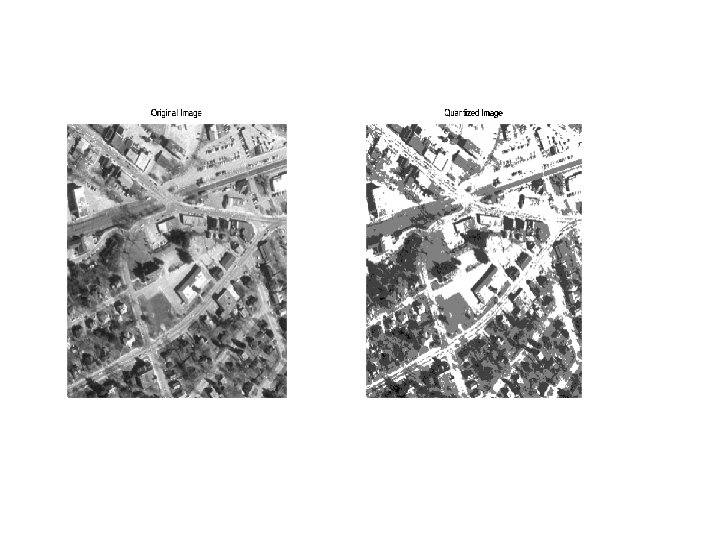

False contouring

• We can also keep the number of samples constant and reduce the number of gray levels from 256 to 128, 64, and so on.
• With too few gray levels, ridge-like structures appear in areas of smooth gray levels; this effect is called false contouring. It starts as an almost imperceptible set of very fine ridges.
• It is prominent in an image displayed with 16 or fewer gray levels.
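
A smooth ramp makes the effect easy to reproduce. The sketch below is an illustration under assumed values: it quantizes a synthetic 256-level ramp down to 16 levels, so the smooth gradient turns into 16 flat bands with visible edges between them.

```matlab
% Quantize a smooth gray-level ramp to a small number of levels.
ramp = repmat(0:255, 64, 1);        % 64 x 256 image, one gray level per column
levels = 16;                        % number of gray levels to keep
step = 256 / levels;                % width of one quantization bin
q = floor(ramp / step) * step;      % each pixel drops to the bottom of its bin
fprintf('%d distinct gray levels remain\n', numel(unique(q)));
% imshow(uint8(q)) would display the bands (needs a display).
```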

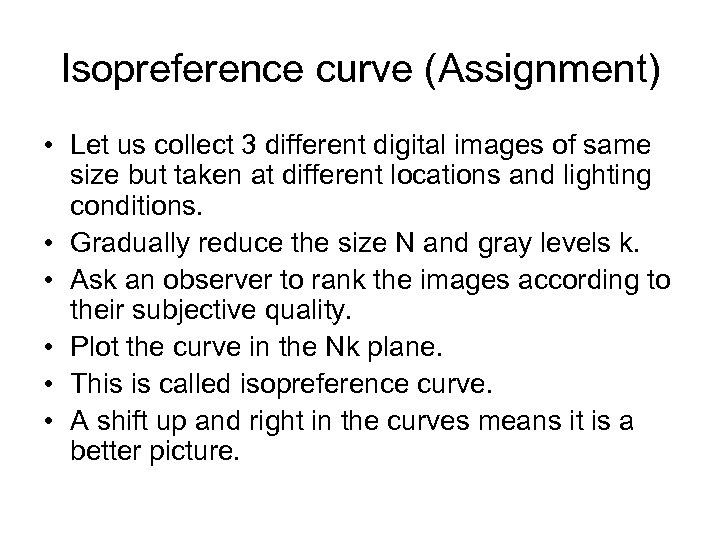

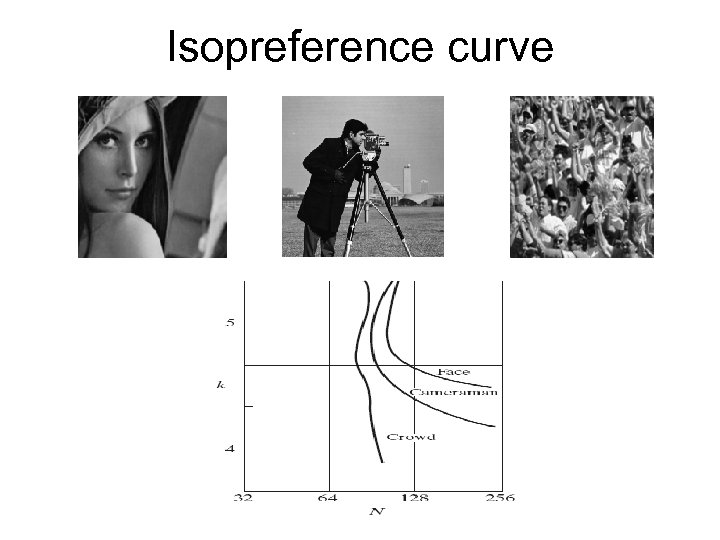

Isopreference curve (Assignment)

• Collect 3 different digital images of the same size, taken at different locations and under different lighting conditions.
• Gradually reduce the size N and the gray-level resolution k.
• Ask an observer to rank the images according to their subjective quality.
• Plot the results in the Nk-plane; each curve joins the points (N, k) that the observer rated as equal in quality.
• Such a curve is called an isopreference curve.
• A curve shifted up and to the right corresponds to better picture quality.

Isopreference curve

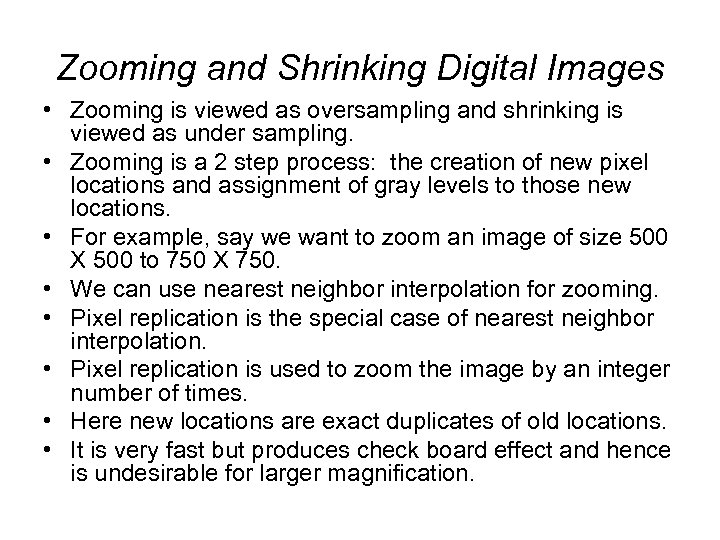

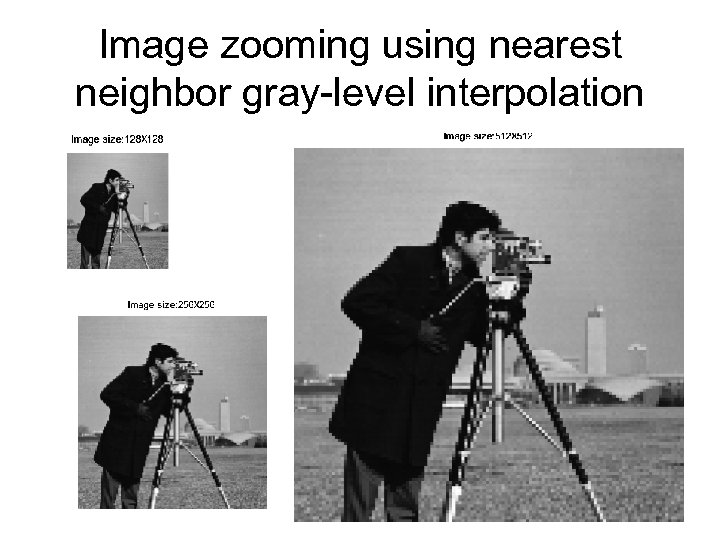

Zooming and Shrinking Digital Images

• Zooming can be viewed as oversampling and shrinking as undersampling.
• Zooming is a two-step process: creating new pixel locations and assigning gray levels to those locations.
• For example, say we want to zoom an image of size 500 × 500 to 750 × 750. We lay an imaginary 750 × 750 grid over the original image and assign each grid point the gray level of its closest original pixel.
• This method is called nearest neighbor interpolation.
• Pixel replication is a special case of nearest neighbor interpolation.
• Pixel replication is used to zoom the image by an integer factor.
• Here the new pixels are exact duplicates of existing pixels.
• It is very fast but produces a checkerboard effect, and hence is undesirable at larger magnifications.
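
Nearest neighbor zooming by a non-integer factor can be sketched as follows. The example scales a 4 × 4 stand-in matrix to 6 × 6, the same 1.5 factor as 500 → 750. The mapping rule (the centre of each new pixel is mapped back onto the original grid and the pixel containing it is chosen) is one common convention, assumed here rather than taken from the slides.

```matlab
% Nearest neighbor zoom of an m x n image to M2 x N2.
f = magic(4);                 % stand-in for a 4 x 4 image
[m, n] = size(f);
M2 = 6; N2 = 6;               % target size (zoom factor 1.5)

% Map the centre of each new pixel onto the original grid and take the
% original pixel that contains it.
r = floor(((1:M2) - 0.5) * m / M2) + 1;   % source row for every new row
c = floor(((1:N2) - 0.5) * n / N2) + 1;   % source column for every new column
g = f(r, c);                  % every new pixel copies its nearest original pixel
disp(r);
```

With an integer factor the same rule reduces to pixel replication, since every source index is then repeated the same number of times.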

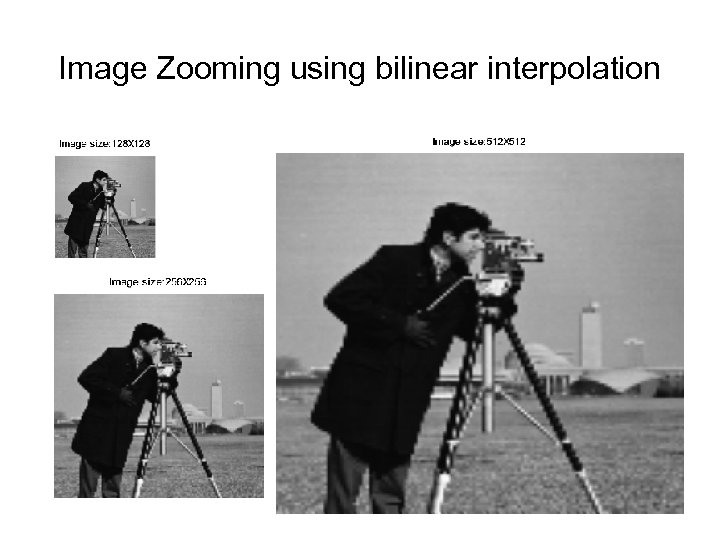

Bilinear interpolation

• A smoother result is obtained with bilinear interpolation, which uses the four nearest neighbors of a point.
• Let (x', y') denote the coordinates of a point in the zoomed image and let v(x', y') denote the gray level assigned to it.
• The assigned gray level is given by $v(x', y') = a x' + b y' + c x' y' + d$.
• Here the four coefficients a, b, c and d are determined from the four equations in four unknowns that can be written using the four nearest neighbors of the point (x', y'). (Assignment)
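
The four equations in four unknowns can be set up and solved numerically. In this sketch the neighbor coordinates and gray levels are made-up values; each neighbor contributes one equation of the form a x + b y + c x y + d = v.

```matlab
% Four nearest neighbours of the point (xp, yp) and their gray levels.
x0 = 2; x1 = 3; y0 = 5; y1 = 6;      % neighbour coordinates (illustrative)
v00 = 10; v01 = 20;                  % gray levels at (x0, y0) and (x0, y1)
v10 = 30; v11 = 60;                  % gray levels at (x1, y0) and (x1, y1)

% One row per neighbour: [x  y  x*y  1] * [a; b; c; d] = v
A = [x0 y0 x0*y0 1;
     x0 y1 x0*y1 1;
     x1 y0 x1*y0 1;
     x1 y1 x1*y1 1];
coef = A \ [v00; v01; v10; v11];     % solves for [a; b; c; d]

xp = 2.5; yp = 5.5;                  % point to interpolate (centre of the cell)
v = [xp yp xp*yp 1] * coef;          % v(x', y') = a*x' + b*y' + c*x'*y' + d
fprintf('v(%.1f, %.1f) = %.4f\n', xp, yp, v);
```

At the centre of the cell the result is the average of the four neighbors, which is a handy sanity check.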

Shrinking an image

• To shrink an image by one-half, we delete every other row and column.
• To shrink an image by a non-integer factor, we expand the grid to fit over the original image, do gray-level nearest neighbor or bilinear interpolation, and then shrink the grid back to its originally specified size. (Assignment)
• It is a good idea to blur an image slightly before shrinking it, which reduces aliasing.
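
One simple way to combine blurring with shrinking by one-half is to average each 2 × 2 block before dropping rows and columns. The sketch below assumes a 2 × 2 averaging kernel as the blur; other blur kernels would work as well.

```matlab
% Blur with a 2 x 2 average, then keep every other row and column.
f = magic(8);                        % stand-in for an 8 x 8 image
k = ones(2) / 4;                     % 2 x 2 averaging (blur) kernel
blurred = conv2(f, k, 'valid');      % 7 x 7: entry (i, j) is the mean of f(i:i+1, j:j+1)
g = blurred(1:2:end, 1:2:end);       % 4 x 4 result: one mean per 2 x 2 block
disp(size(g));
```

Compared with plain deletion, every output pixel now depends on all four pixels of its block instead of only one.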

Image zooming using nearest neighbor gray-level interpolation

Image Zooming using bilinear interpolation

    % Read a subsampled image and magnify it by row and column replication.
    clear all; close all; clc;
    a = imread('cameraman.tif');
    a = im2double(a);
    sp = input('enter how many times the image is to be doubled: ');
    disp(['your image will be magnified by ', num2str(2^sp), ' times']);
    figure, imshow(a);
    b = a;
    for k = 1:sp                      % double the size once per pass
        [m, n] = size(b);
        c = zeros(2*m, 2*n);
        c(1:2:end, 1:2:end) = b;      % original pixels go to odd rows and columns
        c(2:2:end, 1:2:end) = b;      % replicate each row into the row below
        c(1:2:end, 2:2:end) = b;      % replicate each column into the column to the right
        c(2:2:end, 2:2:end) = b;      % fill the remaining diagonal positions
        b = c;
    end
    figure, imshow(b);

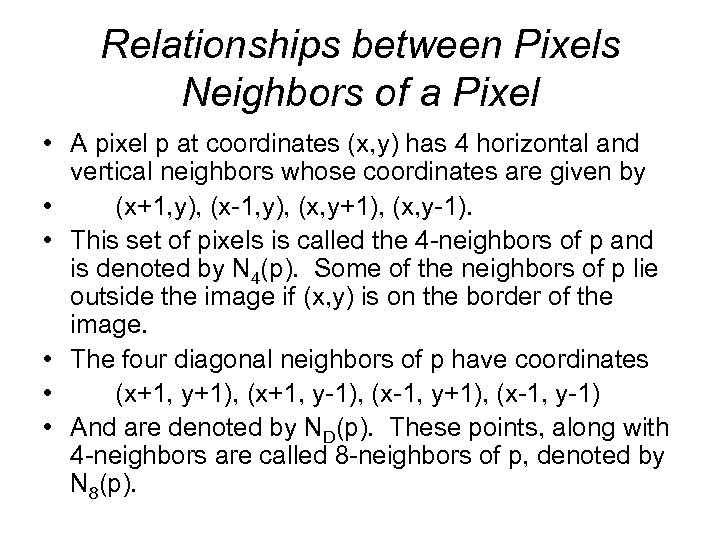

Relationships between Pixels: Neighbors of a Pixel

• A pixel p at coordinates (x, y) has 4 horizontal and vertical neighbors whose coordinates are given by (x+1, y), (x-1, y), (x, y+1), (x, y-1).
• This set of pixels is called the 4-neighbors of p and is denoted by N4(p). Some of the neighbors of p lie outside the image if (x, y) is on the border of the image.
• The four diagonal neighbors of p have coordinates (x+1, y+1), (x+1, y-1), (x-1, y+1), (x-1, y-1) and are denoted by ND(p).
• These points, together with the 4-neighbors, are called the 8-neighbors of p, denoted by N8(p).
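
The neighbor sets are easy to enumerate in code. The sketch below uses 1-based MATLAB coordinates and an assumed 4 × 5 image, and drops the neighbors that fall outside the image for a border pixel.

```matlab
% Neighbours of pixel p = (x, y) in an M x N image (1-based coordinates).
M = 4; N = 5;                 % image size (illustrative)
x = 1; y = 3;                 % a pixel on the top border

n4 = [x+1 y;   x-1 y;   x y+1;   x y-1];          % N4(p)
nd = [x+1 y+1; x+1 y-1; x-1 y+1; x-1 y-1];        % ND(p)
n8 = [n4; nd];                                    % N8(p) = N4(p) together with ND(p)

% Keep only the neighbours that lie inside the image.
inside = n8(:, 1) >= 1 & n8(:, 1) <= M & n8(:, 2) >= 1 & n8(:, 2) <= N;
n8 = n8(inside, :);
disp(n8);
```

For this border pixel, three of the eight candidates have row index 0 and are discarded, leaving five valid neighbors.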

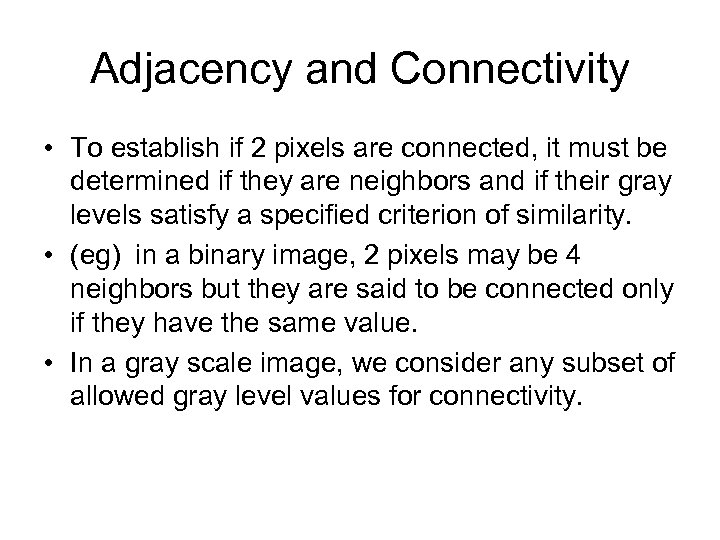

Adjacency and Connectivity

• To establish whether 2 pixels are connected, it must be determined whether they are neighbors and whether their gray levels satisfy a specified criterion of similarity.
• For example, in a binary image, 2 pixels may be 4-neighbors, but they are said to be connected only if they have the same value.
• In a grayscale image, the set V of gray-level values used to define connectivity can be any subset of the allowed gray levels.

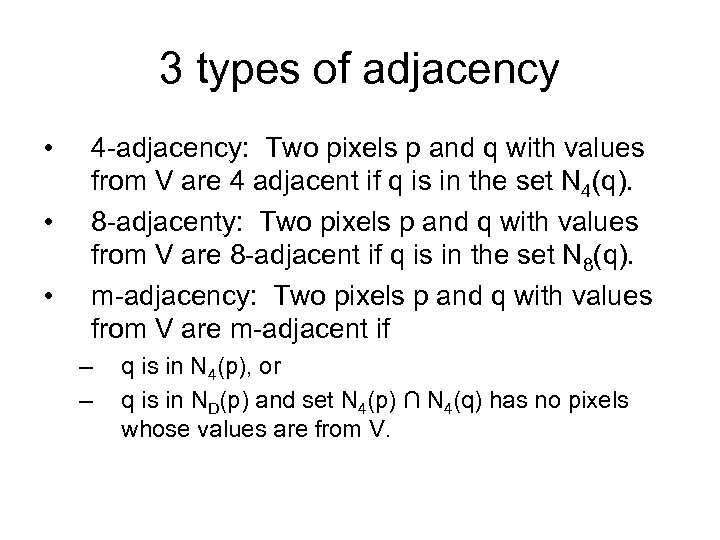

3 types of adjacency

• 4-adjacency: Two pixels p and q with values from V are 4-adjacent if q is in the set N4(p).
• 8-adjacency: Two pixels p and q with values from V are 8-adjacent if q is in the set N8(p).
• m-adjacency: Two pixels p and q with values from V are m-adjacent if
  – q is in N4(p), or
  – q is in ND(p) and the set N4(p) ∩ N4(q) has no pixels whose values are from V.
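
The m-adjacency rule can be checked on a small binary image. In this sketch the image, the set V = {1}, and the helper name `isMAdj` are illustrative choices. It uses the definition that q is m-adjacent to p if q is in N4(p), or if q is in ND(p) and N4(p) ∩ N4(q) contains no pixel with a value from V.

```matlab
% m-adjacency test on a small binary image, with V = {1}.
img = [0 1 1;
       0 1 0;
       0 0 1];
V = 1;                        % values that define adjacency
inV = ismember(img, V);       % logical mask of pixels whose value is in V

% Pixels are given as [row col]. For diagonal neighbours p and q, the set
% N4(p) intersect N4(q) is exactly {(p row, q col), (q row, p col)}.
isMAdj = @(p, q) inV(p(1), p(2)) && inV(q(1), q(2)) && ...
    (sum(abs(p - q)) == 1 || ...
     (all(abs(p - q) == 1) && ~inV(p(1), q(2)) && ~inV(q(1), p(2))));

disp(isMAdj([2 2], [3 3]));   % diagonal pair, shared 4-neighbours are both 0
disp(isMAdj([1 3], [2 2]));   % diagonal pair, shared 4-neighbour (1, 2) is 1
```

The second pair is 8-adjacent but not m-adjacent, because the two pixels are already linked through the 4-neighbor at (1, 2); this is how m-adjacency removes the ambiguous double paths of 8-adjacency.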

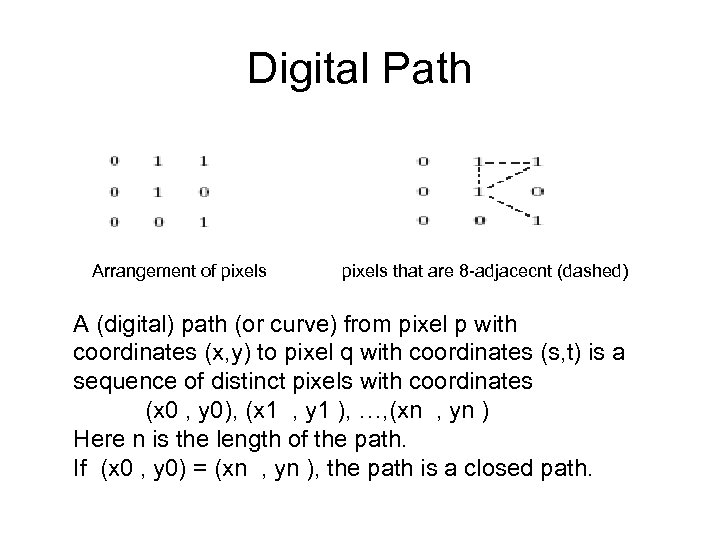

Digital Path

• A (digital) path (or curve) from pixel p with coordinates (x, y) to pixel q with coordinates (s, t) is a sequence of distinct pixels with coordinates $(x_0, y_0), (x_1, y_1), \ldots, (x_n, y_n)$, where $(x_0, y_0) = (x, y)$, $(x_n, y_n) = (s, t)$, and pixels $(x_i, y_i)$ and $(x_{i-1}, y_{i-1})$ are adjacent for $1 \le i \le n$.
• Here n is the length of the path.
• If $(x_0, y_0) = (x_n, y_n)$, the path is a closed path.
• Figure: arrangement of pixels that are 8-adjacent (shown dashed).

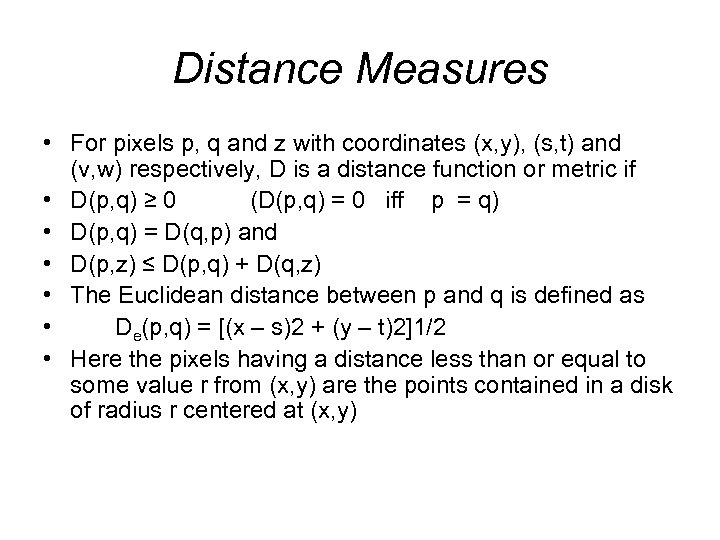

Distance Measures

• For pixels p, q and z with coordinates (x, y), (s, t) and (v, w) respectively, D is a distance function or metric if
  – D(p, q) ≥ 0 (D(p, q) = 0 iff p = q),
  – D(p, q) = D(q, p), and
  – D(p, z) ≤ D(p, q) + D(q, z).
• The Euclidean distance between p and q is defined as $D_e(p, q) = \left[(x - s)^2 + (y - t)^2\right]^{1/2}$.
• The pixels at a Euclidean distance less than or equal to some value r from (x, y) are the points contained in a disk of radius r centered at (x, y).
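
The Euclidean distance and the disk it defines can be computed directly. The pixel coordinates and the radius below are illustrative values.

```matlab
% Euclidean distance between two pixels p = (x, y) and q = (s, t).
p = [2 3]; q = [5 1];
De = sqrt(sum((p - q).^2));          % [(x - s)^2 + (y - t)^2]^(1/2)
fprintf('De(p, q) = %.4f\n', De);

% Pixels within Euclidean distance r of a centre pixel form a disk.
r = 2;
[X, Y] = meshgrid(-r:r, -r:r);       % coordinate offsets from the centre
disk = sqrt(X.^2 + Y.^2) <= r;       % logical mask of the disk
disp(disk);
```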

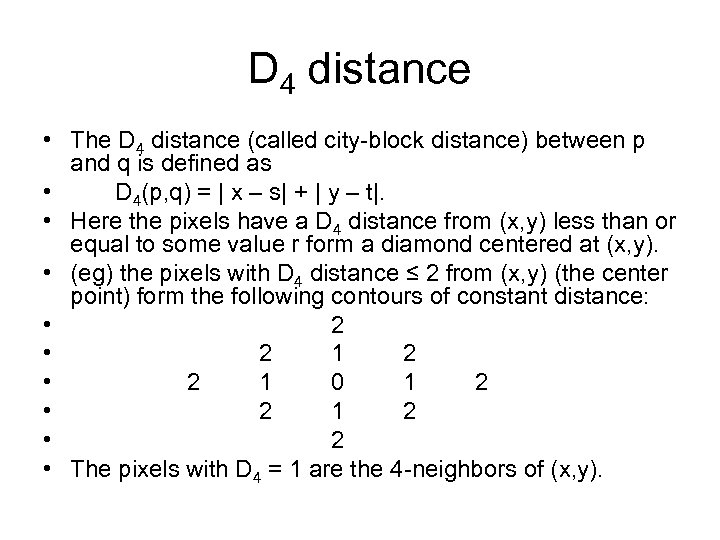

D4 distance

• The D4 distance (called city-block distance) between p and q is defined as D4(p, q) = |x - s| + |y - t|.
• The pixels with a D4 distance from (x, y) less than or equal to some value r form a diamond centered at (x, y).
• For example, the pixels with D4 distance ≤ 2 from (x, y) (the center point) form the following contours of constant distance:

            2
          2 1 2
        2 1 0 1 2
          2 1 2
            2

• The pixels with D4 = 1 are the 4-neighbors of (x, y).
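
The diamond-shaped contours can be generated numerically. This sketch builds a map of city-block distances |x - s| + |y - t| from the centre of a 5 × 5 window; the window size is an arbitrary choice.

```matlab
% City-block (D4) distance from the centre of a 5 x 5 window.
[X, Y] = meshgrid(-2:2, -2:2);       % coordinate offsets from the centre pixel
D4 = abs(X) + abs(Y);                % |x - s| + |y - t|
disp(D4);
diamond = D4 <= 2;                   % pixels with D4 distance <= 2
fprintf('%d pixels with D4 <= 2, %d with D4 = 1\n', nnz(diamond), nnz(D4 == 1));
```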

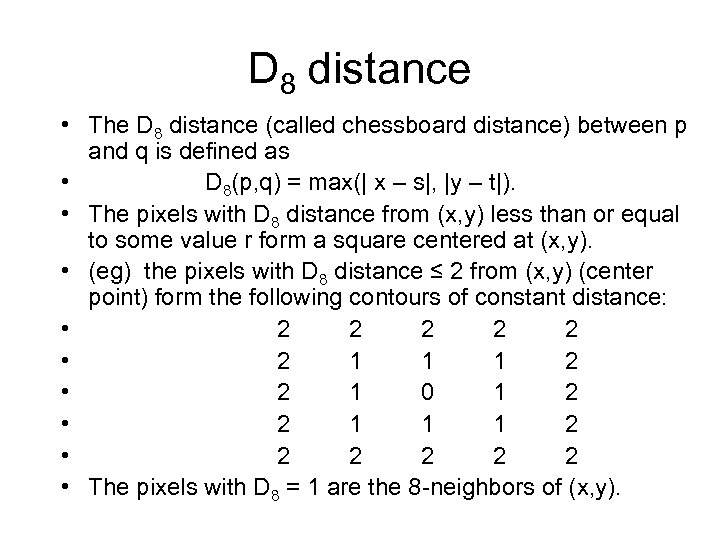

D8 distance

• The D8 distance (called chessboard distance) between p and q is defined as D8(p, q) = max(|x - s|, |y - t|).
• The pixels with a D8 distance from (x, y) less than or equal to some value r form a square centered at (x, y).
• For example, the pixels with D8 distance ≤ 2 from (x, y) (the center point) form the following contours of constant distance:

        2 2 2 2 2
        2 1 1 1 2
        2 1 0 1 2
        2 1 1 1 2
        2 2 2 2 2

• The pixels with D8 = 1 are the 8-neighbors of (x, y).
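
The square contours follow from taking the larger of the two coordinate differences. A minimal sketch on a 5 × 5 window (the window size is an arbitrary choice):

```matlab
% Chessboard (D8) distance from the centre of a 5 x 5 window.
[X, Y] = meshgrid(-2:2, -2:2);       % coordinate offsets from the centre pixel
D8 = max(abs(X), abs(Y));            % max(|x - s|, |y - t|)
disp(D8);
fprintf('%d pixels with D8 <= 2, %d with D8 = 1\n', nnz(D8 <= 2), nnz(D8 == 1));
```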
