- Number of slides: 78

What is a Natural Language and How to Describe It?
Meaning-Text Approaches in Contrast with Generative Approaches

Sylvain Kahane, Lattice, Université Paris 7
Mexico, February 19-24, 2001

Sylvain Kahane, Mexico, February 2001

Meaning-Text Theory
- Foundation: research in machine translation in Moscow (Zolkovskij & Mel'cuk 1965, 1967)
- Main references:
  - Mel'cuk, 1988, Dependency Syntax: Theory and Practice, SUNY Press
  - Mel'cuk et al., 1984, 1988, 1992, 1999, Dictionnaire explicatif et combinatoire du français contemporain, Vol. 1, 2, 3, 4
  - Mel'cuk, 1993-2000, Cours de morphologie générale, 5 vols.

Contents
1. MTT's Postulates
2. MTT's Representations
3. Correspondence Modules
4. Comparison with Generative/Unification Grammars

1. MTT's Postulates

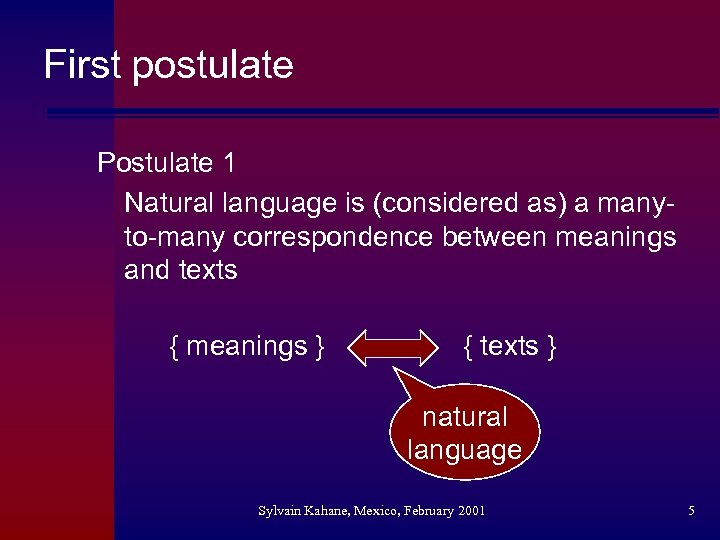

First postulate

Postulate 1: A natural language is (considered as) a many-to-many correspondence between meanings and texts.

(Figure: the set {meanings} and the set {texts}, related by the correspondence labeled "natural language".)

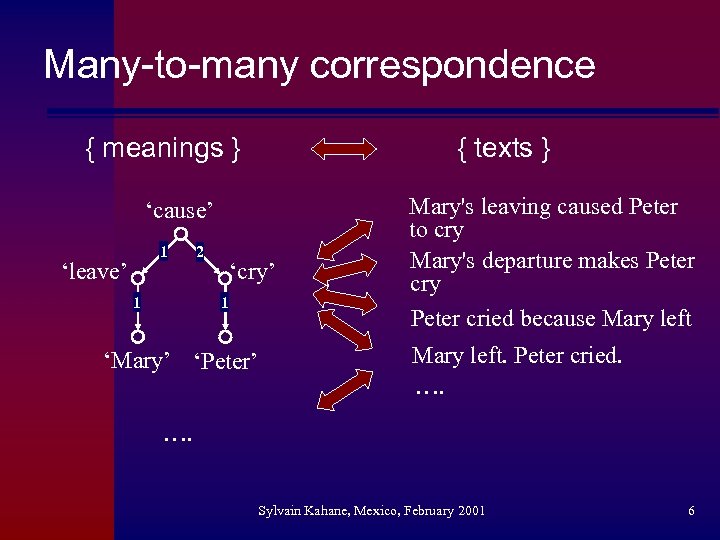

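Many-to-many correspondence

(Figure: one element of {meanings}, the semantic graph in which ‘cause’ has ‘leave’ as argument 1 and ‘cry’ as argument 2, with ‘Mary’ as argument 1 of ‘leave’ and ‘Peter’ as argument 1 of ‘cry’, corresponds to several elements of {texts}:)
- Mary's leaving caused Peter to cry.
- Mary's departure makes Peter cry.
- Peter cried because Mary left.
- Peter cried.
- …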

Comparison with Chomsky 1957 (1)
- Chomsky 1957: describing a natural language L = describing the set of acceptable sentences of L
- Formal language = set of strings
- A natural language can never be modeled by a formal language in this sense

Comparison with Chomsky 1957 (2)
- A sentence is not a string: a sentence is a sign with a meaning (signifié) and a form (signifiant)
- A correspondence between two sets A and B = a set of pairs of corresponding elements
- The correspondence between meanings and texts = the set of pairs made of a meaning and a corresponding text = the set of sentences
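
The set-of-pairs view of a correspondence can be made concrete in a few lines. This is only an illustrative sketch under stated assumptions: meanings are abbreviated as strings such as `cause(leave(Mary), cry(Peter))` (real Sem. Rs are graphs), the pair (`cry(Peter)`, "Peter cried") is added for illustration, and the names `CORRESPONDENCE`, `texts_for` and `meanings_for` are hypothetical. It shows one meaning paired with several texts and one text paired with several meanings.

```python
# A correspondence between meanings and texts, stored as a set of (meaning, text) pairs.
# Meanings are abbreviated as strings (an assumption: real Sem. Rs are graphs).
M_CAUSE = "cause(leave(Mary), cry(Peter))"
M_CRY = "cry(Peter)"  # hypothetical second meaning, added for illustration

CORRESPONDENCE = {
    (M_CAUSE, "Mary's leaving caused Peter to cry"),
    (M_CAUSE, "Mary's departure makes Peter cry"),
    (M_CAUSE, "Peter cried because Mary left"),
    (M_CAUSE, "Peter cried"),
    (M_CRY, "Peter cried"),
}


def texts_for(corr, meaning):
    """Meaning-to-text direction: every text paired with `meaning`."""
    return sorted(text for m, text in corr if m == meaning)


def meanings_for(corr, text):
    """Text-to-meaning direction: every meaning paired with `text`."""
    return sorted(m for m, t in corr if t == text)


print(texts_for(CORRESPONDENCE, M_CAUSE))           # four texts for one meaning
print(meanings_for(CORRESPONDENCE, "Peter cried"))  # two meanings for one text
```

Read this way, `texts_for` corresponds to synthesis and `meanings_for` to analysis; both are just lookups in the same set of pairs.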

Second postulate

Postulate 2: The Meaning-Text correspondence is described by a formal device that simulates the linguistic activity of a native speaker.
- A speaker speaks = transforms what he wants to say (a meaning) into what he says (a text)
- The correspondence is bidirectional, but the direction from meanings to texts must be privileged
- Grammar rules = correspondence rules

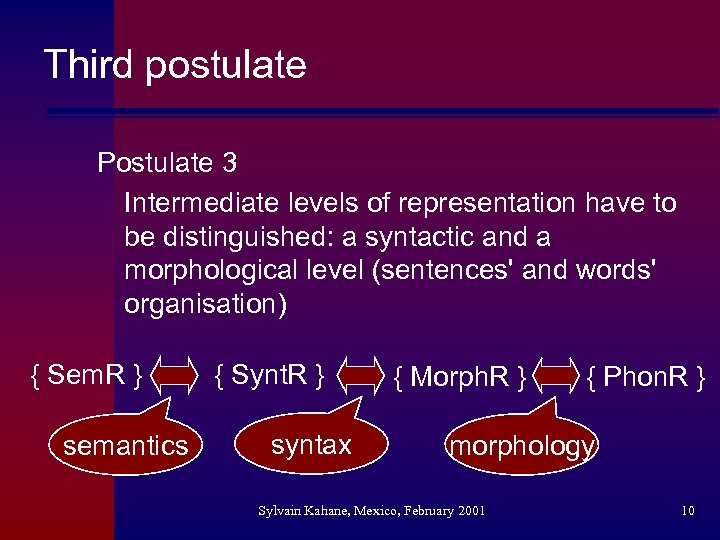

Third postulate

Postulate 3: Intermediate levels of representation must be distinguished: a syntactic level and a morphological level (the organization of sentences and the organization of words, respectively).

(Figure: {Sem. R} ↔ semantics ↔ {Synt. R} ↔ syntax ↔ {Morph. R} ↔ morphology ↔ {Phon. R})

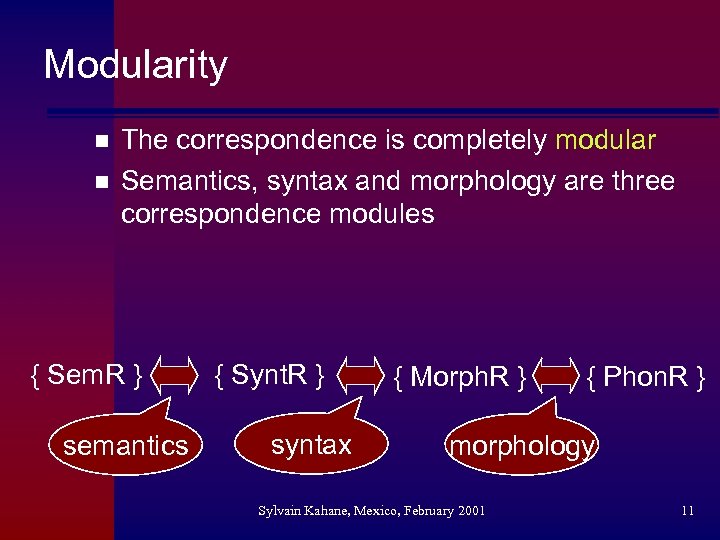

Modularity
- The correspondence is completely modular
- Semantics, syntax and morphology are three correspondence modules

(Figure: {Sem. R} ↔ semantics ↔ {Synt. R} ↔ syntax ↔ {Morph. R} ↔ morphology ↔ {Phon. R})
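
One way to read "completely modular" is that the whole Meaning-Text correspondence is the composition of the three module correspondences. A minimal sketch, assuming each module is given as a finite set of (input, output) pairs; the labels such as `SyntR-LAST` are hypothetical placeholders for real representations, and the Phon. R is approximated by the written text.

```python
def compose(r, s):
    """Relational composition: (a, c) whenever (a, b) is in r and (b, c) is in s."""
    return {(a, c) for (a, b) in r for (b2, c) in s if b == b2}


def invert(r):
    """Read a correspondence in the other direction (text to meaning)."""
    return {(b, a) for (a, b) in r}


# Hypothetical labels standing in for real Sem. R, Synt. R and Morph. R structures.
SEMANTICS = {("SemR-nap", "SyntR-LAST"), ("SemR-nap", "SyntR-NAP")}
SYNTAX = {("SyntR-LAST", "MorphR-LAST"), ("SyntR-NAP", "MorphR-NAP")}
MORPHOLOGY = {
    ("MorphR-LAST", "Mary's nap lasted 2 hours"),
    ("MorphR-NAP", "Mary napped during 2 hours"),
}

# Meaning-to-text = semantics, then syntax, then morphology.
MEANING_TEXT = compose(compose(SEMANTICS, SYNTAX), MORPHOLOGY)
TEXT_MEANING = invert(MEANING_TEXT)
print(sorted(MEANING_TEXT))
```

Because each module is a relation rather than a function, one Sem. R reaches several texts through different Synt. Rs, and inverting the composed relation gives the analysis direction.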

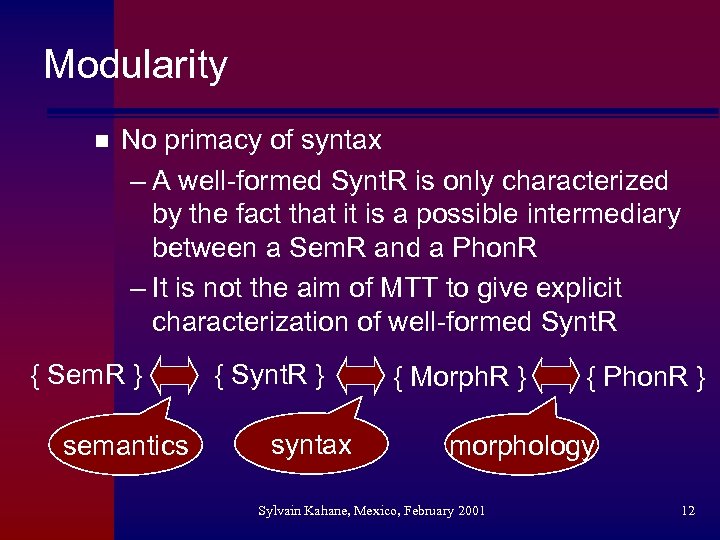

Modularity
- No primacy of syntax
  - A well-formed Synt. R is characterized only by the fact that it is a possible intermediary between a Sem. R and a Phon. R
  - It is not the aim of MTT to give an explicit characterization of well-formed Synt. Rs

(Figure: {Sem. R} ↔ semantics ↔ {Synt. R} ↔ syntax ↔ {Morph. R} ↔ morphology ↔ {Phon. R})

Conclusion of Part 1
- MTT's postulates are more or less accepted by the whole linguistic community. The first sentences of the presentation of the Minimalist Program (MP) in Brody 1995: "It is a truism that grammar relates sound and meaning. Theories that account for this relationship with reasonable success postulate representation levels corresponding to sound and meaning and assume that the relationship is mediated through complex representations that are composed of smaller units."
- Main difference of view: describing a natural language as a correspondence

2. MTT's Representations

Geometry
- Sem. R = graph of predicate-argument relations
- Synt. R = dependency tree
- Morph. R = string of words
- Phon. R = string of phonemes
- → Semantic module: hierarchization (from graph to tree)
- → Syntactic module: linearization (from tree to string)

Semantic representation
- The core of the semantic representation is a directed graph whose nodes are labeled by semantemes:
  - lexical semantemes = meanings of words or idioms
  - grammatical semantemes = meanings of grammatical inflections
- Arrows = predicate-argument relations = semantic dependencies

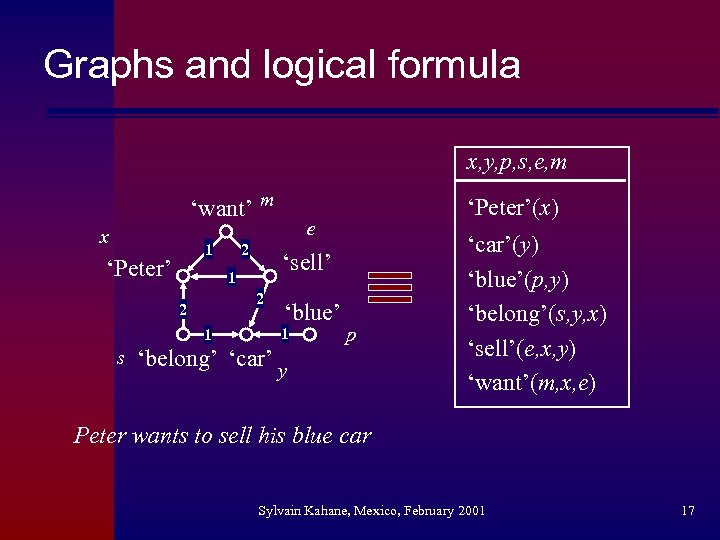

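Graphs and logical formulas

(Figure: the semantic graph of Peter wants to sell his blue car, with a variable attached to each node: ‘want’ (m) -1→ ‘Peter’ (x), ‘want’ -2→ ‘sell’ (e), ‘sell’ -1→ ‘Peter’, ‘sell’ -2→ ‘car’ (y), ‘blue’ (p) -1→ ‘car’, ‘belong’ (s) -1→ ‘car’, ‘belong’ -2→ ‘Peter’.)

$$\exists x, y, p, s, e, m \;\; \text{‘Peter’}(x) \wedge \text{‘car’}(y) \wedge \text{‘blue’}(p, y) \wedge \text{‘belong’}(s, y, x) \wedge \text{‘sell’}(e, x, y) \wedge \text{‘want’}(m, x, e)$$

Peter wants to sell his blue car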
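
The passage from graph to formula is mechanical: each node contributes one atom whose first argument is the node's own variable, followed by the variables of its arguments in the order of the arc numbers. A sketch under that reading of the slide; the class `SemGraph` and its methods are hypothetical, and `exists` / `&` stand in for ∃ and ∧.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SemGraph:
    labels: dict[str, str] = field(default_factory=dict)  # variable -> semanteme
    args: dict[str, dict[int, str]] = field(default_factory=dict)  # predicate var -> {arc number: argument var}

    def add_node(self, var: str, semanteme: str) -> None:
        self.labels[var] = semanteme
        self.args.setdefault(var, {})

    def add_arc(self, pred: str, rank: int, arg: str) -> None:
        # arc numbered `rank` from the predicate node to its argument node
        self.args[pred][rank] = arg

    def to_formula(self) -> str:
        atoms = []
        for var, sem in self.labels.items():
            # own variable first, then argument variables sorted by arc number
            arg_vars = [self.args[var][r] for r in sorted(self.args[var])]
            atoms.append(f"'{sem}'({', '.join([var, *arg_vars])})")
        return f"exists {', '.join(self.labels)}: " + " & ".join(atoms)


g = SemGraph()
for var, sem in [("x", "Peter"), ("y", "car"), ("p", "blue"),
                 ("s", "belong"), ("e", "sell"), ("m", "want")]:
    g.add_node(var, sem)
for pred, rank, arg in [("p", 1, "y"), ("s", 1, "y"), ("s", 2, "x"),
                        ("e", 1, "x"), ("e", 2, "y"), ("m", 1, "x"), ("m", 2, "e")]:
    g.add_arc(pred, rank, arg)
print(g.to_formula())
```

The printed formula lists the same six atoms as the formula above, in the order in which the nodes were added.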

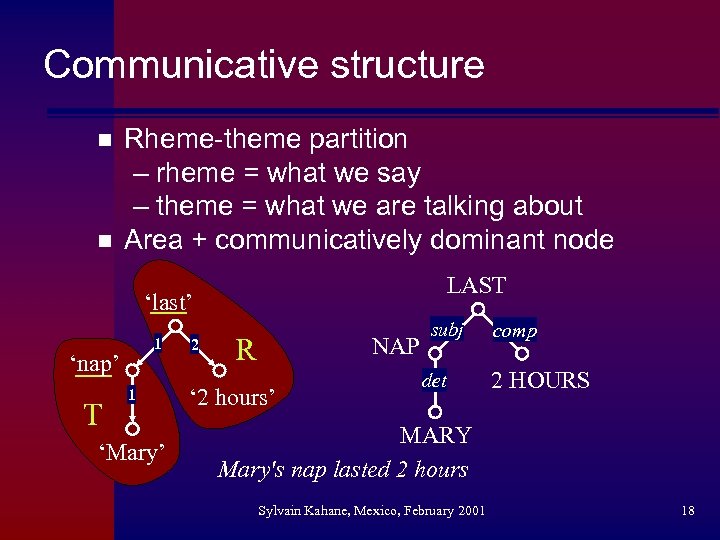

Communicative structure
- Rheme-theme partition
  - rheme = what we say
  - theme = what we are talking about
- Each area = a set of nodes + a communicatively dominant node

(Figure: the semantic graph ‘last’ -1→ ‘nap’, ‘last’ -2→ ‘2 hours’, ‘nap’ -1→ ‘Mary’, divided into a theme (T) and a rheme (R), and the corresponding dependency tree LAST -subj→ NAP -det→ MARY, LAST -comp→ 2 HOURS.)

Mary's nap lasted 2 hours

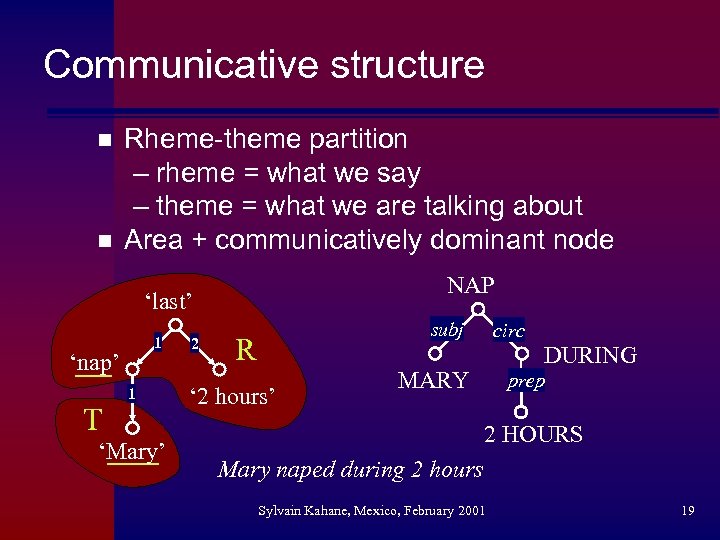

Communicative structure
- Rheme-theme partition
  - rheme = what we say
  - theme = what we are talking about
- Each area = a set of nodes + a communicatively dominant node

(Figure: the same semantic graph with a different theme (T) / rheme (R) division, and the corresponding dependency tree NAP -subj→ MARY, NAP -circ→ DURING -prep→ 2 HOURS.)

Mary napped during 2 hours

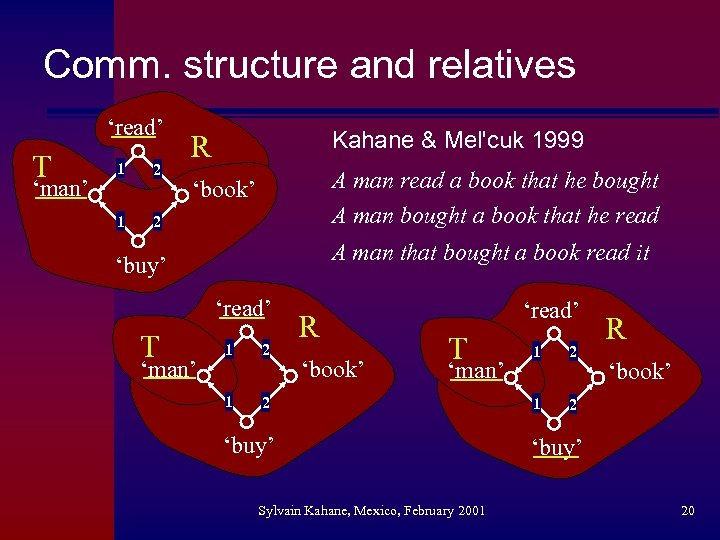

Communicative structure and relative clauses (Kahane & Mel'cuk 1999)

(Figure: one semantic graph, in which ‘read’ and ‘buy’ both have ‘man’ as argument 1 and ‘book’ as argument 2, shown with three different theme (T) / rheme (R) divisions, corresponding to three different sentences:)
- A man read a book that he bought
- A man bought a book that he read
- A man that bought a book read it

Syntactic representation
- The core of the syntactic representation is an unordered dependency tree
  - whose nodes are labeled by lexical units (+ grammemes)
  - whose branches are labeled by syntactic relations

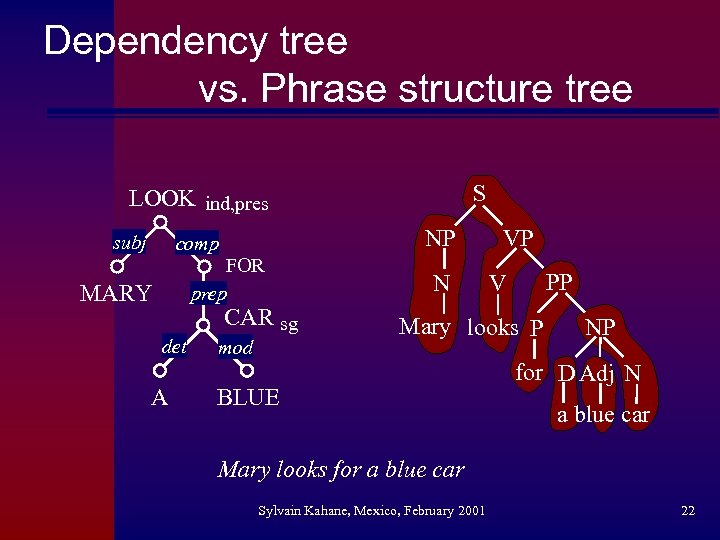

Dependency tree vs. Phrase structure tree

(Figure: the dependency tree LOOK (ind, pres) -subj→ MARY, LOOK -comp→ FOR -prep→ CAR (sg), CAR -det→ A, CAR -mod→ BLUE, next to the phrase structure tree [S [NP [N Mary]] [VP [V looks] [PP [P for] [NP [D a] [Adj blue] [N car]]]]].)

Mary looks for a blue car

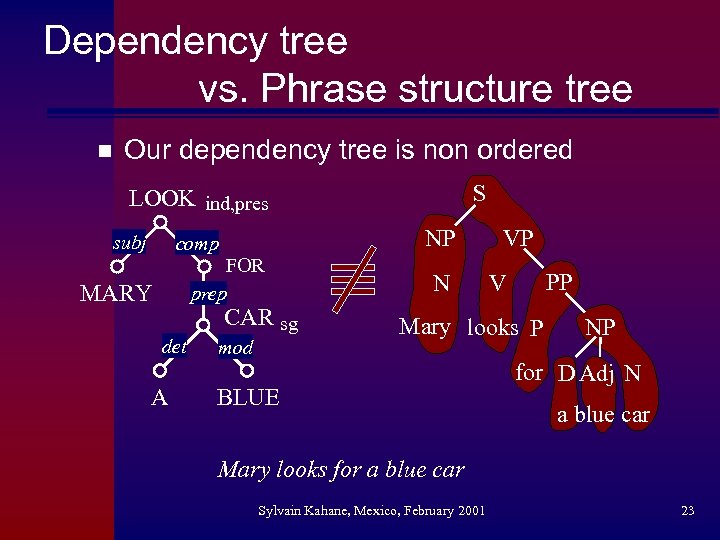

Dependency tree vs. Phrase structure tree
- Our dependency tree is unordered

(Figure: the same dependency tree and phrase structure tree for Mary looks for a blue car.)

Mary looks for a blue car
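
Because the tree is unordered, it can be stored as a mapping from each node to a set of (relation, dependent) pairs, with no order among dependents. The sketch below is an illustration only: the names `TREE` and `projection` are hypothetical and grammemes are left out. It computes the projection of a node, i.e. the set of nodes in its subtree. In this example the projections of CAR, FOR and LOOK contain the same words as the NP a blue car, the PP for a blue car and the S, whereas the VP looks for a blue car has no single-node counterpart in the tree.

```python
# Unordered dependency tree: node -> frozenset of (relation, dependent).
# Frozensets store no linear order among the dependents.
TREE = {
    "LOOK": frozenset({("subj", "MARY"), ("comp", "FOR")}),
    "FOR": frozenset({("prep", "CAR")}),
    "CAR": frozenset({("det", "A"), ("mod", "BLUE")}),
    "MARY": frozenset(),
    "A": frozenset(),
    "BLUE": frozenset(),
}


def projection(tree, node):
    """Set of nodes in the subtree rooted at `node` (the node included)."""
    result = {node}
    for _relation, dependent in tree[node]:
        result |= projection(tree, dependent)
    return frozenset(result)


for head in ("CAR", "FOR", "LOOK"):
    print(head, sorted(projection(TREE, head)))
```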

Morphological representation
- The core of the morphological representation is the string of the morphological representations of the words
- Morphological representation of a word = lemma + string of grammemes (including agreement and government grammemes), e.g. MARY[sg] LOOK[ind, present, 3, sg] FOR A BLUE CAR[sg]
- Prosodic structure

3. Correspondence Modules

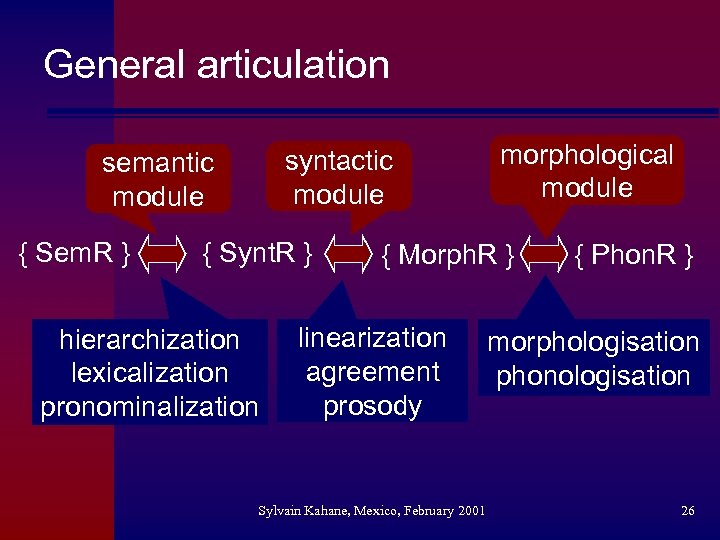

General articulation
- {Sem. R} ↔ semantic module ↔ {Synt. R}: hierarchization, lexicalization, pronominalization
- {Synt. R} ↔ syntactic module ↔ {Morph. R}: linearization, agreement, prosody
- {Morph. R} ↔ morphological module ↔ {Phon. R}: morphologization, phonologization

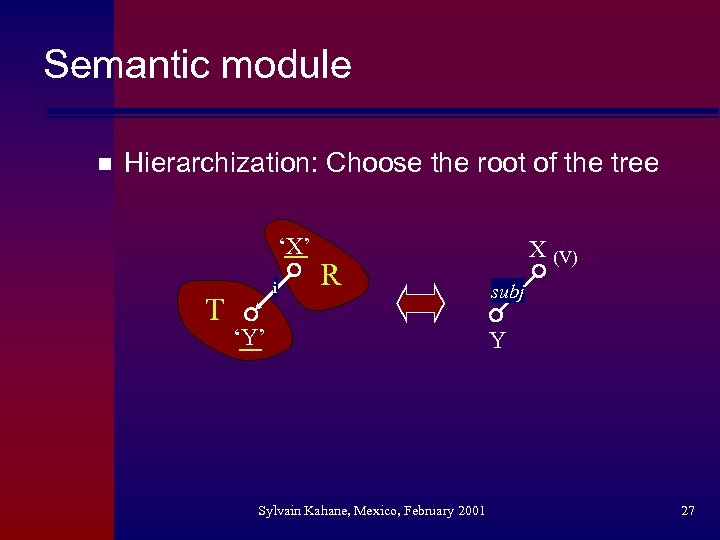

Semantic module
- Hierarchization: choose the root of the tree

(Figure: a semantic arc ‘X’ -i→ ‘Y’ crossing the theme (T) / rheme (R) boundary is mapped onto the syntactic dependency X (V) -subj→ Y.)

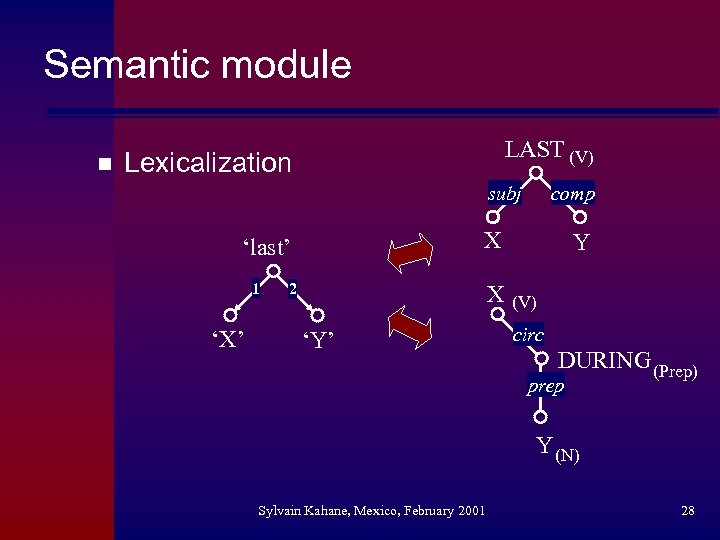

Semantic module
- Lexicalization

(Figure: two lexicalization rules for ‘last’ -1→ ‘X’, ‘last’ -2→ ‘Y’: either LAST (V) -subj→ X, LAST -comp→ Y; or X (V) -circ→ DURING (Prep) -prep→ Y (N).)

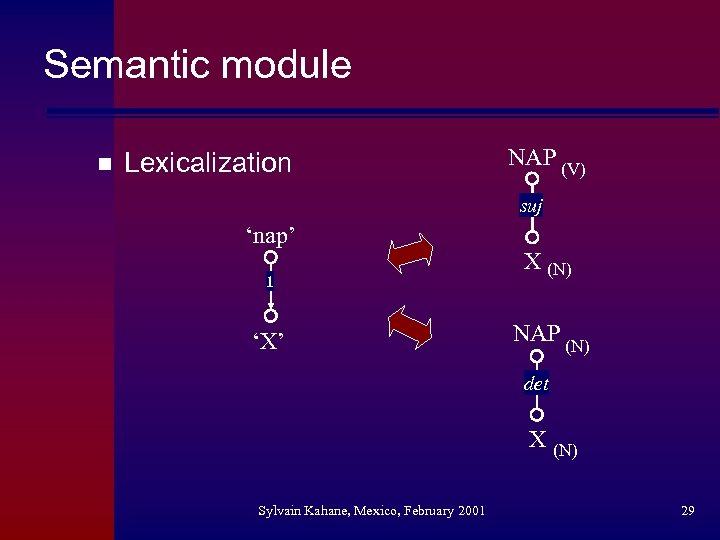

Semantic module
- Lexicalization

(Figure: two lexicalization rules for ‘nap’ -1→ ‘X’: either NAP (V) -subj→ X (N); or NAP (N) -det→ X (N).)

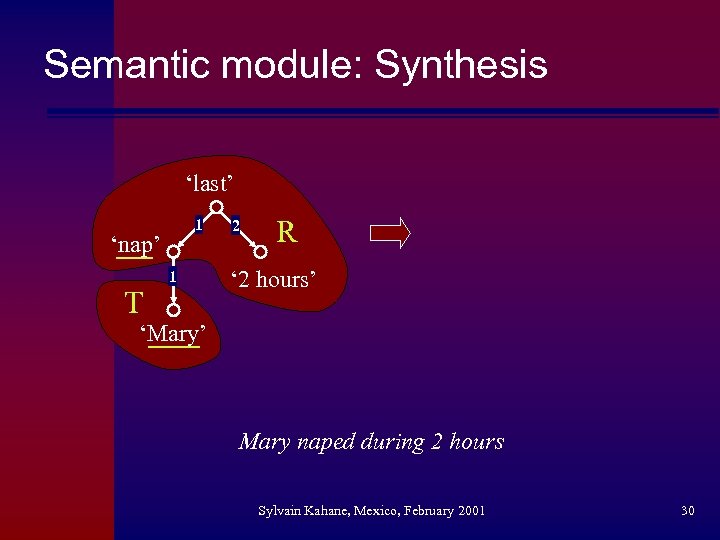

Semantic module: Synthesis

(Figure: the input Sem. R ‘last’ -1→ ‘nap’, ‘last’ -2→ ‘2 hours’, ‘nap’ -1→ ‘Mary’, with its theme (T) and rheme (R).)

Mary napped during 2 hours

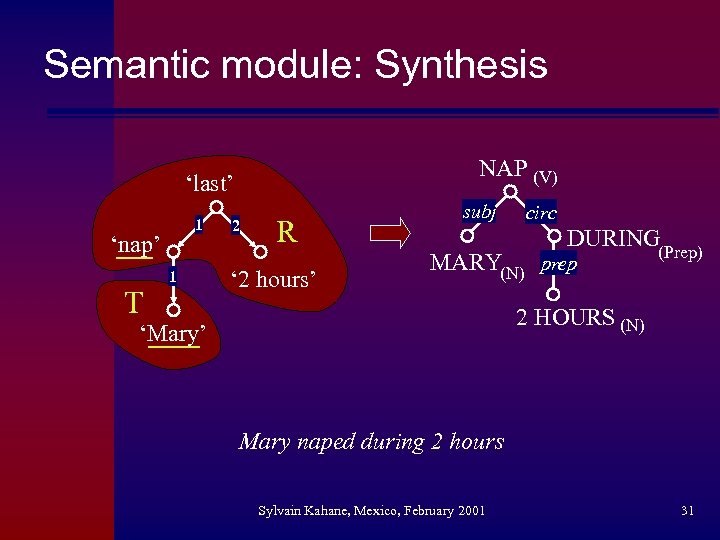

Semantic module: Synthesis

(Figure: the Sem. R ‘last’ -1→ ‘nap’, ‘last’ -2→ ‘2 hours’, ‘nap’ -1→ ‘Mary’ and the Synt. R produced from it: NAP (V) -subj→ MARY (N), NAP -circ→ DURING (Prep) -prep→ 2 HOURS (N).)

Mary napped during 2 hours

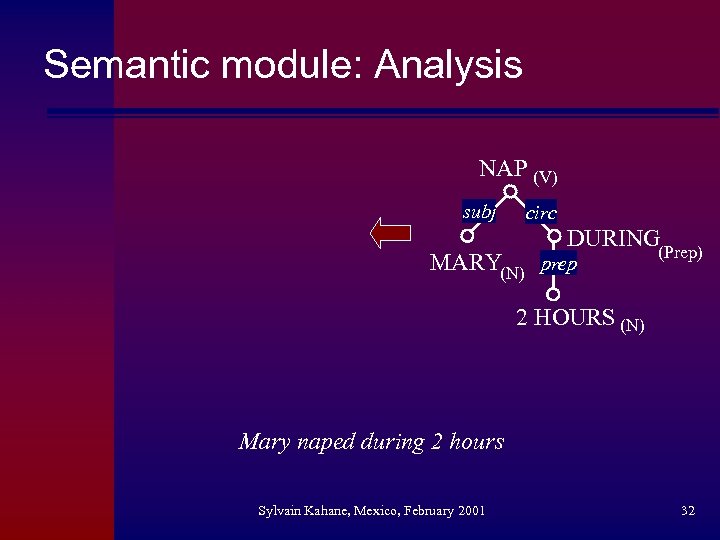

Semantic module: Analysis

(Figure: the input Synt. R NAP (V) -subj→ MARY (N), NAP -circ→ DURING (Prep) -prep→ 2 HOURS (N).)

Mary napped during 2 hours

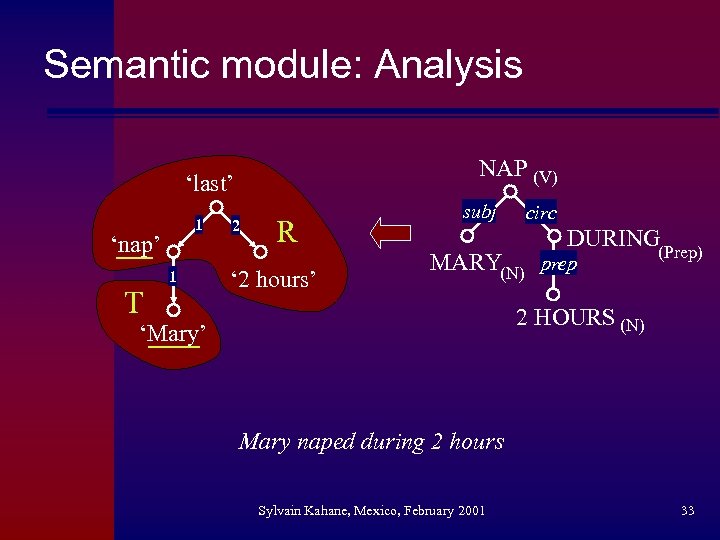

Semantic module: Analysis

(Figure: the Synt. R and the Sem. R recovered from it: ‘last’ -1→ ‘nap’, ‘last’ -2→ ‘2 hours’, ‘nap’ -1→ ‘Mary’, with its theme (T) and rheme (R).)

Mary napped during 2 hours

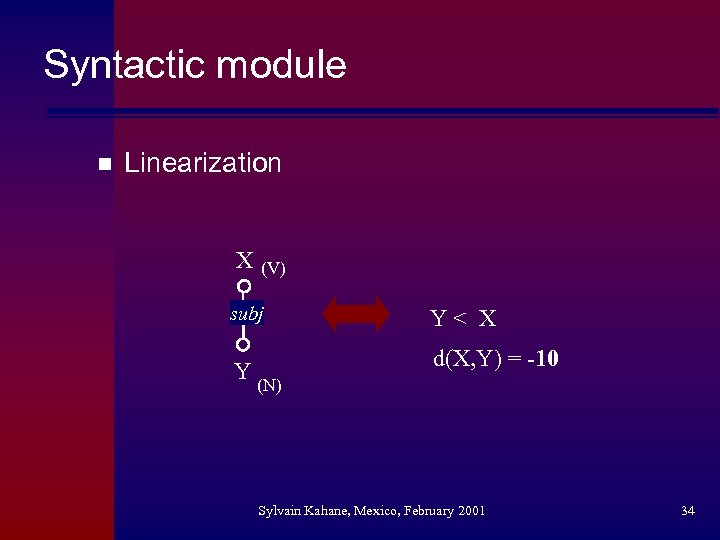

Syntactic module
- Linearization: X (V) -subj→ Y (N) ⇒ Y < X, d(X, Y) = -10

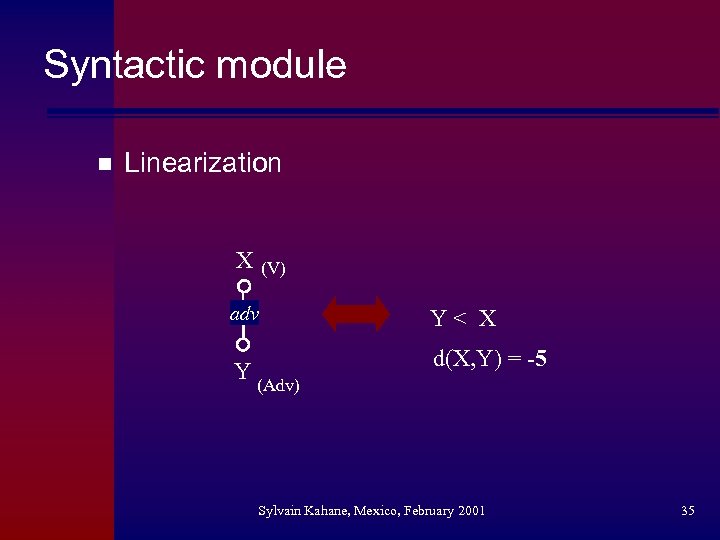

Syntactic module
- Linearization: X (V) -adv→ Y (Adv) ⇒ Y < X, d(X, Y) = -5

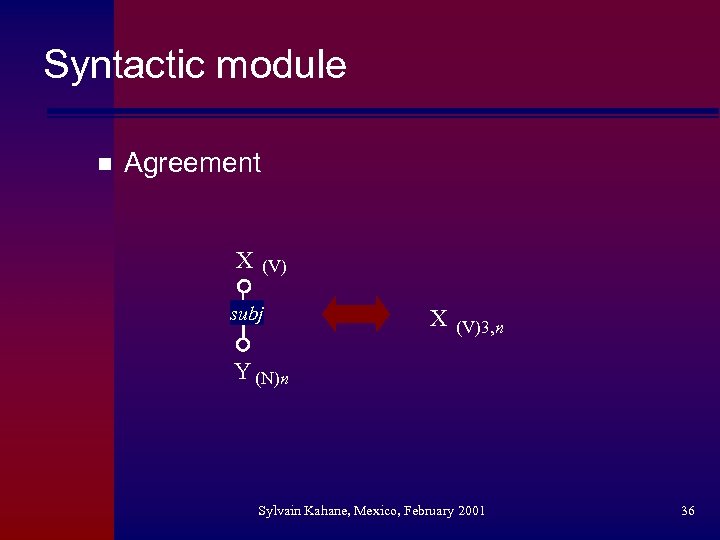

Syntactic module
- Agreement: X (V) -subj→ Y (N)n ⇒ X (V)3, n (the verb takes the 3rd person and the number n of its subject)
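
The agreement rule can be applied to a whole dependency tree in one pass over its dependencies. A minimal sketch, assuming nodes are stored as word -> (category, grammemes) and dependencies as (governor, relation, dependent) triples; `apply_agreement`, `NODES` and `DEPS` are hypothetical names, and only the number grammemes sg/pl are copied, as in the rule.

```python
def apply_agreement(nodes, deps):
    """Apply X (V) -subj-> Y (N)n  =>  X (V)3, n to every subj dependency."""
    # copy the grammeme lists so the input tree is left untouched
    result = {w: (cat, list(gram)) for w, (cat, gram) in nodes.items()}
    for gov, rel, dep in deps:
        gov_cat, gov_gram = result[gov]
        dep_cat, dep_gram = result[dep]
        if rel == "subj" and gov_cat == "V" and dep_cat == "N":
            # person 3 plus the subject's number grammeme(s)
            gov_gram.extend(["3"] + [g for g in dep_gram if g in ("sg", "pl")])
    return result


NODES = {
    "EAT": ("V", []), "PETER": ("N", ["sg"]), "OFTEN": ("Adv", []),
    "BEAN": ("N", ["pl"]), "RED": ("Adj", []),
}
DEPS = [("EAT", "subj", "PETER"), ("EAT", "adv", "OFTEN"),
        ("EAT", "obj", "BEAN"), ("BEAN", "mod", "RED")]
AGREED = apply_agreement(NODES, DEPS)
print(AGREED["EAT"])  # ('V', ['3', 'sg'])
```

Only the subj dependency triggers the rule; BEAN keeps its own pl and is not affected by its obj relation to EAT.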

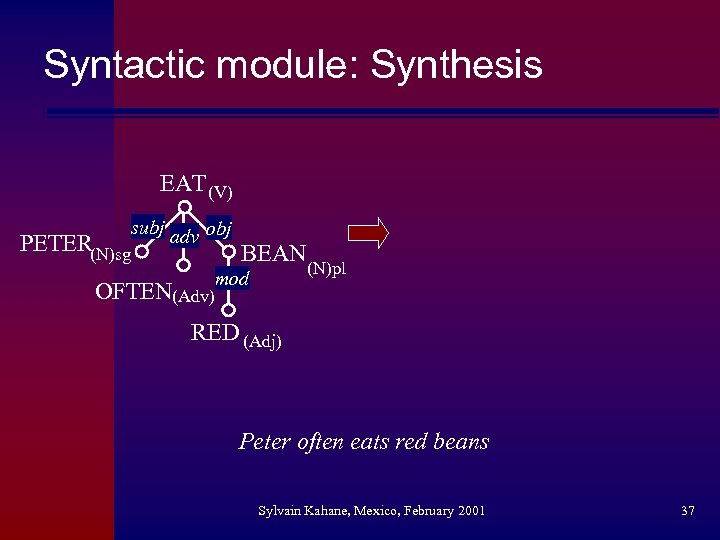

Syntactic module: Synthesis

(Figure: the input Synt. R EAT (V) -subj→ PETER (N)sg, EAT -adv→ OFTEN (Adv), EAT -obj→ BEAN (N)pl, BEAN -mod→ RED (Adj).)

Peter often eats red beans

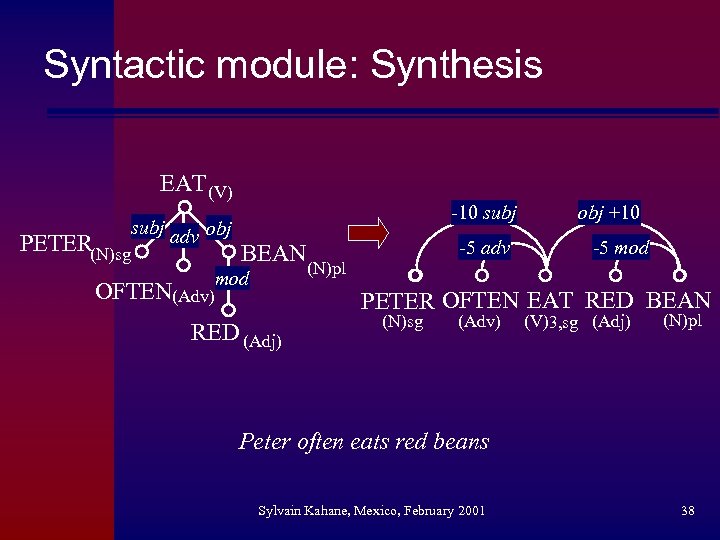

Syntactic module: Synthesis

(Figure: the linearization rules applied to each dependency of the tree, subj -10, adv -5, obj +10, mod -5, and the resulting string PETER (N)sg OFTEN (Adv) EAT (V)3, sg RED (Adj) BEAN (N)pl.)

Peter often eats red beans
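
The linearization step can be sketched as a recursive procedure, under an assumption the slides only suggest: the sign of d(X, Y) says on which side of its governor a dependent goes, and a larger absolute value places it farther from the governor. `WEIGHTS` copies the four weights shown; `linearize` and `EAT_TREE` are hypothetical names. Since every subtree is placed as one contiguous block, the output is always projective.

```python
# Weights d(governor, dependent) from the slides; the sign gives the side,
# and (by assumption) a larger absolute value means farther from the governor.
WEIGHTS = {"subj": -10, "adv": -5, "obj": +10, "mod": -5}


def linearize(tree, node):
    """Order a tree given as node -> list of (relation, dependent)."""
    deps = sorted(tree.get(node, []), key=lambda rd: WEIGHTS[rd[0]])
    # ascending weights: -10 is placed before -5 on the left, +5 before +10 on the right
    left = [w for rel, dep in deps if WEIGHTS[rel] < 0 for w in linearize(tree, dep)]
    right = [w for rel, dep in deps if WEIGHTS[rel] > 0 for w in linearize(tree, dep)]
    return left + [node] + right


EAT_TREE = {
    "EAT": [("obj", "BEAN"), ("subj", "PETER"), ("adv", "OFTEN")],
    "BEAN": [("mod", "RED")],
}
print(linearize(EAT_TREE, "EAT"))  # ['PETER', 'OFTEN', 'EAT', 'RED', 'BEAN']
```

The dependents of EAT are deliberately listed out of order: the result depends only on the weights, which matches the fact that the Synt. R itself is unordered.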

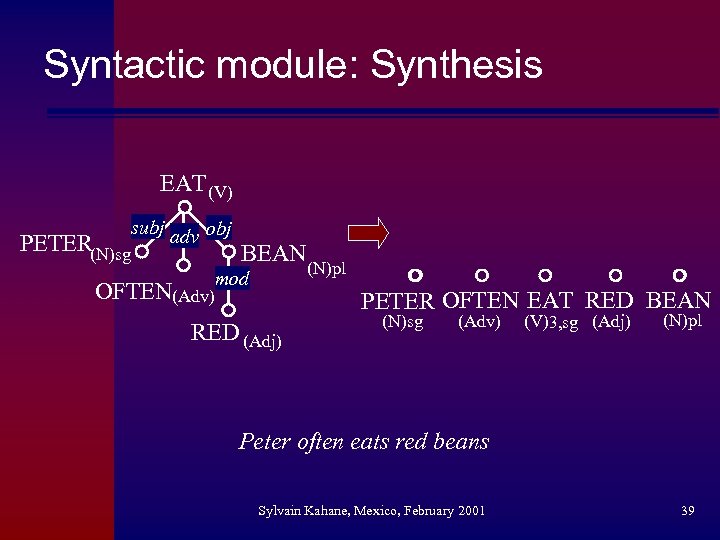

Syntactic module: Synthesis

(Figure: the Synt. R and the resulting Morph. R PETER (N)sg OFTEN (Adv) EAT (V)3, sg RED (Adj) BEAN (N)pl.)

Peter often eats red beans

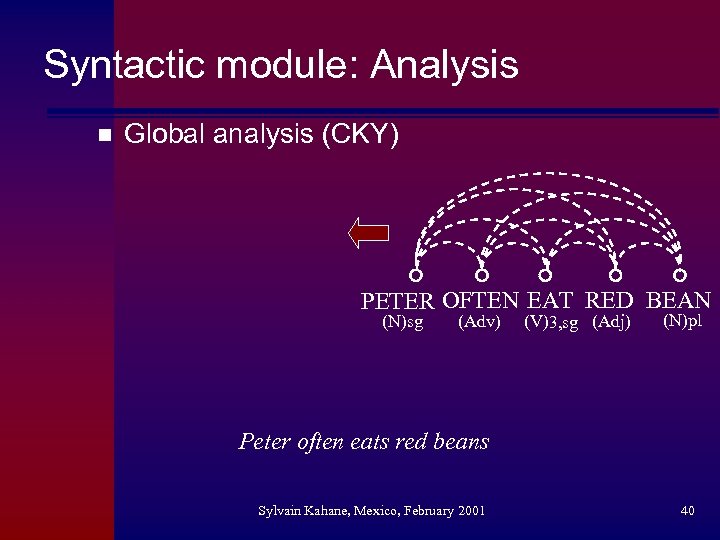

Syntactic module: Analysis
- Global analysis (CKY)

(Figure: the input Morph. R PETER (N)sg OFTEN (Adv) EAT (V)3, sg RED (Adj) BEAN (N)pl.)

Peter often eats red beans

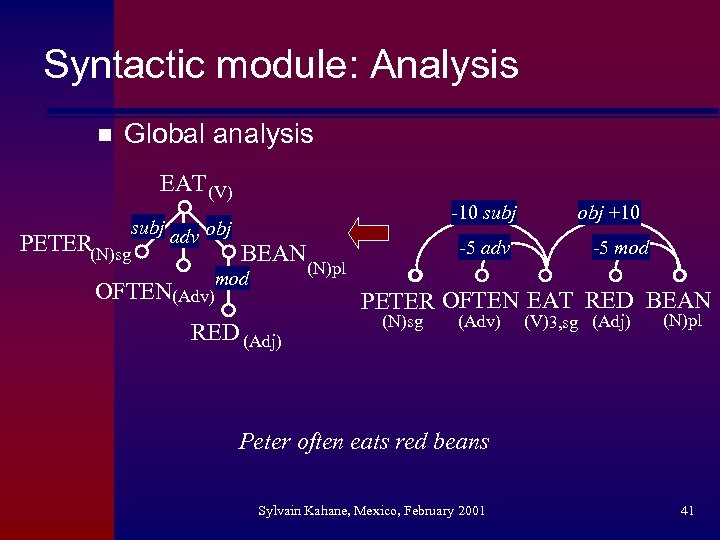

Syntactic module: Analysis
- Global analysis

(Figure: the dependency tree recovered from the string PETER OFTEN EAT RED BEAN, with a rule instantiated on each dependency: subj -10, adv -5, obj +10, mod -5.)

Peter often eats red beans

Projectivity
- Lecerf 1961, Iordanskaja 1963, Gladkij 1966
- An ordered dependency tree is projective iff:
  - no two dependencies cross each other
  - no dependency covers the root

(Figure: two non-projective configurations, each marked *.)
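
Both conditions can be checked directly on an ordered tree. In this sketch (an illustration, not the cited authors' formulation), the tree is given as a hypothetical list `heads`, where `heads[i-1]` is the position of the governor of word i and 0 marks the root; exactly one root is assumed.

```python
def arcs(heads):
    """Each dependency as (left position, right position), positions from 1."""
    return [(min(h, d), max(h, d)) for d, h in enumerate(heads, start=1) if h != 0]


def is_projective(heads):
    spans = arcs(heads)
    root = heads.index(0) + 1
    for a, b in spans:
        if a < root < b:  # this dependency covers the root
            return False
        for c, d in spans:
            if a < c < b < d:  # the two dependencies cross
                return False
    return True


# Peter often eats red beans: PETER->EAT, OFTEN->EAT, EAT=root, RED->BEAN, BEAN->EAT
print(is_projective([3, 3, 0, 5, 3]))  # True
```

Checking every ordered pair of spans also catches crossings in the mirror configuration c < a < d < b, since that pair is visited with the roles swapped.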

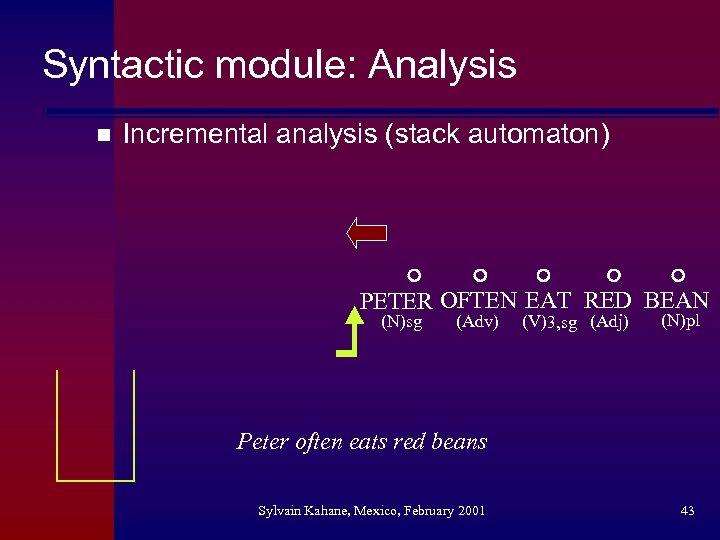

Syntactic module: Analysis
- Incremental analysis (stack automaton)

(Figure: the input Morph. R PETER (N)sg OFTEN (Adv) EAT (V)3, sg RED (Adj) BEAN (N)pl, read from left to right.)

Peter often eats red beans

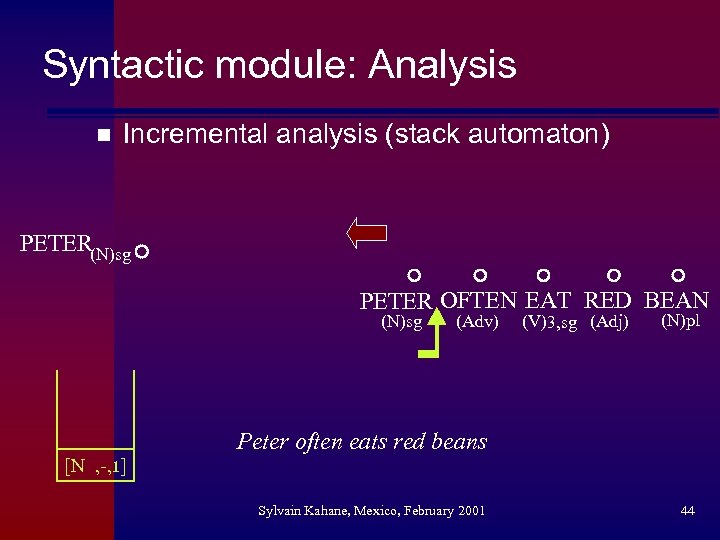

Syntactic module: Analysis
- Incremental analysis (stack automaton)

(Figure: PETER (N)sg is read and produced; stack: [N, -, 1]. A slot gives the category of a node, whether it is already governed (+) or not (-), and its position.)

Peter often eats red beans

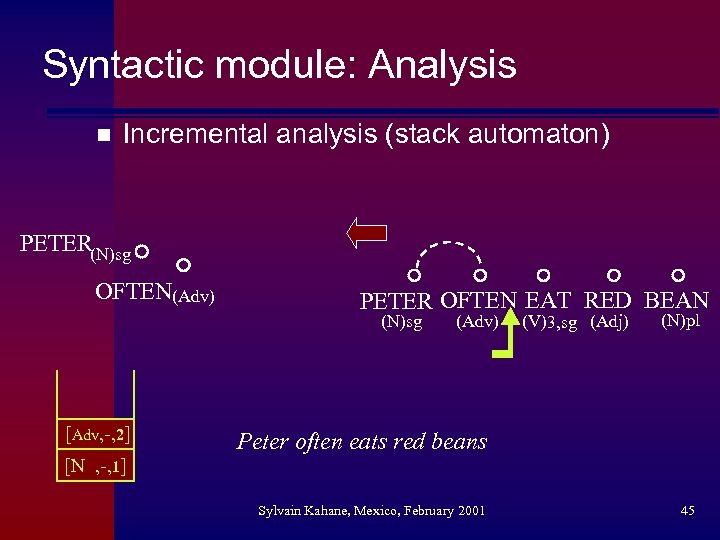

Syntactic module: Analysis
- Incremental analysis (stack automaton)

(Figure: OFTEN (Adv) is read and produced; stack, top first: [Adv, -, 2] [N, -, 1].)

Peter often eats red beans

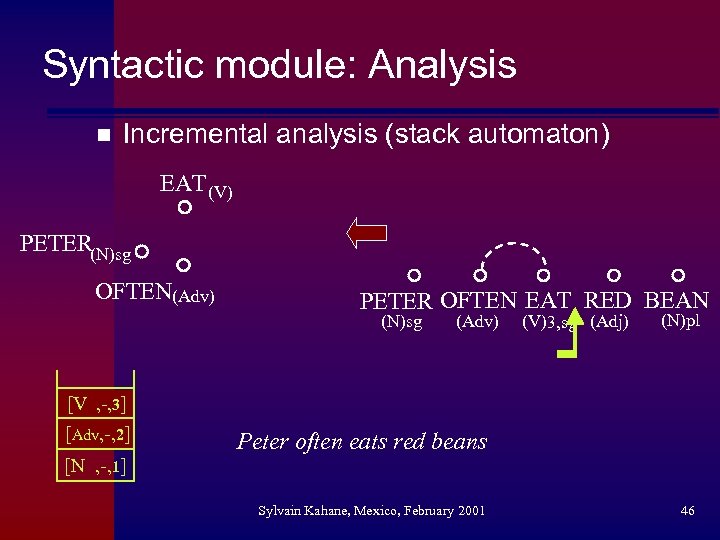

Syntactic module: Analysis
- Incremental analysis (stack automaton)

(Figure: EAT (V) is read and produced; stack, top first: [V, -, 3] [Adv, -, 2] [N, -, 1].)

Peter often eats red beans

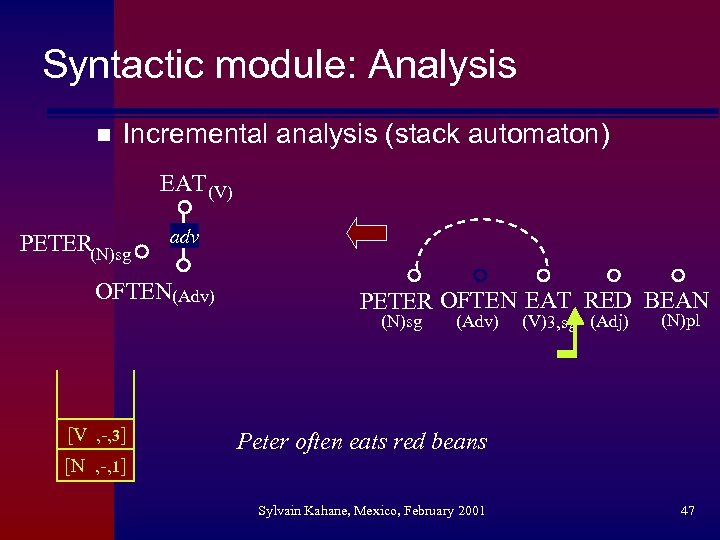

Syntactic module: Analysis
- Incremental analysis (stack automaton)

(Figure: the adv dependency from EAT to OFTEN is produced; stack, top first: [V, -, 3] [N, -, 1].)

Peter often eats red beans

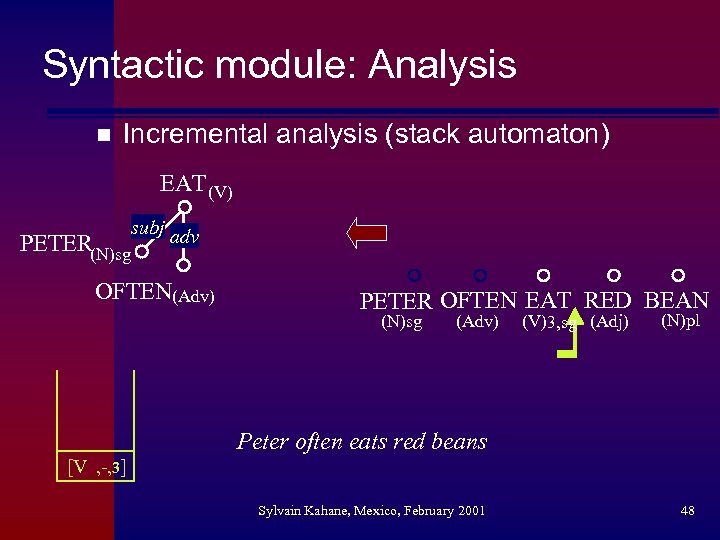

Syntactic module: Analysis
- Incremental analysis (stack automaton)

(Figure: the subj dependency from EAT to PETER is produced; stack: [V, -, 3].)

Peter often eats red beans

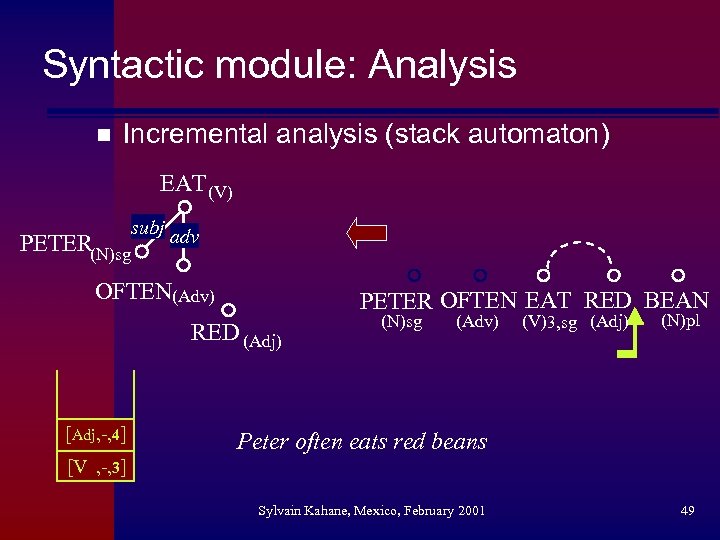

Syntactic module: Analysis
- Incremental analysis (stack automaton)

(Figure: RED (Adj) is read and produced; stack, top first: [Adj, -, 4] [V, -, 3].)

Peter often eats red beans

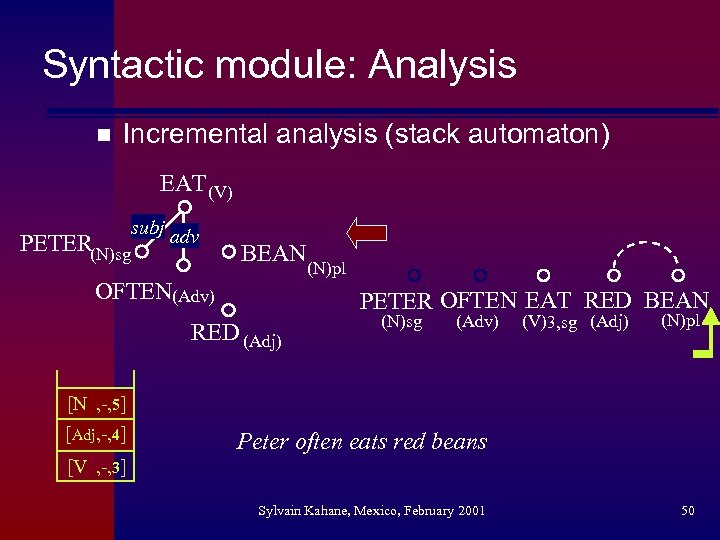

Syntactic module: Analysis
- Incremental analysis (stack automaton)

(Figure: BEAN (N)pl is read and produced; stack, top first: [N, -, 5] [Adj, -, 4] [V, -, 3].)

Peter often eats red beans

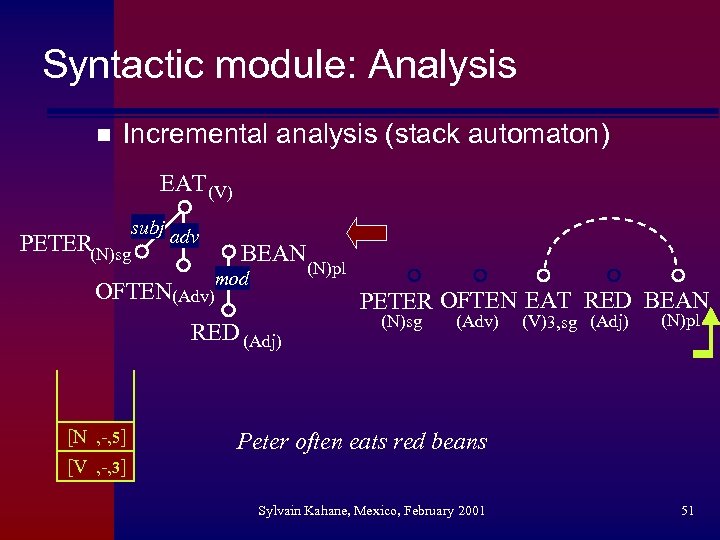

Syntactic module: Analysis
- Incremental analysis (stack automaton)

(Figure: the mod dependency from BEAN to RED is produced; stack, top first: [N, -, 5] [V, -, 3].)

Peter often eats red beans

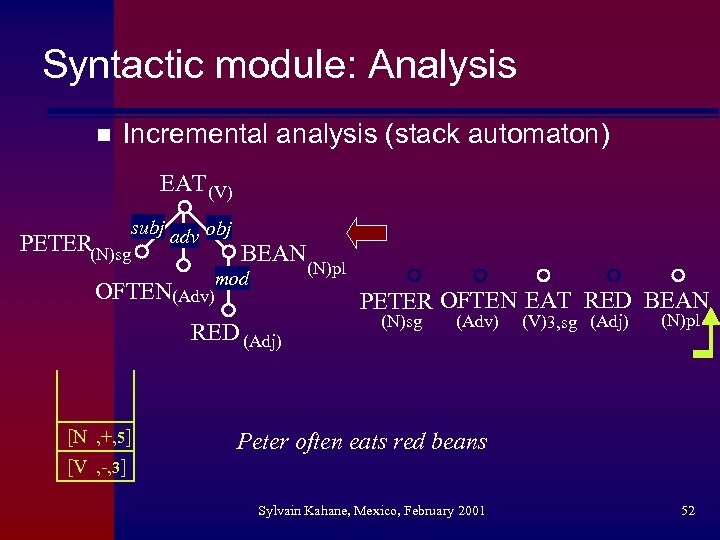

Syntactic module: Analysis
- Incremental analysis (stack automaton)

(Figure: the obj dependency from EAT to BEAN is produced and BEAN's slot is marked as governed; stack, top first: [N, +, 5] [V, -, 3].)

Peter often eats red beans

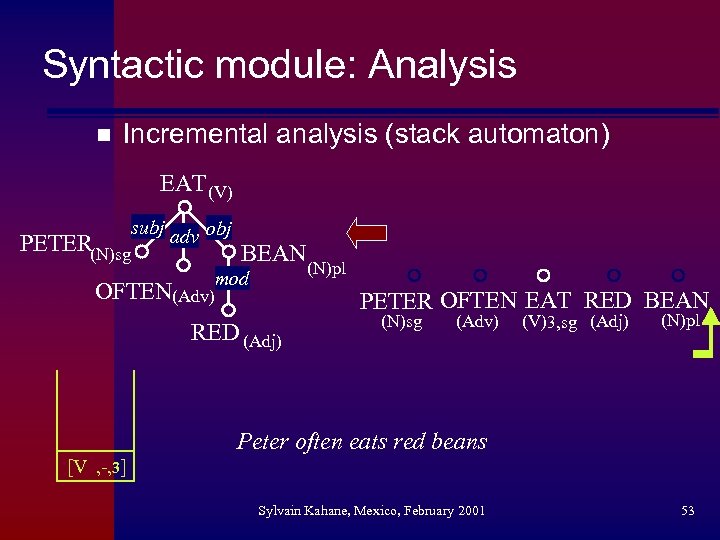

Syntactic module: Analysis
- Incremental analysis (stack automaton)

(Figure: BEAN's governed slot is removed and the whole tree has been produced; stack: [V, -, 3].)

Peter often eats red beans

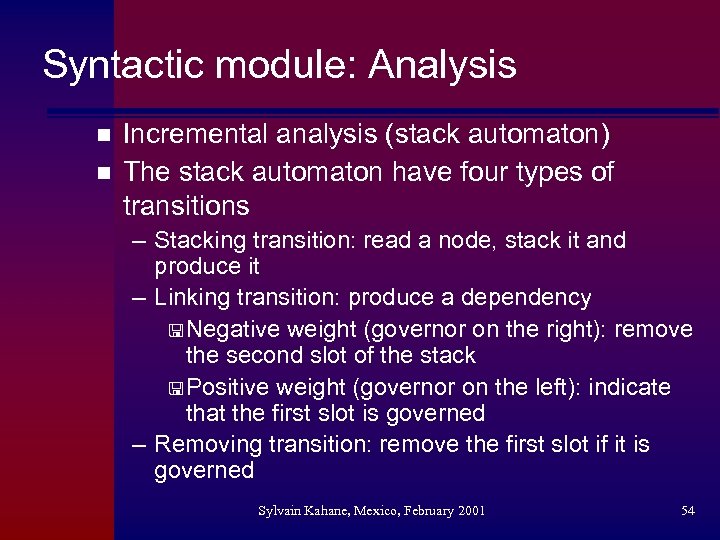

Syntactic module: Analysis
- Incremental analysis (stack automaton)
- The stack automaton has four types of transitions (the linking transition comes in two kinds):
  - Stacking transition: read a node, stack it and produce it
  - Linking transition: produce a dependency
    - negative weight (governor on the right): remove the second slot of the stack
    - positive weight (governor on the left): mark the first slot as governed
  - Removing transition: remove the first slot if it is governed
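
The run shown on the preceding slides can be replayed with a small deterministic simplification of the automaton. All of the following is assumed for illustration: the rule table `RULES` contains only the four dependencies of the example, with the sign of their weight; linking transitions are tried greedily after each stacking transition (negative links first); and removing transitions are applied only once the input is exhausted. The automaton described above is nondeterministic, so this greedy version is only guaranteed to handle simple cases like this one. `Slot`, `relation` and `parse` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional

# (governor category, dependent category) -> [(relation, sign of its weight)]
# negative: governor on the right of the dependent; positive: governor on the left
RULES = {
    ("V", "N"): [("subj", -1), ("obj", +1)],
    ("V", "Adv"): [("adv", -1)],
    ("N", "Adj"): [("mod", -1)],
}


@dataclass
class Slot:
    word: str
    cat: str
    pos: int
    governed: bool = False  # the "+" / "-" flag of the slides


def relation(gov: Slot, dep: Slot, sign: int) -> Optional[str]:
    for rel, s in RULES.get((gov.cat, dep.cat), []):
        if s == sign:
            return rel
    return None


def parse(words):
    stack = []  # stack[-1] is the first (top) slot
    deps = []
    for pos, (word, cat) in enumerate(words, start=1):
        stack.append(Slot(word, cat, pos))  # stacking transition
        linked = True
        while linked and len(stack) >= 2:
            linked = False
            top, second = stack[-1], stack[-2]
            rel = relation(top, second, -1)
            if rel and not second.governed:
                # negative linking: top governs second, which leaves the stack
                deps.append((top.word, rel, second.word))
                del stack[-2]
                linked = True
                continue
            rel = relation(second, top, +1)
            if rel and not top.governed:
                # positive linking: second governs top, which stays but is marked "+"
                deps.append((second.word, rel, top.word))
                top.governed = True
                linked = True
    while stack and stack[-1].governed:
        stack.pop()  # removing transition
    accepted = len(stack) == 1 and not stack[0].governed
    return deps, accepted


WORDS = [("PETER", "N"), ("OFTEN", "Adv"), ("EAT", "V"), ("RED", "Adj"), ("BEAN", "N")]
print(parse(WORDS))
```

The dependencies come out in the same order as on the slides: adv, subj, mod, then obj, after which BEAN's governed slot is removed and only [V, -, 3] remains.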

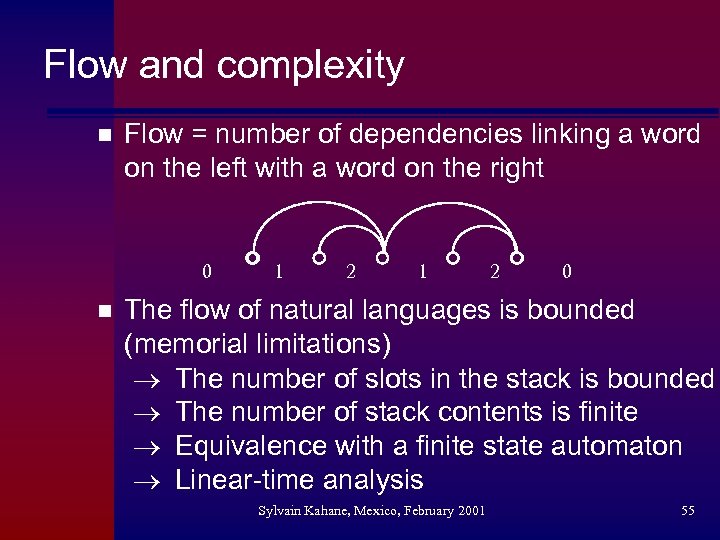

Flow and complexity
- Flow = number of dependencies linking a word on the left with a word on the right (measured at each point between two words)

(Figure: flow values 0, 1, 2, 0 marked along a sentence.)

- The flow of natural languages is bounded (memory limitations)
  - → the number of slots in the stack is bounded
  - → the number of possible stack contents is finite
  - → equivalence with a finite-state automaton
  - → linear-time analysis
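
Flow is easy to compute from an ordered tree. A sketch assuming a hypothetical encoding `heads`, where `heads[i-1]` is the position of the governor of word i and 0 marks the root: the flow at the boundary between word k and word k+1 counts the dependencies whose two ends lie on either side of that boundary.

```python
def flows(heads):
    """Flow at each boundary between consecutive words (positions from 1)."""
    spans = [(min(h, d), max(h, d)) for d, h in enumerate(heads, start=1) if h != 0]
    # boundary k lies between word k and word k + 1
    return [sum(1 for a, b in spans if a <= k < b) for k in range(1, len(heads))]


# Peter often eats red beans: PETER->EAT, OFTEN->EAT, EAT=root, RED->BEAN, BEAN->EAT
print(flows([3, 3, 0, 5, 3]))  # [1, 2, 1, 2]
```

For this sentence the flow never exceeds 2; the claim on the slide is that such a bound holds for natural language in general.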

4. Comparison with Generative/Unification Grammars

MTT module and correspondence
- An MTT module defines more than a correspondence between two sets of structures

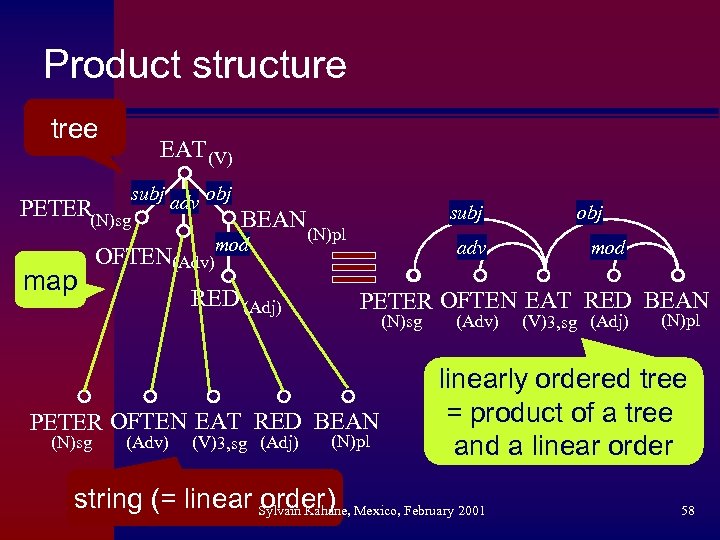

Product structure

(Figure: the tree EAT (V) -subj→ PETER (N)sg, EAT -adv→ OFTEN (Adv), EAT -obj→ BEAN (N)pl, BEAN -mod→ RED (Adj); the string PETER (N)sg OFTEN (Adv) EAT (V)3, sg RED (Adj) BEAN (N)pl; and the map between their nodes.)

- Linearly ordered tree = product of a tree and a string (= a linear order)

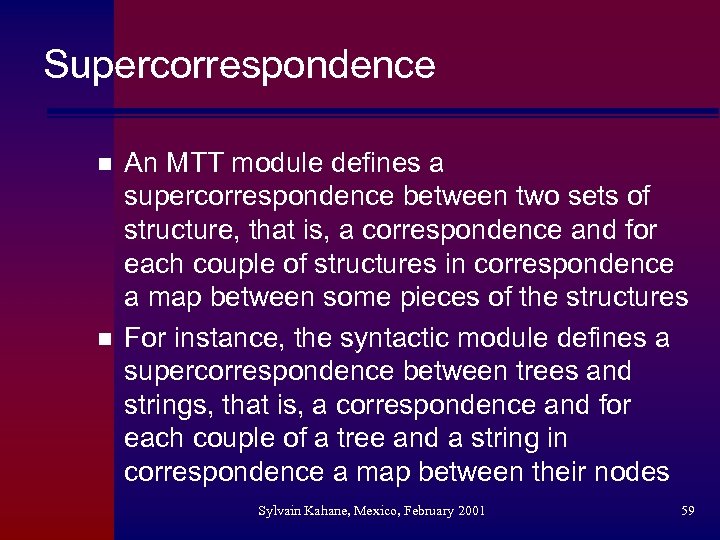

Supercorrespondence
- An MTT module defines a supercorrespondence between two sets of structures, that is, a correspondence together with, for each pair of structures in correspondence, a map between some pieces of the two structures
- For instance, the syntactic module defines a supercorrespondence between trees and strings, that is, a correspondence together with, for each pair of a tree and a string in correspondence, a map between their nodes

Let's come back to the first postulate

Postulate 1 (revised): A natural language is (considered as) a many-to-many supercorrespondence between meanings and texts.
- Sentence = product structure = map between pieces of meaning and pieces of text (compositionality)

MTT modules as generative grammars
- A correspondence between A and B is equivalent to a set of pairs (S, S') with S ∈ A and S' ∈ B
- A supercorrespondence is equivalent to a set of product structures, that is, triples (S, S', ƒ) with S ∈ A, S' ∈ B and ƒ a map between some partitions of S and S'
- An MTT module defines a supercorrespondence; this can be done by generating the set of product structures

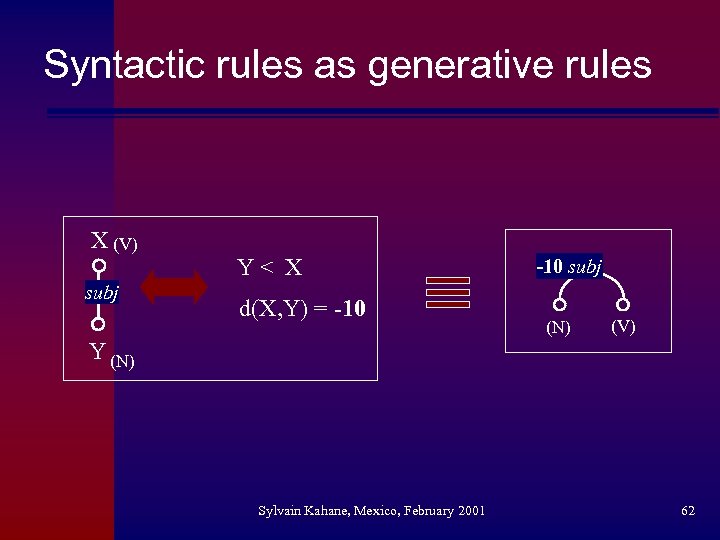

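Syntactic rules as generative rules

(Figure: the linearization rule X (V) -subj→ Y (N) ⇒ Y < X, d(X, Y) = -10, and the same rule drawn as a generative rule: an elementary structure in which an (N) depends on a (V) via subj, with weight -10.)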

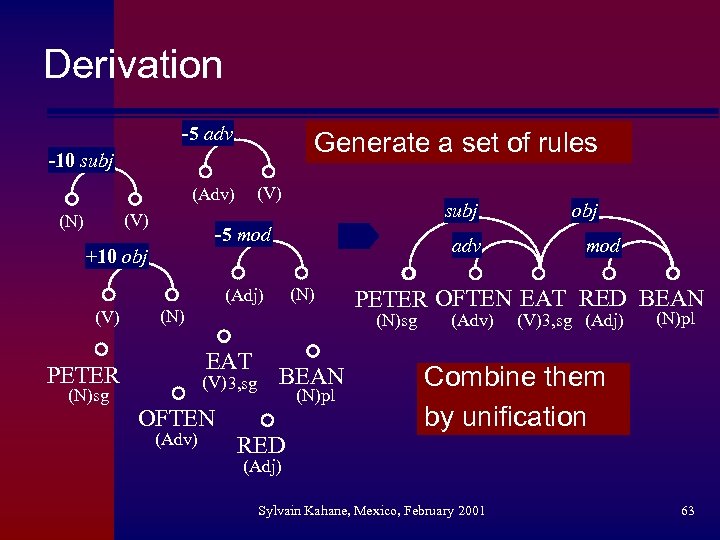

Derivation
Generate a set of rules:
– syntactic rules: subj from (V) to (N), d = -10; adv from (V) to (Adv), d = -5; obj from (V) to (N), d = +10; mod from (N) to (Adj), d = -5;
– lexical nodes: PETER (N)sg, OFTEN (Adv), EAT (V)3, sg, RED (Adj), BEAN (N)pl.
Combine them by unification: EAT governs PETER (subj), OFTEN (adv) and BEAN (obj), BEAN governs RED (mod), and the words are ordered PETER OFTEN EAT RED BEAN.
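The step "combine them by unification" can be sketched in Python with flat feature structures. This is a minimal illustration under our own assumptions: node labels are encoded as dicts, `unify`, `RULES`, `LEXICON` and `combine` are hypothetical names, and real MTT node labels are richer. Each lexical node accumulates the constraints of every rule it takes part in, and a category clash makes the combination fail.

```python
def unify(fs1, fs2):
    """Unify two flat feature structures (dicts); return None on a feature clash."""
    result = dict(fs1)
    for feature, value in fs2.items():
        if feature in result and result[feature] != value:
            return None
        result[feature] = value
    return result


# Syntactic rules: relation -> (governor pattern, dependent pattern, d)
RULES = {
    "subj": ({"cat": "V"}, {"cat": "N"}, -10),
    "adv": ({"cat": "V"}, {"cat": "Adv"}, -5),
    "obj": ({"cat": "V"}, {"cat": "N"}, +10),
    "mod": ({"cat": "N"}, {"cat": "Adj"}, -5),
}
# Lexical nodes of the sentence
LEXICON = {
    "PETER": {"cat": "N", "num": "sg"},
    "OFTEN": {"cat": "Adv"},
    "EAT": {"cat": "V", "pers": 3, "num": "sg"},
    "RED": {"cat": "Adj"},
    "BEAN": {"cat": "N", "num": "pl"},
}


def combine(edges, lexicon=LEXICON, rules=RULES):
    """Instantiate one rule per edge, unifying its two nodes with the lexical nodes."""
    nodes = {name: dict(fs) for name, fs in lexicon.items()}
    tree = []
    for gov, rel, dep in edges:
        gov_pattern, dep_pattern, d = rules[rel]
        new_gov = unify(nodes[gov], gov_pattern)
        new_dep = unify(nodes[dep], dep_pattern)
        if new_gov is None or new_dep is None:
            raise ValueError(f"rule {rel} cannot combine {gov} with {dep}")
        nodes[gov], nodes[dep] = new_gov, new_dep
        tree.append((gov, rel, dep, d))
    return nodes, tree


edges = [("EAT", "subj", "PETER"), ("EAT", "adv", "OFTEN"),
         ("EAT", "obj", "BEAN"), ("BEAN", "mod", "RED")]
nodes, tree = combine(edges)
print(tree)
```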

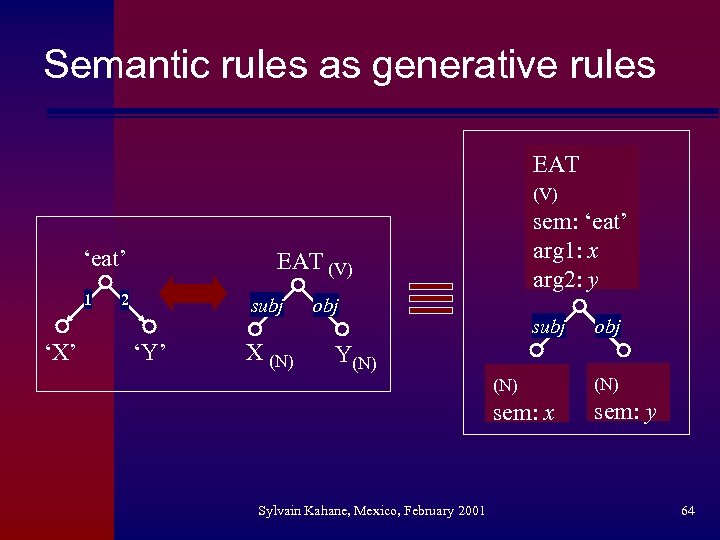

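Semantic rules as generative rules
Correspondence rule: the semanteme ‘eat’ with argument 1 ‘X’ and argument 2 ‘Y’ corresponds to EAT (V) with a subj dependent X (N) and an obj dependent Y (N).
Generative rule: EAT (V) [sem: ‘eat’, arg 1: x, arg 2: y] with a subj dependent (N) [sem: x] and an obj dependent (N) [sem: y].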
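Read as a generative rule, the semantic rule of EAT is a template whose variables x and y are bound by the sem values of the subject and the object. A minimal Python sketch under an assumed dict encoding; `EAT_RULE` and `apply_semantic_rule` are hypothetical names, and only the category check and the variable binding are modeled.

```python
# Hypothetical encoding of the generative semantic rule for EAT.
EAT_RULE = {
    "node": {"lex": "EAT", "cat": "V", "sem": "eat", "args": {1: "x", 2: "y"}},
    "deps": {"subj": {"cat": "N", "sem": "x"}, "obj": {"cat": "N", "sem": "y"}},
}


def apply_semantic_rule(rule, dependents):
    """Bind the rule's variables to the sem values of the actual dependents
    and return the predicate with its numbered arguments."""
    binding = {}
    for rel, pattern in rule["deps"].items():
        dep = dependents[rel]
        if dep["cat"] != pattern["cat"]:
            raise ValueError(f"{rel}: expected {pattern['cat']}, got {dep['cat']}")
        binding[pattern["sem"]] = dep["sem"]  # e.g. x := 'Peter'
    node = rule["node"]
    return node["sem"], {n: binding[var] for n, var in node["args"].items()}


pred, args = apply_semantic_rule(
    EAT_RULE,
    {"subj": {"cat": "N", "sem": "Peter"}, "obj": {"cat": "N", "sem": "bean"}},
)
print(pred, args)  # eat {1: 'Peter', 2: 'bean'}
```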

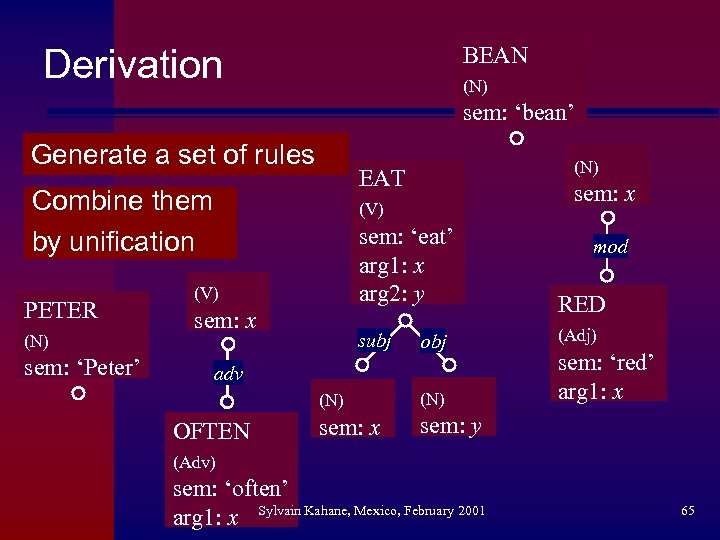

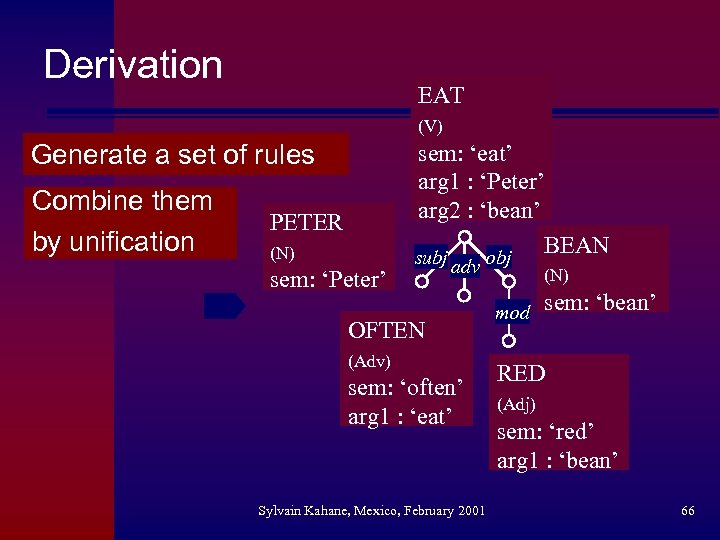

Derivation
Generate a set of rules:
– PETER (N) [sem: ‘Peter’] and BEAN (N) [sem: ‘bean’];
– EAT (V) [sem: ‘eat’, arg 1: x, arg 2: y] with a subj dependent (N) [sem: x] and an obj dependent (N) [sem: y];
– OFTEN (Adv) [sem: ‘often’, arg 1: x] as adv dependent of (V) [sem: x];
– RED (Adj) [sem: ‘red’, arg 1: x] as mod dependent of (N) [sem: x].
Combine them by unification.

Derivation
Generate a set of rules; combine them by unification. The result:
– EAT (V) [sem: ‘eat’, arg 1: ‘Peter’, arg 2: ‘bean’] with a subj dependent PETER (N) [sem: ‘Peter’], an obj dependent BEAN (N) [sem: ‘bean’] and an adv dependent OFTEN (Adv) [sem: ‘often’, arg 1: ‘eat’];
– BEAN with a mod dependent RED (Adj) [sem: ‘red’, arg 1: ‘bean’].
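Once all rules are unified, the semantic graph can be read off the syntactic tree. A minimal Python sketch, assuming a hypothetical table `SEM_OF_REL` that records, for each syntactic relation, the semantic argument number and whether the semantic edge runs from governor to dependent (subj, obj) or in the opposite direction (adv, mod, where the modifier is the predicate and its governor the argument). All names here are illustrative.

```python
# Hypothetical table: syntactic relation -> (semantic argument number,
# True if the semantic edge goes from the governor's sem to the dependent's sem).
SEM_OF_REL = {"subj": (1, True), "obj": (2, True), "adv": (1, False), "mod": (1, False)}

SEM = {"EAT": "eat", "PETER": "Peter", "BEAN": "bean", "OFTEN": "often", "RED": "red"}
EDGES = [("EAT", "subj", "PETER"), ("EAT", "obj", "BEAN"),
         ("EAT", "adv", "OFTEN"), ("BEAN", "mod", "RED")]


def semantic_graph(edges, sem=SEM, table=SEM_OF_REL):
    """Return the semantic graph as a set of (predicate, argument number, argument)."""
    graph = set()
    for gov, rel, dep in edges:
        n, downward = table[rel]
        # For adv and mod the dependent is the predicate and the governor its argument.
        pred, arg = (sem[gov], sem[dep]) if downward else (sem[dep], sem[gov])
        graph.add((pred, n, arg))
    return graph


for edge in sorted(semantic_graph(EDGES)):
    print(edge)
```

The reversal for adv and mod is the point of the table: syntactically OFTEN depends on EAT, but semantically ‘often’ takes ‘eat’ as its argument.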

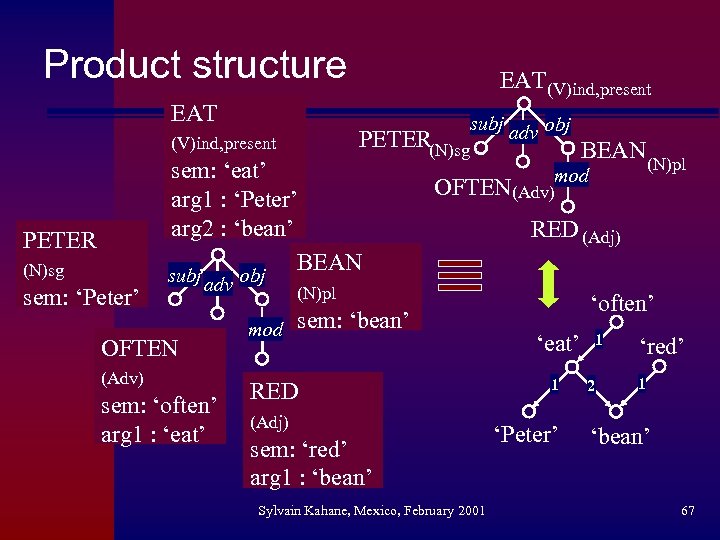

Product structure
Syntactic tree: EAT (V)ind, present governs PETER (N)sg by subj, OFTEN (Adv) by adv and BEAN (N)pl by obj; BEAN governs RED (Adj) by mod.
Node labels after unification: PETER [sem: ‘Peter’]; EAT [sem: ‘eat’, arg 1: ‘Peter’, arg 2: ‘bean’]; OFTEN [sem: ‘often’, arg 1: ‘eat’]; BEAN [sem: ‘bean’]; RED [sem: ‘red’, arg 1: ‘bean’].
Semantic graph: ‘often’ has argument 1 ‘eat’; ‘eat’ has argument 1 ‘Peter’ and argument 2 ‘bean’; ‘red’ has argument 1 ‘bean’.

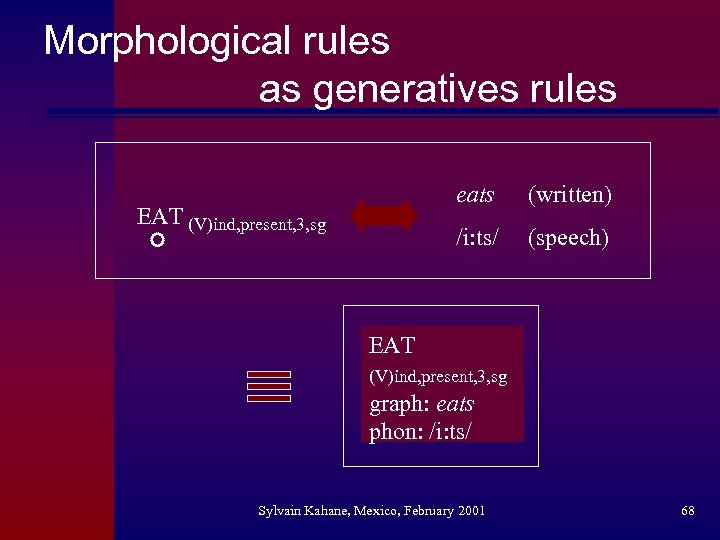

Morphological rules as generative rules
Correspondence rule: EAT (V)ind, present, 3, sg corresponds to the written form eats and the spoken form /i:ts/.
Generative rule: EAT (V)ind, present, 3, sg [graph: eats, phon: /i:ts/].
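A generative morphological rule can be pictured as a function from a lexeme and its grammemes to a pair (graph, phon). A minimal Python sketch, assuming a hypothetical stem table and a single toy suffix rule (-s for 3sg present indicative verbs and for plural nouns); English allomorphy is deliberately ignored, and `STEMS`, `takes_s` and `inflect` are illustrative names.

```python
# Hypothetical stem table: lexeme -> (written stem, phonological stem)
STEMS = {"EAT": ("eat", "i:t"), "BEAN": ("bean", "bi:n"), "RED": ("red", "red")}


def takes_s(cat, grammemes):
    """Toy suffix rule: 3sg present indicative verbs and plural nouns take -s."""
    if cat == "V":
        return {"ind", "present", "3", "sg"} <= grammemes
    if cat == "N":
        return "pl" in grammemes
    return False


def inflect(lexeme, cat, grammemes):
    """Return the (graph, phon) pair for a lexeme and a set of grammemes."""
    graph, phon = STEMS[lexeme]
    if takes_s(cat, grammemes):
        graph, phon = graph + "s", phon + "s"
    return graph, f"/{phon}/"


print(inflect("EAT", "V", {"ind", "present", "3", "sg"}))  # ('eats', '/i:ts/')
```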

Combination of modules

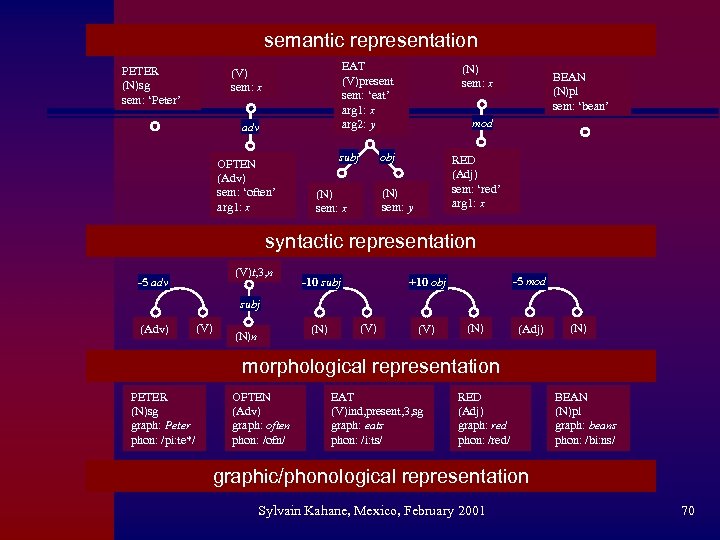

semantic representation
– semantic rules: EAT (V)present [sem: ‘eat’, arg 1: x, arg 2: y] with subj (N) [sem: x] and obj (N) [sem: y]; OFTEN (Adv) [sem: ‘often’, arg 1: x] as adv dependent of (V) [sem: x]; RED (Adj) [sem: ‘red’, arg 1: x] as mod dependent of (N) [sem: x]; PETER (N)sg [sem: ‘Peter’]; BEAN (N)pl [sem: ‘bean’]
syntactic representation
– syntactic rules: agreement (V)t, 3, n with subj (N)n; word order adv -5, subj -10 and obj +10 relative to (V), mod -5 relative to (N)
morphological representation
– morphological rules: PETER (N)sg [graph: Peter, phon: /pi:te*/]; OFTEN (Adv) [graph: often, phon: /ofn/]; EAT (V)ind, present, 3, sg [graph: eats, phon: /i:ts/]; RED (Adj) [graph: red, phon: /red/]; BEAN (N)pl [graph: beans, phon: /bi:ns/]
graphic/phonological representation
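Stacking modules amounts to composing their correspondences: a meaning corresponds to a text whenever some chain of intermediate representations links them. A minimal Python sketch of relational composition, assuming placeholder labels (hypothetical data) for whole representations; composing supercorrespondences would additionally compose the maps, which this sketch omits.

```python
from functools import reduce


def compose(r1, r2):
    """Relational composition: (a, c) whenever (a, b) is in r1 and (b, c) in r2."""
    return {(a, c) for (a, b) in r1 for (b2, c) in r2 if b == b2}


# Placeholder labels stand for whole representations (hypothetical data).
semantic_module = {("SemR", "SyntR1"), ("SemR", "SyntR2")}  # two syntactic paraphrases
syntactic_module = {("SyntR1", "MorphR1"), ("SyntR2", "MorphR2")}
morphological_module = {("MorphR1", "Text1"), ("MorphR2", "Text2")}

language = reduce(compose, [semantic_module, syntactic_module, morphological_module])
print(sorted(language))  # [('SemR', 'Text1'), ('SemR', 'Text2')]
```

Because each module may relate one structure to several, the composite is many-to-many, as Postulate 1 requires.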

Analysis
Two main strategies:
– Horizontal analysis (module after module): tagging, shallow parsing, deep analysis
– Vertical analysis (word after word): lexicalization
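The two strategies differ in the order in which (module, word) steps are taken. A minimal Python sketch that models only this processing order, not the analysis itself; `WORDS`, `MODULES`, `horizontal` and `vertical` are hypothetical names.

```python
WORDS = ["Peter", "often", "eats", "red", "beans"]
# Modules in the order used for analysis (from the text upwards).
MODULES = ["morphological module", "syntactic module", "semantic module"]


def horizontal(words, modules):
    """Module after module: a module sees the whole sentence before the next one starts."""
    return [(module, word) for module in modules for word in words]


def vertical(words, modules):
    """Word after word: every module handles a word before the next word is read."""
    return [(module, word) for word in words for module in modules]


h, v = horizontal(WORDS, MODULES), vertical(WORDS, MODULES)
print(h[:2])  # [('morphological module', 'Peter'), ('morphological module', 'often')]
print(v[:2])  # [('morphological module', 'Peter'), ('syntactic module', 'Peter')]
print(set(h) == set(v))  # True: same steps, different order
```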

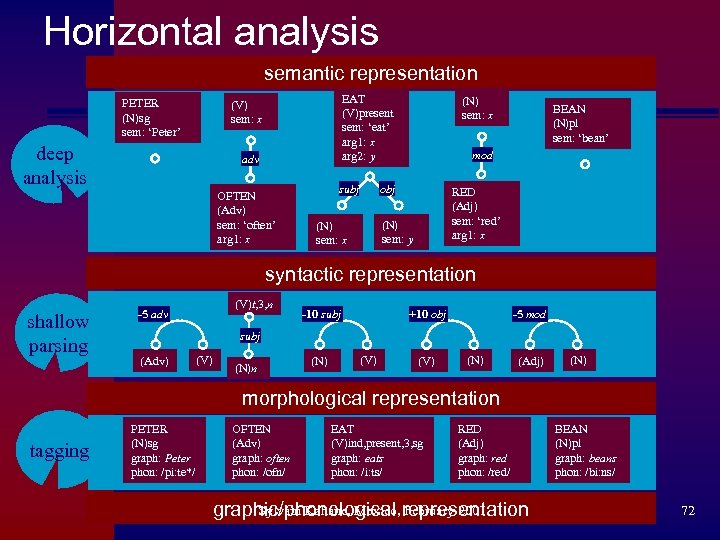

Horizontal analysis
The rules of the combined modules are applied one module at a time, from the graphic/phonological representation upwards:
– tagging: graphic/phonological representation to morphological representation (PETER (N)sg, OFTEN (Adv), EAT (V)ind, present, 3, sg, RED (Adj), BEAN (N)pl)
– shallow parsing: morphological representation to syntactic representation (subj, adv, obj and mod dependencies, with agreement)
– deep analysis: syntactic representation to semantic representation (‘eat’, ‘often’ and ‘red’ with their arguments ‘Peter’ and ‘bean’)

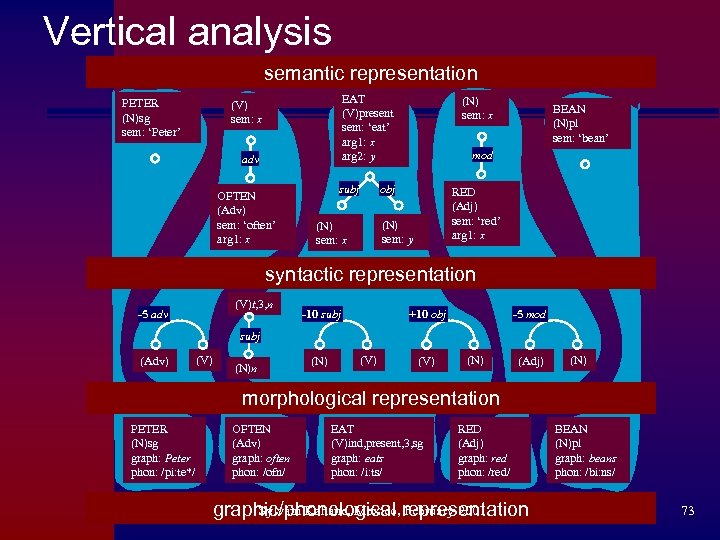

Vertical analysis
The same semantic, syntactic and morphological rules are applied word after word: for each word (Peter, often, eats, red, beans), the rules of all modules that concern it are applied together before moving to the next word.

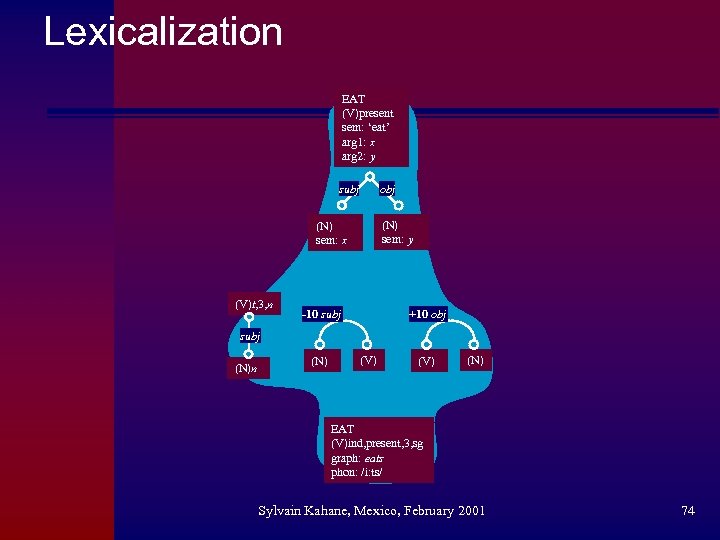

Lexicalization
The rules of the three modules that concern EAT:
– semantic rule: EAT (V)present [sem: ‘eat’, arg 1: x, arg 2: y] with subj (N) [sem: x] and obj (N) [sem: y]
– syntactic rules: agreement (V)t, 3, n with subj (N)n; subj at -10 and obj at +10
– morphological rule: EAT (V)ind, present, 3, sg [graph: eats, phon: /i:ts/]

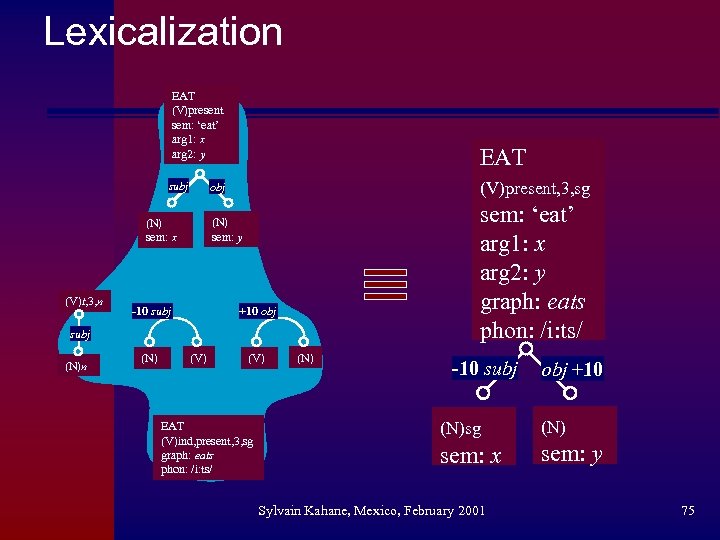

Lexicalization
Grouping the semantic, syntactic and morphological rules of EAT gives one lexicalized elementary structure for eats: EAT (V)present, 3, sg [sem: ‘eat’, arg 1: x, arg 2: y; graph: eats; phon: /i:ts/] with a subj dependent (N)sg [sem: x] at -10 and an obj dependent (N) [sem: y] at +10.
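Grouping the rules of a word can be pictured as unifying them into one nested feature structure. A minimal Python sketch, assuming a hypothetical dict encoding of the four rules for EAT; reading n in the agreement rule as the number shared by verb and subject, and instantiating it as sg for eats, is our interpretation. `unify` and the rule names are illustrative.

```python
from functools import reduce


def unify(a, b):
    """Unify nested feature structures (dicts of atoms or dicts); None on a clash."""
    if a is None or b is None:
        return None
    if isinstance(a, dict) and isinstance(b, dict):
        out = dict(a)
        for key, value in b.items():
            if key in out:
                merged = unify(out[key], value)
                if merged is None:
                    return None
                out[key] = merged
            else:
                out[key] = value
        return out
    return a if a == b else None


# Hypothetical encodings of the modular rules that mention EAT.
semantic_rule = {"lex": "EAT", "cat": "V", "tense": "present",
                 "sem": "eat", "arg1": "x", "arg2": "y",
                 "subj": {"cat": "N", "sem": "x"}, "obj": {"cat": "N", "sem": "y"}}
word_order_rules = {"cat": "V", "subj": {"cat": "N", "d": -10}, "obj": {"cat": "N", "d": 10}}
agreement_rule = {"cat": "V", "pers": 3, "num": "sg", "subj": {"num": "sg"}}  # n = sg
morphological_rule = {"lex": "EAT", "cat": "V", "mood": "ind", "tense": "present",
                      "pers": 3, "num": "sg", "graph": "eats", "phon": "/i:ts/"}

eats = reduce(unify, [semantic_rule, word_order_rules, agreement_rule, morphological_rule])
print(eats["subj"])  # {'cat': 'N', 'sem': 'x', 'd': -10, 'num': 'sg'}
```

Writing both the modular rules and the lexicalized structure in one formalism is what lets the grouping be done mechanically.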

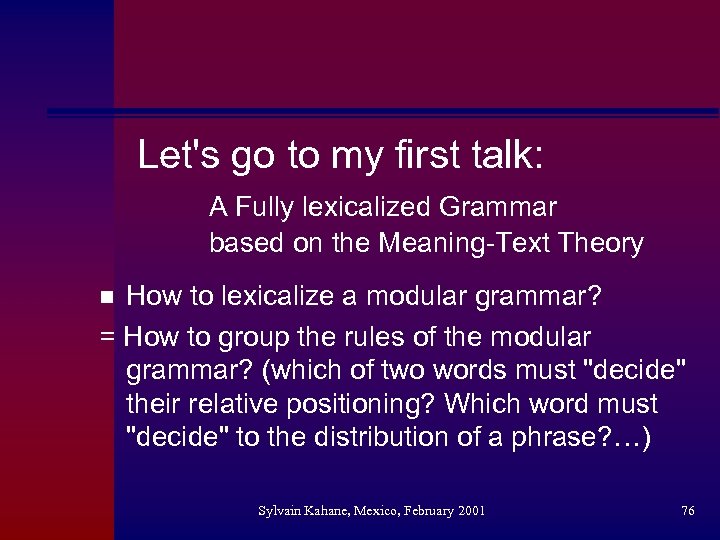

Let's go to my first talk: A Fully Lexicalized Grammar Based on the Meaning-Text Theory
How to lexicalize a modular grammar? = How to group the rules of the modular grammar? (Which of two words must "decide" their relative positioning? Which word must "decide" the distribution of a phrase? …)

Conclusion
MTT provides a clear separation of semantic, syntactic and morphological information (modular grammar).
Comparison between correspondence grammars and generative grammars:
– Linguistics needs grammars that generate product structures and define (super)correspondences.
– Two grammars are strongly equivalent iff they define the same (super)correspondence.

Conclusion
Get a fully lexicalized grammar from a modular grammar (Vijay-Shanker 1992, Kasper et al. 1995, Candito 1996 …)
– Advantage: the modular grammar and the fully lexicalized grammar are written in the same formalism
→ lexicalization in several ways
→ partially lexicalized grammars
Cognitive viewpoint: the modularity of the grammar does not imply that synthesis or analysis proceeds module after module
– Strategies between the horizontal and vertical strategies
