8ce56d6ef5164a6b1b2c8e0efdd84d5e.ppt

- Number of slides: 84

What Happens When Processing, Storage, and Bandwidth Are Free and Infinite?

Jim Gray, Microsoft Research

Outline

- Clusters of hardware CyberBricks
  - All nodes are very intelligent
- Software CyberBricks
  - A standard way to interconnect intelligent nodes
- What next?
  - Processing migrates to where the power is
    - Disk, network, and display controllers have a full-blown OS
    - Send RPCs (SQL, Java, HTTP, DCOM, CORBA) to them
    - The computer is a federated distributed system.

When Computers & Communication Are Free

- The traditional computer industry is 0 B$/year
- All the costs are in
  - Content (good)
  - System management (bad)
    - A vendor claims it costs 8 $/MB/year to manage disk storage.
      - => A WebTV (1 GB drive) costs 8,000 $/year to manage!
      - => A 10 PB DB costs 80 billion $/year to manage!
    - Automatic management is ESSENTIAL
- In the meantime…
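The management-cost arithmetic above is easy to check. A minimal sketch, assuming decimal units (1 GB = 1,000 MB, 1 PB = 10^9 MB) and the vendor's flat 8 $/MB/year rate:

```python
# Vendor's quoted cost to manage disk storage.
DOLLARS_PER_MB_YEAR = 8

# Decimal units (an assumption): 1 GB = 10**3 MB, 1 PB = 10**9 MB.
MB_PER_GB = 10**3
MB_PER_PB = 10**9


def annual_management_cost(megabytes: float, rate: float = DOLLARS_PER_MB_YEAR) -> float:
    """Dollars per year to manage `megabytes` of disk at `rate` $/MB/year."""
    return megabytes * rate


webtv = annual_management_cost(1 * MB_PER_GB)     # 1 GB WebTV drive
big_db = annual_management_cost(10 * MB_PER_PB)   # 10 PB database

print(f"WebTV (1 GB): {webtv:,} $/year")           # 8,000 $/year
print(f"10 PB DB: {big_db / 1e9:.0f} B$/year")     # 80 B$/year
```

The cost scales linearly with capacity, so at a fixed per-MB rate every 1,000 x growth in storage is a 1,000 x growth in management cost.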

1980 Rule of Thumb

- You need a systems programmer per MIPS
- You need a data administrator per 10 GB

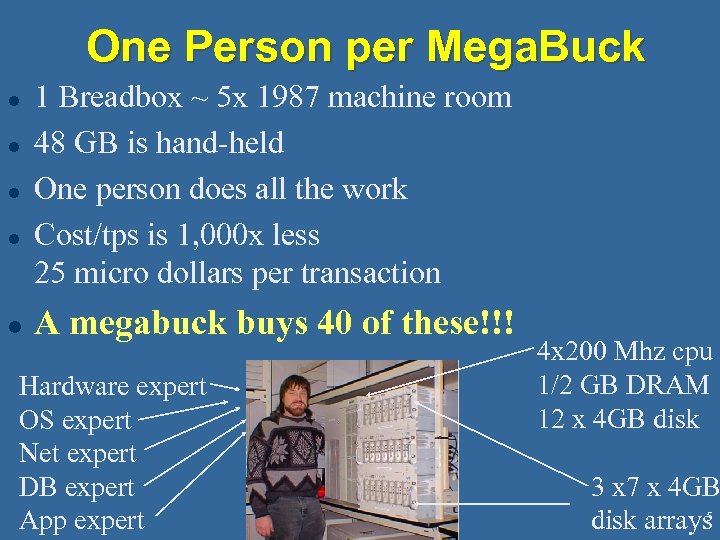

One Person per MegaBuck

- 1 breadbox ~ 5 x the 1987 machine room
- 48 GB is hand-held
- One person does all the work (hardware, OS, net, DB, and app expert)
- Cost/tps is 1,000 x less: 25 micro dollars per transaction
- A megabuck buys 40 of these!!!
- Configuration: 4 x 200 MHz CPUs, 1/2 GB DRAM, 12 x 4 GB disks, 3 x 7 x 4 GB disk arrays

All God’s Children Have Clusters! Buying Computing by the Slice

- People are buying computers by the gross
  - After all, they only cost 1 k$/slice!
- Clustering them together

A Cluster Is a Cluster

- It’s so natural, even mainframes cluster!
- Looking closer at usage patterns, a few models emerge
- Looking closer at sites, hierarchies, bunches, and functional specialization emerge
- Which are the roses? Which are the briars?

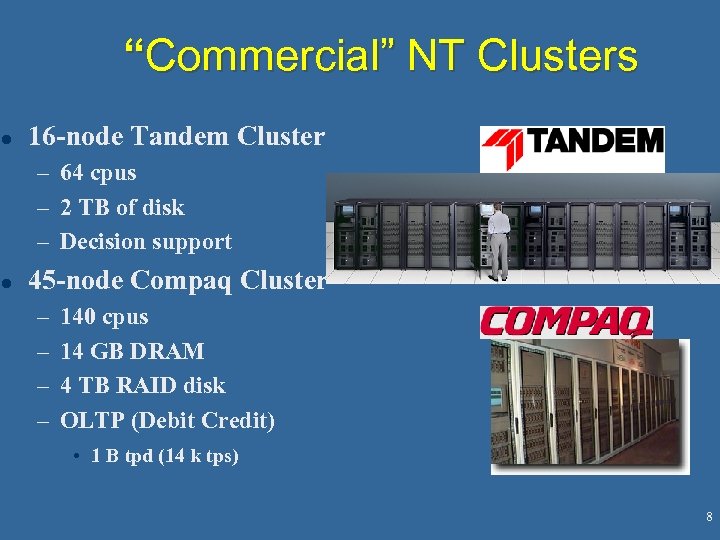

“Commercial” NT Clusters

- 16-node Tandem cluster
  - 64 CPUs
  - 2 TB of disk
  - Decision support
- 45-node Compaq cluster
  - 140 CPUs
  - 14 GB DRAM
  - 4 TB RAID disk
  - OLTP (DebitCredit)
    - 1 B tpd (14 k tps)

Tandem Oracle/NT

- 27,383 tpmC
- 71.50 $/tpmC
- 4 x 6 CPUs
- 384 disks = 2.7 TB
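The two benchmark figures imply a third. TPC-C price/performance ($/tpmC) is the price of the benchmarked configuration divided by its throughput, so multiplying the two numbers back recovers that price. A quick check (the variable names are illustrative):

```python
# TPC-C price/performance = priced-configuration cost / throughput (tpmC),
# so cost = tpmC * ($/tpmC).
TPMC = 27_383               # throughput from the slide
DOLLARS_PER_TPMC = 71.50    # price/performance from the slide

implied_system_price = TPMC * DOLLARS_PER_TPMC
print(f"Implied priced-configuration cost: ${implied_system_price / 1e6:.2f} M")
```

The result is about $1.96 million for a 24-CPU, 2.7 TB configuration.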

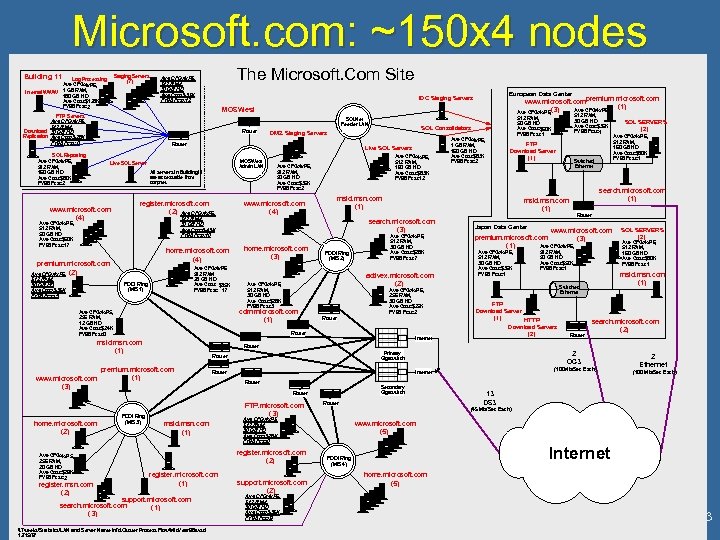

Microsoft.com: ~150 x 4 Nodes

[Network diagram, dated 12/15/97: the Microsoft.com site across Building 11 (internal WWW, staging, and log-processing servers; all accessible from corpnet), MOSWest, and the Japan and European data centers. Server groups (www, home, premium, register, search, support, msid.msn.com, activex, cdm, FTP and HTTP download, SQL, SQL consolidation and reporting) are 4-way Pentium or Pentium Pro boxes with 256 MB to 1 GB RAM and 12 to 180 GB of disk, costing about 24 K$ to 128 K$ each, linked by FDDI rings, switched Ethernet, primary and secondary Gigaswitches, and DS3 and OC3 Internet lines.]

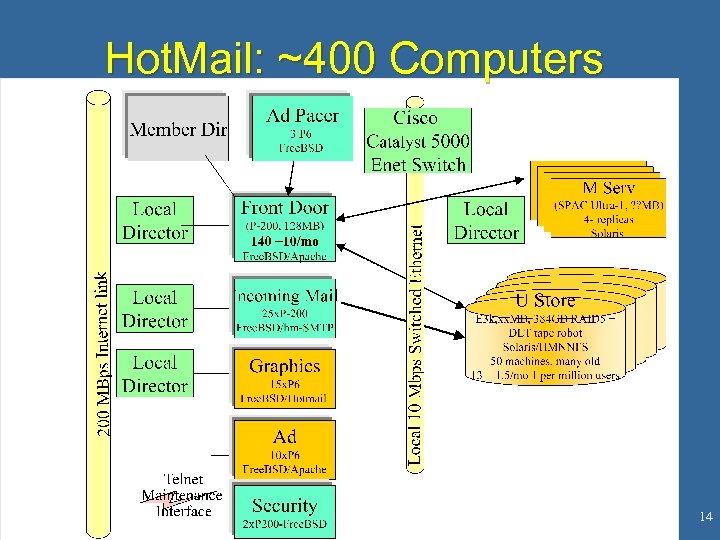

HotMail: ~400 Computers

Inktomi (HotBot), WebTV: > 200 Nodes

- Inktomi: ~250 UltraSparcs
  - Web crawl
  - Index the crawled web and save the index
  - Return search results on demand
  - Track ads and click-thrus
  - ACID vs. BASE (Basic Availability, Serialized Eventually)
- WebTV
  - ~200 UltraSparcs
    - Render pages, provide email
  - ~4 Network Appliance NFS file servers
  - A large Oracle app tracking customers

Loki: Pentium Clusters for Science

http://loki-www.lanl.gov/

- 16 Pentium processors x 5 Fast Ethernet interfaces + 2 GB RAM + 50 GB disk + 2 Fast Ethernet switches + Linux = 1.2 real Gflops for $63,000 (but that is the 1996 price)
- The Beowulf project is similar: http://cesdis.gsfc.nasa.gov/pub/people/becker/beowulf.html
- Scientists want cheap mips.

Your Tax Dollars at Work: ASCI for Stockpile Stewardship

- Intel/Sandia: 9000 x 1-node PPro
- LLNL/IBM: 512 x 8 PowerPC (SP2)
- LNL/Cray: ?
- Maui Supercomputer Center
  - 512 x 1 SP2

Berkeley NOW (Network of Workstations) Project

http://now.cs.berkeley.edu/

- 105 nodes
  - Sun UltraSparc 170, 128 MB, 2 x 2 GB disk
  - Myrinet interconnect (2 x 160 MBps per node)
  - SBus (30 MBps) limited
- GLUNIX layer above Solaris
- Inktomi (HotBot search)
- NAS Parallel Benchmarks
- Crypto cracker
- Sort 9 GB per second

Wisconsin COW

- 40 UltraSparcs
- 64 MB + 2 x 2 GB disk + Myrinet
- SunOS
- Used as a compute engine

Andrew Chien’s JBOB

http://www-csag.cs.uiuc.edu/individual/achien.html

- 48 nodes
  - 36 HP Kayak boxes: 2 x PII, 128 MB, 1 disk
  - 10 Compaq Workstation 6000s: 2 x PII, 128 MB, 1 disk
- 32 Myrinet-connected & 16 ServerNet-connected
- Operational
- All running NT

NCSA Cluster

- The National Center for Supercomputing Applications, University of Illinois @ Urbana
- 500 Pentium CPUs, 2 k disks, SAN
- Compaq + HP + Myricom
- A supercomputer for 3 M$
- Classic Fortran/MPI programming
- NT + DCOM programming model

The Bricks of Cyberspace: 4B PCs (1 Bips, .1 GB DRAM, 10 GB disk, 1 Gbps net, B = G)

- Cost 1,000 $
- Come with
  - NT
  - DBMS
  - High-speed net
  - System management
  - GUI / OOUI
  - Tools
- Compatible with everyone else
- CyberBricks

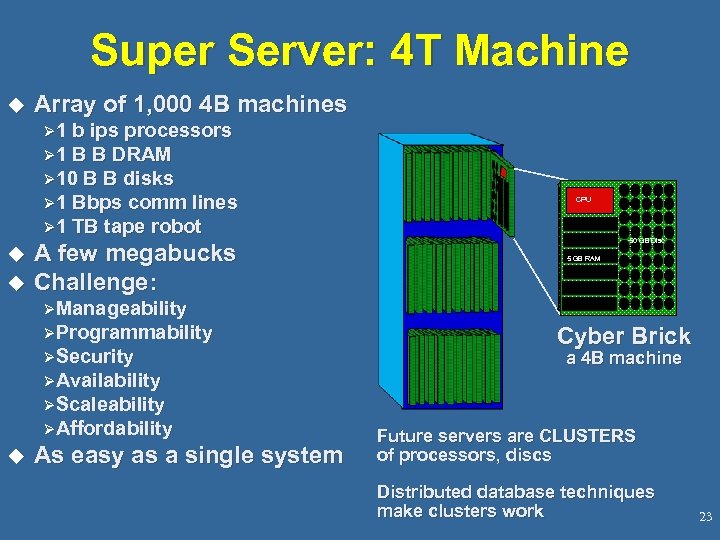

Super Server: 4T Machine

- Array of 1,000 4B machines
  - 1 Bips processors
  - 1 BB DRAM
  - 10 BB disks
  - 1 Bbps comm lines
  - 1 TB tape robot
- A few megabucks
- Challenge:
  - Manageability
  - Programmability
  - Security
  - Availability
  - Scalability
  - Affordability
- As easy as a single system
- [Figure labels: CyberBrick, a 4B machine; CPU; 5 GB RAM; 50 GB disc]
- Future servers are CLUSTERS of processors and discs
- Distributed database techniques make clusters work

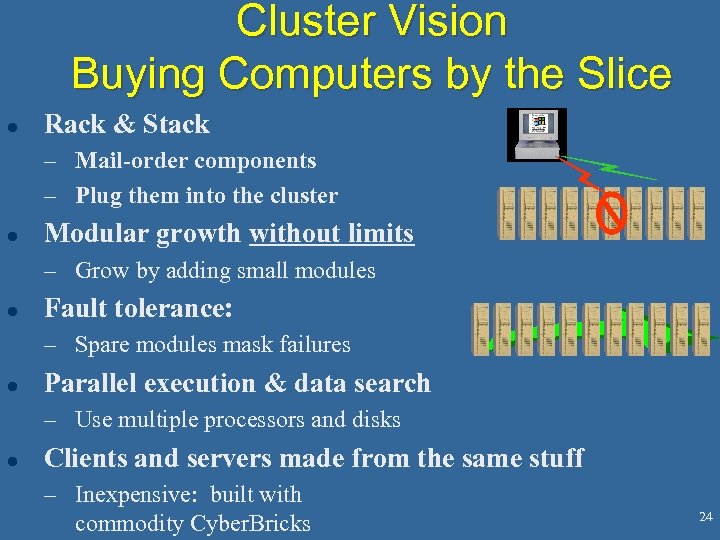

Cluster Vision: Buying Computers by the Slice

- Rack & stack
  - Mail-order components
  - Plug them into the cluster
- Modular growth without limits
  - Grow by adding small modules
- Fault tolerance:
  - Spare modules mask failures
- Parallel execution & data search
  - Use multiple processors and disks
- Clients and servers made from the same stuff
  - Inexpensive: built with commodity CyberBricks

Nostalgia: Behemoth in the Basement

- Today’s PC is yesterday’s supercomputer
- Can use LOTS of them
- Main apps changed:
  - scientific -> commercial -> web
  - Web & transaction servers
  - Data mining, web farming

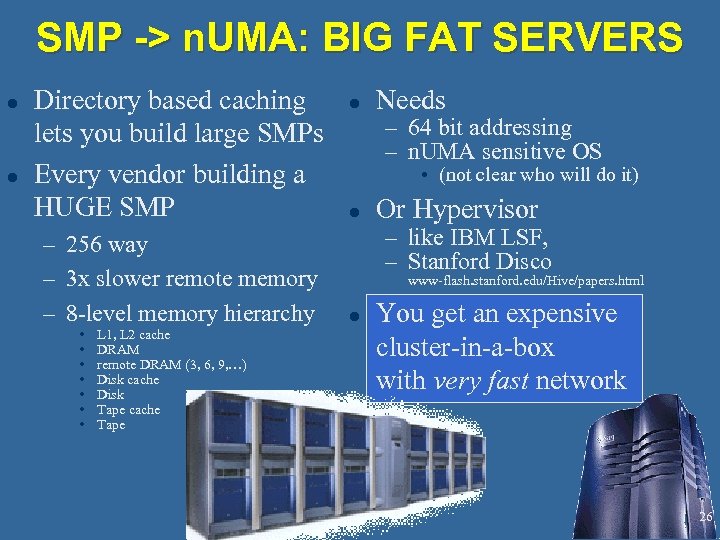

SMP -> NUMA: BIG FAT SERVERS

- Directory-based caching lets you build large SMPs
- Every vendor is building a HUGE SMP
  - 256-way
  - 3 x slower remote memory
  - 8-level memory hierarchy
    - L1, L2 cache
    - DRAM
    - remote DRAM (3, 6, 9, …)
    - Disk cache
    - Disk
    - Tape cache
    - Tape
- Needs
  - 64-bit addressing
  - A NUMA-sensitive OS
    - (not clear who will do it)
- Or a hypervisor
  - like IBM LSF,
  - Stanford Disco: www-flash.stanford.edu/Hive/papers.html
- You get an expensive cluster-in-a-box with a very fast network

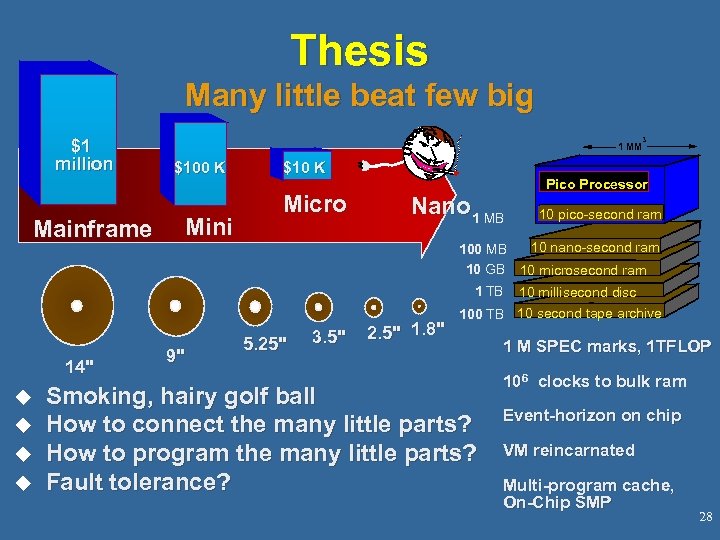

Thesis: Many Little Beat Few Big

- Computer classes: $1 million mainframe, $100 K mini, $10 K micro, nano, pico processor
- Storage hierarchy: 10 picosecond RAM (1 MB), 10 nanosecond RAM (100 MB), 10 microsecond RAM (10 GB), 10 millisecond disc (1 TB), 10 second tape archive (100 TB)
- Disc form factors: 14", 9", 5.25", 3.5", 2.5", 1.8"
- The pico processor is a "smoking, hairy golf ball"
  - 1 M SPECmarks, 1 TFLOP
  - 10^6 clocks to bulk RAM
  - Event horizon on chip
  - VM reincarnated
  - Multi-program cache, on-chip SMP
- Open questions:
  - How to connect the many little parts?
  - How to program the many little parts?
  - Fault tolerance?

A Hypothetical Question: Taking Things to the Limit

- Moore’s law: 100 x per decade
  - Exa-instructions per second in 30 years
  - Exa-bit memory chips
  - Exa-byte disks
- Gilder’s Law of the Telecosm: 3 x/year more bandwidth, 60,000 x per decade!
  - 40 Gbps per fiber today
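A short sketch of the compounding behind these two laws, using only the rates on the slide (100 x per decade, 3 x per year), plus the "4 x every 3 years" phrasing of Moore's law that appears later in the deck:

```python
def growth(factor_per_period: float, periods: float) -> float:
    """Compound growth: the per-period factor raised to the number of periods."""
    return factor_per_period ** periods


MOORE_PER_DECADE = 100   # Moore's law as stated on the slide
GILDER_PER_YEAR = 3      # Gilder's law: 3x bandwidth per year

moore_30_years = growth(MOORE_PER_DECADE, 3)   # three decades
gilder_decade = growth(GILDER_PER_YEAR, 10)    # ten years

# "4x better every 3 years" is essentially the same rate as 100x per decade.
moore_from_4x_per_3y = growth(4, 10 / 3)

print(f"Moore, 30 years: {moore_30_years:.0e}x")                  # 1e+06x
print(f"Gilder, 1 decade: {gilder_decade:,}x")                    # 59,049x
print(f"4x per 3 years over a decade: {moore_from_4x_per_3y:.0f}x")  # 102x
```

A factor of a million in 30 years is six orders of magnitude, and 3^10 = 59,049 is the "60,000 x per decade" on the slide.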

Grove’s Law

- Link bandwidth doubles every 100 years!
- Not much has happened to telephones lately
- Still twisted pair

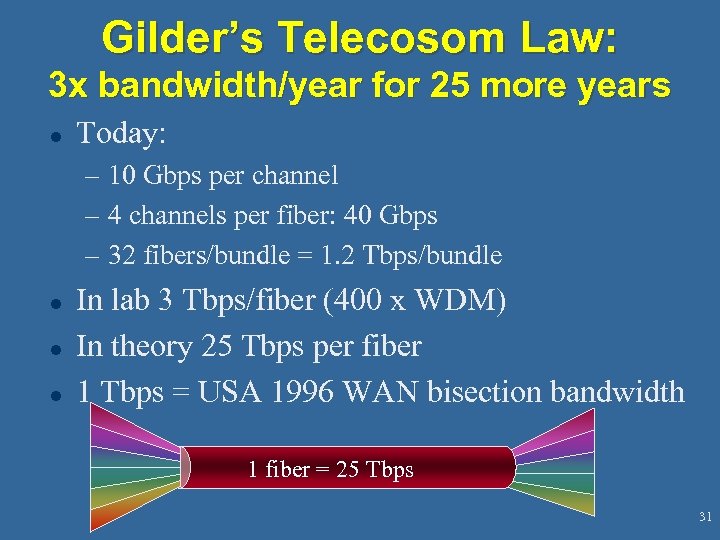

Gilder’s Telecosm Law: 3 x Bandwidth/Year for 25 More Years

- Today:
  - 10 Gbps per channel
  - 4 channels per fiber: 40 Gbps
  - 32 fibers/bundle = 1.2 Tbps/bundle
- In the lab: 3 Tbps/fiber (400 x WDM)
- In theory: 25 Tbps per fiber
- 1 Tbps = USA 1996 WAN bisection bandwidth
- 1 fiber = 25 Tbps
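The bundle figure is channel rate x channels per fiber x fibers per bundle. The sketch reproduces it (it comes out at 1.28 Tbps, which the slide rounds down to 1.2) and, as an illustrative assumption not made on the slide, asks how many years of 3 x/year growth would take a 40 Gbps fiber to the theoretical 25 Tbps:

```python
import math

GBPS_PER_CHANNEL = 10
CHANNELS_PER_FIBER = 4      # wavelength-division multiplexed channels
FIBERS_PER_BUNDLE = 32

gbps_per_fiber = GBPS_PER_CHANNEL * CHANNELS_PER_FIBER           # 40 Gbps
tbps_per_bundle = gbps_per_fiber * FIBERS_PER_BUNDLE / 1000      # 1.28 Tbps

# Assumption: per-fiber bandwidth itself grows 3x per year.
THEORETICAL_TBPS_PER_FIBER = 25
years_to_limit = math.log(THEORETICAL_TBPS_PER_FIBER * 1000 / gbps_per_fiber) / math.log(3)

print(f"{gbps_per_fiber} Gbps/fiber, {tbps_per_bundle:.2f} Tbps/bundle")
print(f"~{years_to_limit:.1f} years of 3x/year growth to reach 25 Tbps/fiber")
```

Under that assumption the per-fiber limit would be reached in under six years, so sustaining 3 x/year for decades would depend on more fibers and better switching as well as faster fibers.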

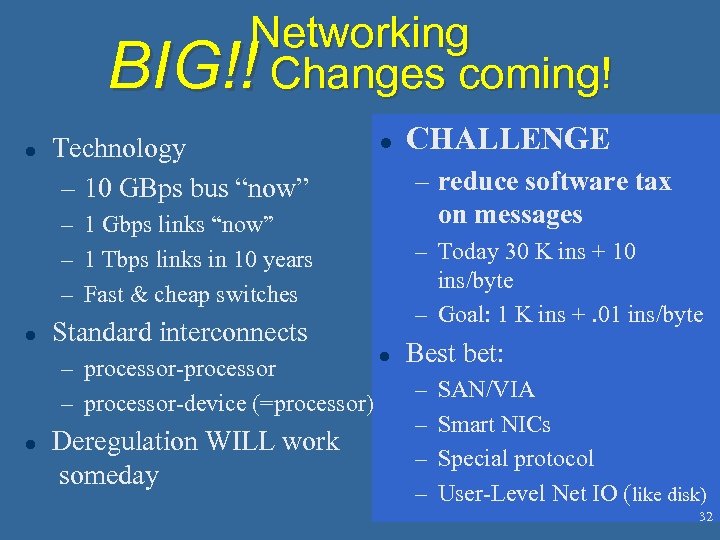

Networking: BIG!! Changes Coming!

- Technology
  - 10 GBps bus “now”
  - 1 Gbps links “now”
  - 1 Tbps links in 10 years
  - Fast & cheap switches
- Standard interconnects
  - processor-processor
  - processor-device (= processor)
- Deregulation WILL work someday
- CHALLENGE: reduce the software tax on messages
  - Today: 30 K ins + 10 ins/byte
  - Goal: 1 K ins + .01 ins/byte
- Best bet:
  - SAN/VIA
  - Smart NICs
  - Special protocol
  - User-level net IO (like disk)
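The "software tax" can be read as a linear cost model: instructions per message = fixed cost + per-byte cost x message size. A minimal sketch, assuming a hypothetical 10,000-byte message and the 40 MBps rate from the TCP/IP-vs-disk comparison, estimates the host instructions per second each model needs:

```python
# (fixed instructions per message, instructions per byte), from the slide
TODAY = (30_000, 10.0)
GOAL = (1_000, 0.01)


def instructions_per_message(nbytes: int, fixed: float, per_byte: float) -> float:
    """Host instructions spent sending one message of `nbytes` bytes."""
    return fixed + per_byte * nbytes


def instructions_per_second(bytes_per_s: float, msg_bytes: int, cost: tuple) -> float:
    """Host instructions/s needed to stream bytes_per_s in msg_bytes-sized messages."""
    messages_per_s = bytes_per_s / msg_bytes
    return messages_per_s * instructions_per_message(msg_bytes, *cost)


RATE = 40e6     # 40 MBps
MSG = 10_000    # hypothetical message size in bytes

today = instructions_per_second(RATE, MSG, TODAY)
goal = instructions_per_second(RATE, MSG, GOAL)
print(f"today: {today / 1e6:.0f} M ins/s, goal: {goal / 1e6:.1f} M ins/s")
```

At today's rates the host spends about 520 million instructions per second just moving data; the goal rates cut that to about 4.4 million. At this message size the per-byte term dominates, so shrinking only the fixed per-message cost would not be enough.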

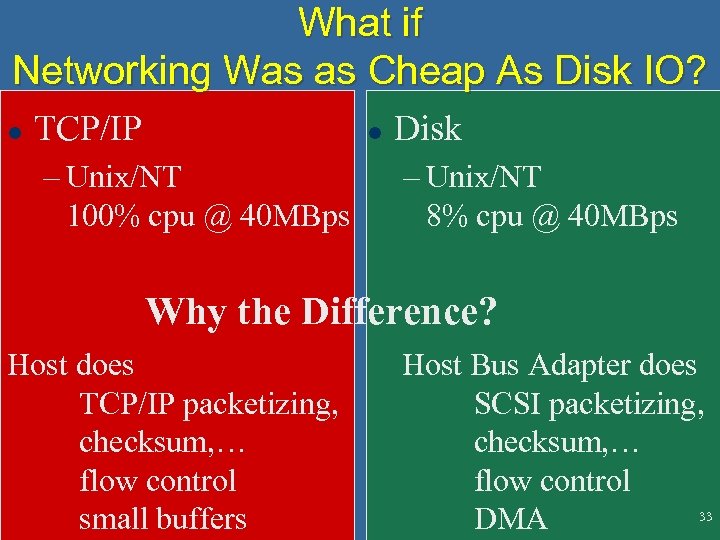

What if Networking Were as Cheap as Disk IO?

- TCP/IP
  - Unix/NT: 100% CPU @ 40 MBps
- Disk
  - Unix/NT: 8% CPU @ 40 MBps
- Why the difference?
  - The host does TCP/IP packetizing, checksum, … flow control, small buffers
  - The host bus adapter does SCSI packetizing, checksum, … flow control, DMA

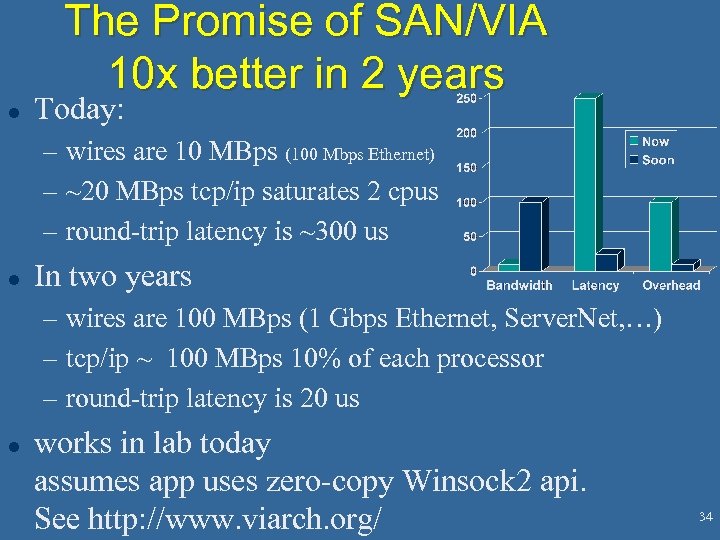

The Promise of SAN/VIA: 10 x Better in 2 Years

- Today:
  - wires are 10 MBps (100 Mbps Ethernet)
  - ~20 MBps TCP/IP saturates 2 CPUs
  - round-trip latency is ~300 us
- In two years:
  - wires are 100 MBps (1 Gbps Ethernet, ServerNet, …)
  - TCP/IP ~100 MBps at 10% of each processor
  - round-trip latency is 20 us
- Works in the lab today; assumes the app uses the zero-copy Winsock 2 API. See http://www.viarch.org/

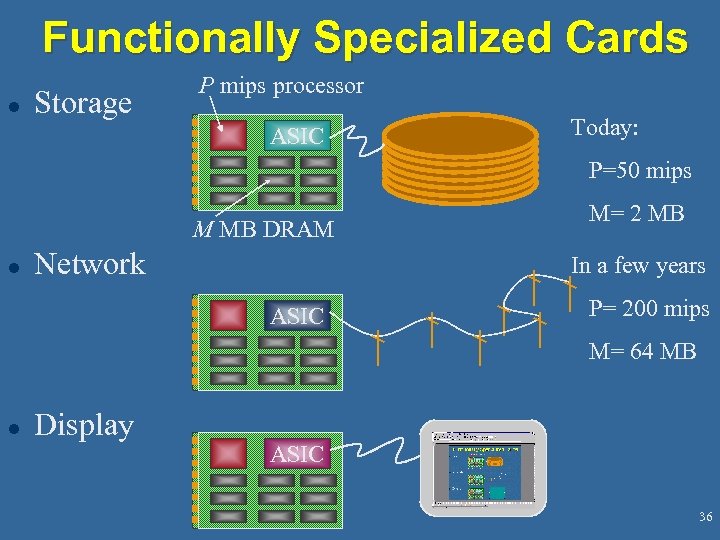

Functionally Specialized Cards

- Storage, network, and display cards: each is an ASIC with a P-mips processor and M MB of DRAM
- Today: P = 50 mips, M = 2 MB
- In a few years: P = 200 mips, M = 64 MB

It’s Already True of Printers: Peripheral = CyberBrick

- You buy a printer
- You get
  - several network interfaces
  - a PostScript engine
    - CPU,
    - memory,
    - software,
    - a spooler (soon)
  - and… a print engine.

System on a Chip

- Integrate processing with memory on one chip
  - chip is 75% memory now
  - 1 MB cache >> 1960 supercomputers
  - 256 Mb memory chip is 32 MB!
  - IRAM, CRAM, PIM, … projects abound
- Integrate networking with processing on one chip
  - system bus is a kind of network
  - ATM, FiberChannel, Ethernet, … logic on chip
  - Direct IO (no intermediate bus)
- Functionally specialized cards shrink to a chip.

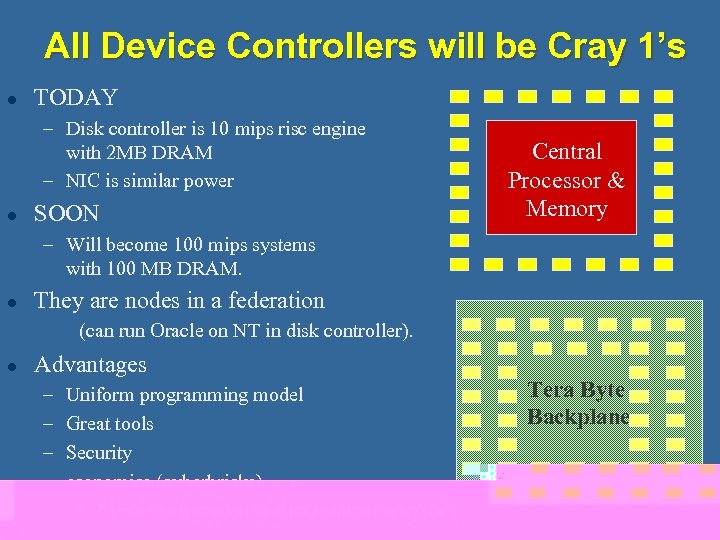

All Device Controllers Will Be Cray 1’s

- TODAY
  - Disk controller is a 10 mips RISC engine with 2 MB DRAM
  - NIC is of similar power
- SOON
  - Will become 100 mips systems with 100 MB DRAM
- They are nodes in a federation (can run Oracle on NT in the disk controller)
- Advantages
  - Uniform programming model
  - Great tools
  - Security
  - Economics (CyberBricks)
  - Move computation to data (minimize traffic)
- [Figure: central processor & memory on a terabyte backplane]

With Terabyte Interconnect and Supercomputer Adapters

- Processing is incidental to
  - Networking
  - Storage
  - UI
- The disk controller/NIC is
  - faster than the device
  - close to the device
  - able to borrow the device package & power
- So use idle capacity for computation.
- Run the app in the device.
- [Figure: terabyte backplane]

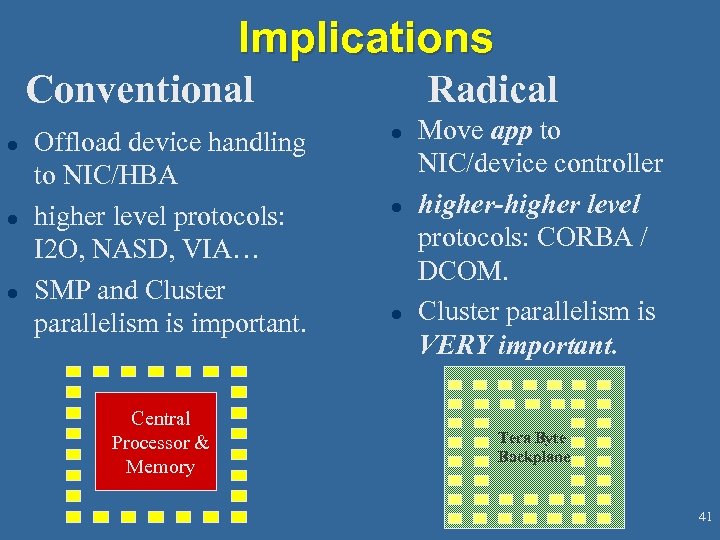

Implications

- Conventional
  - Offload device handling to the NIC/HBA
  - Higher-level protocols: I2O, NASD, VIA, …
  - SMP and cluster parallelism is important.
- Radical
  - Move the app to the NIC/device controller
  - Higher-higher-level protocols: CORBA / DCOM
  - Cluster parallelism is VERY important.
- [Figure: central processor & memory on a terabyte backplane]

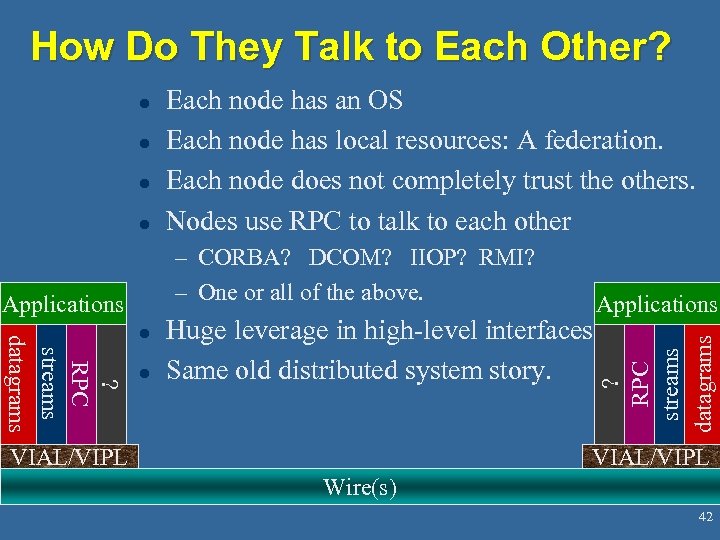

How Do They Talk to Each Other?

- Each node has an OS
- Each node has local resources: a federation
- Each node does not completely trust the others
- Nodes use RPC to talk to each other
  - CORBA? DCOM? IIOP? RMI?
  - One or all of the above.
- Huge leverage in high-level interfaces
- Same old distributed system story
- [Figure: two protocol stacks (applications; RPC?, streams, datagrams; VIAL/VIPL) joined by wire(s)]

Punch Line

- The huge clusters we saw are prototypes for this: a federation of functionally specialized nodes
- Each node shrinks to a “point” device with embedded processing
- Each node/device is autonomous
- Each talks a high-level protocol

Outline

- Hardware CyberBricks
  - All nodes are very intelligent
- Software CyberBricks
  - A standard way to interconnect intelligent nodes
- What next?
  - Processing migrates to where the power is
    - Disk, network, and display controllers have a full-blown OS
    - Send RPCs (SQL, Java, HTTP, DCOM, CORBA) to them
    - The computer is a federated distributed system.

Software CyberBricks: Objects!

- It’s a zoo
- Objects and 3-tier computing (transactions)
  - Give natural distribution & parallelism
  - Give remote management!
  - TP & Web: dispatch RPCs to a pool of object servers
- Components are a 1 B$ business today!

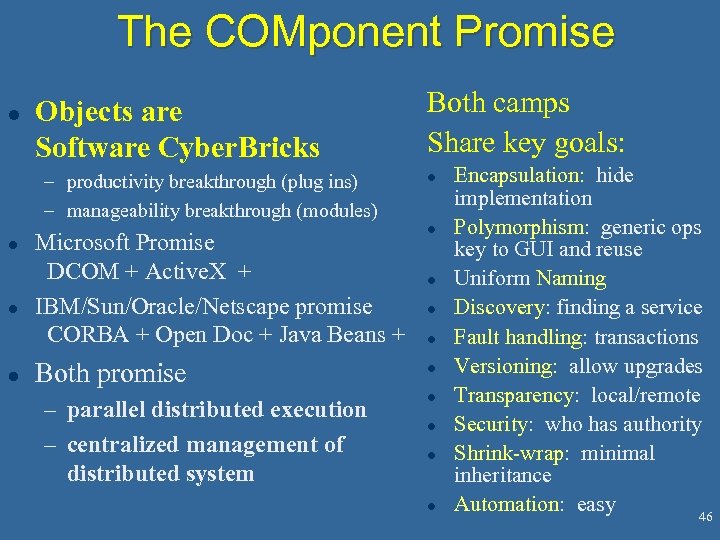

The COMponent Promise

- Objects are software CyberBricks
  - productivity breakthrough (plug-ins)
  - manageability breakthrough (modules)
- Microsoft promise: DCOM + ActiveX + …
- IBM/Sun/Oracle/Netscape promise: CORBA + OpenDoc + Java Beans + …
- Both promise
  - parallel distributed execution
  - centralized management of distributed systems
- Both camps share key goals:
  - Encapsulation: hide implementation
  - Polymorphism: generic ops, key to GUI and reuse
  - Uniform naming
  - Discovery: finding a service
  - Fault handling: transactions
  - Versioning: allow upgrades
  - Transparency: local/remote
  - Security: who has authority
  - Shrink-wrap: minimal inheritance
  - Automation: easy

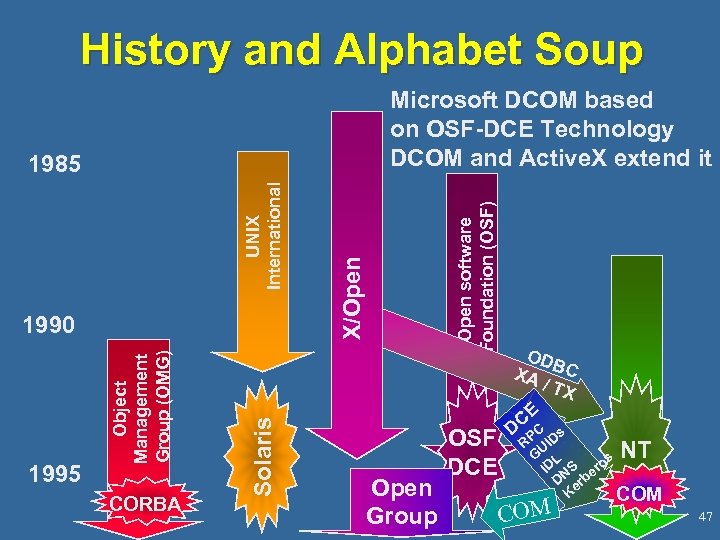

History and Alphabet Soup

- [Timeline figure, 1985 to 1995: Open Software Foundation (OSF), X/Open, UNIX International, Object Management Group (OMG), Open Group; OSF DCE (RPCs, GUIDs, IDLs, DNS, Kerberos), ODBC, XA / TX, CORBA, Solaris, COM, NT]
- Microsoft DCOM is based on OSF-DCE technology; DCOM and ActiveX extend it

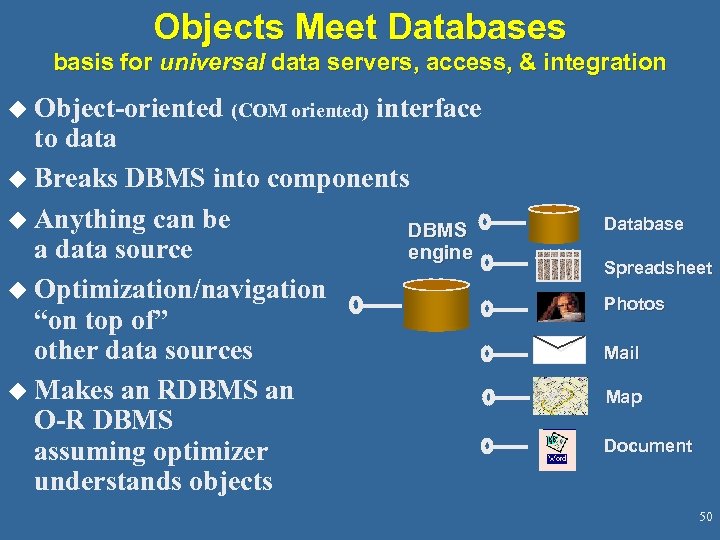

Objects Meet Databases: Basis for Universal Data Servers, Access, & Integration

- Object-oriented (COM-oriented) interface to data
- Breaks the DBMS into components
- Anything can be a data source
- Optimization/navigation “on top of” other data sources
- Makes an RDBMS an O-R DBMS, assuming the optimizer understands objects
- [Figure: a DBMS engine over data sources: database, spreadsheet, photos, mail, map, document]

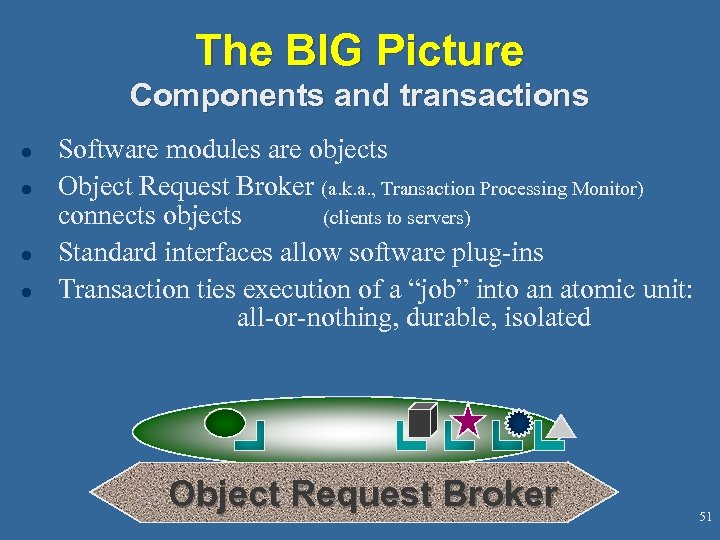

The BIG Picture: Components and Transactions

- Software modules are objects
- An Object Request Broker (a.k.a. Transaction Processing Monitor) connects objects (clients to servers)
- Standard interfaces allow software plug-ins
- A transaction ties execution of a “job” into an atomic unit: all-or-nothing, durable, isolated

The OO Points So Far

- Objects are software CyberBricks
- Object interconnect standards are emerging
- CyberBricks become federated systems.
- Next points:
  - put processing close to data
  - do parallel processing.

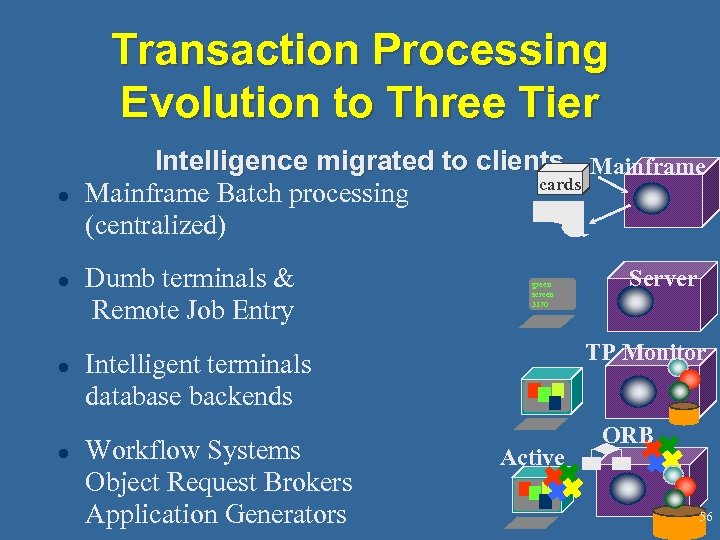

Transaction Processing Evolution to Three Tier: Intelligence Migrated to Clients

- Mainframe batch processing (centralized), fed by cards
- Dumb terminals & remote job entry (green-screen 3270) with a TP monitor
- Intelligent terminals with database backends
- Workflow systems, object request brokers, application generators (active clients, ORB, servers)

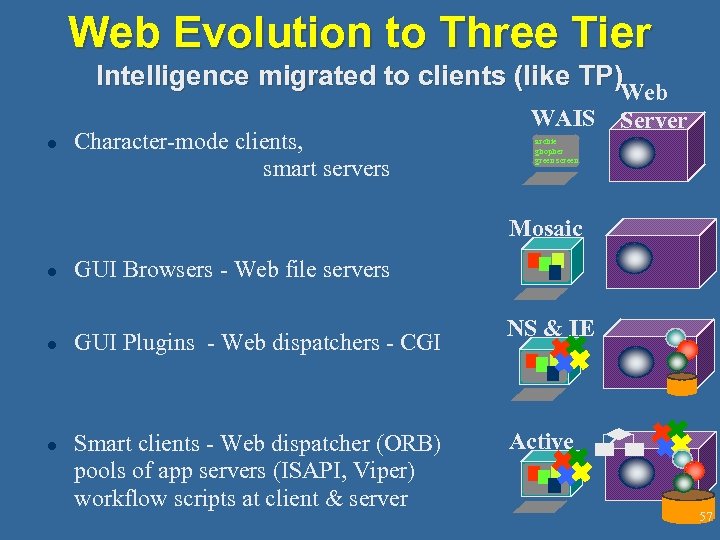

Web Evolution to Three Tier: Intelligence Migrated to Clients (like TP)

- Character-mode clients, smart servers (green screen; WAIS, archie, gopher)
- GUI browsers (Mosaic), web file servers
- GUI plugins, web dispatchers, CGI
- Smart clients (NS & IE, Active), web dispatcher (ORB), pools of app servers (ISAPI, Viper), workflow scripts at client & server

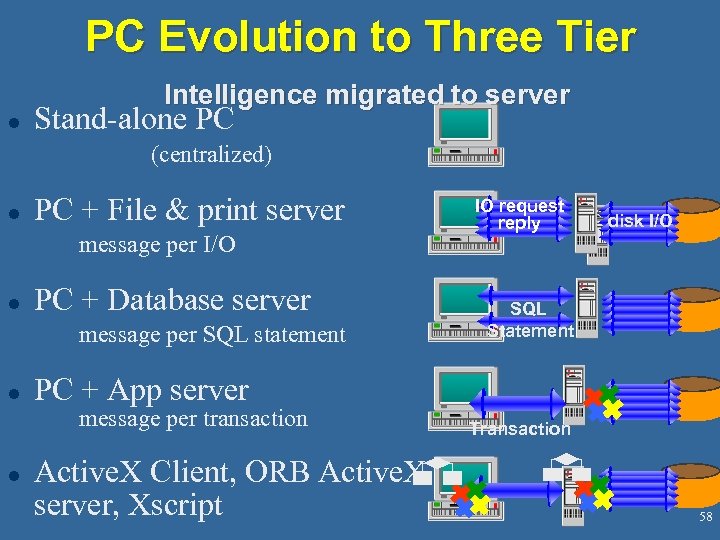

PC Evolution to Three Tier: Intelligence Migrated to Server

- Stand-alone PC (centralized)
- PC + file & print server: message per I/O
- PC + database server: message per SQL statement
- PC + app server: message per transaction (ActiveX client, ORB, ActiveX server, Xscript)

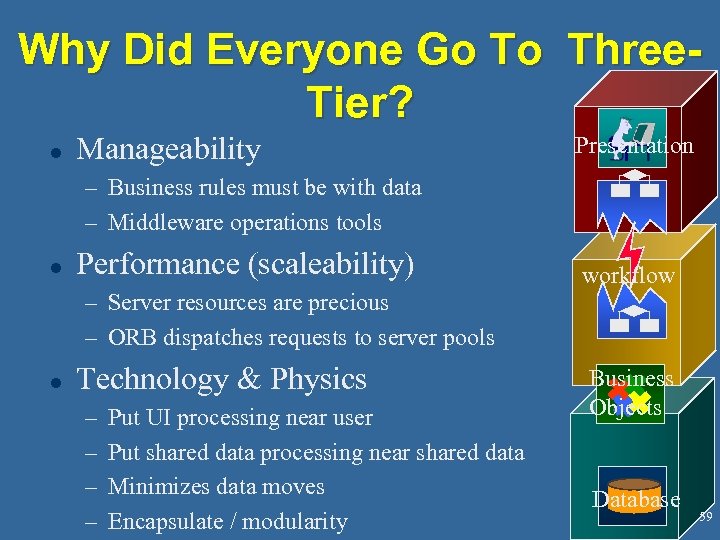

Why Did Everyone Go to Three-Tier?

- Manageability
  - Business rules must be with the data
  - Middleware operations tools
- Performance (scalability)
  - Server resources are precious
  - The ORB dispatches requests to server pools
- Technology & physics
  - Put UI processing near the user
  - Put shared-data processing near the shared data
  - Minimizes data moves
  - Encapsulate / modularity
- [Figure: tiers of presentation, workflow, business objects, database]

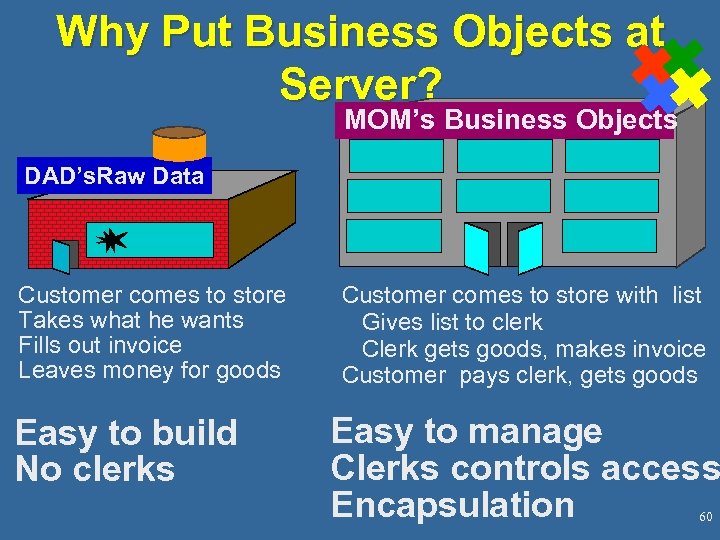

Why Put Business Objects at the Server?

- DAD’s raw data:
  - Customer comes to store
  - Takes what he wants
  - Fills out invoice
  - Leaves money for goods
  - Easy to build, no clerks
- MOM’s business objects:
  - Customer comes to store with list
  - Gives list to clerk
  - Clerk gets goods, makes invoice
  - Customer pays clerk, gets goods
  - Easy to manage; clerks control access (encapsulation)

The OO Points So Far

- Objects are software CyberBricks
- Object interconnect standards are emerging
- CyberBricks become federated systems.
- Put processing close to data
- Next point:
  - do parallel processing.

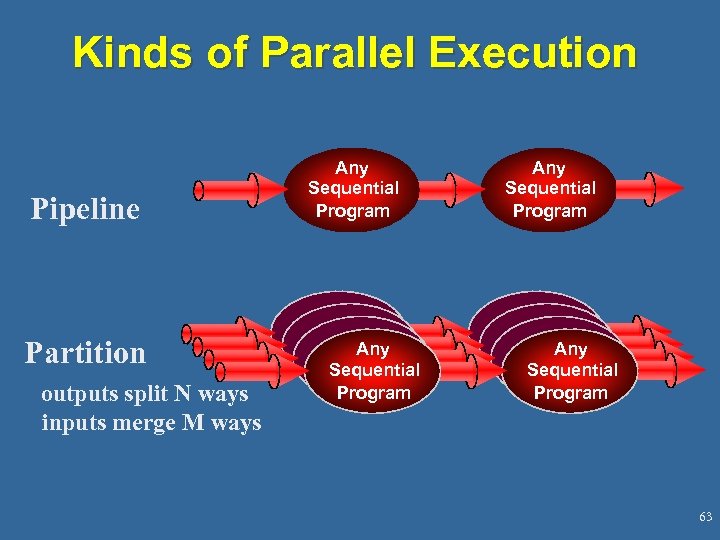

Kinds of Parallel Execution

- Pipeline: any sequential program feeds its output to another sequential program
- Partition: outputs split N ways, inputs merge M ways; each partition runs any sequential program
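Partition parallelism can be sketched in a few lines of Python. This is an illustration, not a database engine: the input is split N ways round-robin, an unmodified sequential function runs on each partition in a thread pool, and the outputs are merged (here M = 1, a simple concatenation). Names such as `partitioned_run` are hypothetical:

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def split(records: List[int], n: int) -> List[List[int]]:
    """Split the input N ways (round-robin here; hash or range would also work)."""
    return [records[i::n] for i in range(n)]


def partitioned_run(records: List[int], n: int,
                    program: Callable[[List[int]], List[int]]) -> List[int]:
    """Run the same sequential program on every partition, then merge the outputs."""
    parts = split(records, n)
    with ThreadPoolExecutor(max_workers=n) as pool:
        outputs = list(pool.map(program, parts))
    merged: List[int] = []
    for out in outputs:          # merge step: a simple concatenation
        merged.extend(out)
    return merged


def square_evens(part: List[int]) -> List[int]:
    """Any sequential program: it never knows it is running in parallel."""
    return [x * x for x in part if x % 2 == 0]


result = partitioned_run(list(range(10)), n=3, program=square_evens)
print(sorted(result))   # [0, 4, 16, 36, 64]
```

Threads keep the example self-contained; because of CPython's global interpreter lock they show the dataflow shape rather than a real speedup, for which processes or separate nodes would be used.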

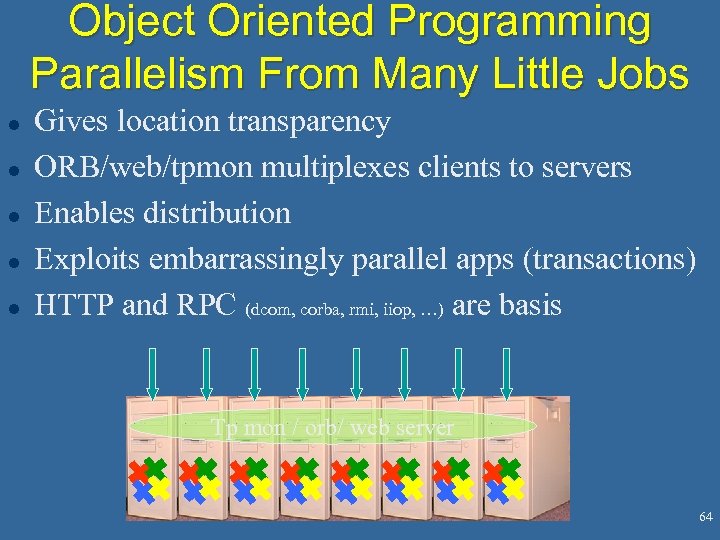

Object-Oriented Programming: Parallelism from Many Little Jobs

- Gives location transparency
- The ORB/web server/TP monitor multiplexes clients to servers
- Enables distribution
- Exploits embarrassingly parallel apps (transactions)
- HTTP and RPC (DCOM, CORBA, RMI, IIOP, …) are the basis
- [Figure: clients multiplexed by a TP monitor / ORB / web server]
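The ORB/TP-monitor pattern of multiplexing many clients onto a small server pool can be sketched with a shared request queue. This is a hypothetical, in-process stand-in for an ORB or TP monitor: 100 "clients" enqueue small independent requests, and 4 pooled server threads each take whichever request is next:

```python
import queue
import threading

NUM_SERVERS = 4      # hypothetical pool size
NUM_CLIENTS = 100    # many more clients than servers

request_queue = queue.Queue()   # the dispatcher's shared request queue
replies = {}                    # client id -> (server that handled it, result)
replies_lock = threading.Lock()


def server(name: str) -> None:
    """A pooled server: take the next request from any client, handle it, reply."""
    while True:
        item = request_queue.get()
        if item is None:            # shutdown sentinel
            return
        client_id, amount = item
        result = amount * 2         # stand-in for a small, independent transaction
        with replies_lock:
            replies[client_id] = (name, result)


pool = [threading.Thread(target=server, args=(f"server-{i}",)) for i in range(NUM_SERVERS)]
for t in pool:
    t.start()

for client_id in range(NUM_CLIENTS):    # the dispatcher multiplexes clients onto the pool
    request_queue.put((client_id, client_id))
for _ in pool:
    request_queue.put(None)             # one sentinel per server, after all requests
for t in pool:
    t.join()

print(len(replies), "requests served by a pool of", NUM_SERVERS, "servers")
```

Because each request is small and independent (the embarrassingly parallel case), any server can take any request, and adding servers to the pool adds throughput without changing client code.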

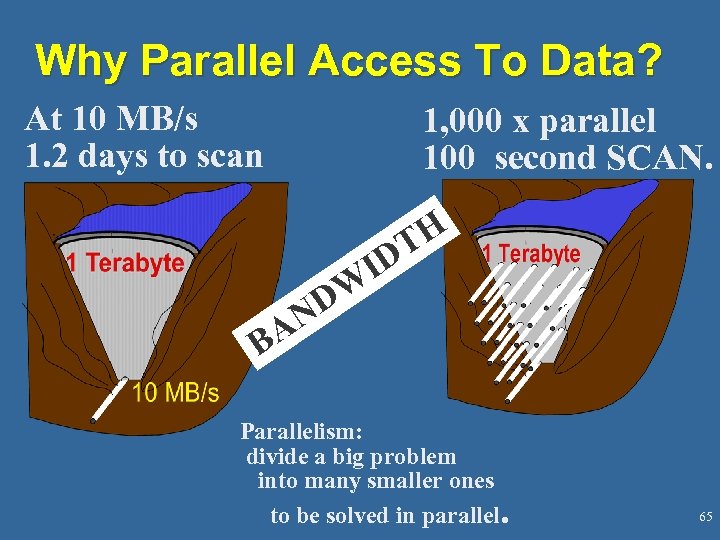

Why Parallel Access to Data?

- At 10 MB/s: 1.2 days to scan
- 1,000 x parallel: a 100 second scan
- Parallelism: divide a big problem into many smaller ones to be solved in parallel.
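The slide does not state the data size, but 1.2 days at 10 MB/s corresponds to roughly 1 TB, so this sketch assumes a 1 TB (10^12 byte) scan:

```python
DATA_BYTES = 10**12         # assumed data size: 1 TB (decimal)
RATE = 10 * 10**6           # 10 MB/s from one disk
PARALLELISM = 1_000         # 1,000 disks scanning disjoint partitions

serial_seconds = DATA_BYTES / RATE                  # 100,000 s
parallel_seconds = serial_seconds / PARALLELISM     # 100 s

print(f"serial: {serial_seconds / 86_400:.1f} days, parallel: {parallel_seconds:.0f} s")
```

The speedup is linear only if the data is spread evenly across the 1,000 disks and each disk scans its own partition independently.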

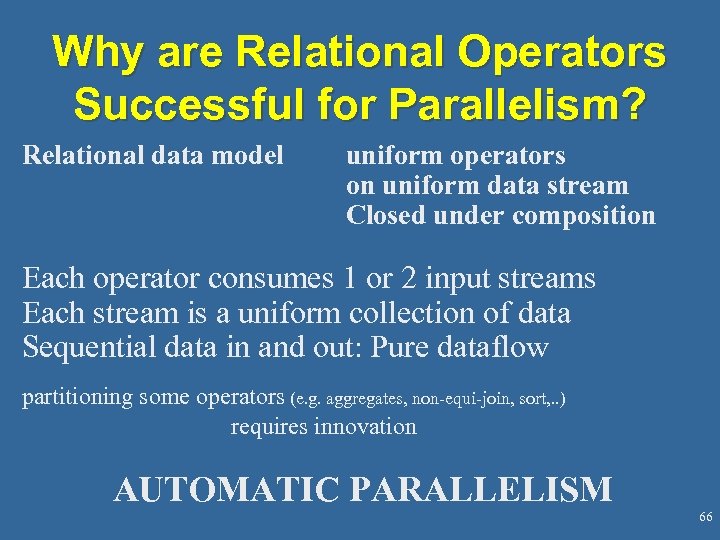

Why Are Relational Operators Successful for Parallelism?

- Relational data model: uniform operators on uniform data streams
- Closed under composition
- Each operator consumes 1 or 2 input streams
- Each stream is a uniform collection of data
- Sequential data in and out: pure dataflow
- Partitioning some operators (e.g., aggregates, non-equi-join, sort, …) requires innovation
- AUTOMATIC PARALLELISM
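Closure under composition is what lets operators snap together into a dataflow pipeline. A minimal sketch with Python generators (the `scan`, `select`, and `project` names are illustrative): each operator consumes a stream of uniform rows and produces another, so any operator's output can feed any other's input:

```python
from typing import Callable, Dict, Iterable, Iterator, List

Row = Dict[str, object]


def scan(table: Iterable[Row]) -> Iterator[Row]:
    """Source operator: stream the rows of a table one at a time."""
    for row in table:
        yield row


def select(rows: Iterable[Row], pred: Callable[[Row], bool]) -> Iterator[Row]:
    """Filter operator: pass through only the rows that satisfy `pred`."""
    return (r for r in rows if pred(r))


def project(rows: Iterable[Row], cols: List[str]) -> Iterator[Row]:
    """Projection operator: keep only the named columns."""
    return ({c: r[c] for c in cols} for r in rows)


emp = [
    {"name": "ann", "dept": "db", "salary": 120},
    {"name": "bob", "dept": "os", "salary": 100},
    {"name": "cy", "dept": "db", "salary": 90},
]
plan = project(select(scan(emp), lambda r: r["dept"] == "db"), ["name"])
print(list(plan))   # [{'name': 'ann'}, {'name': 'cy'}]
```

Because `select` and `project` look at one row at a time, they can run unchanged on each partition of the input; operators such as aggregates and sorts need an extra merge step, which is the "innovation" the slide mentions.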

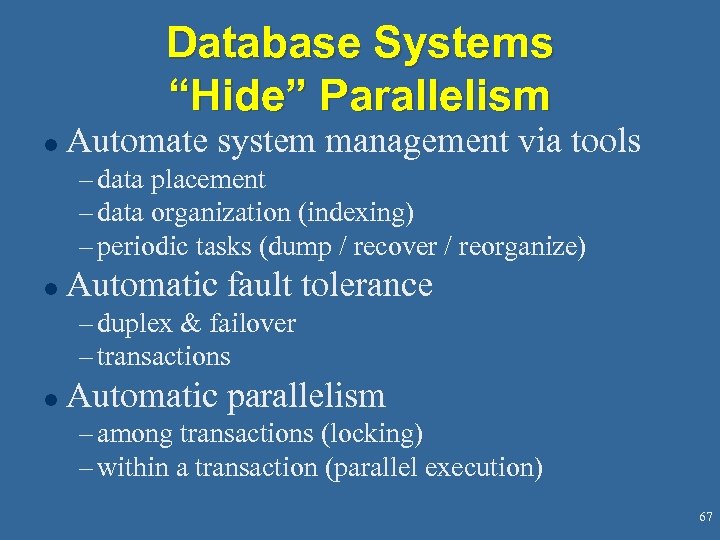

Database Systems “Hide” Parallelism

- Automate system management via tools
  - data placement
  - data organization (indexing)
  - periodic tasks (dump / recover / reorganize)
- Automatic fault tolerance
  - duplex & failover
  - transactions
- Automatic parallelism
  - among transactions (locking)
  - within a transaction (parallel execution)

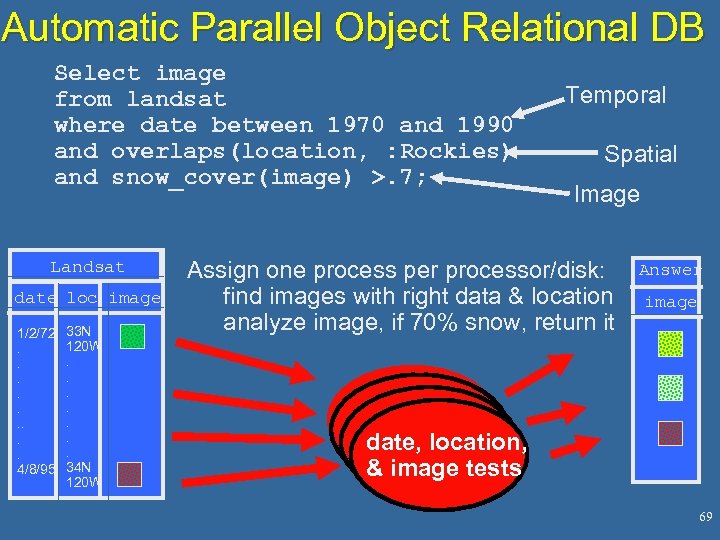

Automatic Parallel Object-Relational DB

Select image from landsat where date between 1970 and 1990 and overlaps(location, :Rockies) and snow_cover(image) > .7;

- Table Landsat(date, loc, image), e.g., dates 1/2/72 … 4/8/95, locations 33N 120W … 34N 120W (temporal, spatial, and image data)
- Assign one process per processor/disk:
  - find images with the right date & location
  - analyze each image; if it is 70% snow, return it
- Answer: image date, location, & image tests

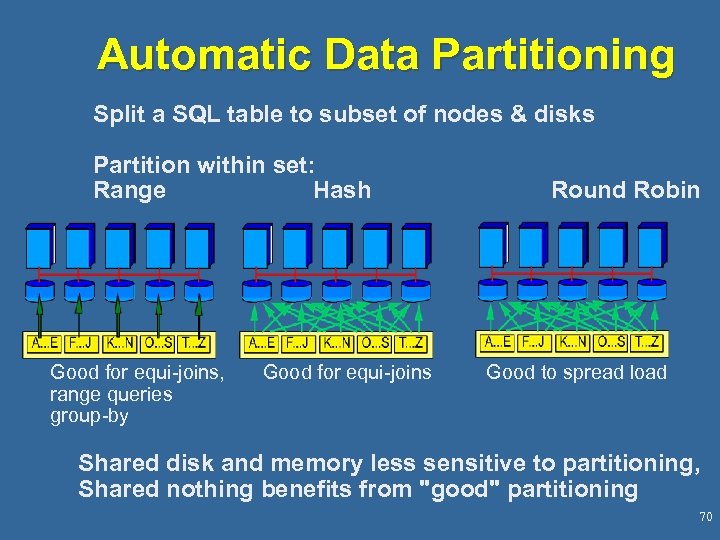

Automatic Data Partitioning

- Split a SQL table across a subset of nodes & disks
- Partition within the set:
  - Range: good for equi-joins, range queries, group-by
  - Hash: good for equi-joins
  - Round robin: good to spread load
- Shared disk and shared memory are less sensitive to partitioning; shared nothing benefits from "good" partitioning
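The three schemes can be sketched as functions from a row to a partition number. A minimal illustration; the boundaries, the CRC-32 hash, and the function names are assumptions, not any particular DBMS's API:

```python
import bisect
import zlib
from itertools import count
from typing import Sequence


def range_partition(key: int, boundaries: Sequence[int]) -> int:
    """Range: partition i holds keys k with boundaries[i-1] <= k < boundaries[i]."""
    return bisect.bisect_right(boundaries, key)


def hash_partition(key: str, n: int) -> int:
    """Hash: a stable hash of the key (CRC-32 here) modulo the number of partitions."""
    return zlib.crc32(key.encode("utf-8")) % n


class RoundRobin:
    """Round robin: the i-th row inserted goes to partition i mod n."""

    def __init__(self, n: int) -> None:
        self.n = n
        self._counter = count()

    def next_partition(self) -> int:
        return next(self._counter) % self.n


rows = [("ann", 50), ("bob", 150), ("cy", 250), ("di", 120)]
rr = RoundRobin(3)
for name, salary in rows:
    print(name,
          range_partition(salary, [100, 200]),   # 3 ranges: <100, 100-199, >=200
          hash_partition(name, 3),
          rr.next_partition())
```

CRC-32 stands in for the hash because Python's built-in `hash()` of a string is randomized per process, and a partitioning function must give the same answer on every node.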

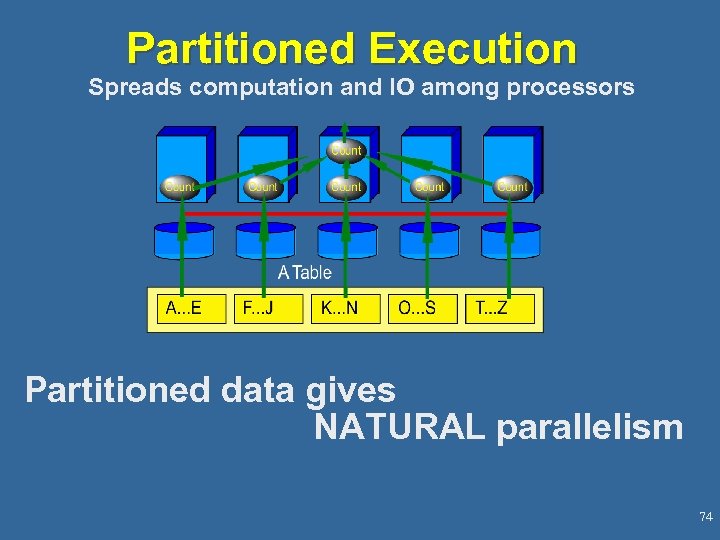

Partitioned Execution

- Spreads computation and IO among processors
- Partitioned data gives NATURAL parallelism

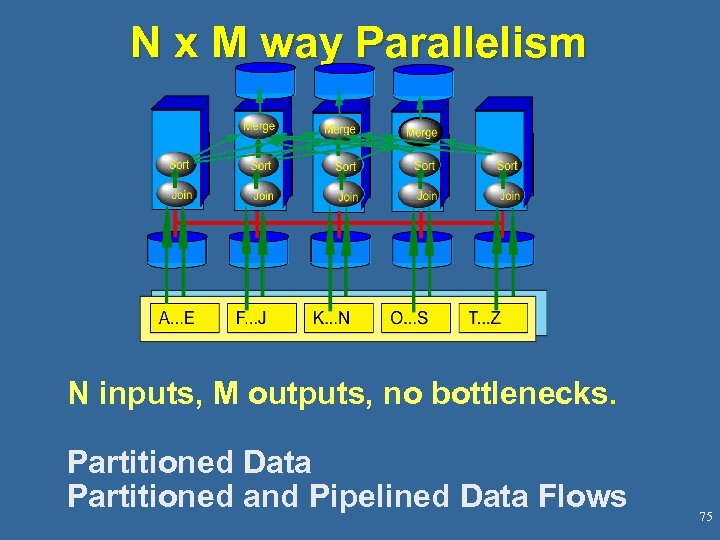

N x M Way Parallelism

- N inputs, M outputs, no bottlenecks
- Partitioned data
- Partitioned and pipelined data flows

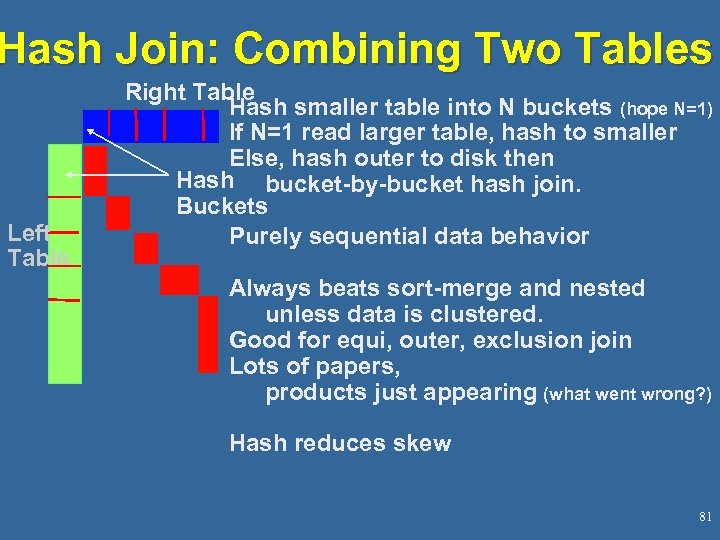

Hash Join: Combining Two Tables

- Hash the smaller (left) table into N buckets (hope N = 1)
- If N = 1, read the larger (right) table and hash it against the smaller one
- Else, hash the outer table to disk, then do a bucket-by-bucket hash join
- Purely sequential data behavior
- Always beats sort-merge and nested-loop joins unless the data is clustered
- Good for equi, outer, and exclusion joins
- Lots of papers, products just appearing (what went wrong?)
- Hashing reduces skew
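A minimal in-memory sketch of the algorithm above. With `n_buckets=1` it is the simple build/probe case; with more buckets it partitions both inputs by hash and joins matching bucket pairs, as in the classic Grace hash join (the lists stand in for the bucket files a real system would write to disk). Function names are hypothetical:

```python
from collections import defaultdict
from typing import Any, Callable, Hashable, Iterable, List, Tuple


def build_probe(small: Iterable[Any], large: Iterable[Any],
                small_key: Callable[[Any], Hashable],
                large_key: Callable[[Any], Hashable]) -> List[Tuple[Any, Any]]:
    """In-memory equi-join: hash the smaller input, then stream the larger one past it."""
    table = defaultdict(list)
    for s in small:                       # build phase
        table[small_key(s)].append(s)
    out = []
    for r in large:                       # probe phase: one sequential pass
        for s in table.get(large_key(r), ()):
            out.append((s, r))
    return out


def hash_join(small, large, small_key, large_key, n_buckets: int = 1):
    """N == 1: the smaller table fits in memory. N > 1: partition both inputs
    by hash into buckets (stand-ins for disk files), then join bucket by bucket."""
    if n_buckets == 1:
        return build_probe(small, large, small_key, large_key)
    small_parts = [[] for _ in range(n_buckets)]
    large_parts = [[] for _ in range(n_buckets)]
    for s in small:
        small_parts[hash(small_key(s)) % n_buckets].append(s)
    for r in large:
        large_parts[hash(large_key(r)) % n_buckets].append(r)
    out = []
    for sp, lp in zip(small_parts, large_parts):   # equal keys land in the same bucket
        out.extend(build_probe(sp, lp, small_key, large_key))
    return out


depts = [(1, "db"), (2, "os")]
emps = [("ann", 1), ("bob", 2), ("cy", 1), ("di", 3)]
pairs = hash_join(depts, emps, lambda d: d[0], lambda e: e[1], n_buckets=4)
print(sorted((e[0], d[1]) for d, e in pairs))   # [('ann', 'db'), ('bob', 'os'), ('cy', 'db')]
```

Both phases read each input sequentially, which is the "purely sequential data behavior" the slide credits for hash join's speed.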

Parallel Hash Join

- ICL implemented hash join with bitmaps in its CAFS machine (1976)!
- Kitsuregawa pointed out the parallelism benefits of hash join in the early 1980s (it partitions beautifully)
- We ignored them! (why?)
- But now, everybody's doing it (or promises to do it).
- Hashing minimizes skew and requires little thinking for redistribution
- Hashing uses massive main memory
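Hash partitioning is what makes the join parallel: send every row of both tables to node `hash(key) mod P`, and equal keys meet on the same node, so each node runs an ordinary local hash join with no further communication. A sketch in which threads stand in for nodes (all names are hypothetical):

```python
import zlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def node_for(key, nodes: int) -> int:
    """Stable hash of the join key -> node number (CRC-32 of its string form)."""
    return zlib.crc32(str(key).encode("utf-8")) % nodes


def redistribute(rows, key, nodes: int):
    """Send each row to the node that owns its join-key hash bucket."""
    parts = [[] for _ in range(nodes)]
    for row in rows:
        parts[node_for(key(row), nodes)].append(row)
    return parts


def local_hash_join(build_rows, probe_rows, build_key, probe_key):
    """Ordinary in-memory hash join, run independently on one node."""
    table = defaultdict(list)
    for b in build_rows:
        table[build_key(b)].append(b)
    return [(b, p) for p in probe_rows for b in table.get(probe_key(p), ())]


def parallel_hash_join(build, probe, build_key, probe_key, nodes: int = 4):
    build_parts = redistribute(build, build_key, nodes)
    probe_parts = redistribute(probe, probe_key, nodes)
    # Equal keys were sent to the same node, so no node needs another node's data.
    with ThreadPoolExecutor(max_workers=nodes) as pool:
        per_node = list(pool.map(local_hash_join, build_parts, probe_parts,
                                 [build_key] * nodes, [probe_key] * nodes))
    return [pair for node_pairs in per_node for pair in node_pairs]


customers = [(1, "acme"), (2, "globex"), (3, "initech")]
orders = [(101, 1), (102, 3), (103, 1), (104, 9)]
pairs = parallel_hash_join(customers, orders, lambda c: c[0], lambda o: o[1])
print(sorted((o[0], c[1]) for c, o in pairs))
# [(101, 'acme'), (102, 'initech'), (103, 'acme')]
```

Skew still matters: if one key is very common, the node that owns it gets more than its share of the work, which is why the slide stresses that hashing spreads keys evenly.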

Main Message

- Technology trends give
  - many processors and storage units
  - inexpensively
- To analyze large quantities of data
  - sequential (regular) access patterns are 100 x faster
  - parallelism is 1000 x faster (trades time for money)
  - Relational systems show many parallel algorithms.

Summary

- All God’s children got clusters!
- Technology trends imply processors migrate to transducers
- Components (software CyberBricks)
- Programming & managing clusters
- Database experience
  - Parallelism via transaction processing
  - Parallelism via data flow
  - Auto everything, always up

End: 86 slides is more than enough for an hour.

Clusters Have Advantages

- Clients and servers made from the same stuff
- Inexpensive:
  - Built with commodity components
- Fault tolerance:
  - Spare modules mask failures
- Modular growth
  - Grow by adding small modules

Meta-Message: Technology Ratios Are Important

- If everything gets faster & cheaper at the same rate, THEN nothing really changes.
- Things getting MUCH BETTER:
  - communication speed & cost: 1,000 x
  - processor speed & cost: 100 x
  - storage size & cost: 100 x
- Things staying about the same:
  - speed of light (more or less constant)
  - people (10 x more expensive)
  - storage speed (only 10 x better)

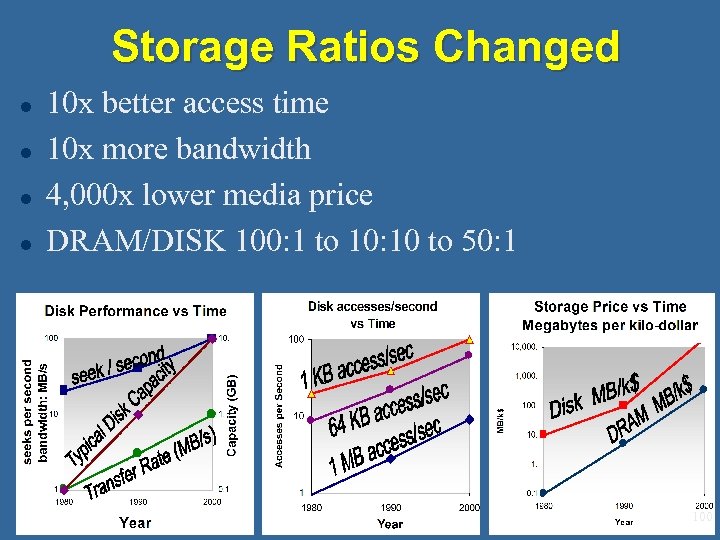

Storage Ratios Changed

- 10 x better access time
- 10 x more bandwidth
- 4,000 x lower media price
- DRAM/disk ratio: 100:1 to 10:1 to 50:1

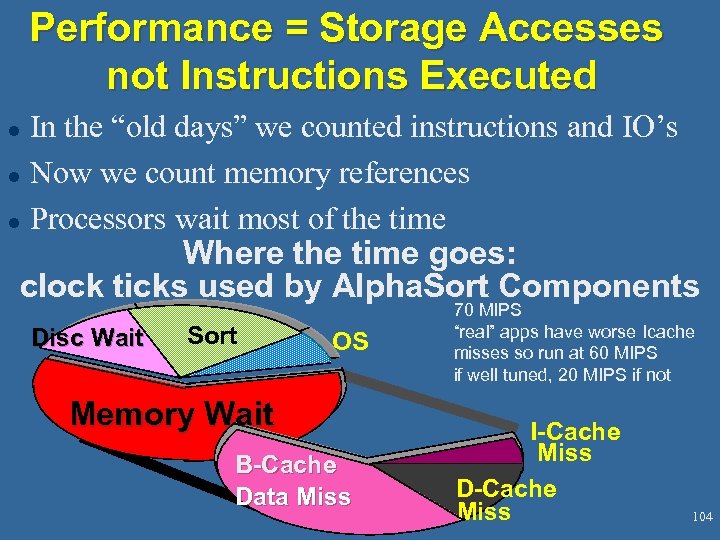

Performance = Storage Accesses, not Instructions Executed

- In the “old days” we counted instructions and IOs
- Now we count memory references
- Processors wait most of the time
- [Chart: where the time goes, clock ticks used by AlphaSort components: disc wait, sort, OS, memory wait, B-cache data miss, I-cache miss, D-cache miss; 70 MIPS]
- “Real” apps have worse I-cache misses, so they run at 60 MIPS if well tuned, 20 MIPS if not

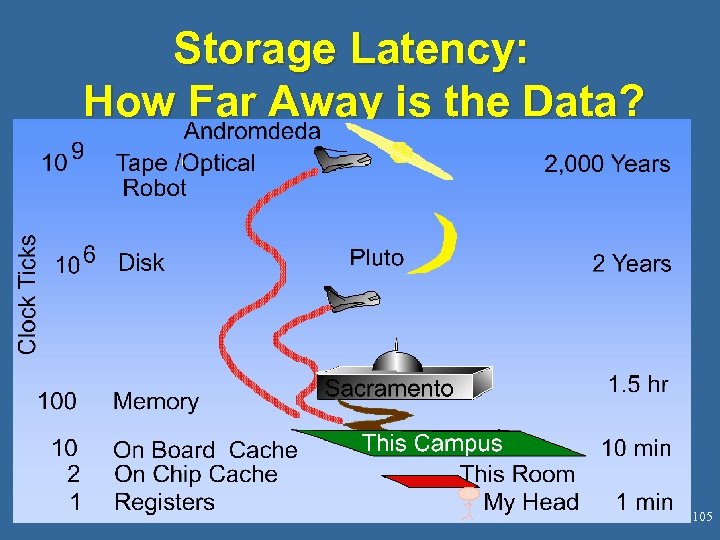

Storage Latency: How Far Away Is the Data?

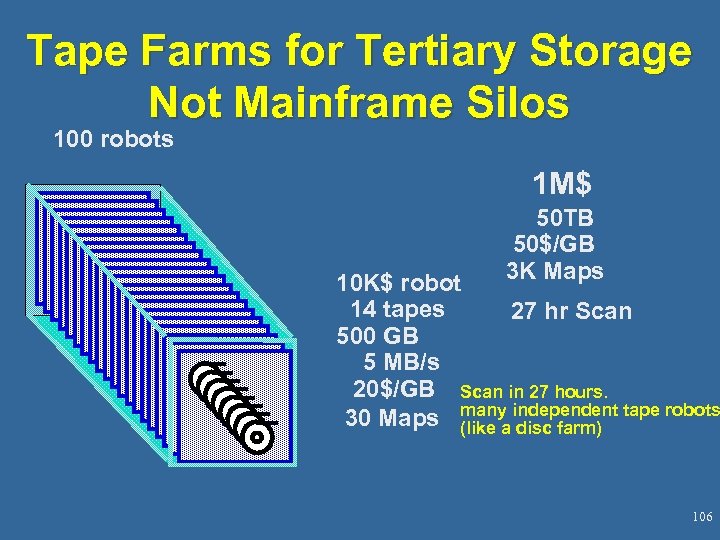

Tape Farms for Tertiary Storage, Not Mainframe Silos

- Many independent tape robots (like a disc farm)
- One 10 K$ robot: 14 tapes, 500 GB, 5 MB/s, 20 $/GB, 30 Maps; scan in 27 hours
- 100 robots: 1 M$, 50 TB, 50 $/GB, 3 K Maps; scan in 27 hours
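The scan time follows from capacity over transfer rate, and because each robot scans its own tapes independently, adding robots multiplies capacity and Maps without lengthening the scan. A sketch using the slide's per-robot figures (decimal units assumed):

```python
ROBOT_GB = 500        # capacity of one robot (14 tapes)
ROBOT_MB_PER_S = 5    # transfer rate of one robot
ROBOT_MAPS = 30       # per-robot Maps figure from the slide
ROBOTS = 100

scan_hours = ROBOT_GB * 1000 / ROBOT_MB_PER_S / 3600   # one robot reads all its tapes
farm_tb = ROBOTS * ROBOT_GB / 1000                      # total farm capacity
farm_maps = ROBOTS * ROBOT_MAPS                         # aggregate Maps

# All robots scan in parallel, so the farm's scan time equals one robot's.
print(f"scan: {scan_hours:.1f} h, farm: {farm_tb:.0f} TB, {farm_maps:,} Maps")
```

A single silo holding the same 50 TB behind a few drives would instead take far longer to scan, which is the case for farms over silos.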

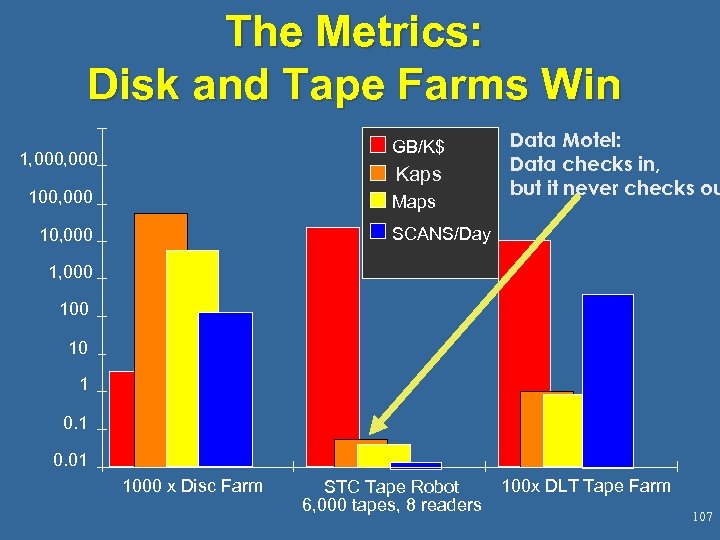

The Metrics: Disk and Tape Farms Win

- [Chart, log scale from 0.01 to 10,000: GB/K$, Kaps, Maps, and SCANS/day for a 1000 x disc farm, an STC tape robot (6,000 tapes, 8 readers), and a 100 x DLT tape farm]
- Data Motel: data checks in, but it never checks out!

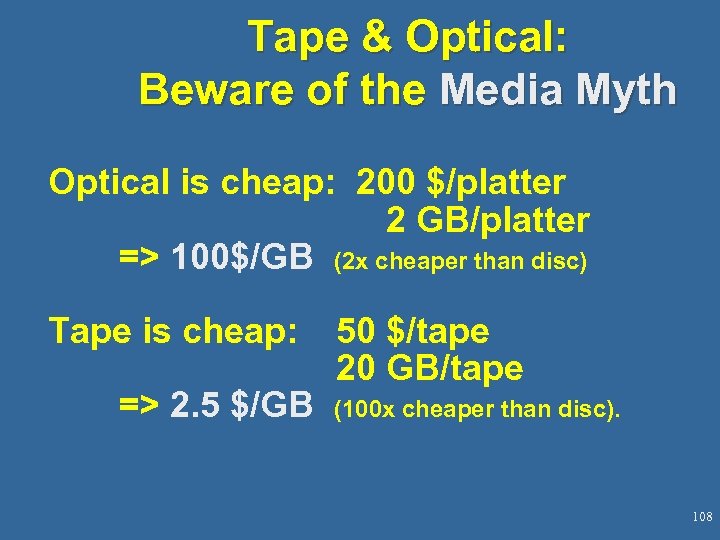

Tape & Optical: Beware of the Media Myth

- Optical is cheap: 200 $/platter, 2 GB/platter => 100 $/GB (2 x cheaper than disc)
- Tape is cheap: 50 $/tape, 20 GB/tape => 2.5 $/GB (100 x cheaper than disc)

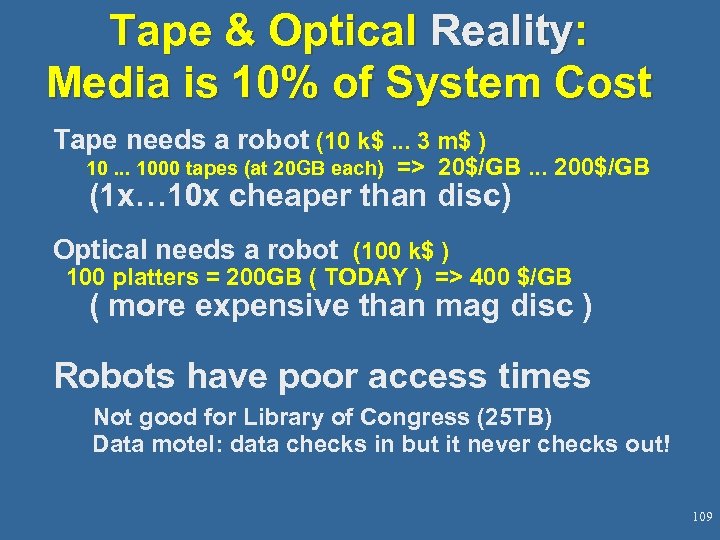

Tape & Optical Reality: Media Is 10% of System Cost

- Tape needs a robot (10 k$ … 3 m$)
  - 10 … 1000 tapes (at 20 GB each) => 20 $/GB … 200 $/GB (1 x … 10 x cheaper than disc)
- Optical needs a robot (100 k$)
  - 100 platters = 200 GB (TODAY) => 400 $/GB (more expensive than mag disc)
- Robots have poor access times
- Not good for the Library of Congress (25 TB)
- Data motel: data checks in, but it never checks out!

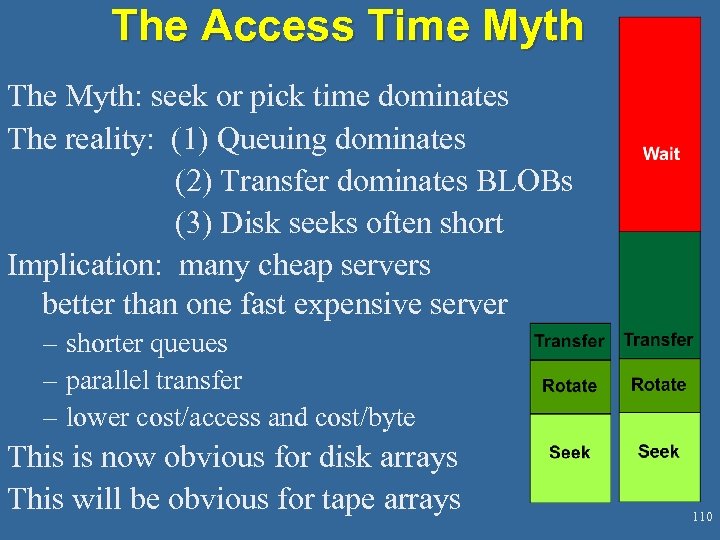

The Access Time Myth

- The myth: seek or pick time dominates
- The reality:
  - (1) Queuing dominates
  - (2) Transfer dominates for BLOBs
  - (3) Disk seeks are often short
- Implication: many cheap servers are better than one fast, expensive server
  - shorter queues
  - parallel transfer
  - lower cost/access and cost/byte
- This is now obvious for disk arrays
- This will be obvious for tape arrays
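A standard M/M/1 queueing model (an assumption: Poisson arrivals and exponential service times; the slide does not specify a model) shows how queuing can dominate. The sketch compares one server twice as fast against ten cheap servers, under the hypothetical assumptions that the two options cost the same and that each request goes to a randomly chosen cheap server:

```python
def mm1(arrival_rate: float, service_time: float) -> tuple:
    """M/M/1 queue: (utilization, mean response time, mean number waiting)."""
    rho = arrival_rate * service_time
    if rho >= 1:
        raise ValueError("server is saturated (utilization >= 1)")
    response = service_time / (1 - rho)    # waiting + service
    waiting = rho * rho / (1 - rho)        # mean queue length, excluding the one in service
    return rho, response, waiting


LOAD = 1.6          # requests per time unit (hypothetical)
BASE_SERVICE = 1.0  # service time on one cheap server (hypothetical)

# Hypothetical: one 2x-faster server costs as much as ten cheap ones.
fast = mm1(LOAD, BASE_SERVICE / 2)
# Ten cheap servers; random routing gives each one a tenth of the load.
cheap = mm1(LOAD / 10, BASE_SERVICE)

print(f"fast:  util {fast[0]:.2f}, response {fast[1]:.2f}, queue {fast[2]:.2f}")
print(f"cheap: util {cheap[0]:.2f}, response {cheap[1]:.2f}, queue {cheap[2]:.2f}")
```

With these made-up numbers the fast server runs at 80% utilization and a request spends about 2.5 time units in the system, while each cheap server runs at 16% and a request spends about 1.19: buying more capacity for the same money shortens the queues, as the slide argues.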

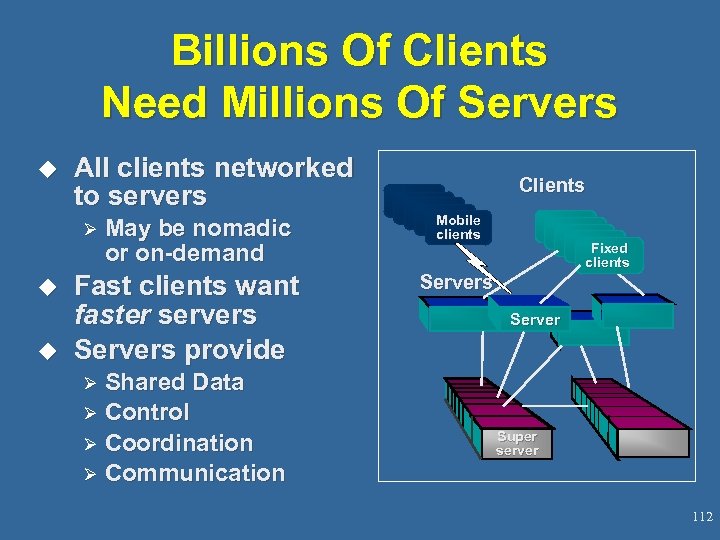

Billions of Clients

- Every device will be “intelligent”
- Doors, rooms, cars…
- Computing will be ubiquitous

Billions of Clients Need Millions of Servers

- All clients networked to servers
  - May be nomadic or on-demand
- Fast clients want faster servers
- Servers provide
  - Shared data
  - Control
  - Coordination
  - Communication
- [Figure: mobile and fixed clients connected to servers and a super server]

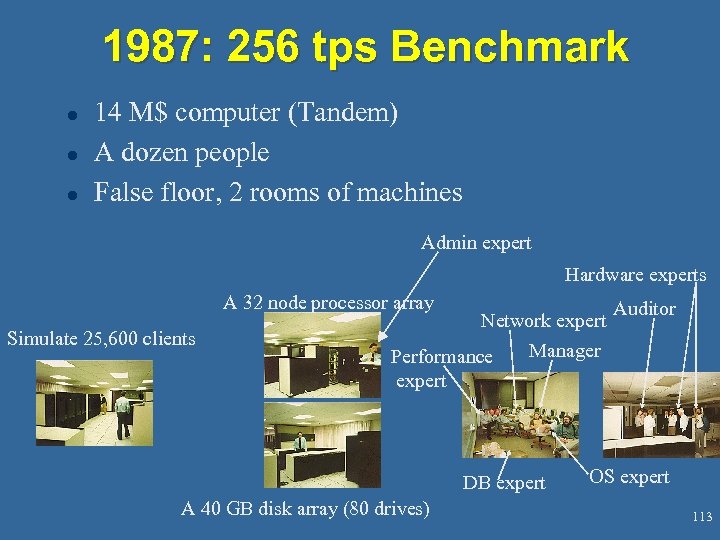

1987: 256 tps Benchmark

- 14 M$ computer (Tandem)
- A dozen people: admin expert, hardware experts, network expert, performance expert, DB expert, OS expert, manager, auditor
- False floor, 2 rooms of machines
- A 32-node processor array
- A 40 GB disk array (80 drives)
- Simulate 25,600 clients

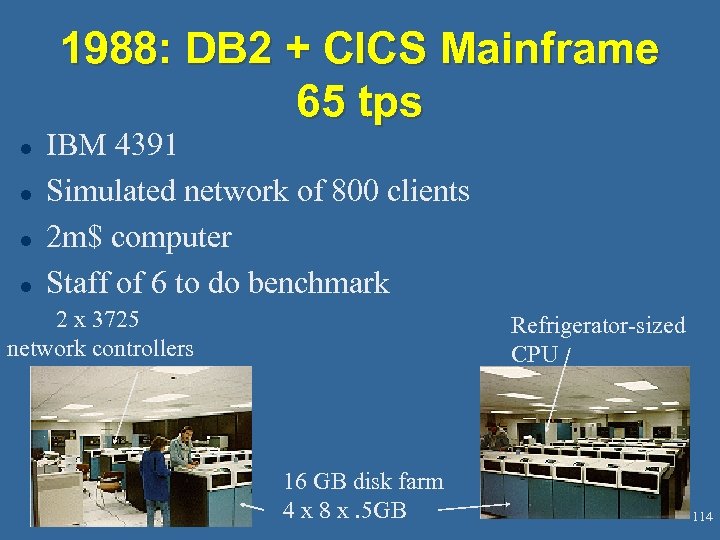

1988: DB2 + CICS Mainframe, 65 tps

- IBM 4391
- Simulated network of 800 clients
- 2 m$ computer
- Staff of 6 to do the benchmark
- 2 x 3725 network controllers
- Refrigerator-sized CPU
- 16 GB disk farm: 4 x 8 x .5 GB

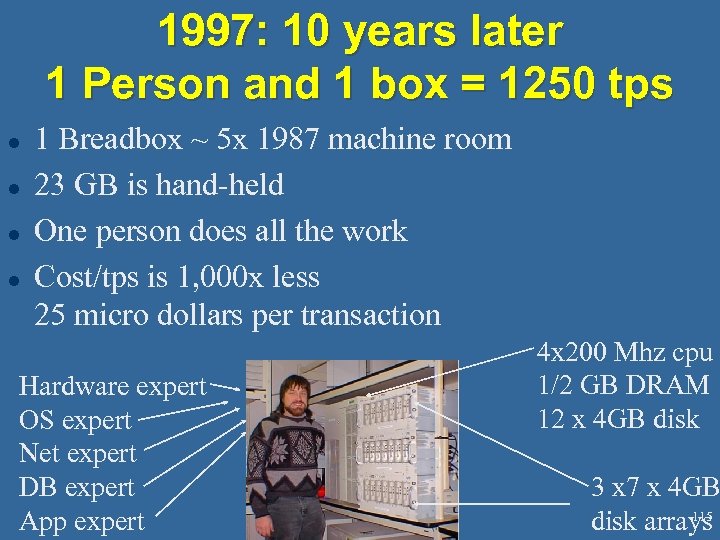

1997: 10 Years Later, 1 Person and 1 Box = 1250 tps

- 1 breadbox ~ 5 x the 1987 machine room
- 23 GB is hand-held
- One person does all the work (hardware, OS, net, DB, and app expert)
- Cost/tps is 1,000 x less: 25 micro dollars per transaction
- Configuration: 4 x 200 MHz CPUs, 1/2 GB DRAM, 12 x 4 GB disks, 3 x 7 x 4 GB disk arrays

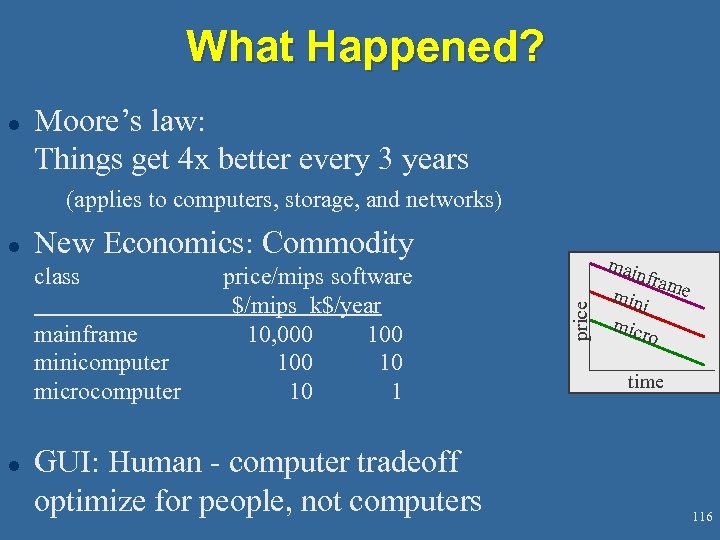

What Happened?

- Moore’s law: things get 4 x better every 3 years (applies to computers, storage, and networks)
- New economics: commodity
  - [Chart: price/mips and software $/mips (k$/year) for the mainframe, minicomputer, and microcomputer classes, falling over time]
- GUI: the human-computer tradeoff; optimize for people, not computers

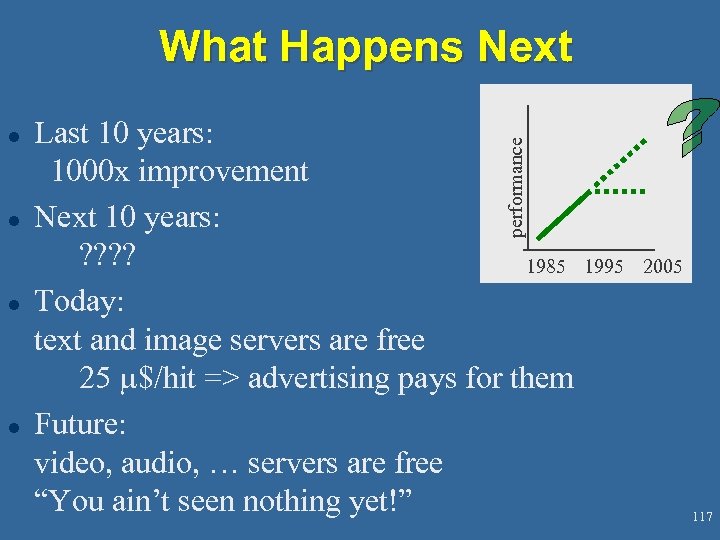

What Happens Next

- Last 10 years: 1000 x improvement
- Next 10 years: ??
- Today: text and image servers are free
  - 25 m$/hit => advertising pays for them
- Future: video, audio, … servers are free
- “You ain’t seen nothing yet!”
- [Chart: performance vs. time, 1985, 1995, 2005]

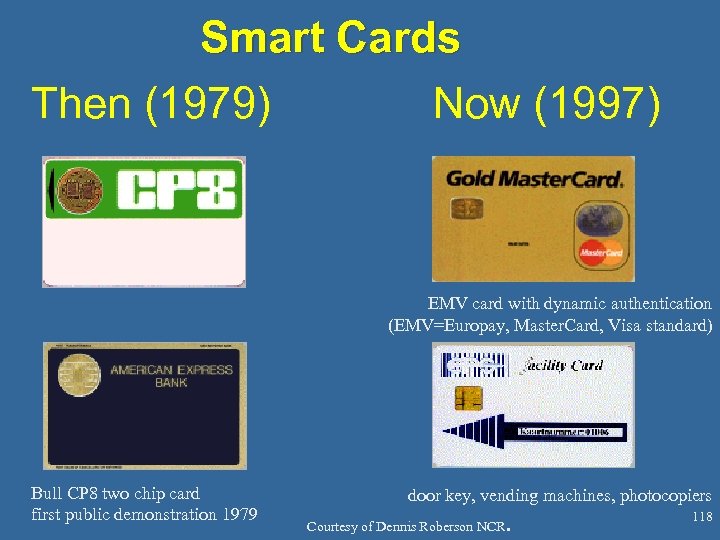

Smart Cards

- Then (1979): Bull CP8 two-chip card, first public demonstration in 1979
- Now (1997): EMV card with dynamic authentication (EMV = Europay, MasterCard, Visa standard)
- Door key, vending machines, photocopiers
- Courtesy of Dennis Roberson, NCR

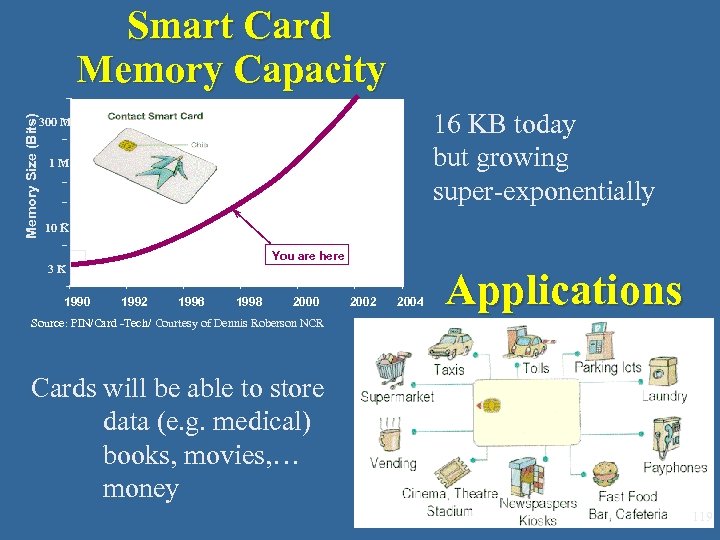

Smart Card Memory Capacity

- 16 KB today, but growing super-exponentially
- [Chart: memory size in bits (3 K to 300 M) vs. year (1990 to 2004), with a "You are here" marker]
- Applications: cards will be able to store data (e.g., medical), books, movies, … money
- Source: PIN/Card-Tech; courtesy of Dennis Roberson, NCR
