- Number of slides: 43

Welcome to my presentation
- Approximate Frequency Counts over Data Streams
- Optimal Aggregation Algorithms for Middleware

Presenter: Xuehua Shen
Advisor: Prof. ChengXiang Zhai
All rights reserved by Xuehua Shen, xshen@uiuc.edu

Approximate Frequency Counts over Data Streams
Gurmeet Singh Manku, Rajeev Motwani
Stanford University (VLDB 02)

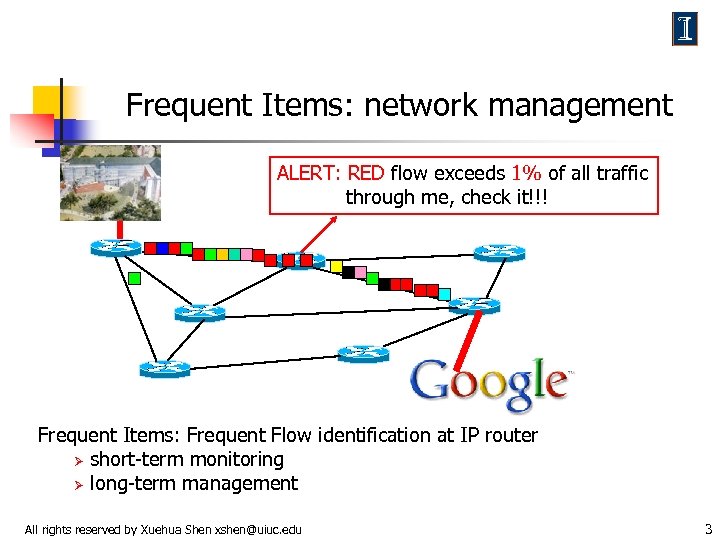

Frequent Items: Network Management
ALERT: RED flow exceeds 1% of all traffic through me, check it!!!
Frequent items: frequent flow identification at an IP router
- short-term monitoring
- long-term management

Frequent Itemsets: Mining Walmart Data
I found it! Among the 100 million records seen so far, at least 1% of customers buy both beer and diapers at the same time, and 51% of customers who buy beer also buy diapers! …
Uses of frequent itemsets at a supermarket:
- store layout
- catalog design
- …

Challenges
- Single pass
- Limited memory (network management)
- Enumeration of itemsets (mining Walmart data)

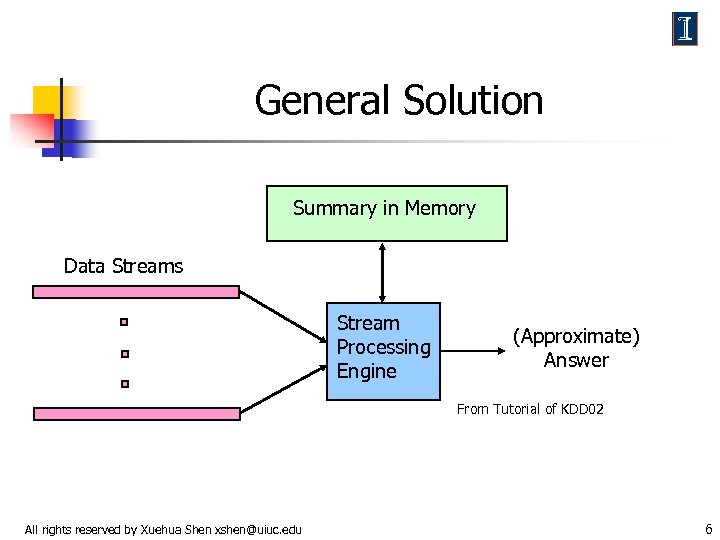

General Solution (from the KDD 02 tutorial)
Data Streams → Stream Processing Engine (with a summary in memory) → (Approximate) Answer

Approximate Algorithm
- Approximation algorithm:
  - it is difficult to compute exact answers with limited memory in a single pass
  - approximate answers are often sufficient (e.g., trend/pattern analysis)
- For example, a router is interested in all flows whose frequency is at least 1% (s) of the entire traffic stream seen so far (N, say 10,000), and considers an error of 1/10 of s (ε = 0.1%) acceptable.

Contributions of this Paper
- Lossy Counting for frequent items
  - single pass
  - approximation guarantees
- Extension of Lossy Counting to identify frequent itemsets
  - single pass

Outline
- Lossy Counting for Frequent Itemsets
- Summary and Comments

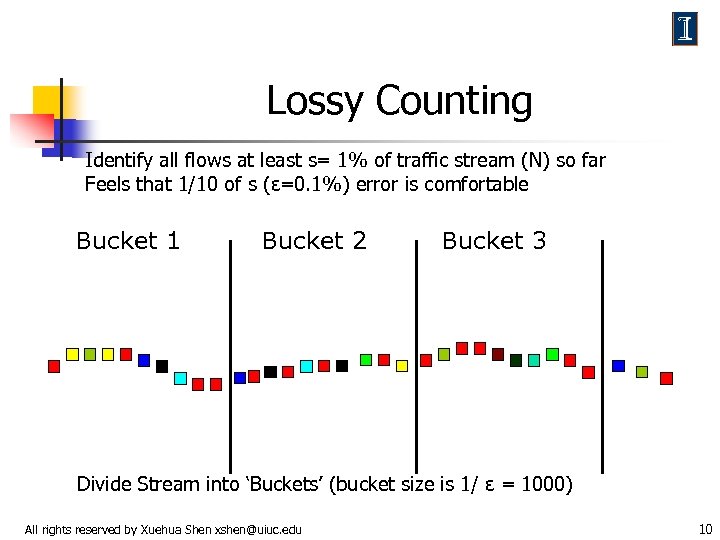

Lossy Counting
Goal: identify all flows that make up at least s = 1% of the traffic stream seen so far (N), accepting an error of 1/10 of s (ε = 0.1%).
Divide the stream into 'buckets' of size 1/ε = 1000: Bucket 1 | Bucket 2 | Bucket 3 | …

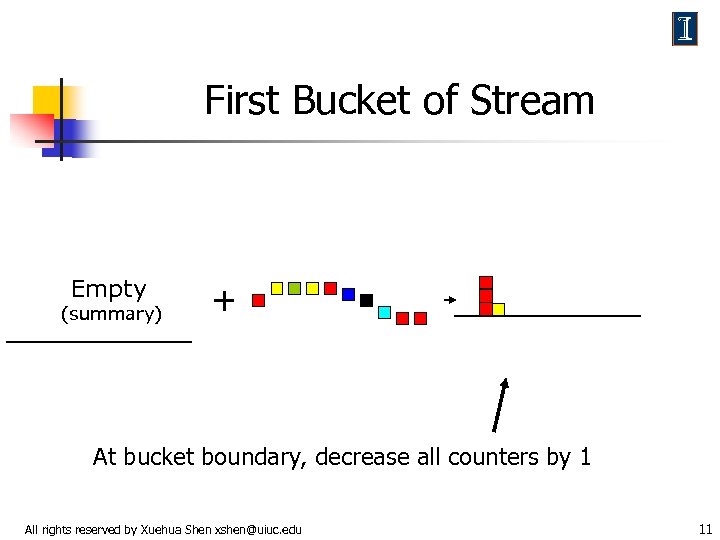

First Bucket of Stream
Start from an empty summary and add the counts of the items in the first bucket. At the bucket boundary, decrease all counters by 1 (counters that reach 0 are removed).

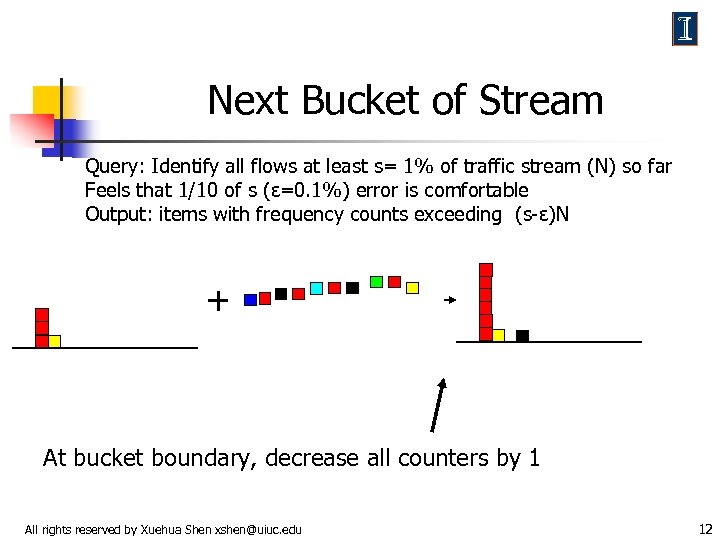

Next Bucket of Stream
Add the counts of the next bucket to the summary; at the bucket boundary, again decrease all counters by 1.
Query: identify all flows that make up at least s = 1% of the traffic stream seen so far (N), accepting an error of 1/10 of s (ε = 0.1%).
Output: items whose frequency counts are at least (s − ε)N.
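
The two steps above (count within a bucket, decrement at each boundary, report items whose count is at least (s − ε)N) fit in a few lines of Python. This is a minimal sketch under our own assumptions: the class name `LossyCounter` and its methods are hypothetical, and it implements the slides' simplified "decrease every counter by 1" form rather than the (item, count, Δ) bookkeeping used in the paper.

```python
import math


class LossyCounter:
    """Hypothetical sketch of Lossy Counting in the slides' form: one counter
    per item, every counter decreased by 1 at each bucket boundary."""

    def __init__(self, epsilon):
        self.epsilon = epsilon
        self.bucket_width = math.ceil(1 / epsilon)  # 1/epsilon items per bucket
        self.counts = {}  # item -> (under)estimated frequency
        self.n = 0        # stream length seen so far (N)

    def add(self, item):
        self.counts[item] = self.counts.get(item, 0) + 1
        self.n += 1
        if self.n % self.bucket_width == 0:
            # Bucket boundary: decrement all counters; a counter at 1 would
            # drop to 0, so it is removed instead.
            self.counts = {k: c - 1 for k, c in self.counts.items() if c > 1}

    def frequent(self, s):
        """Items whose counter is at least (s - epsilon) * N."""
        threshold = (s - self.epsilon) * self.n
        return {k for k, c in self.counts.items() if c >= threshold}
```

For the router example one would call `LossyCounter(0.001)`, feed every flow ID to `add`, and ask `frequent(0.01)`. Rebuilding the dictionary at each boundary costs time proportional to the number of counters, which is fine for a sketch but not how a line-rate implementation would do it.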

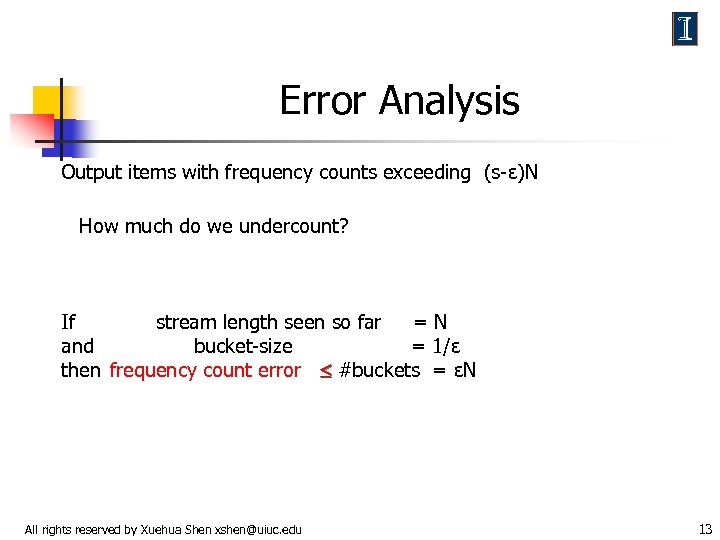

Error Analysis
Output: items whose frequency counts are at least (s − ε)N.
How much do we undercount? If the stream length seen so far is N and the bucket size is 1/ε, then
frequency count error ≤ number of buckets = εN
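
The bound can be spelled out step by step. Here f denotes an item's true frequency and c its counter, with c = 0 when the item currently has no counter; this notation is ours, not the slides'. Every arrival raises f and c together, and each bucket boundary lowers c by at most 1, so the gap f − c can grow only at boundaries:

```latex
\begin{aligned}
&\text{bucket size } w = 1/\varepsilon
  \;\Rightarrow\; \#\text{buckets} \le \frac{N}{w} = \varepsilon N \\
&0 \;\le\; f - c \;\le\; \#\text{buckets} \;\le\; \varepsilon N
  \quad\Longrightarrow\quad f - \varepsilon N \;\le\; c \;\le\; f \\
&f \ge sN \;\Longrightarrow\; c \ge (s-\varepsilon)N
  \quad\text{(no false negatives)} \\
&c \ge (s-\varepsilon)N \;\Longrightarrow\; f \ge c \ge (s-\varepsilon)N
  \quad\text{(false positives are close to the threshold)} \\
&\text{running example: } \varepsilon N = 0.001 \cdot 10{,}000 = 10, \qquad
  (s-\varepsilon)N = 0.009 \cdot 10{,}000 = 90
\end{aligned}
```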

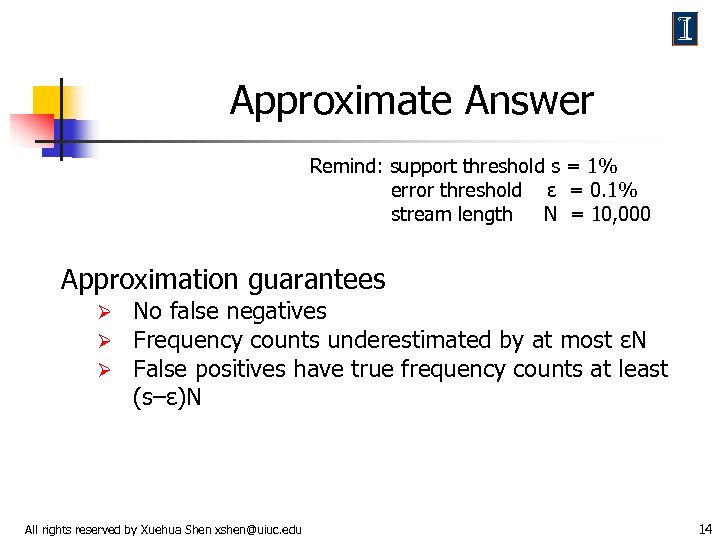

Approximate Answer
Recall: support threshold s = 1%, error threshold ε = 0.1%, stream length N = 10,000.
Approximation guarantees:
- No false negatives
- Frequency counts are underestimated by at most εN
- False positives have true frequency counts of at least (s − ε)N

Outline
- Lossy Counting for Frequent Itemsets
- Summary and Comments

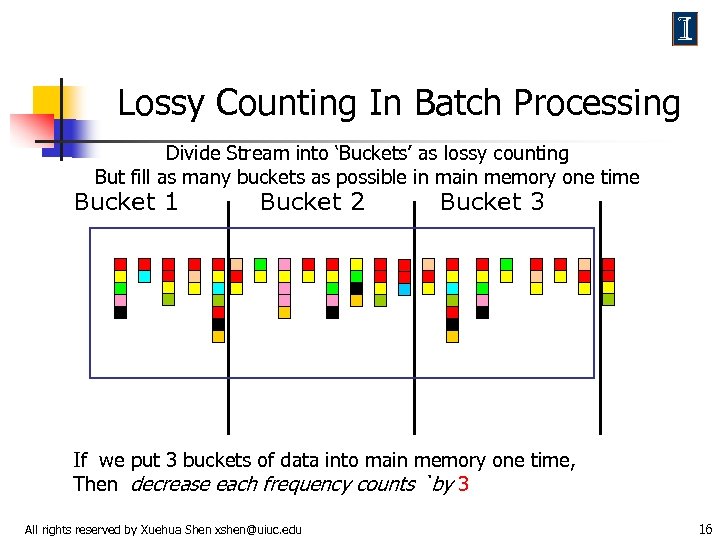

Lossy Counting in Batch Processing
Divide the stream into 'buckets' as in Lossy Counting, but load as many buckets as fit into main memory at one time: Bucket 1 | Bucket 2 | Bucket 3
If we put 3 buckets of data into main memory at one time, then we decrease each frequency count by 3.
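
A minimal sketch of one batch step for single items, assuming the summary is a plain dictionary and `batch` holds the items of β buckets; the function name `batch_update` is hypothetical. A counter loses at most β per batch of β buckets (exactly β if it survives, at most its whole value, itself ≤ β, if it is deleted), so the total undercount is still at most the number of buckets, εN.

```python
from collections import Counter


def batch_update(summary, batch, beta):
    """Merge one in-memory batch into the summary (hypothetical helper).

    summary: dict item -> counter
    batch:   list of items read from beta buckets
    beta:    number of buckets held in memory at once
    Each counter gets the item's count in the batch and is then decreased by
    beta in one step; counters that would reach 0 or below are deleted.
    """
    merged = Counter(summary)
    merged.update(batch)  # add the batch's occurrence counts
    return {item: c - beta for item, c in merged.items() if c > beta}
```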

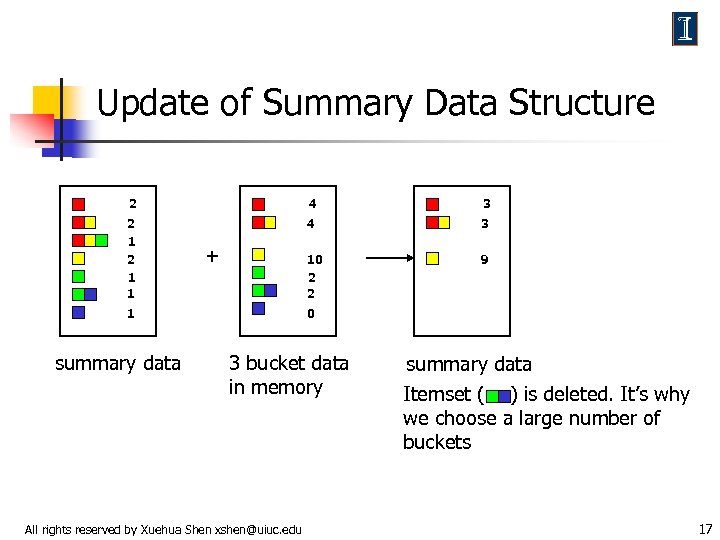

Update of Summary Data Structure
(Figure: the summary data plus the counts from 3 buckets of data in memory, with every counter then decreased by 3, gives the new summary data.)
An itemset whose counter drops to 0 is deleted. This is why we choose a large number of buckets: the larger the decrement, the more low-frequency itemsets are deleted.

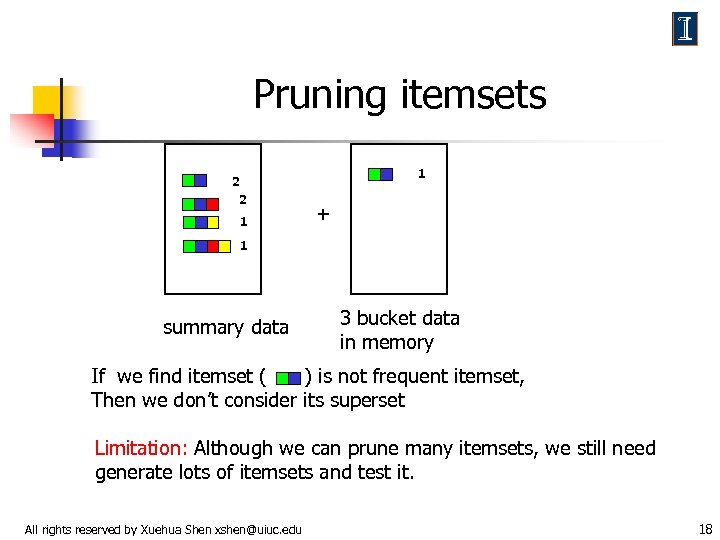

Pruning Itemsets
(Figure: summary data plus 3 buckets of data in memory.)
If we find that an itemset is not frequent, then we do not consider its supersets.
Limitation: although we can prune many itemsets, we still need to generate many itemsets and test them.
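
The level-wise pruning idea can be sketched as follows. This is a hypothetical simplification, not the paper's implementation: it counts a k-itemset from the batch only when all of its (k − 1)-subsets survived the current update, applies the same "add batch count, subtract β" rule as for single items, and caps the itemset size with an assumed `max_size` parameter. Enumerating all k-subsets of each transaction is exponential in transaction length, which is the limitation noted on the slide.

```python
from collections import Counter
from itertools import combinations


def _subsets_survived(itemset, survivors):
    """True if every (k-1)-subset of the k-itemset is in `survivors`."""
    return all(itemset - {x} in survivors for x in itemset)


def update_itemsets(summary, batch, beta, max_size=3):
    """Hypothetical batch update of itemset counters, level by level.

    summary: dict frozenset -> counter
    batch:   list of transactions (sets of mutually comparable items)
    beta:    number of buckets in the batch
    """
    new_summary = {}
    survivors = set()  # itemsets kept at level k-1
    for k in range(1, max_size + 1):
        batch_counts = Counter()
        for t in batch:
            for combo in combinations(sorted(t), k):
                itemset = frozenset(combo)
                # Prune: skip supersets of itemsets that did not survive.
                if k == 1 or _subsets_survived(itemset, survivors):
                    batch_counts[itemset] += 1
        old = {s for s in summary
               if len(s) == k and (k == 1 or _subsets_survived(s, survivors))}
        level = {}
        for itemset in set(batch_counts) | old:
            c = summary.get(itemset, 0) + batch_counts[itemset] - beta
            if c > 0:  # counters that reach 0 or below are deleted
                level[itemset] = c
        if not level:
            break  # no k-itemset survived, so no larger one is considered
        new_summary.update(level)
        survivors = set(level)
    return new_summary
```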

Outline
- Lossy Counting for Frequent Itemsets
- Summary and Comments

Summary
- Lossy Counting: a very simple idea
- Lossy Counting for frequent itemsets
- A probabilistic algorithm (Sticky Sampling) is also proposed

Weaknesses of this Paper
- The space bound is not good
- For frequent itemsets, each record is still scanned many times
- Sometimes we are only interested in recent data

Related Work
- Identify frequent items online using exactly 1/s space [Karp, Papadimitriou TODS 2003]
- Concise Sampling [Gibbons SIGMOD 98]
- Top-k frequent items; Zeitgeist: estimating the items with the largest change in frequency count [Charikar ICALP 2002]

Backup Slides

Space Requirement of Lossy Counting
How many counters do we need?
Worst case: (1/ε) log(εN) counters

Small Enhancement of Lossy Counting
- Current frequency error: for a counter (X, c), the true frequency lies in [c, c + εN]
- Small enhancement: remember the ID of the bucket in which the counter was created (Δ)
- For a counter (X, c, Δ), the true frequency lies in [c, c + Δ − 1]
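
A sketch of the enhanced version, assuming Δ stores the ID of the bucket in which the counter was created (buckets numbered from 1), so that the true frequency lies in [c, c + Δ − 1]. The class name is hypothetical. At each bucket boundary an entry is dropped when its upper bound c + Δ − 1 is no larger than the current bucket ID; this matches the deletion rule in the paper, which stores Δ as one less than the creation bucket ID and reports the range [c, c + Δ].

```python
import math


class LossyCounterWithDelta:
    """Hypothetical sketch: entries (item, c, delta), where delta is the ID
    of the bucket in which the entry was created (IDs start at 1)."""

    def __init__(self, epsilon):
        self.width = math.ceil(1 / epsilon)  # bucket size 1/epsilon
        self.entries = {}                     # item -> [c, delta]
        self.n = 0                            # stream length seen so far

    def add(self, item):
        self.n += 1
        bucket = (self.n - 1) // self.width + 1  # current bucket ID
        if item in self.entries:
            self.entries[item][0] += 1
        else:
            self.entries[item] = [1, bucket]
        if self.n % self.width == 0:
            # Boundary: keep only entries whose upper bound c + delta - 1
            # still exceeds the current bucket ID.
            self.entries = {k: v for k, v in self.entries.items()
                            if v[0] + v[1] - 1 > bucket}

    def bounds(self, item):
        """(lower, upper) bounds on the true frequency of a tracked item."""
        c, delta = self.entries[item]
        return c, c + delta - 1
```

An item first seen in bucket 1 gets exact bounds [c, c]; an item first seen later carries an uncertainty of Δ − 1, which is usually much smaller than εN.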

Network Traffic Monitoring
- Scalability
  - tracking millions of ants to track a few elephants
  - Sample and Hold (SRAM) vs. random sampling (DRAM) [Varghese SIGCOMM 02]
- Flows in one hour: 1.7 M (Fix-West), 0.8 M (MCI) [Peterson GLOBECOM 99]
- AT&T collects 100 GB of NetFlow data each day [Garofalakis Tutorial SIGKDD 02]

DoS Attack
- DoS attack: occurs when a large volume of traffic from one or more hosts is directed at some network resource, such as a web server. This artificially high load denies service to legitimate users.
- Distributed DoS attack: the congestion is due neither to a single flow nor to a general increase in traffic, but to a well-defined subset of the traffic. DDoS attacks occurred in February 2000.

Data Warehouse
- Data warehouse: a subject-oriented, integrated, time-variant, and nonvolatile collection of data in support of management's decision-making process
- Task: OLAP
- Conceptual modeling: star schema

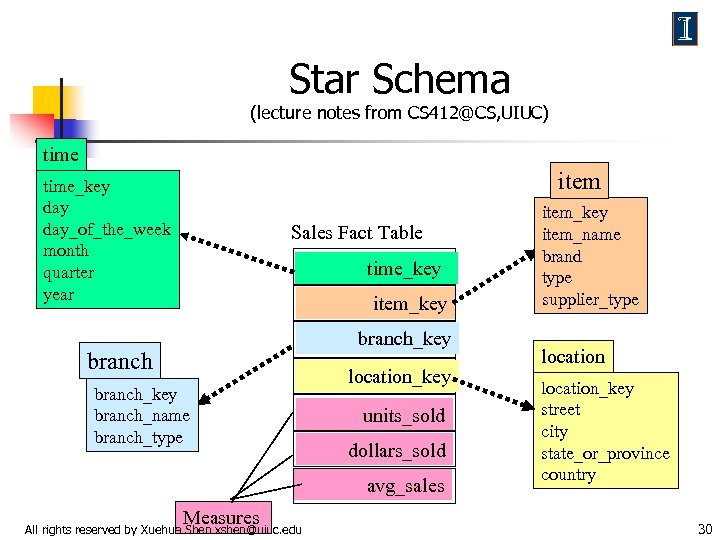

Star Schema (from lecture notes of CS 412, UIUC)
- Sales fact table: time_key, item_key, branch_key, location_key; measures: units_sold, dollars_sold, avg_sales
- time dimension: time_key, day_of_the_week, month, quarter, year
- item dimension: item_key, item_name, brand, type, supplier_type
- branch dimension: branch_key, branch_name, branch_type
- location dimension: location_key, street, city, state_or_province, country

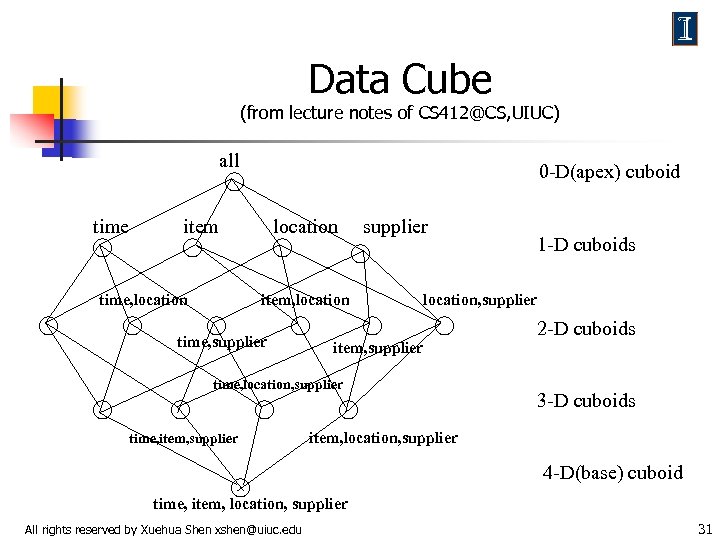

Data Cube (from lecture notes of CS 412, UIUC)
Lattice of cuboids over the dimensions time, item, location, supplier:
- 0-D (apex) cuboid: all
- 1-D cuboids: time; item; location; supplier
- 2-D cuboids: (time, item); (time, location); (time, supplier); (item, location); (item, supplier); (location, supplier)
- 3-D cuboids: (time, item, location); (time, item, supplier); (time, location, supplier); (item, location, supplier)
- 4-D (base) cuboid: (time, item, location, supplier)
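
The lattice is simply every subset of the dimension set, so n dimensions give 2^n cuboids. A short sketch; the function name `cuboids` is ours:

```python
from itertools import combinations


def cuboids(dimensions):
    """Every group-by subset of the dimensions: the 2**n cuboids of the lattice,
    from the 0-D apex () up to the n-D base cuboid."""
    return [combo
            for k in range(len(dimensions) + 1)   # k = cuboid dimensionality
            for combo in combinations(dimensions, k)]


lattice = cuboids(["time", "item", "location", "supplier"])
```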

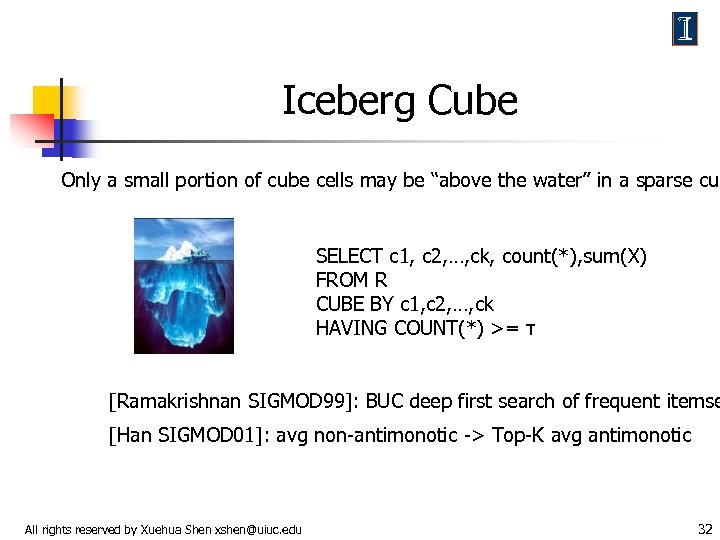

Iceberg Cube
Only a small portion of the cube cells may be "above the water" in a sparse cube:
SELECT c1, c2, …, ck, COUNT(*), SUM(X)
FROM R
CUBE BY c1, c2, …, ck
HAVING COUNT(*) >= τ
- [Ramakrishnan SIGMOD 99]: BUC, a depth-first search, as in frequent itemset mining
- [Han SIGMOD 01]: avg is not anti-monotonic -> top-k avg is anti-monotonic
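
A naive Python reading of the query above, restricted to COUNT(*) (the SUM(X) column is left out): compute every cell of every cuboid and keep those passing the HAVING clause, with "*" standing for an aggregated-away dimension. The function name and the "*" convention are our assumptions. BUC avoids this brute force by exploiting that COUNT is anti-monotonic: if a cell fails the threshold, every more specific cell below it fails too, so the search can stop there.

```python
from collections import Counter
from itertools import combinations


def iceberg_cube(rows, dims, min_count):
    """Naive iceberg cube (hypothetical helper): COUNT(*) for every cell of
    every cuboid, keeping cells with count >= min_count.

    rows: list of dicts; dims: list of dimension names.
    A cell is a tuple over `dims`, with "*" for dimensions not grouped on.
    """
    result = {}
    for k in range(len(dims) + 1):
        for group in combinations(dims, k):
            cells = Counter(
                tuple(row[d] if d in group else "*" for d in dims) for row in rows
            )
            for cell, count in cells.items():
                if count >= min_count:  # HAVING COUNT(*) >= tau
                    result[cell] = count
    return result
```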

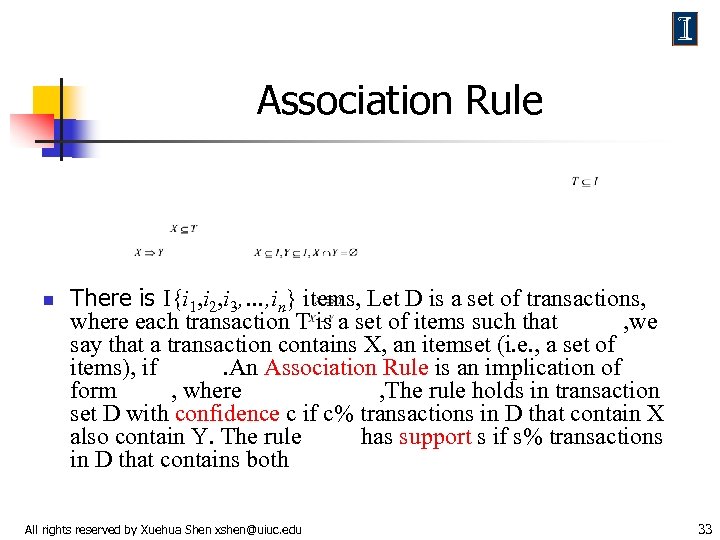

Association Rule
- Let I = {i_1, i_2, i_3, …, i_n} be a set of items, and let D be a set of transactions, where each transaction T is a set of items such that T ⊆ I. We say that a transaction T contains X, an itemset (i.e., a set of items), if X ⊆ T. An association rule is an implication of the form X ⇒ Y, where X ⊂ I, Y ⊂ I, and X ∩ Y = ∅. The rule X ⇒ Y holds in the transaction set D with confidence c if c% of the transactions in D that contain X also contain Y. The rule has support s if s% of the transactions in D contain both X and Y (i.e., X ∪ Y).
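
Support and confidence follow directly from these definitions. A minimal sketch with hypothetical helper names, returning fractions rather than percentages:

```python
def support(transactions, itemset):
    """Fraction of transactions that contain every item of `itemset`."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= set(t)) / len(transactions)


def confidence(transactions, x, y):
    """Fraction of the transactions containing X that also contain Y."""
    return support(transactions, set(x) | set(y)) / support(transactions, x)
```

On the toy transactions {beer, diaper}, {beer}, {diaper, milk}, {beer, diaper, milk}, the rule beer ⇒ diaper has support 0.5 and confidence 2/3.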

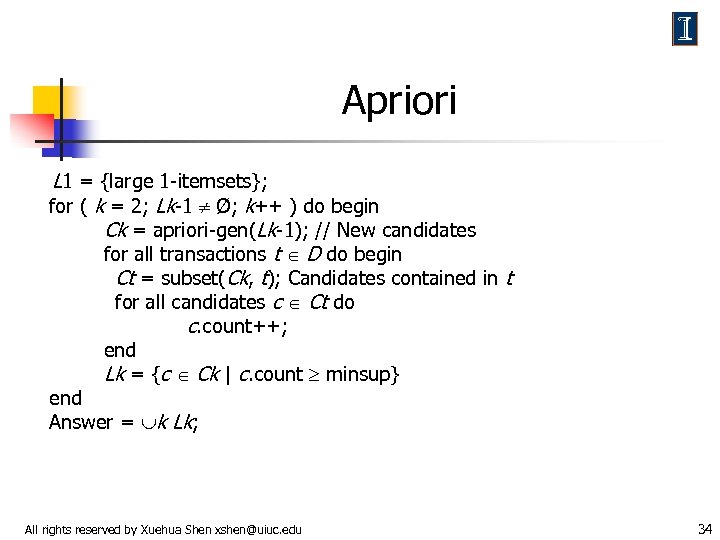

Apriori
L_1 = {large 1-itemsets};
for (k = 2; L_{k-1} ≠ ∅; k++) do begin
    C_k = apriori-gen(L_{k-1}); // new candidates
    for all transactions t ∈ D do begin
        C_t = subset(C_k, t); // candidates contained in t
        for all candidates c ∈ C_t do
            c.count++;
    end
    L_k = {c ∈ C_k | c.count ≥ minsup}
end
Answer = ∪_k L_k;

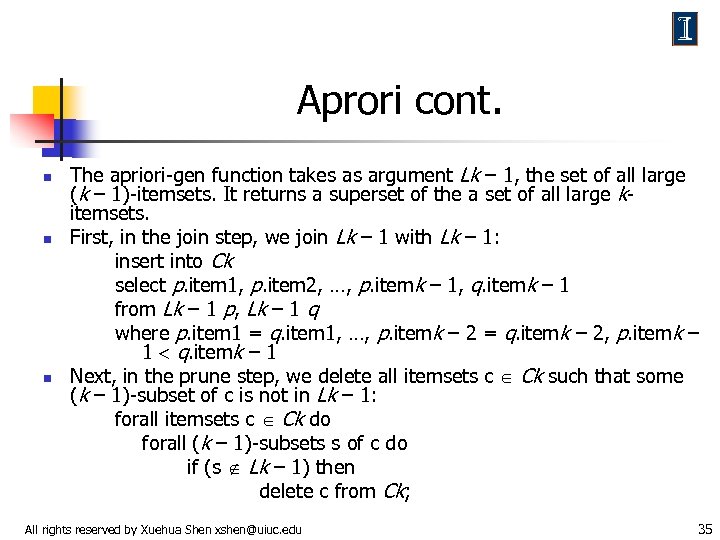

Apriori (cont.)
- The apriori-gen function takes as argument L_{k-1}, the set of all large (k-1)-itemsets, and returns a superset of the set of all large k-itemsets.
- First, in the join step, we join L_{k-1} with L_{k-1}:
    insert into C_k
    select p.item_1, p.item_2, …, p.item_{k-1}, q.item_{k-1}
    from L_{k-1} p, L_{k-1} q
    where p.item_1 = q.item_1, …, p.item_{k-2} = q.item_{k-2}, p.item_{k-1} < q.item_{k-1}
- Next, in the prune step, we delete all itemsets c ∈ C_k such that some (k-1)-subset of c is not in L_{k-1}:
    forall itemsets c ∈ C_k do
        forall (k-1)-subsets s of c do
            if (s ∉ L_{k-1}) then delete c from C_k;
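
The Apriori pseudocode, including the join and prune steps of apriori-gen, can be turned into a small runnable Python sketch. Assumptions: itemsets are sorted tuples of mutually comparable items, minsup is an absolute count, and `subset(C_k, t)` is replaced by a plain scan over all candidates rather than a specialized data structure.

```python
from itertools import combinations


def apriori_gen(prev_level, k):
    """Join + prune. prev_level: set of sorted (k-1)-tuples (the large itemsets)."""
    candidates = set()
    for p in prev_level:
        for q in prev_level:
            # Join: equal first k-2 items, and p's last item < q's last item.
            if p[:-1] == q[:-1] and p[-1] < q[-1]:
                c = p + (q[-1],)
                # Prune: every (k-1)-subset of c must itself be large.
                if all(s in prev_level for s in combinations(c, k - 1)):
                    candidates.add(c)
    return candidates


def apriori(transactions, minsup_count):
    """Return {itemset tuple: count} for every itemset with count >= minsup_count."""
    transactions = [set(t) for t in transactions]
    counts = {}
    for t in transactions:
        for item in t:
            counts[(item,)] = counts.get((item,), 0) + 1
    level = {c: n for c, n in counts.items() if n >= minsup_count}  # L_1
    answer = dict(level)
    k = 2
    while level:
        candidates = apriori_gen(set(level), k)                     # C_k
        counts = {c: 0 for c in candidates}
        for t in transactions:
            for c in candidates:
                if t.issuperset(c):
                    counts[c] += 1
        level = {c: n for c, n in counts.items() if n >= minsup_count}  # L_k
        answer.update(level)
        k += 1
    return answer
```

On the five-transaction database from the FP-tree slide with minsup = 3, this finds 18 frequent itemsets, the largest being (a, c, f, m).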

Frequent Pattern Tree (FP Tree) [Han SIGMOD 99]
- Scan the database twice to construct the FP tree:
  - the first scan finds the frequent items
  - the second scan builds the FP tree (items sorted by frequency)
  - the FP tree stores the frequent-pattern information completely and compactly
- Run the algorithm (FP-growth) recursively over the tree, using the conditional pattern base of each item

Example database:
- TID 100: {f, a, c, d, g, i, m, p}
- TID 200: {a, b, c, f, l, m, o}
- TID 300: {b, f, h, j, o}
- TID 400: {b, c, k, s, p}
- TID 500: {a, f, c, e, l, p, m, n}

Frequent items (item: frequency): f 4, c 4, a 3, b 3, m 3, p 3
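
A sketch of the two scans, assuming transactions are sets of single-character items as in the example. The names `FPNode` and `build_fp_tree` are ours, node-links from the header table are omitted, and because f/c (and a/b/m/p) tie on frequency, the sketch accepts an explicit header order so the tree can match the slide.

```python
from collections import Counter


class FPNode:
    """One FP-tree node: an item, its count, and children keyed by item."""

    def __init__(self, item, parent):
        self.item = item
        self.count = 0
        self.parent = parent
        self.children = {}


def build_fp_tree(transactions, min_count, order=None):
    """Hypothetical two-scan FP-tree construction; returns (root, header)."""
    # Scan 1: count every item and keep the frequent ones.
    counts = Counter(item for t in transactions for item in t)
    frequent = {i for i, c in counts.items() if c >= min_count}
    if order is None:
        # Descending frequency; ties broken alphabetically (an arbitrary choice).
        order = sorted(frequent, key=lambda i: (-counts[i], i))
    rank = {item: r for r, item in enumerate(order)}
    root = FPNode(None, None)
    # Scan 2: insert each transaction's frequent items in header-table order,
    # sharing nodes along common prefixes.
    for t in transactions:
        node = root
        for item in sorted((i for i in t if i in frequent), key=rank.__getitem__):
            child = node.children.get(item)
            if child is None:
                child = node.children[item] = FPNode(item, node)
            child.count += 1
            node = child
    header = {i: counts[i] for i in order}
    return root, header
```

Called with `min_count=3` and `order=["f", "c", "a", "b", "m", "p"]`, the root gets children f:4 and c:1, and the path f:4 → c:3 → a:3 → m:2 → p:2 appears as in the tree on the next slide.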

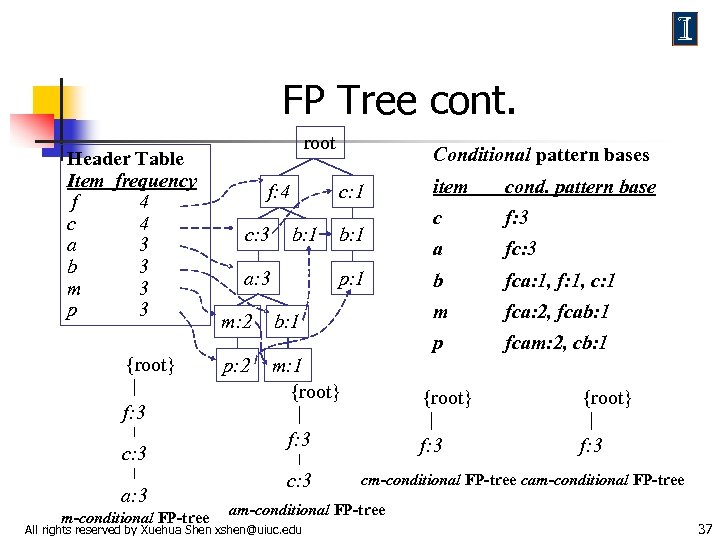

FP Tree (cont.)
Header table (item: frequency): f 4, c 4, a 3, b 3, m 3, p 3
FP tree: {root} → f:4 → c:3 → a:3 → m:2 → p:2; below a:3 also b:1 → m:1; below f:4 also b:1; and {root} → c:1 → b:1 → p:1
Conditional pattern bases (item: cond. pattern base):
- c: f:3
- a: fc:3
- b: fca:1, f:1, c:1
- m: fca:2, fcab:1
- p: fcam:2, cb:1
m-conditional FP-tree: {root} → f:3 → c:3 → a:3
(The figure also shows the cm-conditional and cam-conditional FP-trees obtained when mining the m-conditional tree recursively.)
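
The conditional pattern base of an item is the multiset of prefix paths that lead to it. Since every tree path is a shared prefix of the reordered transactions, the same multisets can be computed directly from those transactions, which is what this sketch does instead of walking node-links; the function name is hypothetical.

```python
from collections import Counter


def conditional_pattern_bases(transactions, header_order, min_count):
    """Map each frequent item to a Counter of prefix paths (tuples) -> count.

    Each transaction is reduced to its frequent items, sorted by
    header_order; every item's prefix in that list is one path in its base.
    """
    counts = Counter(i for t in transactions for i in t)
    rank = {i: r for r, i in enumerate(header_order) if counts[i] >= min_count}
    bases = {i: Counter() for i in rank}
    for t in transactions:
        path = sorted((i for i in t if i in rank), key=rank.__getitem__)
        for pos, item in enumerate(path):
            if pos > 0:  # the first item on a path has an empty prefix
                bases[item][tuple(path[:pos])] += 1
    return bases
```

With the header order f, c, a, b, m, p and `min_count=3`, `bases["m"]` is {fca: 2, fcab: 1}. Counting the items in that base gives f:3, c:3, a:3, b:1, so only f, c and a survive, which is exactly the m-conditional FP-tree f:3 → c:3 → a:3.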

Approximate Algorithm (cont.)
- Approximate answers with deterministic bounds
  - the algorithm computes only an approximate answer, but with guaranteed bounds on the error
- Approximate answers with probabilistic bounds
  - the algorithm computes only an approximate answer, and with high probability 1 − δ the computed answer is within the error bounds

Sticky Sampling

Sticky Sampling (cont.)
- Gradually adjust the probability of selection
- Decrease the frequencies in the summary by tossing coins
- Expected number of entries in the summary: (2/ε) ln(1/(sδ))
- Independent of N!
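
A sketch of Sticky Sampling as we read the paper, with support s, error ε and failure probability δ: let t = (1/ε) ln(1/(sδ)); the first 2t elements are sampled with rate r = 1, the next 2t with r = 2, the next 4t with r = 4, and so on. A new element enters the summary with probability 1/r, while elements already in the summary are always counted. Whenever r doubles, each entry tosses a fair coin until heads and loses one count per tails, and is deleted if it reaches 0. The class name and the seeded random generator are our assumptions.

```python
import math
import random


class StickySampler:
    """Hypothetical sketch of Sticky Sampling (support s, error epsilon,
    failure probability delta)."""

    def __init__(self, s, epsilon, delta, seed=None):
        self.s, self.epsilon = s, epsilon
        self.t = math.ceil(math.log(1 / (s * delta)) / epsilon)
        self.rate = 1                   # r: new items are selected w.p. 1/r
        self.next_change = 2 * self.t   # stream position where r next doubles
        self.counts = {}
        self.n = 0
        self.rng = random.Random(seed)

    def add(self, item):
        if self.n == self.next_change:
            self._double_rate()
        self.n += 1
        if item in self.counts:
            self.counts[item] += 1      # tracked items are always counted
        elif self.rng.random() < 1 / self.rate:
            self.counts[item] = 1       # new items are sampled

    def _double_rate(self):
        self.rate *= 2
        self.next_change += self.rate * self.t  # r = 2 covers 2t items, r = 4 covers 4t, ...
        for item in list(self.counts):
            # Toss a fair coin until heads; every tails removes one count.
            while self.counts[item] > 0 and self.rng.random() < 0.5:
                self.counts[item] -= 1
            if self.counts[item] == 0:
                del self.counts[item]

    def frequent(self):
        """Entries whose counter is at least (s - epsilon) * N."""
        threshold = (self.s - self.epsilon) * self.n
        return {k for k, c in self.counts.items() if c >= threshold}
```

The output rule is the same as in Lossy Counting; what changes is that the guarantees hold only with probability at least 1 − δ, in exchange for a summary whose expected size does not grow with N.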

Trie
- Trie: a tree for storing strings in which there is one node for every common prefix. The strings are stored in extra leaf nodes.
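
A minimal Python trie, assuming string keys. Instead of the extra leaf nodes mentioned on the slide, it marks the node that ends a stored string with a flag, a common equivalent design; the class and method names are ours.

```python
class TrieNode:
    def __init__(self):
        self.children = {}   # next character -> child node
        self.is_end = False  # True if a stored string ends here


class Trie:
    def __init__(self):
        self.root = TrieNode()

    def insert(self, word):
        node = self.root
        for ch in word:
            node = node.children.setdefault(ch, TrieNode())
        node.is_end = True

    def contains(self, word):
        """True only if `word` itself was inserted."""
        node = self._find(word)
        return node is not None and node.is_end

    def starts_with(self, prefix):
        """True if some stored string has this prefix."""
        return self._find(prefix) is not None

    def _find(self, prefix):
        node = self.root
        for ch in prefix:
            node = node.children.get(ch)
            if node is None:
                return None
        return node
```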

Sliding Window
- We do not evaluate the query over the entire past history of the data streams, but only over sliding windows of recent data from the streams.
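
The simplest way to restrict counting to recent data is to keep the window itself. This hypothetical baseline (names ours) gives exact frequencies over the last `size` elements, but its memory grows with the window size, which is the cost a sliding-window streaming algorithm tries to avoid.

```python
from collections import Counter, deque


class SlidingWindowCounter:
    """Exact item frequencies over the last `size` stream elements."""

    def __init__(self, size):
        self.size = size
        self.window = deque()
        self.counts = Counter()

    def add(self, item):
        self.window.append(item)
        self.counts[item] += 1
        if len(self.window) > self.size:
            old = self.window.popleft()  # expire the oldest element
            self.counts[old] -= 1
            if self.counts[old] == 0:
                del self.counts[old]

    def frequent(self, s):
        """Items making up at least a fraction s of the current window."""
        return {k for k, c in self.counts.items() if c >= s * len(self.window)}
```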

Blocking Operator
- A query operator that is unable to produce the first tuple of its output until it has seen its entire input, e.g., sorting and most aggregation operators.
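
Python generators show the difference: a filter can emit its first output after reading one input, while a sort cannot emit anything until it has consumed everything. The function names, including the `tracked` helper that logs which inputs were pulled, are hypothetical.

```python
def streaming_filter(source, predicate):
    """Non-blocking: yields each matching tuple as soon as it is read."""
    for row in source:
        if predicate(row):
            yield row


def blocking_sort(source):
    """Blocking: sorted() must consume the whole input before yielding anything."""
    yield from sorted(source)


def tracked(values, log):
    """Records each value in `log` at the moment the consumer pulls it."""
    for v in values:
        log.append(v)
        yield v
```

Pulling the first output from `streaming_filter(tracked([3, 1, 2], log), ...)` reads only one input, whereas the first output of `blocking_sort` is available only after all three inputs have been read.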
