- Number of slides: 29

Welcome to CW 2007!!! www.cs.wisc.edu/~miron

The Condor Project (Established ’85)
Distributed Computing research performed by a team of ~40 faculty, full-time staff and students who
- face software/middleware engineering challenges in a UNIX/Linux/Windows/OS X environment,
- are involved in national and international collaborations,
- interact with users in academia and industry,
- maintain and support a distributed production environment (more than 4000 CPUs at UW),
- and educate and train students.

“… Since the early days of mankind the primary motivation for the establishment of communities has been the idea that by being part of an organized group the capabilities of an individual are improved. The great progress in the area of inter-computer communication led to the development of means by which stand-alone processing subsystems can be integrated into multicomputer ‘communities’. …” Miron Livny, “Study of Load Balancing Algorithms for Decentralized Distributed Processing Systems”, Ph.D. thesis, July 1983.

A “good year” for the principles and concepts we pioneered and the technologies that implement them

In August 2006 the UW Academic Planning Committee approved the Center for High Throughput Computing (CHTC). The L&S College created two staff positions for the center.

Main Threads of Activities
- Distributed Computing Research – develop and evaluate new concepts, frameworks and technologies
- Keep Condor “flight worthy” and support our users
- The Open Science Grid (OSG) – build and operate a national High Throughput Computing infrastructure
- The Grid Laboratory Of Wisconsin (GLOW) – build, maintain and operate a distributed computing and storage infrastructure on the UW campus
- The NSF Middleware Initiative – develop, build and operate a national Build and Test facility powered by Metronome

Later today: Incorporating VM technologies (Condor VMs are now called slots) and improving support for parallel applications

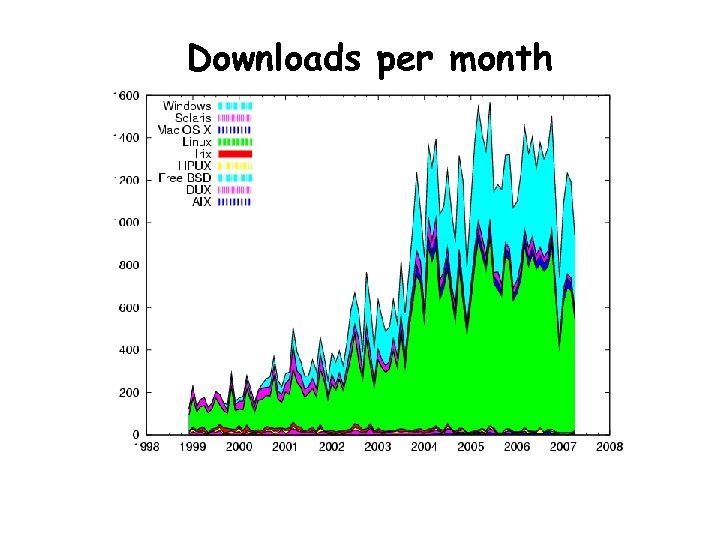

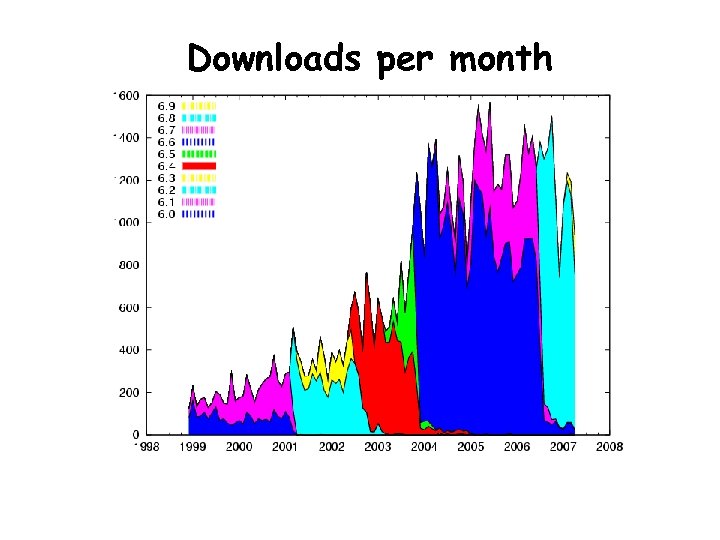

Downloads per month

Software Development for Cyberinfrastructure (NSF 07-503). Posted October 11, 2006. All awards are required to use NMI Build and Test services, or an NSF designated alternative, to support their software development and testing. Details of the NMI Build and Test facility can be found at http://nmi.cs.wisc.edu/.

Later today: Working with Red Hat on integrating Condor into Linux. Miron Livny and Michael Litzkow, "Making Workstations a Friendly Environment for Batch Jobs", Third IEEE Workshop on Workstation Operating Systems, April 1992, Key Biscayne, Florida. http://www.cs.wisc.edu/condor/publications/doc/friendly-wos3.pdf

06/27/97: This month, NCSA's (National Center for Supercomputing Applications) Advanced Computing Group (ACG) will begin testing Condor, a software system developed at the University of Wisconsin that promises to expand computing capabilities through efficient capture of cycles on idle machines. The software, operating within an HTC (High Throughput Computing) rather than a traditional HPC (High Performance Computing) paradigm, organizes machines into clusters, called pools, or collections of clusters called flocks, that can exchange resources. Condor then hunts for idle workstations to run jobs. When the owner resumes computing, Condor migrates the job to another machine. To learn more about recent Condor developments, HPCwire interviewed Miron Livny, professor of Computer Science, University of Wisconsin at Madison and principal investigator for the Condor Project.
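
The cycle-scavenging behavior described in this excerpt (run on idle workstations, move the job when the owner comes back) can be illustrated with a toy model. This is only a sketch under stated assumptions: the `Machine` class and the `place` and `owner_returns` functions are hypothetical names invented here, and the first-fit placement is a stand-in, not Condor's actual matchmaking or migration mechanism.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Machine:
    """Hypothetical workstation in a pool (illustration only)."""
    name: str
    owner_active: bool = False   # True while the workstation's owner is using it
    job: Optional[str] = None    # name of the guest job currently running, if any


def place(job: str, pool: List[Machine]) -> Optional[Machine]:
    """Put the job on the first idle, unoccupied machine; return it, or None."""
    for machine in pool:
        if not machine.owner_active and machine.job is None:
            machine.job = job
            return machine
    return None


def owner_returns(machine: Machine, pool: List[Machine]) -> Optional[Machine]:
    """Owner resumes work: evict the guest job and try to place it elsewhere."""
    machine.owner_active = True
    job, machine.job = machine.job, None
    if job is None:
        return None
    return place(job, pool)


pool = [Machine("ws1"), Machine("ws2", owner_active=True), Machine("ws3")]
first = place("sim-001", pool)        # lands on ws1, the first idle machine
second = owner_returns(first, pool)   # ws1's owner is back, so the job moves
print(first.name, "->", second.name)
```

In this toy run the job starts on `ws1`, skips `ws2` because its owner is active, and ends up on `ws3` once the owner of `ws1` returns.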

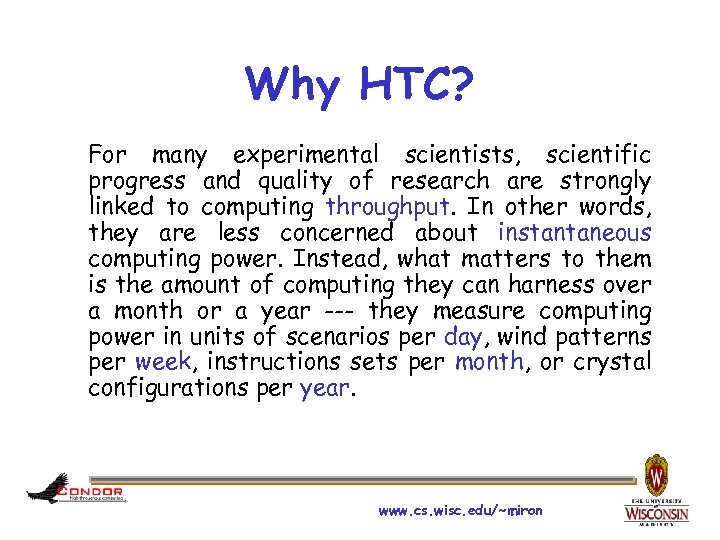

Why HTC? For many experimental scientists, scientific progress and quality of research are strongly linked to computing throughput. In other words, they are less concerned about instantaneous computing power. Instead, what matters to them is the amount of computing they can harness over a month or a year: they measure computing power in units of scenarios per day, wind patterns per week, instruction sets per month, or crystal configurations per year.

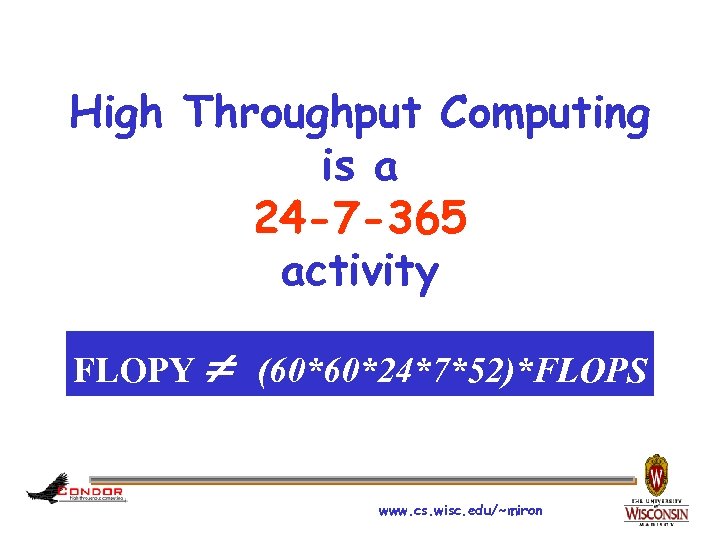

High Throughput Computing is a 24-7-365 activity. FLOPY ≠ (60*60*24*7*52)*FLOPS
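
One reading of this slide is that floating-point operations per year (FLOPY) are not simply the peak rate multiplied by the number of seconds in a year, because machines are not available or fully used all the time. The sketch below makes that arithmetic concrete. The `availability` and `efficiency` factors are assumptions introduced here for illustration; the slide does not define such a model.

```python
SECONDS_PER_YEAR = 60 * 60 * 24 * 7 * 52  # the factor from the slide: 31,449,600 s


def nominal_flopy(peak_flops: float) -> float:
    """Upper bound: the peak rate sustained during every second of the year."""
    return SECONDS_PER_YEAR * peak_flops


def delivered_flopy(peak_flops: float, availability: float, efficiency: float) -> float:
    """Hypothetical model: scale the bound by uptime and by achieved fraction of peak."""
    if not (0.0 <= availability <= 1.0 and 0.0 <= efficiency <= 1.0):
        raise ValueError("availability and efficiency must be in [0, 1]")
    return nominal_flopy(peak_flops) * availability * efficiency


peak = 1e9  # assumed 1 GFLOPS machine, chosen only for the example
nominal = nominal_flopy(peak)
delivered = delivered_flopy(peak, availability=0.9, efficiency=0.5)
print(f"nominal:   {nominal:.3e} FLOPY")
print(f"delivered: {delivered:.3e} FLOPY")
```

With these assumed factors the delivered figure is 45% of the nominal bound, which is the gap the slide draws attention to.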

The Grid: Blueprint for a New Computing Infrastructure. Edited by Ian Foster and Carl Kesselman, July 1998, 701 pages. The grid promises to fundamentally change the way we think about and use computing. This infrastructure will connect multiple regional and national computational grids, creating a universal source of pervasive and dependable computing power that supports dramatically new classes of applications. The Grid provides a clear vision of what computational grids are, why we need them, who will use them, and how they will be programmed.

“… We claim that these mechanisms, although originally developed in the context of a cluster of workstations, are also applicable to computational grids. In addition to the required flexibility of services in these grids, a very important concern is that the system be robust enough to run in “production mode” continuously even in the face of component failures. …” Miron Livny & Rajesh Raman, "High Throughput Resource Management", in “The Grid: Blueprint for a New Computing Infrastructure”.

Later today: Working with IBM on supporting HTC on the Blue Gene

Taking HTC to the National Level

The Open Science Grid (OSG): Taking HTC to the National Level. Miron Livny, OSG PI and Facility Coordinator, University of Wisconsin-Madison, 3/19/2018

The OSG vision: Transform processing and data intensive science through a cross-domain self-managed national distributed cyber-infrastructure that brings together campus and community infrastructure and facilitates the needs of Virtual Organizations at all scales

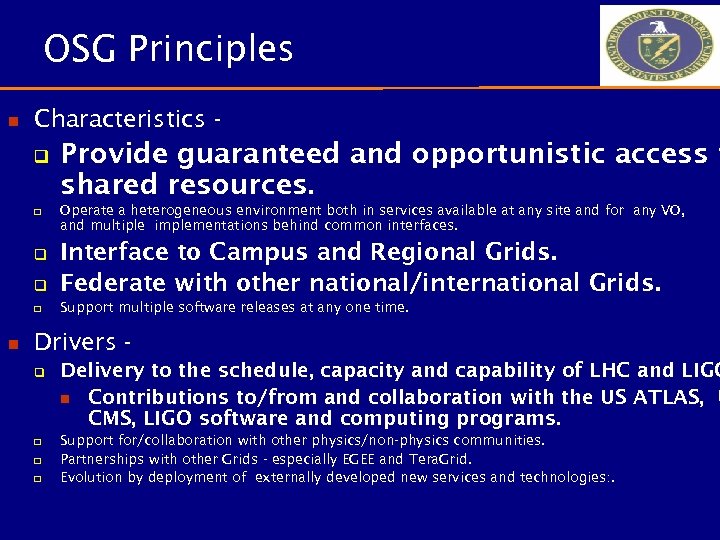

OSG Principles
- Characteristics
  - Provide guaranteed and opportunistic access to shared resources.
  - Operate a heterogeneous environment both in services available at any site and for any VO, and multiple implementations behind common interfaces.
  - Interface to Campus and Regional Grids.
  - Federate with other national/international Grids.
  - Support multiple software releases at any one time.
- Drivers
  - Delivery to the schedule, capacity and capability of LHC and LIGO:
    - Contributions to/from and collaboration with the US ATLAS, US CMS, LIGO software and computing programs.
  - Support for/collaboration with other physics/non-physics communities.
  - Partnerships with other Grids, especially EGEE and TeraGrid.
  - Evolution by deployment of externally developed new services and technologies.

Tomorrow: Building Campus Grids with Condor

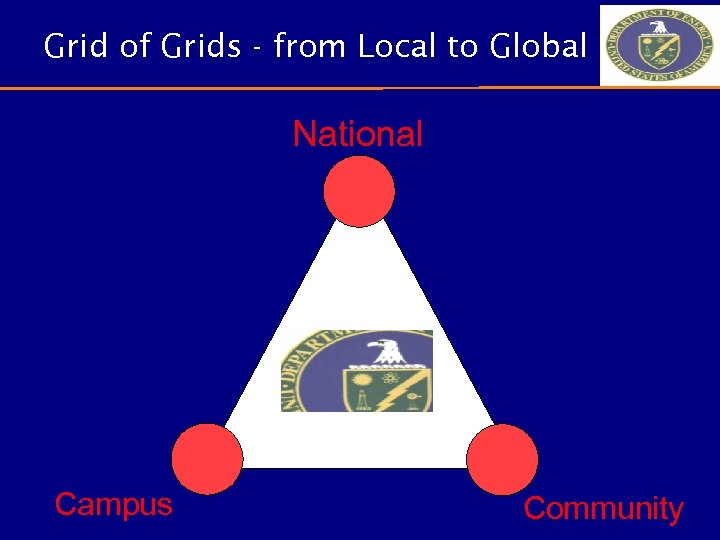

Grid of Grids - from Local to Global
- National
- Campus
- Community

Who are you?
- A resource can be accessed by a user via the campus, community or national grid.
- A user can access a resource with a campus, community or national grid identity.

Tomorrow: Just-in-time scheduling with Condor “glide-ins” (scheduling overlays)

OSG challenges
- Develop the organizational and management structure of a consortium that drives such a Cyber Infrastructure
- Develop the organizational and management structure for the project that builds, operates and evolves such Cyber Infrastructure
- Maintain and evolve a software stack capable of offering powerful and dependable capabilities that meet the science objectives of the NSF and DOE scientific communities
- Operate and evolve a dependable and well-managed distributed facility

The OSG Project
- Co-funded by DOE and NSF at an annual rate of ~$6M for 5 years starting FY-07.
- 15 institutions involved – 4 DOE Labs and 11 universities
- Currently main stakeholders are from physics - US LHC experiments, LIGO, STAR experiment, the Tevatron Run II and Astrophysics experiments
- A mix of DOE-Lab and campus resources
- Active “engagement” effort to add new domains and resource providers to the OSG consortium

- Security
- Workflows
- Firewalls
- Scalability
- Scheduling
- …

Thank you for building such a wonderful community
