ed62477b62a10761bf2f1e5b5a3d8d98.ppt

- Number of slides: 87

Web Spam Yonatan Ariel SDBI 2005 The Hebrew University of Jerusalem Based on the work of Gyongyi, Zoltan; Berkhin, Pavel; Garcia-Molina, Hector; Pedersen, Jan Stanford University

Contents • What is web spam • Combating web spam – TrustRank • Combating web spam – Mass Estimation • Conclusion

Web Spam • Actions intended to mislead search engines into ranking some pages higher than they deserve • Search engines are the entryways to the web § Financial gains

Consequences • Decreased search results quality § “Kaiser pharmacy” returns techdictionary.com • Increased cost of each processed query § Search engine indexes are inflated with useless pages • The first step in combating spam is understanding it

Search Engines • High quality results, i.e. pages that are § Relevant for a specific query • Textual similarity § Important • Popularity • Search engines combine relevance and importance in order to compute ranking

Definition revised • any deliberate human action that is meant to trigger an unjustifiably favorable relevance or importance for some web page, considering the page’s true value

Search Engine Optimizers • Engage in spamming (according to our definition) • Ethical methods § Finding relevant directories to which a site can be submitted § Using a reasonably sized description meta tag § Using a short and relevant page title to name each page

Spamming Techniques • Boosting techniques § Achieving high relevance / importance • Hiding techniques § Hiding the boosting techniques We’ll cover them both

Techniques • Boosting Techniques § Term Spamming § Link Spamming • Hiding Techniques

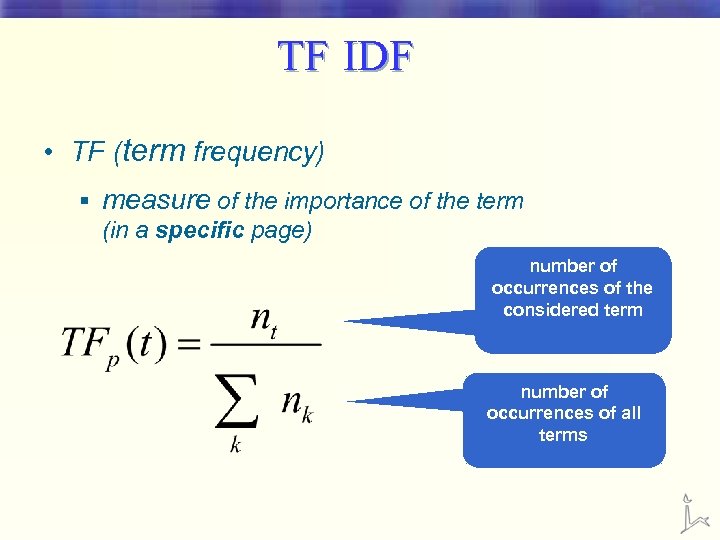

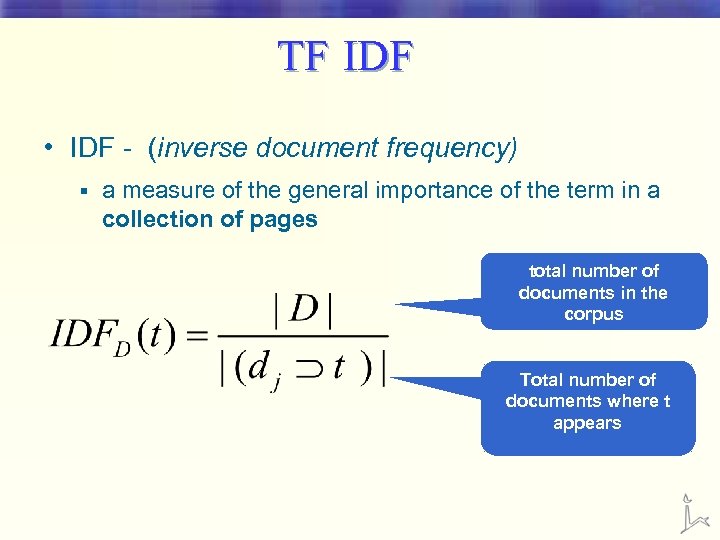

TF-IDF • TF (term frequency) § A measure of the importance of the term in a specific page: $\mathrm{tf}(t, d) = \dfrac{\text{number of occurrences of the considered term } t \text{ in } d}{\text{number of occurrences of all terms in } d}$

TF-IDF • IDF (inverse document frequency) § A measure of the general importance of the term in a collection of pages: $\mathrm{idf}(t) = \log \dfrac{\text{total number of documents in the corpus}}{\text{total number of documents where } t \text{ appears}}$

TF-IDF • A high weight in tf-idf is reached by a high term frequency (in the given document) and a low document frequency of the term in the whole collection of documents. • Spammers: § Make a page relevant for a large number of queries § Make a page very relevant for a specific query
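The two quantities above can be sketched in a few lines of Python. This is a minimal illustration, assuming the common logarithmic form of IDF; the three-page corpus and the function names are hypothetical and only show why repeating a term (body spam) raises a page's weight for that term.

```python
import math
from collections import Counter


def tf(term, doc_tokens):
    """Occurrences of `term` divided by occurrences of all terms in the page."""
    if not doc_tokens:
        return 0.0
    return Counter(doc_tokens)[term] / len(doc_tokens)


def idf(term, corpus):
    """log(total documents / documents where `term` appears); 0 if it never appears."""
    containing = sum(1 for doc in corpus if term in doc)
    return math.log(len(corpus) / containing) if containing else 0.0


def tf_idf(term, doc_tokens, corpus):
    return tf(term, doc_tokens) * idf(term, corpus)


# Hypothetical corpus: the last page repeats one term (body spam).
corpus = [
    "cheap flights to rome".split(),
    "history of rome".split(),
    "cheap cheap cheap cheap tickets".split(),
]
honest, spam = corpus[0], corpus[2]
print(tf_idf("cheap", honest, corpus))
print(tf_idf("cheap", spam, corpus))  # larger: repetition inflates TF
```

Because IDF depends on the whole collection, a spammer mostly controls the TF factor, which is why repetition and dumping target it.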

Term Spamming Techniques • Body Spam § Simplest, oldest, most popular • Title Spam § Higher weights • Meta tag spam § Low priority § <META NAME="keywords" CONTENT="jew, jews, jew watch, jews and communism, jews and banking, jews and banks, jews in government. . history, diversity, Red Revolution, USSR, jews in government , holocaust, atrocities, defamation, diversity, civil rights, plurali, bible, Bible, murder, crime, Trotsky, genocide, NKVD, Russia, New York, mafia, spy, spies, Rosenberg">

Term Spamming Techniques (cont’d) • Anchor text spam § <a href="target.html"> free, great deals, cheap, free </a> • URL § Buy-canon-rebel-20d-lens-case.camerasx.com

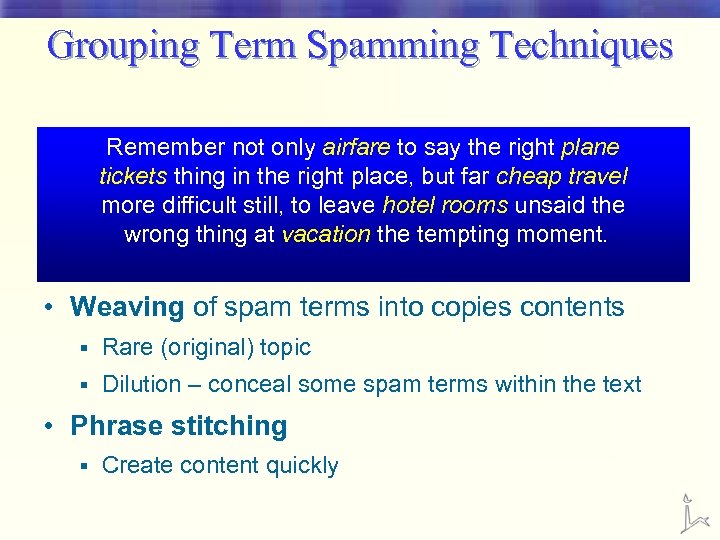

Grouping Term Spamming Techniques • Repetition § Increased relevance for a few specific queries • Dumping of a large number of unrelated terms § Effective against queries with rare, obscure terms • Weaving of spam terms into copied contents § Rare (original) topic § Dilution – conceal some spam terms within the text § Example: “Remember not only airfare to say the right plane tickets thing in the right place, but far cheap travel more difficult still, to leave hotel rooms unsaid the wrong thing at vacation the tempting moment.” • Phrase stitching § Create content quickly

Techniques • Boosting Techniques § Term Spamming § Link Spamming • Hiding Techniques

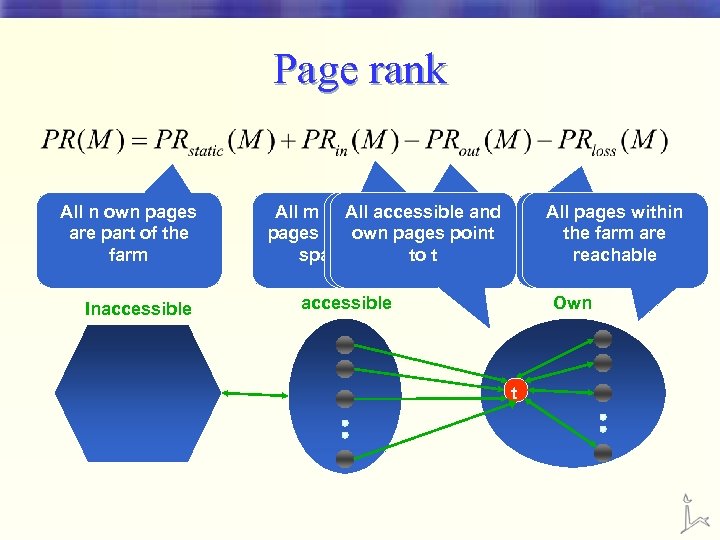

Three Types Of Pages On The Web • Inaccessible § Spammers cannot modify • Accessible § Can be modified in a limited way • Own pages § We call a group of own pages a spam farm

First Algorithm - HITS • Assigns global hub and authority scores to each page • Circular definition: § Important hub pages are those that point to many important authority pages § Important authority pages are those pointed to by many hubs • Hub scores can be easily spammed § Adding outgoing links to a large number of well-known, reputable pages • Authority score is more complicated § The more incoming links, the better
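The circular definition above is usually resolved by iterating the two updates until the scores settle. The following is a minimal sketch, not a production implementation; the tiny graph, the node names, and the iteration count are hypothetical. It shows how a page that merely links to every reputable authority ends up with the highest hub score.

```python
def hits(edges, iterations=50):
    """Iteratively compute hub and authority scores for a directed graph."""
    nodes = sorted({n for edge in edges for n in edge})
    hub = {n: 1.0 for n in nodes}
    auth = {n: 1.0 for n in nodes}
    for _ in range(iterations):
        # Authority: sum of the hub scores of the pages pointing to this page.
        auth = {n: sum(hub[p] for p, q in edges if q == n) for n in nodes}
        # Hub: sum of the authority scores of the pages this page points to.
        hub = {n: sum(auth[q] for p, q in edges if p == n) for n in nodes}
        # Normalize so the scores stay bounded.
        a_norm = sum(v * v for v in auth.values()) ** 0.5 or 1.0
        h_norm = sum(v * v for v in hub.values()) ** 0.5 or 1.0
        auth = {n: v / a_norm for n, v in auth.items()}
        hub = {n: v / h_norm for n, v in hub.items()}
    return hub, auth


# Hypothetical graph: "a1".."a3" are reputable authorities, "h" is an honest
# hub linking to two of them, and "spam" simply links to all three.
edges = [("h", "a1"), ("h", "a2"),
         ("spam", "a1"), ("spam", "a2"), ("spam", "a3")]
hub, auth = hits(edges)
print(hub["spam"] > hub["h"])
```

Boosting the authority score is harder because it requires incoming links from pages the spammer may not control.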

Second Algorithm - PageRank • A family of algorithms for assigning numerical weightings to hyperlinked documents • The PageRank value of a page reflects the frequency of hits on that page by a random surfer § It is the probability of being at that page after lots of clicks § We continue at random from a sink page

PageRank: spam farm around a target page t (figure with inaccessible, accessible, and own pages) • All n own pages are part of the farm • All m accessible pages point to the spam farm • All accessible and own pages point to t • Links pointing outside the spam farm are suppressed • No vote gets lost (each page has an outgoing link) • All pages within the farm are reachable

Techniques – Outgoing links • Manually adding outgoing links to well-known hosts; increased hub score § Directory sites • dmoz.org • Yahoo! Directory § Creating a massive outgoing link structure quickly

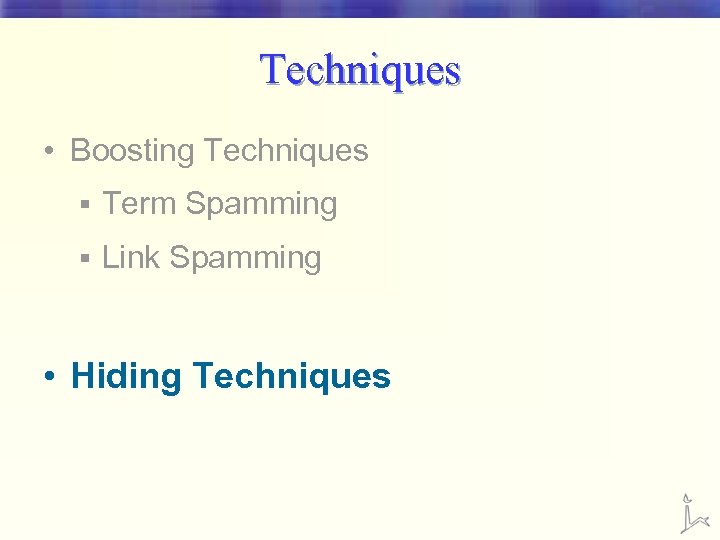

Techniques – Incoming Links • Honey-pot – useful resource • Infiltrate a web directory • Links on blogs, guest books, wikis § Google’s tag – <a href="http://www.example.com/" rel="nofollow">discount</a> • Link exchange • Buy expired domains • Create own spam farm

Techniques • Boosting Techniques § Term Spamming § Link Spamming • Hiding Techniques

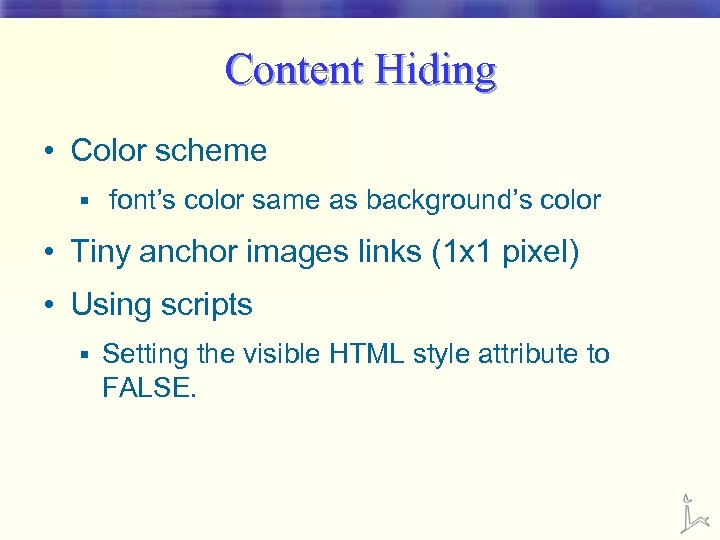

Content Hiding • Color scheme § Font color same as the background color • Tiny anchor-image links (1 x 1 pixel) • Using scripts § Setting the visible HTML style attribute to FALSE

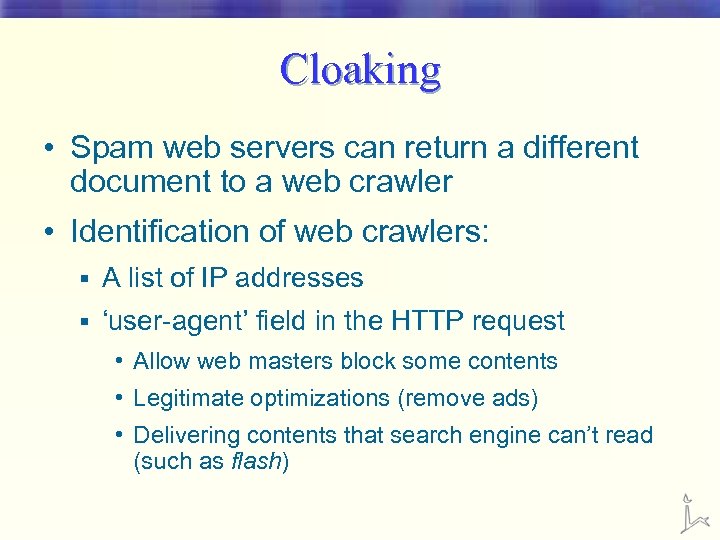

Cloaking • Spam web servers can return a different document to a web crawler • Identification of web crawlers: § A list of IP addresses § ‘user-agent’ field in the HTTP request • Allows webmasters to block some content • Legitimate optimizations (remove ads) • Delivering content that search engines can’t read (such as Flash)

Redirection • Automatically redirecting the browser to another URL • Refresh meta tag in the header of an HTML document § <meta http-equiv="refresh" content="0; url=target.html"> • Simple to identify • Scripts § <script language="javascript"> location.replace("target.html") </script>

How can we fight it? • IDENTIFY instances of spam § Stop crawling / indexing such pages • PREVENT spamming § Avoid cloaking – identifying as regular web browsers • COUNTERBALANCE the effect of spamming § Use variation of the ranking methods
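One way to act on the "identify as a regular web browser" idea is to fetch the same URL twice, once with the crawler's user-agent and once with a browser-like one, and compare the two copies. The sketch below covers only the comparison step; the function names, the Jaccard measure, and the 0.5 threshold are assumptions chosen for illustration, and legitimately dynamic pages can also differ between fetches.

```python
def jaccard(a_tokens, b_tokens):
    """Jaccard similarity of two token collections (1.0 when both are empty)."""
    a, b = set(a_tokens), set(b_tokens)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def looks_cloaked(crawler_copy, browser_copy, threshold=0.5):
    """Flag a URL when the copy served to the crawler differs strongly from
    the copy served to a browser-like client (hypothetical threshold)."""
    similarity = jaccard(crawler_copy.lower().split(), browser_copy.lower().split())
    return similarity < threshold


# Hypothetical page bodies, as they might be returned for the two user-agents.
print(looks_cloaked("cheap flights cheap hotels vacation deals",
                    "win money now at our online casino"))
print(looks_cloaked("Cheap flights to Rome", "cheap flights to rome"))
```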

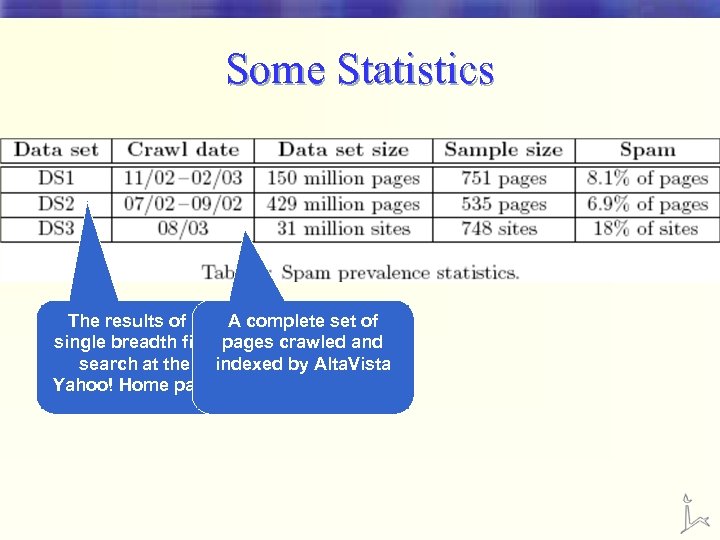

Some Statistics • The results of a single breadth-first search at the Yahoo! home page • A complete set of pages crawled and indexed by AltaVista

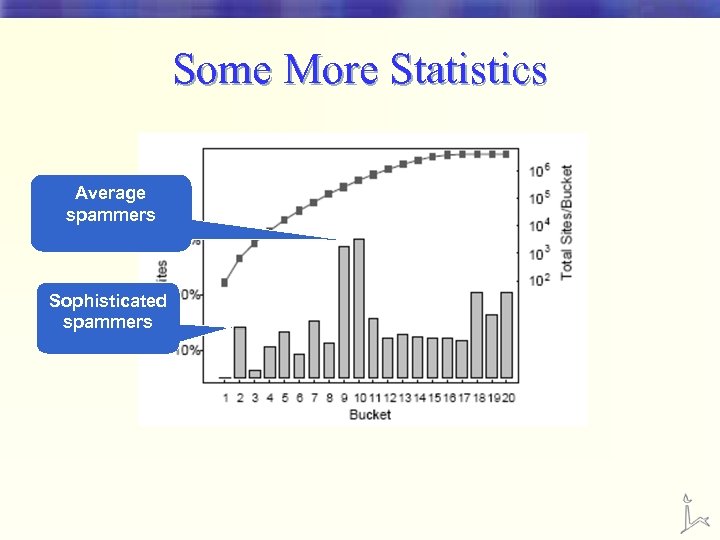

Some More Statistics • Average spammers • Sophisticated spammers

Contents • What is web spam • Combating web spam – TrustRank • Combating web spam – Mass Estimation • Conclusion

Motivation • The spam detection process is very expensive and slow, but is critical to the success of search engines • We’d like to assist the human experts who detect web spam

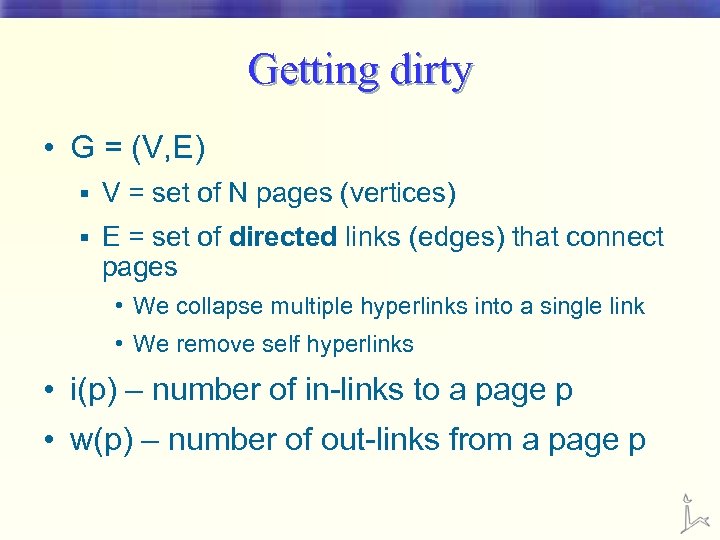

Getting dirty • G = (V, E) § V = set of N pages (vertices) § E = set of directed links (edges) that connect pages • We collapse multiple hyperlinks into a single link • We remove self hyperlinks • i(p) – number of in-links to a page p • w(p) – number of out-links from a page p

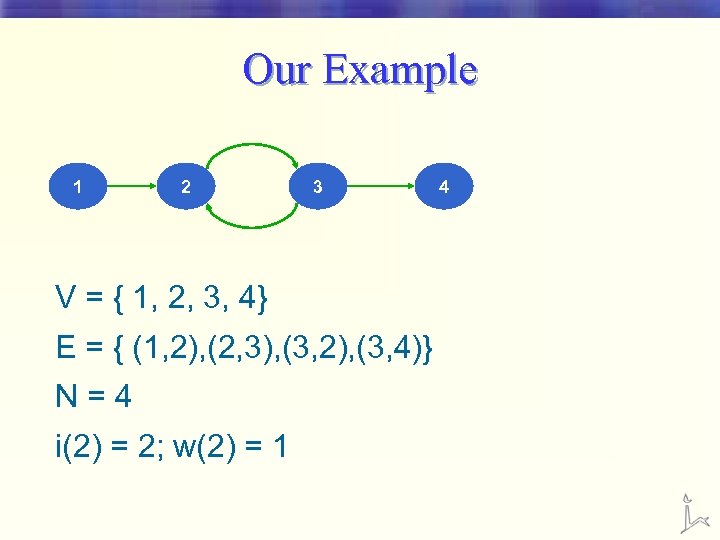

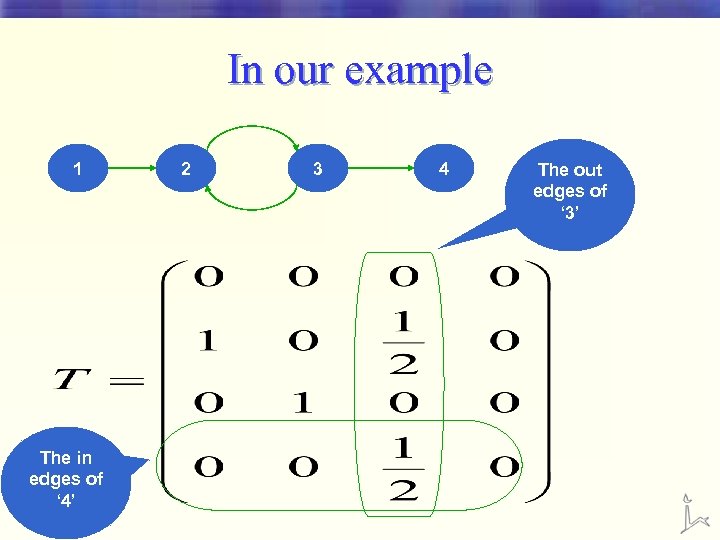

Our Example (figure: graph of pages 1 to 4) • V = {1, 2, 3, 4} • E = {(1, 2), (2, 3), (3, 2), (3, 4)} • N = 4 • i(2) = 2; w(2) = 1

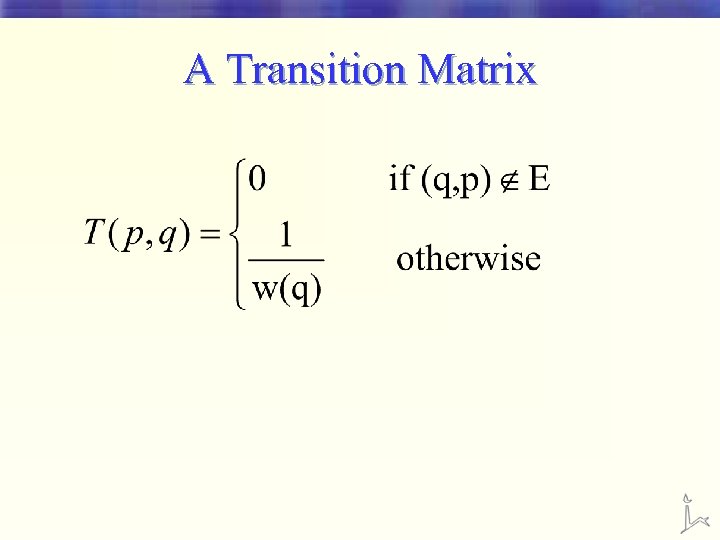

A Transition Matrix

In our example (figure: transition matrix over pages 1 to 4) • Annotations: the in-edges of ‘4’; the out-edges of ‘3’

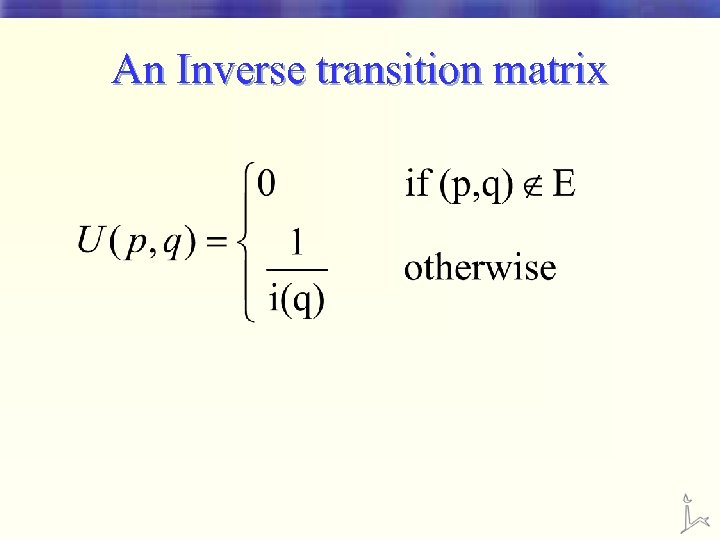

An Inverse transition matrix

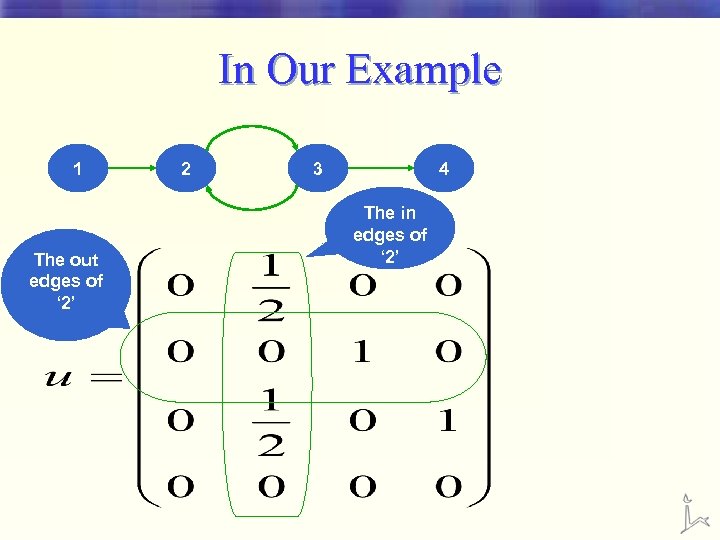

In Our Example (figure: inverse transition matrix over pages 1 to 4) • Annotations: the out-edges of ‘2’; the in-edges of ‘2’
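Since the matrix figures are not available in the text, here is a small sketch that builds both matrices for the four-page example. It assumes the definitions used in the TrustRank paper: T[p][q] = 1/w(q) if q links to p, and U[p][q] = 1/i(q) if p links to q, with 0 elsewhere. Exact fractions are used so the entries print cleanly.

```python
from fractions import Fraction

V = [1, 2, 3, 4]
E = {(1, 2), (2, 3), (3, 2), (3, 4)}

# i(p): number of in-links to p; w(p): number of out-links from p.
i = {p: sum(1 for (_, dst) in E if dst == p) for p in V}
w = {p: sum(1 for (src, _) in E if src == p) for p in V}

# Transition matrix: row p lists the in-edges of p, column q the out-edges of q.
T = [[Fraction(1, w[q]) if (q, p) in E else Fraction(0) for q in V] for p in V]
# Inverse transition matrix: row p lists the out-edges of p, column q the in-edges of q.
U = [[Fraction(1, i[q]) if (p, q) in E else Fraction(0) for q in V] for p in V]

# List index k corresponds to page k + 1.
for name, matrix in (("T", T), ("U", U)):
    print(name)
    for row in matrix:
        print("  ", [str(x) for x in row])
```

In T, the row of page 4 has a single entry 1/2 in column 3, and column 3 splits its vote between pages 2 and 4; in U, the row of page 2 has a single entry 1 in column 3.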

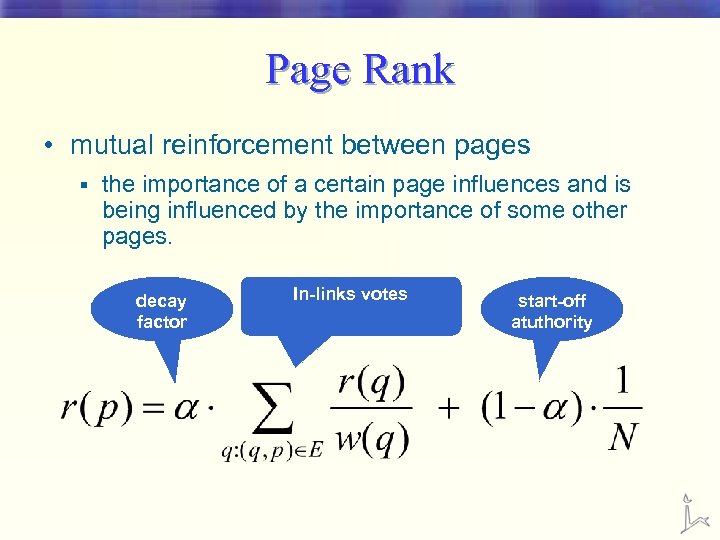

PageRank • Mutual reinforcement between pages § The importance of a certain page influences and is being influenced by the importance of some other pages • Formula annotations: decay factor; in-link votes; start-off authority

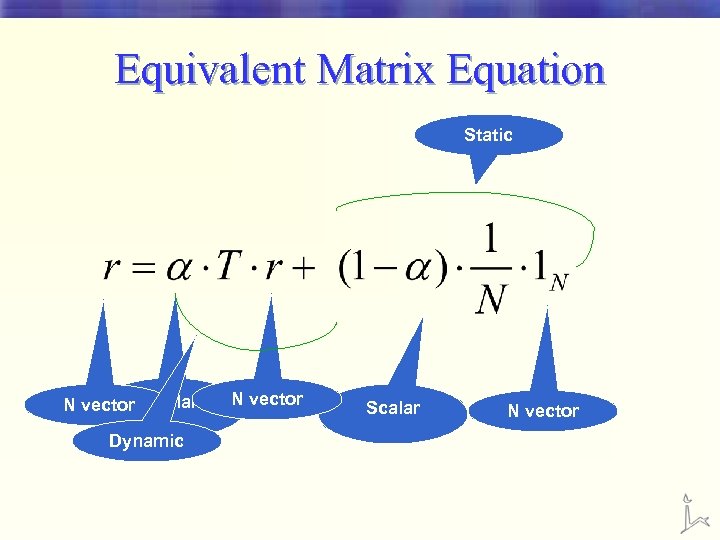

Equivalent Matrix Equation • Formula annotations: static N-vector; scalar; dynamic N-vector; scalar; N-vector

![A Biased PageRank](https://present5.com/presentation/ed62477b62a10761bf2f1e5b5a3d8d98/image-43.jpg)

A Biased PageRank • Only pages that are reachable from some page i with d[i] > 0 will have a positive PageRank • d is a static score distribution (summing up to one)
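A biased PageRank can be sketched as the fixed-point iteration r = α·T·r + (1 − α)·d, where d replaces the uniform vector. This is a minimal illustration under stated assumptions: the function name, α = 0.85, and 20 iterations are choices made here, and the score of sink pages is simply dropped rather than redistributed.

```python
def biased_pagerank(nodes, edges, d, alpha=0.85, iterations=20):
    """Iterate r = alpha * T * r + (1 - alpha) * d, starting from r = d."""
    out_deg = {p: sum(1 for (src, _) in edges if src == p) for p in nodes}
    r = dict(d)
    for _ in range(iterations):
        r = {
            p: alpha * sum(r[q] / out_deg[q] for (q, dst) in edges if dst == p)
            + (1 - alpha) * d[p]
            for p in nodes
        }
    return r


nodes = [1, 2, 3, 4]
edges = [(1, 2), (2, 3), (3, 2), (3, 4)]

# All of the static score is placed on page 2.
d = {1: 0.0, 2: 1.0, 3: 0.0, 4: 0.0}
r = biased_pagerank(nodes, edges, d)
print(r)  # page 1 is not reachable from page 2, so its score stays 0
```

With d[p] = 1/N for every page, the same function computes the regular PageRank.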

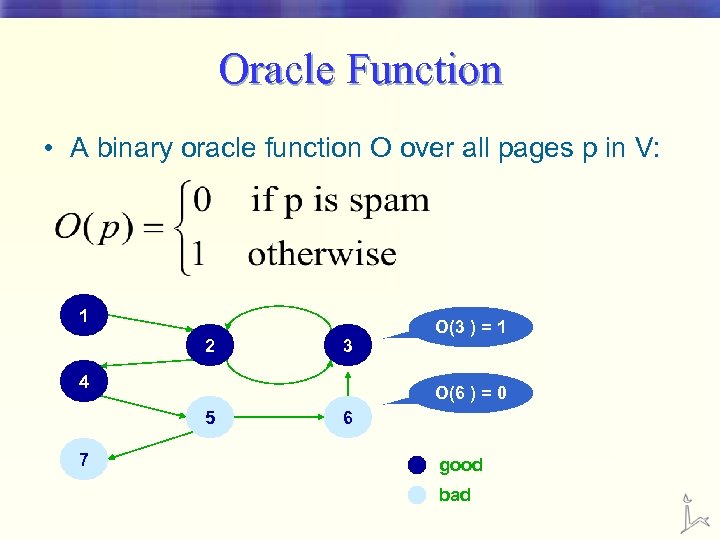

Oracle Function • A binary oracle function O over all pages p in V: O(p) = 1 if p is good, O(p) = 0 if p is bad • Example (figure: graph of pages 1 to 7, each marked good or bad): O(3) = 1, O(6) = 0

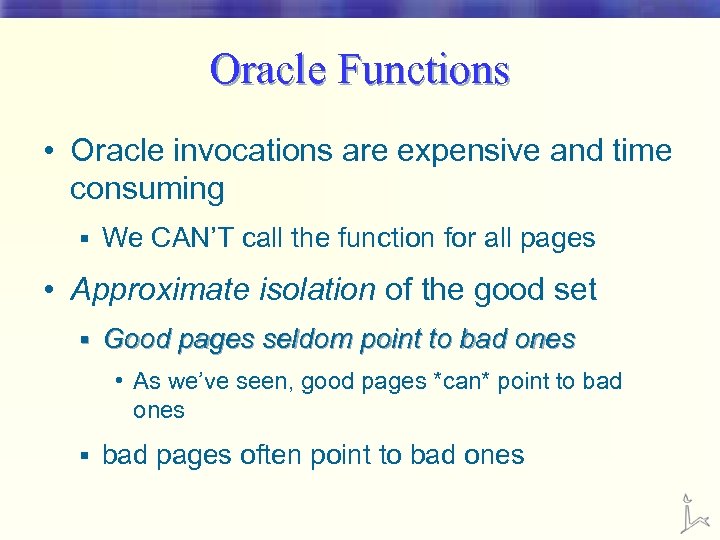

Oracle Functions • Oracle invocations are expensive and time consuming § We CAN’T call the function for all pages • Approximate isolation of the good set § Good pages seldom point to bad ones • As we’ve seen, good pages *can* point to bad ones § bad pages often point to bad ones

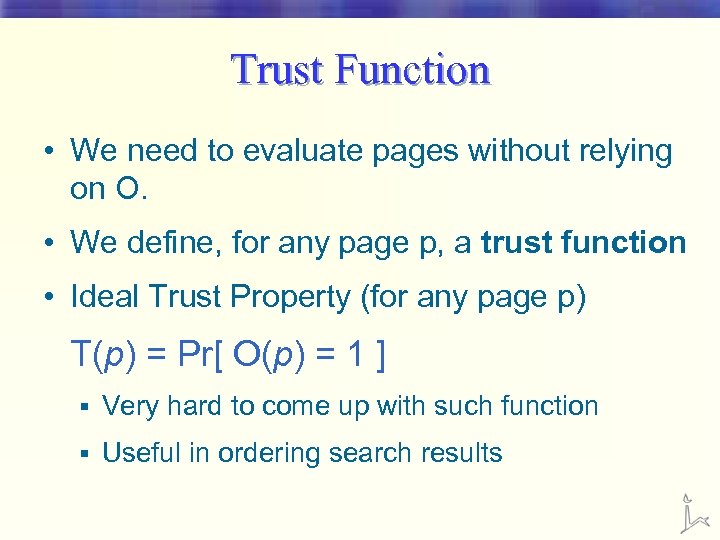

Trust Function • We need to evaluate pages without relying on O • We define, for any page p, a trust function T • Ideal Trust Property (for any page p): T(p) = Pr[O(p) = 1] § Very hard to come up with such a function § Useful in ordering search results

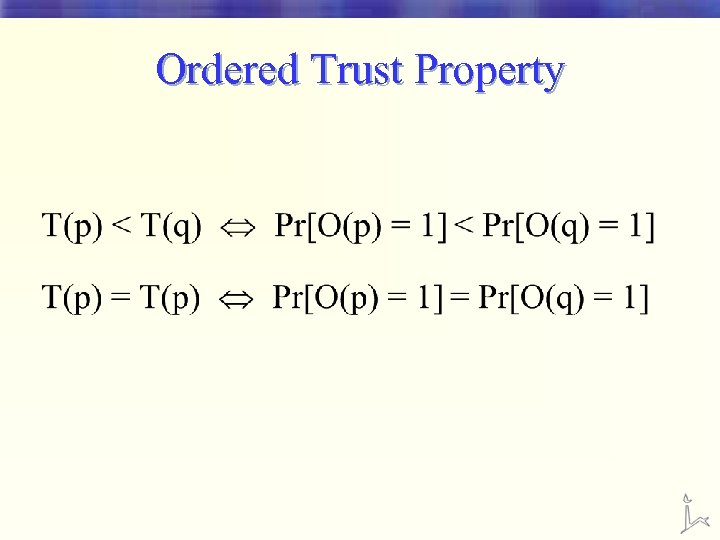

Ordered Trust Property

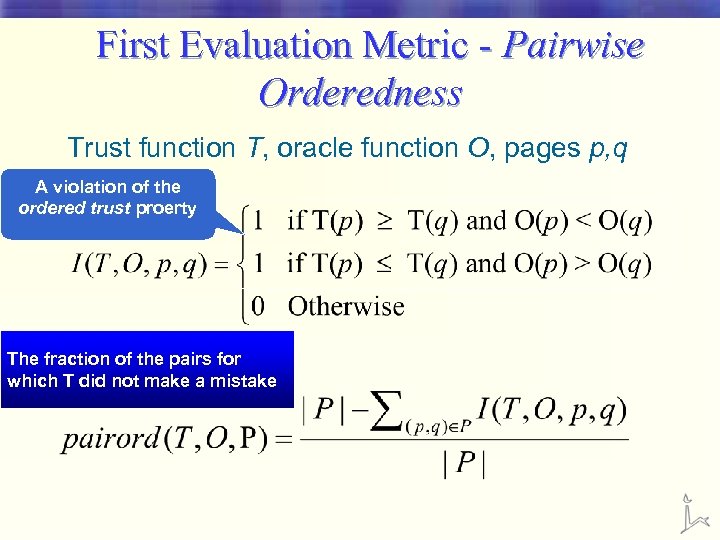

First Evaluation Metric - Pairwise Orderedness • Given a trust function T, an oracle function O, and pages p, q • We count each pair (p, q) that is a violation of the ordered trust property • Pairwise orderedness: the fraction of the pairs for which T did not make a mistake

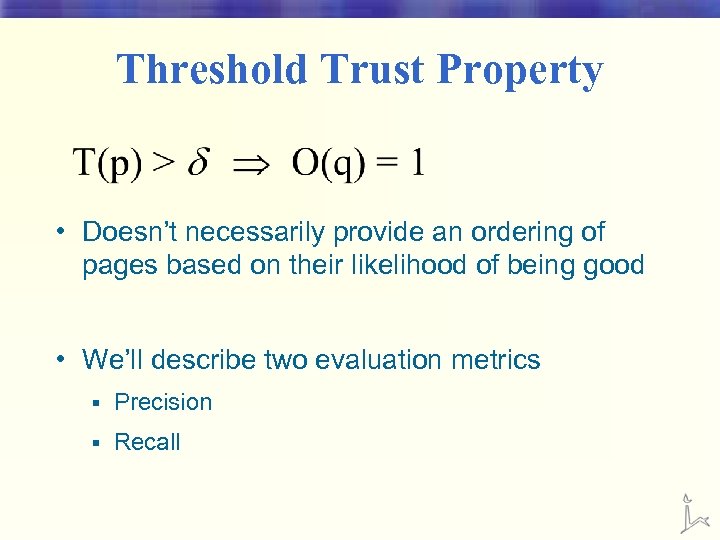

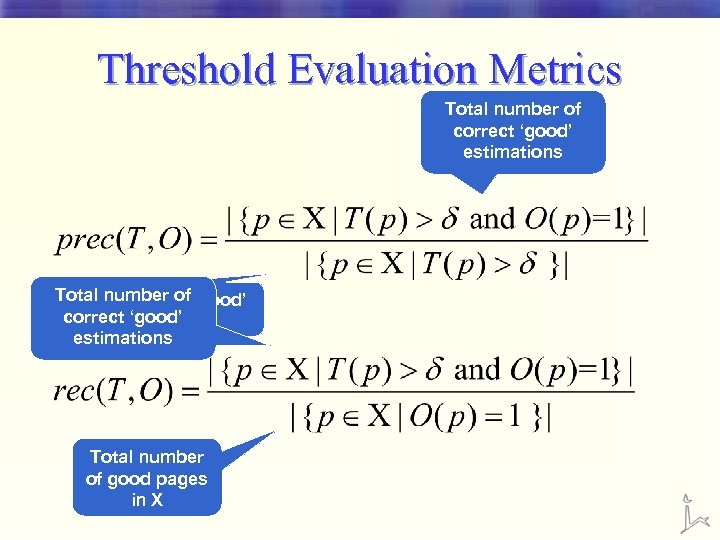

Threshold Trust Property • Doesn’t necessarily provide an ordering of pages based on their likelihood of being good • We’ll describe two evaluation metrics § Precision § Recall

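Threshold Evaluation Metrics • $\text{Precision} = \dfrac{\text{total number of correct 'good' estimations}}{\text{total number of 'good' estimations}}$ • $\text{Recall} = \dfrac{\text{total number of correct 'good' estimations}}{\text{total number of good pages in } X}$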

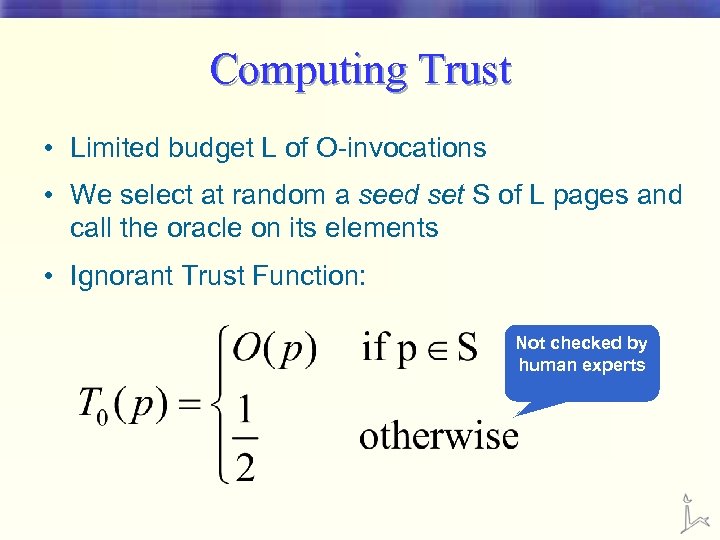

Computing Trust • Limited budget L of O-invocations • We select at random a seed set S of L pages and call the oracle on its elements • Ignorant Trust Function: $T_0(p) = O(p)$ if $p \in S$, and $T_0(p) = 1/2$ otherwise (pages not checked by human experts)

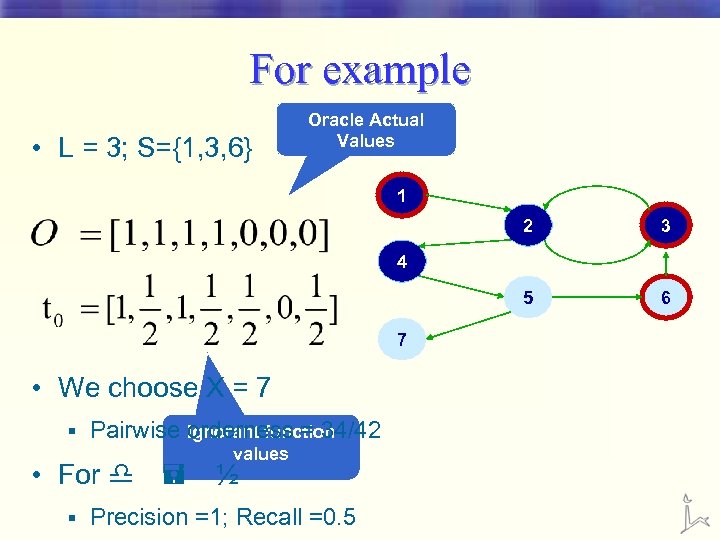

For example • L = 3; S = {1, 3, 6} • We choose X to be all 7 pages § Pairwise orderedness = 34/42 • For δ = ½ § Precision = 1; Recall = 0.5 • Figure: graph of pages 1 to 7 annotated with the oracle's actual values and the ignorant function's values
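The numbers in this example can be reproduced with a short sketch. It assumes, as in the TrustRank paper's running example, that pages 1 to 4 are good and pages 5 to 7 are bad (the slide text itself only states O(3) = 1 and O(6) = 0), that the ignorant function assigns 1/2 to unchecked pages, and that a pair (p, q) is a violation when T ranks p at least as high as q although O(p) < O(q), or at most as high although O(p) > O(q).

```python
def pairwise_orderedness(T, O, pages):
    """Return (pairs without violation, all ordered pairs with p != q)."""
    pairs = [(p, q) for p in pages for q in pages if p != q]
    violations = sum(
        1 for p, q in pairs
        if (T[p] >= T[q] and O[p] < O[q]) or (T[p] <= T[q] and O[p] > O[q])
    )
    return len(pairs) - violations, len(pairs)


def precision_recall(T, O, pages, delta):
    """Precision and recall of the 'good' estimations T(p) > delta."""
    predicted_good = [p for p in pages if T[p] > delta]
    correct = [p for p in predicted_good if O[p] == 1]
    actual_good = [p for p in pages if O[p] == 1]
    precision = len(correct) / len(predicted_good) if predicted_good else 0.0
    recall = len(correct) / len(actual_good) if actual_good else 0.0
    return precision, recall


pages = list(range(1, 8))
# Assumption: pages 1-4 are good, pages 5-7 are bad.
O = {1: 1, 2: 1, 3: 1, 4: 1, 5: 0, 6: 0, 7: 0}
S = {1, 3, 6}
# Ignorant trust function: oracle value on the seeds, 1/2 elsewhere.
T0 = {p: (O[p] if p in S else 0.5) for p in pages}

print(pairwise_orderedness(T0, O, pages))   # (34, 42)
print(precision_recall(T0, O, pages, 0.5))  # (1.0, 0.5)
```

The eight violating ordered pairs are the good pages 2 and 4 against the bad pages 5 and 7, all of which share the uninformative score 1/2.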

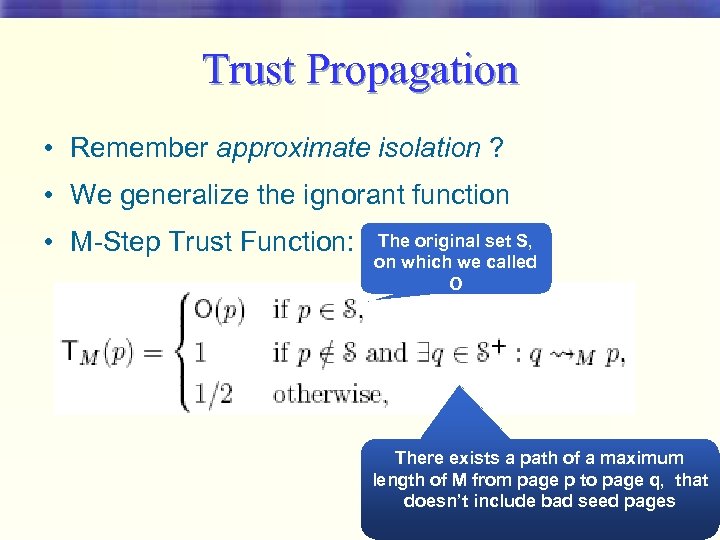

Trust Propagation • Remember approximate isolation? • We generalize the ignorant function • M-Step Trust Function (formula annotations): § S is the original set, on which we called O § There exists a path of a maximum length of M from page p to page q that doesn’t include bad seed pages
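A possible reading of the M-step trust function is sketched below: seeds keep their oracle value, every page within M links of a good seed (along a path that avoids bad seeds) gets trust 1, and everything else stays at 1/2. The chain-shaped graph and the seed choice are hypothetical; they are picked so that a good page linking to a bad one produces a mistake once M is large enough.

```python
from collections import deque


def m_step_trust(pages, edges, seeds, O, M):
    """Oracle value for seeds, 1 for pages within M links of a good seed
    (never passing through a bad seed), and 1/2 for all other pages."""
    good_seeds = {s for s in seeds if O[s] == 1}
    bad_seeds = set(seeds) - good_seeds
    trust = {p: 0.5 for p in pages}
    for s in seeds:
        trust[s] = O[s]
    # Breadth-first search from all good seeds at once, at most M steps deep.
    dist = {s: 0 for s in good_seeds}
    queue = deque(good_seeds)
    while queue:
        p = queue.popleft()
        if dist[p] == M:
            continue
        for (src, dst) in edges:
            if src == p and dst not in dist and dst not in bad_seeds:
                dist[dst] = dist[p] + 1
                trust[dst] = 1
                queue.append(dst)
    return trust


# Hypothetical chain: pages 1-4 are good, 5-7 are bad, and good page 4
# links to bad page 5. The oracle was only called on the seeds 1 and 6.
pages = [1, 2, 3, 4, 5, 6, 7]
edges = [(1, 2), (2, 3), (3, 4), (4, 5), (5, 6), (6, 7)]
seeds = {1, 6}
O = {1: 1, 6: 0}

print(m_step_trust(pages, edges, seeds, O, 2))
print(m_step_trust(pages, edges, seeds, O, 4))  # page 5 is now trusted: a mistake
```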

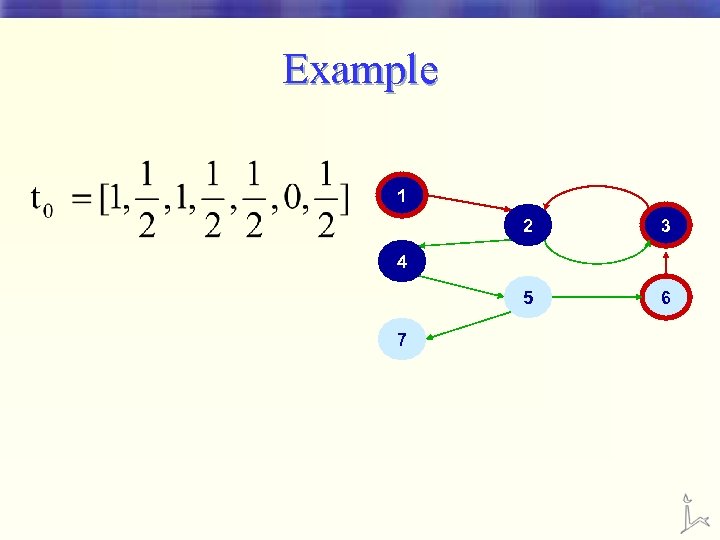

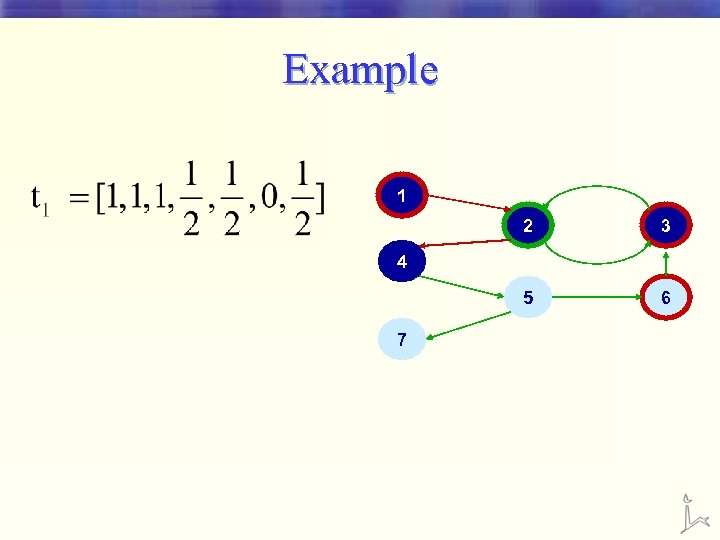

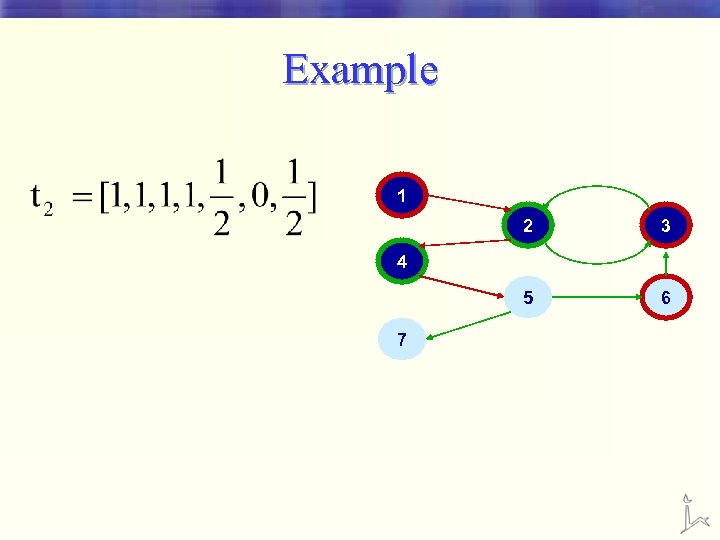

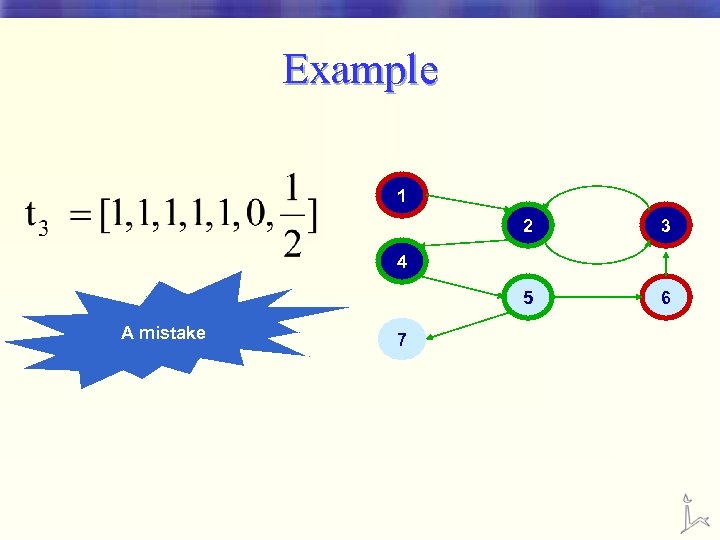

Example (figure sequence: trust propagating step by step over the graph of pages 1 to 7; the last step is marked “A mistake”)

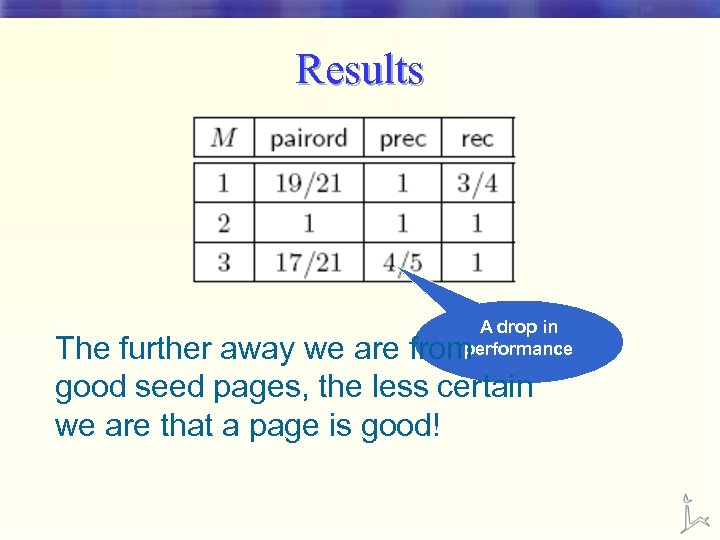

Results • A drop in performance • The further away we are from good seed pages, the less certain we are that a page is good!

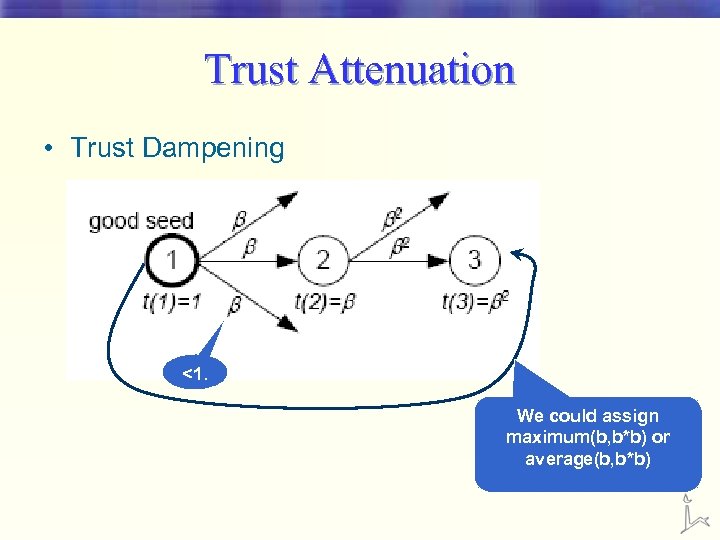

Trust Attenuation • Trust Dampening § Dampening factor β < 1 § Where both β and β·β apply to a page, we could assign maximum(β, β·β) or average(β, β·β)

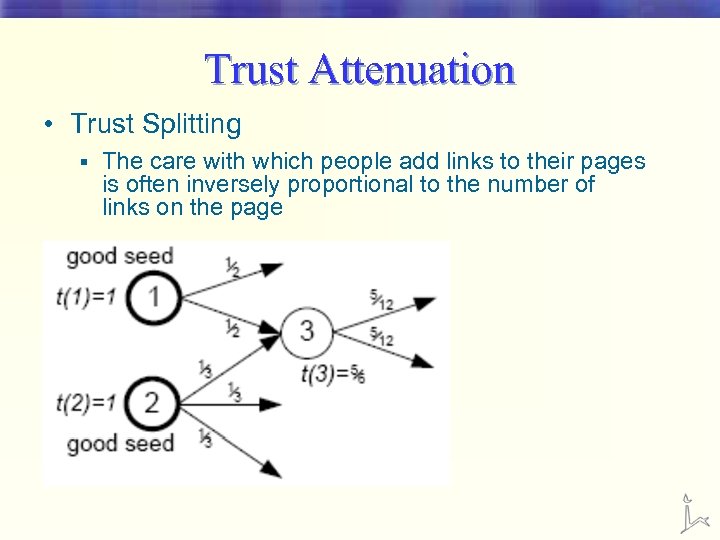

Trust Attenuation • Trust Splitting § The care with which people add links to their pages is often inversely proportional to the number of links on the page

TrustRank Algorithm 1. (Partially) Evaluate seed-desirability of pages 2. Invoke the oracle function on the L most desirable seed pages, normalize the result (a vector d) 3. Evaluate TrustRank scores using a biased PageRank computation with d replacing the uniform distribution
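The three steps can be put together in a short sketch. It assumes that seed desirability is computed with inverse PageRank (PageRank on the graph with reversed links), and it uses α = 0.85 with 20 iterations; the five-page graph, the oracle, and the helper names are hypothetical.

```python
def pagerank(nodes, edges, d, alpha=0.85, iterations=20):
    """Iterate r = alpha * T * r + (1 - alpha) * d on the given edge list."""
    out_deg = {p: sum(1 for (src, _) in edges if src == p) for p in nodes}
    r = dict(d)
    for _ in range(iterations):
        r = {
            p: alpha * sum(r[q] / out_deg[q] for (q, dst) in edges if dst == p)
            + (1 - alpha) * d[p]
            for p in nodes
        }
    return r


def trustrank(nodes, edges, oracle, L, alpha=0.85, iterations=20):
    uniform = {p: 1 / len(nodes) for p in nodes}
    # Step 1: seed desirability via PageRank on the reversed graph.
    reversed_edges = [(dst, src) for (src, dst) in edges]
    desirability = pagerank(nodes, reversed_edges, uniform, alpha, iterations)
    # Step 2: call the oracle only on the L most desirable pages, then normalize.
    seeds = sorted(nodes, key=lambda p: desirability[p], reverse=True)[:L]
    good = [p for p in seeds if oracle(p) == 1]
    d = {p: (1 / len(good) if p in good else 0.0) for p in nodes}
    # Step 3: biased PageRank with d replacing the uniform distribution.
    return pagerank(nodes, edges, d, alpha, iterations)


# Hypothetical web: pages 1-3 are good and link among themselves; pages 4-5
# are spam, link to each other, and page 4 also links to good page 1.
nodes = [1, 2, 3, 4, 5]
edges = [(1, 2), (2, 3), (3, 1), (2, 1), (4, 5), (5, 4), (4, 1)]
scores = trustrank(nodes, edges, oracle=lambda p: 1 if p <= 3 else 0, L=3)
print(scores)  # the spam pages 4 and 5 receive no trust
```

In this toy graph the spam pages keep a positive regular PageRank, but because no good page links to them they receive a TrustRank of zero; linking out to good pages does not help them.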

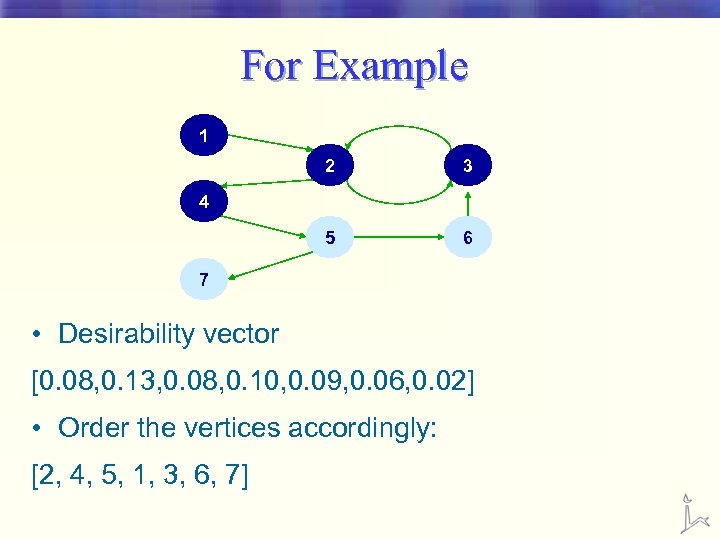

For Example (figure: graph of pages 1 to 7) • Desirability vector [0.08, 0.13, 0.08, 0.10, 0.09, 0.06, 0.02] • Order the vertices accordingly: [2, 4, 5, 1, 3, 6, 7]

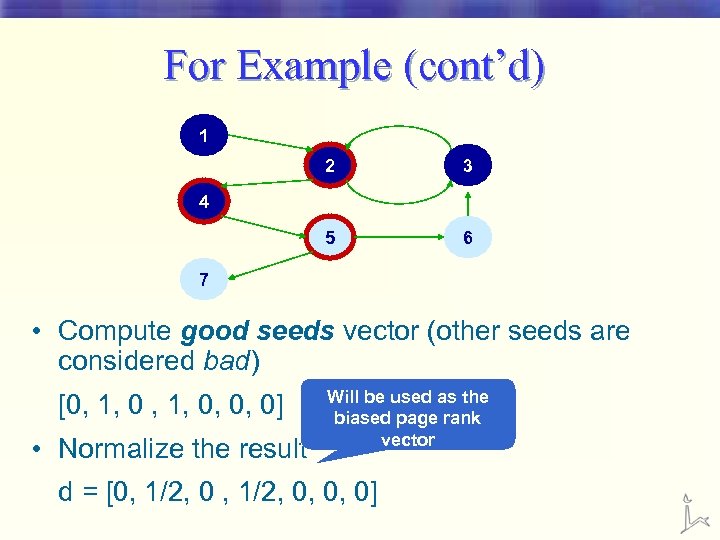

For Example (cont’d) (figure: graph of pages 1 to 7) • Compute good seeds vector (other seeds are considered bad) [0, 1, 0, 1, 0, 0, 0] • Normalize the result: d = [0, 1/2, 0, 1/2, 0, 0, 0] § Will be used as the biased PageRank vector

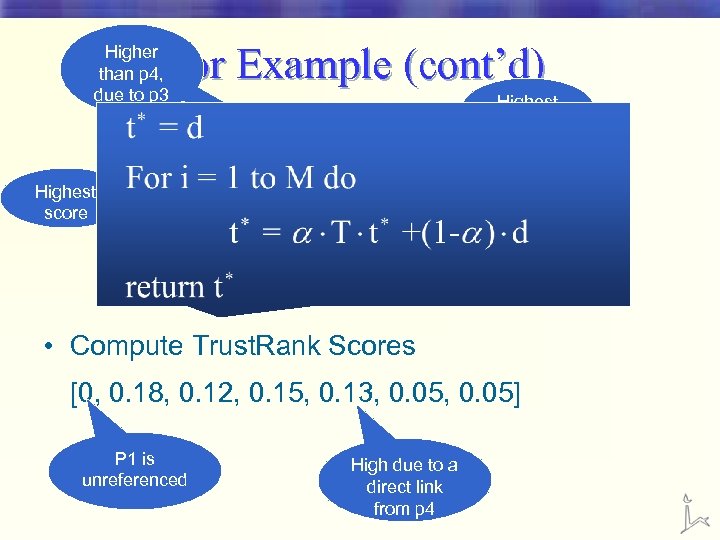

For Example (cont’d) • Compute TrustRank scores [0, 0.18, 0.12, 0.15, 0.13, 0.05] • Figure annotations (graph of pages 1 to 7): § p1 is unreferenced § Highest score § Higher than p4, due to p3 § High due to a direct link from p4

Selecting Seeds • We want to choose pages that are useful in identifying additional good pages • We want to keep the seed set small • Two strategies § Inverse page rank § High Page Rank

I. Inverse PageRank • Preference to pages from which we can reach many other pages § We can select seed pages based on the number of outlinks • We’ll choose the pages that point to many pages that point to many pages that point to many pages … • This is actually PageRank, where the importance of a page depends on its outlinks • Perform PageRank on the graph G’ = (V, E’) • Use inverse transition matrix U (instead of T)

II. High PageRank • We’re interested in high PageRank pages • Obtain accurate trust scores for high PageRank pages • Preference to pages with high PageRank § Likely to point to other high PageRank pages § May identify the goodness of fewer pages, but they may be more important pages

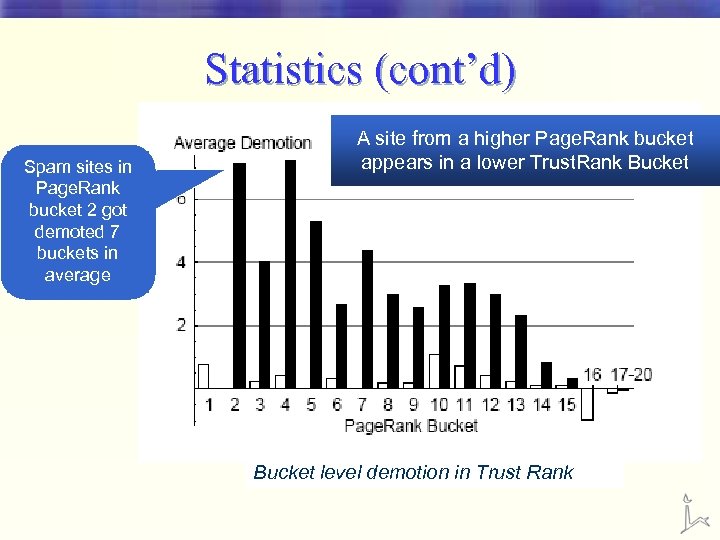

Statistics • |Seed set S| = 1250 (given by inverse PageRank) • Only 178 sites were selected to be used as good seeds (due to extremely rigorous selection criteria)

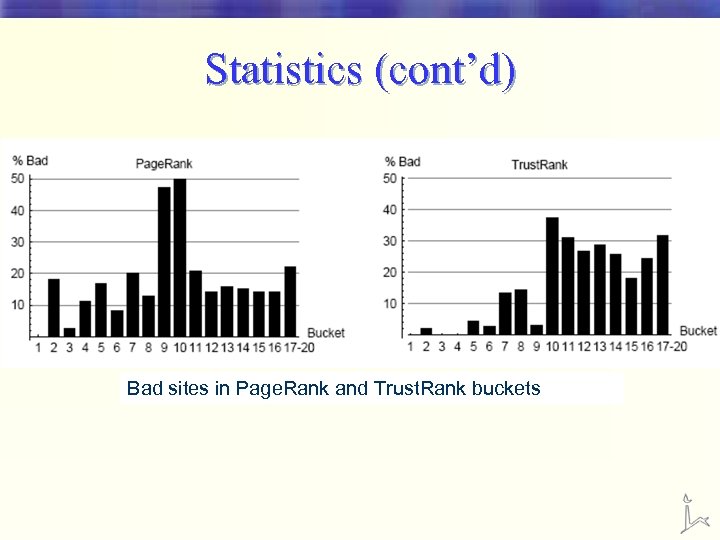

Statistics (cont’d) • Bad sites in PageRank and TrustRank buckets

Statistics (cont’d) • Spam sites in PageRank bucket 2 got demoted 7 buckets on average • Bucket-level demotion in TrustRank: a site from a higher PageRank bucket appears in a lower TrustRank bucket

Contents • What is web spam • Combating web spam – TrustRank • Combating web spam – Mass Estimation § Turn the spammers’ ingenuity against themselves • Conclusion

Spam Mass – Naïve Approach • Given a page x, we’d like to know if it got most of its PageRank from spam pages or from reputable pages • Suppose that we have a partition of the web into 2 sets § V(S) = Spam pages § V(R) = Reputable pages

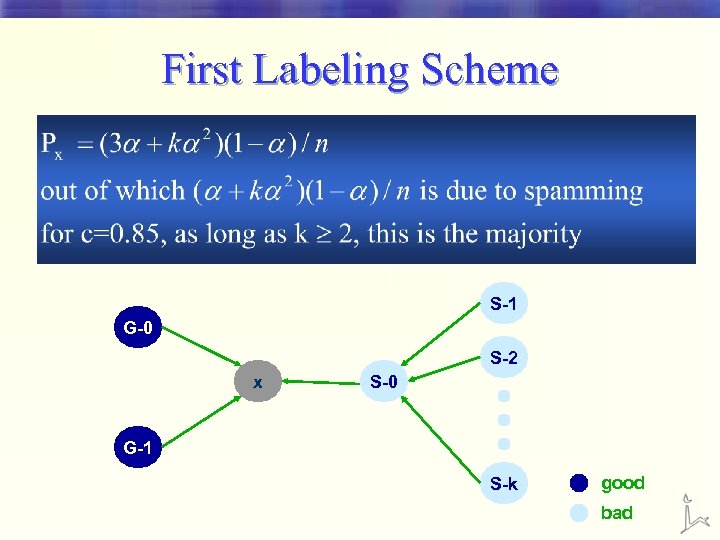

First Labeling Scheme • Look at the number of direct inlinks § If most of them come from spam pages, then declare that x is a spam page • Figure: graph around x with good nodes G-0, G-1 and spam nodes S-0, S-1, S-2, …, S-k (legend: good, bad)

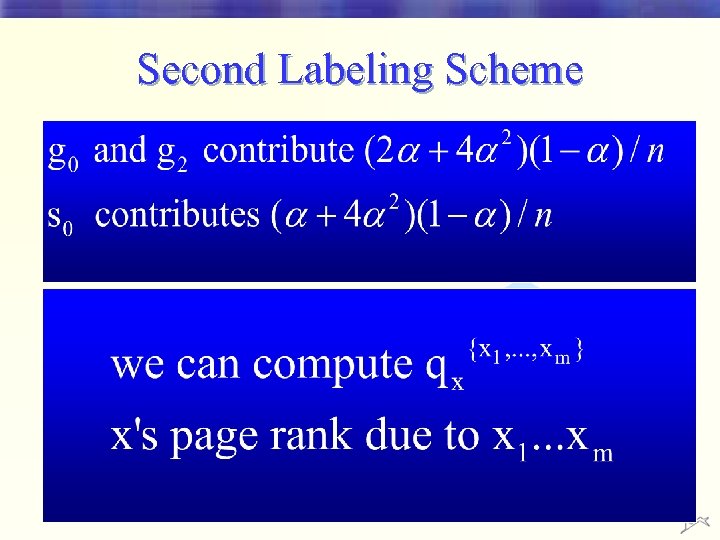

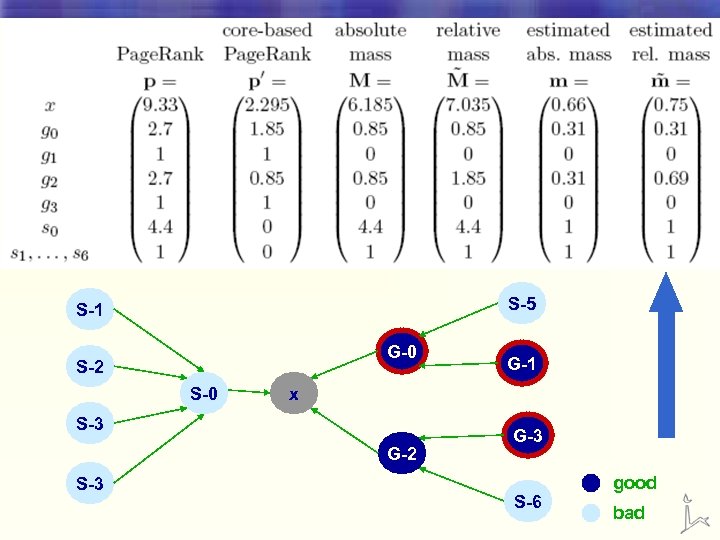

Second Labeling Scheme • If the largest part of x’s PageRank comes from spam nodes, we label x as spam • Figure: graph around x with good nodes G-0 to G-3 and spam nodes S-0 to S-6 (legend: good, bad)

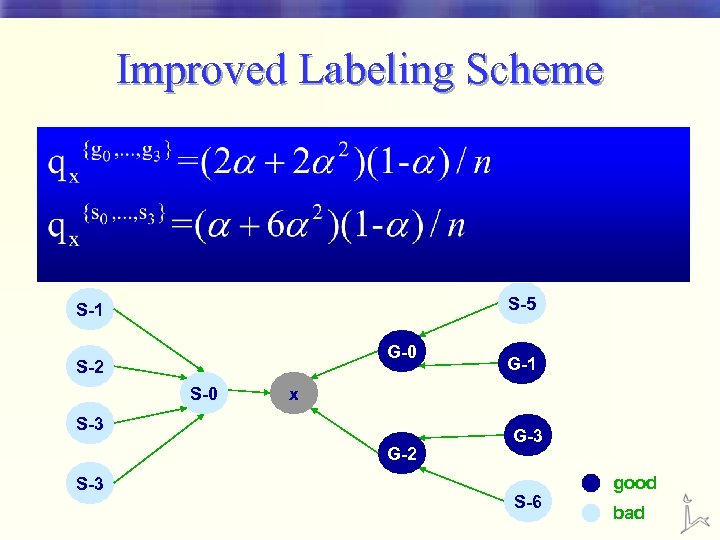

Improved Labeling Scheme • Figure: graph around x with good nodes G-0 to G-3 and spam nodes S-0 to S-6 (legend: good, bad)

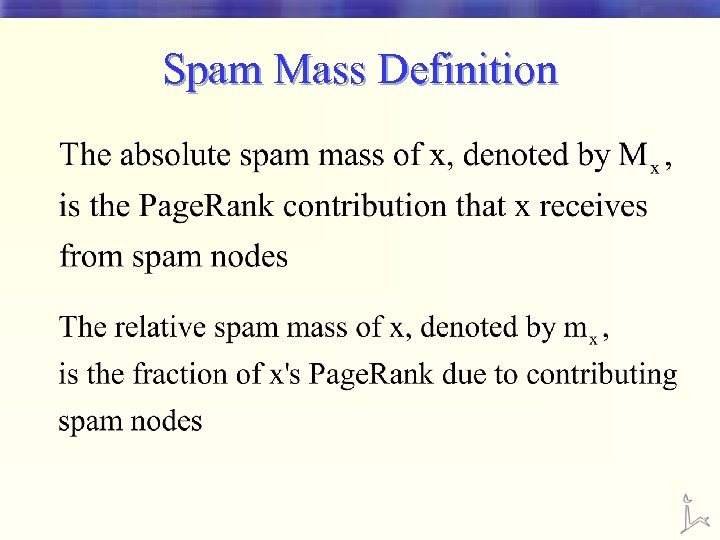

Spam Mass Definition

Estimating • We assumed that we have a priori knowledge of whether nodes are good or bad – not realistic! • What we’ll have is a subset of the good nodes, the good core § Not hard to construct § Bad pages are often abandoned

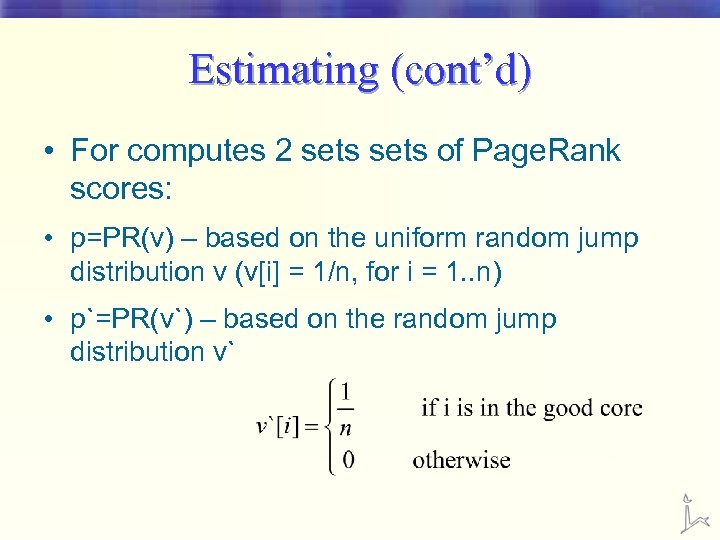

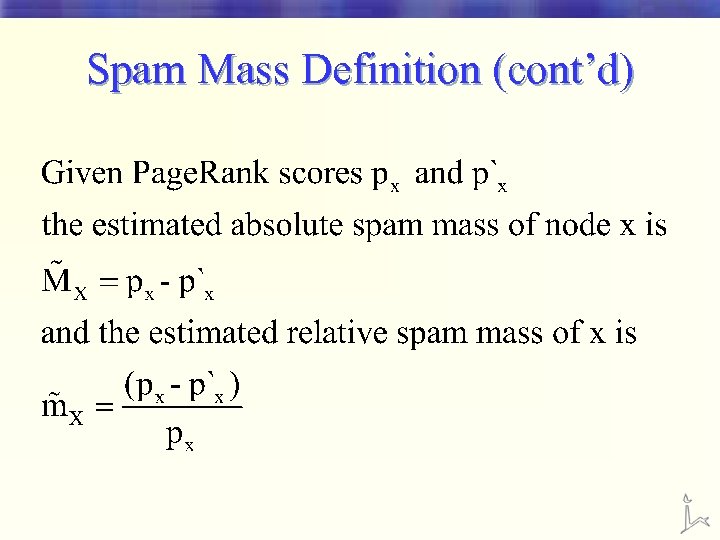

Estimating (cont’d) • We compute 2 sets of PageRank scores: § p = PR(v), based on the uniform random jump distribution v (v[i] = 1/n, for i = 1..n) § p′ = PR(v′), based on the random jump distribution v′

Spam Mass Definition (cont’d)

Figure: graph around x with good nodes G-0 to G-3 and spam nodes S-0 to S-6 (legend: good, bad)

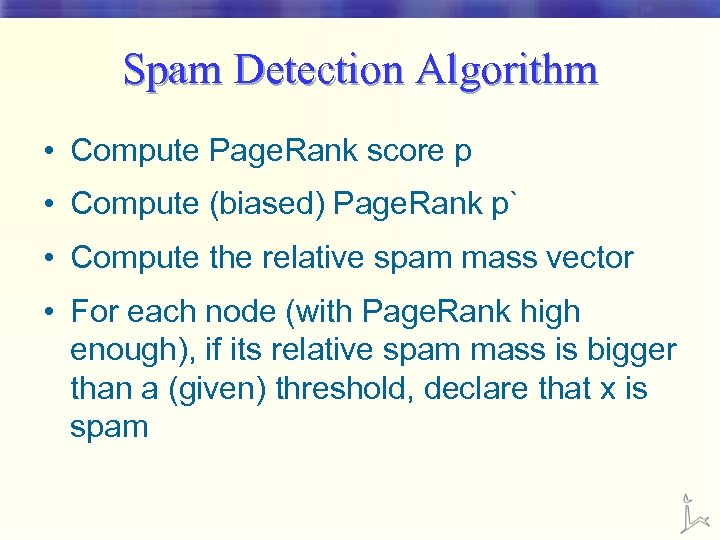

Spam Detection Algorithm • Compute PageRank score p • Compute (biased) PageRank p′ • Compute the relative spam mass vector • For each node x (with PageRank high enough), if its relative spam mass is bigger than a (given) threshold, declare that x is spam
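The detection steps can be sketched as follows. The sketch assumes that the relative spam mass of a node is (p − p′)/p, and that v′ keeps the entry 1/n for good-core nodes and is 0 elsewhere, as in the mass-estimation paper; the thresholds τ and ρ, the graph, and the node names are hypothetical.

```python
def pagerank(nodes, edges, v, c=0.85, iterations=50):
    """Iterate p = c * T * p + (1 - c) * v for a random jump vector v."""
    out_deg = {x: sum(1 for (src, _) in edges if src == x) for x in nodes}
    p = dict(v)
    for _ in range(iterations):
        p = {
            x: c * sum(p[y] / out_deg[y] for (y, dst) in edges if dst == x)
            + (1 - c) * v[x]
            for x in nodes
        }
    return p


def detect_spam(nodes, edges, good_core, tau, rho, c=0.85):
    n = len(nodes)
    v = {x: 1 / n for x in nodes}
    v_core = {x: (1 / n if x in good_core else 0.0) for x in nodes}
    p = pagerank(nodes, edges, v, c)             # regular PageRank
    p_core = pagerank(nodes, edges, v_core, c)   # PageRank biased to the good core
    relative_mass = {x: (p[x] - p_core[x]) / p[x] for x in nodes}
    # Only nodes with high enough PageRank (>= rho) are considered.
    spam = {x for x in nodes if p[x] >= rho and relative_mass[x] > tau}
    return spam, relative_mass


# Hypothetical graph: spam pages s0..s3 boost x; good pages g0, g1 point to y,
# and g0 also points to x.
nodes = ["g0", "g1", "x", "y", "s0", "s1", "s2", "s3"]
edges = [("s0", "x"), ("s1", "x"), ("s2", "x"), ("s3", "x"),
         ("g0", "x"), ("g0", "y"), ("g1", "y")]
spam, mass = detect_spam(nodes, edges, good_core={"g0", "g1"}, tau=0.5, rho=0.05)
print(spam)                                   # {'x'}
print(round(mass["x"], 3), round(mass["y"], 3))
```

The PageRank threshold matters: low-PageRank nodes outside the core, such as y here, lose their own random-jump contribution in p′, so their relative mass is unreliable.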

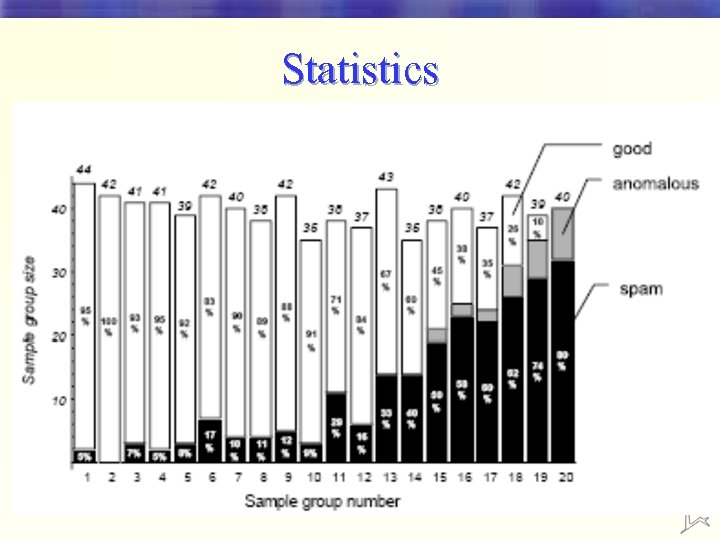

Statistics

Contents • What is web spam • Combating web spam – TrustRank • Combating web spam – Mass Estimation • Conclusion

Conclusion • We introduced ‘web spam’ • We presented two ways to combat spammers § TrustRank (spam demotion) § Spam mass estimation (spam detection)

Thank you • Questions?

Bibliography • Web Spam Taxonomy (2004) - Gyongyi, Zoltan; Garcia-Molina, Hector, Stanford University • Combating Web Spam with TrustRank (2005) - Gyongyi, Zoltan; Garcia-Molina, Hector; Pedersen, Jan • Link Spam Detection Based on Mass Estimation (2005) - Gyongyi, Zoltan; Berkhin, Pavel; Garcia-Molina, Hector; Pedersen, Jan • http://www.firstmonday.org/issues/issue10_10/tatum/ • http://en.wikipedia.org/wiki/TFIDF • http://en.wikipedia.org/wiki/Pagerank
