74f87577c1eb72d9c91f7a3b3a947da9.ppt

- Number of slides: 35

Web Robots

Herng-Yow Chen

Outline
- Robot applications
- How it works
- Cycle avoidance

Applications
- Behavior of web robots: they wander from web site to site recursively,
  1. fetching content,
  2. following hyperlinks, and
  3. processing the data they find.
- Colorful names: crawlers, spiders, worms, bots
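The fetch/follow/process loop can be sketched in Python. This is a minimal illustration under stated assumptions, not a production crawler: the `WEB` dictionary stands in for real HTTP fetches, and `WEB`, `fetch`, `crawl`, and the regex link extractor are all hypothetical. It crawls breadth-first, and its `visited` set already guards against the cycle problem discussed later: `x.html` links back to the home page, which is not fetched twice.

```python
import re
from collections import deque

# Hypothetical in-memory "web" (URL -> HTML) standing in for real HTTP fetches.
WEB = {
    "http://a.example/": '<a href="http://b.example/">B</a> <a href="http://a.example/x.html">X</a>',
    "http://a.example/x.html": '<a href="http://a.example/">home</a>',
    "http://b.example/": '<a href="http://c.example/">C</a>',
    "http://c.example/": "<p>no links here</p>",
}

# Simplistic link extractor, for illustration only (real HTML needs a real parser).
LINK_RE = re.compile(r'href="([^"]+)"')


def fetch(url):
    """Stand-in for an HTTP GET; returns None for unknown URLs."""
    return WEB.get(url)


def crawl(root_set):
    """Breadth-first crawl from the root set; returns (url, page size) per page."""
    visited = set()
    queue = deque(root_set)
    processed = []
    while queue:
        url = queue.popleft()
        if url in visited:
            continue                         # already fetched: avoid cycles
        visited.add(url)
        html = fetch(url)                    # 1. fetch content
        if html is None:
            continue
        processed.append((url, len(html)))   # 3. process the data (here: record its size)
        for link in LINK_RE.findall(html):   # 2. follow hyperlinks
            if link not in visited:
                queue.append(link)
    return processed
```

Starting from the root set `["http://a.example/"]`, the robot visits the home page, then `b.example`, then `x.html`, then `c.example`, and stops when the queue is empty.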

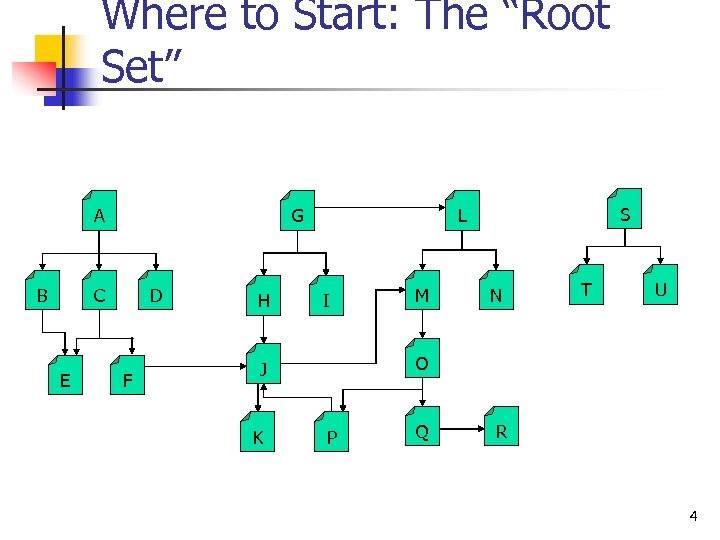

Where to Start: The “Root Set”

[Figure: a graph of linked web pages labeled A through U]

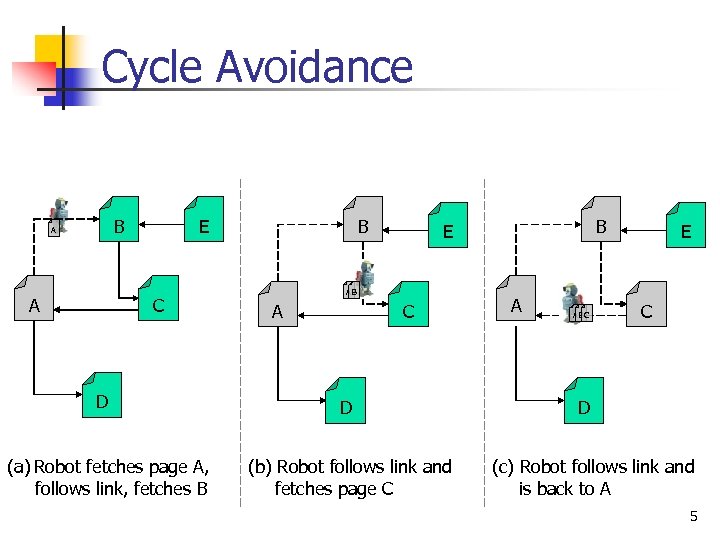

Cycle Avoidance

[Figure: pages A, B, C, D, E linked so that A → B → C → A forms a cycle]
- (a) The robot fetches page A, follows a link, and fetches B.
- (b) The robot follows a link and fetches page C.
- (c) The robot follows a link and is back at A.

Loops
- Cycles are bad for crawlers for three reasons:
  - They waste the robot's time and space.
  - They can overwhelm the web site.
  - They produce duplicate content.

Data structures for robots (keeping track of visited URLs)
- Trees and hash tables
- Lossy presence bit maps
- Checkpoints: save the list of visited URLs to disk, in case the robot crashes
- Partitioning: robot farms
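A lossy presence bit map can be sketched as below. The hash (SHA-1), the table size of 2^20 bits, and the class name `PresenceBitmap` are arbitrary choices for illustration, not taken from the slides. Each URL sets one bit, so memory stays fixed no matter how many URLs are seen; the price is that two URLs hashing to the same bit collide, and the robot may then skip a page it never actually visited (it never re-fetches one it did visit).

```python
import hashlib


class PresenceBitmap:
    """Lossy presence bit map: one bit per hash bucket, no URLs stored."""

    def __init__(self, num_bits=1 << 20):
        self.num_bits = num_bits
        self.bits = bytearray((num_bits + 7) // 8)   # 2**20 bits = 128 KiB

    def _index(self, url):
        # A stable digest, so the bits keep their meaning across runs.
        digest = hashlib.sha1(url.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, url):
        i = self._index(url)
        self.bits[i // 8] |= 1 << (i % 8)

    def __contains__(self, url):
        # May return True for an unseen URL (collision); never False for a seen one.
        i = self._index(url)
        return bool(self.bits[i // 8] & (1 << (i % 8)))
```

A stable digest is used instead of Python's built-in `hash()`, which is randomized per process for strings, so the table could be checkpointed to disk and reloaded after a crash.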

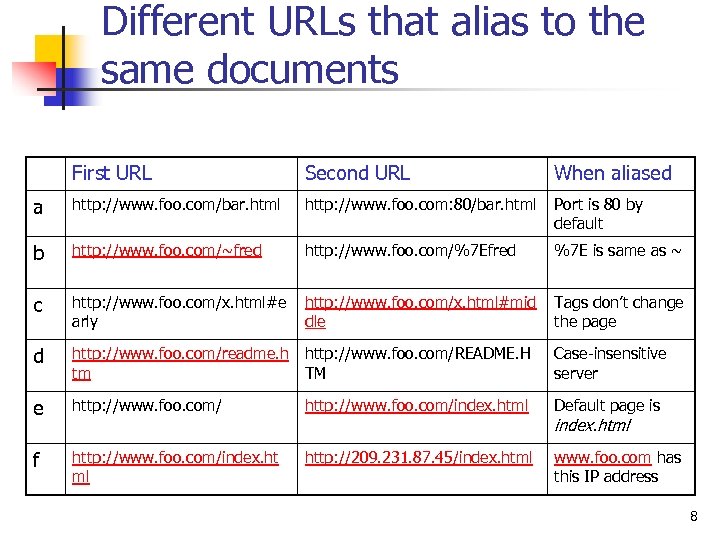

Different URLs that alias to the same documents

| | First URL | Second URL | When aliased |
|---|---|---|---|
| a | http://www.foo.com/bar.html | http://www.foo.com:80/bar.html | Port is 80 by default |
| b | http://www.foo.com/~fred | http://www.foo.com/%7Efred | %7E is the same as ~ |
| c | http://www.foo.com/x.html#early | http://www.foo.com/x.html#middle | Tags don't change the page |
| d | http://www.foo.com/readme.htm | http://www.foo.com/README.HTM | Case-insensitive server |
| e | http://www.foo.com/ | http://www.foo.com/index.html | Default page is index.html |
| f | http://www.foo.com/index.html | http://209.231.87.45/index.html | www.foo.com has this IP address |

Canonicalizing URLs

Most web robots try to eliminate the obvious aliases by “canonicalizing” URLs into a standard form:
- Adding “:80” to the hostname, if the port isn't specified.
- Converting all %xx escaped characters into their character equivalents.
- Removing “#” tags (fragments).
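The three canonicalization rules can be sketched with Python's `urllib.parse`. The function name `canonicalize` is hypothetical, and two steps go beyond the slide and are marked in comments: lower-casing the hostname, and leaving `%2F` escaped so that a decoded slash does not create a new path segment.

```python
import re
from urllib.parse import unquote, urlsplit, urlunsplit


def canonicalize(url: str) -> str:
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    # Extra step (not on the slide): hostnames are case-insensitive.
    # Any "user:password@" part is dropped in this sketch.
    host = (parts.hostname or "").lower()
    port = parts.port
    if port is None and scheme == "http":
        port = 80                                  # rule 1: add ":80" if no port is given
    netloc = f"{host}:{port}" if port is not None else host
    # Rule 2: decode %xx escapes. Assumption: %2F stays escaped, because
    # decoding it would split one path segment into two.
    pieces = re.split(r"%2[fF]", parts.path or "/")
    path = "%2F".join(unquote(piece) for piece in pieces)
    # Rule 3: drop the "#fragment" by passing an empty fragment.
    return urlunsplit((scheme, netloc, path, parts.query, ""))
```

With this sketch, rows a, b, and c of the alias table collapse to one form each, e.g. both `http://www.foo.com/bar.html` and `http://www.foo.com:80/bar.html` become `http://www.foo.com:80/bar.html`. Rows d, e, and f cannot be caught from the URL text alone, because they depend on how a particular server or DNS is configured.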

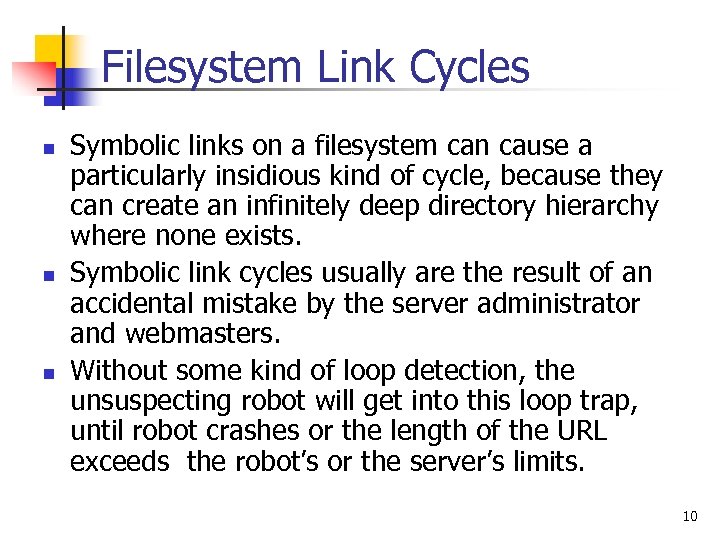

Filesystem Link Cycles
- Symbolic links on a filesystem can cause a particularly insidious kind of cycle, because they can create an infinitely deep directory hierarchy where none exists.
- Symbolic link cycles are usually the result of an accidental mistake by the server administrator or webmaster.
- Without some kind of loop detection, an unsuspecting robot will be caught in this trap until it crashes or the length of the URL exceeds the robot's or the server's limits.

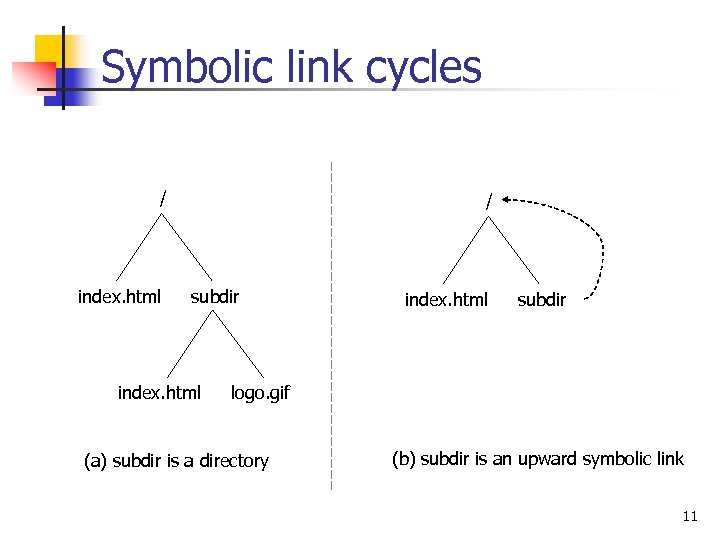

Symbolic link cycles

[Figure: a document root / containing index.html and subdir; subdir containing index.html and logo.gif]
- (a) subdir is a directory
- (b) subdir is an upward symbolic link

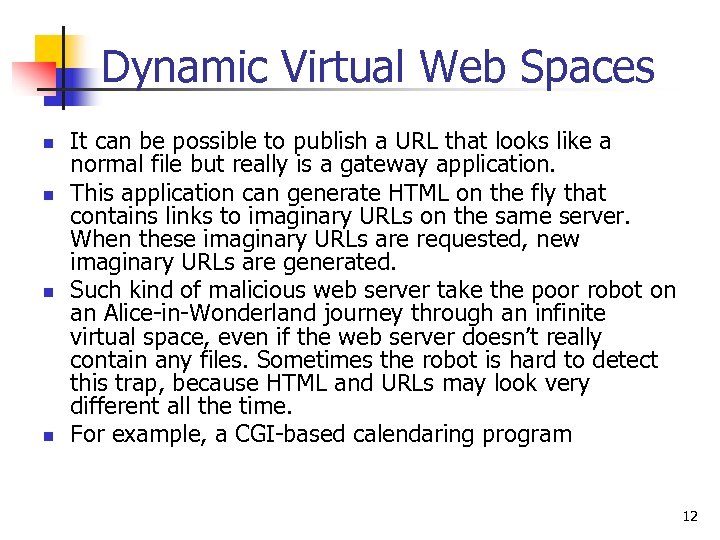

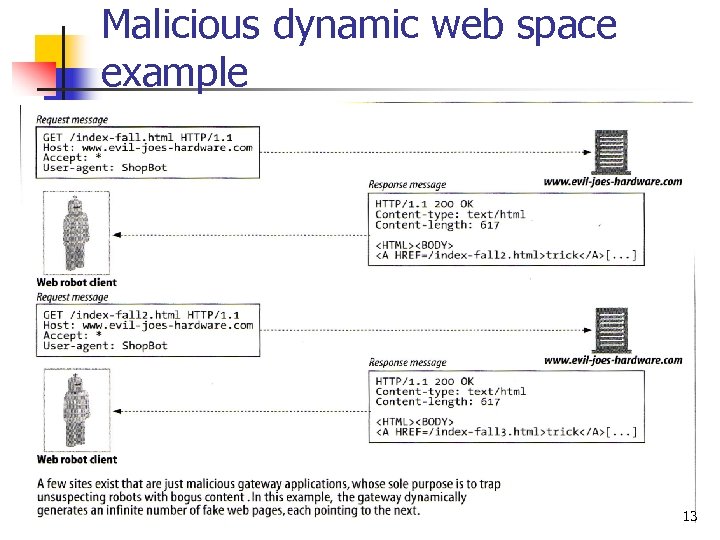

Dynamic Virtual Web Spaces
- It is possible to publish a URL that looks like a normal file but is really a gateway application. This application can generate HTML on the fly that contains links to imaginary URLs on the same server. When these imaginary URLs are requested, new imaginary URLs are generated.
- Such a malicious web server can take the poor robot on an Alice-in-Wonderland journey through an infinite virtual space, even if the web server doesn't really contain any files.
- This trap can be hard for a robot to detect, because the HTML and URLs may look very different every time.
- For example, a CGI-based calendaring program that always offers a link to the next month.
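The calendar trap can be simulated in a few lines. `calendar_gateway` is a hypothetical stand-in for the CGI program: every page links to the next month, so every link the robot sees is new. A visited-URL set never fires here; in this sketch only the page budget `max_pages` (an assumed parameter) stops the robot.

```python
import re


def calendar_gateway(target):
    """Hypothetical CGI calendar: the page for a month always links to the next month."""
    query = target.split("?", 1)[1]
    fields = dict(pair.split("=", 1) for pair in query.split("&"))
    year, month = int(fields["y"]), int(fields["m"])
    year, month = (year + 1, 1) if month == 12 else (year, month + 1)
    return f'<a href="/cal?y={year}&m={month:02d}">next month</a>'


def follow_links(start, max_pages):
    """Keep following the first link; return the set of distinct URLs fetched."""
    seen = set()
    url = start
    # "url not in seen" would catch a real cycle, but the gateway never repeats a URL.
    while url not in seen and len(seen) < max_pages:
        seen.add(url)
        html = calendar_gateway(url)
        url = re.search(r'href="([^"]+)"', html).group(1)
    return seen
```

Raising `max_pages` only makes the robot fetch more imaginary months; the number of distinct URLs is limited by the budget, not by the site.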

Malicious dynamic web space example

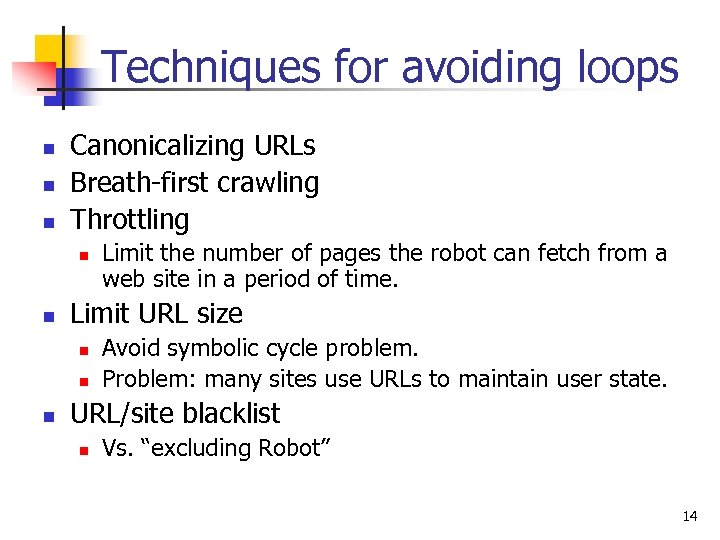

Techniques for avoiding loops
- Canonicalizing URLs
- Breadth-first crawling
- Throttling
  - Limit the number of pages the robot can fetch from a web site in a period of time.
- Limit URL size
  - Avoids the symbolic link cycle problem.
  - Problem: many sites use URLs to maintain user state, so some legitimate URLs are long.
- URL/site blacklist
  - Compare with “excluding robots” (robots.txt).
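Two of these techniques, the URL size limit and per-site throttling, can be combined into one admission check. This is a sketch under stated assumptions: `FetchPolicy` is a hypothetical class, its default limits (1024 characters, 10 pages per 60 seconds) are illustrative values rather than recommendations from the slides, and the caller passes the current time explicitly (a real robot might pass `time.monotonic()`).

```python
from collections import defaultdict, deque
from urllib.parse import urlsplit


class FetchPolicy:
    """Hypothetical check combining a URL size limit with per-site throttling."""

    def __init__(self, max_url_len=1024, max_pages=10, window_seconds=60.0):
        self.max_url_len = max_url_len
        self.max_pages = max_pages
        self.window = window_seconds
        self.history = defaultdict(deque)   # host -> timestamps of recent fetches

    def allow(self, url, now):
        if len(url) > self.max_url_len:
            return False                     # suspiciously long: possible cycle
        host = urlsplit(url).hostname or ""
        stamps = self.history[host]
        while stamps and now - stamps[0] >= self.window:
            stamps.popleft()                 # forget fetches outside the window
        if len(stamps) >= self.max_pages:
            return False                     # this site's budget is used up for now
        stamps.append(now)
        return True
```

The size limit is crude: as the slide warns, sites that keep user state in URLs can produce long but legitimate URLs, so a real robot would rather log rejected URLs for a human to review than drop them silently.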

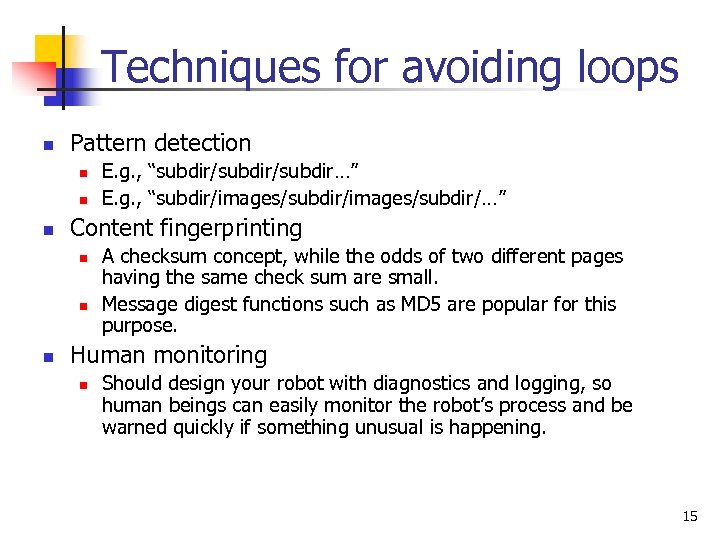

Techniques for avoiding loops (cont.)
- Pattern detection
  - E.g., “subdir/subdir/…”
  - E.g., “subdir/images/subdir/…”
- Content fingerprinting
  - A checksum concept: the odds of two different pages having the same checksum are small.
  - Message digest functions such as MD5 are popular for this purpose.
- Human monitoring
  - Design your robot with diagnostics and logging, so human beings can easily monitor the robot's progress and be warned quickly if something unusual is happening.
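Pattern detection and content fingerprinting can each be sketched in a few lines. `has_repeating_pattern` (hypothetical) flags a path in which some run of segments repeats back to back at least `min_repeats` times (an assumed threshold of 3), which catches both `subdir/subdir/…` and `subdir/images/subdir/images/…`. `ContentFingerprints` (also hypothetical) uses `hashlib.md5` as the message digest mentioned on the slide.

```python
import hashlib


def has_repeating_pattern(path, min_repeats=3):
    """True if some run of path segments repeats consecutively min_repeats times."""
    segs = [s for s in path.split("/") if s]
    n = len(segs)
    for size in range(1, n // min_repeats + 1):
        for start in range(0, n - size * min_repeats + 1):
            block = segs[start:start + size]
            if all(segs[start + i * size:start + (i + 1) * size] == block
                   for i in range(1, min_repeats)):
                return True
    return False


class ContentFingerprints:
    """Remembers MD5 digests of page bodies to spot duplicate content."""

    def __init__(self):
        self.seen = set()

    def is_duplicate(self, body: bytes) -> bool:
        digest = hashlib.md5(body).hexdigest()
        if digest in self.seen:
            return True
        self.seen.add(digest)
        return False
```

Fingerprinting catches aliases that canonicalization misses (such as a case-insensitive server), but any page with a changing timestamp or counter will get a new digest on every fetch.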

Robotic HTTP
- Robots are no different from any other HTTP client program.
- Many robots try to implement the minimum amount of HTTP needed to request the content they seek.
- Robot implementers are encouraged to send some basic header information to notify the site of the robot's capabilities, the robot's identity, and where it originated.

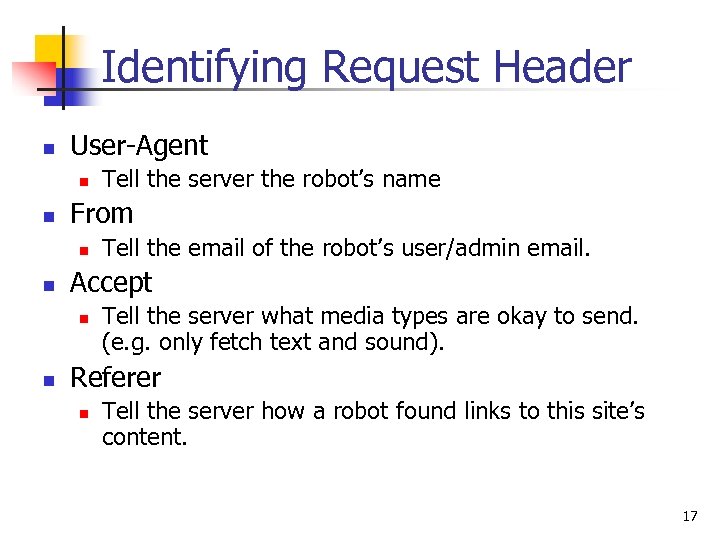

Identifying Request Headers
- User-Agent: tells the server the robot's name.
- From: tells the server the email address of the robot's user or administrator.
- Accept: tells the server which media types are okay to send (e.g., only fetch text and sound).
- Referer: tells the server how the robot found links to this site's content.
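With Python's standard `urllib.request`, the identifying headers can be attached to a request object before it is sent. The robot name, contact address, and accepted media types below are placeholder values, and `make_robot_request` is a hypothetical helper; building the `Request` does not touch the network.

```python
import urllib.request

# Placeholder identity values; a real robot would use its own name and contact.
ROBOT_NAME = "Shop.Bot/1.0"
ADMIN_EMAIL = "robot-admin@example.com"


def make_robot_request(url, referer=None):
    headers = {
        "User-Agent": ROBOT_NAME,            # who the robot is
        "From": ADMIN_EMAIL,                 # whom to contact about it
        "Accept": "text/html, text/plain",   # media types the robot wants
    }
    if referer is not None:
        headers["Referer"] = referer         # page on which the link was found
    return urllib.request.Request(url, headers=headers)
```

`urllib` stores header names via `str.capitalize()`, so the stored name is read back as `req.get_header("User-agent")`.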

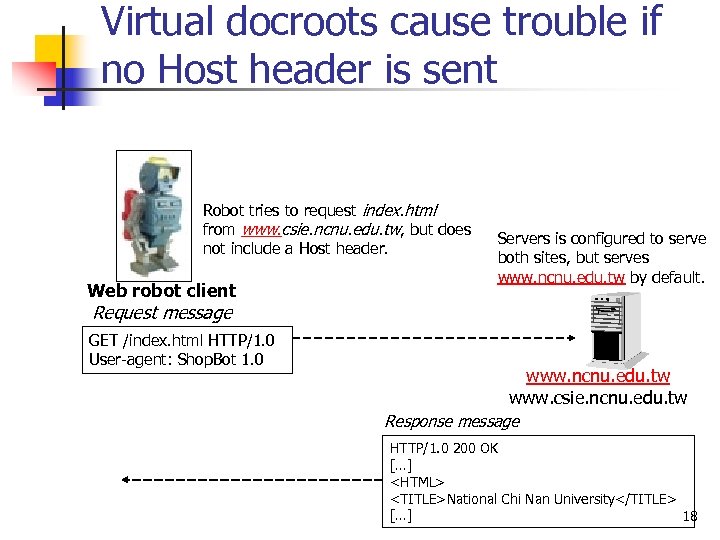

Virtual docroots cause trouble if no Host header is sent
- The robot tries to request index.html from www.csie.ncnu.edu.tw, but does not include a Host header.
- The server is configured to serve both sites (www.ncnu.edu.tw and www.csie.ncnu.edu.tw), but serves www.ncnu.edu.tw by default.
- Request message (web robot client):
  - `GET /index.html HTTP/1.0`
  - `User-agent: Shop.Bot 1.0`
- Response message:
  - `HTTP/1.0 200 OK`
  - […] `<HTML> <TITLE>National Chi Nan University</TITLE>` […]
- The robot therefore receives the default site's home page instead of the page it asked for.
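The remedy is to send the Host header. A minimal sketch of the raw HTTP/1.0 request a robot could write to its socket, assuming a hypothetical `build_get` helper and the same `Shop.Bot 1.0` user agent as in the example:

```python
def build_get(host, path, user_agent="Shop.Bot 1.0"):
    """Raw HTTP/1.0 request bytes that name the intended virtual host."""
    lines = [
        f"GET {path} HTTP/1.0",
        f"Host: {host}",              # lets a virtually hosted server pick the right docroot
        f"User-agent: {user_agent}",
        "",                           # an empty line ends the header block
        "",
    ]
    return "\r\n".join(lines).encode("ascii")
```

With `Host: www.csie.ncnu.edu.tw` present, a server hosting both sites can serve the CSIE page instead of falling back to its default site.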

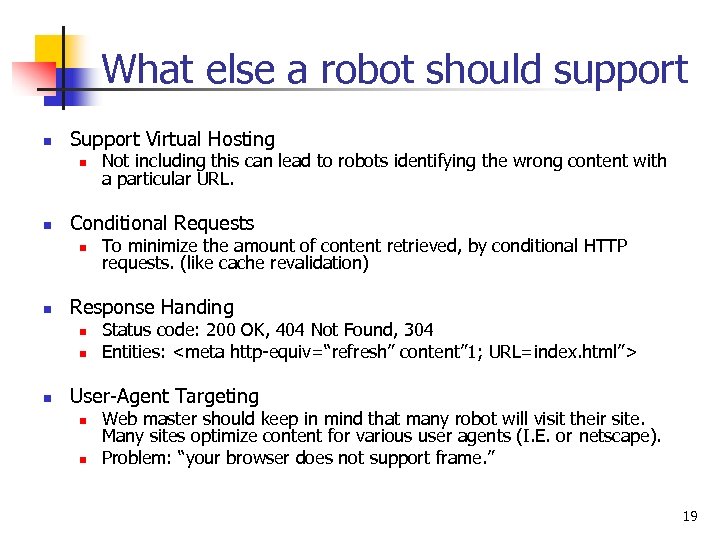

What else a robot should support
- Virtual hosting
  - Not sending the Host header can lead robots to associate the wrong content with a particular URL.
- Conditional requests
  - Minimize the amount of content retrieved by using conditional HTTP requests (as in cache revalidation).
- Response handling
  - Status codes: 200 OK, 404 Not Found, 304 Not Modified
  - Entities: `<meta http-equiv="refresh" content="1; URL=index.html">`
- User-Agent targeting
  - Webmasters should keep in mind that many robots will visit their site.
  - Many sites optimize content for various user agents (Internet Explorer or Netscape).
  - Problem: robots may receive pages saying “your browser does not support frames.”
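Conditional requests and basic response handling can be sketched as two small functions. The names `conditional_headers` and `handle_response`, the shape of the `cached` dictionary, and the action labels are all hypothetical. The meta-refresh regex is deliberately simplistic: it assumes `http-equiv` comes before `content`, as in the slide's example.

```python
import re
from urllib.parse import urljoin


def conditional_headers(cached):
    """Revalidation headers built from validators saved on an earlier fetch."""
    headers = {}
    if cached.get("etag"):
        headers["If-None-Match"] = cached["etag"]
    if cached.get("last_modified"):
        headers["If-Modified-Since"] = cached["last_modified"]
    return headers


META_REFRESH_RE = re.compile(
    r"""<meta\s+http-equiv=["']?refresh["']?\s+content=["']?\s*\d+\s*;\s*url=([^"'>\s]+)""",
    re.IGNORECASE,
)


def handle_response(url, status, body):
    """Map a response to a (hypothetical) robot action."""
    if status == 304:
        return ("keep-cached-copy", None)    # Not Modified: nothing new to fetch
    if status == 404:
        return ("drop-url", None)            # Not Found: forget this URL
    if status == 200:
        match = META_REFRESH_RE.search(body)
        if match:
            # The entity itself redirects; resolve the target against the page URL.
            return ("follow-refresh", urljoin(url, match.group(1)))
        return ("process", None)
    return ("skip", None)
```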

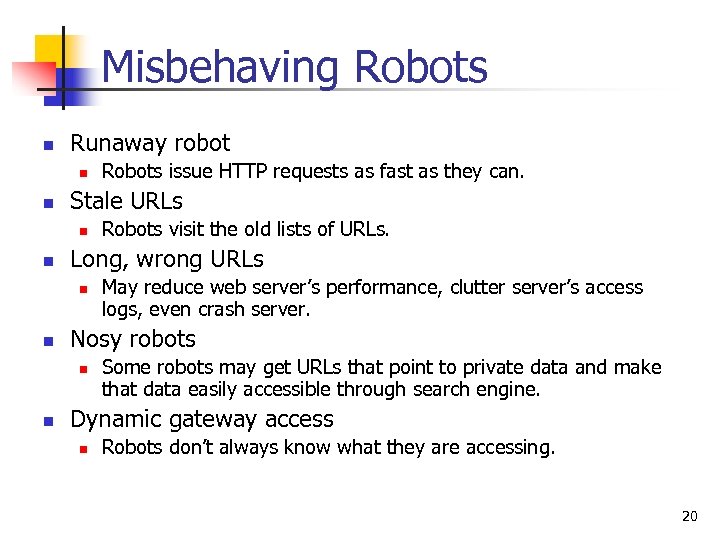

Misbehaving Robots
- Runaway robots: robots issue HTTP requests as fast as they can.
- Stale URLs: robots visit old lists of URLs.
- Long, wrong URLs: these may reduce the web server's performance, clutter the server's access logs, or even crash the server.
- Nosy robots: some robots may get URLs that point to private data and make that data easily accessible through search engines.
- Dynamic gateway access: robots don't always know what they are accessing.

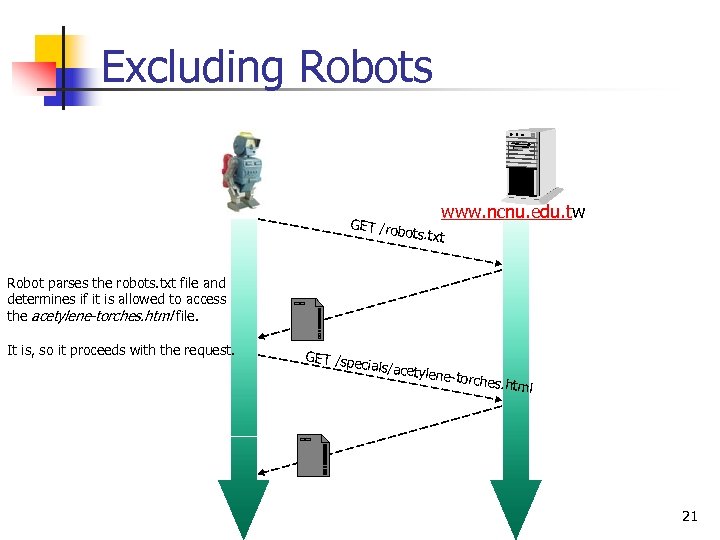

Excluding Robots

[Figure: a robot exchanging requests with www.ncnu.edu.tw]
1. The robot requests `GET /robots.txt`.
2. The robot parses the robots.txt file and determines whether it is allowed to access the acetylene-torches.html file. It is, so it proceeds with the request.
3. `GET /specials/acetylene-torches.html`

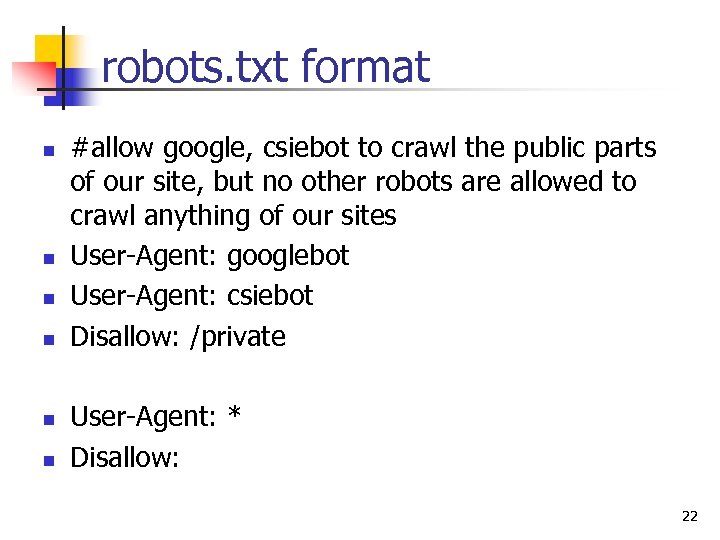

robots.txt format

    # allow googlebot and csiebot to crawl the public parts of our site, but no other robots are allowed to crawl anything on our site
    User-Agent: googlebot
    User-Agent: csiebot
    Disallow: /private

    User-Agent: *
    Disallow: /
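Python's standard library includes a robots.txt parser, `urllib.robotparser.RobotFileParser`. Feeding it the file above through `parse()` (instead of downloading it with `read()`) shows the intended effect. The robot name `SomeOtherBot` and the page URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
# allow googlebot and csiebot to crawl the public parts of our site,
# but no other robots are allowed to crawl anything on our site
User-Agent: googlebot
User-Agent: csiebot
Disallow: /private

User-Agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())   # parse lines we already have; no network access

# Named robots may crawl everything except /private; everyone else is shut out.
public_ok = parser.can_fetch("googlebot", "http://www.ncnu.edu.tw/index.html")
private_ok = parser.can_fetch("googlebot", "http://www.ncnu.edu.tw/private/grades.html")
stranger_ok = parser.can_fetch("SomeOtherBot/1.0", "http://www.ncnu.edu.tw/index.html")
```

Note that the catch-all record needs `Disallow: /`; an empty `Disallow:` line would instead allow every other robot to crawl everything.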

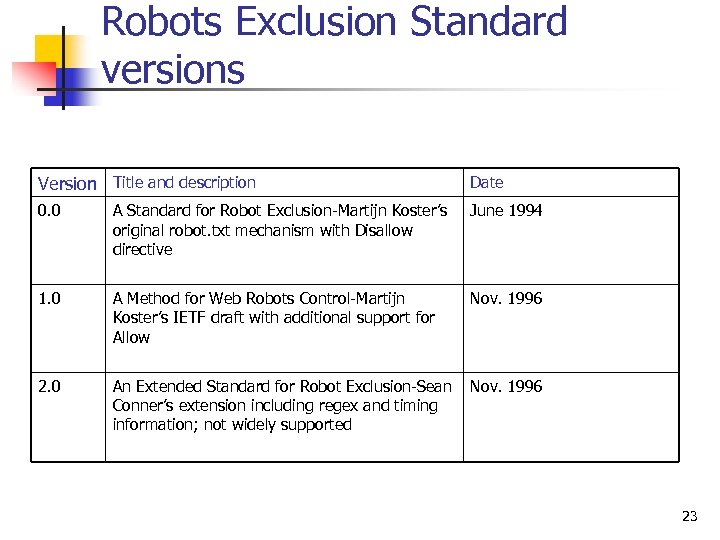

Robots Exclusion Standard versions

| Version | Title and description | Date |
|---|---|---|
| 0.0 | A Standard for Robot Exclusion: Martijn Koster's original robots.txt mechanism with the Disallow directive | June 1994 |
| 1.0 | A Method for Web Robots Control: Martijn Koster's IETF draft with additional support for Allow | Nov. 1996 |
| 2.0 | An Extended Standard for Robot Exclusion: Sean Conner's extension including regex and timing information; not widely supported | Nov. 1996 |

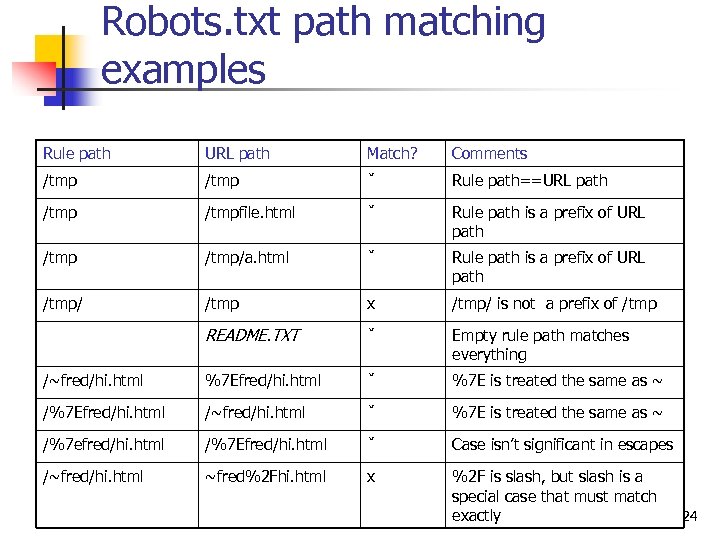

Robots.txt path matching examples

| Rule path | URL path | Match? | Comments |
|---|---|---|---|
| /tmp | /tmp | ✓ | Rule path == URL path |
| /tmp | /tmpfile.html | ✓ | Rule path is a prefix of URL path |
| /tmp | /tmp/a.html | ✓ | Rule path is a prefix of URL path |
| /tmp/ | /tmp | ✗ | /tmp/ is not a prefix of /tmp |
| (empty) | README.TXT | ✓ | Empty rule path matches everything |
| /~fred/hi.html | /%7Efred/hi.html | ✓ | %7E is treated the same as ~ |
| /%7Efred/hi.html | /~fred/hi.html | ✓ | %7E is treated the same as ~ |
| /%7efred/hi.html | /%7Efred/hi.html | ✓ | Case isn't significant in escapes |
| /~fred/hi.html | /~fred%2Fhi.html | ✗ | %2F is slash, but slash is a special case that must match exactly |
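The matching rules in this table can be captured in a small function. This is a sketch of the table's behavior, not a complete robots.txt implementation: `rule_matches` is a hypothetical name, it decodes every `%xx` escape except `%2F` (kept as an upper-case escape so an encoded slash never matches a real one), and it then does a plain prefix comparison.

```python
import re
from urllib.parse import unquote


def _normalize(path):
    # Decode %xx escapes (case-insensitively), but keep %2F as a literal "%2F".
    return "%2F".join(unquote(piece) for piece in re.split(r"%2[fF]", path))


def rule_matches(rule_path, url_path):
    if rule_path == "":
        return True                  # an empty rule path matches everything
    return _normalize(url_path).startswith(_normalize(rule_path))
```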

HTML Robot-control Meta Tags
- E.g., `<META NAME="ROBOTS" CONTENT=directive-list>`
- Directive list:
  - NOINDEX: the robot should not process (index) this document's content.
  - NOFOLLOW: the robot should not crawl any outgoing links from this page.
  - INDEX
  - FOLLOW
  - NOARCHIVE: the robot should not cache a local copy of the page.
  - ALL (equivalent to INDEX, FOLLOW)
  - NONE (equivalent to NOINDEX, NOFOLLOW)
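A robot can read these directives with Python's standard `html.parser`. `RobotsMetaParser` and `robots_meta_policy` are hypothetical names; the sketch assumes that anything not explicitly forbidden is allowed, and it expands ALL and NONE into their equivalents.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <META NAME="ROBOTS" CONTENT="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # html.parser lower-cases tag and attribute names, but not attribute values.
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() != "robots":
            return
        for token in (attributes.get("content") or "").split(","):
            if token.strip():
                self.directives.add(token.strip().upper())


def robots_meta_policy(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    directives = set(parser.directives)
    if "ALL" in directives:
        directives |= {"INDEX", "FOLLOW"}
    if "NONE" in directives:
        directives |= {"NOINDEX", "NOFOLLOW"}
    return {
        "index": "NOINDEX" not in directives,
        "follow": "NOFOLLOW" not in directives,
        "archive": "NOARCHIVE" not in directives,
    }
```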

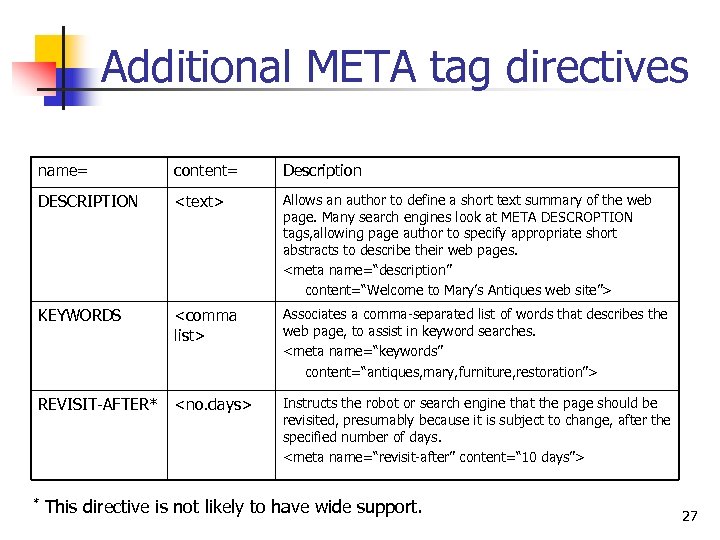

Additional META tag directives

| name= | content= | Description |
|---|---|---|
| DESCRIPTION | `<text>` | Allows an author to define a short text summary of the web page. Many search engines look at META DESCRIPTION tags, allowing page authors to specify appropriate short abstracts to describe their web pages. `<meta name="description" content="Welcome to Mary's Antiques web site">` |
| KEYWORDS | `<comma list>` | Associates a comma-separated list of words that describe the web page, to assist in keyword searches. `<meta name="keywords" content="antiques, mary, furniture, restoration">` |
| REVISIT-AFTER\* | `<no. days>` | Instructs the robot or search engine that the page should be revisited, presumably because it is subject to change, after the specified number of days. `<meta name="revisit-after" content="10 days">` |

\* This directive is not likely to have wide support.

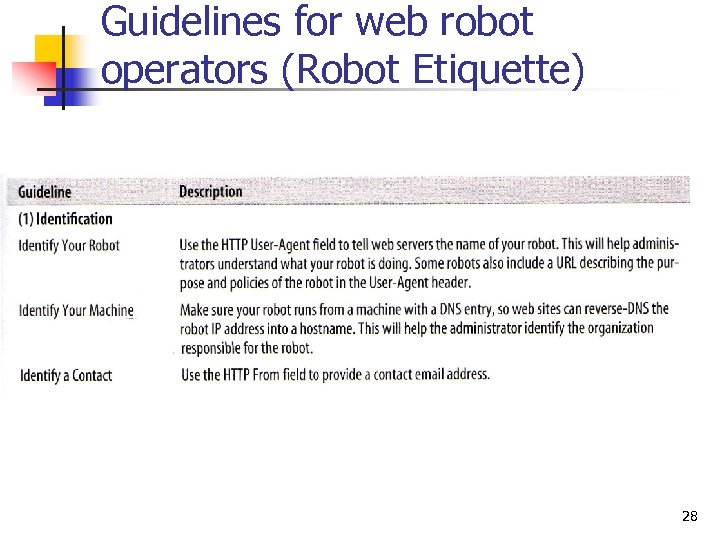

Guidelines for web robot operators (Robot Etiquette)

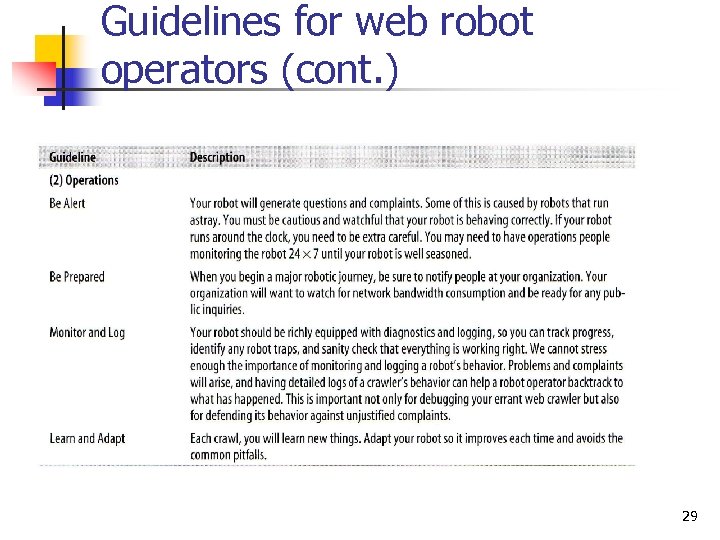

Guidelines for web robot operators (cont.)

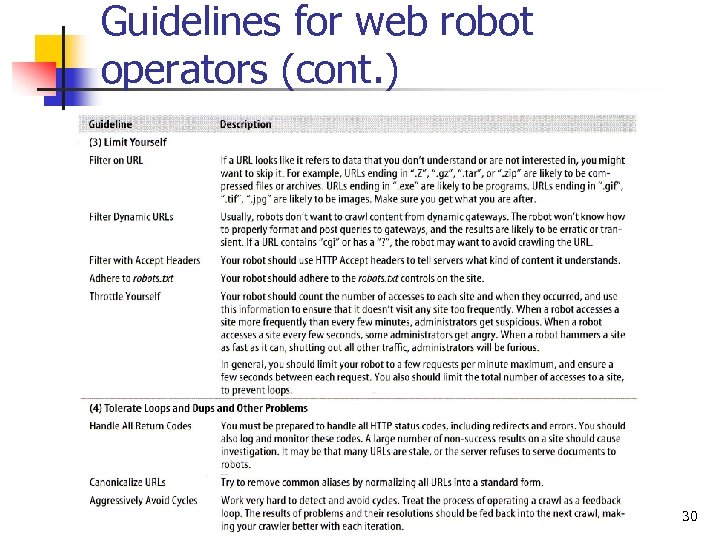

Guidelines for web robot operators (cont.)

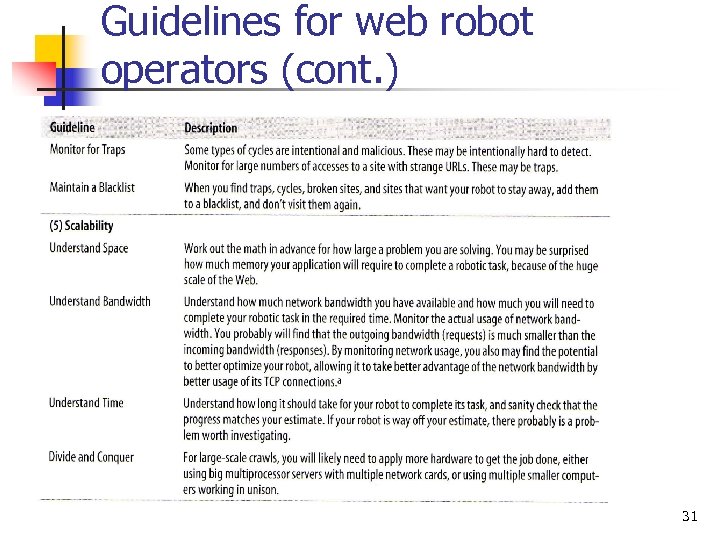

Guidelines for web robot operators (cont.)

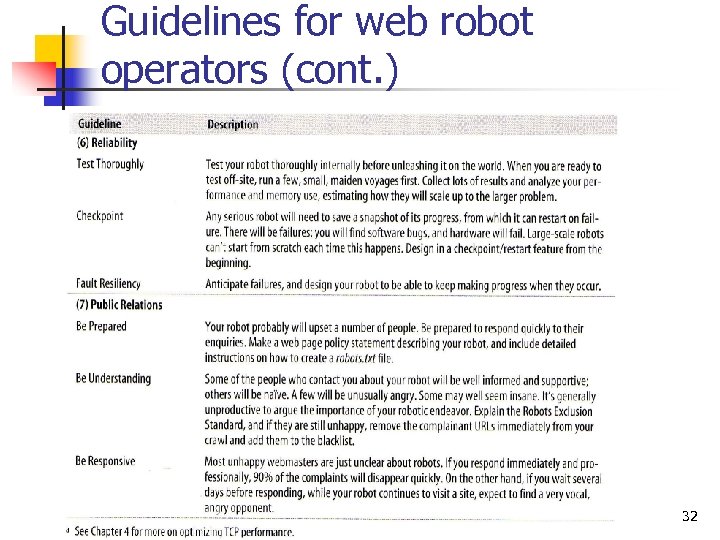

Guidelines for web robot operators (cont.)

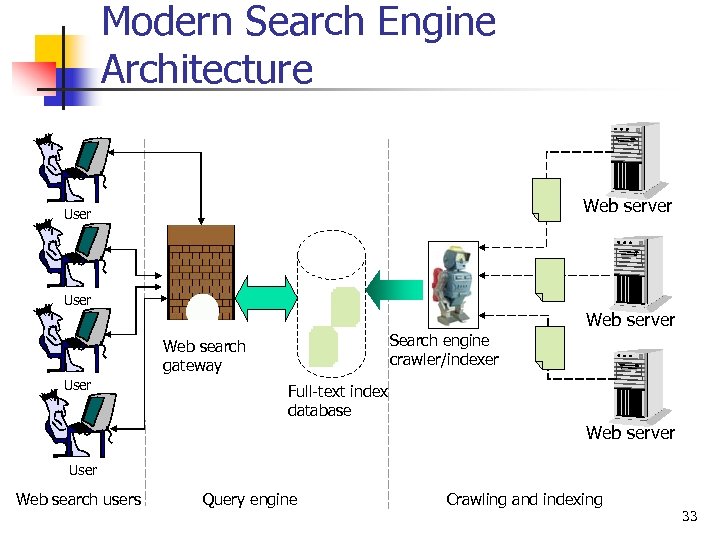

Modern Search Engine Architecture

[Figure: crawling and indexing (a search engine crawler/indexer visits web servers and fills a full-text index database) and querying (web search users reach a query engine through a web search gateway)]

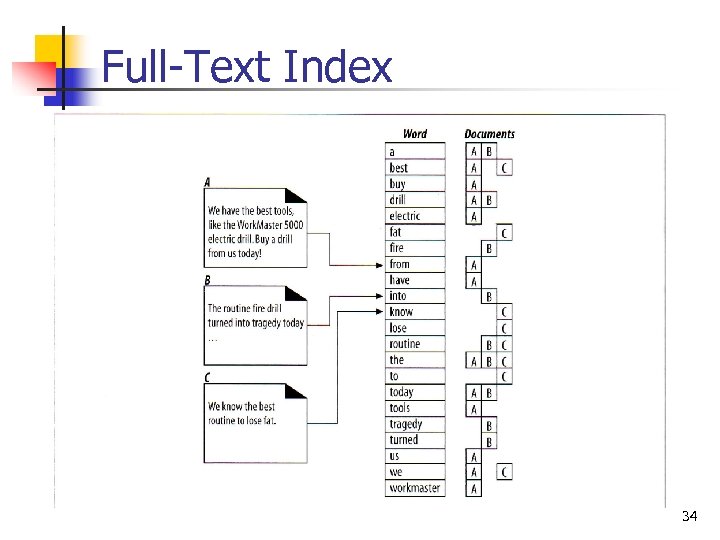

Full-Text Index

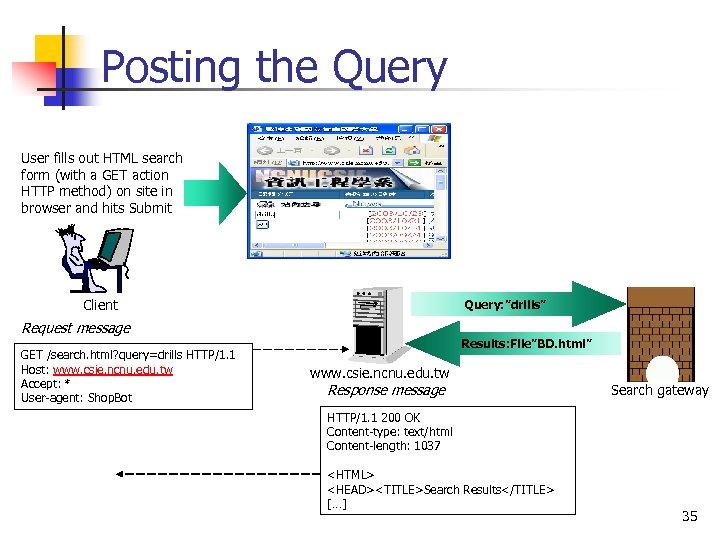
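A full-text index is essentially an inverted index: a map from each word to the set of documents that contain it, so a query can be answered without rescanning the pages. The sketch below is an in-memory toy; `build_index`, `search`, and the three documents in `DOCS` are hypothetical, and real engines also store the index on disk and rank the results.

```python
import re
from collections import defaultdict

# Hypothetical documents (file name -> text).
DOCS = {
    "BD.html": "Black & Decker cordless drills",
    "saws.html": "Circular saws",
    "bits.html": "Drill bits for cordless drills",
}


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """Inverted index: word -> set of documents containing that word."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for word in tokenize(text):
            index[word].add(doc_id)
    return index


def search(index, query):
    """Documents containing every word of the query (boolean AND)."""
    words = tokenize(query)
    if not words:
        return set()
    result = set(index.get(words[0], set()))
    for word in words[1:]:
        result &= index.get(word, set())
    return result
```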

Posting the Query
- The user fills out an HTML search form (with a GET action HTTP method) on the site in the browser and hits Submit.
- Client query: “drills”
- Request message:
  - `GET /search.html?query=drills HTTP/1.1`
  - `Host: www.csie.ncnu.edu.tw`
  - `Accept: *`
  - `User-agent: Shop.Bot`
- Search gateway on www.csie.ncnu.edu.tw; results: file “BD.html”
- Response message:
  - `HTTP/1.1 200 OK`
  - `Content-type: text/html`
  - `Content-length: 1037`
  - `<HTML> <HEAD><TITLE>Search Results</TITLE>` […]
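Both ends of this exchange can be sketched with Python's `urllib.parse`: the browser side URL-encodes the form field into the request target, and the gateway side decodes it and looks the word up. `form_target`, `search_gateway`, and the one-entry `INDEX` are hypothetical stand-ins for the real form and search engine.

```python
from html import escape
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical full-text index: word -> documents containing it.
INDEX = {"drills": ["BD.html"]}


def form_target(query):
    """Request target a browser builds for a GET form with one "query" field."""
    return "/search.html?" + urlencode({"query": query})


def search_gateway(target):
    """Decode the query string, look the word up, and return a results page."""
    params = parse_qs(urlsplit(target).query)
    query = params.get("query", [""])[0]
    hits = INDEX.get(query.lower(), [])
    items = "".join(f"<LI>{escape(h)}</LI>" for h in hits)
    return f"<HTML><HEAD><TITLE>Search Results</TITLE></HEAD><BODY><UL>{items}</UL></BODY></HTML>"
```

`urlencode` turns spaces into `+`, so a two-word query is sent as `/search.html?query=cordless+drills`, and `parse_qs` turns it back into a space on the gateway side.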

