921aef74d45a5578ad6a8bf6a4073586.ppt

- Number of slides: 22

Web Caching and Content Delivery

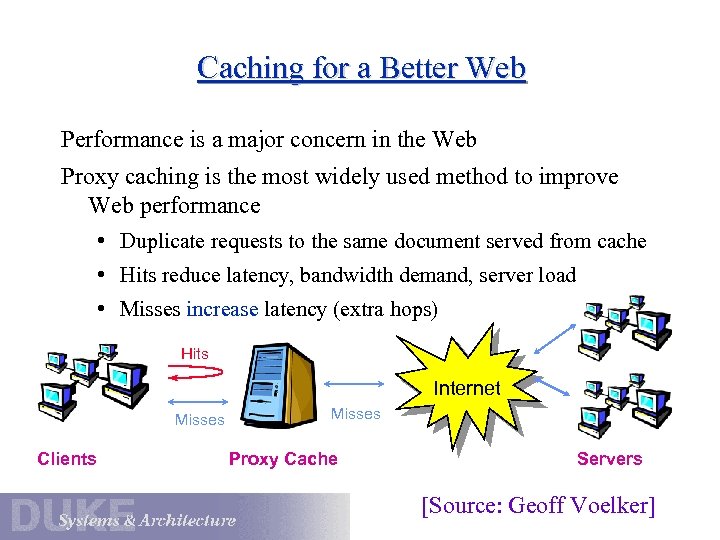

Caching for a Better Web

Performance is a major concern in the Web. Proxy caching is the most widely used method to improve Web performance.

- Duplicate requests to the same document are served from the cache.
- Hits reduce latency, bandwidth demand, and server load.
- Misses increase latency (extra hops).

Diagram labels: Clients, Proxy Cache, Internet, Servers, Hits, Misses. [Source: Geoff Voelker]

Proxy Caching

How should we build caching systems for the Web?

- Seminal paper [Chankhunthod 96]
- Proxy caches [Duska 97]
- Akamai DNS interposition [Karger 99]
- Cooperative caching [Tewari 99, Fan 98, Wolman 99]
- Popularity distributions [Breslau 99]
- Proxy filtering and transcoding [Fox et al]
- Consistency [Tewari, Cao et al]
- Replica placement for CDNs [et al]

[Voelker]

Issues for Web Caching

- Binding clients to proxies, handling failover
  - Manual configuration, router-based “transparent caching”, WPAD (Web Proxy Automatic Discovery)
- Proxy may confuse/obscure interactions between server and client.
- Consistency management
  - At first approximation the Web is a wide-area read-only file service... but it is much more than that.
  - Caching responses vs. caching documents
  - Deltas [Mogul+Bala/Douglis/Misha/others@research.att.com]
- Prefetching, request routing, scale, performance
- Web caching vs. content distribution (CDNs, e.g., Akamai)

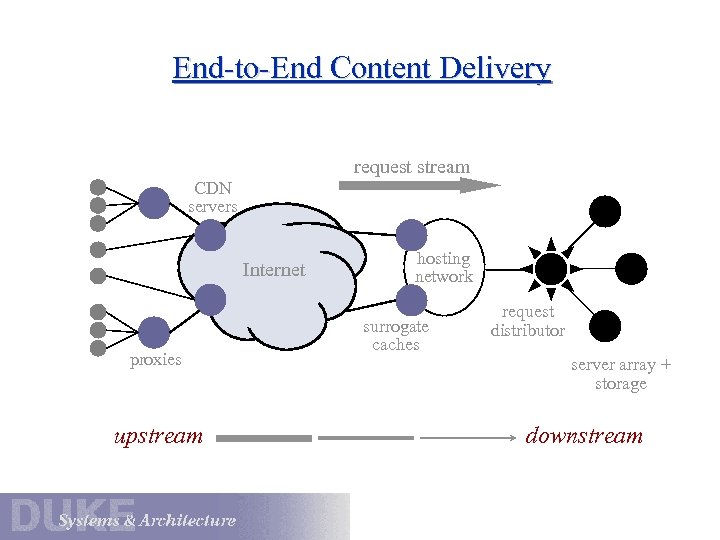

End-to-End Content Delivery

Diagram labels: request stream (upstream to downstream), proxies, Internet, CDN servers, hosting network, surrogate caches, request distributor, server array + storage.

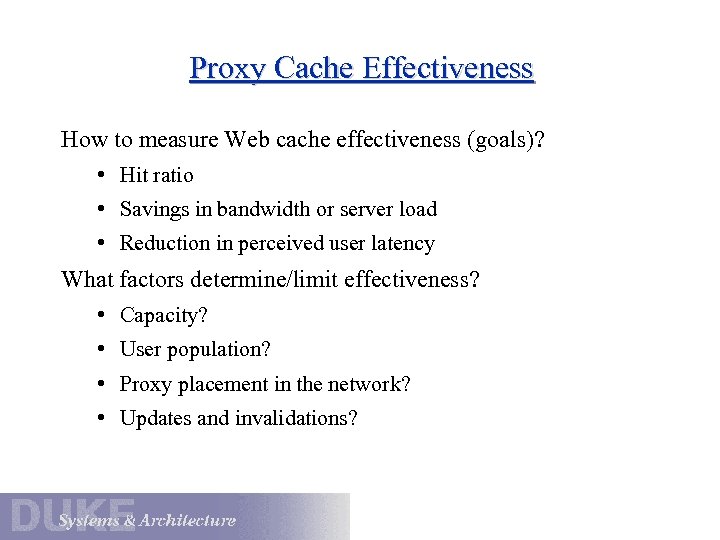

Proxy Cache Effectiveness

How to measure Web cache effectiveness (goals)?

- Hit ratio
- Savings in bandwidth or server load
- Reduction in perceived user latency

What factors determine/limit effectiveness?

- Capacity?
- User population?
- Proxy placement in the network?
- Updates and invalidations?
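The first two measures above can be computed directly from a proxy log. The following is a minimal sketch, assuming a hypothetical log format in which each request records its size and whether it was a cache hit; the `Request` type and the sample entries are invented for illustration and are not taken from the slides.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """One proxy log entry (hypothetical format, for illustration only)."""
    url: str
    size_bytes: int
    hit: bool


def hit_ratios(requests):
    """Return (object hit ratio, byte hit ratio) for a list of requests."""
    total = len(requests)
    total_bytes = sum(r.size_bytes for r in requests)
    hits = sum(1 for r in requests if r.hit)
    hit_bytes = sum(r.size_bytes for r in requests if r.hit)
    object_hit_ratio = hits / total if total else 0.0
    byte_hit_ratio = hit_bytes / total_bytes if total_bytes else 0.0
    return object_hit_ratio, byte_hit_ratio


# Made-up sample: three small hits and one large miss.
log = [
    Request("/index.html", 2_000, True),
    Request("/logo.png", 8_000, True),
    Request("/video.mp4", 90_000, False),
    Request("/index.html", 2_000, True),
]
ohr, bhr = hit_ratios(log)
print(f"object hit ratio = {ohr:.2f}, byte hit ratio = {bhr:.2f}")
```

In this sample the object hit ratio is high while the byte hit ratio is low, because the single miss is the largest object. That gap is why bandwidth savings are listed as a separate goal from hit ratio.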

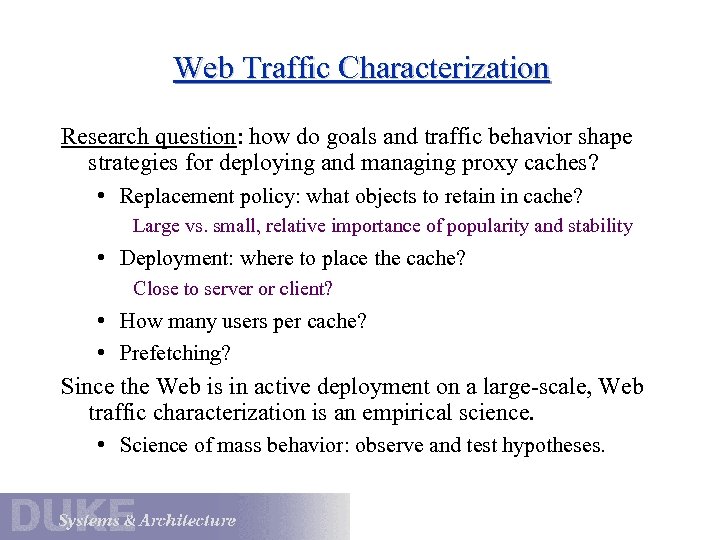

Web Traffic Characterization

Research question: how do goals and traffic behavior shape strategies for deploying and managing proxy caches?

- Replacement policy: what objects to retain in cache?
  - Large vs. small, relative importance of popularity and stability
- Deployment: where to place the cache?
  - Close to server or client?
- How many users per cache?
- Prefetching?

Since the Web is in active deployment on a large scale, Web traffic characterization is an empirical science.

- Science of mass behavior: observe and test hypotheses.


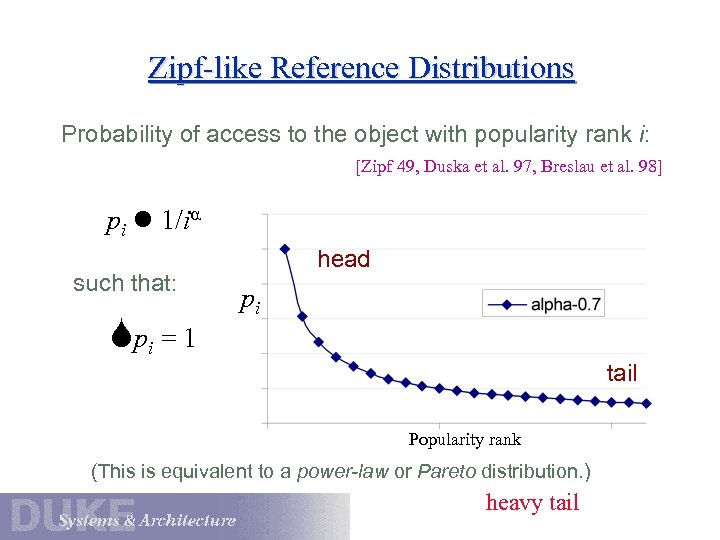

Zipf

[Breslau/Cao 99] and others observed that Web accesses can be modeled using Zipf-like probability distributions.

- Rank objects by popularity: lower rank $i$ means more popular.
- The probability that any given reference is to the $i$th most popular object is $p_i$.
  - Not to be confused with $p_c$, the percentage of cacheable objects.

Zipf says: “$p_i$ is proportional to $1/i^{\alpha}$, for some $\alpha$ with $0 < \alpha < 1$”.

- Higher $\alpha$ gives more skew: popular objects are way popular.
- Lower $\alpha$ gives a more heavy-tailed distribution.
- In the Web, $\alpha$ ranges from 0.6 to 0.8 [Breslau/Cao 99].
- With $\alpha = 0.8$, 0.3% of the objects get 40% of requests.
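To make the role of the exponent concrete, here is a minimal sketch that builds a normalized Zipf-like distribution and compares how much of the request stream goes to the most popular 1% of objects at the two ends of the range quoted above. The object count (10,000) and the 1% cutoff are assumptions chosen for illustration; the share obtained depends on the number of objects, so this is not meant to reproduce the figures quoted from [Breslau/Cao 99].

```python
def zipf_pmf(n_objects, alpha):
    """Return [p_1, ..., p_n] with p_i proportional to 1 / i**alpha."""
    weights = [1.0 / (i ** alpha) for i in range(1, n_objects + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def top_share(pmf, fraction):
    """Probability mass carried by the most popular `fraction` of objects."""
    k = max(1, int(len(pmf) * fraction))
    return sum(pmf[:k])


# Assumed population size, for illustration only.
N_OBJECTS = 10_000
pmf_low = zipf_pmf(N_OBJECTS, 0.6)
pmf_high = zipf_pmf(N_OBJECTS, 0.8)

share_low = top_share(pmf_low, 0.01)
share_high = top_share(pmf_high, 0.01)
print(f"alpha=0.6: top 1% of objects get {share_low:.1%} of requests")
print(f"alpha=0.8: top 1% of objects get {share_high:.1%} of requests")
```

The higher exponent concentrates more of the mass in the head, which is the "more skew" behavior described in the bullets.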

Zipf-like Reference Distributions

Probability of access to the object with popularity rank $i$ [Zipf 49, Duska et al. 97, Breslau et al. 98]:

$$p_i \propto \frac{1}{i^{\alpha}} \quad \text{such that} \quad \sum_i p_i = 1$$

(This is equivalent to a power-law or Pareto distribution.)

Plot: $p_i$ versus popularity rank, with a head at the most popular ranks and a heavy tail.

Importance of Traffic Models

Analytical models like this help us to predict cache hit ratios (object hit ratio or byte hit ratio).

- E.g., get object hit ratio as a function of size by integrating under segments of the Zipf curve
  - ...assuming perfect LFU replacement
- Must consider update rate
  - Do object update rates correlate with popularity?
- Must consider object size
  - How does size correlate with popularity?
- Must consider proxy cache population
  - What is the probability of object sharing?
- Enables construction of synthetic load generators
  - SURGE [Barford and Crovella 99]
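The first bullet can be sketched numerically. Under perfect LFU with independent references, a cache that holds the $C$ most popular objects hits on exactly those objects, so the object hit ratio is the sum of $p_1$ through $p_C$. The sketch below computes that curve in discrete form; it assumes unit-size objects, no updates, and an illustrative population of 100,000 objects with $\alpha = 0.7$, none of which come from the slides.

```python
from itertools import accumulate


def lfu_hit_ratio_curve(n_objects, alpha):
    """curve[c - 1] is the object hit ratio of a perfect-LFU cache holding
    the c most popular objects under a Zipf-like reference model.

    Assumes unit-size objects and no updates or invalidations.
    """
    weights = [i ** -alpha for i in range(1, n_objects + 1)]
    total = sum(weights)
    # Running sum of the normalized popularity distribution.
    return [c / total for c in accumulate(weights)]


# Assumed parameters, for illustration only.
curve = lfu_hit_ratio_curve(100_000, 0.7)
for cache_size in (100, 1_000, 10_000, 100_000):
    print(f"cache holds {cache_size:>7} objects -> "
          f"hit ratio {curve[cache_size - 1]:.3f}")
```

Because the tail is heavy, each tenfold increase in capacity buys a diminishing gain in hit ratio. The remaining bullets (update rate, object size, sharing) are exactly the effects this simplified model leaves out.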

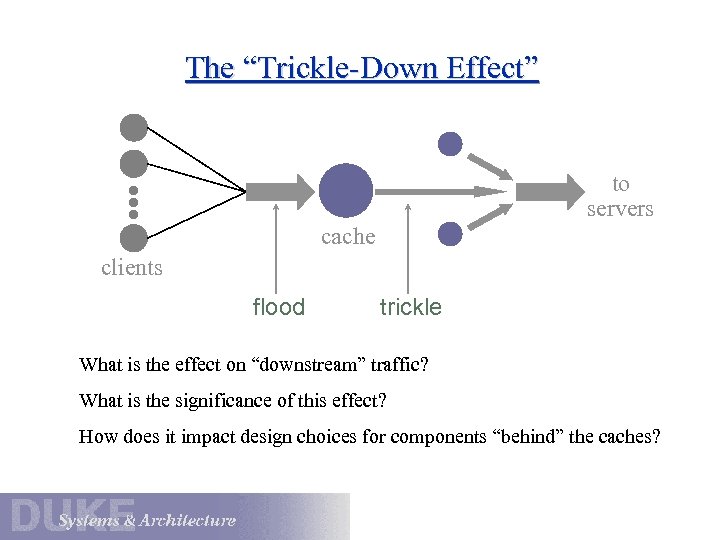

The “Trickle-Down Effect”

Diagram labels: clients, flood, cache, trickle, to servers.

- What is the effect on “downstream” traffic?
- What is the significance of this effect?
- How does it impact design choices for components “behind” the caches?

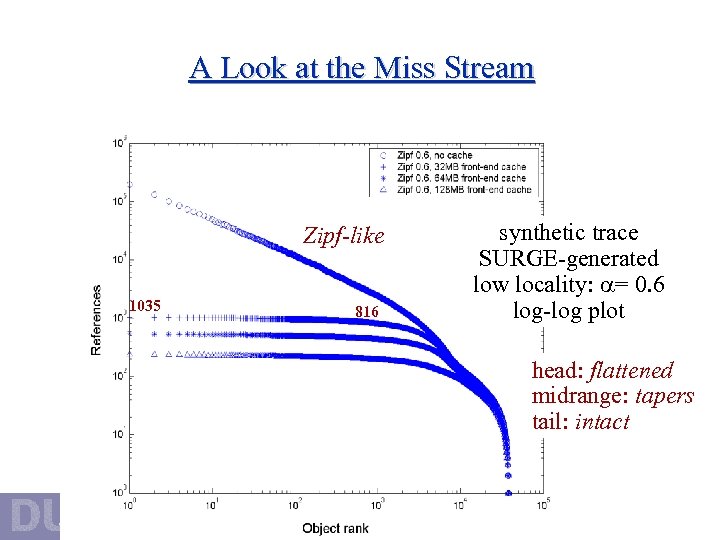

A Look at the Miss Stream

- Synthetic trace, SURGE-generated, Zipf-like
- Low locality: $\alpha = 0.6$
- Log-log plot (plot labels: 1035, 816)
- Head: flattened
- Midrange: tapers
- Tail: intact
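The flattening of the head can be reproduced with a small simulation. This is a sketch only: it draws independent references from a Zipf-like distribution as a simple stand-in for a SURGE-generated trace, and the object count, trace length, cache capacity, and seed are assumptions chosen to keep the run short.

```python
import random
from collections import Counter, OrderedDict


def zipf_trace(n_objects, alpha, n_requests, seed=0):
    """Independent references to ranks 1..n with P(rank i) ~ 1 / i**alpha."""
    rng = random.Random(seed)
    weights = [i ** -alpha for i in range(1, n_objects + 1)]
    return rng.choices(range(1, n_objects + 1), weights=weights, k=n_requests)


def lru_miss_stream(trace, capacity):
    """Run the trace through an LRU cache and return the requests that miss."""
    cache = OrderedDict()  # keys ordered from least to most recently used
    misses = []
    for obj in trace:
        if obj in cache:
            cache.move_to_end(obj)  # hit: mark as most recently used
        else:
            misses.append(obj)  # miss: forwarded downstream
            cache[obj] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict least recently used
    return misses


def top_share(stream, k=10):
    """Fraction of the stream that refers to the k most popular ranks."""
    counts = Counter(stream)
    return sum(counts[rank] for rank in range(1, k + 1)) / len(stream)


# Assumed parameters, for illustration only.
trace = zipf_trace(n_objects=2_000, alpha=0.6, n_requests=50_000)
misses = lru_miss_stream(trace, capacity=200)

print(f"miss ratio: {len(misses) / len(trace):.2f}")
print(f"top-10 share of request stream: {top_share(trace):.3f}")
print(f"top-10 share of miss stream:    {top_share(misses):.3f}")
```

The most popular objects account for a much smaller share of the miss stream than of the request stream, because they are almost always resident in the cache.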

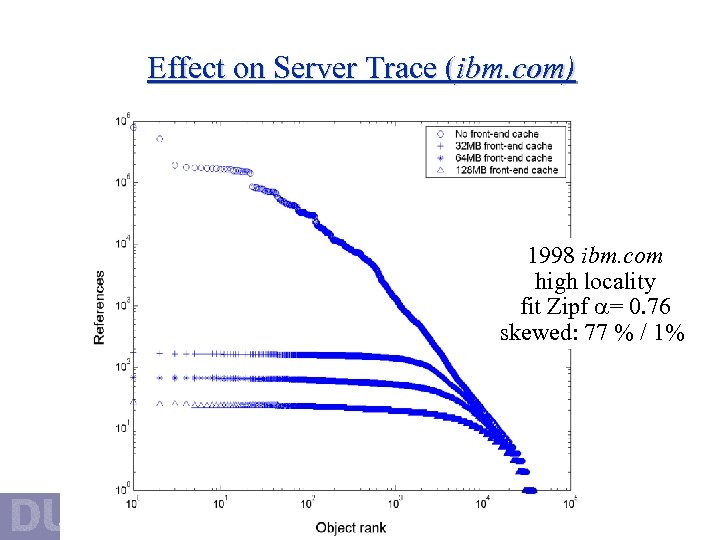

Effect on Server Trace (ibm.com)

- 1998 ibm.com trace
- High locality: fit Zipf $\alpha = 0.76$
- Skewed: 77% / 1%

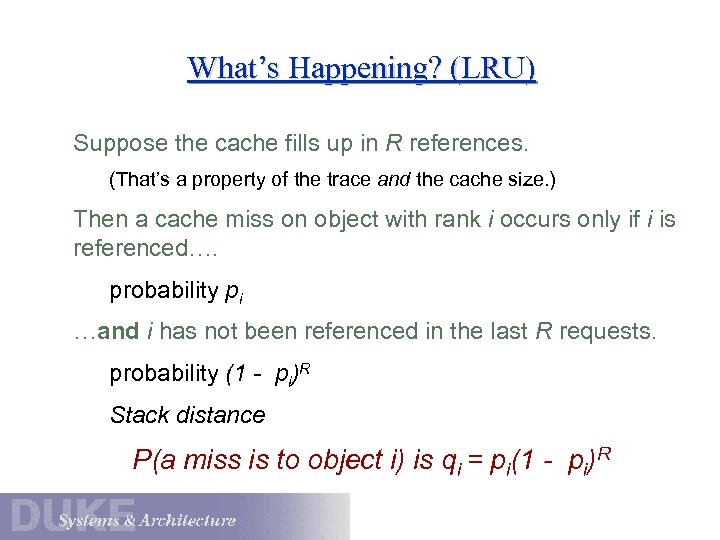

What’s Happening? (LRU)

Suppose the cache fills up in $R$ references. (That’s a property of the trace and the cache size.) Then a cache miss on the object with rank $i$ occurs only if:

- $i$ is referenced (probability $p_i$), and
- $i$ has not been referenced in the last $R$ requests (probability $(1 - p_i)^R$).

Stack distance: P(a miss is to object $i$) is $q_i = p_i (1 - p_i)^R$.
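A short numerical sketch shows where this expression peaks. Setting the derivative of $p(1-p)^R$ with respect to $p$ to zero gives $p = 1/(R+1)$, so the objects that dominate the miss stream are those whose popularity is close to $1/(R+1)$, not the most popular ones. The population size, exponent, and value of $R$ below are assumptions for illustration, and the $q_i$ values are normalized to sum to 1 so they can be read as a distribution over misses.

```python
def zipf_pmf(n_objects, alpha):
    """Return [p_1, ..., p_n] with p_i proportional to 1 / i**alpha."""
    weights = [i ** -alpha for i in range(1, n_objects + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def miss_stream_pmf(pmf, R):
    """q_i = p_i * (1 - p_i)**R, normalized to sum to 1."""
    q = [p * (1.0 - p) ** R for p in pmf]
    total = sum(q)
    return [x / total for x in q]


# Assumed parameters, for illustration only.
R = 10_000
p = zipf_pmf(100_000, 0.7)
q = miss_stream_pmf(p, R)

# Rank (1-based) of the object most likely to appear in the miss stream.
peak_rank = max(range(len(q)), key=q.__getitem__) + 1
print(f"most likely miss is to rank {peak_rank}")
print(f"its popularity p_i = {p[peak_rank - 1]:.2e}, "
      f"compared with 1/(R+1) = {1 / (R + 1):.2e}")
```

Objects far more popular than $1/(R+1)$ are nearly always cached, and objects far less popular are rarely requested at all, which leaves the moderately popular objects in between.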

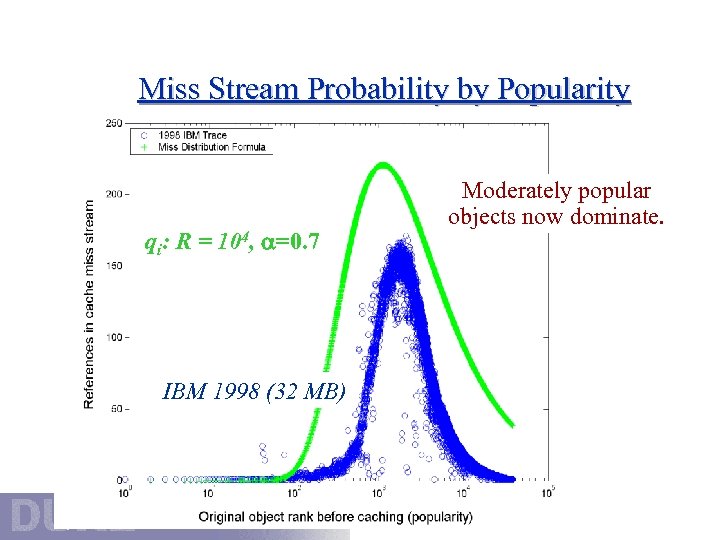

Miss Stream Probability by Popularity

- $q_i$ with $R = 10^4$, $\alpha = 0.7$
- IBM 1998 (32 MB)

Moderately popular objects now dominate.

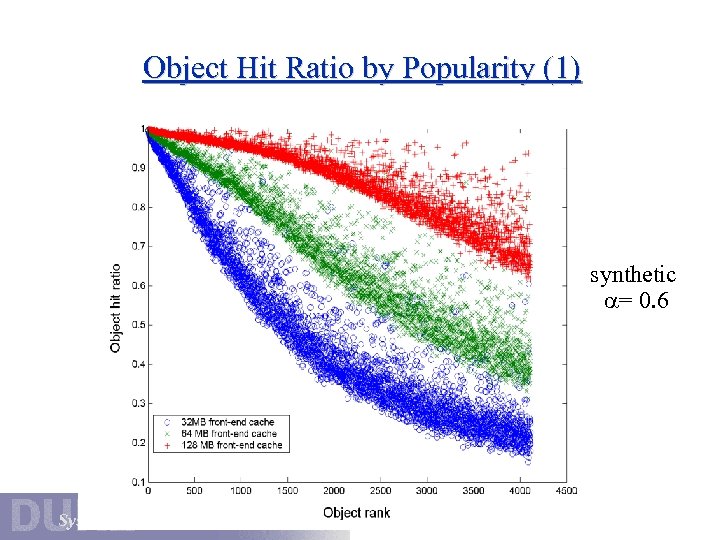

Object Hit Ratio by Popularity (1)

Synthetic trace, $\alpha = 0.6$

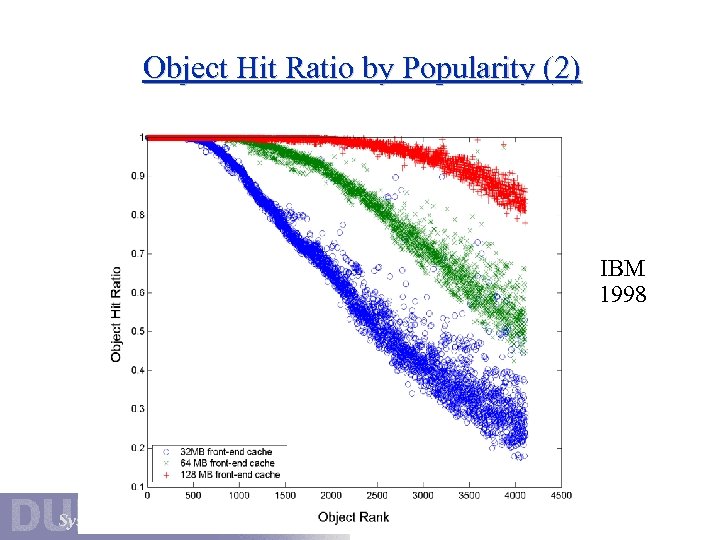

Object Hit Ratio by Popularity (2)

IBM 1998

Limitations/Features of This Study

- Static (cacheable) objects
- Ignore misses caused by updates
  - Invalidation/expiration
- LRU replacement
- Vary cache effectiveness by capacity
  - Cache intercepts all client traffic
- Ignore effect on downstream traffic volume

Proxy Deployment and Use

- Where to put it?
- How to direct user Web traffic through the proxy?
- Request redirection
  - Much more to come on this topic...
- Must the server consent?
  - Protected content
  - Client identity
- “Transparent” caching and the end-to-end principle
  - Must the client consent?

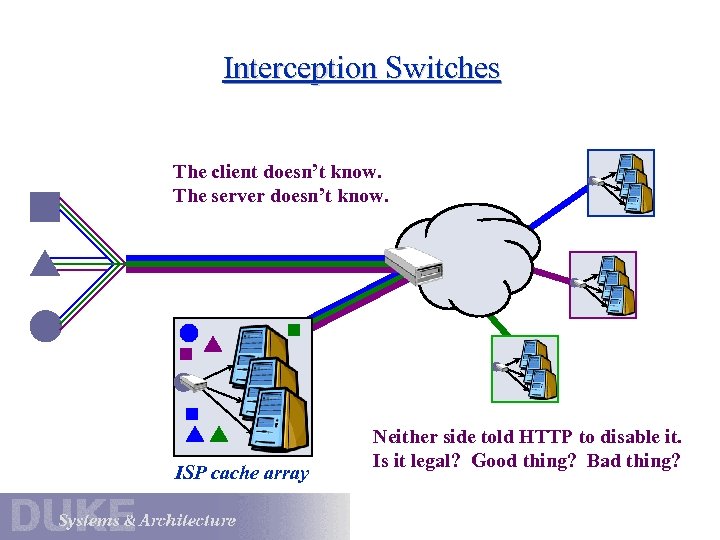

Interception Switches

Diagram label: ISP cache array.

- The client doesn’t know.
- The server doesn’t know.
- Neither side told HTTP to disable it.

Is it legal? Good thing? Bad thing?

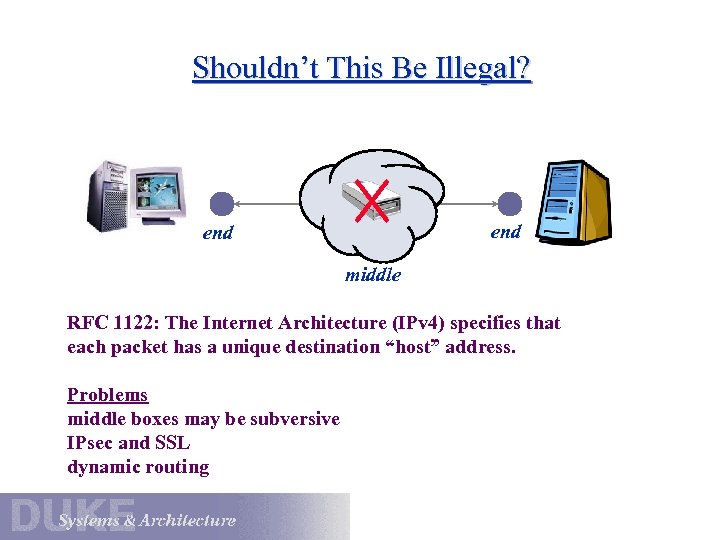

Shouldn’t This Be Illegal?

Diagram labels: end, middle.

RFC 1122: The Internet Architecture (IPv4) specifies that each packet has a unique destination “host” address.

Problems:

- Middle boxes may be subversive
- IPsec and SSL
- Dynamic routing

Cache Effectiveness

Previous work has shown that hit rate increases with population size [Duska et al. 97, Breslau et al. 98].

However, single proxy caches have practical limits.

- Load, network topology, organizational constraints

One technique to scale the client population is to have proxy caches cooperate.
