862eb50cc1b4eefed0c96b2e60d163ec.ppt

- Number of slides: 15

VL-e PoC Introduction
Maurice Bouwhuis
VL-e workshop, April 7th, 2006

Elements in the PoC
The PoC refers to three distinct elements:
• PoC Software Distribution
  § the set of software that is the basis for the applications
  § both grid middleware and virtual lab generic software
• PoC Environment
  § the ensemble of systems running the Distribution
  § including your own desktops or local clusters/storage
• PoC Central Facilities
  § those systems with the PoC Distribution centrally managed for the entire collaboration
  § computing, storage and hosting resources

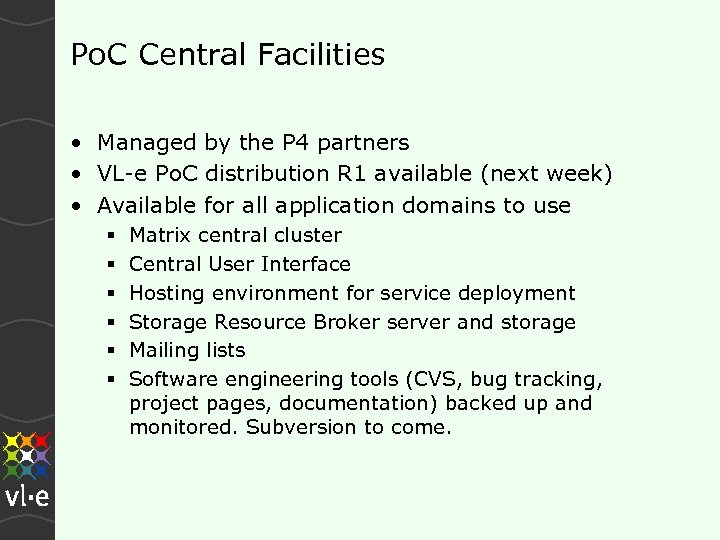

PoC Central Facilities
• Managed by the P4 partners
• VL-e PoC distribution R1 available next week
• Available for all application domains to use
  § Matrix central cluster
  § Central User Interface
  § Hosting environment for service deployment
  § Storage Resource Broker server and storage
  § Mailing lists
  § Software engineering tools (CVS, bug tracking, project pages, documentation)
• Backed up and monitored
• Subversion to come

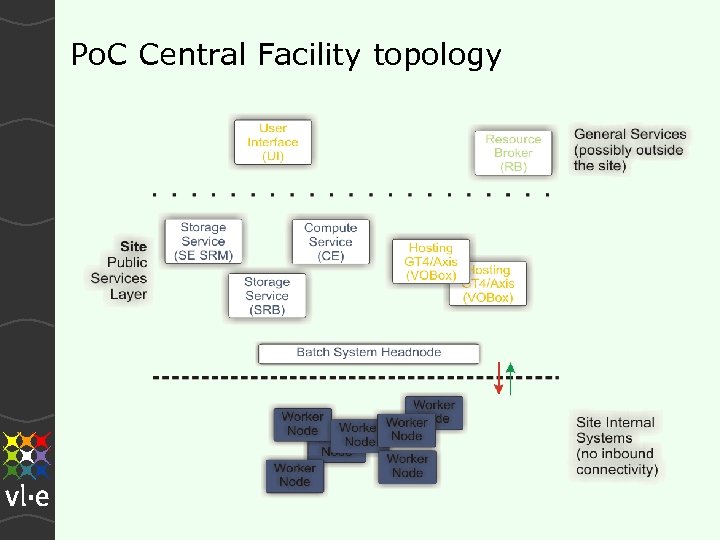

PoC Central Facility topology

Services Hosting
• Usually, a service lives in a container
• The container takes care of
  § translating the incoming SOAP message into appropriate objects (Java objects, C structures, …)
  § enforcing access control
  § invoking methods
  § packaging the results
  § destroying the invoked objects
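The five container duties listed above can be illustrated with a toy dispatcher. This is a minimal sketch, not GT4 or any real container API: the class names `ToyContainer` and `Calculator`, the SOAP-like XML layout (no namespaces, a `service` attribute on the call element) and the per-service user ACL are all hypothetical simplifications chosen only to make each step visible.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Iterable, Set


class Calculator:
    """Hypothetical example service; the container creates one instance per call."""

    def add(self, a: int, b: int) -> int:
        return a + b


class ToyContainer:
    """Toy stand-in for a service container (NOT the GT4 API)."""

    def __init__(self) -> None:
        self.services: Dict[str, type] = {}
        self.acl: Dict[str, Set[str]] = {}  # service name -> allowed users

    def deploy(self, name: str, cls: type, allowed: Iterable[str]) -> None:
        self.services[name] = cls
        self.acl[name] = set(allowed)

    def handle(self, user: str, message: str) -> str:
        # 1. Translate the incoming SOAP-like XML message into Python objects.
        call = ET.fromstring(message).find("Body")[0]
        service_name, method_name = call.get("service"), call.tag
        args = {child.tag: int(child.text) for child in call}
        # 2. Enforce access control.
        if user not in self.acl.get(service_name, set()):
            raise PermissionError(f"{user} may not call {service_name}")
        if method_name.startswith("_"):
            raise PermissionError("private methods cannot be invoked")
        # 3. Invoke the method on a fresh service instance.
        instance = self.services[service_name]()
        result = getattr(instance, method_name)(**args)
        # 4. Package the result as a response message.
        tag = f"{method_name}Response"
        response = f"<Envelope><Body><{tag}>{result}</{tag}></Body></Envelope>"
        # 5. Drop the reference so the per-call instance can be garbage-collected.
        del instance
        return response
```

Usage: after `c.deploy("calc", Calculator, ["alice"])`, a message such as `<Envelope><Body><add service="calc"><a>2</a><b>3</b></add></Body></Envelope>` sent by `alice` returns a response containing `<addResponse>5</addResponse>`, while the same message from any other user raises `PermissionError`.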

Service Deployment constraints on the CF
• A farm of GT4 containers is provided on the CF
  § Based on the concept of “VO Boxes”
  § For now: log in to these boxes via gsissh
  § Not for compute-intensive work
• You cannot run a container continuously on worker nodes
  § No inbound IP connectivity
  § Resource management and utilization
• And you should not want to, because
  § All services in a container contend for CPU and (disk) bandwidth
  § The JVM does not insulate services from each other

Other constraints on the CF
• Worker nodes are allocated in a transient way
  § Jobs run in a one-off scratch directory
  § No shared file system for $HOME (and if it happens to be there, don’t use it!)
  § Jobs will be queued
    o Short jobs get to run faster
    o Small jobs get to run faster
    o Priority depends on your VO affiliation
  § You can connect out, but cannot listen
  § Your job is limited in wall time
• The hosting environment will be available one week after Easter
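To make the queueing rules above concrete, here is a minimal sketch of one possible ordering: VO priority first, then requested wall time (short jobs first), then requested CPUs (small jobs first), with a hard wall-time cap. The VO names, priority values and the 24-hour limit are hypothetical, and real batch schedulers typically use fair-share and backfill policies rather than this strict lexicographic order.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical VO priorities: a lower number means a higher priority.
VO_PRIORITY: Dict[str, int] = {"vo-a": 0, "vo-b": 1}
# Hypothetical wall-time limit in minutes.
MAX_WALLTIME_MIN = 24 * 60


@dataclass
class Job:
    name: str
    vo: str
    walltime_min: int  # requested wall time: shorter jobs run sooner
    cpus: int          # requested CPUs: smaller jobs run sooner


def queue_order(jobs: List[Job]) -> List[str]:
    """Return job names sorted by (VO priority, wall time, CPU count)."""
    for job in jobs:
        if job.walltime_min > MAX_WALLTIME_MIN:
            raise ValueError(f"{job.name} exceeds the wall-time limit")
    ordered = sorted(
        jobs,
        # Unknown VOs get the lowest priority.
        key=lambda j: (VO_PRIORITY.get(j.vo, 99), j.walltime_min, j.cpus),
    )
    return [j.name for j in ordered]
```

For example, with jobs `long` (vo-a, 600 min, 1 CPU), `short` (vo-a, 30 min, 1 CPU), `wide` (vo-a, 30 min, 8 CPUs) and `other` (vo-b, 10 min, 1 CPU), the order is `short`, `wide`, `long`, `other`: VO affiliation dominates, and within a VO short and small jobs go first.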

Data Management Needs
• Automate all aspects of data management
  § Discovery (without knowing the file name)
  § Access (without knowing its location)
  § Retrieval (using your preferred API)
  § Control (without having a personal account at the remote storage system)
  § Performance (use latency management mechanisms to minimize the impact of wide-area networks)
  § Metadata integration
• On VL-e PoC R1: Storage Resource Broker
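The first two needs, discovery by metadata and location-independent access, boil down to a catalog that maps logical names to metadata and physical replicas. The sketch below is a hypothetical toy, not the SRB/MCAT interface: the class names, the site names and the "preferred site" rule (a crude stand-in for latency management) are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Replica:
    site: str  # hypothetical storage site name
    path: str  # physical path at that site


@dataclass
class CatalogEntry:
    logical_name: str
    metadata: Dict[str, str]
    replicas: List[Replica] = field(default_factory=list)


class ToyCatalog:
    """Toy logical name space with metadata (NOT the MCAT API)."""

    def __init__(self) -> None:
        self.entries: Dict[str, CatalogEntry] = {}

    def register(self, entry: CatalogEntry) -> None:
        self.entries[entry.logical_name] = entry

    def discover(self, **query: str) -> List[str]:
        """Discovery: find logical names by metadata, without knowing file names."""
        return sorted(
            name
            for name, entry in self.entries.items()
            if all(entry.metadata.get(k) == v for k, v in query.items())
        )

    def locate(self, logical_name: str, preferred_site: Optional[str] = None) -> Replica:
        """Access: resolve a logical name to a replica, without knowing its location."""
        replicas = self.entries[logical_name].replicas
        for replica in replicas:
            if replica.site == preferred_site:
                return replica  # e.g. the replica closest to the client
        return replicas[0]
```

A client only ever handles logical names such as a hypothetical `/vle/scan001`; which disk or tape system actually holds the bytes stays inside the catalog.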

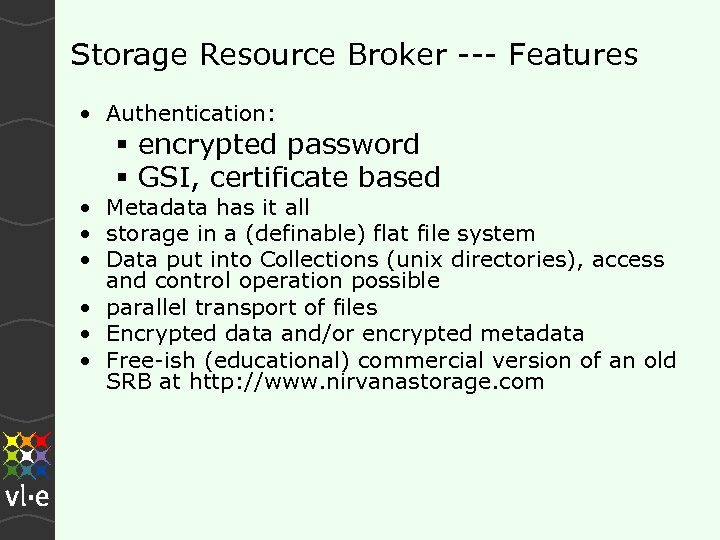

Storage Resource Broker: Features
• Authentication:
  § encrypted password
  § GSI, certificate based
• Metadata has it all
• Storage in a (definable) flat file system
• Data put into Collections (Unix directories); access and control operations possible
• Parallel transport of files
• Encrypted data and/or encrypted metadata
• Free-ish (educational) commercial version of an old SRB at http://www.nirvanastorage.com
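Parallel transport splits one file into byte ranges that travel over several streams at once and are reassembled in order at the destination. The sketch below shows only that idea; it is not SRB's transfer protocol or client API. The `read_range` callable is a hypothetical stand-in for a remote read over one network stream, and a local thread pool plays the role of the parallel streams.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple


def byte_ranges(size: int, streams: int) -> List[Tuple[int, int]]:
    """Split [0, size) into at most `streams` contiguous (start, end) ranges."""
    if size <= 0:
        return []
    if streams < 1:
        raise ValueError("need at least one stream")
    chunk = -(-size // streams)  # ceiling division
    return [(start, min(start + chunk, size)) for start in range(0, size, chunk)]


def parallel_fetch(read_range: Callable[[int, int], bytes], size: int, streams: int = 4) -> bytes:
    """Fetch all byte ranges concurrently, then reassemble them in order."""
    ranges = byte_ranges(size, streams)
    if not ranges:
        return b""
    with ThreadPoolExecutor(max_workers=streams) as pool:
        # pool.map yields results in input order, so the join restores the file.
        parts = list(pool.map(lambda r: read_range(r[0], r[1]), ranges))
    return b"".join(parts)
```

For a 10-byte file and 3 streams, `byte_ranges(10, 3)` gives `[(0, 4), (4, 8), (8, 10)]`; over a wide-area link, the gain comes from several TCP streams filling the pipe that a single stream cannot.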

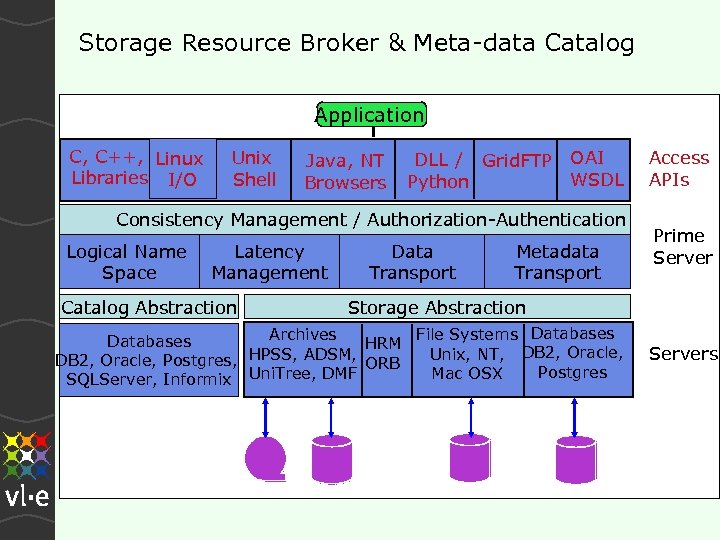

Storage Resource Broker & Meta-data Catalog [architecture diagram: application access APIs (C, C++, Unix shell, Java, Python, browsers, NT DLL, GridFTP, OAI, WSDL) on top of the SRB server layers (consistency management, authorization/authentication, logical name space, latency management, catalog abstraction, data and metadata transport, storage abstraction), backed by archives, file systems and databases such as HPSS, ADSM, UniTree, DMF, Unix, NT, Mac OS X, DB2, Oracle, Postgres, SQLServer and Informix]

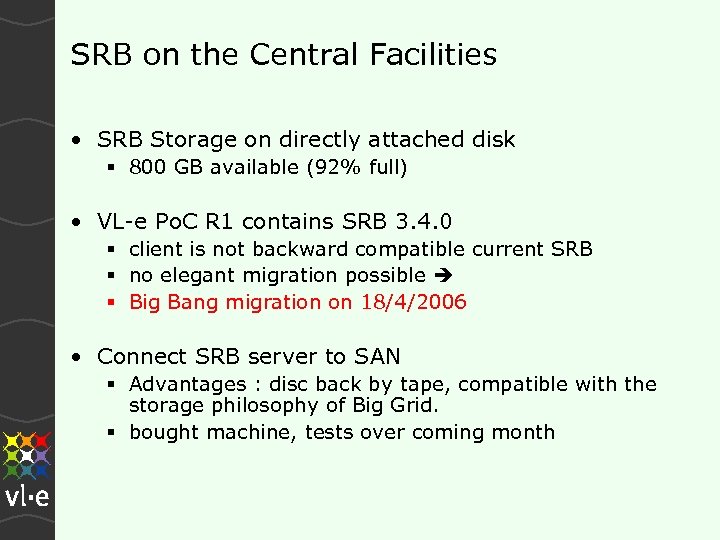

SRB on the Central Facilities
• SRB storage on directly attached disk
  § 800 GB available (92% full)
• VL-e PoC R1 contains SRB 3.4.0
  § the client is not backward compatible with the current SRB
  § no elegant migration possible
  § Big Bang migration on 18/4/2006
• Connect the SRB server to the SAN
  § Advantages: disk backed by tape, compatible with the storage philosophy of Big Grid
  § machine bought, tests over the coming month
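Assuming "800 GB available" refers to the total capacity of the directly attached disk, the 92% fill level leaves only a small margin before the migration:

```latex
\begin{aligned}
\text{used} &= 0.92 \times 800\ \text{GB} = 736\ \text{GB},\\
\text{free} &= 800\ \text{GB} - 736\ \text{GB} = 64\ \text{GB}.
\end{aligned}
```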

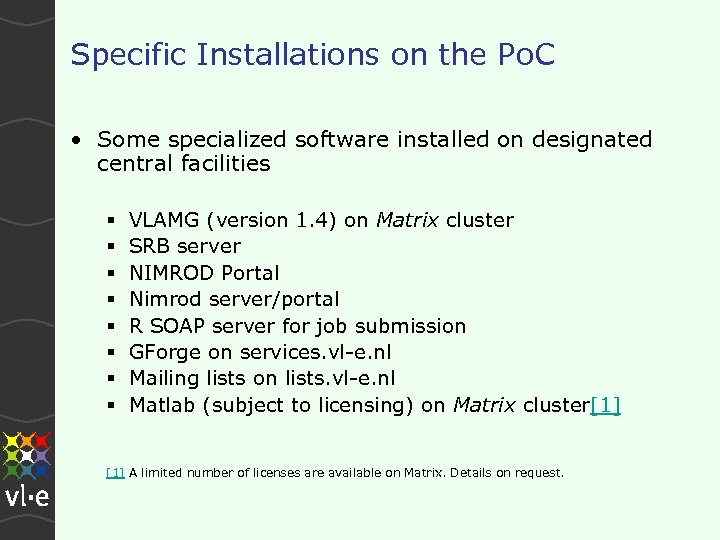

Specific Installations on the PoC
• Some specialized software installed on designated central facilities
  § VLAMG (version 1.4) on the Matrix cluster
  § SRB server
  § NIMROD Portal: Nimrod server/portal
  § R SOAP server for job submission
  § GForge on services.vl-e.nl
  § Mailing lists on lists.vl-e.nl
  § Matlab (subject to licensing) on the Matrix cluster[1]
[1] A limited number of licenses are available on Matrix. Details on request.

Summary
• The VL-e PoC Central Facilities are available to anyone in VL-e
• Information: http://poc.vl-e.nl
• Support: grid.support@{sara,nikhef}.nl

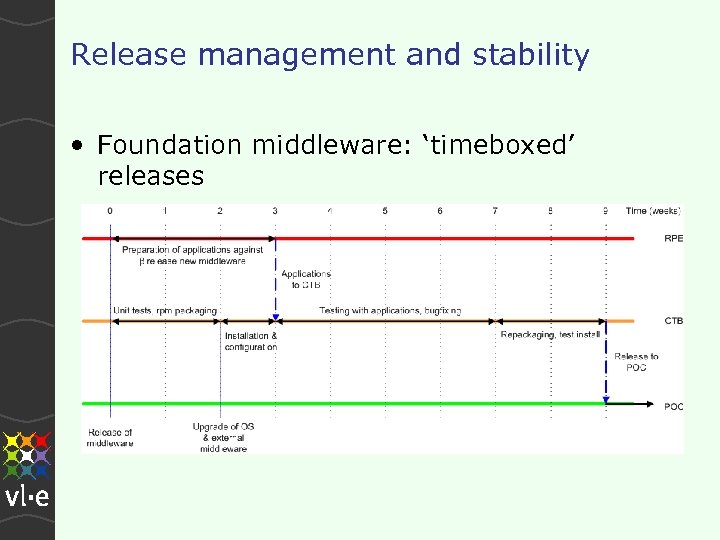

Release management and stability
• Foundation middleware: ‘timeboxed’ releases

High performance/throughput Services
‘I need lots of * for my service’
• Parallelize it
  § Use fine-grained service decomposition
  § Use MPI (e.g. via IBIS)
  § Use dedicated programs for processing
  § Submit via a resource broker to the compute service
• Submit jobs to the compute service to run dedicated GT4 hosting environments with your service
  § Make sure to submit these to the same cluster as the VO Box on which your front-end is running
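The "parallelize it" advice follows a split, process, combine pattern: cut the input into independent slices, hand each slice to a dedicated worker, and merge the partial results. This is a minimal sketch under stated assumptions: the thread pool is a hypothetical local stand-in for jobs submitted to the compute service (it is not MPI, IBIS or a resource broker), and `process_slice` is a placeholder "dedicated program". Python threads will not speed up CPU-bound work, so the sketch shows only the decomposition pattern, not a performance gain.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def split(items: Sequence[int], n_tasks: int) -> List[Sequence[int]]:
    """Fine-grained decomposition: cut the input into at most n_tasks slices."""
    if not items:
        return []
    size = -(-len(items) // n_tasks)  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def process_slice(values: Sequence[int]) -> int:
    """Hypothetical 'dedicated program': here just a sum of squares."""
    return sum(v * v for v in values)


def run_decomposed(
    items: Sequence[int],
    n_tasks: int,
    worker: Callable[[Sequence[int]], int],
    combine: Callable[[List[int]], int],
) -> int:
    """Run each slice as an independent task, then merge the partial results."""
    slices = split(items, n_tasks)
    with ThreadPoolExecutor(max_workers=n_tasks) as pool:
        partials = list(pool.map(worker, slices))
    return combine(partials)
```

For example, `run_decomposed(list(range(1, 101)), 4, process_slice, sum)` gives 338350, the same as computing the sum of squares in one piece; because the slices are independent, each could equally be a separate job on the cluster.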
