6a7761b52707f31d3f6320204a5220ff.ppt

- Number of slides: 47

Virtuoso: Distributed Computing Using Virtual Machines
Peter A. Dinda
Prescience Lab, Department of Computer Science, Northwestern University
http://plab.cs.northwestern.edu

People and Acknowledgements
• Students
  – Ashish Gupta, Ananth Sundararaj, Dong Lu, Jason Skicewicz, Billy Davidson, Andrew Weinrich
• Collaborators
  – In-Vigo project at the University of Florida: Renato Figueiredo, Jose Fortes
• Funder
  – NSF, through several awards

Outline
• Motivation
• Virtuoso Model
• Virtual networking and remote devices
• Information services
• Resource measurement and prediction
• Resource control
• Related work
• Conclusions

R. Figueiredo, P. Dinda, J. Fortes, “A Case For Grid Computing on Virtual Machines”, ICDCS 2003

How do we deliver arbitrary amounts of computational power to ordinary people?

Distributed and Parallel Computing
How do we deliver arbitrary amounts of computational power to ordinary people?
Interactive Applications

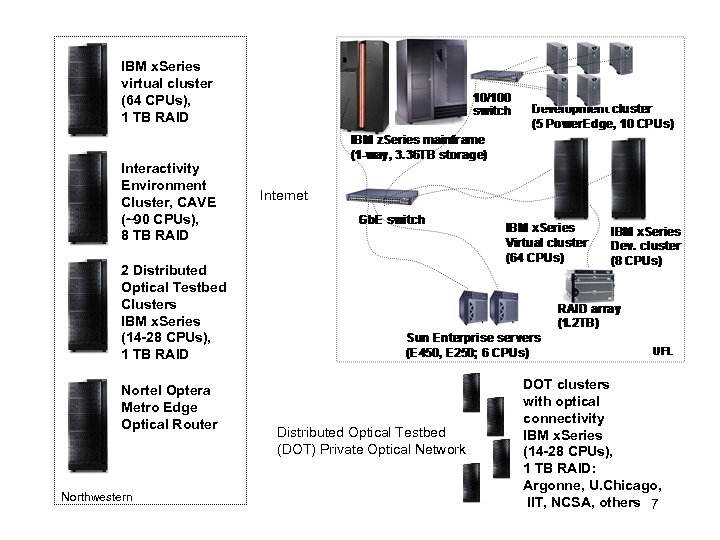

• IBM xSeries virtual cluster (64 CPUs), 1 TB RAID
• Interactivity Environment cluster, CAVE (~90 CPUs), 8 TB RAID
• Internet2
• Distributed Optical Testbed clusters: IBM xSeries (14-28 CPUs), 1 TB RAID
• Nortel Optera Metro Edge Optical Router
• Northwestern Distributed Optical Testbed (DOT) private optical network
• DOT clusters with optical connectivity: IBM xSeries (14-28 CPUs), 1 TB RAID at Argonne, U. Chicago, IIT, NCSA, others

Grid Computing
• “Flexible, secure, coordinated resource sharing among dynamic collections of individuals, institutions, and resources”
  – I. Foster, C. Kesselman, S. Tuecke, “The Anatomy of the Grid: Enabling Scalable Virtual Organizations”, International J. Supercomputer Applications, 15(3), 2001
• Globus, Condor-G, Avaki, EU DataGrid software, …

Complexity from User’s Perspective
• Process or job model
  – Lots of complex state: connections, special shared libraries, licenses, file descriptors
• Operating system specificity
  – Perhaps even version-specific
  – Symbolic supercomputer example
• Need to buy into some “Grid API”
• Install and learn complex Grid software

Users already know how to deal with this complexity at another level

Complexity from Resource Owner’s Perspective
• Install and learn complex Grid software
• Deal with local accounts and privileges
  – Associated with global accounts or certificates
• Protection
• Support users with different OS, library, license, etc. needs

Virtual Machines
• Language-oriented VMs
  – Abstract interpreted machine, JIT compiler, large library
  – Examples: UCSD p-System, Java VM, .NET VM
• Application-oriented VMs
  – Redirect library calls to the appropriate place
  – Examples: Entropia VM
• Virtual servers
  – Kernel makes it appear that a group of processes is running on a separate instance of the kernel
  – Examples: Ensim, Virtuozzo, SODA, …
• Virtual machine monitors (VMMs)
  – Raw machine is the abstraction
  – VM represented by a single image
  – Examples: IBM’s VM, VMware, Virtual PC/Server, Plex86, SIMICS, Hypervisor, DesQView/TaskView, VM/386

VMware GSX VM

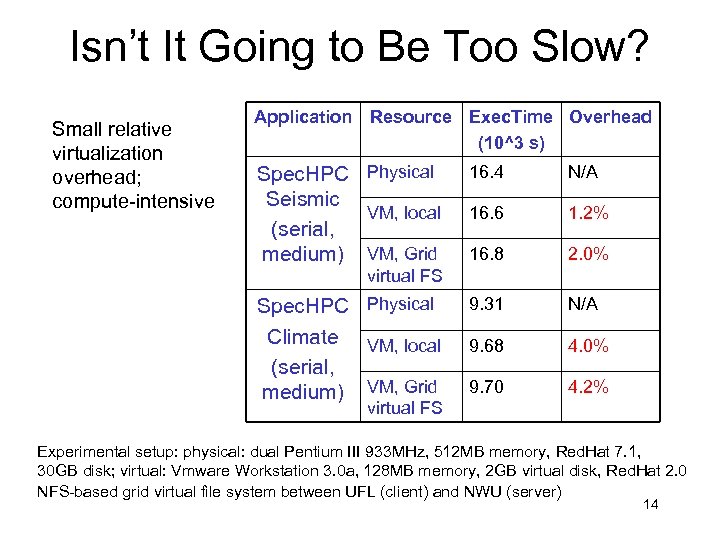

Isn’t It Going to Be Too Slow?
Small relative virtualization overhead for compute-intensive applications

| Application | Resource | Exec. time (10^3 s) | Overhead |
|---|---|---|---|
| SpecHPC Seismic (serial, medium) | Physical | 16.4 | N/A |
| | VM, local | 16.6 | 1.2% |
| | VM, Grid virtual FS | 16.8 | 2.0% |
| SpecHPC Climate (serial, medium) | Physical | 9.31 | N/A |
| | VM, local | 9.68 | 4.0% |
| | VM, Grid virtual FS | 9.70 | 4.2% |

Experimental setup: physical: dual Pentium III 933 MHz, 512 MB memory, RedHat 7.1, 30 GB disk; virtual: VMware Workstation 3.0a, 128 MB memory, 2 GB virtual disk, RedHat 2.0. Grid virtual file system: NFS-based, between UFL (client) and NWU (server).
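The slide does not define the overhead column. Assuming it is the slowdown relative to the physical run, the Climate rows can be checked directly:

```latex
\[
\text{overhead} = \frac{T_{\text{VM}} - T_{\text{phys}}}{T_{\text{phys}}},
\qquad
\frac{9.68 - 9.31}{9.31} \approx 0.040 = 4.0\%,
\qquad
\frac{9.70 - 9.31}{9.31} \approx 0.042 = 4.2\%.
\]
```

The Seismic local row also matches ((16.6 - 16.4)/16.4 ≈ 1.2%). Recomputing the Seismic grid row from the rounded table entries gives about 2.4% rather than 2.0%, presumably because the published percentage was computed from unrounded times.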

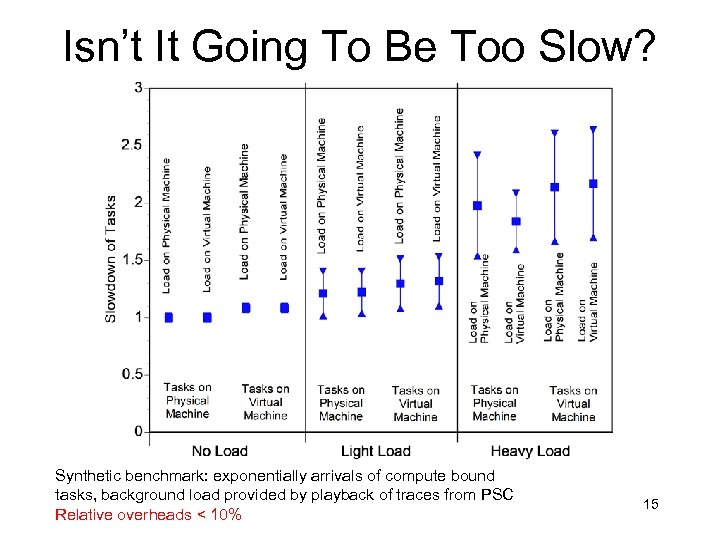

Isn’t It Going To Be Too Slow?
Synthetic benchmark: exponentially distributed arrivals of compute-bound tasks; background load provided by playback of traces from PSC
Relative overheads < 10%

Isn’t It Going To Be Too Slow?
• Virtualized NICs have very similar bandwidth, slightly higher latencies
  – J. Sugerman, G. Venkitachalam, B.-H. Lim, “Virtualizing I/O Devices on VMware Workstation’s Hosted Virtual Machine Monitor”, USENIX 2001
• Disk-intensive workloads (kernel build, web service): 30% slowdown
  – S. King, G. Dunlap, P. Chen, “OS Support for Virtual Machines”, USENIX 2003

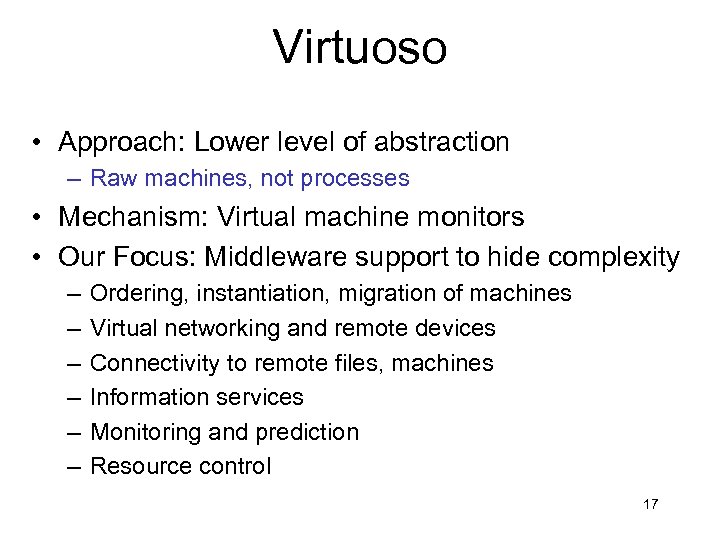

Virtuoso
• Approach: lower level of abstraction
  – Raw machines, not processes
• Mechanism: virtual machine monitors
• Our focus: middleware support to hide complexity
  – Ordering, instantiation, migration of machines
  – Virtual networking and remote devices
  – Connectivity to remote files, machines
  – Information services
  – Monitoring and prediction
  – Resource control

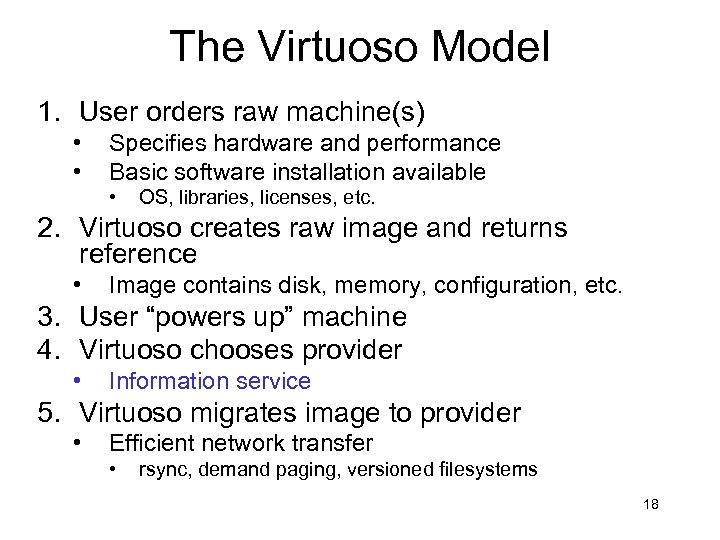

The Virtuoso Model
1. User orders raw machine(s)
   • Specifies hardware and performance
   • Basic software installation available
     – OS, libraries, licenses, etc.
2. Virtuoso creates raw image and returns reference
   • Image contains disk, memory, configuration, etc.
3. User “powers up” machine
4. Virtuoso chooses provider
   • Information service
5. Virtuoso migrates image to provider
   • Efficient network transfer
     – rsync, demand paging, versioned filesystems
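Step 5 leaves the transfer mechanism open (rsync, demand paging, versioned filesystems). As a minimal sketch of the idea behind efficient image transfer, here is a fixed-offset block delta: hash every block of the image and ship only the blocks whose hash differs from the provider's cached copy. This is deliberately simpler than rsync's rolling-checksum algorithm, and the block size and function names are our own assumptions, not Virtuoso's.

```python
from __future__ import annotations

import hashlib

BLOCK_SIZE = 4096  # hypothetical block size; a real system would tune this


def block_digests(image: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """One SHA-256 digest per fixed-size block of a disk image."""
    return [hashlib.sha256(image[i:i + block_size]).digest()
            for i in range(0, len(image), block_size)]


def changed_blocks(old: bytes, new: bytes, block_size: int = BLOCK_SIZE) -> list[int]:
    """Indices of blocks of `new` that the provider's copy `old` does not already hold."""
    old_d = block_digests(old, block_size)
    new_d = block_digests(new, block_size)
    return [i for i, d in enumerate(new_d) if i >= len(old_d) or d != old_d[i]]


def apply_delta(old: bytes, new_len: int, blocks: dict[int, bytes],
                block_size: int = BLOCK_SIZE) -> bytes:
    """Rebuild the new image on the provider from its old copy plus shipped blocks."""
    out = bytearray(old[:new_len].ljust(new_len, b"\0"))
    for i, data in blocks.items():
        out[i * block_size:i * block_size + len(data)] = data
    return bytes(out)


# Usage: one byte changes in a 10,000-byte image, so only block 1 is shipped.
old_image = bytes(10000)
new_image = bytearray(old_image)
new_image[5000] = 1
new_image = bytes(new_image)
to_send = {i: new_image[i * BLOCK_SIZE:(i + 1) * BLOCK_SIZE]
           for i in changed_blocks(old_image, new_image)}
rebuilt = apply_delta(old_image, len(new_image), to_send)
```

Fixed offsets work well for VM disk images, where writes rarely shift data; rsync's rolling checksum matters more when content is inserted and everything after it moves.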

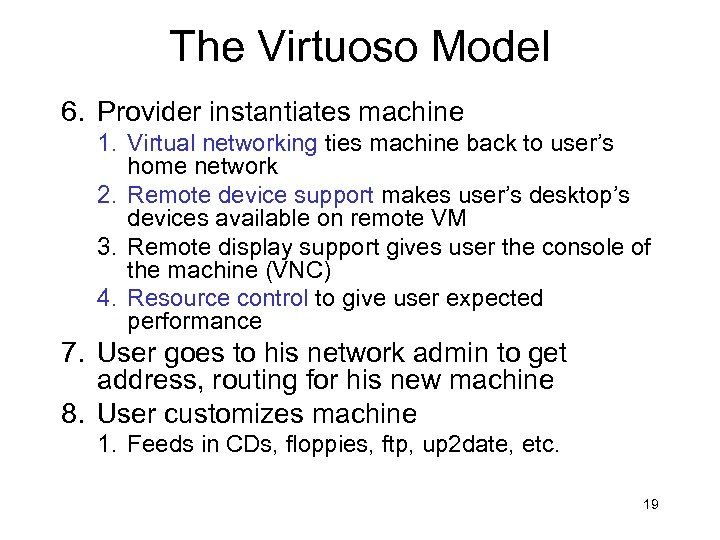

The Virtuoso Model
6. Provider instantiates machine
   1. Virtual networking ties machine back to user’s home network
   2. Remote device support makes the user’s desktop devices available on the remote VM
   3. Remote display support gives user the console of the machine (VNC)
   4. Resource control to give user expected performance
7. User goes to his network admin to get address, routing for his new machine
8. User customizes machine
   1. Feeds in CDs, floppies, ftp, up2date, etc.

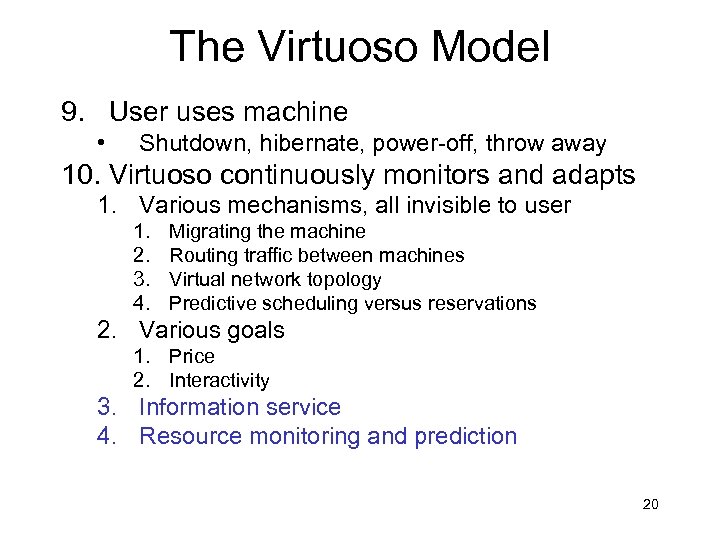

The Virtuoso Model
9. User uses machine
   • Shutdown, hibernate, power-off, throw away
10. Virtuoso continuously monitors and adapts
   1. Various mechanisms, all invisible to user
      1. Migrating the machine
      2. Routing traffic between machines
      3. Virtual network topology
      4. Predictive scheduling versus reservations
   2. Various goals
      1. Price
      2. Interactivity
   3. Information service
   4. Resource monitoring and prediction

Why Virtual Networking?
• A machine is suddenly plugged into your network. What happens?
  – Does it get an IP address?
  – Is it a routable address?
  – Does the firewall let its traffic through?
  – To any port?
How do we make the hostile environments that virtual machines run in as friendly as the user’s LAN?

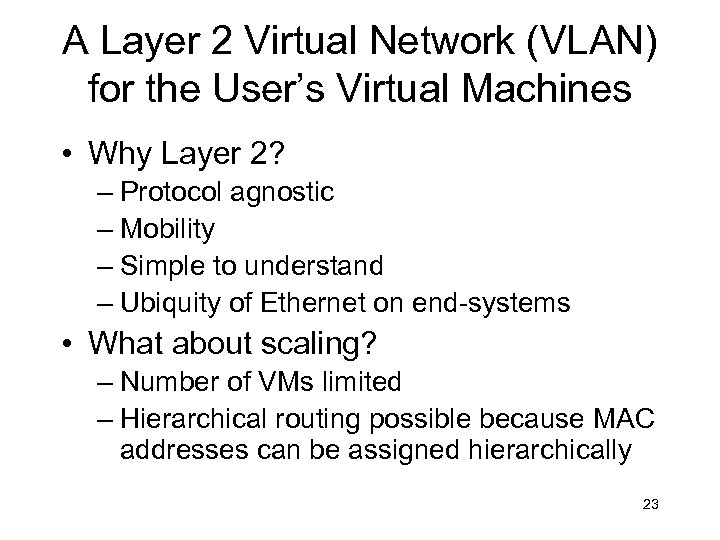

A Layer 2 Virtual Network (VLAN) for the User’s Virtual Machines
• Why Layer 2?
  – Protocol agnostic
  – Mobility
  – Simple to understand
  – Ubiquity of Ethernet on end-systems
• What about scaling?
  – Number of VMs limited
  – Hierarchical routing possible because MAC addresses can be assigned hierarchically
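To make the scaling argument concrete: if MAC addresses are assigned hierarchically, a forwarder needs one table entry per remote site and one per local host, rather than one per VM. The layout below (first octet 0x02 marking a locally administered unicast address, then one site byte, two host bytes, and two VM bytes) is a hypothetical scheme chosen for illustration, not one specified by Virtuoso.

```python
from __future__ import annotations


def make_mac(site: int, host: int, vm: int) -> str:
    """Encode (site, host, vm) as a locally administered unicast MAC.

    Hypothetical layout 02:SS:HH:HH:VV:VV. In the first octet, bit 1 (0x02)
    marks the address as locally administered and bit 0 (multicast) is clear.
    """
    if not (0 <= site < 2**8 and 0 <= host < 2**16 and 0 <= vm < 2**16):
        raise ValueError("field out of range")
    octets = [0x02, site, host >> 8, host & 0xFF, vm >> 8, vm & 0xFF]
    return ":".join(f"{o:02x}" for o in octets)


def parse_mac(mac: str) -> tuple[int, int, int]:
    """Recover (site, host, vm) from a MAC built by make_mac."""
    o = [int(part, 16) for part in mac.split(":")]
    return o[1], (o[2] << 8) | o[3], (o[4] << 8) | o[5]


def next_hop(mac: str, my_site: int, my_host: int,
             site_links: dict[int, str], host_links: dict[int, str]) -> str:
    """Route on address prefixes: by site first, then by host within the site."""
    site, host, _vm = parse_mac(mac)
    if site != my_site:
        return site_links[site]   # one entry per remote site
    if host != my_host:
        return host_links[host]   # one entry per host at this site
    return "local"                # destination VM runs on this host


# Usage: a frame for VM 7 on host 258 at site 3.
dst = make_mac(3, 258, 7)  # "02:03:01:02:00:07"
```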

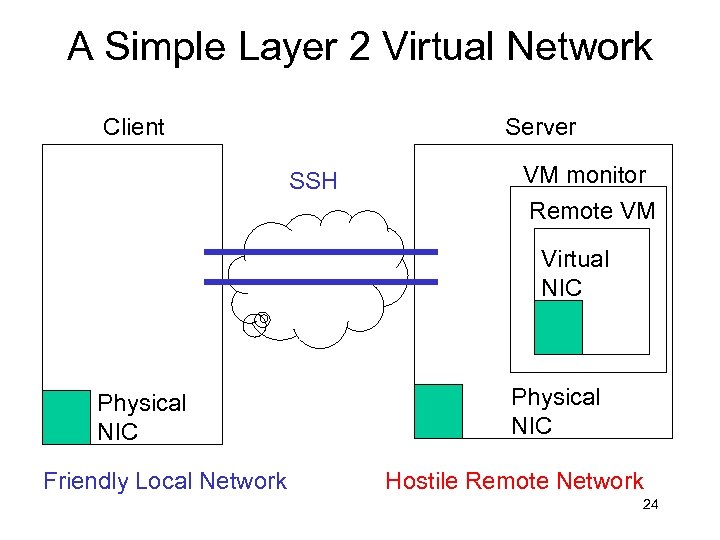

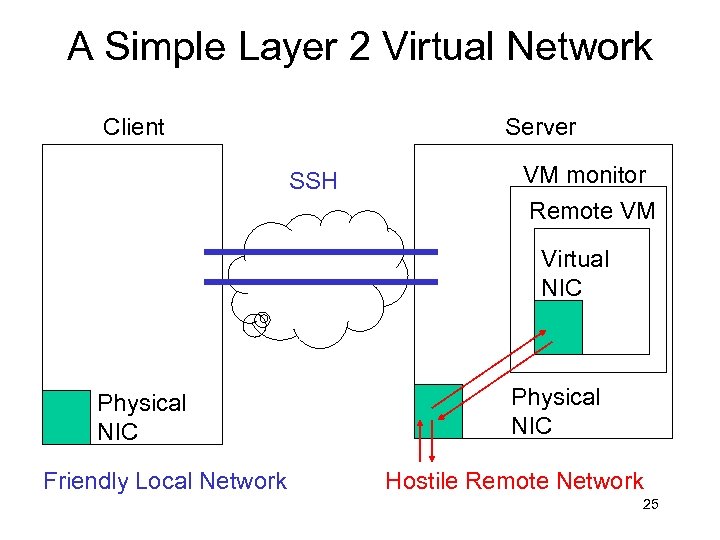

A Simple Layer 2 Virtual Network
[Diagram: Client and Server connected via SSH; VM monitor hosting the remote VM and its virtual NIC; physical NICs on the friendly local network and on the hostile remote network]

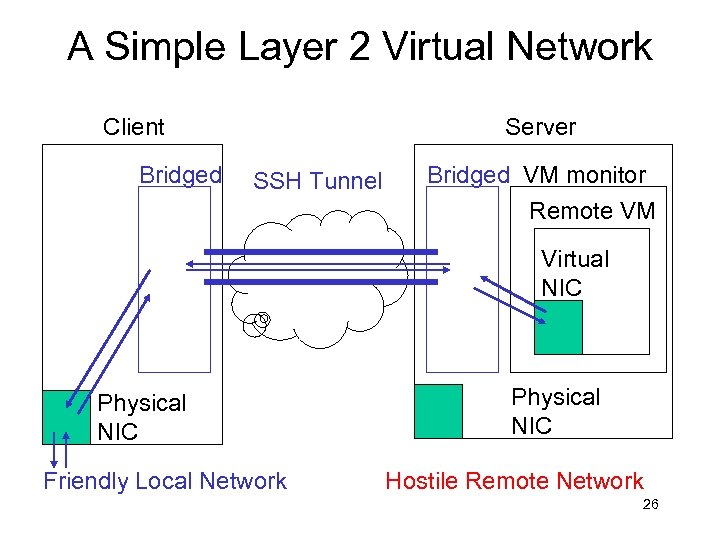

A Simple Layer 2 Virtual Network
[Diagram: Client and Server, each running Bridged, connected by an SSH tunnel; VM monitor hosting the remote VM and its virtual NIC; physical NICs on the friendly local network and on the hostile remote network]
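In this arrangement the Bridged instances presumably carry the VM's Ethernet frames across the SSH tunnel. An SSH (or TCP) connection is a byte stream, so frame boundaries must be recovered on the far side. A minimal sketch, assuming a simple 2-byte length prefix; the slides do not specify Bridged's actual encapsulation, and these names are hypothetical.

```python
from __future__ import annotations

import struct

MAX_FRAME = 65535  # largest length a 2-byte prefix can express


def encode_frame(frame: bytes) -> bytes:
    """Prefix an Ethernet frame with its length (network byte order)."""
    if len(frame) > MAX_FRAME:
        raise ValueError("frame too large")
    return struct.pack("!H", len(frame)) + frame


class FrameDecoder:
    """Reassemble whole frames from arbitrarily split reads of the tunnel."""

    def __init__(self) -> None:
        self._buf = bytearray()

    def feed(self, chunk: bytes) -> list[bytes]:
        """Add newly received bytes; return every frame now complete."""
        self._buf.extend(chunk)
        frames = []
        while len(self._buf) >= 2:
            (length,) = struct.unpack_from("!H", self._buf, 0)
            if len(self._buf) < 2 + length:
                break  # rest of this frame has not arrived yet
            frames.append(bytes(self._buf[2:2 + length]))
            del self._buf[:2 + length]
        return frames


# Usage: two frames sent back to back, received in 5-byte pieces.
frame1 = b"\xff" * 6 + b"\x02" * 6 + b"\x08\x00" + b"payload"
frame2 = b"x" * 60
stream = encode_frame(frame1) + encode_frame(frame2)
decoder = FrameDecoder()
received = []
for i in range(0, len(stream), 5):
    received += decoder.feed(stream[i:i + 5])
```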

An Overlay Network
• Bridged instances and their connections form an overlay network for routing traffic among virtual machines and the user’s home network
• Links can trivially be added or removed
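The overlay can be modeled as a graph whose nodes are Bridged endpoints (the user's home network and the remote VMs) and whose edges are tunnel connections. The sketch below, with hypothetical names, starts from a star centered on the home network, adds and removes links at runtime, and finds fewest-hop routes with breadth-first search. Fewest-hop routing is our simplification; the slides do not state Virtuoso's routing policy.

```python
from __future__ import annotations

from collections import deque


class Overlay:
    """Undirected overlay of Bridged endpoints; links come and go at runtime."""

    def __init__(self) -> None:
        self.adj: dict[str, set[str]] = {}

    def add_link(self, a: str, b: str) -> None:
        self.adj.setdefault(a, set()).add(b)
        self.adj.setdefault(b, set()).add(a)

    def remove_link(self, a: str, b: str) -> None:
        self.adj.get(a, set()).discard(b)
        self.adj.get(b, set()).discard(a)

    def path(self, src: str, dst: str) -> list[str] | None:
        """Fewest-hop path via breadth-first search, or None if unreachable."""
        prev: dict[str, str | None] = {src: None}
        queue = deque([src])
        while queue:
            node = queue.popleft()
            if node == dst:
                hops = []
                cur: str | None = node
                while cur is not None:
                    hops.append(cur)
                    cur = prev[cur]
                return hops[::-1]
            for nxt in sorted(self.adj.get(node, ())):
                if nxt not in prev:
                    prev[nxt] = node
                    queue.append(nxt)
        return None


# Usage: star around the home network, then a direct VM-to-VM link.
net = Overlay()
for vm in ["vm1", "vm2", "vm3"]:
    net.add_link("home", vm)
via_home = net.path("vm1", "vm2")  # ["vm1", "home", "vm2"]
net.add_link("vm1", "vm2")
direct = net.path("vm1", "vm2")    # ["vm1", "vm2"]
```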

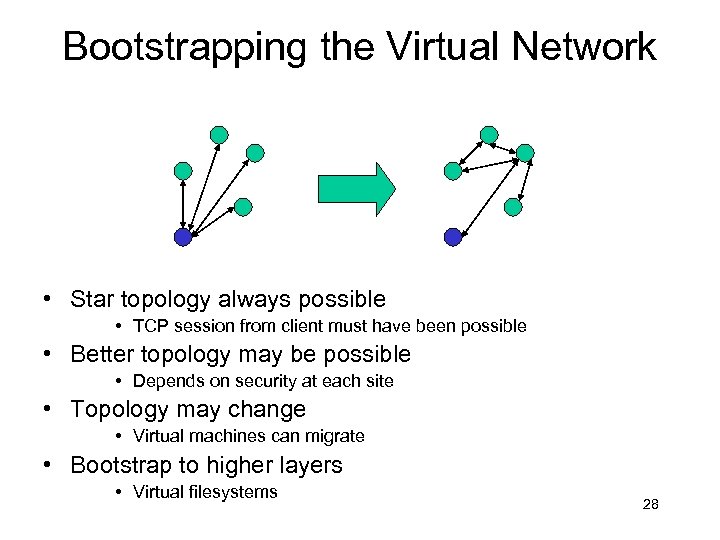

Bootstrapping the Virtual Network
• Star topology always possible
  – TCP session from client must have been possible
• Better topology may be possible
  – Depends on security at each site
• Topology may change
  – Virtual machines can migrate
• Bootstrap to higher layers
  – Virtual filesystems

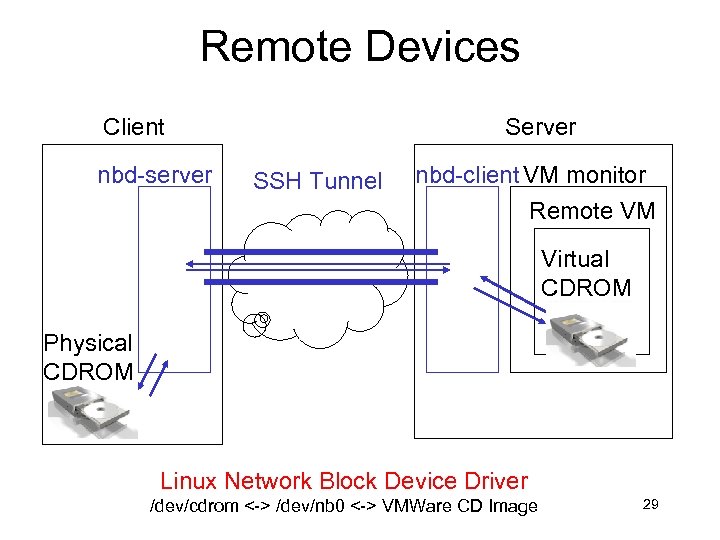

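Remote Devices
[Diagram: nbd-server on the client, connected by an SSH tunnel to nbd-client on the remote host; VM monitor hosting the remote VM, whose virtual CD-ROM is backed by the client’s physical CD-ROM through the Linux Network Block Device driver]
/dev/cdrom <-> /dev/nb0 <-> VMware CD image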
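Here the client's CD-ROM is exported by nbd-server and appears on the remote host as an NBD block device. As a sketch of what crosses the SSH tunnel, the handler below follows the classic NBD wire format as we understand it: a 28-byte request (magic, command, 8-byte handle, offset, length) answered by a 16-byte reply header plus data. Treat the magic numbers and command codes as assumptions to check against the NBD protocol specification; only reads are served, since the device is a CD, and the function names are hypothetical.

```python
from __future__ import annotations

import errno
import io
import struct
from typing import BinaryIO

# Classic NBD constants (verify against the NBD protocol document).
NBD_REQUEST_MAGIC = 0x25609513
NBD_REPLY_MAGIC = 0x67446698
NBD_CMD_READ = 0
NBD_CMD_DISC = 2

REQUEST = struct.Struct("!II8sQI")  # magic, command, handle, offset, length: 28 bytes
REPLY = struct.Struct("!II8s")      # magic, error, handle: 16 bytes


def make_read_request(handle: bytes, offset: int, length: int) -> bytes:
    """Build a READ request as the nbd-client side would send it."""
    return REQUEST.pack(NBD_REQUEST_MAGIC, NBD_CMD_READ, handle, offset, length)


def serve_one(request: bytes, image: BinaryIO) -> bytes | None:
    """Answer one request against a read-only image such as a CD-ROM.

    Returns reply bytes (header, plus data for a successful read), or None
    when the client asks to disconnect.
    """
    magic, cmd, handle, offset, length = REQUEST.unpack(request)
    if magic != NBD_REQUEST_MAGIC:
        raise ValueError("bad request magic")
    if cmd == NBD_CMD_DISC:
        return None
    if cmd != NBD_CMD_READ:
        # A CD is read-only: refuse writes and any other command.
        return REPLY.pack(NBD_REPLY_MAGIC, errno.EROFS, handle)
    image.seek(offset)
    data = image.read(length)
    if len(data) != length:
        # Short read: the request ran past the end of the image.
        return REPLY.pack(NBD_REPLY_MAGIC, errno.EIO, handle)
    return REPLY.pack(NBD_REPLY_MAGIC, 0, handle) + data


# Usage with an in-memory stand-in for /dev/cdrom.
cd_image = io.BytesIO(bytes(range(256)) * 8)
reply = serve_one(make_read_request(b"req00001", 256, 4), cd_image)
```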

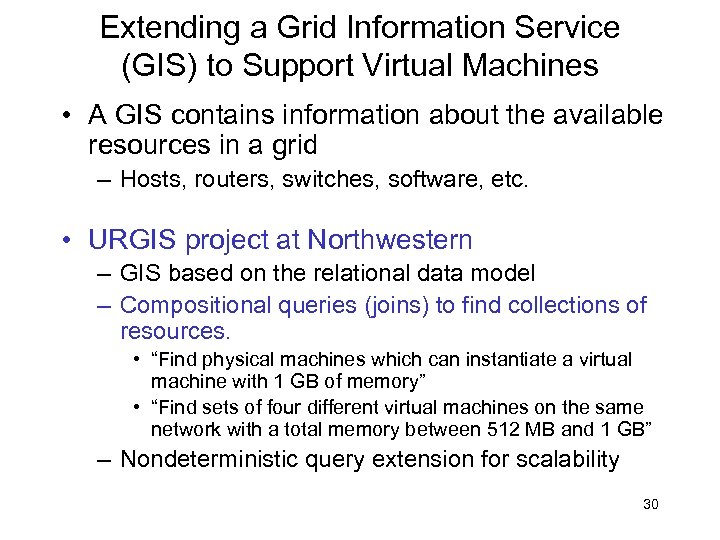

Extending a Grid Information Service (GIS) to Support Virtual Machines
• A GIS contains information about the available resources in a grid
  – Hosts, routers, switches, software, etc.
• RGIS project at Northwestern
  – GIS based on the relational data model
  – Compositional queries (joins) to find collections of resources
    • “Find physical machines which can instantiate a virtual machine with 1 GB of memory”
    • “Find sets of four different virtual machines on the same network with a total memory between 512 MB and 1 GB”
  – Nondeterministic query extension for scalability
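Compositional queries map naturally onto SQL self-joins. A minimal sketch of the second example query using Python's built-in sqlite3, against a hypothetical one-table schema (`vms` with `insertid`, `network`, `mem_mb`) chosen only for illustration; RGIS's real schema is richer.

```python
import sqlite3

# Hypothetical schema and data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE vms (insertid INTEGER PRIMARY KEY, network TEXT, mem_mb INTEGER)")
conn.executemany(
    "INSERT INTO vms (insertid, network, mem_mb) VALUES (?, ?, ?)",
    [(1, "netA", 128), (2, "netA", 128), (3, "netA", 256), (4, "netA", 256),
     (5, "netA", 512), (6, "netB", 128), (7, "netB", 128)],
)

# Four distinct VMs on one network with 512 MB to 1 GB of memory in total.
# Requiring increasing ids lists each set once instead of in every order.
FOUR_VMS_SAME_NET = """
SELECT v1.insertid, v2.insertid, v3.insertid, v4.insertid
FROM vms v1, vms v2, vms v3, vms v4
WHERE v1.insertid < v2.insertid AND v2.insertid < v3.insertid
  AND v3.insertid < v4.insertid
  AND v1.network = v2.network AND v2.network = v3.network
  AND v3.network = v4.network
  AND v1.mem_mb + v2.mem_mb + v3.mem_mb + v4.mem_mb BETWEEN 512 AND 1024
"""
rows = conn.execute(FOUR_VMS_SAME_NET).fetchall()
```

Each additional resource in the requested set adds another self-join, so the space the database must search grows roughly as n^k for k resources drawn from n; this is the cost the nondeterministic query extension is meant to bound.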

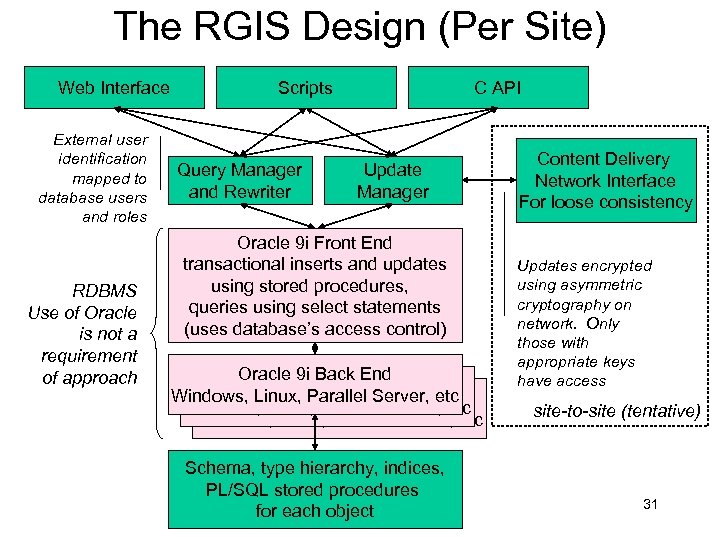

The RGIS Design (Per Site)
• Web interface, scripts, C API
  – External user identification mapped to database users and roles
• Query Manager and Rewriter; Update Manager
• Oracle 9i front end
  – Transactional inserts and updates using stored procedures; queries using select statements (uses the database’s access control)
• Oracle 9i back end (Windows, Linux, Parallel Server, etc.)
  – Schema, type hierarchy, indices, PL/SQL stored procedures for each object
  – RDBMS: use of Oracle is not a requirement of the approach
• Content delivery network interface for loose site-to-site consistency (tentative)
  – Updates encrypted on the network using asymmetric cryptography; only those with appropriate keys have access

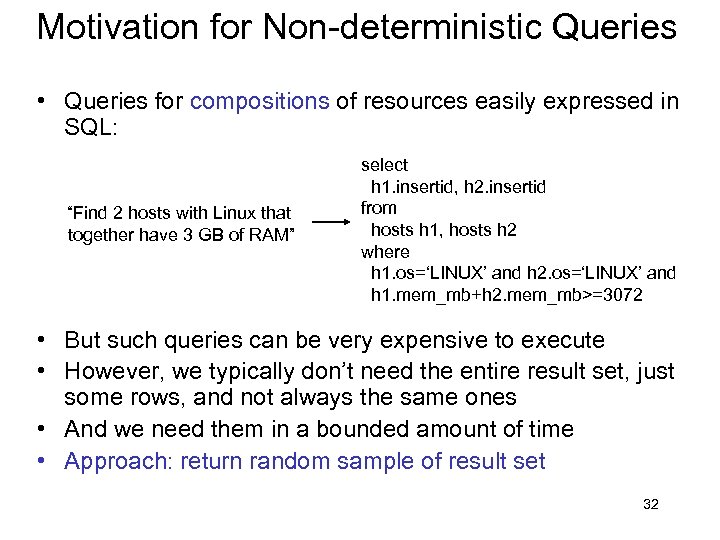

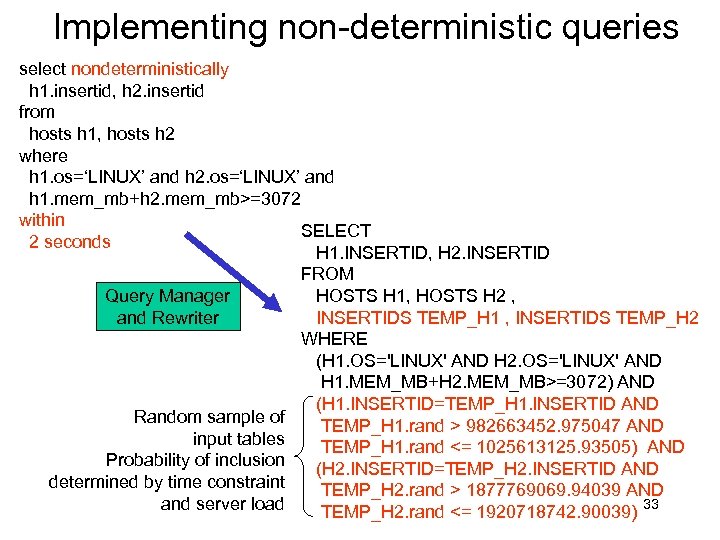

Motivation for Non-deterministic Queries
• Queries for compositions of resources are easily expressed in SQL: “Find 2 hosts with Linux that together have 3 GB of RAM”
  select h1.insertid, h2.insertid from hosts h1, hosts h2 where h1.os='LINUX' and h2.os='LINUX' and h1.mem_mb+h2.mem_mb>=3072
• But such queries can be very expensive to execute
• However, we typically don’t need the entire result set, just some rows, and not always the same ones
• And we need them in a bounded amount of time
• Approach: return a random sample of the result set

Implementing Non-deterministic Queries
Input query:
select nondeterministically h1.insertid, h2.insertid from hosts h1, hosts h2 where h1.os='LINUX' and h2.os='LINUX' and h1.mem_mb+h2.mem_mb>=3072 within 2 seconds
Rewritten by the Query Manager and Rewriter into:
SELECT H1.INSERTID, H2.INSERTID FROM HOSTS H1, HOSTS H2, INSERTIDS TEMP_H1, INSERTIDS TEMP_H2 WHERE (H1.OS='LINUX' AND H2.OS='LINUX' AND H1.MEM_MB+H2.MEM_MB>=3072) AND (H1.INSERTID=TEMP_H1.INSERTID AND TEMP_H1.rand > 982663452.975047 AND TEMP_H1.rand <= 1025613125.93505) AND (H2.INSERTID=TEMP_H2.INSERTID AND TEMP_H2.rand > 1877769069.94039 AND TEMP_H2.rand <= 1920718742.90039)
• Random sample of input tables
• Probability of inclusion determined by time constraint and server load
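In the rewritten query, each input table is joined to a table of random values and restricted to a window of those values. Assuming `rand` is uniform on [0, 2^32), both windows shown have width 42,949,672.96 = 0.01 · 2^32, so each table is sampled with probability 1% and the join examines roughly 0.01% of host pairs. The sketch below mimics that rewrite with sqlite3. The table layout, the random placement of the window, and the simple cost model that turns a deadline into an inclusion probability are our assumptions, not the RGIS rewriter's actual logic (which, per the slide, also considers server load).

```python
from __future__ import annotations

import math
import random
import sqlite3

RAND_RANGE = 2**32  # assumed range of the per-row random values


def inclusion_probability(deadline_s: float, sec_per_pair: float,
                          n1: int, n2: int) -> float:
    """Hypothetical cost model: sampling each table with probability p makes a
    two-way join examine about p*p*n1*n2 row pairs; choose p to fit the deadline."""
    return min(1.0, math.sqrt(deadline_s / (sec_per_pair * n1 * n2)))


def load(conn: sqlite3.Connection, hosts: list[tuple[int, str, int]],
         rng: random.Random) -> None:
    """Create hosts plus an insertids table holding one random value per row."""
    conn.execute("CREATE TABLE hosts (insertid INTEGER PRIMARY KEY, os TEXT, mem_mb INTEGER)")
    conn.execute("CREATE TABLE insertids (insertid INTEGER PRIMARY KEY, rand REAL)")
    conn.executemany("INSERT INTO hosts VALUES (?, ?, ?)", hosts)
    conn.executemany("INSERT INTO insertids VALUES (?, ?)",
                     [(h[0], rng.random() * RAND_RANGE) for h in hosts])


def window(p: float, rng: random.Random) -> tuple[float, float]:
    """Random interval of width p * RAND_RANGE; a row falls inside with probability p."""
    lo = rng.uniform(0.0, RAND_RANGE * (1.0 - p))
    return lo, lo + p * RAND_RANGE


def sampled_pairs(conn: sqlite3.Connection, p: float,
                  rng: random.Random) -> list[tuple[int, int]]:
    """Rewritten two-host query: join only rows whose rand falls in a window."""
    (lo1, hi1), (lo2, hi2) = window(p, rng), window(p, rng)
    return conn.execute(
        """SELECT h1.insertid, h2.insertid
           FROM hosts h1, hosts h2, insertids t1, insertids t2
           WHERE h1.os = 'LINUX' AND h2.os = 'LINUX'
             AND h1.mem_mb + h2.mem_mb >= 3072
             AND h1.insertid = t1.insertid AND t1.rand > ? AND t1.rand <= ?
             AND h2.insertid = t2.insertid AND t2.rand > ? AND t2.rand <= ?""",
        (lo1, hi1, lo2, hi2),
    ).fetchall()


# Usage on a small synthetic host table.
rng = random.Random(7)
hosts = [(i, "LINUX" if i % 3 else "WINDOWS", 512 * (1 + i % 6)) for i in range(1, 61)]
conn = sqlite3.connect(":memory:")
load(conn, hosts, rng)
sample = sampled_pairs(conn, 0.3, rng)  # a random subset of the full answer
```

Every sampled row is a correct answer; only completeness is traded away, which matches the slide's observation that callers usually want some rows, quickly, rather than all of them.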

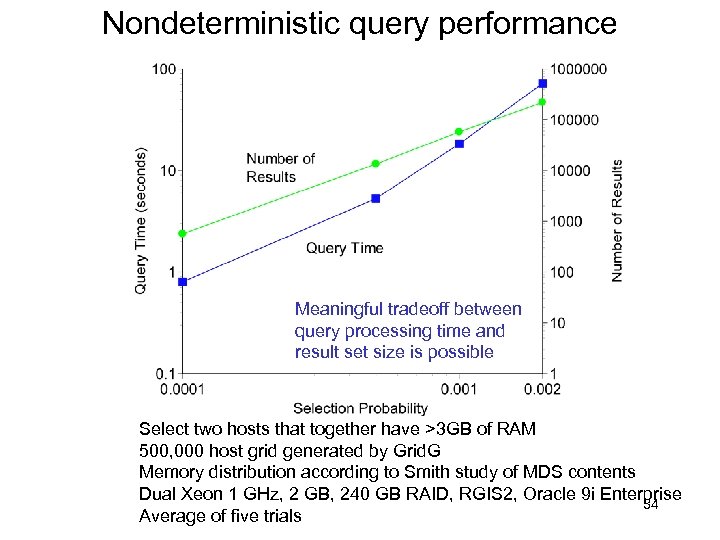

Nondeterministic Query Performance
Meaningful tradeoff between query processing time and result set size is possible
• Query: select two hosts that together have >3 GB of RAM
• 500,000-host grid generated by GridG
• Memory distribution according to Smith study of MDS contents
• Dual Xeon 1 GHz, 2 GB, 240 GB RAID, RGIS 2, Oracle 9i Enterprise
• Average of five trials

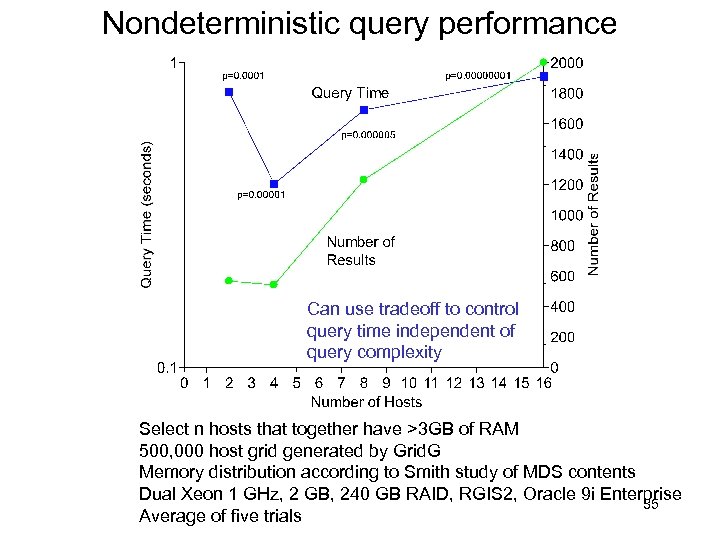

Nondeterministic Query Performance
Can use the tradeoff to control query time independent of query complexity
• Query: select n hosts that together have >3 GB of RAM
• 500,000-host grid generated by GridG
• Memory distribution according to Smith study of MDS contents
• Dual Xeon 1 GHz, 2 GB, 240 GB RAID, RGIS 2, Oracle 9i Enterprise
• Average of five trials

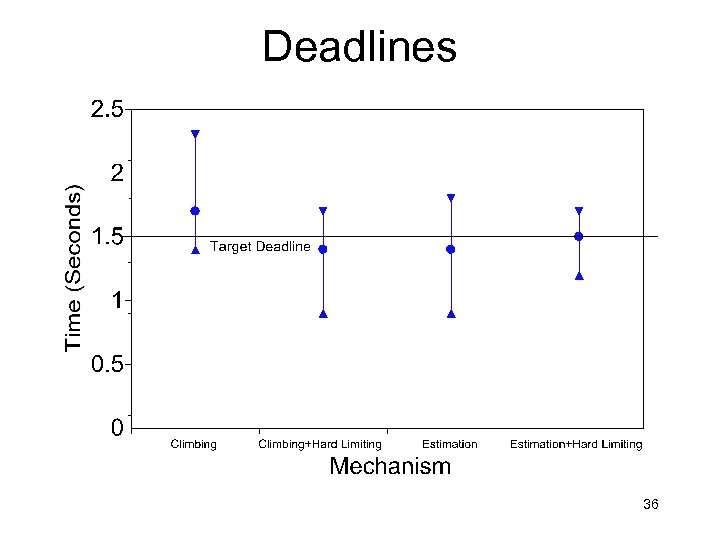

Deadlines

Extending a Grid Information Service (GIS) to Support Virtual Machines
• Virtual indirection
  – Each RGIS object has a unique id
  – Virtualization table associates the unique id of each virtual resource with the unique ids of its constituent physical resources
  – Virtual nature of a resource is hidden unless a query explicitly requests it
• Futures
  – An RGIS object that does not exist yet
  – Futures table of unique ids
  – Future nature of a resource is hidden unless a query explicitly requests it
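One way to realize virtual indirection and futures relationally, sketched with sqlite3. The table and view names and the schema are hypothetical, not RGIS's: a default view hides futures, virtual resources appear as ordinary rows, and only a query that explicitly joins the virtualization table sees which physical resources back a VM.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE resources (id INTEGER PRIMARY KEY, kind TEXT, mem_mb INTEGER);
-- virtual resource id -> ids of the physical resources it is built from
CREATE TABLE virtualization (virtual_id INTEGER, physical_id INTEGER);
-- ids of resources that do not exist yet
CREATE TABLE futures (id INTEGER PRIMARY KEY);
-- Default view: futures hidden; virtual and physical rows look alike.
CREATE VIEW visible_resources AS
  SELECT id, kind, mem_mb FROM resources
  WHERE id NOT IN (SELECT id FROM futures);
""")
conn.executemany("INSERT INTO resources VALUES (?, ?, ?)",
                 [(1, "host", 2048), (2, "host", 1024),
                  (10, "host", 512),   # a VM, presented simply as a host
                  (11, "host", 512)])  # a VM that has not been instantiated yet
conn.executemany("INSERT INTO virtualization VALUES (?, ?)", [(10, 1), (11, 2)])
conn.execute("INSERT INTO futures VALUES (11)")

# An ordinary query sees no futures and cannot tell VMs from physical hosts.
ordinary = conn.execute(
    "SELECT id FROM visible_resources WHERE mem_mb >= 512 ORDER BY id").fetchall()

# A query that explicitly asks which physical resources back VM 10.
backing = conn.execute(
    "SELECT physical_id FROM virtualization WHERE virtual_id = ?", (10,)).fetchall()
```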

Extending a Resource Monitoring and Prediction System to Support Virtual Machines
• Measuring and predicting dynamic resource availability to support adaptation
  – Virtual machine migration
  – Routing on the virtual network
  – Application-level adaptation
• RPS system at Northwestern
  – Host and network measurements for Unix and Windows
  – Emphasis on prediction (wide range of linear and nonlinear models) and communication (wide range of transports)

![RPS Toolkit](https://present5.com/presentation/6a7761b52707f31d3f6320204a5220ff/image-39.jpg)

RPS Toolkit
• Extensible toolkit for implementing resource signal prediction systems [CMU-CS-99-138]
• Growing: RTA, RTSA, wavelets, GUI, etc.
• Easy “buy-in” for users
• C++ and sockets (no threads)
• Prebuilt prediction components
• Libraries (sensors, time series, communication)
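Among the linear models a resource-signal predictor can offer, autoregressive (AR) models are the simplest. The sketch below is a textbook Yule-Walker fit via the Levinson-Durbin recursion plus a one-step-ahead prediction, written for illustration; it is not RPS code and does not reflect RPS's C++ API. The function names and the synthetic AR(1) test signal are assumptions.

```python
from __future__ import annotations

import random


def autocov(x: list[float], max_lag: int) -> list[float]:
    """Biased sample autocovariance for lags 0..max_lag."""
    n = len(x)
    mean = sum(x) / n
    d = [v - mean for v in x]
    return [sum(d[t] * d[t - k] for t in range(k, n)) / n for k in range(max_lag + 1)]


def fit_ar(x: list[float], p: int) -> list[float]:
    """Yule-Walker AR(p) coefficients phi_1..phi_p via Levinson-Durbin."""
    r = autocov(x, p)
    a: list[float] = []
    err = r[0]
    for k in range(1, p + 1):
        acc = r[k] - sum(a[j] * r[k - 1 - j] for j in range(k - 1))
        refl = acc / err  # reflection (partial autocorrelation) coefficient
        a = [a[j] - refl * a[k - 2 - j] for j in range(k - 1)] + [refl]
        err *= 1.0 - refl * refl
    return a


def predict_next(x: list[float], a: list[float]) -> float:
    """One-step-ahead prediction of the next sample, in the signal's units."""
    mean = sum(x) / len(x)
    return mean + sum(c * (x[-1 - j] - mean) for j, c in enumerate(a))


def simulate_ar1(phi: float, n: int, seed: int = 0) -> list[float]:
    """Synthetic AR(1) signal with unit-variance Gaussian noise, for testing."""
    rng = random.Random(seed)
    x = [0.0]
    for _ in range(n - 1):
        x.append(phi * x[-1] + rng.gauss(0.0, 1.0))
    return x


# Usage: the fitted coefficient should land close to the true value 0.8.
series = simulate_ar1(0.8, 5000, seed=1)
coeffs = fit_ar(series, 1)
forecast = predict_next(series, coeffs)
```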

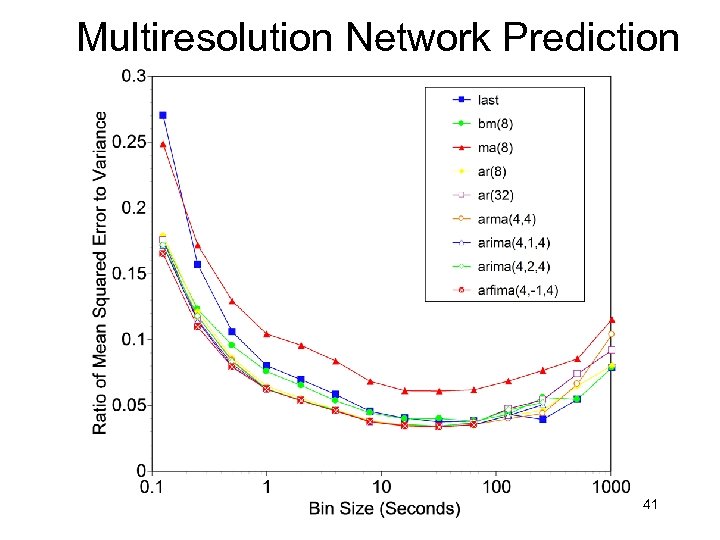

Example: Multiscale Network Prediction
• Large, recent study of predictability
• Hundreds of NLANR and other traces
  – Mostly WANs
• Different resolutions
  – Binning and low-pass filtering via wavelets
• Sweet spot
  – Predictability often maximized at a particular resolution
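The two ways of changing resolution named on the slide are closely related: averaging non-overlapping bins of 2^k samples gives the same result as taking the Haar wavelet approximation (low-pass) k times, up to a scale factor. The sketch below computes a deliberately crude predictability proxy, the lag-1 autocorrelation ρ at each resolution (ρ² is the fraction of variance a one-step linear predictor using only the previous value removes), to show how one might look for a sweet spot. The proxy and function names are ours; the study's own methodology may differ.

```python
from __future__ import annotations


def bin_series(x: list[float], factor: int) -> list[float]:
    """Average non-overlapping groups of `factor` samples, dropping a ragged tail.
    Binning by 2**k matches k levels of Haar approximation up to scaling."""
    n = len(x) // factor
    return [sum(x[i * factor:(i + 1) * factor]) / factor for i in range(n)]


def lag1_autocorr(x: list[float]) -> float:
    """Lag-1 autocorrelation; 0.0 for a constant signal."""
    n = len(x)
    m = sum(x) / n
    d = [v - m for v in x]
    var = sum(v * v for v in d)
    return sum(d[t] * d[t - 1] for t in range(1, n)) / var if var else 0.0


def predictability_by_resolution(x: list[float], levels: int) -> dict[int, float]:
    """Crude proxy: lag-1 autocorrelation of the signal binned at 2**k samples."""
    return {2**k: lag1_autocorr(bin_series(x, 2**k)) for k in range(levels)}


# Usage: a strictly alternating signal is anticorrelated at full resolution
# and becomes constant once pairs are averaged.
alternating = [1.0, -1.0] * 50
profile = predictability_by_resolution(alternating, 2)  # {1: -0.99, 2: 0.0}
```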

Multiresolution Network Prediction

Extending a Resource Prediction System to Support Virtual Machines
• Goal: monitor the physical machine and infer behavior inside the virtual machine
• Current approach: map /proc measurements on the physical machine to the slowdown of resource rates inside the virtual machine
  – ARX models
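ARX (autoregressive with exogenous input) models relate an output signal to its own past and to an external input. A minimal sketch of the simplest such model, y[t] = a·y[t-1] + b·u[t], fitted by ordinary least squares. The choice of one lag of each signal, the reading of u as host load from /proc and y as slowdown inside the VM, and the function names are all assumptions for illustration.

```python
from __future__ import annotations


def fit_arx(y: list[float], u: list[float]) -> tuple[float, float]:
    """Least-squares fit of y[t] = a*y[t-1] + b*u[t] (ARX with one lag each, no intercept).

    Hypothetically, u is host load read from /proc on the physical machine and
    y is the slowdown observed inside the VM.
    """
    rows = [(y[t - 1], u[t], y[t]) for t in range(1, len(y))]
    # Normal equations [s11 s12; s12 s22] [a; b] = [t1; t2].
    s11 = sum(r[0] * r[0] for r in rows)
    s12 = sum(r[0] * r[1] for r in rows)
    s22 = sum(r[1] * r[1] for r in rows)
    t1 = sum(r[0] * r[2] for r in rows)
    t2 = sum(r[1] * r[2] for r in rows)
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        raise ValueError("regressors are collinear; model not identifiable")
    a = (t1 * s22 - t2 * s12) / det
    b = (s11 * t2 - s12 * t1) / det
    return a, b


def predict_arx(y_prev: float, u_now: float, a: float, b: float) -> float:
    """Predicted VM-side value given the previous output and current host input."""
    return a * y_prev + b * u_now


# Usage on synthetic, noise-free data generated with a = 0.5, b = 0.3.
load_u = [((t * 37) % 11) / 5 for t in range(200)]
slowdown = [1.0]
for t in range(1, 200):
    slowdown.append(0.5 * slowdown[-1] + 0.3 * load_u[t])
a_hat, b_hat = fit_arx(slowdown, load_u)  # recovers (0.5, 0.3) up to rounding
```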

Resource Control
• Owner has an interest in controlling how much compute time is given to a virtual machine, and when
• Our approach: a language for expressing these constraints, compiled to real-time schedules, proportional share, etc.
• Very early stages; trying to avoid kernel modifications
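The compilation targets are named but not specified. As an example of one proportional-share target, here is stride scheduling (Waldspurger and Weihl's technique) simulated over discrete CPU quanta for VMs holding tickets. The ticket values and names are hypothetical, and this is not output of the constraint compiler the slide describes.

```python
from __future__ import annotations

import heapq

STRIDE1 = 1 << 20  # large constant so integer strides stay accurate


def stride_schedule(tickets: dict[str, int], quanta: int) -> dict[str, int]:
    """Stride scheduling: each VM advances its 'pass' by STRIDE1 // tickets each
    time it runs, and the VM with the smallest pass runs next (ties by name).
    Over time, quanta are allocated in proportion to tickets."""
    heap = [(STRIDE1 // t, name) for name, t in sorted(tickets.items())]
    heapq.heapify(heap)
    counts = {name: 0 for name in tickets}
    for _ in range(quanta):
        pass_value, name = heapq.heappop(heap)
        counts[name] += 1
        heapq.heappush(heap, (pass_value + STRIDE1 // tickets[name], name))
    return counts


# Usage: an owner grants vm_a three times the share of vm_b.
allocation = stride_schedule({"vm_a": 3, "vm_b": 1}, 100)  # {"vm_a": 75, "vm_b": 25}
```

Unlike lottery scheduling, stride scheduling is deterministic, so the error between actual and entitled allocation stays bounded over any interval, which suits an owner who states hard constraints.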

Related Work
• Collective / Capsule Computing (Stanford)
  – VMM, migration/caching, hierarchical image files
• Denali (U. Washington)
  – Highly scalable VMMs (1000s of VMs per node)
• CoVirt (U. Michigan)
• Xenoserver (Cambridge)
• SODA (Purdue)
  – Virtual server, fast deployment of services
• Internet Suspend/Resume (Intel Labs Pittsburgh)
• Ensim
  – Virtual server, widely used for web site hosting
  – WFQ-based resource control released into the open-source Linux kernel
• Virtuozzo (SWsoft)
  – Ensim competitor
• Available VMMs: IBM’s VM, VMware, Virtual PC/Server, Plex86, SIMICS, Hypervisor, DesQView/TaskView, VM/386

Current Status (at Northwestern)
• Bridged components done
  – Mechanism for virtual networking
  – No policy yet
• Very preliminary system for acquiring and instantiating VMs done
• RGIS schema extensions done
• Work in progress
  – Remote devices (management)
  – Virtual networking (policy + adaptation)
  – VM monitoring using RPS

For More Information
• Prescience Lab (Northwestern University)
  – http://plab.cs.northwestern.edu
• ACIS (University of Florida)
  – http://acis.ufl.edu
