- Number of slides: 53

Virtual Private Clusters: Virtual Appliances and Networks in the Cloud
Renato Figueiredo, ACIS Lab, University of Florida
FutureGrid Team
Advanced Computing and Information Systems laboratory

Outline
- Virtual appliances
- Virtual networks
- Virtual clusters
- Grid appliance and FutureGrid
- Educational uses

What is an appliance?
Physical appliances
- Webster: “an instrument or device designed for a particular use or function”

What is an appliance?
Hardware/software appliances
- TV receiver + computer + hard disk + Linux + user interface
- Computer + network interfaces + FreeBSD + user interface

What is a virtual appliance?
- A virtual appliance packages the software and configuration needed for a particular purpose into a virtual machine “image”
- The virtual appliance has no hardware, just software and configuration
- The image is a (big) file
- It can be instantiated on hardware

Virtual appliance example
- LAMP image: Linux + Apache + MySQL + PHP
- Copy the image and instantiate it on the virtualization layer: a web server
- Repeat: another web server

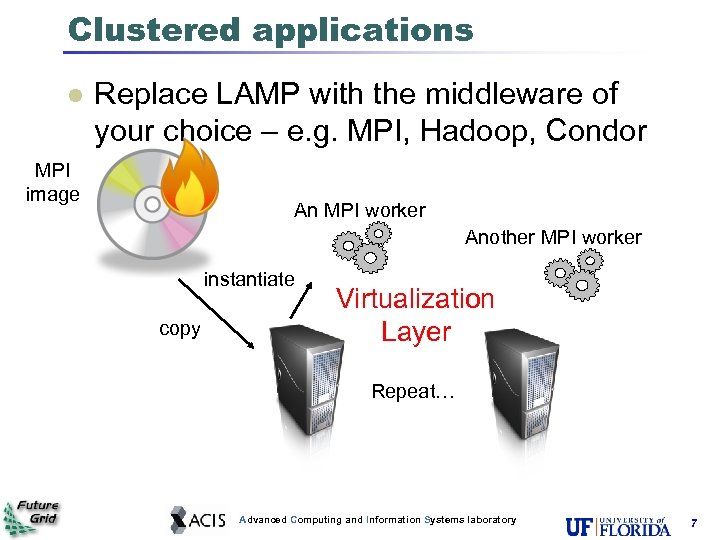

Clustered applications
- Replace LAMP with the middleware of your choice, e.g. MPI, Hadoop, Condor
- Copy the MPI image and instantiate it on the virtualization layer: an MPI worker
- Repeat: another MPI worker

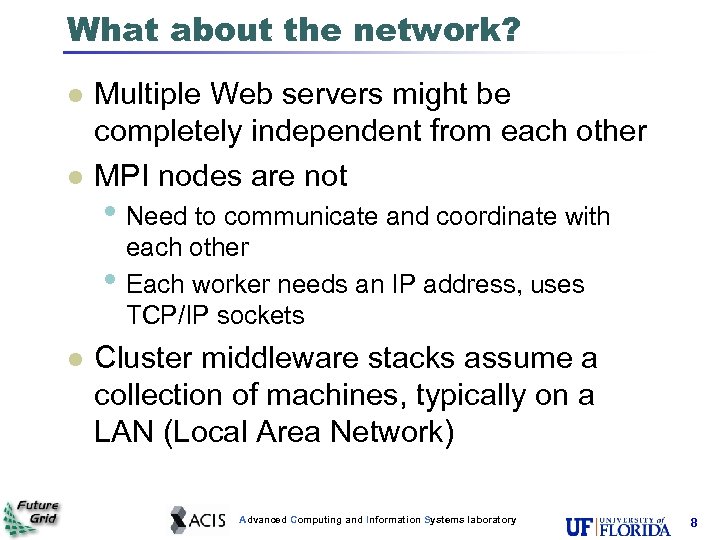

What about the network?
- Multiple web servers might be completely independent from each other
- MPI nodes are not
  - They need to communicate and coordinate with each other
  - Each worker needs an IP address and uses TCP/IP sockets
- Cluster middleware stacks assume a collection of machines, typically on a LAN (Local Area Network)

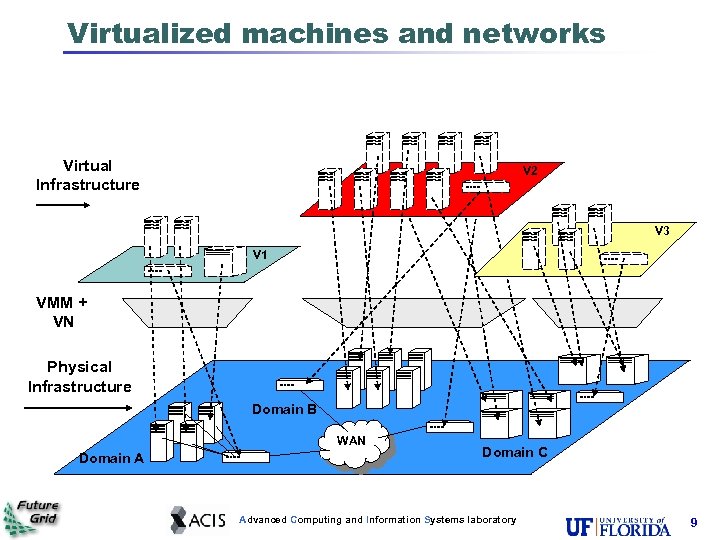

Virtualized machines and networks
[Figure: a virtual infrastructure of VMs V1, V2, V3, provided by VMM + VN, layered over a physical infrastructure spanning Domains A, B, and C connected by a WAN]

Why virtual networks?
- Cloud-bursting:
  - Private enterprise LAN/cluster
  - Run additional worker VMs on a cloud provider
  - Extend the LAN to all VMs for seamless scheduling and data transfers
- Federated “inter-cloud” environments:
  - Multiple private LANs/clusters across various institutions, interconnected
  - Virtual machines can be deployed on different sites and form a distributed virtual private cluster

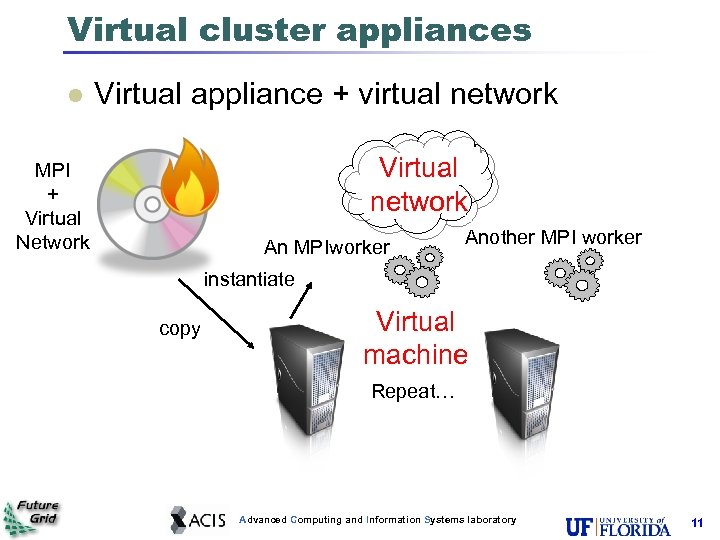

Virtual cluster appliances
- Virtual appliance + virtual network
- Copy an MPI + virtual network image and instantiate it as a virtual machine: an MPI worker
- Repeat: another MPI worker, connected to the first through the virtual network

Where virtualization applies
[Figure: two (virtual) machines, each with software and a network device, connected through a network fabric; virtualization can apply at the endpoints (virtualized endpoints) or in the network fabric itself (virtualized fabric)]

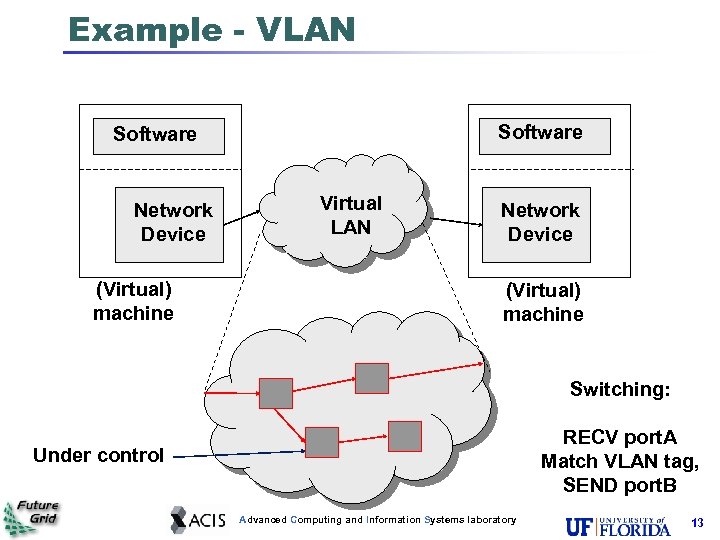

Example - VLAN
[Figure: two (virtual) machines, each with software and a network device, joined by a virtual LAN]
- Switching: RECV on port A; match VLAN tag; SEND on port B
- The switching fabric is under the network operator's control

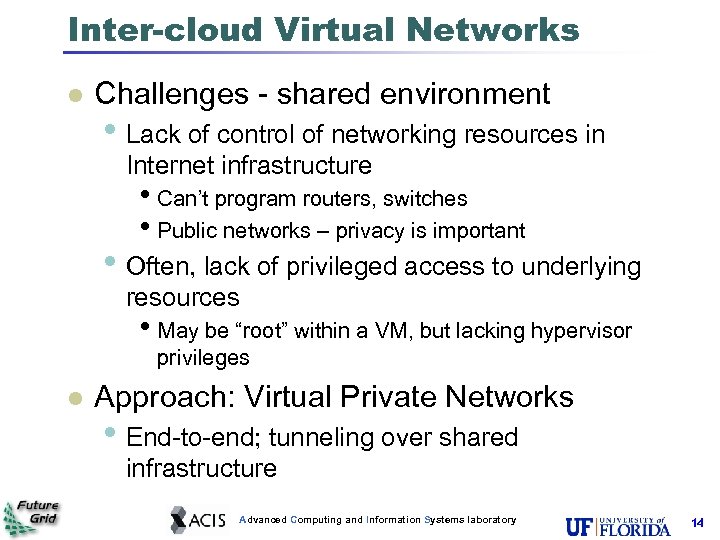
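
The switching step on this slide (RECV on port A, match the VLAN tag, SEND on port B) can be sketched as a toy learning switch. This is an illustrative sketch, not a description of any particular switch: it assumes untagged access ports that each belong to a single VLAN, and the `VlanSwitch` class and its `receive` method are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class VlanSwitch:
    """Toy VLAN-aware learning switch; every port is an access port in one VLAN."""

    port_vlan: dict  # port name -> VLAN ID
    # Learned forwarding table keyed by (VLAN ID, MAC), so VLANs never share entries
    mac_table: dict = field(default_factory=dict)

    def receive(self, in_port: str, src_mac: str, dst_mac: str, vlan_tag: int) -> list:
        """RECV a frame on in_port and return the ports it is SENT out on."""
        if self.port_vlan.get(in_port) != vlan_tag:
            return []  # tag does not match the port's VLAN: drop the frame
        self.mac_table[(vlan_tag, src_mac)] = in_port  # learn where src_mac lives
        out_port = self.mac_table.get((vlan_tag, dst_mac))
        if out_port is not None:
            return [] if out_port == in_port else [out_port]
        # Unknown destination: flood, but only to ports in the same VLAN
        return [p for p, v in self.port_vlan.items() if v == vlan_tag and p != in_port]
```

Because learned entries are keyed by (VLAN ID, MAC), a frame from port A can reach port B in VLAN 10 but is never forwarded to a port in VLAN 20, even when its destination is unknown and it has to be flooded.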

Inter-cloud Virtual Networks
Challenges: shared environment
- Lack of control of networking resources in Internet infrastructure
  - Can’t program routers, switches
- Public networks: privacy is important
- Often, lack of privileged access to underlying resources
  - May be “root” within a VM, but lacking hypervisor privileges
Approach: Virtual Private Networks
- End-to-end; tunneling over shared infrastructure

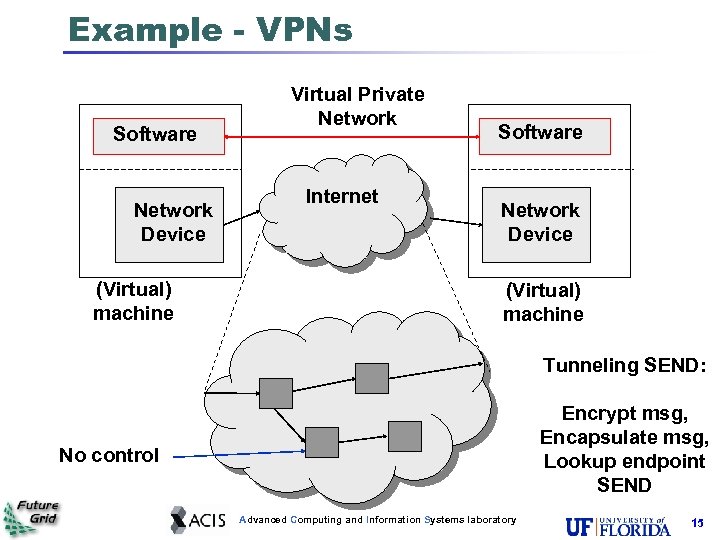

Example - VPNs
[Figure: two (virtual) machines, each with software and a network device, joined by a virtual private network tunneled over the Internet]
- Tunneling SEND: encrypt message, encapsulate message, look up endpoint, SEND
- No control over the Internet infrastructure in between

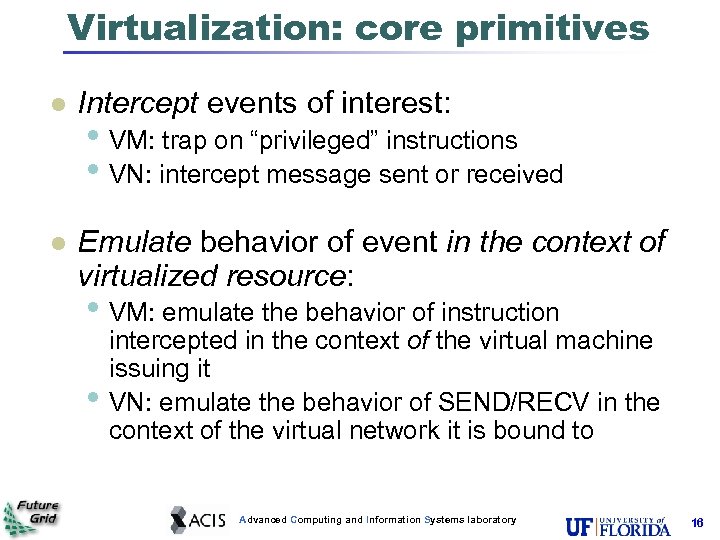
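
The tunneling SEND path listed on this slide (encrypt, encapsulate, look up the endpoint, send) can be sketched as follows. This is a minimal sketch under stated assumptions: the 6-byte header layout, the `TunnelEndpoint` class, and the injected `encrypt` and `transmit` callables are hypothetical, and a real VPN would use an authenticated cipher from a vetted cryptography library rather than whatever callable is passed in.

```python
import socket
import struct

# Hypothetical tunnel header: virtual destination IPv4 address + payload length
TUNNEL_HEADER = struct.Struct("!4sH")


class TunnelEndpoint:
    """Sketch of the SEND path of a VPN tunnel endpoint."""

    def __init__(self, encrypt, endpoints, transmit=None):
        self.encrypt = encrypt      # assumed cipher callable: bytes -> bytes (not implemented here)
        self.endpoints = endpoints  # virtual IP -> (public host, UDP port) of the peer endpoint
        if transmit is None:
            udp = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
            transmit = udp.sendto   # real SEND over the shared network
        self.transmit = transmit

    def send(self, virtual_dst: str, packet: bytes) -> bytes:
        ciphertext = self.encrypt(packet)                              # 1. encrypt msg
        header = TUNNEL_HEADER.pack(socket.inet_aton(virtual_dst), len(ciphertext))
        frame = header + ciphertext                                    # 2. encapsulate msg
        physical_dst = self.endpoints[virtual_dst]                     # 3. look up endpoint
        self.transmit(frame, physical_dst)                             # 4. SEND
        return frame
```

Injecting `transmit` keeps the sketch testable without a network; by default it falls back to a UDP socket's `sendto`, since UDP encapsulation over the public Internet is one common tunneling choice.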

Virtualization: core primitives
- Intercept events of interest:
  - VM: trap on “privileged” instructions
  - VN: intercept messages sent or received
- Emulate the behavior of the event in the context of the virtualized resource:
  - VM: emulate the behavior of the intercepted instruction in the context of the virtual machine issuing it
  - VN: emulate the behavior of SEND/RECV in the context of the virtual network it is bound to
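
A toy illustration of the VM half of these primitives follows, using a made-up three-instruction ISA in which `cli`/`sti` stand in for privileged instructions. It sketches the trap-and-emulate idea only, not how any real hypervisor is built (real VMMs rely on hardware traps rather than interpreting every instruction); the class names are hypothetical.

```python
class GuestVM:
    """Virtual CPU state that the VMM keeps for one guest."""

    def __init__(self, name: str):
        self.name = name
        self.regs = {"r0": 0}
        self.interrupts_enabled = True  # virtual copy of a privileged CPU flag


# Made-up toy ISA: "cli"/"sti" are privileged, "add" is not
PRIVILEGED = {"cli", "sti"}


class ToyVMM:
    def __init__(self):
        self.traps = []  # (guest name, instruction) for every intercepted event

    def run(self, guest: GuestVM, program: list) -> None:
        for op, *args in program:
            if op in PRIVILEGED:
                # Intercept: the privileged instruction traps to the VMM...
                self.traps.append((guest.name, op))
                # ...which emulates it against this guest's virtual state only
                guest.interrupts_enabled = op == "sti"
            elif op == "add":
                # Unprivileged: a real VMM lets this run natively; here it is simply interpreted
                reg, value = args
                guest.regs[reg] += value
            else:
                raise ValueError(f"unknown instruction: {op!r}")
```

The same intercept-then-emulate pattern carries over to a virtual network, with SEND/RECV taking the place of privileged instructions and the virtual network taking the place of the per-guest CPU state.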

Layers
Layer-2 virtualization
- VN supports all protocols layered on the data link
  - Not only IP but also other protocols
- Simpler integration
  - E.g. ARP crosses layers 2 and 3
- Downside: broadcast traffic if the VN spans beyond a LAN
Layer-3 virtualization
- VN supports all protocols layered on IP
  - TCP, UDP, DHCP, …
- Sufficient to handle many environments/applications
- Downside: tied to IP
  - Innovative non-IP network protocols will not work
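
The practical difference between the two layers can be sketched as a filter on Ethernet frames, assuming untagged Ethernet II framing and a layer-3 VN that carries IPv4 only; the function names are hypothetical. A layer-2 VN forwards every frame, whereas a layer-3 VN passes only IP packets and must handle protocols such as ARP locally.

```python
ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_ARP = 0x0806


def ethertype(frame: bytes) -> int:
    """EtherType of an untagged Ethernet II frame (bytes 12-13, big-endian)."""
    return int.from_bytes(frame[12:14], "big")


def vn_accepts(layer: int, frame: bytes) -> bool:
    """Would a VN virtualizing at this layer carry the frame?"""
    if layer == 2:
        # Layer 2: any protocol over the data link (IPv4, ARP, IPv6, non-IP, ...)
        return True
    if layer == 3:
        # Layer 3 (assumed IPv4-only here): only IP packets; ARP must be handled locally
        return ethertype(frame) == ETHERTYPE_IPV4
    raise ValueError("layer must be 2 or 3")
```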

Technologies and Techniques
- Amazon VPC: virtual private network extending from the enterprise to resources at a major IaaS commercial cloud
- OpenFlow: open switching specification allowing programmable network devices through a forwarding instruction set
- OpenStack Quantum: virtual private networking within a private cloud offered by a major open-source IaaS stack
- ViNe: inter-cloud, high-performance, user-level managed virtual network
- IP-over-P2P (IPOP) and GroupVPN: peer-to-peer, inter-cloud, self-organizing virtual network

ViNe
Led by Mauricio Tsugawa and Jose Fortes at UF
Focus:
- Virtual network architecture that allows VNs to be deployed across multiple administrative domains and offers full connectivity among hosts independently of connectivity limitations
Organization modeled on the Internet:
- ViNe routers (VRs) are used by nodes as gateways to overlays, as Internet routers are used as gateways to route Internet messages
- VRs are dynamically reconfigurable
- Manipulating the operating parameters of VRs enables the management of VNs
Slide provided by M. Tsugawa

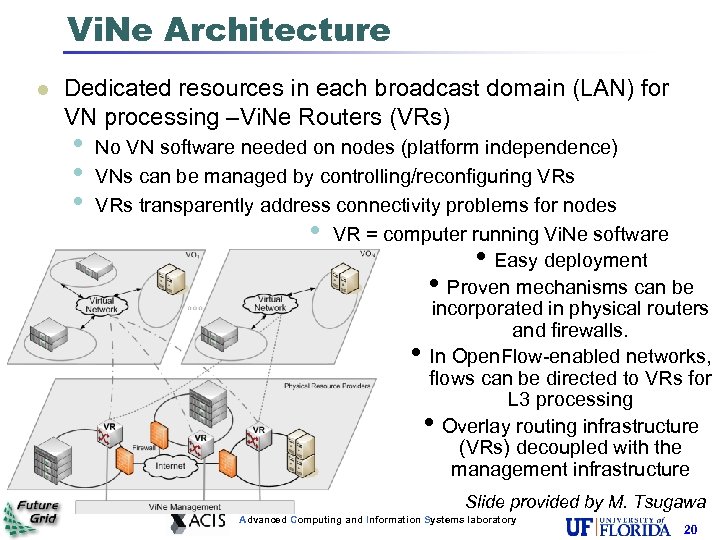

ViNe Architecture
- Dedicated resources in each broadcast domain (LAN) for VN processing: ViNe Routers (VRs)
  - No VN software needed on nodes (platform independence)
  - VNs can be managed by controlling/reconfiguring VRs
  - VRs transparently address connectivity problems for nodes
- VR = computer running ViNe software
  - Easy deployment
- Proven mechanisms can be incorporated in physical routers and firewalls
- In OpenFlow-enabled networks, flows can be directed to VRs for L3 processing
- Overlay routing infrastructure (VRs) decoupled from the management infrastructure
Slide provided by M. Tsugawa

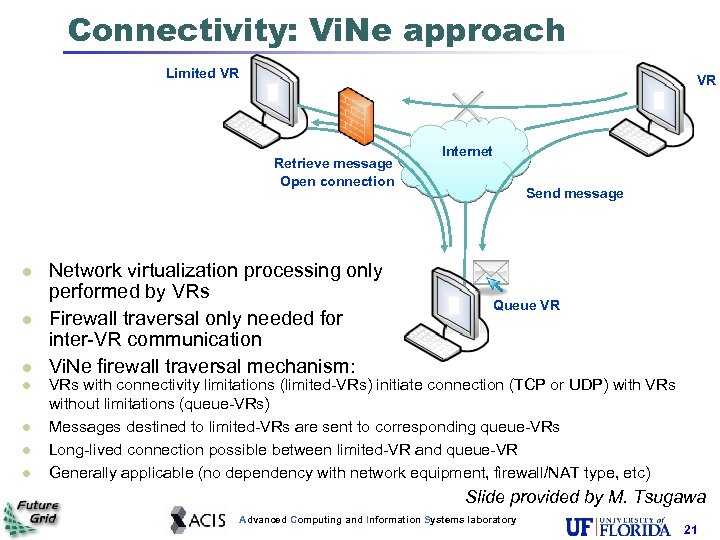

Connectivity: ViNe approach
[Figure: a limited VR opens a connection across the Internet to a queue VR; another VR sends messages to the queue VR, and the limited VR retrieves them]
- Network virtualization processing is performed only by VRs
- Firewall traversal is needed only for inter-VR communication
- ViNe firewall traversal mechanism:
  - VRs with connectivity limitations (limited-VRs) initiate connections (TCP or UDP) with VRs without limitations (queue-VRs)
  - Messages destined to limited-VRs are sent to the corresponding queue-VRs
  - A long-lived connection is possible between a limited-VR and its queue-VR
  - Generally applicable (no dependency on network equipment, firewall/NAT type, etc.)
Slide provided by M. Tsugawa
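
The queue-VR mechanism can be sketched as an in-memory mailbox. This sketch abstracts away the actual TCP/UDP transport and assumes each limited-VR registers with one queue-VR; the `QueueVR` class and the VR names are hypothetical, not ViNe's implementation.

```python
from collections import defaultdict, deque


class QueueVR:
    """VR without connectivity limits that buffers traffic for limited-VRs."""

    def __init__(self):
        self.served = set()               # limited-VRs that connected to this queue-VR
        self.queues = defaultdict(deque)  # limited-VR -> pending messages

    def register(self, limited_vr: str) -> None:
        # The limited-VR initiates this (outbound) connection, so its firewall/NAT allows it
        self.served.add(limited_vr)

    def deliver(self, dst_vr: str, message: bytes) -> None:
        # Other VRs cannot open connections to dst_vr, so they send to its queue-VR
        if dst_vr not in self.served:
            raise KeyError(f"{dst_vr} is not served by this queue-VR")
        self.queues[dst_vr].append(message)

    def retrieve(self, limited_vr: str) -> list:
        # The limited-VR pulls pending messages over its long-lived connection
        pending = self.queues[limited_vr]
        messages = list(pending)
        pending.clear()
        return messages
```

The key property is that every connection is opened outbound by the limited-VR, which is why the approach does not depend on the firewall or NAT type in front of it.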

ViNe routing performance
- L3 processing implemented in Java
- Mechanisms to avoid IP fragmentation
- Use of data structures with low access times in the routing module
- VR routing capacity over 880 Mbps (using modern CPU cores): Gigabit line rate, given 120 Mbps total encapsulation overhead
  - Sufficient in many cases where WAN performance is less than 1 Gbps
- Requires CPUs launched after 2006 (e.g., 2 GHz Intel Core 2 microarchitecture)
Slide provided by M. Tsugawa
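
Reading the two throughput figures together, under the assumption that the encapsulation overhead travels on the same gigabit link as the routed traffic, routed payload plus tunnel headers fills the line:

$$
880~\text{Mbps (routed)} + 120~\text{Mbps (encapsulation overhead)} = 1000~\text{Mbps} = 1~\text{Gbps},
\qquad
\frac{120~\text{Mbps}}{1000~\text{Mbps}} = 12\%
$$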

ViNe Management Architecture
[Figure: a ViNe Central Server exchanges requests and configuration actions with multiple VRs]
- VR operating parameters configurable at run-time
  - Overlay routing tables, buffer size, encryption on/off
  - Autonomic approaches possible
- ViNe Central Server:
  - Oversees global VN management
  - Maintains ViNe-related information
  - Authentication/authorization based on Public Key Infrastructure
  - Remotely issues commands to reconfigure VR operation
Slide provided by M. Tsugawa
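
Run-time reconfiguration by the central server can be sketched as authenticated commands that update a VR's parameter table. This is a hedged sketch: HMAC with a shared key stands in for ViNe's PKI-based authentication (a PKI version would verify a signature with the server's public key instead), and the class names, JSON command format, and default parameter values are hypothetical.

```python
import hashlib
import hmac
import json


class ViNeRouterSketch:
    """VR whose operating parameters can be changed at run time by a trusted server."""

    def __init__(self, trusted_key: bytes):
        self.trusted_key = trusted_key  # HMAC key standing in for PKI-based authentication
        # Hypothetical defaults for the parameters named on the slide
        self.params = {"routing_table": {}, "buffer_size": 65536, "encryption": False}

    def apply(self, command: bytes, tag: bytes) -> bool:
        expected = hmac.new(self.trusted_key, command, hashlib.sha256).digest()
        if not hmac.compare_digest(expected, tag):
            return False  # not issued by the central server: ignore
        update = json.loads(command)
        unknown = set(update) - set(self.params)
        if unknown:
            raise ValueError(f"unknown parameters: {sorted(unknown)}")
        self.params.update(update)
        return True


class CentralServerSketch:
    """Issues authenticated reconfiguration commands to VRs."""

    def __init__(self, key: bytes):
        self.key = key

    def reconfigure(self, vr: ViNeRouterSketch, **changes) -> bool:
        command = json.dumps(changes, sort_keys=True).encode()
        tag = hmac.new(self.key, command, hashlib.sha256).digest()
        return vr.apply(command, tag)
```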

Example: Inter-cloud BLAST
- 3 FutureGrid sites (US): UCSD (San Diego), UF (Florida), UC (Chicago)
- 3 Grid’5000 sites (France): Lille, Rennes, Sophia
- Grid’5000 is fully isolated from the Internet
  - One machine white-listed to access FutureGrid
  - ViNe queue VR (Virtual Router) for other sites
Slide provided by M. Tsugawa

CloudBLAST Experiment
- ViNe connected a virtual cluster across 3 FG and 3 Grid’5000 sites
- 750 VMs, 1500 cores
- Executed BLAST on Hadoop (CloudBLAST) with 870X speedup
Slide provided by M. Tsugawa

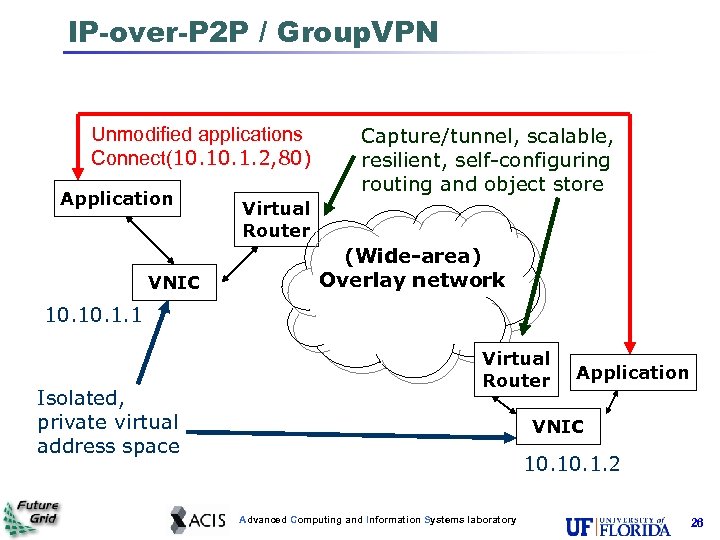

IP-over-P2P / GroupVPN
[Figure: unmodified applications on hosts with virtual addresses 10.1.1 and 10.1.2 in an isolated, private virtual address space; a call such as Connect(10.1.2, 80) leaves the application through a virtual NIC (VNIC), and a virtual router captures and tunnels it over a wide-area overlay network that provides scalable, resilient, self-configuring routing and an object store]

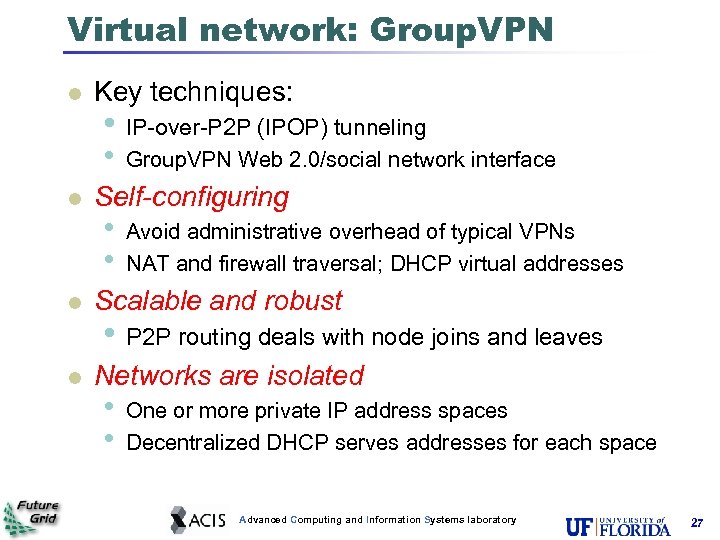

Virtual network: GroupVPN
Key techniques:
- IP-over-P2P (IPOP) tunneling
- GroupVPN Web 2.0/social network interface
Self-configuring:
- Avoid administrative overhead of typical VPNs
- NAT and firewall traversal; DHCP virtual addresses
Scalable and robust:
- P2P routing deals with node joins and leaves
Networks are isolated:
- One or more private IP address spaces
- Decentralized DHCP serves addresses for each space

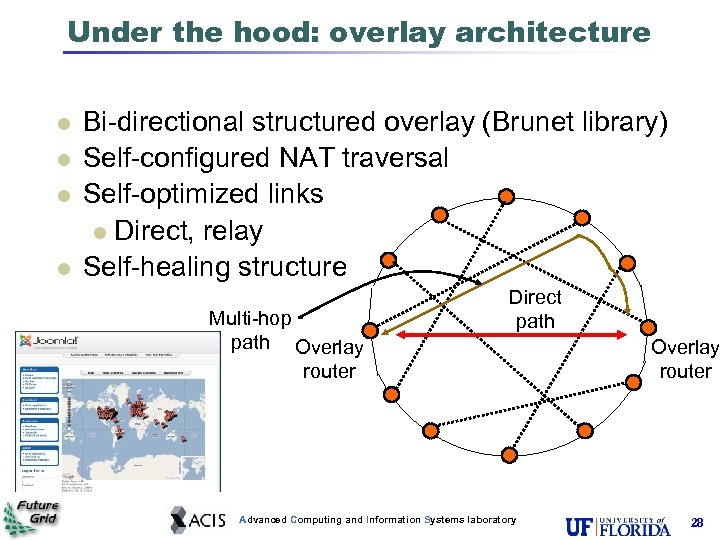

Under the hood: overlay architecture
- Bi-directional structured overlay (Brunet library)
- Self-configured NAT traversal
- Self-optimized links: direct, relay
- Self-healing structure
[Figure: two overlay routers connected by both a multi-hop path and a direct path]

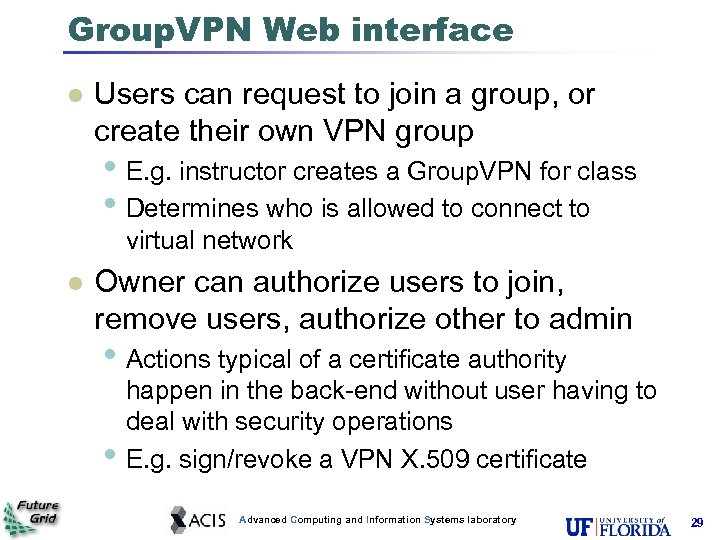

GroupVPN Web interface
- Users can request to join a group, or create their own VPN group
  - E.g. an instructor creates a GroupVPN for a class
- The owner determines who is allowed to connect to the virtual network
  - The owner can authorize users to join, remove users, and authorize others to administer the group
- Actions typical of a certificate authority happen in the back-end, without the user having to deal with security operations
  - E.g. sign/revoke a VPN X.509 certificate

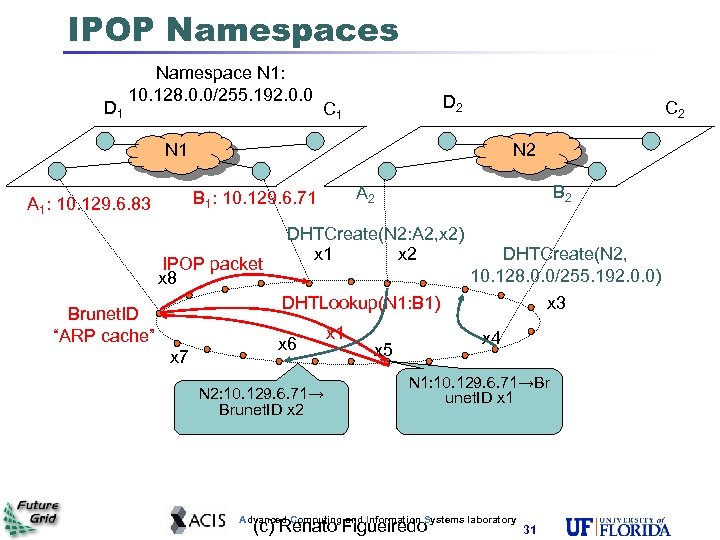

Managing virtual IP spaces
- One P2P overlay supports multiple IPOP namespaces
  - IP routing within a namespace
- Each IPOP namespace: a unique string
  - Distributed Hash Table (DHT) stores the mapping
    - Key = namespace
    - Value = DHCP configuration (IP range, lease, ...)
- IPOP node configured with a namespace
  - Query the namespace for its DHCP configuration
  - Guess an IP address at random within the range
  - Attempt to store it in the DHT
    - Key = namespace+IP
    - Value = IPOPid (160-bit)
- IP->P2P address resolution:
  - Given namespace+IP, look up the IPOPid
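
The namespace and address-claiming steps above can be sketched with a single-process dictionary standing in for the DHT. Assumptions: `ToyDHT.create` fails when the key already exists (so two nodes cannot claim the same address), keys are formed as `namespace:IP`, and the helper names are hypothetical rather than the IPOP API.

```python
import ipaddress
import random


class ToyDHT:
    """Single-process stand-in for the overlay's DHT; create() fails if the key exists."""

    def __init__(self):
        self._store = {}

    def create(self, key, value) -> bool:
        if key in self._store:
            return False
        self._store[key] = value
        return True

    def get(self, key):
        return self._store.get(key)


def join_namespace(dht, namespace, ipop_id, rng=random, max_tries=100):
    """Decentralized DHCP: guess random IPs until one can be claimed in the DHT."""
    net = ipaddress.ip_network(dht.get(namespace)["range"])  # Key=namespace -> DHCP config
    for _ in range(max_tries):
        # Random host address inside the range (skipping network and broadcast addresses)
        ip = net.network_address + rng.randrange(1, net.num_addresses - 1)
        if dht.create(f"{namespace}:{ip}", ipop_id):  # Key=namespace+IP -> IPOPid
            return str(ip)
    raise RuntimeError("address space exhausted or too contended")


def resolve(dht, namespace, ip):
    """IP -> P2P address resolution: namespace+IP -> IPOPid."""
    return dht.get(f"{namespace}:{ip}")
```

For example, after `dht.create("N1", {"range": "10.128.0.0/10", "lease": 3600})` (the 10.128.0.0/255.192.0.0 range written in prefix form), `join_namespace(dht, "N1", node_id)` returns a random unused address in that range, and `resolve(dht, "N1", address)` returns the 160-bit `node_id` that claimed it.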

IPOP Namespaces
[Figure: nodes A1 (10.129.6.83) and B1 (10.129.6.71) belong to namespace N1 (10.128.0.0/255.192.0.0), while nodes A2, B2, C1, C2, D1, D2 share the same overlay; DHTCreate(N2, 10.128.0.0/255.192.0.0) registers namespace N2, DHTCreate(N2:A2, x2) claims an address, and DHTLookup(N1:B1) resolves N1:10.129.6.71 to BrunetID x1, which is kept in an “ARP cache” before an IPOP packet is sent; the same virtual IP 10.129.6.71 maps to BrunetID x2 in N2]
(c) Renato Figueiredo

Optimization: Adaptive shortcuts
- At each node:
  - Count IPOP packets to other nodes
- When the number of packets within an interval exceeds a threshold:
  - Initiate connection setup; create edge
- Limit on number of shortcuts
  - Overhead involved in connection maintenance
  - Drop connections no longer in use

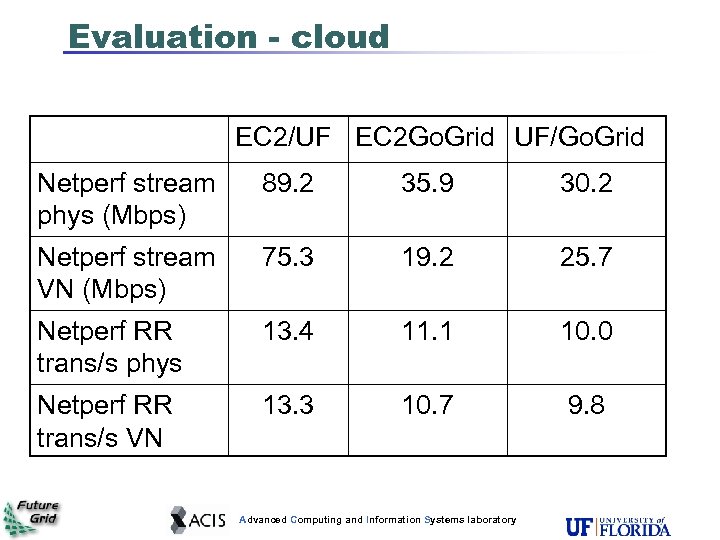
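
The shortcut policy can be sketched per node as follows. The threshold, the cap, the per-interval bookkeeping, and the `connect` callback are assumptions made for illustration; the slide does not specify how intervals or idleness are measured.

```python
from collections import Counter


class ShortcutManager:
    """Per-node sketch of adaptive shortcuts in the overlay."""

    def __init__(self, threshold: int, max_shortcuts: int, connect):
        self.threshold = threshold          # packets per interval that trigger a shortcut
        self.max_shortcuts = max_shortcuts  # cap: every edge costs maintenance traffic
        self.connect = connect              # hypothetical callback that sets up an overlay edge
        self.counts = Counter()             # packets per destination in this interval
        self.shortcuts = {}                 # peer -> packets sent over the shortcut this interval

    def on_packet(self, dst: str) -> None:
        self.counts[dst] += 1
        if dst in self.shortcuts:
            self.shortcuts[dst] += 1
        elif self.counts[dst] > self.threshold and len(self.shortcuts) < self.max_shortcuts:
            self.connect(dst)               # initiate connection setup; create edge
            self.shortcuts[dst] = 1         # the triggering packet counts as use

    def end_interval(self) -> None:
        # Drop shortcuts that carried no packets during the interval, then reset counters
        for peer in [p for p, n in self.shortcuts.items() if n == 0]:
            del self.shortcuts[peer]
        for peer in self.shortcuts:
            self.shortcuts[peer] = 0
        self.counts.clear()
```

With `threshold=3` and `max_shortcuts=1`, a fourth packet to one peer creates its shortcut, a second busy peer is refused while the cap is reached, and the first shortcut is dropped after a full interval with no traffic.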

Evaluation - cloud

| Metric | EC2/UF | EC2/GoGrid | UF/GoGrid |
|---|---|---|---|
| Netperf stream, physical (Mbps) | 89.2 | 35.9 | 30.2 |
| Netperf stream, VN (Mbps) | 75.3 | 19.2 | 25.7 |
| Netperf RR, physical (trans/s) | 13.4 | 11.1 | 10.0 |
| Netperf RR, VN (trans/s) | 13.3 | 10.7 | 9.8 |
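
The evaluation is easier to read as the virtual network's result relative to the physical network's. The snippet below only re-derives that ratio from the measured values; it reads the middle column as EC2/GoGrid, and the variable and function names are illustrative.

```python
# Values from the evaluation table: (physical, virtual network) per site pair;
# the middle column label is read as EC2/GoGrid
stream_mbps = {"EC2/UF": (89.2, 75.3), "EC2/GoGrid": (35.9, 19.2), "UF/GoGrid": (30.2, 25.7)}
rr_trans_per_s = {"EC2/UF": (13.4, 13.3), "EC2/GoGrid": (11.1, 10.7), "UF/GoGrid": (10.0, 9.8)}


def vn_percent_of_physical(table: dict) -> dict:
    """VN result as a percentage of the physical-network result, per site pair."""
    return {pair: round(100 * vn / phys, 1) for pair, (phys, vn) in table.items()}


print(vn_percent_of_physical(stream_mbps))
print(vn_percent_of_physical(rr_trans_per_s))
```

This gives roughly 84%, 53%, and 85% of physical stream throughput, while request/response rates stay within about 4% of the physical network.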

Grid appliance - virtual clusters
- Same image, per-group VPNs
- A Hadoop + virtual network image and GroupVPN credentials (from the Web site) are copied and instantiated as a virtual machine: a Hadoop worker with virtual IP 10.1.1 (DHCP)
- Repeat: another Hadoop worker with virtual IP 10.1.2 (DHCP)

Grid appliance clusters
Virtual appliances
- Encapsulate software environment in image
  - Virtual disk file(s) and virtual hardware configuration
The Grid appliance
- Encapsulates cluster software environments
  - Current examples: Condor, MPI, Hadoop
- Homogeneous images at each node
- Virtual network connecting nodes forms a cluster
- Deploy within or across domains

Grid appliance internals
- Host O/S: Linux
- Grid/cloud stack: MPI, Hadoop, Condor, …
- Glue logic for zero-configuration:
  - Automatic DHCP address assignment
  - Multicast DNS (Bonjour, Avahi) resource discovery
  - Shared data store: Distributed Hash Table
  - Interaction with VM/cloud

One appliance, multiple hosts
- Allow the same logical cluster environment to instantiate on a variety of platforms
  - Local desktop, clusters; FutureGrid; Amazon EC2; Science Clouds…
- Avoid dependence on host environment
  - Make minimum assumptions about VM and provisioning software
    - Desktop: 1 image, VMware, VirtualBox, KVM
    - Para-virtualized VMs (e.g. Xen) and cloud stacks: need to deal with idiosyncrasies
  - Minimum assumptions about networking
    - Private, NATed Ethernet virtual network interface

Configuration framework
- At the end of GroupVPN initialization:
  - Each node of a private virtual cluster gets a DHCP address on the virtual tap interface
  - The result is a barebones cluster
- Additional configuration is required depending on the middleware
  - Which node is the Condor negotiator? The Hadoop front-end? Which nodes are in the MPI ring?
- Key frameworks used:
  - IP multicast discovery over GroupVPN
    - Front-end queries for all IPs listening in GroupVPN
  - Distributed hash table
    - Advertise (put key, value), discover (get key)
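
DHT-based advertise/discover can be sketched as follows: each node puts its virtual IP under a role key, and the front-end gets that key to assemble, for instance, an MPI host list. The store is an in-memory stand-in whose `put` appends rather than overwrites; the key format, role names, and helper functions are hypothetical.

```python
import ipaddress
from collections import defaultdict


class ToyConfigStore:
    """Single-process stand-in for a DHT whose put() appends values under a key."""

    def __init__(self):
        self._data = defaultdict(list)

    def put(self, key: str, value: str) -> None:  # advertise
        if value not in self._data[key]:
            self._data[key].append(value)

    def get(self, key: str) -> list:  # discover
        return list(self._data.get(key, []))


def advertise_role(store: ToyConfigStore, group: str, role: str, virtual_ip: str) -> None:
    """Run on each node at boot, once GroupVPN has assigned its DHCP address."""
    store.put(f"{group}:{role}", virtual_ip)


def mpi_hostfile(store: ToyConfigStore, group: str) -> str:
    """Run on the front-end: one line per advertised MPI worker, in address order."""
    workers = store.get(f"{group}:mpi-worker")
    return "\n".join(sorted(workers, key=ipaddress.ip_address))
```

Sorting by `ipaddress.ip_address` rather than by string keeps 10.1.1.2 ahead of 10.1.1.10 in the generated host list.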

Configuring and deploying groups
- Generate virtual floppies
  - Through the GroupVPN Web interface
- Deploy appliance image(s)
  - FutureGrid (Nimbus/Eucalyptus), EC2
  - GUI or command line tools
  - Use APIs to copy virtual floppy to image
- Submit jobs; terminate VMs when done

Demonstration
- Pre-instantiated VM to save us time:
  - `cloud-client.sh --conf alamo.conf --run --name grid-appliance-2.05.03.gz --hours 24`
- Connect to VM
  - `ssh root@VMip`
- Check virtual network interface
  - `ifconfig`
- Ping other VMs in the virtual cluster
- Submit Condor job

Use case: Education and Training
- Importance of experimental work in systems research
  - Needs to be addressed in education as well
  - Complement to fundamental theory
- FutureGrid: a testbed for experimentation and collaboration
- Education and training contributions:
  - Lower barrier to entry: pre-configured environments, zero-configuration technologies
  - Community/repository of hands-on executable environments: develop once, share and reuse

Educational appliances in FutureGrid
A flexible, extensible platform for hands-on, lab-oriented education on FutureGrid
Executable modules: virtual appliances
• Deployable on FutureGrid resources
• Deployable on other cloud platforms, as well as virtualized desktops
Community sharing: Web 2.0 portal, appliance image repositories
• An aggregation hub for executable modules and documentation

Support for classes on FutureGrid
Classes are set up and managed using the FutureGrid portal
Project proposal: can be a class, workshop, short course, or tutorial
• Needs to be approved by the FutureGrid project to become active
Users can be added to a project
• Users create accounts using the portal
• Project leaders can authorize them to gain access to resources
Students can then interactively use FG resources (e.g., to start VMs)

Use of FutureGrid in classes
Cloud computing/distributed systems classes
• U. of Florida, U. Central Florida, U. of Puerto Rico, Univ. of Piemonte Orientale (Italy), Univ. of Mostar (Bosnia and Herzegovina)
Distributed scientific computing
Tutorials, workshops:
• Louisiana State University
• Big Data for Science summer school
• A cloudy view on computing
• SC'11 tutorial: Clouds for science
• Science Cloud Summer School

Thank you!
More information:
• http://www.futuregrid.org
• http://grid-appliance.org
This document was developed with support from the National Science Foundation (NSF) under Grant No. 0910812 to Indiana University for "FutureGrid: An Experimental, High-Performance Grid Test-bed." Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the NSF.

Local appliance deployments
Two possibilities:
• Share our “bootstrap” infrastructure, but run a separate GroupVPN
  • Simplest to set up
• Deploy your own “bootstrap” infrastructure
  • More work to set up, especially if across multiple LANs
  • Potential for faster connectivity

PlanetLab bootstrap
Shared virtual network bootstrap
• Runs 24/7 on 100s of machines on the public Internet
• Connects machines across multiple domains, behind NATs

PlanetLab bootstrap: approach
Create a GroupVPN and a GroupAppliance on the Grid appliance Web site
Download the configuration floppy
Point users to the interface; allow users you trust into the group
Trusted users can download configuration floppies and boot up appliances

Private bootstrap: general approach
Good choice for single-domain pools
Create a GroupVPN and a GroupAppliance on the Grid appliance Web site
Deploy a small IPOP/GroupVPN bootstrap P2P pool
• Can be on a physical machine, or an appliance
• Detailed instructions at grid-appliance.org
The remaining steps are the same as for the shared bootstrap

Connecting external resources
GroupVPN can run directly on a physical machine, if desired
• Provides a VPN network interface
• Useful, for example, if you already have a local Condor pool
  • Can “flock” to Archer
• Also allows you to install the Archer stack directly on a physical machine if you wish
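Flocking from an existing local Condor pool to another pool is controlled through HTCondor configuration. The submit host lists the remote pools' central managers in FLOCK_TO. The remote pool lists the submit hosts it accepts in FLOCK_FROM. The Python below is a minimal sketch that generates those two configuration lines. The hostnames are hypothetical placeholders; Archer's actual central manager address is not given here. A working setup also needs security and authorization settings, which depend on the HTCondor version and are not shown.

```python
def flock_to_config(remote_central_managers: list[str]) -> str:
    """Config line for a local submit (schedd) host that should flock to remote pools."""
    if not remote_central_managers:
        raise ValueError("need at least one remote central manager")
    return "FLOCK_TO = " + ", ".join(remote_central_managers) + "\n"


def flock_from_config(local_submit_hosts: list[str]) -> str:
    """Config line for the remote pool, naming the submit hosts allowed to flock in."""
    if not local_submit_hosts:
        raise ValueError("need at least one submit host")
    return "FLOCK_FROM = " + ", ".join(local_submit_hosts) + "\n"


if __name__ == "__main__":
    # Hypothetical hostnames, for illustration only.
    print(flock_to_config(["archer-cm.example.org"]), end="")
    print(flock_from_config(["submit.mylab.example.edu"]), end="")
```

With GroupVPN running on the physical submit host, these hosts can be reached over the VPN interface rather than the public network.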

FutureGrid example: Eucalyptus
Example using Eucalyptus (or ec2-run-instances on Amazon EC2):
euca-run-instances ami-fd4aa494 -f floppy.zip --instance-type m1.large -k keypair
• ami-fd4aa494: image ID on the Eucalyptus server
• -f floppy.zip: GroupVPN floppy image
• -k keypair: SSH public key to log in to the instance
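To launch several appliances from a script, you can build the same command as an argument vector. The Python below is a minimal sketch that mirrors the euca-run-instances call above. It makes the tool name a parameter so the EC2 variant can be used instead. The function name is hypothetical. The option meanings follow the slide: -f supplies the GroupVPN floppy file, which these tools pass as instance user data. The command is printed, not executed; the subprocess call is left commented out.

```python
import shlex


def run_instances_argv(image_id: str, floppy: str, instance_type: str, keypair: str,
                       tool: str = "euca-run-instances") -> list[str]:
    """Argument vector mirroring the slide's euca-run-instances call.

    -f passes the GroupVPN floppy file as user data, --instance-type picks
    the VM size, and -k names the SSH key pair used to log in to the instance.
    """
    return [tool, image_id, "-f", floppy, "--instance-type", instance_type, "-k", keypair]


if __name__ == "__main__":
    argv = run_instances_argv("ami-fd4aa494", "floppy.zip", "m1.large", "keypair")
    print(shlex.join(argv))
    # To actually launch (requires euca2ools and cloud credentials):
    # import subprocess; subprocess.run(argv, check=True)
```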

Where to go from here?
Tutorials on the FutureGrid and Grid appliance Web sites for various middleware stacks
• Condor, MPI, Hadoop
A community resource for educational virtual appliances
• Success hinges on users effectively getting involved
• If you are happy with the system, let others know!
• Contribute your own content: virtual appliance images, tutorials, etc.
