06e13c014cbff556e385884d213b1e8e.ppt

- Number of slides: 26

VESPA: Portable, Scalable, and Flexible FPGA-Based Vector Processors

Peter Yiannacouras, J. Gregory Steffan, Jonathan Rose (Univ. of Toronto)

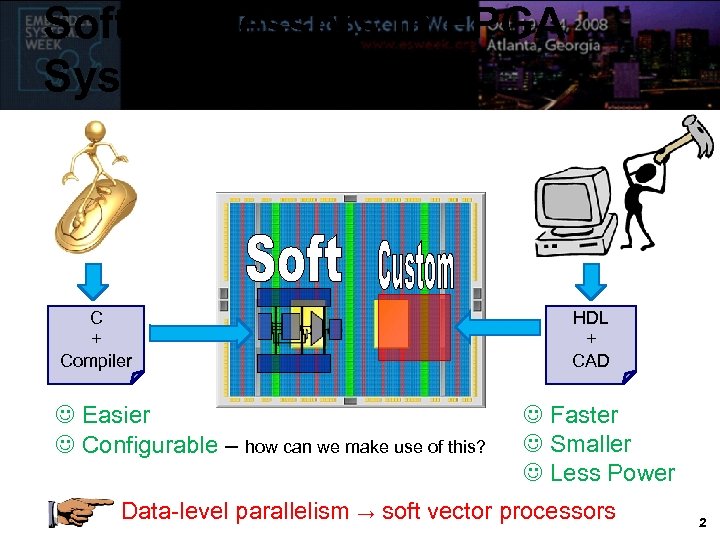

Soft Processors in FPGA Systems

- C + compiler on a soft processor: easier, configurable. How can we make use of this?
- HDL + CAD: faster, smaller, less power
- Data-level parallelism → soft vector processors


Vector Processing Primer

```
// C code
for (i = 0; i < 16; i++)
    b[i] += a[i];

// Vectorized code
set    vl, 16
vload  vr0, b
vload  vr1, a
vadd   vr0, vr1
vstore vr0, b
```

Each vector instruction holds many units of independent operations: the single vadd performs b[0]+=a[0], b[1]+=a[1], up to b[15]+=a[15]. With 1 vector lane, these 16 operations execute one after another.


Vector Processing Primer (continued)

With 16 vector lanes, the same vectorized code executes all 16 independent additions of the vadd in parallel: a 16x speedup. Soft vector processors are 1) portable, 2) flexible, and 3) scalable.

Soft Vector Processor Benefits

1. Portable
   - SW: agnostic to the HW implementation
   - HW: can be implemented on any FPGA architecture
2. Flexible
   - Many parameters to tune (by the end user, not the vendor), e.g. number of lanes, width of lanes, etc.
3. Scalable
   - SW: applies to any code with data-level parallelism
   - HW: the number of lanes can grow with the capacity of the device, so parallelism can scale with Moore's law

How would this fit in with the current FPGA design flow?

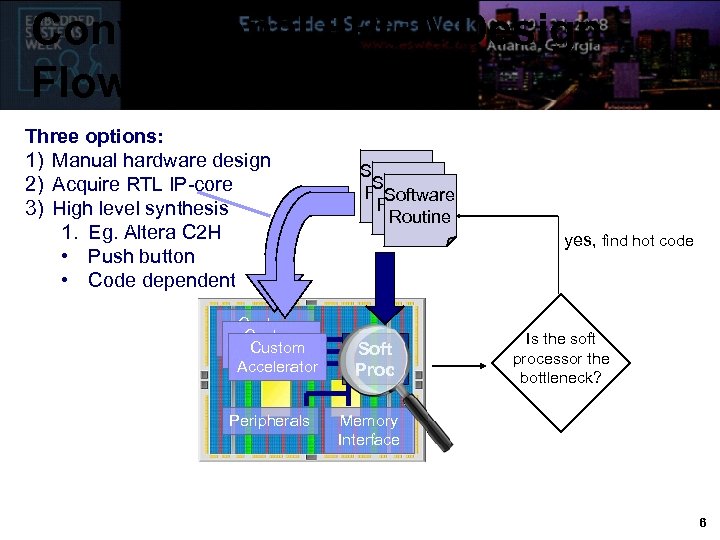

Conventional FPGA Design Flow

[Figure: system with a soft processor, custom accelerator, peripherals, and memory interface]

Is the soft processor the bottleneck? If yes, find the hot code (a software routine) and move it into a custom accelerator. Three options:

1. Manual hardware design
2. Acquire an RTL IP core
3. High-level synthesis, e.g. Altera C2H: push-button, but code-dependent

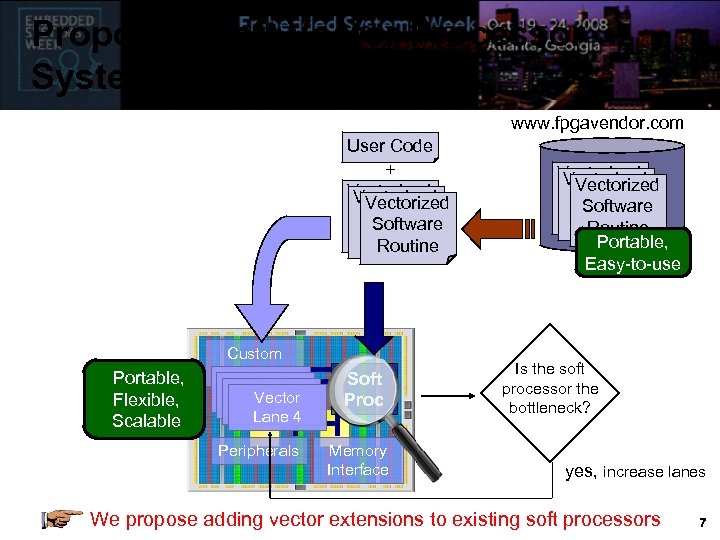

Proposed Soft Vector Processor System Design Flow

[Figure: system with a soft processor extended with vector lanes (Lane 1 to Lane 4), custom accelerator, peripherals, and memory interface; user code combined with vectorized software routines from www.fpgavendor.com]

- Vectorized software routines: portable, easy to use
- Soft vector processor: portable, flexible, scalable
- Is the soft processor the bottleneck? If yes, increase the number of lanes.

We propose adding vector extensions to existing soft processors.

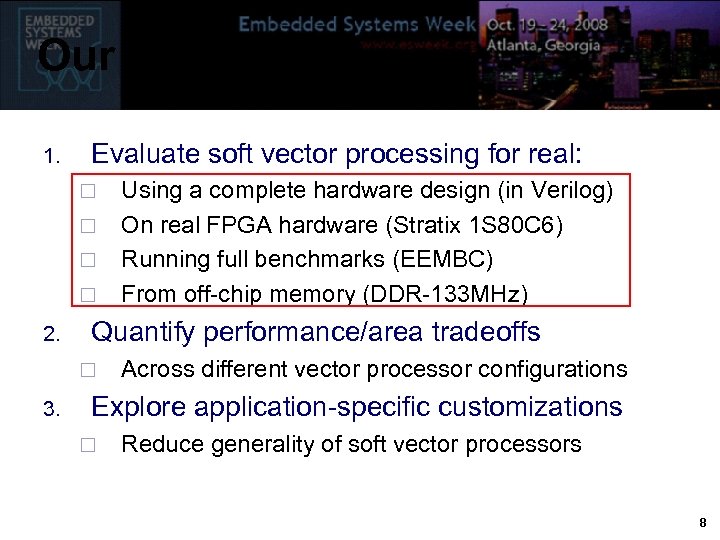

Our Goals

1. Evaluate soft vector processing for real:
   - Using a complete hardware design (in Verilog)
   - On real FPGA hardware (Stratix I 1S80C6)
   - Running full benchmarks (EEMBC)
   - From off-chip memory (DDR-133 MHz)
2. Quantify performance/area tradeoffs
   - Across different vector processor configurations
3. Explore application-specific customizations
   - Reduce the generality of soft vector processors

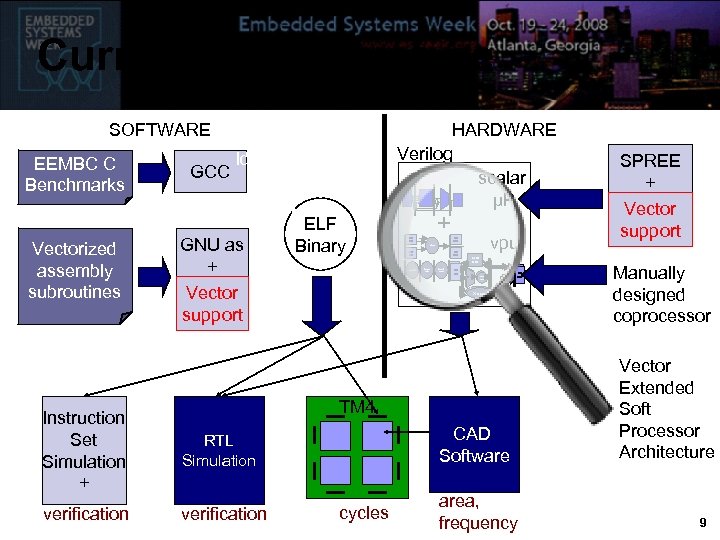

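Current Infrastructure

- Software: EEMBC C benchmarks are compiled with GCC, vectorized assembly subroutines are assembled with GNU as (extended with vector support), and ld links both into an ELF binary. Instruction set simulation is used for verification.
- Hardware: the Vector Extended Soft Processor Architecture combines a Verilog scalar μP generated by SPREE (extended with vector support) with a manually designed vector coprocessor. The design is checked in RTL simulation (verification), run on the TM4 platform (cycles), and processed by CAD software (area, frequency).

[Figure: vector coprocessor pipeline with VC, VS, and VR register files and writeback stages, decode, replicate, hazard check, memory unit, ALUs, multiply & saturate, saturate, and right-shift units]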

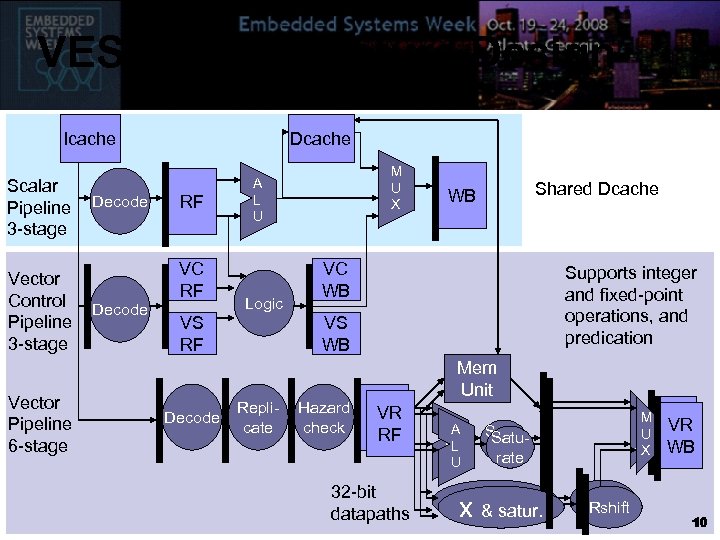

VESPA Architecture Design

- Scalar pipeline: 3-stage, with an Icache and a Dcache shared with the vector unit
- Vector control pipeline: 3-stage
- Vector pipeline: 6-stage
- 32-bit datapaths
- Supports integer and fixed-point operations, and predication

[Figure: the three pipelines with their decode logic and register files (RF, VC RF, VS RF, VR RF), replicate and hazard-check stages, ALUs, multiply & saturate, saturate, and right-shift units, the memory unit, and writeback stages]

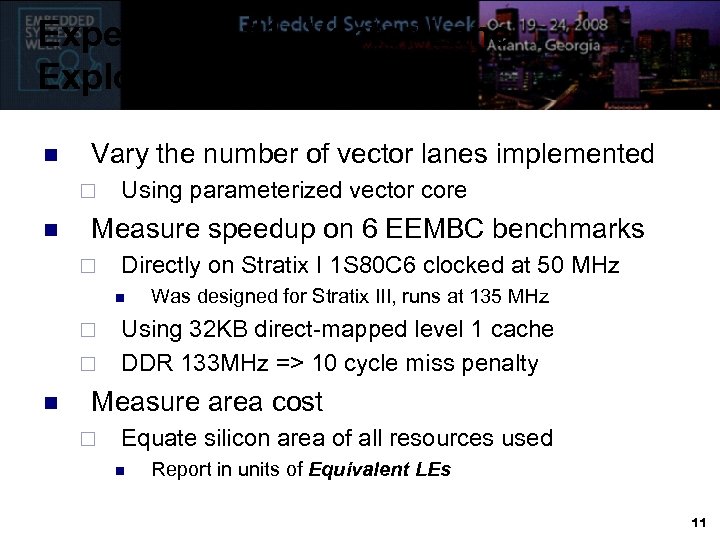

Experiment #1: Vector Lane Exploration

- Vary the number of vector lanes implemented
  - Using the parameterized vector core
- Measure speedup on 6 EEMBC benchmarks
  - Directly on a Stratix I 1S80C6 clocked at 50 MHz (the design targets Stratix III, where it runs at 135 MHz)
  - Using a 32 KB direct-mapped level 1 cache
  - DDR 133 MHz => 10-cycle miss penalty
- Measure area cost
  - Equate the silicon area of all resources used
  - Report in units of equivalent LEs

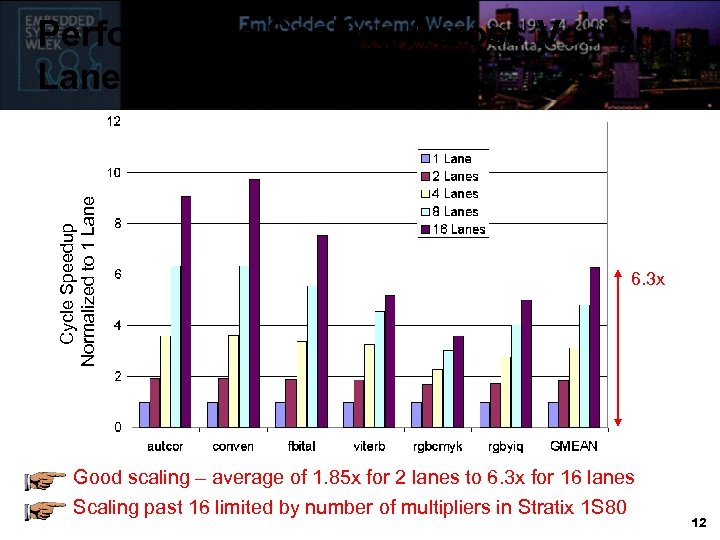

Performance Scaling Across Vector Lanes

[Chart: cycle speedup normalized to 1 lane, reaching 6.3x at 16 lanes]

- Good scaling: average of 1.85x for 2 lanes up to 6.3x for 16 lanes
- Scaling past 16 lanes is limited by the number of multipliers in the Stratix 1S80

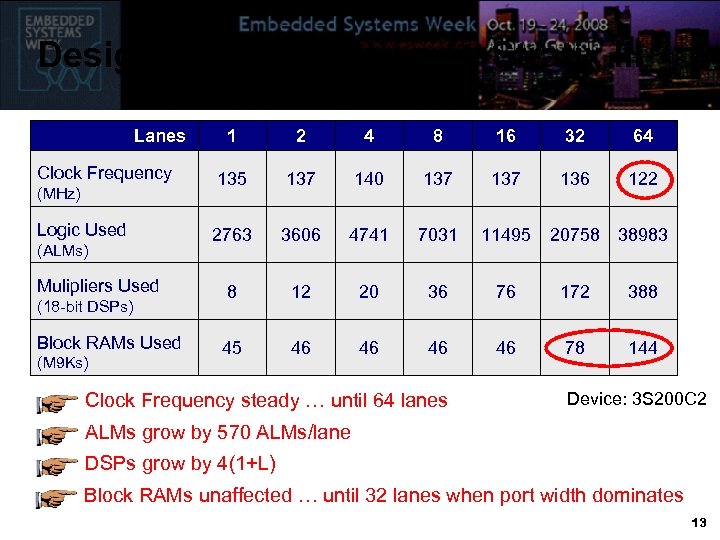

Design Characteristics on Stratix III

Device: Stratix III 3S200C2

| Lanes | Logic used (ALMs) | Multipliers used (18-bit DSPs) |
|---|---|---|
| 1 | 2763 | 8 |
| 2 | 3606 | 12 |
| 4 | 4741 | 20 |
| 8 | 7031 | 36 |
| 16 | 11495 | 76 |
| 32 | 20758 | 172 |
| 64 | 38983 | 388 |

- Clock frequency is steady (135 to 140 MHz) until 64 lanes, where it drops to 122 MHz.
- ALMs grow by about 570 ALMs/lane.
- DSPs grow by 4(1+L).
- Block RAMs (M9Ks) are unaffected (45 to 46) until 32 lanes, when port width dominates: 78 at 32 lanes and 144 at 64 lanes.

Application-Specific Vector Processing

- Customize to the application if:
  1. It is the only application that will run, OR
  2. The FPGA can be reconfigured between runs
- Observations: not all applications
  1. Operate on 32-bit data types
  2. Use the entire vector instruction set
- Eliminate unused hardware (reduce area)
  - Reduce cost (buy a smaller FPGA)
  - Re-invest area savings into more lanes
  - Speed up the clock (nets span shorter distances)

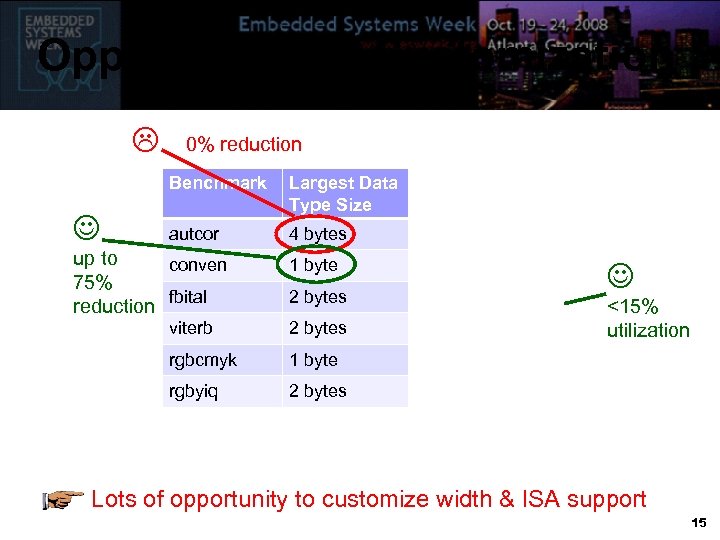

Opportunity for Customization

| Benchmark | Largest data type size | Percentage of vector ISA used |
|---|---|---|
| autcor | 4 bytes | 9.6% |
| conven | 1 byte | 5.9% |
| fbital | 2 bytes | 14.1% |
| viterb | 2 bytes | 13.3% |
| rgbcmyk | 1 byte | 5.9% |
| rgbyiq | 2 bytes | 8.1% |

- Width reduction ranges from 0% (4-byte data) up to 75% (1-byte data)
- Every benchmark uses less than 15% of the vector ISA

Lots of opportunity to customize width and ISA support.

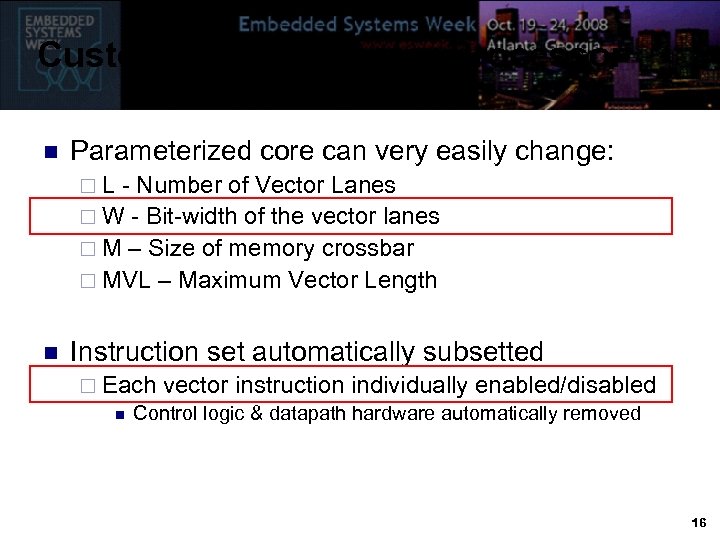

Customizing the Vector Processor

- The parameterized core can very easily change:
  - L: number of vector lanes
  - W: bit-width of the vector lanes
  - M: size of the memory crossbar
  - MVL: maximum vector length
- The instruction set is automatically subsetted:
  - Each vector instruction can be individually enabled/disabled
  - Control logic and datapath hardware are automatically removed

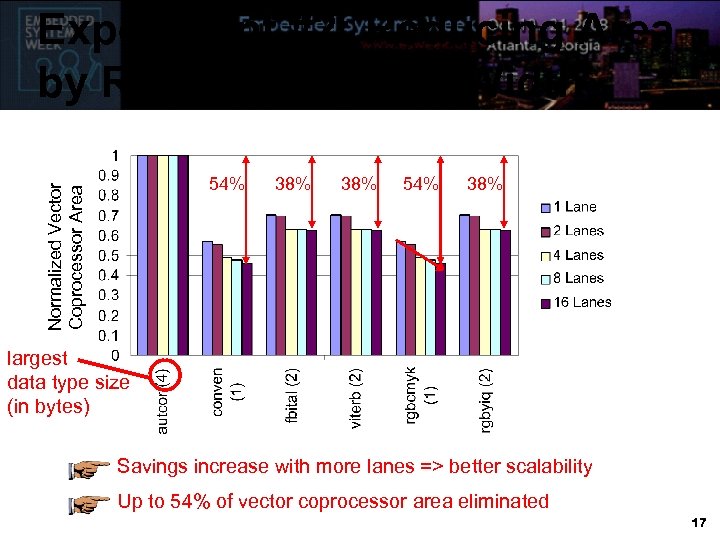

Experiment #2: Reducing Area by Reducing Vector Width

[Chart: normalized vector coprocessor area vs. largest data type size (in bytes); labeled reductions of 54% and 38%]

- Savings increase with more lanes => better scalability
- Up to 54% of vector coprocessor area eliminated

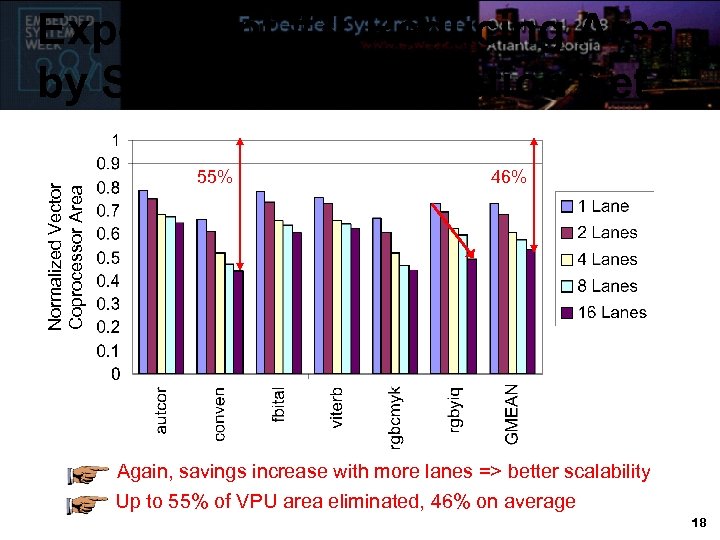

Experiment #3: Reducing Area by Subsetting Instruction Set

[Chart: normalized vector coprocessor area; labeled reductions of 55% and 46%]

- Again, savings increase with more lanes => better scalability
- Up to 55% of VPU area eliminated, 46% on average

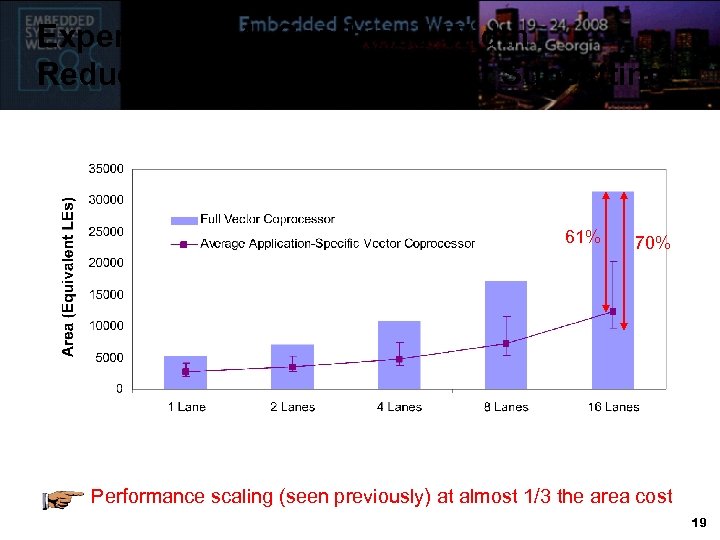

Experiment #4: Combined Width Reduction and Instruction Set Subsetting

[Chart: area reduction of 61% on average, up to 70%]

Performance scaling (seen previously) at almost 1/3 the area cost.

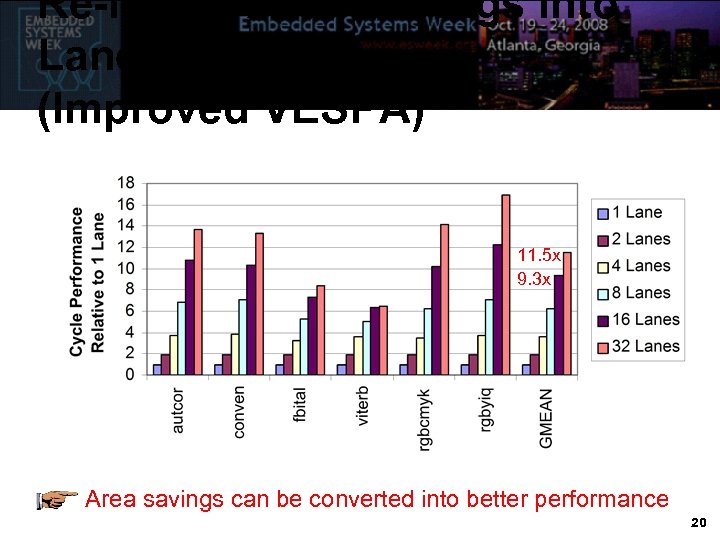

Re-Invest Area Savings into Lanes (Improved VESPA)

[Chart: speedups labeled 11.5x and 9.3x]

Area savings can be converted into better performance.

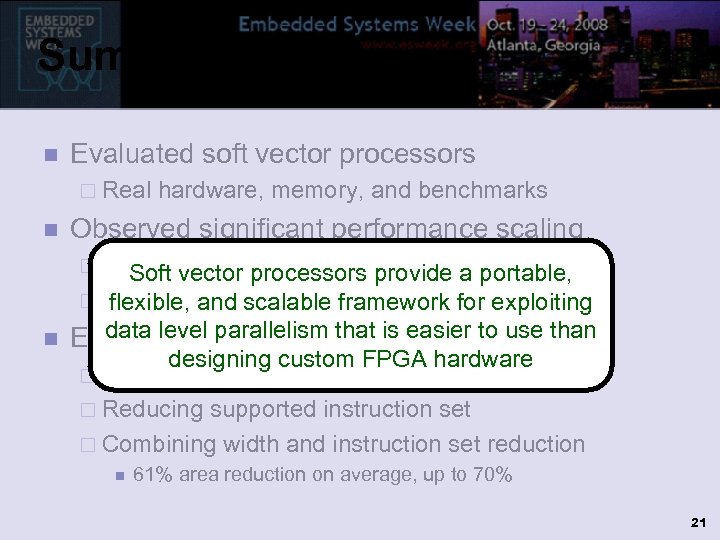

Summary

- Evaluated soft vector processors
  - Real hardware, memory, and benchmarks
- Observed significant performance scaling
  - Average of 6.3x with 16 lanes
  - Further scaling possible on newer devices
- Explored measures to reduce area cost
  - Reducing vector width
  - Reducing the supported instruction set
  - Combining width and instruction set reduction: 61% area reduction on average, up to 70%

Soft vector processors provide a portable, flexible, and scalable framework for exploiting data-level parallelism that is easier to use than designing custom FPGA hardware.

Backup Slides

Future Work

- Improve scalability bottlenecks
  - Memory
- Evaluate scaling past 16 lanes
  - Port the system to a platform with a newer FPGA
- Compare against hardware
  - What do we pay for the simpler design?

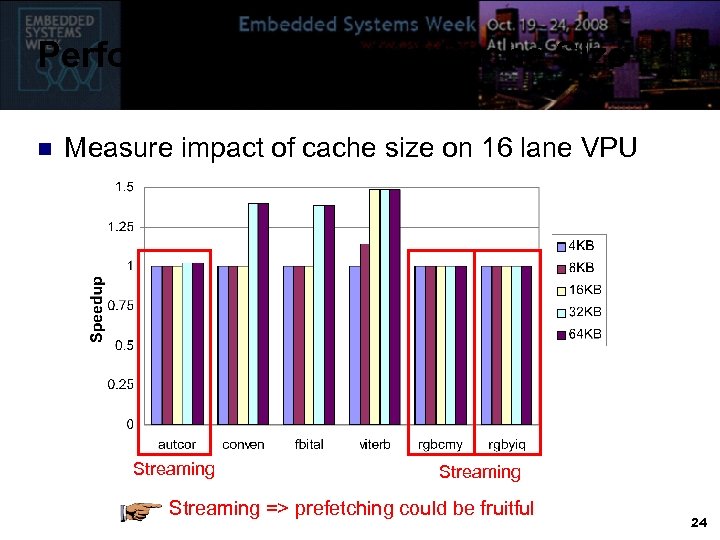

Performance Impact of Cache Size

- Measure the impact of cache size on the 16-lane VPU
- Streaming => prefetching could be fruitful

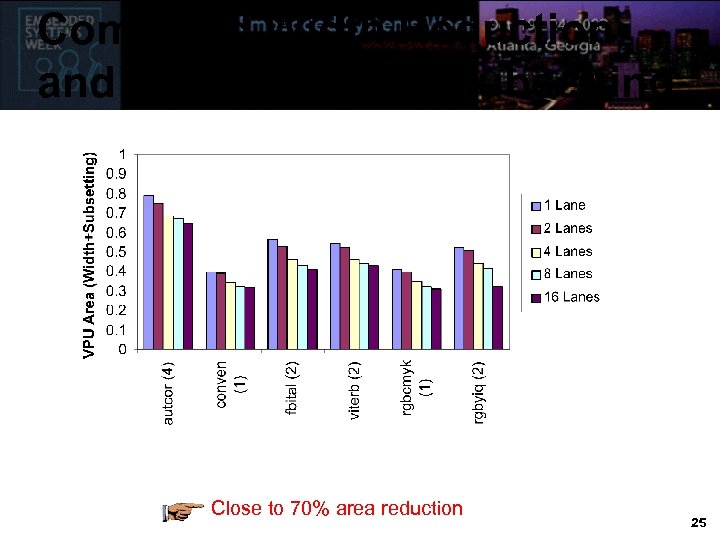

Combined Width Reduction and Instruction Set Subsetting

Close to 70% area reduction.

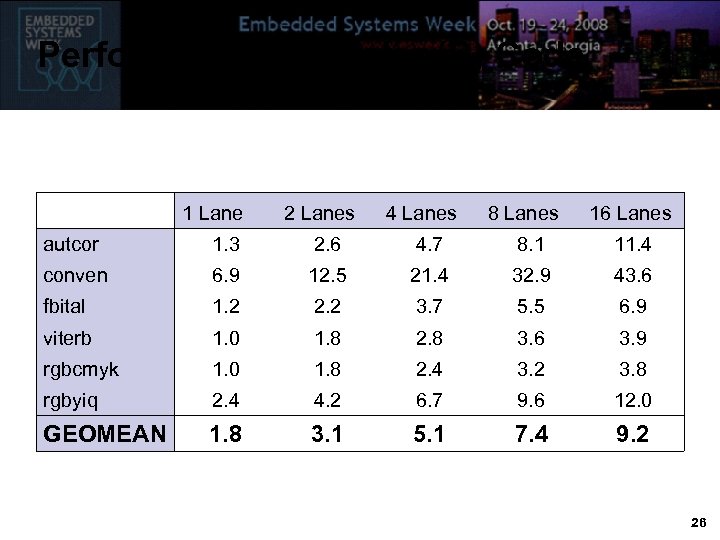

Performance vs Scalar (C) Code (speedup)

| Benchmark | 1 Lane | 2 Lanes | 4 Lanes | 8 Lanes | 16 Lanes |
|---|---|---|---|---|---|
| autcor | 1.3 | 2.6 | 4.7 | 8.1 | 11.4 |
| conven | 6.9 | 12.5 | 21.4 | 32.9 | 43.6 |
| fbital | 1.2 | 2.2 | 3.7 | 5.5 | 6.9 |
| viterb | 1.0 | 1.8 | 2.8 | 3.6 | 3.9 |
| rgbcmyk | 1.0 | 1.8 | 2.4 | 3.2 | 3.8 |
| rgbyiq | 2.4 | 4.2 | 6.7 | 9.6 | 12.0 |
| GEOMEAN | 1.8 | 3.1 | 5.1 | 7.4 | 9.2 |
