d2daf2c7c420bb7c04201aa3e19feea2.ppt

- Number of slides: 31

Variant: A Malware Similarity Testing Framework
October 20-23, 2015

Purpose
• Define a standard dataset for use in malware variant testing
• Align malware variant detection with the broader binary classification field
• Test current variant detection tools against the proposed solution

Setting
• Sources are not testing datasets
  - VirusTotal, AV, open source, malware code
• Datasets derived from these sources are poor testing datasets
  - Testing against poor results: AV signatures
  - Lack of breadth in code modification

Previous Work
• Variant detection papers
  - BitShred, TLSH, FRASH
    - Derived from AV signatures or code
    - Varied sources
    - Not reproducible with accuracy
• File similarity
  - FRASH, CTPH
    - Not all variation is as complex as malware

Hypothesis
• A static, reproducible malware dataset based on human grouping will provide more rigorous testing of proposed variant detection engines
• Benefits
  - Static, reproducible, results based on the best known classification

Findings

Deriving Datasets through Algorithm
• Selection of sets from malware sources
  - Via antivirus identification
• Varying source code
  - Use available source code and vary it by algorithm and compilation

What are we testing against?
• Reproducing AV signature results?
  - Reproduction of a flawed system
• Detecting a few untested, constructed variation engines?
  - What about real-world breadth?
• Can we reproduce the dataset for further testing and comparison?

Gold Standard Dataset
• Representative of real-world data
• Knowledge of dataset derived from the best available source
• Tests enable reproduction for peer review and further comparison
• Samples are real, in-the-wild malware
• Knowledge of dataset is derived from manual analysis
• Dataset is static and information is known

Alignment with the Broader Field
• Malware variant detection is binary/statistical classification
• Yet the field uses disparate measurements and terms
• Alignment of nomenclature
  - Apples-to-apples comparison against other malware projects
  - Apples-to-apples comparison for broader statistical classification projects
  - Removal of ambiguous terms, e.g., accuracy

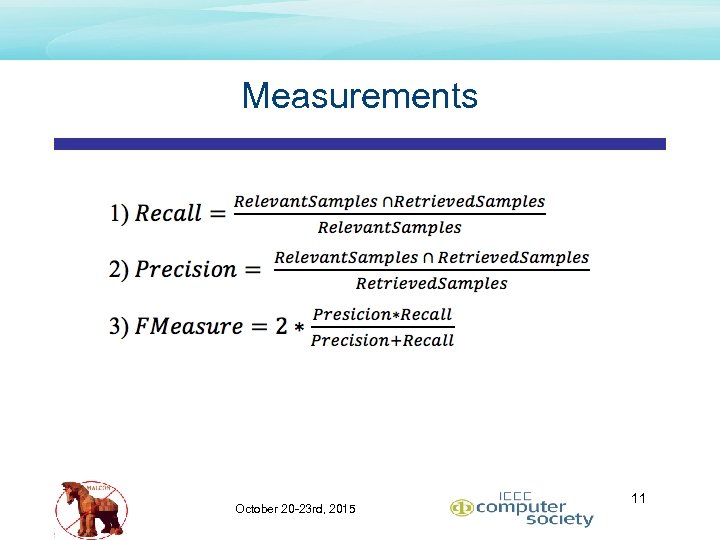

Measurements
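The slides do not spell out how precision and recall are counted. A minimal sketch, assuming a pairwise formulation: a pair of samples is a positive when the human analysts placed both in the same group, and a tool "predicts" a variant pair when its similarity score reaches a threshold. The function name `pairwise_scores` and the toy data are hypothetical; the code shows the standard precision, recall, and F-measure definitions, not Variant's actual scoring code.

```python
from itertools import combinations


def pairwise_scores(labels, similarity, threshold):
    """Pairwise precision, recall and F-measure for one threshold.

    labels:     dict of sample id -> human-assigned group (ground truth)
    similarity: callable (a, b) -> score; higher means more similar
    threshold:  pairs scoring >= threshold are predicted to be variants
    """
    tp = fp = fn = 0
    for a, b in combinations(sorted(labels), 2):
        same_group = labels[a] == labels[b]
        predicted = similarity(a, b) >= threshold
        if predicted and same_group:
            tp += 1  # tool agrees with the human grouping
        elif predicted:
            fp += 1  # tool links samples from different groups
        elif same_group:
            fn += 1  # tool misses a human-identified variant pair
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f_measure = (2 * precision * recall / (precision + recall)
                 if precision + recall else 0.0)
    return precision, recall, f_measure


# Toy data (hypothetical, not from the talk): two groups of two samples.
labels = {"s1": "A", "s2": "A", "s3": "B", "s4": "B"}
scores = {frozenset(("s1", "s2")): 90, frozenset(("s3", "s4")): 40,
          frozenset(("s1", "s3")): 70}


def sim(a, b):
    return scores.get(frozenset((a, b)), 0)  # unlisted pairs score 0


print(pairwise_scores(labels, sim, 50))  # (0.5, 0.5, 0.5)
```

Accuracy is deliberately left out: true negatives (cross-group pairs the tool correctly ignores) dominate most malware datasets and inflate it.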

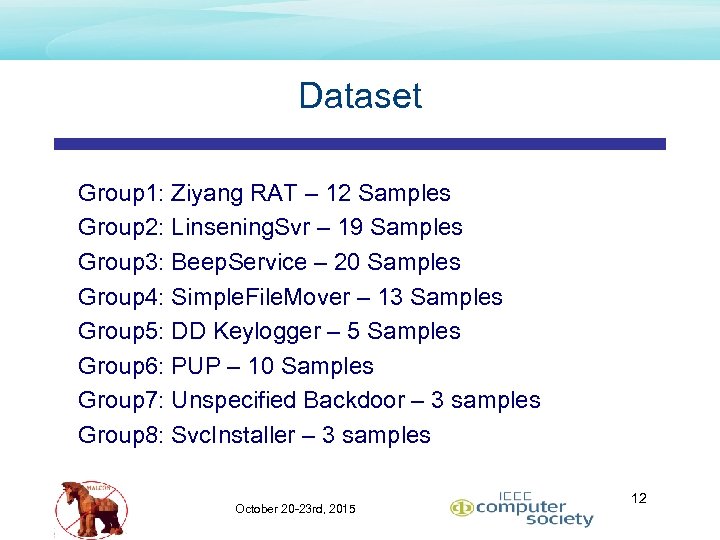

Dataset
• Group 1: Ziyang RAT (12 samples)
• Group 2: Linsening.Svr (19 samples)
• Group 3: Beep.Service (20 samples)
• Group 4: Simple.File.Mover (13 samples)
• Group 5: DD Keylogger (5 samples)
• Group 6: PUP (10 samples)
• Group 7: Unspecified Backdoor (3 samples)
• Group 8: Svc.Installer (3 samples)

Dataset (cont.)
• Dataset is manually analyzed
  - Best possible information
• Dataset is static
  - Reproducible results
• Small
  - Can be grown
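Assuming pairwise scoring, the group sizes on the Dataset slide fix how many positive (same-group) and negative (cross-group) pairs every tool is measured against. The sketch below counts them. It also illustrates, as our own example rather than a claim from the slides, why "accuracy" is ambiguous here: a tool that never reports a match still scores about 84% pairwise accuracy on this dataset.

```python
from math import comb

# Group sizes as listed on the Dataset slide.
GROUP_SIZES = {
    "Ziyang RAT": 12,
    "Linsening.Svr": 19,
    "Beep.Service": 20,
    "Simple.File.Mover": 13,
    "DD Keylogger": 5,
    "PUP": 10,
    "Unspecified Backdoor": 3,
    "Svc.Installer": 3,
}


def pair_counts(group_sizes):
    """Return (all pairs, same-group pairs, cross-group pairs)."""
    n = sum(group_sizes.values())
    total = comb(n, 2)
    same_group = sum(comb(size, 2) for size in group_sizes.values())
    return total, same_group, total - same_group


total, positives, negatives = pair_counts(GROUP_SIZES)
print(sum(GROUP_SIZES.values()), total, positives, negatives)  # 85 3570 566 3004

# A tool that never reports a match is right on every cross-group pair,
# so its pairwise accuracy is negatives / total despite zero recall.
print(round(negatives / total, 3))  # 0.841
```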

Candidate Solutions
• CTPH (fuzzy hashing, ssdeep, as published)
  - Triggered, n-gram, raw input, pairwise comparisons
• TLSH (as published)
  - Selective, n-gram, raw input, LSH comparisons
• sdhash (as published)
  - Full, n-gram, raw input, pairwise comparisons
• BitShred (re-implemented)
  - Full, n-gram, section input, pairwise comparisons
• FirstByte (in-house)
  - Selective, n-gram, normalized input, LSH comparisons
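As a rough illustration of the "full, n-gram, pairwise" style, the sketch hashes every byte n-gram into a fixed-size bit vector and compares two vectors with Jaccard similarity, in the spirit of BitShred's feature hashing. It is a simplified stand-in, not the re-implementation used in the talk: it reads raw bytes rather than executable sections, and the n-gram length (4), vector size (2^16 bits), use of BLAKE2b, and the function names are our assumptions.

```python
import hashlib


def ngram_fingerprint(data: bytes, n: int = 4, bits: int = 1 << 16) -> int:
    """Set one bit per byte n-gram in a fixed-size bit vector (a Python int)."""
    fp = 0
    for i in range(len(data) - n + 1):
        digest = hashlib.blake2b(data[i:i + n], digest_size=8).digest()
        fp |= 1 << (int.from_bytes(digest, "little") % bits)
    return fp


def jaccard(fp_a: int, fp_b: int) -> float:
    """Jaccard similarity of two bit vectors: |A and B| / |A or B|."""
    union = bin(fp_a | fp_b).count("1")
    return bin(fp_a & fp_b).count("1") / union if union else 0.0


# Hypothetical inputs: a "variant" with 8 bytes overwritten by NOPs.
base = bytes(range(64))
variant = base[:32] + b"\x90" * 8 + base[40:]
print(jaccard(ngram_fingerprint(base), ngram_fingerprint(variant)))
```

Comparing n signatures this way means scoring every pair, so all-pairs cost grows as n²; the slides label TLSH and FirstByte as using LSH comparisons instead.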

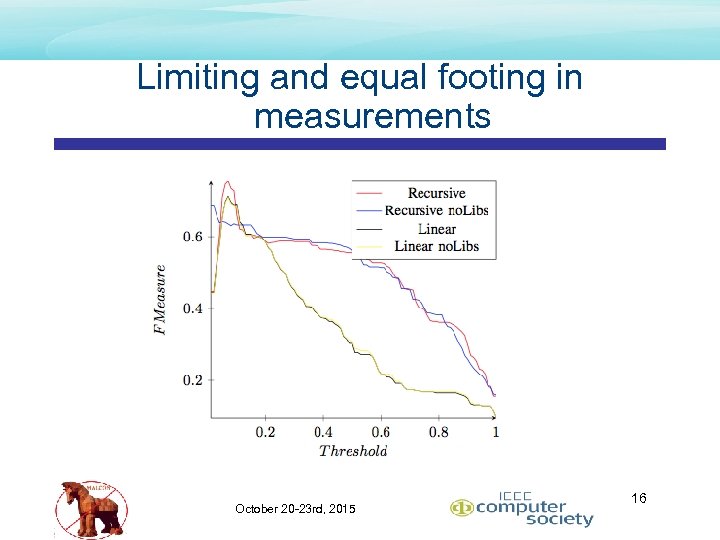

Limiting and equal footing in measurements
• 2 x 2 configurations of FirstByte
  - Recursive disassembly vs. linear sweep disassembly
  - Library filtering on/off
• Selected configuration: linear sweep, noLibs (library code filtered out)
  - Faster signature generation
  - Performance near the R-noLib curve

Limiting and equal footing in measurements

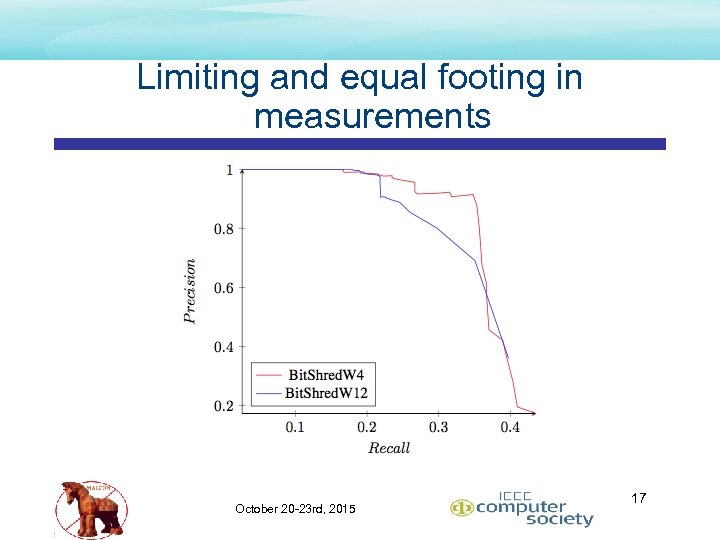

Limiting and equal footing in measurements

Limiting and equal footing in measurements
• TLSH bounding
  - TLSH produces a distance measurement, not a similarity score
  - The TLSH authors argue distance is the better approach
  - Either change the 4 other projects or change TLSH
  - The TLSH authors state that a distance of 300 is very dissimilar
    - Sim = (300 - Distance) / 3
    - Bound Sim < 0 to 0
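The bounding rule on this slide, written as a function so the mapping is easy to check. The name `tlsh_similarity` is hypothetical, and the input is assumed to be a TLSH distance already computed by a TLSH implementation.

```python
def tlsh_similarity(distance: float) -> float:
    """Map a TLSH distance onto a 0-100 similarity score.

    Sim = (300 - distance) / 3, with negative results bounded to 0, so a
    distance of 0 maps to 100 and any distance >= 300 maps to 0.
    """
    if distance < 0:
        raise ValueError("TLSH distances are non-negative")
    return max(0.0, (300 - distance) / 3)


for d in (0, 150, 300, 450):
    print(d, tlsh_similarity(d))
# 0 100.0
# 150 50.0
# 300 0.0
# 450 0.0
```

The conversion puts TLSH on the same "higher is more similar" scale as the other four tools, so one threshold sweep applies to all of them.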

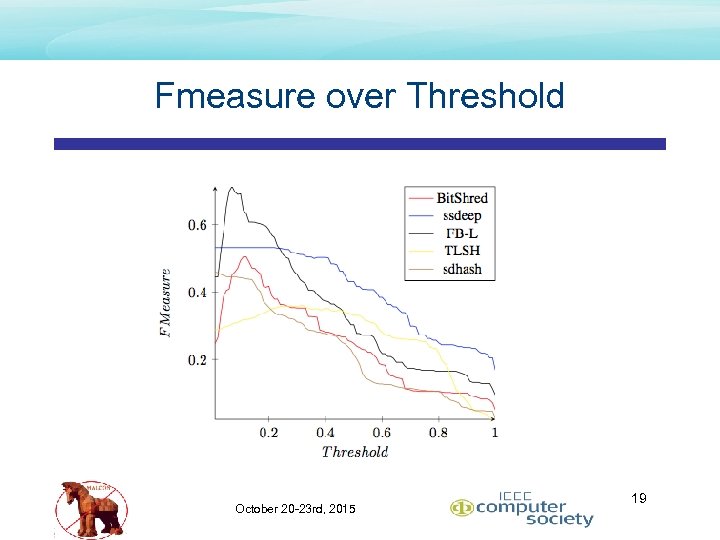

F-measure over Threshold
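A minimal sketch of how an F-measure-over-threshold curve and its peak can be computed, assuming each tool emits one similarity score per sample pair on a common 0-100 scale (for TLSH, after converting distance to similarity). The function names and the scored pairs are hypothetical toy values, not results from the talk.

```python
def f_measure_curve(scored_pairs, thresholds):
    """Return [(threshold, F-measure)] for each threshold.

    scored_pairs: iterable of (score, same_group), where same_group is the
    ground-truth label from the human-classified dataset.
    """
    scored_pairs = list(scored_pairs)
    positives = sum(1 for _, same in scored_pairs if same)
    curve = []
    for t in thresholds:
        predicted = [same for score, same in scored_pairs if score >= t]
        tp = sum(predicted)  # True counts as 1
        precision = tp / len(predicted) if predicted else 0.0
        recall = tp / positives if positives else 0.0
        f = (2 * precision * recall / (precision + recall)
             if precision + recall else 0.0)
        curve.append((t, f))
    return curve


def peak_threshold(curve):
    """(threshold, F-measure) with the highest F; the first one wins ties."""
    return max(curve, key=lambda point: point[1])


# Toy scores: three true variant pairs and three unrelated pairs.
pairs = [(95, True), (80, True), (60, False), (55, True), (20, False), (10, False)]
curve = f_measure_curve(pairs, range(0, 101, 10))
print(peak_threshold(curve)[0])  # 30
```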

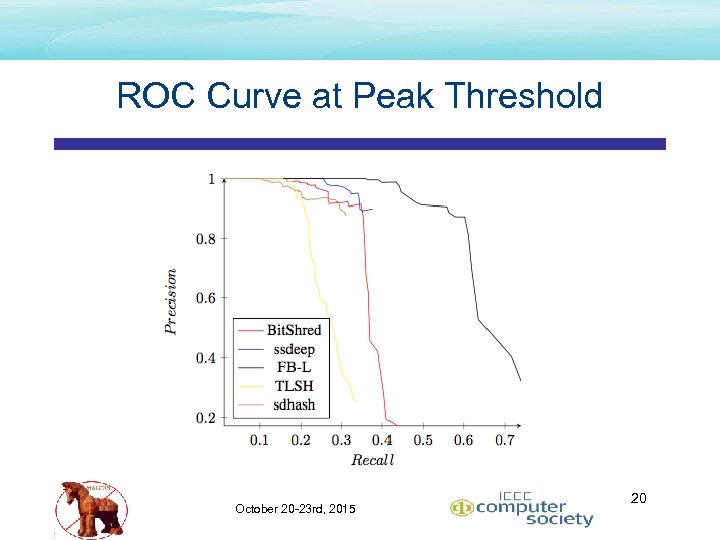

ROC Curve at Peak Threshold

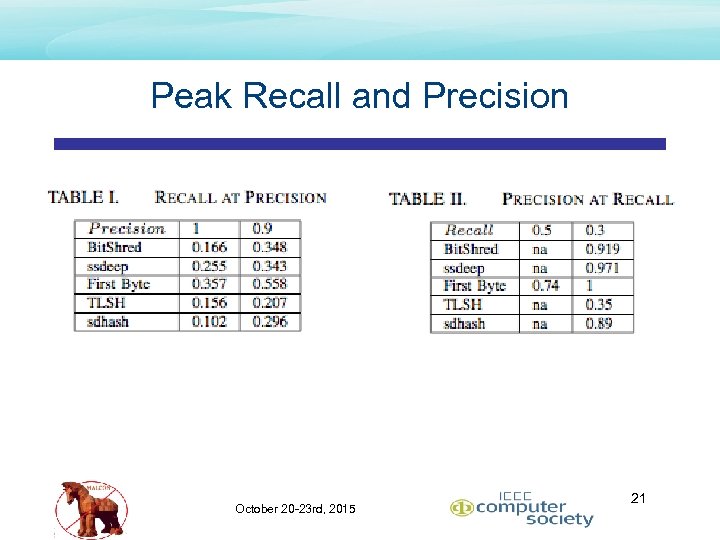

Peak Recall and Precision

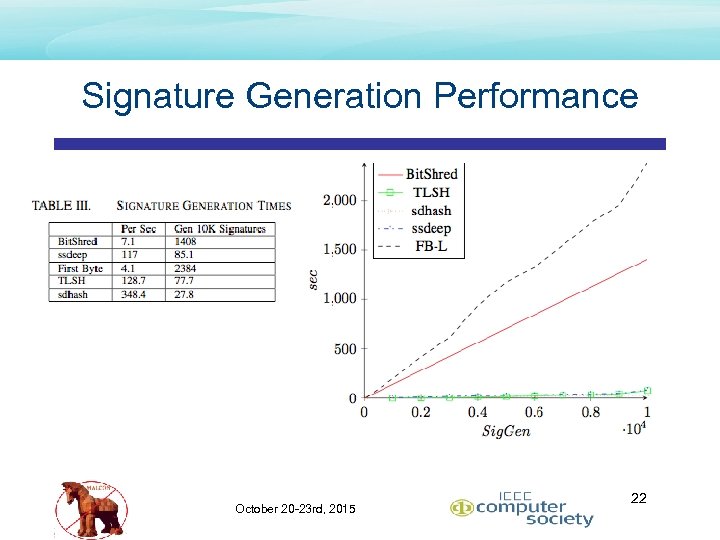

Signature Generation Performance

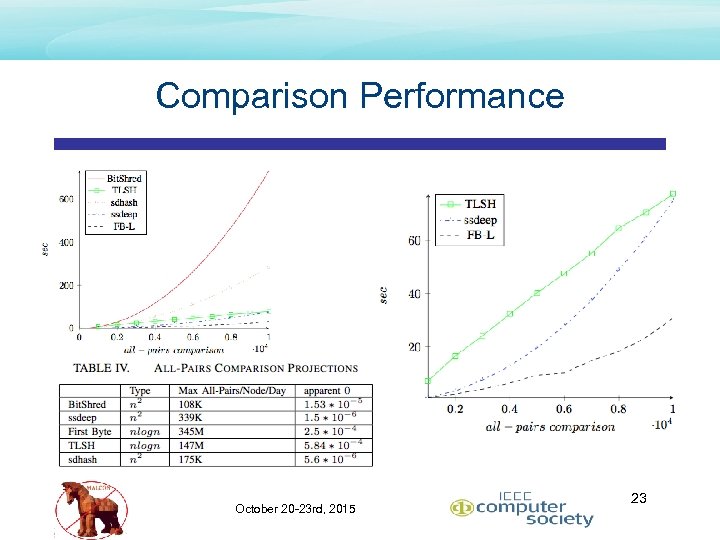

Comparison Performance

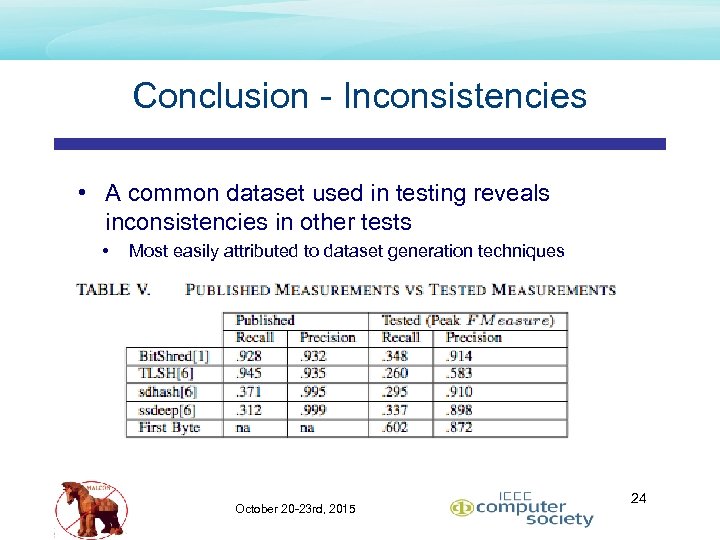

Conclusion – Inconsistencies
• A common dataset used in testing reveals inconsistencies in other tests
  - Most easily attributed to dataset generation techniques

Conclusion – Reproducibility and Alignment
• Dataset can be reproduced exactly
• Dataset represents a Gold Standard approach
  - Best known (human) information vs. tested project
• Measurements are aligned with the broader binary classification field
  - Recall, precision, F-measure

Conclusion – Comparative Results
• Top-ranked overall speed
  - TLSH
• Top-ranked precision and recall
  - FirstByte
    - 2.5X slower signature generation than sdhash
    - n log n all-pairs comparison easily overcomes slower signature generation
    - 42% better than BitShred in F-measure
    - 95% better than TLSH in F-measure
    - 365K signatures/node/day limit
• Top-ranked if signature generation is a concern (365K/node/day)
  - BitShred if n² is not a concern
  - TLSH if n² is a concern
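The trade-off above hinges on comparison cost: pairwise tools compare every signature with every other one (n(n-1)/2 comparisons), while the slides credit FirstByte with n log n all-pairs comparison. The sketch tabulates both as rough cost models (constant factors ignored; the slides do not describe how FirstByte achieves n log n) and converts the 365K signatures/node/day limit into a per-second rate. Function names are hypothetical.

```python
import math


def all_pairs_comparisons(n: int) -> int:
    """Comparisons needed to score every signature against every other."""
    return n * (n - 1) // 2


def n_log_n(n: int) -> float:
    """Rough n log n cost model (constant factors ignored)."""
    return n * math.log2(n) if n > 1 else 0.0


for n in (1_000, 100_000, 10_000_000):
    print(f"{n:>12,}  pairwise={all_pairs_comparisons(n):.3e}  "
          f"nlogn={n_log_n(n):.3e}")

# The slide's 365K signatures/node/day limit, expressed per second.
print(round(365_000 / 86_400, 2))  # 4.22
```

At ten million samples the pairwise count is about 5e13 versus roughly 2.3e8 for the n log n model, which is why a slower signature generator can still win overall.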

Impact: Gold Standard
A static, reproducible dataset based on human classification is superior for assessing malware variant detection techniques, because it better represents the “best known” classification requirement of a “Gold Standard Dataset”.

Broader Contributions
• Testing of malware variant binary classification to date is suspect
  - Inaccuracies

Summary and Conclusions
• Variant reveals much lower recall and precision scores across projects
• Variant is reproducible
• Variant represents an evaluation of the ability to reproduce human results
• Variant aligns the field with binary classification
• Variant tests multiple tools under the same conditions and can be used in future tests

Remaining Questions
• Future work
  - Growing the dataset
  - Open contributions
  - Retesting of proposed works

Jason Upchurch
Jason.R.Upchurch@Intel.com
