e4984e0790532293c21a16c8ce6f0e67.ppt

- Number of slides: 30

Using Supercomputers to Collide Black Holes: Solving Einstein’s Equations on the Grid

Ed Seidel, Albert-Einstein-Institut, MPI-Gravitationsphysik & NCSA/U of IL

- Solving Einstein’s equations, black holes, and gravitational wave astronomy
- Cactus, a new community simulation code framework
  - Toolkit for any PDE system, ray tracing, etc.
  - Suite of solvers for Einstein and astrophysics systems
- Recent simulations using Cactus
  - Black hole collisions, neutron star collisions
  - Collapse of gravitational waves
- Grid computing and remote collaborative tools: what a scientist really wants and needs

What will we be able to do with this new technology?

Albert-Einstein-Institut www.aei-potsdam.mpg.de

Einstein’s Equations and Gravitational Waves

This community owes a lot to Einstein...

- Einstein’s General Relativity
  - Fundamental theory of physics (gravity)
  - Among the most complex equations of physics: dozens of coupled, nonlinear hyperbolic-elliptic equations with thousands of terms
  - Predicts black holes, gravitational waves, etc.
  - After a century, we barely have the capability to solve them
- This is about to change...
- An exciting new field is about to be born: Gravitational Wave Astronomy
  - Fundamentally new information about the Universe
  - What are gravitational waves? Ripples in spacetime curvature, caused by the motion of matter, that cause distances to change
- A last major test of Einstein’s theory: do they exist?
  - Eddington: “Gravitational waves propagate at the speed of thought”
  - This is about to change...

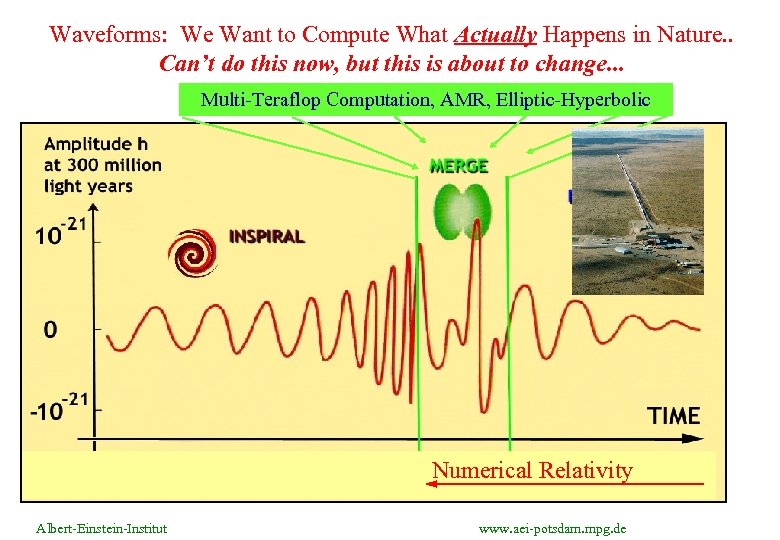

Waveforms: We Want to Compute What Actually Happens in Nature...

We can’t do this now, but this is about to change...

Multi-teraflop computation, AMR, elliptic-hyperbolic numerical relativity.

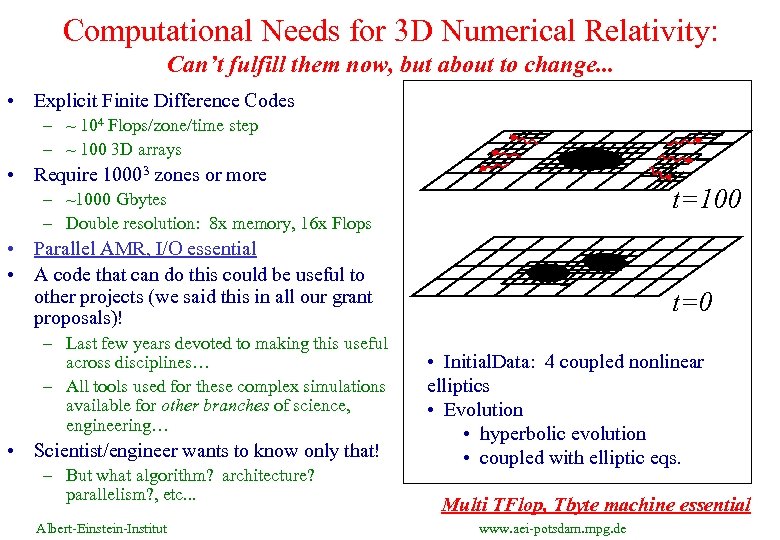

Computational Needs for 3D Numerical Relativity: We can’t fulfill them now, but this is about to change...

- Explicit finite difference codes
  - ~10^4 flops/zone/time step
  - ~100 3D arrays
- Require 1000^3 zones or more
  - ~1000 GB
  - Doubling the resolution: 8× memory, 16× flops
- Parallel AMR and I/O are essential
- A code that can do this could be useful to other projects (we said this in all our grant proposals)!
  - The last few years have been devoted to making it useful across disciplines...
  - All tools used for these complex simulations are available to other branches of science, engineering...
- The scientist/engineer wants to know only that!
  - But what algorithm? Architecture? Parallelism? Etc.

Figure: evolution from t=0 to t=100.
- t=0, initial data: 4 coupled nonlinear elliptic equations
- Evolution: hyperbolic evolution coupled with elliptic equations

A multi-TFlop, TByte machine is essential.

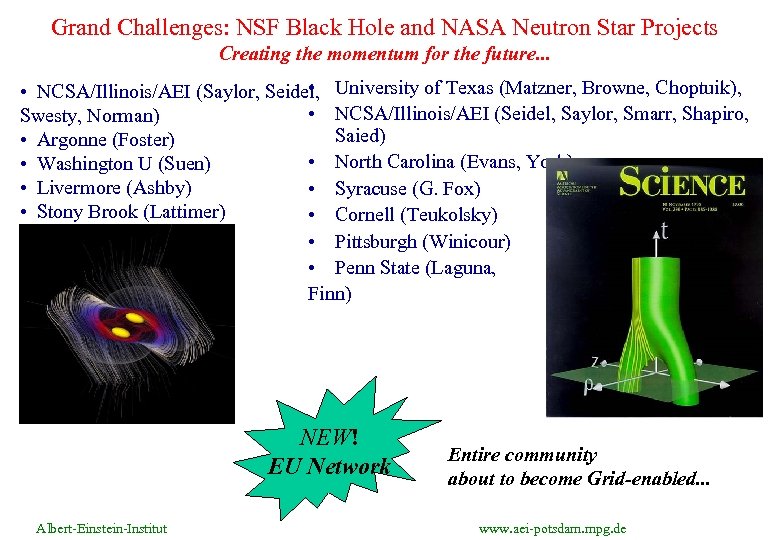
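The memory and work figures above follow from simple arithmetic. The Python sketch below reproduces them. It assumes 8-byte (double-precision) values and a CFL-limited time step that halves when the grid spacing halves. Neither assumption is stated on the slide, and the function names are illustrative only.

```python
# Back-of-envelope resource estimate for an explicit 3D finite-difference run,
# using the per-zone figures quoted on the slide.
# Assumptions (not from the slide): 8-byte doubles, and a CFL-limited time
# step, so halving the grid spacing also halves the time step.

BYTES_PER_VALUE = 8          # assumption: double precision
FLOPS_PER_ZONE_STEP = 1e4    # ~10^4 flops/zone/time step (slide)
ARRAYS = 100                 # ~100 3D arrays (slide)


def memory_bytes(n: int, arrays: int = ARRAYS) -> float:
    """Memory needed for `arrays` grid functions on an n^3 grid."""
    return float(n) ** 3 * arrays * BYTES_PER_VALUE


def flops_per_step(n: int) -> float:
    """Floating-point work for one time step over the whole n^3 grid."""
    return float(n) ** 3 * FLOPS_PER_ZONE_STEP


def refinement_factors(dims: int = 3) -> tuple[int, int]:
    """Cost multipliers when the resolution is doubled in every direction.

    Memory grows as 2^dims. Total work also doubles the number of time
    steps (CFL condition), giving 2^(dims + 1).
    """
    return 2 ** dims, 2 ** (dims + 1)


if __name__ == "__main__":
    n = 1000
    print(f"memory:     {memory_bytes(n) / 1e9:.0f} GB")
    print(f"flops/step: {flops_per_step(n):.1e}")
    mem_x, work_x = refinement_factors()
    print(f"double resolution: {mem_x}x memory, {work_x}x flops")
```

Under these assumptions a 1000^3 grid needs about 800 GB, the same order as the ~1000 GB quoted on the slide. Doubling the resolution reproduces the 8× memory and 16× flops figures: 2^3 more zones, times twice as many time steps.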

Grand Challenges: NSF Black Hole and NASA Neutron Star Projects

Creating the momentum for the future...

- NSF Black Hole Grand Challenge
  - NCSA/Illinois/AEI (Saylor, Seidel, Saied)
  - University of Texas (Matzner, Browne, Choptuik)
  - North Carolina (Evans, York)
  - Syracuse (G. Fox)
  - Cornell (Teukolsky)
  - Pittsburgh (Winicour)
  - Penn State (Laguna, Finn)
- NASA Neutron Star Grand Challenge
  - NCSA/Illinois/AEI (Seidel, Saylor, Smarr, Shapiro, Swesty, Norman)
  - Argonne (Foster)
  - Washington U (Suen)
  - Livermore (Ashby)
  - Stony Brook (Lattimer)
- NEW! EU Network

The entire community is about to become Grid-enabled...

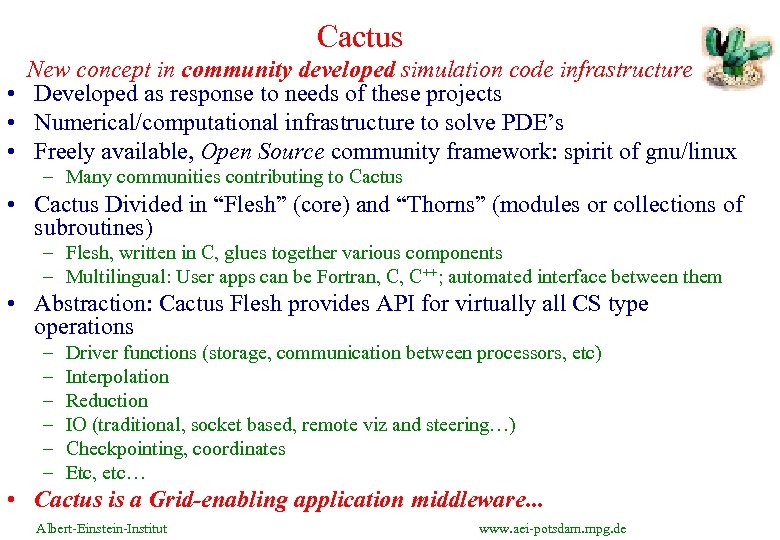

Cactus: a new concept in community-developed simulation code infrastructure

- Developed in response to the needs of these projects
- Numerical/computational infrastructure to solve PDEs
- Freely available, Open Source community framework in the spirit of GNU/Linux
  - Many communities contributing to Cactus
- Cactus is divided into the “Flesh” (core) and “Thorns” (modules or collections of subroutines)
  - The Flesh, written in C, glues together the various components
  - Multilingual: user applications can be written in Fortran, C, or C++, with an automated interface between them
- Abstraction: the Cactus Flesh provides an API for virtually all CS-type operations
  - Driver functions (storage, communication between processors, etc.)
  - Interpolation
  - Reduction
  - I/O (traditional, socket-based, remote viz and steering...)
  - Checkpointing, coordinates
  - Etc.
- Cactus is Grid-enabling application middleware...

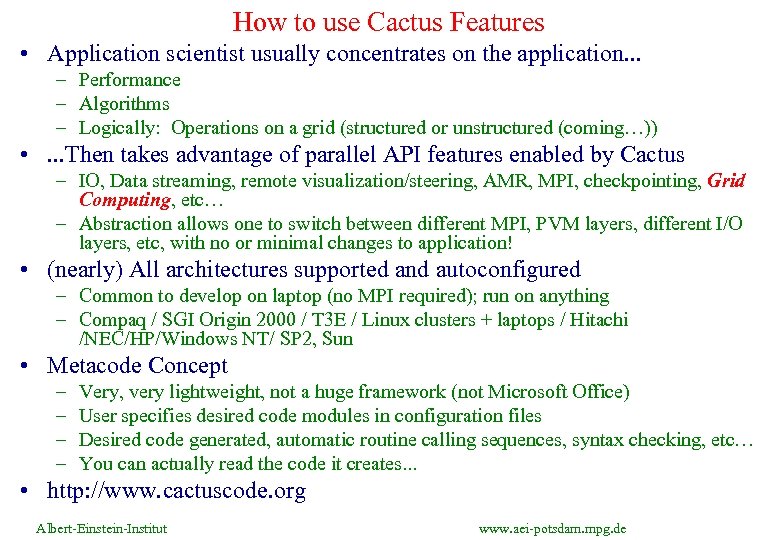
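The Flesh/Thorn split can be illustrated with a small toy in Python. Everything here is hypothetical: `Flesh`, `schedule`, `run_bin`, `reduce`, the `"maximum"` reduction and both thorns. This is not the Cactus API, whose Flesh is written in C and driven by configuration files. The sketch only shows the idea on the slide: an application thorn calls a reduction by name through the flesh and never sees which driver thorn implements it.

```python
"""Toy flesh/thorn split. All names are hypothetical; this is not the Cactus API."""
from collections import defaultdict
from typing import Callable

Routine = Callable[[dict], None]


class Flesh:
    """Minimal 'flesh': it knows no physics, only how to schedule and call thorns."""

    def __init__(self) -> None:
        self._bins: defaultdict[str, list[Routine]] = defaultdict(list)
        self._reductions: dict[str, Callable[[list[float]], float]] = {}

    def schedule(self, bin_name: str, routine: Routine) -> None:
        """A thorn registers a routine to run in a named schedule bin."""
        self._bins[bin_name].append(routine)

    def register_reduction(self, name: str, op: Callable[[list[float]], float]) -> None:
        """A driver-type thorn supplies a reduction operator under a public name."""
        self._reductions[name] = op

    def reduce(self, name: str, values: list[float]) -> float:
        """Application thorns request reductions by name, not by implementation."""
        return self._reductions[name](values)

    def run_bin(self, bin_name: str, state: dict) -> None:
        """Call every routine scheduled in a bin, in registration order."""
        for routine in self._bins[bin_name]:
            routine(state)


def serial_driver_thorn(flesh: Flesh) -> None:
    """Hypothetical serial driver; a parallel driver would register the same name."""
    flesh.register_reduction("maximum", max)


def wave_thorn(flesh: Flesh) -> None:
    """Hypothetical application thorn working on a small 1D field."""

    def initial(state: dict) -> None:
        state["u"] = [0.0, 1.0, 4.0, 1.0, 0.0]

    def evolve(state: dict) -> None:
        # Placeholder update (uniform damping); a real thorn would apply a stencil.
        state["u"] = [0.5 * x for x in state["u"]]

    def analysis(state: dict) -> None:
        # Goes through the flesh; does not know which driver provides "maximum".
        state["max_u"] = flesh.reduce("maximum", state["u"])

    flesh.schedule("initial", initial)
    flesh.schedule("evolve", evolve)
    flesh.schedule("analysis", analysis)


def run(steps: int) -> dict:
    """Assemble the flesh with both thorns and run the schedule."""
    flesh = Flesh()
    serial_driver_thorn(flesh)
    wave_thorn(flesh)
    state: dict = {}
    flesh.run_bin("initial", state)
    for _ in range(steps):
        flesh.run_bin("evolve", state)
    flesh.run_bin("analysis", state)
    return state


if __name__ == "__main__":
    print(run(2)["max_u"])
```

To switch to a parallel driver, one would swap `serial_driver_thorn` for another thorn that registers `"maximum"`. `wave_thorn` stays unchanged, which is the point of the abstraction.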

How to use Cactus Features

- The application scientist usually concentrates on the application...
  - Performance
  - Algorithms
  - Logically: operations on a grid (structured, or unstructured (coming...))
- ...then takes advantage of the parallel API features enabled by Cactus
  - I/O, data streaming, remote visualization/steering, AMR, MPI, checkpointing, Grid computing, etc.
  - Abstraction allows one to switch between different MPI or PVM layers, different I/O layers, etc., with no or minimal changes to the application!
- (Nearly) all architectures supported and autoconfigured
  - Common to develop on a laptop (no MPI required) and run on anything
  - Compaq / SGI Origin 2000 / T3E / Linux clusters + laptops / Hitachi / NEC / HP / Windows NT / SP2 / Sun
- Metacode concept
  - Very, very lightweight, not a huge framework (not Microsoft Office)
  - User specifies the desired code modules in configuration files
  - The desired code is generated: automatic routine calling sequences, syntax checking, etc.
  - You can actually read the code it creates...
- http://www.cactuscode.org

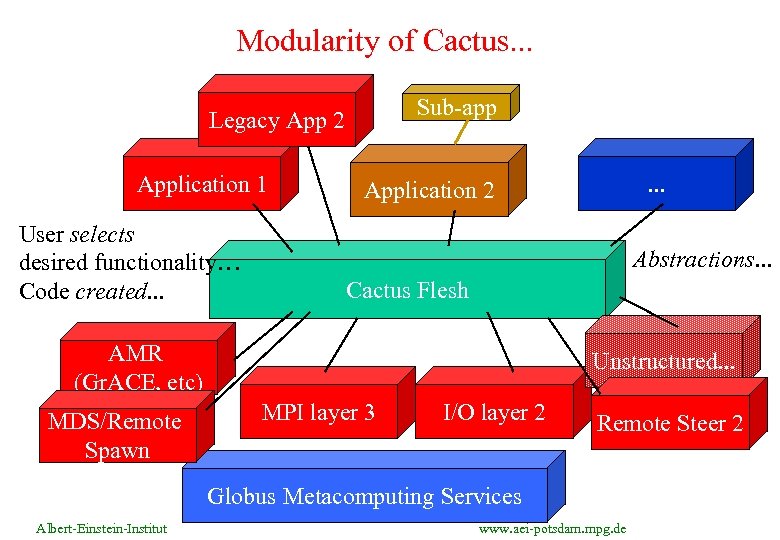
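The metacode idea (the user lists modules in a configuration file and gets a checked, automatically ordered calling sequence) can be sketched as follows. The one-line-per-thorn format `Thorn: dep1 dep2` and the thorn names are hypothetical. This is not Cactus’s real configuration syntax. Ordering uses Kahn’s topological sort, with ties broken alphabetically so the output is deterministic.

```python
"""Toy 'metacode' step: turn a thorn list into a routine calling sequence.

Hypothetical format: each line is `Thorn: dep1 dep2 ...`. This is not the
Cactus configuration syntax; it only illustrates generating a checked
calling sequence from a user's module selection.
"""


def parse_thorn_list(text: str) -> dict[str, set[str]]:
    """Parse the configuration, with basic syntax checking."""
    deps: dict[str, set[str]] = {}
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()   # drop comments and blank lines
        if not line:
            continue
        if ":" not in line:
            raise ValueError(f"line {lineno}: expected 'Thorn: deps', got {raw!r}")
        name, _, rest = line.partition(":")
        deps[name.strip()] = set(rest.split())
    return deps


def calling_sequence(deps: dict[str, set[str]]) -> list[str]:
    """Order thorns so each one runs after everything it depends on (Kahn's algorithm)."""
    missing = {d for ds in deps.values() for d in ds if d not in deps}
    if missing:
        raise ValueError(f"unknown thorns requested: {sorted(missing)}")
    remaining = {name: set(ds) for name, ds in deps.items()}
    order: list[str] = []
    while remaining:
        ready = sorted(n for n, ds in remaining.items() if not ds)
        if not ready:
            raise ValueError(f"dependency cycle among: {sorted(remaining)}")
        for name in ready:
            order.append(name)
            del remaining[name]
        for ds in remaining.values():
            ds.difference_update(ready)
    return order


CONFIG = """
# hypothetical thorn selection
WaveEvolve: Driver Grid
Driver:
Grid: Driver
IOHDF5: Driver
"""

if __name__ == "__main__":
    print(calling_sequence(parse_thorn_list(CONFIG)))
```

For `CONFIG` this yields `['Driver', 'Grid', 'IOHDF5', 'WaveEvolve']`. A misspelled or missing thorn is reported before anything runs, which is the kind of checking the slide alludes to.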

Modularity of Cactus...

Figure: the user selects the desired functionality and the code is created. Applications (Application 1, Application 2, legacy apps, sub-apps) use the abstractions of the Cactus Flesh. These connect them to interchangeable modules: AMR (GrACE, etc.), MDS/remote spawn, unstructured grids, alternative MPI layers, alternative I/O layers, and remote steering, all on Globus metacomputing services.

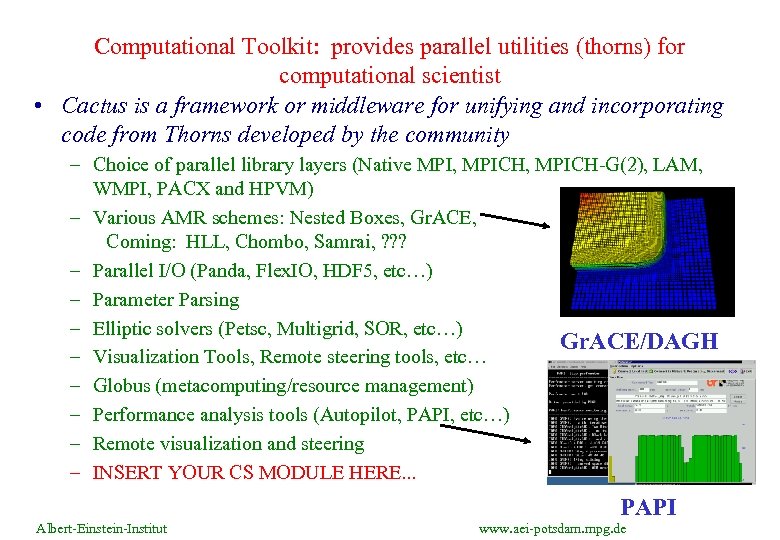

Computational Toolkit: provides parallel utilities (thorns) for the computational scientist

- Cactus is a framework or middleware for unifying and incorporating code from thorns developed by the community
  - Choice of parallel library layers (native MPI, MPICH-G(2), LAM, WMPI, PACX and HPVM)
  - Various AMR schemes: nested boxes, GrACE/DAGH; coming: HLL, Chombo, SAMRAI, ???
  - Parallel I/O (Panda, FlexIO, HDF5, etc.)
  - Parameter parsing
  - Elliptic solvers (PETSc, multigrid, SOR, etc.)
  - Visualization tools, remote steering tools, etc.
  - Globus (metacomputing/resource management)
  - Performance analysis tools (Autopilot, PAPI, etc.)
  - Remote visualization and steering
  - INSERT YOUR CS MODULE HERE...

High performance: full 3D Einstein equations solved on the NCSA NT Supercluster, Origin 2000, T3E

- Excellent scaling on many architectures
  - Origin: up to 256 processors
  - T3E: up to 1024 processors
  - NCSA NT cluster: up to 128 processors
- Achieved 142 Gflop/s on a 1024-node T3E-1200 (benchmarked for the NASA NS Grand Challenge)
- Scaling to thousands of processors is possible and necessary...
- But, of course, we want much more... Grid computing

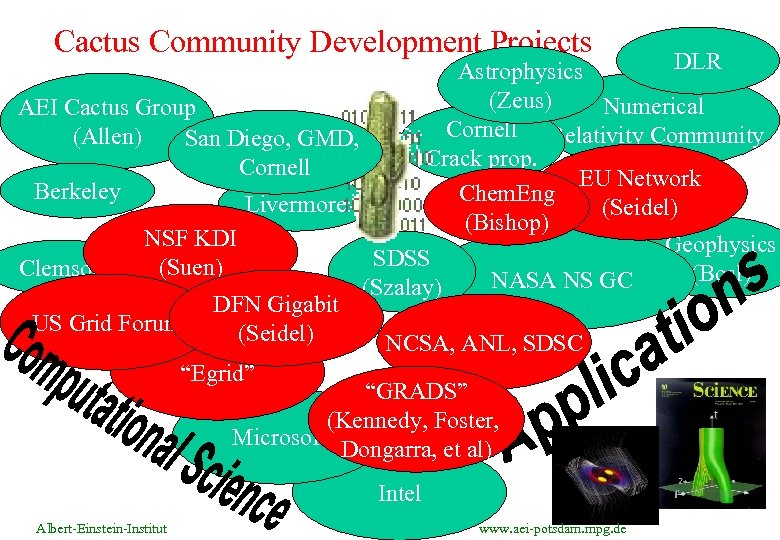
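The benchmark figure can be turned into a sustained per-processor rate, and “excellent scaling” is usually quantified as parallel efficiency. The sketch below uses the standard weak- and strong-scaling definitions. The slide does not give timings, so no efficiency values are computed here, and the function names are illustrative.

```python
def per_processor_gflops(total_gflops: float, processors: int) -> float:
    """Sustained rate per processor from an aggregate benchmark figure."""
    return total_gflops / processors


def weak_scaling_efficiency(t_base: float, t_n: float) -> float:
    """Fixed work per processor: ideal runtime stays constant, so E = T_base / T_N."""
    return t_base / t_n


def strong_scaling_efficiency(t_1: float, t_n: float, n: int) -> float:
    """Fixed total problem: ideal runtime drops as 1/N, so E = T_1 / (N * T_N)."""
    return t_1 / (n * t_n)


if __name__ == "__main__":
    rate = per_processor_gflops(142.0, 1024)
    print(f"{rate * 1000:.0f} Mflop/s per processor")
```

142 Gflop/s over 1024 processors is about 139 Mflop/s sustained per processor. An efficiency close to 1 means adding processors costs almost nothing in overhead.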

Cactus Community Development Projects

Figure: map of projects and partners (labels as recovered): DLR; Astrophysics (Zeus); Numerical Relativity Community; AEI Cactus Group (Allen); Cornell crack propagation; San Diego, GMD, Cornell; EU Network (Seidel); Berkeley; Chemical Engineering (Bishop); Livermore; NSF KDI (Suen); Geophysics (Bosl); Clemson; SDSS (Szalay); NASA NS GC; DFN Gigabit; US Grid Forum (Seidel); NCSA, ANL, SDSC; “Egrid”; “GRADS” (Kennedy, Foster, Dongarra, et al.); Microsoft; Intel.

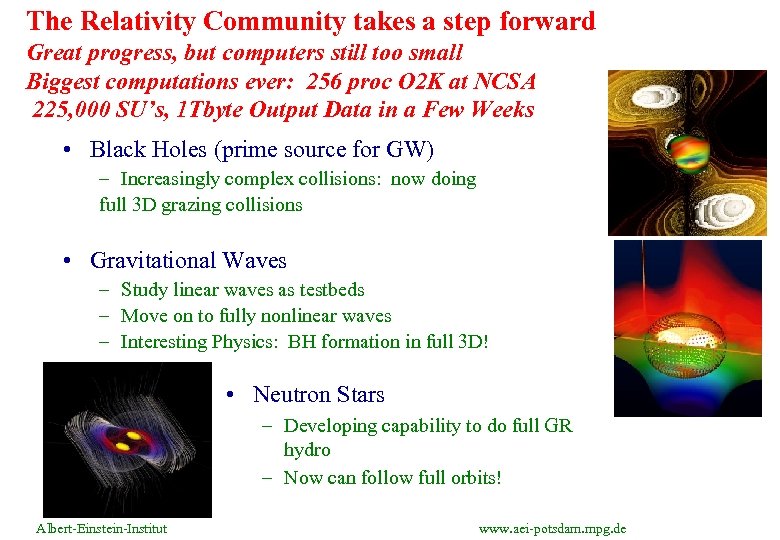

The Relativity Community Takes a Step Forward

Great progress, but computers are still too small. Biggest computations ever: 256-processor O2K at NCSA, 225,000 SUs, 1 TByte of output data in a few weeks.

- Black holes (prime source for GW)
  - Increasingly complex collisions: now doing full 3D grazing collisions
- Gravitational waves
  - Study linear waves as testbeds
  - Move on to fully nonlinear waves
  - Interesting physics: BH formation in full 3D!
- Neutron stars
  - Developing the capability to do full GR hydro
  - Now can follow full orbits!
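The allocation figures can be checked for consistency with “a few weeks”. The sketch below assumes 1 SU = 1 processor-hour and that all 256 processors ran continuously. Both are assumptions: SU definitions vary between centers, and the slide does not say how the runs were scheduled. A TByte is taken as 1000 GB.

```python
def wallclock_hours(service_units: float, processors: int,
                    su_per_processor_hour: float = 1.0) -> float:
    """Wall-clock hours if `processors` CPUs run continuously.

    Default assumes 1 SU = 1 processor-hour (an assumption; SU
    definitions differ between centers and machines).
    """
    return service_units / (processors * su_per_processor_hour)


def output_rate_gb_per_hour(output_gb: float, hours: float) -> float:
    """Average output rate over the whole campaign."""
    return output_gb / hours


if __name__ == "__main__":
    hours = wallclock_hours(225_000, 256)
    print(f"{hours:.0f} h = {hours / 24:.1f} days = {hours / (24 * 7):.1f} weeks")
    print(f"{output_rate_gb_per_hour(1000, hours):.1f} GB/h of output")
```

Under these assumptions the campaign is about 879 hours, roughly five weeks of the full machine. That is consistent with “a few weeks”, at an average of about 1.1 GB of output per hour.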

Evolving Pure Gravitational Waves

- Einstein’s equations are nonlinear, so low-amplitude waves just propagate away, but large-amplitude waves may...
  - Collapse on themselves under their own self-gravity and actually form black holes
- Use numerical relativity to probe GR in the highly nonlinear regime
  - Does a BH form? Critical phenomena in 3D? Naked singularities?
  - Little is known about generic 3D behavior
- Take a “lump of waves” and evolve it
  - Large amplitude: a BH forms!
  - Below the critical value: the waves disperse and can be evolved “forever” as the system returns to flat space
- We are seeing hints of critical phenomena, known from nonlinear dynamics
- But we need much more power to explore the details and discover new physics...
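A common way to locate the critical amplitude separating the two outcomes is bisection in the initial amplitude: evolve, check whether a black hole formed, and halve the bracket. The sketch below shows only the search logic. `toy_evolution` and its threshold of 0.37 are hypothetical stand-ins; in practice each call is a full 3D evolution, which is why the search is expensive.

```python
from typing import Callable


def find_critical_amplitude(forms_black_hole: Callable[[float], bool],
                            a_sub: float, a_super: float,
                            tol: float = 1e-6) -> tuple[float, float]:
    """Bisect between a subcritical and a supercritical initial amplitude.

    Returns a bracket (a_sub, a_super) narrower than `tol` that contains
    the critical amplitude. Each predicate call stands for one evolution.
    """
    if forms_black_hole(a_sub) or not forms_black_hole(a_super):
        raise ValueError("need a_sub subcritical and a_super supercritical")
    while a_super - a_sub > tol:
        mid = 0.5 * (a_sub + a_super)
        if forms_black_hole(mid):
            a_super = mid   # collapse: critical value lies below mid
        else:
            a_sub = mid     # dispersal: critical value lies above mid
    return a_sub, a_super


def toy_evolution(amplitude: float) -> bool:
    """Hypothetical stand-in for a full evolution: collapse above 0.37."""
    return amplitude > 0.37


if __name__ == "__main__":
    lo, hi = find_critical_amplitude(toy_evolution, 0.0, 1.0)
    print(f"critical amplitude in [{lo:.6f}, {hi:.6f}]")
```

Each halving of the bracket costs one more simulation, so about 20 evolutions are needed to reach a relative precision of 10^-6. Near the threshold, those evolutions also need high resolution.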

Comparison: sub- vs. supercritical solutions

Figure: supercritical (a BH forms!) vs. subcritical (no BH forms). Newman-Penrose Ψ4 (showing gravitational waves) with the lapse α underneath.

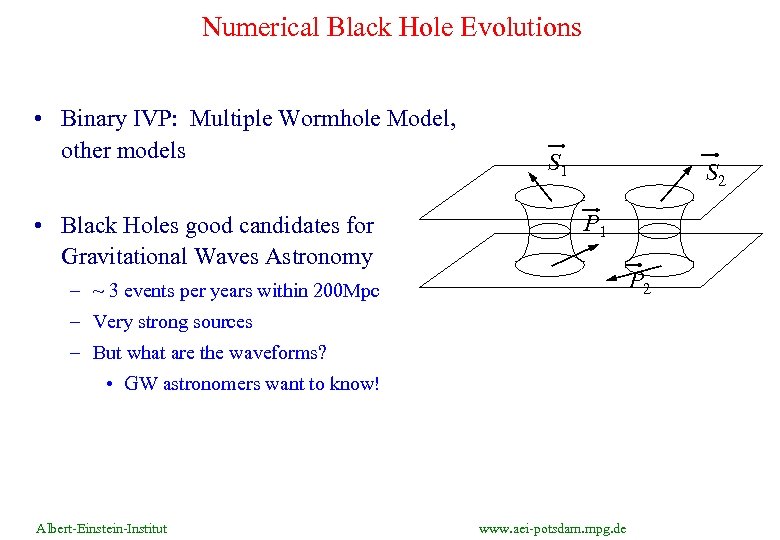

Numerical Black Hole Evolutions

- Binary initial value problem (IVP): multiple wormhole model, other models
- Black holes are good candidates for gravitational wave astronomy
  - ~3 events per year within 200 Mpc
  - Very strong sources
  - But what are the waveforms?
- GW astronomers want to know!

Figure: two black holes with spins S1, S2 and momenta P1, P2.

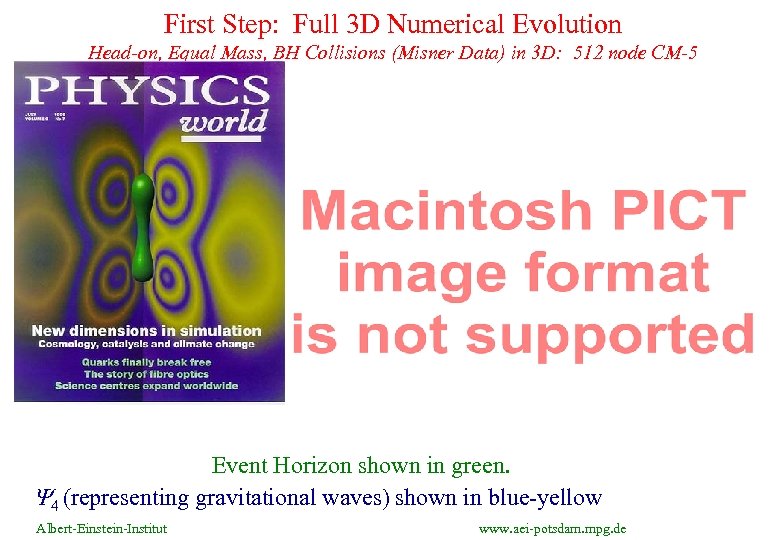

First Step: Full 3D Numerical Evolution

Head-on, equal-mass BH collisions (Misner data) in 3D on a 512-node CM-5. The event horizon is shown in green; Ψ4 (representing gravitational waves) is shown in blue-yellow.

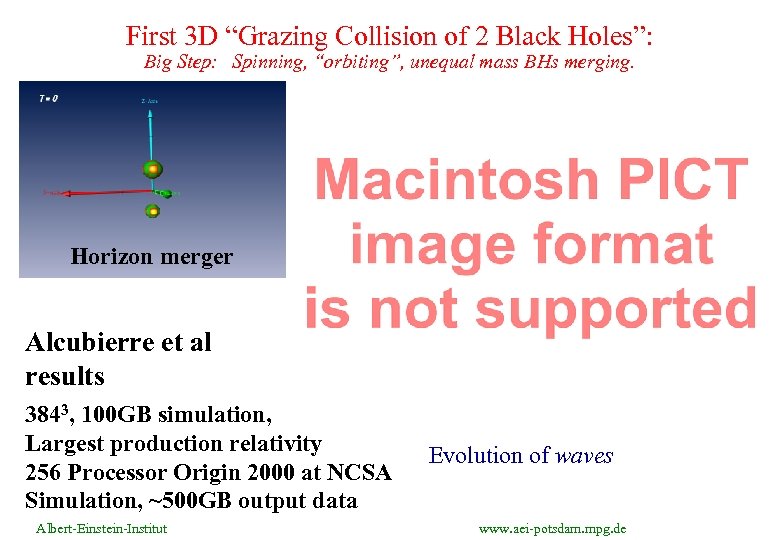

First 3D “Grazing Collision” of 2 Black Holes

Big step: spinning, “orbiting”, unequal-mass BHs merging. Panels: horizon merger; evolution of waves. Alcubierre et al. results: 384^3 grid, 100 GB simulation, the largest production relativity simulation, run on a 256-processor Origin 2000 at NCSA; ~500 GB of output data.

Future view: much of it is here already...

- Scale of computations much larger
  - Complexity approaching that of Nature
  - Simulations of the Universe and its constituents
    - Black holes, neutron stars, supernovae
    - Airflow around advanced planes, spacecraft
    - Human genome, human behavior
- Teams of computational scientists working together
  - Must support efficient, high-level problem description
  - Must support collaborative computational science
  - Must support all different languages
- Ubiquitous Grid computing
  - Very dynamic simulations, deciding their own future
  - Apps find the resources themselves: distributed, spawned, etc.
  - Must be tolerant of dynamic infrastructure (variable networks, processor availability, etc.)
  - Monitored, visualized, and controlled from anywhere, with colleagues anywhere else...

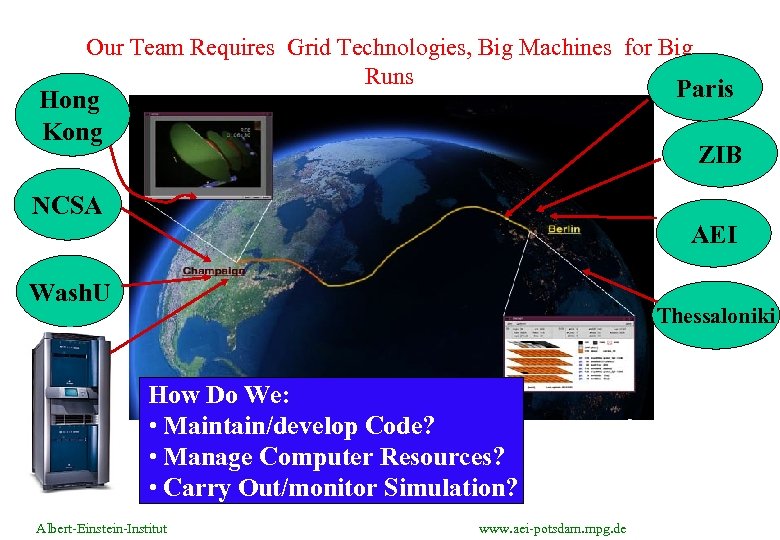

Our Team Requires Grid Technologies, Big Machines for Big Runs

Figure: map of collaborating sites: Paris, Hong Kong, ZIB, NCSA, AEI, Wash. U, Thessaloniki.

How do we:
- Maintain/develop code?
- Manage computer resources?
- Carry out/monitor simulations?

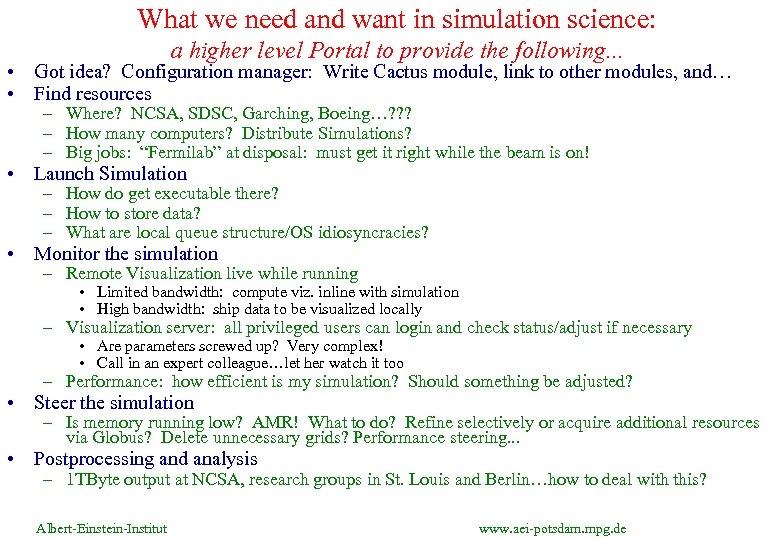

What we need and want in simulation science: a higher-level portal to provide the following...

- Got an idea? Configuration manager: write a Cactus module, link to other modules, and...
- Find resources
  - Where? NCSA, SDSC, Garching, Boeing...???
  - How many computers? Distribute simulations?
  - Big jobs: a “Fermilab” at our disposal: we must get it right while the beam is on!
- Launch the simulation
  - How do we get the executable there?
  - How do we store the data?
  - What are the local queue structures/OS idiosyncrasies?
- Monitor the simulation
  - Remote visualization live while running
    - Limited bandwidth: compute viz inline with the simulation
    - High bandwidth: ship data to be visualized locally
  - Visualization server: all privileged users can log in and check status/adjust if necessary
    - Are parameters screwed up? Very complex!
    - Call in an expert colleague... let her watch it too
  - Performance: how efficient is my simulation? Should something be adjusted?
- Steer the simulation
  - Is memory running low? AMR! What to do? Refine selectively or acquire additional resources via Globus? Delete unnecessary grids? Performance steering...
- Postprocessing and analysis
  - 1 TByte of output at NCSA, research groups in St. Louis and Berlin... how to deal with this?

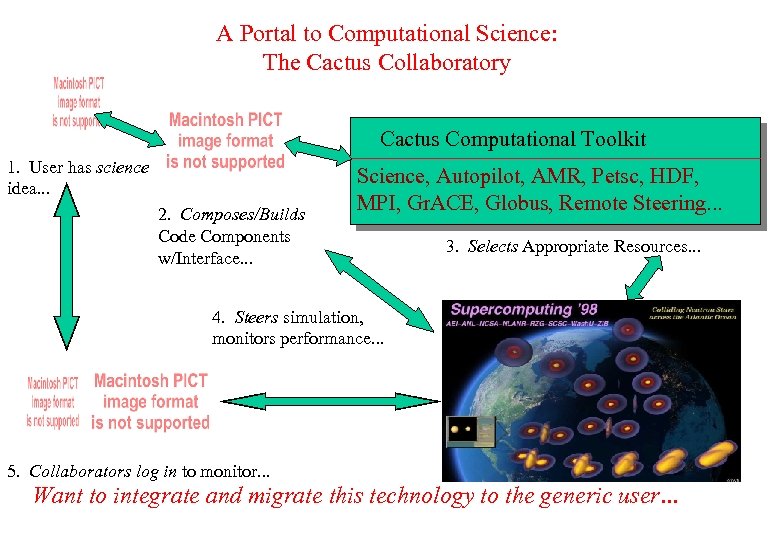

A Portal to Computational Science: The Cactus Collaboratory

1. User has a science idea...
2. Composes/builds code components with an interface (Cactus Computational Toolkit: science, Autopilot, AMR, PETSc, HDF, MPI, GrACE, Globus, remote steering...)
3. Selects appropriate resources...
4. Steers the simulation, monitors performance...
5. Collaborators log in to monitor...

We want to integrate and migrate this technology to the generic user...

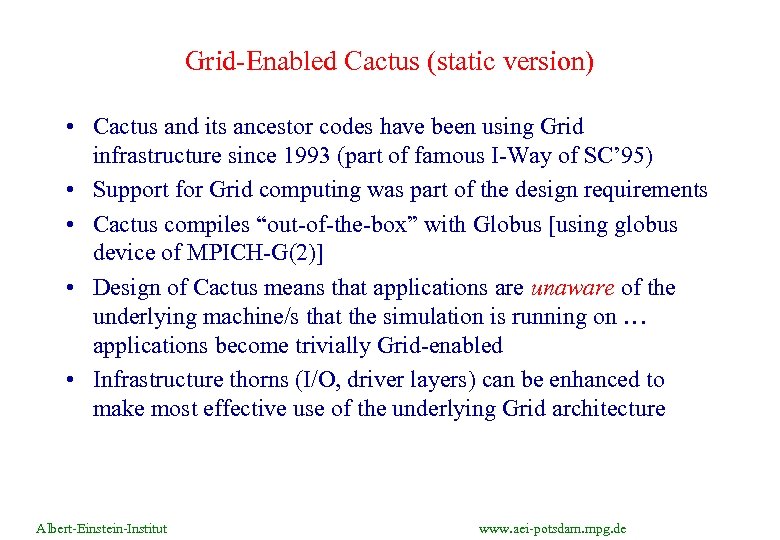

Grid-Enabled Cactus (static version)

- Cactus and its ancestor codes have been using Grid infrastructure since 1993 (part of the famous I-Way of SC’95)
- Support for Grid computing was part of the design requirements
- Cactus compiles “out-of-the-box” with Globus [using the globus device of MPICH-G(2)]
- Because of the design of Cactus, applications are unaware of the underlying machine(s) the simulation is running on... applications become trivially Grid-enabled
- Infrastructure thorns (I/O, driver layers) can be enhanced to make the most effective use of the underlying Grid architecture

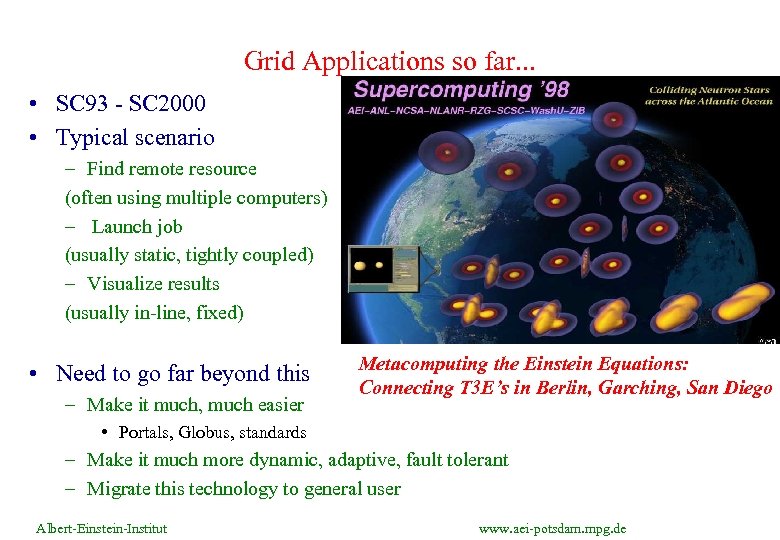

Grid Applications so far...

- SC93 to SC2000
- Typical scenario
  - Find remote resource (often using multiple computers)
  - Launch job (usually static, tightly coupled)
  - Visualize results (usually inline, fixed)
- Need to go far beyond this
  - Make it much, much easier: portals, Globus, standards
  - Make it much more dynamic, adaptive, fault tolerant
  - Migrate this technology to the general user

Figure: Metacomputing the Einstein equations: connecting T3Es in Berlin, Garching, San Diego.

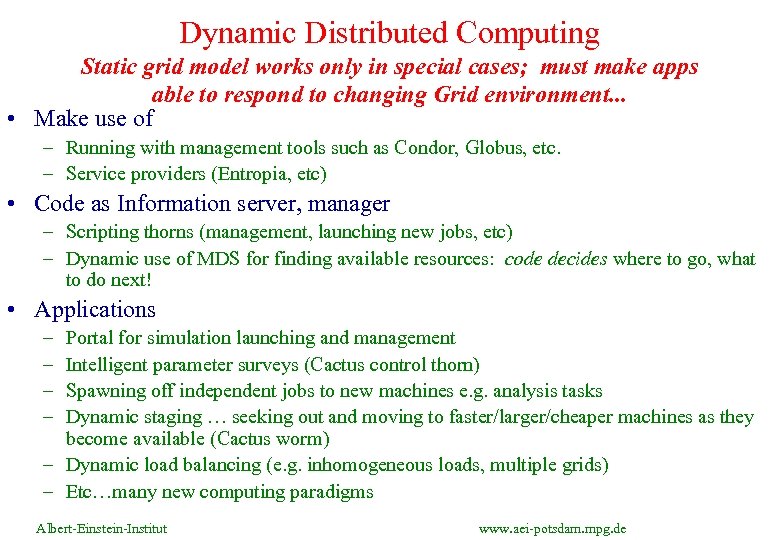

Dynamic Distributed Computing

The static grid model works only in special cases; we must make apps able to respond to a changing Grid environment...

- Make use of
  - Running with management tools such as Condor, Globus, etc.
  - Service providers (Entropia, etc.)
- Code as information server, manager
  - Scripting thorns (management, launching new jobs, etc.)
  - Dynamic use of MDS for finding available resources: the code decides where to go and what to do next!
- Applications
  - Portal for simulation launching and management
  - Intelligent parameter surveys (Cactus control thorn)
  - Spawning off independent jobs, e.g. analysis tasks, to new machines
  - Dynamic staging: seeking out and moving to faster/larger/cheaper machines as they become available (Cactus worm)
  - Dynamic load balancing (e.g. inhomogeneous loads, multiple grids)
  - Etc.: many new computing paradigms

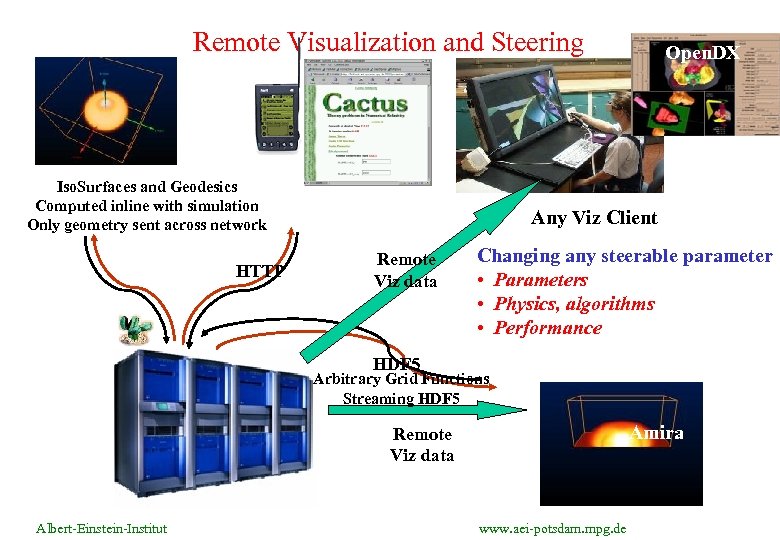
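The “code decides where to go next” idea behind dynamic staging (the Cactus worm) can be sketched as a selection rule applied to a list of available resources. In the real system that list comes from an MDS query. Here the `Resource` record, its fields, the speed-per-cost score and the `min_gain` margin are all hypothetical. This is not the MDS or Globus API, and not necessarily the worm’s actual policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """Hypothetical summary of a site, as a resource query might report it."""
    name: str
    free_cpus: int
    gflops_per_cpu: float
    memory_gb: float
    cost_per_cpu_hour: float


def score(r: Resource) -> float:
    """Delivered speed per unit cost (higher is better)."""
    return r.gflops_per_cpu / r.cost_per_cpu_hour


def pick_next_site(resources: list[Resource], current: Resource,
                   cpus_needed: int, memory_needed_gb: float,
                   min_gain: float = 1.2) -> Resource:
    """Return the site to run on next; stay put unless a site is clearly better."""
    fits = [r for r in resources
            if r.free_cpus >= cpus_needed and r.memory_gb >= memory_needed_gb]
    if not fits:
        return current
    best = max(fits, key=score)
    # Migration (checkpoint, transfer, restart) is expensive, so require a margin.
    return best if score(best) >= min_gain * score(current) else current


if __name__ == "__main__":
    here = Resource("site-a", 64, 1.0, 64.0, 1.0)
    others = [Resource("site-b", 256, 2.0, 256.0, 1.0),
              Resource("site-c", 16, 5.0, 16.0, 1.0)]
    print(pick_next_site(others, here, cpus_needed=64, memory_needed_gb=32.0).name)
```

The `min_gain` margin is a design choice to avoid thrashing. Each move costs a checkpoint, a data transfer and a restart, so a marginally better site is not worth leaving for.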

Remote Visualization and Steering

Figure:
- IsoSurfaces and geodesics are computed inline with the simulation; only geometry is sent across the network (HTTP) to viz clients (OpenDX or any other viz client)
- Arbitrary grid functions are streamed as HDF5 to viz clients such as Amira
- Steering: change any steerable parameter (parameters, physics, algorithms, performance)

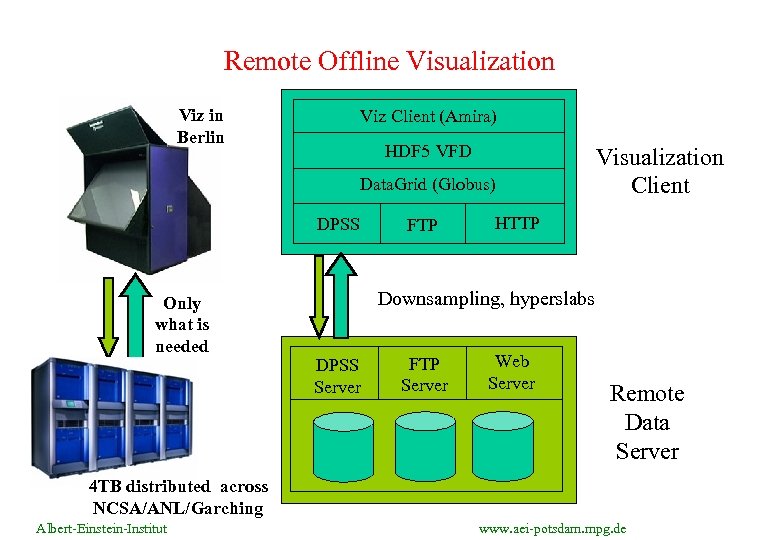

Remote Offline Visualization

Figure: a viz client (Amira) in Berlin reads from a remote data server through the HDF5 VFD (virtual file driver) layer, using DataGrid (Globus), DPSS, FTP, or HTTP. Only what is needed is transferred (downsampling, hyperslabs). The remote data server (DPSS server, FTP server, web server) holds 4 TB distributed across NCSA/ANL/Garching.

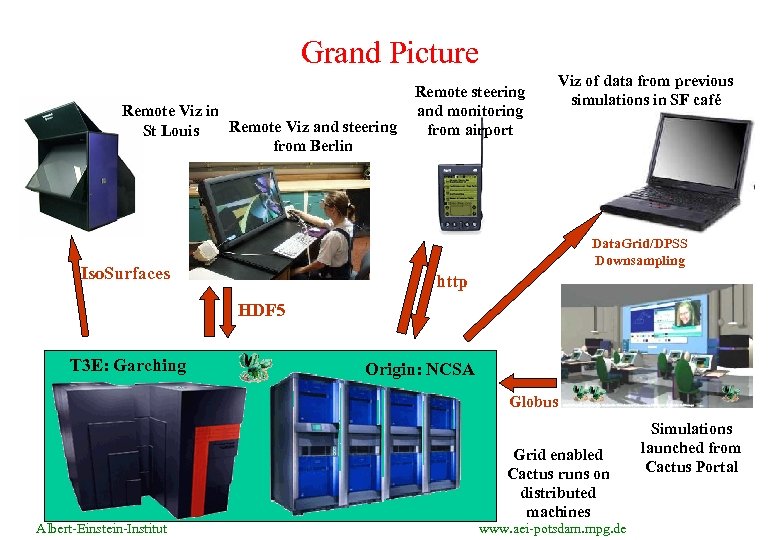
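The “only what is needed” idea means selecting data on the server side, by downsampling or hyperslab extraction, so the client receives a fraction of the dataset. The pure-Python sketch below works on a 3D array stored flat in row-major order. The function names are hypothetical, and this is not the HDF5 API or its VFD. The `start`/`stride`/`count` parameters only mirror the idea of HDF5 hyperslab selections.

```python
Shape = tuple[int, int, int]


def hyperslab(data: list[float], shape: Shape, start: Shape, count: Shape,
              stride: Shape = (1, 1, 1)) -> list[float]:
    """Extract a strided sub-block from a 3D array stored flat in row-major order."""
    nx, ny, nz = shape
    out: list[float] = []
    for i in range(count[0]):
        x = start[0] + i * stride[0]
        for j in range(count[1]):
            y = start[1] + j * stride[1]
            for k in range(count[2]):
                z = start[2] + k * stride[2]
                if not (0 <= x < nx and 0 <= y < ny and 0 <= z < nz):
                    raise IndexError(f"selection point {(x, y, z)} outside {shape}")
                out.append(data[(x * ny + y) * nz + z])
    return out


def downsample(data: list[float], shape: Shape, factor: int) -> list[float]:
    """Keep every `factor`-th point in each direction (a strided hyperslab)."""
    nx, ny, nz = shape
    count = ((nx + factor - 1) // factor,
             (ny + factor - 1) // factor,
             (nz + factor - 1) // factor)
    return hyperslab(data, shape, (0, 0, 0), count, (factor, factor, factor))


if __name__ == "__main__":
    shape = (4, 4, 4)
    field = [float(v) for v in range(64)]
    coarse = downsample(field, shape, 2)                              # 1/8 of the data
    plane = hyperslab(field, shape, start=(1, 0, 0), count=(1, 4, 4))  # one 2D slice
    print(len(coarse), len(plane))
```

Downsampling by 2 in 3D sends one eighth of the data, and a single 2D slice of an n^3 grid sends 1/n of it. This is why selection near the data matters when datasets of several terabytes are spread across sites.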

Grand Picture

Figure: Grid-enabled Cactus runs on distributed machines (T3E in Garching, Origin at NCSA, connected by Globus); simulations are launched from the Cactus Portal. Remote viz in St Louis; remote viz and steering from Berlin; remote steering and monitoring from an airport; viz of data from previous simulations in an SF café. Data paths: DataGrid/DPSS, downsampling, IsoSurfaces, HTTP, HDF5.

Egrid and Grid Forum Activities

- Grid Forum: http://www.gridforum.org
  - Developed in the US over the last 18 months
  - ~200 members (?)
  - Meetings every 3-4 months
  - Many working groups discussing grid software, standards, grid techniques, scheduling, applications, etc.
- Egrid: http://www.egrid.org
  - European initiative, now 6 months old
  - About 2 dozen sites in Europe
  - Similar goals, but with a European identity
- Next meeting: Oct 15-17 in Boston
- We hope to enlist many more application groups to drive Grid development
  - Cactus Grid Application Development Toolkit

Present Testbeds (a sampling...)

- Cactus Virtual Machine Room
  - Small version of the Alliance VMR, with European sites (NCSA, ANL, UNM, AEI, ZIB)
  - Portal gives users access to all machines, queues, etc., without knowing local passwords, batch systems, file systems, OS, etc.
  - Developed in collaboration with the NSF KDI project, AEI, DFN-Verein
  - Built on Globus services
  - Will copy and develop an Egrid version, hopefully tomorrow...
- Egrid demos
  - Developing a Cactus Worm demo for SC2000!
  - A Cactus simulation runs, queries MDS, finds the next resource, migrates itself to the next site, runs, and continues around Europe, with continuous remote viz and control...
- Big distributed simulation
  - Old static model: harness as many supercomputers as possible
  - Go for a Tflop, even with a tightly coupled simulation distributed across continents
  - Developing techniques to make simulations bandwidth/latency tolerant...

Further details...

- Cactus
  - http://www.cactuscode.org
  - http://www.computer.org/computer/articles/einstein_1299_1.htm
- Movies, research overview (needs major updating)
  - http://jean-luc.ncsa.uiuc.edu
- Simulation Collaboratory/Portal work
  - http://wugrav.wustl.edu/ASC/mainFrame.html
- Remote steering, high-speed networking
  - http://www.zib.de/Visual/projects/TIKSL/
  - http://jean-luc.ncsa.uiuc.edu/Projects/Gigabit/
- EU Astrophysics Network
  - http://www.aei-potsdam.mpg.de/research/astro/eu_network/index.html
