75f7af624e6a1cbfe7b586efb868fb48.ppt

- Number of slides: 39

Using Reinforcement Learning to Build a Better Model of Dialogue State

Joel Tetreault & Diane Litman, University of Pittsburgh LRDC, April 7, 2006

Problem

- Problems with designing spoken dialogue systems:
  - What features to use?
  - How to handle noisy data or miscommunications?
  - Hand-tailoring policies for complex dialogues?
- Previous work used machine learning to improve the dialogue manager of spoken dialogue systems [Singh et al., '02; Walker, '00; Henderson et al., '05]
- However, there has been very little empirical work on testing the utility of adding specialized features to construct a better dialogue state

Goal

- Lots of features can be used to describe the user state; which ones do you use?
- Goal: show that adding more complex features to a state is a worthwhile pursuit since it alters what actions a system should take
- 5 features: certainty, student dialogue move, concept repetition, frustration, student performance
- All are important to tutoring systems, but are also important to dialogue systems in general

Outline

- Markov Decision Processes (MDP)
- MDP Instantiation
- Experimental Method
- Results

Markov Decision Processes

- What is the best action for an agent to take at any state to maximize reward at the end?
- MDP Input:
  - States
  - Actions
  - Reward Function

MDP Output

- Use policy iteration to propagate final reward to the states to determine:
  - V-value: the worth of each state
  - Policy: optimal action to take for each state
- Values and policies are based on the reward function but also on the probabilities of getting from one state to the next given a certain action
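
As a rough illustration of how policy iteration produces V-values and a policy, here is a minimal pure-Python sketch. The two-state toy problem, the discount factor `gamma=0.9`, and all names (`policy_iteration`, `P`, `R`) are assumptions made for this example; it is not the toolkit used in the talk.

```python
def policy_iteration(states, actions, P, R, gamma=0.9, tol=1e-9):
    # P[(s, a)] -> {next_state: probability}
    # R[(s, a, next_state)] -> immediate reward (0.0 if missing)
    # States with no (s, a) entry in P are treated as terminal (value 0).
    def q(s, a, V):
        return sum(p * (R.get((s, a, s2), 0.0) + gamma * V[s2])
                   for s2, p in P[(s, a)].items())

    live = [s for s in states if any((s, a) in P for a in actions)]
    policy = {s: next(a for a in actions if (s, a) in P) for s in live}
    V = {s: 0.0 for s in states}
    while True:
        # Policy evaluation: sweep until the values of the fixed policy settle.
        while True:
            delta = 0.0
            for s in live:
                v = q(s, policy[s], V)
                delta = max(delta, abs(v - V[s]))
                V[s] = v
            if delta < tol:
                break
        # Policy improvement: switch to a strictly better action where one exists.
        stable = True
        for s in live:
            best = max((a for a in actions if (s, a) in P),
                       key=lambda a: q(s, a, V))
            if q(s, best, V) > q(s, policy[s], V) + 1e-12:
                policy[s] = best
                stable = False
        if stable:
            return V, policy


# Hypothetical toy problem: every move costs -1, reaching END pays +1.
P = {
    ("A", "left"): {"A": 1.0},
    ("A", "right"): {"B": 1.0},
    ("B", "left"): {"A": 1.0},
    ("B", "right"): {"END": 1.0},
}
R = {
    ("A", "left", "A"): -1.0,
    ("A", "right", "B"): -1.0,
    ("B", "left", "A"): -1.0,
    ("B", "right", "END"): 1.0,
}
V, policy = policy_iteration(["A", "B", "END"], ["left", "right"], P, R)
print(policy, V)
```

The final reward is the only positive signal, so the values of the earlier states come entirely from how likely each action is to lead toward it.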

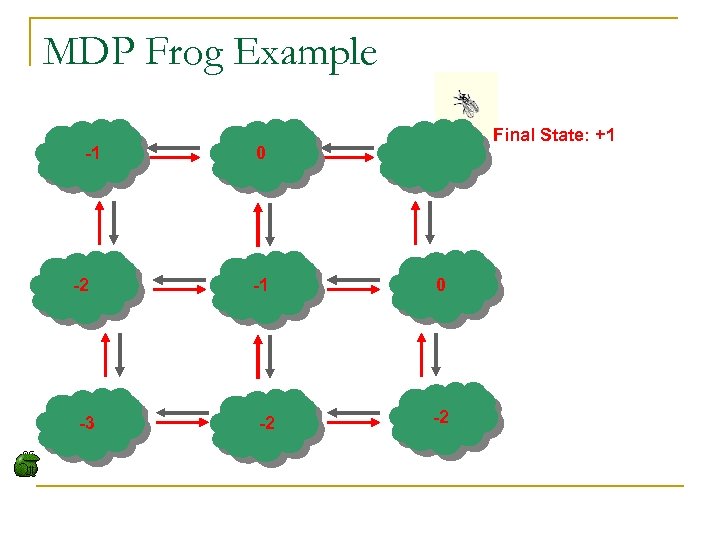

What’s the best path to the fly?

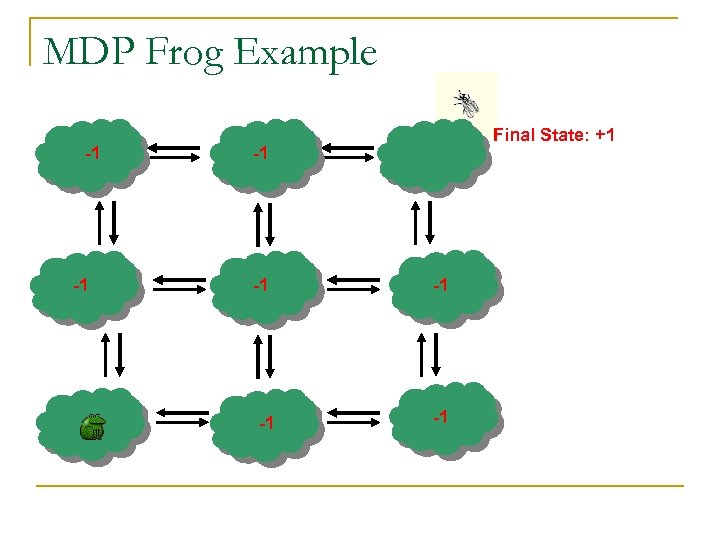

MDP Frog Example (grid figure): non-final cells are labeled -1; Final State: +1

MDP Frog Example (grid figure): cells are labeled -1, -2, -3, 0, -1, -2, 0, -2; Final State: +1

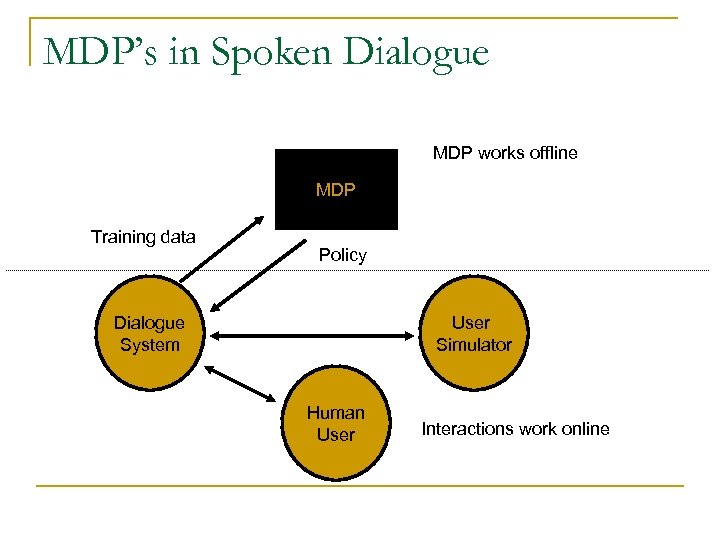

MDPs in Spoken Dialogue (diagram): Training data, MDP, Policy, Dialogue System, User Simulator, Human User. The MDP works offline; interactions work online.

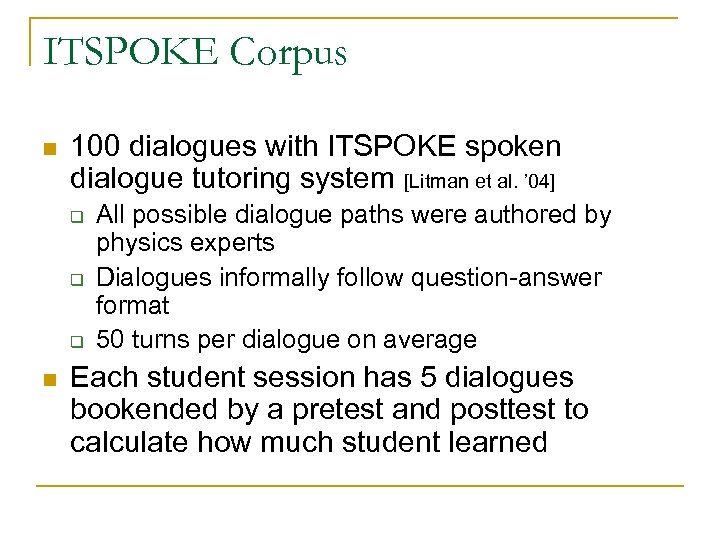

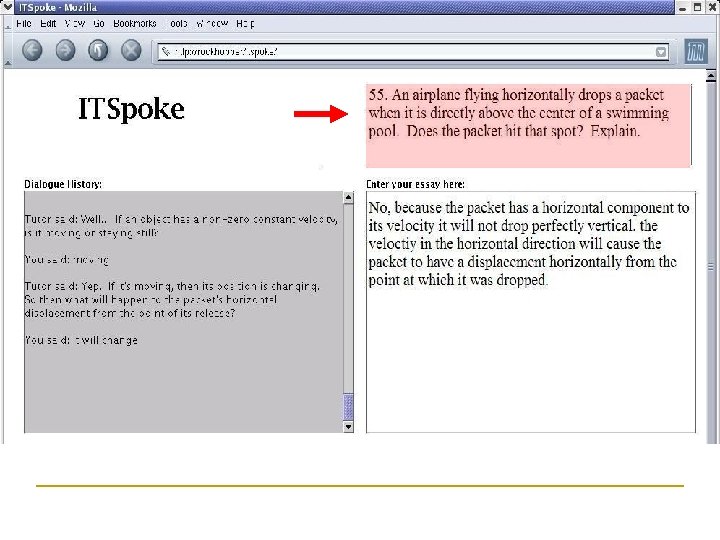

ITSPOKE Corpus

- 100 dialogues with the ITSPOKE spoken dialogue tutoring system [Litman et al. '04]
  - All possible dialogue paths were authored by physics experts
  - Dialogues informally follow question-answer format
  - 50 turns per dialogue on average
- Each student session has 5 dialogues bookended by a pretest and posttest to calculate how much the student learned

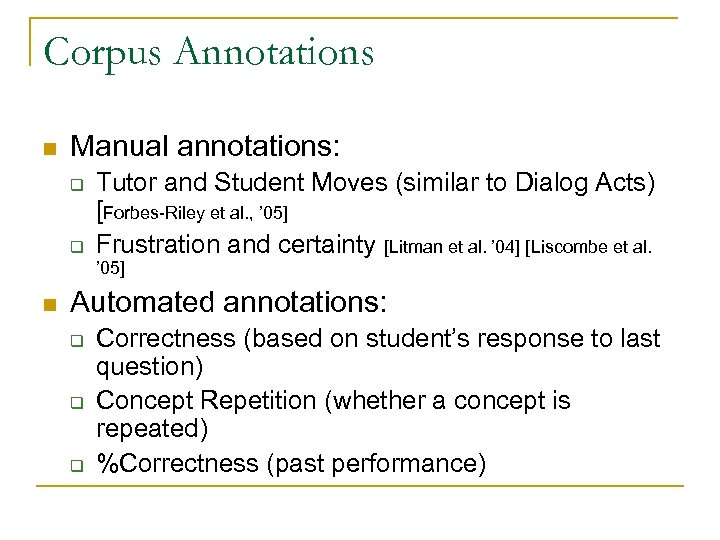

Corpus Annotations

- Manual annotations:
  - Tutor and Student Moves (similar to Dialog Acts) [Forbes-Riley et al., '05]
  - Frustration and certainty [Litman et al. '04] [Liscombe et al. '05]
- Automated annotations:
  - Correctness (based on student's response to last question)
  - Concept Repetition (whether a concept is repeated)
  - %Correctness (past performance)

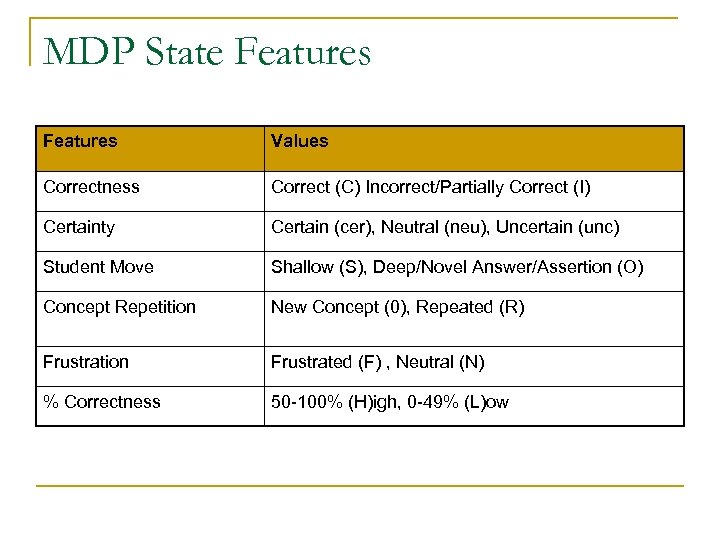

MDP State

| Features | Values |
| --- | --- |
| Correctness | Correct (C), Incorrect/Partially Correct (I) |
| Certainty | Certain (cer), Neutral (neu), Uncertain (unc) |
| Student Move | Shallow (S), Deep/Novel Answer/Assertion (O) |
| Concept Repetition | New Concept (0), Repeated (R) |
| Frustration | Frustrated (F), Neutral (N) |
| % Correctness | 50-100% (H)igh, 0-49% (L)ow |

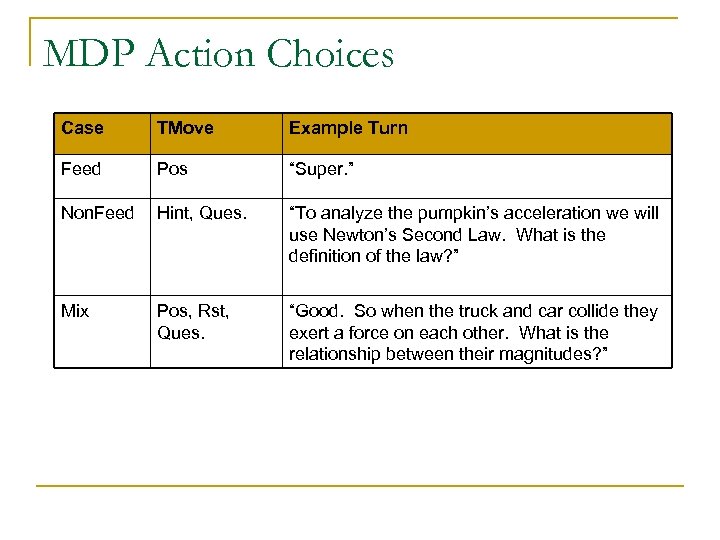

MDP Action Choices

| Case | TMove | Example Turn |
| --- | --- | --- |
| Feed | Pos | "Super." |
| NonFeed | Hint, Ques. | "To analyze the pumpkin's acceleration we will use Newton's Second Law. What is the definition of the law?" |
| Mix | Pos, Rst, Ques. | "Good. So when the truck and car collide they exert a force on each other. What is the relationship between their magnitudes?" |

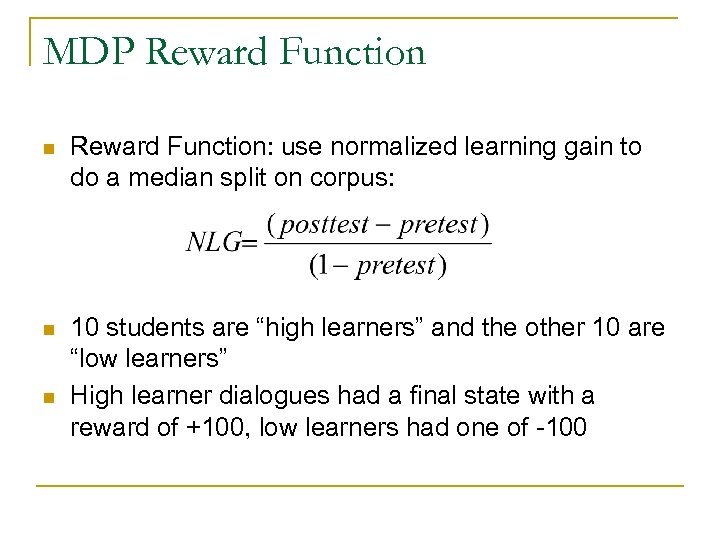

MDP Reward Function

- Reward Function: use normalized learning gain to do a median split on the corpus:
  - 10 students are "high learners" and the other 10 are "low learners"
- High learner dialogues had a final state with a reward of +100, low learners had one of -100
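
The median split can be sketched as follows. This assumes the common definition of normalized learning gain, (posttest - pretest) / (1 - pretest) with scores scaled to [0, 1]; the slides do not spell out the formula, and the student IDs, scores, and tie-handling below are hypothetical.

```python
from statistics import median


def normalized_learning_gain(pre, post):
    # Assumed definition; requires pre < 1.0 and scores in [0, 1].
    return (post - pre) / (1.0 - pre)


def final_rewards(scores, high=100, low=-100):
    # scores: {student_id: (pretest, posttest)}
    gains = {sid: normalized_learning_gain(pre, post)
             for sid, (pre, post) in scores.items()}
    cut = median(gains.values())
    # Assumption: students strictly above the median count as high learners.
    return {sid: (high if g > cut else low) for sid, g in gains.items()}


# Hypothetical pretest/posttest scores for four students.
scores = {
    "s1": (0.4, 0.7),
    "s2": (0.5, 0.6),
    "s3": (0.2, 0.8),
    "s4": (0.6, 0.7),
}
rewards = final_rewards(scores)
print(rewards)
```

Every dialogue from a student would then end in a final state carrying that student's reward.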

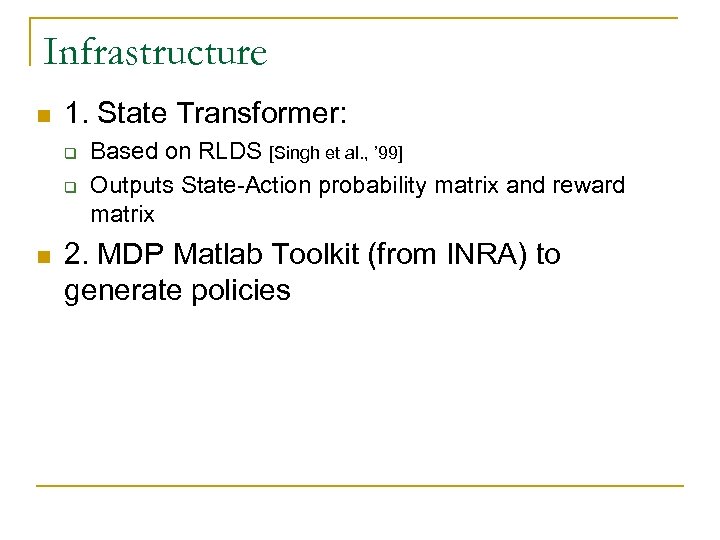

Infrastructure

1. State Transformer:
   - Based on RLDS [Singh et al., '99]
   - Outputs State-Action probability matrix and reward matrix
2. MDP Matlab Toolkit (from INRA) to generate policies
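
One way to picture what a state transformer outputs is a count-based estimate of transition probabilities from annotated dialogues. The sketch below is an illustration under assumed data structures (a dialogue as a list of `(state, action)` turns plus a final state label); it is not RLDS or the actual tool, and `estimate_transitions` is a hypothetical name.

```python
from collections import Counter, defaultdict


def estimate_transitions(dialogues):
    # dialogues: list of (turns, final_state), where turns is a list of
    # (state, action) pairs in the order they occurred.
    counts = defaultdict(Counter)
    for turns, final_state in dialogues:
        next_states = [s for s, _ in turns[1:]] + [final_state]
        for (s, a), s2 in zip(turns, next_states):
            counts[(s, a)][s2] += 1
    # Normalize counts into P(next_state | state, action).
    return {
        sa: {s2: n / sum(c.values()) for s2, n in c.items()}
        for sa, c in counts.items()
    }


# Two hypothetical dialogues over the baseline states [C] and [I].
dialogues = [
    ([("C", "Feed"), ("I", "Mix")], "FINAL"),
    ([("C", "Feed"), ("C", "NonFeed")], "FINAL"),
]
P = estimate_transitions(dialogues)
print(P)
```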

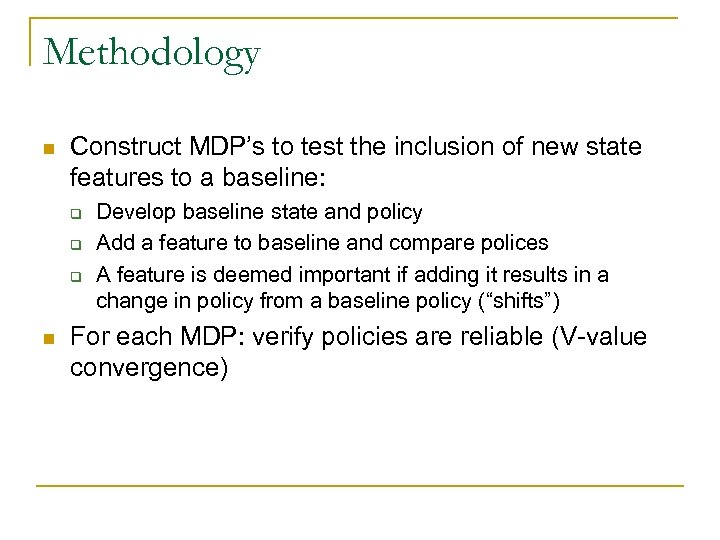

Methodology

- Construct MDPs to test the inclusion of new state features to a baseline:
  - Develop baseline state and policy
  - Add a feature to baseline and compare policies
  - A feature is deemed important if adding it results in a change in policy from a baseline policy ("shifts")
- For each MDP: verify policies are reliable (V-value convergence)
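
Counting shifts can be sketched as a comparison between each expanded state's action and the action of the baseline state it was split from. The tuple encoding of states and the function name `count_shifts` are assumptions for this example; the policies are the Baseline 1 and Baseline 1 + Certainty policies reported later in the deck.

```python
def count_shifts(baseline_policy, expanded_policy):
    # States are tuples; an expanded state is its baseline state plus one
    # extra feature value appended at the end.
    shifts = 0
    for state, action in expanded_policy.items():
        base_state = state[:-1]
        if baseline_policy[base_state] != action:
            shifts += 1
    return shifts


b1 = {("C",): "Feed", ("I",): "Feed"}
b1_plus_certainty = {
    ("C", "Cer"): "NonFeed",
    ("C", "Neu"): "Feed",
    ("C", "Unc"): "NonFeed",
    ("I", "Cer"): "NonFeed",
    ("I", "Neu"): "Mix",
    ("I", "Unc"): "NonFeed",
}
print(count_shifts(b1, b1_plus_certainty))  # 5
```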

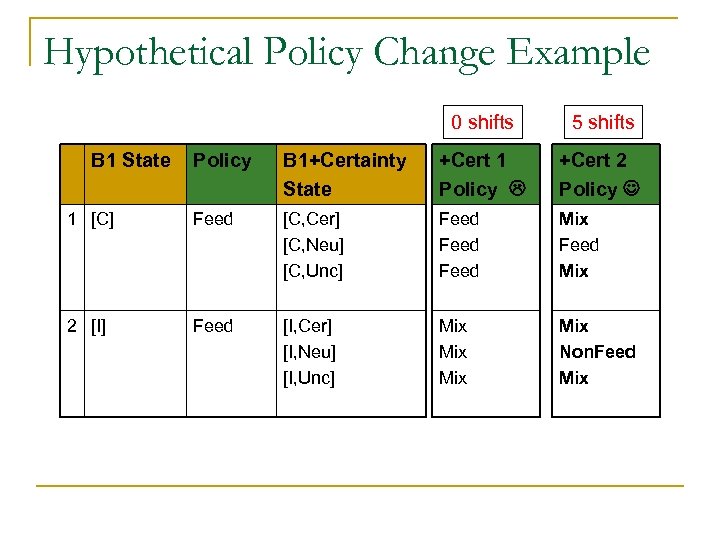

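Hypothetical Policy Change Example (table): B1 states 1 [C] and 2 [I] both have policy Feed. Adding Certainty splits them into [C, Cer], [C, Neu], [C, Unc] and [I, Cer], [I, Neu], [I, Unc]. The +Cert 1 policy produces 0 shifts; the +Cert 2 policy produces 5 shifts. Policy cells as extracted: row 1: Feed, Mix; row 2: Mix, Mix, NonFeed, Mix.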

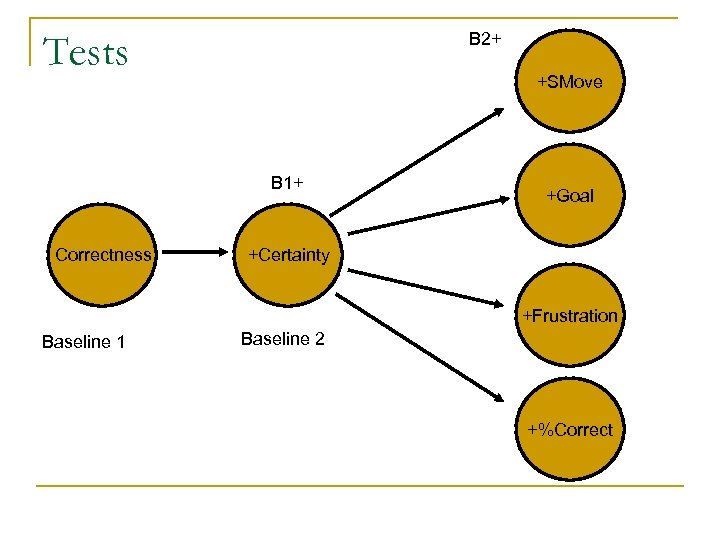

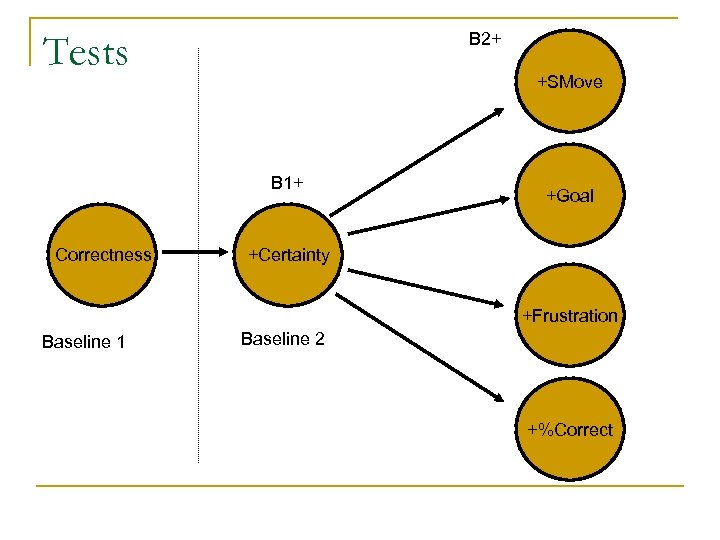

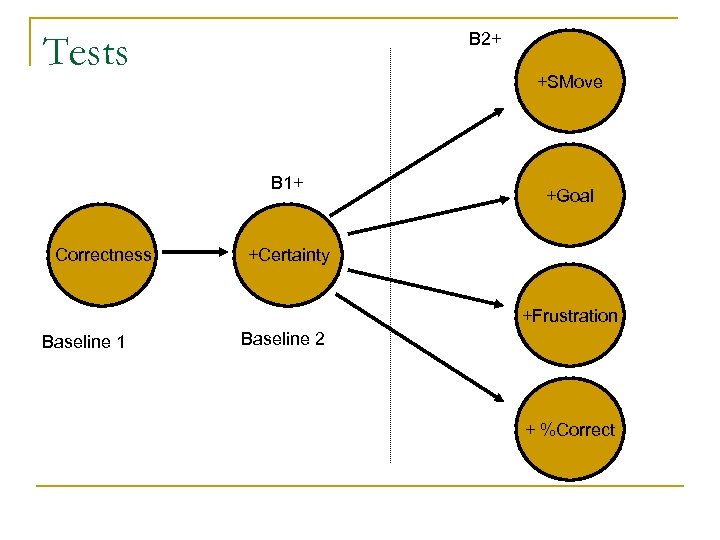

Tests (diagram): Baseline 1 = Correctness; B1+ adds +Certainty to give Baseline 2; B2+ adds one of +SMove, +Goal, +Frustration, +%Correct

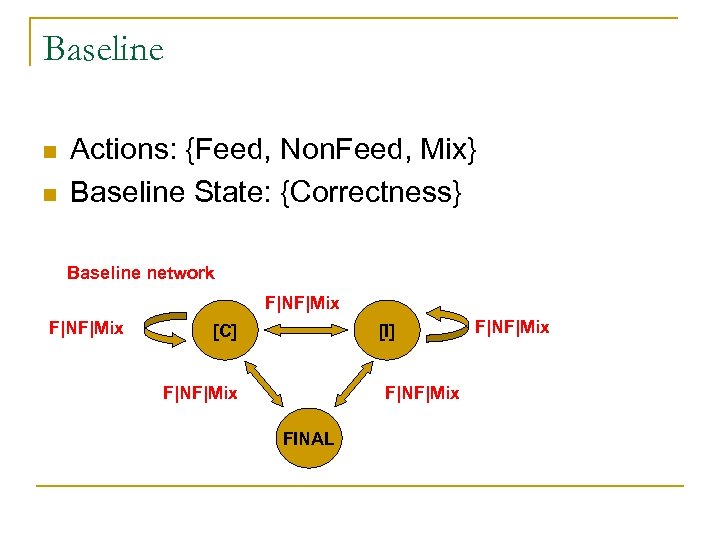

Baseline

- Actions: {Feed, NonFeed, Mix}
- Baseline State: {Correctness}
- Baseline network (diagram): states [C], [I] and FINAL, with transitions labeled F|NF|Mix


Baseline 1 Policies

| # | State | Size | Policy |
| --- | --- | --- | --- |
| 1 | [C] | 1308 | Feed |
| 2 | [I] | 872 | Feed |

- Trend: if you only have student correctness as a model of student state, regardless of their response, the best tactic is to always give simple feedback

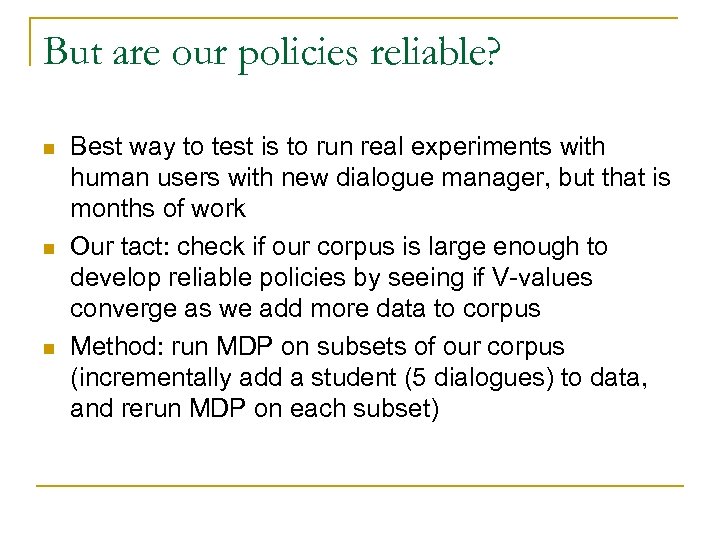

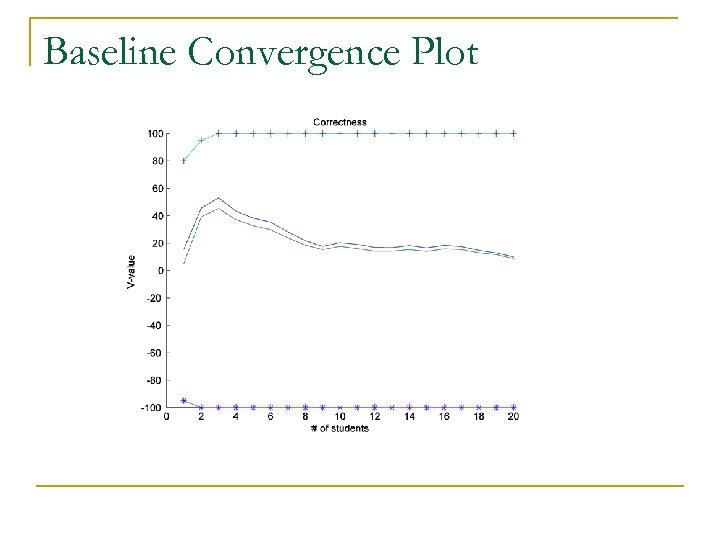

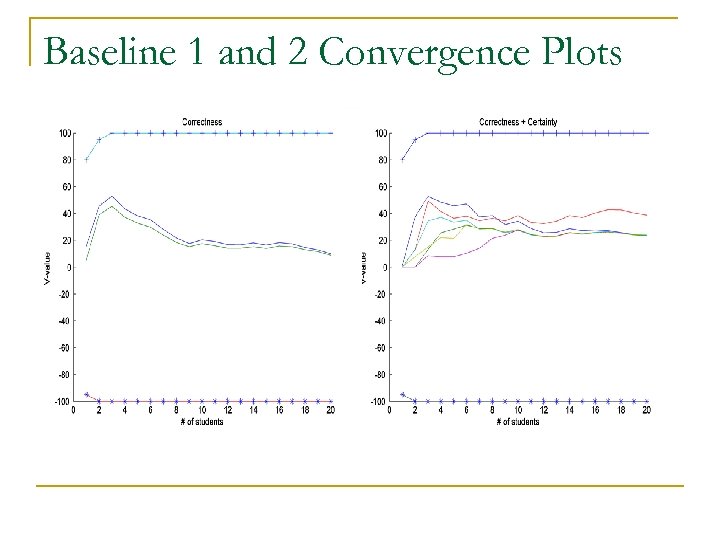

But are our policies reliable?

- The best way to test is to run real experiments with human users and the new dialogue manager, but that is months of work
- Our tack: check if our corpus is large enough to develop reliable policies by seeing if V-values converge as we add more data to the corpus
- Method: run MDP on subsets of our corpus (incrementally add a student (5 dialogues) to the data, and rerun MDP on each subset)
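
A simple numeric stand-in for reading a convergence plot is to track the largest V-value change between successive corpus cuts. The tolerance, window size, and V-values below are hypothetical, and the talk itself judges convergence from plots rather than from a threshold like this one.

```python
def max_change_per_cut(v_by_cut):
    # v_by_cut: list of {state: V-value}, one dict per corpus subset,
    # ordered from the smallest subset to the full corpus.
    changes = []
    for prev, cur in zip(v_by_cut, v_by_cut[1:]):
        # A state unseen in the previous cut is compared against 0.0.
        changes.append(max(abs(cur[s] - prev.get(s, 0.0)) for s in cur))
    return changes


def has_converged(v_by_cut, window=3, tol=1.0):
    # Assumed criterion: the last `window` changes are all within `tol`.
    tail = max_change_per_cut(v_by_cut)[-window:]
    return len(tail) == window and all(c <= tol for c in tail)


# Hypothetical V-values for states [C] and [I] as students are added.
v_by_cut = [
    {"C": 10.0, "I": -5.0},
    {"C": 30.0, "I": 5.0},
    {"C": 33.0, "I": 6.0},
    {"C": 33.5, "I": 6.2},
    {"C": 33.6, "I": 6.3},
    {"C": 33.6, "I": 6.3},
]
print(max_change_per_cut(v_by_cut))
print(has_converged(v_by_cut))
```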

Baseline Convergence Plot

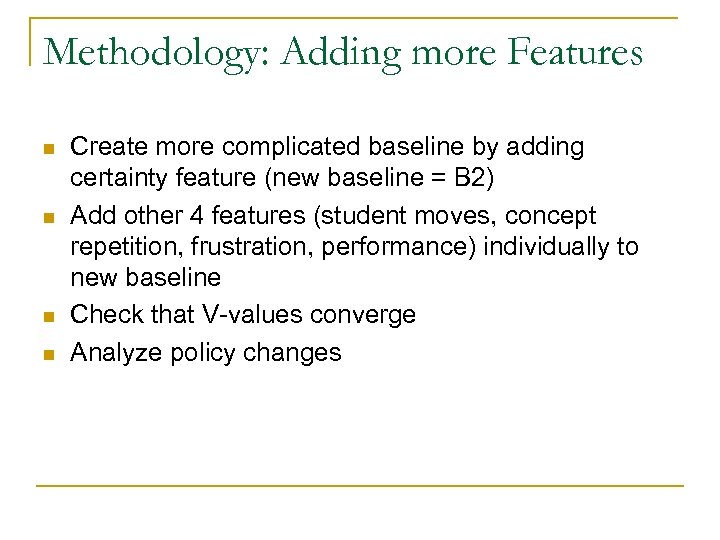

Methodology: Adding more Features

- Create a more complicated baseline by adding the certainty feature (new baseline = B2)
- Add the other 4 features (student moves, concept repetition, frustration, performance) individually to the new baseline
- Check that V-values converge
- Analyze policy changes

Tests (diagram): Baseline 1 = Correctness; B1+ adds +Certainty to give Baseline 2; B2+ adds one of +SMove, +Goal, +Frustration, +%Correct

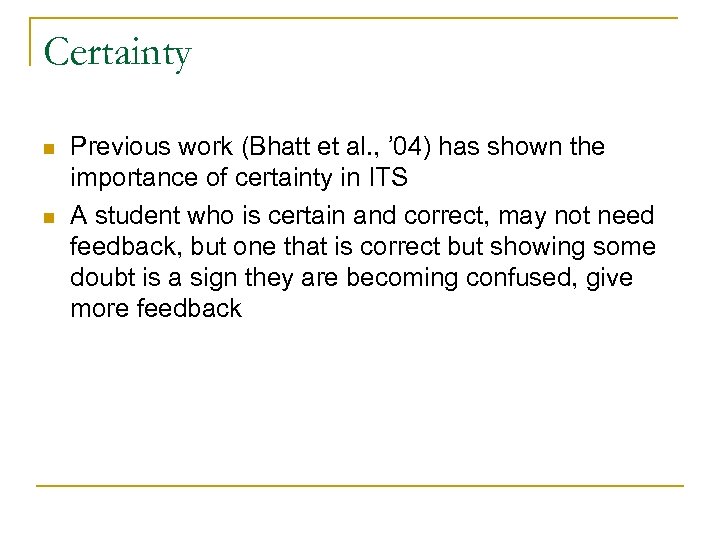

Certainty

- Previous work (Bhatt et al., '04) has shown the importance of certainty in ITS
- A student who is certain and correct may not need feedback, but a student who is correct yet shows some doubt may be becoming confused, so give more feedback

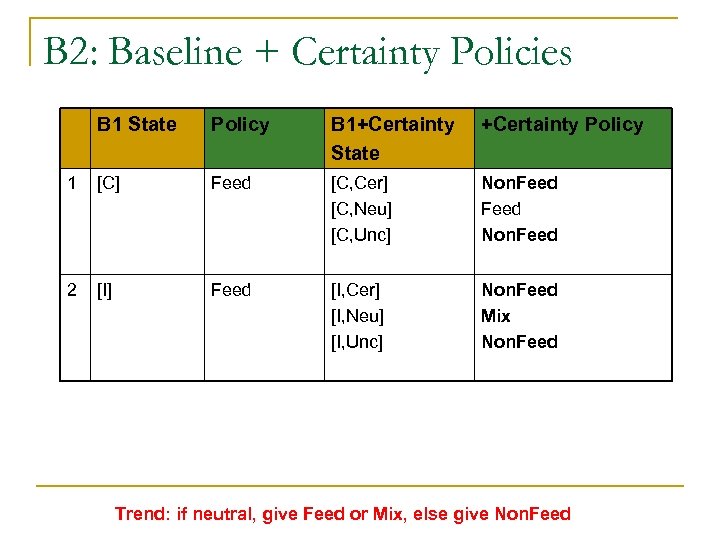

B2: Baseline + Certainty Policies

| # | B1 State | B1 Policy | B1+Certainty State | +Certainty Policy |
| --- | --- | --- | --- | --- |
| 1 | [C] | Feed | [C, Cer] | NonFeed |
|   |     |      | [C, Neu] | Feed |
|   |     |      | [C, Unc] | NonFeed |
| 2 | [I] | Feed | [I, Cer] | NonFeed |
|   |     |      | [I, Neu] | Mix |
|   |     |      | [I, Unc] | NonFeed |

Trend: if neutral, give Feed or Mix, else give NonFeed

Baseline 1 and 2 Convergence Plots

Tests (diagram): Baseline 1 = Correctness; B1+ adds +Certainty to give Baseline 2; B2+ adds one of +SMove, +Goal, +Frustration, +%Correct

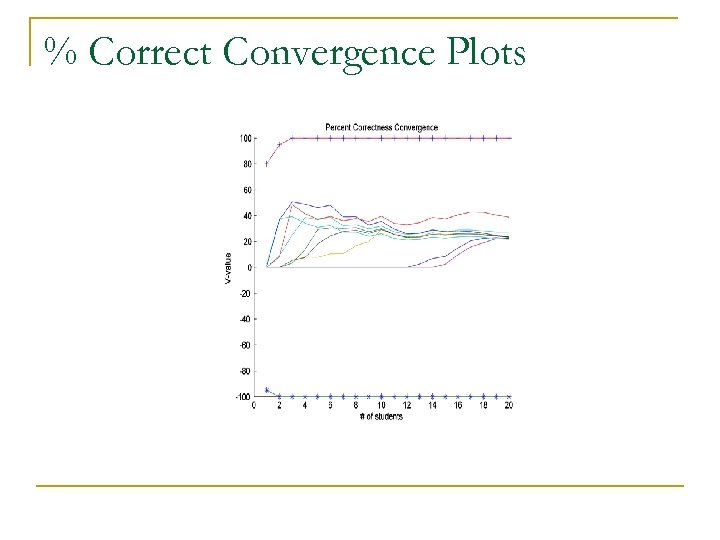

% Correct Convergence Plots

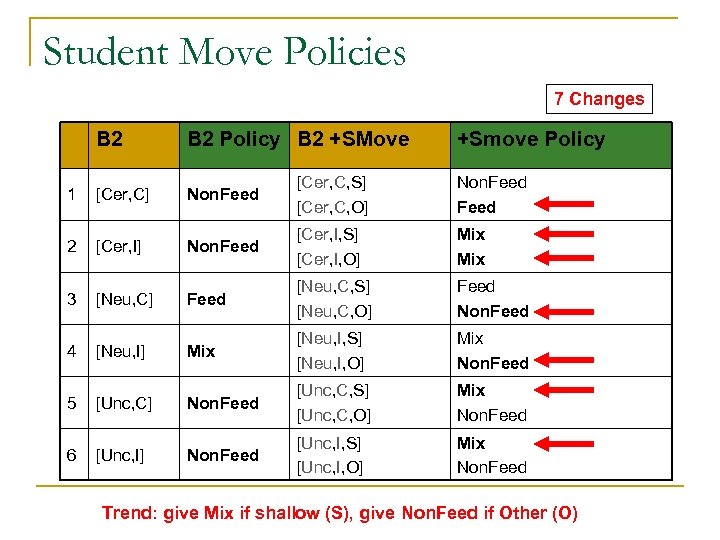

Student Move Policies (7 Changes)

| # | B2 State | B2 Policy | B2+SMove States | +SMove Policy |
| --- | --- | --- | --- | --- |
| 1 | [Cer, C] | NonFeed | [Cer, C, S], [Cer, C, O] | NonFeed |
| 2 | [Cer, I] | NonFeed | [Cer, I, S], [Cer, I, O] | Mix |
| 3 | [Neu, C] | Feed | [Neu, C, S], [Neu, C, O] | Feed, NonFeed |
| 4 | [Neu, I] | Mix | [Neu, I, S], [Neu, I, O] | Mix, NonFeed |
| 5 | [Unc, C] | NonFeed | [Unc, C, S], [Unc, C, O] | Mix, NonFeed |
| 6 | [Unc, I] | NonFeed | [Unc, I, S], [Unc, I, O] | Mix, NonFeed |

Trend: give Mix if shallow (S), give NonFeed if Other (O)

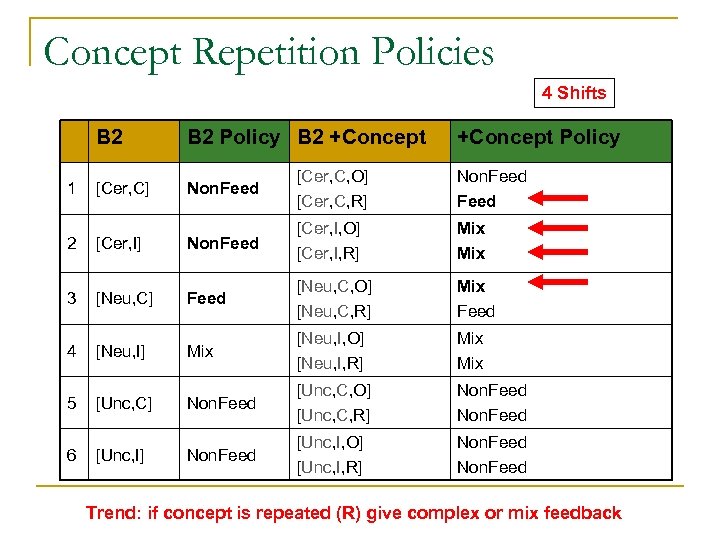

Concept Repetition Policies (4 Shifts)

| # | B2 State | B2 Policy | B2+Concept States | +Concept Policy |
| --- | --- | --- | --- | --- |
| 1 | [Cer, C] | NonFeed | [Cer, C, O], [Cer, C, R] | NonFeed, Mix |
| 2 | [Cer, I] | NonFeed | [Cer, I, O], [Cer, I, R] | |
| 3 | [Neu, C] | Feed | [Neu, C, O], [Neu, C, R] | Mix, Feed |
| 4 | [Neu, I] | Mix | [Neu, I, O], [Neu, I, R] | Mix |
| 5 | [Unc, C] | NonFeed | [Unc, C, O], [Unc, C, R] | NonFeed |
| 6 | [Unc, I] | NonFeed | [Unc, I, O], [Unc, I, R] | NonFeed |

Trend: if concept is repeated (R), give complex or mix feedback


Frustration Policies (4 Shifts)

| # | B2 State | B2 Policy | B2+Frustration States | +Frustration Policy |
| --- | --- | --- | --- | --- |
| 1 | [Cer, C] | NonFeed | [Cer, C, N], [Cer, C, F] | NonFeed |
| 2 | [Cer, I] | NonFeed | [Cer, I, N], [Cer, I, F] | NonFeed |
| 3 | [Neu, C] | Feed | [Neu, C, N], [Neu, C, F] | Feed, NonFeed |
| 4 | [Neu, I] | Mix | [Neu, I, N], [Neu, I, F] | Mix, NonFeed |
| 5 | [Unc, C] | NonFeed | [Unc, C, N], [Unc, C, F] | NonFeed |
| 6 | [Unc, I] | NonFeed | [Unc, I, N], [Unc, I, F] | NonFeed |

Trend: if student is frustrated (F), give NonFeed

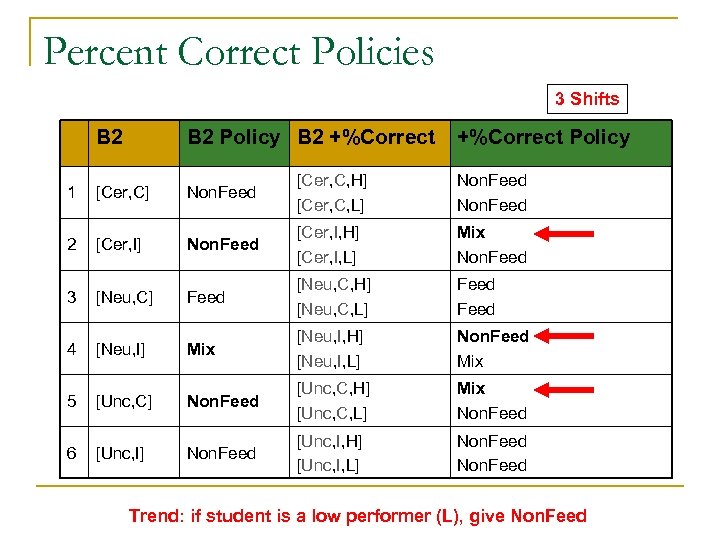

Percent Correct Policies (3 Shifts)

| # | B2 State | B2 Policy | B2+%Correct States | +%Correct Policy |
| --- | --- | --- | --- | --- |
| 1 | [Cer, C] | NonFeed | [Cer, C, H], [Cer, C, L] | NonFeed |
| 2 | [Cer, I] | NonFeed | [Cer, I, H], [Cer, I, L] | Mix, NonFeed |
| 3 | [Neu, C] | Feed | [Neu, C, H], [Neu, C, L] | Feed |
| 4 | [Neu, I] | Mix | [Neu, I, H], [Neu, I, L] | NonFeed, Mix |
| 5 | [Unc, C] | NonFeed | [Unc, C, H], [Unc, C, L] | Mix, NonFeed |
| 6 | [Unc, I] | NonFeed | [Unc, I, H], [Unc, I, L] | NonFeed |

Trend: if student is a low performer (L), give NonFeed

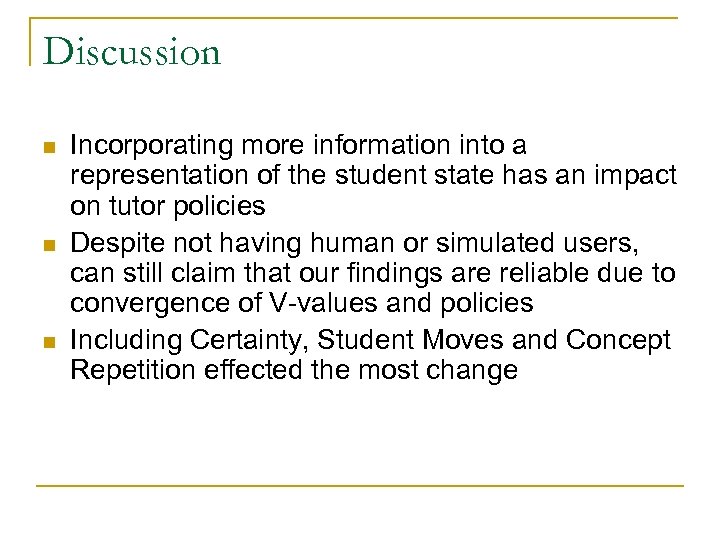

Discussion

- Incorporating more information into a representation of the student state has an impact on tutor policies
- Despite not having human or simulated users, we can still claim that our findings are reliable due to convergence of V-values and policies
- Including Certainty, Student Moves and Concept Repetition effected the most change

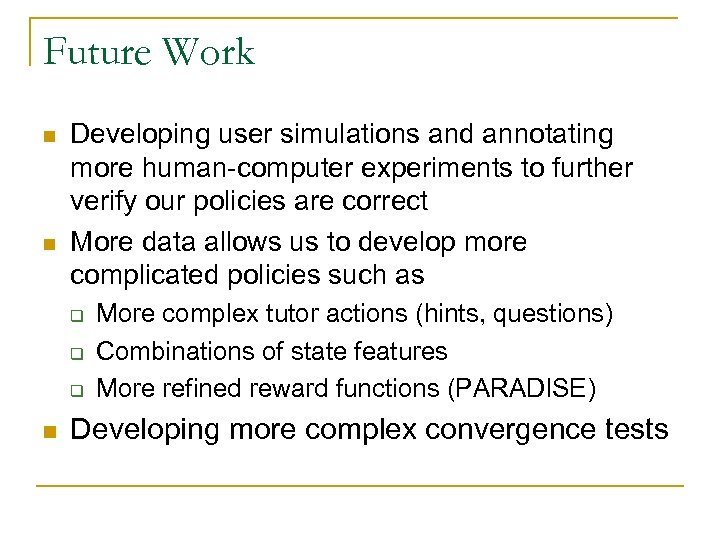

Future Work

- Developing user simulations and annotating more human-computer experiments to further verify our policies are correct
- More data allows us to develop more complicated policies such as
  - More complex tutor actions (hints, questions)
  - Combinations of state features
  - More refined reward functions (PARADISE)
- Developing more complex convergence tests


Related Work

- [Paek and Chickering, '05]
- [Singh et al., '99]: optimal dialogue length
- [Frampton et al., '05]: last dialogue act
- [Williams et al., '03]: automatically generate good state/action sets

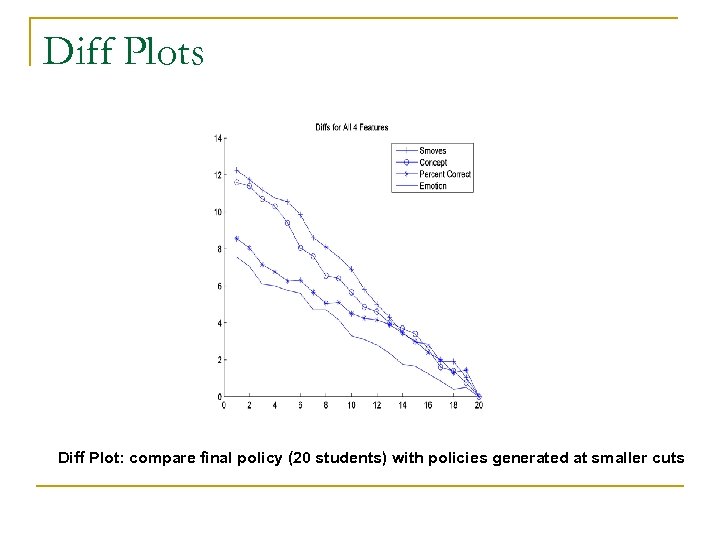

Diff Plots

- Diff Plot: compare final policy (20 students) with policies generated at smaller cuts
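
The quantity behind such a plot could be computed as the number of states whose action differs from the final policy at each cut. This is an assumed reading of the diff plot; the function name `policy_diffs` and the three example cuts are hypothetical.

```python
def policy_diffs(policies_by_cut):
    # policies_by_cut: list of {state: action}, smallest cut first;
    # the last entry is the final policy built from the full corpus.
    final = policies_by_cut[-1]
    return [
        sum(1 for state in final if policy.get(state) != final[state])
        for policy in policies_by_cut
    ]


# Hypothetical policies at three cuts of the corpus.
policies_by_cut = [
    {"C": "Mix", "I": "NonFeed"},
    {"C": "Feed", "I": "Mix"},
    {"C": "Feed", "I": "Feed"},
]
print(policy_diffs(policies_by_cut))  # [2, 1, 0]
```

A curve that drops to zero and stays there as students are added would suggest the policy has stopped changing.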
