7ba7a2376019ed280ae1e9ad6bb706e8.ppt

- Number of slides: 46

Using linked data to interpret tables

Varish Mulwad, Tim Finin, Zareen Syed and Anupam Joshi

University of Maryland, Baltimore County

November 8, 2010

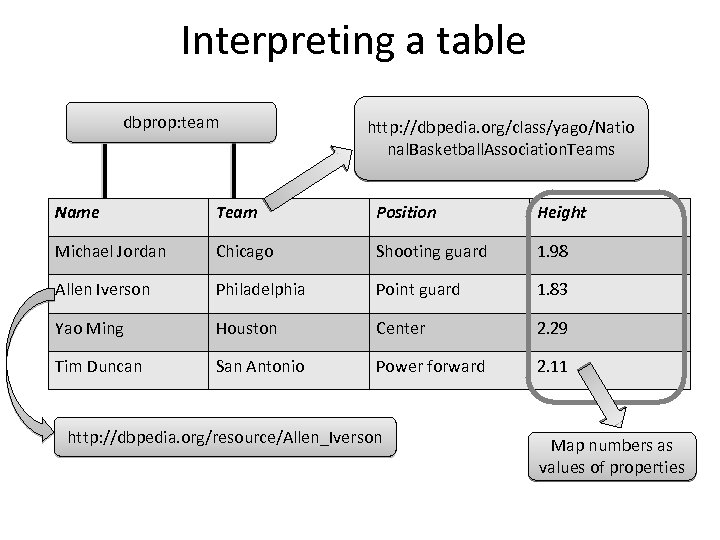

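Interpreting a table

| Name | Team | Position | Height |
|---|---|---|---|
| Michael Jordan | Chicago | Shooting guard | 1.98 |
| Allen Iverson | Philadelphia | Point guard | 1.83 |
| Yao Ming | Houston | Center | 2.29 |
| Tim Duncan | San Antonio | Power forward | 2.11 |

Annotations on the slide:
- Class for the Team column: http://dbpedia.org/class/yago/NationalBasketballAssociationTeams
- Relation between the Name and Team columns: dbprop:team
- Link for the cell "Allen Iverson": http://dbpedia.org/resource/Allen_Iverson
- Map numbers as values of properties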

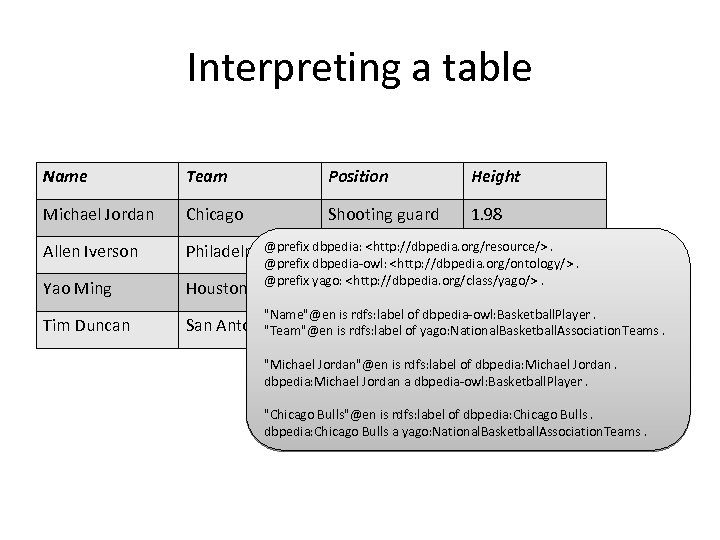

Interpreting a table

The same table, annotated as linked data:
- `@prefix dbpedia: <http://dbpedia.org/resource/> .`
- `@prefix dbpedia-owl: <http://dbpedia.org/ontology/> .`
- `@prefix yago: <http://dbpedia.org/class/yago/> .`
- `"Name"@en is rdfs:label of dbpedia-owl:BasketballPlayer .`
- `"Team"@en is rdfs:label of yago:NationalBasketballAssociationTeams .`
- `"Michael Jordan"@en is rdfs:label of dbpedia:Michael_Jordan a dbpedia-owl:BasketballPlayer .`
- `"Chicago Bulls"@en is rdfs:label of dbpedia:Chicago_Bulls a yago:NationalBasketballAssociationTeams .`

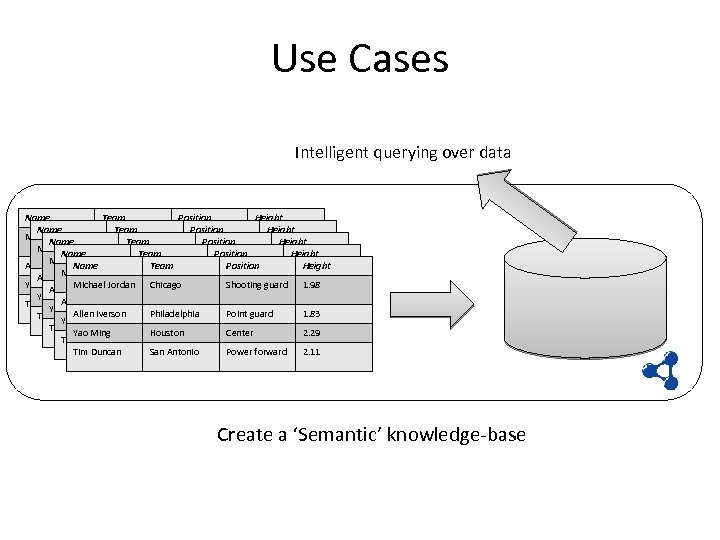

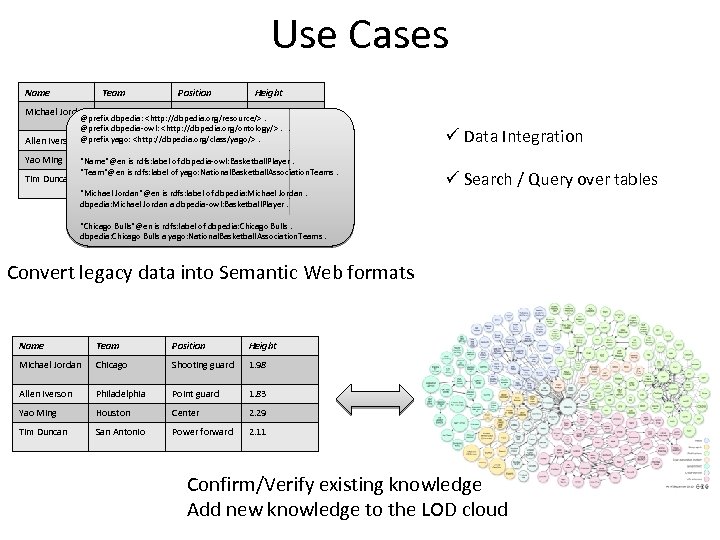

Use Cases
- Intelligent querying over data
- Create a 'Semantic' knowledge-base

Use Cases
- Data integration
- Search / query over tables
- Convert legacy data into Semantic Web formats
- Confirm/verify existing knowledge
- Add new knowledge to the LOD cloud

Motivation and Related Work

We are laying a strong foundation for the Semantic Web… but an old problem haunts us…

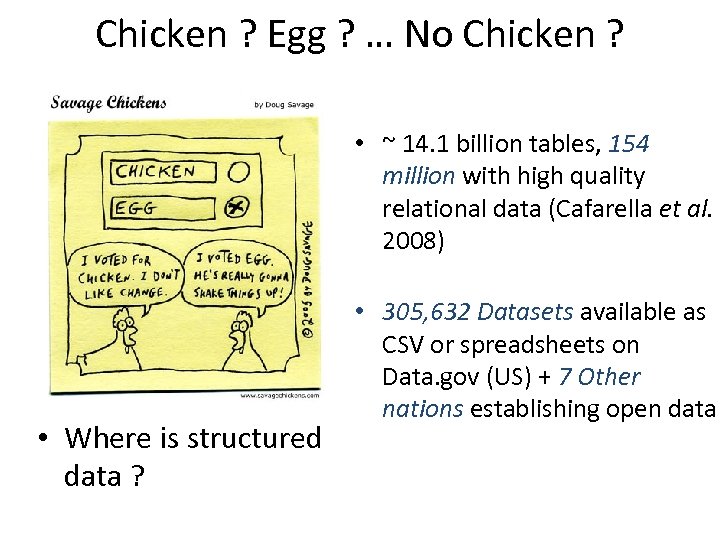

Chicken? Egg? … No Chicken?
- ~14.1 billion tables, 154 million with high-quality relational data (Cafarella et al. 2008)
- Where is the structured data?
- 305,632 datasets available as CSV or spreadsheets on Data.gov (US), plus 7 other nations establishing open data

Automate the process
- It is not practical for humans to encode all this into RDF manually
- We need systems that can generate data from existing sources

Related Work
- Database-to-ontology mapping (Barrasa, Corcho, & Gómez-Pérez 2004), (Hu & Qu 2007), (Papapanagiotou et al. 2006), and (Lawrence 2004)
- Mapping relational databases to RDF [W3C RDB2RDF Working Group]


Related Work
- Mapping spreadsheets to RDF [RDF123, XLWrap]
- Practical and helpful systems, but they:
  - Require significant manual work
  - Do not generate linked data
- Interpreting web tables to answer complex search queries over the web tables (Limaye et al. 2010)

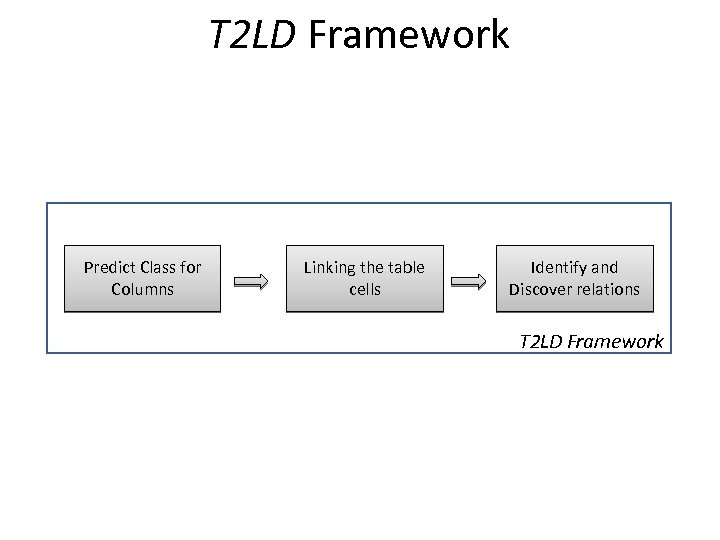

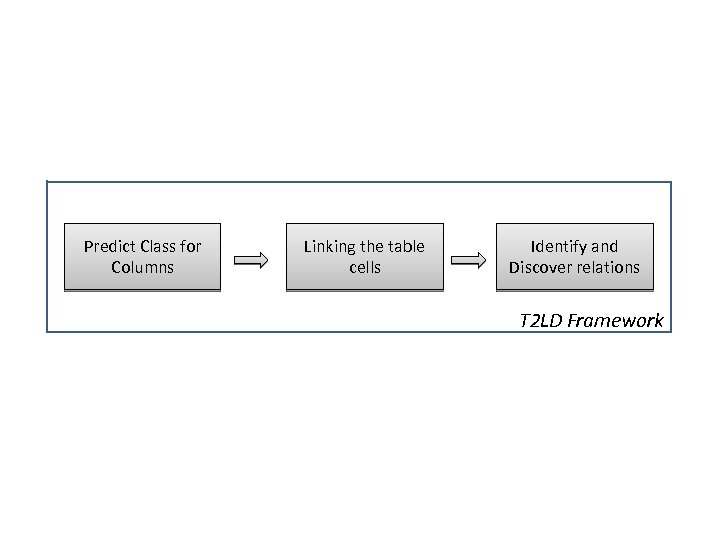

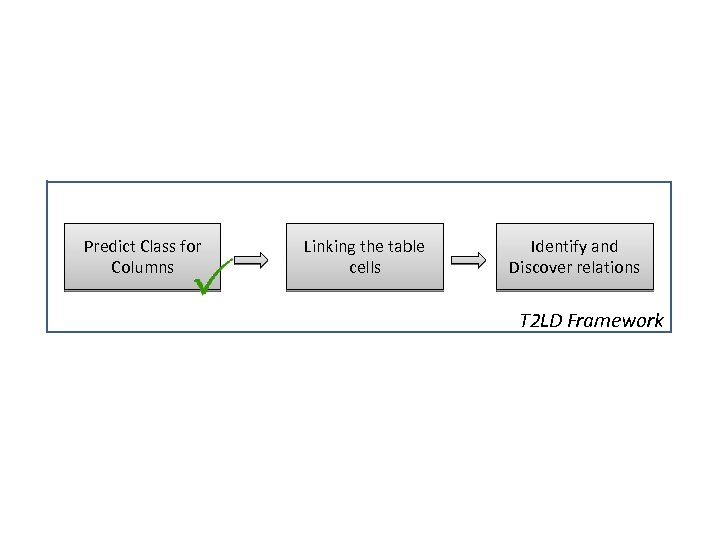

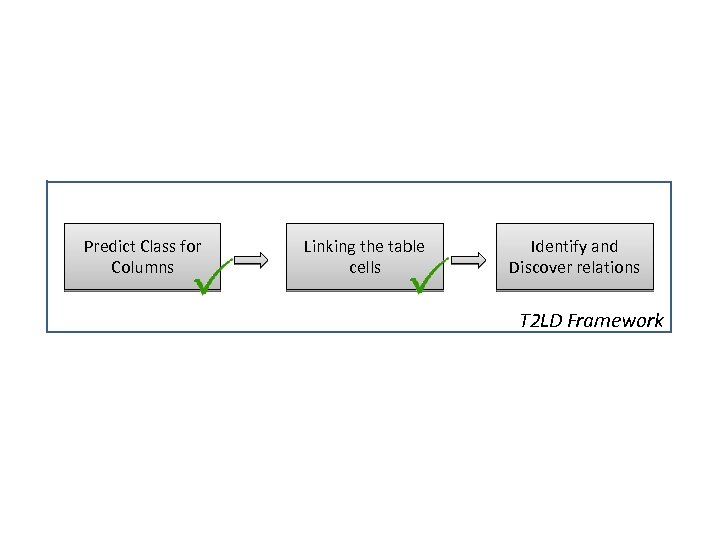

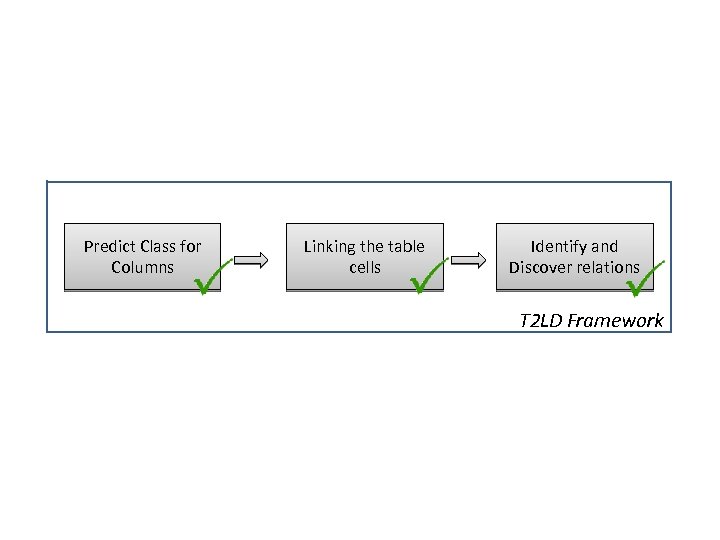

T2LD Framework
1. Predict class for columns
2. Link the table cells
3. Identify and discover relations

T2LD Framework, step 1: Predict class for columns

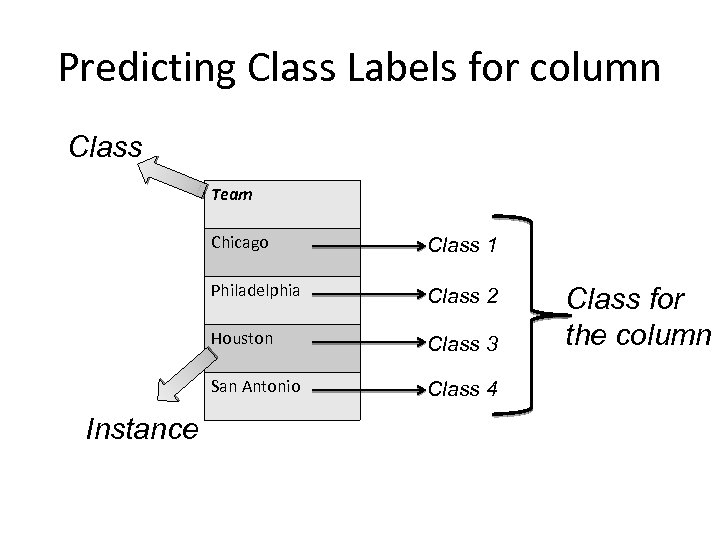

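Predicting Class Labels for a column

Each cell of the Team column (Chicago, Philadelphia, Houston, San Antonio) is an instance with its own candidate classes (Class 1, Class 2, Class 3, Class 4); these candidates are combined to choose the class for the column.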

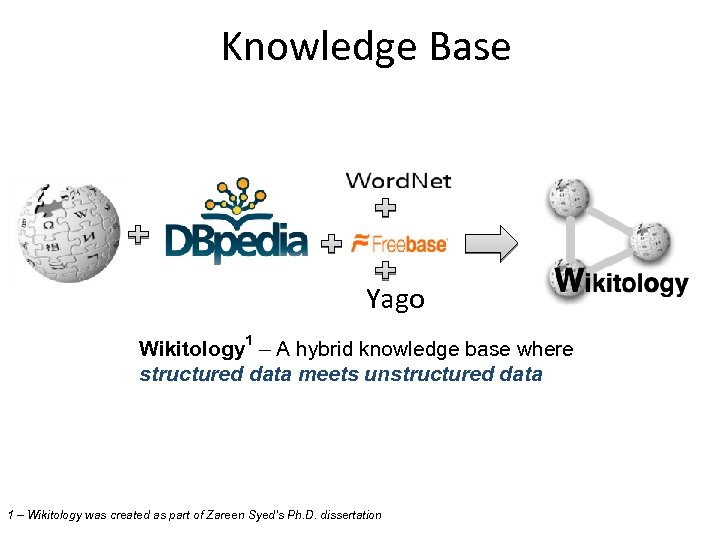

Knowledge Base
- Yago
- Wikitology¹: a hybrid knowledge base where structured data meets unstructured data

¹ Wikitology was created as part of Zareen Syed's Ph.D. dissertation.

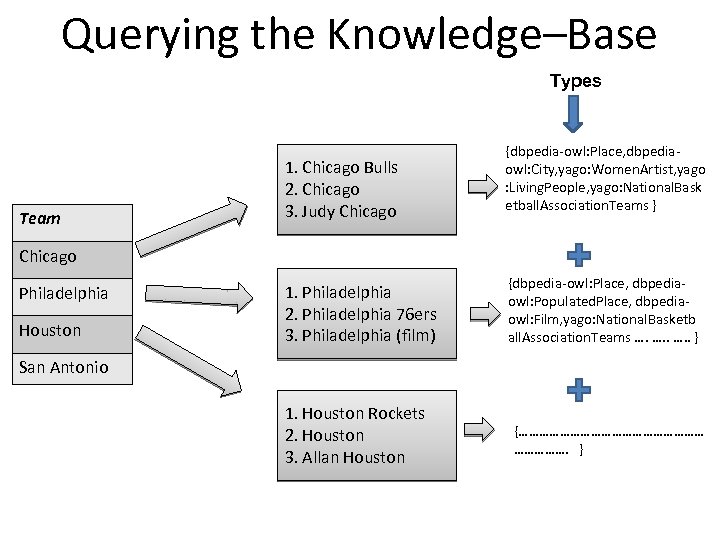

Querying the Knowledge Base

Each string in the Team column is used to query the knowledge base, and the types of the top-ranked results are collected:
- Chicago: 1. Chicago Bulls, 2. Chicago, 3. Judy Chicago; types {dbpedia-owl:Place, dbpedia-owl:City, yago:WomenArtist, yago:LivingPeople, yago:NationalBasketballAssociationTeams}
- Philadelphia: 1. Philadelphia, 2. Philadelphia 76ers, 3. Philadelphia (film); types {dbpedia-owl:Place, dbpedia-owl:PopulatedPlace, dbpedia-owl:Film, yago:NationalBasketballAssociationTeams, …}
- Houston: 1. Houston Rockets, 2. Houston, 3. Allan Houston; types {…}
- San Antonio: …
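A minimal Python sketch of this candidate-collection step, assuming the knowledge base returns a ranked list of (entity, types) pairs per cell string. `KB_RESULTS`, `candidate_classes` and `cell_class_pairs` are hypothetical names, and which types belong to which entity is our guess, since the slide shows only the union of types per string.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical mock of the top-3 KB results per cell string: (entity, types).
# The per-entity type assignment is a guess; the slide lists only the union.
KB_RESULTS: Dict[str, List[Tuple[str, Set[str]]]] = {
    "Chicago": [
        ("Chicago Bulls", {"yago:NationalBasketballAssociationTeams"}),
        ("Chicago", {"dbpedia-owl:Place", "dbpedia-owl:City"}),
        ("Judy Chicago", {"yago:WomenArtist", "yago:LivingPeople"}),
    ],
    "Philadelphia": [
        ("Philadelphia", {"dbpedia-owl:Place", "dbpedia-owl:PopulatedPlace"}),
        ("Philadelphia 76ers", {"yago:NationalBasketballAssociationTeams"}),
        ("Philadelphia (film)", {"dbpedia-owl:Film"}),
    ],
}


def candidate_classes(column: List[str],
                      kb: Dict[str, List[Tuple[str, Set[str]]]],
                      top_n: int = 3) -> List[str]:
    """Union of the types of the top-N results for every cell in the column."""
    classes: Set[str] = set()
    for cell in column:
        for _entity, types in kb.get(cell, [])[:top_n]:
            classes |= types
    return sorted(classes)


def cell_class_pairs(column: List[str], classes: List[str]) -> List[Tuple[str, str]]:
    """Every (cell string, candidate class) pair that will later be scored."""
    return [(cell, cls) for cls in classes for cell in column]


column = ["Chicago", "Philadelphia"]
classes = candidate_classes(column, KB_RESULTS)
pairs = cell_class_pairs(column, classes)
print(len(classes), len(pairs))  # 7 14
```

Pairing every string with every candidate class produces lists such as [Chicago, dbpedia-owl:City], [Philadelphia, dbpedia-owl:City], which is the shape of input the scoring step works on.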

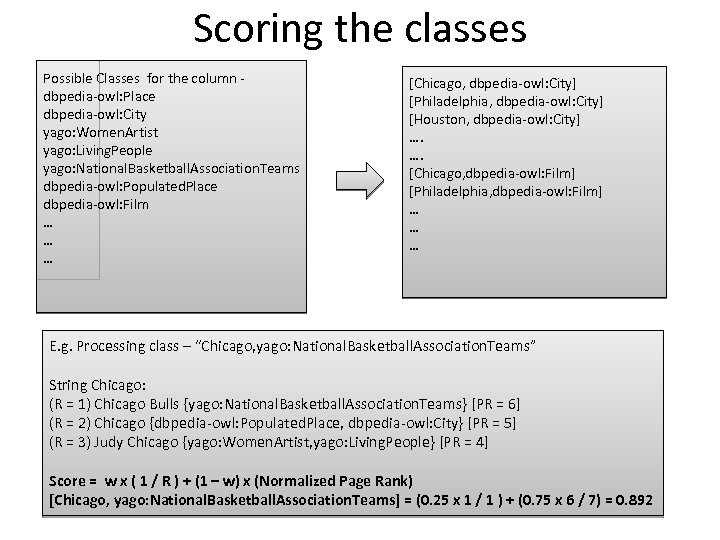

Scoring the classes

Possible classes for the column: dbpedia-owl:Place, dbpedia-owl:City, yago:WomenArtist, yago:LivingPeople, yago:NationalBasketballAssociationTeams, dbpedia-owl:PopulatedPlace, dbpedia-owl:Film, …

Each string is paired with each possible class: [Chicago, dbpedia-owl:City], [Philadelphia, dbpedia-owl:City], [Houston, dbpedia-owl:City], …, [Chicago, dbpedia-owl:Film], [Philadelphia, dbpedia-owl:Film], …

E.g., processing the pair [Chicago, yago:NationalBasketballAssociationTeams]. Results for the string "Chicago":
- (R = 1) Chicago Bulls {yago:NationalBasketballAssociationTeams} [PR = 6]
- (R = 2) Chicago {dbpedia-owl:PopulatedPlace, dbpedia-owl:City} [PR = 5]
- (R = 3) Judy Chicago {yago:WomenArtist, yago:LivingPeople} [PR = 4]

$$\text{Score} = w \times \frac{1}{R} + (1 - w) \times \text{(Normalized PageRank)}$$

where R is the rank of the highest-ranked result that has the class and PR is that result's PageRank. With w = 0.25:

$$\text{Score}[\text{Chicago}, \text{yago:NationalBasketballAssociationTeams}] = 0.25 \times \frac{1}{1} + 0.75 \times \frac{6}{7} = 0.892\ldots$$
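The scoring formula can be sketched in Python as follows. This assumes w = 0.25 as in the worked example; `pr_norm` is a hypothetical normalizing constant (the slide turns PR = 6 into 6/7 without saying where 7 comes from), and summing pair scores into a column score is our assumption, not something the slide states.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class Result:
    label: str
    rank: int              # R: 1-based position in the KB results for the string
    pagerank: float        # raw PageRank value (its scale is an assumption)
    types: FrozenSet[str]


def pair_score(results: List[Result], cls: str, w: float = 0.25,
               pr_norm: float = 7.0) -> float:
    """w * (1/R) + (1 - w) * PR / pr_norm for the highest-ranked result typed
    with `cls`. pr_norm is hypothetical: the slide maps PR = 6 to 6/7."""
    for r in sorted(results, key=lambda r: r.rank):
        if cls in r.types:
            return w * (1.0 / r.rank) + (1.0 - w) * (r.pagerank / pr_norm)
    return 0.0  # no result for this string carries the class


def column_scores(column: Dict[str, List[Result]],
                  classes: List[str]) -> Dict[str, float]:
    """Assumption: a class's column score is the sum of its per-string scores."""
    return {c: sum(pair_score(res, c) for res in column.values()) for c in classes}


CHICAGO = [
    Result("Chicago Bulls", 1, 6.0, frozenset({"yago:NationalBasketballAssociationTeams"})),
    Result("Chicago", 2, 5.0, frozenset({"dbpedia-owl:PopulatedPlace", "dbpedia-owl:City"})),
    Result("Judy Chicago", 3, 4.0, frozenset({"yago:WomenArtist", "yago:LivingPeople"})),
]

nba = pair_score(CHICAGO, "yago:NationalBasketballAssociationTeams")
print(round(nba, 3))  # 0.893 (0.25 + 0.75 * 6/7 = 0.892857...)
```

For dbpedia-owl:City the first matching result is "Chicago" at R = 2, so the same function gives 0.25 × 1/2 + 0.75 × 5/7, a lower score than the NBA class.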

T2LD Framework, step 2: Linking the table cells

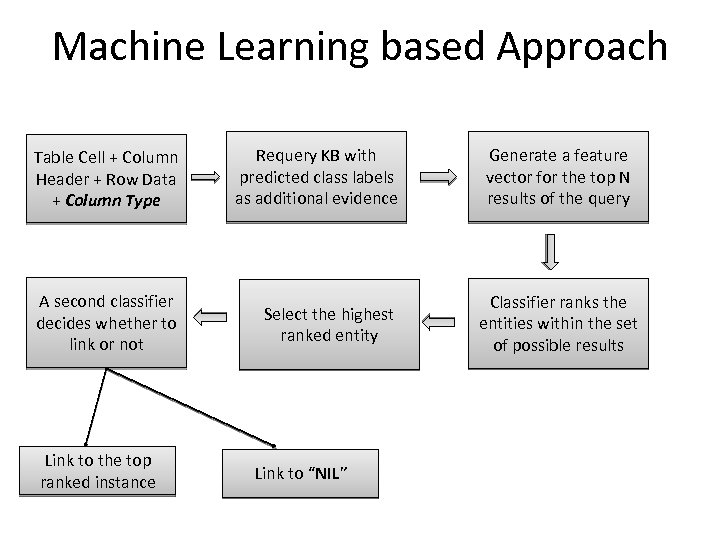

Machine Learning based Approach

Input: table cell + column header + row data + column type
1. Requery the KB with the predicted class labels as additional evidence
2. Generate a feature vector for the top N results of the query
3. A classifier ranks the entities within the set of possible results
4. Select the highest-ranked entity
5. A second classifier decides whether to link or not: link to the top-ranked instance, or link to "NIL"
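A hedged sketch of the two-stage flow above. The two trained SVMs are replaced by hypothetical stand-ins (`linear_rank` with made-up weights, `margin_rule` with a made-up threshold), and the third feature, a flag for whether the candidate carries the predicted column class, is our guess at how the class labels serve as additional evidence.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

NIL = "NIL"


@dataclass
class Candidate:
    uri: str
    features: List[float]  # hypothetical: [string similarity, popularity, has predicted class]


def link_cell(candidates: Sequence[Candidate],
              rank_score: Callable[[List[float]], float],
              decide: Callable[[List[float], float, float], bool],
              top_n: int = 10) -> str:
    """Rank the top-N candidates, then let a second decision function choose
    between the best candidate and NIL. Both callables stand in for SVMs."""
    pool = list(candidates)[:top_n]
    if not pool:
        return NIL
    ranked = sorted(pool, key=lambda c: rank_score(c.features), reverse=True)
    best = ranked[0]
    best_score = rank_score(best.features)
    second_score = rank_score(ranked[1].features) if len(ranked) > 1 else 0.0
    return best.uri if decide(best.features, best_score, second_score) else NIL


WEIGHTS = [0.3, 0.3, 0.4]  # illustrative weights, not learned


def linear_rank(f: List[float]) -> float:
    return sum(w * x for w, x in zip(WEIGHTS, f))


def margin_rule(f: List[float], top: float, second: float) -> bool:
    return top - second > 0.05  # illustrative threshold


cands = [Candidate("dbpedia:Chicago", [1.0, 0.5, 0.0]),
         Candidate("dbpedia:Chicago_Bulls", [0.4, 0.6, 1.0])]
print(link_cell(cands, linear_rank, margin_rule))  # dbpedia:Chicago_Bulls
```

Here the exact string match "Chicago" loses to Chicago Bulls because the class-evidence feature tips the ranking, which mirrors the intent of requerying with the predicted column class.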

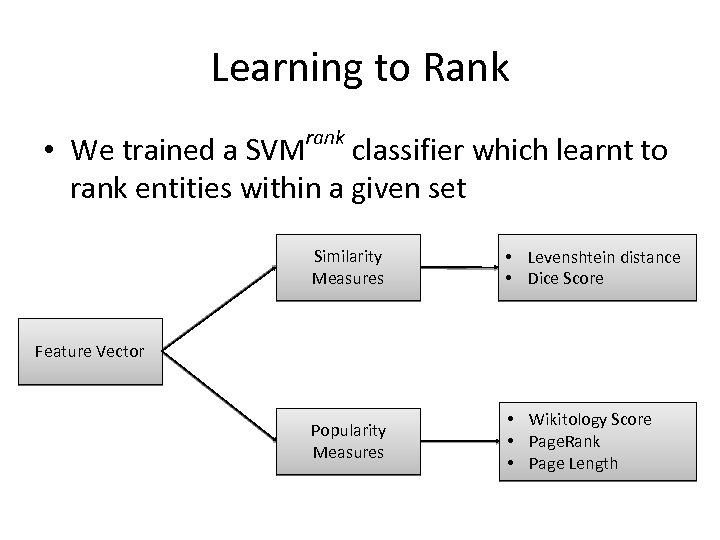

Learning to Rank
- We trained an SVM classifier that learned to rank entities within a given set

Feature vector:
- Similarity measures: Levenshtein distance, Dice score
- Popularity measures: Wikitology score, PageRank, page length
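The two similarity features can be computed as below. Levenshtein distance is the standard edit distance; for the Dice score we assume character bigrams, which the slide does not specify. `feature_vector` and its argument names are hypothetical, and the popularity values would come from Wikitology and DBpedia.

```python
from collections import Counter
from typing import List


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]


def dice(a: str, b: str) -> float:
    """Dice coefficient over character bigrams (bigrams are an assumption)."""
    ba = Counter(a[i:i + 2] for i in range(len(a) - 1))
    bb = Counter(b[i:i + 2] for i in range(len(b) - 1))
    total = sum(ba.values()) + sum(bb.values())
    if total == 0:  # both strings shorter than two characters
        return 1.0 if a == b else 0.0
    return 2 * sum((ba & bb).values()) / total


def feature_vector(cell: str, label: str, wikitology_score: float,
                   pagerank: float, page_length: float) -> List[float]:
    """Similarity features (cell text vs. entity label), then popularity features."""
    return [float(levenshtein(cell, label)), dice(cell, label),
            wikitology_score, pagerank, page_length]


print(levenshtein("kitten", "sitting"))  # 3
print(dice("night", "nacht"))            # 0.25
```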

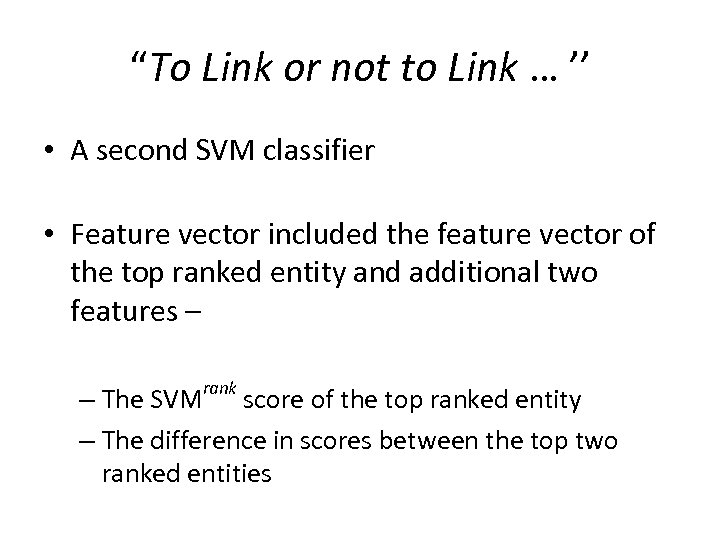

“To Link or not to Link…”
- A second SVM classifier
- Its feature vector includes the feature vector of the top-ranked entity plus two additional features:
  - The SVM score of the top-ranked entity
  - The difference in scores between the top two ranked entities
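A sketch of the second classifier's input, assuming "SVM score" means the ranker's output for each candidate. `decide_link` is a hypothetical linear rule (w · x + b > 0) standing in for the trained linear SVM; its weights are illustrative, not learned.

```python
from typing import List, Sequence


def link_feature_vector(top_entity_features: Sequence[float],
                        top_score: float, second_score: float) -> List[float]:
    """Top-ranked entity's features plus (1) its ranking score and
    (2) the gap between it and the runner-up."""
    return list(top_entity_features) + [top_score, top_score - second_score]


def decide_link(vec: Sequence[float], weights: Sequence[float], bias: float) -> bool:
    """Hypothetical linear decision rule: link if w.x + b > 0, otherwise NIL."""
    return sum(w * x for w, x in zip(weights, vec)) + bias > 0


W = [0.0, 0.0, 0.5, 1.0]  # illustrative: only the two added features matter here

clear_winner = link_feature_vector([0.9, 0.8], 1.5, 0.5)  # [0.9, 0.8, 1.5, 1.0]
tie = link_feature_vector([0.9, 0.8], 1.5, 1.5)           # [0.9, 0.8, 1.5, 0.0]
print(decide_link(clear_winner, W, -1.0), decide_link(tie, W, -1.0))  # True False
```

The gap feature lets the classifier say NIL when two candidates are nearly tied, even if the top score alone looks high.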

T2LD Framework, step 3: Identify and discover relations

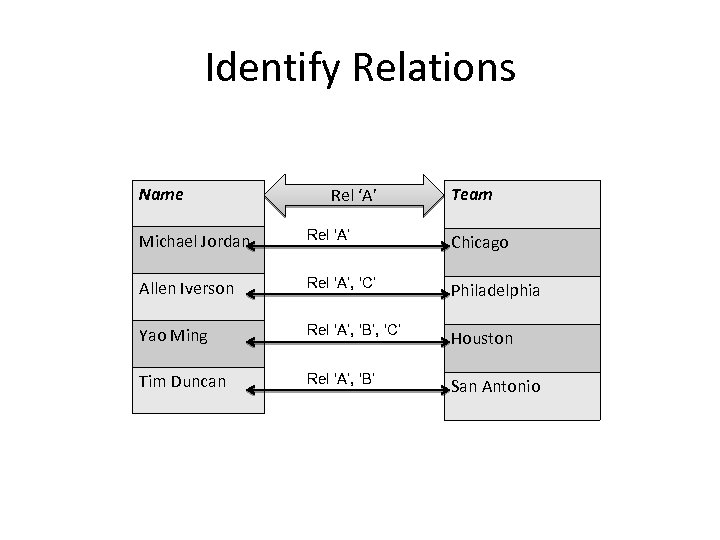

Identify Relations

Candidate relations between the Name and Team columns, row by row:
- Michael Jordan – Chicago: Rel 'A'
- Allen Iverson – Philadelphia: Rel 'A', 'C'
- Yao Ming – Houston: Rel 'A', 'B', 'C'
- Tim Duncan – San Antonio: Rel 'A', 'B'

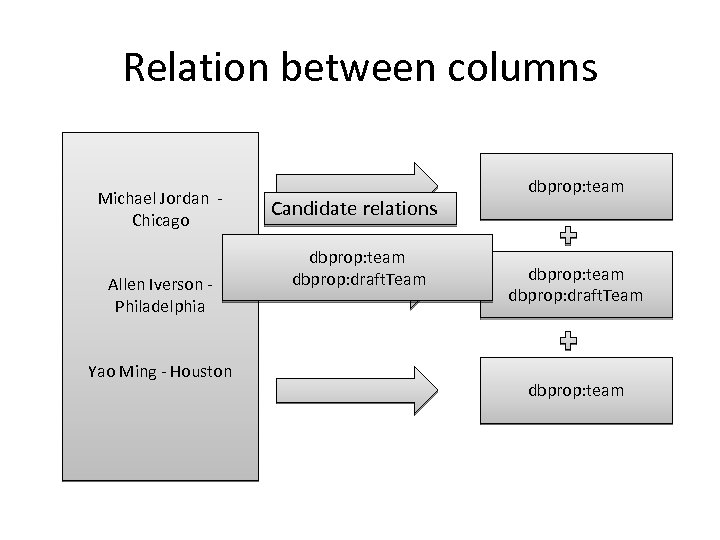

Relation between columns
- Rows: Michael Jordan – Chicago, Allen Iverson – Philadelphia, Yao Ming – Houston
- Candidate relations: dbprop:team, dbprop:draftTeam

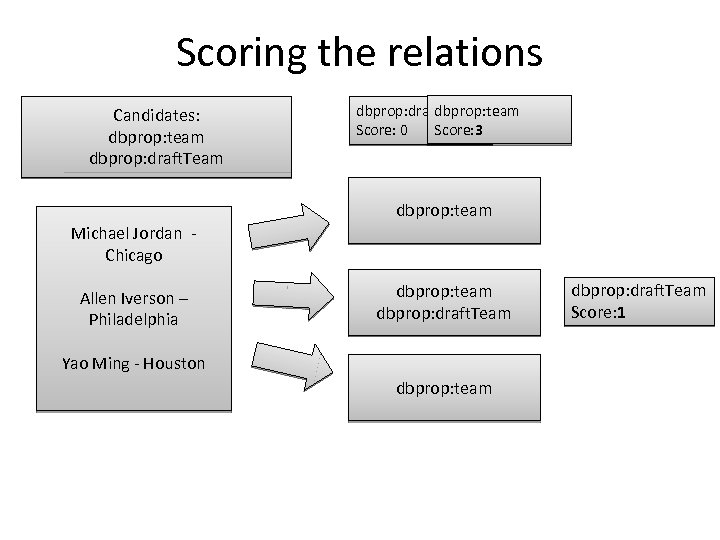

Scoring the relations

Candidate relations found for each row:
- Michael Jordan – Chicago: dbprop:team
- Allen Iverson – Philadelphia: dbprop:team, dbprop:draftTeam
- Yao Ming – Houston: dbprop:team, dbprop:draftTeam

Scores shown: dbprop:team = 3; dbprop:draftTeam = 0, then 1.
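One possible reading of this step is a per-row vote. In practice the candidate properties for a row could come from a SPARQL query such as `SELECT ?p WHERE { <http://dbpedia.org/resource/Allen_Iverson> ?p <http://dbpedia.org/resource/Philadelphia_76ers> }`; below they are hard-coded from the slide, and the counting rule and the helper names are our assumptions.

```python
from collections import Counter
from typing import Iterable, Set, Tuple


def score_relations(row_relations: Iterable[Set[str]]) -> Counter:
    """Count, for each candidate property, how many rows it connects."""
    counts: Counter = Counter()
    for rels in row_relations:
        counts.update(set(rels))  # each row votes at most once per property
    return counts


def best_relation(row_relations: Iterable[Set[str]]) -> Tuple[str, int]:
    counts = score_relations(row_relations)
    if not counts:
        return ("", 0)  # no relation found between the two columns
    return counts.most_common(1)[0]


rows = [
    {"dbprop:team"},                      # Michael Jordan - Chicago
    {"dbprop:team", "dbprop:draftTeam"},  # Allen Iverson - Philadelphia
    {"dbprop:team", "dbprop:draftTeam"},  # Yao Ming - Houston
]
print(best_relation(rows))  # ('dbprop:team', 3)
```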


Annotating web tables for the Semantic Web

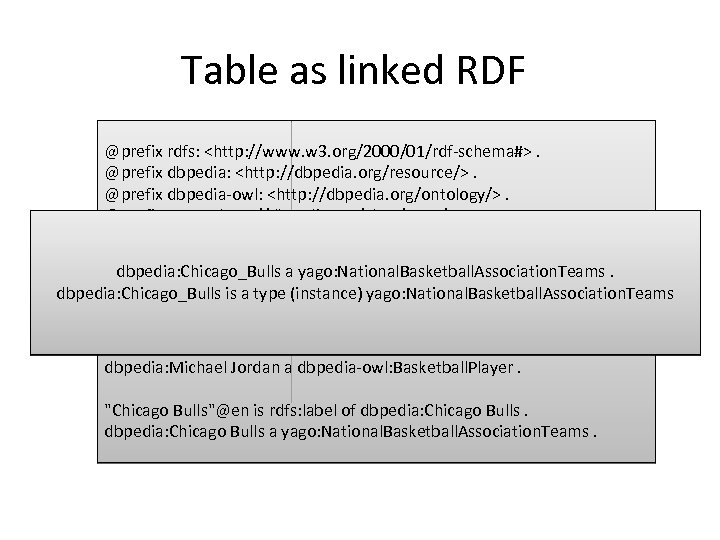

Table as linked RDF
- `@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .`
- `@prefix dbpedia: <http://dbpedia.org/resource/> .`
- `@prefix dbpedia-owl: <http://dbpedia.org/ontology/> .`
- `@prefix yago: <http://dbpedia.org/class/yago/> .`
- `"Name"@en is rdfs:label of dbpedia-owl:BasketballPlayer .`
- `"Team"@en is rdfs:label of yago:NationalBasketballAssociationTeams .`
- `"Michael Jordan"@en is rdfs:label of dbpedia:Michael_Jordan a dbpedia-owl:BasketballPlayer .`
- `"Chicago Bulls"@en is rdfs:label of dbpedia:Chicago_Bulls a yago:NationalBasketballAssociationTeams .`

Callouts on the slide:
- "Team"@en is the common (human) name for the class, recorded as its rdfs:label
- dbpedia:Chicago_Bulls is an instance of yago:NationalBasketballAssociationTeams
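The annotations can be serialized as N-Triples with plain Python. This is a sketch under assumptions: the `dbprop:` namespace is taken to be `http://dbpedia.org/property/`, and `table_to_ntriples` with its three input lists is a hypothetical helper, not the T2LD code.

```python
from typing import Dict, List, Tuple

RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"
DBR = "http://dbpedia.org/resource/"
DBO = "http://dbpedia.org/ontology/"
DBPROP = "http://dbpedia.org/property/"
YAGO = "http://dbpedia.org/class/yago/"


def iri(u: str) -> str:
    return f"<{u}>"


def en_literal(text: str) -> str:
    """N-Triples literal with an @en tag; escapes backslashes and double quotes."""
    escaped = text.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"@en'


def table_to_ntriples(header_classes: Dict[str, str],
                      cell_links: List[Tuple[str, str, str]],
                      relations: List[Tuple[str, str, str]]) -> List[str]:
    """header_classes: header text -> class IRI
    cell_links: (label text, entity IRI, class IRI)
    relations: (subject IRI, property IRI, object IRI)"""
    triples = []
    for header, cls in header_classes.items():
        triples.append(f"{iri(cls)} {iri(RDFS_LABEL)} {en_literal(header)} .")
    for text, ent, cls in cell_links:
        triples.append(f"{iri(ent)} {iri(RDFS_LABEL)} {en_literal(text)} .")
        triples.append(f"{iri(ent)} {iri(RDF_TYPE)} {iri(cls)} .")
    for s, p, o in relations:
        triples.append(f"{iri(s)} {iri(p)} {iri(o)} .")
    return triples


lines = table_to_ntriples(
    {"Name": DBO + "BasketballPlayer", "Team": YAGO + "NationalBasketballAssociationTeams"},
    [("Michael Jordan", DBR + "Michael_Jordan", DBO + "BasketballPlayer"),
     ("Chicago Bulls", DBR + "Chicago_Bulls", YAGO + "NationalBasketballAssociationTeams")],
    [(DBR + "Michael_Jordan", DBPROP + "team", DBR + "Chicago_Bulls")],
)
print("\n".join(lines))
```

N-Triples was chosen over Turtle here only because every line is self-contained, which keeps the generator trivial; a real system would more likely use an RDF library.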

Results

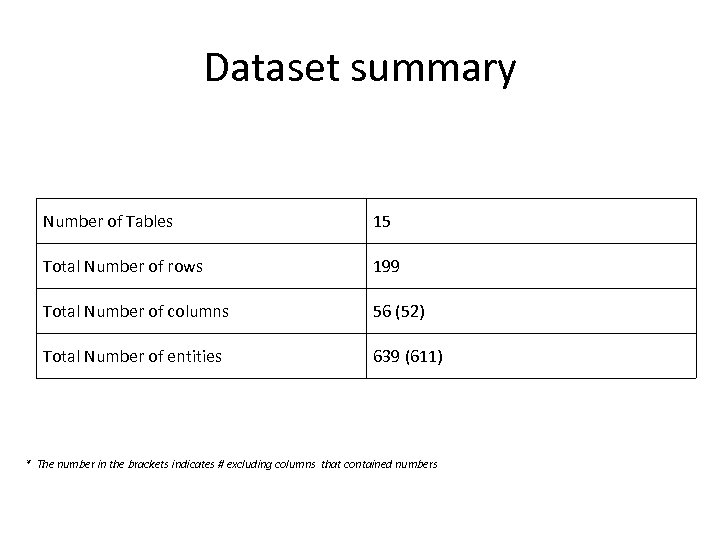

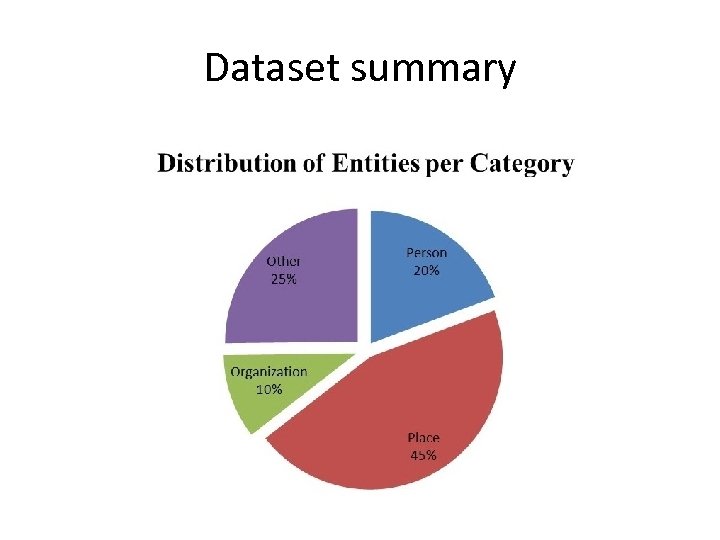

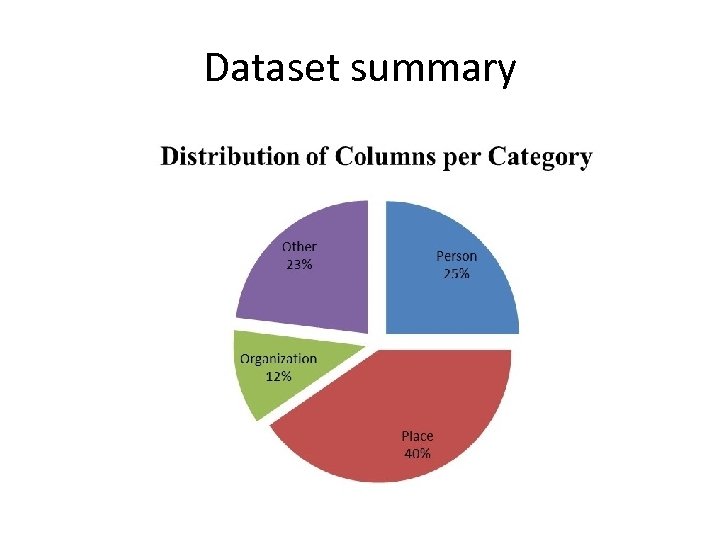

Dataset summary

| Statistic | Value |
|---|---|
| Number of tables | 15 |
| Total number of rows | 199 |
| Total number of columns | 56 (52) |
| Total number of entities | 639 (611) |

Note: the numbers in parentheses exclude columns that contained numbers.


Evaluation for class label predictions

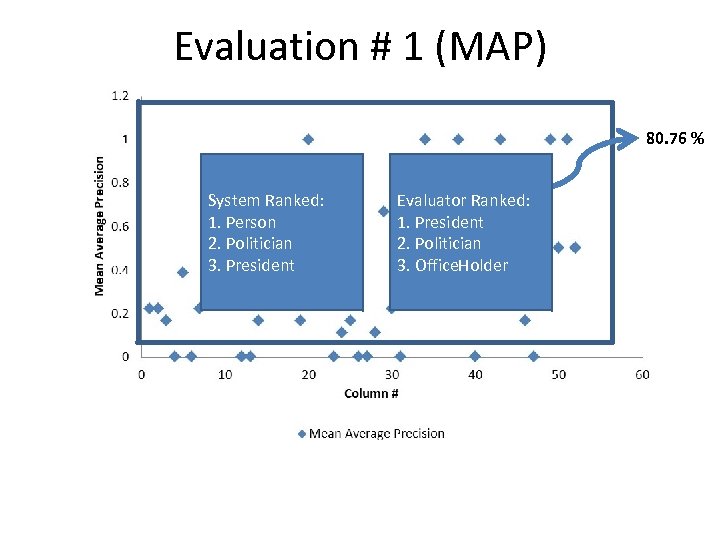

Evaluation #1 (MAP)
- Compared the system's ranked list of labels against a human-ranked list of labels
- Metric: Mean Average Precision (MAP)
- Commonly used in Information Retrieval to compare two ranked sets

Evaluation #1 (MAP): 80.76%

Example for one column:
- System ranked: 1. Person, 2. Politician, 3. President
- Evaluator ranked: 1. President, 2. Politician, 3. OfficeHolder
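A sketch of average precision for the example above, assuming the evaluator's labels act as the relevant set and the evaluator's own ordering is ignored; the slides do not say how the human ranking enters the computation. MAP is then the mean over all columns. Function names are illustrative.

```python
from typing import List, Sequence, Tuple


def average_precision(system: Sequence[str], relevant: Sequence[str]) -> float:
    """Mean of precision@k at each rank k holding a relevant label,
    divided by the number of relevant labels."""
    gold = set(relevant)
    if not gold:
        return 0.0
    hits, total = 0, 0.0
    for k, label in enumerate(system, start=1):
        if label in gold:
            hits += 1
            total += hits / k  # precision at rank k
    return total / len(gold)


def mean_average_precision(columns: List[Tuple[Sequence[str], Sequence[str]]]) -> float:
    if not columns:
        return 0.0
    return sum(average_precision(s, r) for s, r in columns) / len(columns)


system = ["Person", "Politician", "President"]
evaluator = ["President", "Politician", "OfficeHolder"]
print(round(average_precision(system, evaluator), 3))  # 0.389
```

For this column the relevant labels Politician and President appear at ranks 2 and 3, so AP = (1/2 + 2/3) / 3 ≈ 0.389.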

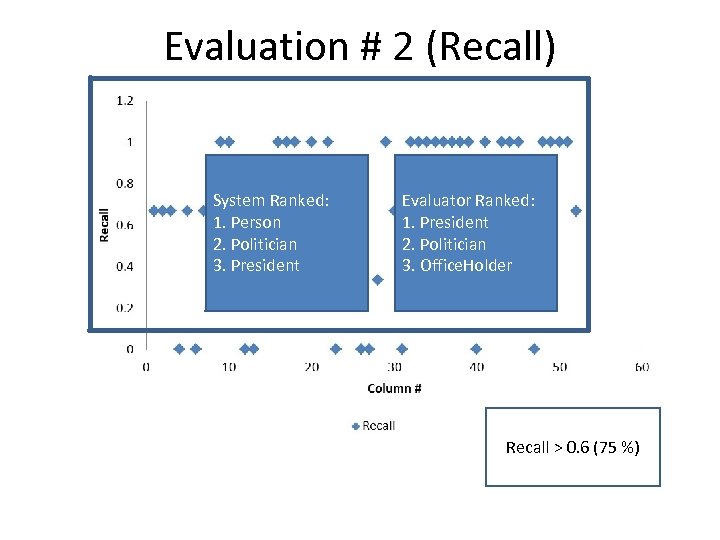

Evaluation #2 (Recall)
- System ranked: 1. Person, 2. Politician, 3. President
- Evaluator ranked: 1. President, 2. Politician, 3. OfficeHolder
- Recall > 0.6 (75%)
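Recall for one column is the share of the evaluator's labels that the system also predicted. Reading "(75%)" as the share of columns whose recall exceeds 0.6 is our assumption; the hypothetical helper `share_above` computes that kind of figure.

```python
from typing import List, Sequence, Tuple


def recall(system: Sequence[str], evaluator: Sequence[str]) -> float:
    """Fraction of the evaluator's labels that also appear in the system's list."""
    gold = set(evaluator)
    if not gold:
        return 0.0
    return len(gold & set(system)) / len(gold)


def share_above(columns: List[Tuple[Sequence[str], Sequence[str]]],
                threshold: float = 0.6) -> float:
    """Fraction of columns whose recall exceeds the threshold."""
    if not columns:
        return 0.0
    return sum(recall(s, e) > threshold for s, e in columns) / len(columns)


system = ["Person", "Politician", "President"]
evaluator = ["President", "Politician", "OfficeHolder"]
print(round(recall(system, evaluator), 3))  # 0.667
```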

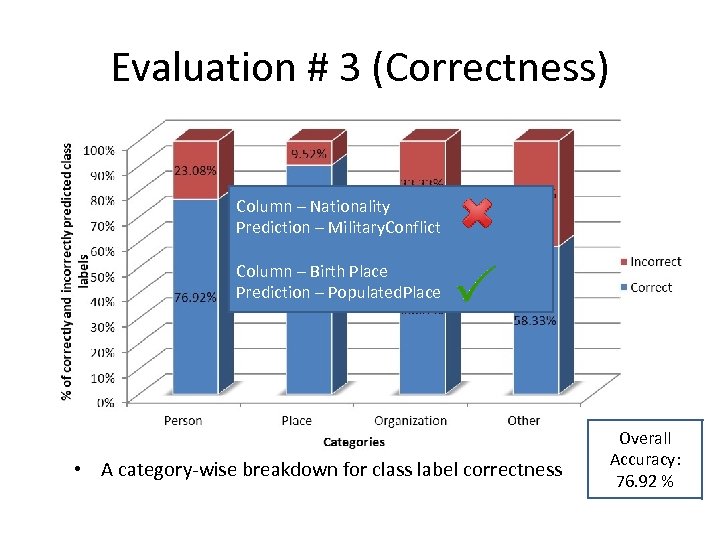

Evaluation #3 (Correctness)
- Evaluated whether our predicted class labels were "fair and correct"
- A class label may not be the most accurate one, but may still be correct
  - E.g., dbpedia-owl:PopulatedPlace is not the most accurate, but is still a correct label for a column of cities
- Three human judges evaluated our predicted class labels

Evaluation #3 (Correctness)
- A category-wise breakdown of class label correctness
- Examples: column "Nationality", prediction MilitaryConflict; column "Birth Place", prediction PopulatedPlace
- Overall accuracy: 76.92%

Evaluation for linking table cells to entities

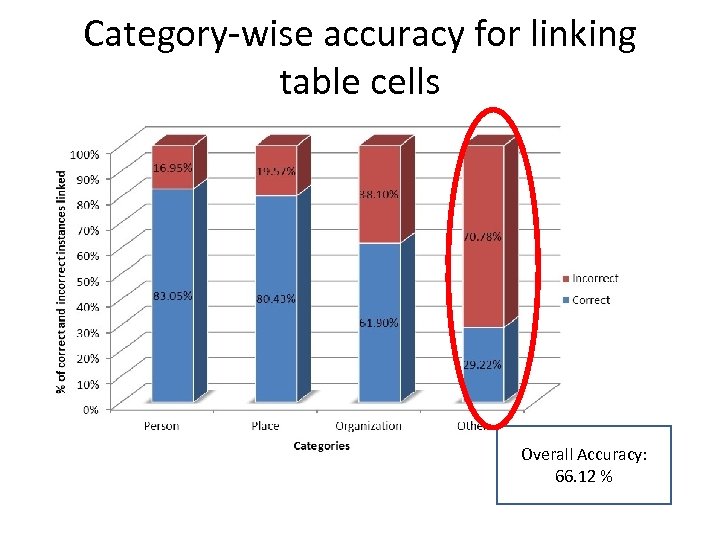

Category-wise accuracy for linking table cells

Overall accuracy: 66.12%

Relation between columns
- Idea: ask human evaluators to identify relations between columns in a given table
- Pilot experiment: asked three evaluators to annotate five random tables from our dataset
- Evaluators identified 20 relations
- Our accuracy: 5 out of 20 (25%) were correct

Conclusion and Future Work

Conclusion
- We have demonstrated that it is possible to develop an automated framework for converting tables and spreadsheets to linked data
- Extending and adapting this framework for open government data
- Discovery of new relations between entities

References
- Cafarella, M. J., Halevy, A., Wang, D. Z., Wu, E., and Zhang, Y. 2008. WebTables: exploring the power of tables on the web. Proc. VLDB Endow. 1(1), 538-549.
- Barrasa, J., Corcho, Ó., and Gómez-Pérez, A. 2004. R2O, an extensible and semantically based database-to-ontology mapping language. In Proceedings of the 2nd Workshop on Semantic Web and Databases (SWDB 2004), Vol. 3372, pp. 1069-1070.
- Hu, W., and Qu, Y. 2007. Discovering simple mappings between relational database schemas and ontologies. In Aberer, K., Choi, K.-S., Noy, N. F., Allemang, D., Lee, K. I., Nixon, L. J. B., Golbeck, J., Mika, P., Maynard, D., Mizoguchi, R., Schreiber, G., and Cudré-Mauroux, P., eds., ISWC/ASWC, volume 4825 of Lecture Notes in Computer Science, 225-238. Springer.
- Papapanagiotou, P., Katsiouli, P., Tsetsos, V., Anagnostopoulos, C., and Hadjiefthymiades, S. 2006. RONTO: Relational to ontology schema matching. In AIS SIGSEMIS Bulletin.
- Lawrence, E. D. R. 2004. Composing mappings between schemas using a reference ontology. In Proceedings of the International Conference on Ontologies, Databases and Application of Semantics (ODBASE), 783-800. Springer.
- Han, L., Finin, T., Parr, C., Sachs, J., and Joshi, A. 2008. RDF123: from Spreadsheets to RDF. In Seventh International Semantic Web Conference. Springer.
- Han, L., Finin, T., and Yesha, Y. 2009. Finding semantic web ontology terms from words. In Proceedings of the Eighth International Semantic Web Conference. Springer.
- Limaye, G., Sarawagi, S., and Chakrabarti, S. 2010. Annotating and searching web tables using entities, types and relationships. In Proceedings of the 36th International Conference on Very Large Databases (VLDB).

This work was supported by:
