c5da93eb4ab447ebf7f24d0b3dfa8c85.ppt

- Number of slides: 16

Using Information Technology and Artificial Intelligence to Build an E-Glass for the Blinds
KHALED AL-SARAYREH, RAFA E. AL-QUTAISH
The Higher Council for Affairs of Persons with Disabilities, JORDAN
khalid_sar@asu.edu.jo, rafa@ieee.org

Abstract
- IT and communication systems are used in so many areas of our lives that we cannot imagine living without them.
- From this starting point came the idea of building an e-glass that uses an embedded system and neural networks.
- Blind people could use this e-glass to find their way without assistance from other people, thus improving their mobility and independent living.

Abstract
- It is important to note that the hardware and software components of the e-glass are inexpensive.
- Using this glass can give blind people self-confidence, let them walk independently, and raise their morale.

Introduction
- Building an e-glass can be seen as a first step toward using technology as an integrated or replacement part of the damaged human neural system, which will help make life easier for blind people.

Introduction
- The proposed e-glass uses an electronic circuit to scan and collect information about every object in front of the blind user. It then analyses these objects and gives the user a voice command to keep away from the obstacles.

General View of the E-Glass
- The e-glass works with ultrasonic waves: it sends radar signals over distances from 2 meters to 50 meters, with a 120-degree vertical and horizontal cover angle plus 60 degrees to cover the right and left, giving a total cover angle of 270 degrees. The information received from the radar system is then analyzed by the artificial intelligence software, which produces a warning about any obstacle through a headphone.
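The ranging step described above can be illustrated with a minimal time-of-flight sketch. The slides give neither a formula nor code, so the speed of sound (343 m/s, air at about 20 °C), the function names, and the range gate are assumptions made for illustration only.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: air at about 20 degrees C; not stated in the slides
MIN_RANGE_M = 2.0           # lower bound of the working range given in the slides
MAX_RANGE_M = 50.0          # upper bound of the working range given in the slides


def echo_distance_m(round_trip_s: float) -> float:
    """Distance to the reflecting object, from the echo round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the object and back, hence the division by 2.
    return SPEED_OF_SOUND_M_S * round_trip_s / 2.0


def in_working_range(distance_m: float) -> bool:
    """True if the distance lies inside the 2 m to 50 m working range."""
    return MIN_RANGE_M <= distance_m <= MAX_RANGE_M


if __name__ == "__main__":
    # Hypothetical round-trip times in seconds.
    for t in (0.005, 0.02, 0.2, 0.4):
        d = echo_distance_m(t)
        print(f"{t * 1000:.0f} ms -> {d:.2f} m, in range: {in_working_range(d)}")
```

Under these assumptions, an echo from an object at the 50-meter limit takes roughly 0.29 s to return, which bounds how often a single transmitter could send a new pulse.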

General View of the E-Glass
- The e-glass can identify any small object with an area of 2 cm² or more from a distance of 2 meters.
- As future work, the e-glass could be embedded in the electronic devices within cars. In addition, the embedded electronic circuit programs could be developed to receive neural signals from the human brain and use pattern recognition to analyse objects as images.

How Does the E-Glass Work?
- First phase: the receiver (radar) is mounted on the glass and all the data collected from the radar are analysed. This analysis is done by the programs of the first electronic circuit, and an electronic signal is then sent to the electronic warning and alarm device (the headphone).

How Does the E-Glass Work?
- Second phase: the electronic signal is sent from the first electronic circuit to the second electronic circuit, which contains the artificial intelligence system, to give a scaled warning based on the degree of danger.
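One way to picture the scaled warning of the second phase is a mapping from obstacle distance to a warning level. The slides do not say how the degree of danger is computed (they attribute it to the artificial intelligence system), so the thresholds, the level names, and the functions `warning_level` and `headphone_message` below are hypothetical stand-ins for that component.

```python
from enum import IntEnum
from typing import Optional


class WarningLevel(IntEnum):
    NONE = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical thresholds in metres; the slides give only the 2 m to 50 m range.
HIGH_BELOW_M = 5.0
MEDIUM_BELOW_M = 15.0
MAX_RANGE_M = 50.0


def warning_level(distance_m: Optional[float]) -> WarningLevel:
    """Map the nearest obstacle distance to a scaled warning level."""
    if distance_m is None or distance_m > MAX_RANGE_M:
        return WarningLevel.NONE  # nothing detected within range
    if distance_m < HIGH_BELOW_M:
        return WarningLevel.HIGH
    if distance_m < MEDIUM_BELOW_M:
        return WarningLevel.MEDIUM
    return WarningLevel.LOW


def headphone_message(level: WarningLevel) -> str:
    """Text standing in for the audio cue sent to the headphone."""
    messages = {
        WarningLevel.NONE: "",
        WarningLevel.LOW: "Obstacle ahead.",
        WarningLevel.MEDIUM: "Obstacle approaching, slow down.",
        WarningLevel.HIGH: "Obstacle very close, stop.",
    }
    return messages[level]


if __name__ == "__main__":
    for d in (3.0, 10.0, 30.0, None):
        level = warning_level(d)
        print(d, level.name, headphone_message(level))
```

A fixed threshold table is the simplest possible choice; a trained classifier, as the slides suggest, could replace `warning_level` without changing the rest of the flow.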

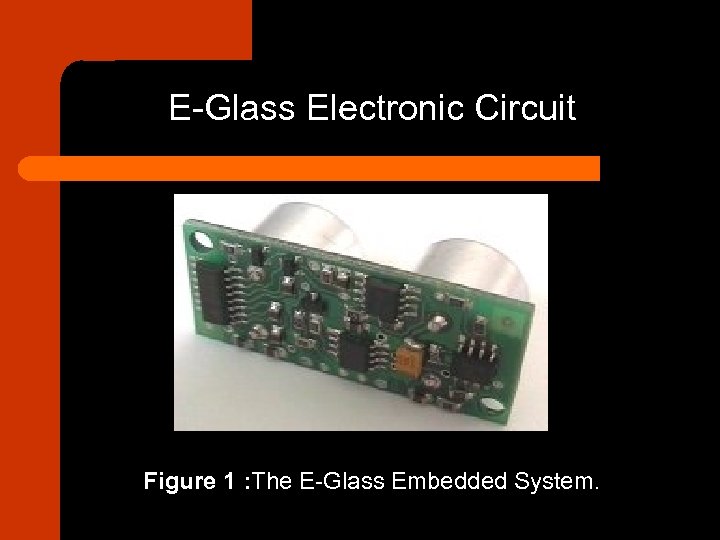

E-Glass Electronic Circuit
Figure 1: The E-Glass Embedded System.

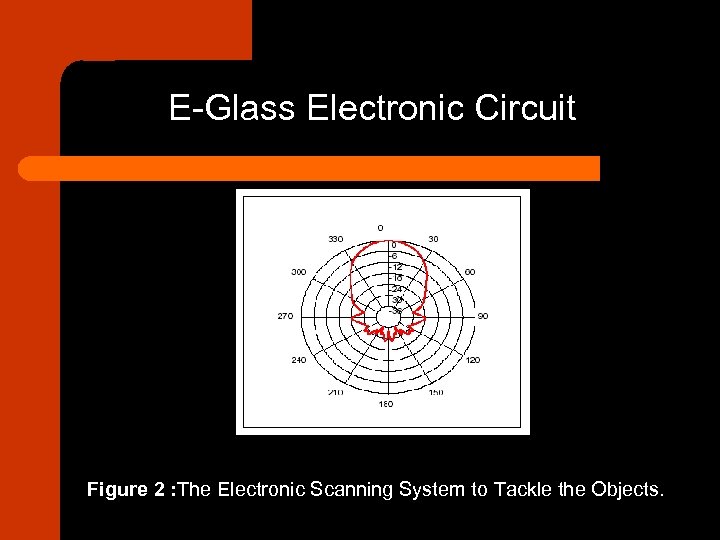

E-Glass Electronic Circuit
Figure 2: The Electronic Scanning System to Tackle the Objects.

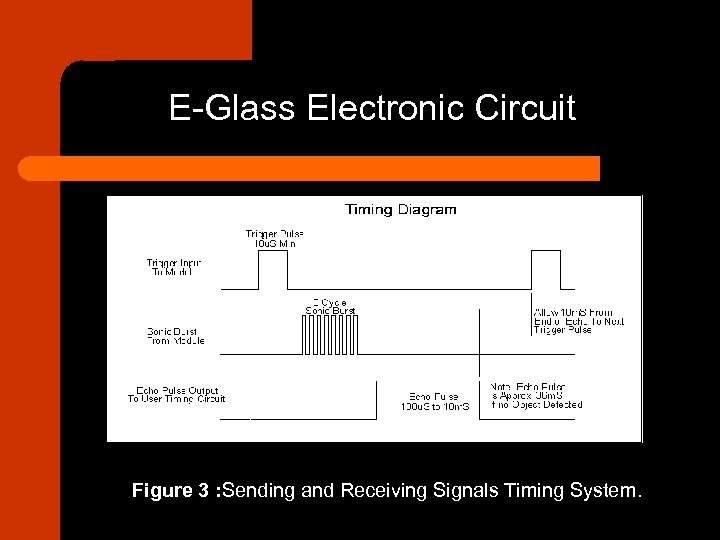

E-Glass Electronic Circuit
Figure 3: Sending and Receiving Signals Timing System.

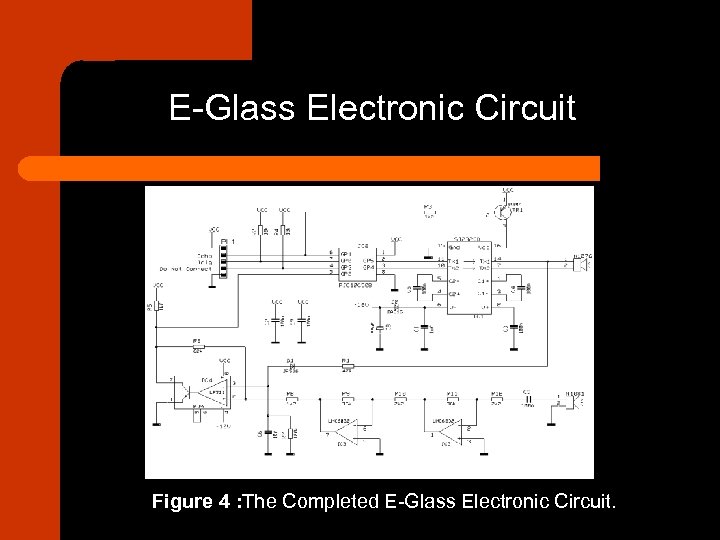

E-Glass Electronic Circuit
Figure 4: The Completed E-Glass Electronic Circuit.

Conclusion
- Research on using IT to assist people with special needs is scarce, which makes this work distinctive from a social point of view. With this research we have only just started a large effort to make the lives of people with special needs easier.

