- Number of slides: 39

Using GPCE Principles for Hardware Systems and Accelerators (bridging the gap to HW design)

GPCE '09, October 4, 2009

Rishiyur S. Nikhil, CTO, www.bluespec.com

This seems to be a conference about improving software development...

“Generative and component approaches are revolutionizing software development... GPCE provides a venue for researchers and practitioners interested in foundational techniques for enhancing the productivity, quality, and time-to-market in software development... In addition to exploring cutting-edge techniques for developing generative and component-based software, our goal is to foster further cross-fertilization between the software engineering research community and the programming languages community.”

...so why am I here talking about hardware design? Two reasons...

Reason (1): you may be interested in seeing how the principles highlighted below...

“Generative Programming (developing programs that synthesize other programs), Component Engineering (raising the level of modularization and analysis in application design), and Domain-Specific Languages (elevating program specifications to compact domain-specific notations that are easier to write, maintain, and analyze) are key technologies for automating program development... enhancing the productivity, quality, and time-to-market in software development that stems from deploying standard components and automating program generation...”

...are used with equal capability and effectiveness in HW design.

Reason (2): I would like to tempt you to upgrade from being only a software engineer (v1.0)... to “The Compleat Computation-ware Engineere (v2.0)” (HW and SW)... where you think of hardware computation as an important (and easy to use) component in your toolbox when you solve your next problem.

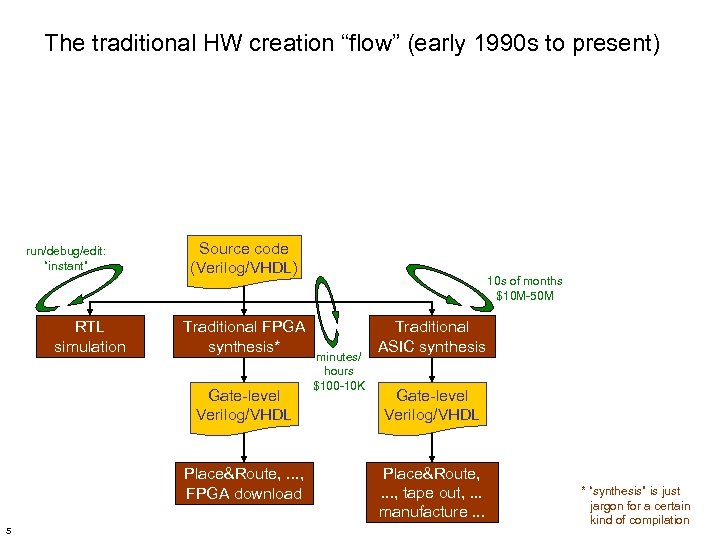

The traditional HW creation “flow” (early 1990s to present)

Source code (Verilog/VHDL) feeds three paths:

- RTL simulation (run/debug/edit: “instant”)
- Traditional FPGA synthesis (see note) → Gate-level Verilog/VHDL → Place & Route, ..., FPGA download (minutes/hours; $100-10K)
- Traditional ASIC synthesis → Gate-level Verilog/VHDL → Place & Route, ..., tape out, ..., manufacture... (10s of months; $10M-50M)

Note: “synthesis” is just jargon for a certain kind of compilation.

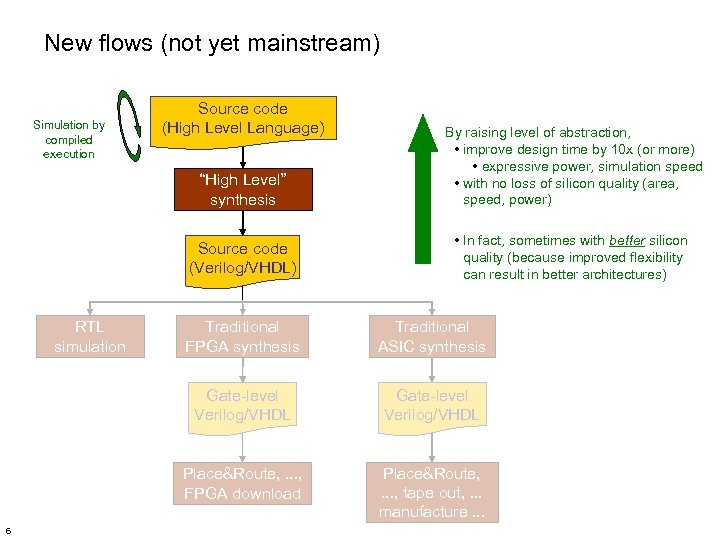

New flows (not yet mainstream)

Source code (High Level Language) feeds two paths:

- Simulation by compiled execution
- “High Level” synthesis → Source code (Verilog/VHDL)

Source code (Verilog/VHDL) then follows the traditional flow:

- RTL simulation
- Traditional FPGA synthesis → Gate-level Verilog/VHDL → Place & Route, ..., FPGA download
- Traditional ASIC synthesis → Gate-level Verilog/VHDL → Place & Route, ..., tape out, ..., manufacture...

By raising the level of abstraction:

- improve design time by 10x (or more)
- improve expressive power and simulation speed
- with no loss of silicon quality (area, speed, power)
- in fact, sometimes with better silicon quality (because improved flexibility can result in better architectures)

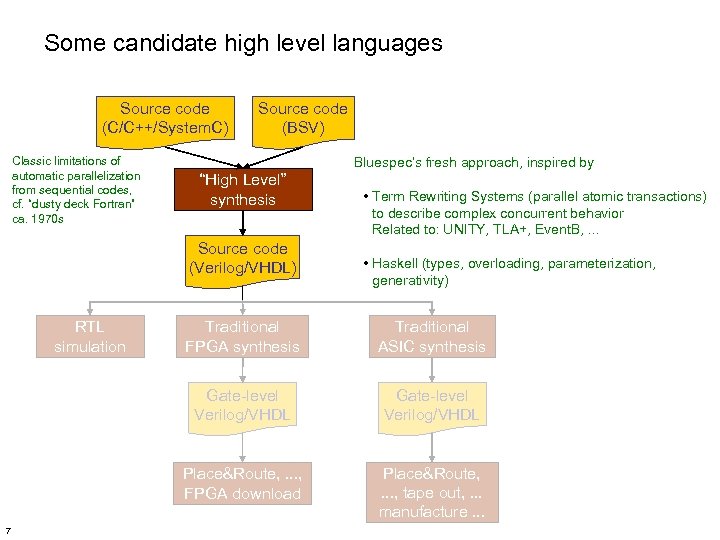

Some candidate high level languages

- Source code (C/C++/SystemC): classic limitations of automatic parallelization from sequential codes, cf. “dusty deck Fortran” ca. 1970s
- Source code (BSV): Bluespec’s fresh approach, inspired by
  - Term Rewriting Systems (parallel atomic transactions) to describe complex concurrent behavior. Related to: UNITY, TLA+, Event-B, ...
  - Haskell (types, overloading, parameterization, generativity)

Either kind of source goes through “High Level” synthesis to Source code (Verilog/VHDL), which then follows the traditional flow: RTL simulation; traditional FPGA synthesis, Gate-level Verilog/VHDL, Place & Route, ..., FPGA download; traditional ASIC synthesis, Gate-level Verilog/VHDL, Place & Route, ..., tape out, ..., manufacture...

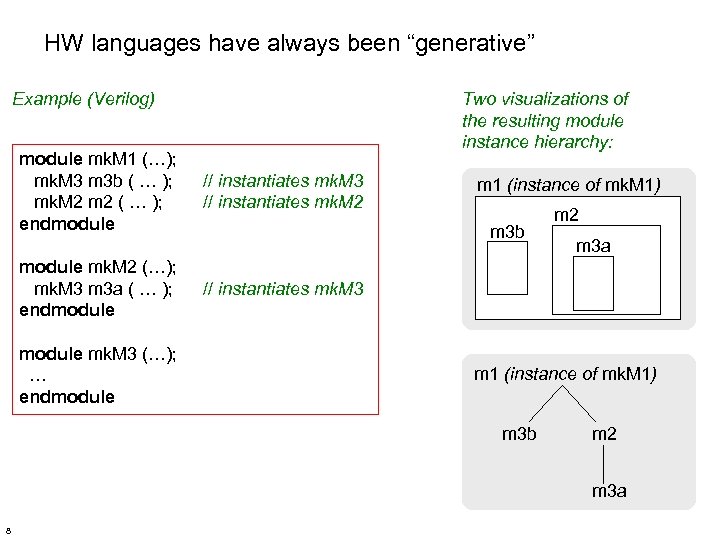

HW languages have always been “generative”

Example (Verilog):

    module mkM1 (…);
       mkM3 m3b ( … );   // instantiates mkM3
       mkM2 m2 ( … );    // instantiates mkM2
    endmodule

    module mkM2 (…);
       mkM3 m3a ( … );   // instantiates mkM3
    endmodule

    module mkM3 (…);
       …
    endmodule

Two visualizations of the resulting module instance hierarchy (both depict the same tree):

    m1 (instance of mkM1)
       m3b
       m2
          m3a

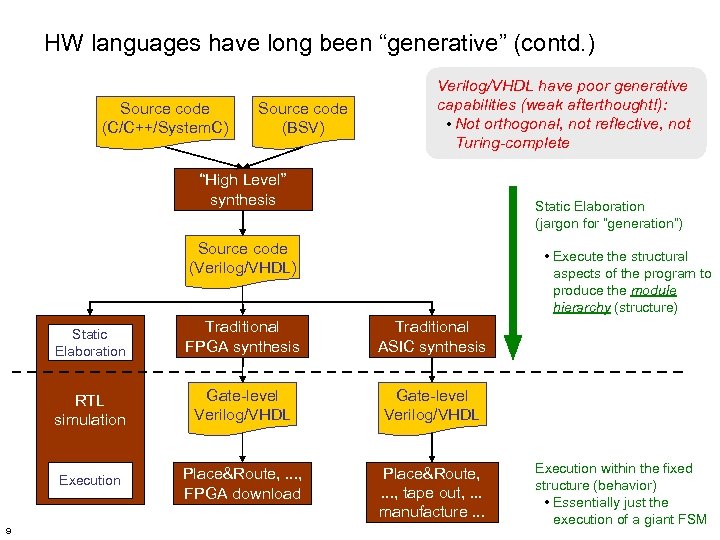

HW languages have long been “generative” (contd.)

Verilog/VHDL have poor generative capabilities (weak afterthought!):

- Not orthogonal, not reflective, not Turing-complete

Static Elaboration (jargon for “generation”):

- Execute the structural aspects of the program to produce the module hierarchy (structure)

Execution within the fixed structure (behavior):

- Essentially just the execution of a giant FSM

(Flow diagram as on the previous slides: Source code (C/C++/SystemC) and Source code (BSV), “High Level” synthesis, Source code (Verilog/VHDL), RTL simulation, traditional FPGA and ASIC synthesis, Gate-level Verilog/VHDL, Place & Route, ...; now annotated with where Static Elaboration and Execution take place.)
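
As a rough software analogy for static elaboration, the structural part of a design can be treated as a small program whose output is the instance tree. The sketch below is in Python rather than an HDL, and the names `MODULES`, `elaborate`, and `show` are hypothetical, chosen only to mirror the mkM1/mkM2/mkM3 example; it does not describe how any real tool is implemented.

```python
# Hypothetical model: each module definition lists the
# (instance name, module name) pairs that it instantiates.
MODULES = {
    "mkM1": [("m3b", "mkM3"), ("m2", "mkM2")],
    "mkM2": [("m3a", "mkM3")],
    "mkM3": [],
}


def elaborate(module, instance):
    """Run the structural part of the 'program' and return the instance tree."""
    children = [elaborate(child_mod, child_name)
                for child_name, child_mod in MODULES[module]]
    return {"instance": instance, "module": module, "children": children}


def show(tree, depth=0):
    """Render the tree as indented lines, one line per instance."""
    lines = ["  " * depth + f"{tree['instance']} (instance of {tree['module']})"]
    for child in tree["children"]:
        lines.extend(show(child, depth + 1))
    return lines


if __name__ == "__main__":
    print("\n".join(show(elaborate("mkM1", "m1"))))
```

Once `elaborate` has returned, the structure is fixed. Everything that happens afterwards corresponds to "execution within the fixed structure".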

I’m now going to show you some code examples for some non-trivial HW designs. I hope, at the end of this, you’ll say: “Hey! I could do that!” even if you’ve never designed HW before!

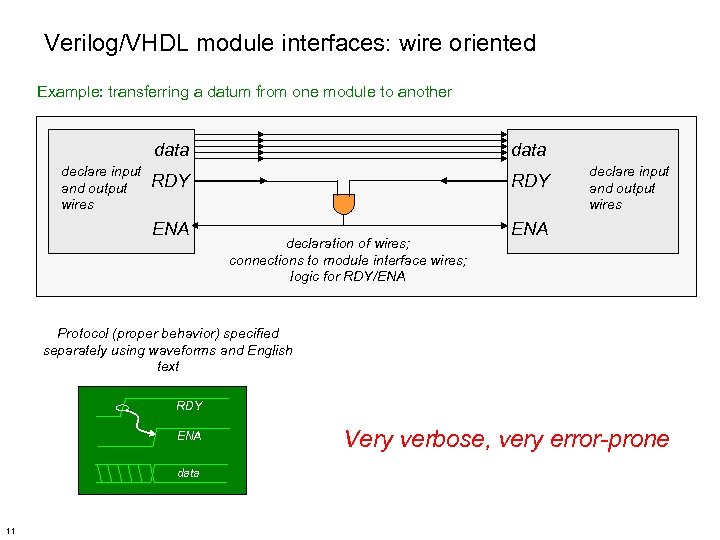

Verilog/VHDL module interfaces: wire oriented

Example: transferring a datum from one module to another over data, RDY, and ENA wires

- In each of the two modules: declare input and output wires
- Between the modules: declaration of wires; connections to module interface wires; logic for RDY/ENA
- Protocol (proper behavior) specified separately using waveforms and English text

Very verbose, very error-prone

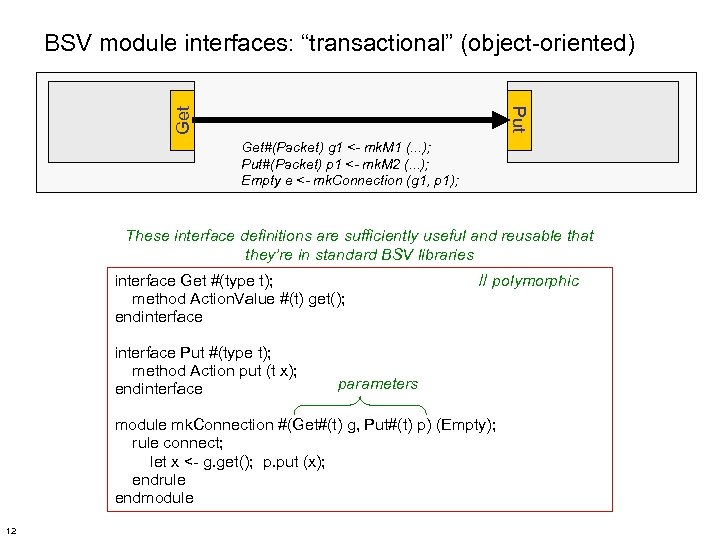

BSV module interfaces: “transactional” (object-oriented)

    Get#(Packet) g1 <- mkM1 (...);
    Put#(Packet) p1 <- mkM2 (...);
    Empty        e  <- mkConnection (g1, p1);

These interface definitions are sufficiently useful and reusable that they’re in standard BSV libraries:

    interface Get #(type t);              // polymorphic parameters
       method ActionValue #(t) get();
    endinterface

    interface Put #(type t);
       method Action put (t x);
    endinterface

    module mkConnection #(Get#(t) g, Put#(t) p) (Empty);
       rule connect;
          let x <- g.get();
          p.put (x);
       endrule
    endmodule
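
For readers coming from software, the behavior of the `connect` rule can be approximated in Python. This is only a sketch. The `Fifo` class, its `can_get`/`can_put` methods, and `connect` are hypothetical stand-ins, and the explicit readiness checks model what the slide's interfaces leave implicit (a `get` is only possible when a datum is available, a `put` only when there is room).

```python
from collections import deque


class Fifo:
    """Toy bounded FIFO standing in for a hardware buffer (hypothetical)."""

    def __init__(self, depth=2):
        self.items = deque()
        self.depth = depth

    # Get side: ready only when the FIFO is non-empty.
    def can_get(self):
        return len(self.items) > 0

    def get(self):
        return self.items.popleft()

    # Put side: ready only when there is room.
    def can_put(self):
        return len(self.items) < self.depth

    def put(self, x):
        self.items.append(x)


def connect(g, p):
    """Model of rule 'connect': move one datum from g to p, or do nothing."""
    def rule():
        if g.can_get() and p.can_put():   # readiness of both get and put
            p.put(g.get())
            return True
        return False
    return rule


# Three data items in the source, but the destination only has room for two.
src, dst = Fifo(depth=4), Fifo(depth=2)
for v in (1, 2, 3):
    src.put(v)
rule = connect(src, dst)
fired = [rule() for _ in range(4)]   # attempt the rule four times
```

In this run the rule fires twice and then stalls because `dst` is full. The third item stays in `src` rather than being lost, which is the point of tying the transfer to both readiness conditions.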

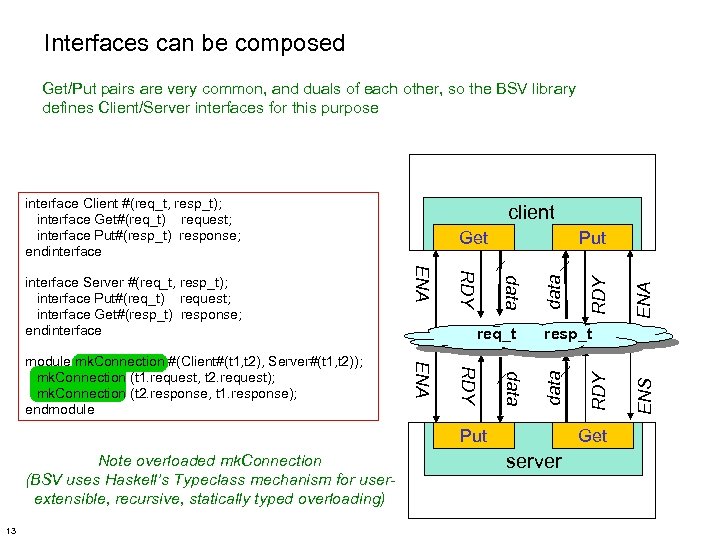

Interfaces can be composed

Get/Put pairs are very common, and duals of each other, so the BSV library defines Client/Server interfaces for this purpose:

    interface Client #(type req_t, type resp_t);
       interface Get#(req_t)  request;
       interface Put#(resp_t) response;
    endinterface

    interface Server #(type req_t, type resp_t);
       interface Put#(req_t)  request;
       interface Get#(resp_t) response;
    endinterface

    // c and s name the client and server arguments
    module mkConnection #(Client#(t1, t2) c, Server#(t1, t2) s);
       mkConnection (c.request, s.request);
       mkConnection (s.response, c.response);
    endmodule

Note the overloaded mkConnection (BSV uses Haskell’s typeclass mechanism for user-extensible, recursive, statically typed overloading).

(Diagram: a client and a server; the client's Get/Put pair is wired to the server's Put/Get pair, each connection carrying data, RDY, and ENA signals for req_t and resp_t.)

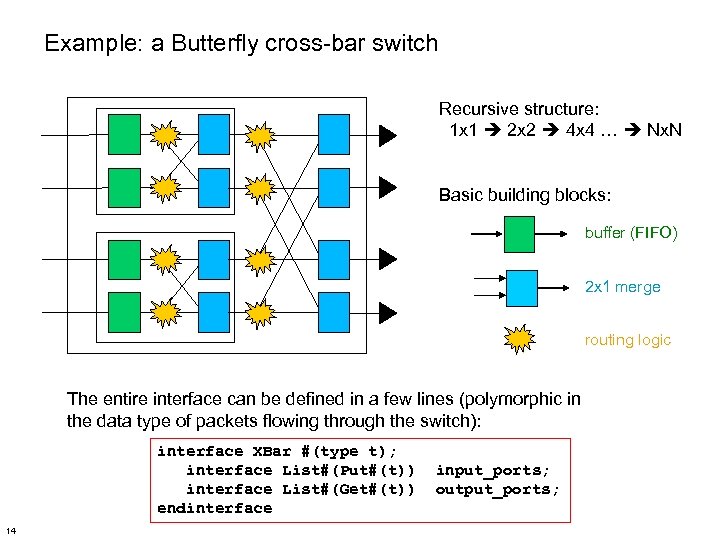

Example: a Butterfly cross-bar switch

Recursive structure: 1x1, 2x2, 4x4, …, NxN

Basic building blocks: buffer (FIFO), 2x1 merge, routing logic

The entire interface can be defined in a few lines (polymorphic in the data type of packets flowing through the switch):

    interface XBar #(type t);
       interface List#(Put#(t)) input_ports;
       interface List#(Get#(t)) output_ports;
    endinterface

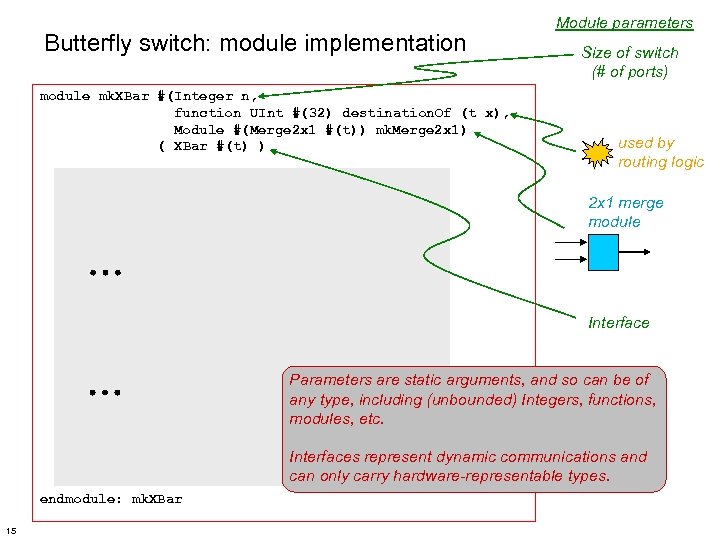

Butterfly switch: module implementation

    module mkXBar #(Integer n,                                // size of switch (# of ports)
                    function UInt #(32) destinationOf (t x),  // used by routing logic
                    Module #(Merge2x1 #(t)) mkMerge2x1)       // 2x1 merge module
                  ( XBar #(t) );                              // interface
       ...
    endmodule: mkXBar

Module parameters are static arguments, and so can be of any type, including (unbounded) Integers, functions, modules, etc.

Interfaces represent dynamic communications and can only carry hardware-representable types.

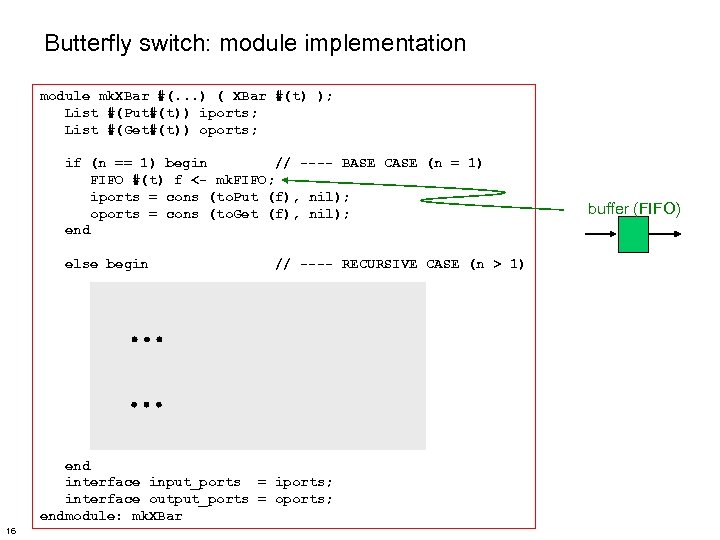

Butterfly switch: module implementation

    module mkXBar #(...) ( XBar #(t) );
       List #(Put#(t)) iports;
       List #(Get#(t)) oports;

       if (n == 1) begin   // ---- BASE CASE (n = 1): a single buffer (FIFO)
          FIFO #(t) f <- mkFIFO;
          iports = cons (toPut (f), nil);
          oports = cons (toGet (f), nil);
       end
       else begin          // ---- RECURSIVE CASE (n > 1)
          ...
       end

       interface input_ports  = iports;
       interface output_ports = oports;
    endmodule: mkXBar

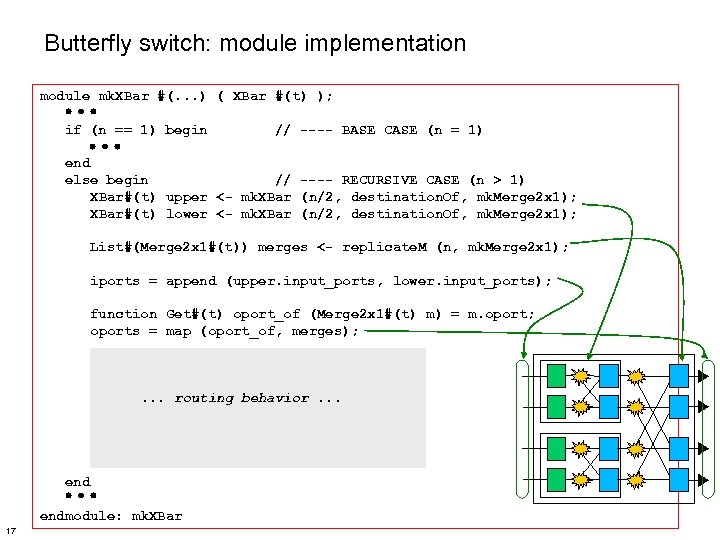

Butterfly switch: module implementation

    module mkXBar #(...) ( XBar #(t) );
       ...
       if (n == 1) begin   // ---- BASE CASE (n = 1)
          ...
       end
       else begin          // ---- RECURSIVE CASE (n > 1)
          XBar#(t) upper <- mkXBar (n/2, destinationOf, mkMerge2x1);
          XBar#(t) lower <- mkXBar (n/2, destinationOf, mkMerge2x1);
          List#(Merge2x1#(t)) merges <- replicateM (n, mkMerge2x1);

          iports = append (upper.input_ports, lower.input_ports);

          function Get#(t) oport_of (Merge2x1#(t) m) = m.oport;
          oports = map (oport_of, merges);

          ... routing behavior ...
       end
       ...
    endmodule: mkXBar

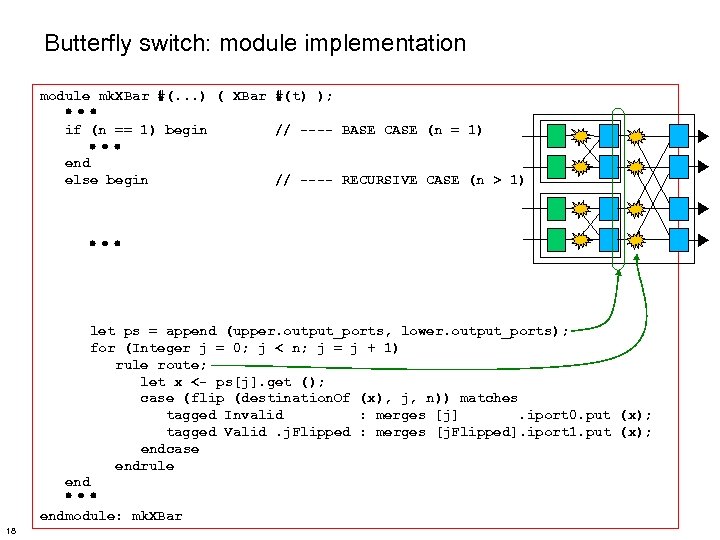

Butterfly switch: module implementation

    module mkXBar #(...) ( XBar #(t) );
       ...
       if (n == 1) begin   // ---- BASE CASE (n = 1)
          ...
       end
       else begin          // ---- RECURSIVE CASE (n > 1)
          ...
          let ps = append (upper.output_ports, lower.output_ports);

          for (Integer j = 0; j < n; j = j + 1)
             rule route;
                let x <- ps[j].get ();
                case (flip (destinationOf (x), j, n)) matches
                   tagged Invalid         : merges [j].iport0.put (x);
                   tagged Valid .jFlipped : merges [jFlipped].iport1.put (x);
                endcase
             endrule
       end
       ...
    endmodule: mkXBar

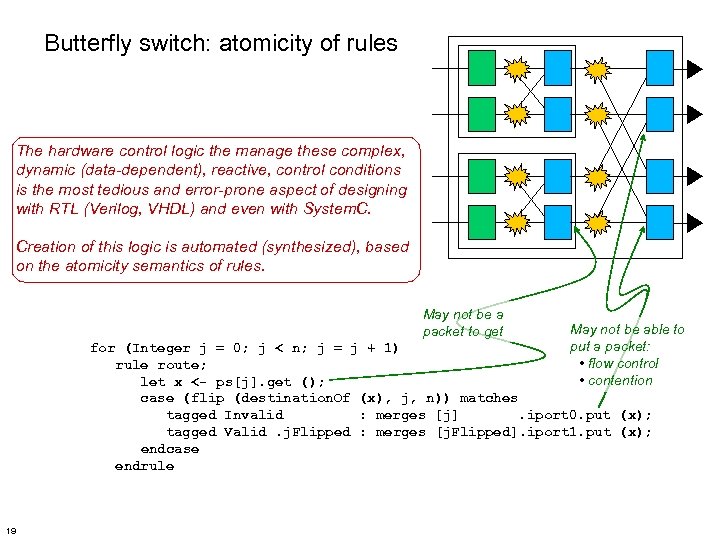

Butterfly switch: atomicity of rules

    for (Integer j = 0; j < n; j = j + 1)
       rule route;
          let x <- ps[j].get ();
          case (flip (destinationOf (x), j, n)) matches
             tagged Invalid         : merges [j].iport0.put (x);
             tagged Valid .jFlipped : merges [jFlipped].iport1.put (x);
          endcase
       endrule

Control conditions in this rule:

- At the get: there may not be a packet to get
- At the put: it may not be possible to put a packet (flow control, contention)

The hardware control logic to manage these complex, dynamic (data-dependent), reactive control conditions is the most tedious and error-prone aspect of designing with RTL (Verilog, VHDL) and even with SystemC. Creation of this logic is automated (synthesized), based on the atomicity semantics of rules.
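
To make the all-or-nothing behavior concrete, here is a minimal Python model of several `route`-like rules competing for merge ports. It is a sketch under stated assumptions. `Port`, `make_route_rule`, and `run_cycle` are hypothetical names, each port is assumed to accept one packet per cycle, and rules are tried in a fixed priority order. None of this is meant to describe the scheduler a BSV compiler actually generates.

```python
from collections import deque


class Port:
    """Input port that accepts at most one put per clock cycle (assumption)."""

    def __init__(self):
        self.received = []
        self.busy = False            # set once a put has happened this cycle

    def can_put(self):
        return not self.busy

    def put(self, x):
        self.received.append(x)
        self.busy = True


def make_route_rule(source, ports, destination_of):
    """Build one rule that moves the head packet of `source`, all or nothing."""
    def rule():
        if not source:                       # there may not be a packet to get
            return False
        target = ports[destination_of(source[0])]
        if not target.can_put():             # contention: port already used
            return False
        target.put(source.popleft())         # get and put happen together
        return True
    return rule


def run_cycle(rules, ports):
    """Try each rule once in fixed priority order, then start a new cycle."""
    fired = [rule() for rule in rules]
    for port in ports:
        port.busy = False
    return fired


# Two sources both hold a packet for port 0, so they contend in the first cycle.
ports = [Port(), Port()]
a, b = deque([0, 1]), deque([0])
rules = [make_route_rule(a, ports, lambda pkt: pkt),
         make_route_rule(b, ports, lambda pkt: pkt)]
first = run_cycle(rules, ports)    # only the first rule fires; b keeps its packet
second = run_cycle(rules, ports)   # no contention now, so both rules fire
```

A rule that cannot complete has no effect at all, so the stalled packet is neither dropped nor duplicated. In RTL the designer writes that guarantee by hand; with rules it follows from the semantics.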

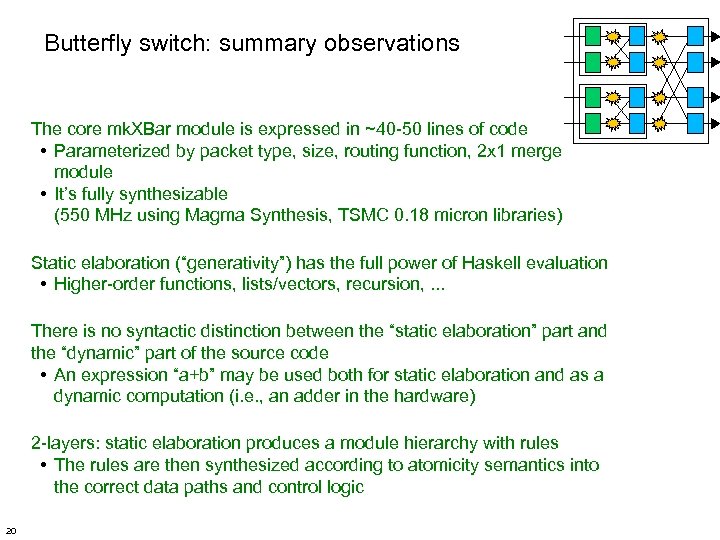

Butterfly switch: summary observations

The core mkXBar module is expressed in ~40-50 lines of code

- Parameterized by packet type, size, routing function, 2x1 merge module
- It’s fully synthesizable (550 MHz using Magma Synthesis, TSMC 0.18 micron libraries)

Static elaboration (“generativity”) has the full power of Haskell evaluation

- Higher-order functions, lists/vectors, recursion, ...

There is no syntactic distinction between the “static elaboration” part and the “dynamic” part of the source code

- An expression “a+b” may be used both for static elaboration and as a dynamic computation (i.e., an adder in the hardware)

2 layers: static elaboration produces a module hierarchy with rules

- The rules are then synthesized according to atomicity semantics into the correct data paths and control logic
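
The recursive elaboration can be mimicked in a few lines of Python to see how much hardware a given switch size unfolds into. This is a sketch that follows the recursion shown on the slides (one FIFO in the base case; two half-size switches plus n 2x1 merges otherwise). The function name `elaborate_xbar` and the power-of-two check are assumptions added for illustration.

```python
def elaborate_xbar(n):
    """Return (fifos, merges) instantiated by an n-port switch."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    if n == 1:
        return (1, 0)                         # base case: a single FIFO
    f_up, m_up = elaborate_xbar(n // 2)       # upper half-size switch
    f_lo, m_lo = elaborate_xbar(n // 2)       # lower half-size switch
    return (f_up + f_lo, m_up + m_lo + n)     # plus n merges at this level


if __name__ == "__main__":
    for size in (1, 2, 4, 8):
        print(size, elaborate_xbar(size))
```

Unfolding the recurrence gives n FIFOs and n·log2(n) merges, all produced at elaboration time from the same short recursive description.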

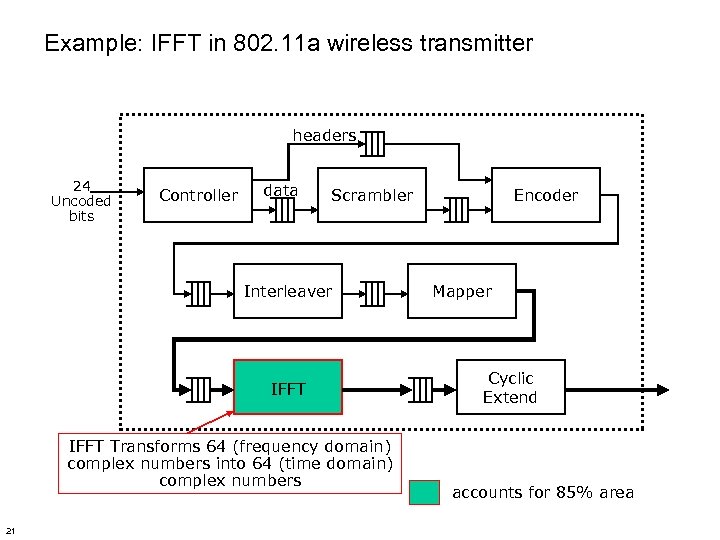

Example: IFFT in 802.11a wireless transmitter

(Block diagram: Controller, Scrambler, Encoder, Interleaver, Mapper, IFFT, Cyclic Extend; labels: headers, data, 24 uncoded bits.)

IFFT:

- Transforms 64 (frequency domain) complex numbers into 64 (time domain) complex numbers
- Accounts for 85% of the area

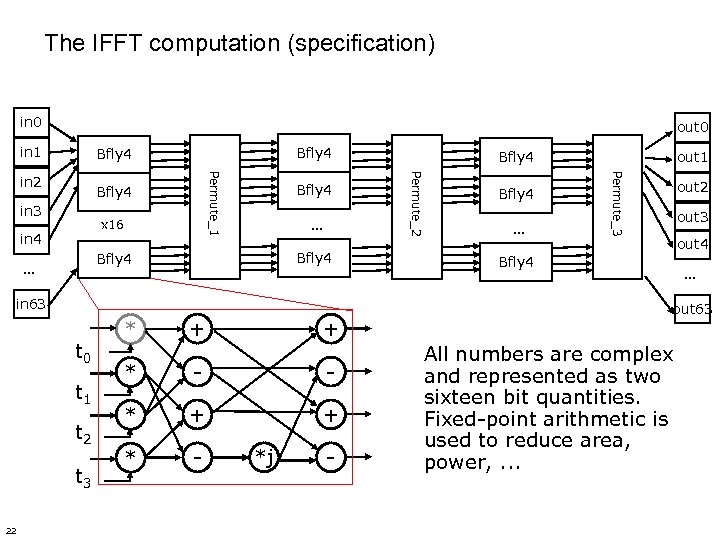

The IFFT computation (specification)

(Diagram: inputs in0 … in63 flow through stages of Bfly4 blocks, x16 per stage, separated by the permutations Permute_1, Permute_2, and Permute_3, producing out0 … out63. An inset shows one Bfly4, which combines its inputs with t0 … t3 using *, +, -, and *j operations.)

All numbers are complex and represented as two sixteen-bit quantities. Fixed-point arithmetic is used to reduce area, power, ...

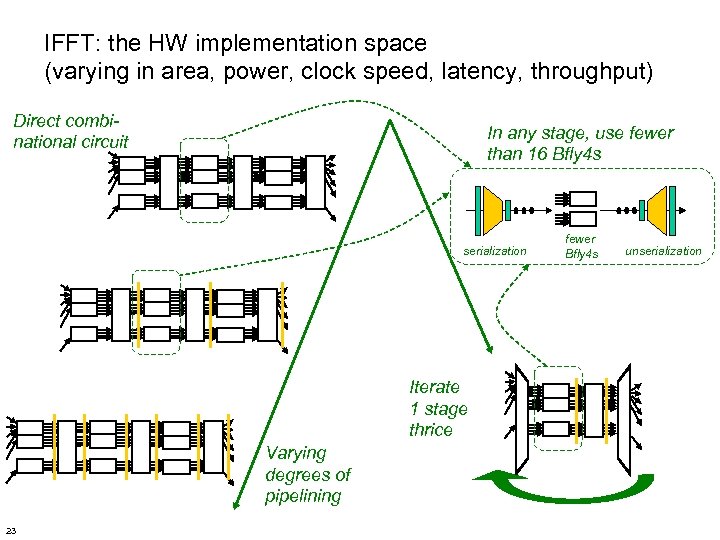

IFFT: the HW implementation space (varying in area, power, clock speed, latency, throughput)

- Direct combinational circuit
- Varying degrees of pipelining
- Iterate 1 stage thrice
- In any stage, use fewer than 16 Bfly4s (serialization, fewer Bfly4s, unserialization)

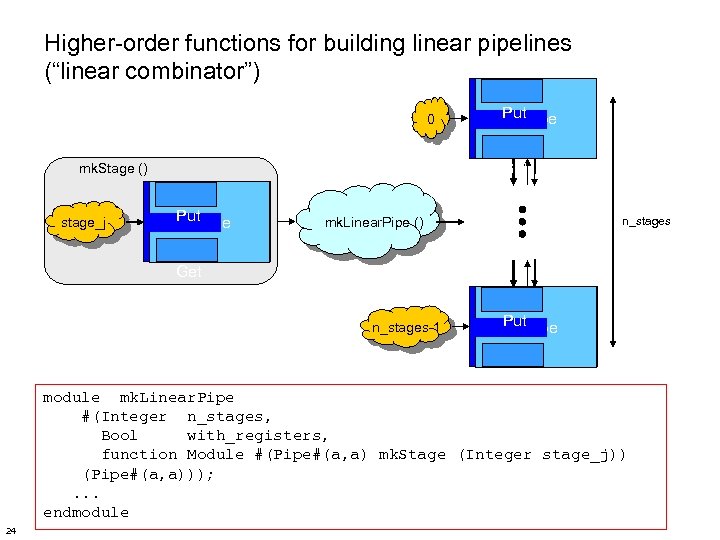

Higher-order functions for building linear pipelines (“linear combinator”)

(Diagram: mkLinearPipe () chains stages 0 … n_stages-1 between a Put end and a Get end; each stage_j is a Pipe built by mkStage ().)

    module mkLinearPipe #(Integer n_stages,
                          Bool with_registers,
                          function Module #(Pipe#(a, a)) mkStage (Integer stage_j))
                        (Pipe#(a, a));
       ...
    endmodule
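
In software terms, the linear combinator is a higher-order function: it takes a stage generator and returns the composed pipeline. The Python sketch below shows only that idea. `mk_linear_pipe` and `mk_stage` are hypothetical names, stages are modelled as plain functions, and the `with_registers` option from the slide is deliberately left out.

```python
def mk_linear_pipe(n_stages, mk_stage):
    """Compose stages 0..n_stages-1, each produced by mk_stage(j)."""
    # 'Elaboration': build the fixed list of stages once.
    stages = [mk_stage(j) for j in range(n_stages)]

    def pipe(x):
        # 'Execution': data flows through the fixed structure.
        for stage in stages:
            x = stage(x)
        return x

    return pipe


# Example stage generator: stage j doubles its input and adds j.
def mk_stage(j):
    return lambda x: x * 2 + j


pipe3 = mk_linear_pipe(3, mk_stage)
result = pipe3(1)   # ((1*2 + 0)*2 + 1)*2 + 2
```

Changing `n_stages` or swapping `mk_stage` yields a different pipeline from the same source, which is the generative point being made.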

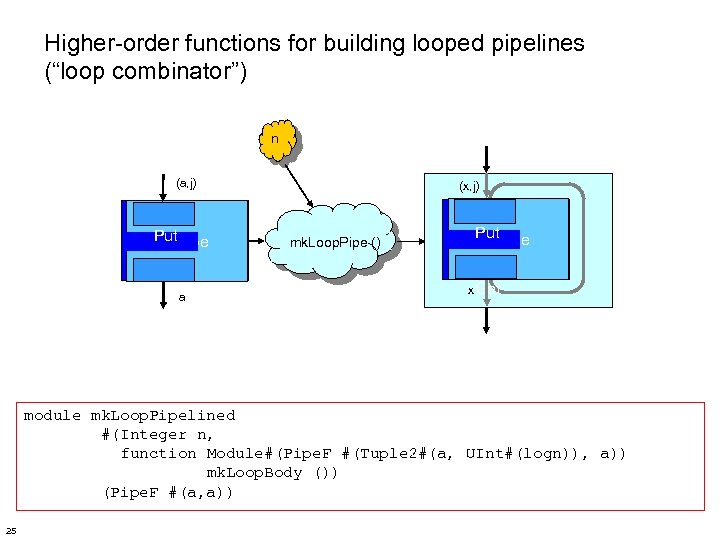

Higher-order functions for building looped pipelines (“loop combinator”)

(Diagram: mkLoopPipe () sends (x, j) pairs through a single loop body n times between its Put end and its Get end.)

    module mkLoopPipelined #(Integer n,
                             function Module#(PipeF #(Tuple2#(a, UInt#(logn)), a)) mkLoopBody ())
                           (PipeF #(a, a))
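
The loop combinator can be sketched the same way in Python: a single body is reused n times and is told which iteration it is on. The names `mk_loop_pipe` and `loop_body` are hypothetical, and the body is modelled as a plain function on an (x, j) pair rather than as a hardware module.

```python
def mk_loop_pipe(n, loop_body):
    """Reuse one loop body n times; the body receives (value, iteration index)."""
    def pipe(x):
        for j in range(n):
            x = loop_body((x, j))
        return x
    return pipe


# Example body: double the value and add the iteration index.
def loop_body(pair):
    x, j = pair
    return x * 2 + j


looped = mk_loop_pipe(3, loop_body)
result = looped(1)   # same function as three distinct stages 0, 1, 2 in a row
```

Functionally this matches a three-stage linear pipeline whose stage j applies the same operation. The difference is structural, with one copy of the body instead of three, and that is why the two architectures trade area against clock speed and throughput.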

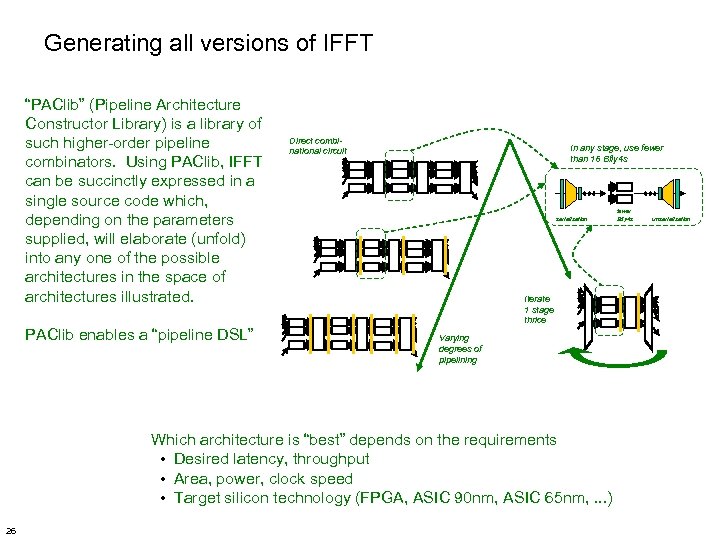

Generating all versions of IFFT

“PAClib” (Pipeline Architecture Constructor Library) is a library of such higher-order pipeline combinators. Using PAClib, IFFT can be succinctly expressed in a single source code which, depending on the parameters supplied, will elaborate (unfold) into any one of the possible architectures in the space of architectures illustrated:

- Direct combinational circuit
- Varying degrees of pipelining
- Iterate 1 stage thrice
- In any stage, use fewer than 16 Bfly4s (serialization, fewer Bfly4s, unserialization)

PAClib enables a “pipeline DSL”.

Which architecture is “best” depends on the requirements:

- Desired latency, throughput
- Area, power, clock speed
- Target silicon technology (FPGA, ASIC 90 nm, ASIC 65 nm, ...)

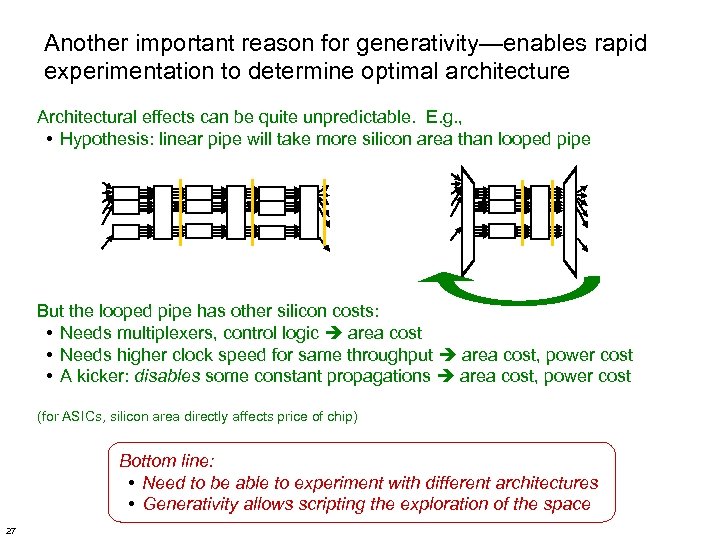

Another important reason for generativity: it enables rapid experimentation to determine the optimal architecture

Architectural effects can be quite unpredictable. E.g.,

- Hypothesis: linear pipe will take more silicon area than looped pipe

But the looped pipe has other silicon costs:

- Needs multiplexers, control logic (area cost)
- Needs higher clock speed for same throughput (area cost, power cost)
- A kicker: disables some constant propagations (area cost, power cost)

(For ASICs, silicon area directly affects price of chip.)

Bottom line:

- Need to be able to experiment with different architectures
- Generativity allows scripting the exploration of the space
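
A small worked example of the clock-speed point, under idealized assumptions: each pass through a stage takes one cycle, there are no stalls, and a fully pipelined design accepts one item per cycle. The function name and the figure of 20 million items per second are purely illustrative and are not taken from the talk.

```python
def required_clock_mhz(throughput_mitems_per_s, passes_per_item):
    """Clock needed when each item occupies the shared stage for several cycles."""
    return throughput_mitems_per_s * passes_per_item


target = 20                                   # illustrative: 20 M items per second
linear_clock = required_clock_mhz(target, 1)  # three separate stages, one pass each
looped_clock = required_clock_mhz(target, 3)  # one stage reused three times
```

Under these assumptions the looped design needs three times the clock rate of the linear one to sustain the same throughput. That is one way its apparent area saving can be eroded once timing has to be met.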

I hope that by now you’re saying: “Hey! Writing HW programs doesn’t look too hard!” (Has all the creature comforts of a modern high-level programming language.)

But, so what?

- Why would I want to compute something directly in HW?
- Even if I want to, aren’t the costs and logistics of actually putting something in HW just too high a barrier?

Why implement things in HW? Reason (1): Speed

Direct implementation in HW typically

- removes a layer of interpretation, and interpretation generally costs an order of magnitude in speed
- can exploit more parallelism

Two ways to execute application X:

- Interpret: instructions (program) for application X, run on a fixed machine (e.g., x86, GPGPU, Cell)
- Run: X-machine (fine-grain parallel)

Caveat: lots of devils in the details

- Interpretation at GHz may still be faster than direct execution at MHz
- Interpretation with monster memory bandwidth may still be faster than direct execution with anemic memory bandwidth

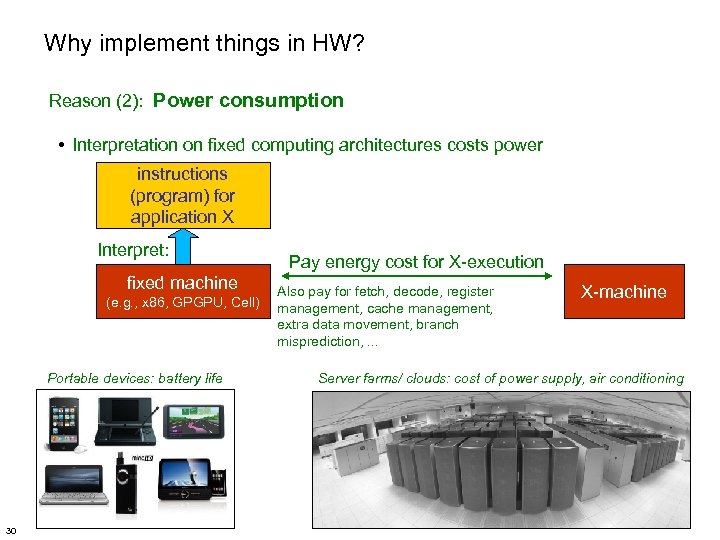

Why implement things in HW? Reason (2): Power consumption

- Interpretation on fixed computing architectures costs power

Interpret: instructions (program) for application X on a fixed machine (e.g., x86, GPGPU, Cell), versus direct execution on an X-machine

- Pay energy cost for X-execution
- Also pay for fetch, decode, register management, cache management, extra data movement, branch misprediction, ...

Why it matters:

- Portable devices: battery life
- Server farms/clouds: cost of power supply, air conditioning

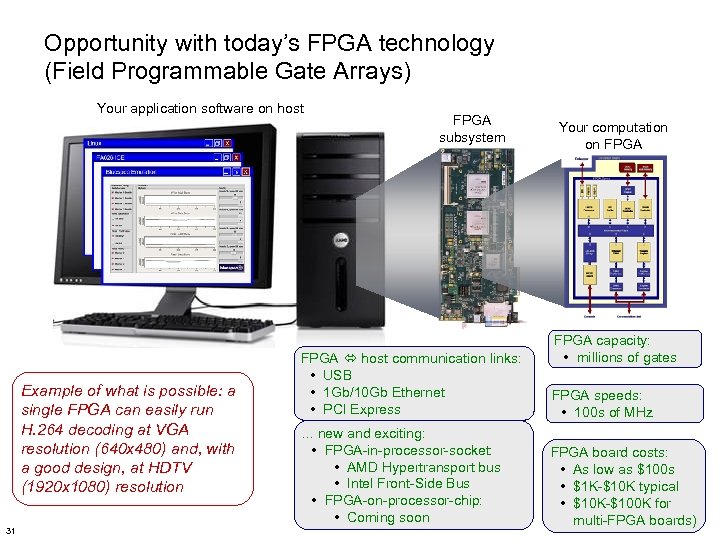

Opportunity with today’s FPGA technology (Field Programmable Gate Arrays)

Setup: your application software on the host; your computation on the FPGA subsystem.

FPGA capacity:

- millions of gates

FPGA speeds:

- 100s of MHz

FPGA host communication links:

- USB
- 1 Gb/10 Gb Ethernet
- PCI Express
- ... new and exciting:
  - FPGA-in-processor-socket: AMD Hypertransport bus, Intel Front-Side Bus
  - FPGA-on-processor-chip: coming soon

FPGA board costs:

- As low as $100s
- $1K-$10K typical
- $10K-$100K for multi-FPGA boards

Example of what is possible: a single FPGA can easily run H.264 decoding at VGA resolution (640x480) and, with a good design, at HDTV (1920x1080) resolution.

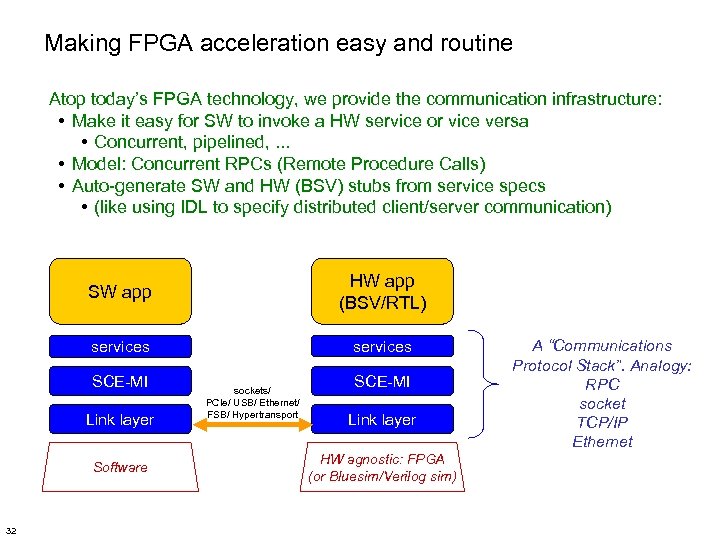

Making FPGA acceleration easy and routine

Atop today’s FPGA technology, we provide the communication infrastructure:

- Make it easy for SW to invoke a HW service or vice versa
- Concurrent, pipelined, ...
- Model: Concurrent RPCs (Remote Procedure Calls)
- Auto-generate SW and HW (BSV) stubs from service specs (like using IDL to specify distributed client/server communication)

A “Communications Protocol Stack” (analogy: RPC, socket, TCP/IP, Ethernet):

- Software side: SW app, services, SCE-MI, Link layer
- Hardware side: HW app (BSV/RTL), services, SCE-MI, Link layer; HW agnostic: FPGA (or Bluesim/Verilog sim)
- Between the two link layers: sockets/PCIe/USB/Ethernet/FSB/Hypertransport

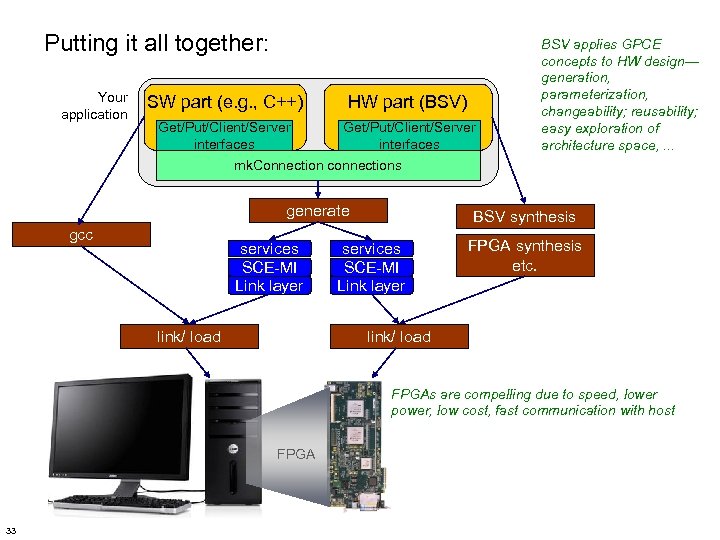
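The "concurrent RPCs" model above can be illustrated with a small software-only sketch. It is not the generated stub code and does not use SCE-MI: the `hw_service` thread merely stands in for the hardware side, and the names `SquareStub`, `call_many`, and the squaring service are hypothetical, chosen for illustration. The point it shows is pipelining: all requests are issued before any response is read, and tags match responses back to requests.

```python
import queue
import threading


def hw_service(requests, responses):
    """Stand-in for the hardware side: serve requests until a None sentinel."""
    while True:
        item = requests.get()
        if item is None:
            break
        tag, x = item
        responses.put((tag, x * x))  # hypothetical service: square the argument


class SquareStub:
    """Hypothetical software stub; a real one would be generated from a service spec."""

    def __init__(self):
        self.requests = queue.Queue()
        self.responses = queue.Queue()
        self.worker = threading.Thread(
            target=hw_service, args=(self.requests, self.responses)
        )
        self.worker.start()

    def call_many(self, args):
        # Issue every request before reading any response (pipelined calls).
        for tag, x in enumerate(args):
            self.requests.put((tag, x))
        results = [None] * len(args)
        for _ in args:
            tag, y = self.responses.get()
            results[tag] = y  # the tag matches each response to its request
        return results

    def close(self):
        self.requests.put(None)  # sentinel stops the service thread
        self.worker.join()


stub = SquareStub()
squares = stub.call_many([1, 2, 3, 4])
stub.close()
print(squares)  # [1, 4, 9, 16]
```

In a real deployment the two queues would be replaced by the link layer (PCIe, Ethernet, and so on), which is why the application code can stay agnostic about whether the other side is an FPGA or a simulator.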

Putting it all together

Your application:
• SW part (e.g., C++)
• HW part (BSV)
• Joined by Get/Put/Client/Server interfaces and mkConnection connections

[Diagram: build flow]
• SW side: generate, gcc, link/load, running on services, SCE-MI, link layer
• HW side: BSV synthesis, FPGA synthesis etc., link/load, running on services, SCE-MI, link layer on the FPGA

BSV applies GPCE concepts to HW design: generation, parameterization, changeability, reusability, easy exploration of architecture space, ...

FPGAs are compelling due to speed, lower power, low cost, and fast communication with the host.

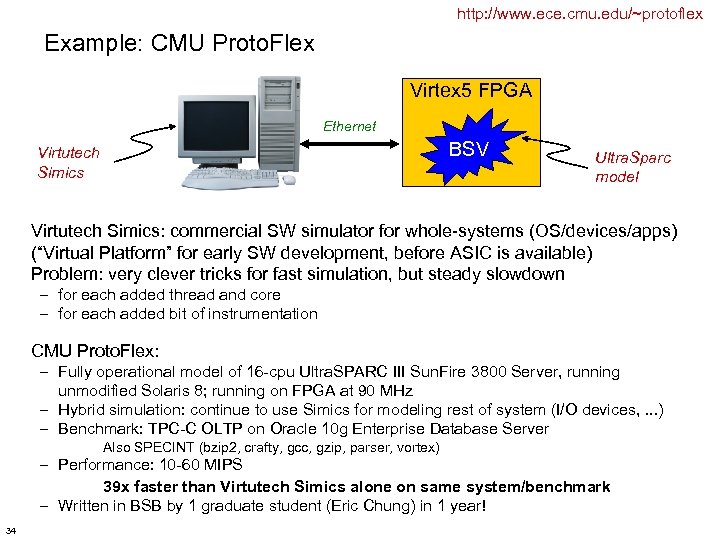
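As a rough software analogy for the Get/Put interfaces and mkConnection mentioned above, the sketch below models a connection as a step that moves one item from a Get side to a Put side whenever both are ready. This is an illustration in Python, not BSV: the `Fifo` class, `make_connection`, and the depths used are assumptions made for the example, and real BSV connections are scheduled as hardware rules rather than called in a loop.

```python
from collections import deque


class Fifo:
    """Bounded FIFO exposing a Put side (producer) and a Get side (consumer)."""

    def __init__(self, depth):
        self.depth = depth
        self.items = deque()

    def can_put(self):
        return len(self.items) < self.depth

    def put(self, x):
        assert self.can_put(), "put on a full FIFO"
        self.items.append(x)

    def can_get(self):
        return len(self.items) > 0

    def get(self):
        assert self.can_get(), "get on an empty FIFO"
        return self.items.popleft()


def make_connection(src, dst):
    """Return a step function that moves one item from src to dst when both are ready."""

    def step():
        if src.can_get() and dst.can_put():
            dst.put(src.get())
            return True
        return False  # nothing moved: src is empty or dst is full

    return step


producer_out = Fifo(depth=4)
consumer_in = Fifo(depth=2)
for value in (10, 20, 30):
    producer_out.put(value)

step = make_connection(producer_out, consumer_in)
moved = 0
while step():
    moved += 1

print(moved, list(consumer_in.items), list(producer_out.items))  # 2 [10, 20] [30]
```

The transfer stops by itself once the consumer FIFO is full, which mirrors the back-pressure that such interfaces provide: neither side needs to know the other's depth.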

Example: CMU ProtoFlex (http://www.ece.cmu.edu/~protoflex)

[Diagram labels: Virtex-5 FPGA; Ethernet; BSV; Virtutech Simics; UltraSPARC model]

Virtutech Simics: commercial SW simulator for whole systems (OS/devices/apps); a “Virtual Platform” for early SW development, before the ASIC is available.

Problem: very clever tricks for fast simulation, but steady slowdown
– for each added thread and core
– for each added bit of instrumentation

CMU ProtoFlex:
– Fully operational model of a 16-CPU UltraSPARC III SunFire 3800 server, running unmodified Solaris 8, on an FPGA at 90 MHz
– Hybrid simulation: continue to use Simics for modeling the rest of the system (I/O devices, ...)
– Benchmark: TPC-C OLTP on Oracle 10g Enterprise Database Server; also SPECINT (bzip2, crafty, gcc, gzip, parser, vortex)
– Performance: 10-60 MIPS; 39x faster than Virtutech Simics alone on the same system/benchmark
– Written in BSV by 1 graduate student (Eric Chung) in 1 year!

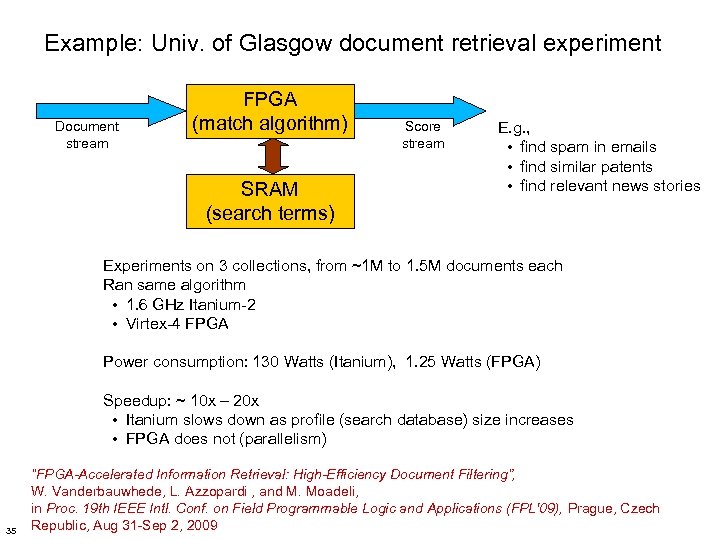

Example: Univ. of Glasgow document retrieval experiment

[Diagram: document stream into the FPGA (match algorithm), with SRAM holding the search terms; score stream out]

E.g.,
• find spam in emails
• find similar patents
• find relevant news stories

Experiments on 3 collections, from ~1M to 1.5M documents each.

Ran the same algorithm on:
• 1.6 GHz Itanium-2
• Virtex-4 FPGA

Power consumption: 130 Watts (Itanium), 1.25 Watts (FPGA)

Speedup: ~10x-20x
• Itanium slows down as profile (search database) size increases
• FPGA does not (parallelism)

“FPGA-Accelerated Information Retrieval: High-Efficiency Document Filtering”, W. Vanderbauwhede, L. Azzopardi, and M. Moadeli, in Proc. 19th IEEE Intl. Conf. on Field Programmable Logic and Applications (FPL'09), Prague, Czech Republic, Aug 31-Sep 2, 2009

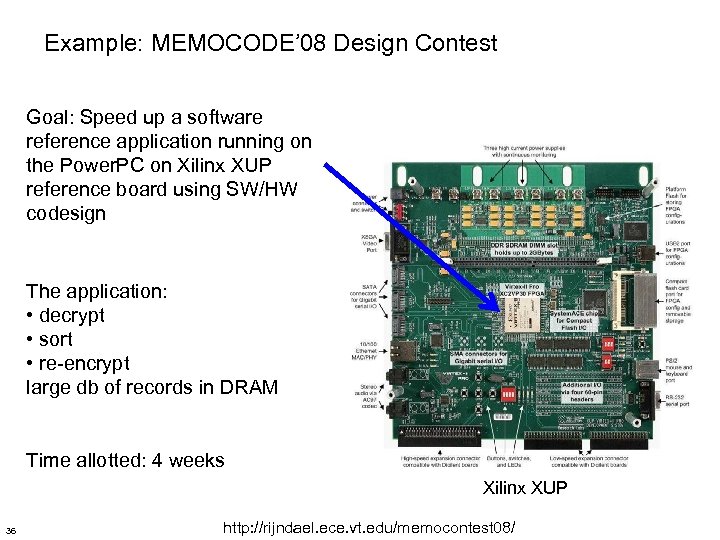
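The power and speedup figures quoted above can be combined into a back-of-envelope energy comparison. The calculation below is not from the slide or the cited paper; it assumes that the two wattages are comparable averages over the whole run, that energy is simply power times run time, and it ignores host and board overheads.

```python
# Figures quoted on the slide.
itanium_watts = 130.0
fpga_watts = 1.25

# How many times less power the FPGA draws.
power_ratio = itanium_watts / fpga_watts


def energy_ratio(speedup):
    """Energy per task on the Itanium divided by energy per task on the FPGA.

    Assumes energy = power * time, so a run that is `speedup` times shorter
    at `power_ratio` times less power saves the product of the two.
    """
    return power_ratio * speedup


print(power_ratio)       # 104.0
print(energy_ratio(10))  # 1040.0
print(energy_ratio(20))  # 2080.0
```

Under these assumptions the FPGA would use roughly three orders of magnitude less energy per filtering task, which is a stronger argument than either the power figure or the speedup taken alone.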

Example: MEMOCODE’08 Design Contest

Goal: speed up a software reference application running on the PowerPC on the Xilinx XUP reference board, using SW/HW codesign.

The application, operating on a large db of records in DRAM:
• decrypt
• sort
• re-encrypt

Time allotted: 4 weeks

http://rijndael.ece.vt.edu/memocontest08/

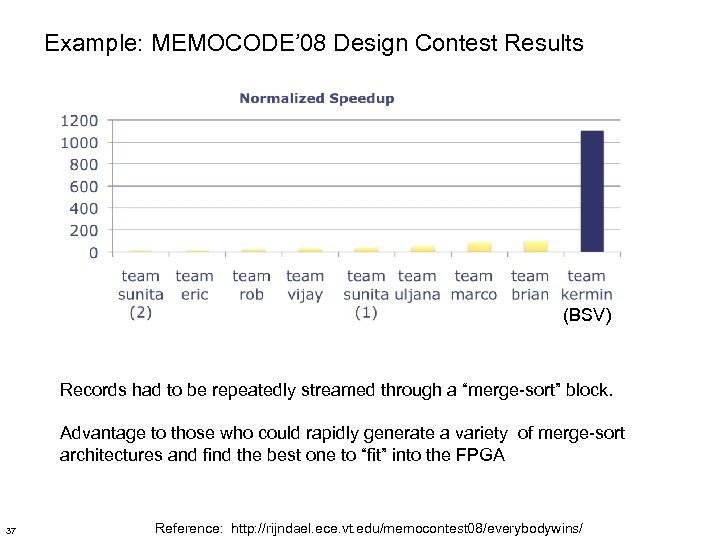

Example: MEMOCODE’08 Design Contest Results (BSV)

Records had to be repeatedly streamed through a “merge-sort” block. The advantage went to those who could rapidly generate a variety of merge-sort architectures and find the best one to “fit” into the FPGA.

Reference: http://rijndael.ece.vt.edu/memocontest08/everybodywins/

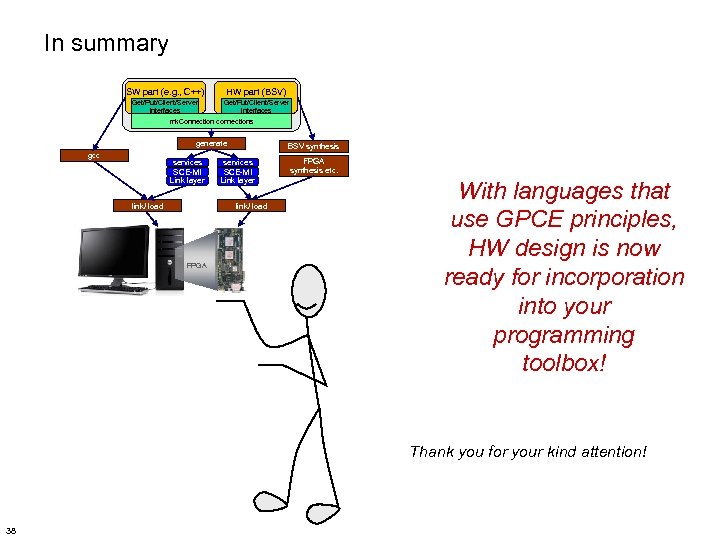
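To make the "repeatedly streamed through a merge-sort block" idea concrete, here is a small software sketch in which the merge block's fan-in is a parameter. It is not any contest entry's design: `merge_pass`, `stream_sort`, and the 16-record input are assumptions for illustration. It shows why the choice of architecture matters: a wider merger needs fewer passes over the data, at the cost of a larger block.

```python
import heapq


def merge_pass(runs, fan_in):
    """One pass through the merge block: merge each group of fan_in sorted runs."""
    return [
        list(heapq.merge(*runs[i:i + fan_in]))
        for i in range(0, len(runs), fan_in)
    ]


def stream_sort(records, fan_in):
    """Sort by repeated merge passes; return the sorted list and the pass count."""
    runs = [[r] for r in records]  # every record starts as a sorted run of length 1
    passes = 0
    while len(runs) > 1:
        runs = merge_pass(runs, fan_in)
        passes += 1
    return (runs[0] if runs else []), passes


# A fixed permutation of 0..15 (7 and 16 are coprime).
records = [(i * 7) % 16 for i in range(16)]

# Explore the "architecture space" by varying one parameter.
results = {fan_in: stream_sort(records, fan_in) for fan_in in (2, 4, 16)}
for fan_in, (ordered, passes) in results.items():
    print(fan_in, passes, ordered == sorted(records))
# 2 4 True
# 4 2 True
# 16 1 True
```

Each pass corresponds to one full trip of the records through DRAM, so halving the pass count is a direct saving in memory traffic; the trade-off is that a wider merger consumes more FPGA resources.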

In summary

[Diagram: SW part (e.g., C++) and HW part (BSV), joined by Get/Put/Client/Server interfaces and mkConnection connections]
• SW side: generate, gcc, link/load, running on services, SCE-MI, link layer
• HW side: BSV synthesis, FPGA synthesis etc., link/load, running on services, SCE-MI, link layer

With languages that use GPCE principles, HW design is now ready for incorporation into your programming toolbox!

Thank you for your kind attention!

Acknowledgements

• James Hoe (MIT/CMU) and Arvind (MIT) for original technology for high-level synthesis from rules to RTL used in BSV today, 1997-2000
• Lennart Augustsson (Chalmers/Sandburst) for Haskell-based generative technology used in BSV today, 2000-2003
• My colleagues in the engineering teams at Sandburst and Bluespec for continuous and substantial improvements, 2000-2009
• Prof. Arvind’s group at MIT for their research and ideas, 2000-2009
