CL 2.ppt

- Number of slides: 29

USING CORPORA IN LINGUISTIC RESEARCH Iryna Dilay

Plan

- Corpus linguistics as a methodology: corpus-based vs corpus-driven approaches.
- Principles of corpora modelling.
- Corpus analytical tools:
  - The notion of concordance.
  - Tagging. Lemmatizers.
  - Collocation displays and frequency lists.
- The advantages of using corpora in linguistic research.

What is a corpus?

- “A corpus is a collection of pieces of language that are selected and ordered according to explicit linguistic criteria in order to be used as a sample of the language” (Sinclair, 1991).
- The major reason for compiling linguistic corpora is to “provide the basis for more accurate and reliable descriptions of how languages are structured and used” (Sinclair, 1991).

With the advances in computer technology, the exploitation of massive corpora became feasible. From the 1980s onward, the number and size of corpora and corpus-based studies have increased dramatically. Corpora have revolutionized almost all branches of linguistics.

Computers …

- … allow us to speed up the processing of data;
- … avoid human bias in data analysis;
- … allow the enrichment of data with metadata.

Corpus-based and intuition-based approaches are not mutually exclusive! Leech (1991) writes: “[…] Neither the corpus linguist of the 1950s, who rejected intuition, nor the general linguist of the 1960s, who rejected corpus data, was able to achieve the interaction of data coverage and the insight that characterize the many successful corpus analyses of recent years”.

Is CL a methodology or a theory?

- CL is a METHODOLOGY, not a branch of linguistics such as grammar, phonetics, semantics, pragmatics, etc.
- CL can be used to explore almost any area of linguistic research.

There is no universal agreement on this question.

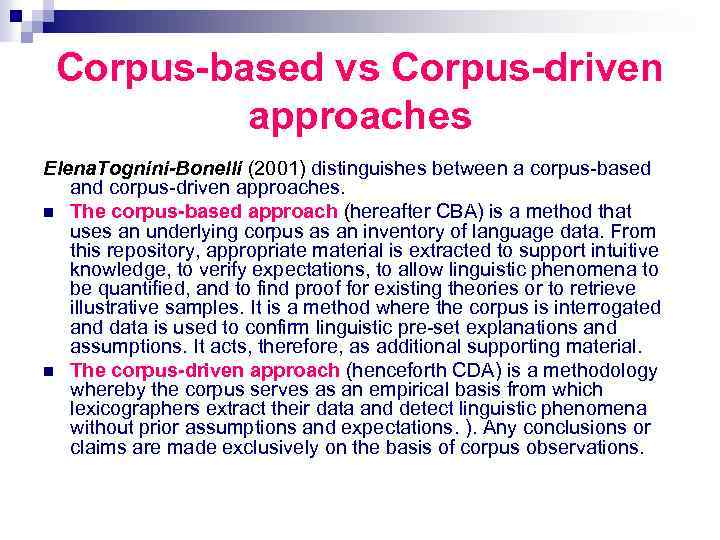

Corpus-based vs corpus-driven approaches

Elena Tognini-Bonelli (2001) distinguishes between corpus-based and corpus-driven approaches.

- The corpus-based approach (hereafter CBA) is a method that uses an underlying corpus as an inventory of language data. From this repository, appropriate material is extracted to support intuitive knowledge, to verify expectations, to allow linguistic phenomena to be quantified, and to find proof for existing theories or to retrieve illustrative samples. The corpus is interrogated and the data are used to confirm pre-set linguistic explanations and assumptions. The corpus therefore acts as additional supporting material.
- The corpus-driven approach (henceforth CDA) is a methodology whereby the corpus serves as an empirical basis from which lexicographers extract their data and detect linguistic phenomena without prior assumptions and expectations. Any conclusions or claims are made exclusively on the basis of corpus observations.

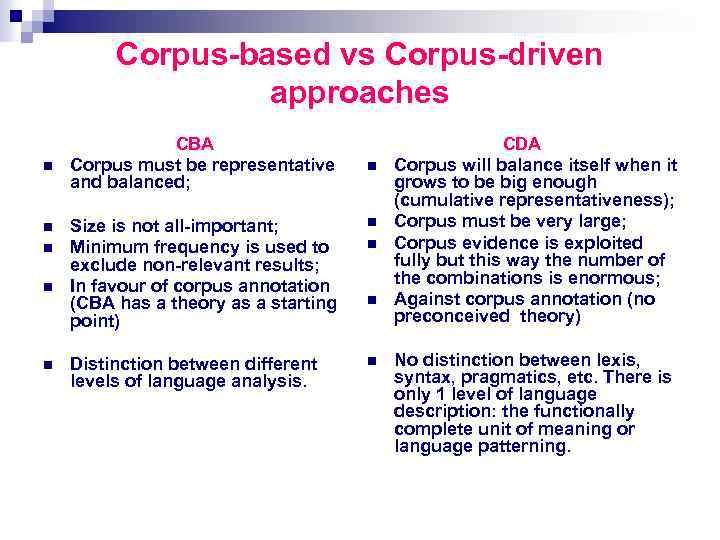

Corpus-based vs corpus-driven approaches

CBA:

- The corpus must be representative and balanced;
- Size is not all-important;
- Minimum frequency is used to exclude non-relevant results;
- In favour of corpus annotation (CBA has a theory as a starting point);
- Distinction between different levels of language analysis.

CDA:

- The corpus will balance itself when it grows big enough (cumulative representativeness);
- The corpus must be very large;
- Corpus evidence is exploited fully, but this way the number of combinations is enormous;
- Against corpus annotation (no preconceived theory);
- No distinction between lexis, syntax, pragmatics, etc. There is only one level of language description: the functionally complete unit of meaning or language patterning.

PRINCIPLES OF CORPORA MODELLING

Corpora should be …

- a) representative
- b) balanced.

Representativeness

A corpus is representative if …

- … the findings based on its content can be generalised to the said language variety (Leech, 1991);
- … its samples include the full range of variability in a population (Biber, 1993).

Representativeness

Biber determines two criteria for selecting texts for a corpus:

- situational perspective (external): defined situationally, e.g. genres, registers, text types, etc.
- linguistic perspective (internal): taking into account the distribution of linguistic features.

Sampling

A corpus is a sample of a given population. A sample is representative if what we find for the sample holds for the general population. Samples are scaled-down versions of a larger population.

- Sampling unit: for a written text, this can be a book, periodical or newspaper.
- Population: the assembly of all sampling units; it can be defined in terms of language production, language reception (demographic criteria such as sex, age, etc.) or language as a product (category or genre of language data).
- Sampling frame: the list of sampling units.

Sampling techniques:

- Simple random sampling: all the sampling units within the sampling frame are numbered and the sample is chosen by the use of a table of random numbers; rare features may not be accounted for.
- Stratified random sampling: the population is divided into relatively homogeneous groups, i.e. the strata, and then the latter are sampled at random.

Both techniques are equally representative.

Sample size:

- Full texts: no balance; the peculiarity of individual texts may show through.
- Text chunks are sufficient (e.g. 2000 running words): frequent linguistic features are stable in their distribution.

The number of samples across text categories should be proportional to their frequencies/weights in the target population in order for the resulting corpus to be considered representative.
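The two sampling techniques can be sketched in a few lines of Python. This is a minimal illustration, not a recommended corpus-building procedure: the sampling frame, the `genre` field and the function names are all hypothetical, and the stratified version uses proportional allocation so that each text category keeps its weight from the target population.

```python
import random
from collections import defaultdict

def simple_random_sample(frame, k, seed=0):
    """Draw k sampling units uniformly at random from the sampling frame."""
    rng = random.Random(seed)
    return rng.sample(frame, k)

def stratified_sample(frame, k, seed=0):
    """Draw about k units, allocating them to strata in proportion to stratum size."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in frame:
        strata[unit["genre"]].append(unit)
    sample = []
    for genre in sorted(strata):
        units = strata[genre]
        # Proportional allocation, with at least one unit per stratum.
        n = max(1, round(k * len(units) / len(frame)))
        sample.extend(rng.sample(units, min(n, len(units))))
    return sample

# Hypothetical sampling frame: 80 news texts, 15 fiction texts, 5 academic texts.
frame = (
    [{"id": f"news{i}", "genre": "news"} for i in range(80)]
    + [{"id": f"fic{i}", "genre": "fiction"} for i in range(15)]
    + [{"id": f"acad{i}", "genre": "academic"} for i in range(5)]
)

srs = simple_random_sample(frame, 20)
strat = stratified_sample(frame, 20)
strat_counts = {
    g: sum(u["genre"] == g for u in strat) for g in ("news", "fiction", "academic")
}
```

With simple random sampling the small academic stratum may be missed entirely, whereas the stratified draw always includes it.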

Data Collection

- Spoken data must be transcribed from audio recordings.
- Written texts must be rendered machine-readable by keyboarding or OCR (Optical Character Recognition) scanning.
- Language data so collected form a RAW CORPUS!

Corpus Mark-up

A system of standard codes inserted into a document stored in electronic form to provide information about the text itself and govern formatting, printing, etc.

Most widely used mark-up schemes:

- TEI (Text Encoding Initiative)
- CES (Corpus Encoding Standard)

The TEI mark-up scheme can be expressed by means of different formal languages:

- SGML (Standard Generalized Mark-up Language; used in the BNC)
- XML (Extensible Mark-up Language)
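To make the idea of XML mark-up concrete, here is a small sketch that reads a marked-up sentence with Python's standard `xml.etree.ElementTree` module. The fragment is a simplified illustration only: the element names (`text`, `s`, `w`) and attributes (`id`, `genre`, `n`, `pos`) are assumptions chosen for readability and do not form a valid TEI or CES document.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified mark-up: text-level metadata as attributes,
# one sentence element <s>, and one <w> element per word with a POS tag.
xml_text = """
<text id="sample01" genre="lecture">
  <s n="1"><w pos="NP1">Mary</w> <w pos="VVD">visited</w> <w pos="AT1">a</w> <w pos="NN1">boy</w></s>
</text>
"""

root = ET.fromstring(xml_text)

# Information about the text itself (metadata).
header = {"id": root.get("id"), "genre": root.get("genre")}

# The words of the text together with their tags.
tokens = [(w.text, w.get("pos")) for w in root.iter("w")]

for word, tag in tokens:
    print(f"{word}\t{tag}")
```

Because the codes are standardised, the same few lines can extract words and tags from any text encoded in the same way.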

CES (Corpus Encoding Standard)

Designed specifically for the encoding of language corpora.

- Document-wide mark-up (bibliographical description, encoding description, etc.)
- Gross structural mark-up (volume, chapter, paragraph, footnotes, etc.)
- Mark-up for subparagraph structures (sentence, quotation, words, abbreviations, etc.)

Corpus annotation

“The process of adding […] interpretive linguistic information to an electronic corpus of spoken and/or written language data” (Leech, 1997).

The advantages of annotation:

- Annotation makes extracting information easier and faster, and enables human analysts to exploit and retrieve analyses of which they are not themselves capable.
- Annotated corpora are reusable resources.
- Annotated corpora are multifunctional: they can be annotated with one purpose and reused with another.
- Corpus annotation records linguistic analysis explicitly.
- Corpus annotation provides a standard reference resource, a stable base of linguistic analyses, so that successive studies can be compared and contrasted on a common basis.

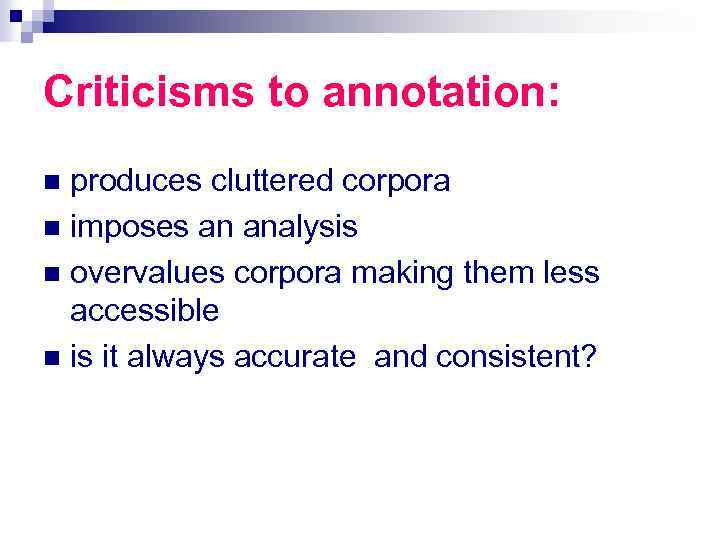

Criticisms of annotation:

- produces cluttered corpora
- imposes an analysis
- overvalues corpora, making them less accessible
- is it always accurate and consistent?

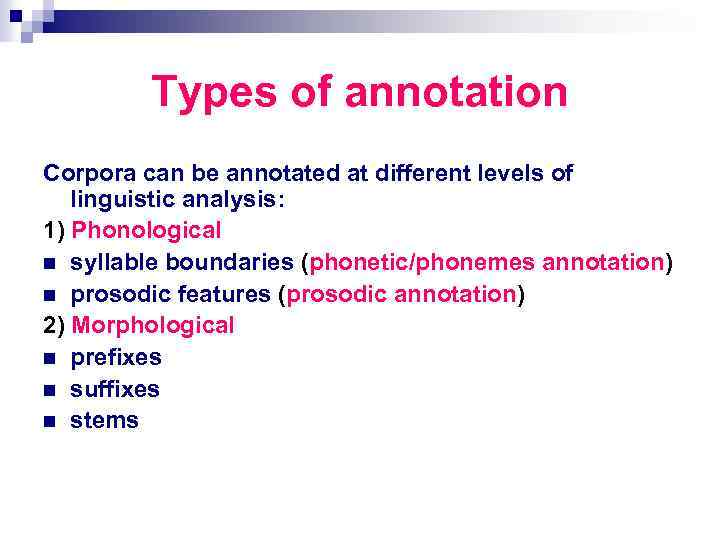

Types of annotation

Corpora can be annotated at different levels of linguistic analysis:

1) Phonological

- syllable boundaries (phonetic/phonemic annotation)
- prosodic features (prosodic annotation)

2) Morphological

- prefixes
- suffixes
- stems

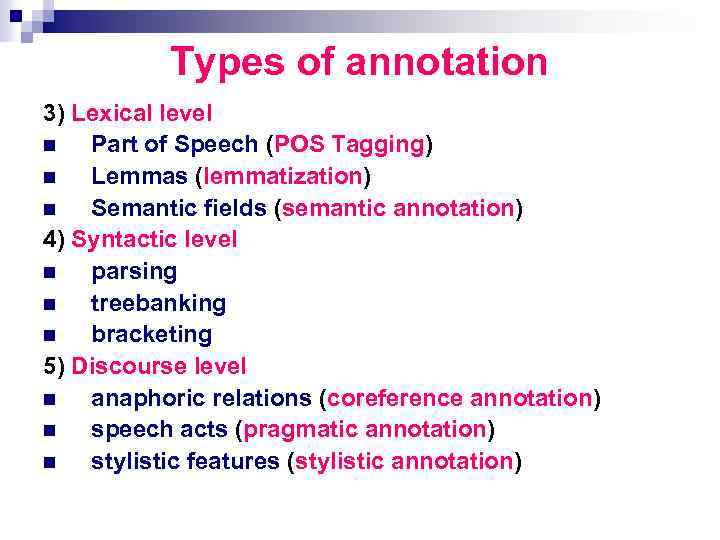

Types of annotation

3) Lexical level

- part of speech (POS tagging)
- lemmas (lemmatization)
- semantic fields (semantic annotation)

4) Syntactic level

- parsing
- treebanking
- bracketing

5) Discourse level

- anaphoric relations (coreference annotation)
- speech acts (pragmatic annotation)
- stylistic features (stylistic annotation)

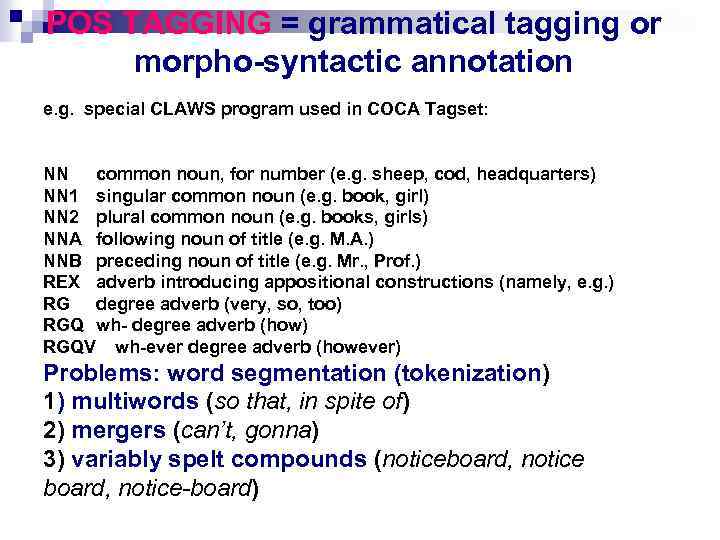

POS TAGGING

= grammatical tagging or morpho-syntactic annotation, e.g. by the special CLAWS program used in COCA.

Tagset:

- NN: common noun, neutral for number (e.g. sheep, cod, headquarters)
- NN1: singular common noun (e.g. book, girl)
- NN2: plural common noun (e.g. books, girls)
- NNA: following noun of title (e.g. M.A.)
- NNB: preceding noun of title (e.g. Mr., Prof.)
- REX: adverb introducing appositional constructions (namely, e.g.)
- RG: degree adverb (very, so, too)
- RGQ: wh- degree adverb (how)
- RGQV: wh-ever degree adverb (however)

Problems: word segmentation (tokenization)

1) multiwords (so that, in spite of)
2) mergers (can’t, gonna)
3) variably spelt compounds (noticeboard, notice-board)
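The three segmentation problems can be illustrated with a toy tokenizer. This is only a sketch under stated assumptions: the lookup tables `MULTIWORDS`, `MERGERS` and `SPELLING_VARIANTS` are hypothetical and hand-made, and the way mergers are split here is one possible convention, not a description of how CLAWS itself works.

```python
import re

# Hypothetical lookup tables, one per segmentation problem.
MULTIWORDS = {("so", "that"): "so_that", ("in", "spite", "of"): "in_spite_of"}
MERGERS = {"can't": ["ca", "n't"], "gonna": ["gon", "na"]}
SPELLING_VARIANTS = {"notice-board": "noticeboard"}

def tokenize(text):
    """Split text into tokens, handling multiwords, mergers and spelling variants."""
    words = re.findall(r"[a-z]+(?:['-][a-z]+)*", text.lower())
    tokens = []
    i = 0
    while i < len(words):
        # 1) Multiwords: try the longest known sequence first and join it.
        for mw in sorted(MULTIWORDS, key=len, reverse=True):
            if tuple(words[i:i + len(mw)]) == mw:
                tokens.append(MULTIWORDS[mw])
                i += len(mw)
                break
        else:
            word = words[i]
            if word in MERGERS:
                # 2) Mergers: one orthographic word becomes several tokens.
                tokens.extend(MERGERS[word])
            else:
                # 3) Variably spelt compounds: map to a single spelling.
                tokens.append(SPELLING_VARIANTS.get(word, word))
            i += 1
    return tokens

result = tokenize("I can't go, in spite of the notice-board")
```

Each decision taken here changes the word counts a tagger works with, which is why tokenization has to be settled before tagging.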

LEMMATIZATION

A type of annotation that reduces the inflexional variants of words to their respective lexemes or lemmas as they appear in dictionary entries.

- Do, does, did, done, doing – DO
- Corpus, corpora – CORPUS

Small capital letters are the convention. Lemmatization can be performed automatically.

The corpus allows users to use wildcards and regular expressions. These wildcards are: ? * + , : @ / ( ) [ ] { } _ - < >
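A lookup-table lemmatizer and a wildcard search can be sketched as follows. The table `LEMMA_TABLE` is a hypothetical, hand-made list covering only the two examples from the slide; real lemmatizers rely on large lexicons and rules. The wildcard search uses Python's `fnmatch` module, whose `*` and `?` follow shell-style matching and may behave differently from the query syntax of a particular corpus interface.

```python
import fnmatch
from collections import Counter

# Hypothetical lemma table: inflected form -> lemma (written in capitals).
LEMMA_TABLE = {
    "do": "DO", "does": "DO", "did": "DO", "done": "DO", "doing": "DO",
    "corpus": "CORPUS", "corpora": "CORPUS",
}

def lemmatize(token):
    """Return the lemma of a token; unknown forms are simply capitalised."""
    return LEMMA_TABLE.get(token.lower(), token.upper())

tokens = "She did what corpora users are doing".split()

# Frequency list over lemmas rather than word forms.
lemma_counts = Counter(lemmatize(t) for t in tokens)

# Wildcard search: every word form beginning with "do".
matches = fnmatch.filter([t.lower() for t in tokens], "do*")
```

The wildcard `do*` finds *doing* but not *did*, while the lemma count groups both forms under DO; this is the practical difference between a form-based and a lemma-based search.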

PARSING

Once a corpus is POS tagged, it is possible to bring these morpho-syntactic categories into higher-level syntactic relationships with one another, that is, to analyse the sentences in the corpus into their constituents. Parsing consists in bracketing. It can be automated, but with a low precision rate.

e.g. (S (NP Mary) (VP visited (NP a (ADJP very nice) boy)))
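Bracketed notation is easy to read back into a tree structure. The sketch below takes the example sentence, written with balanced brackets so that the object noun phrase sits inside the verb phrase, and turns it into nested `(label, children)` tuples. The function names are hypothetical, and the code only reads an existing analysis; it does not parse raw text.

```python
import re

def parse_brackets(text):
    """Turn a bracketed string into nested (label, children) tuples."""
    tokens = re.findall(r"\(|\)|[^\s()]+", text)
    pos = 0

    def node():
        nonlocal pos
        if tokens[pos] != "(":
            raise ValueError("expected an opening bracket")
        pos += 1
        label = tokens[pos]          # the constituent label, e.g. NP
        pos += 1
        children = []
        while tokens[pos] != ")":
            if tokens[pos] == "(":
                children.append(node())      # embedded constituent
            else:
                children.append(tokens[pos])  # a word
                pos += 1
        pos += 1                     # skip the closing bracket
        return (label, children)

    tree = node()
    if pos != len(tokens):
        raise ValueError("unexpected material after the sentence")
    return tree

def leaves(tree):
    """Collect the words of a tree from left to right."""
    _, children = tree
    words = []
    for child in children:
        words.extend(leaves(child) if isinstance(child, tuple) else [child])
    return words

tree = parse_brackets("(S (NP Mary) (VP visited (NP a (ADJP very nice) boy)))")
sentence = " ".join(leaves(tree))
```

Reading the leaves from left to right gives back the original sentence, and every bracket pair corresponds to one constituent.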

CORPUS ANALYTICAL TOOLS

- The lemmatizer enables researchers to group all the inflexional forms of a lemma.
- The concordancer. Concordances are listings of the occurrences of a particular feature or combination of features in a corpus. The most commonly used concordance type is KWIC, which stands for Key Word in Context.
- The collocate display. The typical starting point is a node word.
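A KWIC concordance and a simple collocate display can both be built around a node word. The following sketch assumes a tiny invented sample text and hypothetical function names; real concordancers work on millions of words and offer sorting and filtering on top of this.

```python
from collections import Counter

def kwic(tokens, node, width=3):
    """Return (left context, key word, right context) for each hit of the node word."""
    lines = []
    for i, tok in enumerate(tokens):
        if tok.lower() == node:
            left = " ".join(tokens[max(0, i - width):i])
            right = " ".join(tokens[i + 1:i + 1 + width])
            lines.append((left, tok, right))
    return lines

def collocates(tokens, node, span=2):
    """Count the words occurring within `span` words to the left or right of the node."""
    counts = Counter()
    for i, tok in enumerate(tokens):
        if tok.lower() == node:
            window = tokens[max(0, i - span):i] + tokens[i + 1:i + 1 + span]
            counts.update(w.lower() for w in window)
    return counts

# Invented sample text, for illustration only.
tokens = "add sour cream to the soup and serve the sour cream cold".split()

concordance = kwic(tokens, "sour")
for left, key, right in concordance:
    # Right-align the left context so that the key words line up in a column.
    print(f"{left:>15}  {key}  {right}")

top_collocate = collocates(tokens, "sour").most_common(1)
```

The concordance shows every occurrence of the node in context, while the collocate count summarises which words keep turning up in its neighbourhood.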

Measuring statistical significance

- The mutual information (MI) score expresses the extent to which the observed frequency of co-occurrence differs from what we would expect (statistically speaking). It does not work very well with very low frequencies. For instance, sour occurs 472 times and puss 31 times in the corpus. Since sour and puss co-occur 4 times, this gives this particular collocation a very high MI score.
- The t-score provides a way of getting away from this problem since it also takes frequency into account.

To sum up, the MI score is more likely to give high scores to totally fixed phrases, whereas the t-score will yield significant collocates that occur relatively frequently. In most cases the t-score is more reliable. Sour cream is the most common collocation.
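The contrast between the two measures can be checked with a short calculation. The sketch uses the commonly cited simplified formulas MI = log2(O / E) and t = (O − E) / √O, where O is the observed co-occurrence frequency and E = f(node) × f(collocate) / N is the expected one. The frequencies of sour and puss come from the example above; the corpus size `N`, the frequency of cream and the co-occurrence count for sour cream are NOT given in the slides and are hypothetical values chosen for illustration.

```python
import math

def expected(f_node, f_coll, n_tokens):
    """Expected co-occurrence frequency if the two words were independent."""
    return f_node * f_coll / n_tokens

def mi_score(observed, f_node, f_coll, n_tokens):
    """Mutual information: log2 of observed over expected frequency."""
    return math.log2(observed / expected(f_node, f_coll, n_tokens))

def t_score(observed, f_node, f_coll, n_tokens):
    """t-score: difference between observed and expected, scaled by sqrt(observed)."""
    return (observed - expected(f_node, f_coll, n_tokens)) / math.sqrt(observed)

N = 100_000_000        # ASSUMPTION: corpus size in tokens (not stated in the slides)
F_SOUR = 472           # from the example
F_PUSS = 31            # from the example
O_SOUR_PUSS = 4        # from the example
F_CREAM = 2000         # ASSUMPTION: hypothetical frequency of "cream"
O_SOUR_CREAM = 100     # ASSUMPTION: hypothetical co-occurrence count

mi_puss = mi_score(O_SOUR_PUSS, F_SOUR, F_PUSS, N)
mi_cream = mi_score(O_SOUR_CREAM, F_SOUR, F_CREAM, N)
t_puss = t_score(O_SOUR_PUSS, F_SOUR, F_PUSS, N)
t_cream = t_score(O_SOUR_CREAM, F_SOUR, F_CREAM, N)

print(f"sour puss:  MI = {mi_puss:.2f}, t = {t_puss:.2f}")
print(f"sour cream: MI = {mi_cream:.2f}, t = {t_cream:.2f}")
```

Under these assumed figures the rare, fixed phrase sour puss gets the higher MI score, while the frequent collocation sour cream gets the higher t-score, which is the pattern the summary describes.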

Advantages of Performing a Corpus-Based Analysis

Corpus linguistics provides a more objective view of language than introspection and intuition do. John Sinclair (1998) pointed out that this is because speakers do not have access to the subliminal (unconscious) patterns which run through a language.

A corpus-based analysis can investigate almost any language pattern: lexical, structural, lexico-grammatical, discourse, phonological, morphological.

Thank you for your attention!
