- Number of slides: 75

Using assessment to support learning: why, what and how? Dylan Wiliam, Institute of Education, www.dylanwiliam.net

Overview of presentation
- Why raising achievement is important
- Why investing in teachers is the answer
- Why assessment for learning should be the focus
- Why teacher learning communities should be the mechanism
- How we can put this into practice

Raising achievement matters
- For individuals
  - Increased lifetime salary
  - Improved health
- For society
  - Lower criminal justice costs
  - Lower health-care costs
  - Increased economic growth

Where’s the solution?
- Structure
  - Small high schools
  - K-8 schools
- Alignment
  - Curriculum reform
  - Textbook replacement
- Governance
  - Charter schools
  - Vouchers
- Technology

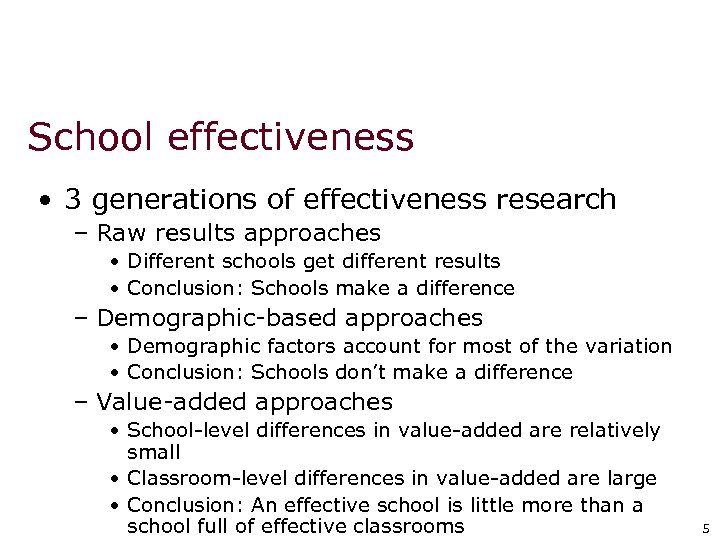

School effectiveness
- 3 generations of effectiveness research
  - Raw results approaches
    - Different schools get different results
    - Conclusion: Schools make a difference
  - Demographic-based approaches
    - Demographic factors account for most of the variation
    - Conclusion: Schools don’t make a difference
  - Value-added approaches
    - School-level differences in value-added are relatively small
    - Classroom-level differences in value-added are large
    - Conclusion: An effective school is little more than a school full of effective classrooms

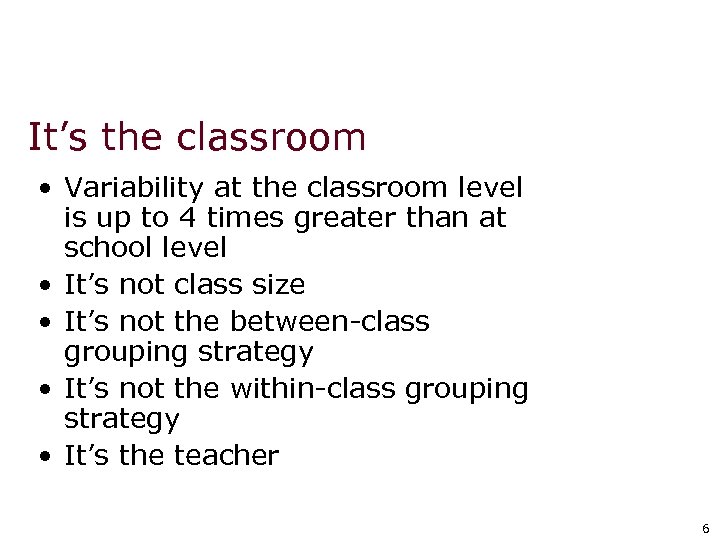

It’s the classroom
- Variability at the classroom level is up to 4 times greater than at school level
- It’s not class size
- It’s not the between-class grouping strategy
- It’s not the within-class grouping strategy
- It’s the teacher

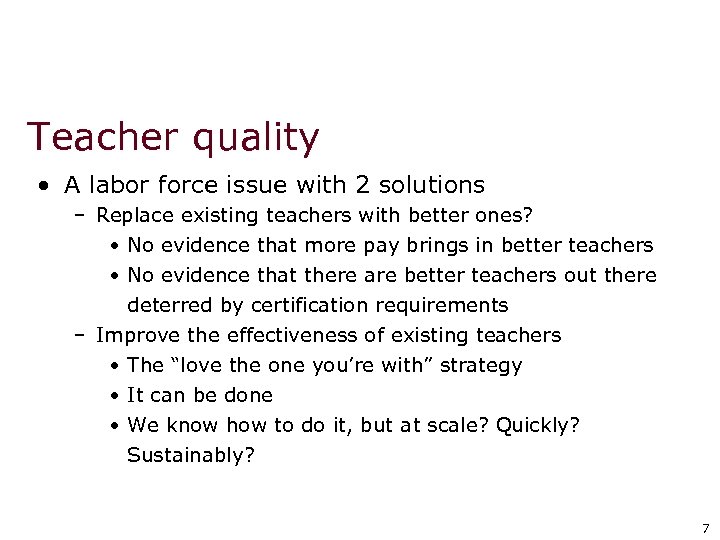

Teacher quality
- A labor force issue with 2 solutions
  - Replace existing teachers with better ones?
    - No evidence that more pay brings in better teachers
    - No evidence that there are better teachers out there deterred by certification requirements
  - Improve the effectiveness of existing teachers
    - The “love the one you’re with” strategy
    - It can be done
    - We know how to do it, but at scale? Quickly? Sustainably?

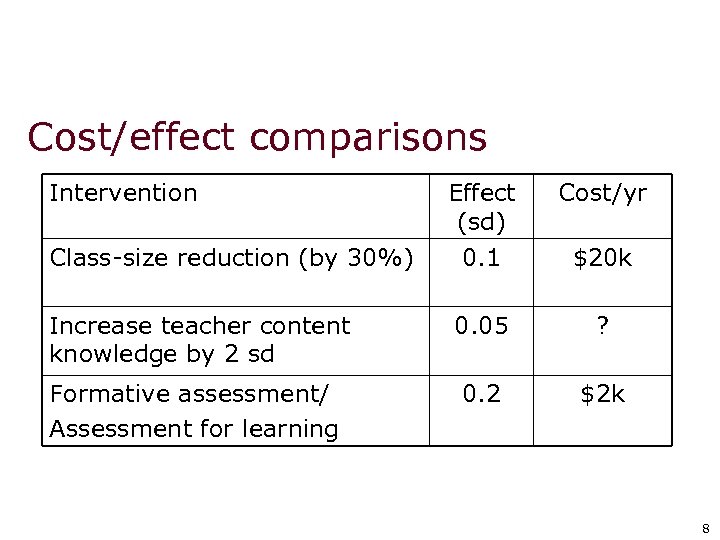

Cost/effect comparisons

| Intervention | Effect (sd) | Cost/yr |
| --- | --- | --- |
| Class-size reduction (by 30%) | 0.1 | $20k |
| Increase teacher content knowledge by 2 sd | 0.05 | ? |
| Formative assessment / assessment for learning | 0.2 | $2k |
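One way to read this comparison is as cost per unit of effect. The sketch below is illustrative arithmetic only. It uses the figures quoted on the slide and assumes that the unattached 0.1 sd effect belongs to class-size reduction. The function name `cost_per_tenth_sd` is hypothetical, and the teacher-content-knowledge row is left out because its cost is given as "?".

```python
# Illustrative arithmetic only; the figures are those quoted on the slide.
# Assumption: the 0.1 sd effect belongs to class-size reduction.
interventions = {
    "Class-size reduction (by 30%)": (0.1, 20_000),
    "Formative assessment / assessment for learning": (0.2, 2_000),
}


def cost_per_tenth_sd(effect_sd: float, cost_per_year: float) -> float:
    """Yearly cost of obtaining 0.1 sd of effect at this intervention's rate."""
    return cost_per_year / (effect_sd / 0.1)


for name, (effect, cost) in interventions.items():
    print(f"{name}: ${cost_per_tenth_sd(effect, cost):,.0f} per 0.1 sd")
```

On these figures, formative assessment costs about $1,000 per 0.1 sd against about $20,000 for class-size reduction, roughly a twentyfold difference.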

Learning power environments
- Key concept:
  - Teachers do not create learning
  - Learners create learning
- Teaching as engineering learning environments
- Key features:
  - Create student engagement (pedagogies of engagement)
  - Well-regulated (pedagogies of contingency)

Why pedagogies of engagement?
- Intelligence is partly inherited
  - So what?
- Intelligence is partly environmental
  - Environment creates intelligence
  - Intelligence creates environment
- Learning environments
  - High cognitive demand
  - Inclusive
  - Obligatory

Why pedagogies of contingency?
- Several major reviews of the research
  - Natriello (1987)
  - Crooks (1988)
  - Kluger & DeNisi (1996)
  - Black & Wiliam (1998)
  - Nyquist (2003)
- All find consistent, substantial effects

Types of formative assessment
- Long-cycle
  - Span: across units, terms
  - Length: four weeks to one year
- Medium-cycle
  - Span: within and between teaching units
  - Length: one to four weeks
- Short-cycle
  - Span: within and between lessons
  - Length:
    - day-by-day: 24 to 48 hours
    - minute-by-minute: 5 seconds to 2 hours

Effects of formative assessment
- Long-cycle
  - Student monitoring
  - Curriculum alignment
- Medium-cycle
  - Improved, student-involved assessment
  - Improved teacher cognition about learning
- Short-cycle
  - Improved classroom practice
  - Improved student engagement

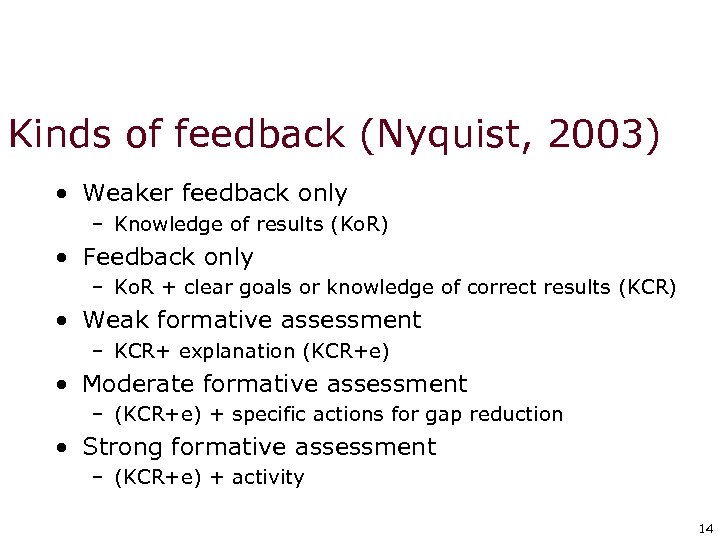

Kinds of feedback (Nyquist, 2003)
- Weaker feedback only
  - Knowledge of results (KoR)
- Feedback only
  - KoR + clear goals or knowledge of correct results (KCR)
- Weak formative assessment
  - KCR + explanation (KCR+e)
- Moderate formative assessment
  - (KCR+e) + specific actions for gap reduction
- Strong formative assessment
  - (KCR+e) + activity

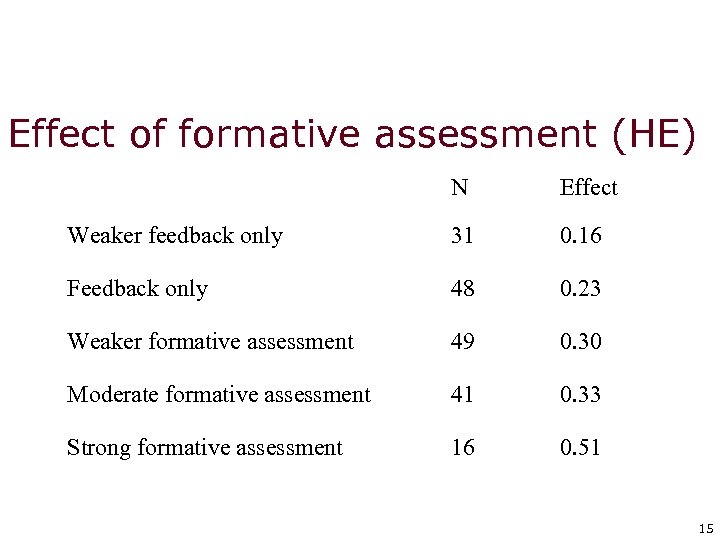

Effect of formative assessment (HE)

| Kind of feedback | N | Effect |
| --- | --- | --- |
| Weaker feedback only | 31 | 0.16 |
| Feedback only | 48 | 0.23 |
| Weaker formative assessment | 49 | 0.30 |
| Moderate formative assessment | 41 | 0.33 |
| Strong formative assessment | 16 | 0.51 |

Formative assessment
- Classroom assessment is not (necessarily) formative assessment
- Formative assessment is not (necessarily) classroom assessment

Formative assessment

Assessment for learning is any assessment for which the first priority in its design and practice is to serve the purpose of promoting pupils’ learning. It thus differs from assessment designed primarily to serve the purposes of accountability, or of ranking, or of certifying competence. An assessment activity can help learning if it provides information to be used as feedback, by teachers, and by their pupils, in assessing themselves and each other, to modify the teaching and learning activities in which they are engaged. Such assessment becomes ‘formative assessment’ when the evidence is actually used to adapt the teaching work to meet learning needs. (Black et al., 2002)

Feedback and formative assessment

“Feedback is information about the gap between the actual level and the reference level of a system parameter which is used to alter the gap in some way” (Ramaprasad, 1983, p. 4)
- Three key instructional processes
  - Establishing where learners are in their learning
  - Establishing where they are going
  - Establishing how to get there

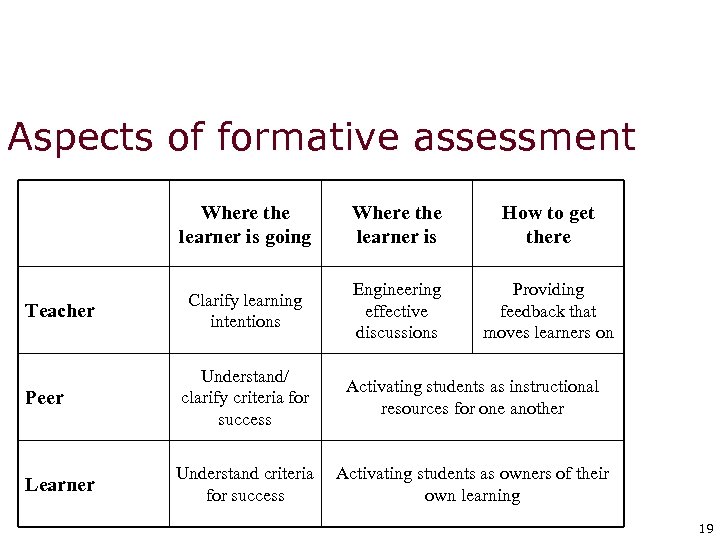

Aspects of formative assessment
- Teacher
  - Where the learner is going: Clarify learning intentions
  - Where the learner is: Engineering effective discussions
  - How to get there: Providing feedback that moves learners on
- Peer
  - Where the learner is going: Understand/clarify criteria for success
  - Where the learner is / How to get there: Activating students as instructional resources for one another
- Learner
  - Where the learner is going: Understand criteria for success
  - Where the learner is / How to get there: Activating students as owners of their own learning

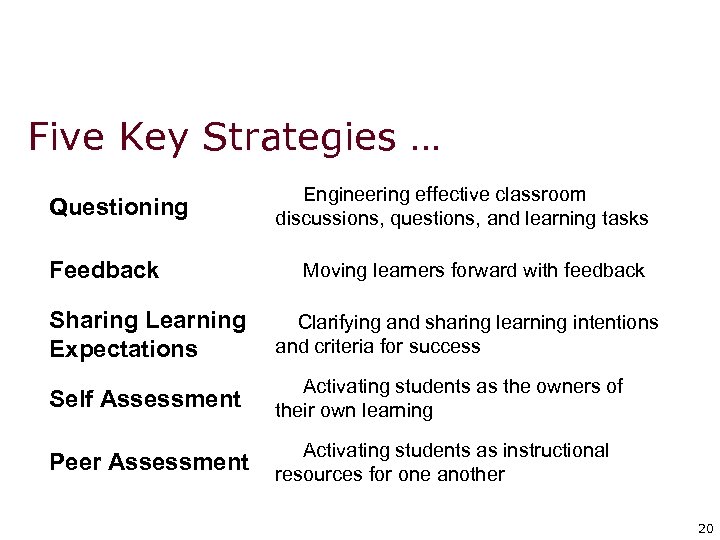

Five Key Strategies …
- Questioning: Engineering effective classroom discussions, questions, and learning tasks
- Feedback: Moving learners forward with feedback
- Sharing Learning Expectations: Clarifying and sharing learning intentions and criteria for success
- Self Assessment: Activating students as the owners of their own learning
- Peer Assessment: Activating students as instructional resources for one another

…and one big idea
- Use evidence about learning to adapt instruction to meet student needs

Keeping Learning on Track (KLT)
- A pilot guides a plane or boat toward its destination by taking constant readings and making careful adjustments in response to wind, currents, weather, etc.
- A KLT teacher does the same:
  - Plans a carefully chosen route ahead of time (in essence building the track)
  - Takes readings along the way
  - Changes course as conditions dictate

Questioning

Kinds of questions: Israel
- Which fraction is the smallest? Success rate 88%
- Which fraction is the largest? Success rate 46%; 39% chose (b)

[Vinner, PME conference, Lahti, Finland, 1997]

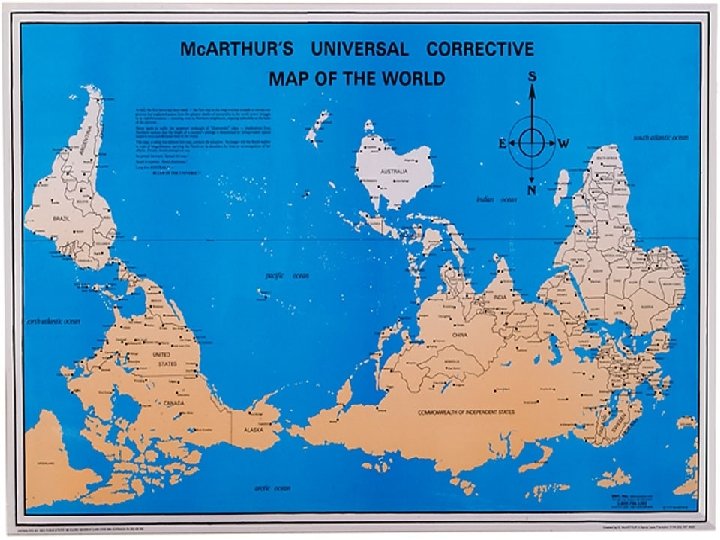

Misconceptions

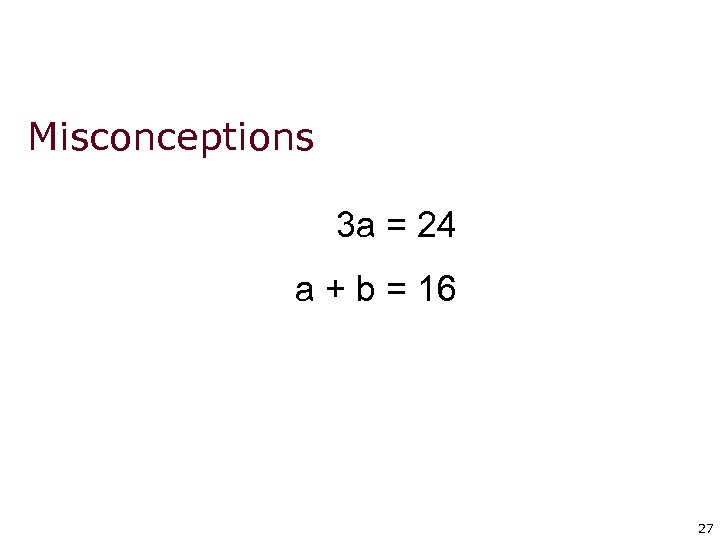

Misconceptions
- 3a = 24
- a + b = 16

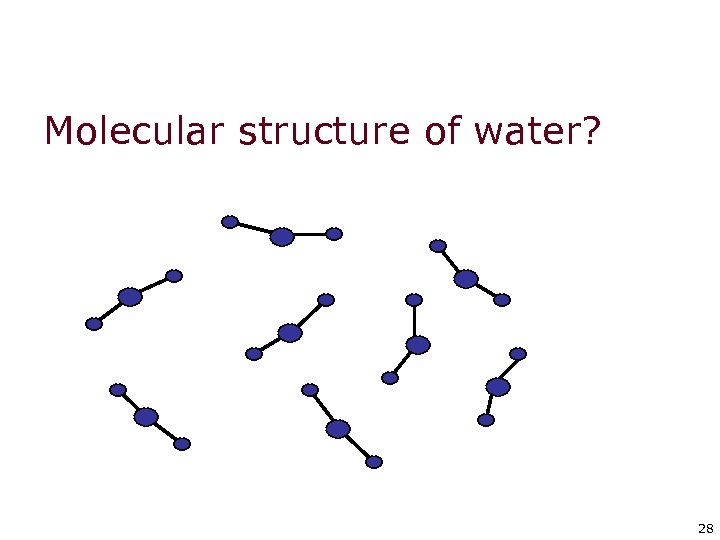

Molecular structure of water?

Feedback

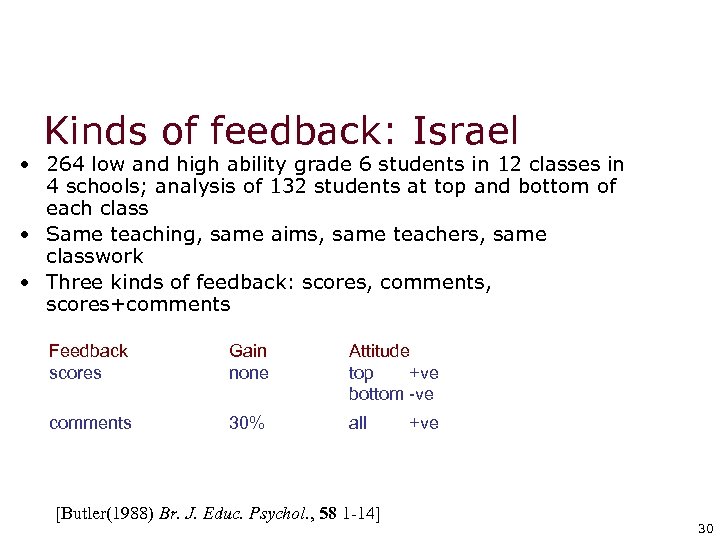

Kinds of feedback: Israel
- 264 low and high ability grade 6 students in 12 classes in 4 schools; analysis of 132 students at top and bottom of each class
- Same teaching, same aims, same teachers, same classwork
- Three kinds of feedback: scores, comments, scores+comments

| Feedback | Gain | Attitude |
| --- | --- | --- |
| scores | none | top +ve, bottom -ve |
| comments | 30% | all +ve |

[Butler (1988) Br. J. Educ. Psychol., 58, 1-14]

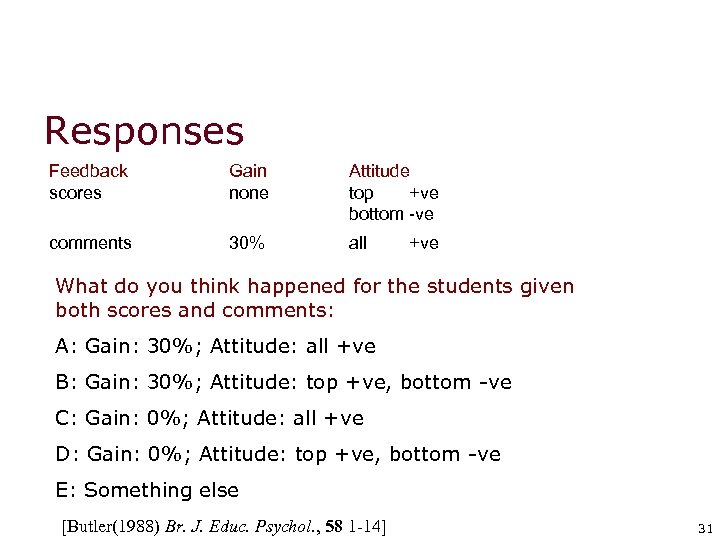

Responses

| Feedback | Gain | Attitude |
| --- | --- | --- |
| scores | none | top +ve, bottom -ve |
| comments | 30% | all +ve |

What do you think happened for the students given both scores and comments:
- A: Gain: 30%; Attitude: all +ve
- B: Gain: 30%; Attitude: top +ve, bottom -ve
- C: Gain: 0%; Attitude: all +ve
- D: Gain: 0%; Attitude: top +ve, bottom -ve
- E: Something else

[Butler (1988) Br. J. Educ. Psychol., 58, 1-14]

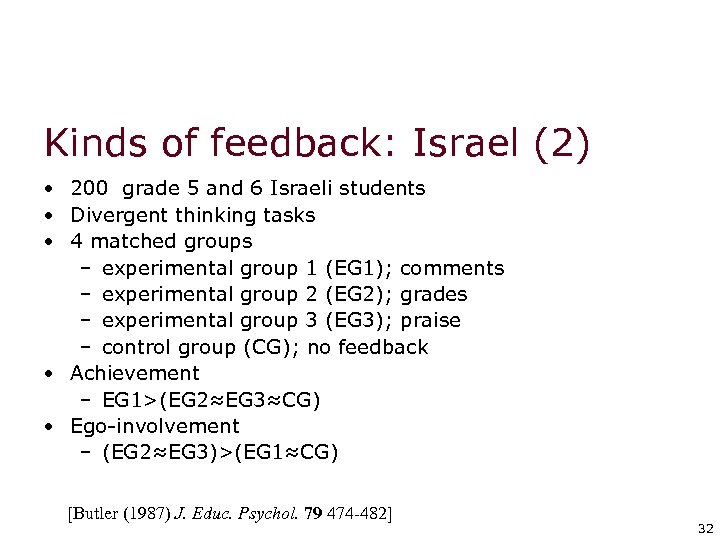

Kinds of feedback: Israel (2)
- 200 grade 5 and 6 Israeli students
- Divergent thinking tasks
- 4 matched groups
  - experimental group 1 (EG1); comments
  - experimental group 2 (EG2); grades
  - experimental group 3 (EG3); praise
  - control group (CG); no feedback
- Achievement
  - EG1 > (EG2 ≈ EG3 ≈ CG)
- Ego-involvement
  - (EG2 ≈ EG3) > (EG1 ≈ CG)

[Butler (1987) J. Educ. Psychol., 79, 474-482]

Effects of feedback
- Kluger & DeNisi (1996)
- Review of 3000 research reports
- Excluding those:
  - without adequate controls
  - with poor design
  - with fewer than 10 participants
  - where performance was not measured
  - without details of effect sizes
- left 131 reports, 607 effect sizes, involving 12652 individuals
- Average effect size 0.4, but
  - Effect sizes very variable
  - 40% of effect sizes were negative
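For readers unfamiliar with the metric, an effect size here is a standardized mean difference between a group that received feedback and a comparison group. A common form is shown below. This is an assumption for illustration: the slide does not say which estimator the review used, and the subscripts are labels chosen here.

```latex
d = \frac{\bar{x}_{\text{feedback}} - \bar{x}_{\text{control}}}{s_{\text{pooled}}},
\qquad
s_{\text{pooled}} = \sqrt{\frac{(n_1 - 1)\,s_1^2 + (n_2 - 1)\,s_2^2}{n_1 + n_2 - 2}}
```

On this reading, an average effect size of 0.4 means the feedback groups scored, on average, 0.4 standard deviations above their comparison groups, and a negative effect size means the feedback group did worse than the comparison group.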

Feedback
- Formative assessment requires
  - data on the actual level of some measurable attribute;
  - data on the reference level of that attribute;
  - a mechanism for comparing the two levels and generating information about the ‘gap’ between the two levels;
  - a mechanism by which the information can be used to alter the gap.
- Feedback is therefore formative only if the information fed back is actually used in closing the gap.

Formative assessment
- Frequent feedback is not necessarily formative
- Feedback that causes improvement is not necessarily formative
- Assessment is formative only if the information fed back to the learner is used by the learner in making improvements
- To be formative, assessment must include a recipe for future action

How do students make sense of this?
- Attribution (Dweck, 2000)
  - Personalization (internal v external)
  - Permanence (stable v unstable)
  - Essential that students attribute both failures and success to internal, unstable causes. (It’s down to you, and you can do something about it.)
- Views of ‘ability’
  - Fixed (IQ)
  - Incremental (untapped potential)
  - Essential that teachers inculcate in their students a view that ‘ability’ is incremental rather than fixed (by working, you’re getting smarter)

Sharing learning intentions

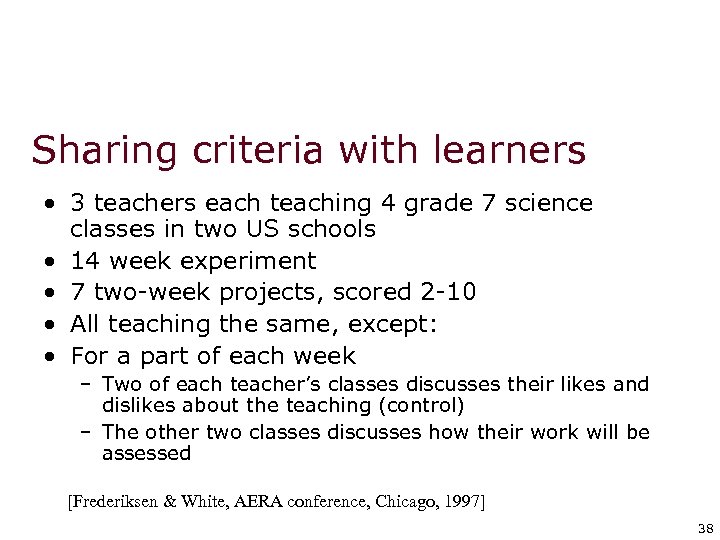

Sharing criteria with learners
- 3 teachers each teaching 4 grade 7 science classes in two US schools
- 14 week experiment
- 7 two-week projects, scored 2-10
- All teaching the same, except:
- For a part of each week
  - Two of each teacher’s classes discuss their likes and dislikes about the teaching (control)
  - The other two classes discuss how their work will be assessed

[Frederiksen & White, AERA conference, Chicago, 1997]

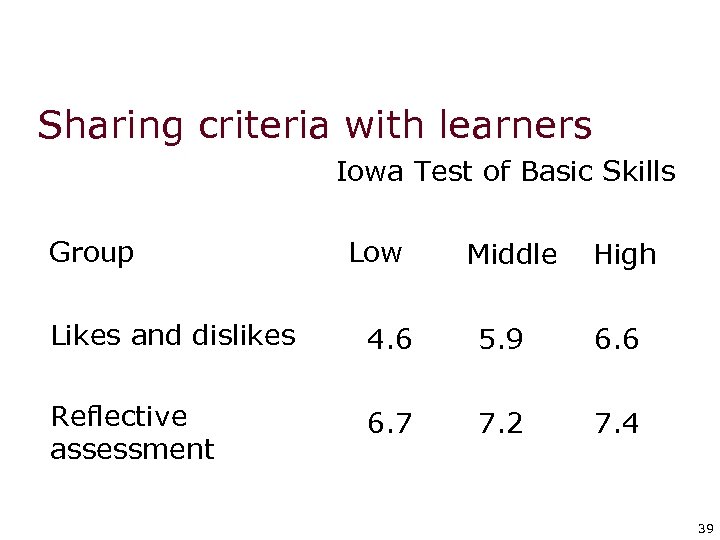

Sharing criteria with learners

| Group | Iowa Test of Basic Skills: Low | Middle | High |
| --- | --- | --- | --- |
| Likes and dislikes | 4.6 | 5.9 | 6.6 |
| Reflective assessment | 6.7 | 7.2 | 7.4 |

Peer- and self-assessment

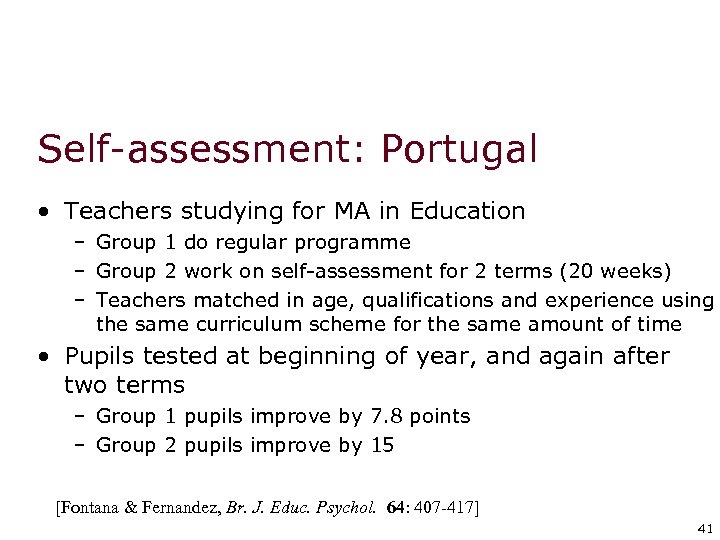

Self-assessment: Portugal
- Teachers studying for MA in Education
  - Group 1 do regular programme
  - Group 2 work on self-assessment for 2 terms (20 weeks)
  - Teachers matched in age, qualifications and experience using the same curriculum scheme for the same amount of time
- Pupils tested at beginning of year, and again after two terms
  - Group 1 pupils improve by 7.8 points
  - Group 2 pupils improve by 15

[Fontana & Fernandes (1994) Br. J. Educ. Psychol., 64, 407-417]

Putting it into practice

Eliciting evidence of student achievement by engineering effective classroom discussions, questions and learning tasks

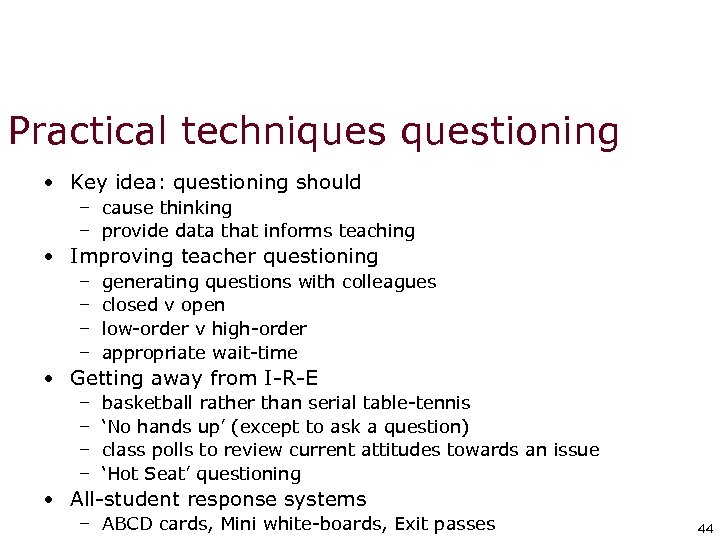

Practical techniques: questioning
- Key idea: questioning should
  - cause thinking
  - provide data that informs teaching
- Improving teacher questioning
  - generating questions with colleagues
  - closed v open
  - low-order v high-order
  - appropriate wait-time
- Getting away from I-R-E
  - basketball rather than serial table-tennis
  - ‘No hands up’ (except to ask a question)
  - class polls to review current attitudes towards an issue
  - ‘Hot Seat’ questioning
- All-student response systems
  - ABCD cards, Mini white-boards, Exit passes

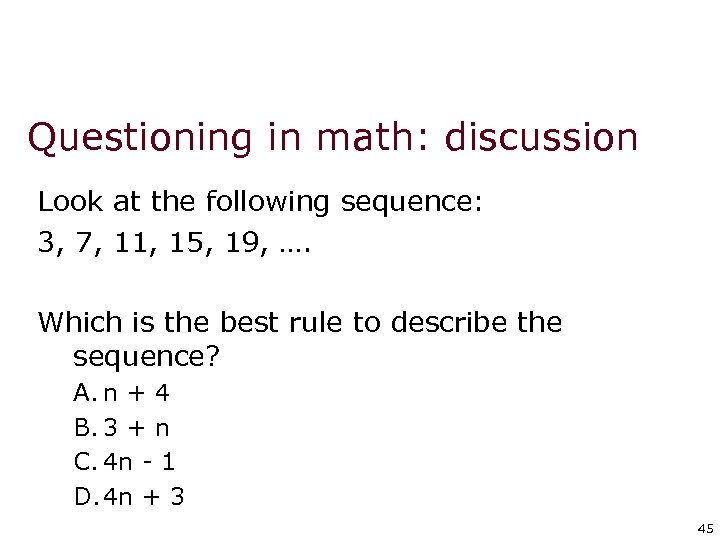

Questioning in math: discussion
Look at the following sequence: 3, 7, 11, 15, 19, … Which is the best rule to describe the sequence?
- A. n + 4
- B. 3 + n
- C. 4n - 1
- D. 4n + 3
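A quick check suggests why this item works as a discussion question rather than a right-or-wrong one. The sketch below tests each candidate rule against the sequence 3, 7, 11, 15, 19 under two conventions for the first value of n. The helper `matches` and the reading of option A as a term-to-term rule are assumptions made for illustration, not part of the slide.

```python
# Hypothetical check of the four candidate rules against 3, 7, 11, 15, 19.
sequence = [3, 7, 11, 15, 19]

rules = {
    "A. n + 4": lambda n: n + 4,
    "B. 3 + n": lambda n: 3 + n,
    "C. 4n - 1": lambda n: 4 * n - 1,
    "D. 4n + 3": lambda n: 4 * n + 3,
}


def matches(rule, start: int) -> bool:
    """True if rule(n) reproduces the sequence for n = start, start + 1, ..."""
    return [rule(n) for n in range(start, start + len(sequence))] == sequence


for label, rule in rules.items():
    print(label, "| n from 1:", matches(rule, 1), "| n from 0:", matches(rule, 0))

# One possible reading of option A: a term-to-term rule ("add 4 each time").
term_to_term = all(b == a + 4 for a, b in zip(sequence, sequence[1:]))
print("A read as a term-to-term rule:", term_to_term)
```

Option C fits when n starts at 1 and option D fits when n starts at 0, while A is defensible only as a description of the step between terms. Which rule is "best" therefore depends on conventions students have to argue for.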

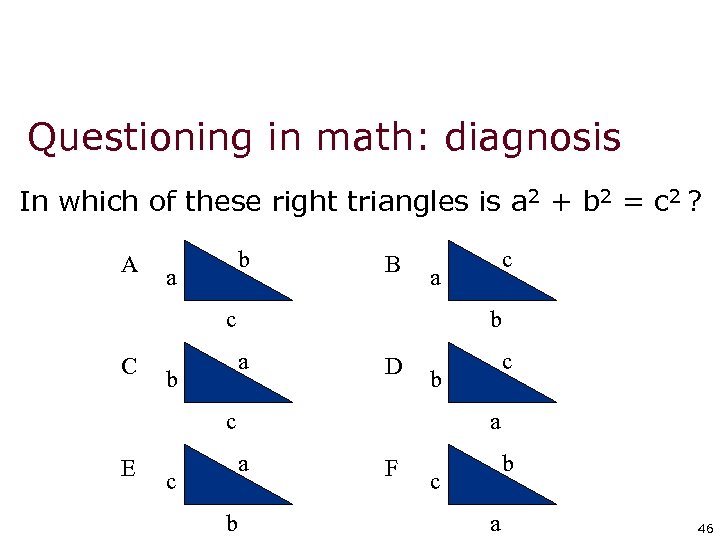

Questioning in math: diagnosis
In which of these right triangles is a² + b² = c²?
[Figure: six right triangles, A to F, with the labels a, b and c assigned to different sides]

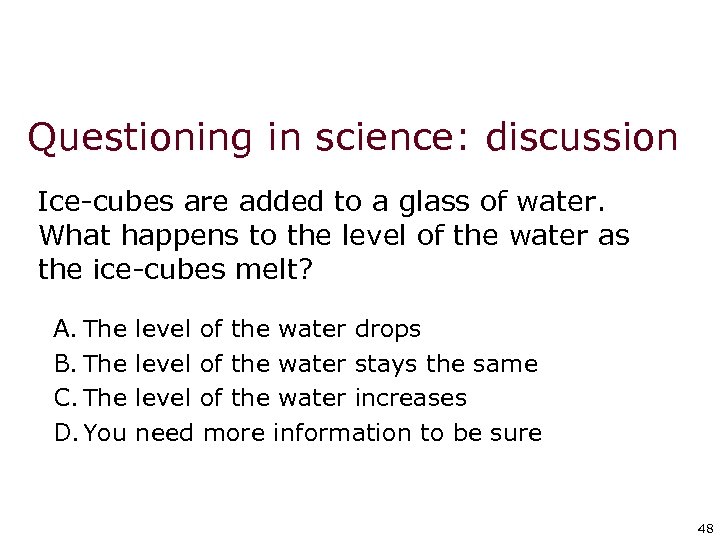

Questioning in science: discussion
Ice-cubes are added to a glass of water. What happens to the level of the water as the ice-cubes melt?
- A. The level of the water drops
- B. The level of the water stays the same
- C. The level of the water increases
- D. You need more information to be sure

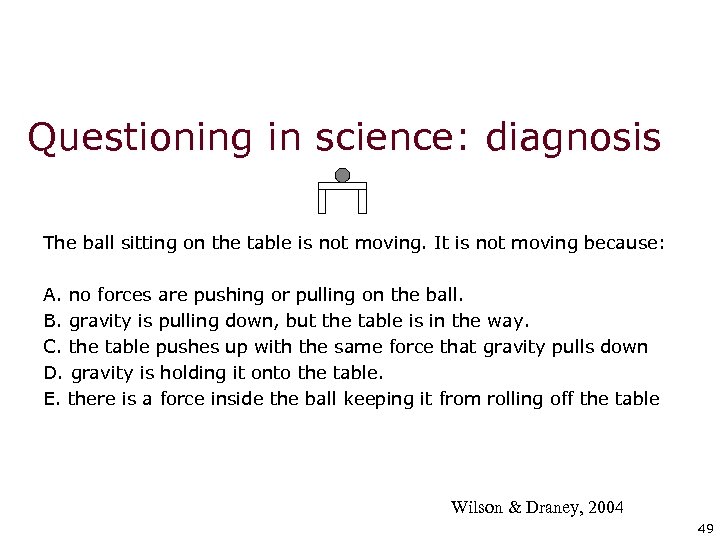

Questioning in science: diagnosis
The ball sitting on the table is not moving. It is not moving because:
- A. no forces are pushing or pulling on the ball.
- B. gravity is pulling down, but the table is in the way.
- C. the table pushes up with the same force that gravity pulls down.
- D. gravity is holding it onto the table.
- E. there is a force inside the ball keeping it from rolling off the table.

[Wilson & Draney, 2004]

Dinosaur extinction
Why did dinosaurs become extinct?
- A. Humans destroyed their habitat
- B. Humans killed them all for food
- C. There was a major change in climate

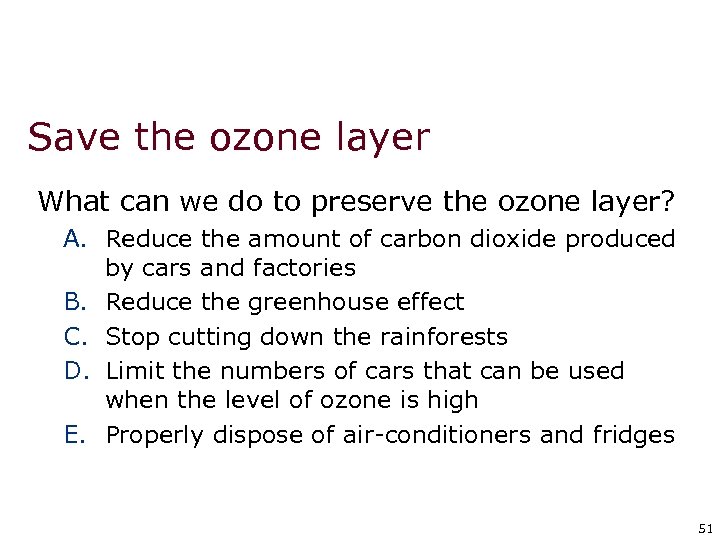

Save the ozone layer
What can we do to preserve the ozone layer?
- A. Reduce the amount of carbon dioxide produced by cars and factories
- B. Reduce the greenhouse effect
- C. Stop cutting down the rainforests
- D. Limit the numbers of cars that can be used when the level of ozone is high
- E. Properly dispose of air-conditioners and fridges

Questioning in English: discussion
- Macbeth: mad or bad?

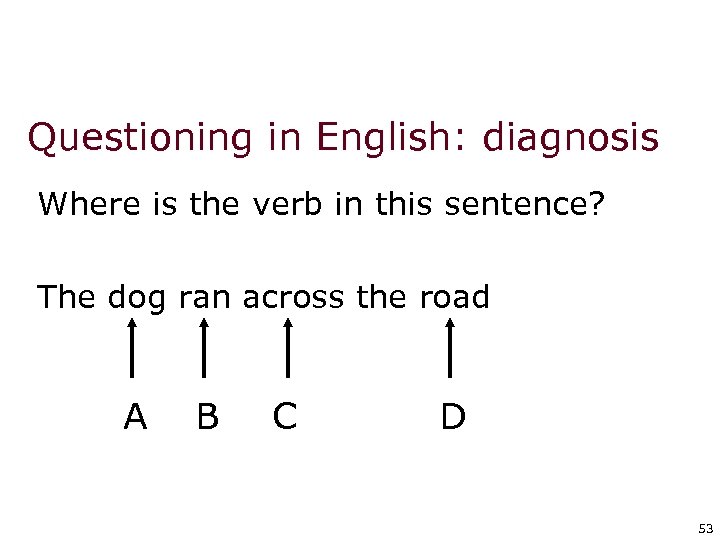

Questioning in English: diagnosis
- Where is the verb in this sentence? “The dog ran across the road” (response options A, B, C and D are marked on the sentence in the slide)

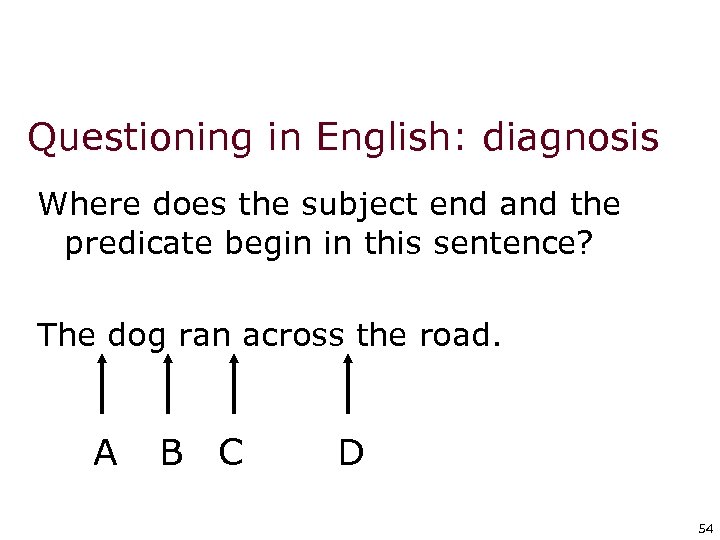

Questioning in English: diagnosis
- Where does the subject end and the predicate begin in this sentence? “The dog ran across the road.” (response options A, B, C and D are marked on the sentence in the slide)

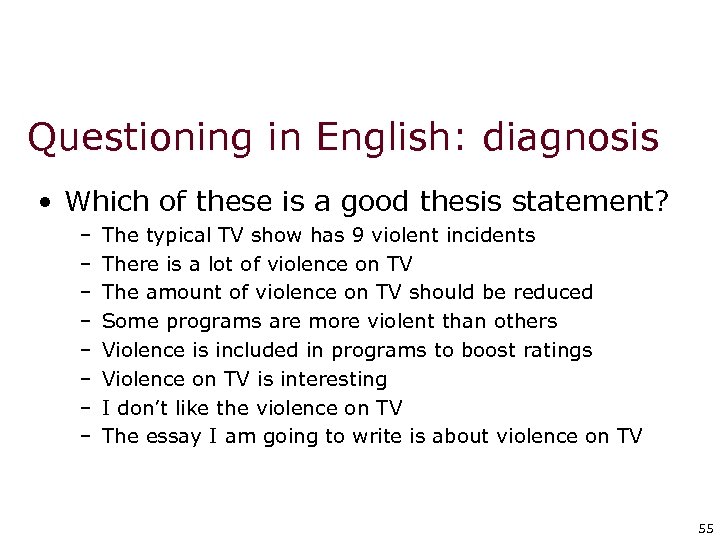

Questioning in English: diagnosis
- Which of these is a good thesis statement?
  - The typical TV show has 9 violent incidents
  - There is a lot of violence on TV
  - The amount of violence on TV should be reduced
  - Some programs are more violent than others
  - Violence is included in programs to boost ratings
  - Violence on TV is interesting
  - I don’t like the violence on TV
  - The essay I am going to write is about violence on TV

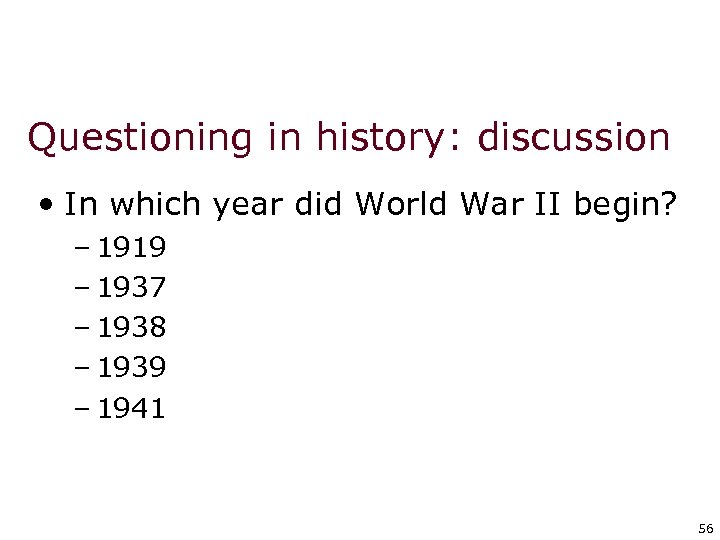

Questioning in history: discussion
- In which year did World War II begin?
  - 1919
  - 1937
  - 1938
  - 1939
  - 1941

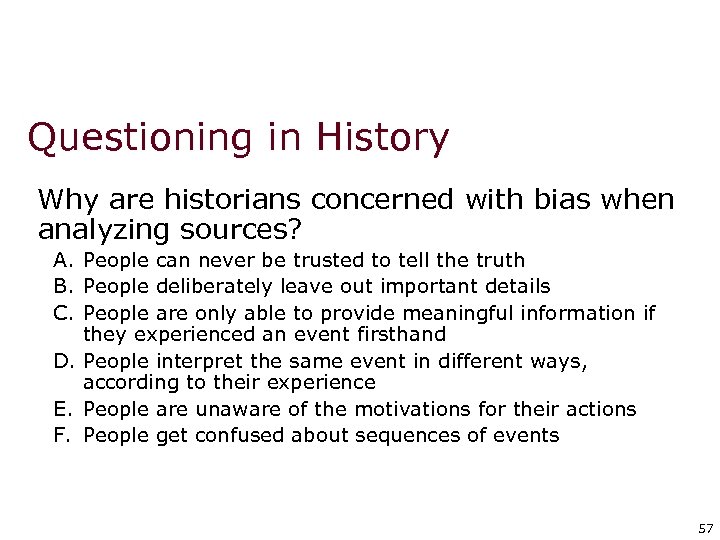

Questioning in history
Why are historians concerned with bias when analyzing sources?
- A. People can never be trusted to tell the truth
- B. People deliberately leave out important details
- C. People are only able to provide meaningful information if they experienced an event firsthand
- D. People interpret the same event in different ways, according to their experience
- E. People are unaware of the motivations for their actions
- F. People get confused about sequences of events

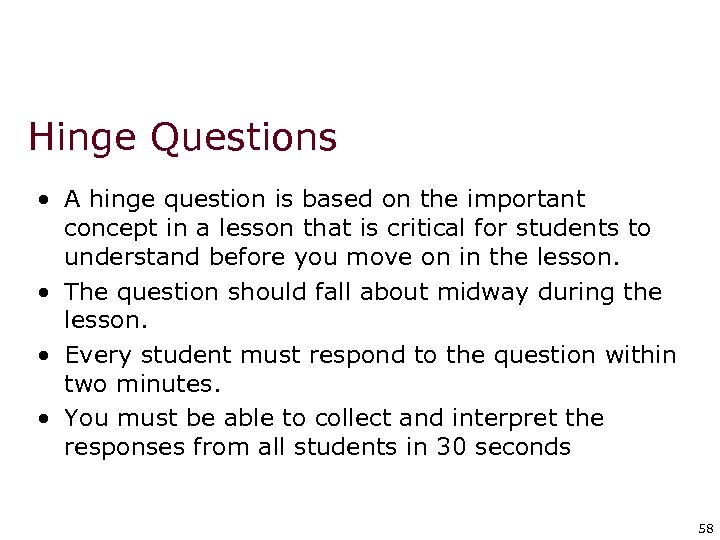

Hinge Questions
- A hinge question is based on the important concept in a lesson that is critical for students to understand before you move on in the lesson.
- The question should fall about midway during the lesson.
- Every student must respond to the question within two minutes.
- You must be able to collect and interpret the responses from all students in 30 seconds
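Collecting and interpreting every student's response in 30 seconds amounts to a quick tally. The sketch below is a minimal illustration, assuming responses are read off something like ABCD cards. The response data, the function name `tally_responses` and the 80% cut-off are all hypothetical and do not come from the slides.

```python
from collections import Counter


def tally_responses(responses: list[str], correct: str) -> tuple[Counter, float]:
    """Count each option chosen and the share of students who chose `correct`."""
    counts = Counter(responses)
    share_correct = counts[correct] / len(responses) if responses else 0.0
    return counts, share_correct


# Made-up responses from a class of ten (e.g. read off ABCD cards).
responses = ["C", "C", "D", "C", "A", "C", "C", "D", "C", "C"]
counts, share = tally_responses(responses, correct="C")

MOVE_ON_THRESHOLD = 0.8  # hypothetical cut-off, not taken from the slides
decision = "move on" if share >= MOVE_ON_THRESHOLD else "revisit the concept"
print(counts.most_common(), f"{share:.0%} correct ->", decision)
```

The distribution of wrong answers matters as much as the share correct: if each distractor targets a known misconception, the tally shows which one to address before moving on.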

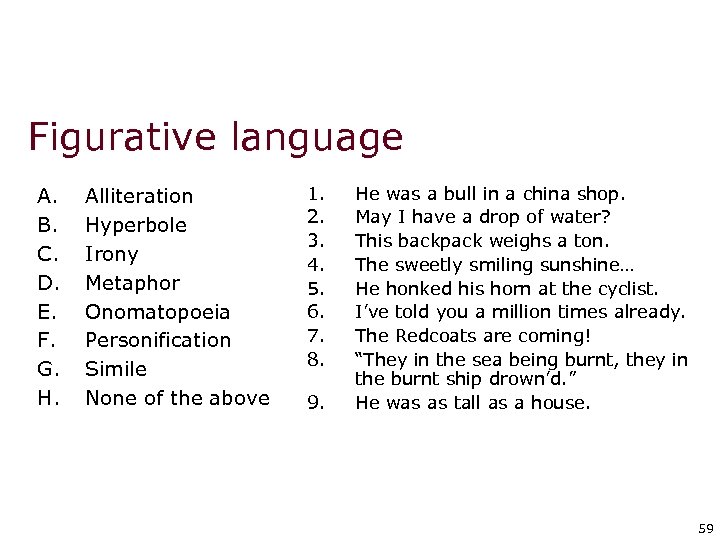

Figurative language
- A. Alliteration
- B. Hyperbole
- C. Irony
- D. Metaphor
- E. Onomatopoeia
- F. Personification
- G. Simile
- H. None of the above

1. He was a bull in a china shop.
2. May I have a drop of water?
3. This backpack weighs a ton.
4. The sweetly smiling sunshine…
5. He honked his horn at the cyclist.
6. I’ve told you a million times already.
7. The Redcoats are coming!
8. “They in the sea being burnt, they in the burnt ship drown’d.”
9. He was as tall as a house.

Triangle Shirtwaist Factory fire, March 25th, 1911

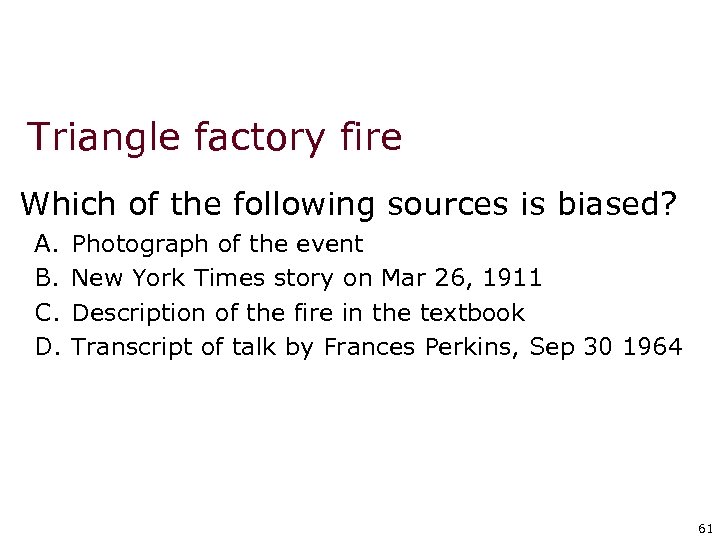

Triangle factory fire Which of the following sources is biased? A. B. C. D. Photograph of the event New York Times story on Mar 26, 1911 Description of the fire in the textbook Transcript of talk by Frances Perkins, Sep 30 1964 61 61

Practical techniques: feedback
• Key idea: feedback should
  – cause thinking
  – provide guidance on how to improve
• Comment-only grading
• Focused grading
• Explicit reference to rubrics
• Suggestions on how to improve
  – ‘Strategy cards’ ideas for improvement
  – Not giving complete solutions
• Re-timing assessment
  – (e.g. two-thirds-of-the-way-through-a-unit test)

Practical techniques: sharing learning intentions
• Explaining learning intentions at start of lesson/unit
  – Learning intentions
  – Success criteria
• Intentions/criteria in students’ language
• Posters of key words to talk about learning
  – e.g. describe, explain, evaluate
• Planning/writing frames
• Annotated examples of different standards to ‘flesh out’ assessment rubrics (e.g. lab reports)
• Opportunities for students to design their own tests

Practical techniques: peer and self-assessment
• Students assessing their own/peers’ work
  – with rubrics
  – with exemplars
  – “two stars and a wish”
• Training students to pose questions/identify group weaknesses
• Self-assessment of understanding
  – Traffic lights
  – Red/green discs
• End-of-lesson students’ review

Putting it into practice

A model for teacher learning
• Content (what we want teachers to change)
  – Evidence
  – Ideas (strategies and techniques)
• Process (how to go about change)
  – Choice
  – Flexibility
  – Small steps
  – Accountability
  – Support

Strategies and techniques
• Distinction between strategies and techniques
  – Strategies define the territory of AfL (no-brainers)
  – Teachers are responsible for choice of techniques
    • Allows for customization/caters for local context
    • Creates ownership
    • Shares responsibility
• Key requirements of techniques
  – embodiment of deep cognitive/affective principles
  – relevance
  – feasibility
  – acceptability

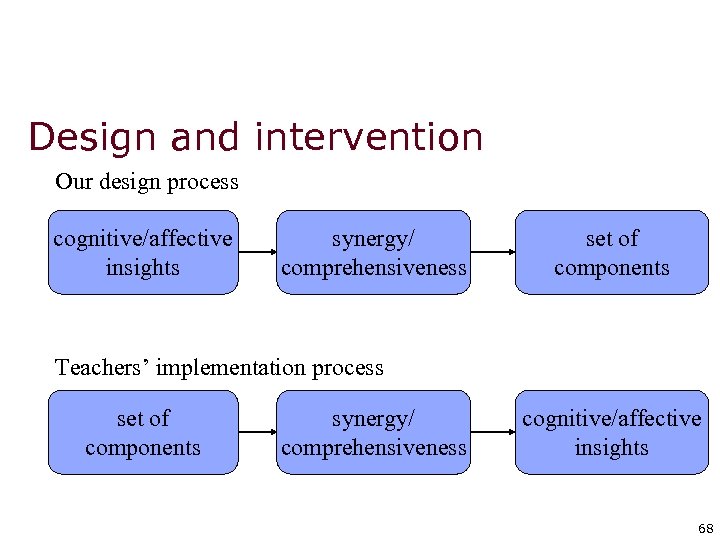

Design and intervention
• Our design process: cognitive/affective insights, synergy/comprehensiveness, set of components
• Teachers’ implementation process: set of components, synergy/comprehensiveness, cognitive/affective insights

Why research hasn’t changed teaching
• The nature of expertise in teaching
• Aristotle’s main intellectual virtues
  – Episteme: knowledge of universal truths
  – Techne: ability to make things
  – Phronesis: practical wisdom
• What works is not the right question
  – Everything works somewhere
  – Nothing works everywhere
  – What’s interesting is “under what conditions” does this work?
• Teaching is mainly a matter of phronesis,

Knowledge ‘transfer’ (after Nonaka & Takeuchi, 1995)

Supporting Teachers and Schools to Change through Teacher Learning Communities

Implementing AfL requires changing teacher habits
• Teachers “know” most of this already
• So the problem is not a lack of knowledge
• It’s a lack of understanding what it means to do AfL
• That’s why telling teachers what to do doesn’t work
• Experience alone is not enough: if it were, then the most experienced teachers would be the best teachers, and we know that’s not true (Hanushek, 2005)
• People need to reflect on their experiences in systematic ways that build their accessible knowledge base, learn from mistakes, etc. (Bransford, Brown & Cocking, 1999)

That’s what TLCs are for:
• TLCs contradict teacher isolation
• TLCs reprofessionalize teaching by valuing teacher expertise
• TLCs deprivatize teaching so that teachers’ strengths and struggles become known
• TLCs offer a steady source of support for struggling teachers
• They grow expertise by providing a regular space, time, and structure for that kind of systematic reflecting on practice
• They facilitate sharing of untapped expertise residing in individual teachers
• They build the collective knowledge base in a school

The synergy
• Content: assessment for learning
• Process: teacher learning communities
• Components of a model
  – Initial workshops
  – Support for TLC leaders
  – Monthly TLC meetings
  – Peer observations
  – ‘Drip-feed’ resources
    • Web-site
    • Writings
    • New ideas

Summary
• Raising achievement is important
• Raising achievement requires improving teacher quality
• Improving teacher quality requires teacher professional development
• To be effective, teacher professional development must address
  – What teachers do in the classroom
  – How teachers change what they do in the classroom
• AfL + TLCs
  – A point of (uniquely?) high leverage
  – A “Trojan Horse” into wider issues of pedagogy, psychology, and curriculum
