
- Number of slides: 74

USC CSE, University of Southern California Center for Software Engineering

The COCOMO II Suite of Software Cost Estimation Models

Barry Boehm, USC
COCOMO/SSCM Forum 21 Tutorial, November 8, 2006
boehm@csse.usc.edu, http://csse.usc.edu/research/cocomosuite

11/8/06 ©USC-CSSE

Thanks to USC-CSSE Affiliates (33)
• Commercial Industry (10): Cost Xpert Group, Galorath, Group Systems, IBM, Intelligent Systems, Microsoft, Motorola, Price Systems, Softstar Systems, Sun
• Aerospace Industry (8): BAE Systems, Boeing, General Dynamics, Lockheed Martin, Northrop Grumman (2), Raytheon, SAIC
• Government (6): FAA, NASA-Ames, NSF, US Army Research Labs, US Army TACOM, USAF Cost Center
• FFRDCs and Consortia (6): Aerospace, FC-MD, IDA, JPL, SEI, SPC
• International (3): Institute of Software, Chinese Academy of Sciences; EASE (Japan); Samsung

USC-CSSE Affiliates Program
• Provides priorities for, and access to, USC-CSE research
  – Scalable spiral processes, cost/schedule/quality models, requirements groupware, architecting and re-engineering tools, value-based software engineering methods
  – Experience applying them in DoD, NASA, and industry
  – Affiliate community events
    • 14th Annual Research Review and Executive Workshop, USC Campus, February 12-15, 2007
    • 11th Annual Ground Systems Architecture Workshop (with Aerospace Corp.), Manhattan Beach, CA, March 26-29, 2007
    • 22nd International COCOMO/Systems and Software Cost Estimation Forum, USC Campus, October 23-26, 2007
• Synergistic with USC distance education programs
  – MS, Systems Architecting and Engineering
  – MS, Computer Science (Software Engineering)

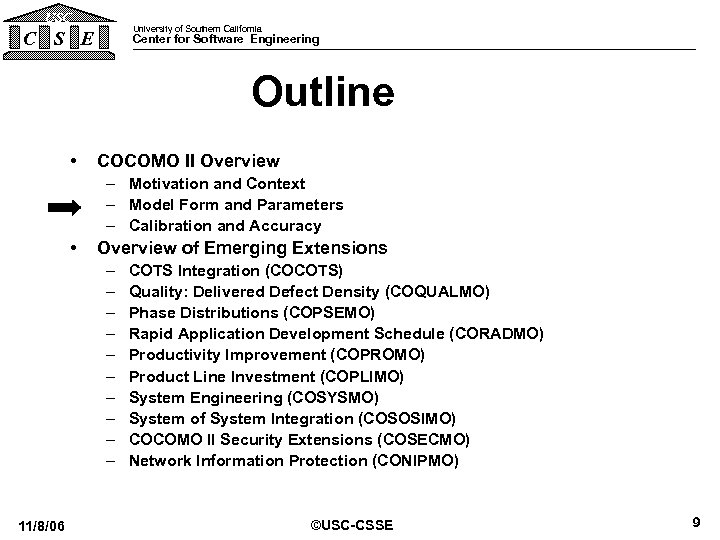

Outline
• COCOMO II Overview
  – Motivation and Context
  – Model Form and Parameters
  – Calibration and Accuracy
• Overview of Emerging Extensions
  – COTS Integration (COCOTS)
  – Quality: Delivered Defect Density (COQUALMO)
  – Phase Distributions (COPSEMO)
  – Rapid Application Development Schedule (CORADMO)
  – Productivity Improvement (COPROMO)
  – Product Line Investment (COPLIMO)
  – System Engineering (COSYSMO)
  – System of Systems Integration (COSOSIMO)
  – COCOMO II Security Extensions (COSECMO)
  – Network Information Protection (CONIPMO)

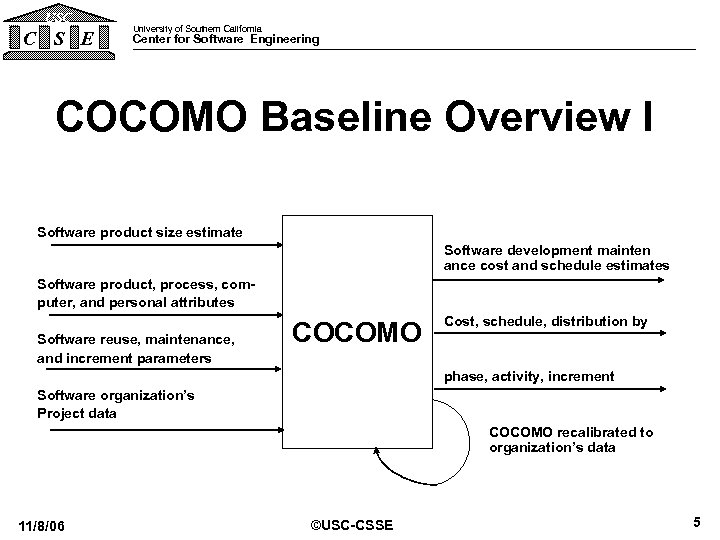

COCOMO Baseline Overview I
• Inputs to COCOMO
  – Software product size estimate
  – Software product, process, computer, and personnel attributes
  – Software reuse, maintenance, and increment parameters
  – Software organization's project data
• Outputs from COCOMO
  – Software development and maintenance cost and schedule estimates
  – Cost, schedule, distribution by phase, activity, increment
  – COCOMO recalibrated to organization's data

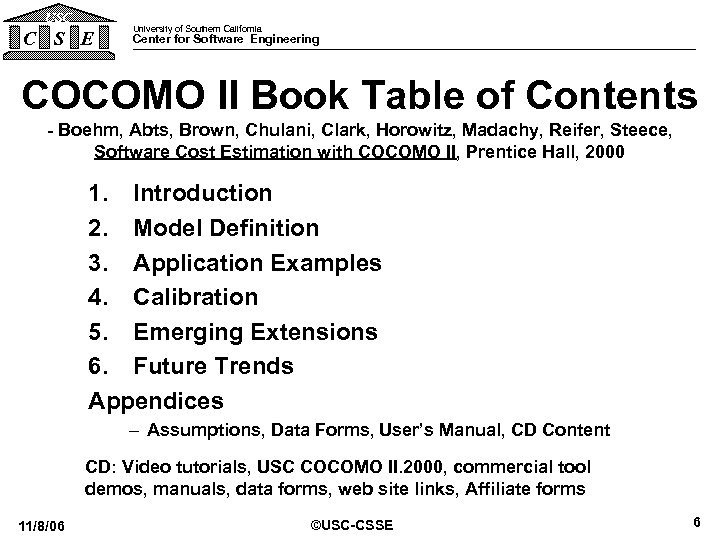

COCOMO II Book Table of Contents
Boehm, Abts, Brown, Chulani, Clark, Horowitz, Madachy, Reifer, Steece, Software Cost Estimation with COCOMO II, Prentice Hall, 2000
1. Introduction
2. Model Definition
3. Application Examples
4. Calibration
5. Emerging Extensions
6. Future Trends
Appendices: Assumptions, Data Forms, User's Manual, CD Content
CD: Video tutorials, USC COCOMO II.2000, commercial tool demos, manuals, data forms, web site links, Affiliate forms

Need to Reengineer COCOMO 81
• New software processes
• New sizing phenomena
• New reuse phenomena
• Need to make decisions based on incomplete information

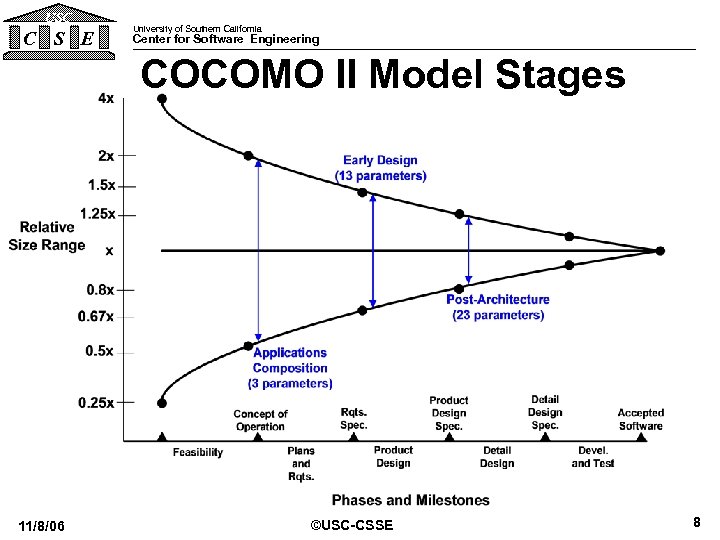

COCOMO II Model Stages

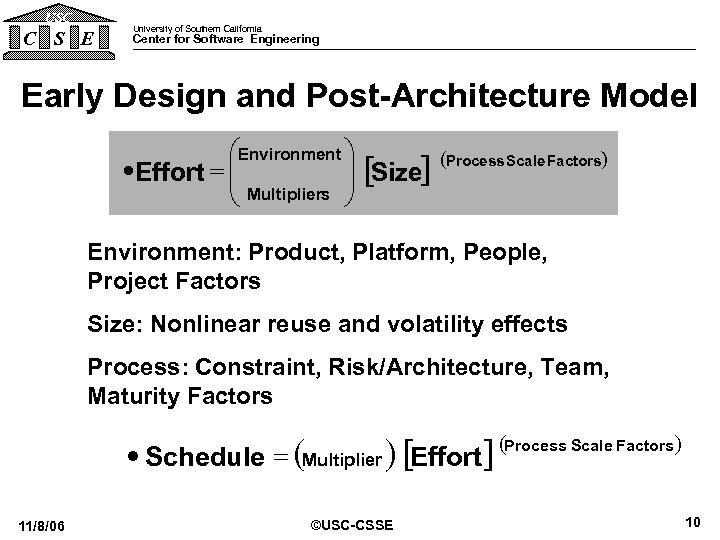

Early Design and Post-Architecture Model

$$\text{Effort} = \left(\text{Environment Multipliers}\right) \times [\text{Size}]^{(\text{Process Scale Factors})}$$

$$\text{Schedule} = \left(\text{Multiplier}\right) \times [\text{Effort}]^{(\text{Process Scale Factors})}$$

• Environment: Product, Platform, People, Project Factors
• Size: Nonlinear reuse and volatility effects
• Process: Constraint, Risk/Architecture, Team, Maturity Factors
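The slide gives only the shape of the two equations. A minimal Python sketch, assuming the published COCOMO II.2000 constants (A = 2.94 and B = 0.91 for effort, C = 3.67 and D = 0.28 for schedule) and ignoring the SCED schedule-compression adjustment, shows how the same process scale factors drive both exponents. The function names are illustrative.

```python
# Assumed COCOMO II.2000 calibration constants (published values; a local
# recalibration may change them).
A, B = 2.94, 0.91  # effort: PM = A * Size^E * prod(EM), E = B + 0.01 * sum(SF)
C, D = 3.67, 0.28  # schedule: TDEV = C * PM^F, F = D + 0.2 * (E - B)


def effort_exponent(scale_factor_sum: float) -> float:
    """E = B + 0.01 * sum of the five process scale factor values."""
    return B + 0.01 * scale_factor_sum


def schedule_months(effort_pm: float, scale_factor_sum: float) -> float:
    """Nominal schedule in months; reuses the same scale factors as effort."""
    if effort_pm <= 0:
        raise ValueError("effort must be positive")
    f = D + 0.2 * (effort_exponent(scale_factor_sum) - B)
    return C * effort_pm ** f


if __name__ == "__main__":
    # PREC, FLEX, RESL, TEAM, PMAT all rated Nominal (assumed 2000 values).
    nominal_sf_sum = 3.72 + 3.04 + 4.24 + 3.29 + 4.68
    effort = A * 100.0 ** effort_exponent(nominal_sf_sum)  # 100 KSLOC, all EM = 1.0
    months = schedule_months(effort, nominal_sf_sum)
    print(f"effort {effort:.0f} PM, schedule {months:.1f} months")
```

Because the schedule exponent is much smaller than the effort exponent, schedule grows far more slowly with effort than effort grows with size.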

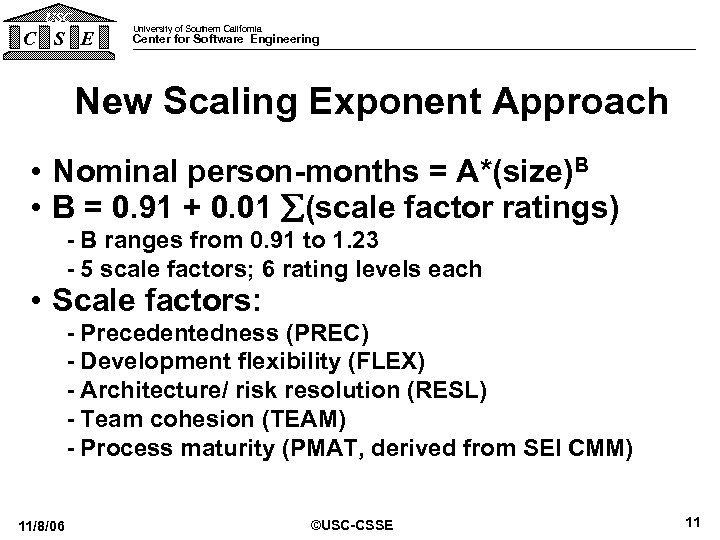

New Scaling Exponent Approach
• Nominal person-months $= A \times (\text{Size})^{B}$
• $B = 0.91 + 0.01 \times \sum_i \text{SF}_i$ (sum of the scale factor ratings)
  – B ranges from 0.91 to 1.23
  – 5 scale factors; 6 rating levels each
• Scale factors:
  – Precedentedness (PREC)
  – Development flexibility (FLEX)
  – Architecture/risk resolution (RESL)
  – Team cohesion (TEAM)
  – Process maturity (PMAT, derived from SEI CMM)
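A short Python sketch of the exponent computation. The per-level scale factor values in `SCALE_FACTORS` are assumed from the published COCOMO II.2000 calibration (they are not stated on this slide); the names `SCALE_FACTORS`, `LEVELS`, and `scaling_exponent` are illustrative.

```python
# Scale factor values per rating level, assumed from the COCOMO II.2000
# calibration; verify against the model manual before relying on them.
LEVELS = ("VL", "L", "N", "H", "VH", "XH")  # Very Low .. Extra High
SCALE_FACTORS = {
    "PREC": (6.20, 4.96, 3.72, 2.48, 1.24, 0.00),
    "FLEX": (5.07, 4.05, 3.04, 2.03, 1.01, 0.00),
    "RESL": (7.07, 5.65, 4.24, 2.83, 1.41, 0.00),
    "TEAM": (5.48, 4.38, 3.29, 2.19, 1.10, 0.00),
    "PMAT": (7.80, 6.24, 4.68, 3.12, 1.56, 0.00),
}


def scaling_exponent(ratings: dict) -> float:
    """B = 0.91 + 0.01 * sum of the five scale factor values for the given ratings."""
    total = sum(values[LEVELS.index(ratings[name])]
                for name, values in SCALE_FACTORS.items())
    return 0.91 + 0.01 * total


if __name__ == "__main__":
    print(round(scaling_exponent({name: "N" for name in SCALE_FACTORS}), 4))
```

Rating all five factors Very Low gives a sum of 31.62 and B ≈ 1.23; rating them all Extra High gives B = 0.91, the two ends of the range above. All-Nominal ratings give B ≈ 1.10.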

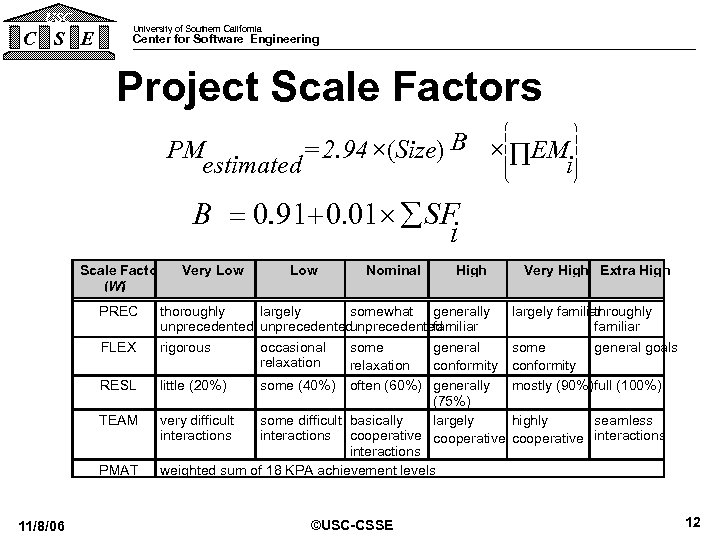

Project Scale Factors

$$\text{PM}_{\text{estimated}} = 2.94 \times (\text{Size})^{B} \times \prod_i \text{EM}_i, \qquad B = 0.91 + 0.01 \times \sum_i \text{SF}_i$$

| Scale Factors ($W_i$) | Very Low | Low | Nominal | High | Very High | Extra High |
|---|---|---|---|---|---|---|
| PREC | thoroughly unprecedented | largely unprecedented | somewhat unprecedented | generally familiar | largely familiar | thoroughly familiar |
| FLEX | rigorous | occasional relaxation | some relaxation | general conformity | some conformity | general goals |
| RESL | little (20%) | some (40%) | often (60%) | generally (75%) | mostly (90%) | full (100%) |
| TEAM | very difficult interactions | some difficult interactions | basically cooperative interactions | largely cooperative | highly cooperative | seamless interactions |

PMAT: weighted sum of 18 KPA achievement levels.
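The effort equation above translates directly into code. This is a minimal Python sketch, assuming size is expressed in KSLOC and that each effort multiplier is 1.0 at Nominal; the count of 17 multipliers in the example is an assumption based on the COCOMO II.2000 Post-Architecture model, and the function name is illustrative.

```python
import math


def estimated_effort_pm(size_ksloc: float, b: float, effort_multipliers: list) -> float:
    """PM_estimated = 2.94 * Size^B * product of the effort multipliers EM_i.

    size_ksloc: size in thousands of source lines of code (assumed unit).
    b: scaling exponent, B = 0.91 + 0.01 * sum(SF_i).
    effort_multipliers: one value per cost driver; 1.0 means Nominal.
    """
    if size_ksloc <= 0:
        raise ValueError("size must be positive")
    return 2.94 * size_ksloc ** b * math.prod(effort_multipliers)


if __name__ == "__main__":
    nominal = estimated_effort_pm(100.0, 1.0997, [1.0] * 17)
    doubled = estimated_effort_pm(200.0, 1.0997, [1.0] * 17)
    # Because B > 1, doubling size more than doubles effort (diseconomy of scale).
    print(f"{nominal:.0f} PM -> {doubled:.0f} PM")
```

The multipliers scale effort linearly, while the scale factors act through the exponent, so their influence grows with project size.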

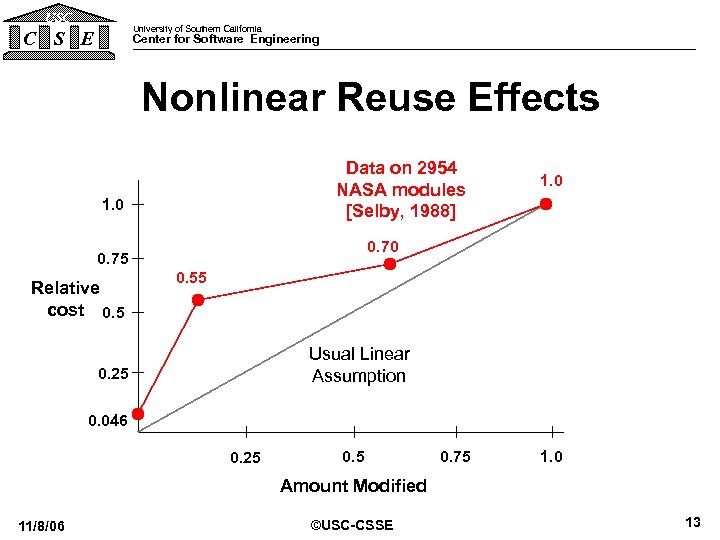

Nonlinear Reuse Effects
Figure: relative cost versus amount modified, data on 2954 NASA modules [Selby, 1988], compared with the usual linear assumption.

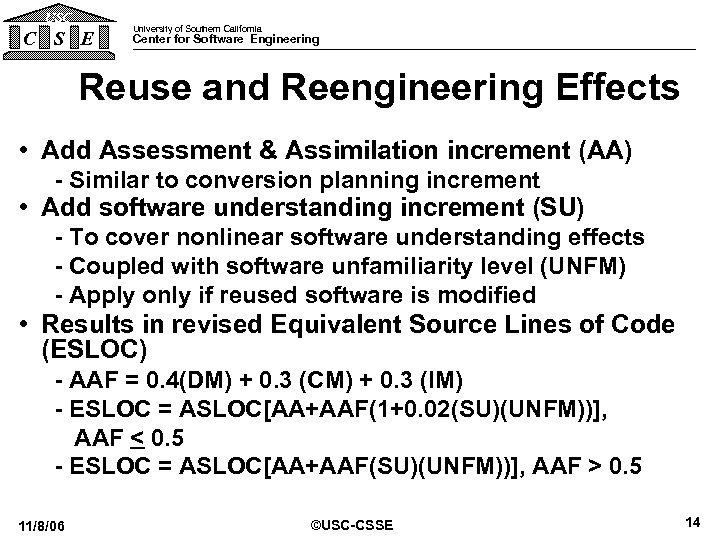

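Reuse and Reengineering Effects
• Add Assessment & Assimilation increment (AA)
  – Similar to conversion planning increment
• Add software understanding increment (SU)
  – To cover nonlinear software understanding effects
  – Coupled with software unfamiliarity level (UNFM)
  – Apply only if reused software is modified
• Results in revised Equivalent Source Lines of Code (ESLOC), computed from the Adapted Source Lines of Code (ASLOC). DM, CM, and IM are the percentages of design modified, code modified, and integration effort required; AA and SU are also percentages, and UNFM ranges from 0 to 1:

$$\text{AAF} = 0.4(\text{DM}) + 0.3(\text{CM}) + 0.3(\text{IM})$$

$$\text{ESLOC} = \text{ASLOC} \times \frac{\text{AA} + \text{AAF}\,[1 + 0.02(\text{SU})(\text{UNFM})]}{100}, \qquad \text{AAF} \le 50$$

$$\text{ESLOC} = \text{ASLOC} \times \frac{\text{AA} + \text{AAF} + (\text{SU})(\text{UNFM})}{100}, \qquad \text{AAF} > 50$$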
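A Python sketch of the reuse equations. It follows the COCOMO II.2000 model definition, in which DM, CM, IM, AA, and SU are percentages, so the branch point is AAF = 50 (0.5 as a fraction) and the bracketed term is divided by 100; above the branch point the understanding penalty SU × UNFM is added rather than scaled by AAF. The function name and example inputs are hypothetical, and the automatic-translation (AT) adjustment of the full model is not included.

```python
def equivalent_sloc(asloc: float, dm: float, cm: float, im: float,
                    aa: float, su: float, unfm: float) -> float:
    """Equivalent SLOC for adapted (reused or modified) code.

    dm, cm, im: percent of design modified, code modified, and integration
        effort required, each 0-100.
    aa: assessment and assimilation increment, in percent.
    su: software understanding increment, in percent.
    unfm: programmer unfamiliarity with the software, 0.0-1.0.
    """
    aaf = 0.4 * dm + 0.3 * cm + 0.3 * im
    # SU and UNFM apply only if the reused software is modified.
    understanding = su * unfm if (dm > 0 or cm > 0) else 0.0
    if aaf <= 50:
        aam = (aa + aaf * (1 + 0.02 * understanding)) / 100
    else:
        aam = (aa + aaf + understanding) / 100
    return asloc * aam


if __name__ == "__main__":
    # Hypothetical inputs: 10,000 adapted lines, lightly modified.
    print(round(equivalent_sloc(10_000, dm=10, cm=20, im=30, aa=4, su=30, unfm=0.4)))
```

With these inputs AAF = 19, so the first branch applies and 10,000 adapted lines count as about 2,756 equivalent new lines.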

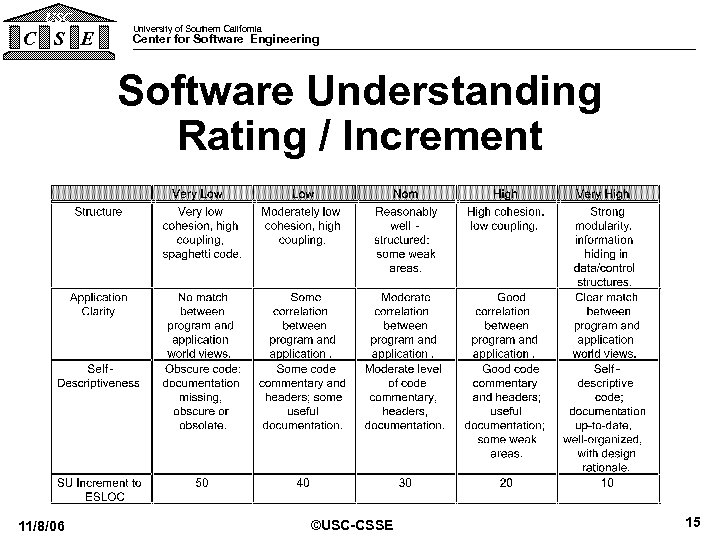

Software Understanding Rating / Increment

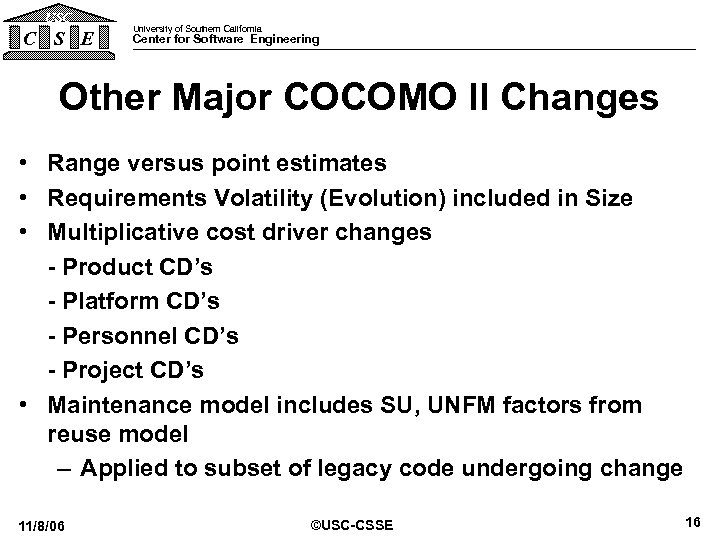

Other Major COCOMO II Changes
• Range estimates rather than point estimates
• Requirements volatility (evolution) included in size
• Multiplicative cost driver changes
  – Product cost drivers
  – Platform cost drivers
  – Personnel cost drivers
  – Project cost drivers
• Maintenance model includes SU, UNFM factors from reuse model
  – Applied to subset of legacy code undergoing change

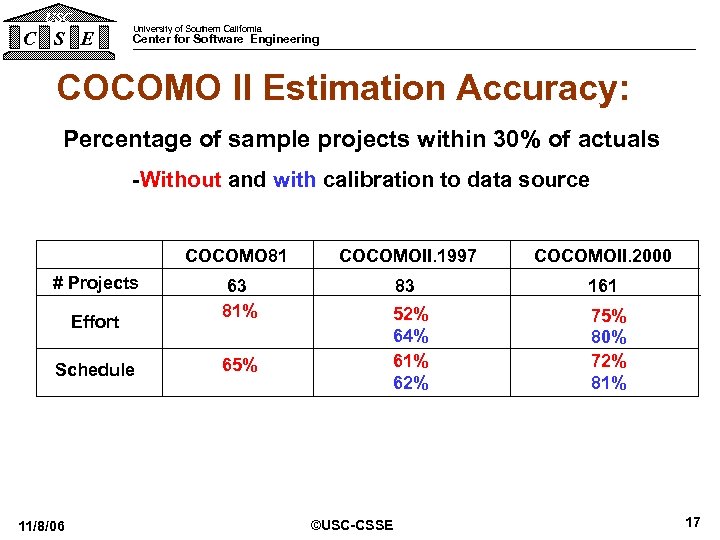

COCOMO II Estimation Accuracy: Percentage of sample projects within 30% of actuals
Cells for COCOMO II.1997 and COCOMO II.2000 show accuracy without / with calibration to the data source.

| | COCOMO 81 | COCOMO II.1997 | COCOMO II.2000 |
|---|---|---|---|
| # Projects | 63 | 83 | 161 |
| Effort | 81% | 52% / 64% | 75% / 80% |
| Schedule | 65% | 61% / 62% | 72% / 81% |
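The accuracy measure in this table, the share of projects whose estimate falls within 30% of the actual, is usually written PRED(.30). A minimal Python sketch of the metric; the function name and the sample numbers are hypothetical.

```python
def pred(estimates: list, actuals: list, level: float = 0.30) -> float:
    """PRED(level): fraction of projects with |estimate - actual| / actual <= level."""
    if not actuals or len(estimates) != len(actuals):
        raise ValueError("need equal-length, non-empty sequences")
    hits = sum(1 for est, act in zip(estimates, actuals)
               if abs(est - act) / act <= level)
    return hits / len(actuals)


if __name__ == "__main__":
    # Hypothetical effort estimates and actuals (person-months) for four projects.
    print(pred([100, 150, 80, 200], [110, 100, 90, 120]))
```

Here two of the four estimates fall within 30% of their actuals, so PRED(.30) is 50%.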

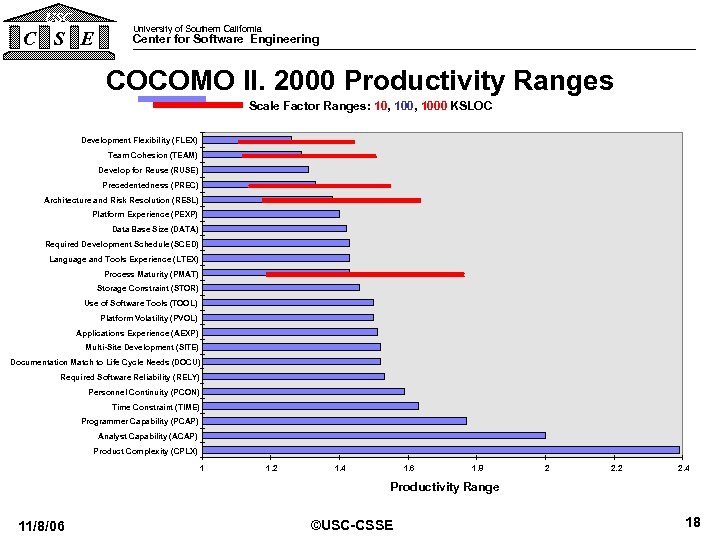

COCOMO II.2000 Productivity Ranges
Figure: productivity range of each COCOMO II.2000 cost driver and scale factor (axis from 1 to 2.4; scale factor ranges shown for 10 and 1000 KSLOC). Drivers as charted, from Development Flexibility at the low end to Product Complexity at the high end: Development Flexibility (FLEX), Team Cohesion (TEAM), Develop for Reuse (RUSE), Precedentedness (PREC), Architecture and Risk Resolution (RESL), Platform Experience (PEXP), Data Base Size (DATA), Required Development Schedule (SCED), Language and Tools Experience (LTEX), Process Maturity (PMAT), Storage Constraint (STOR), Use of Software Tools (TOOL), Platform Volatility (PVOL), Applications Experience (AEXP), Multi-Site Development (SITE), Documentation Match to Life Cycle Needs (DOCU), Required Software Reliability (RELY), Personnel Continuity (PCON), Time Constraint (TIME), Programmer Capability (PCAP), Analyst Capability (ACAP), Product Complexity (CPLX).

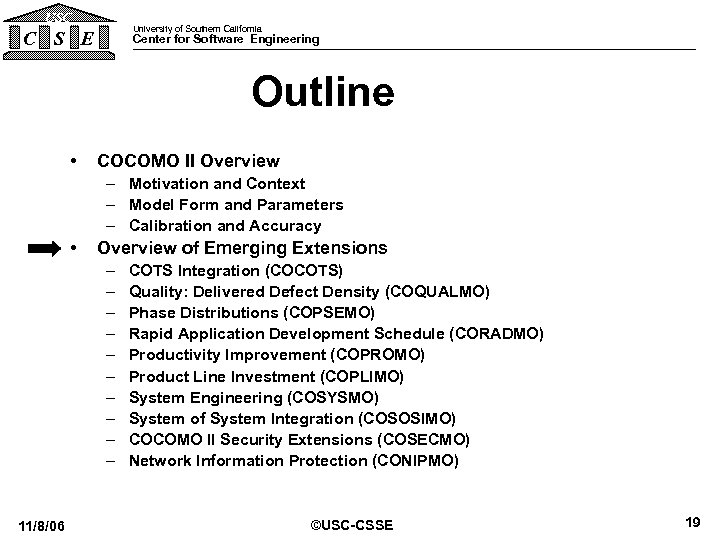

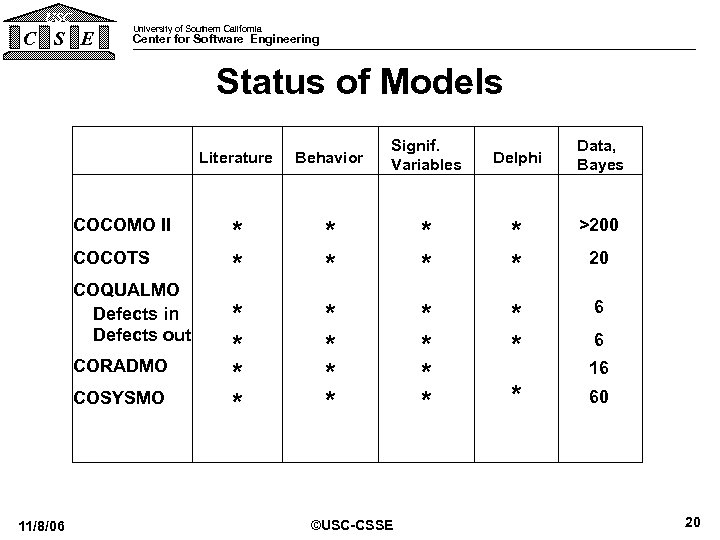

Status of Models
Figure: status of COCOMO II, COCOTS, COQUALMO (defects in, defects out), CORADMO, and COSYSMO with respect to Literature, Behavior, Significant Variables, Delphi, and Data/Bayes calibration.

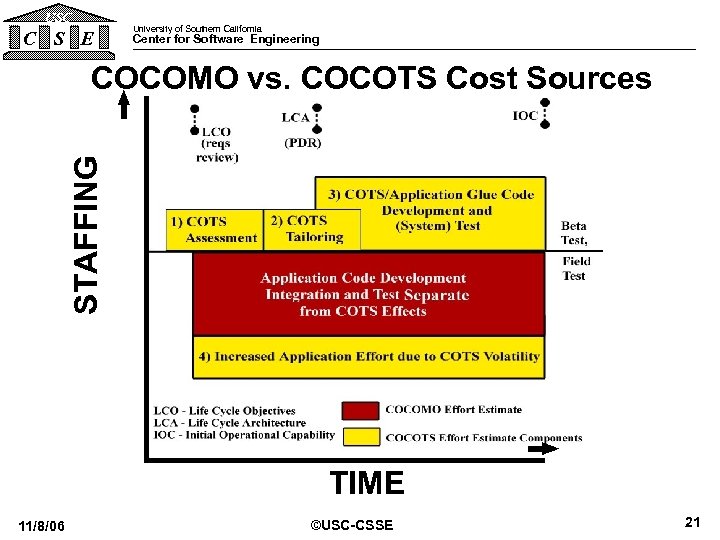

COCOMO vs. COCOTS Cost Sources
Figure: staffing versus time, comparing the cost sources covered by COCOMO and by COCOTS.

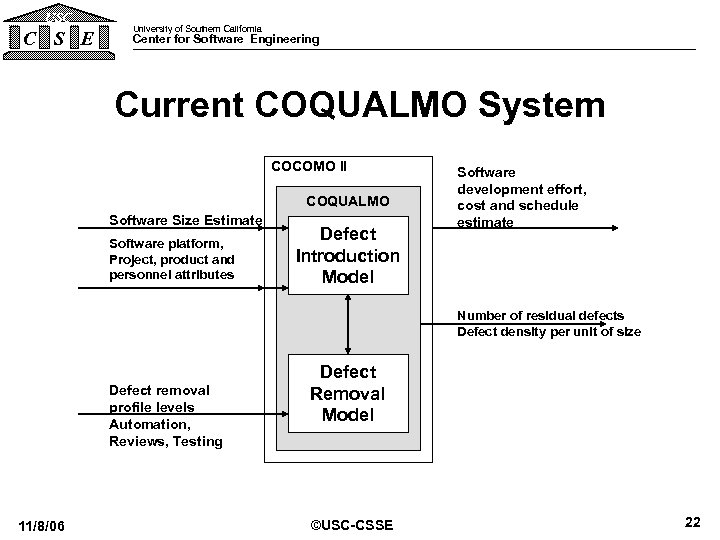

Current COQUALMO System
• Inputs: software size estimate; software platform, project, product, and personnel attributes; defect removal profile levels (automation, reviews, testing)
• COCOMO II uses the size estimate and attributes to produce the software development effort, cost, and schedule estimate
• COQUALMO's Defect Introduction Model (driven by size and attributes) and Defect Removal Model (driven by the removal profile levels) produce the number of residual defects and the defect density per unit of size
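In the COQUALMO literature the Defect Removal Model is commonly stated as: residual defects of each artifact type (requirements, design, code) equal the defects introduced times the product of (1 − DRF) over the three removal profiles, where DRF is the defect removal fraction for that profile's rating level. A minimal Python sketch under that assumption; the DRF numbers are hypothetical rather than calibrated values, and the per-artifact calibration constant is dropped.

```python
import math

# The three defect removal profiles named on the slide.
PROFILES = ("automated_analysis", "peer_reviews", "execution_testing")


def residual_defects(introduced: dict, drf: dict) -> dict:
    """Residual defects per artifact type: introduced * product of (1 - DRF).

    introduced: defects introduced per artifact type, e.g. {"code": 100}.
    drf: drf[artifact][profile] = fraction (0..1) of defects that profile removes.
    COQUALMO's per-artifact calibration constant is omitted (taken as 1).
    """
    return {
        artifact: count * math.prod(1.0 - drf[artifact][p] for p in PROFILES)
        for artifact, count in introduced.items()
    }


if __name__ == "__main__":
    # Hypothetical removal fractions for code defects.
    code_drf = {"automated_analysis": 0.10, "peer_reviews": 0.50, "execution_testing": 0.50}
    print(residual_defects({"code": 100}, {"code": code_drf}))
```

Dividing the total residual defects by size in KSLOC gives delivered defects per KSLOC, the quantity plotted in the defect removal estimates chart.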

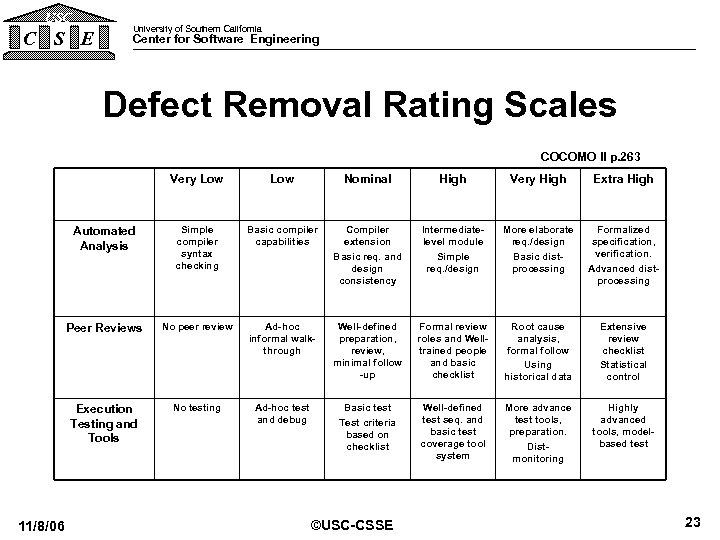

Defect Removal Rating Scales (COCOMO II, p. 263)

| | Very Low | Low | Nominal | High | Very High | Extra High |
|---|---|---|---|---|---|---|
| Automated Analysis | Simple compiler syntax checking | Basic compiler capabilities | Compiler extension; basic req. and design consistency | Intermediate-level module; simple req./design | More elaborate req./design; basic dist-processing | Formalized specification, verification; advanced dist-processing |
| Peer Reviews | No peer review | Ad-hoc informal walkthrough | Well-defined preparation, review, minimal follow-up | Formal review roles, well-trained people, and basic checklist | Root cause analysis, formal follow-up; using historical data | Extensive review checklist; statistical control |
| Execution Testing and Tools | No testing | Ad-hoc test and debug | Basic test; test criteria based on checklist | Well-defined test seq. and basic test coverage tool system | More advanced test tools, preparation; dist-monitoring | Highly advanced tools, model-based test |

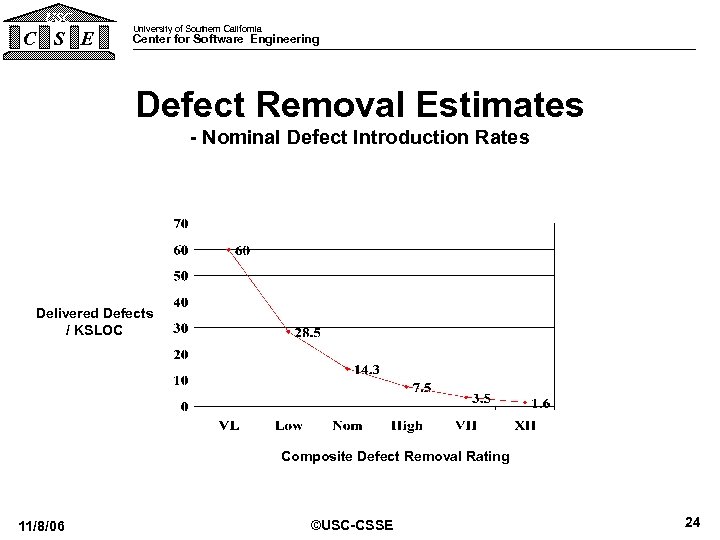

Defect Removal Estimates: Nominal Defect Introduction Rates
Figure: delivered defects per KSLOC versus composite defect removal rating.

COCOMO II RAD Extension (CORADMO)
• COCOMO II takes the COCOMO II cost drivers (except SCED) and language level, experience, ... and produces baseline effort and schedule
• Phase Distributions (COPSEMO) turn these into effort and schedule by stage
• The RAD Extension, driven by RVHL, DPRS, CLAB, RESL, PPOS, and RCAP, produces RAD effort and schedule by phase

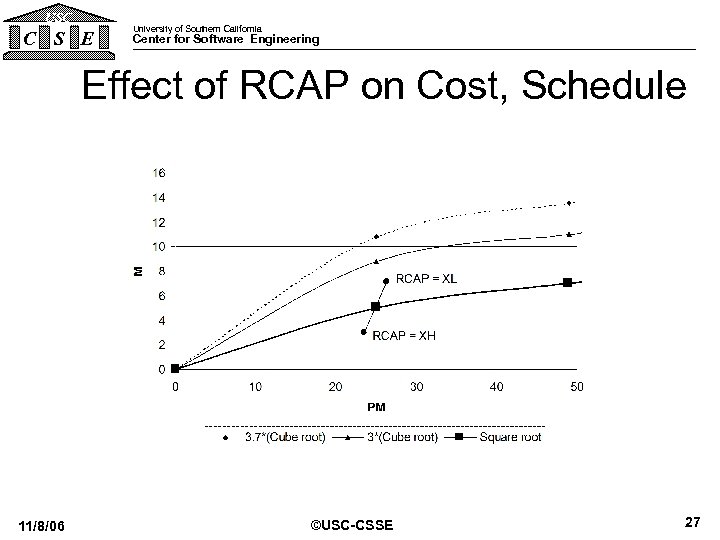

Effect of RCAP on Cost, Schedule

COPROMO (Productivity) Model
• Uses the COCOMO II model and extensions as an assessment framework
  – Well calibrated to 161 projects for effort and schedule
  – Subset of 106 1990s projects used as the current-practice baseline
  – Extensions for Rapid Application Development formulated
• Determines the impact of technology investments on model parameter settings
• Uses these settings in the models to assess the impact of technology investments on cost and schedule
  – Effort used as a proxy for cost
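The assessment step can be pictured as re-running the effort equation with the parameter settings a technology investment is expected to change. A minimal Python sketch, assuming the COCOMO II.2000 constant 2.94 and treating effort as the cost proxy; the function names and the TOOL/PCAP values are hypothetical illustrations, not COPROMO's actual parameter data.

```python
import math


def effort_pm(size_ksloc: float, exponent: float, multipliers: dict) -> float:
    """COCOMO II-style effort: 2.94 * Size^exponent * product of effort multipliers."""
    return 2.94 * size_ksloc ** exponent * math.prod(multipliers.values())


def investment_effect(size_ksloc: float, exponent: float, baseline: dict,
                      changed: dict, new_exponent=None) -> float:
    """Fractional effort reduction when `changed` multiplier settings replace `baseline`.

    new_exponent lets an investment that improves a scale factor (e.g. PMAT)
    also change the scaling exponent; by default the exponent is unchanged.
    """
    before = effort_pm(size_ksloc, exponent, baseline)
    after_exp = exponent if new_exponent is None else new_exponent
    after = effort_pm(size_ksloc, after_exp, {**baseline, **changed})
    return 1.0 - after / before


if __name__ == "__main__":
    # Hypothetical: a tools investment moves TOOL from 1.00 to 0.90.
    baseline = {"TOOL": 1.00, "PCAP": 1.00}
    print(f"{investment_effect(100.0, 1.10, baseline, {'TOOL': 0.90}):.0%} effort reduction")
```

Multiplier changes give the same percentage saving at any size, whereas an exponent change (via a scale factor) pays off more on larger projects.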

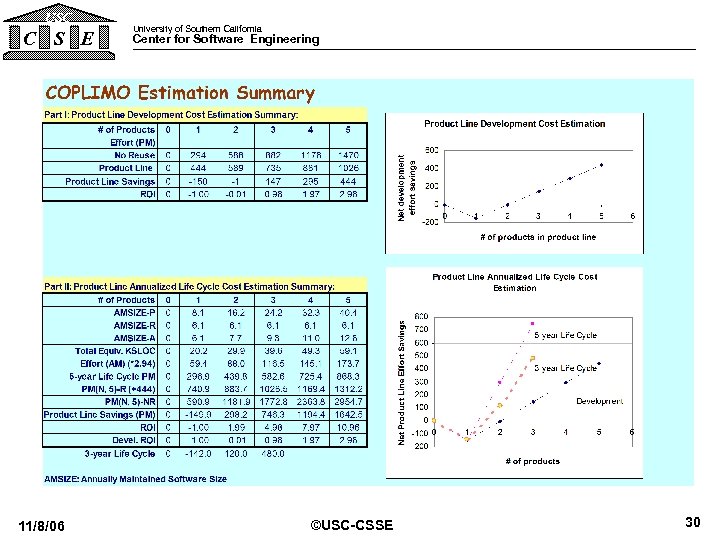

The COPLIMO Model: Constructive Product Line Investment Model
• Based on the COCOMO II software cost model
  – Statistically calibrated to 161 projects, representing 18 diverse organizations
• Based on standard software reuse economic terms
  – RCR: Relative cost of reuse
  – RCWR: Relative cost of writing for reuse
• Avoids overestimation
  – Avoids applying RCWR to non-reused components
  – Adds life-cycle cost savings
• Provides experience-based default parameter values
• Simple Excel spreadsheet model
  – Easy to modify, extend, interoperate
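A deliberately simplified Python sketch of the RCR/RCWR trade-off, not the COPLIMO spreadsheet itself. It assumes every product has the same standalone development cost; a fixed fraction of each product is built once for reuse at RCWR times normal cost and then reused in every later product at RCR times normal cost, while the product-unique part is always built new (so RCWR is never charged to non-reused components). The function name and parameter values are hypothetical, and life-cycle savings are not modeled.

```python
def product_line_savings(n_products: int, standalone_pm: float,
                         reusable_fraction: float, rcwr: float, rcr: float) -> float:
    """Effort saved by a product line versus building n_products standalone products.

    standalone_pm: development effort of one product built without reuse.
    reusable_fraction: share of each product covered by reusable assets (0..1).
    rcwr: relative cost of writing for reuse (applied once, to the reusable share).
    rcr: relative cost of reuse (applied to the reusable share of later products).
    """
    if n_products < 1 or not 0.0 <= reusable_fraction <= 1.0:
        raise ValueError("need n_products >= 1 and 0 <= reusable_fraction <= 1")
    unique = 1.0 - reusable_fraction
    first = standalone_pm * (unique + rcwr * reusable_fraction)
    later = standalone_pm * (unique + rcr * reusable_fraction)
    with_product_line = first + (n_products - 1) * later
    without_product_line = n_products * standalone_pm
    return without_product_line - with_product_line


if __name__ == "__main__":
    # Hypothetical: 4 products of 100 PM each, 60% of each product reusable.
    print(product_line_savings(4, 100.0, 0.6, rcwr=1.5, rcr=0.2))
```

With these values the product line costs 130 + 3 × 52 = 286 PM instead of 400 PM, a saving of 114 PM; with a single product the extra cost of writing for reuse is a 30 PM loss.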

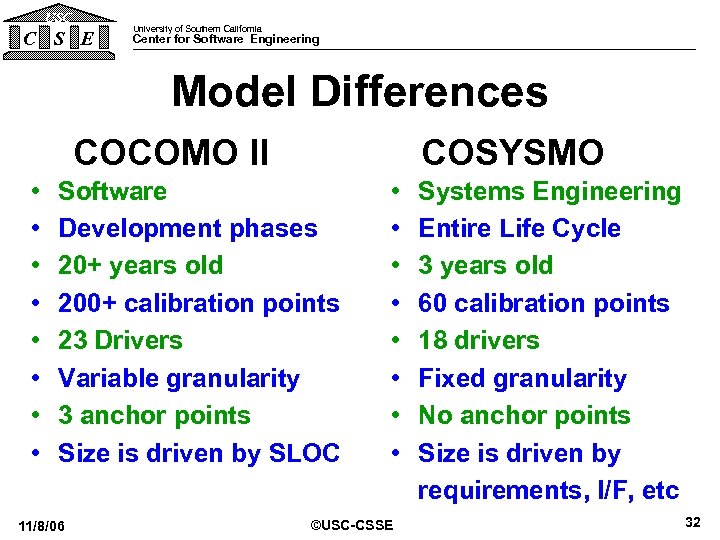

Model Differences

| COCOMO II | COSYSMO |
|---|---|
| Software development phases | Systems engineering, entire life cycle |
| 20+ years old | 3 years old |
| 200+ calibration points | 60 calibration points |
| 23 drivers | 18 drivers |
| Variable granularity | Fixed granularity |
| 3 anchor points | No anchor points |
| Size is driven by SLOC | Size is driven by requirements, interfaces, etc. |

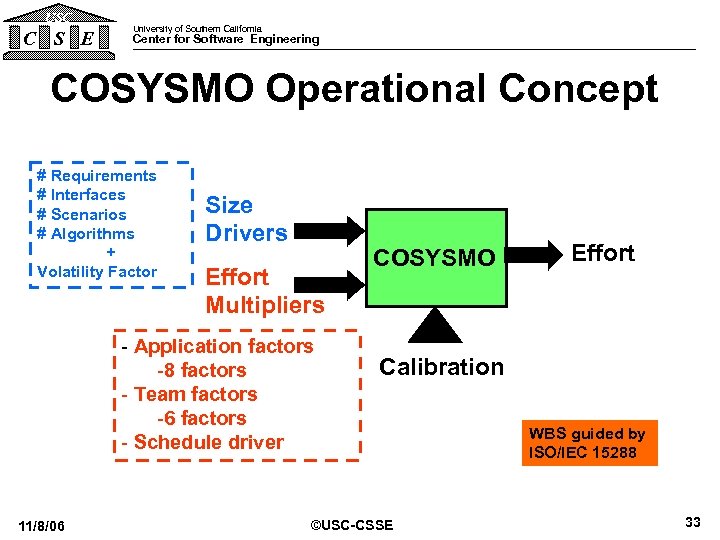

COSYSMO Operational Concept
• Size drivers: # Requirements, # Interfaces, # Scenarios, # Algorithms, plus a volatility factor
• Effort multipliers
  – Application factors (8 factors)
  – Team factors (6 factors)
  – Schedule driver
• COSYSMO combines the size drivers and effort multipliers, with calibration, to produce effort
• WBS guided by ISO/IEC 15288

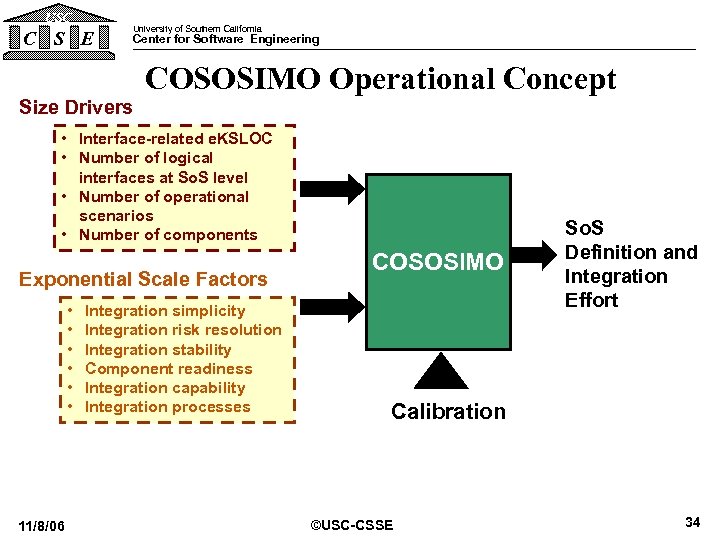

COSOSIMO Operational Concept
• Size drivers
  – Interface-related eKSLOC
  – Number of logical interfaces at SoS level
  – Number of operational scenarios
  – Number of components
• Exponential scale factors
  – Integration simplicity
  – Integration risk resolution
  – Integration stability
  – Component readiness
  – Integration capability
  – Integration processes
• Output (with calibration): SoS definition and integration effort

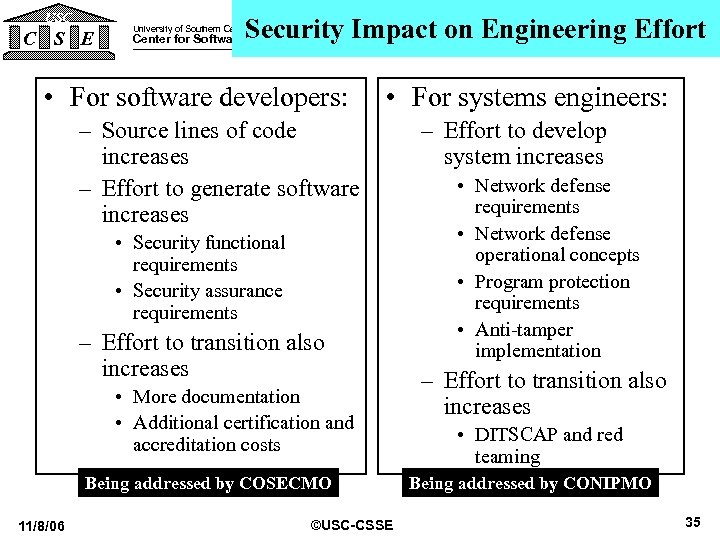

Security Impact on Engineering Effort

- For software developers:
  - Source lines of code increase
  - Effort to generate software increases
    - Security functional requirements
    - Security assurance requirements
  - Effort to transition also increases
    - More documentation
    - Additional certification and accreditation costs
  - Being addressed by COSECMO
- For systems engineers:
  - Effort to develop the system increases
    - Network defense requirements
    - Network defense operational concepts
    - Program protection requirements
    - Anti-tamper implementation
  - Effort to transition also increases
    - DITSCAP and red teaming
  - Being addressed by CONIPMO

Backup Charts

Purpose of COCOMO II

To help people reason about the cost and schedule implications of their software decisions.

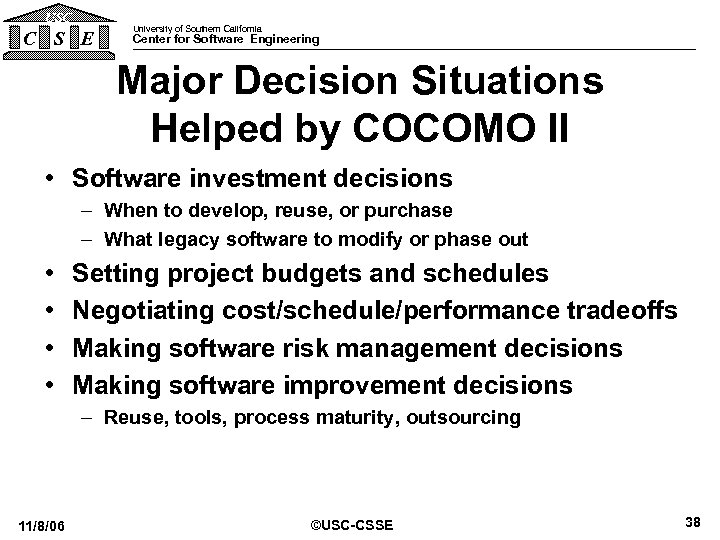

Major Decision Situations Helped by COCOMO II

- Software investment decisions
  - When to develop, reuse, or purchase
  - What legacy software to modify or phase out
- Setting project budgets and schedules
- Negotiating cost/schedule/performance tradeoffs
- Making software risk management decisions
- Making software improvement decisions
  - Reuse, tools, process maturity, outsourcing

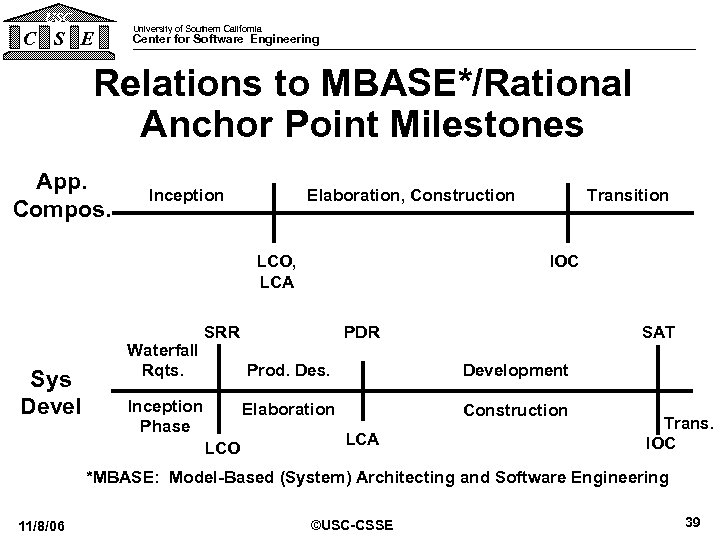

Relations to MBASE*/Rational Anchor Point Milestones

Figure: COCOMO II Application Composition and System Development stages set against the MBASE/Rational phases (Inception, Elaboration, Construction, Transition) with anchor points LCO, LCA, and IOC, and against the waterfall phases (Rqts., Prod. Des., Development, Trans.) with reviews SRR, PDR, and SAT.

*MBASE: Model-Based (System) Architecting and Software Engineering

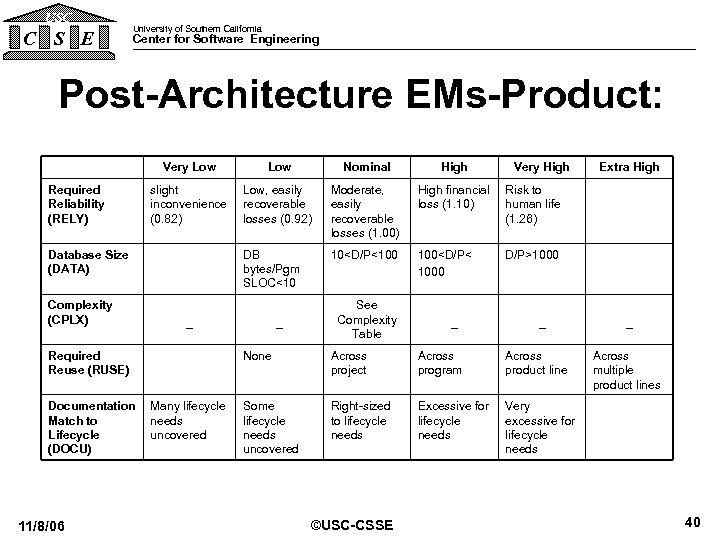

Post-Architecture EMs-Product

| Driver | Very Low | Low | Nominal | High | Very High |
|---|---|---|---|---|---|
| Required Reliability (RELY) | Slight inconvenience (0.82) | Low, easily recoverable losses (0.92) | Moderate, easily recoverable losses (1.00) | High financial loss (1.10) | Risk to human life (1.26) |
| DB bytes/Pgm SLOC | | < 10 | 10 | | |
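
Each rating maps to a multiplicative effort adjustment. A minimal sketch using the RELY values from this table, assuming every other driver stays at Nominal (1.00), is below; the function name is illustrative.

```python
# RELY effort multipliers from the Post-Architecture product table.
RELY = {
    "very_low": 0.82,   # slight inconvenience
    "low": 0.92,        # low, easily recoverable losses
    "nominal": 1.00,    # moderate, easily recoverable losses
    "high": 1.10,       # high financial loss
    "very_high": 1.26,  # risk to human life
}


def apply_rely(nominal_effort_pm: float, rating: str) -> float:
    """Scale a nominal effort estimate (person-months) by the RELY multiplier."""
    return nominal_effort_pm * RELY[rating]


print(apply_rely(100.0, "very_high"))
```

The spread is sizable: a Very High rating costs about 1.26 / 0.82 ≈ 1.54 times as much effort as a Very Low one, all else equal.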

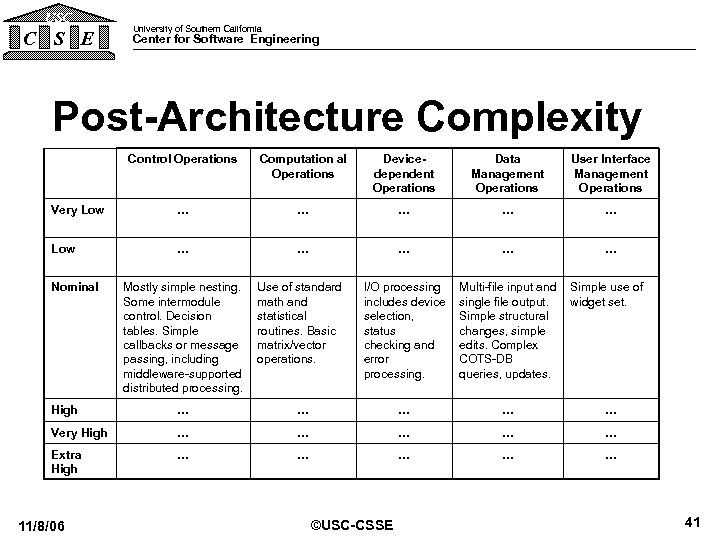

Post-Architecture Complexity

| Rating | Control Operations | Computational Operations | Device-dependent Operations | Data Management Operations | User Interface Management Operations |
|---|---|---|---|---|---|
| Very Low | … | … | … | … | … |
| Nominal | Mostly simple nesting. Some intermodule control. Decision tables. Simple callbacks or message passing, including middleware-supported distributed processing. | Use of standard math and statistical routines. Basic matrix/vector operations. | I/O processing includes device selection, status checking and error processing. | Multi-file input and single file output. Simple structural changes, simple edits. Complex COTS-DB queries, updates. | Simple use of widget set. |
| High | … | … | … | … | … |
| Very High | … | … | … | … | … |
| Extra High | … | … | … | … | … |

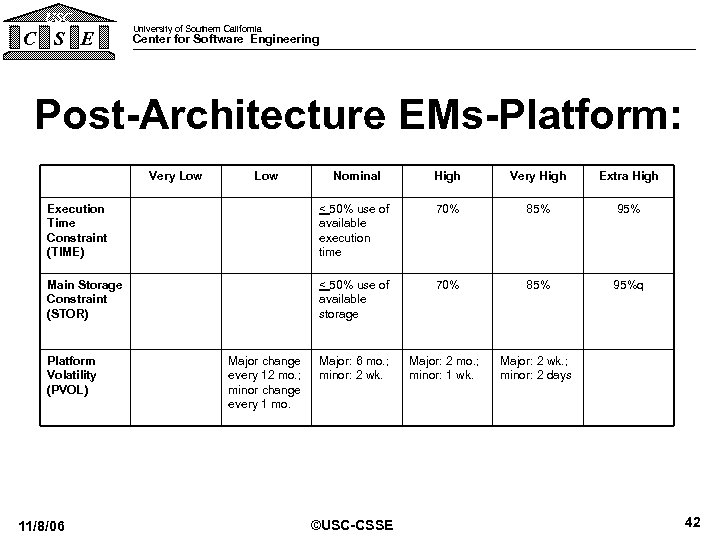

Post-Architecture EMs-Platform

| Driver | Very Low | Low | Nominal | High | Very High | Extra High |
|---|---|---|---|---|---|---|
| Execution Time Constraint (TIME) | | | < 50% use of available execution time | 70% | 85% | 95% |
| Main Storage Constraint (STOR) | | | < 50% use of available storage | 70% | 85% | 95% |
| Platform Volatility (PVOL) | | Major change every 12 mo.; minor change every 1 mo. | Major: 6 mo.; minor: 2 wk. | Major: 2 mo.; minor: 1 wk. | Major: 2 wk.; minor: 2 days | |

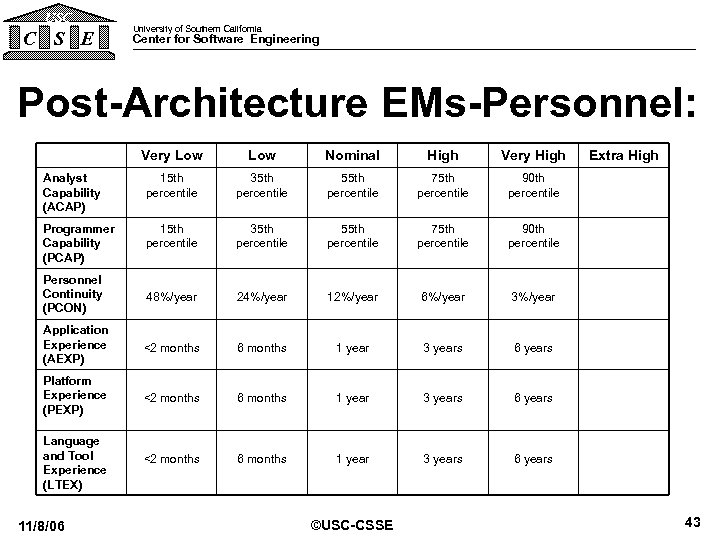

Post-Architecture EMs-Personnel

| Driver | Very Low | Low | Nominal | High | Very High | Extra High |
|---|---|---|---|---|---|---|
| Analyst Capability (ACAP) | 15th percentile | 35th percentile | 55th percentile | 75th percentile | 90th percentile | |
| Programmer Capability (PCAP) | 15th percentile | 35th percentile | 55th percentile | 75th percentile | 90th percentile | |
| Personnel Continuity (PCON) | 48%/year | 24%/year | 12%/year | 6%/year | 3%/year | |
| Application Experience (AEXP) | < 2 months | 6 months | 1 year | 3 years | 6 years | |
| Platform Experience (PEXP) | < 2 months | 6 months | 1 year | 3 years | 6 years | |
| Language and Tool Experience (LTEX) | < 2 months | 6 months | 1 year | 3 years | 6 years | |

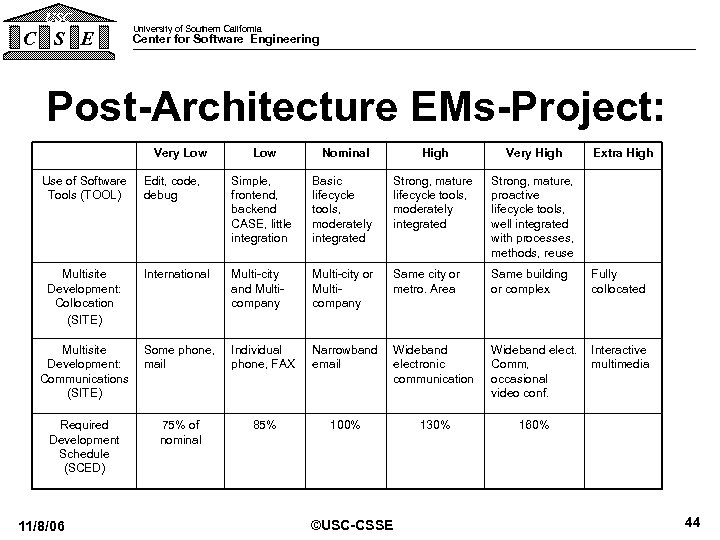

Post-Architecture EMs-Project

| Driver | Very Low | Low | Nominal | High | Very High | Extra High |
|---|---|---|---|---|---|---|
| Use of Software Tools (TOOL) | Edit, code, debug | Simple, frontend, backend CASE, little integration | Basic lifecycle tools, moderately integrated | | Strong, mature, proactive lifecycle tools, well integrated with processes, methods, reuse | |
| Multisite Development: Collocation (SITE) | International | Multi-city and multi-company | Multi-city or multi-company | Same city or metro. area | Same building or complex | Fully collocated |
| Multisite Development: Communications (SITE) | Some phone, mail | Individual phone, FAX | Narrowband email | Wideband electronic communication | Wideband elect. comm., occasional video conf. | Interactive multimedia |
| Required Development Schedule (SCED) | 75% of nominal | 85% | 100% | 130% | 160% | |

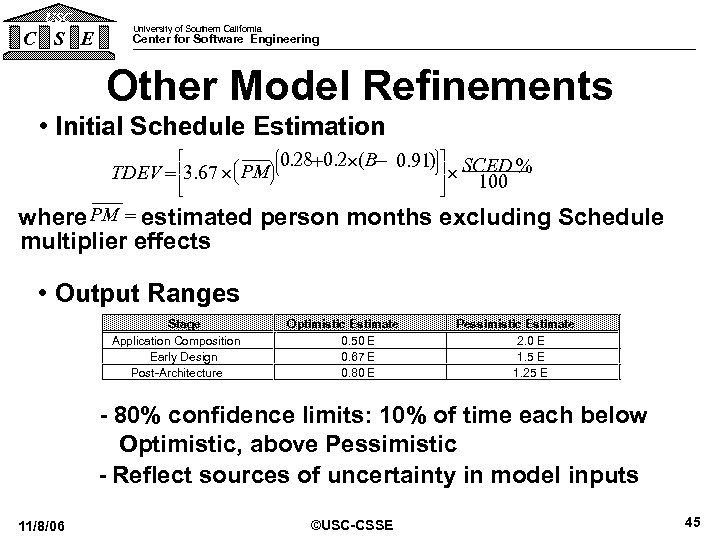

Other Model Refinements

Initial schedule estimation:

$$TDEV = \left[3.67 \times PM^{\,0.28 + 0.2 \times (B - 0.91)}\right] \times \frac{SCED\%}{100}$$

where PM is the estimated person-months excluding schedule multiplier effects, and B is the scale exponent of the effort equation.

Output ranges (E is the model's point estimate):

| Stage | Optimistic Estimate | Pessimistic Estimate |
|---|---|---|
| Application Composition | 0.50 E | 2.0 E |
| Early Design | 0.67 E | 1.5 E |
| Post-Architecture | 0.80 E | 1.25 E |

- 80% confidence limits: 10% of the time each below Optimistic and above Pessimistic
- Reflect sources of uncertainty in model inputs
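
The schedule equation and output-range table can be sketched in Python as follows. Function names are illustrative; `b` is the effort-equation scale exponent and `sced_pct` is the SCED percentage (100 = nominal, 75 = the most compressed SCED rating in the project table).

```python
# Optimistic / pessimistic multipliers from the output-ranges table.
RANGES = {
    "application_composition": (0.50, 2.0),
    "early_design": (0.67, 1.5),
    "post_architecture": (0.80, 1.25),
}


def tdev_months(pm: float, b: float, sced_pct: float = 100.0) -> float:
    """Initial schedule estimate in months.

    pm: person-months excluding schedule-multiplier effects.
    b: scale exponent of the effort equation.
    sced_pct: required schedule as a percentage of nominal.
    """
    exponent = 0.28 + 0.2 * (b - 0.91)
    return 3.67 * pm ** exponent * sced_pct / 100.0


def estimate_range(estimate: float, stage: str) -> tuple[float, float]:
    """Return (optimistic, pessimistic) bounds around a point estimate E."""
    low, high = RANGES[stage]
    return low * estimate, high * estimate


months = tdev_months(pm=100.0, b=1.10)
print(round(months, 1), estimate_range(months, "post_architecture"))
```

Because the exponent grows with `b`, projects with larger diseconomies of scale also get proportionally longer schedules.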

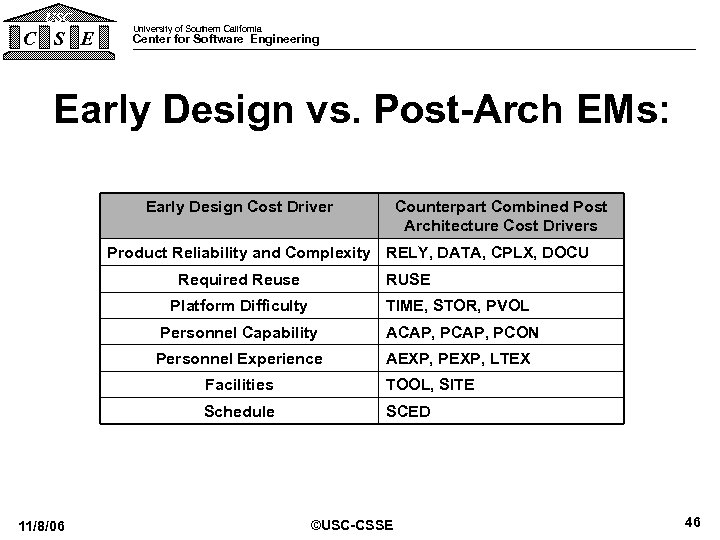

Early Design vs. Post-Architecture EMs

| Early Design Cost Driver | Counterpart Combined Post-Architecture Cost Drivers |
|---|---|
| Product Reliability and Complexity (RCPX) | RELY, DATA, CPLX, DOCU |
| Required Reuse (RUSE) | RUSE |
| Platform Difficulty (PDIF) | TIME, STOR, PVOL |
| Personnel Capability (PERS) | ACAP, PCAP, PCON |
| Personnel Experience (PREX) | AEXP, PEXP, LTEX |
| Facilities (FCIL) | TOOL, SITE |
| Schedule (SCED) | SCED |

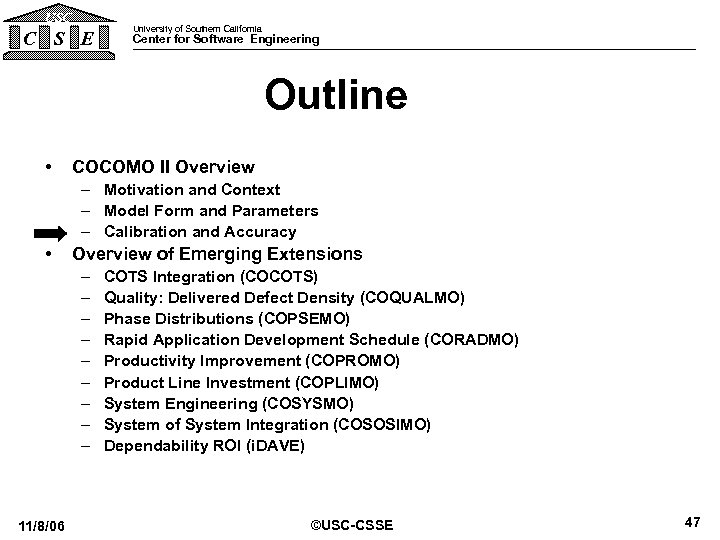

Outline

- COCOMO II Overview
  - Motivation and Context
  - Model Form and Parameters
  - Calibration and Accuracy
- Overview of Emerging Extensions
  - COTS Integration (COCOTS)
  - Quality: Delivered Defect Density (COQUALMO)
  - Phase Distributions (COPSEMO)
  - Rapid Application Development Schedule (CORADMO)
  - Productivity Improvement (COPROMO)
  - Product Line Investment (COPLIMO)
  - System Engineering (COSYSMO)
  - System-of-Systems Integration (COSOSIMO)
  - Dependability ROI (iDAVE)

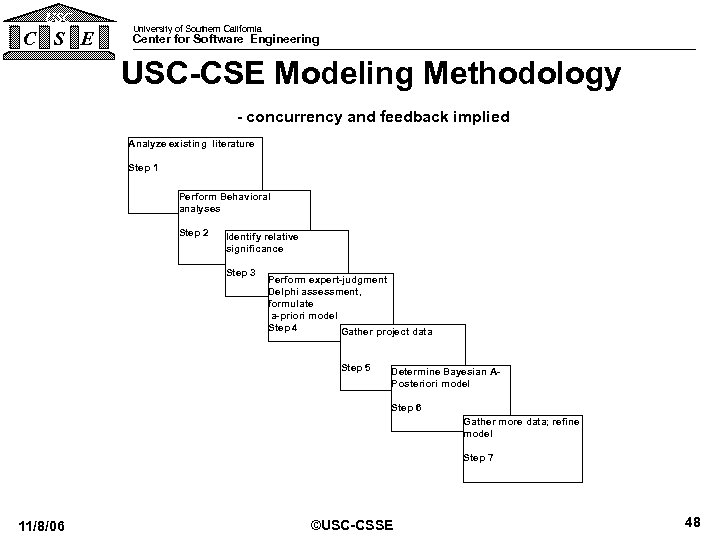

USC-CSE Modeling Methodology (concurrency and feedback implied)

1. Analyze existing literature
2. Perform behavioral analyses
3. Identify relative significance
4. Perform expert-judgment Delphi assessment; formulate a-priori model
5. Gather project data
6. Determine Bayesian a-posteriori model
7. Gather more data; refine model

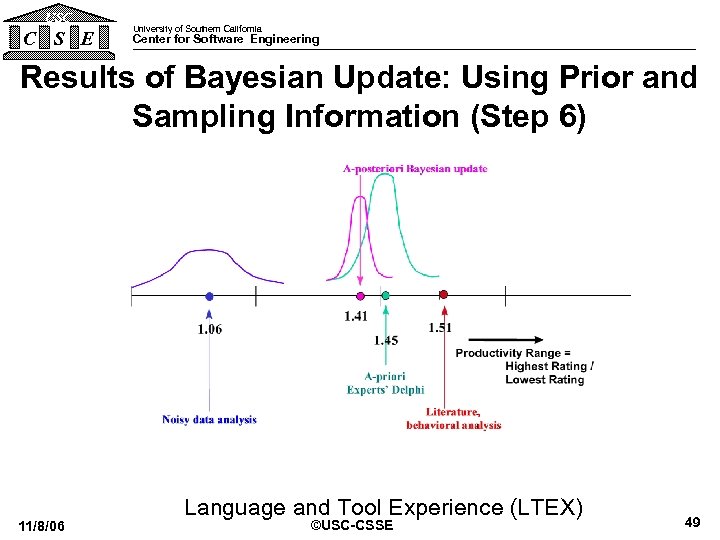

Results of Bayesian Update: Using Prior and Sampling Information (Step 6)

Figure: Bayesian update for the Language and Tool Experience (LTEX) driver.
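
The update combines an expert-judgment (Delphi) prior with a data-driven (sampling) estimate. A minimal sketch for a single parameter, assuming both sources are summarized as normal distributions with a mean and a variance, is a precision-weighted average: whichever source has the smaller variance pulls the posterior toward itself. The numbers are hypothetical, not the LTEX values in the figure.

```python
from typing import NamedTuple


class Estimate(NamedTuple):
    mean: float
    variance: float


def bayesian_update(prior: Estimate, sample: Estimate) -> Estimate:
    """Combine two normal estimates of one parameter by precision weighting.

    Precision is 1 / variance. The posterior mean is the precision-weighted
    average of the two means; the posterior precision is their sum.
    """
    w_prior = 1.0 / prior.variance
    w_sample = 1.0 / sample.variance
    mean = (w_prior * prior.mean + w_sample * sample.mean) / (w_prior + w_sample)
    return Estimate(mean, 1.0 / (w_prior + w_sample))


# Hypothetical: a tight expert prior and a noisier regression estimate.
delphi = Estimate(mean=1.40, variance=0.01)
regression = Estimate(mean=1.20, variance=0.04)
print(bayesian_update(delphi, regression))
```

With these inputs the posterior mean (1.36) sits closer to the more precise Delphi value, and its variance is smaller than either source's.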

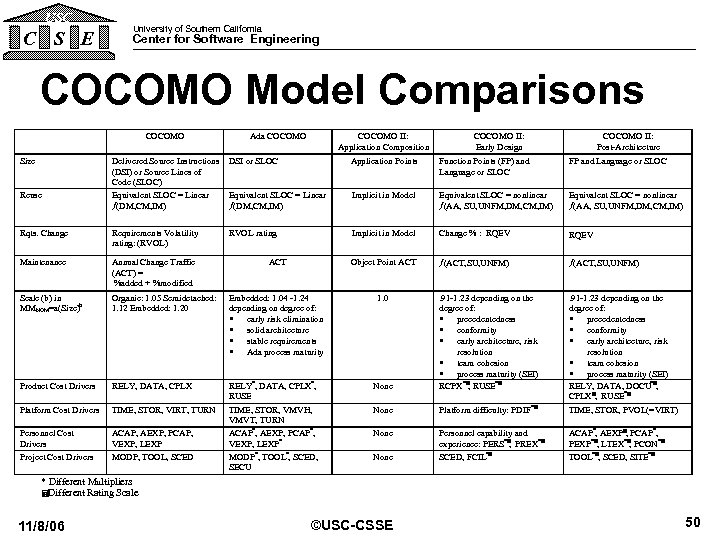

COCOMO Model Comparisons

| | COCOMO | Ada COCOMO | COCOMO II: Application Composition | COCOMO II: Early Design | COCOMO II: Post-Architecture |
|---|---|---|---|---|---|
| Size | Delivered Source Instructions (DSI) or Source Lines of Code (SLOC) | DSI or SLOC | Application Points | Function Points (FP) and Language or SLOC | FP and Language or SLOC |
| Reuse | Equivalent SLOC = Linear f(DM, CM, IM) | Equivalent SLOC = Linear f(DM, CM, IM) | Implicit in Model | Equivalent SLOC = nonlinear f(AA, SU, UNFM, DM, CM, IM) | Equivalent SLOC = nonlinear f(AA, SU, UNFM, DM, CM, IM) |
| Rqts. Change | Requirements Volatility rating (RVOL) | RVOL rating | Implicit in Model | Change %: RQEV | Change %: RQEV |
| Maintenance | Annual Change Traffic (ACT) = %added + %modified | ACT | Object Point ACT | f(ACT, SU, UNFM) | f(ACT, SU, UNFM) |
| Scale (b) in MM_NOM = a(Size)^b | Organic: 1.05; Semidetached: 1.12; Embedded: 1.20 | Embedded: 1.04-1.24 depending on degree of: early risk elimination, solid architecture, stable requirements, Ada process maturity | 1.0 | 0.91-1.23 depending on the degree of: precedentedness, conformity, early architecture/risk resolution, team cohesion, process maturity (SEI) | 0.91-1.23 depending on the degree of: precedentedness, conformity, early architecture/risk resolution, team cohesion, process maturity (SEI) |
| Product Cost Drivers | RELY, DATA, CPLX | RELY\*, DATA, CPLX\*, RUSE | None | RCPX\*=, RUSE\*= | RELY, DATA, DOCU\*=, CPLX=, RUSE\*= |
| Platform Cost Drivers | TIME, STOR, VIRT, TURN | TIME, STOR, VMVH, VMVT, TURN | None | Platform difficulty: PDIF\*= | TIME, STOR, PVOL(=VIRT) |
| Personnel Cost Drivers | ACAP, AEXP, PCAP, VEXP, LEXP | ACAP\*, AEXP, PCAP\*, VEXP, LEXP\* | None | Personnel capability and experience: PERS\*=, PREX\*= | ACAP\*, AEXP=, PCAP\*, PEXP\*=, LTEX\*=, PCON\*= |
| Project Cost Drivers | MODP, TOOL, SCED | MODP\*, TOOL\*, SCED, SECU | None | SCED, FCIL\*= | TOOL\*=, SCED, SITE\*= |

Key: an asterisk (\*) marks different multipliers; an equals sign (=) marks a different rating scale.
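
The scale exponent b in MM_NOM = a(Size)^b determines how effort grows with size, independent of the constant a: scaling size by a factor k scales nominal effort by k^b. The short sketch below tabulates the effect of doubling size for the exponents in the comparison table; the labels for the COCOMO II range endpoints are illustrative.

```python
# Nominal-effort scale exponents b from the comparison table.
EXPONENTS = {
    "COCOMO organic": 1.05,
    "COCOMO semidetached": 1.12,
    "COCOMO embedded": 1.20,
    "COCOMO II low end": 0.91,
    "COCOMO II high end": 1.23,
}


def growth_factor(b: float, size_ratio: float = 2.0) -> float:
    """Factor by which nominal effort grows when size grows by size_ratio.

    With MM_NOM = a * Size**b, the constant a cancels:
    MM(k * S) / MM(S) = k**b.
    """
    return size_ratio ** b


for name, b in EXPONENTS.items():
    print(f"{name:22s} b={b:.2f}  doubling size -> x{growth_factor(b):.2f} effort")
```

An exponent below 1.0 (the COCOMO II low end) yields economies of scale; values above 1.0 yield diseconomies.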

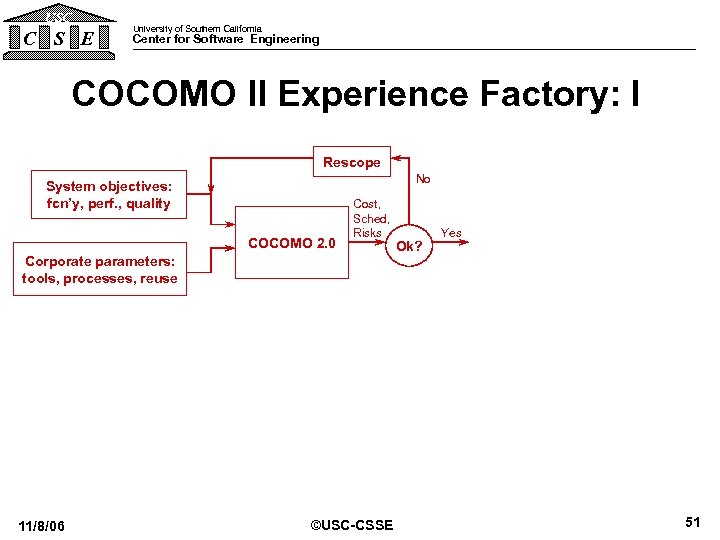

COCOMO II Experience Factory: I

Flowchart: system objectives (functionality, performance, quality) and corporate parameters (tools, processes, reuse) feed COCOMO 2.0, which produces cost, schedule, and risk estimates. If the estimates are not OK, rescope and re-run; if they are OK, proceed.

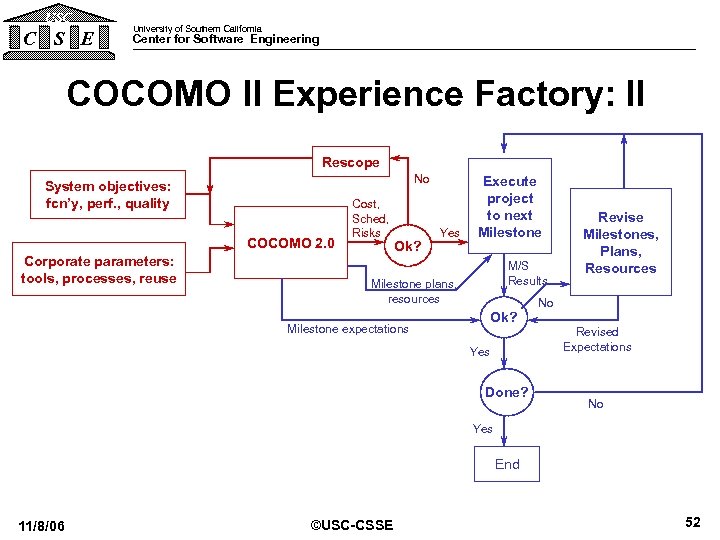

COCOMO II Experience Factory: II

Flowchart, extending part I: once the estimates are OK, the resulting milestone plans and resources set milestone expectations. Execute the project to the next milestone and compare the milestone (M/S) results with those expectations. If they are not OK, revise milestones, plans, and resources, producing revised expectations. If they are OK, check whether the project is done: if not, continue to the next milestone; if so, end.

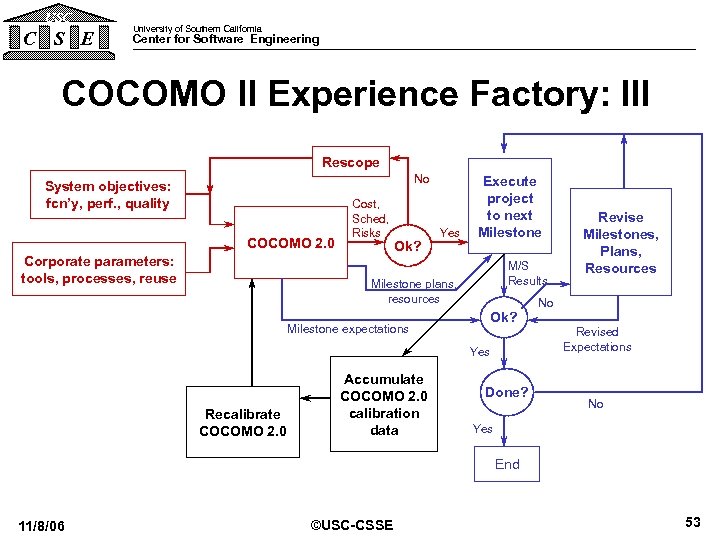

COCOMO II Experience Factory: III

Flowchart, extending part II: milestone results are also accumulated as COCOMO 2.0 calibration data, which are used to recalibrate COCOMO 2.0 for subsequent estimates.
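
A simple way to act on accumulated calibration data is to refit only the multiplicative constant A in an effort equation of the form PM = A × KSLOC^E × product of EMs, holding E and the multipliers fixed. The sketch below does this by least squares in log space, which reduces to averaging the per-project log residuals. The equation form follows COCOMO II; the fitting procedure, names, and sample data are assumptions for illustration, not a prescribed Experience Factory method.

```python
import math
from typing import NamedTuple


class Project(NamedTuple):
    ksloc: float       # size in thousands of SLOC
    actual_pm: float   # actual effort in person-months
    em_product: float  # product of effort multipliers for this project


def recalibrate_a(projects: list[Project], e: float) -> float:
    """Fit A in PM = A * KSLOC**E * prod(EM) by least squares in log space.

    With E and the multipliers fixed, the least-squares estimate of ln(A)
    is the mean of the per-project log residuals.
    """
    residuals = [
        math.log(p.actual_pm) - math.log(p.ksloc ** e * p.em_product)
        for p in projects
    ]
    return math.exp(sum(residuals) / len(residuals))


# Hypothetical historical data from completed projects.
history = [
    Project(ksloc=20.0, actual_pm=95.0, em_product=1.10),
    Project(ksloc=45.0, actual_pm=210.0, em_product=0.95),
    Project(ksloc=8.0, actual_pm=30.0, em_product=1.00),
]
print(f"recalibrated A = {recalibrate_a(history, e=1.10):.2f}")
```

Fitting in log space keeps large projects from dominating the fit and matches the multiplicative structure of the model.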

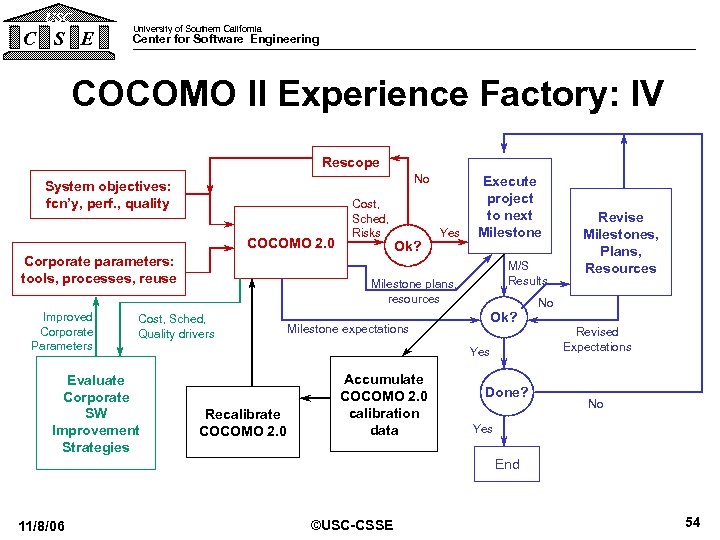

COCOMO II Experience Factory: IV

Flowchart, extending part III: the cost, schedule, and quality drivers from the recalibrated model are used to evaluate corporate software improvement strategies, which yield improved corporate parameters (tools, processes, reuse) that feed back into COCOMO 2.0.

New Glue Code Submodel Results

- Current calibration looking reasonably good
  - Excluding projects with very large or very small amounts of glue code (effort prediction):
    - [0.5 - 100 KLOC]: Pred(.30) = 9/17 = 53%
    - [2 - 100 KLOC]: Pred(.30) = 8/13 = 62%
  - For comparison, calibration results shown at ARR 2000:
    - [0.1 - 390 KLOC]: Pred(.30) = 4/13 = 31%
- Propose to revisit large, small, and anomalous projects
  - A few follow-up questions on categories of code and effort
    - Glue code vs. application code
    - Glue code effort vs. other sources
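
Pred(.30) is the fraction of projects whose estimate falls within 30% of the actual value. The sketch below computes it, assuming relative error is measured as |estimate - actual| / actual (the usual magnitude-of-relative-error convention); the final loop reproduces the slide's percentages from its hit ratios.

```python
def pred(estimates: list[float], actuals: list[float], level: float = 0.30) -> float:
    """Fraction of projects whose magnitude of relative error is <= level."""
    if len(estimates) != len(actuals) or not actuals:
        raise ValueError("need equal-length, non-empty lists")
    hits = sum(
        1 for est, act in zip(estimates, actuals)
        if abs(est - act) / act <= level
    )
    return hits / len(actuals)


# The slide reports hit ratios; rounding them gives its percentages.
for hits, n in [(9, 17), (8, 13), (4, 13)]:
    print(f"{hits}/{n} = {round(100 * hits / n)}%")
```

The loop prints 9/17 = 53%, 8/13 = 62%, and 4/13 = 31%, matching the slide.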

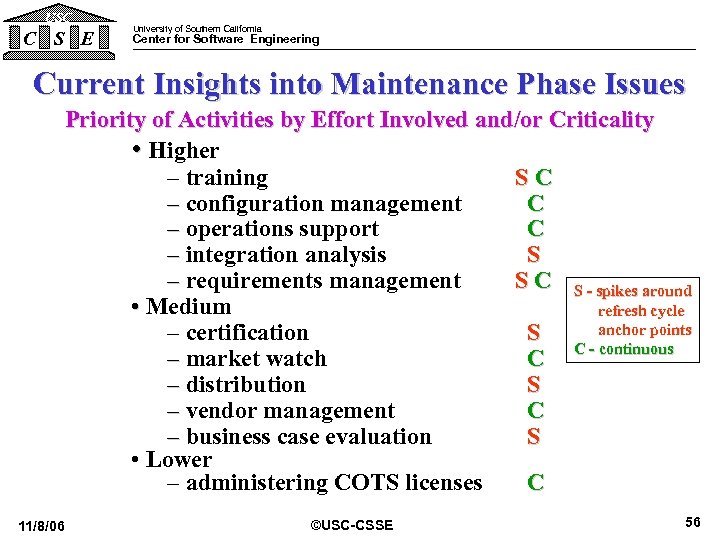

Current Insights into Maintenance Phase Issues

Priority of activities by effort involved and/or criticality (S = spikes around refresh cycle anchor points; C = continuous):

- Higher
  - Training (S, C)
  - Configuration management (C)
  - Operations support (C)
  - Integration analysis (S)
  - Requirements management (S, C)
- Medium
  - Certification (S)
  - Market watch (C)
  - Distribution (S)
  - Vendor management (C)
  - Business case evaluation (S)
- Lower
  - Administering COTS licenses (C)

RAD Context

- RAD is a critical competitive strategy
  - Market window; pace of change
- Non-RAD COCOMO II overestimates RAD schedules
  - Need opportunity-tree cost-schedule adjustment
  - Cube root model inappropriate for small RAD projects
- COCOMO II: $\text{Mo.} = 3.7\sqrt[3]{PM}$
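
To see why the cube-root form overestimates small RAD schedules, compare it with the square-root law used in the RCAP example (25 PM done by 5 people in 5 months, i.e. months = √PM). The sketch below tabulates both rules for a few effort levels and assumes nothing beyond those two formulas.

```python
import math


def cocomo_ii_months(pm: float) -> float:
    """Non-RAD COCOMO II schedule rule from the slide: Mo. = 3.7 * cube_root(PM)."""
    return 3.7 * pm ** (1.0 / 3.0)


def square_root_months(pm: float) -> float:
    """Square-root law from the RCAP example: M = sqrt(PM)."""
    return math.sqrt(pm)


for pm in (9, 25, 100, 1000):
    print(f"PM={pm:5d}  cube-root: {cocomo_ii_months(pm):5.1f} mo   "
          f"square-root: {square_root_months(pm):5.1f} mo")
```

The two rules agree only at PM = 3.7^6 ≈ 2,566; below that, the cube-root rule gives the longer schedule, and the gap widens as projects get smaller (about 10.8 vs. 5 months at 25 PM).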

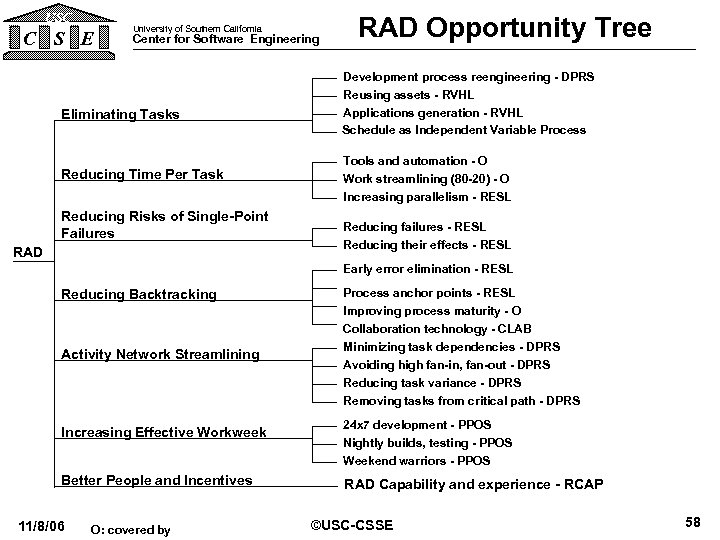

RAD Opportunity Tree

- Eliminating Tasks
  - Development process reengineering - DPRS
  - Reusing assets - RVHL
  - Applications generation - RVHL
  - Schedule as Independent Variable
- Reducing Time Per Task
  - Tools and automation - O
  - Work streamlining (80-20) - O
  - Increasing parallelism - RESL
- Reducing Risks of Single-Point Failures
  - Reducing failures - RESL
  - Reducing their effects - RESL
- Reducing Backtracking
  - Early error elimination - RESL
  - Process anchor points - RESL
  - Improving process maturity - O
  - Collaboration technology - CLAB
- Activity Network Streamlining
  - Minimizing task dependencies - DPRS
  - Avoiding high fan-in, fan-out - DPRS
  - Reducing task variance - DPRS
  - Removing tasks from critical path - DPRS
- Increasing Effective Workweek
  - 24x7 development - PPOS
  - Nightly builds, testing - PPOS
  - Weekend warriors - PPOS
- Better People and Incentives
  - RAD Capability and experience - RCAP

O: covered by

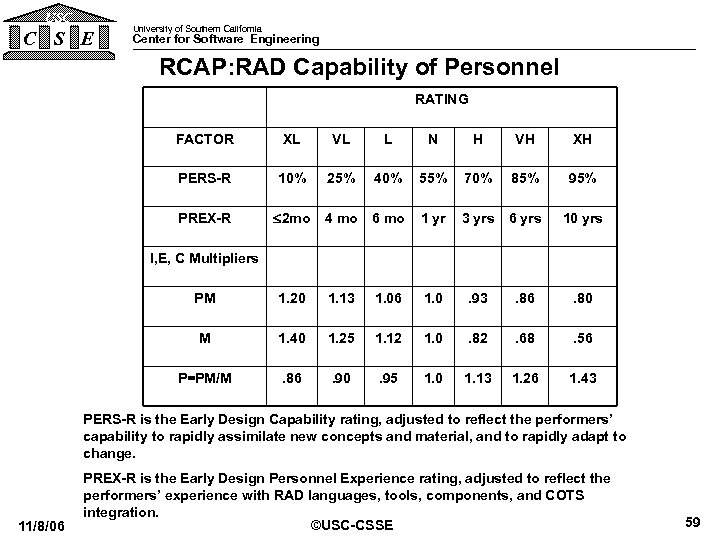

RCAP: RAD Capability of Personnel

| Rating | XL | VL | L | N | H | VH | XH |
|---|---|---|---|---|---|---|---|
| PERS-R | 10% | 25% | 40% | 55% | 70% | 85% | 95% |
| PREX-R | 2 mo | 4 mo | 6 mo | 1 yr | 3 yrs | 6 yrs | 10 yrs |
| I, E, C multiplier: PM | 1.20 | 1.13 | 1.06 | 1.0 | 0.93 | 0.86 | 0.80 |
| I, E, C multiplier: M | 1.40 | 1.25 | 1.12 | 1.0 | 0.82 | 0.68 | 0.56 |
| I, E, C multiplier: P = PM/M | 0.86 | 0.90 | 0.95 | 1.0 | 1.13 | 1.26 | 1.43 |

PERS-R is the Early Design Personnel Capability rating, adjusted to reflect the performers' capability to rapidly assimilate new concepts and material and to rapidly adapt to change.

PREX-R is the Early Design Personnel Experience rating, adjusted to reflect the performers' experience with RAD languages, tools, components, and COTS integration.

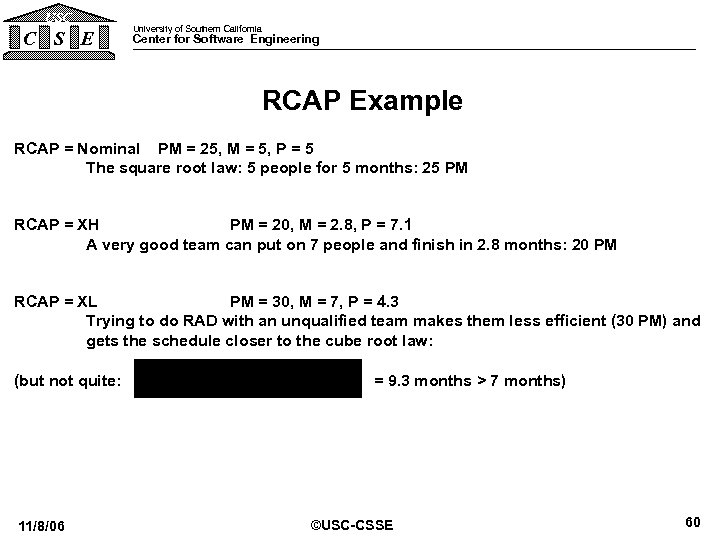

RCAP Example

- RCAP = Nominal: PM = 25, M = 5, P = 5. The square root law: 5 people for 5 months, 25 PM.
- RCAP = XH: PM = 20, M = 2.8, P = 7.1. A very good team can put on 7 people and finish in 2.8 months: 20 PM.
- RCAP = XL: PM = 30, M = 7, P = 4.3. Trying to do RAD with an unqualified team makes them less efficient (30 PM) and gets the schedule closer to the cube root law (but not quite: the cube root law gives 9.3 months > 7 months).
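
The example numbers follow directly from the RCAP table: multiply the nominal effort and schedule by the PM and M multipliers for the rating, then take P = PM / M. A small sketch, with an illustrative function name:

```python
# PM and M multipliers from the RCAP table (I, E, C phases).
RCAP = {
    "XL": (1.20, 1.40),
    "VL": (1.13, 1.25),
    "L": (1.06, 1.12),
    "N": (1.00, 1.00),
    "H": (0.93, 0.82),
    "VH": (0.86, 0.68),
    "XH": (0.80, 0.56),
}


def rcap_adjust(nominal_pm: float, nominal_months: float, rating: str):
    """Return (PM, M, P) after applying the RCAP multipliers; P = PM / M."""
    pm_mult, m_mult = RCAP[rating]
    pm = nominal_pm * pm_mult
    months = nominal_months * m_mult
    return pm, months, pm / months


for rating in ("N", "XH", "XL"):
    pm, m, p = rcap_adjust(25.0, 5.0, rating)
    print(f"RCAP={rating:2s}  PM={pm:4.1f}  M={m:3.1f}  P={p:3.1f}")
```

Starting from the nominal 25 PM and 5 months, this reproduces the example's values for Nominal, XH, and XL.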

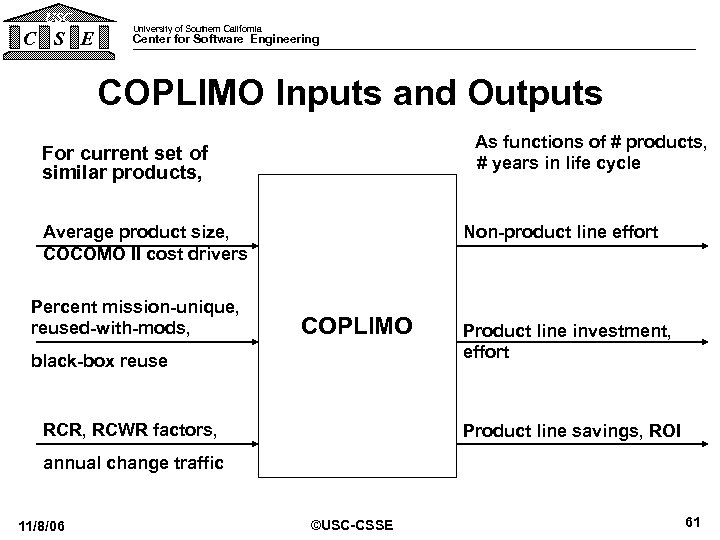

COPLIMO Inputs and Outputs

- Inputs, for the current set of similar products:
  - Average product size, COCOMO II cost drivers
  - Percent mission-unique, reused-with-mods, black-box reuse
  - RCR (relative cost of reuse) and RCWR (relative cost of writing for reuse) factors, annual change traffic
- Outputs, as functions of # products and # years in life cycle:
  - Non-product line effort
  - Product line investment, effort
  - Product line savings, ROI
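
To show how these inputs can turn into savings and ROI, here is a deliberately simplified, hypothetical product-line comparison. It is not COPLIMO's equations: it lumps reused-with-mods and black-box reuse into one reusable fraction, charges RCWR once to build the reusable assets, charges RCR per product for reusing them, and ignores annual change traffic and the multi-year dimension.

```python
from dataclasses import dataclass


@dataclass
class ProductLineInputs:
    pm_per_product: float  # COCOMO II effort for one average-size product
    unique_frac: float     # mission-unique fraction of each product
    reuse_frac: float      # reusable fraction (with mods + black-box, lumped)
    rcr: float             # relative cost of reusing a reusable part
    rcwr: float            # relative cost of writing a part for reuse


def compare(inp: ProductLineInputs, n_products: int) -> dict[str, float]:
    """Hypothetical simplified product-line comparison (not COPLIMO's model)."""
    if abs(inp.unique_frac + inp.reuse_frac - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    non_pl = n_products * inp.pm_per_product
    investment = inp.rcwr * inp.reuse_frac * inp.pm_per_product
    per_product = (inp.unique_frac + inp.rcr * inp.reuse_frac) * inp.pm_per_product
    pl_total = investment + n_products * per_product
    savings = non_pl - pl_total
    return {"non_pl": non_pl, "pl_total": pl_total,
            "savings": savings, "roi": savings / investment}


inputs = ProductLineInputs(pm_per_product=100.0, unique_frac=0.4,
                           reuse_frac=0.6, rcr=0.2, rcwr=1.5)
for n in range(1, 6):
    r = compare(inputs, n)
    print(f"N={n}  savings={r['savings']:7.1f} PM  ROI={r['roi']:5.2f}")
```

With these made-up inputs the product line loses effort for a single product and pays off from the second product onward, which is the kind of break-even question the model's outputs are meant to answer.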

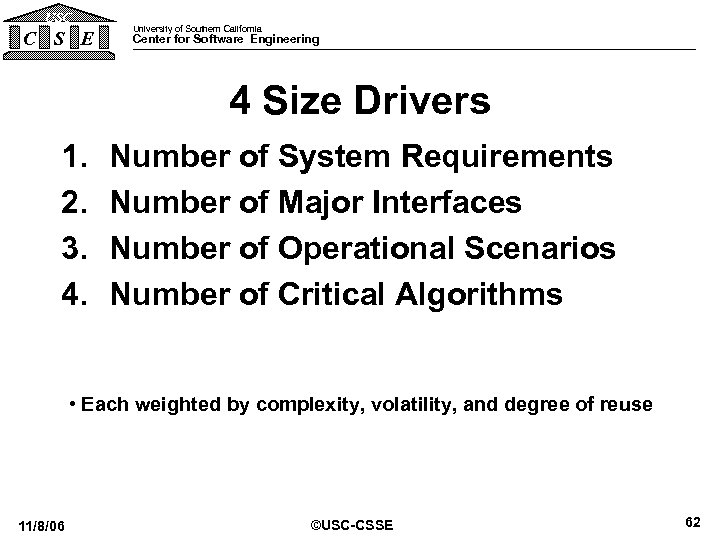

4 Size Drivers

1. Number of System Requirements
2. Number of Major Interfaces
3. Number of Operational Scenarios
4. Number of Critical Algorithms

Each is weighted by complexity, volatility, and degree of reuse.

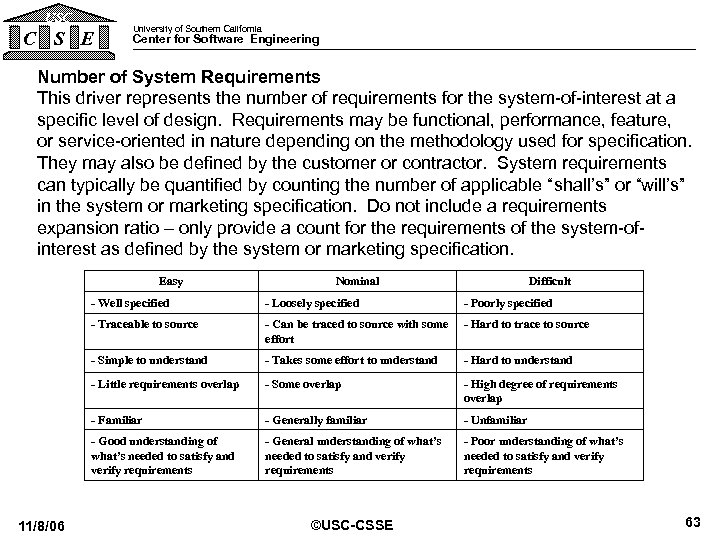

Number of System Requirements

This driver represents the number of requirements for the system-of-interest at a specific level of design. Requirements may be functional, performance, feature, or service-oriented in nature, depending on the methodology used for specification. They may also be defined by the customer or contractor. System requirements can typically be quantified by counting the number of applicable “shall’s” or “will’s” in the system or marketing specification. Do not include a requirements expansion ratio; only provide a count for the requirements of the system-of-interest as defined by the system or marketing specification.

| Easy | Nominal | Difficult |
|---|---|---|
| Well specified | Loosely specified | Poorly specified |
| Traceable to source | Can be traced to source with some effort | Hard to trace to source |
| Simple to understand | Takes some effort to understand | Hard to understand |
| Little requirements overlap | Some overlap | High degree of requirements overlap |
| Familiar | Generally familiar | Unfamiliar |
| Good understanding of what’s needed to satisfy and verify requirements | General understanding of what’s needed to satisfy and verify requirements | Poor understanding of what’s needed to satisfy and verify requirements |
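
A first-cut count of “shall’s” and “will’s” can be automated with a whole-word regular expression, as sketched below. This is only a starting point under a stated assumption: the driver asks for *applicable* statements, so a human still has to drop non-requirement uses (for example, explanatory “will” in narrative text) before the count is used as a size input.

```python
import re

# Whole-word "shall" or "will", case-insensitive ("shallow" does not match).
REQUIREMENT_VERB = re.compile(r"\b(shall|will)\b", re.IGNORECASE)


def count_requirement_statements(spec_text: str) -> int:
    """Naive count of "shall"/"will" occurrences in a specification."""
    return len(REQUIREMENT_VERB.findall(spec_text))


spec = """The system shall log all operator commands.
The operator console will display alarms within 2 seconds.
Shallow copies of the archive are acceptable."""
print(count_requirement_statements(spec))  # 2
```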


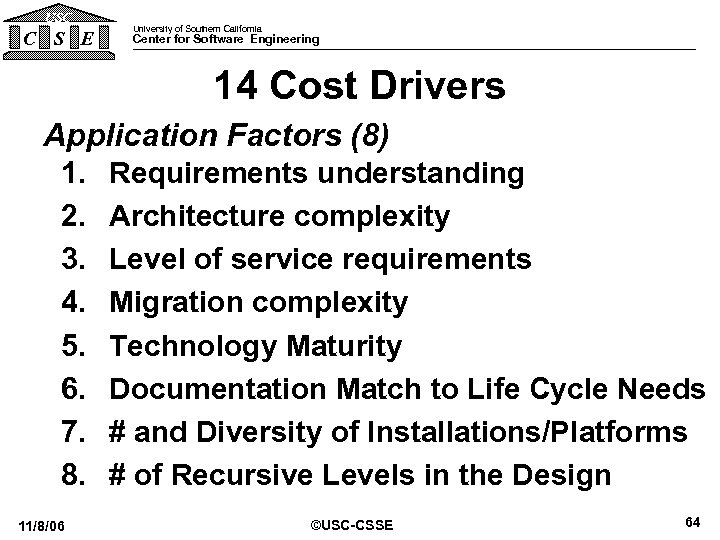

14 Cost Drivers

Application Factors (8)

1. Requirements understanding
2. Architecture complexity
3. Level of service requirements
4. Migration complexity
5. Technology maturity
6. Documentation match to life cycle needs
7. Number and diversity of installations/platforms
8. Number of recursive levels in the design


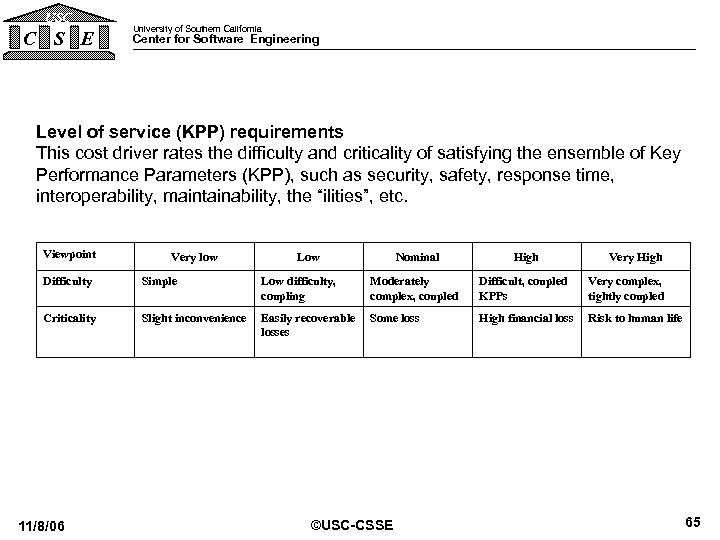

Level of service (KPP) requirements

This cost driver rates the difficulty and criticality of satisfying the ensemble of Key Performance Parameters (KPPs), such as security, safety, response time, interoperability, maintainability, and the other “ilities”.

| Viewpoint | Very Low | Low | Nominal | High | Very High |
|---|---|---|---|---|---|
| Difficulty | Simple | Low difficulty, coupling | Moderately complex, coupled | Difficult, coupled KPPs | Very complex, tightly coupled |
| Criticality | Slight inconvenience | Easily recoverable losses | Some loss | High financial loss | Risk to human life |
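
The slide rates this driver from two viewpoints but does not say how they combine into a single rating. A minimal Python sketch that encodes the rating scale as an ordered enum and the descriptors as lookup tables; the `Rating` enum and `level_of_service` function are hypothetical names, and taking the more demanding of the two viewpoints is an assumption, not a documented rule.

```python
from enum import IntEnum


class Rating(IntEnum):
    """Ordinal rating scale used in the table (Very Low .. Very High)."""
    VERY_LOW = 1
    LOW = 2
    NOMINAL = 3
    HIGH = 4
    VERY_HIGH = 5


# Descriptors transcribed from the slide's two viewpoints.
DIFFICULTY = {
    Rating.VERY_LOW: "Simple",
    Rating.LOW: "Low difficulty, coupling",
    Rating.NOMINAL: "Moderately complex, coupled",
    Rating.HIGH: "Difficult, coupled KPPs",
    Rating.VERY_HIGH: "Very complex, tightly coupled",
}
CRITICALITY = {
    Rating.VERY_LOW: "Slight inconvenience",
    Rating.LOW: "Easily recoverable losses",
    Rating.NOMINAL: "Some loss",
    Rating.HIGH: "High financial loss",
    Rating.VERY_HIGH: "Risk to human life",
}


def level_of_service(difficulty: Rating, criticality: Rating) -> Rating:
    # ASSUMPTION: the overall rating is the more demanding of the two
    # viewpoints; the slide does not state a combination rule.
    return max(difficulty, criticality)


overall = level_of_service(Rating.LOW, Rating.VERY_HIGH)
print(overall.name, "-", CRITICALITY[overall])  # VERY_HIGH - Risk to human life
```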


14 Cost Drivers (cont.)

Team Factors (6)

1. Stakeholder team cohesion
2. Personnel/team capability
3. Personnel experience/continuity
4. Process maturity
5. Multisite coordination
6. Tool support
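
These slides name the 14 cost drivers (8 application factors plus 6 team factors) but not how they are applied. In COSYSMO-style models, each driver's rating maps to an effort multiplier, and the product of all 14 multipliers scales a nominal effort computed from the equivalent size: PM = A × Size^E × (EM_1 × EM_2 × ... × EM_14). A minimal Python sketch of that form; the constants `a` and `e` and the multiplier values in the usage example are hypothetical, not calibrated.

```python
import math
from typing import Sequence

NUM_COST_DRIVERS = 14  # 8 application factors + 6 team factors


def effort_person_months(size: float, multipliers: Sequence[float],
                         a: float, e: float) -> float:
    """Effort = a * size**e * product of the 14 effort multipliers.

    `a` and `e` are calibration constants; values passed here are
    placeholders unless taken from a calibrated model.
    """
    if len(multipliers) != NUM_COST_DRIVERS:
        raise ValueError(
            f"expected {NUM_COST_DRIVERS} multipliers, got {len(multipliers)}")
    if size < 0:
        raise ValueError("size must be non-negative")
    return a * size ** e * math.prod(multipliers)


# All drivers Nominal (1.0) except one rated High (1.2) and one Low (0.8);
# these multiplier values are hypothetical.
ems = [1.2, 0.8] + [1.0] * 12
print(round(effort_person_months(82.0, ems, a=0.5, e=1.0), 2))  # 39.36
```

Because the multipliers combine by product, a single very high rating can outweigh several slightly favorable ones.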


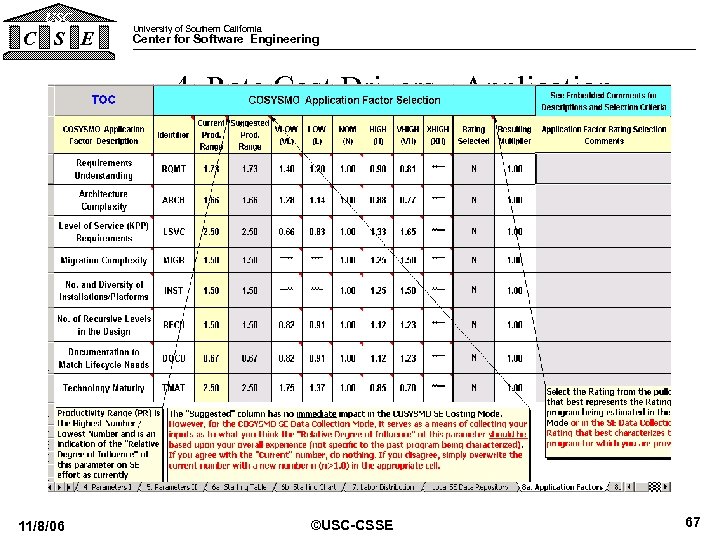

4. Rate Cost Drivers - Application


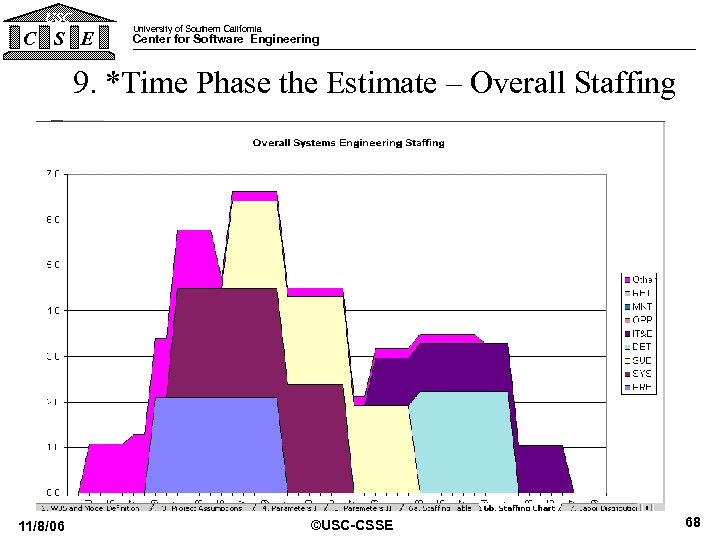

9. *Time Phase the Estimate – Overall Staffing
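
The slide names this step without showing the calculation. One simple way to time-phase a total estimate is to allocate effort to phases by fixed fractions, then divide each phase's effort by its duration to get average staffing. A minimal Python sketch; the `time_phase` function and the phase names, fractions, and durations are hypothetical placeholders, not values from the model.

```python
import math
from typing import Dict, Tuple


def time_phase(total_pm: float,
               fractions: Dict[str, float],
               months: Dict[str, float]) -> Dict[str, Tuple[float, float]]:
    """Return {phase: (effort in person-months, average staff)}.

    `fractions` gives each phase's share of total effort (must sum to 1);
    `months` gives each phase's calendar duration.
    """
    if not math.isclose(sum(fractions.values()), 1.0):
        raise ValueError("phase fractions must sum to 1.0")
    plan = {}
    for phase, share in fractions.items():
        effort = total_pm * share
        plan[phase] = (effort, effort / months[phase])
    return plan


# Hypothetical phases and allocations, for illustration only.
plan = time_phase(
    100.0,
    fractions={"concept": 0.25, "development": 0.5, "transition": 0.25},
    months={"concept": 5.0, "development": 10.0, "transition": 5.0},
)
for phase, (effort, staff) in plan.items():
    print(f"{phase}: {effort:.1f} PM, {staff:.1f} people")
```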


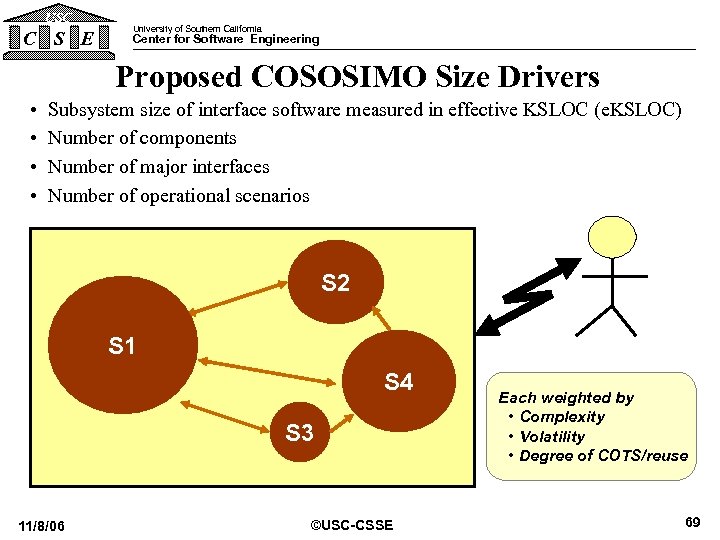

Proposed COSOSIMO Size Drivers

- Subsystem size of interface software, measured in effective KSLOC (eKSLOC)
- Number of components
- Number of major interfaces
- Number of operational scenarios

Each weighted by:

- Complexity
- Volatility
- Degree of COTS/reuse

Figure: subsystems S1, S2, S3, and S4.
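
The slide does not say how effective KSLOC is derived. COCOMO II computes equivalent size for adapted code with an adaptation adjustment factor, AAF = 0.4·DM + 0.3·CM + 0.3·IM (percent of design modified, code modified, and integration and test modified). A minimal Python sketch, assuming COSOSIMO uses that simplified treatment (it omits COCOMO II's assessment, software understanding, and unfamiliarity terms) and sums interface software over subsystems such as S1 to S4; the `Subsystem` type and its fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Subsystem:
    """Hypothetical record of one subsystem's interface software."""
    name: str
    new_ksloc: float       # newly written interface code
    adapted_ksloc: float   # reused/modified interface code
    dm: float = 0.0        # % design modified
    cm: float = 0.0        # % code modified
    im: float = 0.0        # % integration and test modified

    def eksloc(self) -> float:
        # Simplified COCOMO II adaptation adjustment; AA, SU, UNFM omitted.
        aaf = 0.4 * self.dm + 0.3 * self.cm + 0.3 * self.im
        return self.new_ksloc + self.adapted_ksloc * aaf / 100.0


def total_interface_eksloc(subsystems: Iterable[Subsystem]) -> float:
    """Sum effective interface KSLOC across all subsystems."""
    return sum(s.eksloc() for s in subsystems)


system = [
    Subsystem("S1", new_ksloc=10.0, adapted_ksloc=20.0, dm=10, cm=20, im=50),
    Subsystem("S2", new_ksloc=5.0, adapted_ksloc=0.0),
    Subsystem("S3", new_ksloc=0.0, adapted_ksloc=40.0, im=100),
    Subsystem("S4", new_ksloc=2.5, adapted_ksloc=0.0),
]
print(round(total_interface_eksloc(system), 2))
```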


Proposed COSOSIMO Scale Factors

- Integration risk resolution: risk identification and mitigation efforts
- Integration simplicity: architecture and performance issues
- Integration stability: how much change is expected
- Component readiness: how much prior testing has been conducted on the components
- Integration capability: people factor
- Integration processes: maturity level of processes and integration lab
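
The slide lists the scale factors but not how they enter the estimate. In COCOMO II, scale factors act on the size exponent rather than as multipliers, E = B + 0.01·ΣSF_j, so their effect grows with size. A minimal Python sketch, assuming the proposed COSOSIMO scale factors are applied the same way; the base exponent `b`, the constant `a`, and all scale-factor values are hypothetical placeholders.

```python
from typing import Sequence

# The six proposed COSOSIMO integration scale factors, in slide order.
SCALE_FACTORS = (
    "integration risk resolution",
    "integration simplicity",
    "integration stability",
    "component readiness",
    "integration capability",
    "integration processes",
)


def size_exponent(sf_values: Sequence[float], b: float) -> float:
    """COCOMO II-style exponent: E = b + 0.01 * sum(scale factor values)."""
    if len(sf_values) != len(SCALE_FACTORS):
        raise ValueError(f"expected {len(SCALE_FACTORS)} scale factor values")
    return b + 0.01 * sum(sf_values)


def integration_effort(a: float, eksloc: float,
                       sf_values: Sequence[float], b: float) -> float:
    """Effort = a * eKSLOC ** E, with E derived from the scale factors."""
    return a * eksloc ** size_exponent(sf_values, b)


# Hypothetical ratings: worse ratings -> larger SF values -> larger exponent.
low_risk = [1.0] * 6
high_risk = [5.0] * 6
for label, sfs in (("low risk", low_risk), ("high risk", high_risk)):
    print(label, round(integration_effort(2.0, 50.0, sfs, b=0.91), 1))
```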


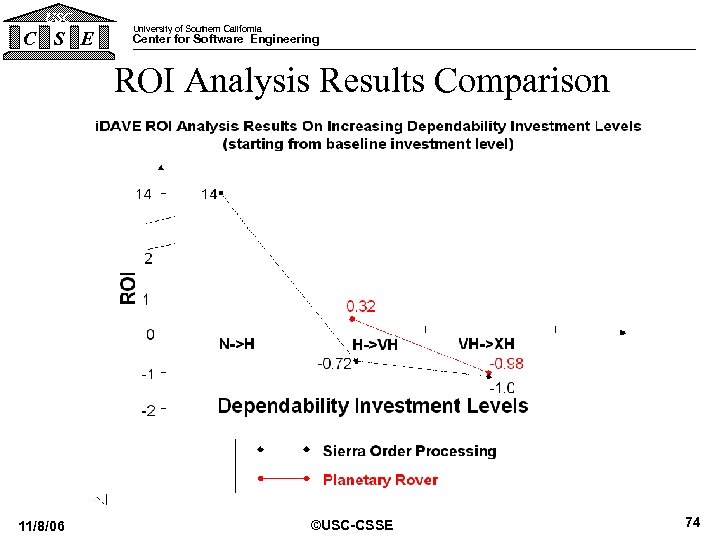

Reasoning about the Value of Dependability – iDAVE

- iDAVE: Information Dependability Attribute Value Estimator
- Use the iDAVE model to estimate and track software dependability ROI:
  - Help determine how much dependability is enough
  - Help analyze and select the most cost-effective combination of software dependability techniques
  - Use estimates as a basis for tracking performance
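
ROI here is the standard ratio, ROI = (Benefits - Costs) / Costs. A minimal Python sketch that computes it and compares candidate dependability options; the option names and dollar figures are hypothetical. Picking the highest ROI is not the same as picking the highest net value (benefits minus costs), so which criterion answers "how much is enough" remains the analyst's judgment.

```python
from typing import Dict, Tuple


def roi(benefits: float, costs: float) -> float:
    """Return on investment: (benefits - costs) / costs."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    return (benefits - costs) / costs


def best_by_roi(options: Dict[str, Tuple[float, float]]) -> str:
    """Return the option name with the highest ROI.

    `options` maps name -> (cost, benefit), both in the same currency unit.
    """
    return max(options, key=lambda name: roi(options[name][1], options[name][0]))


# Hypothetical dependability options: (cost, benefit) in $K.
options = {
    "option A": (50.0, 200.0),
    "option B": (100.0, 250.0),
    "option C": (200.0, 500.0),
}
for name, (cost, benefit) in options.items():
    print(f"{name}: ROI = {roi(benefit, cost):.2f}")
print("highest ROI:", best_by_roi(options))
```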


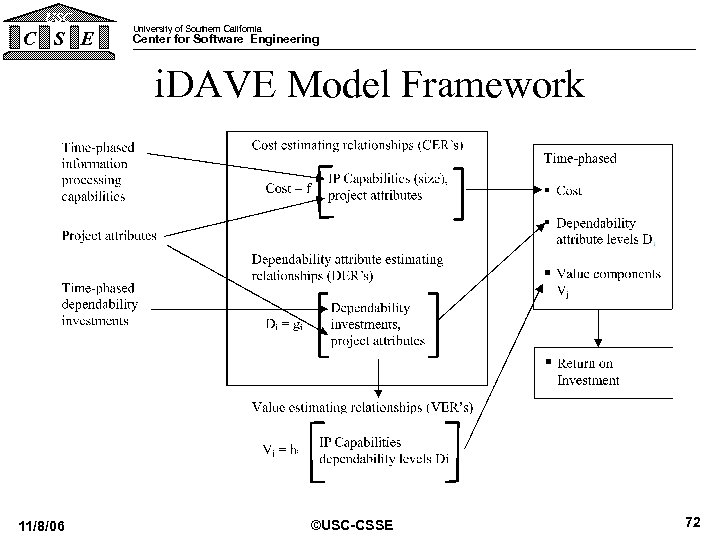

iDAVE Model Framework


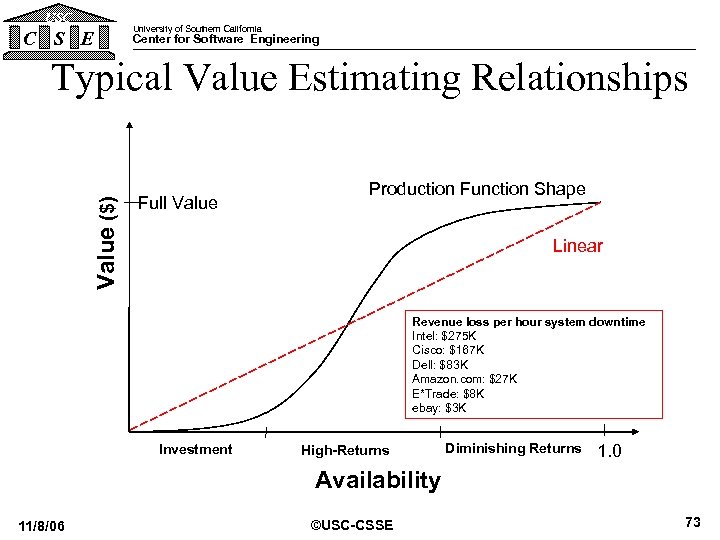

Typical Value Estimating Relationships

Figure: value ($) versus availability (up to 1.0); labels include Full Value, Linear, Production Function Shape, Investment, High-Returns, and Diminishing Returns.

Revenue loss per hour of system downtime:

| Company | Revenue loss per hour |
|---|---|
| Intel | $275K |
| Cisco | $167K |
| Dell | $83K |
| Amazon.com | $27K |
| E*Trade | $8K |
| eBay | $3K |
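
Under a linear value relationship, lost value is proportional to downtime, so the revenue-loss-per-hour figures translate availability into dollars. A minimal Python sketch, assuming 8,760 hours of required operation per year and a constant hourly loss; the Amazon.com figure of $27K per hour comes from the slide, and the two availability levels are illustrative.

```python
HOURS_PER_YEAR = 8760  # assumes 24x7 operation, 365 days


def annual_downtime_loss(availability: float, loss_per_hour: float) -> float:
    """Expected yearly revenue loss under a linear value relationship."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be in [0, 1]")
    downtime_hours = (1.0 - availability) * HOURS_PER_YEAR
    return downtime_hours * loss_per_hour


AMAZON_LOSS_PER_HOUR = 27_000.0  # from the slide, in dollars

before = annual_downtime_loss(0.999, AMAZON_LOSS_PER_HOUR)
after = annual_downtime_loss(0.9999, AMAZON_LOSS_PER_HOUR)
print(f"loss at 0.999:  ${before:,.0f}")
print(f"loss at 0.9999: ${after:,.0f}")
print(f"value of the improvement: ${before - after:,.0f}")
```

The difference between the two losses is the benefit side of the ROI ratio for an investment that raises availability; the non-linear shapes in the figure would replace the proportional formula.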


ROI Analysis Results Comparison
