ca7748cedc07c4d9ea7553165fae11aa.ppt

- Number of slides: 30

US Tier 2 Workshop
Michael Ernst, Brookhaven National Lab
U.S. ATLAS Tier 2 Meeting, UCSD, 8 March, 2007

Overview
• LHC Schedule news and implications
• Latest funding news
• Purpose and Goals for this workshop
• Tier-2 Planning and Integration
• Action Items from past Tier-2 meeting(s)
• Update hardware ramp
M. Ernst Tier 2 Meeting 8 March, 2007 UCSD

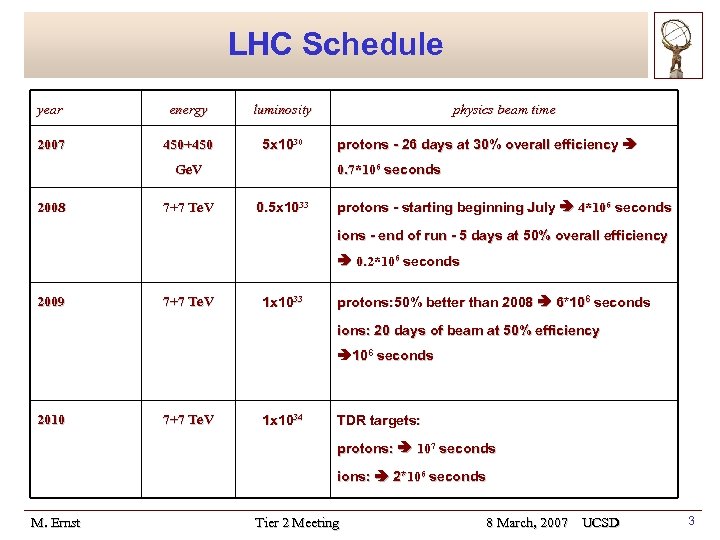

LHC Schedule

| year | energy | luminosity | physics beam time |
|------|--------|------------|-------------------|
| 2007 | 450+450 GeV | 5 x 10^30 | protons - 26 days at 30% overall efficiency: 0.7*10^6 seconds |
| 2008 | 7+7 TeV | 0.5 x 10^33 | protons - starting beginning July: 4*10^6 seconds; ions - end of run - 5 days at 50% overall efficiency: 0.2*10^6 seconds |
| 2009 | 7+7 TeV | 1 x 10^33 | protons: 50% better than 2008: 6*10^6 seconds; ions: 20 days of beam at 50% efficiency: 10^6 seconds |
| 2010 | 7+7 TeV | 1 x 10^34 | TDR targets: protons: 10^7 seconds; ions: 2*10^6 seconds |
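The beam-time figures on the LHC Schedule slide follow from days of running multiplied by overall efficiency. The sketch below reproduces that arithmetic for the three entries where the slide gives both days and efficiency; the function name and the list layout are illustrative and not part of the talk.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def effective_beam_seconds(days: float, efficiency: float) -> float:
    """Seconds of physics beam time from `days` of running at `efficiency` (0..1)."""
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must be between 0 and 1")
    return days * SECONDS_PER_DAY * efficiency


# (label, days of beam, overall efficiency, value quoted on the slide in seconds)
SLIDE_ENTRIES = [
    ("2007 protons", 26, 0.30, 0.7e6),
    ("2008 ions", 5, 0.50, 0.2e6),
    ("2009 ions", 20, 0.50, 1.0e6),
]

for label, days, efficiency, quoted in SLIDE_ENTRIES:
    computed = effective_beam_seconds(days, efficiency)
    print(f"{label}: computed {computed:.2e} s, slide quotes {quoted:.1e} s")
```

The computed values agree with the slide to the one significant figure it quotes; the proton entries for 2008 to 2010 give no day count, so they are not recomputed here.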

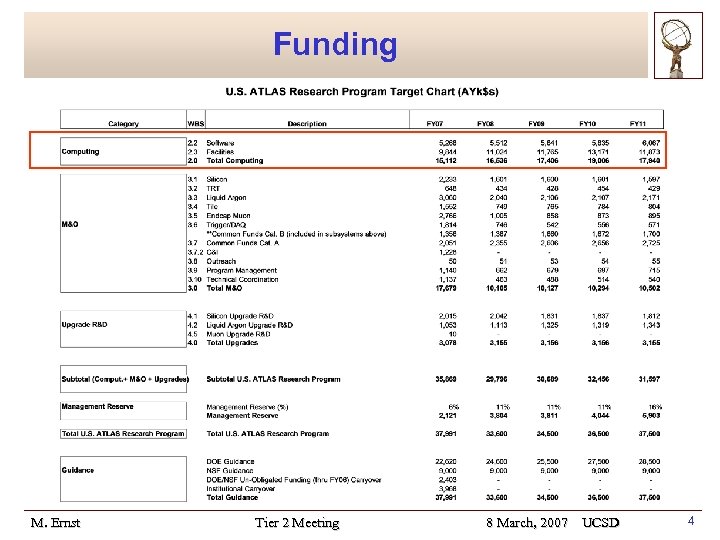

Funding

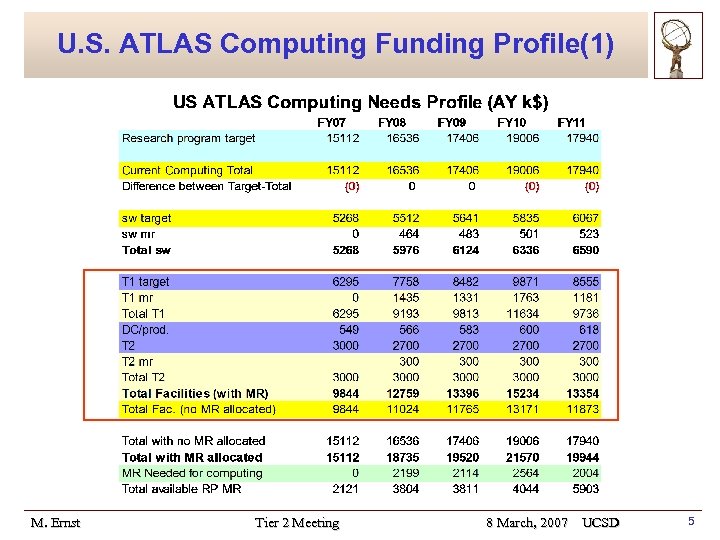

U.S. ATLAS Computing Funding Profile (1)

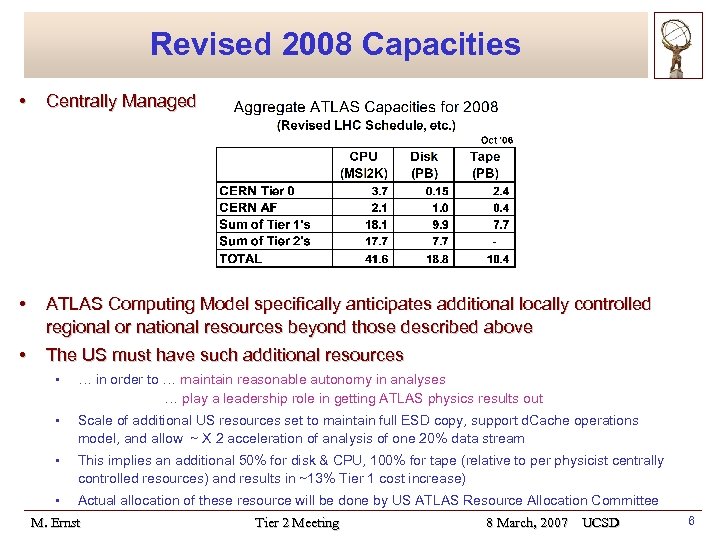

Revised 2008 Capacities
• Centrally Managed
• ATLAS Computing Model specifically anticipates additional locally controlled regional or national resources beyond those described above
• The US must have such additional resources … in order to
  • … maintain reasonable autonomy in analyses
  • … play a leadership role in getting ATLAS physics results out
• Scale of additional US resources set to maintain full ESD copy, support dCache operations model, and allow ~2x acceleration of analysis of one 20% data stream
  • This implies an additional 50% for disk & CPU, 100% for tape (relative to per-physicist centrally controlled resources) and results in ~13% Tier 1 cost increase
• Actual allocation of these resources will be done by US ATLAS Resource Allocation Committee
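To make the quoted percentages concrete, the sketch below applies the additional 50% for CPU and disk and 100% for tape to a centrally managed baseline. The baseline numbers and all names are hypothetical placeholders chosen only for illustration; the slide gives no absolute capacities, and the ~13% Tier 1 cost figure cannot be derived from these fractions alone.

```python
# Additional US-controlled capacity as a fraction of the centrally managed
# share, as quoted on the slide.
ADDITIONAL_FRACTION = {"cpu": 0.50, "disk": 0.50, "tape": 1.00}


def total_with_us_share(central: dict) -> dict:
    """Return centrally managed capacity plus the additional US-controlled share."""
    return {
        resource: amount * (1.0 + ADDITIONAL_FRACTION[resource])
        for resource, amount in central.items()
    }


# Hypothetical baseline in arbitrary units (NOT from the talk).
central_baseline = {"cpu": 1000.0, "disk": 400.0, "tape": 300.0}

for resource, total in total_with_us_share(central_baseline).items():
    print(f"{resource}: {central_baseline[resource]} -> {total}")
```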

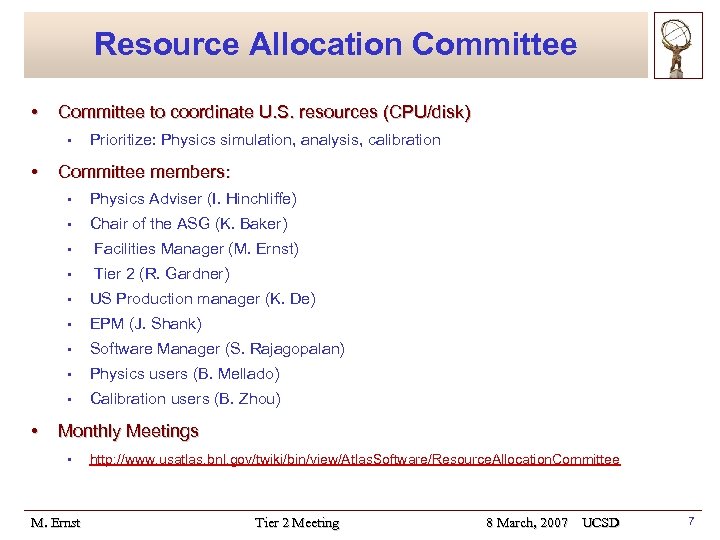

Resource Allocation Committee
• Committee to coordinate U.S. resources (CPU/disk)
  • Prioritize: Physics simulation, analysis, calibration
• Committee members:
  • Chair of the ASG (K. Baker)
  • Facilities Manager (M. Ernst)
  • Tier 2 (R. Gardner)
  • US Production manager (K. De)
  • EPM (J. Shank)
  • Software Manager (S. Rajagopalan)
  • Physics users (B. Mellado)
  • Physics Adviser (I. Hinchliffe)
  • Calibration users (B. Zhou)
• Monthly Meetings
  • http://www.usatlas.bnl.gov/twiki/bin/view/AtlasSoftware/ResourceAllocationCommittee

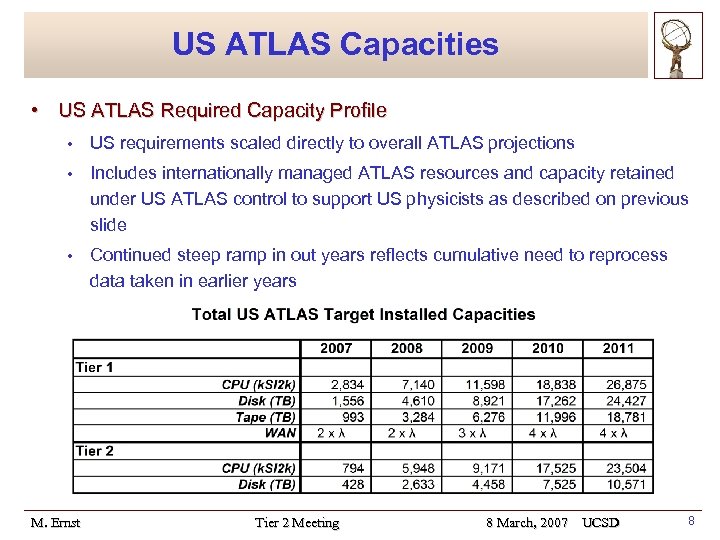

US ATLAS Capacities
• US ATLAS Required Capacity Profile
• US requirements scaled directly to overall ATLAS projections
• Includes internationally managed ATLAS resources and capacity retained under US ATLAS control to support US physicists as described on previous slide
• Continued steep ramp in out years reflects cumulative need to reprocess data taken in earlier years

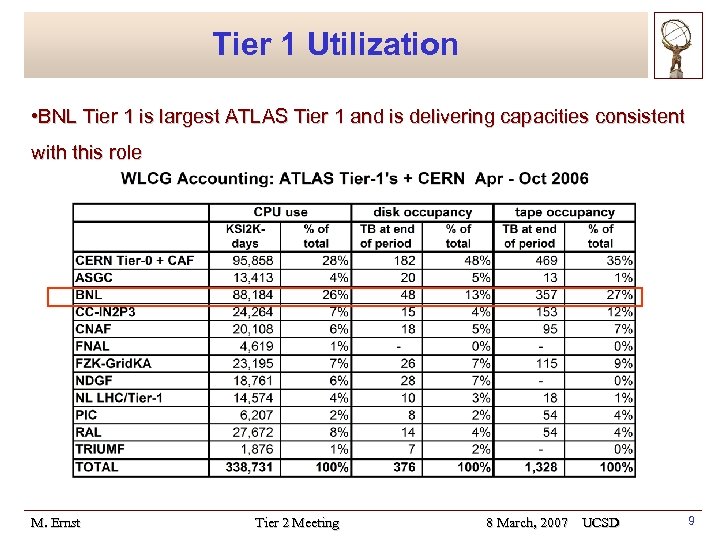

Tier 1 Utilization
• BNL Tier 1 is the largest ATLAS Tier 1 and is delivering capacities consistent with this role

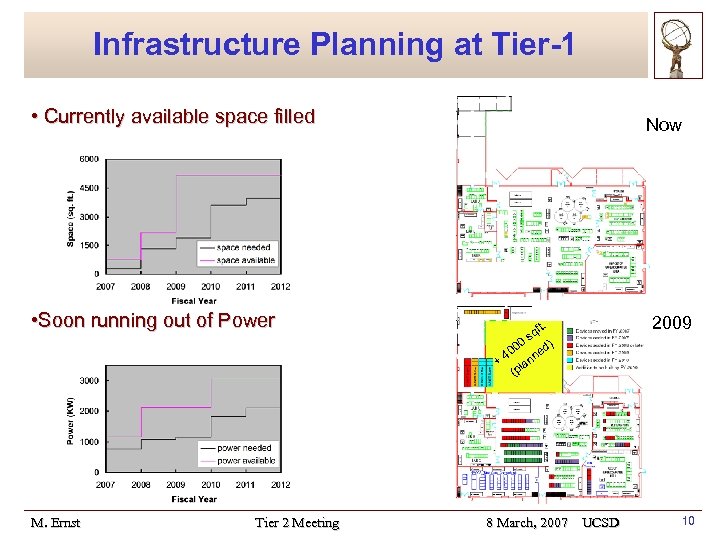

Infrastructure Planning at Tier-1
• Currently available space filled
• Soon running out of power
Figure labels: Now, 2009, (planned +4000 sq. ft.)

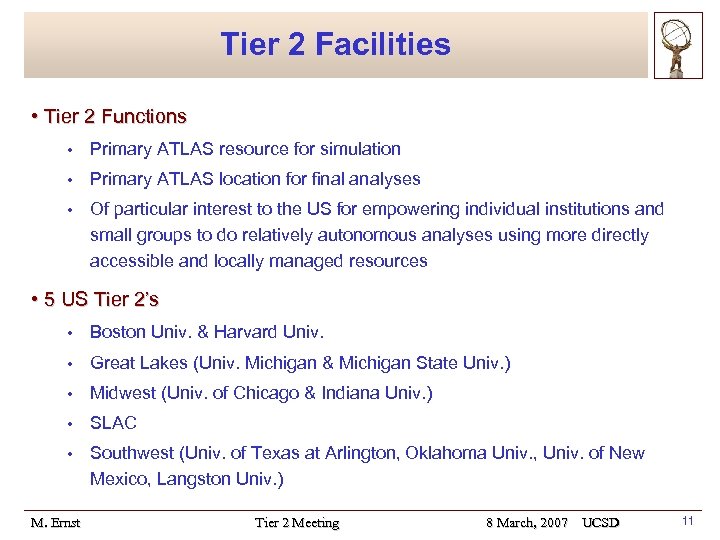

Tier 2 Facilities
• Tier 2 Functions
  • Primary ATLAS resource for simulation
  • Primary ATLAS location for final analyses
  • Of particular interest to the US for empowering individual institutions and small groups to do relatively autonomous analyses using more directly accessible and locally managed resources
• 5 US Tier 2’s
  • Boston Univ. & Harvard Univ.
  • Great Lakes (Univ. Michigan & Michigan State Univ.)
  • Midwest (Univ. of Chicago & Indiana Univ.)
  • SLAC
  • Southwest (Univ. of Texas at Arlington, Oklahoma Univ., Univ. of New Mexico, Langston Univ.)

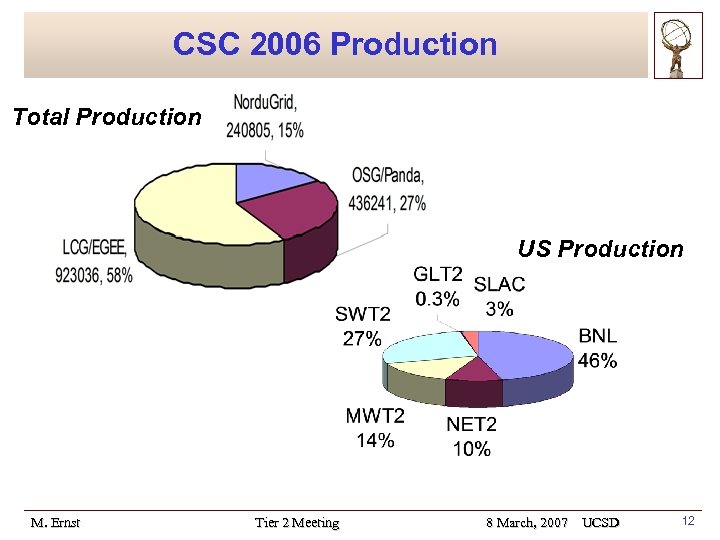

CSC 2006 Production
Plot panels: Total Production; US Production

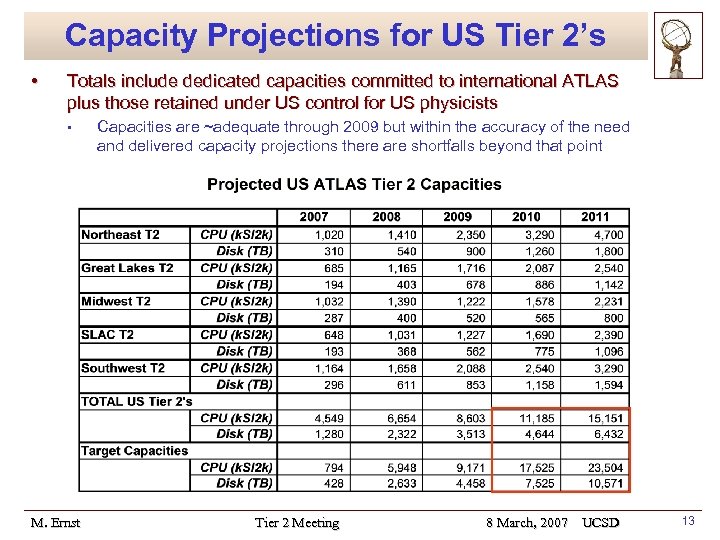

Capacity Projections for US Tier 2’s
• Totals include dedicated capacities committed to international ATLAS plus those retained under US control for US physicists
• Capacities are ~adequate through 2009 but, within the accuracy of the need and delivered capacity projections, there are shortfalls beyond that point

Evolution
• There is a growing Tier 2 capacity shortfall with time
• We need to be careful to avoid wasted event copies

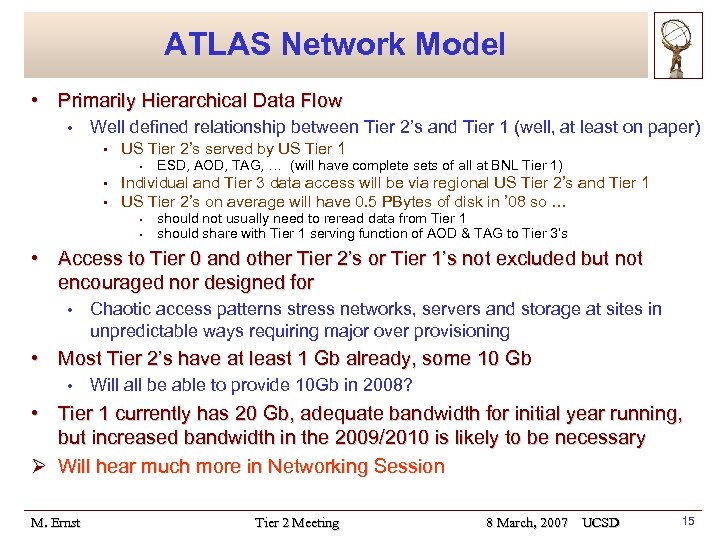

ATLAS Network Model
• Primarily Hierarchical Data Flow
  • Well defined relationship between Tier 2’s and Tier 1 (well, at least on paper)
  • US Tier 2’s served by US Tier 1
    • ESD, AOD, TAG, … (will have complete sets of all at BNL Tier 1)
    • Individual and Tier 3 data access will be via regional US Tier 2’s and Tier 1
    • US Tier 2’s on average will have 0.5 PBytes of disk in ’08 so …
      • should not usually need to reread data from Tier 1
      • should share with Tier 1 serving function of AOD & TAG to Tier 3’s
  • Access to Tier 0 and other Tier 2’s or Tier 1’s not excluded but neither encouraged nor designed for
    • Chaotic access patterns stress networks, servers and storage at sites in unpredictable ways, requiring major over-provisioning
• Most Tier 2’s have at least 1 Gb/s already, some 10 Gb/s
  • Will all be able to provide 10 Gb/s in 2008?
• Tier 1 currently has 20 Gb/s, adequate bandwidth for initial year running, but increased bandwidth in 2009/2010 is likely to be necessary
➢ Will hear much more in Networking Session
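As a rough illustration of why rereading data from Tier 1 is discouraged, the sketch below estimates how long refilling a 0.5 PByte disk pool would take over the link speeds named on the slide (1, 10 and 20 Gb, read here as Gb/s). It assumes decimal units (1 PByte = 10^15 bytes, 1 Gb/s = 10^9 bits/s) and an idealized link; the function name and the `utilization` parameter are illustrative assumptions, not from the talk.

```python
SECONDS_PER_DAY = 86400


def transfer_days(volume_pbytes: float, link_gbps: float, utilization: float = 1.0) -> float:
    """Days needed to move `volume_pbytes` over a `link_gbps` link.

    `utilization` is the fraction of nominal bandwidth actually achieved (0..1].
    """
    if link_gbps <= 0 or not 0.0 < utilization <= 1.0:
        raise ValueError("link speed must be positive and utilization in (0, 1]")
    bits = volume_pbytes * 1e15 * 8
    seconds = bits / (link_gbps * 1e9 * utilization)
    return seconds / SECONDS_PER_DAY


for gbps in (1, 10, 20):
    print(f"0.5 PB over {gbps} Gb/s: {transfer_days(0.5, gbps):.1f} days at full rate")
```

Even at full nominal rate, a 1 Gb/s site would need well over a month to refill its disk, which is consistent with the slide's point that Tier 2's should hold their data locally rather than fetch it repeatedly.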

Reconciling Requests with Target
• 2008 and beyond still a problem
• We are working closely with the ATLAS Computing Model Group to understand our T1/T2 needs out to 2012
• New LHC running assumptions COULD lead to some savings in a later ramp of hardware

Need to Ramp up the T2 hardware
• Goal:
  • Detailed schedule (not just rolled up into 1 year) of hardware ramp.

Previous T2 Meetings
• Tier 2 Planning Wiki:
  • http://www.usatlas.bnl.gov/twiki/bin/view/Admins/TierTwoPlanning

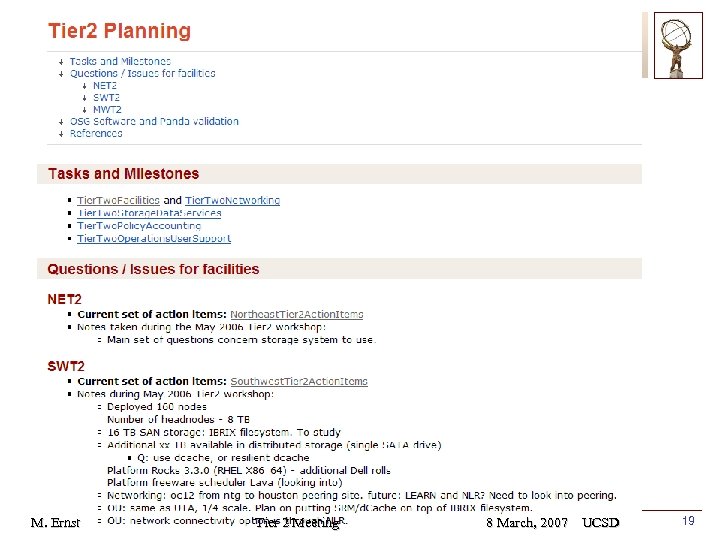


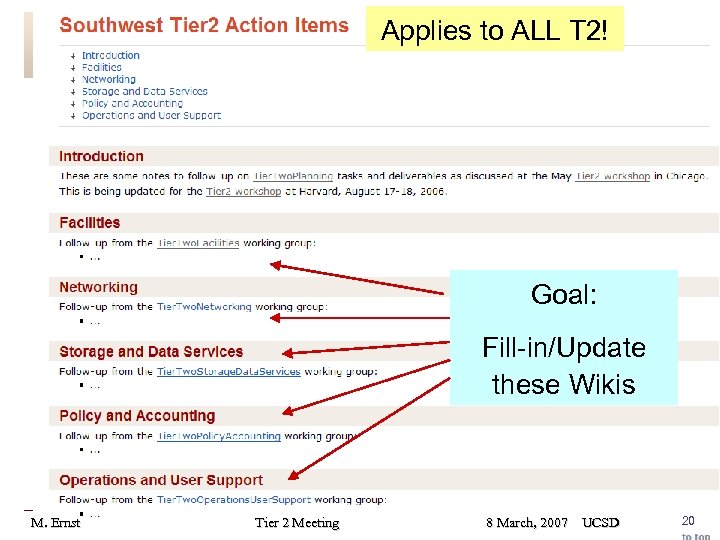

Applies to ALL T2!
Goal: Fill in/update these Wikis

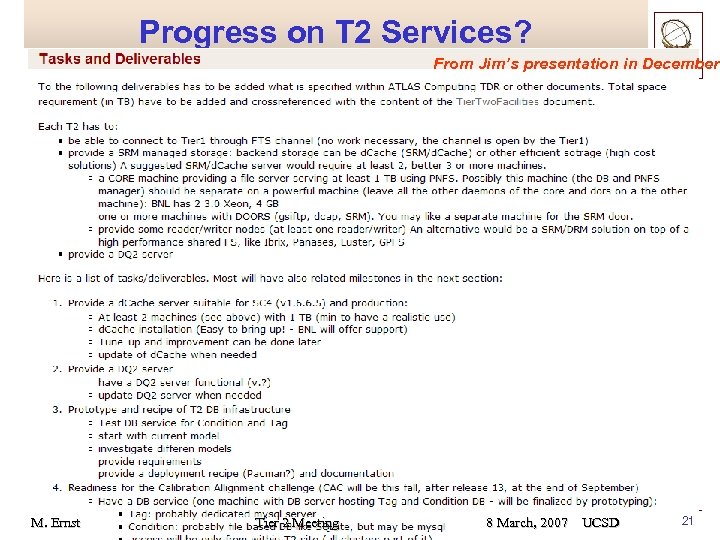

Progress on T2 Services?
From Jim’s presentation in December
• DQ2 Status?

Tier 2 Documentation
• Uniform Web pages
  • Started out very nicely with first Tier-2 workshop
  • Not much updated since

Purpose and Goals (1/3)
• Status of Sites
  • Where are sites as to installed/usable infrastructure and what are the issues
• Production and Preparation for Analysis
  • Services and expected “volume”
  • PanDA overview, future extensions and release schedule

Purpose and Goals (2/3)
• On the Critical Path
  • While (PanDA managed) Production is coming along very nicely, Data Transfer (and Storage?) and Data Replication are on the critical path
  • DDM/DQ2 vulnerable and apparently not up to the performance level required
  • Data Management and Transfers least transparent component / functionality
  • Dashboard monitoring informative to some extent but not really helpful in case of problems
    • No transfers, or slowly moving – why?
    • Trouble at source or destination?
    • Nature / reason of trouble?
  ➢ Very complex situation – Diagnosis almost impossible and requires expert-level knowledge in multiple areas
    • Currently limited to few experts (Alexei, Kaushik, Hiro et al) – not scalable and does not allow site admins to assess how their site is doing
    • Often requires access to distributed log files

Purpose and Goals (3/3)
• (Still) On the Critical Path
  • Storage Systems and Tier-1 / Tier-2 Sites
    • No technology baseline in U.S. ATLAS
    • Impact on operational readiness and interoperability unclear
    • Huge number of technical problems at all levels (FTS, DDM/DQ2, SRM/dCache)
    • Some sites are far from where they are supposed to be
      • Functionality (i.e. SRM interface)
      • Capacity

What we need / should do – A proposal
• Towards ATLAS Milestones
  • Put an Integration Program in place which aims at building the computing system we need to support LHC Analysis in the US
  • With exercises designed to verify sites’ readiness, stability and performance
  • Should exploit commonality and establish (technology) baseline whenever possible
    • Synergy allows resources to be bundled
• Site Certification
  • Site admins will be asked to install well defined software packages and to make needed capacities available to the Collaboration
  • We will continuously run use-case oriented exercises and will document and archive the results
    • Heartbeat – Data Transfers on a basic level, e.g. SrmCopy w/ and w/o FTS
    • Dataset replication based on high-level functionality (DDM/DQ2)
    • Processing (Analysis job profile)
      • Grid Job submission (PanDA) – distribution based on data affinity
      • Local data access (from SE)
    • Monitor and archive results from exercises
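The certification loop proposed above (run use-case exercises per site, then document and archive the results) could be organized along the lines of the sketch below. Everything here is hypothetical: the exercise callables are placeholders standing in for real heartbeat, replication and processing tests, and the record fields and site name are assumptions rather than an existing U.S. ATLAS tool or schema.

```python
import io
import json
import time
from typing import Callable, Dict, List, TextIO


def run_exercises(site: str, exercises: Dict[str, Callable[[str], None]]) -> List[dict]:
    """Run each exercise against `site`; an exercise signals failure by raising."""
    records = []
    for name, exercise in exercises.items():
        record = {"site": site, "exercise": name, "timestamp": time.time()}
        try:
            exercise(site)
            record.update(status="passed", detail="")
        except Exception as exc:  # keep a descriptive reason for the archive
            record.update(status="failed", detail=f"{type(exc).__name__}: {exc}")
        records.append(record)
    return records


def archive(records: List[dict], sink: TextIO) -> None:
    """Append one JSON line per result so the history can be reviewed later."""
    for record in records:
        sink.write(json.dumps(record) + "\n")


# Placeholder exercises (hypothetical): real ones would drive transfers or jobs.
def heartbeat_ok(site: str) -> None:
    pass


def replication_broken(site: str) -> None:
    raise RuntimeError("destination storage endpoint unreachable")


results = run_exercises(
    "EXAMPLE_T2", {"heartbeat": heartbeat_ok, "replication": replication_broken}
)
log = io.StringIO()
archive(results, log)
print(log.getvalue(), end="")
```

Recording the failure reason alongside the pass/fail status is the part that addresses the transparency concern raised earlier in the talk; the storage format is incidental.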

What we need / should do – A proposal
• Need Tools
  • Heartbeat
    • A simple file copy operation using basic transfer mechanism(s)
  • Loadtest
    • Subscribe a dataset located at BNL to all Tier-2 Centers, recycle the space, clean up and start all over again
  • Processing
    • Automated submission of analysis jobs running over AODs
    • Feedback of results into Dashboard (incl. descriptive information about errors)
  • Monitoring
    • Transparency through end-to-end monitoring
      • Not just throughput
      • Provide detailed information as to reason for failures (transfers and processing)
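A minimal sketch of the heartbeat idea follows: copy one file, verify it, and report either the elapsed time or a descriptive failure reason. It uses a local `shutil.copyfile` purely as a stand-in for the basic transfer mechanism; a real heartbeat would call the site's transfer client instead. The `HeartbeatResult` type and all function names are hypothetical.

```python
import hashlib
import shutil
import tempfile
import time
from dataclasses import dataclass
from pathlib import Path
from typing import Callable


@dataclass
class HeartbeatResult:
    ok: bool
    seconds: float
    error: str  # descriptive failure reason, empty on success


def sha256_of(path: Path) -> str:
    return hashlib.sha256(path.read_bytes()).hexdigest()


def heartbeat(source: Path, destination: Path,
              copy: Callable = shutil.copyfile) -> HeartbeatResult:
    """Copy `source` to `destination` with `copy` and verify the checksum."""
    start = time.monotonic()
    try:
        copy(source, destination)
        if sha256_of(source) != sha256_of(destination):
            return HeartbeatResult(False, time.monotonic() - start,
                                   "checksum mismatch after copy")
    except OSError as exc:
        return HeartbeatResult(False, time.monotonic() - start,
                               f"{type(exc).__name__}: {exc}")
    return HeartbeatResult(True, time.monotonic() - start, "")


with tempfile.TemporaryDirectory() as workdir:
    src = Path(workdir) / "probe.dat"
    src.write_bytes(b"heartbeat probe payload")
    good = heartbeat(src, Path(workdir) / "probe.copy")
    bad = heartbeat(Path(workdir) / "missing.dat", Path(workdir) / "never.copy")

print("good:", good.ok, good.error)
print("bad:", bad.ok, bad.error)
```

Passing the copy function as a parameter keeps the verification and reporting logic independent of the transfer mechanism, so the same check could wrap different mechanisms.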

Coordination & Communication
• U.S. Production Operations and Deployment Manager
  • Coordinate Production activities on U.S. ATLAS sites
  • Coordinate deployment of ATLAS Software
• Weekly Integration Meetings
  • Use Wednesday slot with activity driven agenda
    • Integration News – a summary of issues and upcoming activities
    • Reports from Production & DDM operations (technical)
    • Site issues – summaries
      • Move details to site help sessions (people call in and help with particular issues)
    • Standing items
      • Development status of tools & aids

Coordination & Communication
• HN Forums
  • Fabric Issues
    • Linux Farms (incl. H/W, OS, management, S/W in conjunction with production)
    • Storage Systems (incl. storage technology, interfaces, storage system performance optimization)
    • Networking (LAN and WAN issues)
  • Data transfer and replication
  • Facility integration issues (Tier-1/Tier-2, Production, Analysis)

Users
A typical "normally competent member of ATLAS"
