0c10e1ba79ba45d0a5fe526874642f46.ppt

- Number of slides: 40

US LHCNet

US LHC Network Working Group Meeting, 23-24 October 2006, FNAL

J. Bunn, D. Nae, H. Newman, S. Ravot, R. Voicu, X. Su, Y. Xia
California Institute of Technology

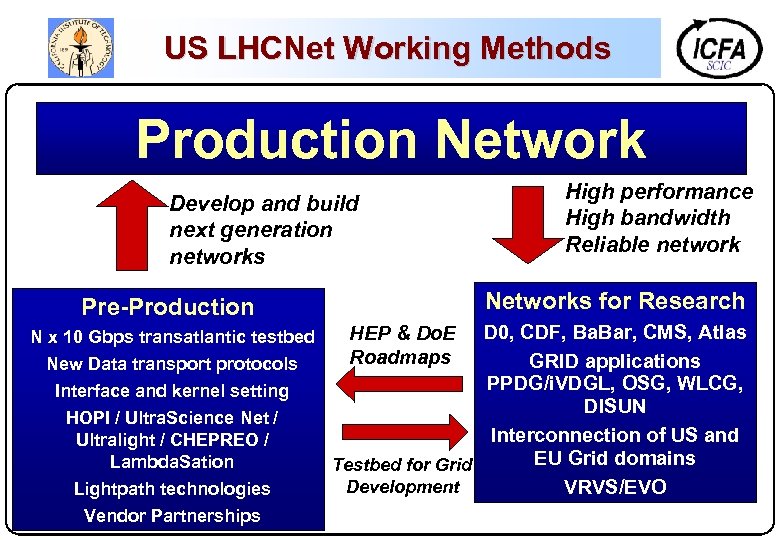

US LHCNet Working Methods

- Production Network
- Develop and build next generation networks
- Networks for Research
- Pre-Production
- N x 10 Gbps transatlantic testbed
- New data transport protocols
- Interface and kernel setting
- HOPI / UltraScience Net / UltraLight / CHEPREO / Lambda Station
- Lightpath technologies
- Vendor partnerships
- High performance, high bandwidth, reliable network
- D0, CDF, BaBar, CMS, Atlas
- GRID applications: PPDG/iVDGL, OSG, WLCG, DISUN
- Interconnection of US and EU Grid domains
- Testbed for Grid development
- VRVS/EVO
- HEP & DOE Roadmaps

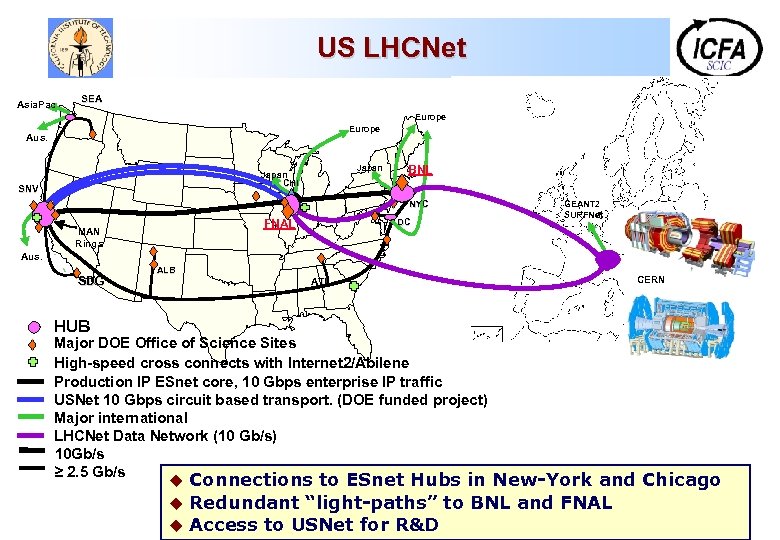

US LHCNet

[Map: US LHCNet and ESnet, with hubs and sites including SEA, SNV, SDG, ALB, ATL, CHI, NYC, DC, BNL, FNAL and CERN, MAN rings, and links to Asia-Pac, Australia, Japan and Europe (GEANT2, SURFNet). Legend: major DOE Office of Science sites; high-speed cross-connects with Internet2/Abilene; production IP ESnet core (10 Gbps enterprise IP traffic); USNet 10 Gbps circuit-based transport (DOE-funded project); major international links; LHCNet Data Network (10 Gb/s); link capacities 10 Gb/s and ≥ 2.5 Gb/s.]

- Connections to ESnet hubs in New York and Chicago
- Redundant "light-paths" to BNL and FNAL
- Access to USNet for R&D

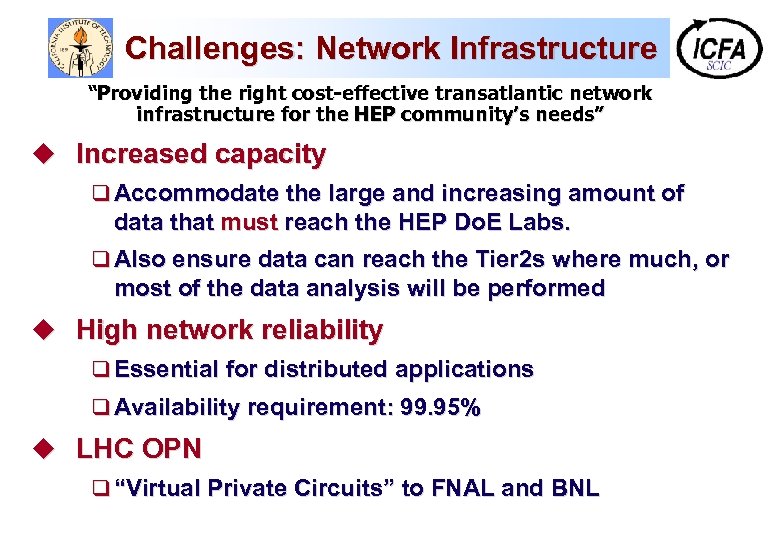

Challenges: Network Infrastructure

"Providing the right cost-effective transatlantic network infrastructure for the HEP community's needs"

- Increased capacity
  - Accommodate the large and increasing amount of data that must reach the HEP DOE Labs
  - Also ensure data can reach the Tier 2s, where much, or most, of the data analysis will be performed
- High network reliability
  - Essential for distributed applications
  - Availability requirement: 99.95%
- LHC OPN
  - "Virtual Private Circuits" to FNAL and BNL

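The 99.95% availability requirement translates into a concrete outage budget. A minimal sketch, assuming a 365-day year and counting every outage equally (planned maintenance included); `downtime_budget_hours` is an illustrative helper, not part of any LHCNet tool:

```python
def downtime_budget_hours(availability: float, period_hours: float = 365 * 24) -> float:
    """Maximum total outage allowed over a period for a given availability target."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be a fraction between 0 and 1")
    return (1.0 - availability) * period_hours


if __name__ == "__main__":
    # 99.95% over a 365-day year
    hours = downtime_budget_hours(0.9995)
    print(f"{hours:.2f} hours/year ({hours * 60:.0f} minutes)")
```

For 99.95% this leaves about 4.4 hours of outage per year, or roughly 22 minutes per month, which is tight for a single unprotected transatlantic circuit.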

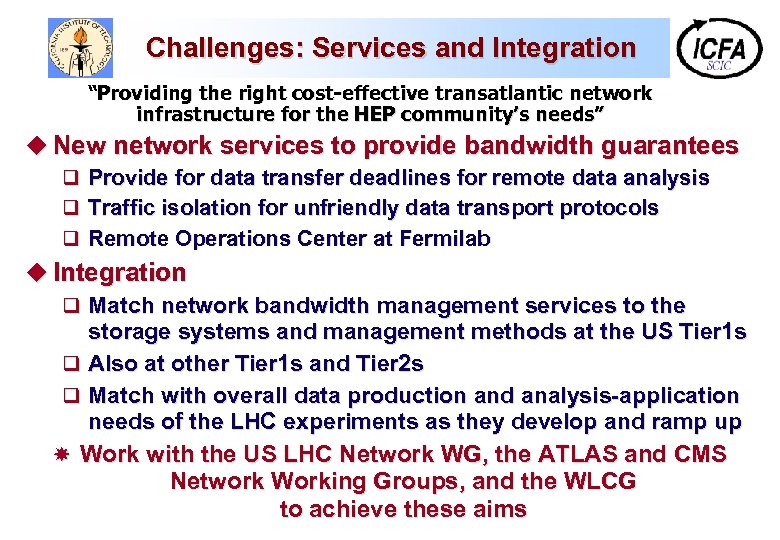

Challenges: Services and Integration

"Providing the right cost-effective transatlantic network infrastructure for the HEP community's needs"

- New network services to provide bandwidth guarantees
  - Provide for data transfer deadlines for remote data analysis
  - Traffic isolation for unfriendly data transport protocols
  - Remote Operations Center at Fermilab
- Integration
  - Match network bandwidth management services to the storage systems and management methods at the US Tier 1s
  - Also at other Tier 1s and Tier 2s
  - Match with the overall data production and analysis-application needs of the LHC experiments as they develop and ramp up

Work with the US LHC Network WG, the ATLAS and CMS Network Working Groups, and the WLCG to achieve these aims

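Providing for a data transfer deadline comes down to reserving at least the dataset size divided by the time remaining. A minimal sketch, assuming decimal units (1 TB = 10^12 bytes) and 10 Gbps circuits; `required_gbps`, `circuits_needed` and the `efficiency` factor are hypothetical, not part of any LHCNet service interface:

```python
import math


def required_gbps(dataset_tb: float, deadline_hours: float, efficiency: float = 1.0) -> float:
    """Minimum guaranteed bandwidth (Gbps) to move dataset_tb terabytes before the deadline.

    efficiency < 1 models the fraction of the reserved rate that becomes useful
    payload (protocol overhead, disk limits); 1.0 means an ideal transfer.
    """
    if deadline_hours <= 0 or not 0 < efficiency <= 1:
        raise ValueError("deadline must be positive and efficiency in (0, 1]")
    bits = dataset_tb * 1e12 * 8
    seconds = deadline_hours * 3600
    return bits / seconds / efficiency / 1e9


def circuits_needed(rate_gbps: float, circuit_gbps: float = 10.0) -> int:
    """Number of circuits of a given size needed to carry rate_gbps."""
    return math.ceil(rate_gbps / circuit_gbps)


if __name__ == "__main__":
    rate = required_gbps(10, 4)  # a 10 TB dataset due in 4 hours
    print(f"{rate:.2f} Gbps -> {circuits_needed(rate)} x 10 Gbps circuit(s)")
```

Moving the same 10 TB in one hour would need about 22 Gbps, i.e. three 10 Gbps circuits, which is why deadline-driven transfers call for bandwidth reservation rather than best-effort sharing.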

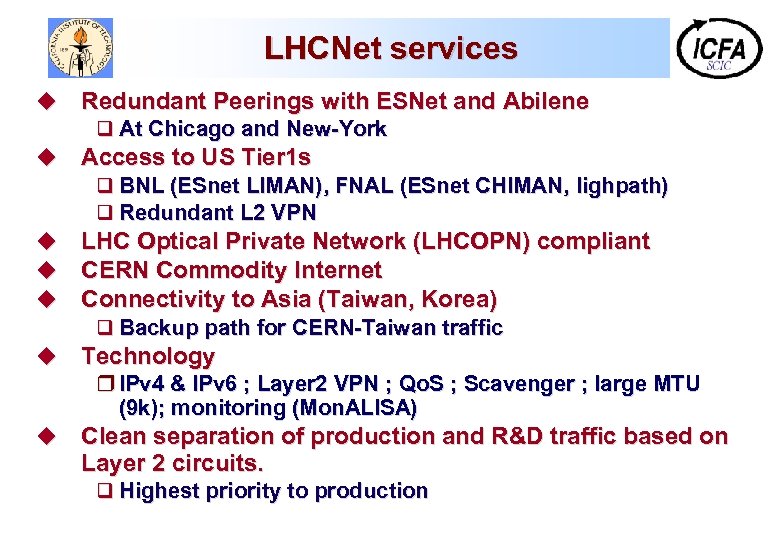

LHCNet services

- Redundant peerings with ESnet and Abilene
  - At Chicago and New York
- Access to US Tier 1s
  - BNL (ESnet LIMAN), FNAL (ESnet CHIMAN, lightpath)
  - Redundant L2 VPN
- LHC Optical Private Network (LHCOPN) compliant
- CERN commodity Internet
- Connectivity to Asia (Taiwan, Korea)
  - Backup path for CERN-Taiwan traffic
- Technology
  - IPv4 & IPv6; Layer 2 VPN; QoS; Scavenger; large MTU (9k); monitoring (MonALISA)
- Clean separation of production and R&D traffic based on Layer 2 circuits
  - Highest priority to production

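The benefit of the large (9k) MTU can be estimated by comparing per-frame overhead and packet rates on a 10 Gbps link. A rough sketch, assuming IPv4 and TCP headers without options (40 bytes) and standard Ethernet framing overhead (14-byte header, 4-byte FCS, 8-byte preamble, 12-byte inter-frame gap); stacks using TCP timestamps carry 12 more bytes per packet:

```python
ETH_OVERHEAD = 14 + 4 + 8 + 12   # header + FCS + preamble/SFD + inter-frame gap, bytes
IP_TCP_HEADERS = 20 + 20         # IPv4 + TCP without options, bytes


def goodput_fraction(mtu: int) -> float:
    """Fraction of the wire rate carrying TCP payload for full-size frames."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETH_OVERHEAD)


def packets_per_second(mtu: int, link_bps: float = 10e9) -> float:
    """Full-size frames per second needed to fill the link."""
    return link_bps / ((mtu + ETH_OVERHEAD) * 8)


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: goodput {goodput_fraction(mtu):.1%}, "
              f"{packets_per_second(mtu):,.0f} packets/s at 10 Gbps")
```

The goodput gain is modest (about 94.9% versus 99.1%), but the end hosts handle roughly six times fewer packets per second, which matters most for CPU and interrupt load at 10 Gbps.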

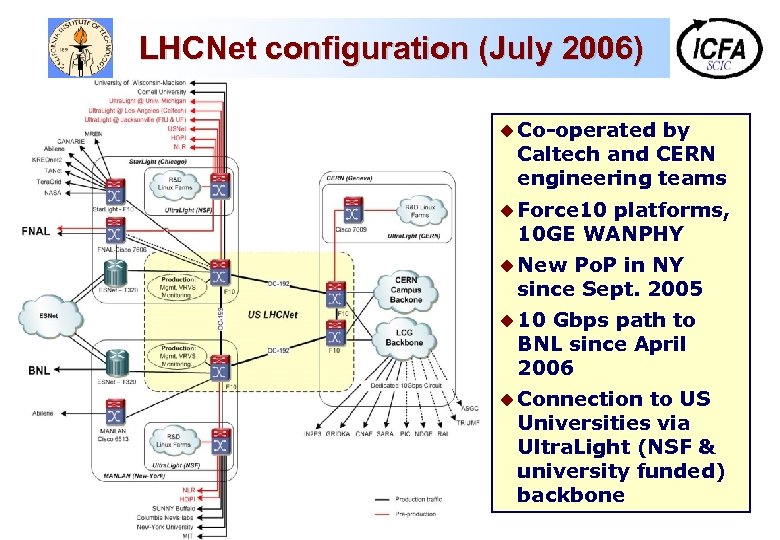

LHCNet configuration (July 2006)

- Co-operated by Caltech and CERN engineering teams
- Force 10 platforms, 10 GE WAN PHY
- New PoP in NY since Sept. 2005
- 10 Gbps path to BNL since April 2006
- Connection to US universities via the UltraLight backbone (NSF & university funded)

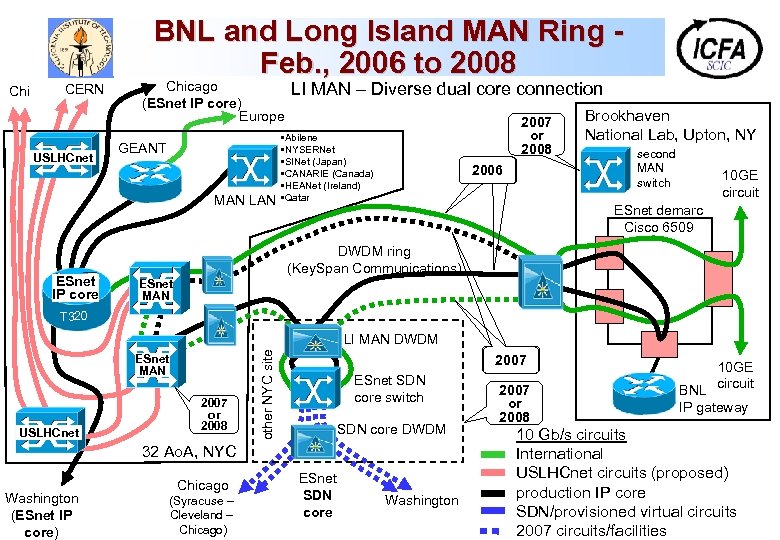

BNL and Long Island MAN Ring (Feb. 2006 to 2008)

[Diagram: Brookhaven National Lab (Upton, NY) connected over the Long Island MAN DWDM ring (KeySpan Communications) to ESnet and USLHCnet at 32 AoA, NYC (MAN LAN), with ESnet IP core links to Chicago and Washington, the ESnet SDN core (Chicago via Syracuse-Cleveland-Chicago; Washington), and international peers at MAN LAN: Abilene, NYSERNet, SINet (Japan), CANARIE (Canada), HEANet (Ireland), Qatar, and GEANT (Europe) toward CERN. Equipment: Cisco 6509, ESnet MAN T320, second MAN switch, ESnet demarc, IP gateway. Planned for 2007 or 2008: LI MAN diverse dual core connection and another NYC site. Legend: 10 Gb/s circuits; international USLHCnet circuits (proposed); production IP core; SDN/provisioned virtual circuits; 2007 circuits/facilities.]

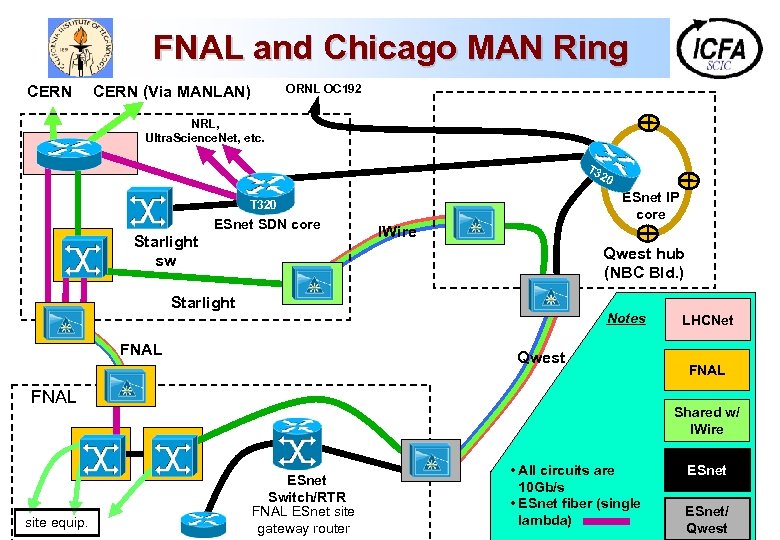

FNAL and Chicago MAN Ring

[Diagram: FNAL site equipment (ESnet switch/router, FNAL ESnet site gateway router, LHCNet) connected over the Chicago MAN ring (ESnet/Qwest fiber, partly shared with IWire) to StarLight and the Qwest hub (NBC Bldg.), with ESnet IP core and SDN core T320 routers, the StarLight switch, LHCNet links to CERN (OC-192, and via MANLAN), and links to ORNL, NRL, UltraScience Net, etc. Notes: all circuits are 10 Gb/s; ESnet fiber (single lambda).]

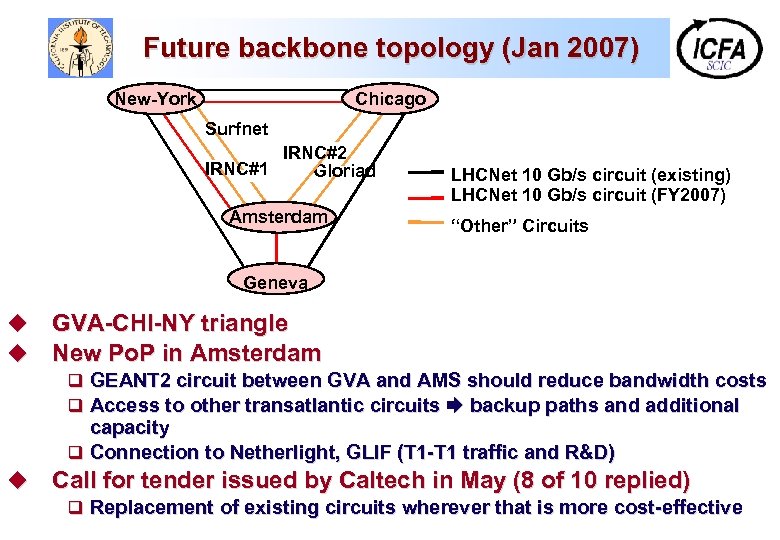

Future backbone topology (Jan 2007)

[Map: Geneva, Amsterdam, New York and Chicago, showing existing LHCNet 10 Gb/s circuits, new LHCNet 10 Gb/s circuits (FY2007), and "other" circuits (SURFnet, IRNC#1, IRNC#2, Gloriad).]

- GVA-CHI-NY triangle
- New PoP in Amsterdam
  - GEANT2 circuit between GVA and AMS should reduce bandwidth costs
  - Access to other transatlantic circuits: backup paths and additional capacity
  - Connection to NetherLight, GLIF (T1-T1 traffic and R&D)
- Call for tender issued by Caltech in May (8 of 10 replied)
  - Replacement of existing circuits wherever that is more cost-effective

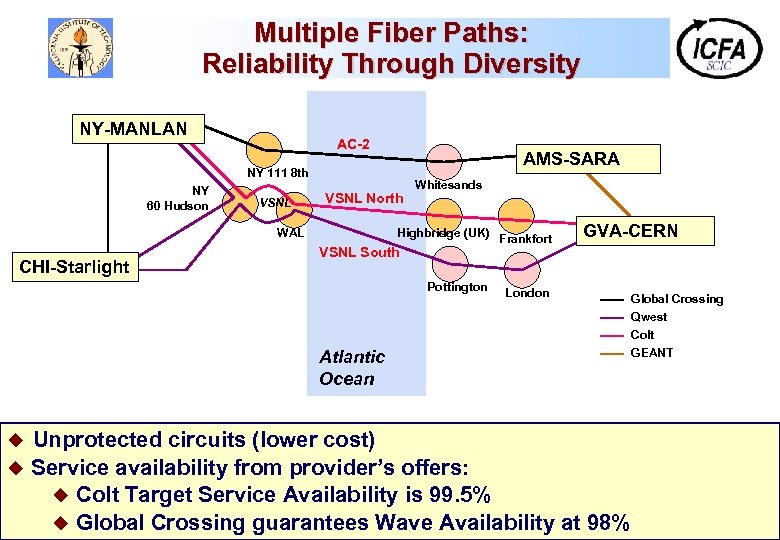

Multiple Fiber Paths: Reliability Through Diversity

[Map: diverse transatlantic fiber routes (AC-2, VSNL North, VSNL South) across the Atlantic Ocean between NY-MANLAN (NY 111 8th, NY 60 Hudson), CHI-StarLight, AMS-SARA and GVA-CERN, with labels including WAL, Whitesands, Highbridge (UK), Pottington, London and Frankfurt; carriers: Global Crossing, Qwest, Colt, GEANT.]

- Unprotected circuits (lower cost)
- Service availability from providers' offers:
  - Colt target service availability is 99.5%
  - Global Crossing guarantees wave availability at 98%

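Why diversity helps: if two unprotected paths fail independently, the service is down only when both are down at the same time. A minimal sketch using the providers' figures quoted above; independence is the key assumption, since shared conduits, landing stations or PoPs correlate failures and reduce the real benefit:

```python
def parallel_availability(*availabilities: float) -> float:
    """Availability of a service that stays up while at least one path is up.

    Assumes the paths fail statistically independently of each other.
    """
    p_all_down = 1.0
    for a in availabilities:
        p_all_down *= 1.0 - a
    return 1.0 - p_all_down


if __name__ == "__main__":
    colt, global_crossing = 0.995, 0.98
    combined = parallel_availability(colt, global_crossing)
    print(f"{combined:.4%}")
```

Under that assumption the pair reaches about 99.99%, above the 99.95% requirement, even though neither circuit meets it on its own.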

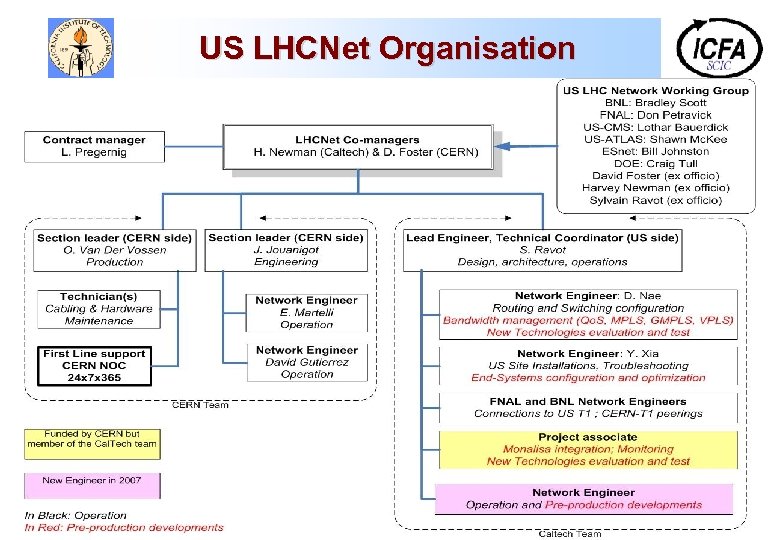

US LHCNet Organisation

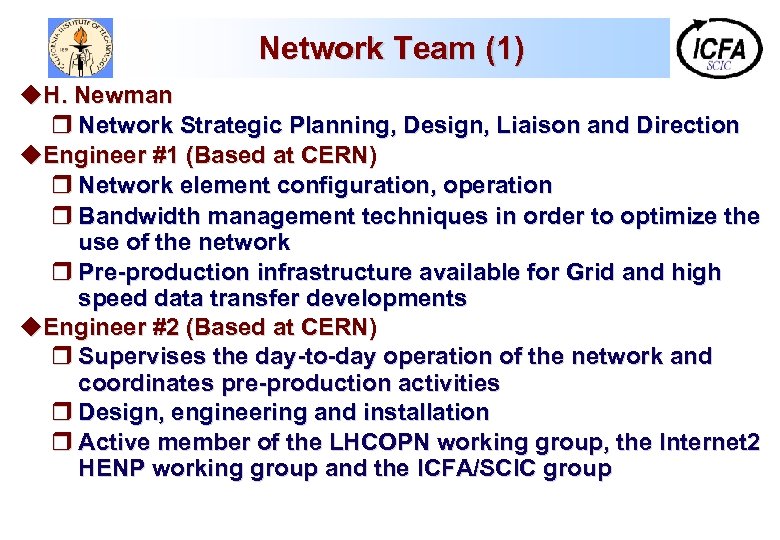

Network Team (1)

- H. Newman
  - Network strategic planning, design, liaison and direction
- Engineer #1 (based at CERN)
  - Network element configuration and operation
  - Bandwidth management techniques to optimize the use of the network
  - Pre-production infrastructure available for Grid and high-speed data transfer developments
- Engineer #2 (based at CERN)
  - Supervises the day-to-day operation of the network and coordinates pre-production activities
  - Design, engineering and installation
  - Active member of the LHCOPN working group, the Internet2 HENP working group and the ICFA/SCIC group

Network Team (2)

- Engineer #3 (based at Caltech or CERN)
  - Help with daily operation, installations and upgrades
  - Study, evaluate and help implement reservation, provisioning and scheduling mechanisms to take advantage of circuit-switched services
- Engineer #4 (based at Caltech)
  - Daily US LHCNet operation; emphasis on routing and peering issues in the US
  - Specialist in end-system configuration and tuning; advice and recommendations on system configurations
  - Considerable experience in the installation, configuration and operation of optical multiplexers and purely photonic switches

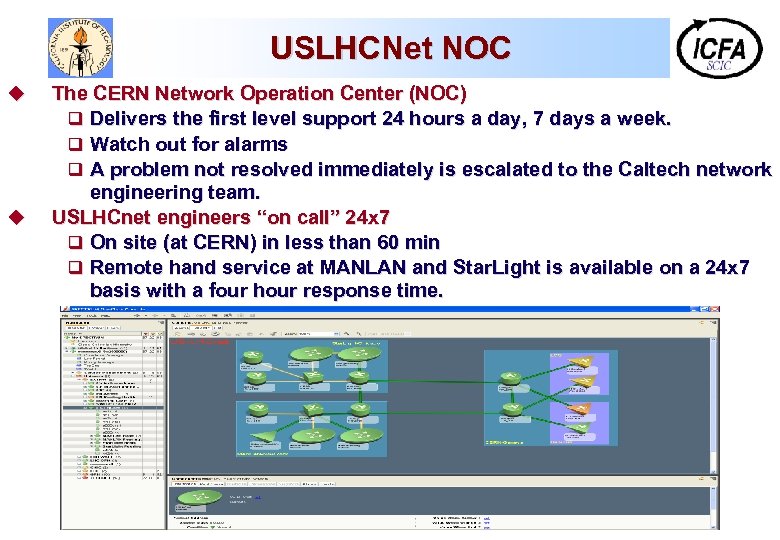

USLHCNet NOC

- The CERN Network Operation Center (NOC)
  - Delivers first-level support 24 hours a day, 7 days a week
  - Watches for alarms
  - A problem not resolved immediately is escalated to the Caltech network engineering team
- USLHCnet engineers "on call" 24 x 7
  - On site (at CERN) in less than 60 min
  - Remote hands service at MANLAN and StarLight is available on a 24 x 7 basis with a four-hour response time

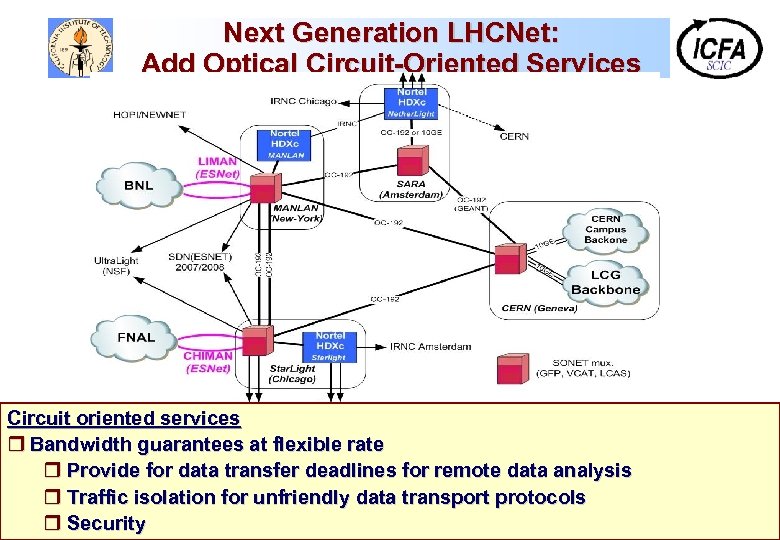

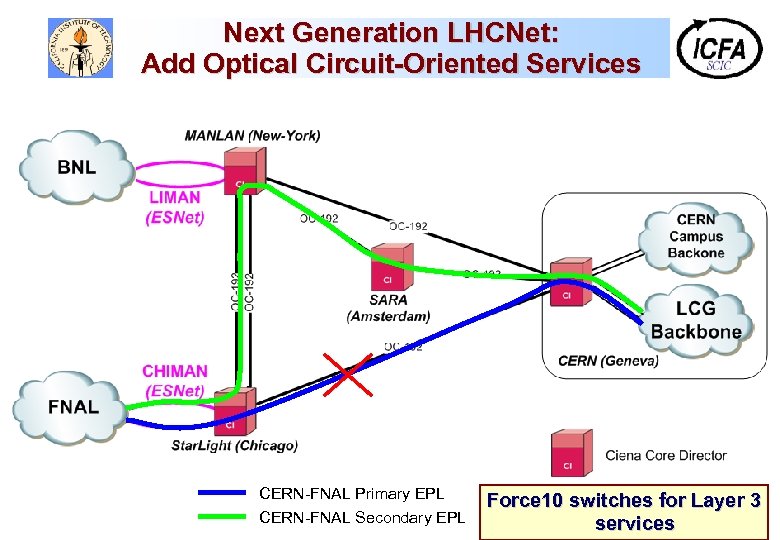

Next Generation LHCNet: Add Optical Circuit-Oriented Services

Based on CIENA "Core Director" optical multiplexers

- Circuit-oriented services
  - Bandwidth guarantees at flexible rate
  - Provide for data transfer deadlines for remote data analysis
  - Robust fallback at the optical layer
  - Traffic isolation for unfriendly data transport protocols
  - Sophisticated standards-based software: VCAT/LCAS
  - Security
  - Guaranteed Bandwidth Ethernet Private Line (EPL)

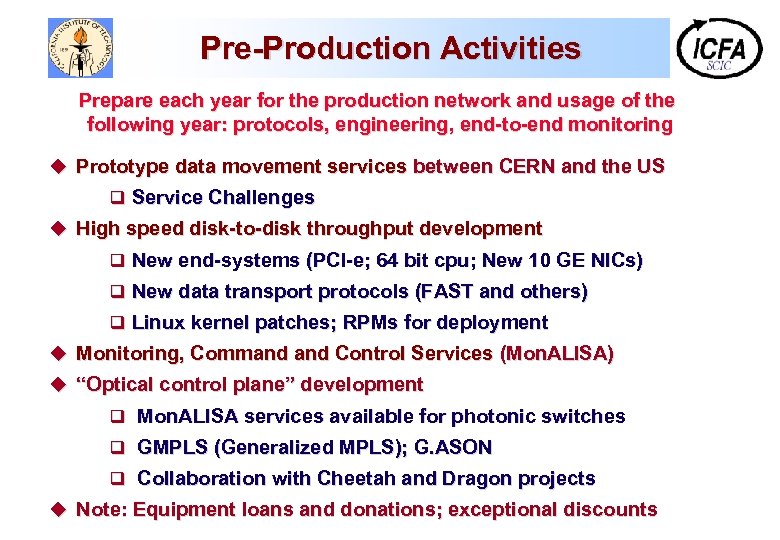

Pre-Production Activities

Prepare each year for the production network and usage of the following year: protocols, engineering, end-to-end monitoring

- Prototype data movement services between CERN and the US
  - Service Challenges
- High-speed disk-to-disk throughput development
  - New end-systems (PCI-e; 64-bit CPU; new 10 GE NICs)
  - New data transport protocols (FAST and others)
  - Linux kernel patches; RPMs for deployment
- Monitoring, Command and Control Services (MonALISA)
- "Optical control plane" development
  - MonALISA services available for photonic switches
  - GMPLS (Generalized MPLS); G.ASON
  - Collaboration with the Cheetah and Dragon projects
- Note: equipment loans and donations; exceptional discounts

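Much of the end-system and kernel tuning for high-speed transfers comes down to letting TCP keep a window at least as large as the bandwidth-delay product (BDP) in flight. A minimal sketch; the 120 ms round-trip time is an illustrative value, not a measured LHCNet figure:

```python
import math


def bdp_bytes(rate_gbps: float, rtt_ms: float) -> int:
    """Bandwidth-delay product: bytes in flight needed to keep the path full."""
    return math.ceil(rate_gbps * 1e9 * rtt_ms / 1e3 / 8)


def max_throughput_gbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on single-stream TCP throughput for a given window."""
    return window_bytes * 8 / (rtt_ms / 1e3) / 1e9


if __name__ == "__main__":
    rtt = 120.0  # ms, illustrative transatlantic round-trip time
    print(f"window needed for 10 Gbps: {bdp_bytes(10, rtt) / 2**20:.0f} MiB")
    print(f"throughput with a 4 MiB window: {max_throughput_gbps(4 * 2**20, rtt):.2f} Gbps")
```

On such a path a window of a few MiB caps a single stream well below 1 Gbps, which is why window scaling and larger socket buffers (on Linux, sysctls such as `net.ipv4.tcp_rmem` and `net.ipv4.tcp_wmem`) matter alongside faster NICs and buses.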

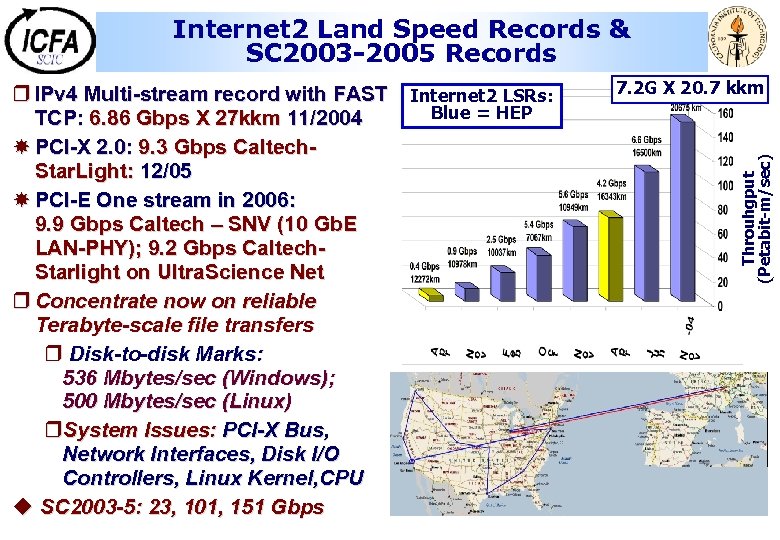

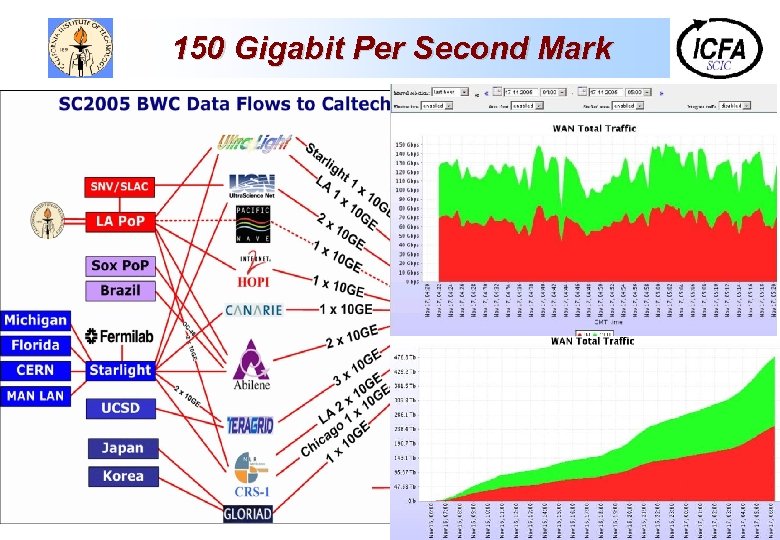

Internet2 Land Speed Records & SC2003-2005 Records

[Chart: Internet2 LSRs, throughput × distance (Petabit-m/sec); blue = HEP. Annotations: 7.2 Gbps X 20.7 kkm; IPv4 multi-stream record with FAST; TCP: 6.86 Gbps X 27 kkm (11/2004).]

- PCI-X 2.0: 9.3 Gbps Caltech-StarLight (12/05)
- PCI-E, one stream, in 2006: 9.9 Gbps Caltech-SNV (10 GbE LAN-PHY); 9.2 Gbps Caltech-StarLight on UltraScience Net
- Concentrate now on reliable Terabyte-scale file transfers
  - Disk-to-disk marks: 536 Mbytes/sec (Windows); 500 Mbytes/sec (Linux)
  - System issues: PCI-X bus, network interfaces, disk I/O controllers, Linux kernel, CPU
- SC2003-5: 23, 101, 151 Gbps

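The Land Speed Record metric multiplies throughput by terrestrial path length. A quick check of the two records annotated on the chart, assuming 1 kkm = 1,000 km:

```python
def lsr_petabit_m_per_s(gbps: float, kkm: float) -> float:
    """Throughput x distance in Petabit-metres per second."""
    bits_per_s = gbps * 1e9
    metres = kkm * 1e6  # 1 kkm = 1,000 km = 1e6 m
    return bits_per_s * metres / 1e15


if __name__ == "__main__":
    for gbps, kkm in [(7.2, 20.7), (6.86, 27.0)]:
        print(f"{gbps} Gbps x {kkm} kkm = {lsr_petabit_m_per_s(gbps, kkm):.1f} Pb-m/s")
```

This gives about 149 and 185 Petabit-m/s respectively; the metric rewards distance, so a slower stream over a longer path can set the higher mark.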

Milestones: 2006-2007

- November 2006: Provisioning of new transatlantic circuits
- End 2006: Evaluation of CIENA platforms
  - Try-and-buy agreement
- Spring 2007: Deployment of next-generation US LHCNet
  - Transition to a new circuit-oriented backbone, based on optical multiplexers
  - Maintain full switched and routed IP service for a controlled portion of the bandwidth
- Summer 2007: Start of LHC operations

Primary Milestones for 2007-2010

- Provide a robust network service without service interruptions, through
  - Physical diversity of the primary links
  - Automated fallback at the optical layer
  - Mutual backup with other networks (ESnet, IRNC, CANARIE, SURFNet etc.)
- Ramp up the bandwidth, supporting an increasing number of 1-10 Terabyte-scale flows
- Scale up and increase the functionality of the network management services provided
- Gain experience on policy-based network resource management, together with FNAL, BNL, and the US Tier 2 organizations
- Integrate with the security (AAA) infrastructures of ESnet and the LHC OPN

Additional Technical Milestones for 2008-2010

Targeted at large-scale, resilient operation with a relatively small network engineering team

- 2008: Circuit-oriented services
  - Bandwidth provisioning automated (for example, through the use of MonALISA services working with the CIENAs)
  - Channels assigned to authenticated, authorized sites and/or user groups
  - Based on a policy-driven network-management services infrastructure, currently under development
- 2008-2010: The Network as a Grid resource
  - Extend advanced planning and optimization into the networking and data-access layers
  - Provide interfaces and functionality allowing physics applications to interact with the networking resources

Conclusion

- US LHCNet: an extremely reliable, cost-effective, high-capacity network
- A 20+ year track record
- High-speed interconnections with the major R&E networks and US T1 centers
- Taking advantage of rapidly advancing network technologies to meet the needs of the LHC physics program at moderate cost
- Leading-edge R&D projects as required, to build the next-generation US LHCNet

Additional Slides

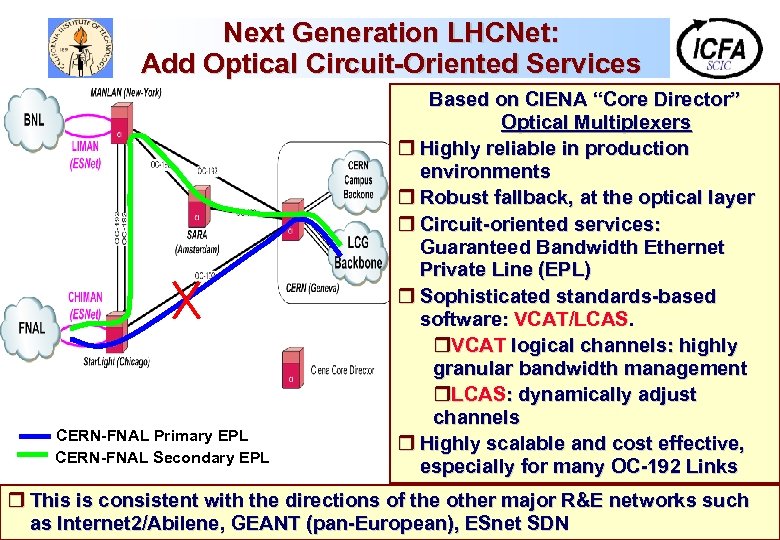

Next Generation LHCNet: Add Optical Circuit-Oriented Services

[Diagram: CERN-FNAL primary EPL and CERN-FNAL secondary EPL.]

Based on CIENA "Core Director" optical multiplexers

- Highly reliable in production environments
- Robust fallback at the optical layer
- Circuit-oriented services: Guaranteed Bandwidth Ethernet Private Line (EPL)
- Sophisticated standards-based software: VCAT/LCAS
  - VCAT logical channels: highly granular bandwidth management
  - LCAS: dynamically adjust channels
- Highly scalable and cost-effective, especially for many OC-192 links
- This is consistent with the directions of the other major R&E networks such as Internet2/Abilene, GEANT (pan-European) and ESnet SDN

SC2005: Caltech and FNAL/SLAC Booths: High Speed TeraByte Transfers for Physics

- We previewed the global-scale data analysis of the LHC era, using a realistic mixture of streams:
  - Organized transfer of multi-TB event datasets, plus
  - Numerous smaller flows of physics data that absorb the remaining capacity
- We used twenty-two [*] 10 Gbps waves to carry bidirectional traffic between Fermilab, Caltech, SLAC, BNL, CERN and other partner Grid sites including: Michigan, Florida, Manchester, Rio de Janeiro (UERJ) and Sao Paulo (UNESP) in Brazil, Korea (Kyungpook), and Japan (KEK)
- Monitored by Caltech's MonALISA global monitoring system
- Bandwidth challenge award:
  - Official mark at 131 Gbps, with peaks at 151 Gbps
  - 475 TB of physics data transferred in a day; sustained rate > 1 PB/day

[*] 15 10 Gbps wavelengths at the Caltech/CACR Booth and 7 10 Gbps wavelengths at the FNAL/SLAC Booth

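To relate the daily volumes to link rates, a small conversion sketch, assuming decimal units (1 TB = 10^12 bytes, 1 PB = 10^15 bytes) and a constant average rate over 24 hours:

```python
SECONDS_PER_DAY = 86_400


def tb_per_day_to_gbps(tb_per_day: float) -> float:
    """Average rate in Gbps needed to move tb_per_day terabytes in 24 hours."""
    return tb_per_day * 1e12 * 8 / SECONDS_PER_DAY / 1e9


def gbps_to_tb_per_day(gbps: float) -> float:
    """Terabytes moved in 24 hours at a constant rate of gbps."""
    return gbps * 1e9 * SECONDS_PER_DAY / 8 / 1e12


if __name__ == "__main__":
    print(f"475 TB/day = {tb_per_day_to_gbps(475):.1f} Gbps average")
    print(f"1 PB/day   = {tb_per_day_to_gbps(1000):.1f} Gbps average")
    print(f"131 Gbps   = {gbps_to_tb_per_day(131):.0f} TB/day")
```

A sustained rate above 1 PB/day thus corresponds to an average above roughly 92.6 Gbps, below the 131 Gbps official mark.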

150 Gigabit Per Second Mark

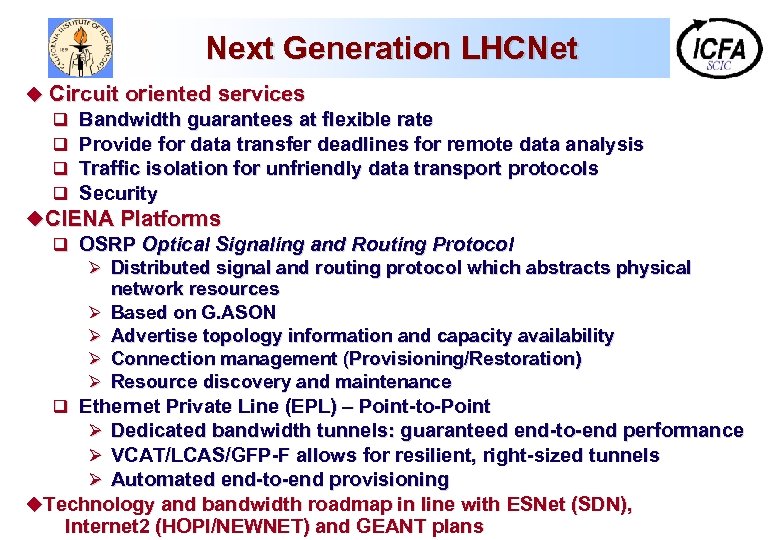

Next Generation LHCNet

- Circuit-oriented services
  - Bandwidth guarantees at flexible rate
  - Provide for data transfer deadlines for remote data analysis
  - Traffic isolation for unfriendly data transport protocols
  - Security
- CIENA platforms
  - OSRP (Optical Signaling and Routing Protocol)
    - Distributed signaling and routing protocol which abstracts physical network resources
    - Based on G.ASON
    - Advertises topology information and capacity availability
    - Connection management (provisioning/restoration)
    - Resource discovery and maintenance
  - Ethernet Private Line (EPL): point-to-point
    - Dedicated bandwidth tunnels: guaranteed end-to-end performance
    - VCAT/LCAS/GFP-F allows for resilient, right-sized tunnels
    - Automated end-to-end provisioning
- Technology and bandwidth roadmap in line with ESnet (SDN), Internet2 (HOPI/NEWNET) and GEANT plans

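VCAT builds a right-sized tunnel from a group of SONET/SDH containers, and LCAS lets members be added or removed without tearing the tunnel down. A sketch of the sizing arithmetic, assuming the standard payload capacities of STS-1 (48.384 Mbps) and STS-3c (149.76 Mbps) members and ignoring GFP-F encapsulation overhead; the helper names are hypothetical and not part of any CIENA interface:

```python
import math

# Payload capacity per VCAT member, in Mbps (SONET/SDH standard values).
MEMBER_PAYLOAD_MBPS = {"STS-1": 48.384, "STS-3c": 149.76}


def vcat_members(rate_mbps: float, member: str) -> int:
    """Smallest number of members whose combined payload carries rate_mbps."""
    return math.ceil(rate_mbps / MEMBER_PAYLOAD_MBPS[member])


def group_capacity(members: int, member: str) -> float:
    """Payload capacity (Mbps) of a VCAT group with the given number of members."""
    return members * MEMBER_PAYLOAD_MBPS[member]


if __name__ == "__main__":
    n = vcat_members(1000, "STS-3c")  # Gigabit Ethernet
    print(f"GbE -> STS-3c-{n}v, {group_capacity(n, 'STS-3c'):.2f} Mbps")
    # With LCAS, a failed member can be removed and the group keeps running
    # at reduced capacity instead of going down.
    print(f"one member lost -> {group_capacity(n - 1, 'STS-3c'):.2f} Mbps")
```

The granularity is the point: a 1 Gbps EPL occupies about 1.05 Gbps of an OC-192 rather than a whole wavelength, and losing one member degrades the tunnel instead of dropping it.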

Next Generation LHCNet: Add Optical Circuit-Oriented Services

[Diagram: CERN-FNAL primary EPL and CERN-FNAL secondary EPL; Force 10 switches for Layer 3 services.]

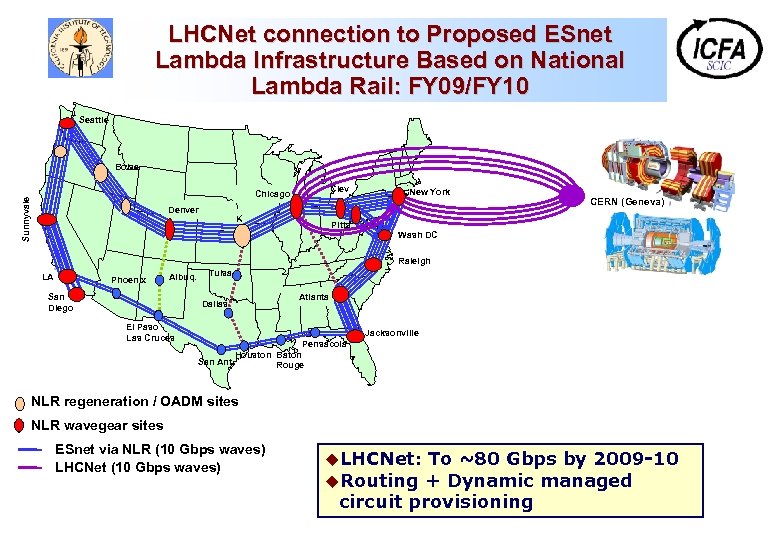

LHCNet connection to Proposed ESnet Lambda Infrastructure Based on National Lambda Rail: FY09/FY10

[Map: National Lambda Rail footprint across the US, from Seattle, Sunnyvale, LA and San Diego through Denver, Kansas City, Dallas and Houston to Chicago, Cleveland, Pittsburgh, New York, Washington DC, Raleigh, Atlanta and Jacksonville, showing NLR regeneration/OADM sites, NLR wavegear sites, ESnet via NLR (10 Gbps waves), and LHCNet (10 Gbps waves) to CERN (Geneva).]

- LHCNet: to ~80 Gbps by 2009-10
- Routing + dynamic managed circuit provisioning

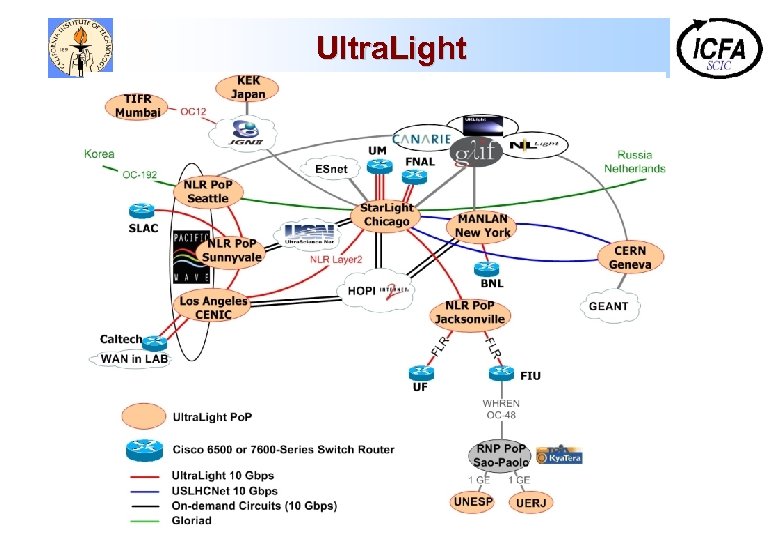

UltraLight

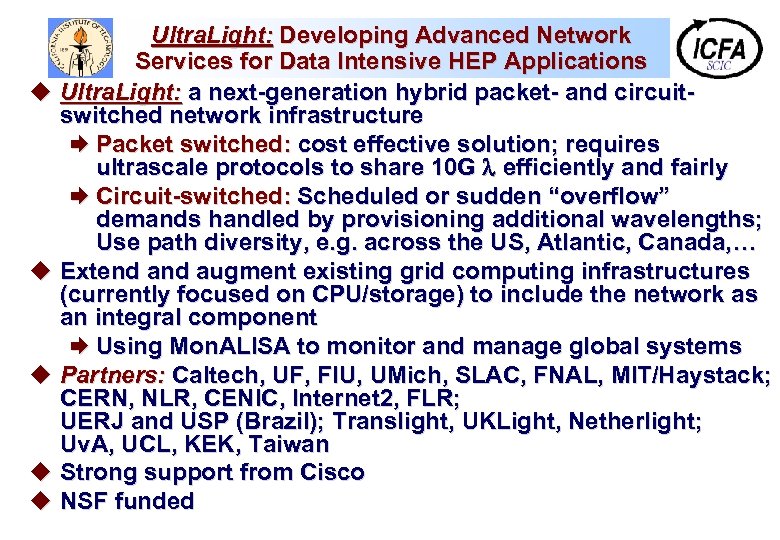

UltraLight: Developing Advanced Network Services for Data Intensive HEP Applications

- UltraLight: a next-generation hybrid packet- and circuit-switched network infrastructure
  - Packet switched: cost-effective solution; requires ultrascale protocols to share 10G efficiently and fairly
  - Circuit switched: scheduled or sudden "overflow" demands handled by provisioning additional wavelengths; use path diversity, e.g. across the US, Atlantic, Canada, …
- Extend and augment existing grid computing infrastructures (currently focused on CPU/storage) to include the network as an integral component
  - Using MonALISA to monitor and manage global systems
- Partners: Caltech, UF, FIU, UMich, SLAC, FNAL, MIT/Haystack; CERN, NLR, CENIC, Internet2, FLR; UERJ and USP (Brazil); Translight, UKLight, Netherlight; UvA, UCL, KEK, Taiwan
- Strong support from Cisco
- NSF funded

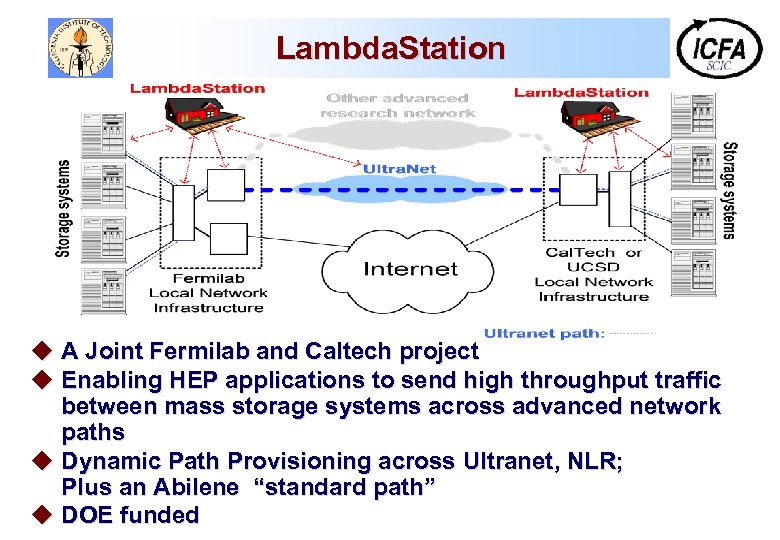

Lambda Station

- A joint Fermilab and Caltech project
- Enabling HEP applications to send high-throughput traffic between mass storage systems across advanced network paths
- Dynamic path provisioning across Ultranet, NLR; plus an Abilene "standard path"
- DOE funded

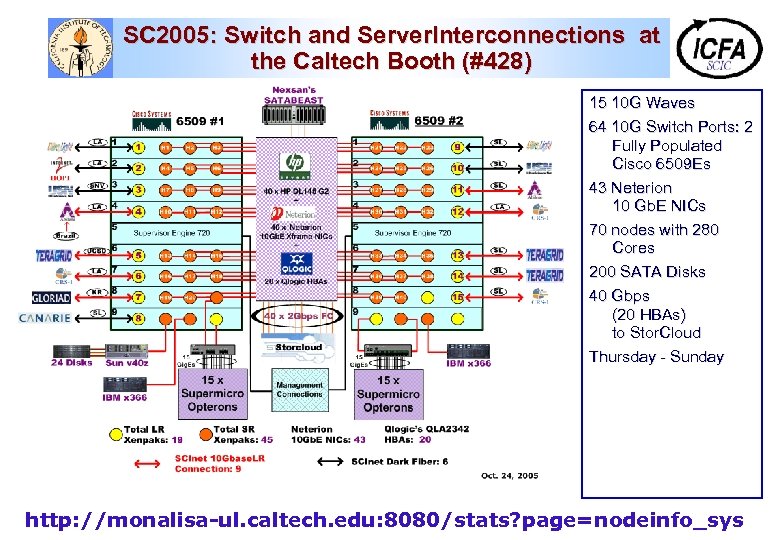

SC2005: Switch and Server Interconnections at the Caltech Booth (#428)

- 15 10G waves
- 64 10G switch ports: 2 fully populated Cisco 6509Es
- 43 Neterion 10 GbE NICs
- 70 nodes with 280 cores
- 200 SATA disks
- 40 Gbps (20 HBAs) to StorCloud
- Thursday - Sunday
- http://monalisa-ul.caltech.edu:8080/stats?page=nodeinfo_sys

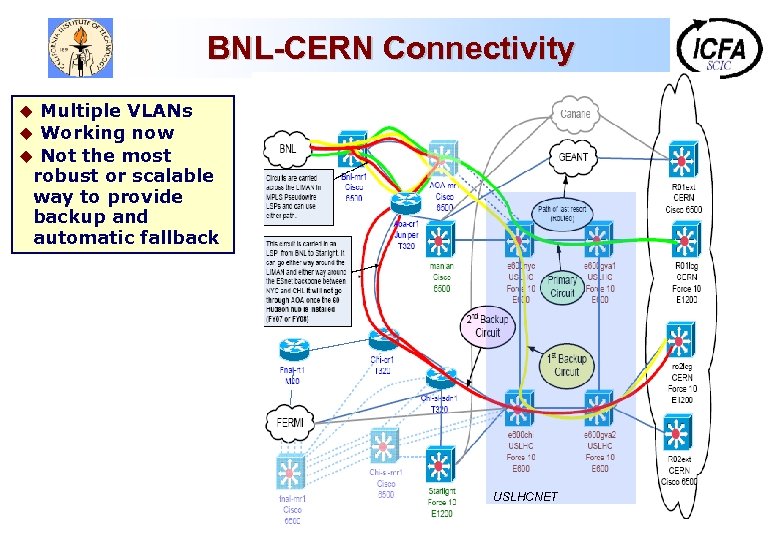

BNL-CERN Connectivity

[Diagram: multiple VLANs between BNL and CERN over USLHCNET.]

- Working now
- Not the most robust or scalable way to provide backup and automatic fallback

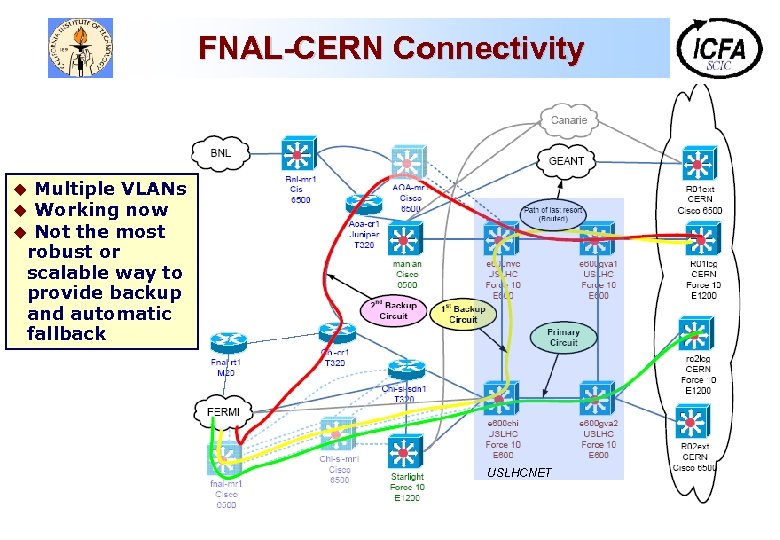

FNAL-CERN Connectivity

[Diagram: multiple VLANs between FNAL and CERN over USLHCNET.]

- Working now
- Not the most robust or scalable way to provide backup and automatic fallback

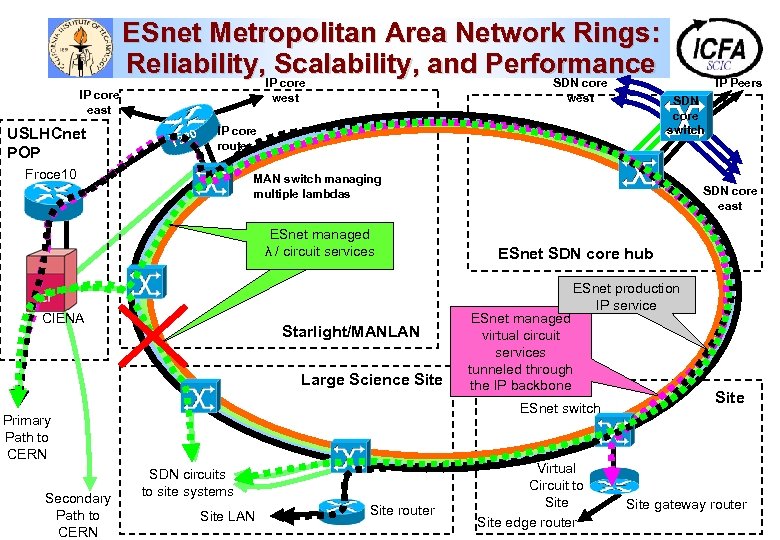

ESnet Metropolitan Area Network Rings: Reliability, Scalability, and Performance

[Diagram: a large science site (site LAN, site router, site edge router, site gateway router) attached to an ESnet MAN ring whose switch manages multiple lambdas, linking to the ESnet IP core and SDN core (east and west hubs, T320 routers) and to the USLHCnet PoP at StarLight/MANLAN (CIENA, Force 10), which provides the primary and secondary paths to CERN. Services shown: ESnet production IP service; ESnet managed λ/circuit services; ESnet managed virtual circuit services tunneled through the IP backbone; SDN circuits to site systems; virtual circuit to site; IP peers.]

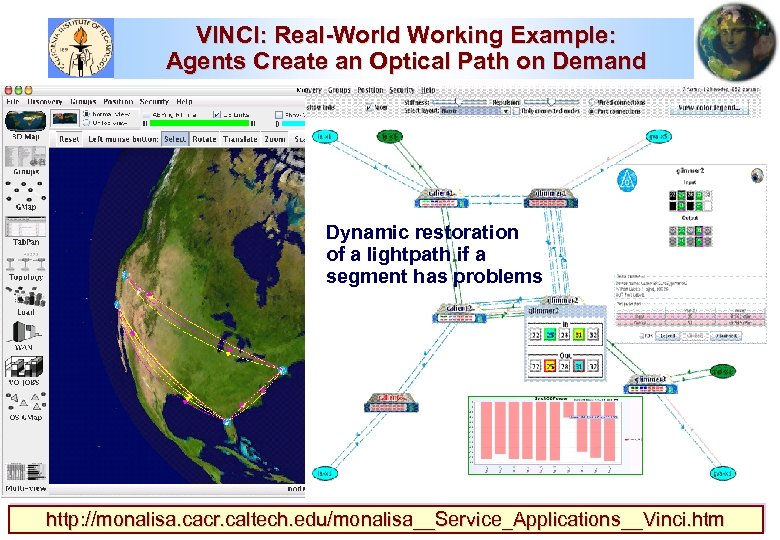

VINCI: Real-World Working Example: Agents Create an Optical Path on Demand

- Dynamic restoration of a lightpath if a segment has problems
- http://monalisa.cacr.caltech.edu/monalisa__Service_Applications__Vinci.htm

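A toy illustration of restoring a lightpath when one segment fails: recompute a path over the remaining segments. This is a generic breadth-first (fewest-hops) search over a hypothetical four-node topology, not the VINCI/MonALISA agent implementation, whose internals are not described here:

```python
from collections import deque

# Hypothetical optical segments between switching nodes (not a real topology).
SEGMENTS = [
    ("A", "B"), ("B", "D"),   # primary route A-B-D
    ("A", "C"), ("C", "D"),   # alternate route A-C-D
]


def find_path(segments, src, dst):
    """Fewest-hop path from src to dst over undirected segments, or None."""
    adj = {}
    for a, b in segments:
        adj.setdefault(a, []).append(b)
        adj.setdefault(b, []).append(a)
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:  # walk predecessors back to src
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in adj.get(node, []):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None


def restore(segments, failed, src, dst):
    """Recompute the path after removing a failed segment (either direction)."""
    remaining = [s for s in segments if s not in (failed, failed[::-1])]
    return find_path(remaining, src, dst)


if __name__ == "__main__":
    print(find_path(SEGMENTS, "A", "D"))            # ['A', 'B', 'D']
    print(restore(SEGMENTS, ("B", "D"), "A", "D"))  # ['A', 'C', 'D']
```

A production control plane would also weigh capacity, latency and policy when choosing the replacement path; fewest hops is only the simplest criterion.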

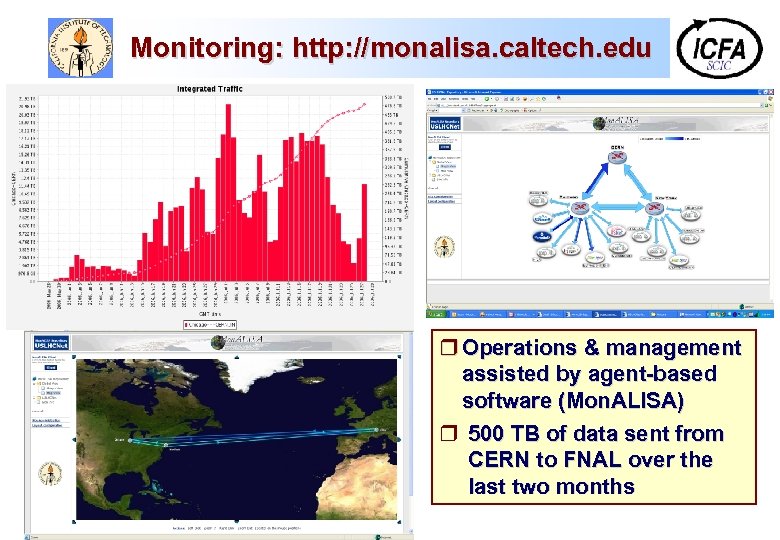

Monitoring: http://monalisa.caltech.edu

- Operations & management assisted by agent-based software (MonALISA)
- 500 TB of data sent from CERN to FNAL over the last two months

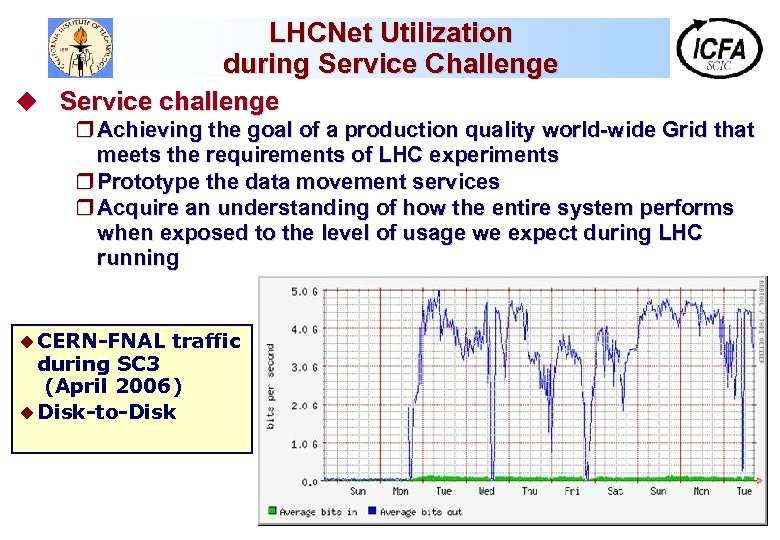

LHCNet Utilization during Service Challenge

- Service challenge
  - Achieving the goal of a production-quality world-wide Grid that meets the requirements of LHC experiments
  - Prototype the data movement services
  - Acquire an understanding of how the entire system performs when exposed to the level of usage we expect during LHC running
- CERN-FNAL traffic during SC3 (April 2006)
- Disk-to-disk

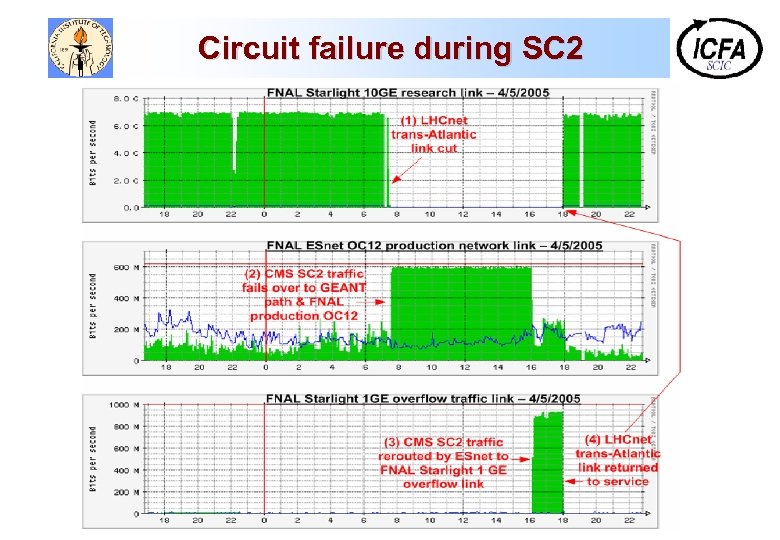

Circuit failure during SC2