- Number of slides: 71

Understanding

- I hear and I forget
- I see and I remember
- I do and I understand

Attributed to Confucius, ~500 BCE

- How could a mass of chemical cells produce language and thought?
- Will computers think and speak?
- How much can we know about our own experience?
- How do we learn new concepts?
- Does our language determine how we think?
- Is language innate?
- How do children learn grammar?
- How did languages evolve?
- Why do we experience everything the way that we do?

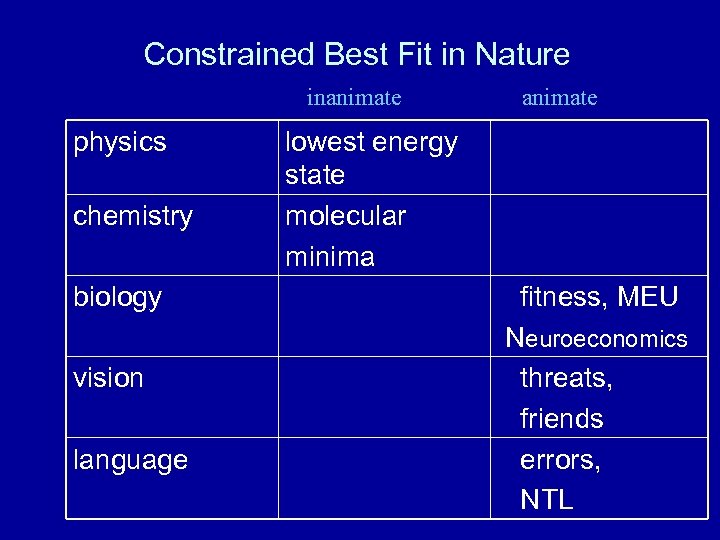

Constrained Best Fit in Nature

| | Domain | Best fit |
| --- | --- | --- |
| inanimate | physics | lowest energy state |
| inanimate | chemistry | molecular minima |
| animate | biology | fitness, MEU Neuroeconomics |
| animate | vision | threats, friends |
| animate | language | errors, NTL |

Embodiment

Of all of these fields, the learning of languages would be the most impressive, since it is the most human of these activities. This field, however, seems to depend rather too much on the sense organs and locomotion to be feasible.

Alan Turing (Intelligent Machinery, 1948)

Pre-Natal Tuning: Internally generated tuning signals

- But in the womb, what provides the feedback to establish which neural circuits are the right ones to strengthen?
  - This is not a problem for motor circuits: the feedback and control networks for basic physical actions can be refined as the infant moves its limbs, and indeed this is what happens.
  - But there is no vision in the womb. Recent research shows that systematic moving patterns of activity are spontaneously generated prenatally in the retina. A predictable pattern, changing over time, provides excellent training data for tuning the connections between visual maps.
- The pre-natal development of the auditory system is also interesting and is directly relevant to our story.
  - Research indicates that infants, immediately after birth, preferentially recognize the sounds of their native language over others. The assumption is that similar activity-dependent tuning mechanisms work with speech signals perceived in the womb.

Post-natal environmental tuning

- The pre-natal tuning of neural connections using simulated activity can work quite well:
  - A newborn colt or calf is essentially functional at birth.
  - This is necessary because the herd is always on the move.
  - Many animals, including people, do much of their development after birth, and activity-dependent mechanisms can exploit experience in the real world.
- In fact, such experience is absolutely necessary for normal development.
- As we saw, early experiments with kittens showed that there are fairly short critical periods during which animals deprived of visual input could lose forever their ability to see motion, vertical lines, etc.
  - For a similar reason, if a human child has one weak eye, the doctor will sometimes place a patch over the stronger one, forcing the weaker eye to gain experience.

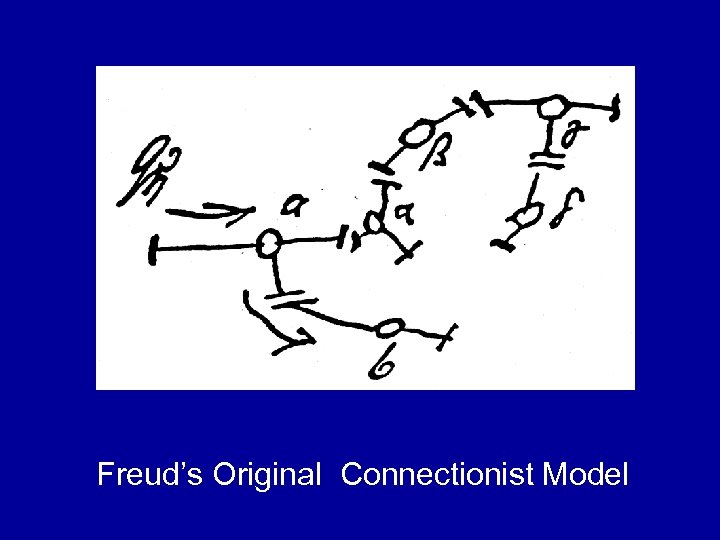

Freud’s Original Connectionist Model

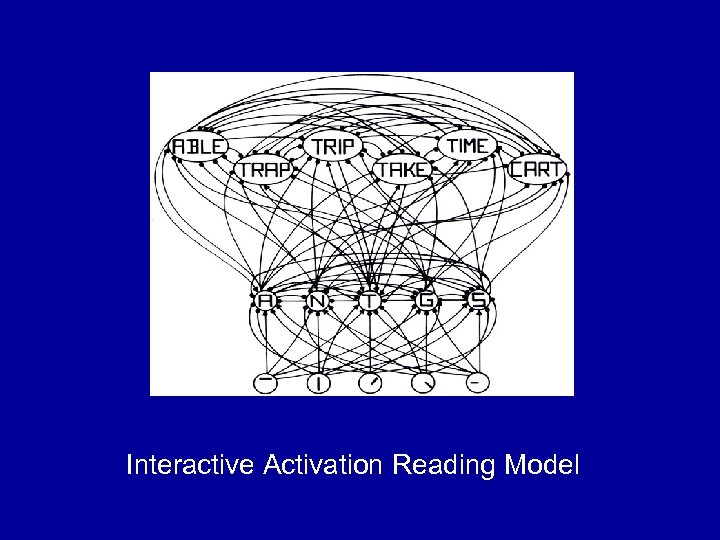

Connectionist Model of Word Recognition (Rumelhart and McClelland)

Interactive Activation Reading Model

Modeling lexical access errors

- Semantic error
- Formal error (i.e. errors related by form)
- Mixed error (semantic + formal)
- Phonological access error

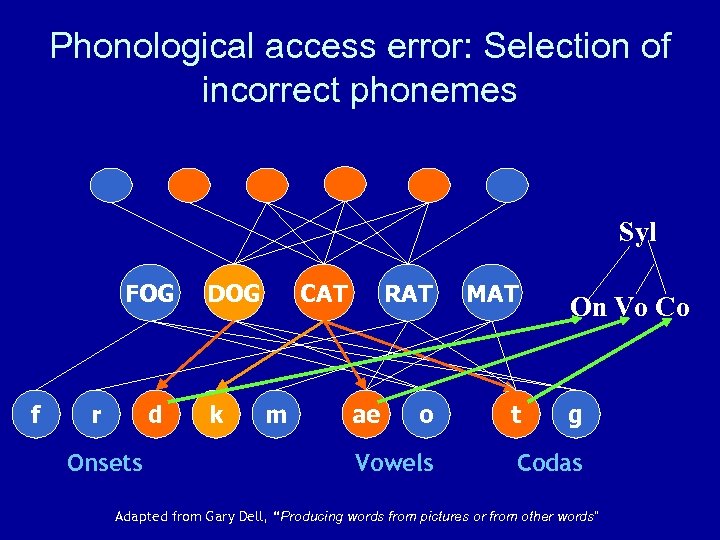

Phonological access error: selection of incorrect phonemes

[Figure: word nodes FOG, DOG, CAT, RAT and MAT linked to onset nodes (f, r, d, k, m), vowel nodes (ae, o) and coda nodes (t, g) through a syllable frame (Syl: On, Vo, Co).]

Adapted from Gary Dell, “Producing words from pictures or from other words”

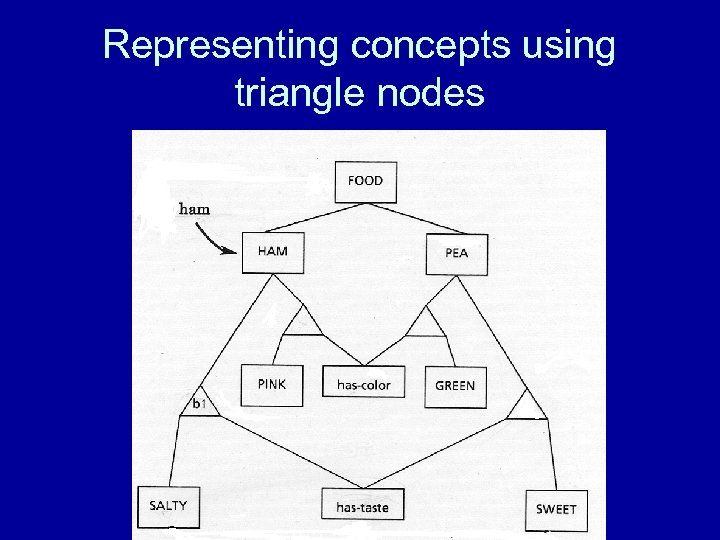

Representing concepts using triangle nodes

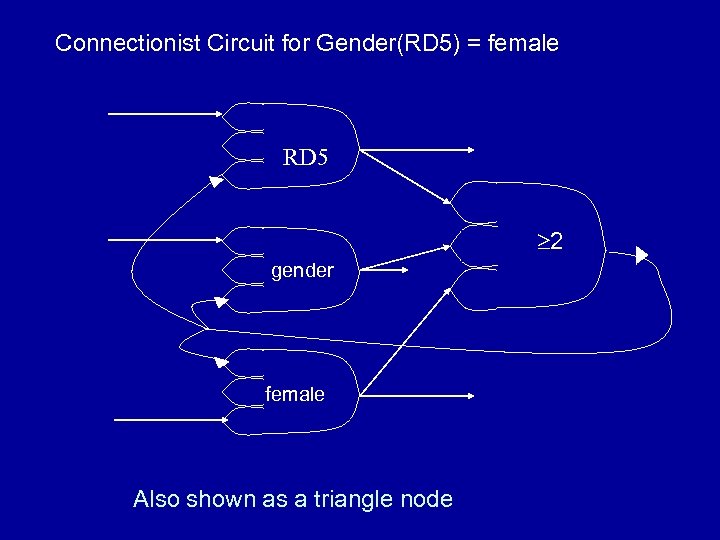

Connectionist Circuit for Gender(RD5) = female

[Figure labels: RD5, gender, female.]

Also shown as a triangle node.

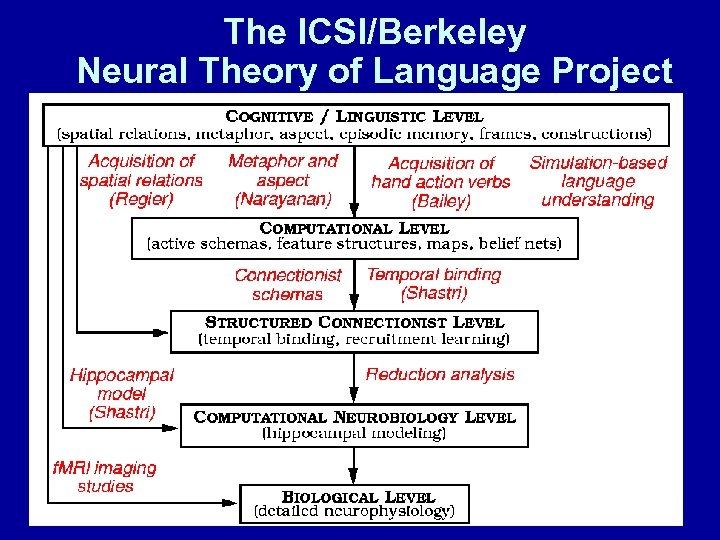

The ICSI/Berkeley Neural Theory of Language Project

The Binding Problem

- Massively Parallel Brain
- Unitary Conscious Experience
- Many Variations and Proposals
- Our focus: The Variable Binding Problem

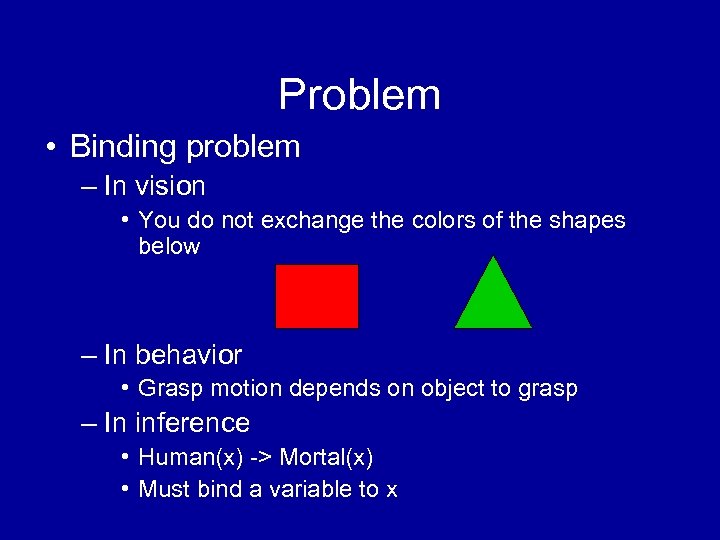

Problem

- Binding problem
  - In vision
    - You do not exchange the colors of the shapes below
  - In behavior
    - Grasp motion depends on object to grasp
  - In inference
    - Human(x) -> Mortal(x)
    - Must bind a variable to x

Automatic Inference

- Inference needed for many tasks
  - Reference resolution
  - General language understanding
  - Planning
- Humans do this quickly and without conscious thought
  - Automatically
  - No real intuition of how we do it

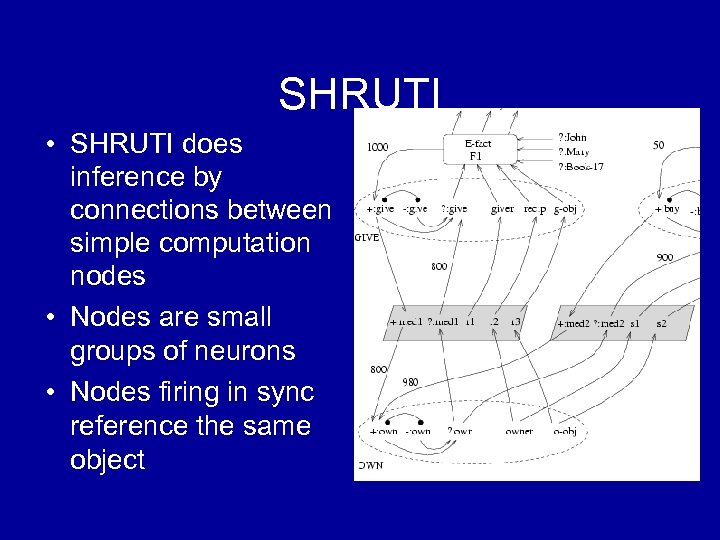

SHRUTI

- SHRUTI does inference by connections between simple computation nodes
- Nodes are small groups of neurons
- Nodes firing in sync reference the same object

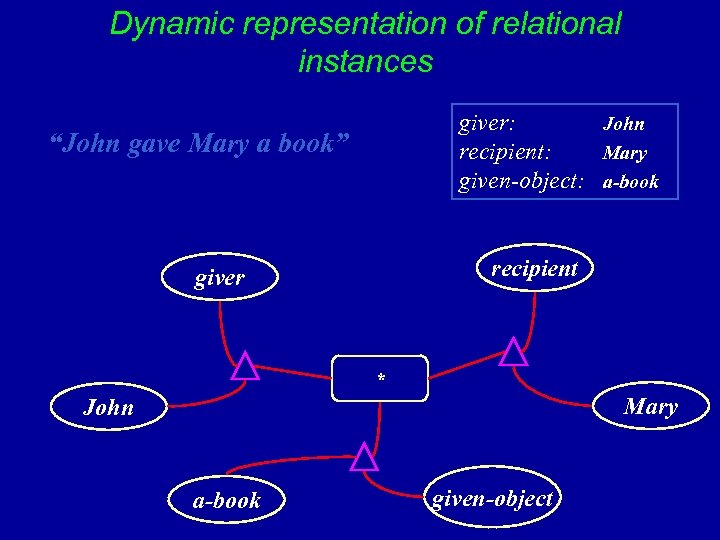

Dynamic representation of relational instances

“John gave Mary a book”

- giver: John
- recipient: Mary
- given-object: a-book

[Figure labels: giver, recipient, given-object; John, Mary, a-book.]
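
The role bindings for “John gave Mary a book” can be illustrated with a small sketch. This is not SHRUTI itself: it only assumes, following the earlier statement that nodes firing in sync reference the same object, that each entity gets its own phase slot in a firing cycle and that a role is bound to whichever entity shares its phase. The function names `assign_phases`, `bind_roles` and `read_bindings` are hypothetical.

```python
def assign_phases(entities):
    """Give each active entity its own phase slot within one firing cycle."""
    return {entity: phase for phase, entity in enumerate(entities)}


def bind_roles(role_fillers, entity_phase):
    """Make each role node fire in the phase of the entity that fills it."""
    return {role: entity_phase[filler] for role, filler in role_fillers.items()}


def read_bindings(role_phase, entity_phase):
    """Recover role -> entity bindings purely from which nodes fire in synchrony."""
    phase_to_entity = {phase: entity for entity, phase in entity_phase.items()}
    return {role: phase_to_entity[phase] for role, phase in role_phase.items()}


# "John gave Mary a book"
entity_phase = assign_phases(["John", "Mary", "a-book"])
role_phase = bind_roles(
    {"giver": "John", "recipient": "Mary", "given-object": "a-book"},
    entity_phase,
)
decoded = read_bindings(role_phase, entity_phase)
print(decoded)
```

Nothing in `role_phase` names an entity directly; the binding is carried only by the shared phase, which is the point of the synchrony idea.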

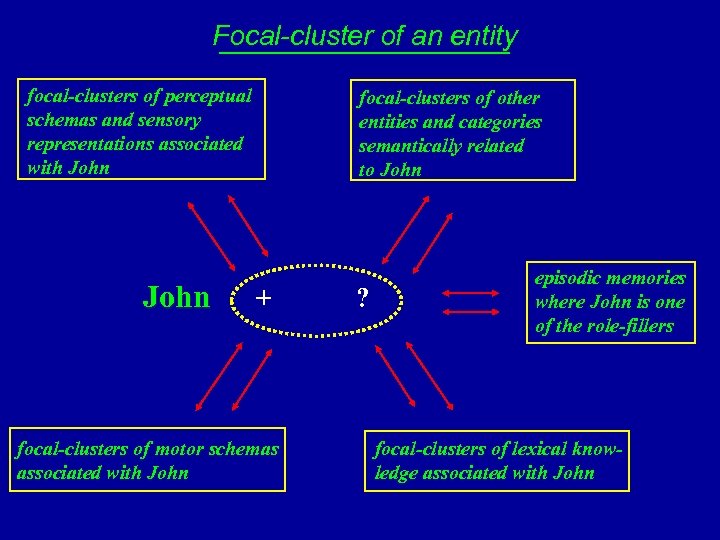

Focal-cluster of an entity

[Figure: the focal-cluster of John (+ and ? nodes), linked to the following.]

- focal-clusters of perceptual schemas and sensory representations associated with John
- focal-clusters of other entities and categories semantically related to John
- focal-clusters of motor schemas associated with John
- episodic memories where John is one of the role-fillers
- focal-clusters of lexical knowledge associated with John

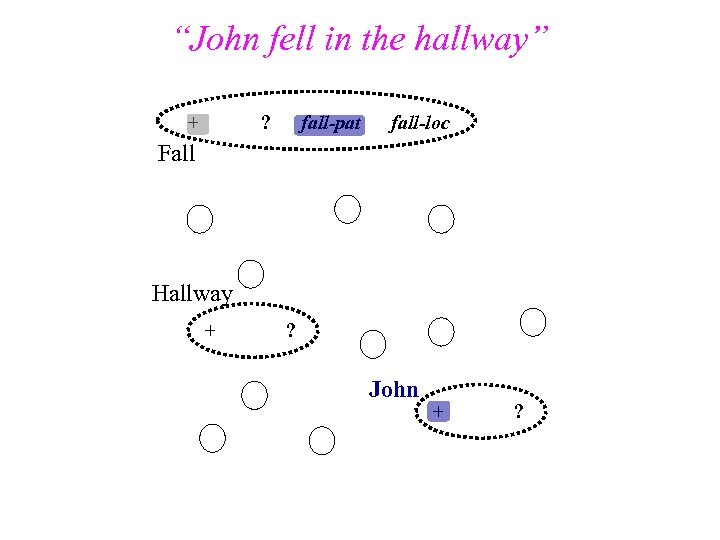

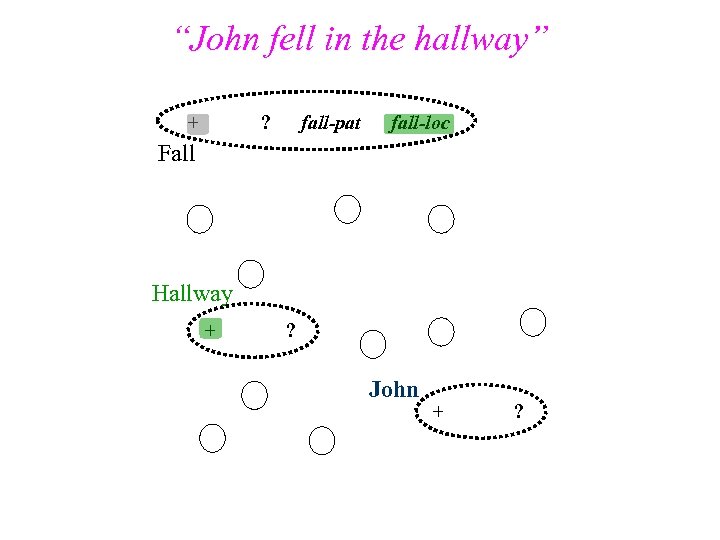

“John fell in the hallway”

[Figure: focal-clusters for Fall (+, -, ?, fall-pat, fall-loc), Hallway (+, ?) and John (+, ?).]

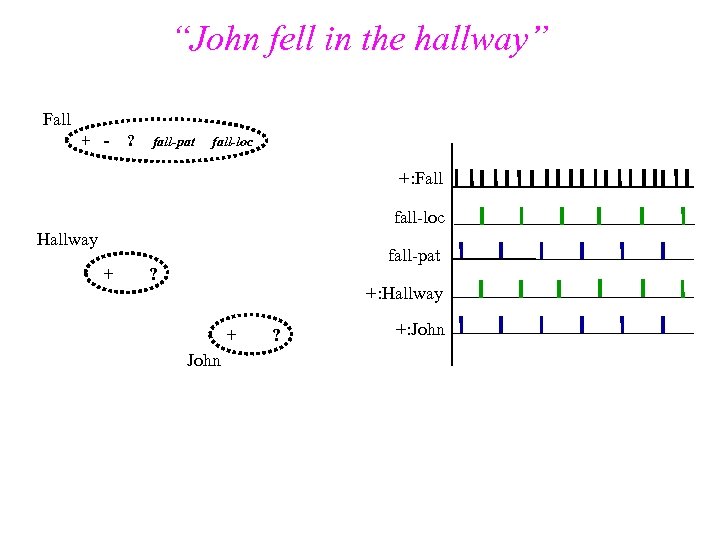

“John fell in the hallway”

[Figure: the Fall, Hallway and John focal-clusters with firing traces for +:Fall, fall-pat, fall-loc, +:Hallway and +:John.]

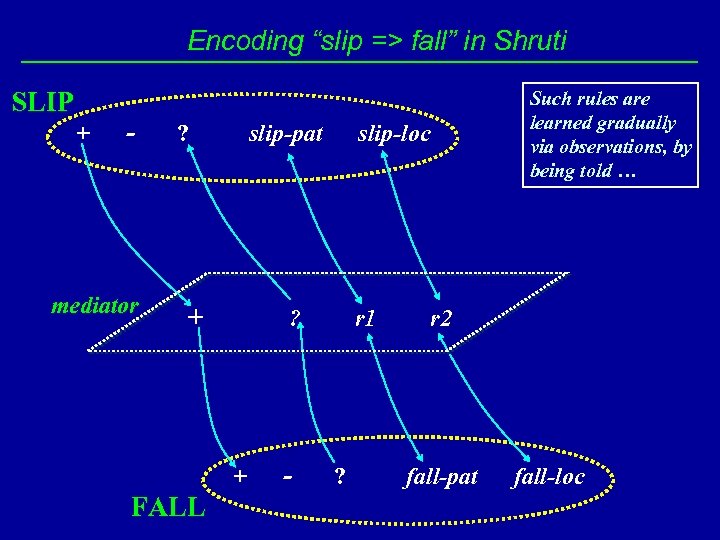

Encoding “slip => fall” in SHRUTI

[Figure: the SLIP focal-cluster (+, -, ?, slip-pat, slip-loc) connected through a mediator (+, ?, r1, r2) to the FALL focal-cluster (+, -, ?, fall-pat, fall-loc).]

Such rules are learned gradually via observations, by being told …

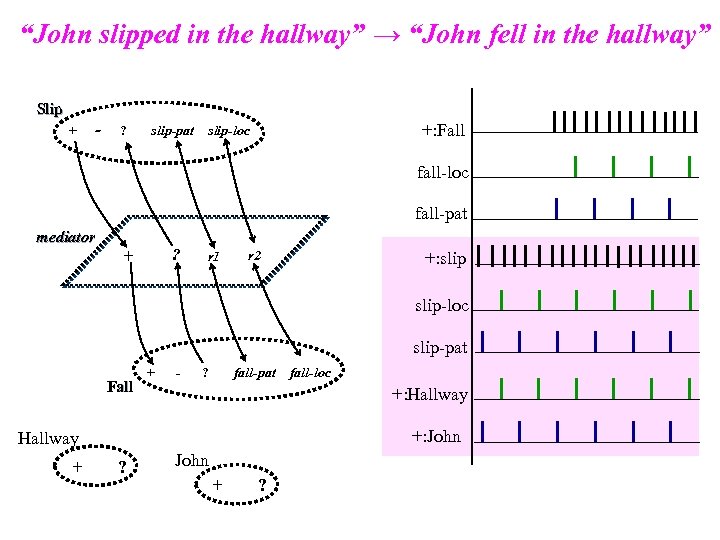

“John slipped in the hallway” → “John fell in the hallway”

[Figure: the Slip and Fall focal-clusters linked by the mediator (r1, r2), together with the Hallway and John focal-clusters and firing traces for +:Fall, +:Hallway, +:John, slip-pat, slip-loc, fall-pat and fall-loc.]
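
The step from “John slipped in the hallway” to “John fell in the hallway” can be sketched as forward rule application that carries role fillers across. This is a deliberately simplified stand-in: SHRUTI propagates bindings through synchronous firing across the mediator, whereas the sketch below uses a plain dictionary lookup. The `RULES` table and the `infer` function are hypothetical, and the loop assumes the rule set has no cycles.

```python
# Hypothetical rule table: antecedent predicate -> (consequent predicate, role map).
RULES = {
    "slip": ("fall", {"slip-pat": "fall-pat", "slip-loc": "fall-loc"}),
}


def infer(predicate, bindings, rules=RULES):
    """Follow rules forward, carrying each role's filler over to the mapped role."""
    derived = []
    while predicate in rules:  # assumes the rules are acyclic
        consequent, role_map = rules[predicate]
        bindings = {
            role_map[role]: filler
            for role, filler in bindings.items()
            if role in role_map
        }
        derived.append((consequent, bindings))
        predicate = consequent
    return derived


facts = infer("slip", {"slip-pat": "John", "slip-loc": "Hallway"})
print(facts)
```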

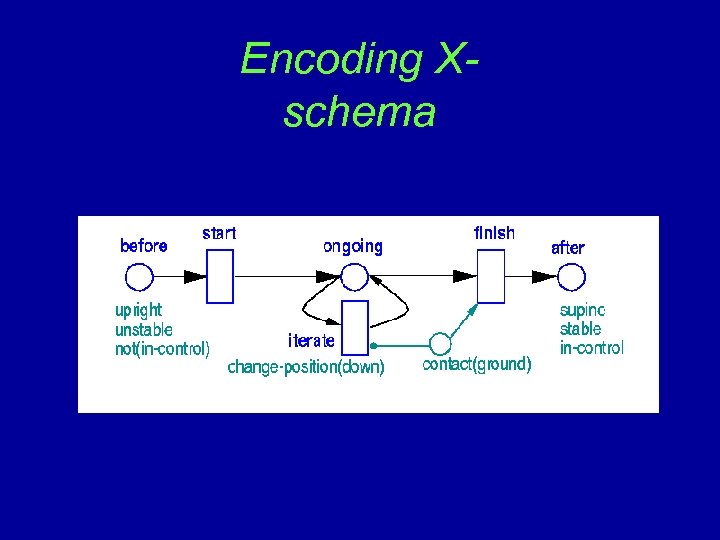

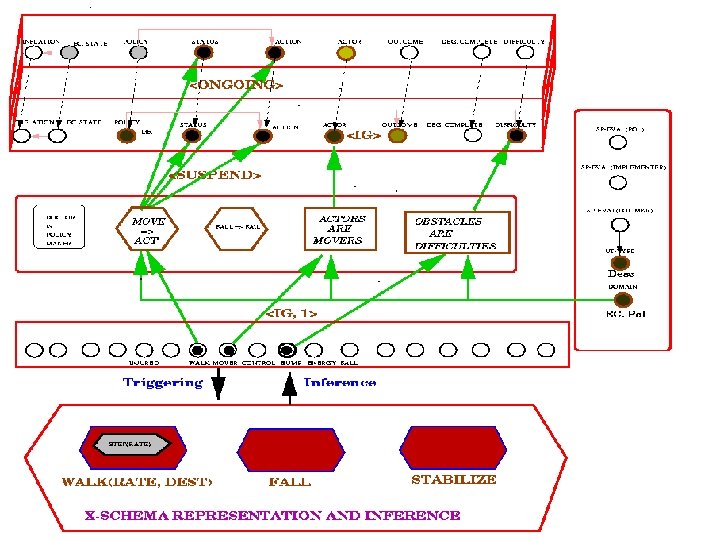

Encoding Xschema

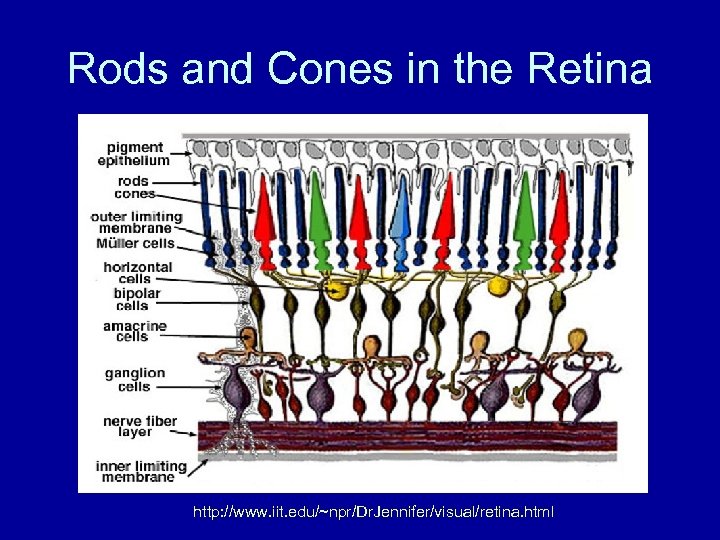

Rods and Cones in the Retina

http://www.iit.edu/~npr/Dr.Jennifer/visual/retina.html

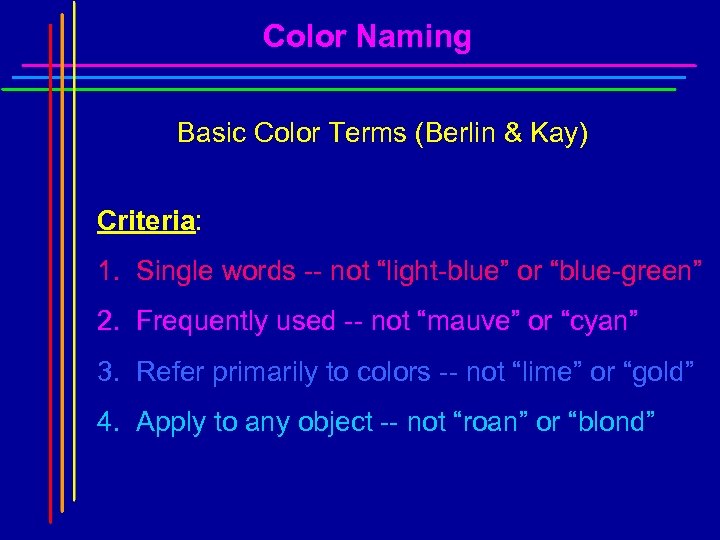

Color Naming

Basic Color Terms (Berlin & Kay)

Criteria:

1. Single words -- not “light-blue” or “blue-green”
2. Frequently used -- not “mauve” or “cyan”
3. Refer primarily to colors -- not “lime” or “gold”
4. Apply to any object -- not “roan” or “blond”

© Stephen E. Palmer, 2002

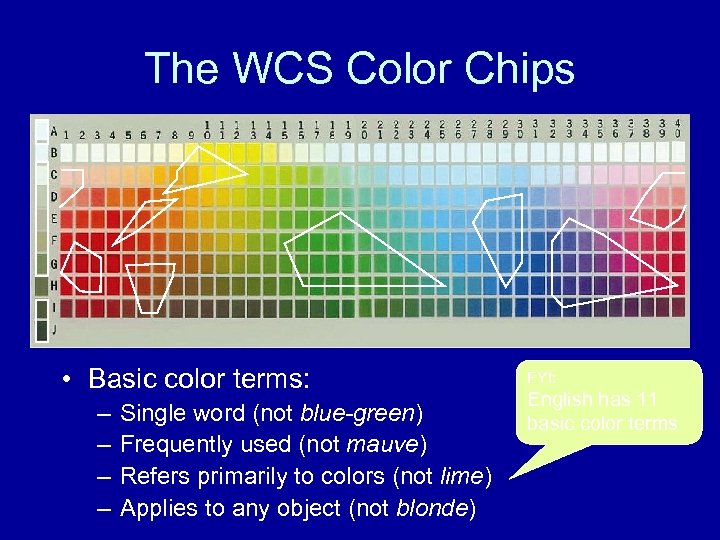

The WCS Color Chips

- Basic color terms:
  - Single word (not blue-green)
  - Frequently used (not mauve)
  - Refers primarily to colors (not lime)
  - Applies to any object (not blonde)

FYI: English has 11 basic color terms

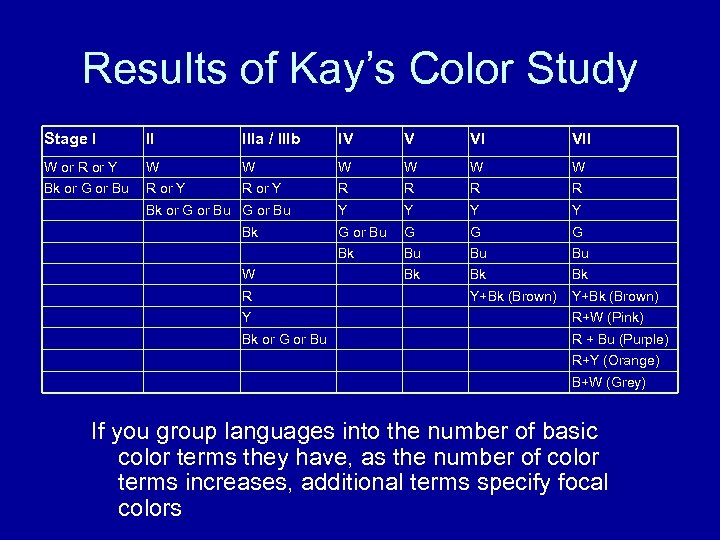

Results of Kay’s Color Study

[Table: stages I, II, IIIa / IIIb, IV, V, VI and VII of basic color term systems. The early stages use composite categories (W or R or Y; Bk or G or Bu; R or Y; G or Bu); later stages have separate W, R, Y, G, Bu and Bk; the last stages add Y+Bk (Brown), R+W (Pink), R+Bu (Purple), R+Y (Orange) and B+W (Grey).]

If you group languages by the number of basic color terms they have, then as the number of color terms increases, the additional terms specify focal colors.

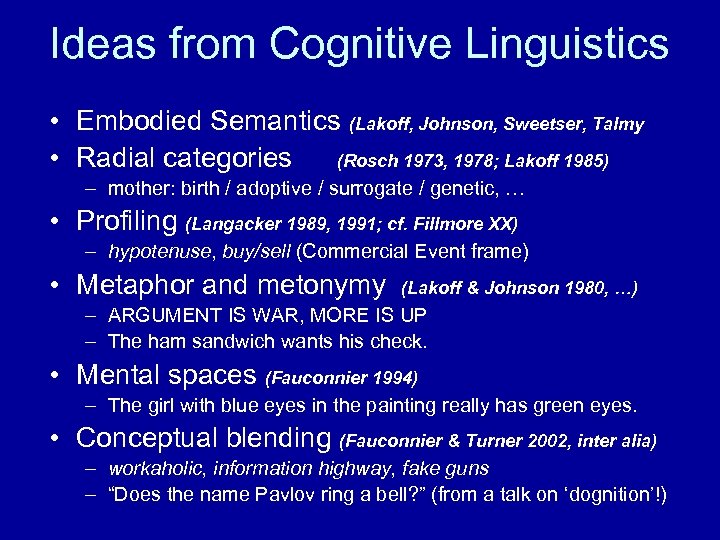

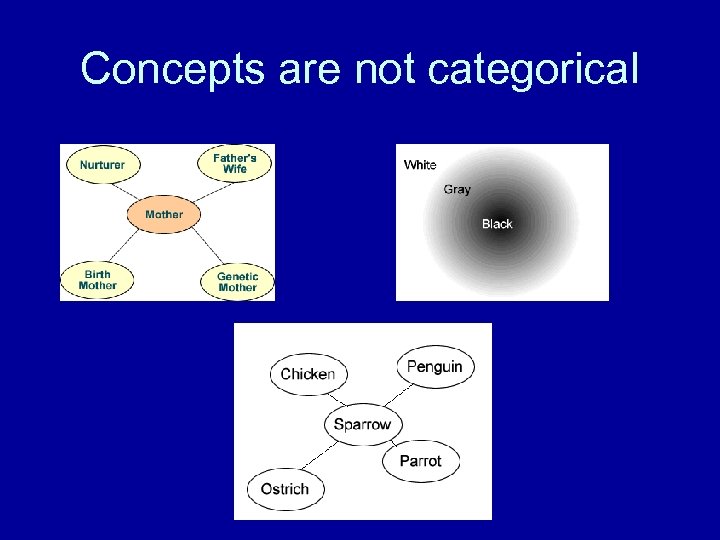

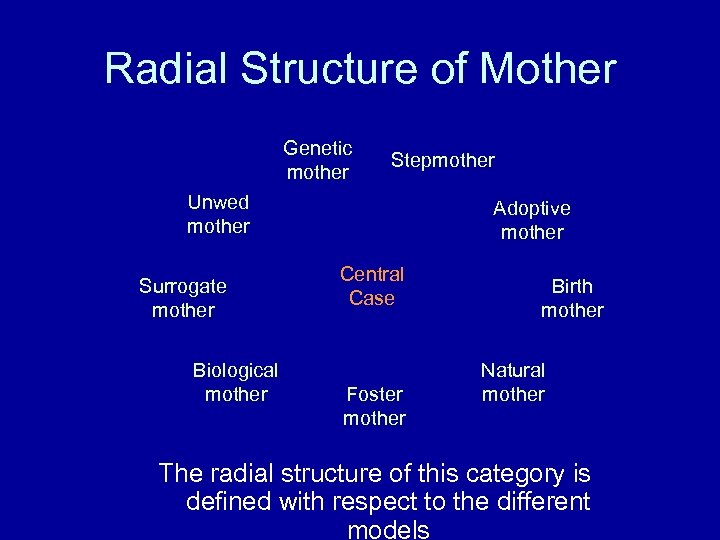

Ideas from Cognitive Linguistics

- Embodied Semantics (Lakoff, Johnson, Sweetser, Talmy)
- Radial categories (Rosch 1973, 1978; Lakoff 1985)
  - mother: birth / adoptive / surrogate / genetic, …
- Profiling (Langacker 1989, 1991; cf. Fillmore XX)
  - hypotenuse, buy/sell (Commercial Event frame)
- Metaphor and metonymy (Lakoff & Johnson 1980, …)
  - ARGUMENT IS WAR, MORE IS UP
  - The ham sandwich wants his check.
- Mental spaces (Fauconnier 1994)
  - The girl with blue eyes in the painting really has green eyes.
- Conceptual blending (Fauconnier & Turner 2002, inter alia)
  - workaholic, information highway, fake guns
  - “Does the name Pavlov ring a bell?” (from a talk on ‘dognition’!)

Concepts are not categorical

Radial Structure of Mother

[Figure: the Central Case surrounded by Genetic mother, Stepmother, Unwed mother, Surrogate mother, Biological mother, Adoptive mother, Foster mother, Birth mother and Natural mother.]

The radial structure of this category is defined with respect to the different models.

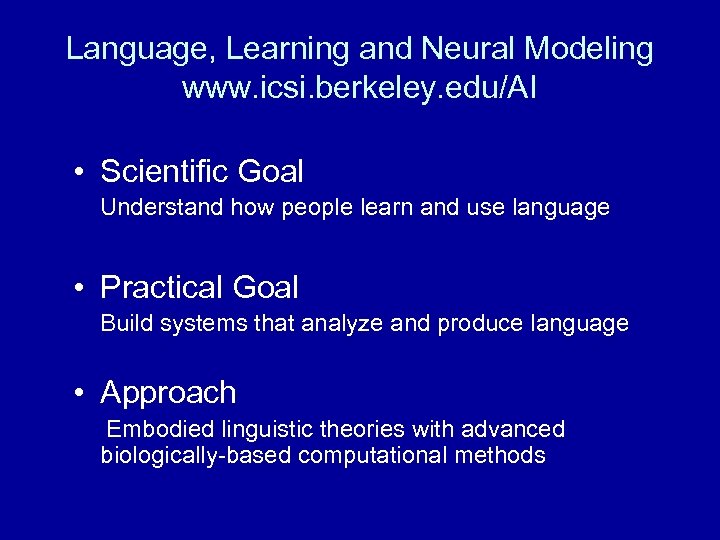

Language, Learning and Neural Modeling

www.icsi.berkeley.edu/AI

- Scientific Goal: Understand how people learn and use language
- Practical Goal: Build systems that analyze and produce language
- Approach: Embodied linguistic theories with advanced biologically-based computational methods

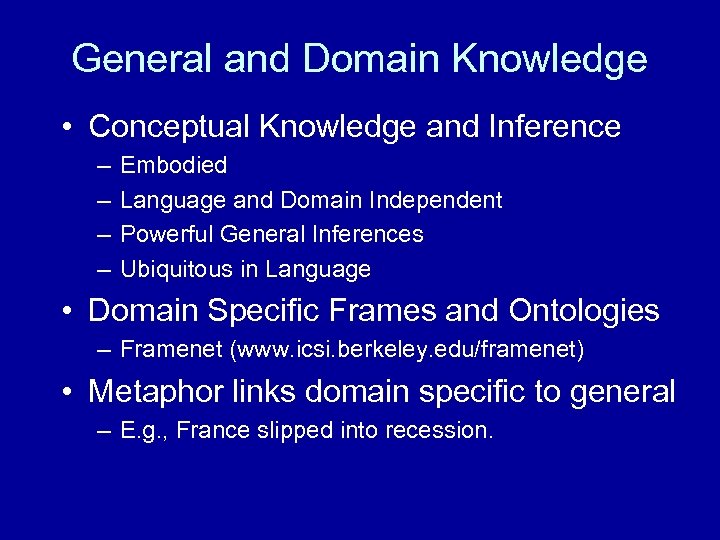

General and Domain Knowledge

- Conceptual Knowledge and Inference
  - Embodied
  - Language and Domain Independent
  - Powerful General Inferences
  - Ubiquitous in Language
- Domain Specific Frames and Ontologies
  - FrameNet (www.icsi.berkeley.edu/framenet)
- Metaphor links domain specific to general
  - E.g., France slipped into recession.

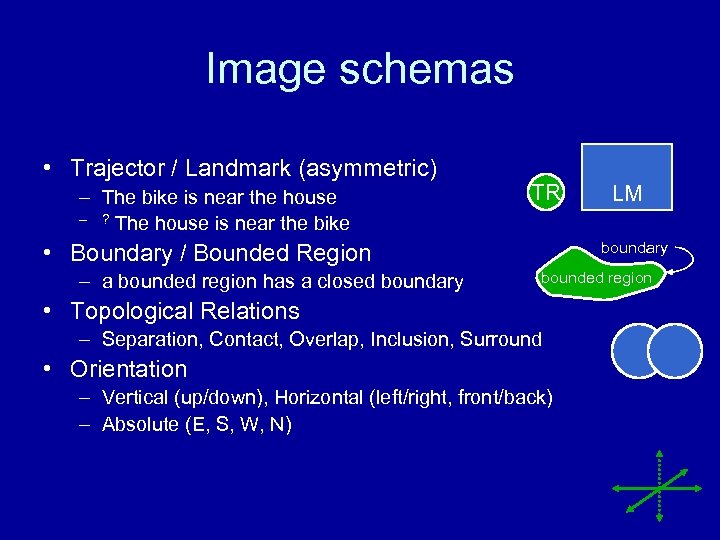

Image schemas

- Trajector / Landmark (asymmetric)
  - The bike is near the house
  - ? The house is near the bike
- Boundary / Bounded Region
  - a bounded region has a closed boundary
- Topological Relations
  - Separation, Contact, Overlap, Inclusion, Surround
- Orientation
  - Vertical (up/down), Horizontal (left/right, front/back)
  - Absolute (E, S, W, N)

[Figure labels: TR, LM, boundary, bounded region.]

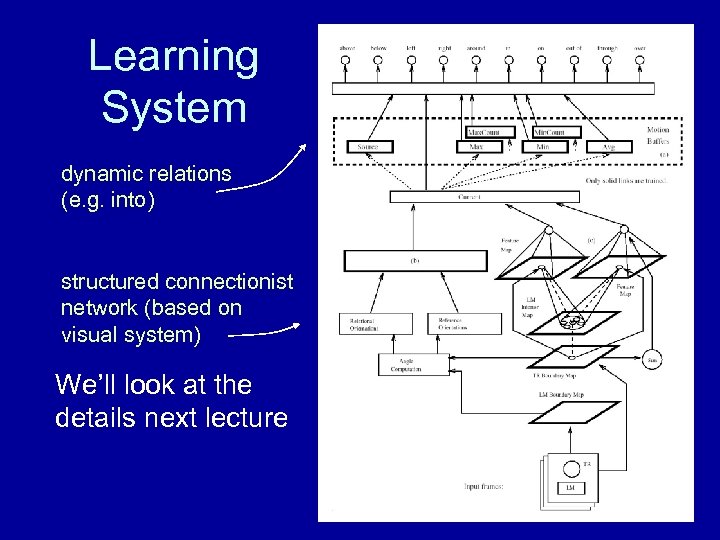

Learning System

[Figure labels: dynamic relations (e.g. into); structured connectionist network (based on visual system).]

We’ll look at the details next lecture.

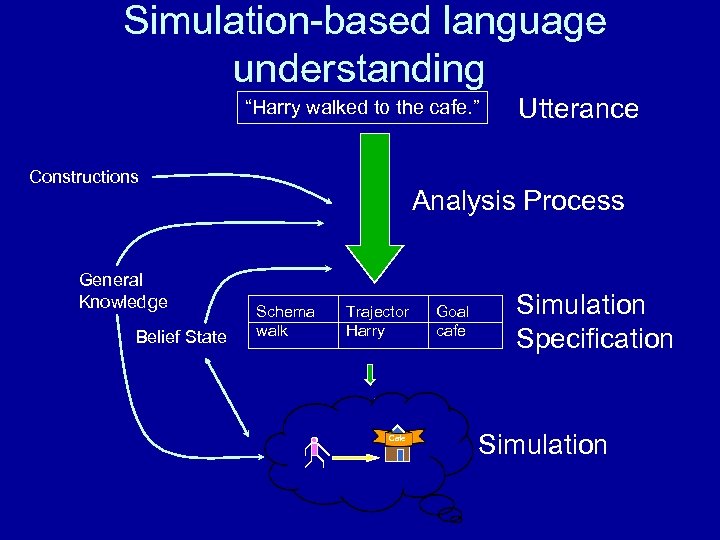

Simulation-based language understanding

“Harry walked to the cafe.”

[Figure: Utterance, Constructions, General Knowledge and Belief State feed the Analysis Process, which produces a Simulation Specification (Schema: walk; Trajector: Harry; Goal: cafe) for the Simulation.]

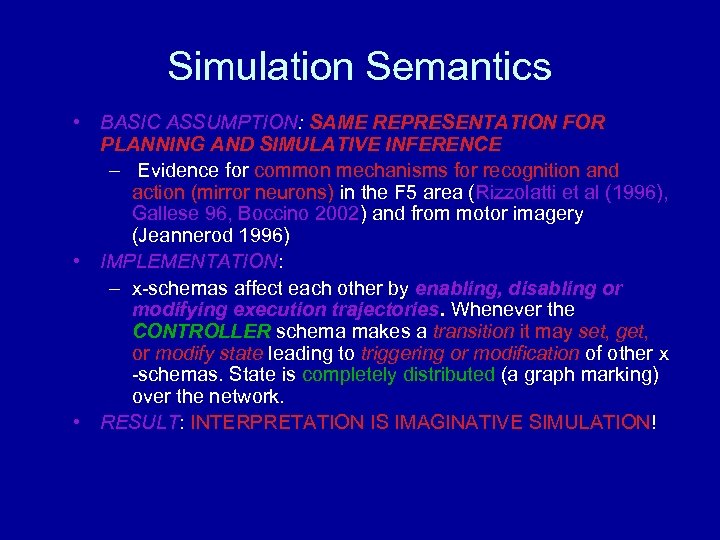

Simulation Semantics

- BASIC ASSUMPTION: SAME REPRESENTATION FOR PLANNING AND SIMULATIVE INFERENCE
  - Evidence for common mechanisms for recognition and action (mirror neurons) in the F5 area (Rizzolatti et al. 1996; Gallese 1996; Buccino 2002) and from motor imagery (Jeannerod 1996)
- IMPLEMENTATION:
  - x-schemas affect each other by enabling, disabling or modifying execution trajectories. Whenever the CONTROLLER schema makes a transition, it may set, get, or modify state, leading to triggering or modification of other x-schemas. State is completely distributed (a graph marking) over the network.
- RESULT: INTERPRETATION IS IMAGINATIVE SIMULATION!

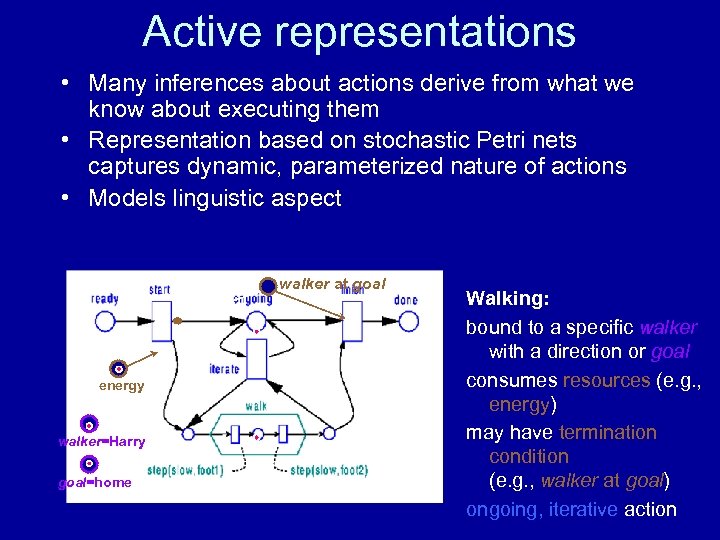

Active representations

- Many inferences about actions derive from what we know about executing them
- Representation based on stochastic Petri nets captures dynamic, parameterized nature of actions
- Models linguistic aspect

Walking:

- bound to a specific walker with a direction or goal
- consumes resources (e.g., energy)
- may have termination condition (e.g., walker at goal)
- ongoing, iterative action

[Figure labels: walker=Harry, goal=home, energy, walker at goal.]
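
The walking example can be made concrete with a tiny place/transition net. This is a rough sketch under simplifying assumptions: the net is deterministic rather than stochastic, and the place names (`energy`, `remaining`, `walked`, `at_goal`), the transition names and the `walk` helper are all hypothetical. It shows the properties listed above: the action iterates, consumes a resource, and stops on a termination condition.

```python
class PetriNet:
    """A tiny place/transition net: a transition fires when its inputs hold enough tokens."""

    def __init__(self, marking):
        self.marking = dict(marking)   # place name -> token count
        self.transitions = {}          # transition name -> (inputs, outputs)

    def add_transition(self, name, inputs, outputs):
        self.transitions[name] = (inputs, outputs)

    def enabled(self, name):
        inputs, _ = self.transitions[name]
        return all(self.marking.get(place, 0) >= n for place, n in inputs.items())

    def fire(self, name):
        if not self.enabled(name):
            raise ValueError(f"transition {name!r} is not enabled")
        inputs, outputs = self.transitions[name]
        for place, n in inputs.items():
            self.marking[place] = self.marking.get(place, 0) - n
        for place, n in outputs.items():
            self.marking[place] = self.marking.get(place, 0) + n


def walk(energy, distance):
    """Iterate 'step' until the walker reaches the goal or runs out of energy."""
    net = PetriNet({"energy": energy, "remaining": distance})
    # Each step consumes one unit of energy and one unit of remaining distance.
    net.add_transition("step", {"energy": 1, "remaining": 1}, {"walked": 1})
    # Termination condition: the whole distance has been walked.
    net.add_transition("arrive", {"walked": distance}, {"at_goal": 1})
    steps = 0
    while net.enabled("step"):
        net.fire("step")
        steps += 1
    if net.enabled("arrive"):
        net.fire("arrive")
    return steps, net.marking


steps, marking = walk(energy=5, distance=3)
print(steps, marking)
```

The state of the action is nothing more than the marking of the net, which matches the description of state as a graph marking distributed over the network.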

Learning Verb Meanings

David Bailey

A model of children learning their first verbs.

- Assumes parent labels child’s actions.
- Child knows parameters of action, associates with word.

Program learns well enough to:

1. Label novel actions correctly
2. Obey commands using new words (simulation)

System works across languages. Mechanisms are neurally plausible.

Words learned by most 2-year-olds in a play school (Bloom 1993)

[Figure: words grouped by category: food, toys, misc., people, sound, emotion, action, prep., demon., social.]

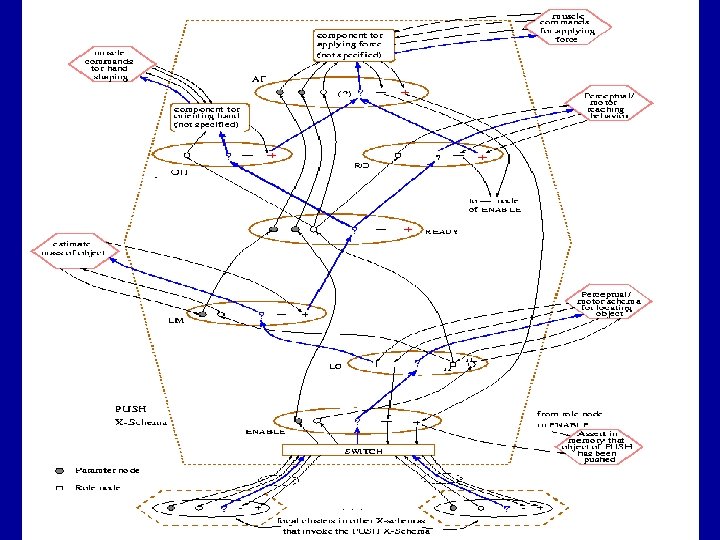

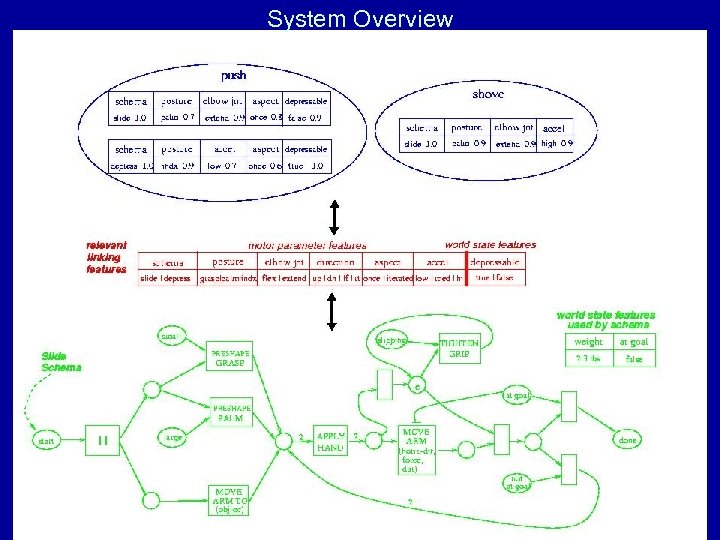

System Overview

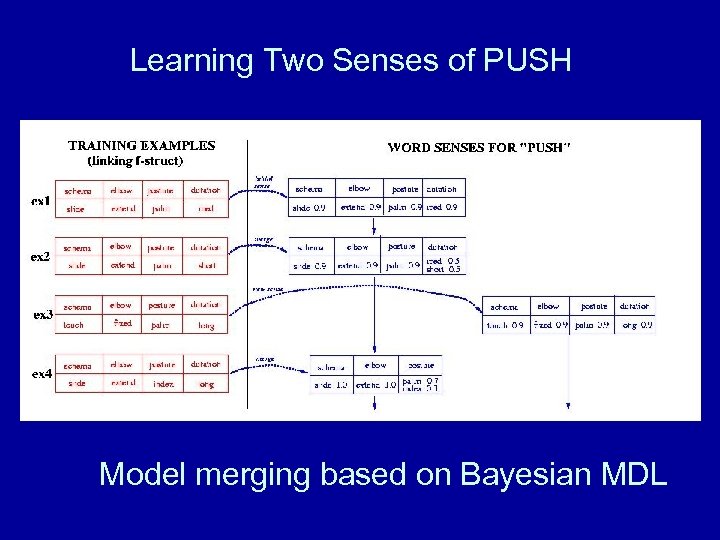

Learning Two Senses of PUSH

Model merging based on Bayesian MDL

Training Results

David Bailey

English

- 165 training examples, 18 verbs
- Learns optimal number of word senses (21)
- 32 test examples: 78% recognition, 81% action
- All mistakes were close: lift ~ yank, etc.
- Learned some particle CXN, e.g., pull up

Farsi

- With identical settings, learned senses not in English

Task: Interpret simple discourse fragments / blurbs

- France fell into recession. Pulled out by Germany.
- US Economy on the verge of falling back into recession after moving forward on an anemic recovery.
- Indian Government stumbling in implementing Liberalization plan.
- Moving forward on all fronts, we are going to be ongoing and relentless as we tighten the net of justice.
- The Government is taking bold new steps. We are loosening the stranglehold on business, slashing tariffs and removing obstacles to international trade.

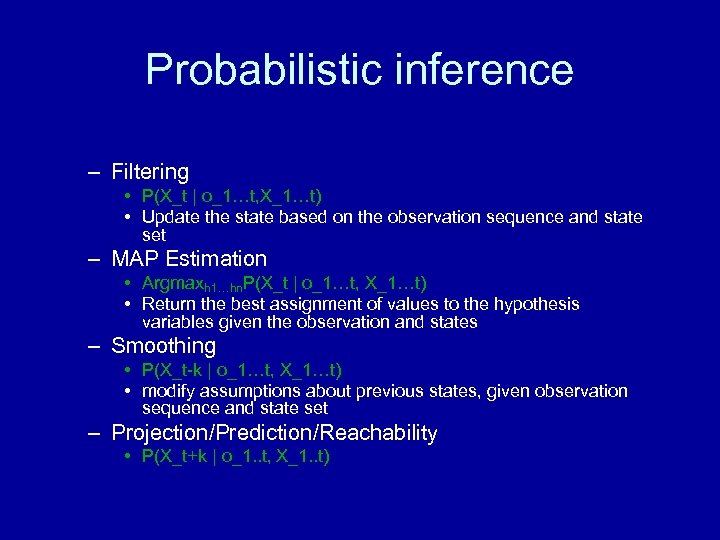

Probabilistic inference

- Filtering
  - $P(X_t \mid o_{1 \ldots t}, X_{1 \ldots t})$
  - Update the state based on the observation sequence and state set
- MAP Estimation
  - $\arg\max_{h_1 \ldots h_n} P(X_t \mid o_{1 \ldots t}, X_{1 \ldots t})$
  - Return the best assignment of values to the hypothesis variables given the observation and states
- Smoothing
  - $P(X_{t-k} \mid o_{1 \ldots t}, X_{1 \ldots t})$
  - Modify assumptions about previous states, given observation sequence and state set
- Projection/Prediction/Reachability
  - $P(X_{t+k} \mid o_{1 \ldots t}, X_{1 \ldots t})$
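
As an illustration of the filtering case, here is a minimal sketch of the standard forward update for a discrete hidden Markov model, which tracks $P(X_t \mid o_{1 \ldots t})$. It is a simplification of the setting above (it conditions on observations only), and the two-state economy model with its probabilities is invented purely for illustration.

```python
def normalize(dist):
    """Rescale a dict of non-negative weights so that it sums to 1."""
    total = sum(dist.values())
    return {state: p / total for state, p in dist.items()}


def filter_step(belief, observation, transition, emission):
    """One forward update: predict with the transition model, then weight by the observation."""
    predicted = {
        nxt: sum(belief[prev] * transition[prev][nxt] for prev in belief)
        for nxt in belief
    }
    return normalize({s: emission[s][observation] * predicted[s] for s in predicted})


def filter_sequence(prior, observations, transition, emission):
    """Fold filter_step over the whole observation sequence."""
    belief = dict(prior)
    for obs in observations:
        belief = filter_step(belief, obs, transition, emission)
    return belief


# Illustrative numbers only: is an economy "growing" or in "recession"?
prior = {"growing": 0.5, "recession": 0.5}
transition = {
    "growing": {"growing": 0.8, "recession": 0.2},
    "recession": {"growing": 0.3, "recession": 0.7},
}
emission = {
    "growing": {"up": 0.9, "down": 0.1},
    "recession": {"up": 0.2, "down": 0.8},
}
belief = filter_sequence(prior, ["down", "down"], transition, emission)
print(belief)
```

Prediction ($X_{t+k}$) would reuse only the transition part of `filter_step`, while smoothing needs an additional backward pass that is not shown here.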

Metaphor Maps

- Static structures that project bindings from source domain f-struct to target domain Bayes net nodes by setting evidence on the target network.
- Different types of maps
  - PMAPS project x-schema parameters to abstract domains
  - OMAPS connect roles between source and target domain
  - SMAPS connect schemas from source to target domains
- ASPECT is an invariant in projection.

Results

- Model was implemented and tested on discourse fragments from a database of 50 newspaper stories in international economics from standard sources such as WSJ, NYT, and the Economist.
- Results show that motion terms are often the most effective method to provide the following types of information about abstract plans and actions.
  - Information about uncertain events and dynamic changes in goals and resources (sluggish, fall, off-track, no steam).
  - Information about evaluations of policies and economic actors and communicative intent (strangle-hold, bleed).
  - Communicating complex, context-sensitive and dynamic economic scenarios (stumble, slide, slippery slope).
  - Communicating complex event structure and aspectual information (on the verge of, sidestep, giant leap, small steps, ready, set out, back on track).
- ALL THESE BINDINGS RESULT FROM REFLEX, AUTOMATIC INFERENCES PROVIDED BY X-SCHEMA BASED INFERENCES.

Models of Learning

- Hebbian ~ coincidence
- Recruitment ~ one trial
- Supervised ~ correction (backprop)
- Reinforcement ~ reward based
  - delayed reward
- Unsupervised ~ similarity

Reinforcement Learning

- Basic idea:
  - Receive feedback in the form of rewards
    - also called reward-based learning in psychology
  - Agent’s utility is defined by the reward function
  - Must learn to act so as to maximize expected utility
  - Change the rewards, change the behavior
- Examples:
  - Learning coordinated behavior/skills (x-schemas)
  - Playing a game, reward at the end for winning / losing
  - Vacuuming robot, reward for each piece of dirt picked up
  - Automated taxi, reward for each passenger delivered

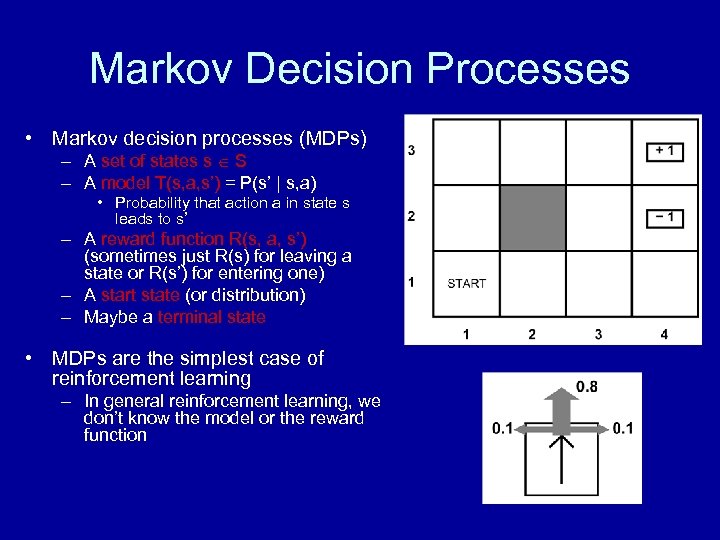

Markov Decision Processes

- Markov decision processes (MDPs)
  - A set of states s ∈ S
  - A set of actions a ∈ A
  - A model T(s, a, s’) = P(s’ | s, a)
    - Probability that action a in state s leads to s’
  - A reward function R(s, a, s’) (sometimes just R(s) for leaving a state or R(s’) for entering one)
  - A start state (or distribution)
  - Maybe a terminal state
- MDPs are the simplest case of reinforcement learning
  - In general reinforcement learning, we don’t know the model or the reward function

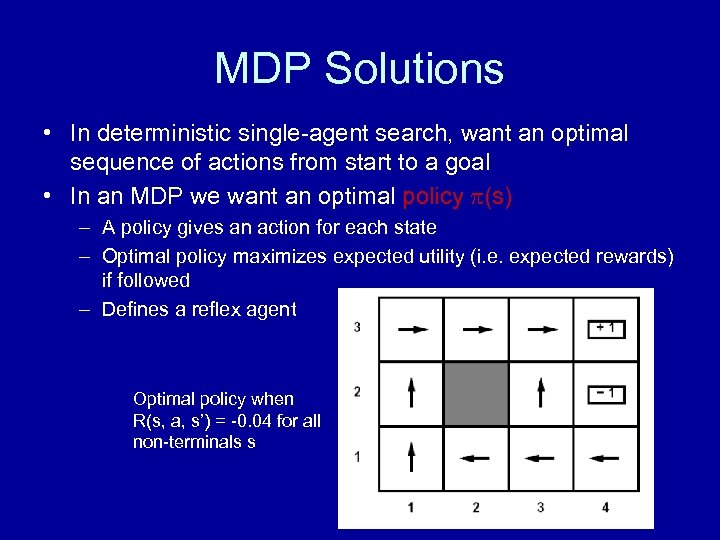

MDP Solutions

- In deterministic single-agent search, we want an optimal sequence of actions from start to a goal
- In an MDP we want an optimal policy π(s)
  - A policy gives an action for each state
  - Optimal policy maximizes expected utility (i.e. expected rewards) if followed
  - Defines a reflex agent

[Figure: optimal policy when R(s, a, s’) = -0.04 for all non-terminals s.]
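
One common way to compute such a policy when the model is known is value iteration. The sketch below is only an illustration: the slides do not say which algorithm was used, and the discount factor `gamma`, the five-cell corridor world and the 80% success probability are all assumptions made for the example. The -0.04 living cost echoes the reward mentioned above.

```python
def value_iteration(states, actions, T, R, terminals, gamma=0.9, tol=1e-6):
    """Return (V, policy) for an MDP given T(s, a) -> [(prob, s')] and R(s, a, s')."""
    V = {s: 0.0 for s in states}

    def q(s, a):
        # Expected one-step reward plus discounted value of the successor state.
        return sum(p * (R(s, a, s2) + gamma * V[s2]) for p, s2 in T(s, a))

    while True:
        delta = 0.0
        for s in states:
            if s in terminals:
                continue
            best = max(q(s, a) for a in actions)
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            break
    policy = {s: max(actions, key=lambda a: q(s, a)) for s in states if s not in terminals}
    return V, policy


# Hypothetical corridor: cells 0..4; cell 4 is a +1 exit and cell 0 is a -1 pit.
states = list(range(5))
actions = ["left", "right"]
terminals = {0, 4}


def T(s, a):
    target = s - 1 if a == "left" else s + 1
    return [(0.8, target), (0.2, s)]  # the move succeeds 80% of the time


def R(s, a, s2):
    if s2 == 4:
        return 1.0
    if s2 == 0:
        return -1.0
    return -0.04  # small living cost


V, policy = value_iteration(states, actions, T, R, terminals)
print(policy)
```

The resulting `policy` is a plain lookup table from state to action, which is what makes the agent a reflex agent.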

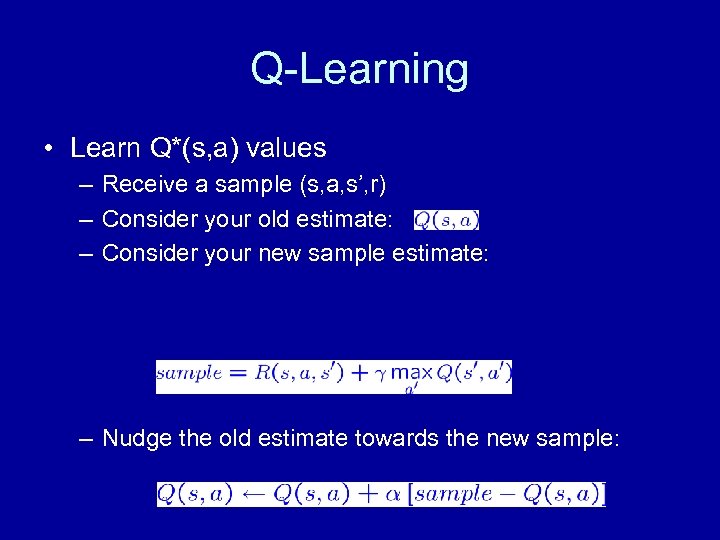

Q-Learning

- Learn Q*(s, a) values
  - Receive a sample (s, a, s’, r)
  - Consider your old estimate: $Q(s, a)$
  - Consider your new sample estimate: $r + \gamma \max_{a'} Q(s', a')$
  - Nudge the old estimate towards the new sample: $Q(s, a) \leftarrow (1 - \alpha)\, Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') \right]$

Here $\gamma$ is the discount factor and $\alpha$ is the learning rate.
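
The update can be written as a few lines of code. This is a sketch of the standard tabular Q-learning rule, not code from the course; the learning rate `alpha`, the discount `gamma`, the `terminal` flag and the toy states "A" and "B" are assumptions introduced for the example.

```python
from collections import defaultdict


def q_update(Q, s, a, s_next, r, actions, alpha=0.5, gamma=0.9, terminal=False):
    """Nudge Q[(s, a)] towards the one-step sample r + gamma * max_a' Q[(s', a')]."""
    future = 0.0 if terminal else max(Q[(s_next, a2)] for a2 in actions)
    sample = r + gamma * future
    Q[(s, a)] = (1 - alpha) * Q[(s, a)] + alpha * sample
    return Q[(s, a)]


Q = defaultdict(float)  # unseen (state, action) pairs start at 0
actions = ["left", "right"]
first = q_update(Q, s="A", a="right", s_next="B", r=1.0, actions=actions)
second = q_update(Q, s="A", a="right", s_next="B", r=1.0, actions=actions)
print(first, second)
```

Each call moves the estimate part of the way towards the sample, so repeated identical samples pull the value steadily closer to it. No transition model or reward function is consulted, only the observed (s, a, s’, r).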

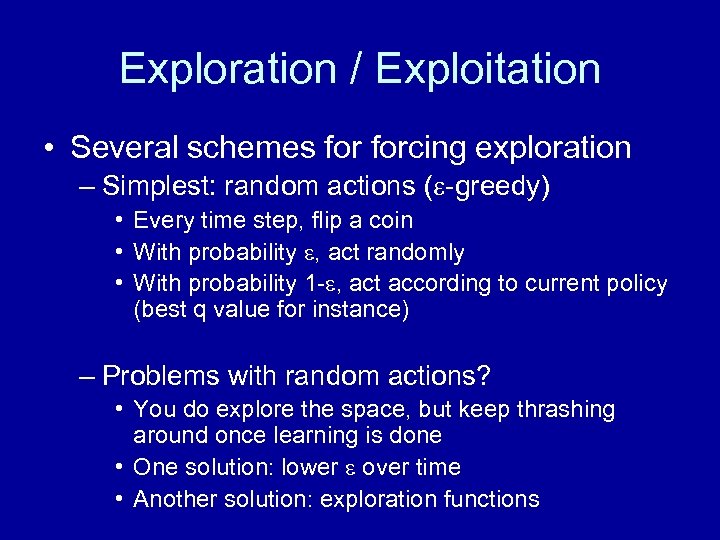

Exploration / Exploitation

- Several schemes for forcing exploration
  - Simplest: random actions (ε-greedy)
    - Every time step, flip a coin
    - With probability ε, act randomly
    - With probability 1 - ε, act according to current policy (best q value, for instance)
  - Problems with random actions?
    - You do explore the space, but keep thrashing around once learning is done
    - One solution: lower ε over time
    - Another solution: exploration functions
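
A minimal sketch of ε-greedy action selection with a decaying ε follows. The geometric decay schedule and its constants (`start`, `end`, `decay`) are assumptions chosen for illustration; the slides only say that ε should be lowered over time.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, otherwise the best-valued one."""
    if rng.random() < epsilon:
        return rng.choice(list(q_values))
    return max(q_values, key=q_values.get)


def decayed_epsilon(step, start=1.0, end=0.05, decay=0.99):
    """Lower epsilon over time so the agent stops thrashing once it has learned."""
    return max(end, start * decay ** step)


q_values = {"left": 0.1, "right": 0.7}
rng = random.Random(0)  # seeded generator so the run is repeatable
greedy_choice = epsilon_greedy(q_values, epsilon=0.0, rng=rng)
explored = {epsilon_greedy(q_values, epsilon=1.0, rng=rng) for _ in range(50)}
print(greedy_choice, sorted(explored), decayed_epsilon(0), decayed_epsilon(1000))
```

Keeping a small floor (`end`) means the agent never stops exploring entirely, which is one simple compromise between the two problems listed above.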

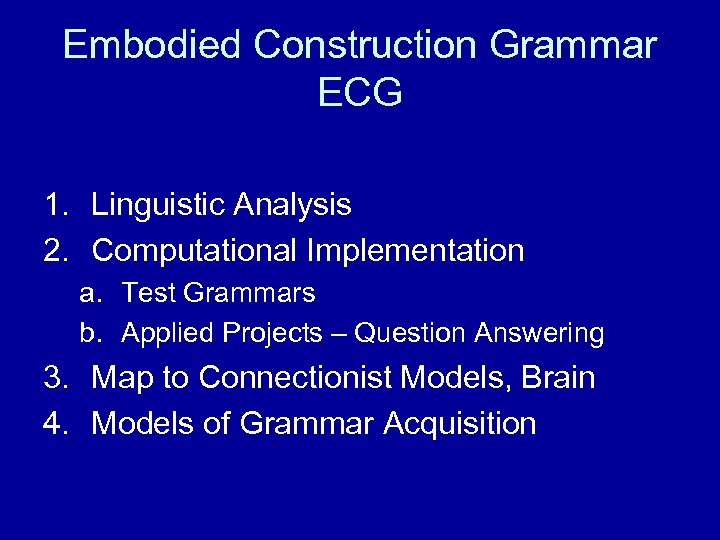

Embodied Construction Grammar (ECG)

1. Linguistic Analysis
2. Computational Implementation
   - Test Grammars
   - Applied Projects: Question Answering
3. Map to Connectionist Models, Brain
4. Models of Grammar Acquisition

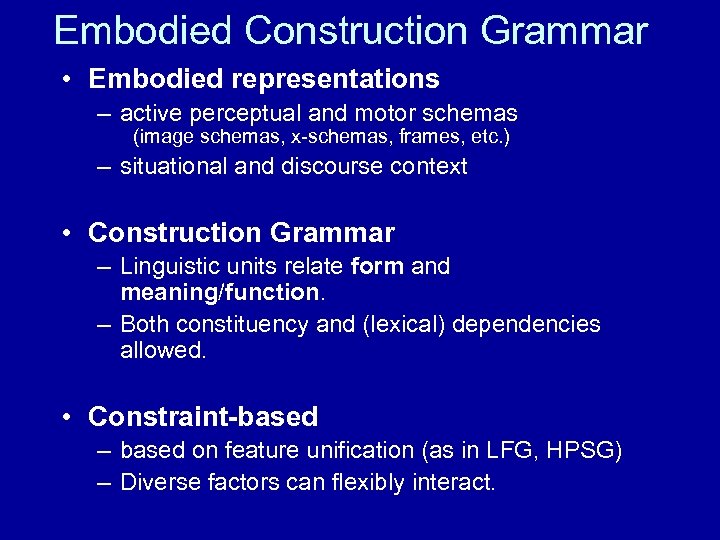

Embodied Construction Grammar • Embodied representations – active perceptual and motor schemas (image schemas, x-schemas, frames, etc.) – situational and discourse context • Construction Grammar – Linguistic units relate form and meaning/function. – Both constituency and (lexical) dependencies allowed. • Constraint-based – based on feature unification (as in LFG, HPSG) – Diverse factors can flexibly interact.

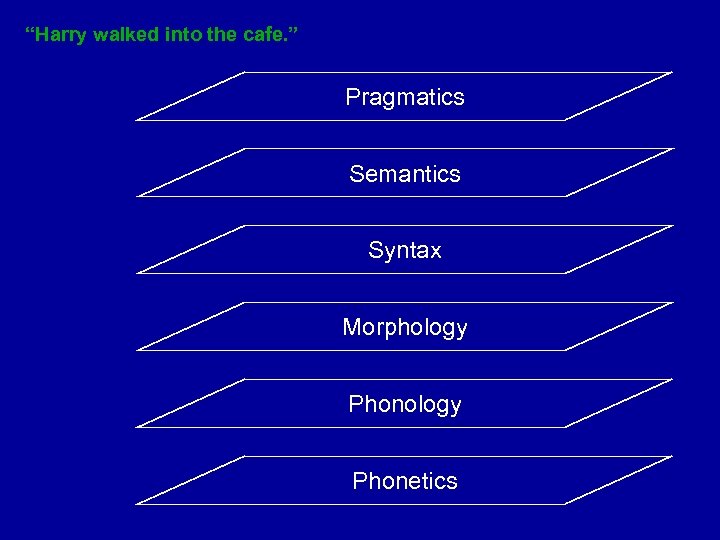
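
Feature unification merges two feature structures when they are compatible and fails when they clash. The sketch below is a deliberately simplified illustration over nested dictionaries. It omits variables and shared (re-entrant) structure, which unification grammars normally support, and the feature names are made up.

```python
def unify(a, b):
    """Unify two feature structures (nested dicts with atomic leaves).

    Returns the merged structure, or None if any feature has
    conflicting values. Inputs are not modified.
    """
    if isinstance(a, dict) and isinstance(b, dict):
        result = dict(a)
        for key, b_val in b.items():
            if key in result:
                merged = unify(result[key], b_val)
                if merged is None:
                    return None  # clash somewhere below this feature
                result[key] = merged
            else:
                result[key] = b_val
        return result
    # Atomic values unify only with themselves.
    return a if a == b else None


compatible = unify(
    {"agr": {"num": "sg"}},
    {"agr": {"per": 3}, "tense": "past"},
)
clash = unify({"agr": {"num": "sg"}}, {"agr": {"num": "pl"}})
```

Because each constraint only adds information, constraints from different sources can be applied in any order, which is one way diverse factors can interact flexibly.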

“Harry walked into the cafe.” • Pragmatics • Semantics • Syntax • Morphology • Phonetics

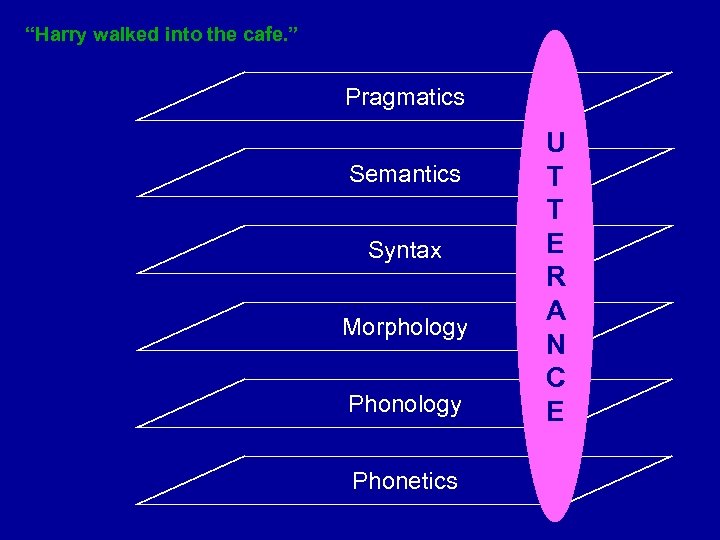

“Harry walked into the cafe.” • Pragmatics • Semantics • Syntax • Morphology • Phonetics • UTTERANCE

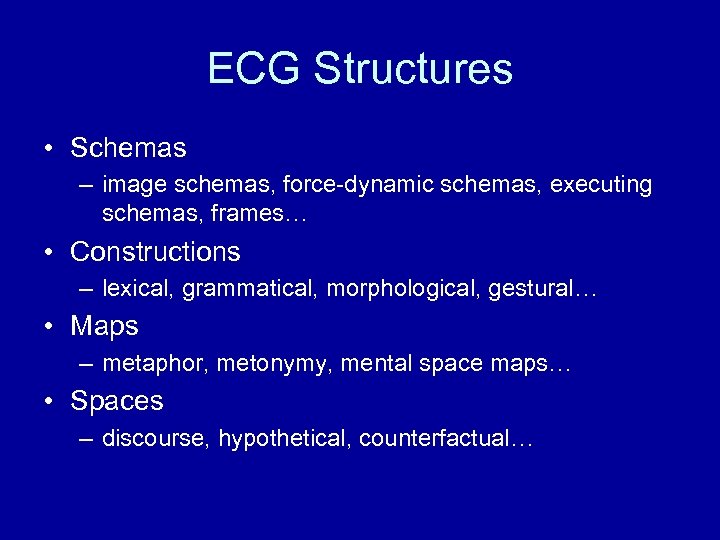

ECG Structures • Schemas – image schemas, force-dynamic schemas, executing schemas, frames… • Constructions – lexical, grammatical, morphological, gestural… • Maps – metaphor, metonymy, mental space maps… • Spaces – discourse, hypothetical, counterfactual…

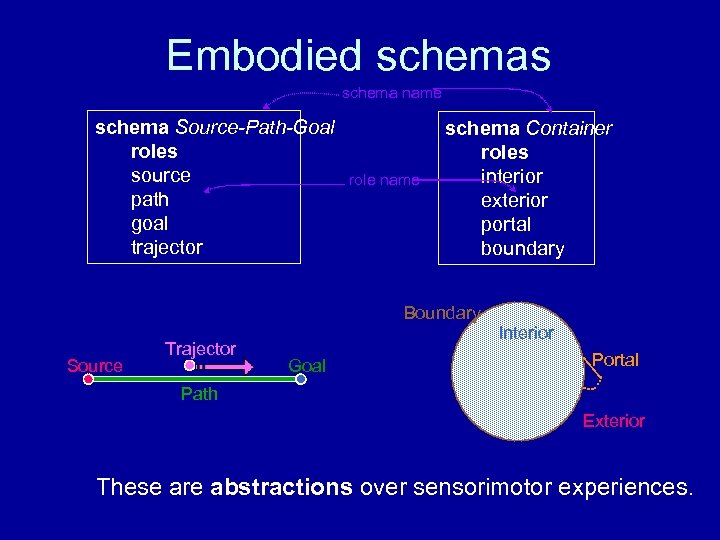

Embodied schemas • schema Source-Path-Goal – roles: source, path, goal, trajector • schema Container – roles: interior, exterior, portal, boundary • Diagram labels: schema name, role name; Source, Path, Goal, Trajector; Interior, Exterior, Portal, Boundary • These are abstractions over sensorimotor experiences.

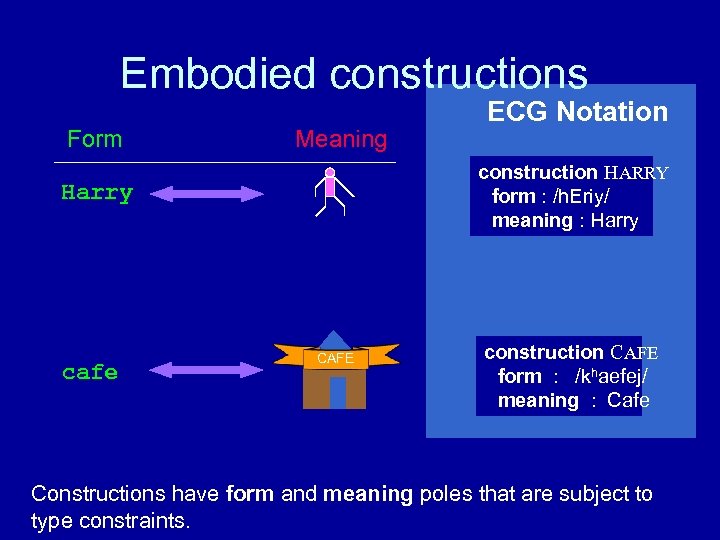
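
One way to read the schema notation is as a named record with a fixed set of roles that can be bound to fillers. The sketch below is an illustration of that reading, not the ECG implementation. The `Schema` class, the `bind` method, and the example fillers are assumptions; the schema and role names come from the slide.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class Schema:
    """A named schema with a fixed role set and optional role fillers."""
    name: str
    roles: Tuple[str, ...]
    bindings: Dict[str, object] = field(default_factory=dict)

    def bind(self, role: str, filler: object) -> "Schema":
        """Fill a role; reject roles the schema does not declare."""
        if role not in self.roles:
            raise KeyError(f"{self.name} has no role {role!r}")
        self.bindings[role] = filler
        return self


def source_path_goal() -> Schema:
    return Schema("Source-Path-Goal", ("source", "path", "goal", "trajector"))


def container() -> Schema:
    return Schema("Container", ("interior", "exterior", "portal", "boundary"))


# Illustrative bindings for "Harry walked into the cafe.":
# the goal of the motion is the interior of the cafe container.
cafe = container().bind("interior", "cafe-interior")
motion = (
    source_path_goal()
    .bind("trajector", "Harry")
    .bind("goal", cafe.bindings["interior"])
)
```

Sharing the same filler between the `goal` role and the container's `interior` role is how the two schemas are linked in this sketch.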

Embodied constructions (ECG notation) • Each construction pairs a Form pole with a Meaning pole • construction HARRY – form: /h.Eriy/ – meaning: Harry • construction CAFE – form: /khaefej/ – meaning: Cafe • Constructions have form and meaning poles that are subject to type constraints.

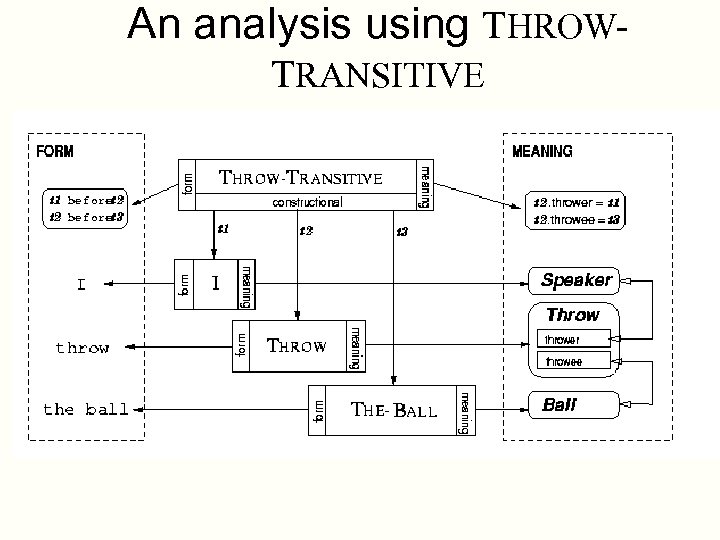
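
A lexical construction can be sketched as a form pole paired with a typed meaning pole, with a type constraint checked against a type hierarchy. Everything below is a hypothetical illustration: the `Construction` class, the `TYPE_PARENTS` lattice, and the `Person`, `Place`, and `Entity` types are assumptions and do not come from the slides.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical type lattice: each type maps to its parent (None at the root).
TYPE_PARENTS: Dict[str, Optional[str]] = {
    "Entity": None,
    "Person": "Entity",
    "Place": "Entity",
    "Harry": "Person",
    "Cafe": "Place",
}


def is_subtype(type_name: str, ancestor: str) -> bool:
    """Walk up the lattice from type_name looking for ancestor."""
    current: Optional[str] = type_name
    while current is not None:
        if current == ancestor:
            return True
        current = TYPE_PARENTS.get(current)
    return False


@dataclass(frozen=True)
class Construction:
    """A pairing of a form pole and a meaning pole."""
    name: str
    form: str      # phonological form
    meaning: str   # type of the meaning pole

    def meaning_satisfies(self, required_type: str) -> bool:
        """Type constraint on the meaning pole."""
        return is_subtype(self.meaning, required_type)


HARRY = Construction("HARRY", "/h.Eriy/", "Harry")
CAFE = Construction("CAFE", "/khaefej/", "Cafe")
```

A larger construction could then require, for example, that one of its constituents has a meaning of type `Person`, which `HARRY` would satisfy and `CAFE` would not.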

An analysis using THROWTRANSITIVE

Simulation-based language understanding • construction WALKED – form: self_f.phon ← [wakt] – meaning: Walk-Action – constraints: self_m.time before Context.speech-time; self_m.aspect ← encapsulated • Diagram labels: “Harry walked into the cafe.”, Utterance, Analysis Process, Constructions, General Knowledge, Semantic Specification, Belief State, CAFE, Simulation

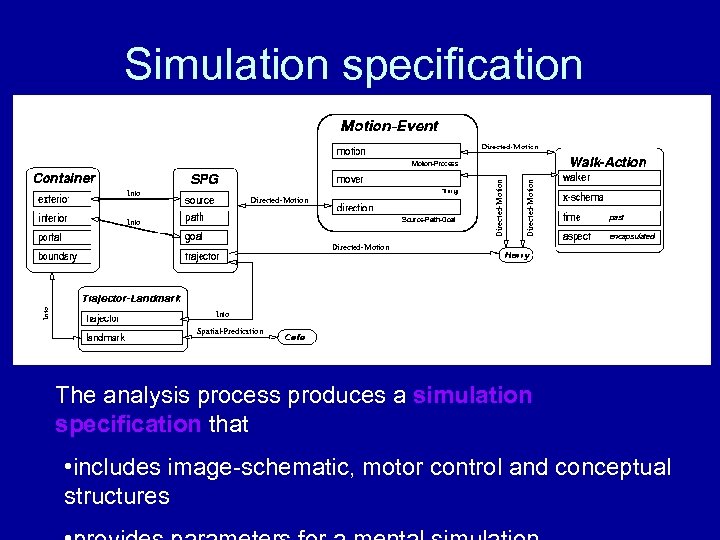

Simulation specification The analysis process produces a simulation specification that • includes image-schematic, motor control and conceptual structures

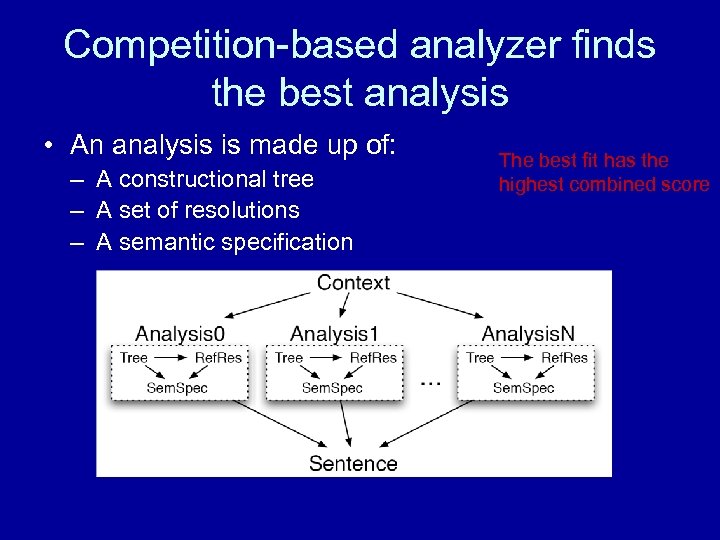

Competition-based analyzer finds the best analysis • An analysis is made up of: – A constructional tree – A set of resolutions – A semantic specification • The best fit has the highest combined score
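
Selecting the best-fitting analysis amounts to an argmax over candidate analyses. The slide does not say how the component scores are combined, so the sketch below assumes each component has a probability-like score in (0, 1] and that the combined score is the sum of their logarithms. The `Analysis` class, its field names, and the example candidates are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Analysis:
    """One candidate analysis with a score per component (assumed in (0, 1])."""
    label: str
    tree_score: float        # constructional tree
    resolution_score: float  # set of resolutions
    semspec_score: float     # semantic specification

    def combined(self) -> float:
        # Assumption: combine by summing log scores (a product of probabilities).
        return (
            math.log(self.tree_score)
            + math.log(self.resolution_score)
            + math.log(self.semspec_score)
        )


def best_analysis(candidates: List[Analysis]) -> Analysis:
    """The best fit is the candidate with the highest combined score."""
    return max(candidates, key=Analysis.combined)


candidates = [
    Analysis("reading-1", tree_score=0.6, resolution_score=0.9, semspec_score=0.5),
    Analysis("reading-2", tree_score=0.8, resolution_score=0.5, semspec_score=0.5),
]
winner = best_analysis(candidates)
```

Here `reading-1` wins even though `reading-2` has the better tree score, because its weaker resolutions pull the combined score down.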

Summary: ECG • Linguistic constructions are tied to a model of simulated action and perception • Embedded in a theory of language processing – Constrains theory to be usable – Frees structures to be just structures, used in processing • Precise, computationally usable formalism – Practical computational applications, like MT and NLU – Testing of functionality, e.g. language learning • A shared theory and formalism for different cognitive mechanisms – Constructions, metaphor, mental spaces, etc.

State of the Art Natural Language Understanding • Limited Commercial Speech Applications: transcription, simple response systems • Statistical NLP for Restricted Tasks: tagging, parsing, information retrieval • Template-based Understanding programs: expensive, brittle, inflexible, unnatural • Essentially no NLU in QA, HCI systems • ECG being applied in prototypes
