c1da1990792b52b079e813e8c2c9cb00.ppt

- Number of slides: 36

Uncertainty Analysis and Model “Validation” or Confidence Building

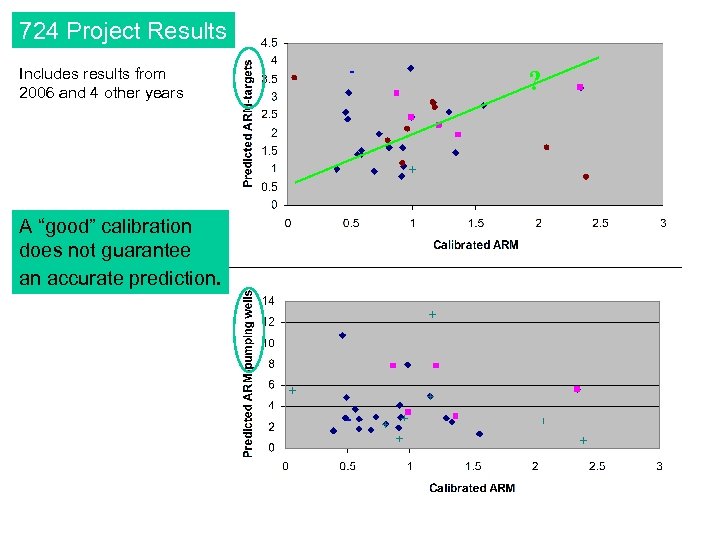

Conclusions

- Calibrations are non-unique.
- A good calibration (even if the absolute residual mean, ARM, is 0) does not ensure that the model will make good predictions.
- Field data are essential in constraining the model so that the model can capture the essential features of the system.
- Modelers need to maintain a healthy skepticism about their results.

Conclusions

- Head predictions are more robust (more consistent among different calibrated models) than transport (particle tracking) predictions.
- An uncertainty analysis should accompany calibration results and predictions.
- Ideally, models should be maintained for the long term and updated to establish confidence in the model. Rather than a single calibration exercise, a continual process of confidence building is needed.

Uncertainty in the Calibration

Involves uncertainty in:
- Conceptual model, including boundary conditions, zonation, geometry, etc.
- Parameter values
- Targets

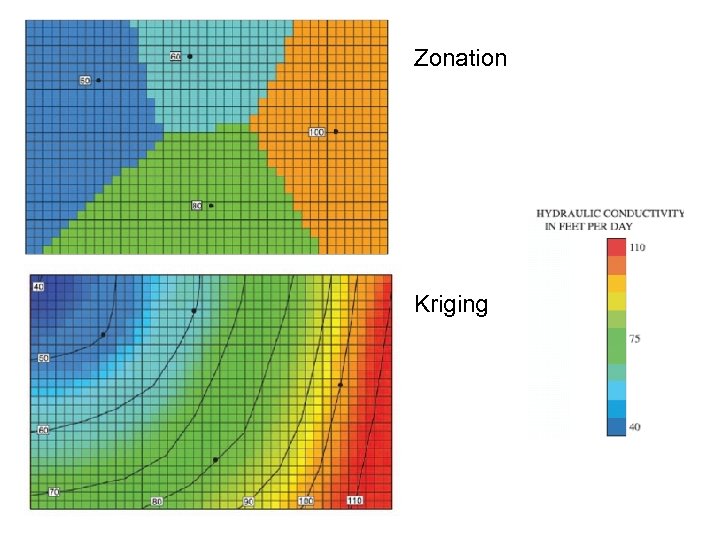

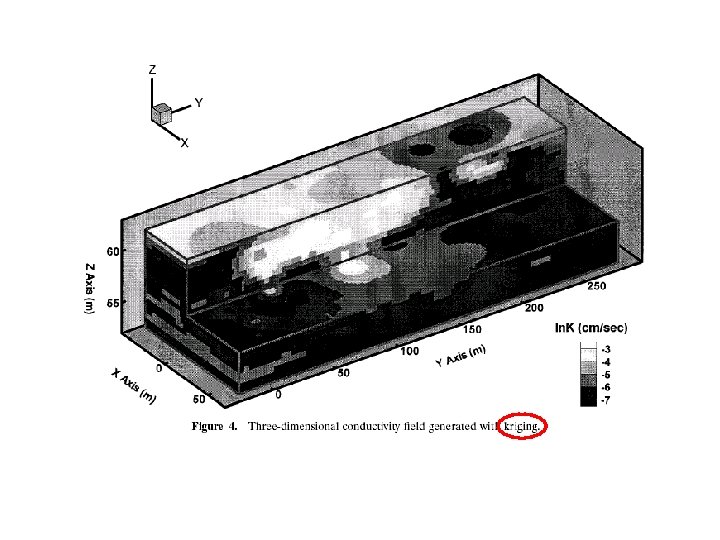

Figure panels: Zonation; Kriging

Zonation vs. Pilot Points

To use conventional inverse models (parameter estimation models) in calibration, you need a fairly good idea of the zonation (of K, for example). (The newer version of PEST with pilot points does not need zonation, because it works with a continuous distribution of parameter values.) You also need to identify reasonable ranges for the calibration parameters and weights.
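To make the contrast concrete, here is a minimal sketch on a hypothetical 1D grid. With zonation, K is constant within each predefined zone; with pilot points, K is specified at a few points and interpolated between them, giving a continuous field. PEST's pilot-point approach interpolates by kriging; this sketch swaps in inverse-distance weighting of log10(K) purely for brevity, and all locations and K values are illustrative assumptions.

```python
import numpy as np

# Hypothetical 1D grid: 11 cell centers along a 1000 m cross section.
x = np.linspace(0.0, 1000.0, 11)

# Zonation: K is uniform within each zone (assumed two zones split at x = 500 m).
zone_k = {0: 5.0, 1: 20.0}  # m/day, illustrative values
zones = np.where(x < 500.0, 0, 1)
k_zoned = np.array([zone_k[z] for z in zones])

# Pilot points: K is set at a few points and interpolated everywhere else.
pp_x = np.array([0.0, 400.0, 1000.0])  # hypothetical pilot-point locations (m)
pp_k = np.array([5.0, 8.0, 20.0])      # values a parameter estimator would adjust


def idw(x_targets, x_pts, values, power=2.0):
    """Inverse-distance weighting of log10(K); a simple stand-in for kriging."""
    logv = np.log10(values)
    out = np.empty_like(x_targets)
    for i, xt in enumerate(x_targets):
        d = np.abs(x_pts - xt)
        if np.any(d == 0):
            # Honor the pilot-point value exactly at its own location.
            out[i] = logv[np.argmin(d)]
        else:
            w = 1.0 / d**power
            out[i] = np.sum(w * logv) / np.sum(w)
    return 10.0**out


k_pilot = idw(x, pp_x, pp_k)
print("zoned K:", np.round(k_zoned, 2))
print("pilot-point K:", np.round(k_pilot, 2))
```

The zoned field jumps abruptly at the assumed zone boundary, while the pilot-point field varies smoothly; in an automated calibration the estimator adjusts the three pilot-point values instead of the two zone values.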

Parameter Values

- Field data are essential in constraining the model so that the model can capture the essential features of the system.

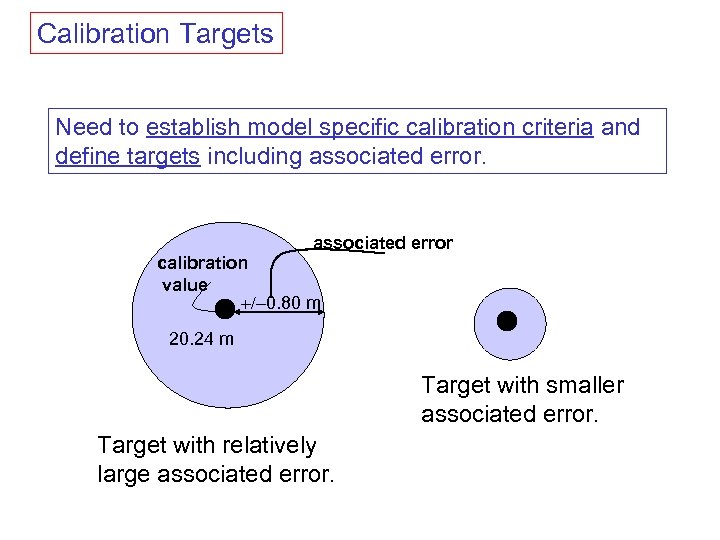

Calibration Targets

Need to establish model-specific calibration criteria and define targets, including the associated error of each target.

Figure: a calibration target with calibration value 20.24 m and associated error 0.80 m; a target with smaller associated error vs. a target with relatively large associated error.
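A minimal sketch of checking a simulated head against a calibration target and its associated error, using the numbers shown on the slide read as a calibration value of 20.24 m with an associated error of 0.80 m. The `HeadTarget` class, the well name, and the simulated heads are hypothetical; the residual is taken as measured minus simulated.

```python
from dataclasses import dataclass


@dataclass
class HeadTarget:
    """Hypothetical container for one head calibration target."""
    name: str
    calibration_value: float  # measured head used as the target (m)
    associated_error: float   # half-width of the acceptable interval (m)


def check_target(target, simulated):
    """Return the residual and whether it falls within the target's associated error."""
    residual = target.calibration_value - simulated  # measured minus simulated
    return residual, abs(residual) <= target.associated_error


t = HeadTarget("well_A", calibration_value=20.24, associated_error=0.80)
res, ok = check_target(t, simulated=19.70)  # hypothetical simulated head
print(f"{t.name}: residual = {res:.2f} m, within associated error: {ok}")
```

A target with a large associated error accepts a wide range of simulated heads and therefore constrains the calibration less than a target with a small associated error.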

Examples of Sources of Error in a Calibration Target

- Surveying errors
- Errors in measuring water levels
- Interpolation error
- Transient effects
- Scaling effects
- Unmodeled heterogeneities

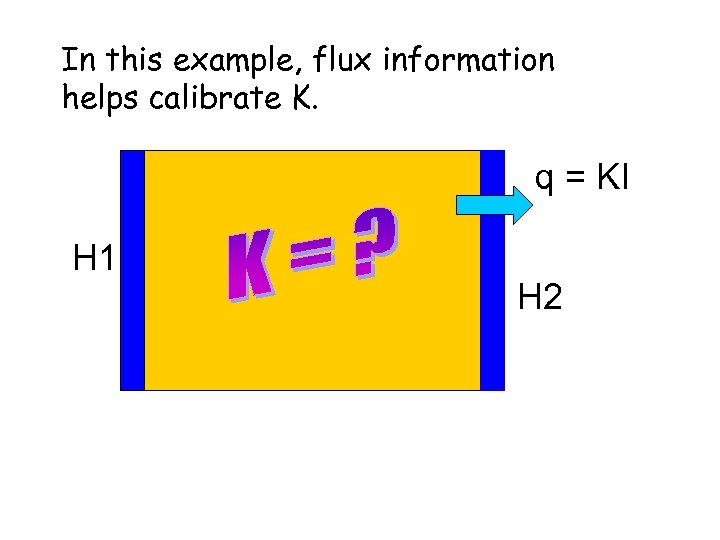

Importance of Flux Targets

When the recharge rate (R) is a calibration parameter, calibrating to fluxes can help in estimating K and/or R. R was not a calibration parameter in our final project.

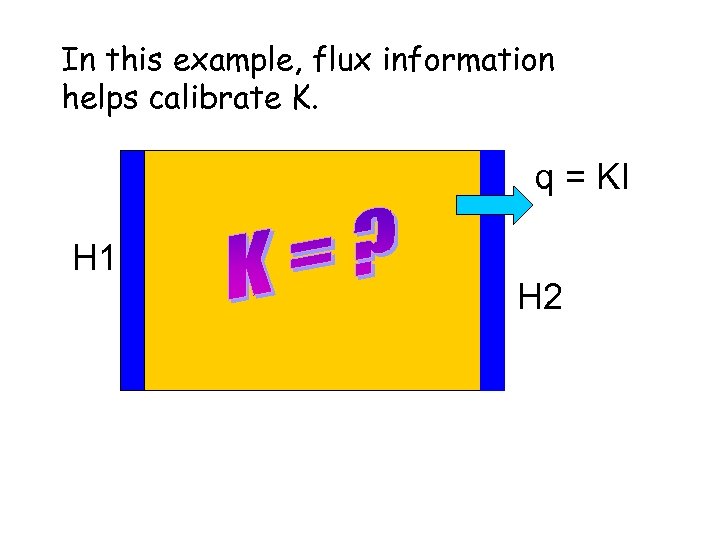

In this example, flux information helps calibrate K: $q = KI$, where the hydraulic gradient $I$ is set by the heads $H_1$ and $H_2$.
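In this simple setting the heads H1 and H2 fix only the gradient I, so they are matched equally well by any K, each paired with its own flux q = KI; a measured flux removes that ambiguity. A minimal sketch that inverts Darcy's law for K, assuming the gradient is taken as (H1 - H2) divided by a known separation distance; all numbers are hypothetical.

```python
def conductivity_from_flux(q, h1, h2, length):
    """Invert Darcy's law q = K * I for K, with gradient I = (h1 - h2) / length."""
    gradient = (h1 - h2) / length
    if gradient == 0:
        raise ValueError("zero gradient: K cannot be inferred from q")
    return q / gradient


# Hypothetical: heads of 105 m and 100 m, 500 m apart, measured flux 0.1 m/day.
K = conductivity_from_flux(q=0.1, h1=105.0, h2=100.0, length=500.0)
print(f"K = {K:.1f} m/day")
```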

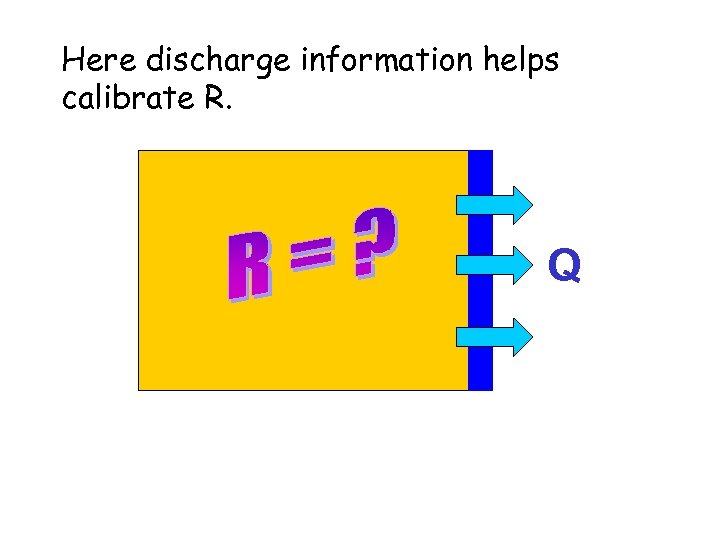

Here, discharge information ($Q$) helps calibrate R.
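At steady state, with no change in storage and no other sources or sinks, total discharge equals total recharge, so a measured discharge fixes the areal recharge rate directly: R = Q / A, where A (an assumed symbol, not from the slide) is the recharge area. A minimal sketch with hypothetical values:

```python
def recharge_from_discharge(Q, area):
    """Steady state, no storage change, no other sinks: R * area = Q, so R = Q / area."""
    return Q / area


# Hypothetical: 2.0e6 m^3/yr of discharge from a 1.0e8 m^2 (100 km^2) recharge area.
R = recharge_from_discharge(Q=2.0e6, area=1.0e8)
print(f"R = {R:.3f} m/yr")
```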


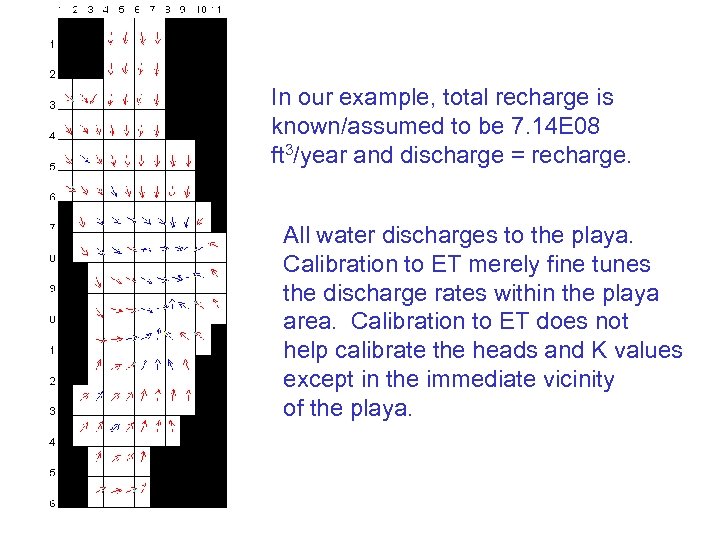

In our example, total recharge is known (or assumed) to be $7.14 \times 10^{8}$ ft³/year, and discharge equals recharge: all water discharges to the playa. Calibration to ET therefore merely fine-tunes the discharge rates within the playa area; it does not help calibrate the heads and K values except in the immediate vicinity of the playa.
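The point about ET can be checked with a simple budget calculation. The sketch assumes the slide's total recharge of 7.14 × 10⁸ ft³/year and hypothetical ET rates for four playa cells; the discrepancy is expressed relative to inflow, a simplified definition rather than any particular code's budget report. Two different distributions of ET among the playa cells close the budget equally well, which is why ET targets constrain where discharge occurs within the playa but not the regional heads and K.

```python
# Total recharge from the slide; discharge is assumed equal to recharge (steady state).
TOTAL_RECHARGE = 7.14e8  # ft^3/year

# Two hypothetical ways of distributing ET (ft^3/year) among four playa cells.
playa_et = [1.9e8, 2.4e8, 1.6e8, 1.24e8]
alternative = [3.0e8, 1.0e8, 1.0e8, 2.14e8]


def budget_discrepancy(inflow, outflows):
    """Percent discrepancy between total inflow and total outflow, relative to inflow."""
    return 100.0 * (inflow - sum(outflows)) / inflow


for et in (playa_et, alternative):
    print(f"discrepancy: {budget_discrepancy(TOTAL_RECHARGE, et):+.4f} %")
```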

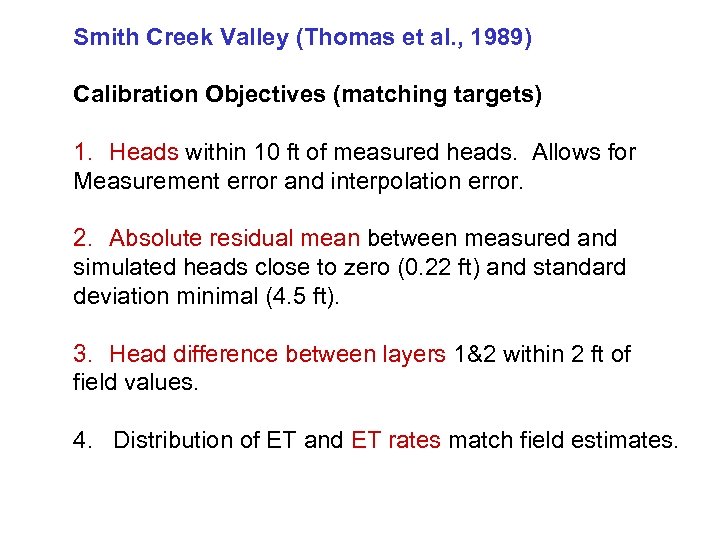

Smith Creek Valley (Thomas et al., 1989)

Calibration objectives (matching targets):
1. Heads within 10 ft of measured heads, which allows for measurement error and interpolation error.
2. Absolute residual mean between measured and simulated heads close to zero (0.22 ft) and minimal standard deviation (4.5 ft).
3. Head difference between layers 1 and 2 within 2 ft of field values.
4. Distribution of ET and ET rates match field estimates.
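Objectives like these are stated in terms of residual statistics. A minimal sketch that computes the usual ones (residual = measured minus simulated; mean, absolute mean, standard deviation, RMS, and maximum absolute residual) and checks the 10 ft criterion of objective 1. The well heads are hypothetical, not Smith Creek Valley data.

```python
import numpy as np


def calibration_stats(measured, simulated):
    """Residual statistics for a head calibration (residual = measured - simulated)."""
    r = np.asarray(measured, dtype=float) - np.asarray(simulated, dtype=float)
    return {
        "mean_residual": r.mean(),
        "abs_residual_mean": np.abs(r).mean(),
        "std_residual": r.std(ddof=1),
        "rms": np.sqrt(np.mean(r**2)),
        "max_abs_residual": np.abs(r).max(),
    }


# Hypothetical heads (ft) at five wells.
measured = [5210.0, 5185.5, 5172.0, 5160.3, 5150.8]
simulated = [5206.0, 5188.0, 5170.5, 5163.0, 5149.0]

stats = calibration_stats(measured, simulated)
within_10ft = stats["max_abs_residual"] <= 10.0  # objective 1: heads within 10 ft
for name, value in stats.items():
    print(f"{name}: {value:.2f} ft")
print("all heads within 10 ft:", within_10ft)
```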

724 Project Results

Includes results from 2006 and 4 other years. A “good” calibration does not guarantee an accurate prediction.

Sensitivity analysis to analyze uncertainty in the calibration

- Use an inverse model (automated calibration) to quantify uncertainties and optimize the calibration.
- Perform sensitivity analysis during calibration.

Figure: sensitivity coefficients
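A sensitivity coefficient is the change in a simulated quantity (here, head) per unit change in a parameter. A minimal sketch, assuming a toy 1D confined aquifer with uniform recharge R and transmissivity T between two fixed-head boundaries, computes coefficients by forward-difference perturbation and scales them by the parameter value so that T and R can be compared. The model, well locations, and parameter values are hypothetical.

```python
import numpy as np


def head_1d(x, T, R, h0=100.0, hL=90.0, L=1000.0):
    """Steady 1D confined flow, uniform recharge R, fixed heads h0 (x=0) and hL (x=L).
    Solves T h'' = -R:  h = h0 + (hL - h0) x / L + R x (L - x) / (2 T)."""
    return h0 + (hL - h0) * x / L + R * x * (L - x) / (2.0 * T)


def scaled_sensitivities(x, params, rel_step=1e-4):
    """Forward-difference sensitivities dh/dp, multiplied by p (i.e., dh / d ln p)."""
    base = head_1d(x, **params)
    out = {}
    for name, value in params.items():
        perturbed = dict(params)
        dp = rel_step * value
        perturbed[name] = value + dp
        out[name] = (head_1d(x, **perturbed) - base) / dp * value
    return out


x_obs = np.array([250.0, 500.0, 750.0])  # hypothetical observation wells (m)
params = {"T": 50.0, "R": 1e-3}          # m^2/day and m/day, illustrative
s = scaled_sensitivities(x_obs, params)
print({k: np.round(v, 3) for k, v in s.items()})
```

In this toy model the scaled sensitivities to T and R come out nearly equal and opposite at every well, because the heads depend only on the ratio R/T. Heads alone therefore cannot separate the two parameters, which is one reason flux targets help when R is a calibration parameter.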

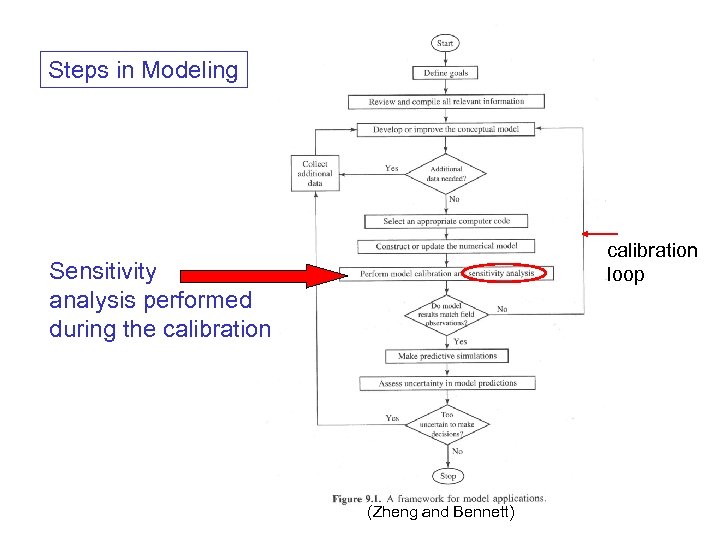

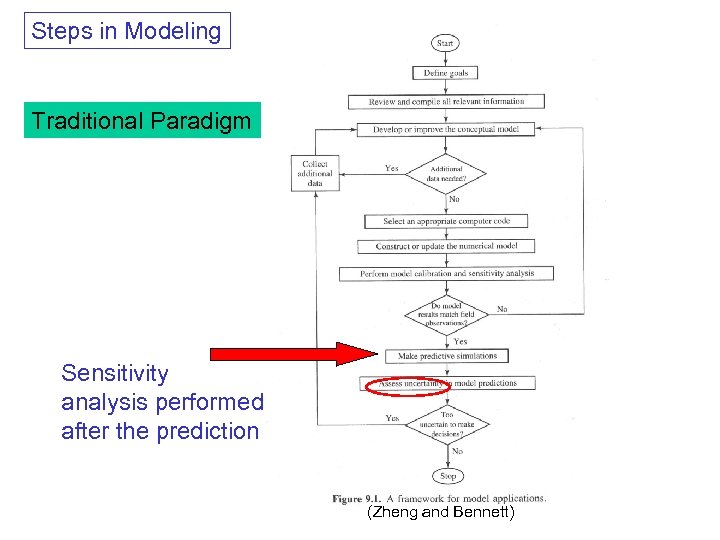

Steps in Modeling

Sensitivity analysis performed during the calibration, inside the calibration loop (Zheng and Bennett).

Uncertainty in the Prediction

- Reflects uncertainty in the calibration.
- Involves uncertainty in how parameter values (e.g., recharge) or pumping rates will vary in the future.

Ways to quantify uncertainty in the prediction

- Sensitivity analysis: parameters
- Scenario analysis: stresses
- Stochastic simulation
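Of the three approaches listed, scenario analysis is the simplest to sketch: keep the calibrated parameters fixed and rerun the model under several plausible future stresses. The sketch assumes steady radial flow to a single well in a confined aquifer (the Thiem equation) as a stand-in for a full groundwater model; the transmissivity, pumping rates, observation distance, and radius of influence are hypothetical.

```python
import math


def thiem_drawdown(Q, T, r, radius_of_influence):
    """Steady-state drawdown (Thiem) at distance r from a well pumping Q in a confined aquifer."""
    return Q / (2.0 * math.pi * T) * math.log(radius_of_influence / r)


T_cal = 50.0  # hypothetical calibrated transmissivity, m^2/day

# Hypothetical future pumping scenarios (m^3/day).
scenarios = {"current": 500.0, "+50% pumping": 750.0, "doubled pumping": 1000.0}

for label, Q in scenarios.items():
    s = thiem_drawdown(Q, T_cal, r=100.0, radius_of_influence=2000.0)
    print(f"{label:>16}: drawdown at 100 m = {s:.2f} m")
```

The spread of predicted drawdowns across scenarios expresses uncertainty in future stresses; it says nothing about parameter uncertainty, which the other two approaches address.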

Steps in Modeling: Traditional Paradigm

Sensitivity analysis performed after the prediction (Zheng and Bennett).

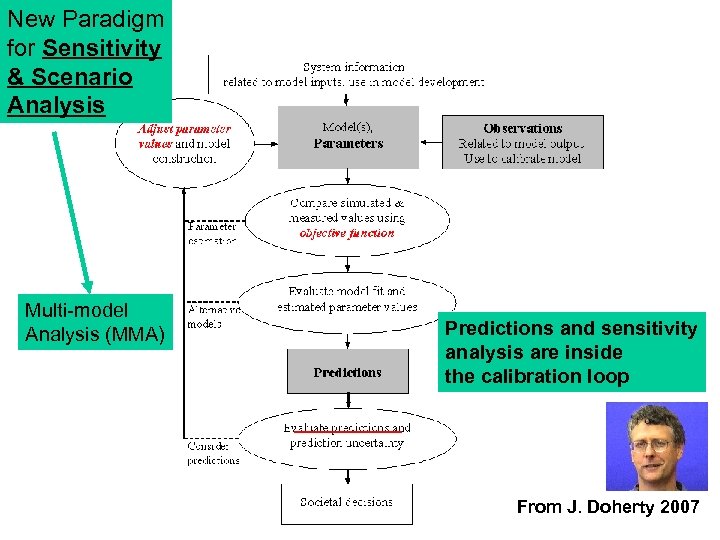

New Paradigm for Sensitivity & Scenario Analysis

Multi-model Analysis (MMA): predictions and sensitivity analysis are inside the calibration loop (from J. Doherty, 2007).


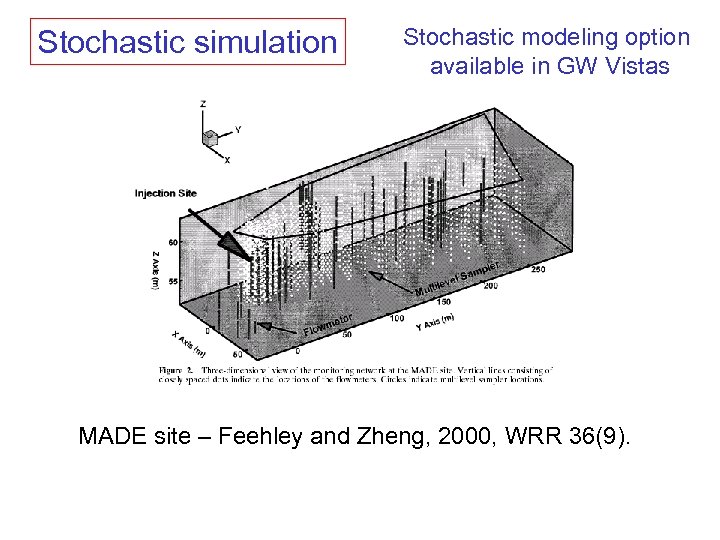

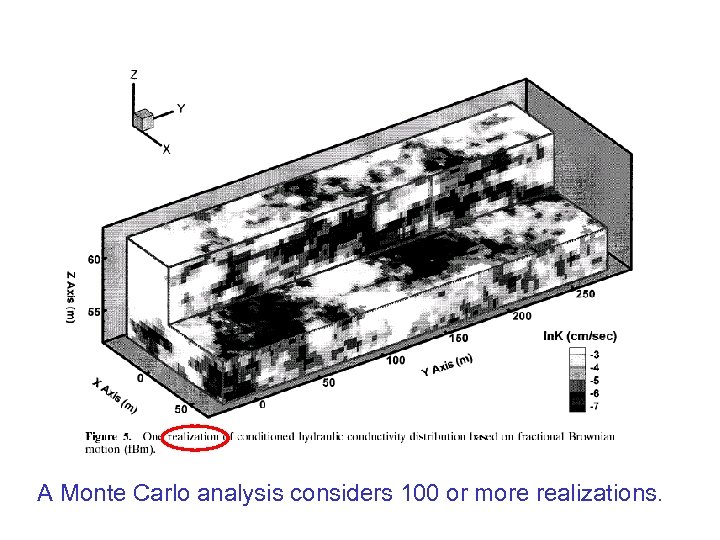

Stochastic simulation

- Stochastic modeling option available in GW Vistas
- MADE site: Feehley and Zheng, 2000, WRR 36(9)

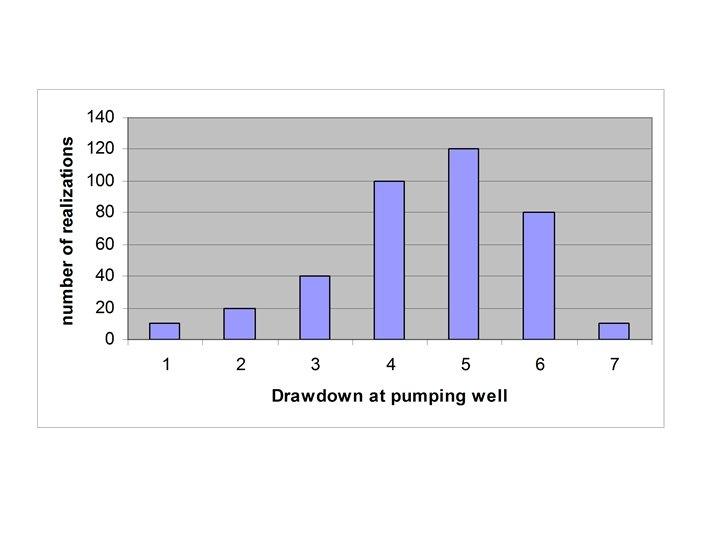

A Monte Carlo analysis considers 100 or more realizations.
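A minimal Monte Carlo sketch, assuming a toy 1D confined aquifer with uniform recharge between two fixed-head boundaries and a lognormally distributed transmissivity. Stochastic simulations typically generate many spatially heterogeneous K fields; sampling a single uniform T per realization here is a deliberate simplification, and all distribution parameters are illustrative. The spread of the predicted head across realizations is the measure of prediction uncertainty.

```python
import numpy as np


def head_1d(x, T, R, h0=100.0, hL=90.0, L=1000.0):
    """Toy steady 1D confined model with uniform recharge R between fixed heads h0 and hL."""
    return h0 + (hL - h0) * x / L + R * x * (L - x) / (2.0 * T)


rng = np.random.default_rng(seed=42)
n_real = 500  # well above the 100-realization minimum

# Hypothetical uncertainty: ln T ~ Normal(ln 50, 0.5); R fixed at 1e-3 m/day.
T_samples = np.exp(rng.normal(np.log(50.0), 0.5, size=n_real))
heads = head_1d(500.0, T_samples, 1e-3)  # predicted head at x = 500 m, one per realization

p5, p50, p95 = np.percentile(heads, [5, 50, 95])
print(f"median head {p50:.2f} m, 90% interval [{p5:.2f}, {p95:.2f}] m")
```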

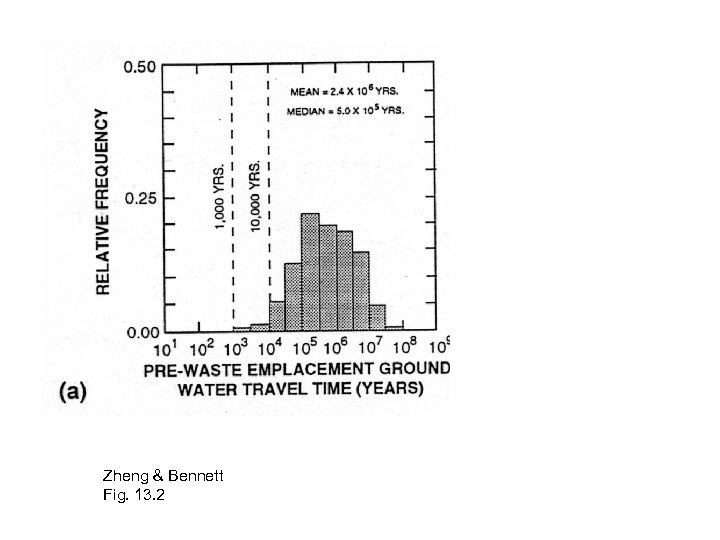

Zheng & Bennett, Fig. 13.2

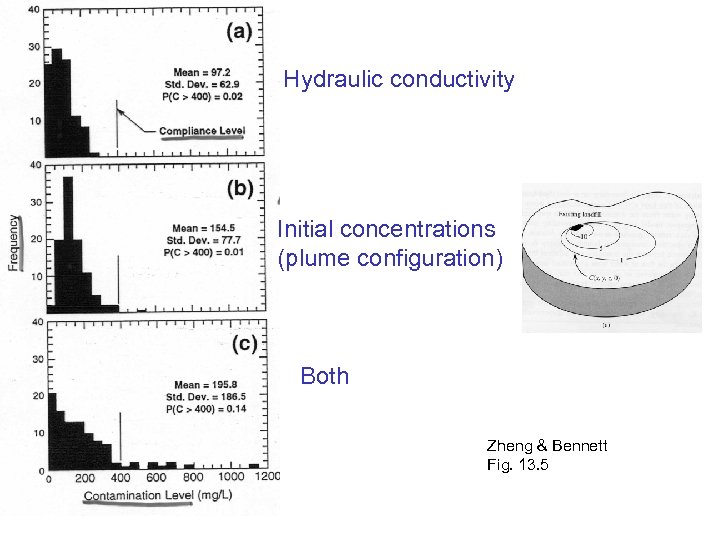

Zheng & Bennett, Fig. 13.5. Panels: hydraulic conductivity; initial concentrations (plume configuration); both.

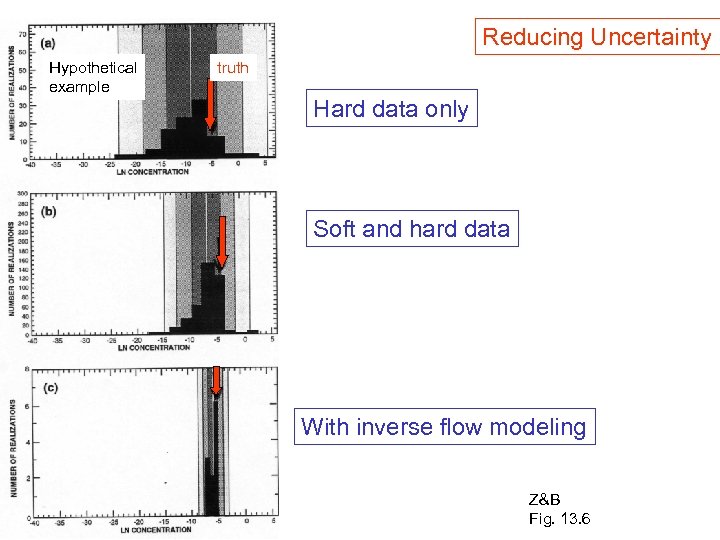

Reducing Uncertainty

Hypothetical example (Z&B Fig. 13.6). Panels: truth; hard data only; soft and hard data; with inverse flow modeling.

Confidence Building

How do we “validate” a model so that we have confidence that it will make accurate predictions?

Modeling Chronology

- 1960s: Flow models are great!
- 1970s: Contaminant transport models are great!
- 1975: What about uncertainty of flow models?
- 1980s: Contaminant transport models don’t work (because of failure to account for heterogeneity).
- 1990s: Are models reliable? Concerns about the reliability of predictions arose from efforts to model geologic repositories for high-level radioactive waste.

“The objective of model validation is to determine how well the mathematical representation of the processes describes the actual system behavior in terms of the degree of correlation between model calculations and actual measured data” (NRC, 1990)

Hmmmmm… Sounds like calibration. What they really mean is that a valid model will yield an accurate prediction.

What constitutes “validation”? (code vs. model)

- NRC study (1990): Model validation is not possible.
- Oreskes et al. (1994), paper in Science:
  - Calibration = forced empirical adequacy
  - Verification = assertion of truth (possible in a closed system, e.g., testing of codes)
  - Validation = establishment of legitimacy (does not contain obvious errors), confirmation, confidence building

How to build confidence in a model

- Calibration (history matching)
  - steady-state calibration(s)
  - transient calibration
- “Verification”: requires an independent set of field data
- Post-audit: requires waiting for the prediction to occur
- Models as interactive management tools (e.g., the AEM model of The Netherlands)
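A split-sample test is one way to carry out the “verification” step: calibrate using one set of observations, then check the calibrated model against an independent set that was held back. The sketch is hypothetical throughout: a synthetic 1D confined aquifer with uniform recharge, “true” heads generated from an assumed transmissivity plus measurement noise, and a one-parameter least-squares calibration (the toy model is linear in 1/T, so the fit has a closed form).

```python
import numpy as np

L, h0, hL, R = 1000.0, 100.0, 90.0, 1e-3  # hypothetical setup (m, m, m, m/day)


def head_1d(x, T):
    """Toy steady 1D confined model with uniform recharge R between fixed heads."""
    return h0 + (hL - h0) * x / L + R * x * (L - x) / (2.0 * T)


def calibrate_T(x, h_obs):
    """Least-squares fit of 1/T (the model is linear in 1/T), returned as T."""
    g = R * x * (L - x) / 2.0             # coefficient multiplying 1/T
    d = h_obs - (h0 + (hL - h0) * x / L)  # head minus the part that does not depend on T
    inv_T = np.sum(g * d) / np.sum(g * g)
    return 1.0 / inv_T


rng = np.random.default_rng(0)
T_true = 40.0
x_cal = np.array([200.0, 400.0, 600.0])  # wells used for calibration
x_ver = np.array([300.0, 700.0, 850.0])  # independent wells held back for verification
noise = 0.05                             # measurement error (m)
h_cal = head_1d(x_cal, T_true) + rng.normal(0.0, noise, x_cal.size)
h_ver = head_1d(x_ver, T_true) + rng.normal(0.0, noise, x_ver.size)

T_fit = calibrate_T(x_cal, h_cal)
rmse_ver = np.sqrt(np.mean((h_ver - head_1d(x_ver, T_fit)) ** 2))
print(f"T_fit = {T_fit:.1f} m^2/day, verification RMSE = {rmse_ver:.3f} m")
```

Because the synthetic data come from the same conceptual model being calibrated, the verification residuals here stay small; with field data, a poor fit to the independent set can point to errors in the conceptual model or parameters that the calibration alone did not reveal.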

HAPPY MODELING!
