07c8a4777e648b3e30fd76c95cf3508e.ppt

- Number of slides: 71

Unambiguous + Unlimited = Unsupervised: Using the Web for Natural Language Processing Problems § Marti Hearst, School of Information, UC Berkeley § Joint work with Preslav Nakov § BYU CS Colloquium, Dec 6, 2007 § This research was supported in part by NSF DBI-0317510

Natural Language Processing § The ultimate goal: write programs that read and understand stories and conversations. § This is too hard! Instead we tackle sub-problems. § There have been notable successes lately: § Machine translation is vastly improved § Speech recognition is decent in limited circumstances § Text categorization works with some accuracy Marti Hearst, BYU CS 2007

How can a machine understand these differences? Get the cat with the gloves.

How can a machine understand these differences? Get the sock from the cat with the gloves. Get the glove from the cat with the socks.

How can a machine understand these differences? § Decorate the cake with the frosting. § Decorate the cake with the kids. § Throw out the cake with the frosting. § Throw out the cake with the kids.

Why is this difficult? § Same syntactic structure, different meanings. § Natural language processing algorithms have to deal with the specifics of individual words. § Enormous vocabulary sizes: § The average English speaker’s vocabulary is around 50,000 words. § Many of these can be combined with many others, § and they mean different things when they do!

How to tackle this problem? § The field was stuck for quite some time. § Hand-enter all semantic concepts and relations § A new approach started around 1990 § Get large text collections § Compute statistics over the words in those collections § There are many different algorithms.

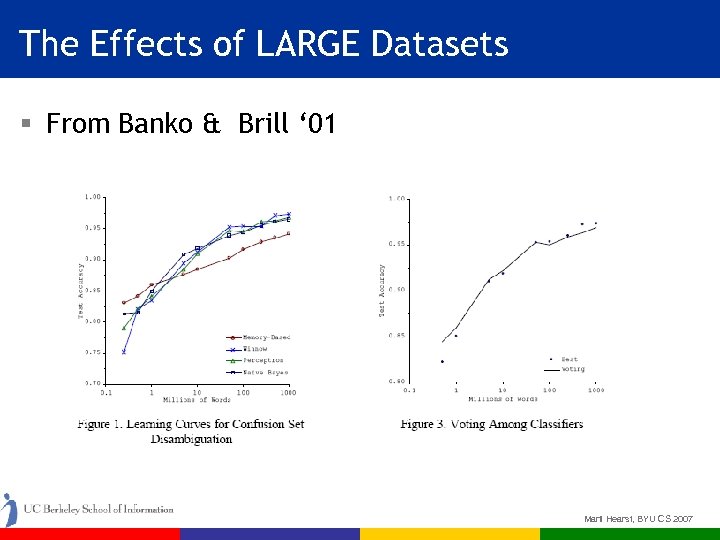

Size Matters § Recent realization: bigger is better than smarter! § Banko and Brill ’01: “Scaling to Very, Very Large Corpora for Natural Language Disambiguation”, ACL

Example Problem § Grammar checker example: Which word to use? <principal> <principle> § Solution: use well-edited text and look at which words surround each use: § I am in my third year as the principal of Anamosa High School. § School-principal transfers caused some upset. § This is a simple formulation of the quantum mechanical uncertainty principle. § Power without principle is barren, but principle without power is futile. (Tony Blair)

Using Very, Very Large Corpora § Keep track of which words are the neighbors of each spelling in well-edited text, e.g.: § Principal: “high school” § Principle: “rule” § At grammar-check time, choose the spelling best predicted by the surrounding words. § Surprising results: § Log-linear improvement even to a billion words! § Getting more data is better than fine-tuning algorithms!
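The neighbor-word idea can be sketched in a few lines of Python. This is a minimal illustration, not Banko and Brill's setup (they compared several learners on corpora of up to a billion words): it assumes a naive Bayes-style scorer over a ±2-word window, add-one smoothing, and a toy corpus made of the example sentences; the names `train`, `choose`, and `WINDOW` are hypothetical.

```python
import math
from collections import Counter, defaultdict

# Toy stand-in for "well-edited text" (the example sentences, lowercased).
TRAINING_SENTENCES = [
    "i am in my third year as the principal of anamosa high school",
    "school principal transfers caused some upset",
    "this is a simple formulation of the quantum mechanical uncertainty principle",
    "power without principle is barren",
]
CONFUSION_SET = ("principal", "principle")
WINDOW = 2  # assumed context size: words on each side of the target


def train(sentences, confusion_set, window=WINDOW):
    """Count the words that appear within `window` positions of each spelling."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, tok in enumerate(tokens):
            if tok in confusion_set:
                left = tokens[max(0, i - window):i]
                right = tokens[i + 1:i + 1 + window]
                counts[tok].update(left + right)
    return counts


def choose(context_words, counts, confusion_set):
    """Return the spelling best predicted by the surrounding words."""
    vocab = set()
    for spelling in confusion_set:
        vocab.update(counts[spelling])

    def score(spelling):
        total = sum(counts[spelling].values())
        # Add-one smoothed log-likelihood of the context; the class prior is ignored.
        return sum(math.log((counts[spelling][w] + 1) / (total + len(vocab)))
                   for w in context_words)

    return max(confusion_set, key=score)


counts = train(TRAINING_SENTENCES, CONFUSION_SET)
print(choose(["high", "school"], counts, CONFUSION_SET))  # principal
print(choose(["uncertainty"], counts, CONFUSION_SET))      # principle
```

With four sentences the counts are tiny; the point of the slide is that the same counting scheme keeps improving as the corpus grows.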

The Effects of LARGE Datasets § From Banko & Brill ’01

How to Extend this Idea? § This is an exciting result … § BUT relies on having huge amounts of text that has been appropriately annotated!

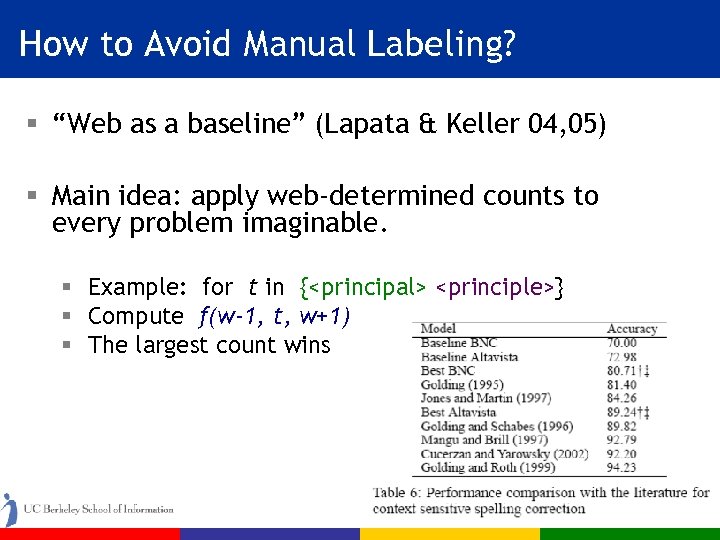

How to Avoid Manual Labeling? § “Web as a baseline” (Lapata & Keller ’04, ’05) § Main idea: apply web-determined counts to every problem imaginable. § Example: for t in {<principal>, <principle>} § Compute $f(w_{-1}, t, w_{+1})$, the count of t together with its left neighbor $w_{-1}$ and right neighbor $w_{+1}$ § The largest count wins
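The “largest count wins” rule amounts to an argmax over phrase counts. The sketch below assumes a hypothetical `web_count` function that returns page hits for an exact-phrase query; here it is backed by a small dictionary of made-up numbers, not real search-engine figures.

```python
# Made-up hit counts for exact-phrase queries (illustration only);
# a real system would send each phrase to a search engine.
MOCK_HITS = {
    "school principal of": 1200,
    "school principle of": 15,
}


def web_count(phrase):
    """Hypothetical stand-in for a search engine's page-hit count."""
    return MOCK_HITS.get(phrase, 0)


def pick_by_web_counts(prev_word, candidates, next_word, count=web_count):
    """Choose the candidate t that maximizes f(w_-1, t, w_+1); ties go to the first candidate."""
    return max(candidates, key=lambda t: count(f"{prev_word} {t} {next_word}"))


print(pick_by_web_counts("school", ["principal", "principle"], "of"))  # principal
```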

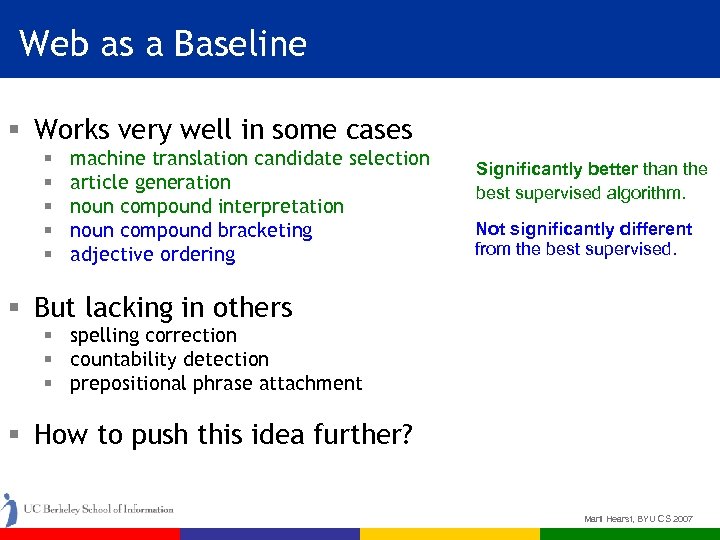

Web as a Baseline § Works very well in some cases: § machine translation candidate selection § article generation § noun compound interpretation § noun compound bracketing § adjective ordering § On some of these tasks it was significantly better than the best supervised algorithm; on others it was not significantly different from the best supervised one. § But lacking in others: § spelling correction § countability detection § prepositional phrase attachment § How to push this idea further?

Using Unambiguous Cases § The trick: look for unambiguous cases to start § Use these to improve the results beyond what co-occurrence statistics indicate. § An Early Example: § Hindle and Rooth, “Structural Ambiguity and Lexical Relations”, ACL ’90, Computational Linguistics ’93 § Problem: Prepositional Phrase attachment § I eat/v spaghetti/n1 with/p a fork/n2. § I eat/v spaghetti/n1 with/p sauce/n2. § Question: does the PP “p n2” attach to v or to n1?

Using Unambiguous Cases § How to do this with unlabeled data? § First try: § Parse some text into phrase structure § Then compute certain co-occurrences: f(v, n1, p), f(v, n1) § Problem: results not accurate enough § The trick: look for unambiguous cases: § Spaghetti with sauce is delicious. (pre-verbal) § I eat with a fork. (no direct object) § Use these to improve the results beyond what co-occurrence statistics indicate.
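A simplified sketch of how unambiguous cases can drive an attachment decision: count (head, preposition) pairs from verb-only contexts and from pre-verbal noun phrases, then compare smoothed estimates of P(p | v) and P(p | n1). The observation lists, the additive smoothing, and the names `build_stats` and `attach` are assumptions for illustration; Hindle and Rooth's paper uses a more careful statistical score. Like their lexical association approach, this sketch ignores n2.

```python
from collections import Counter

# Hypothetical (head, preposition) pairs harvested from unambiguous contexts.
# Verb cases: the verb has no direct object ("I eat with a fork").
VERB_PP = [("eat", "with"), ("eat", "with"), ("eat", "at")]
# Noun cases: the noun phrase is pre-verbal ("Spaghetti with sauce is delicious").
NOUN_PP = [("spaghetti", "with"), ("spaghetti", "with"),
           ("spaghetti", "with"), ("spaghetti", "in")]


def build_stats(pairs):
    """Return (pair counts, head counts) for a list of (head, preposition) pairs."""
    return Counter(pairs), Counter(head for head, _ in pairs)


def attach(v, n1, p, verb_stats, noun_stats, alpha=0.5):
    """Compare smoothed P(p | v) with P(p | n1); ties go to the noun."""
    verb_pairs, verb_heads = verb_stats
    noun_pairs, noun_heads = noun_stats
    p_verb = (verb_pairs[(v, p)] + alpha) / (verb_heads[v] + 1)
    p_noun = (noun_pairs[(n1, p)] + alpha) / (noun_heads[n1] + 1)
    return "verb" if p_verb > p_noun else "noun"


verb_stats, noun_stats = build_stats(VERB_PP), build_stats(NOUN_PP)
print(attach("eat", "spaghetti", "at", verb_stats, noun_stats))  # verb
print(attach("eat", "spaghetti", "in", verb_stats, noun_stats))  # noun
```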

Unambiguous + Unlimited = Unsupervised § Apply the Unambiguous Case Idea to the Very, Very Large Corpora idea § The potential of these approaches is not fully realized § Our work (with Preslav Nakov): § Structural Ambiguity Decisions § PP-attachment § Noun compound bracketing § Coordination grouping § Semantic Relation Acquisition § Hypernym (ISA) relations § Verbal relations between nouns § SAT Analogy problems

Applying U + U = U to Structural Ambiguity § We introduce the use of (nearly) unambiguous features: § Surface features § Paraphrases § Combined with ngrams § From very, very large corpora § Achieve state-of-the-art results without labeled examples.

Noun Compound Bracketing § (a) [ [ liver cell ] antibody ] (left bracketing) § (b) [ liver [ cell line ] ] (right bracketing) § In (a), the antibody targets the liver cell. § In (b), the cell line is derived from the liver.

Dependency Model § right bracketing: $[w_1 [w_2 w_3]]$ § $w_2 w_3$ is a compound (modified by $w_1$): home health care § $w_1$ and $w_2$ independently modify $w_3$: adult male rat § left bracketing: $[[w_1 w_2] w_3]$ § only one modificational choice possible: law enforcement officer

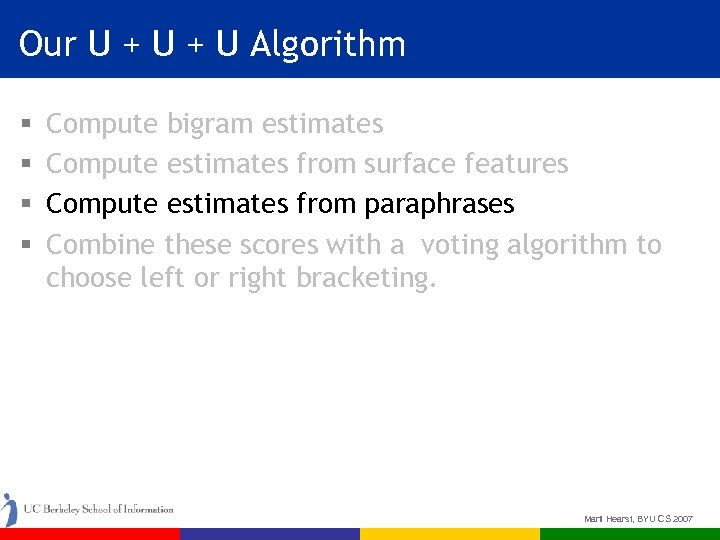

Our U + U Algorithm § Compute bigram estimates § Compute estimates from surface features § Compute estimates from paraphrases § Combine these scores with a voting algorithm to choose left or right bracketing. § We use the same general approach for two other structural ambiguity problems.
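The final combination step can be sketched as a plain majority vote over the per-feature predictions. This assumes each estimator (bigram, surface feature, paraphrase) returns "left", "right", or None when it has no evidence; the function name `vote` and the decision to return None on a tie are assumptions, since the slide does not say how ties are broken.

```python
from collections import Counter


def vote(predictions):
    """Majority vote over per-feature predictions: 'left', 'right', or None (no evidence)."""
    tally = Counter(p for p in predictions if p is not None)
    if tally["left"] == tally["right"]:
        return None  # no votes or a tie; the tie-breaking policy is left open here
    return "left" if tally["left"] > tally["right"] else "right"


print(vote(["left", None, "right", "left"]))  # left
```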

Using n-grams to make predictions § Say we are trying to distinguish: [home health] care vs. home [health care] § Main idea: compare these co-occurrence probabilities: § “home health” vs. § “health care”
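A sketch of this comparison, assuming a hypothetical `mock_count` lookup filled with invented counts. Each bigram count is divided by the count of its second word, so that a frequent head word does not win by default; that normalization is an assumption chosen to match the conditional probabilities used elsewhere in the talk.

```python
# Invented n-gram counts, for illustration only.
MOCK_COUNTS = {
    "home health": 40, "health": 1000,
    "health care": 500, "care": 2000,
}


def mock_count(ngram):
    """Hypothetical n-gram frequency lookup."""
    return MOCK_COUNTS.get(ngram, 0)


def adjacency_bracketing(w1, w2, w3, count=mock_count):
    """Compare Pr(w1 w2 | w2) with Pr(w2 w3 | w3); the stronger pair is bracketed together."""
    p_left = count(f"{w1} {w2}") / max(count(w2), 1)
    p_right = count(f"{w2} {w3}") / max(count(w3), 1)
    if p_left == p_right:
        return None
    return "left" if p_left > p_right else "right"


print(adjacency_bracketing("home", "health", "care"))  # right
```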

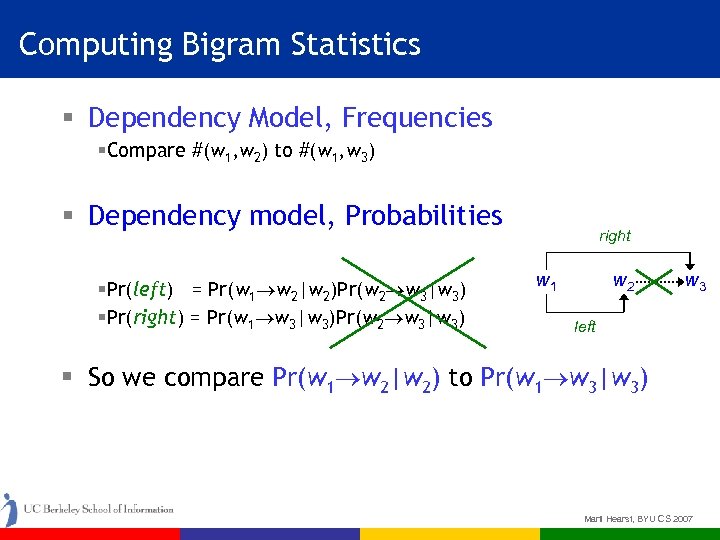

Computing Bigram Statistics § Dependency model, frequencies § Compare $\#(w_1, w_2)$ to $\#(w_1, w_3)$ § Dependency model, probabilities § $\Pr(\text{left}) = \Pr(w_1 w_2 \mid w_2)\,\Pr(w_2 w_3 \mid w_3)$ § $\Pr(\text{right}) = \Pr(w_1 w_3 \mid w_3)\,\Pr(w_2 w_3 \mid w_3)$ § Both share the factor $\Pr(w_2 w_3 \mid w_3)$, so we compare $\Pr(w_1 w_2 \mid w_2)$ to $\Pr(w_1 w_3 \mid w_3)$
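Both variants of the dependency model reduce to a single comparison. The sketch below assumes a hypothetical `mock_count` lookup with invented counts for “law enforcement officer”; ties default to right bracketing, which is an arbitrary choice made here.

```python
# Invented counts for "law enforcement officer" (illustration only).
MOCK_COUNTS = {
    "law enforcement": 300, "enforcement": 1000,
    "law officer": 20, "officer": 5000,
}


def mock_count(ngram):
    """Hypothetical n-gram frequency lookup."""
    return MOCK_COUNTS.get(ngram, 0)


def dependency_by_frequency(w1, w2, w3, count=mock_count):
    """Compare #(w1, w2) with #(w1, w3)."""
    return "left" if count(f"{w1} {w2}") > count(f"{w1} {w3}") else "right"


def dependency_by_probability(w1, w2, w3, count=mock_count):
    """Compare Pr(w1 w2 | w2) with Pr(w1 w3 | w3); the shared Pr(w2 w3 | w3) cancels."""
    p_left = count(f"{w1} {w2}") / max(count(w2), 1)
    p_right = count(f"{w1} {w3}") / max(count(w3), 1)
    return "left" if p_left > p_right else "right"


print(dependency_by_frequency("law", "enforcement", "officer"))    # left
print(dependency_by_probability("law", "enforcement", "officer"))  # left
```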

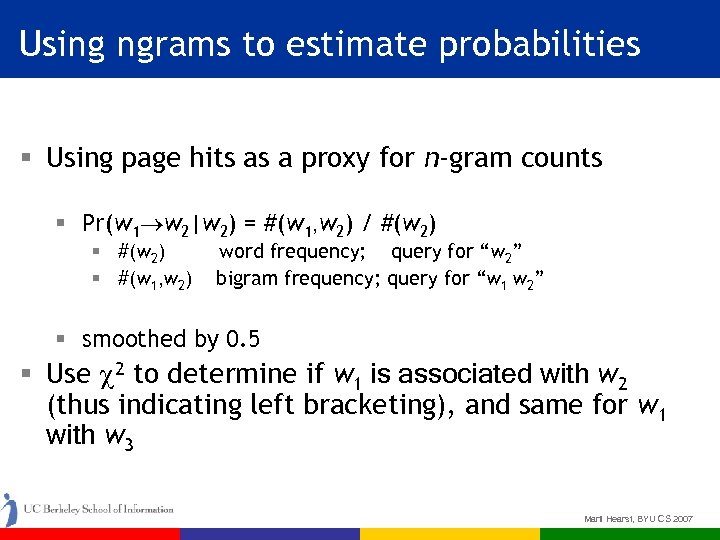

Using n-grams to estimate probabilities § Using page hits as a proxy for n-gram counts § $\Pr(w_1 w_2 \mid w_2) = \#(w_1, w_2) / \#(w_2)$ § $\#(w_2)$: word frequency; query for “$w_2$” § $\#(w_1, w_2)$: bigram frequency; query for “$w_1$ $w_2$” § smoothed by 0.5 § Use $\chi^2$ to determine if $w_1$ is associated with $w_2$ (thus indicating left bracketing), and the same for $w_1$ with $w_3$
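A sketch of the two estimates, under stated assumptions: the 0.5 is added to both raw counts, the $\chi^2$ statistic is the standard Pearson test on a 2×2 contingency table built from page hits, and `n` (the total number of words indexed) is a hypothetical value supplied by the caller. Note that $\chi^2$ measures the strength of association but not its direction. All function names are illustrative.

```python
def smoothed_prob(bigram_hits, word_hits, alpha=0.5):
    """Pr(w1 w2 | w2) ~ #(w1, w2) / #(w2), with alpha added to both counts (assumed)."""
    return (bigram_hits + alpha) / (word_hits + alpha)


def chi_square(pair, first, second, n):
    """Pearson chi-square for the 2x2 table of (w_i present?, w_j present?)."""
    a = pair             # w_i followed by w_j
    b = first - pair     # w_i without w_j
    c = second - pair    # w_j without w_i
    d = n - a - b - c    # neither word
    denom = (a + c) * (b + d) * (a + b) * (c + d)
    return n * (a * d - b * c) ** 2 / denom if denom else 0.0


def chi_square_bracketing(w1, w2, w3, count, n):
    """Left if w1 is more strongly associated with w2 than with w3."""
    left = chi_square(count(f"{w1} {w2}"), count(w1), count(w2), n)
    right = chi_square(count(f"{w1} {w3}"), count(w1), count(w3), n)
    return "left" if left > right else "right"


# Invented counts, for illustration only.
MOCK = {"law": 1000, "enforcement": 500, "officer": 800,
        "law enforcement": 300, "law officer": 10}
print(chi_square_bracketing("law", "enforcement", "officer",
                            lambda s: MOCK.get(s, 0), n=1_000_000))  # left
```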

Our U + U Algorithm § Compute bigram estimates § Compute estimates from surface features § Compute estimates from paraphrases § Combine these scores with a voting algorithm to choose left or right bracketing.

Web-derived Surface Features § Authors often disambiguate noun compounds using surface markers, e.g.: § amino-acid sequence → left § brain stem’s cell → left § brain’s stem cell → right § The enormous size of the Web makes these frequent enough to be useful.

Web-derived Surface Features: Dash (hyphen) § Left dash § cell-cycle analysis → left § Right dash § donor T-cell → right § Double dash § T-cell-depletion → unusable…
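The dash rules can be sketched as a function that maps one observed surface form to a vote. It assumes the form has already been retrieved (for instance from search snippets) and matched to the compound; the name `dash_vote` is hypothetical.

```python
def dash_vote(observed):
    """Map one observed surface form of a three-word noun compound to a bracketing vote."""
    tokens = observed.split()
    if len(tokens) == 1 and tokens[0].count("-") == 2:
        return None  # double dash, e.g. "T-cell-depletion": unusable
    if len(tokens) == 2:
        first, second = tokens
        if first.count("-") == 1 and "-" not in second:
            return "left"   # left dash: "cell-cycle analysis"
        if second.count("-") == 1 and "-" not in first:
            return "right"  # right dash: "donor T-cell"
    return None


print(dash_vote("cell-cycle analysis"))  # left
```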

Web-derived Surface Features: Possessive Marker § Attached to the first word § brain’s stem cell → right § Attached to the second word § brain stem’s cell → left § Combined features § brain’s stem-cell → right
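The possessive rules in the same style, assuming observed forms are whitespace-separated and may use either a straight or a curly apostrophe; `possessive_vote` is a hypothetical name.

```python
POSSESSIVE_SUFFIXES = ("'s", "\u2019s")  # straight and curly apostrophes


def possessive_vote(observed):
    """Vote from the position of a possessive marker in a three-word compound."""
    tokens = observed.split()
    if len(tokens) >= 2 and tokens[0].endswith(POSSESSIVE_SUFFIXES):
        return "right"  # "brain's stem cell", and combined "brain's stem-cell"
    if len(tokens) == 3 and tokens[1].endswith(POSSESSIVE_SUFFIXES):
        return "left"   # "brain stem's cell"
    return None


print(possessive_vote("brain stem's cell"))  # left
```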

Web-derived Surface Features: Capitalization § anycase – lowercase – uppercase § Plasmodium vivax Malaria → left § plasmodium vivax Malaria → left § lowercase – uppercase – anycase § brain Stem cell → right § brain Stem Cell → right § Disable this on: § Roman digits § Single-letter words, e.g. vitamin D deficiency

Web-derived Surface Features: Embedded Slash § Left embedded slash § leukemia/lymphoma cell → right

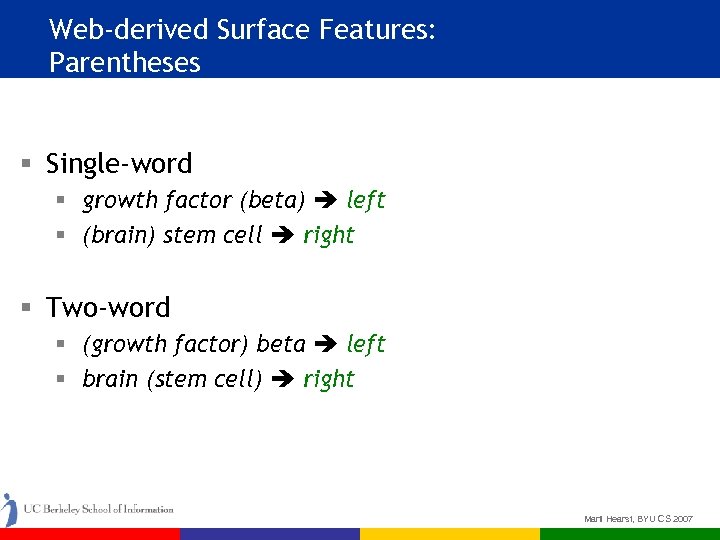

Web-derived Surface Features: Parentheses § Single-word § growth factor (beta) → left § (brain) stem cell → right § Two-word § (growth factor) beta → left § brain (stem cell) → right

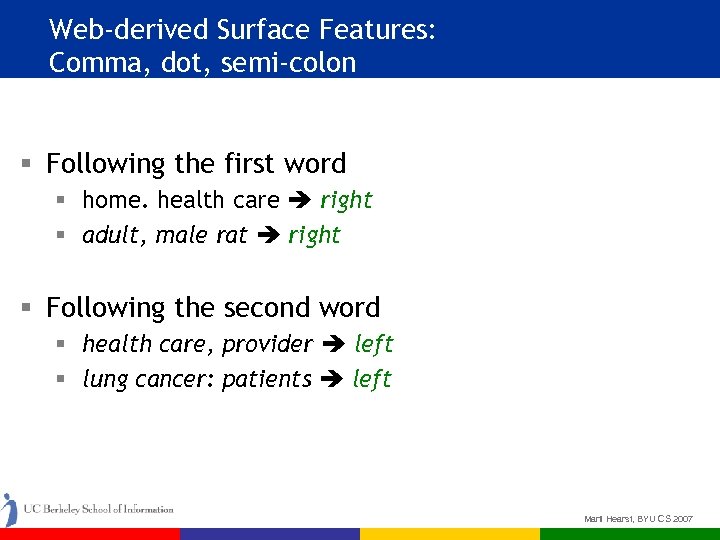

Web-derived Surface Features: Comma, dot, semi-colon § Following the first word § home. health care → right § adult, male rat → right § Following the second word § health care, provider → left § lung cancer: patients → left

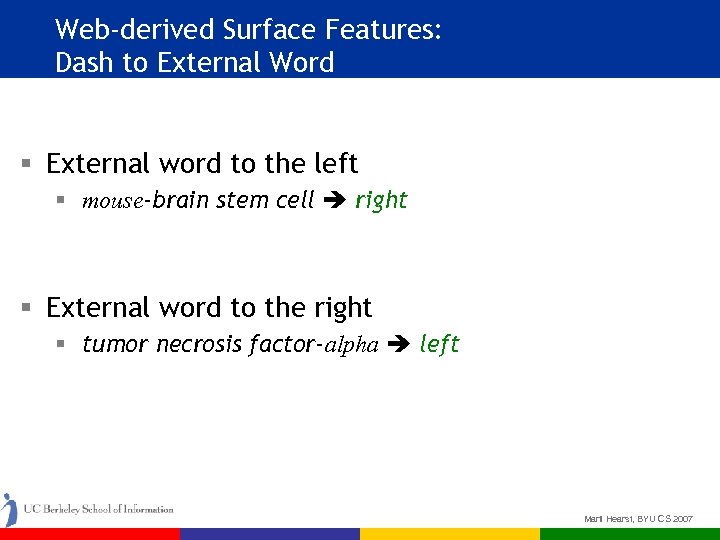

Web-derived Surface Features: Dash to External Word § External word to the left § mouse-brain stem cell → right § External word to the right § tumor necrosis factor-alpha → left

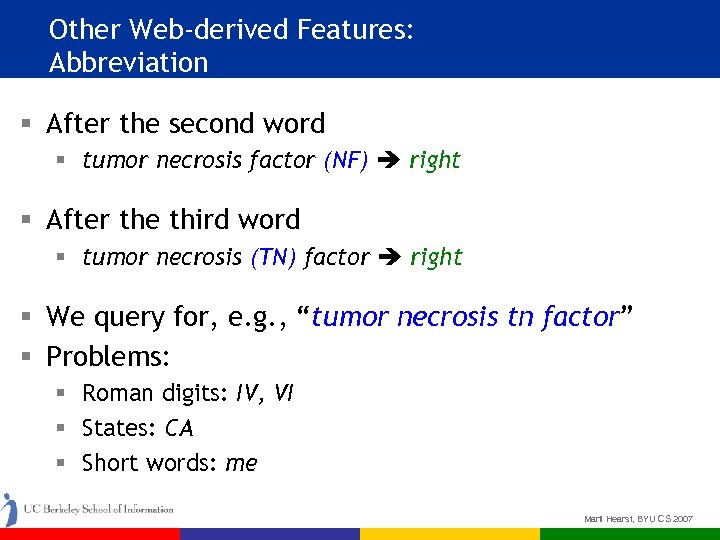

Other Web-derived Features: Abbreviation § After the second word § tumor necrosis factor (NF) → right § After the third word § tumor necrosis (TN) factor → right § We query for, e.g., “tumor necrosis tn factor” § Problems: § Roman digits: IV, VI § States: CA § Short words: me

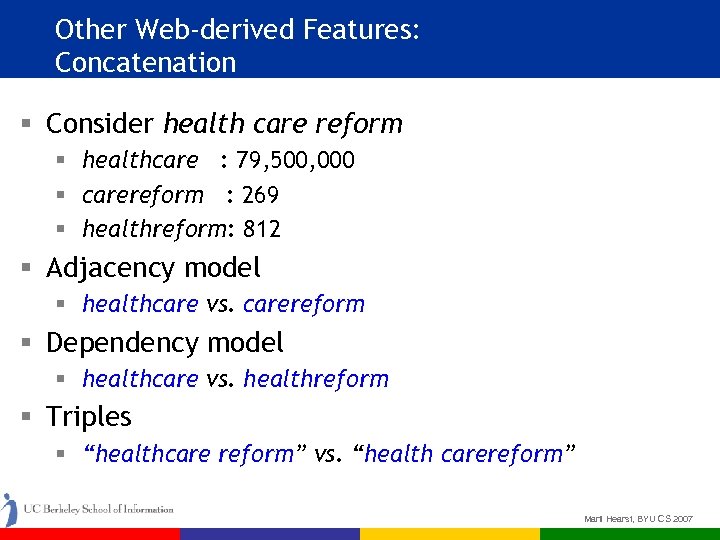

Other Web-derived Features: Concatenation § Consider health care reform § healthcare: 79,500,000 § carereform: 269 § healthreform: 812 § Adjacency model § healthcare vs. carereform § Dependency model § healthcare vs. healthreform § Triples § “healthcare reform” vs. “health carereform”
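The adjacency and dependency comparisons on concatenated words can be sketched with the three hit counts listed for “health care reform”. The function names are hypothetical, and the triples comparison is omitted because its counts are not given.

```python
# Page-hit counts listed for "health care reform".
HITS = {"healthcare": 79_500_000, "carereform": 269, "healthreform": 812}


def compare(left_hits, right_hits):
    """Turn two hit counts into a bracketing vote (None on a tie)."""
    if left_hits == right_hits:
        return None
    return "left" if left_hits > right_hits else "right"


def concat_adjacency(w1, w2, w3, hits):
    """Adjacency: w1+w2 vs. w2+w3 (healthcare vs. carereform)."""
    return compare(hits.get(w1 + w2, 0), hits.get(w2 + w3, 0))


def concat_dependency(w1, w2, w3, hits):
    """Dependency: w1+w2 vs. w1+w3 (healthcare vs. healthreform)."""
    return compare(hits.get(w1 + w2, 0), hits.get(w1 + w3, 0))


print(concat_adjacency("health", "care", "reform", HITS))   # left
print(concat_dependency("health", "care", "reform", HITS))  # left
```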

Other Web-derived Features: Reorder § Reorders for “health care reform” § “care reform health” → right § “reform health care” → left
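Generating the two reorder queries is mechanical: each one keeps one candidate pair adjacent and moves the remaining word. How the resulting hit counts become a vote is not spelled out on the slide, so this sketch stops at query generation; `reorder_queries` is a hypothetical name.

```python
def reorder_queries(w1, w2, w3):
    """Exact-phrase reorderings of "w1 w2 w3" and the bracketing each one supports."""
    return {
        f'"{w2} {w3} {w1}"': "right",  # keeps [w2 w3] together: "care reform health"
        f'"{w3} {w1} {w2}"': "left",   # keeps [w1 w2] together: "reform health care"
    }


print(reorder_queries("health", "care", "reform"))
```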

Other Web-derived Features: Internal Inflection Variability § Vary inflection of second word § tyrosine kinase activation § tyrosine kinases activation

Other Web-derived Features: Switch the First Two Words § Predict right if we can reorder adult male rat as male adult rat

Our U + U Algorithm § Compute bigram estimates § Compute estimates from surface features § Compute estimates from paraphrases § Combine these scores with a voting algorithm to choose left or right bracketing.

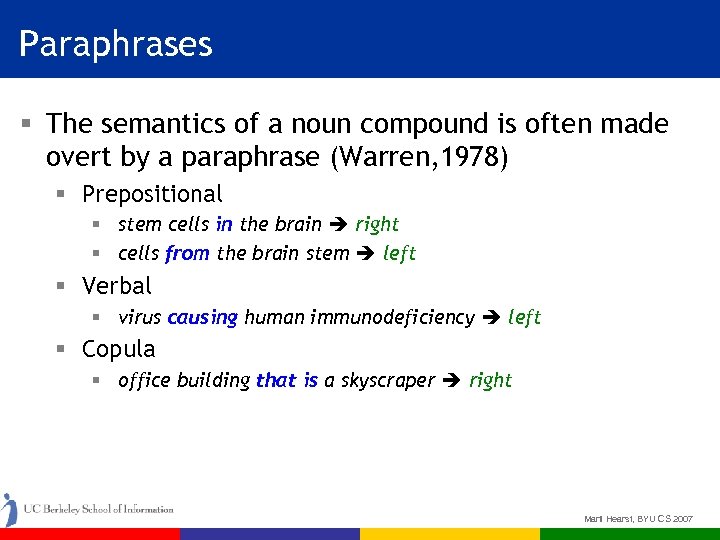

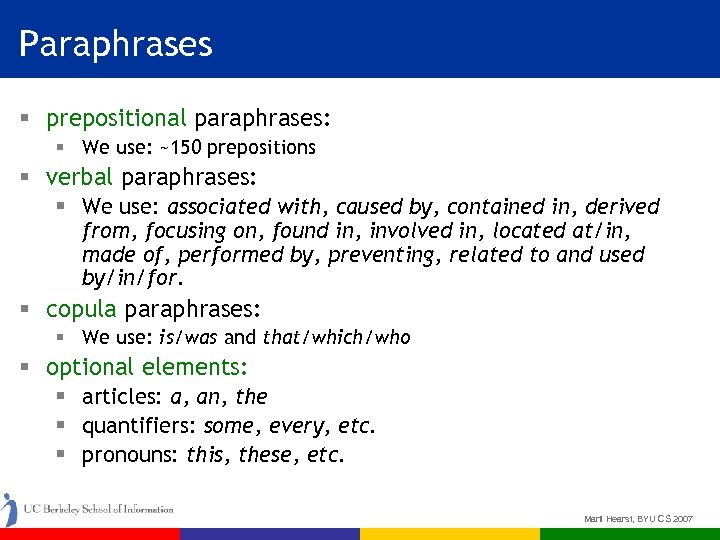

Paraphrases § The semantics of a noun compound is often made overt by a paraphrase (Warren, 1978) § Prepositional § stem cells in the brain → right § cells from the brain stem → left § Verbal § virus causing human immunodeficiency → left § Copula § office building that is a skyscraper → right

Paraphrases § prepositional paraphrases: § We use: ~150 prepositions § verbal paraphrases: § We use: associated with, caused by, contained in, derived from, focusing on, found in, involved in, located at/in, made of, performed by, preventing, related to and used by/in/for. § copula paraphrases: § We use: is/was and that/which/who § optional elements: § articles: a, an, the § quantifiers: some, every, etc. § pronouns: this, these, etc.
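A sketch of prepositional paraphrase generation for a compound “w1 w2 w3”: right bracketing moves w1 after the preposition (“stem cell in the brain”), left bracketing moves “w1 w2” there (“cell from the brain stem”). The preposition subset, the article list, and the name `prepositional_paraphrases` are illustrative assumptions; the sketch does no inflection, so it produces “stem cell”, not “stem cells”.

```python
PREPOSITIONS = ("in", "from", "of", "for", "on")  # small illustrative subset of ~150
ARTICLES = ("", "the ", "a ")  # optional article before the moved words


def prepositional_paraphrases(w1, w2, w3, preps=PREPOSITIONS):
    """Map paraphrase queries for the compound "w1 w2 w3" to the bracketing they support."""
    queries = {}
    for p in preps:
        for art in ARTICLES:
            queries[f"{w2} {w3} {p} {art}{w1}"] = "right"  # "stem cell in the brain"
            queries[f"{w3} {p} {art}{w1} {w2}"] = "left"   # "cell from the brain stem"
    return queries


queries = prepositional_paraphrases("brain", "stem", "cell")
print(queries["stem cell in the brain"], queries["cell from the brain stem"])  # right left
```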

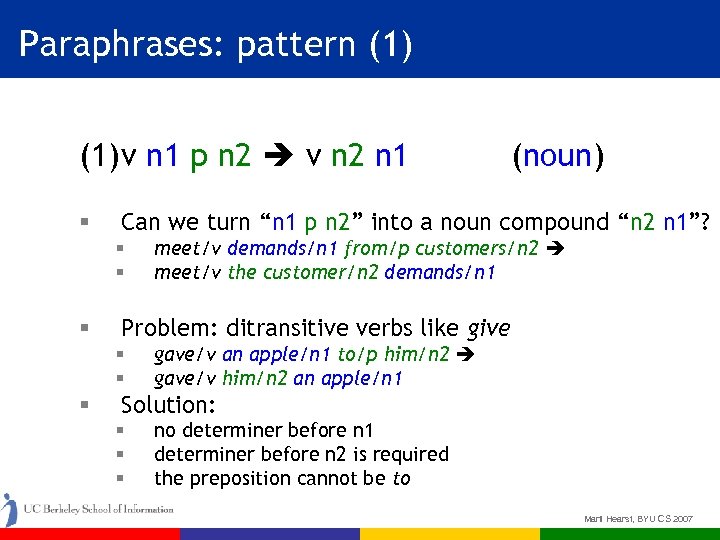

Paraphrases: pattern (1) § v n1 p n2 ⇒ v n2 n1 (noun) § Can we turn “n1 p n2” into a noun compound “n2 n1”? § meet/v demands/n1 from/p customers/n2 ⇒ meet/v the customer/n2 demands/n1 § Problem: ditransitive verbs like give § gave/v an apple/n1 to/p him/n2 ⇒ gave/v him/n2 an apple/n1 § Solution: § no determiner before n1 § determiner before n2 is required § the preposition cannot be to

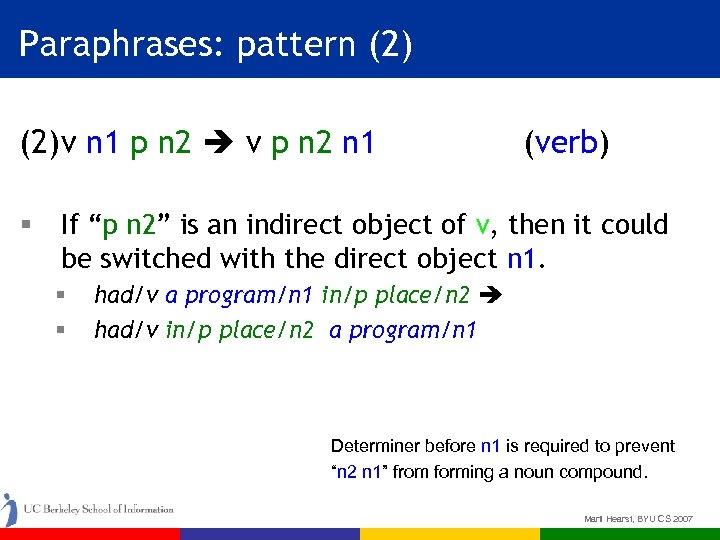

Paraphrases: pattern (2) § v n1 p n2 ⇒ v p n2 n1 (verb) § If “p n2” is an indirect object of v, then it could be switched with the direct object n1. § had/v a program/n1 in/p place/n2 ⇒ had/v in/p place/n2 a program/n1 § A determiner before n1 is required to prevent “n2 n1” from forming a noun compound.

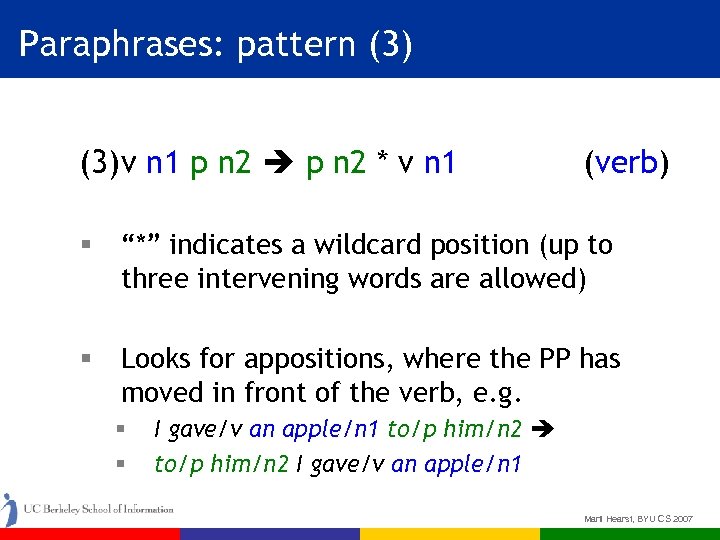

Paraphrases: pattern (3) § v n1 p n2 ⇒ p n2 * v n1 (verb) § “*” indicates a wildcard position (up to three intervening words are allowed) § Looks for appositions, where the PP has moved in front of the verb, e.g. § I gave/v an apple/n1 to/p him/n2 ⇒ to/p him/n2 I gave/v an apple/n1

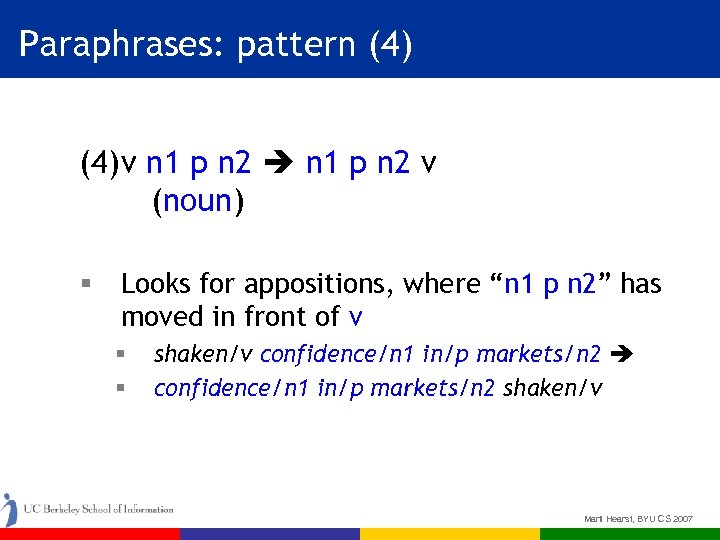

Paraphrases: pattern (4) § v n1 p n2 ⇒ n1 p n2 v (noun) § Looks for appositions, where “n1 p n2” has moved in front of v § shaken/v confidence/n1 in/p markets/n2 ⇒ confidence/n1 in/p markets/n2 shaken/v

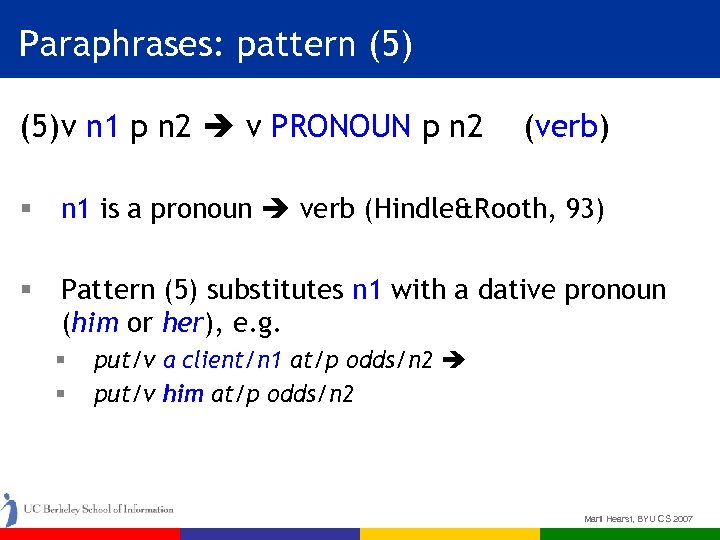

Paraphrases: pattern (5) § v n1 p n2 ⇒ v PRONOUN p n2 (verb) § If n1 is a pronoun, the PP attaches to the verb (Hindle & Rooth ’93) § Pattern (5) substitutes n1 with a dative pronoun (him or her), e.g. § put/v a client/n1 at/p odds/n2 ⇒ put/v him at/p odds/n2

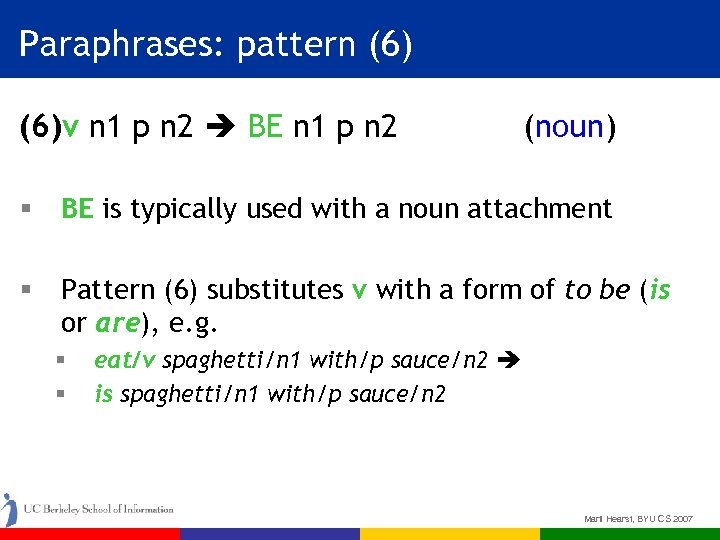

Paraphrases: pattern (6) § v n1 p n2 ⇒ BE n1 p n2 (noun) § BE is typically used with a noun attachment § Pattern (6) substitutes v with a form of to be (is or are), e.g. § eat/v spaghetti/n1 with/p sauce/n2 ⇒ is spaghetti/n1 with/p sauce/n2
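The six patterns can be sketched as query templates paired with the attachment each supports. Simplifying assumptions: the required determiners are fixed to “the” before n2 in pattern (1) and “a” before n1 in pattern (2); the check that the original quadruple has no determiner before n1 is not modeled; and words are used exactly as passed in, with no inflection. `pp_paraphrases` is a hypothetical name.

```python
def pp_paraphrases(v, n1, p, n2):
    """Return (query, predicted attachment) pairs for paraphrase patterns (1)-(6)."""
    queries = []
    if p != "to":  # pattern (1) is blocked for "to" (ditransitives such as give)
        queries.append((f"{v} the {n2} {n1}", "noun"))        # (1) v n2 n1
    queries.append((f"{v} {p} {n2} a {n1}", "verb"))          # (2) v p n2 n1
    queries.append((f"{p} {n2} * {v} {n1}", "verb"))          # (3) p n2 * v n1
    queries.append((f"{n1} {p} {n2} {v}", "noun"))            # (4) n1 p n2 v
    for pronoun in ("him", "her"):
        queries.append((f"{v} {pronoun} {p} {n2}", "verb"))   # (5) v PRONOUN p n2
    for be in ("is", "are"):
        queries.append((f"{be} {n1} {p} {n2}", "noun"))       # (6) BE n1 p n2
    return queries


for query, attachment in pp_paraphrases("eat", "spaghetti", "with", "sauce"):
    print(attachment, query)
```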

Our U + U Algorithm § Compute bigram estimates § Compute estimates from surface features § Compute estimates from paraphrases § Combine these scores with a voting algorithm to choose left or right bracketing.

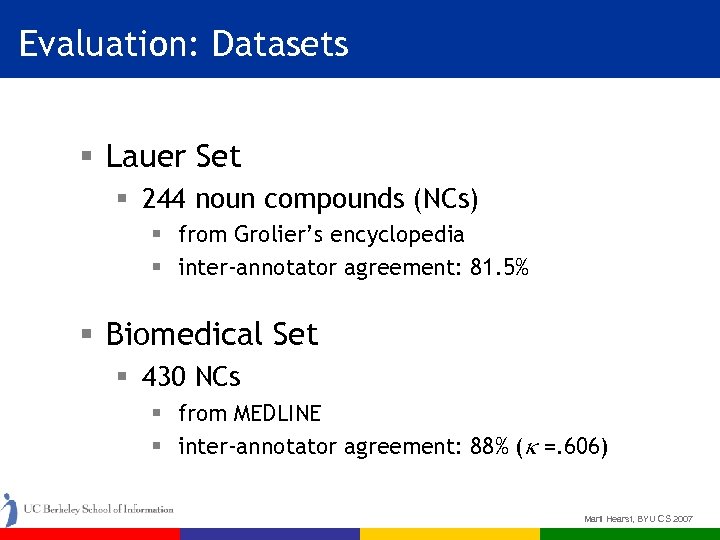

Evaluation: Datasets § Lauer Set § 244 noun compounds (NCs) § from Grolier’s encyclopedia § inter-annotator agreement: 81.5% § Biomedical Set § 430 NCs § from MEDLINE § inter-annotator agreement: 88% (κ = .606)

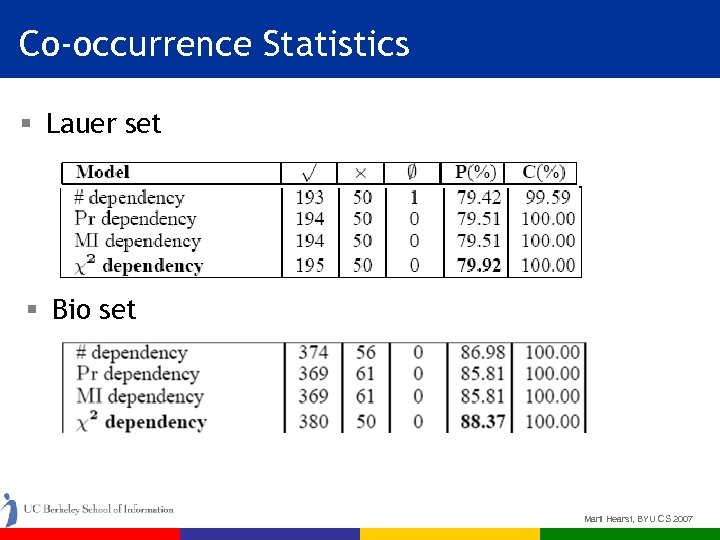

Co-occurrence Statistics § Lauer set § Bio set

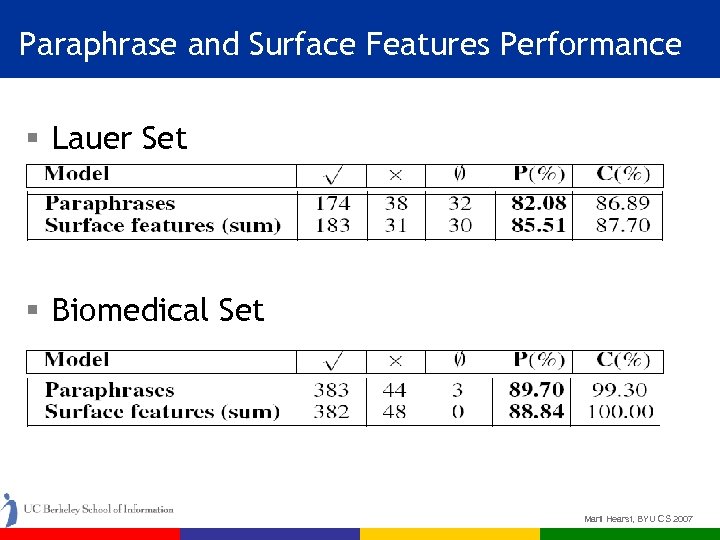

Paraphrase and Surface Features Performance § Lauer Set § Biomedical Set

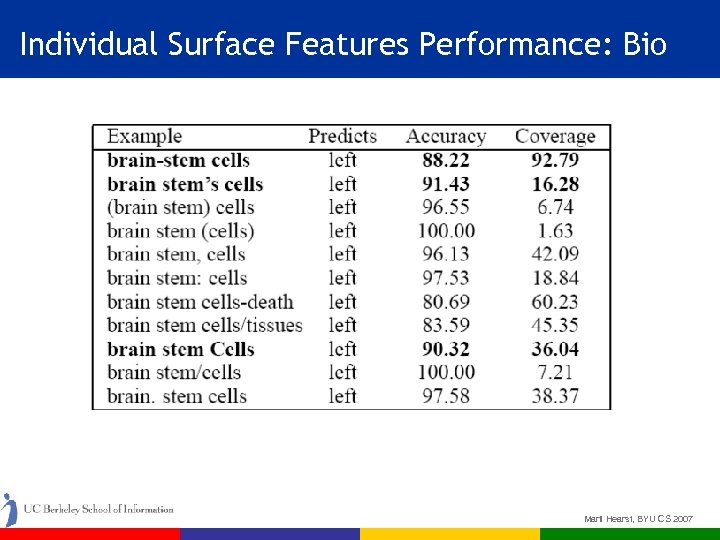

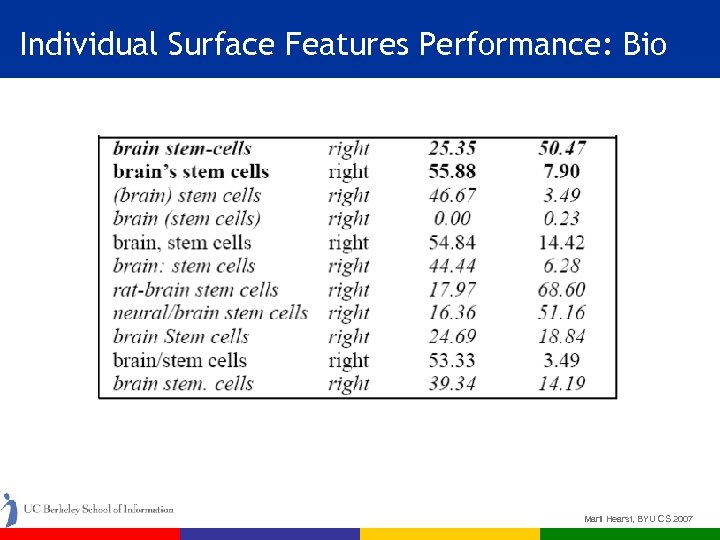

Individual Surface Features Performance: Bio

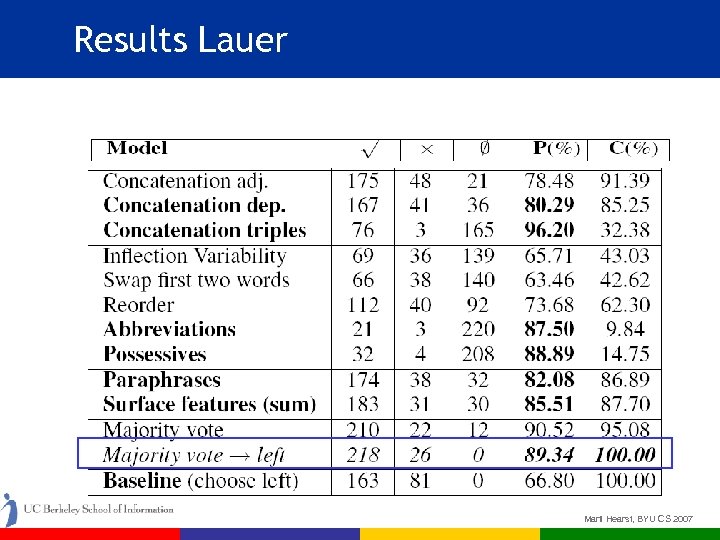

Results: Lauer

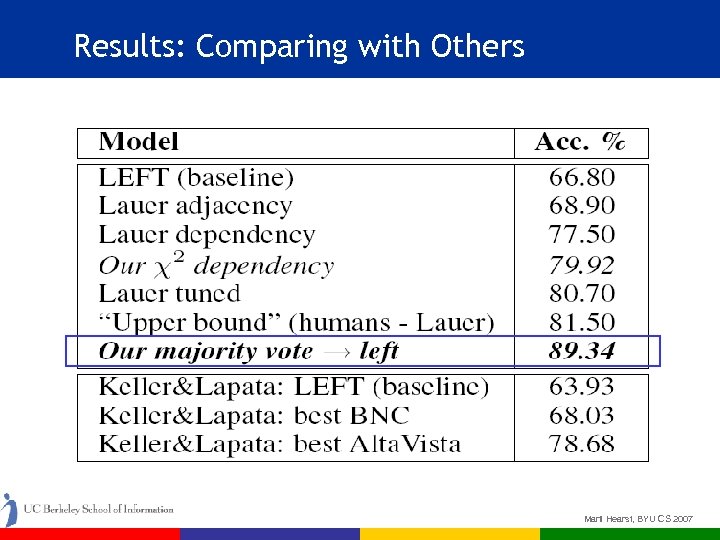

Results: Comparing with Others

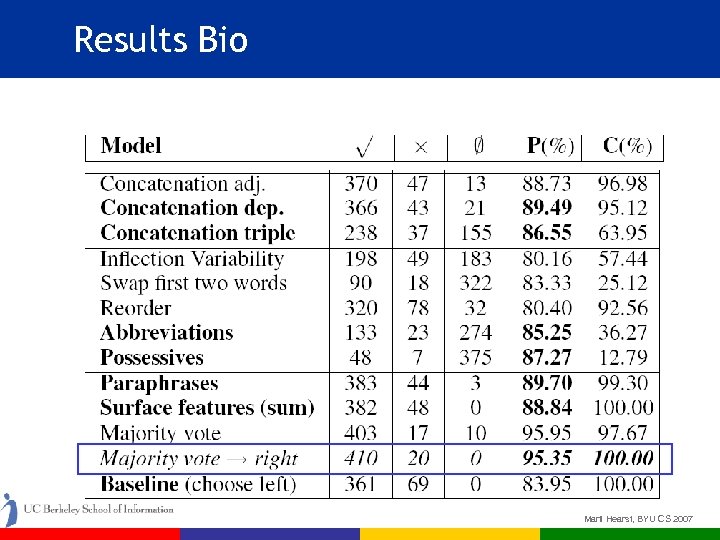

Results: Bio

Results for Noun Compound Bracketing § Introduced search engine statistics that go beyond the n-gram (applicable to other tasks): § surface features § paraphrases § Obtained new state-of-the-art results on NC bracketing: § more robust than Lauer (1995) § more accurate than Keller & Lapata (2004)

Prepositional Phrase Attachment § Problem: which attachment holds for the quadruple (v, n1, p, n2)? § (a) Peter spent millions of dollars. (noun attachment) § (b) Peter spent time with his family. (verb attachment) § Results: § Much simpler than other algorithms § As good as or better than the best unsupervised approaches, and better than some supervised ones

Noun Phrase Coordination § (Modified) real sentence: § The Department of Chronic Diseases and Health Promotion leads and strengthens global efforts to prevent and control chronic diseases or disabilities and to promote health and quality of life.

NC coordination: ellipsis § Ellipsis § car and truck production § means car production and truck production § No ellipsis § president and chief executive § All-way coordination § Securities and Exchange Commission

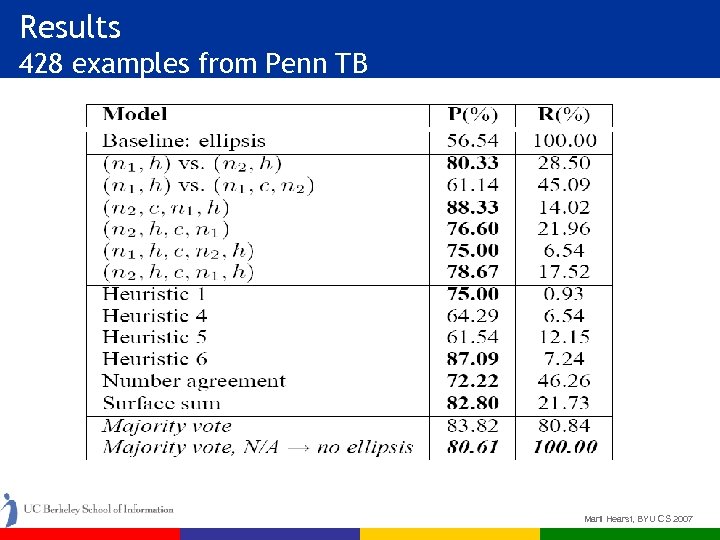

Results § 428 examples from the Penn Treebank

Semantic Relation Detection § Goal: automatically augment a lexical database § Many potential relation types: § ISA (hypernymy/hyponymy) § Part-Of (meronymy) § Idea: find unambiguous contexts which (nearly) always indicate the relation of interest

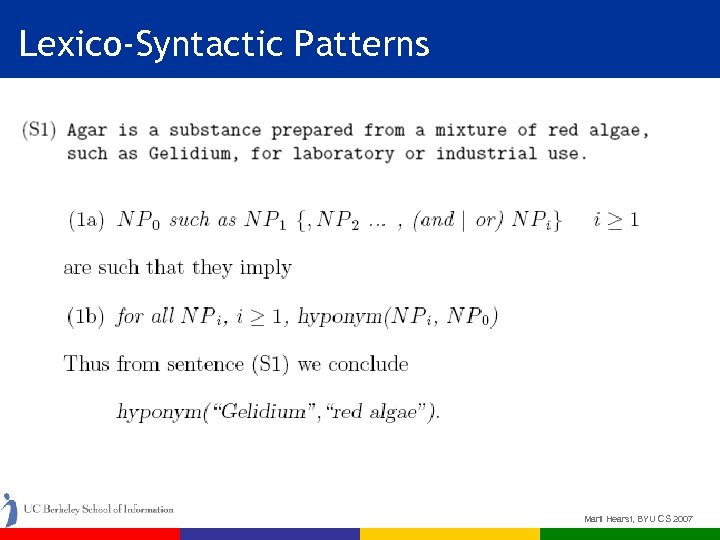

Lexico-Syntactic Patterns

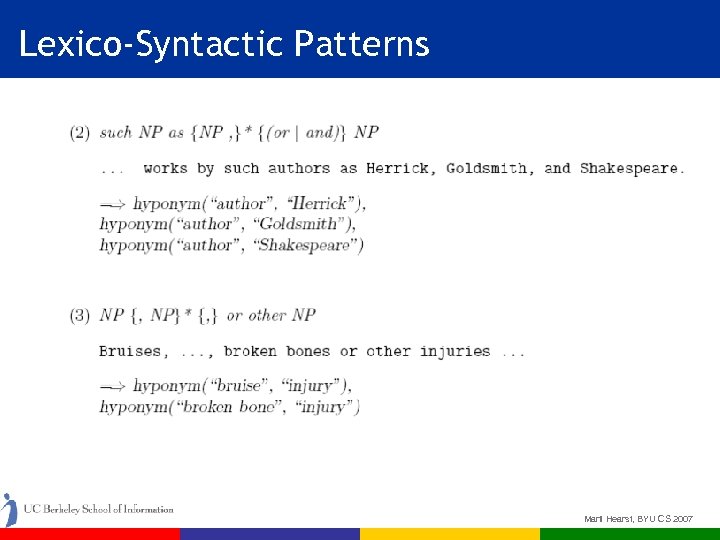

Lexico-Syntactic Patterns

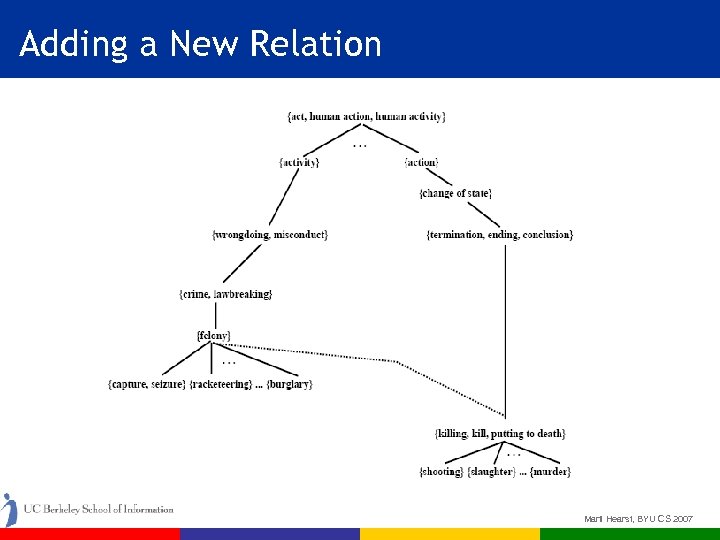

Adding a New Relation

Semantic Relation Detection § Lexico-syntactic Patterns: § Should occur frequently in text § Should (nearly) always suggest the relation of interest § Should be recognizable with little pre-encoded knowledge. § These patterns have been used extensively by other researchers.

Semantic Relation Detection § What relationship holds between two nouns? § olive oil – oil comes from olives § machine oil – oil used on machines § Assigning the meaning relations between these terms has been seen as a very difficult problem § Our solution: § Use clever queries against the web to figure out the relations.

Queries for Semantic Relations § Convert the noun-noun compound into a query of the form: § noun2 that * noun1 § “oil that * olive(s)” § This returns search result snippets containing interesting verbs. § In this case: § Come from § Be obtained from § Be extracted from § Made from § …
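A sketch of the query and of pulling candidate relations out of returned snippets. It assumes the snippets are plain strings; the snippets in the usage example are invented; the wildcard is approximated by one to four words (an assumed limit); and no lemmatization is done, so it yields “comes from” rather than “come from”. `relation_query` and `extract_relations` are hypothetical names.

```python
import re
from collections import Counter


def relation_query(noun1, noun2):
    """Build the exact-phrase query "noun2 that * noun1" for the compound "noun1 noun2"."""
    return f'"{noun2} that * {noun1}"'


def extract_relations(snippets, noun1, noun2, max_words=4):
    """Count the word sequences found between "noun2 that" and noun1 (or its plural)."""
    pattern = re.compile(
        rf"\b{re.escape(noun2)} that ((?:\w+ ){{1,{max_words}}}){re.escape(noun1)}s?\b",
        re.IGNORECASE,
    )
    found = Counter()
    for snippet in snippets:
        for middle in pattern.findall(snippet):
            found[middle.strip().lower()] += 1
    return found


snippets = [  # invented snippets, for illustration only
    "Olive oil is an oil that comes from olives.",
    "This is an oil that is extracted from olives by pressing.",
]
print(relation_query("olive", "oil"))               # "oil that * olive"
print(extract_relations(snippets, "olive", "oil"))
```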

Uncovering Semantic Relations § More examples: § Migraine drug -> treat, be used for, reduce, prevent § Wrinkle drug -> treat, be used for, reduce, smooth § Printer tray -> hold, come with, be folded, fit under, be inserted into § Student protest -> be led by, be sponsored by, pit, be organized by

Conclusions § Unambiguous + Unlimited = Unsupervised § The enormous size of the web opens new opportunities for text analysis § There are many words, but they are more likely to appear together in a huge dataset § This allows us to do word-specific analysis § To counter the labeled-data roadblock, we start with unambiguous features that we can find naturally. § We’ve applied this to structural and semantic language problems. § These are stepping stones towards sophisticated language understanding.

Thank you! http://biotext.berkeley.edu § Supported in part by NSF DBI-0317510
