
- Number of slides: 27

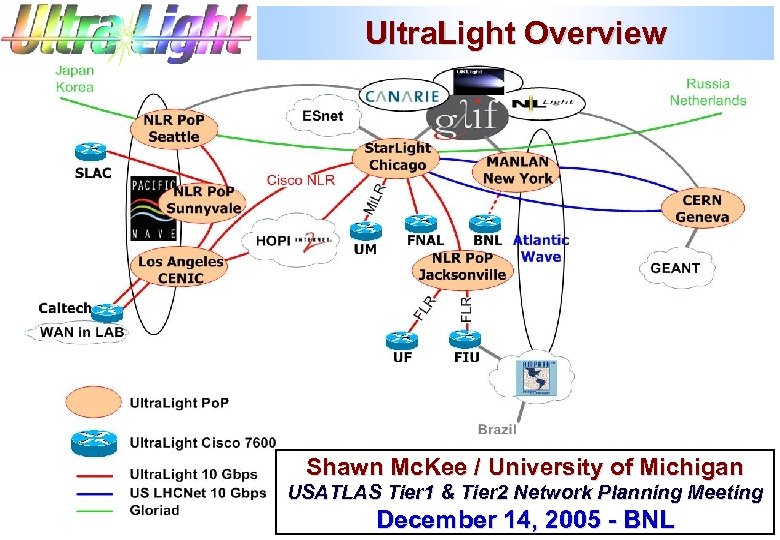

UltraLight Overview

Shawn McKee / University of Michigan
USATLAS Tier 1 & Tier 2 Network Planning Meeting, December 14, 2005 - BNL


The UltraLight Project

UltraLight is:

- A four-year $2M NSF ITR funded by MPS
- Application-driven network R&D
- A collaboration of BNL, Caltech, CERN, Florida, FIU, FNAL, Internet2, Michigan, MIT, SLAC
- Significant international participation: Brazil, Japan, Korea amongst many others

Goal: Enable the network as a managed resource.

Meta-Goal: Enable physics analysis and discoveries which could not otherwise be achieved.


UltraLight Backbone

UltraLight has a non-standard core network with dynamic links and varying bandwidth interconnecting our nodes: an Optical Hybrid Global Network. The core of UltraLight evolves dynamically as a function of available resources on other backbones such as NLR, HOPI, Abilene or ESnet. The main resources for UltraLight:

- LHCnet (IP, L2 VPN, CCC)
- Abilene (IP, L2 VPN)
- ESnet (IP, L2 VPN)
- Cisco NLR wave (Ethernet)
- Cisco Layer 3 10 GE Network
- HOPI NLR waves (Ethernet; provisioned on demand)
- UltraLight nodes: Caltech, SLAC, FNAL, UF, UM, StarLight, CENIC PoP at LA, CERN


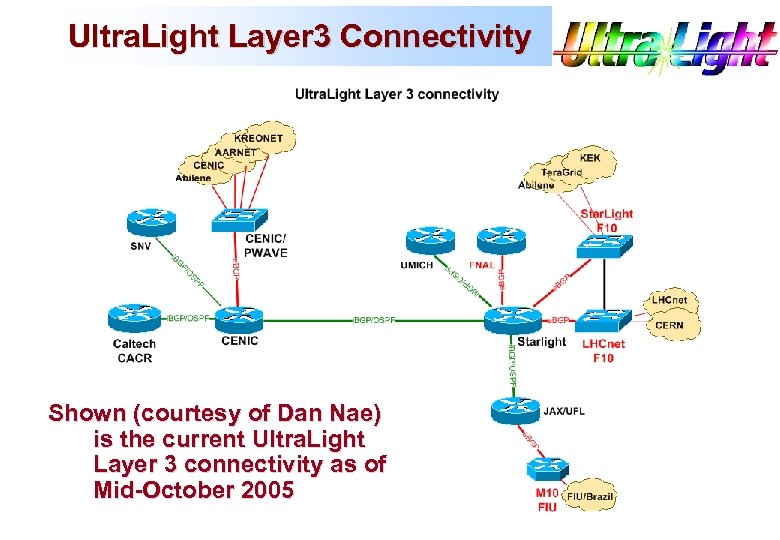

UltraLight Layer 3 Connectivity

Shown (courtesy of Dan Nae) is the UltraLight Layer 3 connectivity as of mid-October 2005.


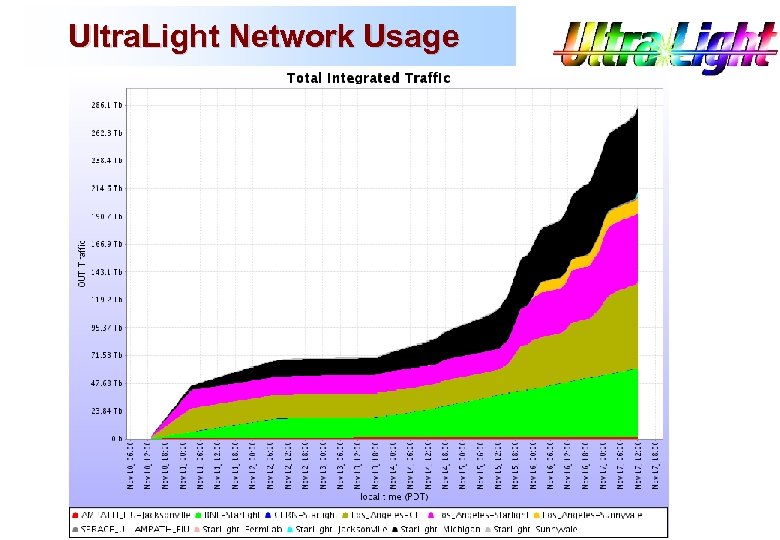

UltraLight Network Usage


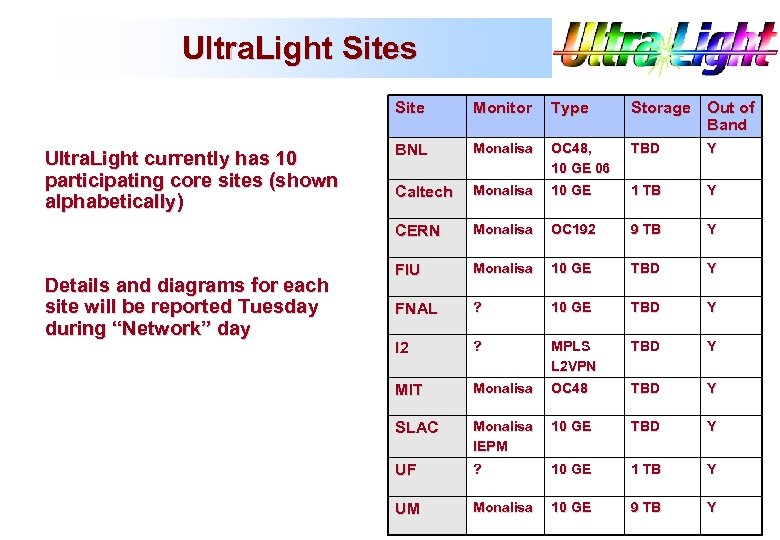

UltraLight Sites

UltraLight currently has 10 participating core sites (shown alphabetically). Site details and diagrams for each site will be reported Tuesday during “Network” day.

| Site | Monitor | Type | Storage | Out of Band |
|---|---|---|---|---|
| BNL | MonALISA | OC48, 10 GE '06 | TBD | Y |
| Caltech | MonALISA | 10 GE | 1 TB | Y |
| CERN | MonALISA | OC192 | 9 TB | Y |
| FIU | MonALISA | 10 GE | TBD | Y |
| FNAL | ? | 10 GE | TBD | Y |
| I2 | ? | MPLS L2 VPN | TBD | Y |
| MIT | MonALISA | OC48 | TBD | Y |
| SLAC | MonALISA, IEPM | 10 GE | TBD | Y |
| UF | ? | 10 GE | 1 TB | Y |
| UM | MonALISA | 10 GE | 9 TB | Y |


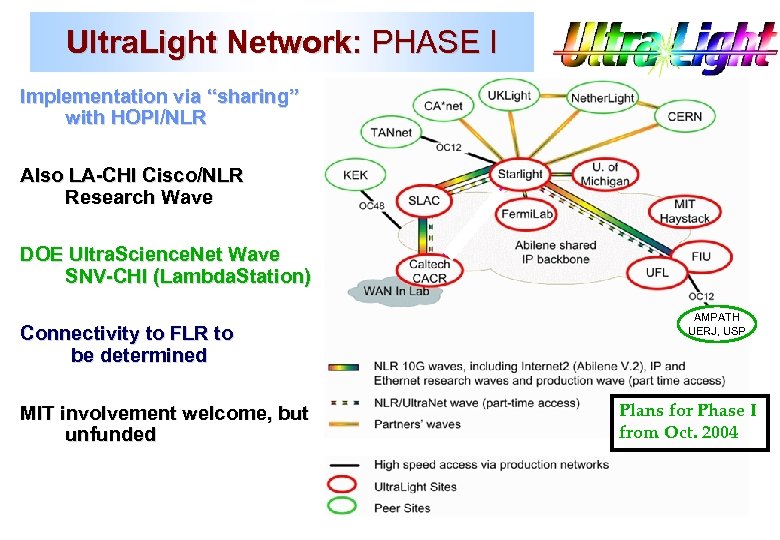

UltraLight Network: PHASE I

- Implementation via “sharing” with HOPI/NLR
- Also LA-CHI Cisco/NLR Research Wave
- DOE UltraScienceNet Wave SNV-CHI (LambdaStation)
- Connectivity to FLR to be determined
- MIT involvement welcome, but unfunded

AMPATH, UERJ, USP

Plans for Phase I from Oct. 2004


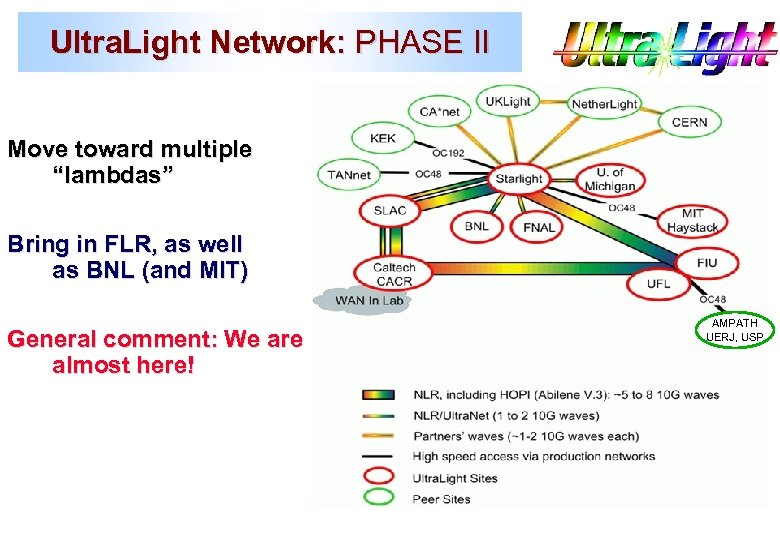

UltraLight Network: PHASE II

- Move toward multiple “lambdas”
- Bring in FLR, as well as BNL (and MIT)

General comment: We are almost here!

AMPATH, UERJ, USP


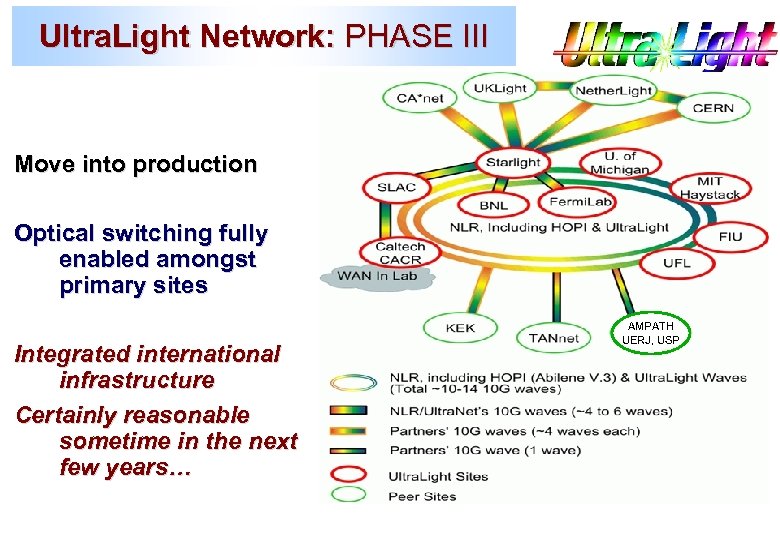

UltraLight Network: PHASE III

- Move into production
- Optical switching fully enabled amongst primary sites
- Integrated international infrastructure

Certainly reasonable sometime in the next few years…

AMPATH, UERJ, USP


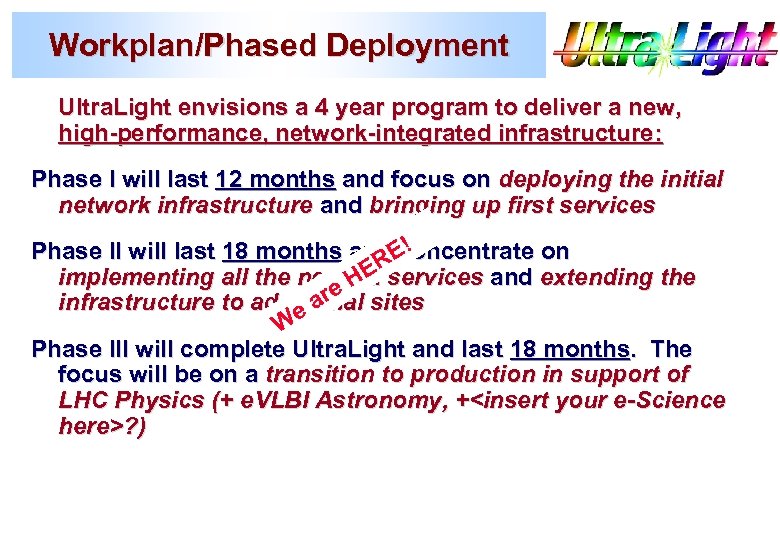

Workplan/Phased Deployment

UltraLight envisions a 4-year program to deliver a new, high-performance, network-integrated infrastructure:

- Phase I will last 12 months and focus on deploying the initial network infrastructure and bringing up first services.
- Phase II will last 18 months and concentrate on implementing all the needed services and extending the infrastructure to additional sites. (We are HERE!)
- Phase III will complete UltraLight and last 18 months. The focus will be on a transition to production in support of LHC Physics (+ e-VLBI Astronomy, + …)


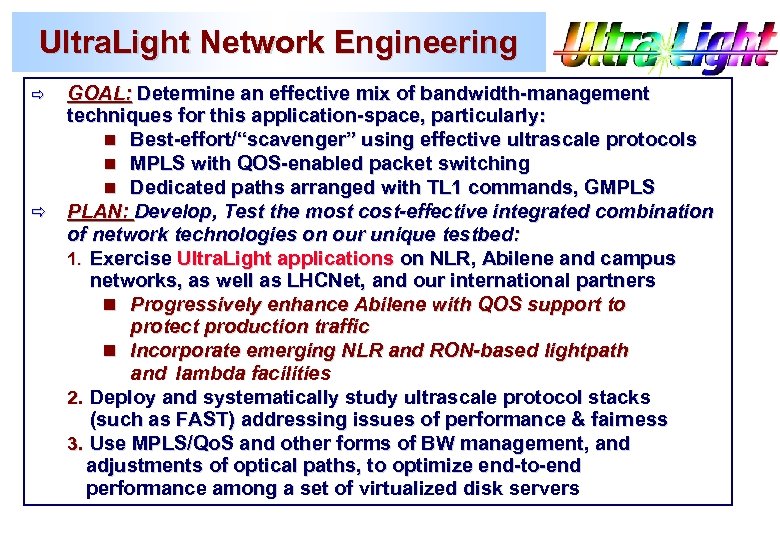

UltraLight Network Engineering

GOAL: Determine an effective mix of bandwidth-management techniques for this application space, particularly:

- Best-effort/“scavenger” using effective ultrascale protocols
- MPLS with QoS-enabled packet switching
- Dedicated paths arranged with TL1 commands, GMPLS

PLAN: Develop and test the most cost-effective integrated combination of network technologies on our unique testbed:

1. Exercise UltraLight applications on NLR, Abilene and campus networks, as well as LHCNet, and our international partners
   - Progressively enhance Abilene with QoS support to protect production traffic
   - Incorporate emerging NLR and RON-based lightpath and lambda facilities
2. Deploy and systematically study ultrascale protocol stacks (such as FAST), addressing issues of performance and fairness
3. Use MPLS/QoS and other forms of bandwidth management, and adjustments of optical paths, to optimize end-to-end performance among a set of virtualized disk servers

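To make the three bandwidth-management options concrete, here is a minimal Python sketch of how a per-transfer choice among them might be expressed. The `PathOptions` class, the function names and the “least resource-intensive technique that meets the required rate” ordering are illustrative assumptions, not UltraLight's actual selection logic.

```python
from dataclasses import dataclass


@dataclass
class PathOptions:
    """Hypothetical summary of what path discovery reports for one transfer."""
    best_effort_gbps: float    # predicted rate with an ultrascale TCP stack
    pipe_gbps: float           # largest MPLS/QoS virtual pipe on offer (0 if none)
    lightpath_available: bool  # is a dedicated end-to-end path free?


def required_gbps(size_gb: float, deadline_s: float) -> float:
    """Average rate needed to move size_gb (decimal gigabytes) before the deadline."""
    return size_gb * 8 / deadline_s


def choose_technique(need_gbps: float, opts: PathOptions) -> str:
    """Assumed policy: use the least resource-intensive technique that suffices."""
    if opts.best_effort_gbps >= need_gbps:
        return "best-effort"
    if opts.pipe_gbps >= need_gbps:
        return "mpls-qos"
    if opts.lightpath_available:
        return "lightpath"
    return "reject"


if __name__ == "__main__":
    opts = PathOptions(best_effort_gbps=1.2, pipe_gbps=3.0, lightpath_available=True)
    need = required_gbps(1200, 2 * 3600)  # 1.2 TB within 2 hours (illustrative)
    print(f"{need:.2f} Gbps -> {choose_technique(need, opts)}")
```

Ordering the checks from cheapest to most exclusive keeps scarce lightpaths free for transfers that genuinely need them; a real policy would also weigh priority and timing.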

UltraLight: Effective Protocols

The protocols used to reliably move data are a critical component of Physics “end-to-end” use of the network. TCP is the most widely used protocol for reliable data transport, but it becomes increasingly ineffective as network bandwidth-delay products grow. UltraLight is exploring extensions to TCP (HSTCP, Westwood+, HTCP, FAST) designed to maintain fair sharing of networks while allowing efficient, effective use of these networks. Currently FAST is in our “UltraLight Kernel” (a customized 2.6.12-3 kernel), which was used at SC2005. We are planning to broadly deploy a related kernel with FAST. Longer term, we can then continue with access to FAST, HS-TCP, Scalable TCP, BIC and others.

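The bandwidth-delay problem can be quantified with the textbook AIMD model of standard (Reno-style) TCP: the congestion window must hold one bandwidth-delay product, and after a single loss the window is halved and then regrows by about one segment per round trip. The sketch below ignores slow start, delayed ACKs and timeouts; the 10 Gbps rate, 100 ms RTT and 1500-byte segments are illustrative assumptions.

```python
from typing import Tuple


def reno_recovery(rate_bps: float, rtt_s: float, mss_bytes: int = 1500) -> Tuple[float, float]:
    """Return (window in segments, seconds to regain full rate after one loss)."""
    window_segments = rate_bps * rtt_s / (mss_bytes * 8)  # bandwidth-delay product
    # Multiplicative decrease halves the window; additive increase then adds
    # roughly one segment per RTT, so about W/2 round trips are needed to recover.
    recovery_s = (window_segments / 2) * rtt_s
    return window_segments, recovery_s


if __name__ == "__main__":
    w, t = reno_recovery(10e9, 0.100)
    print(f"window ~ {w:,.0f} segments, recovery ~ {t / 60:.0f} minutes")
```

Under these assumptions a single loss leaves the flow below full rate for on the order of an hour, which is the motivation for the high-speed TCP variants listed above.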

UltraLight Kernel Development

Having a standard tuned kernel is very important for a number of UltraLight activities:

1. Breaking the 1 GB/sec disk-to-disk barrier
2. Exploring TCP congestion control protocols
3. Optimizing our capability for demos and performance

The current kernel incorporates the latest FAST and Web100 patches over a 2.6.12-3 kernel and includes the latest RAID and 10 GE NIC drivers. The UltraLight web page (http://www.ultralight.org) has a Kernel page, linked from the Workgroup->Network page, which provides the details.

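Part of tuning a kernel for long, fast paths is raising the socket-buffer limits so that TCP can keep a full bandwidth-delay product in flight. The following is a minimal sketch that derives Linux sysctl settings from a target rate and RTT; the 2× headroom factor and the min/default buffer values are placeholder assumptions, not the contents of the UltraLight kernel.

```python
from typing import List


def tcp_buffer_sysctls(rate_bps: float, rtt_s: float, headroom: float = 2.0) -> List[str]:
    """Return sysctl.conf lines sizing TCP buffers from the bandwidth-delay product."""
    bdp_bytes = round(rate_bps * rtt_s / 8)
    max_buf = round(bdp_bytes * headroom)  # assumed safety margin over one BDP
    return [
        f"net.core.rmem_max = {max_buf}",
        f"net.core.wmem_max = {max_buf}",
        # "min default max" in bytes; the min/default values here are placeholders
        f"net.ipv4.tcp_rmem = 4096 87380 {max_buf}",
        f"net.ipv4.tcp_wmem = 4096 65536 {max_buf}",
    ]


if __name__ == "__main__":
    # Illustrative target: 10 Gbps over a 100 ms round trip.
    print("\n".join(tcp_buffer_sysctls(10e9, 0.100)))
```

The output could be appended to `/etc/sysctl.conf` and loaded with `sysctl -p`; the maximum should be checked against available host memory, since each flow may grow its buffers up to that limit.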

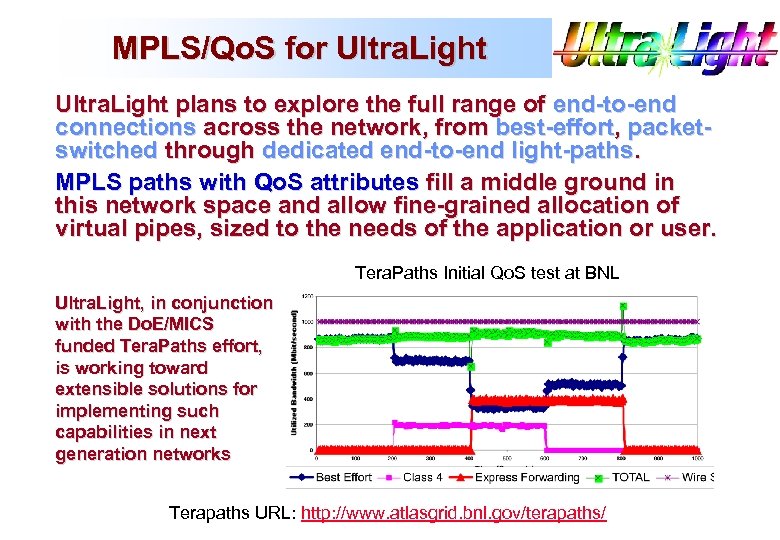

MPLS/QoS for UltraLight

UltraLight plans to explore the full range of end-to-end connections across the network, from best-effort, packet-switched through dedicated end-to-end light-paths. MPLS paths with QoS attributes fill a middle ground in this network space and allow fine-grained allocation of virtual pipes, sized to the needs of the application or user.

TeraPaths: initial QoS test at BNL

UltraLight, in conjunction with the DOE/MICS-funded TeraPaths effort, is working toward extensible solutions for implementing such capabilities in next-generation networks.

TeraPaths URL: http://www.atlasgrid.bnl.gov/terapaths/

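Allocating virtual pipes implies some form of admission control: a new reservation is accepted only if, at every moment of its window, the bandwidth already reserved plus the request fits within the link capacity. The single-link Python sketch below illustrates that check; the `Link` class and minute-based time units are hypothetical and do not describe the TeraPaths implementation.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Link:
    """Hypothetical single link with bandwidth reservations as (start, end, gbps)."""
    capacity_gbps: float
    reservations: List[Tuple[float, float, float]] = field(default_factory=list)

    def load_at(self, t: float) -> float:
        """Reserved bandwidth at time t; windows are half-open [start, end)."""
        return sum(g for s, e, g in self.reservations if s <= t < e)

    def try_reserve(self, start: float, end: float, gbps: float) -> bool:
        """Admit the request only if it fits under capacity for its whole window."""
        # Load only rises at a reservation's start, so the peak within
        # [start, end) occurs at `start` or at an existing start inside it.
        checkpoints = [start] + [s for s, _, _ in self.reservations if start < s < end]
        if any(self.load_at(t) + gbps > self.capacity_gbps for t in checkpoints):
            return False
        self.reservations.append((start, end, gbps))
        return True


if __name__ == "__main__":
    link = Link(capacity_gbps=10.0)
    print(link.try_reserve(0, 60, 3.0))   # True: fits
    print(link.try_reserve(30, 90, 6.0))  # True: 3 + 6 <= 10
    print(link.try_reserve(45, 50, 2.0))  # False: 3 + 6 + 2 > 10
```

An end-to-end pipe would need the same check on every link of the MPLS path, committing only if all links accept.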

Optical Path Plans

Emerging “light path” technologies are becoming popular in the Grid community:

- They can extend existing grid computing infrastructures, currently focused on CPU/storage, to include the network as an integral Grid component.
- Those technologies seem to be the most effective way to offer on-demand network resource provisioning between end-systems.

A major capability we are developing in UltraLight is the ability to dynamically switch optical paths across the node, bypassing electronic equipment via a fiber cross-connect. The ability to switch dynamically provides additional functionality and also models the more abstract case where switching is done between colors (ITU grid lambdas).

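A fiber cross-connect can be modelled as a one-to-one mapping from input ports to output ports; switching a path dynamically means changing that mapping without disturbing other connections. The toy Python class below is a hypothetical illustration of the bookkeeping only, not a driver for any real switch; it restores the previous mapping if a reroute fails.

```python
from typing import Dict


class CrossConnect:
    """Toy fiber cross-connect: each input port feeds at most one output port."""

    def __init__(self, n_ports: int):
        self.n_ports = n_ports
        self.in_to_out: Dict[int, int] = {}

    def connect(self, inp: int, out: int) -> None:
        for port in (inp, out):
            if not 0 <= port < self.n_ports:
                raise ValueError(f"port {port} out of range")
        if inp in self.in_to_out:
            raise RuntimeError(f"input {inp} already connected")
        if out in self.in_to_out.values():
            raise RuntimeError(f"output {out} already in use")
        self.in_to_out[inp] = out

    def disconnect(self, inp: int) -> None:
        self.in_to_out.pop(inp, None)

    def reroute(self, inp: int, new_out: int) -> None:
        """Move an existing connection; restore the old one if the move fails."""
        old = self.in_to_out.pop(inp, None)
        try:
            self.connect(inp, new_out)
        except (ValueError, RuntimeError):
            if old is not None:
                self.in_to_out[inp] = old
            raise


if __name__ == "__main__":
    xc = CrossConnect(n_ports=8)
    xc.connect(0, 4)     # light entering port 0 leaves on port 4
    xc.reroute(0, 5)     # switch the same input to a different output
    print(xc.in_to_out)  # {0: 5}
```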

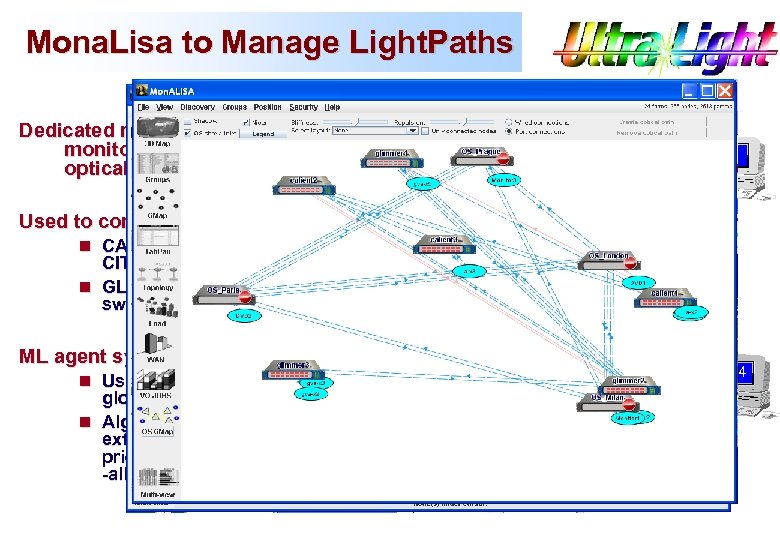

MonALISA to Manage LightPaths

- Dedicated modules to monitor and control optical switches
- Used to control:
  - CALIENT switch @ CIT
  - GLIMMERGLASS switch @ CERN
- ML agent system:
  - Used to create global path
  - Algorithm can be extended to include prioritisation and pre-allocation

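Creating a global path amounts to finding a chain of free links between two end points and then marking them as in use. Below is a minimal sketch using breadth-first search (fewest hops) over a hypothetical topology; the actual ML agent algorithm is not described here, and prioritisation or pre-allocation would need extra state.

```python
from collections import deque
from typing import Dict, List, Optional, Set


def find_path(free: Dict[str, Set[str]], src: str, dst: str) -> Optional[List[str]]:
    """Breadth-first search over currently free links; returns a fewest-hop path."""
    prev: Dict[str, Optional[str]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path: List[str] = []
            cur: Optional[str] = node
            while cur is not None:
                path.append(cur)
                cur = prev[cur]
            return path[::-1]
        for nxt in sorted(free.get(node, ())):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None


def allocate(free: Dict[str, Set[str]], path: List[str]) -> None:
    """Mark every link on the path as in use (links are bidirectional)."""
    for a, b in zip(path, path[1:]):
        free[a].discard(b)
        free[b].discard(a)


if __name__ == "__main__":
    # Hypothetical topology; node names are for illustration only.
    free = {
        "CERN": {"StarLight"},
        "StarLight": {"CERN", "LA"},
        "LA": {"StarLight", "CIT"},
        "CIT": {"LA"},
    }
    path = find_path(free, "CERN", "CIT")
    print(path)
    if path:
        allocate(free, path)
    print(find_path(free, "CERN", "CIT"))  # the only route is now in use
```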

Monitoring for UltraLight

Network monitoring is essential for UltraLight. We need to understand our network infrastructure and track its performance both historically and in real time to enable the network as a managed, robust component of our overall infrastructure. There are two ongoing efforts we are leveraging to help provide the monitoring capability required:

- IEPM: http://www-iepm.slac.stanford.edu/bw/
- MonALISA: http://monalisa.cern.ch

We are also looking at new tools like perfSONAR, which may help provide a monitoring infrastructure for UltraLight.

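Tracking performance both historically and in real time can be as simple as keeping every sample while also maintaining an exponentially weighted moving average (EWMA) to flag sudden drops. This sketch is not how IEPM or MonALISA work internally; the smoothing factor and the 50% degradation threshold are assumptions.

```python
from typing import List, Optional, Tuple


class ThroughputTracker:
    """Keeps raw (timestamp, Gbps) samples plus an EWMA of throughput."""

    def __init__(self, alpha: float = 0.2):
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.history: List[Tuple[float, float]] = []  # historical record
        self.ewma: Optional[float] = None             # real-time estimate

    def add(self, timestamp: float, gbps: float) -> float:
        self.history.append((timestamp, gbps))
        if self.ewma is None:
            self.ewma = gbps
        else:
            self.ewma = self.alpha * gbps + (1 - self.alpha) * self.ewma
        return self.ewma

    def is_degraded(self, gbps: float, threshold: float = 0.5) -> bool:
        """True if a new sample falls below `threshold` times the current EWMA."""
        return self.ewma is not None and gbps < threshold * self.ewma


if __name__ == "__main__":
    tracker = ThroughputTracker(alpha=0.5)
    for t, rate in enumerate([4.0, 4.2, 3.9]):
        tracker.add(t, rate)
    print(round(tracker.ewma, 2), tracker.is_degraded(1.0))
```

A smaller `alpha` smooths out noise but reacts more slowly to genuine changes in path performance.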

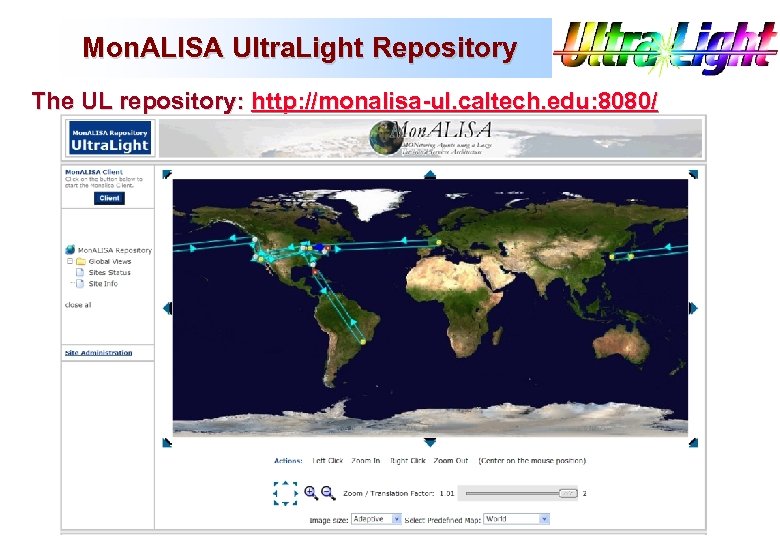

MonALISA UltraLight Repository

The UL repository: http://monalisa-ul.caltech.edu:8080/


End-Systems Performance

- Latest disk-to-disk over a 10 Gbps WAN: 4.3 Gbits/sec (536 MB/sec), 8 TCP streams from CERN to Caltech; Windows, 1 TB file, 24 JBOD disks
- Quad Opteron AMD 848 2.2 GHz processors with 3 AMD-8131 chipsets: 4 64-bit/133 MHz PCI-X slots
- 3 Supermicro Marvell SATA disk controllers + 24 7200 rpm SATA disks
- Local disk IO: 9.6 Gbits/sec (1.2 GBytes/sec read/write, with <20% CPU utilization)
- 10 GE NIC: 7.5 Gbits/sec (memory-to-memory, with 52% CPU utilization)
- 2×10 GE NIC (802.3ad link aggregation): 11.1 Gbits/sec (memory-to-memory)
- Need PCI-Express, TCP offload engines
- Need 64-bit OS? Which architectures and hardware?

Discussions are underway with 3ware, Myricom and Supermicro to try to prototype viable servers capable of driving 10 GE networks in the WAN.

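Some back-of-the-envelope arithmetic helps interpret these numbers. The sketch below assumes decimal units (1 TB = 10^12 bytes) and the nominal 64-bit × 133 MHz PCI-X figures above, ignoring bus protocol overhead. One PCI-X slot then peaks at roughly 8.5 Gbit/s, below 10 GE line rate, which is consistent with (though not proof of) the 7.5 Gbit/s single-NIC result and the stated need for PCI-Express.

```python
def bus_peak_gbps(width_bits: int = 64, clock_mhz: float = 133.0) -> float:
    """Theoretical peak of a parallel bus: bits per cycle x cycles per second."""
    return width_bits * clock_mhz * 1e6 / 1e9


def minutes_for(size_bytes: float, rate_bytes_per_s: float) -> float:
    """Minutes to move size_bytes at a constant rate."""
    return size_bytes / rate_bytes_per_s / 60


if __name__ == "__main__":
    print(f"PCI-X 64-bit/133 MHz peak: {bus_peak_gbps():.2f} Gbit/s")
    print(f"536 MB/s = {536e6 * 8 / 1e9:.2f} Gbit/s")
    print(f"1 TB at 536 MB/s: {minutes_for(1e12, 536e6):.1f} minutes")
```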

UltraLight Global Services

- Global Services support management and co-scheduling of multiple resource types, and provide strategic recovery mechanisms from system failures
- Scheduling decisions are based on CPU, I/O, network capability and end-to-end task performance estimates, including loading effects
- Decisions are constrained by local and global policies
- Implementation: autodiscovering, multithreaded services, with service engines to schedule threads, making the system scalable and robust

Global Services consist of:

- Network and System Resource Monitoring, to provide pervasive end-to-end resource monitoring information to HLS
- Network Path Discovery and Construction Services, to provide network connections appropriately sized/tuned to the expected use
- Policy-Based Job Planning Services, balancing policy, efficient resource use and acceptable turnaround time
- Task Execution Services, with job-tracking user interfaces and incremental re-planning in case of partial incompletion

These types of services are required to deliver a managed network. Work along these lines is planned for OSG and future proposals to NSF and DOE.

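As a rough illustration of policy-based job planning, the sketch below first filters candidate sites by policy and then picks the one with the smallest estimated completion time from CPU and data-staging terms. The `Site` fields, the VO-based policy and the simple cost model are hypothetical; real planning would also account for loading effects and incremental re-planning.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Site:
    name: str
    free_cpus: int
    io_gbps: float   # local storage throughput
    net_gbps: float  # achievable network rate to the data source
    allowed_vos: Set[str] = field(default_factory=set)  # local policy


def estimate_hours(site: Site, cpu_hours: float, input_gb: float) -> float:
    """Compute time plus staging time, limited by the slower of disk and network."""
    if site.free_cpus <= 0:
        return math.inf
    compute = cpu_hours / site.free_cpus
    staging = input_gb * 8 / min(site.io_gbps, site.net_gbps) / 3600
    return compute + staging


def plan(sites: List[Site], vo: str, cpu_hours: float, input_gb: float) -> Optional[str]:
    """Apply the policy constraint first, then minimise estimated completion time."""
    allowed = [s for s in sites if vo in s.allowed_vos]
    if not allowed:
        return None
    best = min(allowed, key=lambda s: estimate_hours(s, cpu_hours, input_gb))
    if math.isinf(estimate_hours(best, cpu_hours, input_gb)):
        return None
    return best.name


if __name__ == "__main__":
    sites = [
        Site("A", free_cpus=100, io_gbps=10.0, net_gbps=1.0, allowed_vos={"atlas"}),
        Site("B", free_cpus=50, io_gbps=10.0, net_gbps=10.0, allowed_vos={"atlas", "cms"}),
    ]
    print(plan(sites, "atlas", cpu_hours=100, input_gb=900))
```

Including the staging term is what lets the network enter the decision: a site with more CPUs can lose to one with a faster path to the data.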

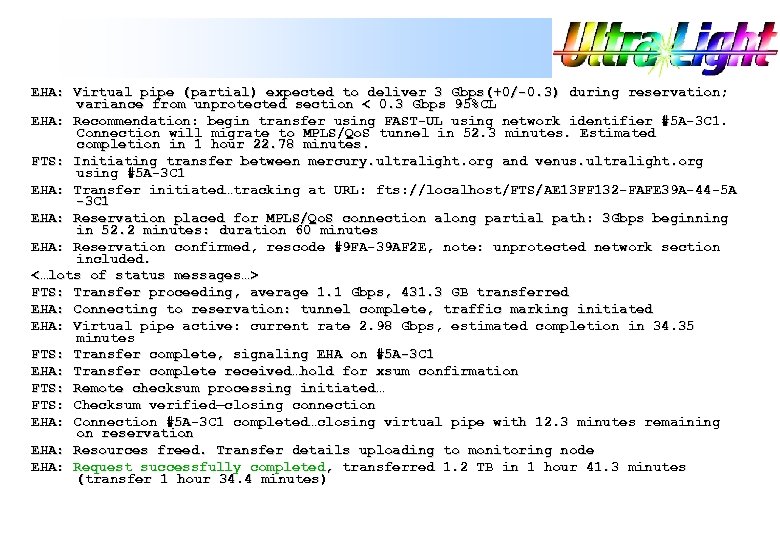

UltraLight Application in 2008

Node 1> fts -vvv -in mercury.ultralight.org:/data01/big/zmumu05687.root -out venus.ultralight.org:/mstore/events/data -prio 3 -deadline +2:50 -xsum
FTS: Initiating file transfer setup…
FTS: Remote host responds ready
FTS: Contacting path discovery service
PDS: Path discovery in progress…
PDS: Path RTT 128.4 ms, best effort path bottleneck is 10 GE
PDS: Path options found:
PDS: Lightpath option exists end-to-end
PDS: Virtual pipe option exists (partial)
PDS: High-performance protocol capable end-systems exist
FTS: Requested transfer 1.2 TB file transfer within 2 hours 50 minutes, priority 3
FTS: Remote host confirms available space for DN=smckee@ultralight.org
FTS: End-host agent contacted…parameters transferred
EHA: Priority 3 request allowed for DN=smckee@ultralight.org
EHA: request scheduling details
EHA: Lightpath prior scheduling (higher/same priority) precludes use
EHA: Virtual pipe sizeable to 3 Gbps available for 1 hour starting in 52.4 minutes
EHA: request monitoring prediction along path
EHA: FAST-UL transfer expected to deliver 1.2 Gbps (+0.8/-0.4) averaged over next 2 hours 50 minutes


EHA: Virtual pipe (partial) expected to deliver 3 Gbps (+0/-0.3) during reservation; variance from unprotected section < 0.3 Gbps 95%CL
EHA: Recommendation: begin transfer using FAST-UL using network identifier #5A-3C1. Connection will migrate to MPLS/QoS tunnel in 52.3 minutes. Estimated completion in 1 hour 22.78 minutes.
FTS: Initiating transfer between mercury.ultralight.org and venus.ultralight.org using #5A-3C1
EHA: Transfer initiated…tracking at URL: fts://localhost/FTS/AE13FF132-FAFE39A-44-5A-3C1
EHA: Reservation placed for MPLS/QoS connection along partial path: 3 Gbps beginning in 52.2 minutes: duration 60 minutes
EHA: Reservation confirmed, rescode #9FA-39AF2E, note: unprotected network section included.
<…lots of status messages…>
FTS: Transfer proceeding, average 1.1 Gbps, 431.3 GB transferred
EHA: Connecting to reservation: tunnel complete, traffic marking initiated
EHA: Virtual pipe active: current rate 2.98 Gbps, estimated completion in 34.35 minutes
FTS: Transfer complete, signaling EHA on #5A-3C1
EHA: Transfer complete received…hold for xsum confirmation
FTS: Remote checksum processing initiated…
FTS: Checksum verified…closing connection
EHA: Connection #5A-3C1 completed…closing virtual pipe with 12.3 minutes remaining on reservation
EHA: Resources freed. Transfer details uploading to monitoring node
EHA: Request successfully completed, transferred 1.2 TB in 1 hour 41.3 minutes (transfer 1 hour 34.4 minutes)

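Several figures in this envisioned session can be cross-checked with a few lines of arithmetic, assuming decimal units (1 TB = 1000 GB) and a constant rate within each phase. The required average rate for the deadline, the time to move 431.3 GB at 1.1 Gbps, and the time to move the remainder at 2.98 Gbps come out at about 0.94 Gbps, 52.3 minutes and 34.4 minutes, close to the scheduled 52.3-minute tunnel start and the logged 34.35-minute estimate.

```python
def minutes_to_send(gigabytes: float, gbps: float) -> float:
    """Minutes to move `gigabytes` (decimal GB) at a constant `gbps`."""
    return gigabytes * 8 / gbps / 60


# Average rate needed to move 1.2 TB within the 2 h 50 min deadline.
required_gbps = 1200 * 8 / (170 * 60)

# Best-effort FAST phase, then the MPLS/QoS virtual pipe for the remainder.
phase1_min = minutes_to_send(431.3, 1.1)
phase2_min = minutes_to_send(1200 - 431.3, 2.98)

if __name__ == "__main__":
    print(f"required average rate: {required_gbps:.2f} Gbps")
    print(f"phase 1: {phase1_min:.1f} min, phase 2: {phase2_min:.1f} min")
```

Because the FAST-UL prediction alone (1.2 Gbps) already exceeds the required average, the virtual pipe in this scenario shortens the transfer rather than rescuing the deadline.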

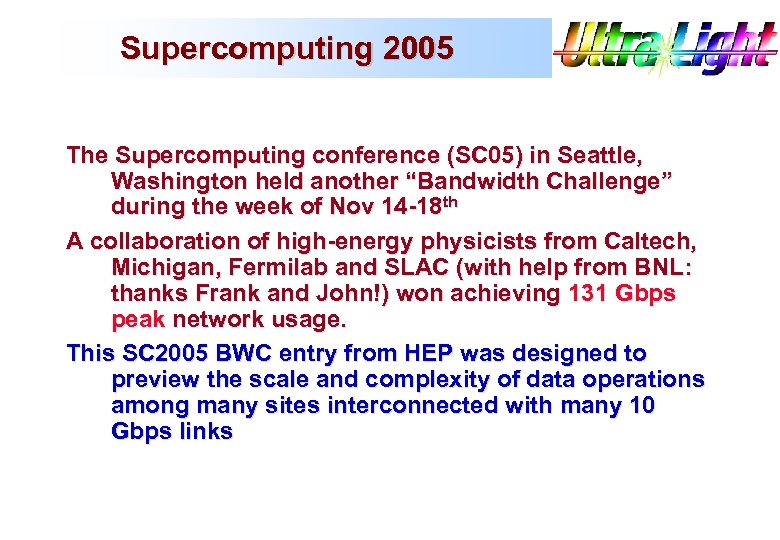

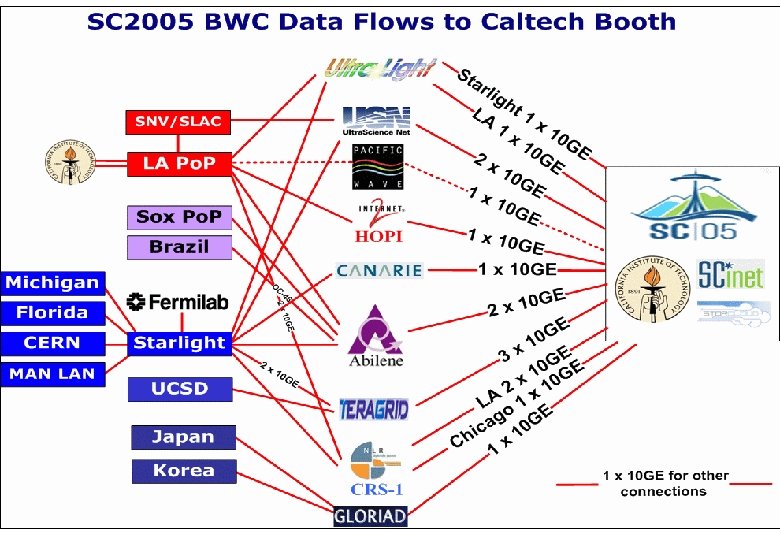

Supercomputing 2005

The Supercomputing conference (SC05) in Seattle, Washington held another “Bandwidth Challenge” during the week of Nov 14-18. A collaboration of high-energy physicists from Caltech, Michigan, Fermilab and SLAC (with help from BNL: thanks Frank and John!) won, achieving a peak network usage of 131 Gbps. This SC2005 BWC entry from HEP was designed to preview the scale and complexity of data operations among many sites interconnected with many 10 Gbps links.


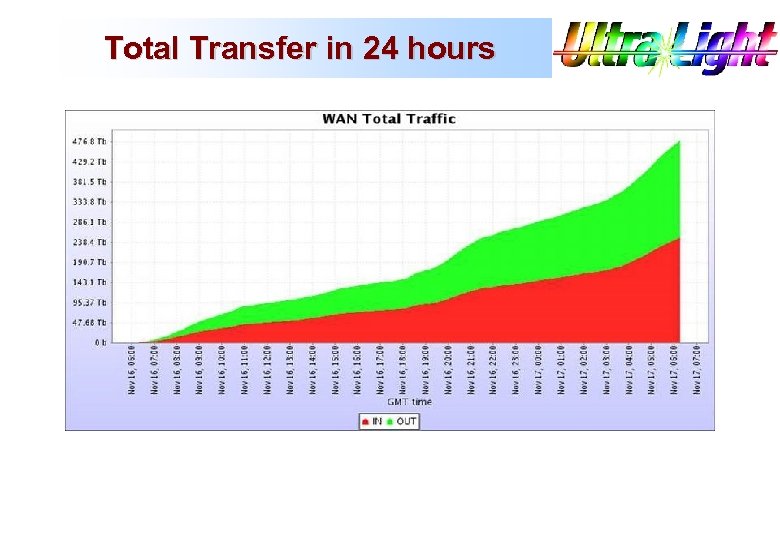

Total Transfer in 24 hours


BWC Take-Away Summary

Our collaboration previewed the IT challenges of next-generation science at the High Energy Physics frontier (for the LHC and other major programs):

- Petabyte-scale datasets
- Tens of national and transoceanic links at 10 Gbps (and up)
- 100+ Gbps aggregate data transport sustained for hours; we reached a Petabyte/day transport rate for real physics data

The team set the scale and learned to gauge the difficulty of the global networks and transport systems required for the LHC mission:

- Set up, shook down and successfully ran the system in < 1 week

Substantive take-aways from this marathon exercise:

- An optimized Linux (2.6.12 + FAST + NFSv4) kernel for data transport, after 7 full kernel-build cycles in 4 days
- A newly optimized application-level copy program, bbcp, that matches the performance of iperf under some conditions
- Extensions of Xrootd, an optimized low-latency file access application for clusters, across the wide area
- Understanding of the limits of 10 Gbps-capable systems under stress

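The link between the aggregate rates and the Petabyte/day figure is plain unit arithmetic. Assuming decimal units (1 PB = 10^15 bytes), a petabyte per day needs a sustained average of about 92.6 Gbps; the 131 Gbps peak, if it could be held for a full day (it was a peak, not an average), would correspond to roughly 1.4 PB.

```python
SECONDS_PER_DAY = 86_400


def gbps_for_daily_volume(bytes_per_day: float) -> float:
    """Sustained rate (Gbit/s) needed to move bytes_per_day in 24 hours."""
    return bytes_per_day * 8 / SECONDS_PER_DAY / 1e9


def daily_volume_at(gbps: float) -> float:
    """Bytes moved in 24 hours at a constant rate of `gbps`."""
    return gbps * 1e9 / 8 * SECONDS_PER_DAY


if __name__ == "__main__":
    print(f"1 PB/day needs {gbps_for_daily_volume(1e15):.1f} Gbps")
    print(f"131 Gbps for 24 h = {daily_volume_at(131) / 1e15:.2f} PB")
```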

UltraLight and ATLAS

UltraLight has deployed and instrumented an UltraLight network and made good progress toward defining and constructing a needed ‘managed network’ infrastructure. The developments in UltraLight are targeted at providing needed capabilities and infrastructure for the LHC. We have some important activities which are ready for additional effort:

- Achieving 10 GE disk-to-disk transfers using single servers
- Evaluating TCP congestion control protocols over UL links
- Deploying embryonic network services to further the UL vision
- Implementing some forms of MPLS/QoS and optical path control as part of standard UltraLight operation
- Enabling automated end-host tuning and negotiation

We want to extend the footprint of UltraLight to include as many interested sites as possible to help ensure its developments meet the LHC needs.

Questions?
