2d0f8d58de7869ec3ce5fb5502705c28.ppt

- Number of slides: 28

UCM-Based Generation of Test Goals

Daniel Amyot, University of Ottawa (with Michael Weiss and Luigi Logrippo)
damyot@site.uottawa.ca
RDA Project (funded by NSERC)

UCM-Based Testing?
- Can we derive test goals and test cases from UCM models?
  - Scenario notation: a good basis!
  - Reuse of the (evolving) requirements model for validation
- UCMs are very abstract:
  - Very little information about data and communication
  - Difficult to derive implementation-level test cases automatically
  - Deriving test goals is more realistic
- How much value? How much effort?

Test Generation Approaches
- Based on UCM Testing Patterns
  - Grey-box test selection strategies, applied to requirements scenarios
  - Manual
- Based on UCM Scenario Definitions
  - UCM + simple data model, initial values and start points, and path traversal algorithms
  - Semi-automatic
- Based on UCM Transformations
  - Exhaustive traversal
  - Mapping to a formal language (e.g., LOTOS)
  - Automated

UCM Testing Patterns

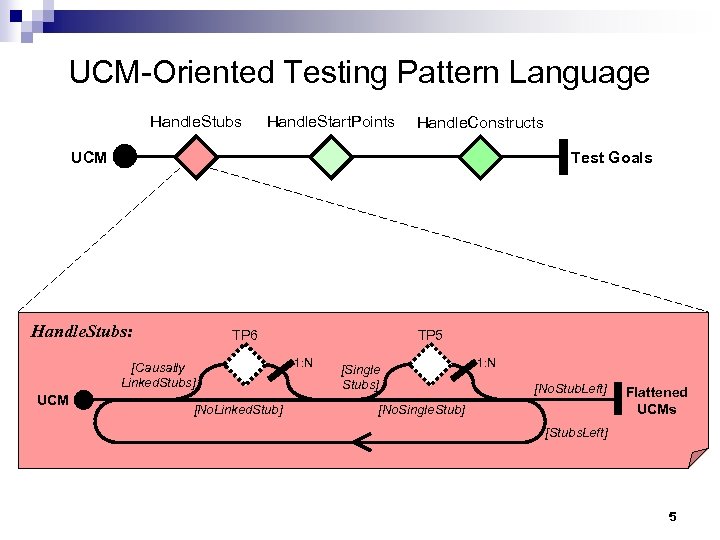

UCM-Oriented Testing Pattern Language
HandleStubs → HandleStartPoints → HandleConstructs → UCM Test Goals

HandleStubs:
- UCM with [CausallyLinkedStubs]: apply TP 6 (1:N)
- [NoLinkedStub] with [SingleStubs]: apply TP 5 (1:N), repeated while [StubsLeft]
- [NoStubLeft] or [NoSingleStub]: the result is a set of Flattened UCMs

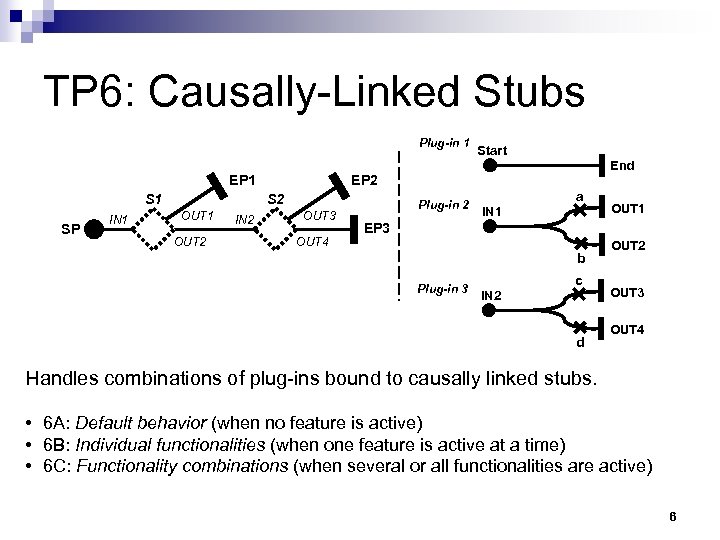

TP 6: Causally-Linked Stubs

(Figure: a root map from Start (SP) to the end points EP 1, EP 2 and EP 3, whose causally linked stubs are bound through IN/OUT segments to Plug-in 1, Plug-in 2 and Plug-in 3.)

Handles combinations of plug-ins bound to causally linked stubs.
- 6A: Default behavior (when no feature is active)
- 6B: Individual functionalities (when one feature is active at a time)
- 6C: Functionality combinations (when several or all functionalities are active)
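A minimal sketch of how the three TP 6 strategies could enumerate plug-in selections. It assumes, hypothetically, that each non-default plug-in of the causally linked stubs behaves as one independently switchable feature and that the empty selection stands for the default behavior; the function name `causally_linked_goals` and the feature names are illustrative only.

```python
from itertools import combinations
from typing import Dict, List, Tuple

Selection = Tuple[str, ...]  # hypothetical: the set of active features


def causally_linked_goals(features: List[str]) -> Dict[str, List[Selection]]:
    """Enumerate feature selections for TP 6 (hypothetical encoding)."""
    return {
        # 6A: default behavior, no feature active
        "6A": [()],
        # 6B: exactly one feature active at a time
        "6B": [(f,) for f in features],
        # 6C: every combination of two or more features
        "6C": [combo
               for k in range(2, len(features) + 1)
               for combo in combinations(features, k)],
    }


if __name__ == "__main__":
    for strategy, selections in causally_linked_goals(["F1", "F2", "F3"]).items():
        print(strategy, selections)
```

For three features this yields 1, 3 and 4 selections respectively; 6C grows exponentially with the number of features, which is why it is kept separate from 6B.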

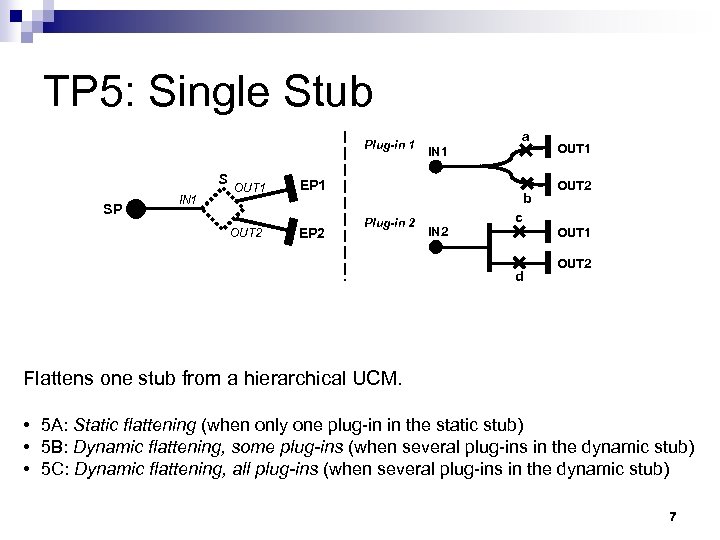

TP 5: Single Stub

(Figure: a root map from SP through stub S, whose IN/OUT segments are bound to Plug-in 1 and Plug-in 2.)

Flattens one stub from a hierarchical UCM.
- 5A: Static flattening (when the static stub contains only one plug-in)
- 5B: Dynamic flattening, some plug-ins (when the dynamic stub contains several plug-ins)
- 5C: Dynamic flattening, all plug-ins (when the dynamic stub contains several plug-ins)
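A minimal sketch of single-stub flattening as a 1:N step: one flattened map per selected plug-in. It assumes, hypothetically, that the root map reduces to one linear path (a list of element names) and that the IN/OUT segment bindings can be ignored; `flatten`, `flatten_stub` and the plug-in contents are illustrative names, not part of UCMNav.

```python
from typing import Dict, Iterable, List


def flatten(path: List[str], stub: str, plugin_body: List[str]) -> List[str]:
    """Replace the first occurrence of `stub` by the body of one plug-in."""
    i = path.index(stub)
    return path[:i] + plugin_body + path[i + 1:]


def flatten_stub(path: List[str], stub: str,
                 plugins: Dict[str, List[str]],
                 selected: Iterable[str] = ()) -> Dict[str, List[str]]:
    """One flattened map per chosen plug-in (the 1:N step of TP 5).

    An empty `selected` means all plug-ins (5A for a static stub with a
    single plug-in, 5C for a dynamic stub); a non-empty one gives 5B.
    """
    names = list(selected) or list(plugins)
    return {name: flatten(path, stub, plugins[name]) for name in names}


if __name__ == "__main__":
    root = ["SP", "S", "EP"]                               # hypothetical root map
    plugins = {"Plug-in 1": ["a", "b"], "Plug-in 2": ["c", "d"]}
    print(flatten_stub(root, "S", plugins))                # 5C
    print(flatten_stub(root, "S", plugins, ["Plug-in 2"]))  # 5B
```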

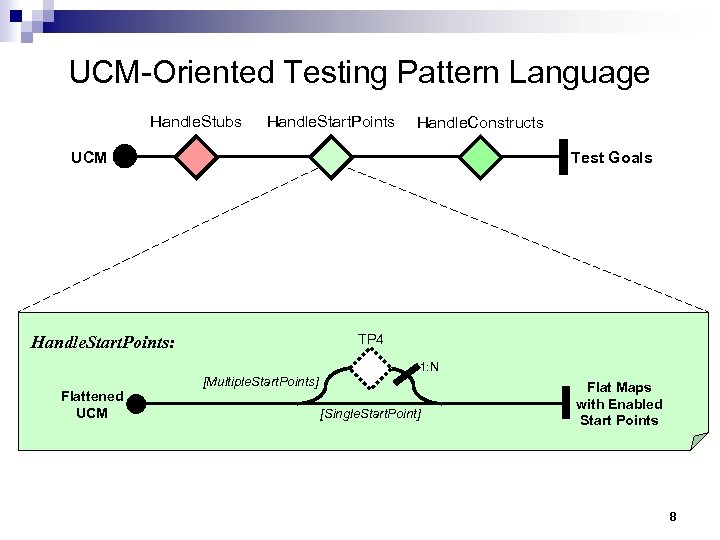

UCM-Oriented Testing Pattern Language
HandleStubs → HandleStartPoints → HandleConstructs → UCM Test Goals

HandleStartPoints:
- Flattened UCM with [MultipleStartPoints]: apply TP 4 (1:N)
- Flattened UCM with [SingleStartPoint]: leads directly to the result
- Result: Flat Maps with Enabled Start Points

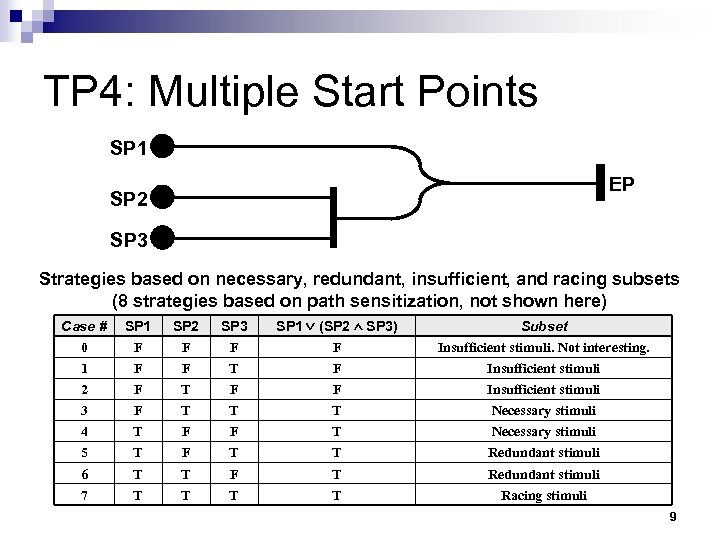

TP 4: Multiple Start Points

(Figure: start points SP1, SP2 and SP3 leading to end point EP.)

Strategies based on necessary, redundant, insufficient, and racing subsets (8 strategies based on path sensitization, not shown here).

| Case # | SP1 | SP2 | SP3 | SP1 ∨ (SP2 ∧ SP3) | Subset |
|---|---|---|---|---|---|
| 0 | F | F | F | F | Insufficient stimuli. Not interesting. |
| 1 | F | F | T | F | Insufficient stimuli |
| 2 | F | T | F | F | Insufficient stimuli |
| 3 | F | T | T | T | Necessary stimuli |
| 4 | T | F | F | T | Necessary stimuli |
| 5 | T | F | T | T | Redundant stimuli |
| 6 | T | T | F | T | Redundant stimuli |
| 7 | T | T | T | T | Racing stimuli |
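The subset labels of the TP 4 table can be reproduced mechanically. This sketch encodes one possible reading of the categories, not necessarily the exact definitions behind the pattern: a case is insufficient if no disjunct of SP1 ∨ (SP2 ∧ SP3) is satisfied, racing if both are, necessary if the enabled start points are exactly one disjunct, and redundant otherwise. `TERMS`, `classify` and `truth_table` are hypothetical names.

```python
from itertools import product
from typing import FrozenSet, List, Tuple

START_POINTS = ("SP1", "SP2", "SP3")
# EP is reached when SP1 fires, or when SP2 and SP3 both fire:
# SP1 ∨ (SP2 ∧ SP3), one frozenset per disjunct.
TERMS = [frozenset({"SP1"}), frozenset({"SP2", "SP3"})]


def classify(active: FrozenSet[str]) -> str:
    """Label a set of enabled start points (one reading of TP 4)."""
    satisfied = [t for t in TERMS if t <= active]
    if not satisfied:
        return "Insufficient"
    if len(satisfied) > 1:
        return "Racing"      # two independent ways to reach EP compete
    if active == satisfied[0]:
        return "Necessary"   # exactly the stimuli one disjunct needs
    return "Redundant"       # one disjunct holds, plus useless extra stimuli


def truth_table() -> List[Tuple[int, Tuple[bool, ...], str]]:
    rows = []
    # product() varies SP3 fastest, matching the case numbering of the table
    for case, bits in enumerate(product((False, True), repeat=len(START_POINTS))):
        active = frozenset(sp for sp, on in zip(START_POINTS, bits) if on)
        rows.append((case, bits, classify(active)))
    return rows


if __name__ == "__main__":
    for case, bits, label in truth_table():
        print(case, " ".join("T" if b else "F" for b in bits), label)
```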

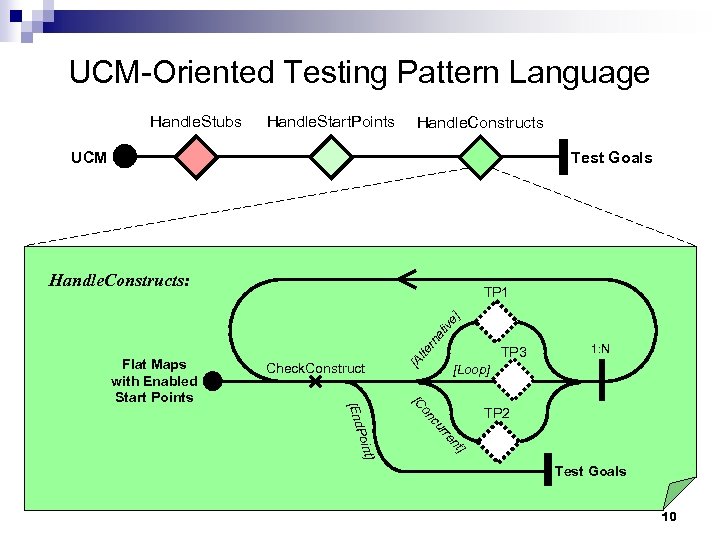

UCM-Oriented Testing Pattern Language
HandleStubs → HandleStartPoints → HandleConstructs → UCM Test Goals

HandleConstructs (from Flat Maps with Enabled Start Points, CheckConstruct along the path):
- [Alternative]: apply TP 1
- [Concurrent]: apply TP 2
- [Loop]: apply TP 3 (1:N)
- [EndPoint]: the result is a set of Test Goals
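The 1:N annotations in the pattern language mean that each step can turn one input into several outputs, so the number of test goals multiplies along the chain. A minimal sketch, assuming each step is a function returning a list; `apply_stages` and the three stage functions are hypothetical stand-ins that ignore the internal looping of HandleStubs and HandleConstructs.

```python
from typing import Callable, List

# Hypothetical: every pattern-language step maps one model to a list of models.
Stage = Callable[[str], List[str]]


def apply_stages(ucm: str, stages: List[Stage]) -> List[str]:
    """Chain 1:N steps: each output of one step is fed to the next step."""
    items = [ucm]
    for stage in stages:
        items = [out for item in items for out in stage(item)]
    return items


# Toy stand-ins (hypothetical): 2 plug-in choices, 1 start-point set, 3 goals.
def handle_stubs(m: str) -> List[str]:
    return [m + "/plugin1", m + "/plugin2"]


def handle_start_points(m: str) -> List[str]:
    return [m]


def handle_constructs(m: str) -> List[str]:
    return [m + "/goal" + str(i) for i in range(1, 4)]


if __name__ == "__main__":
    for goal in apply_stages("UCM", [handle_stubs, handle_start_points, handle_constructs]):
        print(goal)
```

With these toy stages one UCM yields 2 × 1 × 3 = 6 test goals.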

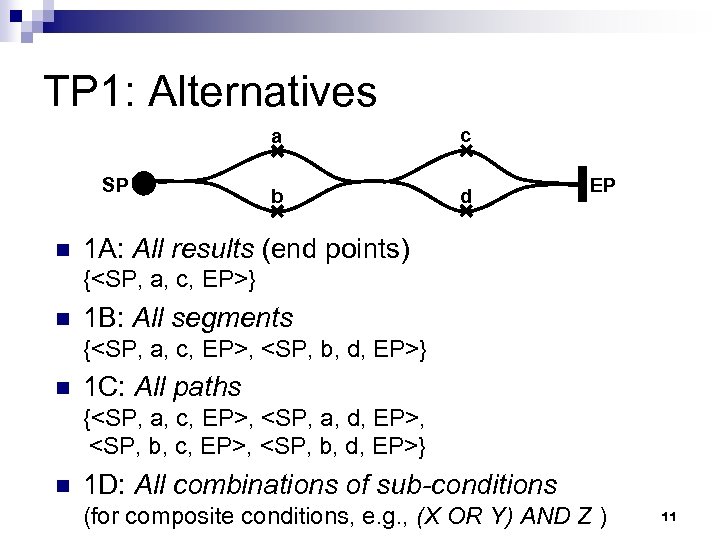

TP 1: Alternatives

(Figure: from SP, an OR-fork offers a or b, then a second OR-fork offers c or d, before EP.)

- 1A: All results (end points): {<SP, a, c, EP>}
- 1B: All segments: {<SP, a, c, EP>, <SP, b, d, EP>}
- 1C: All paths: {<SP, a, c, EP>, <SP, a, d, EP>, <SP, b, c, EP>, <SP, b, d, EP>}
- 1D: All combinations of sub-conditions (for composite conditions, e.g., (X OR Y) AND Z)
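A small sketch of strategies 1A to 1D for the TP 1 example, assuming (hypothetically) that the map is just a sequence of OR-forks between one start point and one end point, encoded as a list of branch lists. Real UCMs need a graph traversal; this only shows how the coverage levels differ in size. All names are illustrative.

```python
from itertools import product, zip_longest
from typing import Dict, List, Tuple

# Hypothetical encoding of the TP 1 example: SP, an OR-fork a|b,
# an OR-fork c|d, then the single end point EP.
FORKS: List[List[str]] = [["a", "b"], ["c", "d"]]
Path = Tuple[str, ...]


def all_paths(forks: List[List[str]]) -> List[Path]:
    """1C: every combination of branch choices."""
    return [("SP", *choice, "EP") for choice in product(*forks)]


def all_segments(forks: List[List[str]]) -> List[Path]:
    """1B: cover every branch at least once, pairing branches by position."""
    goals = []
    for choice in zip_longest(*forks):
        # a narrower fork runs out first; reuse its first branch to fill the gap
        filled = [c if c is not None else f[0] for c, f in zip(choice, forks)]
        goals.append(("SP", *filled, "EP"))
    return goals


def all_results(forks: List[List[str]]) -> List[Path]:
    """1A: one path per distinct end point; this map has only EP."""
    return all_paths(forks)[:1]


def sub_condition_combinations(names: List[str]) -> List[Dict[str, bool]]:
    """1D: every truth assignment of the atomic sub-conditions."""
    return [dict(zip(names, values))
            for values in product((False, True), repeat=len(names))]
```

With n sequential two-way forks, 1B stays at two goals while 1C grows to 2^n, which is the cost trade-off between the strategies.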

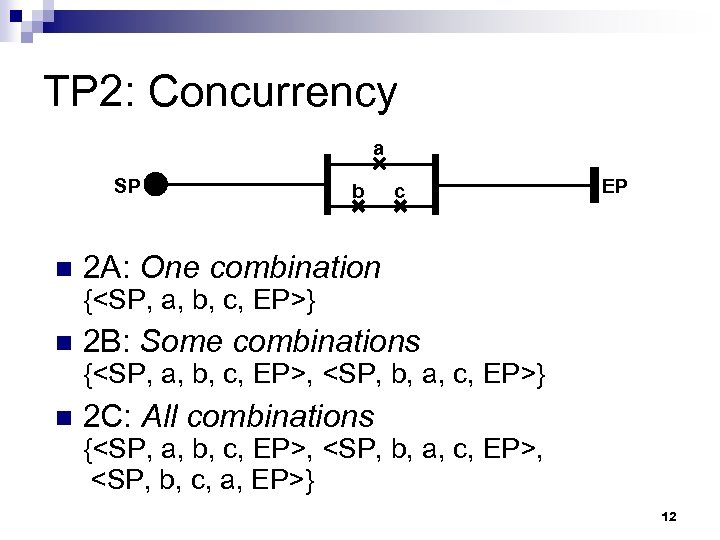

TP 2: Concurrency

(Figure: from SP, an AND-fork leads to a on one branch and to b then c on the other, joining before EP.)

- 2A: One combination: {<SP, a, b, c, EP>}
- 2B: Some combinations: {<SP, a, b, c, EP>, <SP, b, a, c, EP>}
- 2C: All combinations: {<SP, a, b, c, EP>, <SP, b, a, c, EP>, <SP, b, c, a, EP>}
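The 2C goals are the interleavings of the concurrent branches that preserve the order within each branch. A minimal sketch, assuming each branch is a plain sequence of responsibilities; the slide does not say how 2B picks "some" combinations, so taking the first two here is an arbitrary illustrative choice. Function names are hypothetical.

```python
from typing import List, Sequence


def interleavings(xs: Sequence[str], ys: Sequence[str]) -> List[List[str]]:
    """All merges of two branches that keep each branch's internal order."""
    if not xs:
        return [list(ys)]
    if not ys:
        return [list(xs)]
    return ([[xs[0]] + rest for rest in interleavings(xs[1:], ys)]
            + [[ys[0]] + rest for rest in interleavings(xs, ys[1:])])


def concurrency_goals(branch1: Sequence[str], branch2: Sequence[str],
                      strategy: str = "2C") -> List[List[str]]:
    orders = interleavings(branch1, branch2)
    if strategy == "2A":
        chosen = orders[:1]   # one combination
    elif strategy == "2B":
        chosen = orders[:2]   # "some": arbitrarily, the first two
    else:
        chosen = orders       # 2C: all combinations
    return [["SP", *order, "EP"] for order in chosen]


if __name__ == "__main__":
    for goal in concurrency_goals(["a"], ["b", "c"]):
        print(goal)
```

For branches `["a"]` and `["b", "c"]` this reproduces the three 2C goals listed for TP 2, in the same order.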

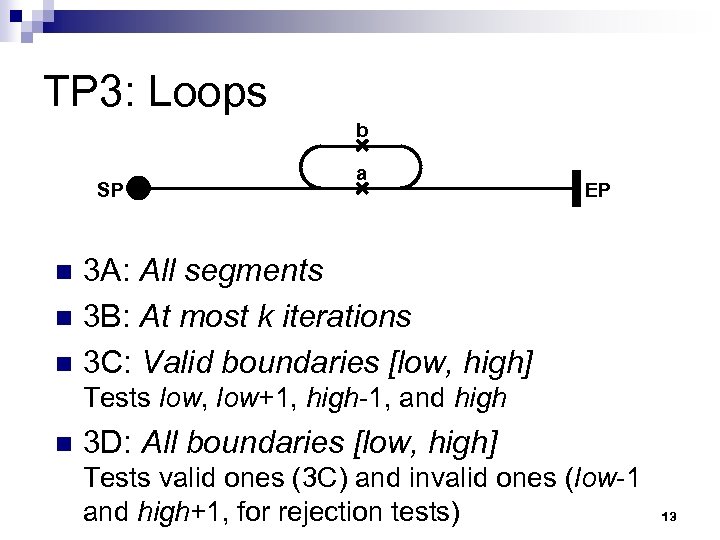

TP 3: Loops

(Figure: a path from SP to EP containing a loop, with responsibilities a and b.)

- 3A: All segments
- 3B: At most k iterations
- 3C: Valid boundaries [low, high]
  - Tests low, low+1, high-1, and high
- 3D: All boundaries [low, high]
  - Tests the valid ones (3C) and the invalid ones (low-1 and high+1, for rejection tests)
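A sketch of the iteration counts that strategies 3B to 3D would select, assuming iteration counts are non-negative integers, the range [low, high] is inclusive, and zero iterations is a legal path for 3B; the function names are hypothetical.

```python
from typing import List, Tuple


def at_most_k(k: int) -> List[int]:
    """3B: iteration counts 0..k (assumes zero iterations is allowed)."""
    return list(range(k + 1))


def valid_boundaries(low: int, high: int) -> List[int]:
    """3C: low, low+1, high-1, high, deduplicated and kept inside [low, high]."""
    return sorted(n for n in {low, low + 1, high - 1, high} if low <= n <= high)


def all_boundaries(low: int, high: int) -> Tuple[List[int], List[int]]:
    """3D: the valid boundaries plus the rejection cases low-1 and high+1."""
    invalid = [n for n in (low - 1, high + 1) if n >= 0]  # negative counts are meaningless
    return valid_boundaries(low, high), invalid


if __name__ == "__main__":
    print(all_boundaries(2, 10))
```

Deduplication matters for narrow ranges: for [3, 3], 3C collapses to a single test.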

Complementary Strategies
- Strategies for value selection
  - Equivalence classes, boundary testing
    - Typical approaches in traditional testing
- Strategies for rejection test cases
  - Forbidden scenarios
  - Testing patterns
  - Incomplete conditions
  - Off-by-one values

UCM Scenario Definitions

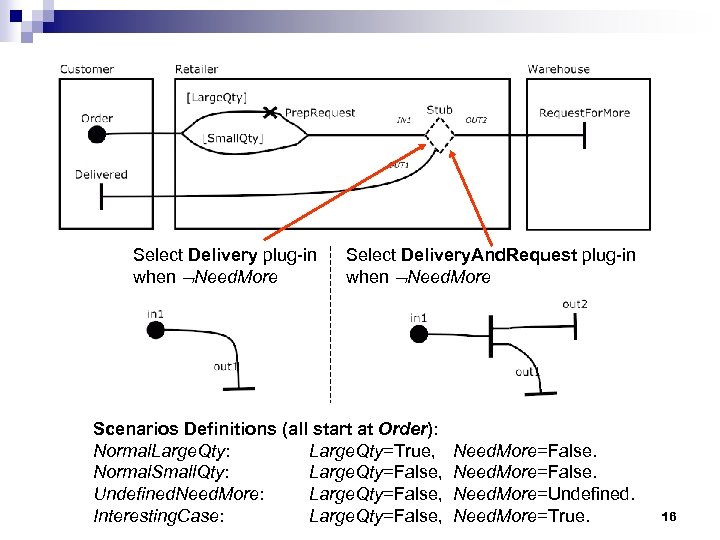

Select the Delivery plug-in when NOT NeedMore. Select the DeliveryAndRequest plug-in when NeedMore.

Scenario Definitions (all start at Order):

| Scenario | Initial values |
|---|---|
| NormalLargeQty | LargeQty=True |
| NormalSmallQty | LargeQty=False, NeedMore=False |
| UndefinedNeedMore | LargeQty=False, NeedMore=Undefined |
| InterestingCase | LargeQty=False, NeedMore=True |
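A minimal sketch of how a traversal could evaluate the plug-in selection policy against the initial values of each scenario definition. It assumes the Delivery plug-in is selected when NeedMore is false, that `None` stands for Undefined, and that an undefined guard stops the traversal; NormalLargeQty is left out because no initial NeedMore value is given for it. `SCENARIOS` and `select_plugin` are hypothetical names, not UCMNav APIs.

```python
from typing import Dict, Optional

# Hypothetical encoding of three scenario definitions; None means "Undefined".
SCENARIOS: Dict[str, Dict[str, Optional[bool]]] = {
    "NormalSmallQty": {"LargeQty": False, "NeedMore": False},
    "UndefinedNeedMore": {"LargeQty": False, "NeedMore": None},
    "InterestingCase": {"LargeQty": False, "NeedMore": True},
}


def select_plugin(variables: Dict[str, Optional[bool]]) -> Optional[str]:
    """Apply the dynamic stub's selection policy.

    Returns None when NeedMore is undefined, i.e. no plug-in can be chosen.
    """
    need_more = variables.get("NeedMore")
    if need_more is None:
        return None
    return "DeliveryAndRequest" if need_more else "Delivery"


if __name__ == "__main__":
    for name, values in SCENARIOS.items():
        print(name, select_plugin(values))
```

Under these assumptions, UndefinedNeedMore is useful precisely because it exposes a point where the traversal cannot proceed.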

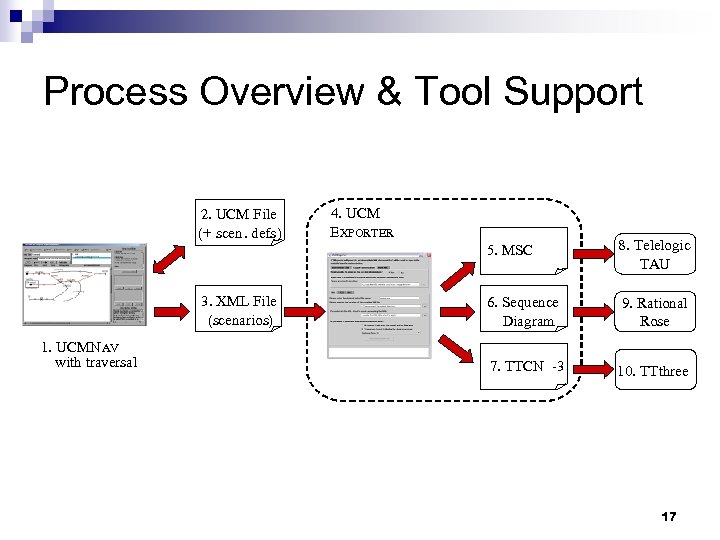

Process Overview & Tool Support
1. UCMNav with traversal
2. UCM File (+ scenario definitions)
3. XML File (scenarios)
4. UCMExporter
5. MSC
6. Sequence Diagram
7. TTCN-3
8. Telelogic TAU
9. Rational Rose
10. TTthree

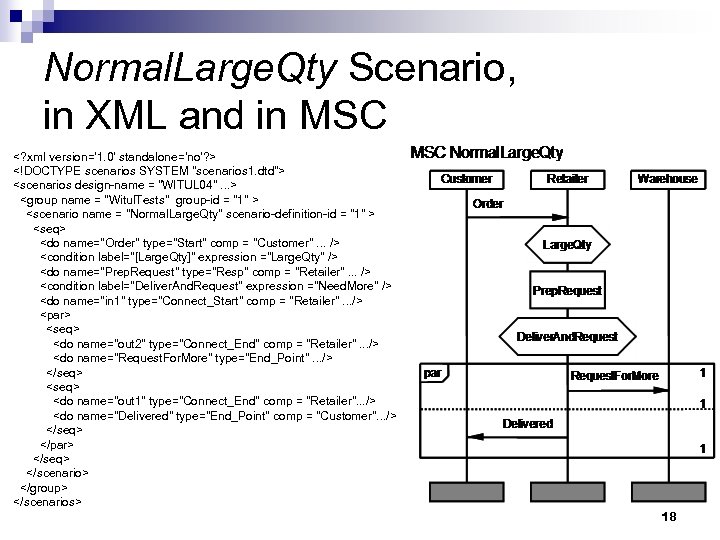

NormalLargeQty Scenario, in XML and in MSC

```xml
<?xml version='1.0' standalone='no'?>
<!DOCTYPE scenarios SYSTEM "scenarios1.dtd">
<scenarios design-name="WITUL 04" ...>
  <group name="WitulTests" group-id="1">
    <scenario name="NormalLargeQty" scenario-definition-id="1">
      <seq>
        <do name="Order" type="Start" comp="Customer" ... />
        <condition label="[LargeQty]" expression="LargeQty" />
        <do name="PrepRequest" type="Resp" comp="Retailer" ... />
        <condition label="DeliverAndRequest" expression="NeedMore" />
        <do name="in1" type="Connect_Start" comp="Retailer" ... />
        <par>
          <seq>
            <do name="out2" type="Connect_End" comp="Retailer" ... />
            <do name="RequestForMore" type="End_Point" ... />
          </seq>
          <seq>
            <do name="out1" type="Connect_End" comp="Retailer" ... />
            <do name="Delivered" type="End_Point" comp="Customer" ... />
          </seq>
        </par>
      </seq>
    </scenario>
  </group>
</scenarios>
```
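The `<seq>` and `<par>` elements determine which orderings of events a test may observe. A sketch using Python's standard `xml.etree.ElementTree`, assuming a trimmed copy of the NormalLargeQty scenario (the attributes elided with "..." are dropped) and treating `<condition>` elements as emitting no event; `traces` and `interleave` are hypothetical helpers, not part of UCMExporter.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed copy of the NormalLargeQty scenario.
SCENARIO_XML = """
<scenario name="NormalLargeQty">
  <seq>
    <do name="Order" type="Start"/>
    <condition label="[LargeQty]" expression="LargeQty"/>
    <do name="PrepRequest" type="Resp"/>
    <condition label="DeliverAndRequest" expression="NeedMore"/>
    <do name="in1" type="Connect_Start"/>
    <par>
      <seq>
        <do name="out2" type="Connect_End"/>
        <do name="RequestForMore" type="End_Point"/>
      </seq>
      <seq>
        <do name="out1" type="Connect_End"/>
        <do name="Delivered" type="End_Point"/>
      </seq>
    </par>
  </seq>
</scenario>
"""


def interleave(xs, ys):
    """Order-preserving merges of two event lists."""
    if not xs:
        return [ys]
    if not ys:
        return [xs]
    return ([[xs[0]] + r for r in interleave(xs[1:], ys)]
            + [[ys[0]] + r for r in interleave(xs, ys[1:])])


def traces(elem):
    """All event orderings allowed by a <do>/<condition>/<seq>/<par> element."""
    if elem.tag == "do":
        return [[elem.get("name")]]
    if elem.tag == "condition":
        return [[]]  # a condition constrains the path but emits no event
    child_traces = [traces(child) for child in elem]
    result = [[]]
    for options in child_traces:
        if elem.tag == "par":
            result = [m for r in result for o in options for m in interleave(r, o)]
        else:  # <seq> and the <scenario> wrapper: concatenate in order
            result = [r + o for r in result for o in options]
    return result


if __name__ == "__main__":
    for trace in traces(ET.fromstring(SCENARIO_XML.strip())):
        print(trace)
```

Here the two parallel branches of two events each give six orderings, all sharing the prefix Order, PrepRequest, in1.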

UCM Transformations

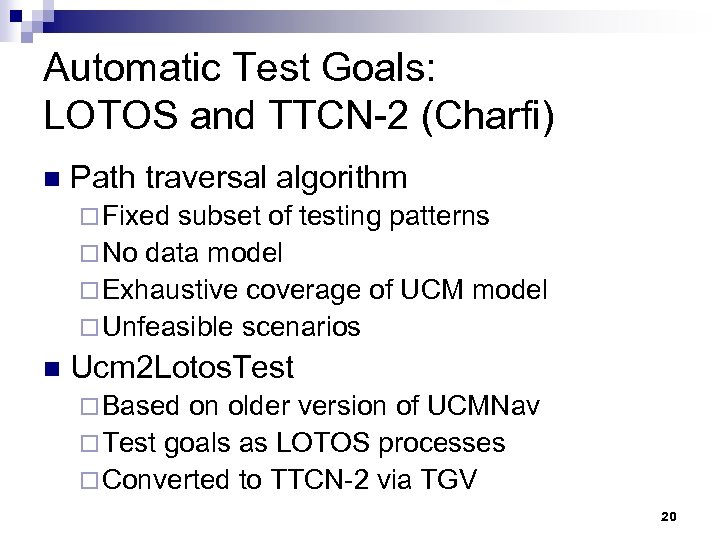

Automatic Test Goals: LOTOS and TTCN-2 (Charfi)
- Path traversal algorithm
  - Fixed subset of testing patterns
  - No data model
  - Exhaustive coverage of the UCM model
  - Unfeasible scenarios
- Ucm2LotosTest
  - Based on an older version of UCMNav
  - Test goals as LOTOS processes
  - Converted to TTCN-2 via TGV
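To illustrate why exhaustive traversal without a data model yields unfeasible scenarios, here is a small hypothetical map in which two OR-forks test the same variable X. The depth-first traversal below is a generic sketch, not the Charfi algorithm; it assumes an acyclic map and guards written as `X` or `!X`. `GRAPH`, `traverse` and `feasible` are illustrative names.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical map: node -> list of (guard, successor); None means unguarded.
GRAPH: Dict[str, List[Tuple[Optional[str], str]]] = {
    "SP": [("X", "a"), ("!X", "b")],
    "a": [(None, "join1")],
    "b": [(None, "join1")],
    "join1": [("X", "c"), ("!X", "d")],
    "c": [(None, "EP")],
    "d": [(None, "EP")],
    "EP": [],
}


def traverse(node: str = "SP", path: Optional[List[str]] = None) -> List[List[str]]:
    """Exhaustive depth-first traversal that ignores guards (no data model).

    Assumes the map is acyclic; a loop would recurse forever.
    """
    path = (path or []) + [node]
    if not GRAPH[node]:
        return [path]
    return [p for _, nxt in GRAPH[node] for p in traverse(nxt, path)]


def feasible(path: List[str], env: Dict[str, bool]) -> bool:
    """Check every guard taken along a path against one variable assignment."""
    for here, nxt in zip(path, path[1:]):
        guard = next(g for g, s in GRAPH[here] if s == nxt)
        if guard is None:
            continue
        value = env[guard.lstrip("!")]
        if guard.startswith("!"):
            value = not value
        if not value:
            return False
    return True


feasible_paths = [p for p in traverse()
                  if any(feasible(p, {"X": x}) for x in (True, False))]
```

The traversal finds four paths, but only two are feasible for some value of X; the other two combine contradictory guards.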

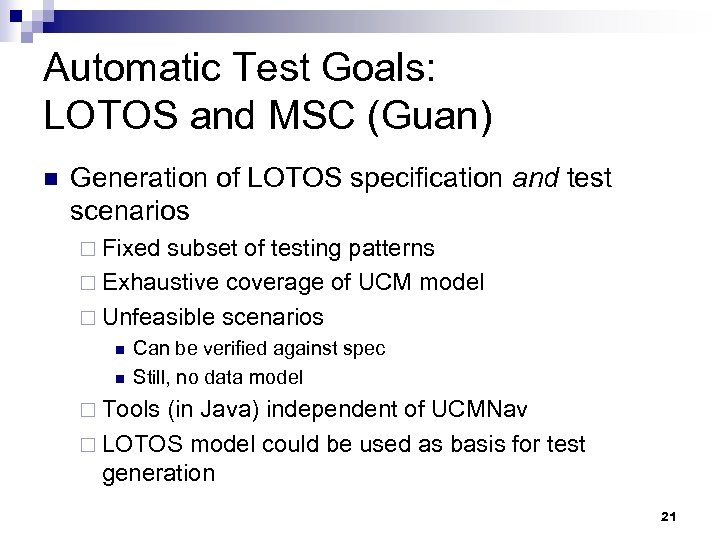

Automatic Test Goals: LOTOS and MSC (Guan)
- Generation of LOTOS specification and test scenarios
  - Fixed subset of testing patterns
  - Exhaustive coverage of the UCM model
  - Unfeasible scenarios
    - Can be verified against the spec
    - Still, no data model
  - Tools (in Java) independent of UCMNav
  - LOTOS model could be used as a basis for test generation

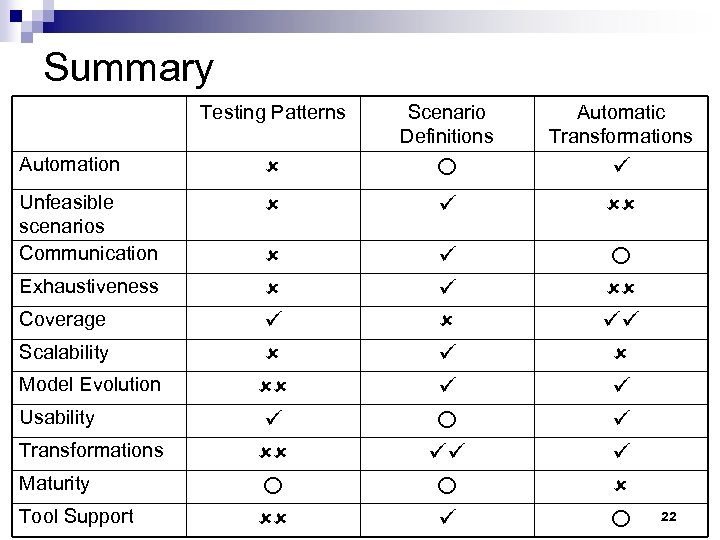

Summary

The three approaches (Testing Patterns, Scenario Definitions, Automatic Transformations) are compared on: Automation, Unfeasible scenarios, Communication, Exhaustiveness, Coverage, Scalability, Model Evolution, Usability, Transformations, Maturity, and Tool Support.

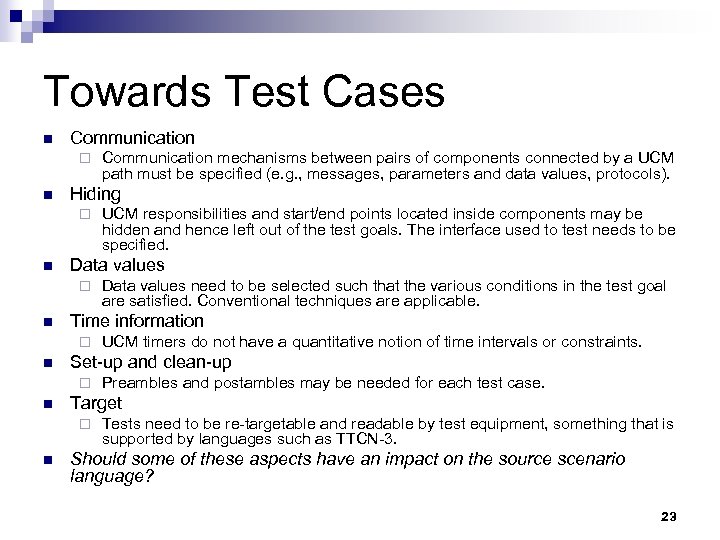

Towards Test Cases
- Communication
  - Communication mechanisms between pairs of components connected by a UCM path must be specified (e.g., messages, parameters and data values, protocols).
- Hiding
  - UCM responsibilities and start/end points located inside components may be hidden and hence left out of the test goals. The interface used to test needs to be specified.
- Data values
  - Data values need to be selected such that the various conditions in the test goal are satisfied. Conventional techniques are applicable.
- Time information
  - UCM timers do not have a quantitative notion of time intervals or constraints.
- Set-up and clean-up
  - Preambles and postambles may be needed for each test case.
- Target
  - Tests need to be re-targetable and readable by test equipment, something that is supported by languages such as TTCN-3.

Should some of these aspects have an impact on the source scenario language?
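The data-values point can be tackled with conventional search. This sketch brute-forces small finite domains until every condition of a test goal holds; the variables `qty` and `stock`, their domains, and the conditions are hypothetical examples, and larger models would call for a constraint solver or equivalence classes rather than exhaustive enumeration.

```python
from itertools import product
from typing import Callable, Dict, List, Optional

Assignment = Dict[str, object]


def select_values(domains: Dict[str, List[object]],
                  conditions: List[Callable[[Assignment], bool]]) -> Optional[Assignment]:
    """Return the first assignment satisfying all conditions, or None."""
    names = list(domains)
    for values in product(*(domains[n] for n in names)):
        candidate = dict(zip(names, values))
        if all(cond(candidate) for cond in conditions):
            return candidate
    return None


if __name__ == "__main__":
    # Hypothetical test-goal conditions: the order exceeds the stock,
    # and the retailer has some stock.
    domains = {"qty": [1, 10, 100], "stock": [0, 50]}
    conditions = [lambda v: v["qty"] > v["stock"], lambda v: v["stock"] > 0]
    print(select_values(domains, conditions))
```

Returning None signals that no value in the chosen domains satisfies the goal, which may itself reveal an unfeasible scenario or a domain that is too narrow.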

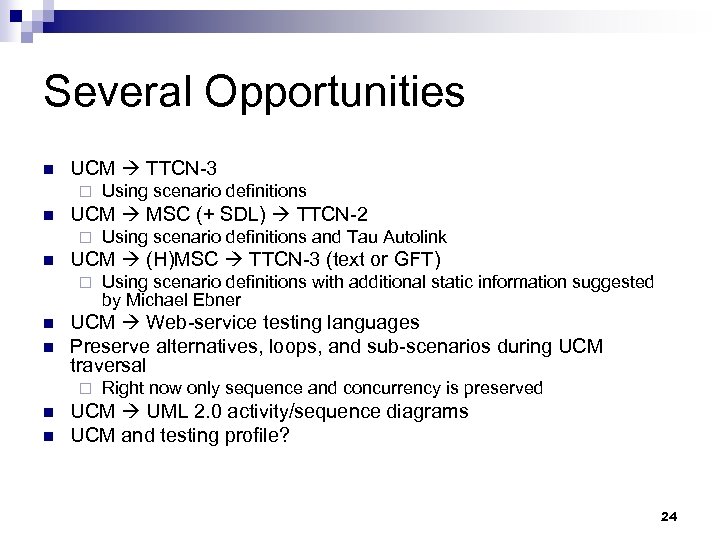

Several Opportunities
- UCM → TTCN-3
  - Using scenario definitions with additional static information suggested by Michael Ebner
- UCM → MSC (+ SDL) → TTCN-2
  - Using scenario definitions and Tau Autolink
- UCM → Web-service testing languages
- Preserve alternatives, loops, and sub-scenarios during UCM traversal
  - Right now, only sequence and concurrency are preserved
- UCM → (H)MSC → TTCN-3 (text or GFT)
  - Using scenario definitions
- UCM → UML 2.0 activity/sequence diagrams
- UCM and testing profile?

Tools
- UCMNav 2.2: http://www.usecasemaps.org/tools/ucmnav/
- UCMExporter: http://ucmexporter.sourceforge.net/
- UCM2LOTOS: available upon request

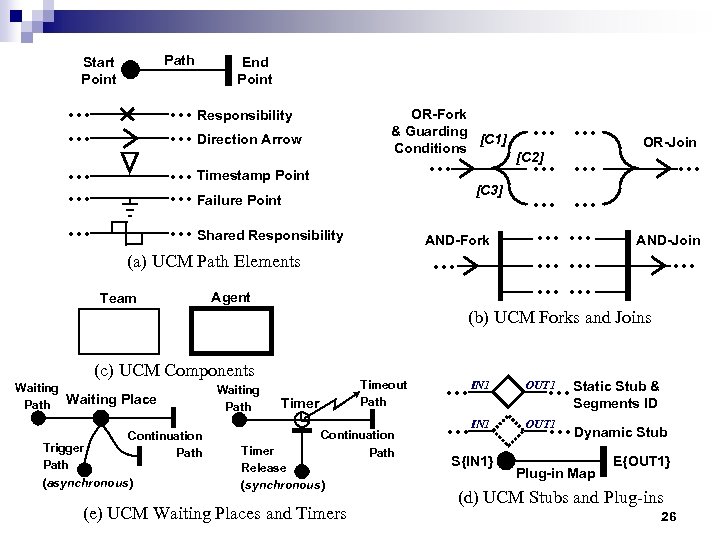

(Figure: UCM notation summary.
(a) UCM Path Elements: path, start point, end point, responsibility, direction arrow, timestamp point, failure point, shared responsibility.
(b) UCM Forks and Joins: OR-fork with guarding conditions [C1], [C2], [C3]; OR-join; AND-fork; AND-join.
(c) UCM Components: team, agent.
(d) UCM Stubs and Plug-ins: static stub with segment IDs (IN1, OUT1), dynamic stub, plug-in map with S{IN1} and E{OUT1}.
(e) UCM Waiting Places and Timers: waiting place with trigger path (asynchronous), waiting path and continuation path; timer with timer release (synchronous), waiting path, continuation path and timeout path.)

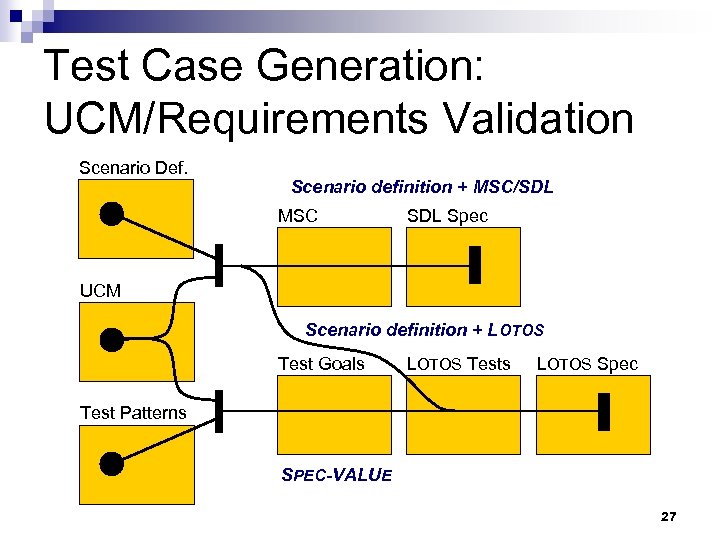

Test Case Generation: UCM/Requirements Validation

(Figure: from the UCM, via Test Patterns and Scenario Def., to Test Goals; "Scenario definition + MSC/SDL" leads to MSC and SDL Spec; "Scenario definition + LOTOS" leads to LOTOS Tests and LOTOS Spec; SPEC-VALUE.)

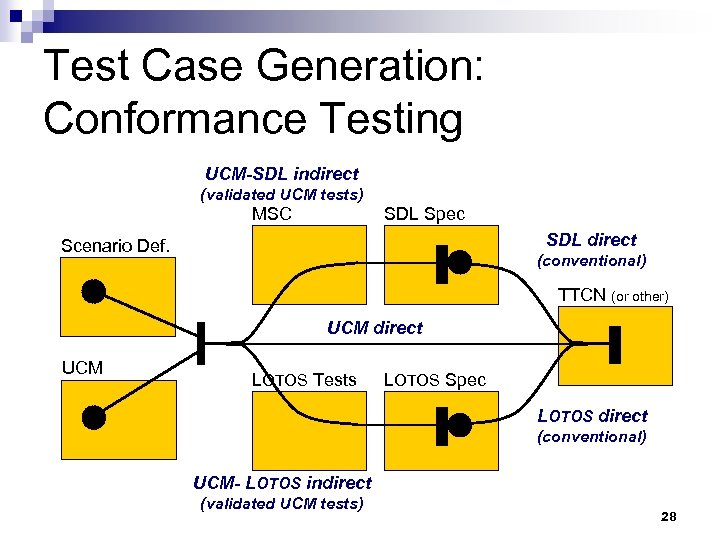

Test Case Generation: Conformance Testing

(Figure: routes from UCM and Scenario Def. to TTCN (or other) tests: UCM direct; UCM-SDL indirect (validated UCM tests), via MSC and SDL Spec; SDL direct (conventional); UCM-LOTOS indirect (validated UCM tests), via LOTOS Tests and LOTOS Spec; LOTOS direct (conventional).)
