afa0517bc294b69d4027faf2945fdc84.ppt

- Number of slides: 19

Two Discourse Driven Language Models for Semantics

Haoruo Peng and Dan Roth

ACL-2016

![A Typical “Semantic Sequence” Problem](https://present5.com/presentation/afa0517bc294b69d4027faf2945fdc84/image-2.jpg)

A Typical “Semantic Sequence” Problem

[Kevin] was robbed by [Robert], and [he] was arrested by the police.

- Co-reference
  - “he” refers to whom?
- QA
  - Who was arrested? / Was Kevin arrested by police?
- Event
  - What happened to Robert after the robbery?

“Understanding what could come next” is central to multiple natural language understanding tasks.

Two Key Questions

- How do we model the sequential nature of NL at a semantic level?
  - What do we mean by “a semantic level”?
  - Outcome: Semantic Language Models (SemLMs)
  - Quality evaluation of SemLMs
- How do we use the model to better support NLU tasks?
  - Application to co-reference
  - Application to shallow discourse parsing

![Frame-Based Semantic Sequence](https://present5.com/presentation/afa0517bc294b69d4027faf2945fdc84/image-4.jpg)

Frame-Based Semantic Sequence

[Kevin] was robbed by [Robert], and [he] was arrested by the police.

- predicate: rob; arguments: sub = Robert, obj = Kevin
- predicate: arrest; arguments: sub = the police, obj = he
- …

[Chambers and Jurafsky, 2008; Bejan, 2008; Jans et al., 2012] [Granroth-Wilding et al., 2015; Pichotta and Mooney, 2016] …

![What We Do Differently](https://present5.com/presentation/afa0517bc294b69d4027faf2945fdc84/image-5.jpg)

What We Do Differently

- Infuse discourse information

[Kevin] was robbed by [Robert], but the police mistakenly arrested [him].

Diagram labels: predicate, connective, argument, …

![Two Different Sequences](https://present5.com/presentation/afa0517bc294b69d4027faf2945fdc84/image-6.jpg)

Two Different Sequences

[Kevin] was robbed by [Robert], but the police mistakenly arrested [him].

- SRL: Rob.01 (sub = Robert, obj = Kevin); Arrest.01 (sub = the police, obj = him)
- Shallow Discourse Parsing: but
- Co-reference: Kevin, him
- Frame-Chain (FC) Sequences: Rob.01 but Arrest.01 EOS
- Entity-Centered (EC) Sequences (Kevin): Rob.01-obj but Arrest.01-obj
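
The two sequence types on this slide can be sketched in a few lines of Python. This is a minimal illustration, not the authors' pipeline: the `Frame` class and both function names are hypothetical, the SRL, discourse parsing and co-reference outputs are assumed to be already available, and the co-reference step is assumed to have mapped "him" to Kevin. Keeping every connective in the EC sequence is also a simplification.

```python
from dataclasses import dataclass, field

@dataclass
class Frame:
    """A disambiguated predicate with its role -> entity arguments (hypothetical type)."""
    predicate: str
    args: dict = field(default_factory=dict)

def frame_chain(items):
    """FC sequence: predicates and connectives in text order, closed with EOS."""
    return [it.predicate if isinstance(it, Frame) else it for it in items] + ["EOS"]

def entity_centered(items, entity):
    """EC sequence: predicate-role units for one entity, with connectives kept in place."""
    seq = []
    for it in items:
        if isinstance(it, Frame):
            seq.extend(f"{it.predicate}-{role}"
                       for role, ent in it.args.items() if ent == entity)
        else:
            seq.append(it)  # discourse connective such as "but"
    return seq

# The slide's sentence, with "him" already resolved to Kevin.
items = [
    Frame("Rob.01", {"sub": "Robert", "obj": "Kevin"}),
    "but",
    Frame("Arrest.01", {"sub": "the police", "obj": "Kevin"}),
]
fc = frame_chain(items)
ec = entity_centered(items, "Kevin")
print(fc)
print(ec)
```

On the slide's sentence this reproduces the FC sequence `Rob.01 but Arrest.01 EOS` and the EC sequence `Rob.01-obj but Arrest.01-obj` for Kevin.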

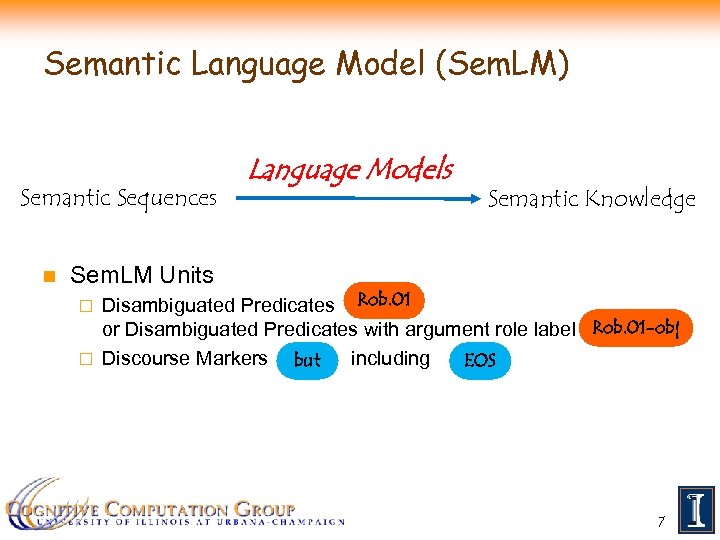

Semantic Language Model (SemLM)

Diagram labels: Semantic Sequences, Language Models, Semantic Knowledge

- SemLM Units
  - Disambiguated Predicates (Rob.01), or Disambiguated Predicates with argument role label (Rob.01-obj)
  - Discourse Markers (but), including EOS

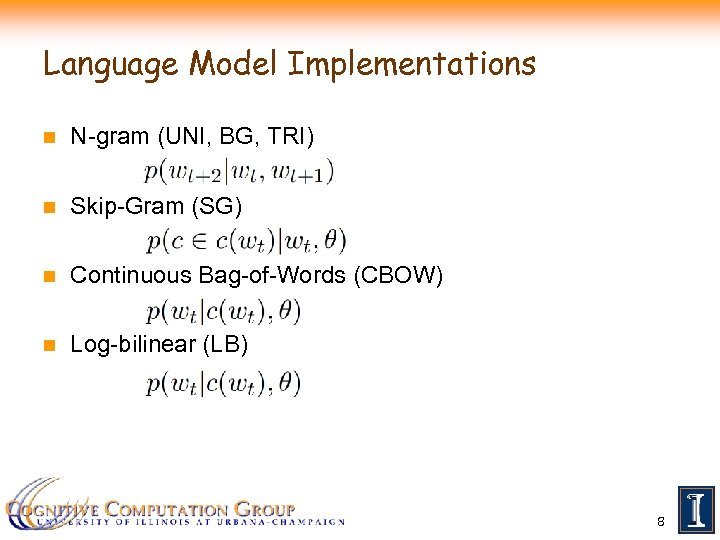

Language Model Implementations

- N-gram (UNI, BG, TRI)
- Skip-Gram (SG)
- Continuous Bag-of-Words (CBOW)
- Log-bilinear (LB)
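
As a rough illustration of the simplest entry in this list, the sketch below trains a bigram (BG) model over semantic units. The class name `BigramLM`, the toy sequences and the add-k smoothing are all assumptions made for the example; the slides do not say which smoothing the authors used.

```python
from collections import Counter

class BigramLM:
    """Bigram model over semantic units with add-k smoothing (an assumed choice)."""

    def __init__(self, sequences, k=1.0):
        self.k = k
        self.context_counts = Counter()  # how often a unit appears as a left context
        self.bigram_counts = Counter()   # how often (prev, cur) appears
        self.vocab = set()
        for seq in sequences:
            self.vocab.update(seq)
            for prev, cur in zip(seq, seq[1:]):
                self.context_counts[prev] += 1
                self.bigram_counts[(prev, cur)] += 1

    def prob(self, cur, prev):
        """P(cur | prev) with add-k smoothing over the vocabulary."""
        num = self.bigram_counts[(prev, cur)] + self.k
        den = self.context_counts[prev] + self.k * len(self.vocab)
        return num / den

# Toy FC sequences, made up for illustration.
sequences = [
    ["Rob.01", "but", "Arrest.01", "EOS"],
    ["Arrest.01", "and", "Convict.01", "EOS"],
]
lm = BigramLM(sequences)
print(lm.prob("Arrest.01", "but"))
```

Because smoothing spreads mass over the whole vocabulary, the probabilities for any fixed context still sum to one.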

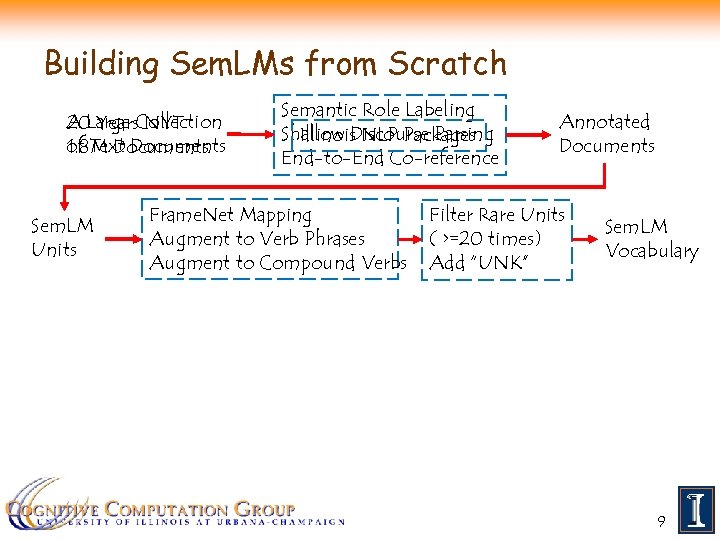

Building SemLMs from Scratch

- A Large Collection of Text Documents: 20 Years NYT, 1.8M Documents
- Illinois NLP Packages: Semantic Role Labeling, Shallow Discourse Parsing, End-to-End Co-reference
- Annotated Documents
- SemLM Units: FrameNet Mapping, Augment to Verb Phrases, Augment to Compound Verbs
- SemLM Vocabulary: Filter Rare Units (>=20 times), Add “UNK”
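
The vocabulary step at the end of this pipeline can be sketched as follows. The reading assumed here is that units seen at least 20 times are kept and everything else is mapped to "UNK"; the function names `build_vocab` and `map_rare` are hypothetical.

```python
from collections import Counter

def build_vocab(sequences, min_count=20, unk="UNK"):
    """Keep units seen at least min_count times and add the UNK symbol."""
    counts = Counter(unit for seq in sequences for unit in seq)
    vocab = {unit for unit, c in counts.items() if c >= min_count}
    vocab.add(unk)
    return vocab

def map_rare(seq, vocab, unk="UNK"):
    """Replace out-of-vocabulary units with UNK."""
    return [unit if unit in vocab else unk for unit in seq]

# Toy corpus: one frequent pattern repeated 20 times plus one rare unit.
corpus = [["Rob.01", "but"]] * 20 + [["Rescue.01"]]
vocab = build_vocab(corpus)
print(sorted(vocab))
print(map_rare(["Rob.01", "Rescue.01"], vocab))
```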

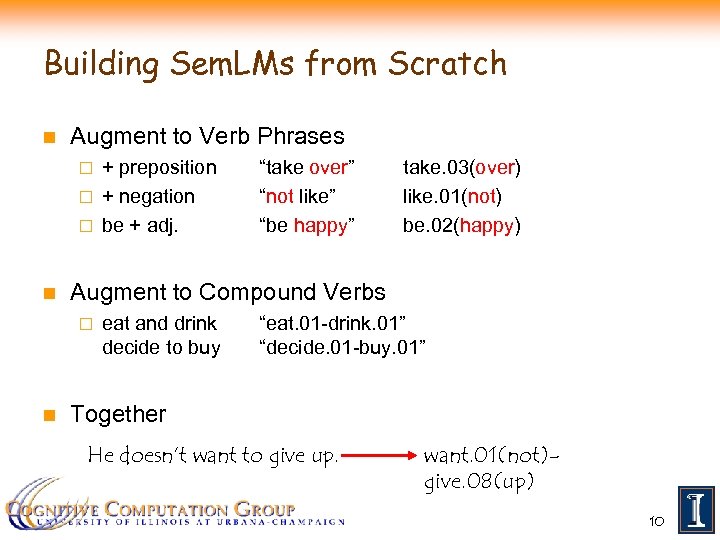

Building SemLMs from Scratch

- Augment to Verb Phrases
  - + preposition: “take over” → take.03(over)
  - + negation: “not like” → like.01(not)
  - be + adj.: “be happy” → be.02(happy)
- Augment to Compound Verbs
  - eat and drink → “eat.01-drink.01”
  - decide to buy → “decide.01-buy.01”
- Together
  - He doesn’t want to give up. → want.01(not)give.08(up)
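
A small sketch of how these unit strings could be assembled, assuming the predicate senses come from an upstream SRL step. Both helper names are hypothetical. The compound-verb examples on the slide are joined with a hyphen while the combined example is written without one, so the separator is left as a parameter rather than guessed.

```python
def verb_phrase_unit(predicate, modifier=None):
    """Attach a preposition, negation, or adjective to a predicate, e.g. take.03(over)."""
    return f"{predicate}({modifier})" if modifier else predicate

def compound_unit(units, sep="-"):
    """Join the units of a compound verb; sep follows the slide's hyphenated examples."""
    return sep.join(units)

print(verb_phrase_unit("take.03", "over"))
print(verb_phrase_unit("like.01", "not"))
print(verb_phrase_unit("be.02", "happy"))
print(compound_unit(["eat.01", "drink.01"]))
print(compound_unit(["decide.01", "buy.01"]))

# The slide's combined example, reproduced with an empty separator.
together = compound_unit(
    [verb_phrase_unit("want.01", "not"), verb_phrase_unit("give.08", "up")], sep=""
)
print(together)
```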

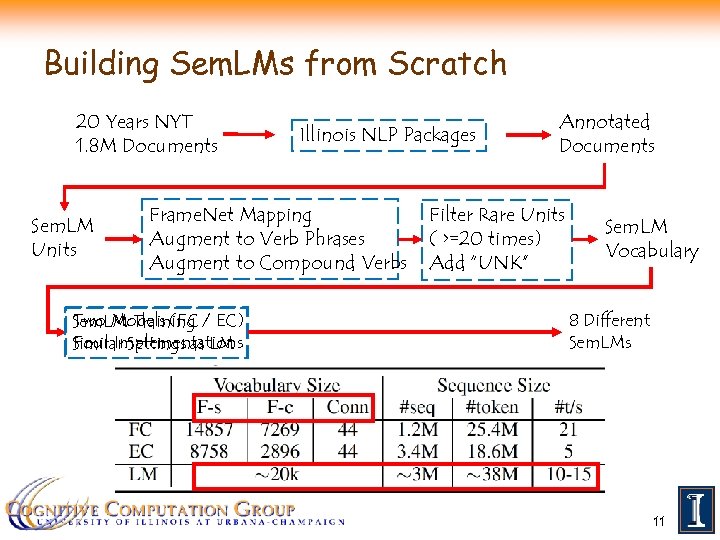

Building SemLMs from Scratch

- 20 Years NYT, 1.8M Documents
- Illinois NLP Packages
- Annotated Documents
- SemLM Units: FrameNet Mapping, Augment to Verb Phrases, Augment to Compound Verbs
- SemLM Vocabulary: Filter Rare Units (>=20 times), Add “UNK”
- SemLM Training: Two Models (FC / EC), Four Implementations, Similar Settings as LM
- 8 Different SemLMs

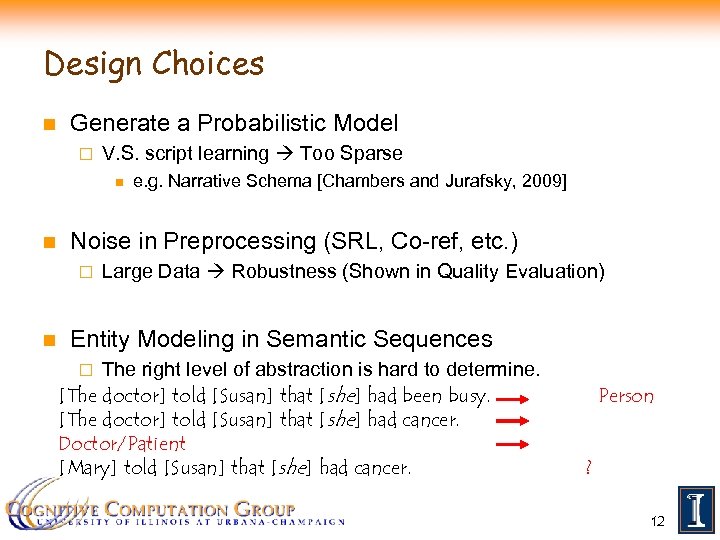

Design Choices

- Generate a Probabilistic Model
  - vs. script learning, e.g. Narrative Schema [Chambers and Jurafsky, 2009]
  - Too Sparse
- Noise in Preprocessing (SRL, Co-ref, etc.)
  - Large Data
  - Robustness (Shown in Quality Evaluation)
- Entity Modeling in Semantic Sequences
  - The right level of abstraction is hard to determine.
  - [The doctor] told [Susan] that [she] had been busy.
  - [The doctor] told [Susan] that [she] had cancer. (Doctor/Patient)
  - [Mary] told [Susan] that [she] had cancer. (Person?)

Quality of SemLMs

- Two Standard Tests
  - Perplexity Test
  - Narrative Cloze Test
- Test Corpus
  - NYT Hold-out Data (10% of NYT corpus) + Automatic Annotation
  - Gold PropBank Data with Frame Chains + Gold Annotation
  - Gold OntoNotes Data with Coref Chains + Gold Annotation

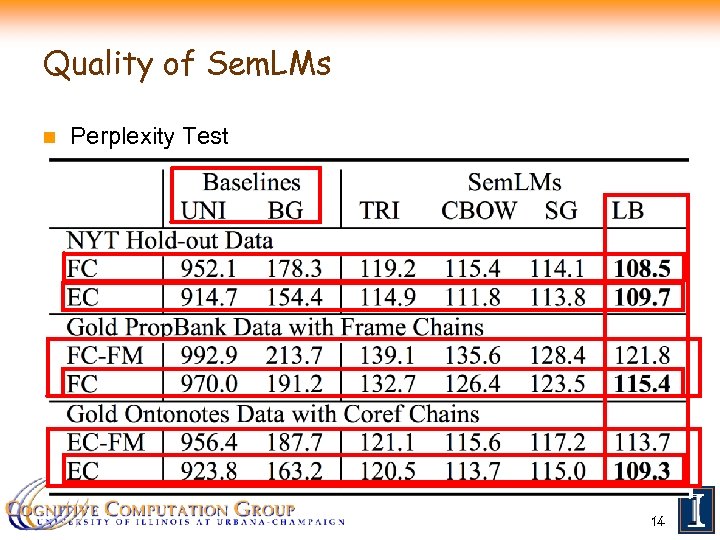

Quality of SemLMs

- Perplexity Test
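
For reference, perplexity is the exponential of the average negative log-probability a model assigns to each unit of a held-out sequence; lower is better. The sketch below uses that standard definition with made-up probabilities; it does not reproduce the slide's results.

```python
import math

def perplexity(unit_probs):
    """exp of the mean negative log-probability over the units of a sequence."""
    n = len(unit_probs)
    return math.exp(-sum(math.log(p) for p in unit_probs) / n)

# A model that assigns 0.25 to every unit is as uncertain as a uniform
# choice among four units, so its perplexity is 4.
ppl = perplexity([0.25, 0.25, 0.25, 0.25])
print(ppl)
```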

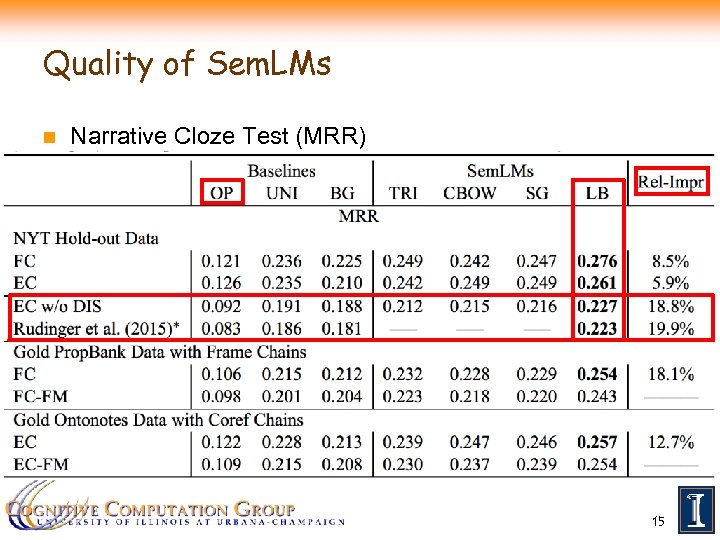

Quality of SemLMs

- Narrative Cloze Test (MRR)
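
In a narrative cloze test one unit is removed from a sequence and the model ranks candidate units for the gap; Mean Reciprocal Rank (MRR) averages 1/rank of the removed unit over all test cases. The sketch below is a generic MRR computation with hypothetical function names. The two candidate scores are the FC-LB probabilities from the Example Output slide, and the second test case, where the gold unit is rescue.01, is invented to show a rank-2 outcome.

```python
def reciprocal_rank(scores, gold):
    """1 / rank of the gold unit when candidates are sorted by descending score."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    return 1.0 / (ranked.index(gold) + 1)

def mean_reciprocal_rank(examples):
    """Average reciprocal rank over (candidate_scores, gold_unit) pairs."""
    return sum(reciprocal_rank(s, g) for s, g in examples) / len(examples)

scores = {"convict.01": 8.2e-3, "rescue.01": 6.7e-5}
examples = [
    (scores, "convict.01"),  # gold ranked first, reciprocal rank 1
    (scores, "rescue.01"),   # gold ranked second, reciprocal rank 1/2
]
mrr = mean_reciprocal_rank(examples)
print(mrr)
```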

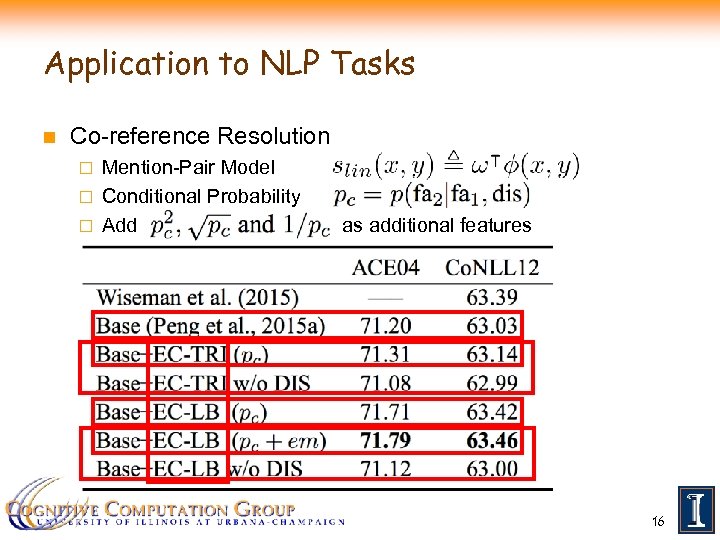

Application to NLP Tasks

- Co-reference Resolution
  - Mention-Pair Model
  - Conditional Probability
  - Add as additional features
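
A rough sketch of how a SemLM conditional probability could serve as a mention-pair feature. The lookup table stands in for a trained EC model and holds two EC-LB values from the Example Output slide; the function name, the 0.0 fallback for unseen contexts, and the final `max` are assumptions for illustration. In a real mention-pair model the value would be one feature among many fed to a classifier.

```python
# Stand-in for a trained EC SemLM: (context unit, connective, target unit) -> probability.
EC_PROBS = {
    ("rob.01-obj", "but", "arrest.01-obj"): 2.1e-3,
    ("rob.01-sub", "but", "arrest.01-obj"): 1.7e-4,
}

def semlm_feature(antecedent_unit, connective, mention_unit, table=EC_PROBS):
    """P(mention_unit | antecedent_unit, connective); 0.0 for unseen contexts (assumed)."""
    return table.get((antecedent_unit, connective, mention_unit), 0.0)

# "[Kevin] was robbed by [Robert], but the police mistakenly arrested [him]."
candidates = {"Kevin": "rob.01-obj", "Robert": "rob.01-sub"}
features = {
    name: semlm_feature(unit, "but", "arrest.01-obj")
    for name, unit in candidates.items()
}
best = max(features, key=features.get)
print(features, best)
```

With these values the feature favours Kevin as the antecedent of "him", since being the object of rob.01 makes being the object of arrest.01 after "but" more likely than being its subject does.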

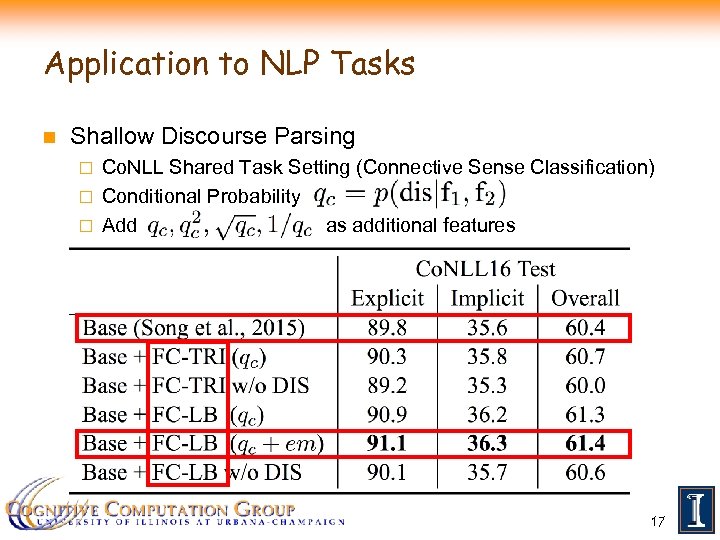

Application to NLP Tasks

- Shallow Discourse Parsing
  - CoNLL Shared Task Setting (Connective Sense Classification)
  - Conditional Probability
  - Add as additional features

Conclusion

- How do we model the sequential nature of NL at a semantic level?
  - SemLMs: Discourse Driven
  - Two Models - Four Implementations
  - High Quality
- How do we use the modelling to better support NLU tasks?
  - Two Tasks, which utilize two models separately
  - SemLM Conditional Probability as Features
- Trained Embeddings, SemLMs Available: Email me at hpeng7@illinois.edu

Thank You!

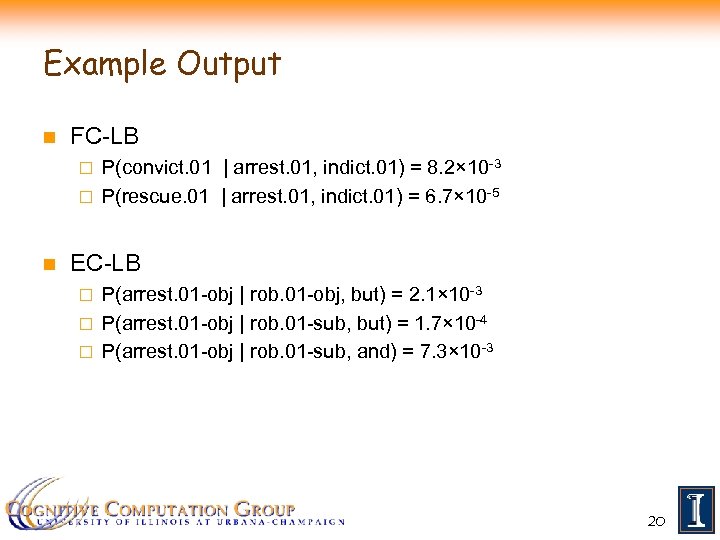

Example Output

- FC-LB
  - P(convict.01 | arrest.01, indict.01) = 8.2 × 10^-3
  - P(rescue.01 | arrest.01, indict.01) = 6.7 × 10^-5
- EC-LB
  - P(arrest.01-obj | rob.01-obj, but) = 2.1 × 10^-3
  - P(arrest.01-obj | rob.01-sub, but) = 1.7 × 10^-4
  - P(arrest.01-obj | rob.01-sub, and) = 7.3 × 10^-3
