- Number of slides: 109

Trust
Course CS 6381 -- Grid and Peer-to-Peer Computing
Gerardo Padilla

Sources
• Part 1: A Survey Study on Trust Management in P2P Systems
• Part 2: Trust-χ: A Peer-to-Peer Framework for Trust Establishment

Outline
• What is Trust?
• What is Trust Management?
• How to measure Trust?
  – Example
• Reputation-based Trust Management Systems
  – DMRep
  – EigenRep
  – P2PRep
• Frameworks for Trust Establishment
  – Trust-χ

What is Trust?
• Kini & Choobineh: trust is "a belief that is influenced by the individual’s opinion about certain critical system features".
• Gambetta: "…trust (or, symmetrically, distrust) is a particular level of the subjective probability with which an agent will perform a particular action, both before [the trustor] can monitor such action (or independently of his capacity ever to be able to monitor it)…"
• The Trust-EC project (http://dsa-isis.jrc.it/TrustEC/): trust is "the property of a business relationship, such that reliance can be placed on the business partners and the business transactions developed with them".
• Grandison and Sloman: trust is "the firm belief in the competence of an entity to act dependably, securely and reliably within a specified context".

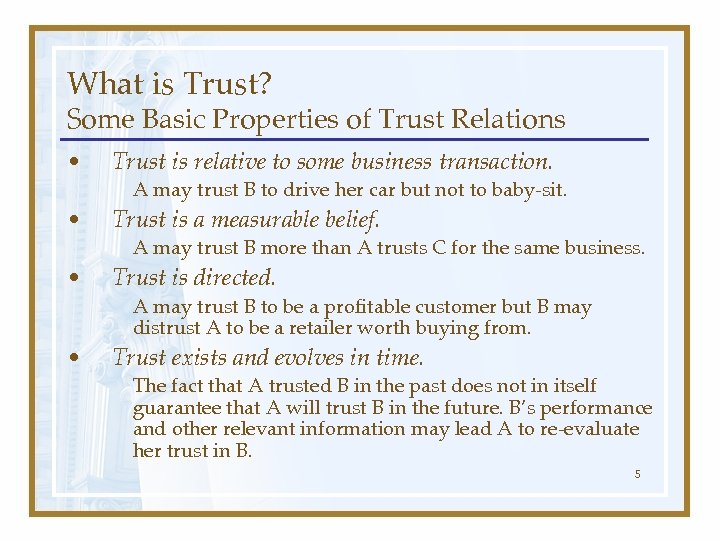

What is Trust? Some Basic Properties of Trust Relations
• Trust is relative to some business transaction: A may trust B to drive her car but not to baby-sit.
• Trust is a measurable belief: A may trust B more than A trusts C for the same business.
• Trust is directed: A may trust B to be a profitable customer, but B may distrust A to be a retailer worth buying from.
• Trust exists and evolves in time: the fact that A trusted B in the past does not in itself guarantee that A will trust B in the future. B’s performance and other relevant information may lead A to re-evaluate her trust in B.

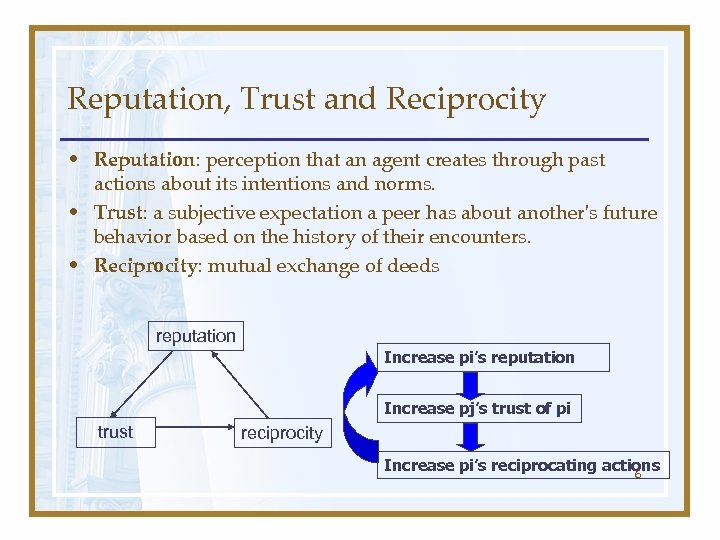

Reputation, Trust and Reciprocity
• Reputation: the perception that an agent creates through past actions about its intentions and norms.
• Trust: a subjective expectation a peer has about another’s future behavior, based on the history of their encounters.
• Reciprocity: mutual exchange of deeds.
(Diagram: a reinforcing cycle reputation → trust → reciprocity → reputation, with steps labeled "Increase pi’s reputation", "Increase pj’s trust of pi", and "Increase pi’s reciprocating actions".)

What is Trust Management?
• “a unified approach to specifying and interpreting security policies, credentials, relationships [which] allows direct authorization of security-critical actions” – Blaze, Feigenbaum & Lacy
• Trust management is the capture, evaluation and enforcement of trusting intentions.
• Other areas: distributed agents, artificial intelligence, social sciences.

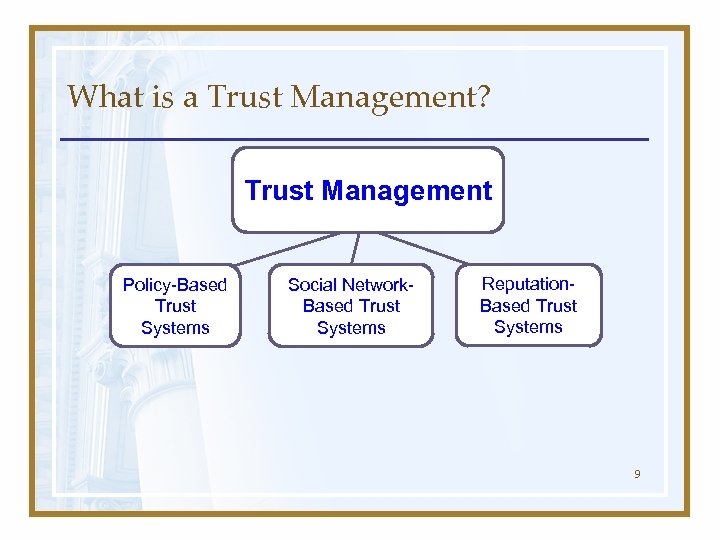

What is Trust Management?
Trust management systems fall into three families:
• Policy-Based Trust Systems
• Social Network-Based Trust Systems
• Reputation-Based Trust Systems

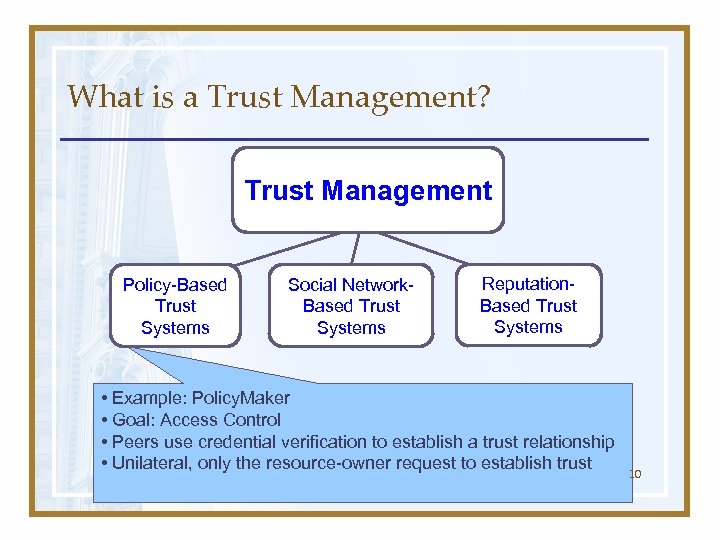

What is Trust Management? Policy-Based Trust Systems
• Example: PolicyMaker
• Goal: access control
• Peers use credential verification to establish a trust relationship.
• Unilateral: only the resource owner requests that trust be established.

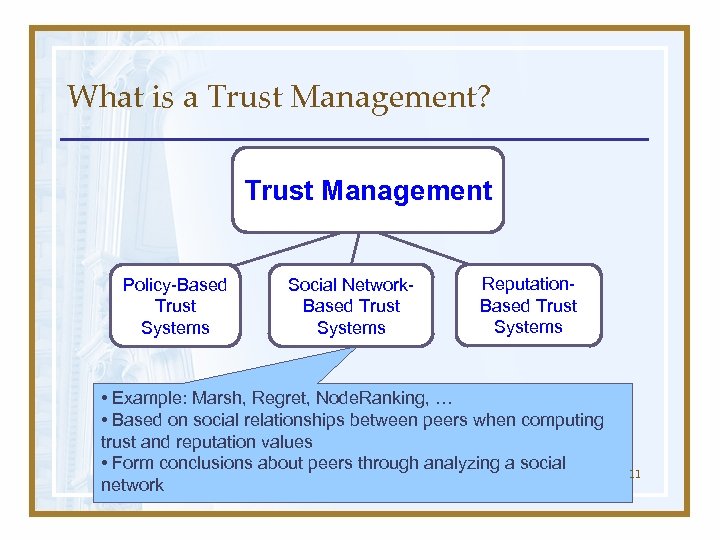

What is Trust Management? Social Network-Based Trust Systems
• Examples: Marsh, Regret, NodeRanking, …
• Use the social relationships between peers when computing trust and reputation values.
• Form conclusions about peers by analyzing a social network.

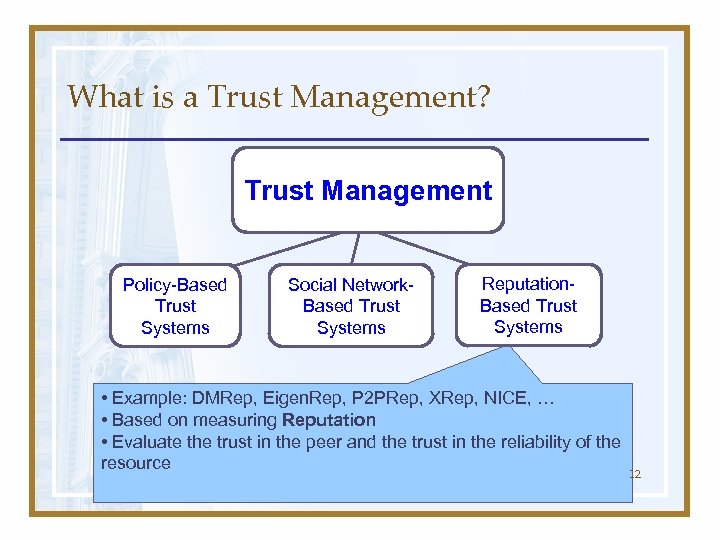

What is Trust Management? Reputation-Based Trust Systems
• Examples: DMRep, EigenRep, P2PRep, XRep, NICE, …
• Based on measuring reputation.
• Evaluate both the trust in the peer and the trust in the reliability of the resource.

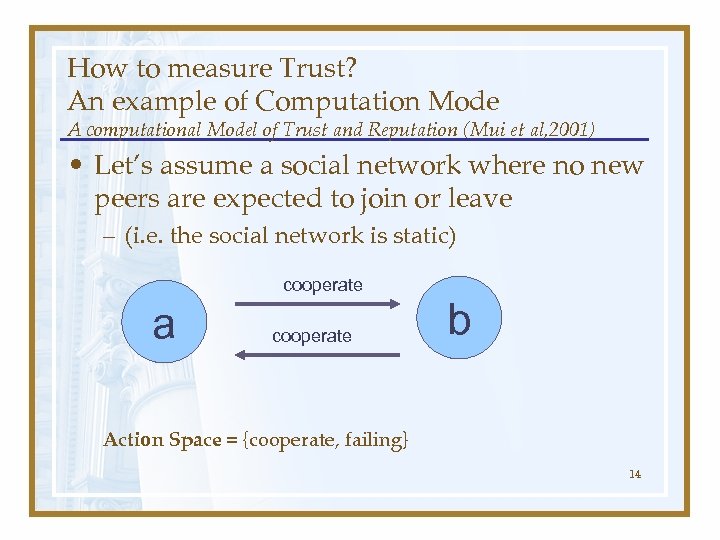

How to measure Trust? An Example of a Computational Model
A Computational Model of Trust and Reputation (Mui et al., 2001)
• Let’s assume a social network where no new peers are expected to join or leave (i.e., the social network is static).
• Action space = {cooperate, failing}
(Diagram: peers a and b, each choosing to cooperate.)

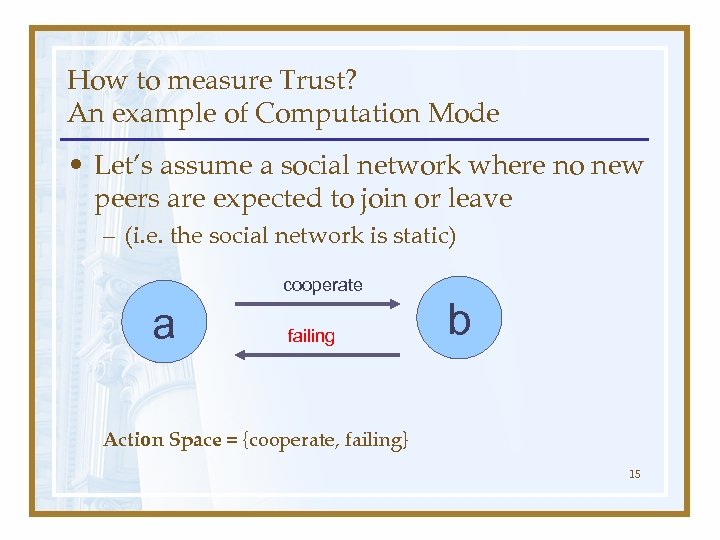

How to measure Trust? An Example of a Computational Model
• Let’s assume a social network where no new peers are expected to join or leave (i.e., the social network is static).
• Action space = {cooperate, failing}
(Diagram: peer a cooperates, while peer b fails.)

How to measure Trust? An Example of a Computational Model
• Reputation: the perception that a peer creates through past actions about its intentions and norms.
  – Let θji(c) represent pi’s reputation in a social network of concern to pj, for a context c.
  – This value measures the likelihood that pi reciprocates pj’s actions.

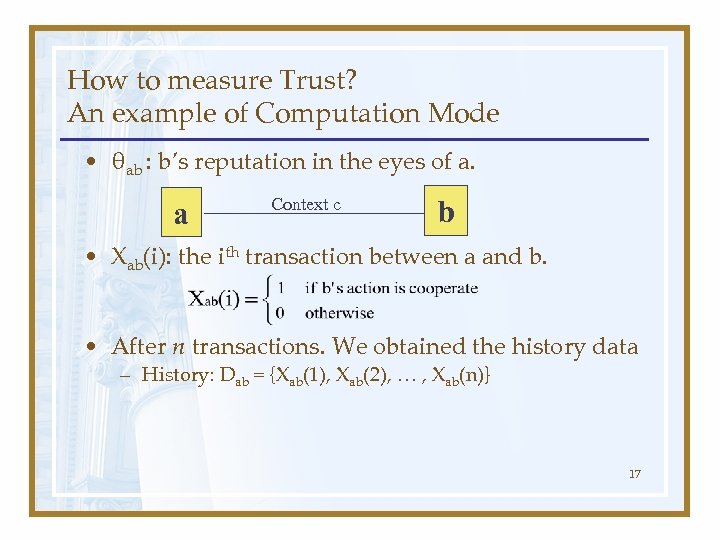

How to measure Trust? An Example of a Computational Model
• θab: b’s reputation in the eyes of a, in context c.
• Xab(i): the ith transaction between a and b.
• After n transactions, we obtain the history data:
  – History: Dab = {Xab(1), Xab(2), …, Xab(n)}

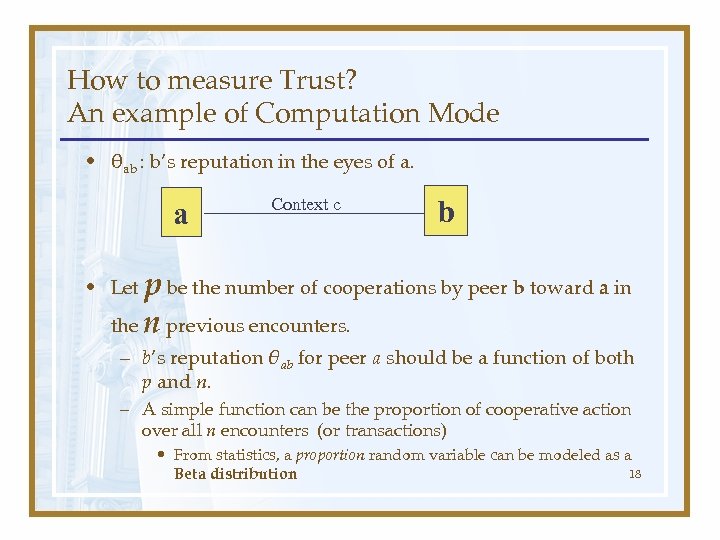

How to measure Trust? An Example of a Computational Model
• θab: b’s reputation in the eyes of a, in context c.
• Let p be the number of cooperations by peer b toward a in the n previous encounters.
  – b’s reputation θab for peer a should be a function of both p and n.
  – A simple function is the proportion of cooperative actions over all n encounters (or transactions).
• From statistics, a proportion random variable can be modeled with a Beta distribution.

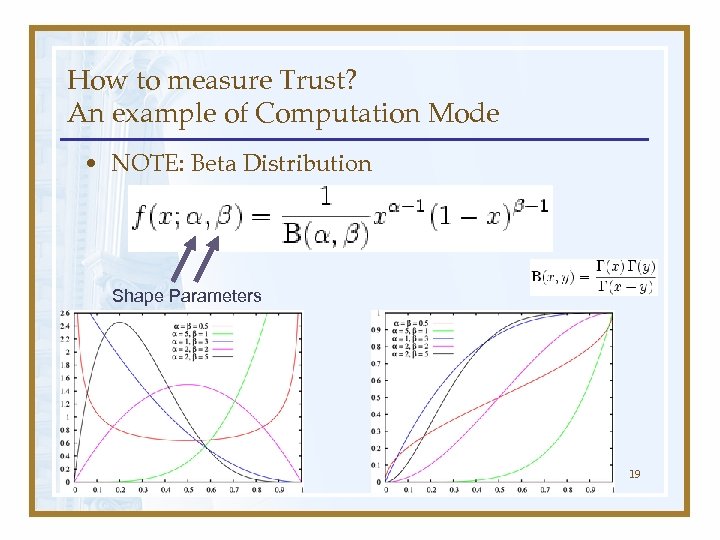

How to measure Trust? An Example of a Computational Model
• Note: Beta distribution shape parameters

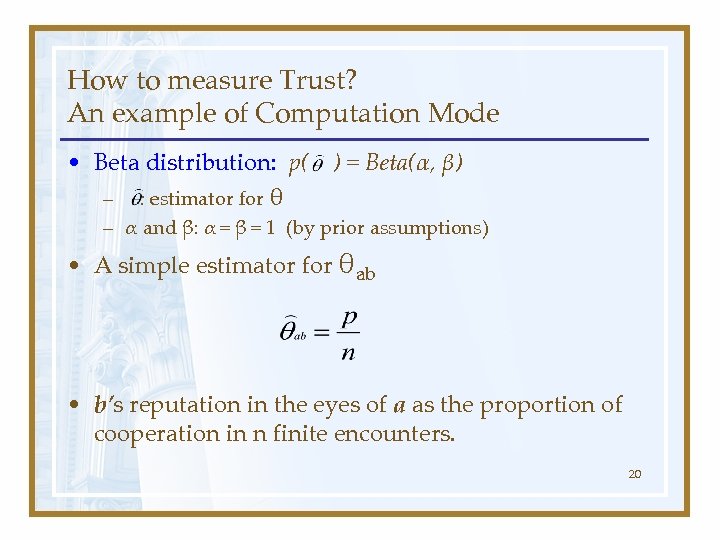

How to measure Trust? An Example of a Computational Model
• Beta distribution: p(θ̂) = Beta(α, β)
  – θ̂: estimator for θ
  – α and β: α = β = 1 (by prior assumption)
• A simple estimator for θab: θ̂ab = p/n
  – i.e., b’s reputation in the eyes of a is the proportion of cooperation in n finite encounters.
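For reference, the standard Beta density behind this prior; with the choice α = β = 1 it is the uniform density on [0, 1], i.e., no initial preference for cooperation or failing:

$$
\mathrm{Beta}(\theta;\alpha,\beta) = \frac{\Gamma(\alpha+\beta)}{\Gamma(\alpha)\,\Gamma(\beta)}\,\theta^{\alpha-1}(1-\theta)^{\beta-1}, \qquad 0 \le \theta \le 1
$$

$$
\alpha = \beta = 1:\quad \mathrm{Beta}(\theta;1,1) = \frac{\Gamma(2)}{\Gamma(1)\,\Gamma(1)}\,\theta^{0}(1-\theta)^{0} = 1
$$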

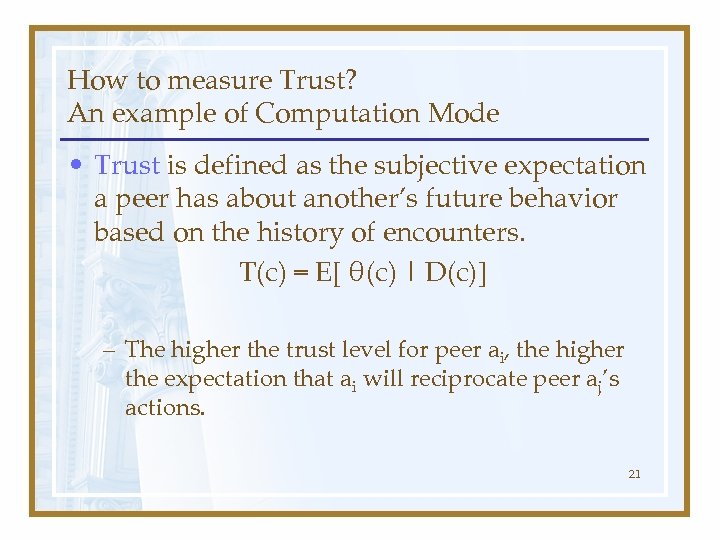

How to measure Trust? An Example of a Computational Model
• Trust is defined as the subjective expectation a peer has about another’s future behavior, based on the history of encounters:
  T(c) = E[θ(c) | D(c)]
  – The higher the trust level for peer ai, the higher the expectation that ai will reciprocate peer aj’s actions.

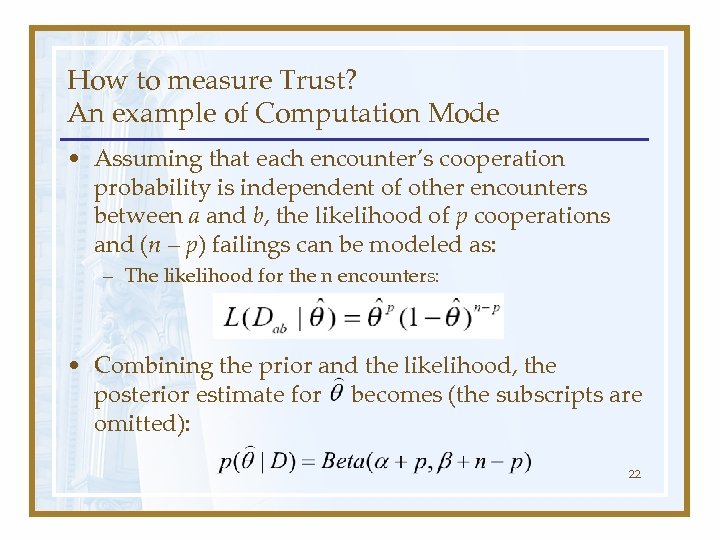

How to measure Trust? An Example of a Computational Model
• Assuming that each encounter’s cooperation probability is independent of other encounters between a and b, the likelihood of p cooperations and (n – p) failings over the n encounters is binomial in θ.
• Combining the prior and the likelihood gives the posterior estimate for θ (the subscripts are omitted).
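A worked version of this update, using standard Beta-binomial conjugacy (a reconstruction, with p cooperations out of n encounters in the ordered history D and the Beta(α, β) prior introduced earlier):

$$
L(D \mid \theta) = \theta^{p}\,(1-\theta)^{\,n-p}
$$

$$
p(\theta \mid D) \;\propto\; \theta^{\alpha-1}(1-\theta)^{\beta-1}\cdot\theta^{p}(1-\theta)^{n-p} \;=\; \theta^{\alpha+p-1}(1-\theta)^{\beta+n-p-1}
$$

$$
p(\theta \mid D) = \mathrm{Beta}(\alpha + p,\; \beta + n - p), \qquad E[\theta \mid D] = \frac{\alpha + p}{\alpha + \beta + n} \;\overset{\alpha=\beta=1}{=}\; \frac{p + 1}{n + 2}
$$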

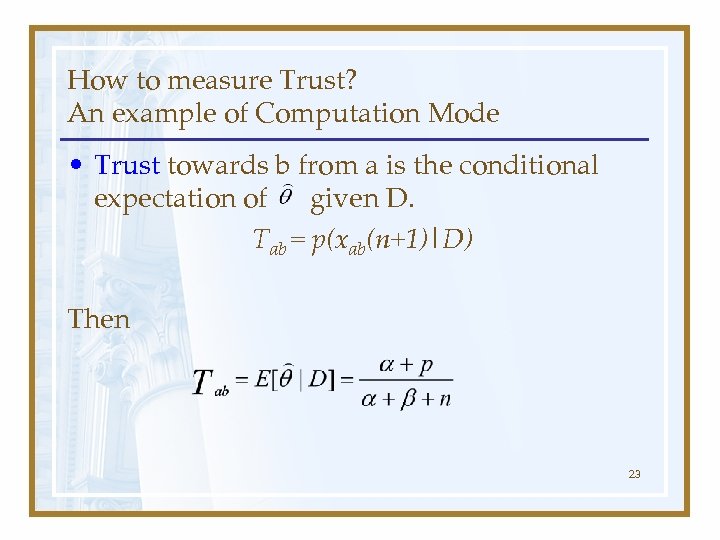

How to measure Trust? An Example of a Computational Model
• The trust of a towards b is the conditional expectation of θ given D:
  Tab = p(xab(n+1) | D) = E[θ | D]
  Then, with the prior α = β = 1, Tab = (p + 1)/(n + 2).
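A minimal Python sketch of both estimators, assuming the history Dab is stored as a list of booleans (True = b cooperated with a in that encounter); the function names are hypothetical. `Fraction` keeps the results exact, so the (p + 1)/(n + 2) form is easy to check:

```python
from fractions import Fraction


def proportion_estimate(history):
    """Simple estimator theta_hat = p / n; undefined before any encounter."""
    n = len(history)
    if n == 0:
        raise ValueError("no encounters yet")
    p = sum(1 for cooperated in history if cooperated)
    return Fraction(p, n)


def beta_trust(history, alpha=1, beta=1):
    """Posterior-mean trust T_ab = E[theta | D] under an integer Beta(alpha, beta) prior."""
    n = len(history)
    p = sum(1 for cooperated in history if cooperated)  # number of cooperations
    return Fraction(p + alpha, n + alpha + beta)


# Hypothetical history D_ab: b cooperated in 3 of 4 encounters with a.
history = [True, True, False, True]
print(proportion_estimate(history))  # 3/4
print(beta_trust(history))           # 2/3
print(beta_trust([]))                # 1/2: no evidence yet, the uniform prior's mean
```

Unlike p/n, the Beta-based trust value is defined before any encounter and moves away from 1/2 only as evidence accumulates.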

Reputation-based Trust Management Systems: Introduction
• Examples of completely centralized mechanisms for storing and exploring reputation data:
  – Amazon.com
    • Visitors usually look for customer reviews before deciding to buy new books.
  – eBay
    • Participants in eBay’s auctions can rate each other after each transaction.

Reputation-based Trust Management Systems: P2P Properties
• No central coordination
• No central database
• No peer has a global view of the system
• Global behavior emerges from local interactions
• Peers are autonomous
• Peers and connections are unreliable

Reputation-based Trust Management Systems: Design Considerations
• The system should be self-policing.
  – The shared ethics of the user population are defined and enforced by the peers themselves, not by some central authority.
• The system should maintain anonymity.
  – A peer’s reputation should be associated with an opaque identifier rather than with an externally associated identity.
• The system should not assign any profit to newcomers.
• The system should have minimal overhead in terms of computation, infrastructure, storage, and message complexity.
• The system should be robust to malicious collectives of peers who know one another and attempt to collectively subvert the system.

Reputation-based Trust Management Systems, Design Considerations: DMRep
Managing Trust in a P2P Information System (Aberer, Despotovic, 2001)
• P2P facts:
  – No central coordination or database (e.g., unlike eBay)
  – No peer has a global view
  – Peers are autonomous and unreliable
• Trust is important in digital communities, but information is dispersed and sources are not unconditionally trustworthy.
• Solution: reputation as decentralized storage of replicated and redundant transaction history.
  – Calculate a binary trust metric based on the history of complaints.

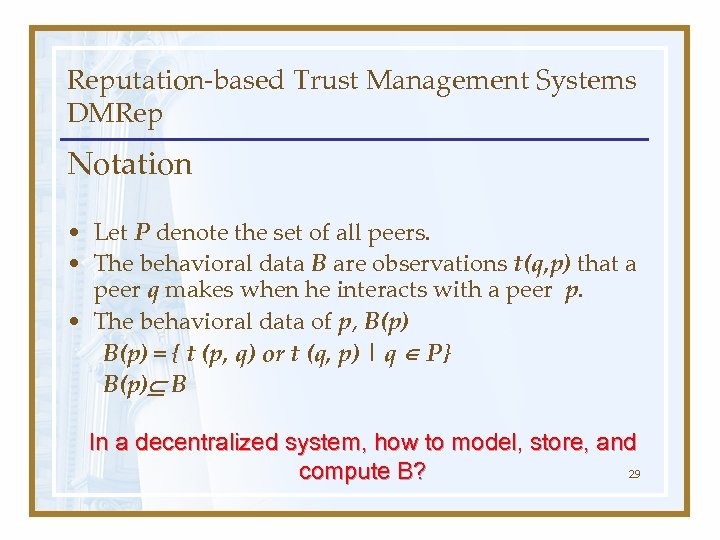

Reputation-based Trust Management Systems: DMRep Notation
• Let P denote the set of all peers.
• The behavioral data B are observations t(q, p) that a peer q makes when it interacts with a peer p.
• The behavioral data of p: B(p) = { t(p, q) or t(q, p) | q ∈ P }, with B(p) ⊆ B.
• In a decentralized system, how do we model, store, and compute B?

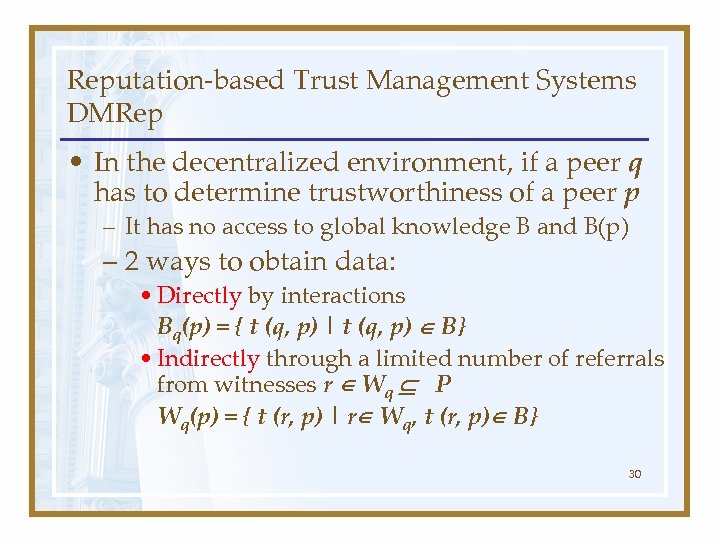

Reputation-based Trust Management Systems: DMRep
• In a decentralized environment, if a peer q has to determine the trustworthiness of a peer p:
  – It has no access to the global knowledge B and B(p).
  – There are two ways to obtain data:
    • Directly, through interactions: Bq(p) = { t(q, p) | t(q, p) ∈ B }
    • Indirectly, through a limited number of referrals from witnesses r ∈ Wq ⊆ P: Wq(p) = { t(r, p) | r ∈ Wq, t(r, p) ∈ B }
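To make the two data sources concrete, here is a small Python simulation in which the global set B does exist (in a real deployment no peer holds it). `Observation`, its `outcome` field, and the helper names are hypothetical:

```python
from typing import NamedTuple


class Observation(NamedTuple):
    """One element t(observer, subject) of the behavioral data B."""
    observer: str
    subject: str
    outcome: str  # hypothetical payload, e.g. "ok" or "complaint"


def direct_data(B, q, p):
    """B_q(p): observations that q itself made about p."""
    return {t for t in B if t.observer == q and t.subject == p}


def witness_data(B, witnesses, p):
    """W_q(p): observations about p made by the witnesses r in W_q."""
    return {t for t in B if t.observer in witnesses and t.subject == p}


B = {
    Observation("q", "p", "ok"),
    Observation("r1", "p", "complaint"),
    Observation("r2", "p", "ok"),
    Observation("r3", "x", "ok"),
}
print(len(direct_data(B, "q", "p")))            # 1
print(len(witness_data(B, {"r1", "r2"}, "p")))  # 2
```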

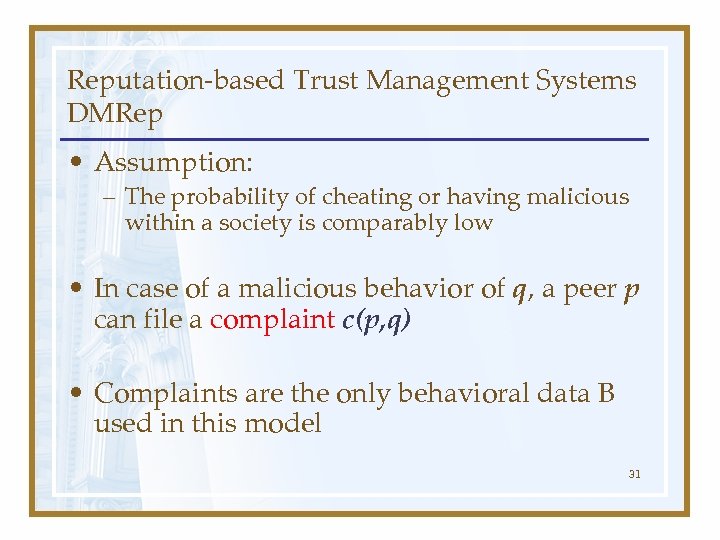

Reputation-based Trust Management Systems: DMRep
• Assumption:
  – The probability of cheating or malicious behavior within the community is comparatively low.
• In case of malicious behavior by q, a peer p can file a complaint c(p, q).
• Complaints are the only behavioral data B used in this model.

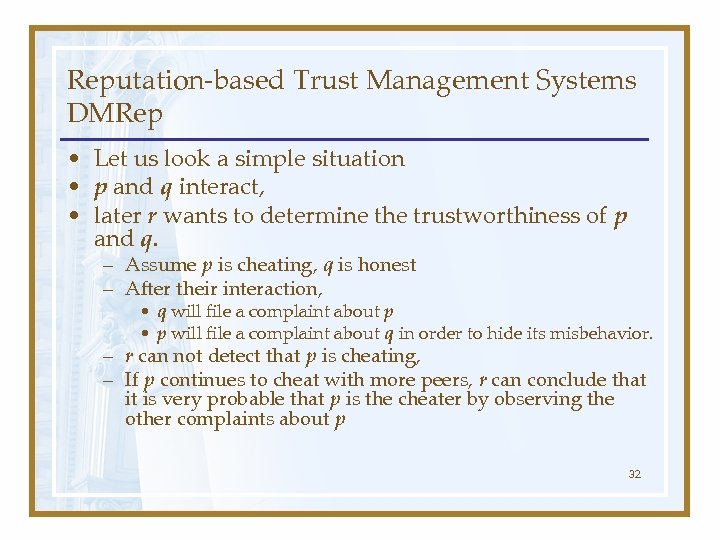

Reputation-based Trust Management Systems: DMRep
• Let us look at a simple situation:
  – p and q interact, and later r wants to determine the trustworthiness of p and q.
  – Assume p is cheating and q is honest.
  – After their interaction,
    • q will file a complaint about p;
    • p will file a complaint about q in order to hide its misbehavior.
  – From this single interaction, r cannot detect that p is cheating.
  – If p continues to cheat with more peers, r can conclude that p is very probably the cheater by observing the other complaints about p.

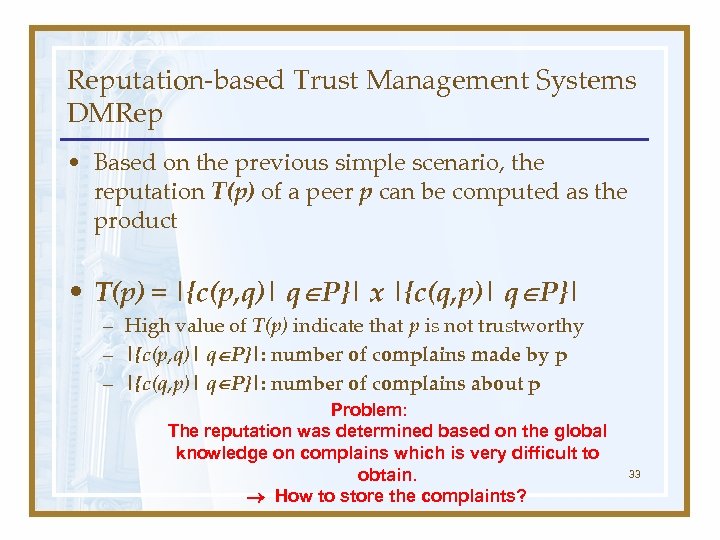

Reputation-based Trust Management Systems: DMRep
• Based on the previous simple scenario, the reputation T(p) of a peer p can be computed as the product
  T(p) = |{c(p, q) | q ∈ P}| × |{c(q, p) | q ∈ P}|
  – A high value of T(p) indicates that p is not trustworthy.
  – |{c(p, q) | q ∈ P}|: number of complaints made by p
  – |{c(q, p) | q ∈ P}|: number of complaints about p
• Problem: this reputation is determined from global knowledge of complaints, which is very difficult to obtain. How should the complaints be stored?
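A small Python sketch of T(p), assuming a complete global list of complaints, which is exactly the global knowledge the slide says is hard to obtain. Each (filer, accused) pair is treated as one complaint c(filer, accused); `complaint_reputation` is a hypothetical name. The example replays the cheating scenario with three victims:

```python
from collections import Counter


def complaint_reputation(complaints):
    """Global-knowledge DMRep measure T(x) = (#complaints filed by x) * (#complaints about x).

    complaints: iterable of (filer, accused) pairs, one pair per complaint c(filer, accused).
    Returns {peer: T(peer)}; a higher value means less trustworthy.
    """
    complaints = list(complaints)  # iterate twice safely, even over a generator
    filed = Counter(filer for filer, _ in complaints)
    received = Counter(accused for _, accused in complaints)
    return {x: filed[x] * received[x] for x in set(filed) | set(received)}


# p cheats q, s and u; each victim complains about p, and p files a
# counter-complaint every time.
complaints = [("q", "p"), ("p", "q"), ("s", "p"), ("p", "s"), ("u", "p"), ("p", "u")]
T = complaint_reputation(complaints)
print(sorted(T.items()))  # [('p', 9), ('q', 1), ('s', 1), ('u', 1)]
```

Each honest peer ends with T = 1 × 1, while the repeat cheater’s two counts grow together, so the product separates it quickly.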

Reputation-based Trust Management Systems: DMRep
• The storage structure proposed in this approach uses P-Grid (others, such as CAN or Chord, can be used).
• P-Grid is a peer-to-peer lookup system based on a virtual distributed search tree.
• Each peer stores the data items whose key has the peer’s associated path as a prefix.
  – For the trust management application, these data items are the complaints, indexed by peer number.
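A toy Python sketch of the prefix rule only, assuming binary keys and peer paths written as bit strings. `key_bits` (a SHA-256-based stand-in for key(p)) and `responsible_peers` are hypothetical helpers, not P-Grid’s API; a real P-Grid reaches these peers by routing through per-peer routing tables rather than by scanning a global table:

```python
import hashlib


def key_bits(peer_id: str, length: int = 8) -> str:
    """Hypothetical key(p): the first `length` bits of SHA-256(peer_id) as a '0'/'1' string."""
    digest = hashlib.sha256(peer_id.encode("utf-8")).digest()
    return "".join(f"{byte:08b}" for byte in digest)[:length]


def responsible_peers(paths: dict, key: str) -> list:
    """Peers whose path is a prefix of `key`; these store the items indexed by that key."""
    return sorted(peer for peer, path in paths.items() if key.startswith(path))


# Hypothetical 2-level tree: E shares A's path, so it holds a replica of A's data.
paths = {"A": "00", "B": "01", "C": "10", "D": "11", "E": "00"}
print(responsible_peers(paths, "0010"))  # ['A', 'E']
print(responsible_peers(paths, "1101"))  # ['D']
print(responsible_peers(paths, key_bits("peer-42")))  # depends on the hash value
```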

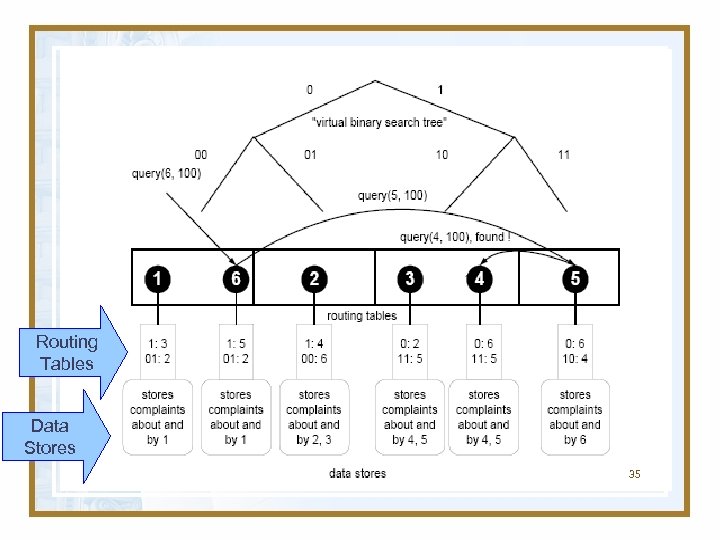

(Figure: example P-Grid, showing each peer’s routing table and data store.)

Reputation-based Trust Management Systems: DMRep
• The same data can be stored at multiple peers; these replicas improve reliability.
• As the example shows, collisions of interest may occur, where peers are responsible for storing complaints about themselves. This is not excluded, because for large peer populations such cases are very rare and multiple replicas will be available to double-check.

Reputation-based Trust Management Systems: DMRep
– Problem: the peers providing the data could themselves be malicious.
– Assume that peers are malicious only with a certain probability π ≤ πmax < 1.
  • If there are r replicas, r should satisfy, on average, πmax^r < ε, where ε is an acceptable fault tolerance.
– Solution: if we receive the same data about a specific peer from a sufficient number of replicas, no further checks are needed; otherwise, continue the search.
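A short Python helper for sizing r, assuming replicas turn malicious independently of one another (a simplification), so that πmax^r bounds the chance that all r replicas lie. The name `min_replicas` is hypothetical. A loop is used instead of a logarithm formula to avoid floating-point edge cases at exact powers:

```python
def min_replicas(pi_max: float, eps: float) -> int:
    """Smallest replica count r with pi_max ** r < eps.

    pi_max ** r is the chance that all r replicas are malicious, assuming replicas
    turn malicious independently (a simplifying assumption).
    """
    if not (0 < pi_max < 1 and 0 < eps < 1):
        raise ValueError("need 0 < pi_max < 1 and 0 < eps < 1")
    r = 1
    while pi_max ** r >= eps:  # terminates because pi_max < 1
        r += 1
    return r


print(min_replicas(0.2, 0.001))  # 5  (0.2**4 = 0.0016, 0.2**5 = 0.00032)
print(min_replicas(0.5, 0.01))   # 7  (0.5**6 = 0.0156, 0.5**7 = 0.0078)
```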

Reputation-based Trust Management Systems: DMRep
• How does it work? P-Grid has two operations for storing and retrieving information:
  – insert(p; k; v), where p is an arbitrary peer in the network, k is the key value to be searched for, and v is a data value associated with the key.
  – query(r; k) : v, where r is an arbitrary peer in the network; it returns the data values v for a corresponding query key k.

Reputation-based Trust Management Systems: DMRep
• How does it work?
  – Every peer p can file a complaint about q at any time. It stores the complaint by sending the messages insert(a1; key(p); c(p; q)) and insert(a2; key(q); c(p; q)) to arbitrary peers a1 and a2.
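A Python sketch of complaint filing against a hypothetical in-memory stand-in for P-Grid (`ToyGrid`, one shared table, no routing, no replication). It also simplifies key(x) to the peer id x. The point it shows: because every complaint is stored under both the filer’s and the accused peer’s key, one lookup of key(p) returns both counts needed for T(p):

```python
from collections import defaultdict


class ToyGrid:
    """Hypothetical in-memory stand-in for P-Grid: a single shared table, no routing.

    insert/query mirror the slide's insert(p; k; v) and query(r; k) : v. The
    entry peer is accepted but ignored; in P-Grid it is where routing starts.
    """

    def __init__(self):
        self.store = defaultdict(list)

    def insert(self, peer, key, value):
        self.store[key].append(value)

    def query(self, peer, key):
        return list(self.store.get(key, []))


def file_complaint(grid, p, q, a1="a1", a2="a2"):
    """p complains about q: store c(p, q) under key(p) and under key(q).

    Simplification: key(x) is just the peer id x here.
    """
    complaint = ("c", p, q)
    grid.insert(a1, p, complaint)  # indexed by the filer
    grid.insert(a2, q, complaint)  # indexed by the accused


grid = ToyGrid()
file_complaint(grid, "q", "p")  # q complains about p
file_complaint(grid, "p", "q")  # p retaliates
file_complaint(grid, "s", "p")  # s also complains about p
about_p = grid.query("r", "p")  # everything stored under key(p)
filed_by_p = [c for c in about_p if c[1] == "p"]
received_by_p = [c for c in about_p if c[2] == "p"]
print(len(filed_by_p), len(received_by_p))  # 1 2
```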

Reputation-based Trust Management Systems: DMRep – Query Results
• Assume that a peer p queries for information about q (p evaluates the trustworthiness of q):
  – p submits messages query(a; key(q)) to arbitrary peers a.
  – This process is performed s times.

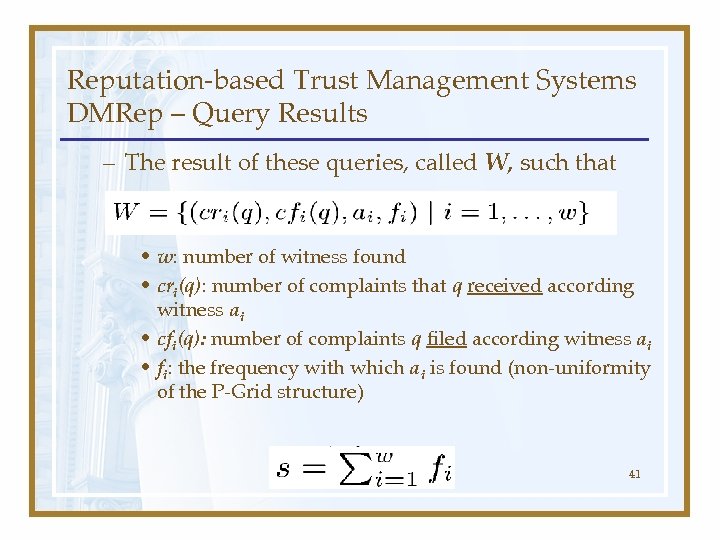

Reputation-based Trust Management Systems: DMRep – Query Results
– The result of these queries is a set W of witness reports, where:
  • w: number of witnesses found
  • cri(q): number of complaints that q received, according to witness ai
  • cfi(q): number of complaints q filed, according to witness ai
  • fi: the frequency with which ai is found (reflecting the non-uniformity of the P-Grid structure)
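A Python simulation of the s queries, assuming a hypothetical model in which each witness ai holding data for key(q) answers a query with probability proportional to a fixed weight (standing in for P-Grid’s non-uniformity) and reports its local counts cri(q) and cfi(q). `collect_witness_reports` and the weights are illustrative, not part of DMRep:

```python
import random
from collections import Counter


def collect_witness_reports(replicas, s, rng):
    """Query s times for key(q) and build W = [(a_i, cr_i(q), cf_i(q), f_i), ...].

    replicas: {witness_id: (cr, cf, weight)}, a hypothetical model in which each
    witness reports its local counts cr_i(q) and cf_i(q) and answers a query with
    probability proportional to `weight` (standing in for P-Grid's non-uniformity).
    """
    ids = list(replicas)
    weights = [replicas[a][2] for a in ids]
    hits = Counter(rng.choices(ids, weights=weights, k=s))  # f_i: answers from a_i
    return [(a, replicas[a][0], replicas[a][1], f) for a, f in sorted(hits.items())]


rng = random.Random(0)  # fixed seed so the run is reproducible
replicas = {"a1": (4, 1, 5.0), "a2": (3, 1, 3.0), "a3": (1, 0, 1.0)}
W = collect_witness_reports(replicas, s=10, rng=rng)
w = len(W)  # number of distinct witnesses found
for a, cr, cf, f in W:
    print(a, cr, cf, f)
```

The frequencies fi always sum to s, and a rarely reached witness (like a3 here) may not appear in W at all.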

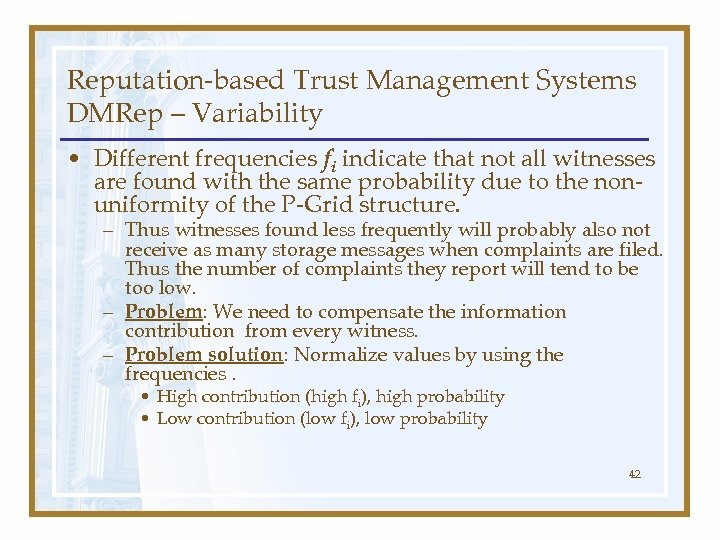

Reputation-based Trust Management Systems: DMRep – Variability
• Different frequencies fi indicate that not all witnesses are found with the same probability, due to the non-uniformity of the P-Grid structure.
  – Witnesses found less frequently will probably also receive fewer storage messages when complaints are filed, so the number of complaints they report will tend to be too low.
  – Problem: we need to compensate for the differing information contribution of each witness.
  – Solution: normalize the values using the frequencies.
    • High contribution (high fi): high probability
    • Low contribution (low fi): low probability

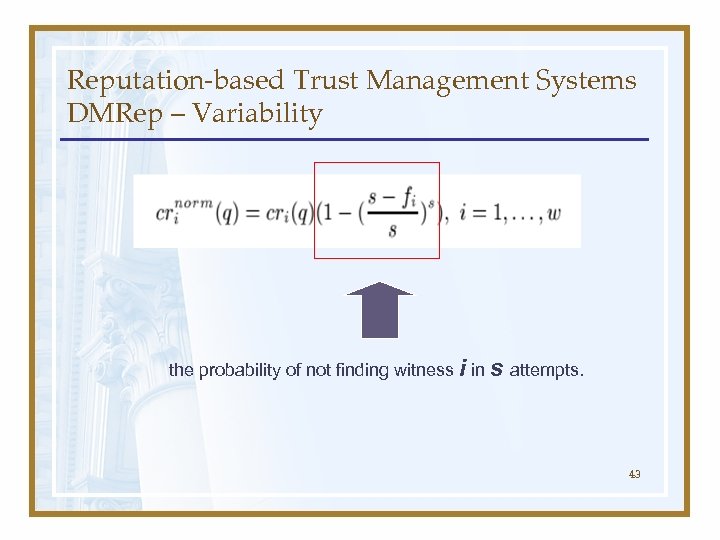

Reputation-based Trust Management Systems: DMRep – Variability
• The normalization is based on the probability of not finding witness i in s attempts.
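A Python sketch of that probability, assuming each of the s queries independently reaches witness i with probability estimated as fi/s (an assumption consistent with fi being a count out of s attempts). Missing it every time then has probability ((s − fi)/s)^s. The sketch computes only this quantity; how the normalized counts are then derived from it is not reproduced here:

```python
def miss_probability(f_i: int, s: int) -> float:
    """Estimated probability of not finding witness i in s attempts: ((s - f_i) / s) ** s.

    Assumes each attempt independently finds witness i with probability f_i / s.
    """
    if not (s > 0 and 0 <= f_i <= s):
        raise ValueError("need s > 0 and 0 <= f_i <= s")
    return ((s - f_i) / s) ** s


s = 10
for witness, f_i in [("a1", 5), ("a2", 4), ("a3", 1)]:  # hypothetical frequencies
    print(witness, round(miss_probability(f_i, s), 4))
```

Witnesses with a low fi have a much higher miss probability (about 0.35 for fi = 1 here), which is why their reports need compensation.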

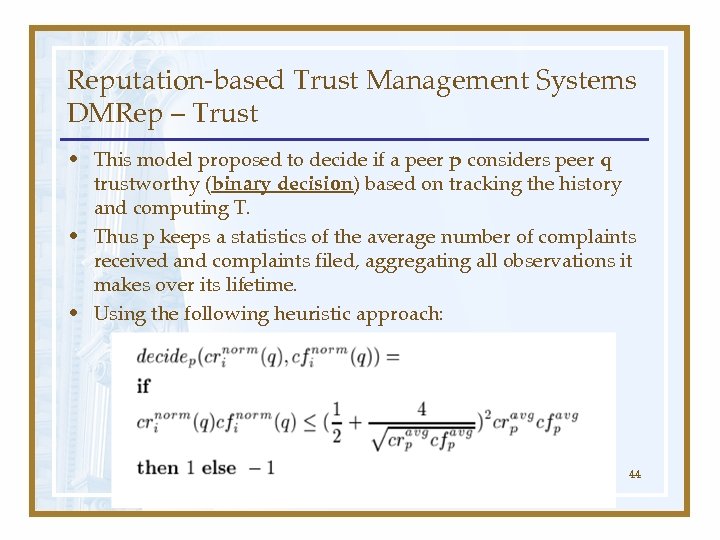

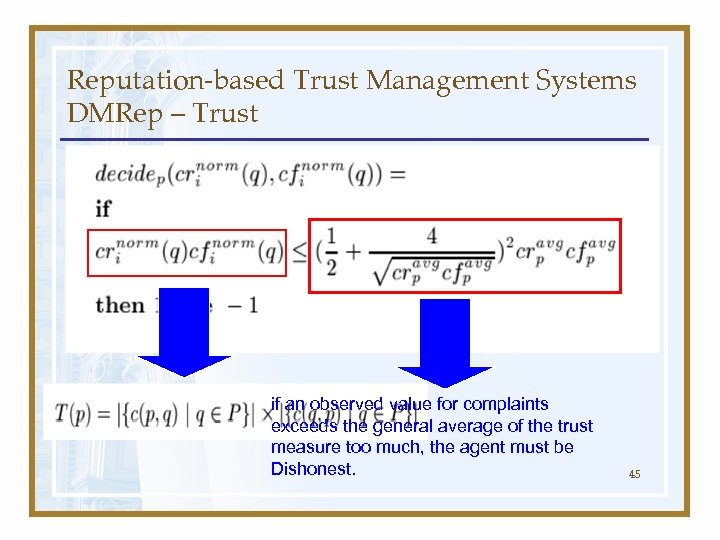

Reputation-based Trust Management Systems: DMRep – Trust
• The model decides whether a peer p considers a peer q trustworthy (a binary decision) by tracking the history and computing T.
• To do so, p keeps statistics of the average number of complaints received and complaints filed, aggregating all observations it makes over its lifetime.
• It then applies the following heuristic:

Reputation-based Trust Management Systems: DMRep – Trust
If an observed value for complaints exceeds the general average of the trust measure by too much, the agent must be dishonest.
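A Python sketch of this heuristic. Both the `tolerance` factor that stands in for "too much" and the choice to compare cr·cf against the product of the running averages are assumptions; the authors’ exact threshold is not reproduced. `ComplaintStats` and `is_trustworthy` are hypothetical names:

```python
class ComplaintStats:
    """Running averages of complaints received (cr) and filed (cf) over all peers p has checked."""

    def __init__(self):
        self.count = 0
        self.sum_cr = 0.0
        self.sum_cf = 0.0

    def observe(self, cr, cf):
        self.count += 1
        self.sum_cr += cr
        self.sum_cf += cf

    def averages(self):
        if self.count == 0:
            raise ValueError("no observations yet")
        return self.sum_cr / self.count, self.sum_cf / self.count


def is_trustworthy(cr, cf, stats, tolerance=2.0):
    """Binary decision: distrust q if cr * cf exceeds `tolerance` times the average product.

    `tolerance` is a hypothetical knob standing in for "too much"; it is not the
    authors' threshold. The average product is taken as cr_avg * cf_avg.
    """
    cr_avg, cf_avg = stats.averages()
    return cr * cf <= tolerance * cr_avg * cf_avg


stats = ComplaintStats()
for cr, cf in [(2, 1), (1, 1), (3, 2), (2, 2)]:  # hypothetical past observations
    stats.observe(cr, cf)
print(is_trustworthy(2, 2, stats))  # True: 4 <= 2.0 * 2.0 * 1.5 = 6.0
print(is_trustworthy(5, 3, stats))  # False: 15 > 6.0
```

Because the averages are p’s own lifetime statistics, the threshold adapts to the overall complaint level p observes rather than being a fixed constant.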

Reputation-based Trust Management Systems: DMRep – Discussion
• Strength
  – The method can be implemented in a fully decentralized peer-to-peer environment and scales well to a large number of participants.
• Limitations
  – Assumes an environment with low cheating rates.
  – Depends on a specific data management structure.
  – Not robust to malicious collectives of peers.

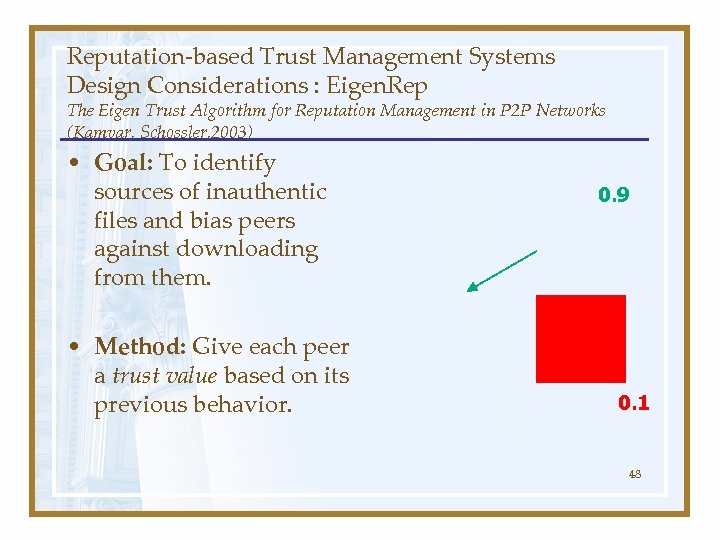

Reputation-based Trust Management Systems, Design Considerations: EigenRep
The EigenTrust Algorithm for Reputation Management in P2P Networks (Kamvar, Schlosser, 2003)
• Goal: to identify sources of inauthentic files and bias peers against downloading from them.
• Method: give each peer a trust value based on its previous behavior.
(Diagram: example peers with trust values 0.9 and 0.1.)

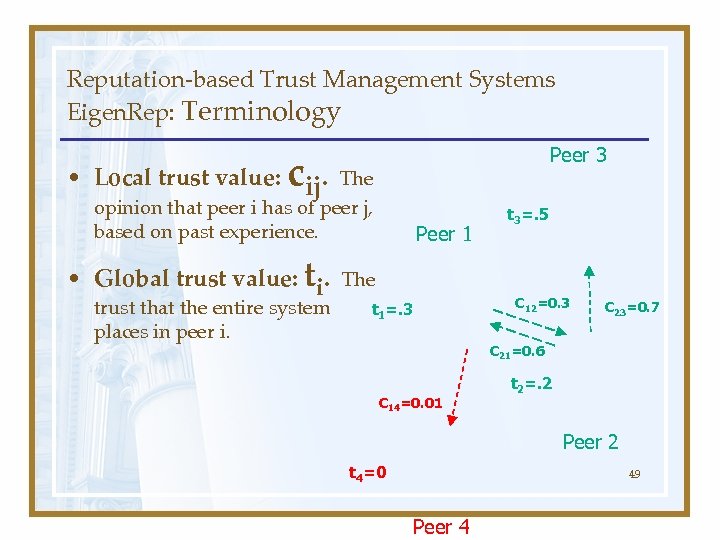

Reputation-based Trust Management Systems EigenRep: Terminology • Local trust value $c_{ij}$: the opinion that peer i has of peer j, based on past experience. • Global trust value $t_i$: the trust that the entire system places in peer i. [Figure: four peers with global trust values t1 = 0.3, t2 = 0.2, t3 = 0.5, t4 = 0 and local trust values c12 = 0.3, c21 = 0.6, c23 = 0.7, c14 = 0.01.] 49

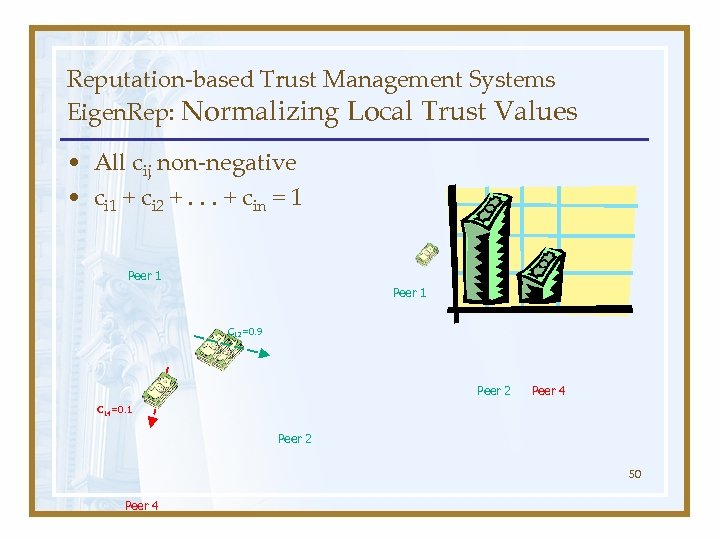

Reputation-based Trust Management Systems EigenRep: Normalizing Local Trust Values • All $c_{ij}$ are non-negative • $c_{i1} + c_{i2} + \dots + c_{in} = 1$ [Figure: Peer 1 with c12 = 0.9 toward Peer 2 and c14 = 0.1 toward Peer 4.] 50

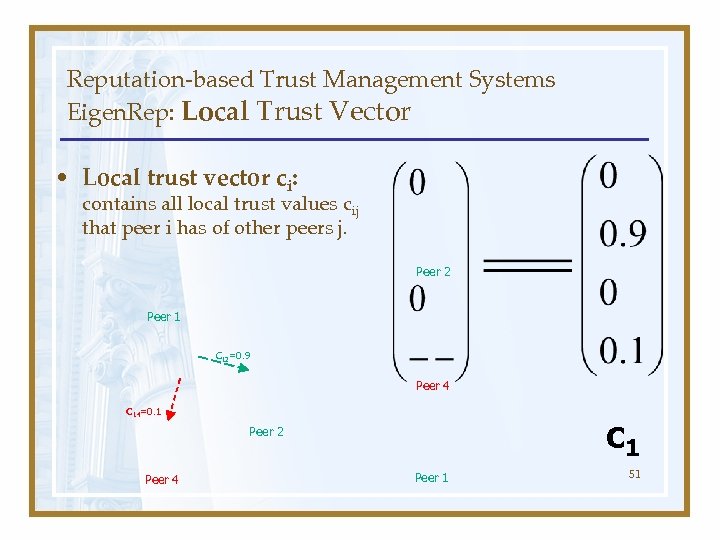

Reputation-based Trust Management Systems EigenRep: Local Trust Vector • Local trust vector $\vec{c}_i$: contains all local trust values $c_{ij}$ that peer i has of other peers j. [Figure: for Peer 1, the vector $\vec{c}_1$ holds 0.9 for Peer 2 and 0.1 for Peer 4.] 51

Reputation-based Trust Management Systems EigenRep: Local Trust Values • Model assumptions: – Each time peer i downloads an authentic file from peer j, $c_{ij}$ increases. – Each time peer i downloads an inauthentic file from peer j, $c_{ij}$ decreases. How to quantify these assumptions? 52

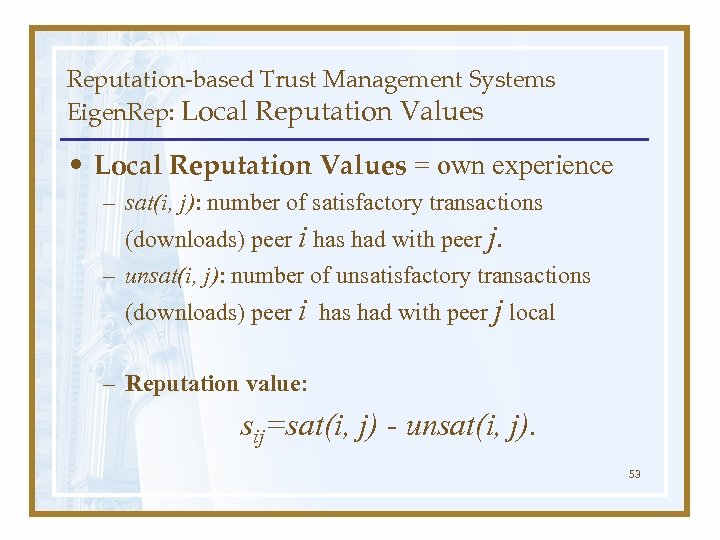

Reputation-based Trust Management Systems EigenRep: Local Reputation Values • Local reputation values = own experience – sat(i, j): number of satisfactory transactions (downloads) peer i has had with peer j. – unsat(i, j): number of unsatisfactory transactions (downloads) peer i has had with peer j. – Local reputation value: $s_{ij} = \mathrm{sat}(i, j) - \mathrm{unsat}(i, j)$. 53

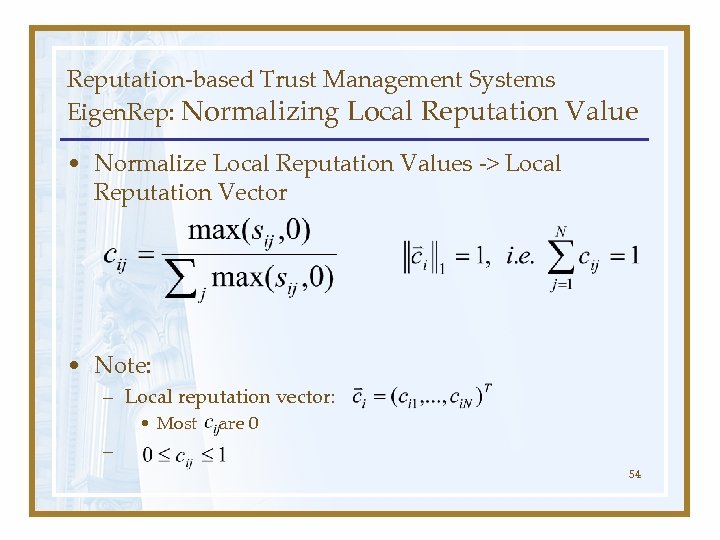

Reputation-based Trust Management Systems EigenRep: Normalizing Local Reputation Values • Normalize the local reputation values into a local reputation vector: $c_{ij} = \frac{\max(s_{ij}, 0)}{\sum_j \max(s_{ij}, 0)}$ • Note: – In a local reputation vector, most entries are 0. 54
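The two steps above, computing $s_{ij}$ from transaction counts and normalizing it into $c_{ij}$, can be sketched as follows. This is a minimal illustration; the dictionary representation and the function names are assumptions, not part of EigenTrust itself.

```python
from __future__ import annotations


def local_reputation(sat: dict[str, int], unsat: dict[str, int]) -> dict[str, int]:
    """s_ij = sat(i, j) - unsat(i, j) for every peer j that peer i has dealt with."""
    peers = sorted(set(sat) | set(unsat))
    return {j: sat.get(j, 0) - unsat.get(j, 0) for j in peers}


def normalize(s: dict[str, int]) -> dict[str, float]:
    """c_ij = max(s_ij, 0) / sum_j max(s_ij, 0).

    Returns an empty dict when peer i has no positive experience at all;
    EigenTrust handles that case with pre-trusted peers.
    """
    clipped = {j: max(v, 0) for j, v in s.items()}
    total = sum(clipped.values())
    if total == 0:
        return {}
    return {j: v / total for j, v in clipped.items()}


# Hypothetical counts for peer 1.
s1 = local_reputation(sat={"peer2": 10, "peer3": 1, "peer4": 1}, unsat={"peer3": 4})
c1 = normalize(s1)
print(c1)  # peer2 -> 10/11, peer3 -> 0.0 (negative s clipped), peer4 -> 1/11
```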

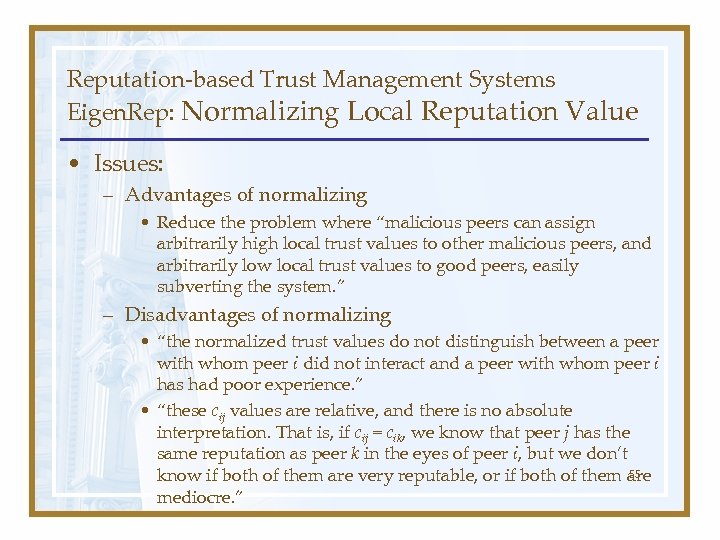

Reputation-based Trust Management Systems EigenRep: Normalizing Local Reputation Values • Issues: – Advantages of normalizing • It mitigates the problem where “malicious peers can assign arbitrarily high local trust values to other malicious peers, and arbitrarily low local trust values to good peers, easily subverting the system.” – Disadvantages of normalizing • “the normalized trust values do not distinguish between a peer with whom peer i did not interact and a peer with whom peer i has had poor experience.” • “these cij values are relative, and there is no absolute interpretation. That is, if cij = cik, we know that peer j has the same reputation as peer k in the eyes of peer i, but we don’t know if both of them are very reputable, or if both of them are mediocre.” 55
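Both disadvantages are easy to reproduce numerically. In this small sketch the raw $s_{ij}$ values are hypothetical; it only shows that very different histories can collapse to the same normalized vector.

```python
def normalize(s):
    """c_ij = max(s_ij, 0) / sum_j max(s_ij, 0)."""
    clipped = [max(v, 0) for v in s]
    total = sum(clipped)
    return [v / total for v in clipped]


# Peer i's hypothetical raw local reputations s_ij for peers j, k, m.
very_reputable = normalize([50, 50, 0])  # many good downloads from j and k
mediocre = normalize([1, 1, 0])          # one good download from each
no_contact = normalize([1, 1, 0])        # m: never interacted with i
bad_experience = normalize([1, 1, -7])   # m: repeatedly served bad files

print(very_reputable == mediocre)            # True: [0.5, 0.5, 0.0] in both cases
print(no_contact[2] == bad_experience[2])    # True: m is 0.0 either way
```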

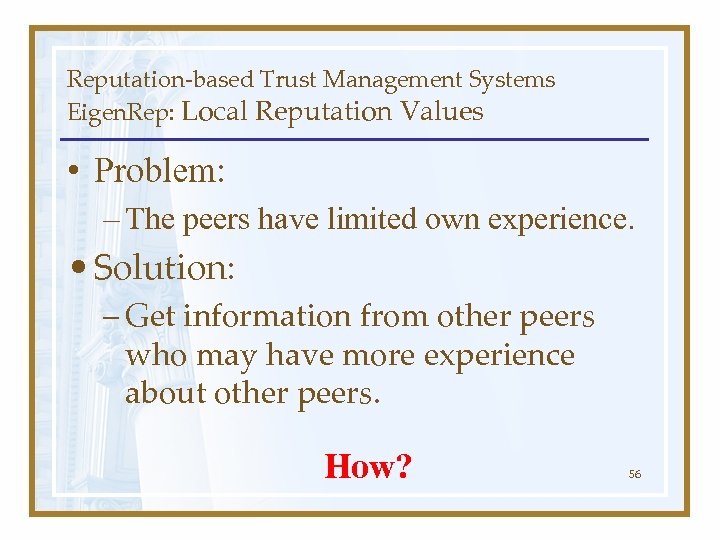

Reputation-based Trust Management Systems EigenRep: Local Reputation Values • Problem: – Peers have limited experience of their own. • Solution: – Get information from other peers who may have more experience with other peers. How? 56

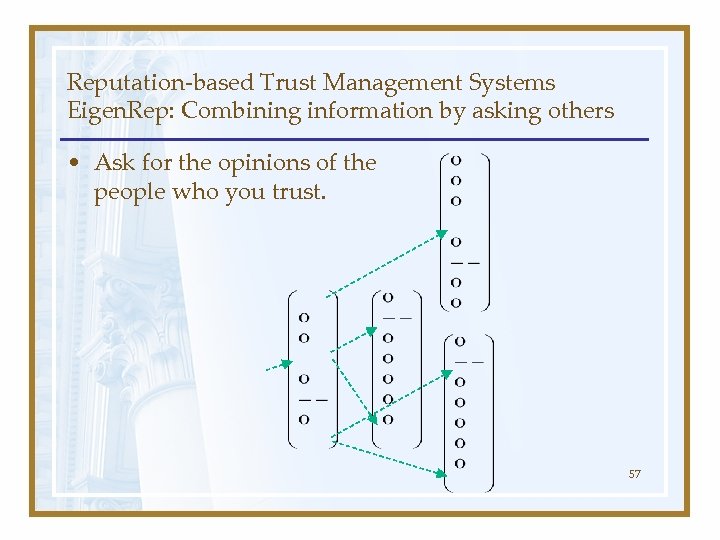

Reputation-based Trust Management Systems EigenRep: Combining Information by Asking Others • Ask for the opinions of the people you trust. 57

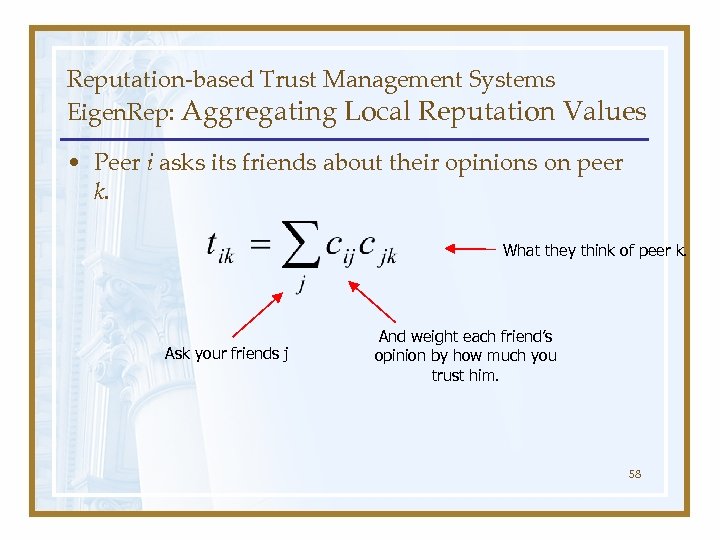

Reputation-based Trust Management Systems EigenRep: Aggregating Local Reputation Values • Peer i asks its friends j what they think of peer k, and weights each friend's opinion by how much it trusts that friend: $t_{ik} = \sum_j c_{ij} c_{jk}$ 58
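The weighted sum $t_{ik} = \sum_j c_{ij} c_{jk}$ is a one-liner over the local trust matrix. The sketch below uses a hypothetical 4-peer matrix whose rows are already normalized; indices stand in for peer names.

```python
from __future__ import annotations


def opinion_of(k: int, i: int, C: list[list[float]]) -> float:
    """t_ik = sum_j c_ij * c_jk: friends' opinions of k, weighted by i's trust in them."""
    return sum(C[i][j] * C[j][k] for j in range(len(C)))


# Hypothetical normalized local trust matrix (row i is peer i's vector c_i).
C = [
    [0.0, 0.9, 0.0, 0.1],
    [0.5, 0.0, 0.5, 0.0],
    [0.3, 0.2, 0.0, 0.5],
    [1.0, 0.0, 0.0, 0.0],
]
print(opinion_of(k=2, i=0, C=C))  # 0.45: peer 0 trusts peer 1 at 0.9, peer 1 rates peer 2 at 0.5
```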

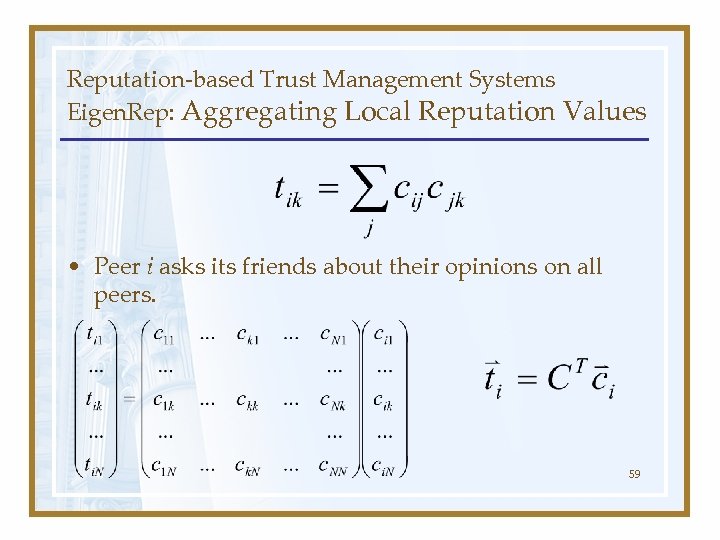

Reputation-based Trust Management Systems EigenRep: Aggregating Local Reputation Values • Peer i asks its friends about their opinions of all peers: $\vec{t}_i = C^T \vec{c}_i$ 59

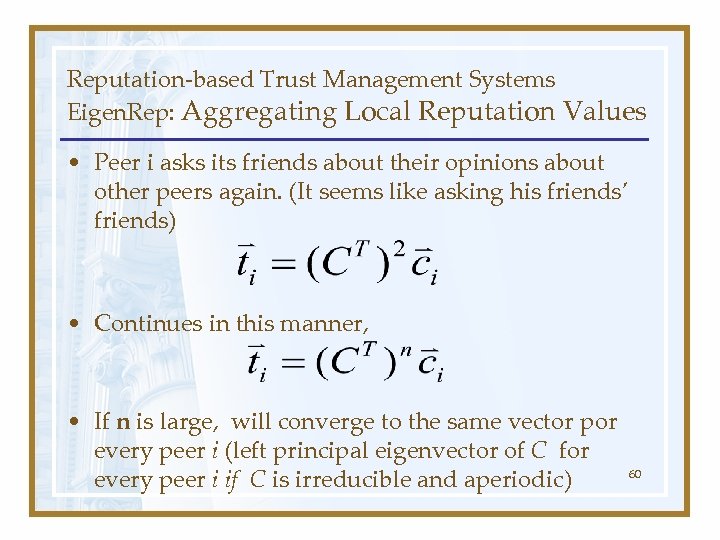

Reputation-based Trust Management Systems EigenRep: Aggregating Local Reputation Values • Peer i asks its friends about their opinions of other peers again (in effect, asking its friends' friends): $\vec{t}_i = (C^T)^2 \vec{c}_i$ • It continues in this manner: $\vec{t}_i = (C^T)^n \vec{c}_i$ • If n is large, $\vec{t}_i$ converges to the same vector for every peer i (the left principal eigenvector of C), provided C is irreducible and aperiodic. 60
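The repeated asking is power iteration. In this sketch the 4-peer matrix is hypothetical and was chosen to be irreducible and aperiodic; starting from two different peers, the vectors $(C^T)^n \vec{c}_i$ end up indistinguishable.

```python
def mat_t_vec(C, v):
    """Return C^T v, where C is a square matrix given as a list of rows."""
    n = len(C)
    return [sum(C[j][k] * v[j] for j in range(n)) for k in range(n)]


def ask_friends(C, i, n):
    """t_i = (C^T)^n c_i: peer i asks friends, friends' friends, ... n times."""
    t = list(C[i])  # c_i: peer i's own local trust vector
    for _ in range(n):
        t = mat_t_vec(C, t)
    return t


# Hypothetical local trust matrix: rows sum to 1, irreducible and aperiodic.
C = [
    [0.0, 0.9, 0.0, 0.1],
    [0.5, 0.0, 0.5, 0.0],
    [0.3, 0.2, 0.0, 0.5],
    [1.0, 0.0, 0.0, 0.0],
]
from_peer0 = ask_friends(C, i=0, n=300)
from_peer2 = ask_friends(C, i=2, n=300)
print(max(abs(a - b) for a, b in zip(from_peer0, from_peer2)) < 1e-9)  # True
```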

Reputation-based Trust Management Systems EigenRep: Global Reputation Vector $\vec{t}$ • We call this eigenvector $\vec{t}$ the global reputation vector. – $t_j$, an element of $\vec{t}$, quantifies how much trust the system as a whole places in peer j. How can $\vec{t}$ be estimated? 61

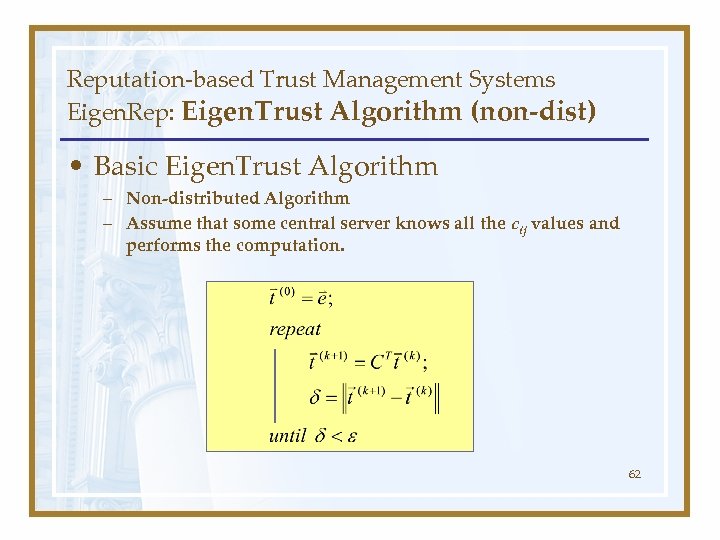

Reputation-based Trust Management Systems EigenRep: EigenTrust Algorithm (non-distributed) • Basic EigenTrust algorithm – Non-distributed algorithm – Assumes that some central server knows all the $c_{ij}$ values and performs the computation. 62
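A central server holding all $c_{ij}$ could run the basic iteration as sketched below: start from the uniform vector, apply $C^T$, and stop once successive vectors differ by less than a threshold. The threshold, iteration cap, and matrix are assumptions chosen for illustration.

```python
def basic_eigentrust(C, epsilon=1e-10, max_iter=10_000):
    """Centralized basic EigenTrust: t(0) = uniform, t(k+1) = C^T t(k).

    C is the normalized local trust matrix (rows sum to 1). Iteration stops
    when the L1 change between successive vectors drops below epsilon.
    """
    n = len(C)
    t = [1.0 / n] * n  # uniform starting vector
    for _ in range(max_iter):
        t_next = [sum(C[j][k] * t[j] for j in range(n)) for k in range(n)]
        delta = sum(abs(x - y) for x, y in zip(t_next, t))
        t = t_next
        if delta < epsilon:
            break
    return t


# Hypothetical local trust matrix known to the central server.
C = [
    [0.0, 0.9, 0.0, 0.1],
    [0.5, 0.0, 0.5, 0.0],
    [0.3, 0.2, 0.0, 0.5],
    [1.0, 0.0, 0.0, 0.0],
]
t = basic_eigentrust(C)
print([round(x, 4) for x in t])  # [0.3509, 0.3509, 0.1754, 0.1228]
```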

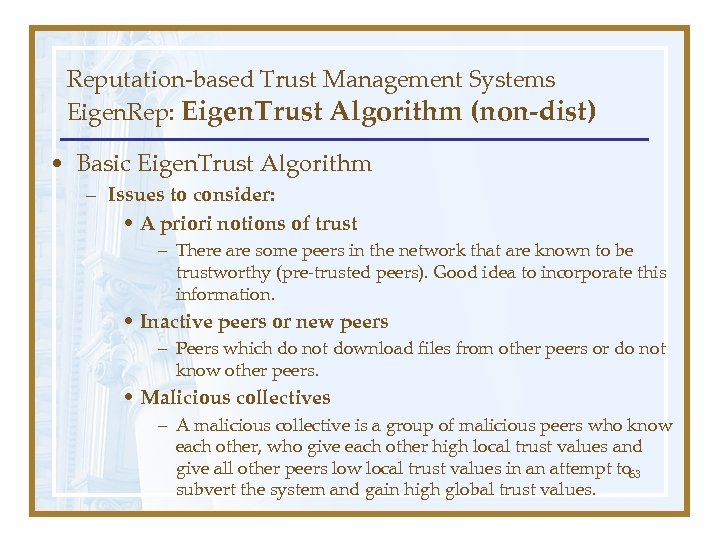

Reputation-based Trust Management Systems EigenRep: EigenTrust Algorithm (non-distributed) • Basic EigenTrust algorithm – Issues to consider: • A priori notions of trust – Some peers in the network are known to be trustworthy (pre-trusted peers); it is a good idea to incorporate this information. • Inactive or new peers – Peers that do not download files from other peers or do not know any other peers. • Malicious collectives – A malicious collective is a group of malicious peers who know each other, give each other high local trust values, and give all other peers low local trust values in an attempt to subvert the system and gain high global trust values. 63

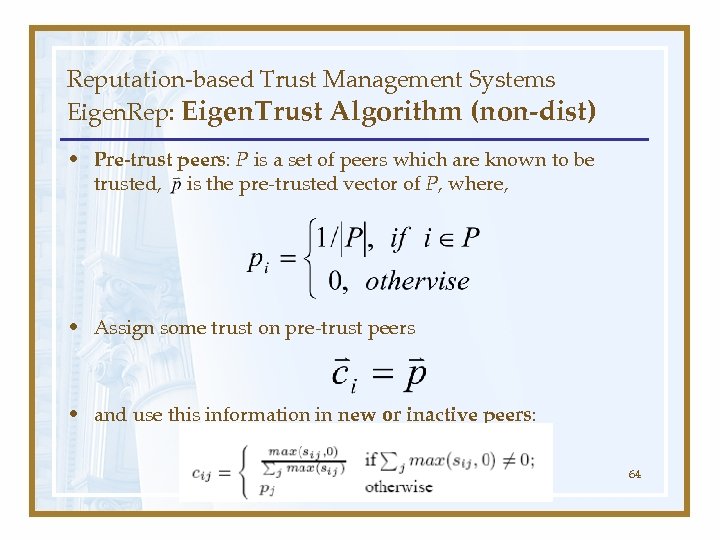

Reputation-based Trust Management Systems EigenRep: EigenTrust Algorithm (non-distributed) • Pre-trusted peers: P is a set of peers known to be trustworthy, and $\vec{p}$ is the pre-trusted vector of P, where $p_i = 1/|P|$ if $i \in P$ and $p_i = 0$ otherwise. • Assign some trust to the pre-trusted peers, • and use this information for new or inactive peers: $c_{ij} = p_j$ if $\sum_j \max(s_{ij}, 0) = 0$. 64
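Building $\vec{p}$ and falling back to it for peers without positive experience can be sketched like this. The peer indices, the set P, and the raw $s_{ij}$ rows are hypothetical.

```python
from __future__ import annotations


def pretrusted_vector(n: int, P: set[int]) -> list[float]:
    """p_i = 1/|P| if peer i is pre-trusted, else 0."""
    return [1.0 / len(P) if i in P else 0.0 for i in range(n)]


def local_trust_row(s_i: list[int], p: list[float]) -> list[float]:
    """Normalize peer i's local reputations; fall back to p if i has no positive experience."""
    clipped = [max(v, 0) for v in s_i]
    total = sum(clipped)
    if total == 0:      # new or inactive peer
        return list(p)  # c_ij = p_j
    return [v / total for v in clipped]


p = pretrusted_vector(4, P={0, 3})        # hypothetical: peers 0 and 3 are pre-trusted
print(p)                                  # [0.5, 0.0, 0.0, 0.5]
print(local_trust_row([0, 0, 0, 0], p))   # newcomer: [0.5, 0.0, 0.0, 0.5]
print(local_trust_row([3, -1, 1, 0], p))  # active peer: [0.75, 0.0, 0.25, 0.0]
```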

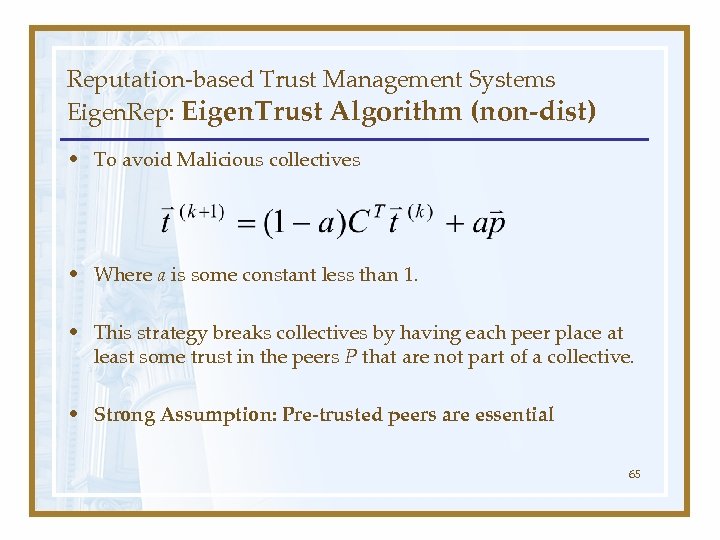

Reputation-based Trust Management Systems EigenRep: EigenTrust Algorithm (non-distributed) • To counter malicious collectives: $\vec{t}^{(k+1)} = (1 - a)\, C^T \vec{t}^{(k)} + a\, \vec{p}$, where a is some constant less than 1. • This strategy breaks collectives by having each peer place at least some trust in the peers in P, which are not part of a collective. • Strong assumption: pre-trusted peers are essential. 65

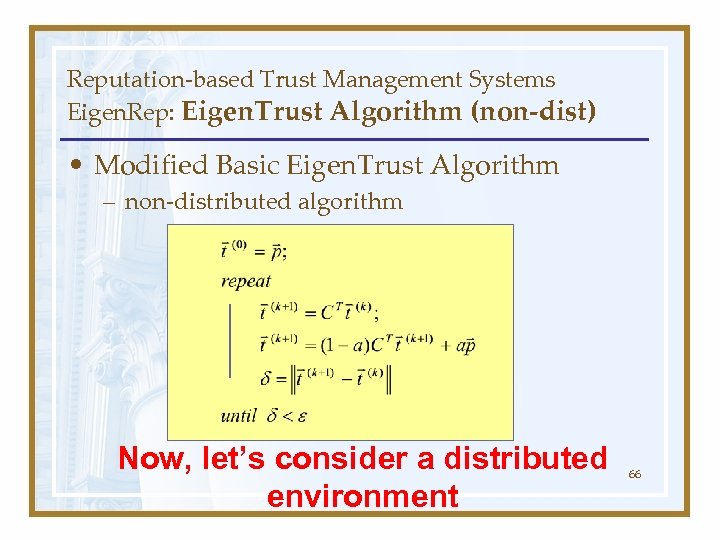

Reputation-based Trust Management Systems EigenRep: EigenTrust Algorithm (non-distributed) • Modified basic EigenTrust algorithm – non-distributed algorithm. Now, let's consider a distributed environment. 66
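The modified iteration $\vec{t}^{(k+1)} = (1 - a)\, C^T \vec{t}^{(k)} + a\, \vec{p}$, started from $\vec{p}$, can be sketched as below. The matrix models a hypothetical two-peer malicious collective (peers 2 and 3 trust only each other), and a = 0.15 is an illustrative choice.

```python
def eigentrust(C, p, a=0.15, epsilon=1e-10, max_iter=10_000):
    """Modified non-distributed EigenTrust: t(0) = p, t(k+1) = (1 - a) C^T t(k) + a p."""
    n = len(C)
    t = list(p)
    for _ in range(max_iter):
        t_next = [(1 - a) * sum(C[j][k] * t[j] for j in range(n)) + a * p[k]
                  for k in range(n)]
        delta = sum(abs(x - y) for x, y in zip(t_next, t))
        t = t_next
        if delta < epsilon:
            break
    return t


# Hypothetical network: good peers 0 and 1 trust each other; the collective
# (peers 2 and 3) gives all its trust to its own members.
C = [
    [0.0, 1.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
    [0.0, 0.0, 1.0, 0.0],
]
basic = eigentrust(C, p=[0.25] * 4, a=0.0)               # a = 0, uniform start: basic algorithm
modified = eigentrust(C, p=[1.0, 0.0, 0.0, 0.0], a=0.15)  # peer 0 is pre-trusted
print(sum(basic[2:]))     # 0.5: the collective keeps half of the trust
print(sum(modified[2:]))  # 0.0: no trust reaches the collective
```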

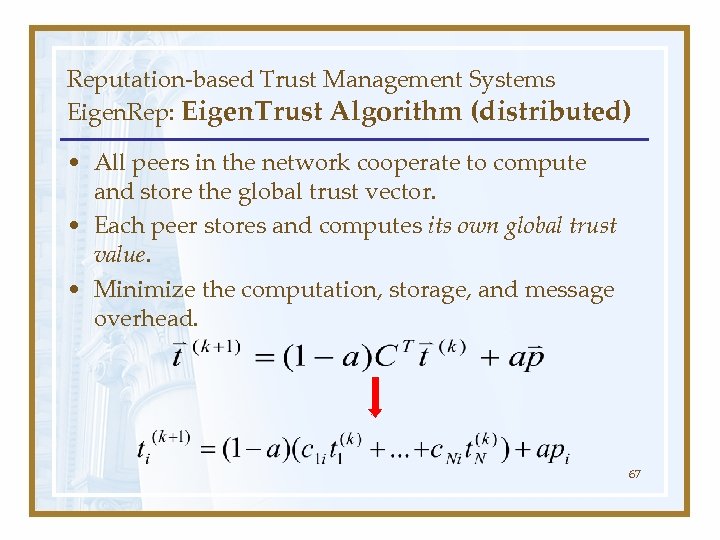

Reputation-based Trust Management Systems EigenRep: EigenTrust Algorithm (distributed) • All peers in the network cooperate to compute and store the global trust vector. • Each peer stores and computes its own global trust value. • Goal: minimize computation, storage, and message overhead. 67

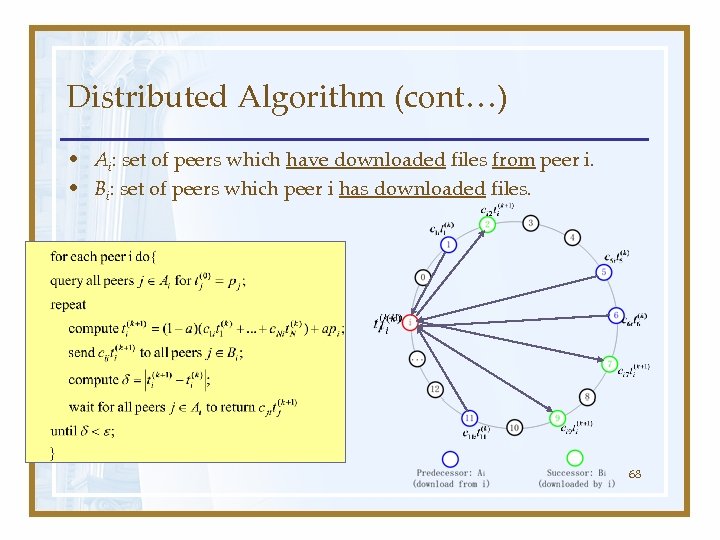

Distributed Algorithm (cont.) • $A_i$: set of peers that have downloaded files from peer i. • $B_i$: set of peers from which peer i has downloaded files. 68
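A synchronous simulation shows how each peer can update only its own value using messages from $A_i$ and sending to $B_i$. This is a sketch under simplifying assumptions: $B_i$ is approximated by the peers with $c_{ij} > 0$, all peers run in lock-step rounds, and a global stopping test replaces each peer's individual one. The matrix, $\vec{p}$, and a are hypothetical.

```python
from __future__ import annotations


def distributed_eigentrust(C, p, a=0.15, epsilon=1e-10, max_rounds=10_000):
    """Lock-step simulation of distributed EigenTrust.

    Peer i knows only its row c_i, p_i, A_i and B_i. Each round it sends
    c_ij * t_i to every j in B_i, then rebuilds t_i from the messages it
    received from the peers in A_i.
    """
    n = len(C)
    B = [{j for j in range(n) if C[i][j] > 0} for i in range(n)]  # i downloaded from j
    A = [{j for j in range(n) if C[j][i] > 0} for i in range(n)]  # j downloaded from i
    t = list(p)  # t_i(0) = p_i, held by peer i
    for _ in range(max_rounds):
        inbox = [dict() for _ in range(n)]
        for i in range(n):
            for j in B[i]:
                inbox[j][i] = C[i][j] * t[i]  # message from peer i to peer j
        t_next = [(1 - a) * sum(inbox[i][j] for j in A[i]) + a * p[i] for i in range(n)]
        done = all(abs(x - y) < epsilon for x, y in zip(t_next, t))
        t = t_next
        if done:
            break
    return t


C = [
    [0.0, 0.9, 0.0, 0.1],
    [0.5, 0.0, 0.5, 0.0],
    [0.3, 0.2, 0.0, 0.5],
    [1.0, 0.0, 0.0, 0.0],
]
p = [0.5, 0.0, 0.0, 0.5]
t = distributed_eigentrust(C, p)
print([round(x, 4) for x in t])
```

The result should match what a central server would compute with the same C, $\vec{p}$, and a; only the location of the computation changes.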

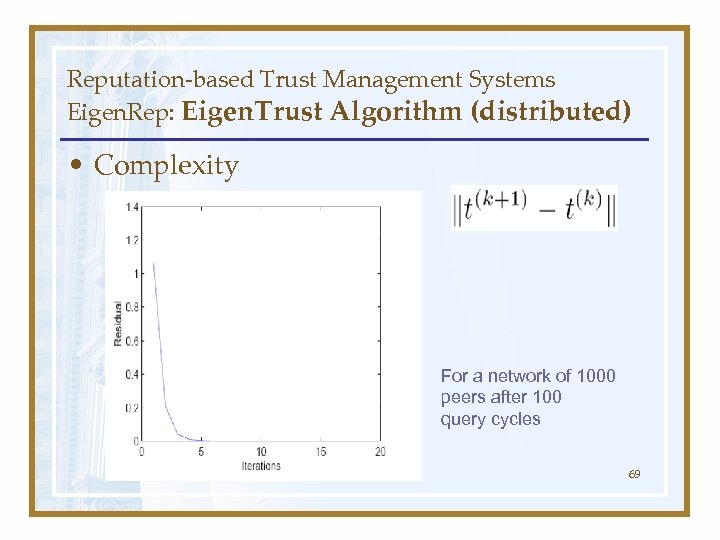

Reputation-based Trust Management Systems EigenRep: EigenTrust Algorithm (distributed) • Complexity for a network of 1000 peers after 100 query cycles 69

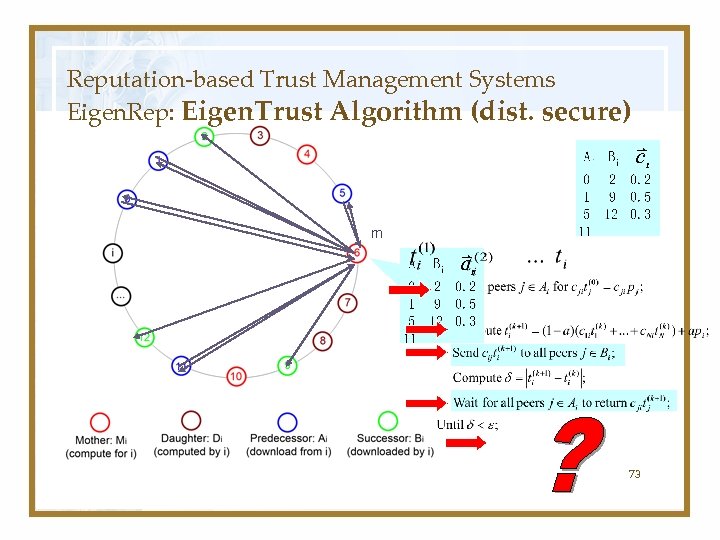

Reputation-based Trust Management Systems EigenRep: EigenTrust Algorithm (dist. secure) • Issue: the trust value of a peer should be computed by more than one other peer, because – malicious peers may report false trust values for themselves; – malicious peers may compute false trust values for others. 70

Reputation-based Trust Management Systems EigenRep: EigenTrust Algorithm (dist. secure) • Solution strategy: – The current trust value of a peer must not be computed by, or reside at, the peer itself, where it could easily be manipulated. – Instead, the trust value of each peer is computed by more than one other peer. • Use multiple DHTs, such as CAN or Chord, to assign mother peers (score managers). • Every peer has the same number of mother peers. 71
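One way to picture the mother-peer assignment is consistent hashing on a Chord-like ring. This sketch assumes several hash functions simulated by salting SHA-256, a 32-bit identifier space, and a rule that skips the peer itself; it is not the exact CAN or Chord lookup protocol, and all peer names are hypothetical.

```python
from __future__ import annotations

import bisect
import hashlib


def ring_position(key: str, space: int = 2**32) -> int:
    """Map a key onto a Chord-like identifier ring using SHA-256 (assumed hash)."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest(), "big") % space


def mother_peers(peer_id: str, peers: list[str], num_hashes: int = 3) -> list[str]:
    """Pick num_hashes mother peers (score managers) for peer_id.

    Each hypothetical hash function h_m(x) = SHA-256(f"{m}:{x}") maps peer_id to
    a point; its mother peer is the first peer clockwise from that point. Needs
    at least two peers; two hash functions may still pick the same mother peer.
    """
    ring = sorted((ring_position(p), p) for p in peers)
    positions = [pos for pos, _ in ring]
    managers = []
    for m in range(num_hashes):
        point = ring_position(f"{m}:{peer_id}")
        idx = bisect.bisect_left(positions, point) % len(ring)  # successor, with wrap-around
        if ring[idx][1] == peer_id:  # a peer must never manage its own trust value
            idx = (idx + 1) % len(ring)
        managers.append(ring[idx][1])
    return managers


peers = [f"peer{k}" for k in range(20)]
managers = mother_peers("peer7", peers)
print(managers)
```

Because every peer uses the same hash functions, anyone can recompute who the mother peers of "peer7" are, while "peer7" itself cannot choose them.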

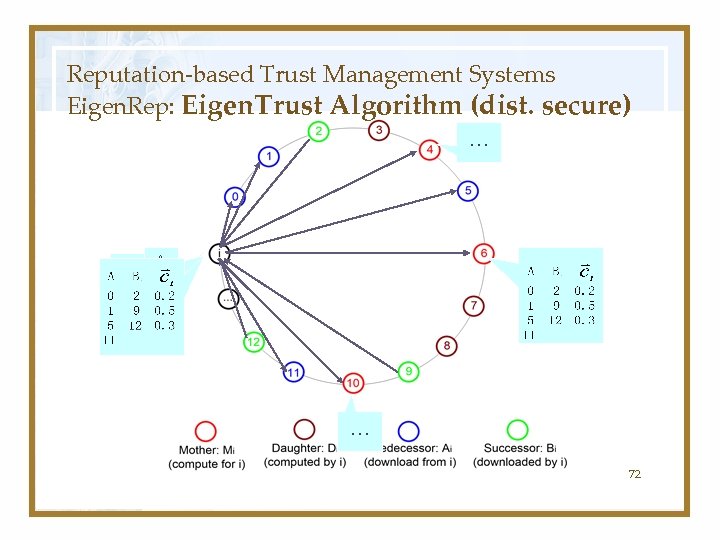

Reputation-based Trust Management Systems EigenRep: EigenTrust Algorithm (dist. secure) [Figure: the sets $A_i$ and $B_i$; the example peer IDs are not recoverable.] 72

Reputation-based Trust Management Systems EigenRep: EigenTrust Algorithm (dist. secure) 73

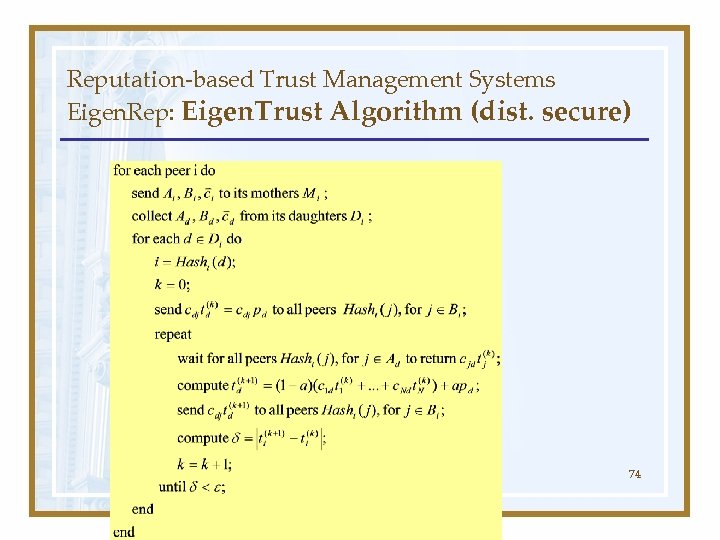

Reputation-based Trust Management Systems EigenRep: EigenTrust Algorithm (dist. secure) 74

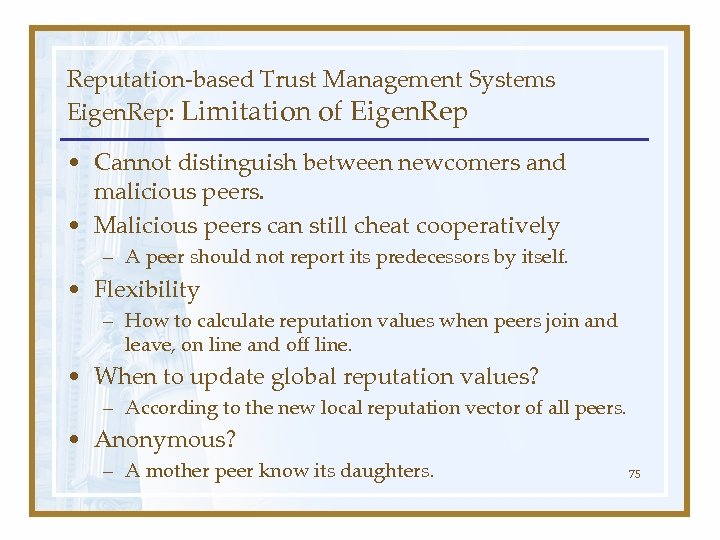

Reputation-based Trust Management Systems EigenRep: Limitations of EigenRep • It cannot distinguish between newcomers and malicious peers. • Malicious peers can still cheat cooperatively – A peer should not report its predecessors by itself. • Flexibility – How should reputation values be calculated as peers join and leave, or go online and offline? • When should global reputation values be updated? – According to the new local reputation vectors of all peers. • Anonymity? – A mother peer knows its daughters. 75

Outline • What is Trust? • What is Trust Management? • How to measure Trust? – Example • Reputation-based Trust Management Systems – DMRep – EigenRep – P2PRep • Frameworks for Trust Establishment – Trust-χ 76

Reputation-based Trust Management Systems P2PRep: Introduction Choosing reputable servents in a P2P network (Cornelli et al., 2002) • Does not focus on the computation of reputations • Focuses on the security of exchanged messages – Queries – Votes • How to prevent different security attacks 77

Reputation-based Trust Management Systems P2PRep: Introduction • Uses Gnutella as a reference – A fully decentralized P2P infrastructure – Peers have low accountability and trust – Security threats to Gnutella • Distribution of tampered information • Man-in-the-middle attack 78

Reputation-based Trust Management Systems P2PRep: Sketch of P2PRep • Goal: ensure the authenticity of offerers and voters, and the confidentiality of votes • Use public-key encryption to provide integrity and confidentiality of messages • Require each peer_id to be a digest of a public key for which the peer knows the private key • Votes are values expressing opinions on other peers • Servent reputation represents the “trustworthiness” of a servent in providing files • Servent credibility represents the “trustworthiness” of a servent in providing votes 79
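Binding a peer_id to a public key means anyone can check that a presented key matches a claimed identifier. This minimal sketch assumes SHA-256 as the digest and uses placeholder bytes instead of a real key encoding; proving knowledge of the private key is a separate step (the challenge-response before download).

```python
import hashlib


def peer_id_from_public_key(public_key: bytes) -> str:
    """peer_id = digest of the peer's public key (SHA-256 is an assumed choice)."""
    return hashlib.sha256(public_key).hexdigest()


def id_matches_key(peer_id: str, public_key: bytes) -> bool:
    """Check that a claimed peer_id really is the digest of the presented public key."""
    return peer_id_from_public_key(public_key) == peer_id


alice_key = b"hypothetical public key bytes for Alice"
alice_id = peer_id_from_public_key(alice_key)
print(id_matches_key(alice_id, alice_key))              # True
print(id_matches_key(alice_id, b"someone else's key"))  # False
```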

Reputation-based Trust Management Systems P2PRep: Sketch of P2PRep • P selects a peer among those that respond to P’s query • P polls its peers for opinions about the selected peer • Peers respond to the poll with votes • P uses the votes to make its decision 80
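P's decision step can be sketched as ranking the offerers by the share of positive votes they received. The `Vote` record, the scoring rule, and the `min_votes` cutoff are hypothetical choices for illustration; P2PRep itself leaves the decision function open.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Vote:
    about: str  # servent being voted on
    good: bool  # voter's opinion: True means reputable


def choose_servent(offerers: list[str], votes: list[Vote], min_votes: int = 1) -> str | None:
    """Pick the offerer with the highest share of positive votes.

    Offerers with fewer than min_votes votes are skipped (hypothetical policy).
    """
    best, best_score = None, -1.0
    for s in offerers:
        about_s = [v for v in votes if v.about == s]
        if len(about_s) < min_votes:
            continue
        score = sum(v.good for v in about_s) / len(about_s)
        if score > best_score:
            best, best_score = s, score
    return best


offerers = ["s1", "s2"]  # servents that answered P's query
votes = [Vote("s1", True), Vote("s1", False), Vote("s2", True), Vote("s2", True)]
print(choose_servent(offerers, votes))  # s2
```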

Reputation-based Trust Management Systems P2PRep: Approaches • Two approaches: – Basic polling • Voters do not provide their peer_id in votes – Enhanced polling • Voters declare their peer_id in votes 81

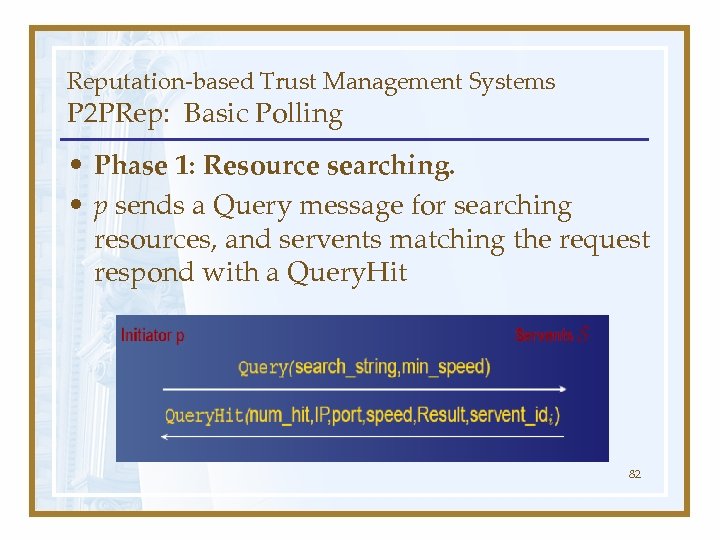

Reputation-based Trust Management Systems P2PRep: Basic Polling • Phase 1: Resource searching. • p sends a Query message to search for resources, and servents matching the request respond with a QueryHit. 82

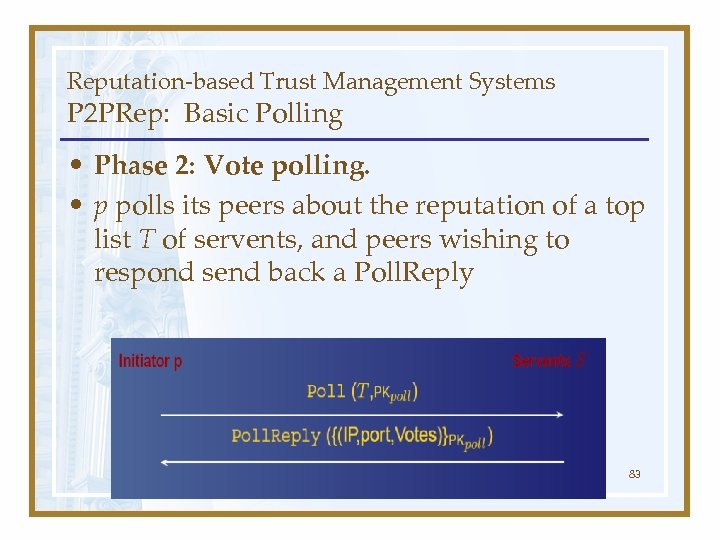

Reputation-based Trust Management Systems P2PRep: Basic Polling • Phase 2: Vote polling. • p polls its peers about the reputation of a top list T of servents, and peers wishing to respond send back a PollReply. 83

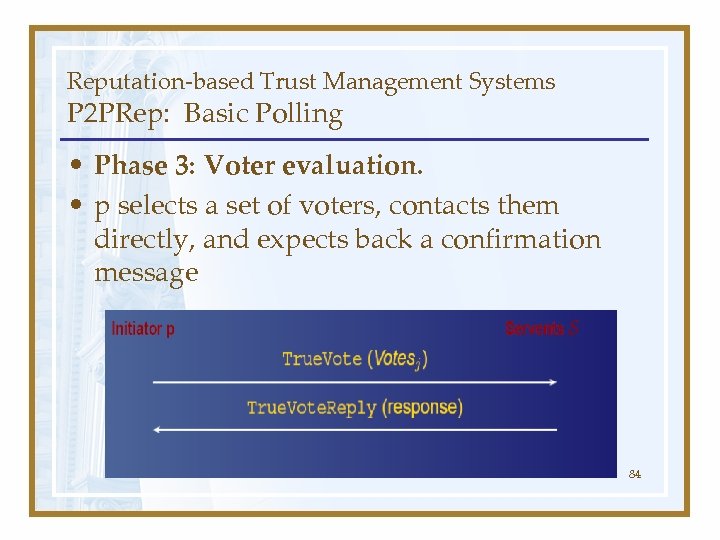

Reputation-based Trust Management Systems P2PRep: Basic Polling • Phase 3: Voter evaluation. • p selects a set of voters, contacts them directly, and expects a confirmation message back. 84

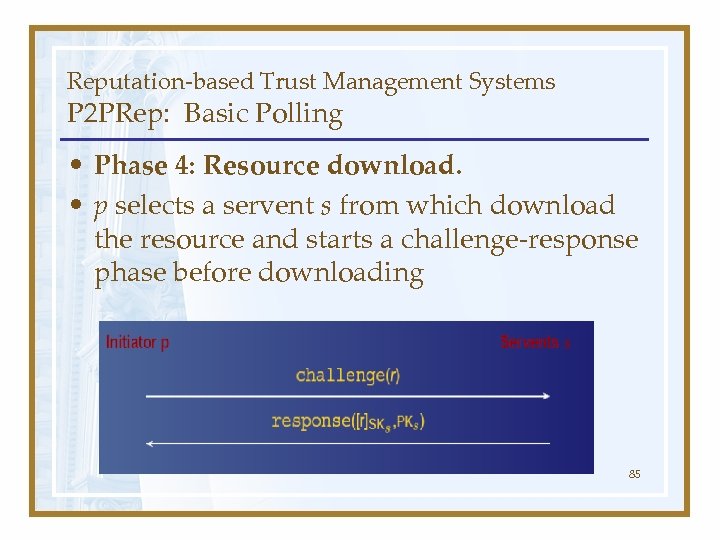

Reputation-based Trust Management Systems P2PRep: Basic Polling • Phase 4: Resource download. • p selects a servent s from which to download the resource and starts a challenge-response phase before downloading. 85

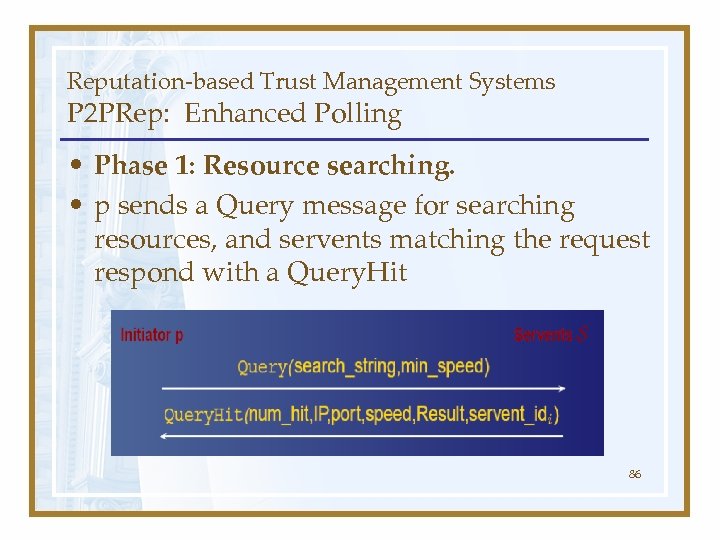

Reputation-based Trust Management Systems P2PRep: Enhanced Polling • Phase 1: Resource searching. • p sends a Query message to search for resources, and servents matching the request respond with a QueryHit. 86

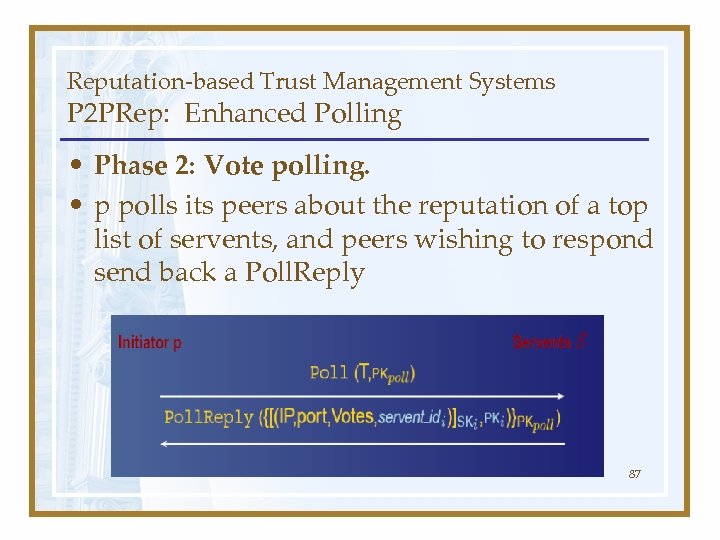

Reputation-based Trust Management Systems P2PRep: Enhanced Polling • Phase 2: Vote polling. • p polls its peers about the reputation of a top list of servents, and peers wishing to respond send back a PollReply. 87

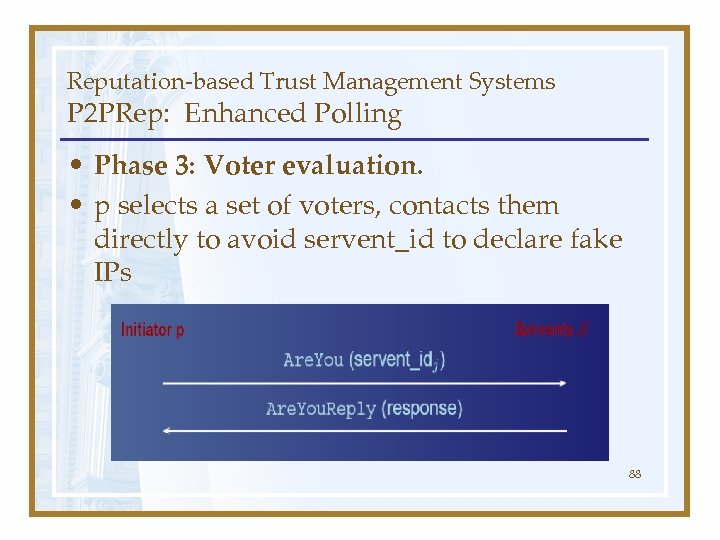

Reputation-based Trust Management Systems P2PRep: Enhanced Polling • Phase 3: Voter evaluation. • p selects a set of voters and contacts them directly, to prevent voters from declaring fake IPs for their servent_id. 88

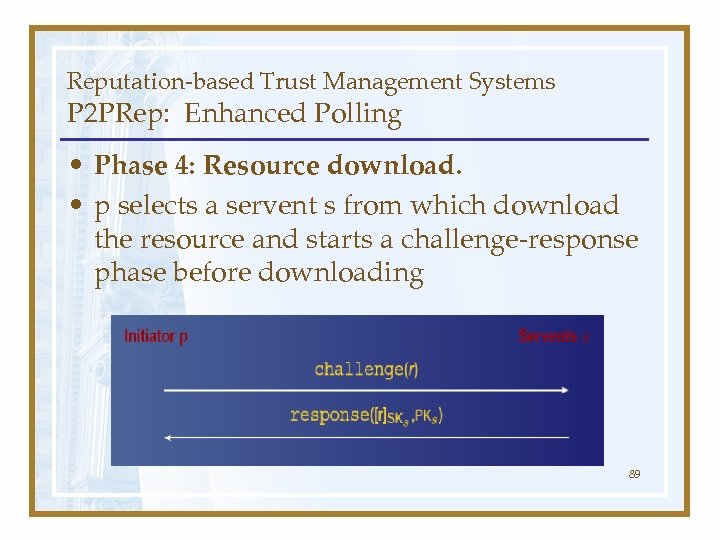

Reputation-based Trust Management Systems P2PRep: Enhanced Polling • Phase 4: Resource download. • p selects a servent s from which to download the resource and starts a challenge-response phase before downloading. 89

Reputation-based Trust Management Systems P2PRep: Comparison: Basic vs. Enhanced • Basic polling – all votes are considered equal • Enhanced polling – peer_ids allow p to weight each vote based on the trustworthiness of the voter v 90
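The difference between the two approaches can be sketched as an unweighted versus a credibility-weighted average. The credibility scores, voter names, and the choice to give unknown voters weight 0 are hypothetical.

```python
from __future__ import annotations


def basic_poll(votes: list[bool]) -> float:
    """Basic polling: anonymous votes, each counts equally."""
    return sum(votes) / len(votes)


def enhanced_poll(votes: dict[str, bool], credibility: dict[str, float]) -> float:
    """Enhanced polling: each vote carries the voter's peer_id, so p weights it
    by its credibility estimate for that voter (unknown voters get weight 0 here)."""
    total = sum(credibility.get(v, 0.0) for v in votes)
    if total == 0:
        return 0.0
    return sum(credibility.get(v, 0.0) * good for v, good in votes.items()) / total


votes = {"v1": True, "v2": False, "v3": False}
credibility = {"v1": 0.9, "v2": 0.1, "v3": 0.2}
print(round(basic_poll(list(votes.values())), 2))    # 0.33
print(round(enhanced_poll(votes, credibility), 2))   # 0.75
```

Here two low-credibility voters outvote a highly credible one under basic polling but not under enhanced polling.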

Reputation-based Trust Management Systems P2PRep: Security Improvements (1) • Distribution of tampered information – B responds to A with a fake resource • P2PRep solution: – A discovers the harmful content from B – A updates B’s reputation, preventing further interaction with B – A becomes a witness against B in polls by others 91

Reputation-based Trust Management Systems P2PRep: Security Improvements (2) • Man-in-the-middle attack – Data from C to A can be modified by B, who is on the path • A broadcasts a Query and C responds • B intercepts the QueryHit from C and rewrites it with B’s IP and port • A receives B’s reply • A chooses B for downloading • B downloads the original content from C, modifies it, and passes it to A 92

Reputation-based Trust Management Systems P2PRep: Security Improvements (2) • Man-in-the-middle attack – P2PRep addresses this problem by including a challenge-response phase before downloading – To impersonate C, B needs either • C’s private key, or • a public key of its own whose digest is C’s identifier – Public-key encryption strongly enhances the integrity of the exchanged messages – Both versions address this problem 93
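The challenge-response check can be sketched end to end with a toy key. This assumes textbook RSA with tiny hard-coded numbers (hopelessly insecure, illustration only) and SHA-256 as the digest; P2PRep does not prescribe these choices here. A accepts only if the presented key hashes to C's identifier and the response to A's fresh nonce verifies under that key.

```python
import hashlib
import secrets

# Toy RSA key pair for servent C (p = 61, q = 53). INSECURE: illustration only.
N, E, D = 3233, 17, 2753


def peer_id(n: int, e: int) -> str:
    """peer_id is the digest of the public key (n, e)."""
    return hashlib.sha256(f"{n}:{e}".encode()).hexdigest()


def digest_mod(challenge: bytes, n: int) -> int:
    """Hash the challenge into the RSA message space."""
    return int.from_bytes(hashlib.sha256(challenge).digest(), "big") % n


def sign(challenge: bytes, n: int, d: int) -> int:
    """C's response: textbook RSA signature over the hashed challenge."""
    return pow(digest_mod(challenge, n), d, n)


def verify(claimed_id: str, n: int, e: int, challenge: bytes, response: int) -> bool:
    """A's check: the key must hash to the claimed id AND the response must verify."""
    if peer_id(n, e) != claimed_id:
        return False
    return pow(response, e, n) == digest_mod(challenge, n)


c_id = peer_id(N, E)             # identifier A expects for C
nonce = secrets.token_bytes(16)  # A's fresh challenge, so old responses cannot be replayed
print(verify(c_id, N, E, nonce, sign(nonce, N, D)))  # True: the real C answers

# B presents its own key (n = 3127, e = 3) while claiming to be C: the digest check fails.
print(verify(c_id, 3127, 3, nonce, 42))              # False
```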

Outline • What is Trust? • What is Trust Management? • How to measure Trust? – Example • Reputation-based Trust Management Systems – DMRep – EigenRep – P2PRep • Frameworks for Trust Establishment – Trust-χ 94

Frameworks for Trust Establishment Trust-χ: Introduction • Trust establishment via trust negotiation – Exchange of digital credentials • Credential exchange has to be protected – Policies for credential disclosure • Claim: current approaches to trust negotiation do not provide a comprehensive solution that takes into account all phases of the negotiation process 95

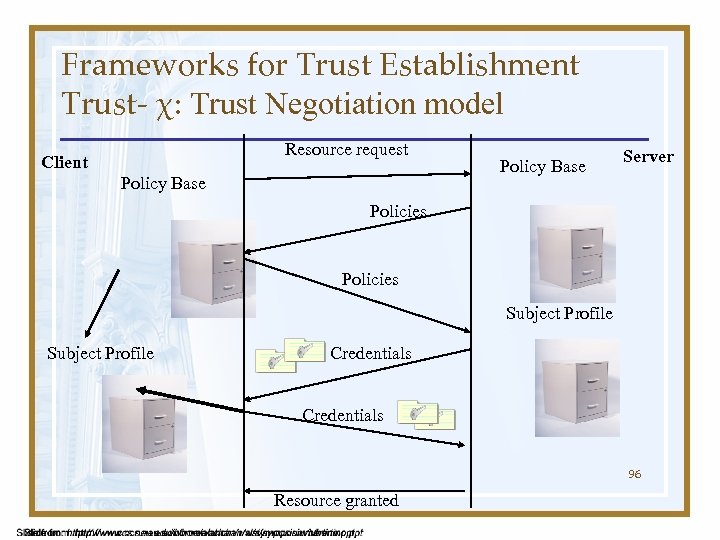

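Frameworks for Trust Establishment Trust-χ: Trust Negotiation Model [Figure: a Client (Subject Profile, Credentials) and a Server (Policy Base, Policies); the client's resource request leads, after policies and credentials are exchanged, to the resource being granted.] 96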

Frameworks for Trust Establishment Trust-χ • XML-based system • Designed for a peer-to-peer environment – Both parties are equally responsible for negotiation management. – Either party can act as a requester or as a controller of a resource • X-TNL: an XML-based language for specifying certificates and policies 97

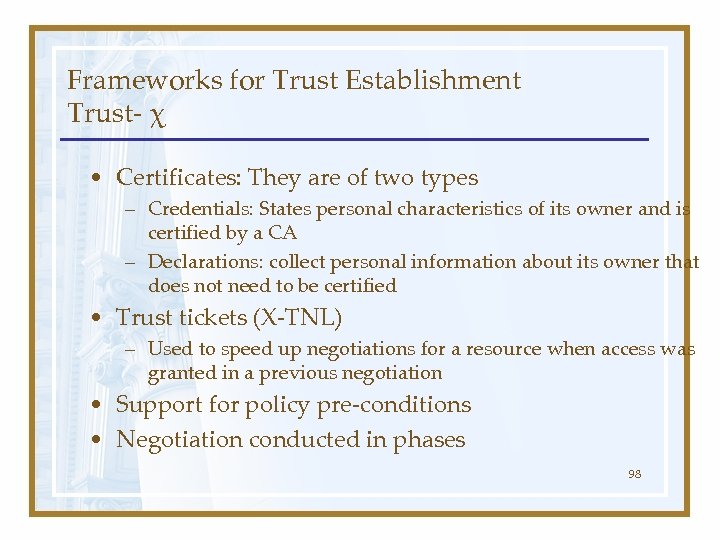

Frameworks for Trust Establishment Trust-χ • Certificates are of two types – Credentials: state personal characteristics of their owner and are certified by a CA – Declarations: collect personal information about their owner that does not need to be certified • Trust tickets (X-TNL) – Used to speed up negotiations for a resource when access was granted in a previous negotiation • Support for policy preconditions • Negotiation conducted in phases 98

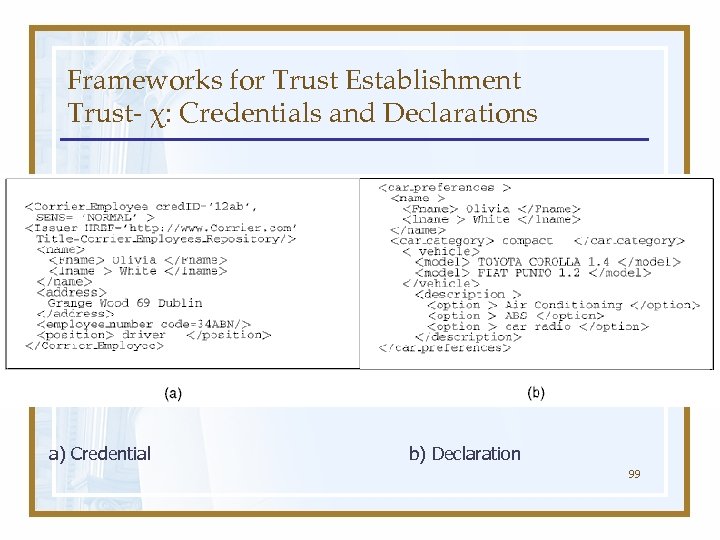

Frameworks for Trust Establishment Trust-χ: Credentials and Declarations [Figure: (a) a credential; (b) a declaration.] 99

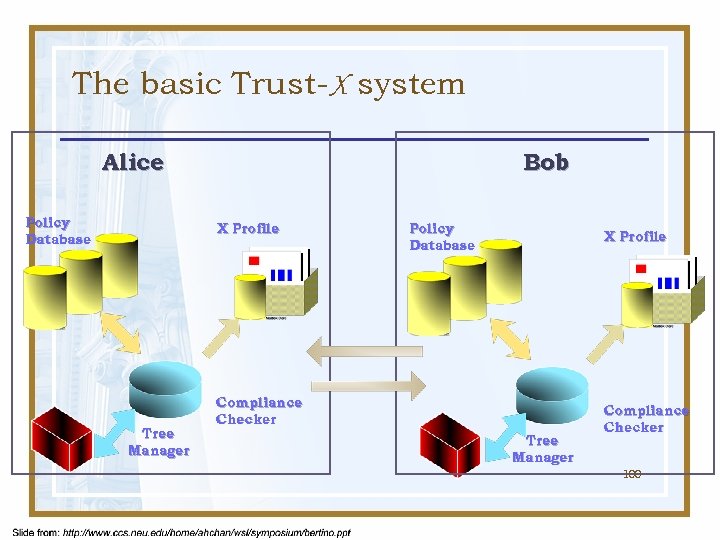

The basic Trust-X system [Figure: Alice and Bob each run a Policy Database, an X-Profile, a Tree Manager, and a Compliance Checker.] 100

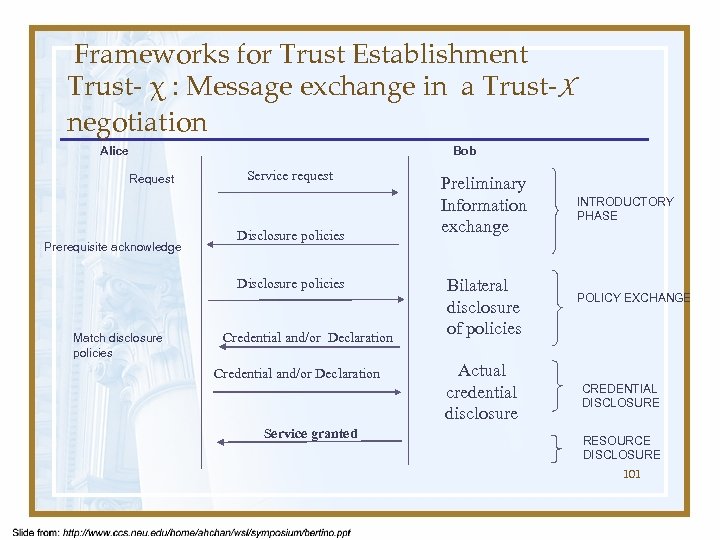

Frameworks for Trust Establishment Trust-χ: Message exchange in a Trust-X negotiation [Figure: messages exchanged between Alice and Bob: request, prerequisite acknowledgement, service request, disclosure policies, matching of disclosure policies, credential and/or declaration, service granted. They are grouped into an introductory phase (preliminary information exchange), a policy exchange phase (bilateral disclosure of policies), a credential disclosure phase (actual credential disclosure), and a resource disclosure phase.] 101

Frameworks for Trust Establishment Trust-χ: Disclosure Policies • “They state the conditions under which a resource can be released during a negotiation” • Prerequisites – A prerequisite is associated with a policy: it is a set of alternative disclosure policies that must be satisfied before the policy it refers to is disclosed. 102

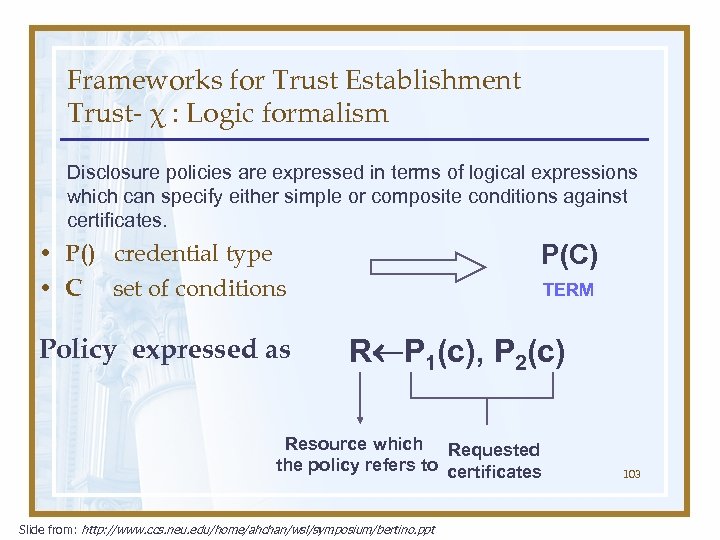

Frameworks for Trust Establishment Trust-χ: Logic formalism Disclosure policies are expressed as logical expressions that can specify either simple or composite conditions against certificates. • P(): credential type • C: set of conditions • A policy term is expressed as P(C) • Example: $R \leftarrow P_1(C), P_2(C)$, where R is the requested resource the policy refers to and $P_1(C), P_2(C)$ are terms on certificates. Slide from: http://www.ccs.neu.edu/home/ahchan/wsl/symposium/bertino.ppt 103
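A rule such as $R \leftarrow P_1(C), P_2(C)$ can be evaluated against a subject profile as sketched below. The data structures are assumptions, not X-TNL's XML encoding: certificates are plain dictionaries with a "type" key, and conditions are modeled as Python predicates.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Term:
    """P(C): a certificate type P plus a set of conditions C on its attributes."""
    cert_type: str
    conditions: list[Callable[[dict], bool]] = field(default_factory=list)

    def satisfied_by(self, cert: dict) -> bool:
        return cert["type"] == self.cert_type and all(c(cert) for c in self.conditions)


@dataclass
class Policy:
    """R <- T1, ..., Tn: resource R is released if every term is satisfied."""
    resource: str
    terms: list[Term]

    def satisfied_by(self, profile: list[dict]) -> bool:
        return all(any(t.satisfied_by(c) for c in profile) for t in self.terms)


# Hypothetical policy: R <- P1(age >= 18), P2()
policy = Policy("R", [Term("P1", [lambda c: c.get("age", 0) >= 18]), Term("P2")])
profile = [{"type": "P1", "age": 30}, {"type": "P2"}]
print(policy.satisfied_by(profile))      # True
print(policy.satisfied_by(profile[:1]))  # False: no P2 certificate
```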

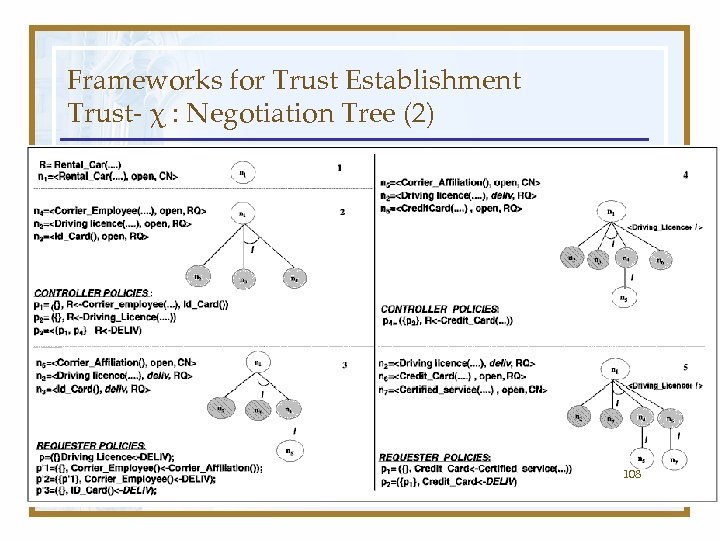
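The formalism maps onto code in a straightforward way. This Python sketch models a term P(C) as a credential type plus a list of condition predicates. It models a policy R ← P1(C), P2(C) as a resource plus the terms it requires. Two choices here are assumptions of the sketch: conditions are plain Python callables, and credentials are `(type, attributes)` pairs. `Term` and `Policy` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Condition = Callable[[dict], bool]   # a condition tests a credential's attributes
Credential = Tuple[str, dict]        # hypothetical form: (credential type, attributes)

@dataclass
class Term:
    cred_type: str                   # P: the credential type
    conditions: List[Condition]      # C: the set of conditions

    def satisfied_by(self, cred: Credential) -> bool:
        cred_type, attrs = cred
        return cred_type == self.cred_type and all(c(attrs) for c in self.conditions)

@dataclass
class Policy:
    resource: str                    # R: the resource the policy refers to
    terms: List[Term]                # P1(C), P2(C), ...: all must hold

    def satisfied_by(self, creds: List[Credential]) -> bool:
        # Every term must be matched by at least one disclosed credential.
        return all(any(t.satisfied_by(c) for c in creds) for t in self.terms)
```

Making every term mandatory mirrors the comma in R ← P1(C), P2(C), which is read as a conjunction.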

Example • Consider a Rental Car service. • The service is free for employees of the Corrier company. Moreover, the rental company already knows Corrier employees and has a digital copy of their driving licenses, so it only asks employees for their company badge and a valid copy of their ID card, to double-check ownership of the badge. By contrast, unknown requesters must pay for the rental service and must first submit a digital copy of their driving license and then a valid credit card. These requirements can be formalized as follows: 104

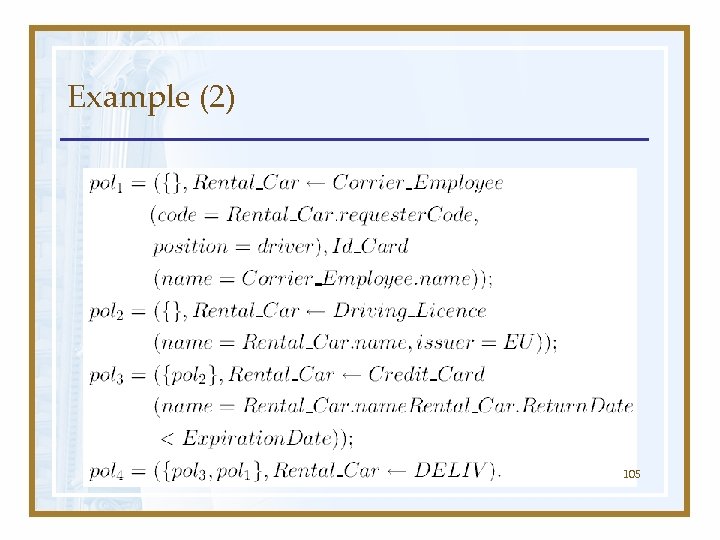
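For illustration only, the example's two alternatives can be encoded as ordered disclosure steps. This Python sketch does not reproduce the formalization on the slides. The credential names (`Corrier_Badge`, `ID_Card`, `Driving_License`, `Credit_Card`) and the function `grant_rental` are hypothetical. Each step is just a set of credential types that must appear together in one batch of disclosures, and the steps must be met in order.

```python
# Hypothetical encoding of the Rental Car example (names are illustrative).
RENTAL_POLICIES = [
    # Corrier employees: company badge plus ID card; the service is free.
    {"name": "employee", "paid": False, "steps": [{"Corrier_Badge", "ID_Card"}]},
    # Unknown requesters: first a driving license, later a valid credit card;
    # the service is paid.
    {"name": "unknown", "paid": True, "steps": [{"Driving_License"}, {"Credit_Card"}]},
]

def grant_rental(disclosures):
    """`disclosures` lists the sets of credential types the requester sent,
    in order. Return the name of the first policy whose steps are met in
    that order, or None if the rental cannot be granted."""
    for policy in RENTAL_POLICIES:
        steps = policy["steps"]
        done = 0  # number of steps satisfied so far
        for batch in disclosures:
            if done < len(steps) and steps[done] <= batch:  # subset test
                done += 1
        if done == len(steps):
            return policy["name"]
    return None
```

If the credit card arrives before the driving license, the function returns `None`. In this sketch, that is how the order imposed on unknown requesters is enforced.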

Example (2) 105

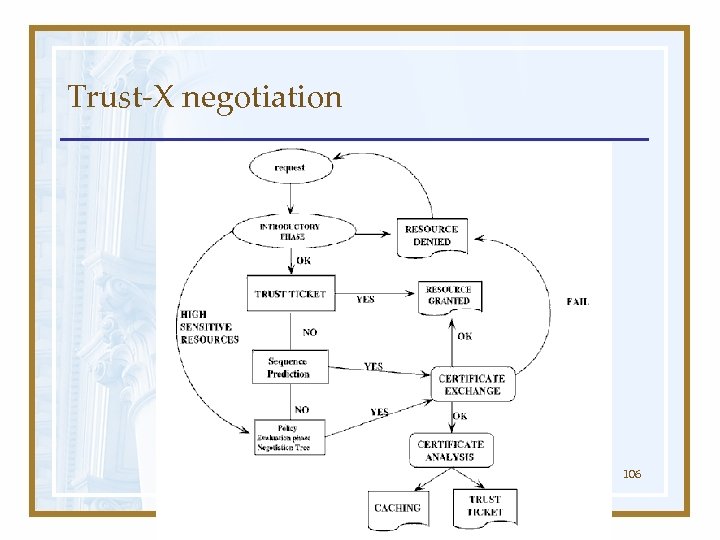

Trust-X negotiation 106

Frameworks for Trust Establishment Trust-χ: Negotiation Tree • Used in the policy evaluation phase • Maintains the progress of a negotiation • Used to identify at least one possible trust sequence that can lead to a successful negotiation (a view) 107
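Here is a rough Python sketch of searching such a tree for one successful trust sequence. It uses a simplified AND/OR form that is an assumption and not the Trust-X data structure. Each resource maps to alternative policies (OR), and each policy lists credentials that must all be released first (AND). `[[]]` marks a resource that can be released freely, and `[]` marks one that cannot be released. The names `Tree` and `find_view` are hypothetical.

```python
from typing import Dict, List, Optional, Set

# Resource -> alternative policies (OR); each policy -> credentials needed (AND).
# [[]] means "releasable without conditions"; [] means "cannot be released".
Tree = Dict[str, List[List[str]]]

def find_view(tree: Tree, resource: str,
              visiting: Optional[Set[str]] = None) -> Optional[List[str]]:
    """Return one trust sequence (a disclosure order ending in `resource`),
    or None if no branch of the tree can succeed."""
    if visiting is None:
        visiting = set()
    if resource in visiting:  # cycle: this branch can never terminate
        return None
    visiting.add(resource)
    result = None
    for policy in tree.get(resource, []):
        sequence: List[str] = []
        for cred in policy:
            sub = find_view(tree, cred, visiting)
            if sub is None:
                break  # this alternative fails; try the next policy
            for item in sub:  # merge, keeping each disclosure once
                if item not in sequence:
                    sequence.append(item)
        else:
            result = sequence + [resource]
            break
    visiting.discard(resource)
    return result
```

The returned list is one view: a disclosure order in which each credential comes after the ones it depends on. When a branch fails, such as a requester with no badge, the search skips it and tries the next alternative.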

Frameworks for Trust Establishment Trust-χ: Negotiation Tree (2) 108

Summary • Thanks! Questions? 109