6572fa7d26d300428ac64a346de34b42.ppt

- Number of slides: 26

Transport Layer Enhancements for Unified Ethernet in Data Centers
K. Kant, Raj Ramanujan
Intel Corp
Exploratory work only, not a committed Intel position

Context
- Data center is evolving, so the fabric should too.
- Last talk:
  - Enhancements to Ethernet, already on track
- This talk:
  - Enhancements to the transport layer
  - Exploratory, not in any standards track.

*Third party marks and brands are the property of their respective owners

Outline
- Data center evolution & transport impact
- Transport deficiencies & remedies
  - Many areas of deficiencies …
  - Only congestion control and QoS addressed in detail
- Summary & call to action

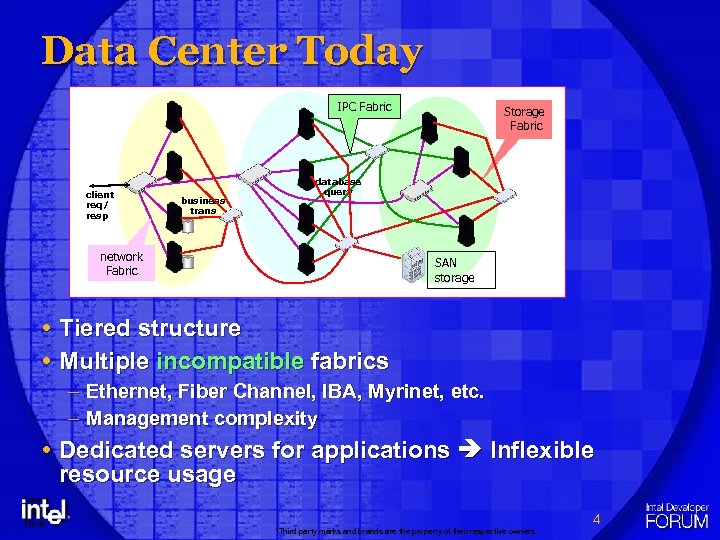

Data Center Today
- Tiered structure
- Multiple incompatible fabrics
  - Ethernet, Fibre Channel, IBA, Myrinet, etc.
  - Management complexity
- Dedicated servers for applications
- Inflexible resource usage

Figure labels: client req/resp, business trans, database query; network fabric, IPC fabric, storage fabric; SAN storage.

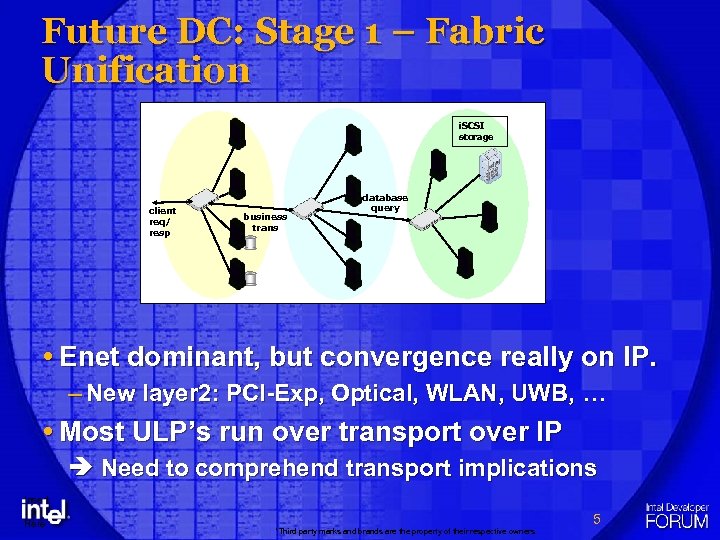

Future DC: Stage 1 – Fabric Unification
- Enet dominant, but convergence is really on IP.
  - New layer 2: PCI-Exp, Optical, WLAN, UWB, …
- Most ULPs run over transport over IP
- Need to comprehend transport implications

Figure labels: client req/resp, business trans, database query; iSCSI storage.

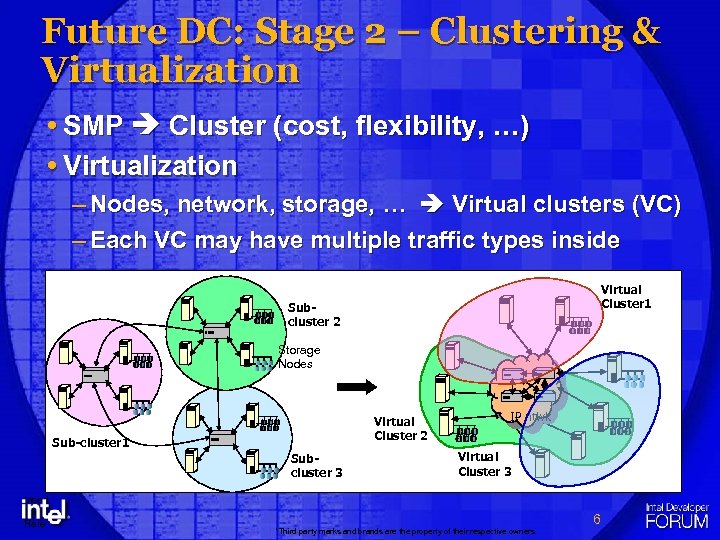

Future DC: Stage 2 – Clustering & Virtualization
- SMP → cluster (cost, flexibility, …)
- Virtualization
  - Nodes, network, storage, …
- Virtual clusters (VC)
  - Each VC may have multiple traffic types inside

Figure labels: Virtual Cluster 1, Virtual Cluster 2, Virtual Cluster 3; Sub-cluster 1, Sub-cluster 2, Sub-cluster 3; storage nodes; IP ntwk.

Future DC: New Usage Models
- Dynamically provisioned virtual clusters
- Distributed storage (per node)
- Streaming traffic (VoIP/IPTV + data services)
- HPC in DC
  - Data mining for focused advertising, pricing, …
- Special purpose nodes
  - Protocol accelerators (XML, authentication, etc.)
- New models bring new fabric requirements

Fabric Impact
- More types of traffic, more demanding needs.
- Protocol impact at all levels
  - Ethernet: previous presentation.
  - IP: change affects entire infrastructure.
  - Transport: this talk
- Why transport focus?
  - Change primarily confined to endpoints.
  - Many app needs relate to the transport layer
  - App. interface (Sockets/RDMA) mostly unchanged.
- DC evolution drives transport evolution

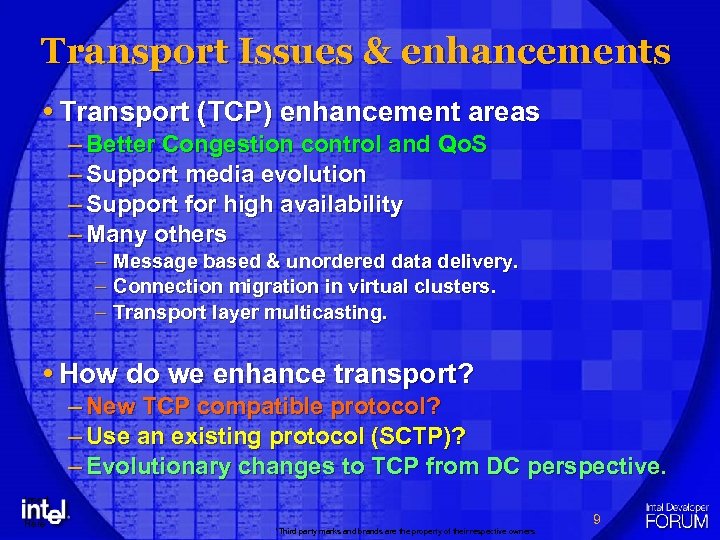

Transport Issues & Enhancements
- Transport (TCP) enhancement areas
  - Better congestion control and QoS
  - Support media evolution
  - Support for high availability
  - Many others
    - Message based & unordered data delivery.
    - Connection migration in virtual clusters.
    - Transport layer multicasting.
- How do we enhance transport?
  - New TCP compatible protocol?
  - Use an existing protocol (SCTP)?
  - Evolutionary changes to TCP from DC perspective.

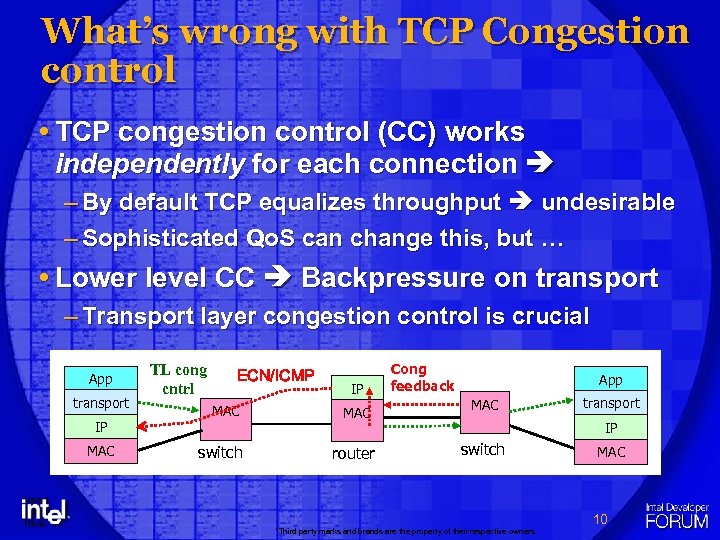

What’s wrong with TCP Congestion Control
- TCP congestion control (CC) works independently for each connection
  - By default TCP equalizes throughput, which is undesirable
  - Sophisticated QoS can change this, but …
- Lower level CC results in backpressure on transport
  - Transport layer congestion control is crucial

Figure labels: endpoint stacks (App, transport, IP, MAC); MAC switch; IP/MAC router; TL cong cntrl; ECN/ICMP; cong feedback.

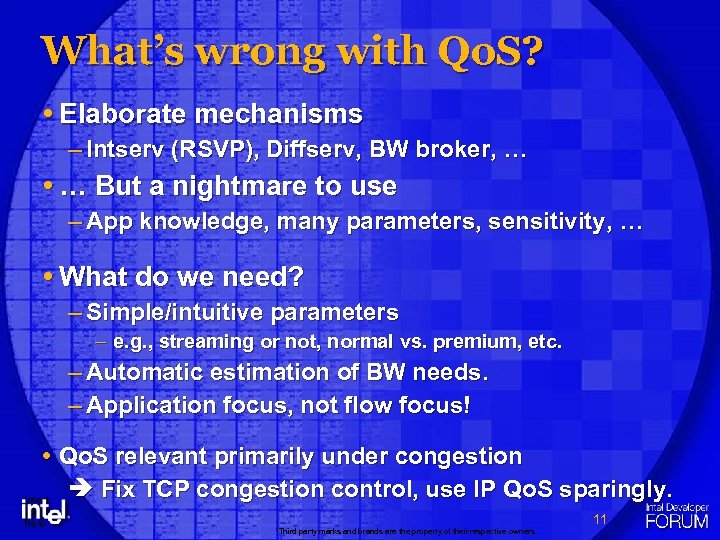

What’s wrong with QoS?
- Elaborate mechanisms
  - Intserv (RSVP), Diffserv, BW broker, …
- … but a nightmare to use
  - App knowledge, many parameters, sensitivity, …
- What do we need?
  - Simple/intuitive parameters
    - e.g., streaming or not, normal vs. premium, etc.
  - Automatic estimation of BW needs.
  - Application focus, not flow focus!
- QoS is relevant primarily under congestion
- Fix TCP congestion control, use IP QoS sparingly.

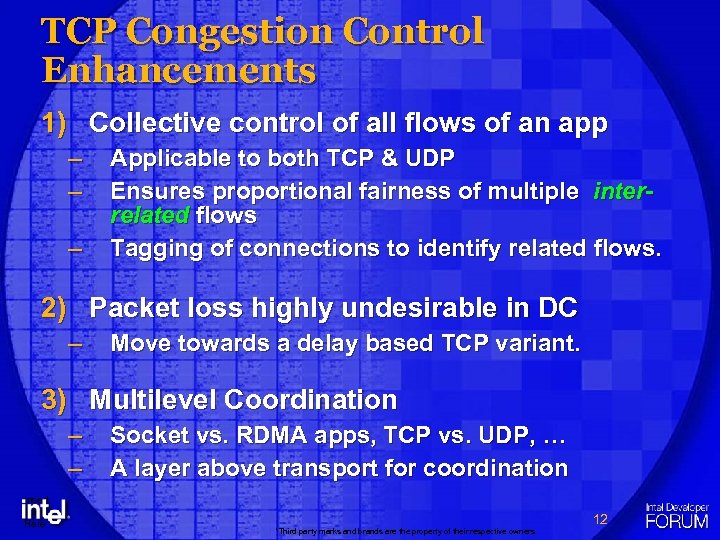

TCP Congestion Control Enhancements
1) Collective control of all flows of an app
   - Applicable to both TCP & UDP
   - Ensures proportional fairness of multiple interrelated flows
   - Tagging of connections to identify related flows.
2) Packet loss highly undesirable in DC
   - Move towards a delay based TCP variant.
3) Multilevel coordination
   - Socket vs. RDMA apps, TCP vs. UDP, …
   - A layer above transport for coordination

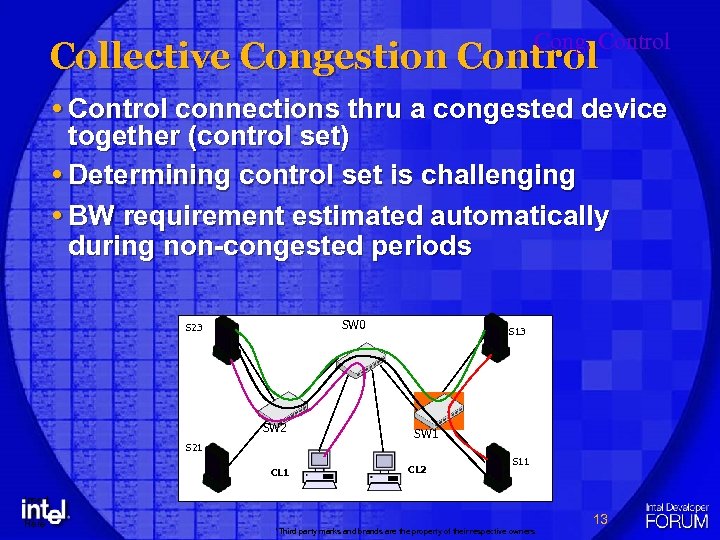

Collective Congestion Control
- Control connections through a congested device together (control set)
- Determining the control set is challenging
- BW requirement estimated automatically during non-congested periods

Figure labels: switches SW0, SW1, SW2; servers S11, S13, S21, S23; clients CL1, CL2.
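
A minimal sketch of the two ideas on this slide, under strong simplifying assumptions. It assumes the hop list of every connection is already known, which is exactly the part the slide calls challenging. The paths below are hypothetical and only borrow node names from the figure; the real topology is not recoverable from the slide text. The estimator is a plain exponentially weighted moving average, chosen for illustration, that is updated only outside congested periods.

```python
def control_set(paths, congested_device):
    """Connections whose (assumed known) path crosses the congested device."""
    return sorted(conn for conn, hops in paths.items() if congested_device in hops)


class DemandEstimator:
    """EWMA of observed throughput, frozen while the path is congested."""

    def __init__(self, gain=0.25):
        self.gain = gain          # hypothetical smoothing gain
        self.estimate = None      # Mb/s, unknown until the first sample

    def observe(self, throughput_mbps, congested):
        if congested:
            return self.estimate  # congested samples understate true demand
        if self.estimate is None:
            self.estimate = throughput_mbps
        else:
            self.estimate += self.gain * (throughput_mbps - self.estimate)
        return self.estimate


# Hypothetical hop lists; only the node names come from the figure.
paths = {
    "CL1->S11": ["SW1"],
    "CL1->S21": ["SW1", "SW0", "SW2"],
    "CL2->S13": ["SW1"],
    "CL2->S23": ["SW1", "SW0", "SW2"],
}
shared_fate = control_set(paths, "SW0")
```

Here `shared_fate` holds the two connections that cross `SW0`, so a congestion signal from that switch would throttle them as a group rather than one by one.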

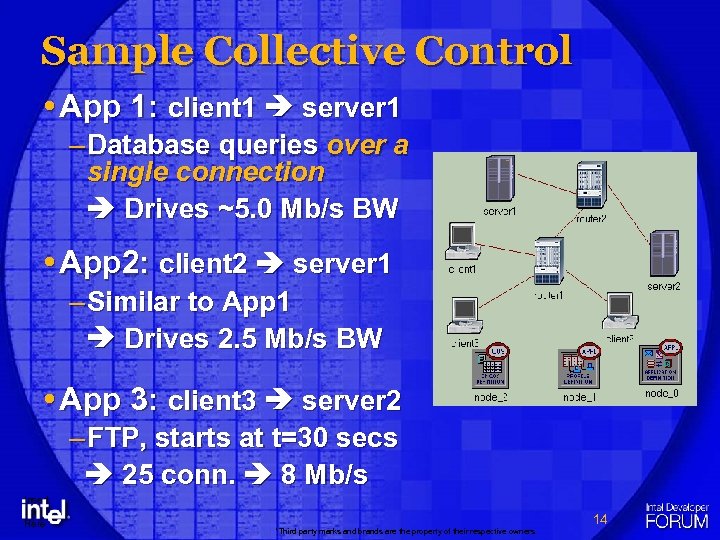

Sample Collective Control
- App 1: client 1 to server 1
  - Database queries over a single connection
  - Drives ~5.0 Mb/s BW
- App 2: client 2 to server 1
  - Similar to App 1
  - Drives 2.5 Mb/s BW
- App 3: client 3 to server 2
  - FTP, starts at t=30 secs
  - 25 conn., 8 Mb/s

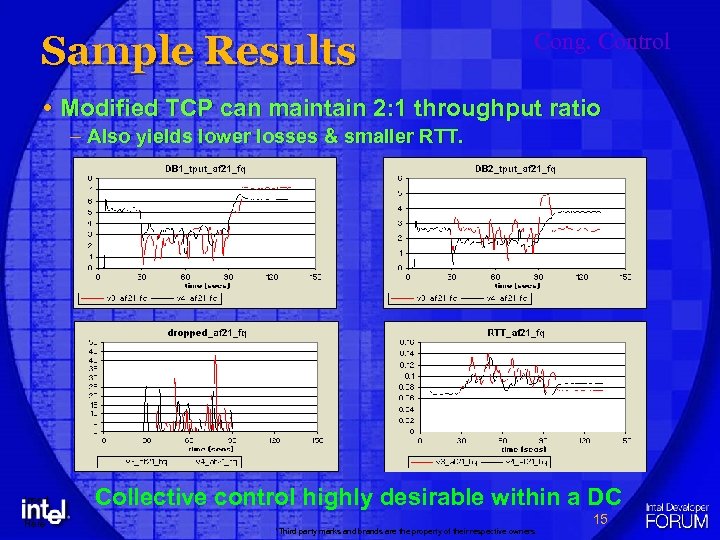

Sample Results (Cong. Control)
- Modified TCP can maintain 2:1 throughput ratio
  - Also yields lower losses & smaller RTT.
- Collective control highly desirable within a DC
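
To see why per-connection fairness loses the 2:1 ratio while app-level control keeps it, the sketch below compares two idealized allocators on the sample apps. It is not the authors' simulation. The bottleneck capacity of 10 Mb/s is an assumption, as is reading "25 conn., 8 Mb/s" as 25 FTP connections with 8 Mb/s of aggregate demand. Per-flow TCP behavior is approximated by max-min fairness across connections.

```python
def per_flow_max_min(capacity, flow_demands):
    """Max-min fair split across individual flows (water-filling)."""
    alloc = {flow: 0.0 for flow in flow_demands}
    remaining = dict(flow_demands)
    while remaining and capacity > 1e-12:
        share = capacity / len(remaining)
        satisfied = {f: d for f, d in remaining.items() if d <= share}
        if not satisfied:
            for flow in remaining:        # everyone left is capped at the share
                alloc[flow] = share
            return alloc
        for flow, demand in satisfied.items():
            alloc[flow] = demand          # small flows get all they asked for
            capacity -= demand
            del remaining[flow]
    return alloc


def collective_share(capacity, app_demands):
    """Scale every app by the same factor, preserving demand ratios."""
    scale = min(1.0, capacity / sum(app_demands.values()))
    return {app: demand * scale for app, demand in app_demands.items()}


CAPACITY_MBPS = 10.0                      # hypothetical bottleneck capacity
flows = {"app1": 5.0, "app2": 2.5}
flows.update({f"ftp{i}": 8.0 / 25 for i in range(25)})  # assumed 8 Mb/s total

per_flow = per_flow_max_min(CAPACITY_MBPS, flows)
collective = collective_share(CAPACITY_MBPS, {"app1": 5.0, "app2": 2.5, "app3": 8.0})

ratio_per_flow = per_flow["app1"] / per_flow["app2"]
ratio_collective = collective["app1"] / collective["app2"]
```

Under these assumptions the 25 FTP connections take their full demand in the per-flow case and the two database apps split what is left equally, so their ratio collapses toward 1:1. Scaling at the app level keeps App 1 at twice App 2.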

Adaptation to Media
- Problem: TCP assumes that loss implies congestion, and is designed for WAN (high loss/delay)
- Effects:
  - Wireless (e.g. UWB) is attractive in DC (wiring reduction, mobility, self configuration).
  - … but TCP is not a suitable transport.
  - Overkill for communications within a DC.
- Solution: a self-adjusting transport
  - Support multiple congestion/flow-control regimes.
  - Automatically selected during connection setup.
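
The slide does not say how a regime would be selected at connection setup. One possible shape, purely as an illustration: probe the path during the handshake and pick a regime from the measured RTT and loss rate. The regime names, the `PathProbe` type, and both thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PathProbe:
    rtt_us: float      # round-trip time measured during setup
    loss_rate: float   # fraction of probe packets lost


def select_regime(probe, rtt_threshold_us=500.0, loss_threshold=0.001):
    """Pick a congestion/flow-control regime from setup-time measurements."""
    if probe.rtt_us > rtt_threshold_us:
        return "wan-loss-based"    # long path: conventional TCP behavior
    if probe.loss_rate > loss_threshold:
        return "lossy-link"        # short but lossy (e.g. wireless): do not
                                   # treat every loss as congestion
    return "dc-delay-based"        # short, clean path inside the data center
```

A wired in-rack path with 20 us RTT and no loss would get `dc-delay-based`, while a UWB hop with similar RTT and 1% loss would get `lossy-link`.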

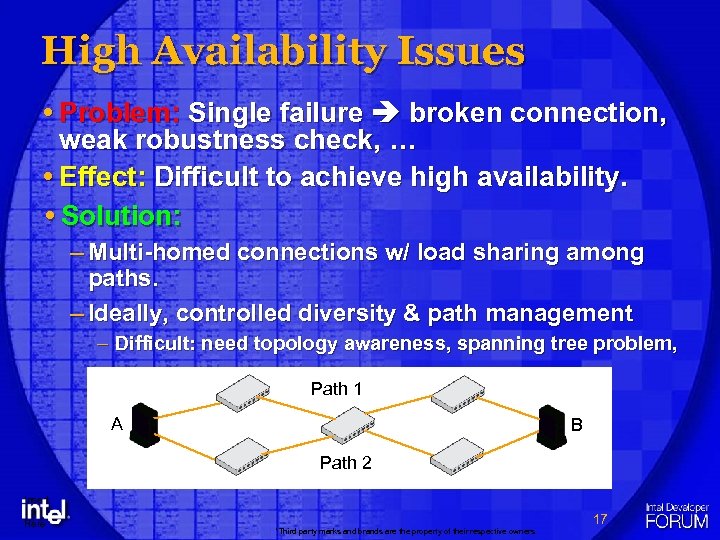

High Availability Issues
- Problem: a single failure means a broken connection, weak robustness check, …
- Effect: difficult to achieve high availability.
- Solution:
  - Multi-homed connections w/ load sharing among paths.
  - Ideally, controlled diversity & path management
  - Difficult: need topology awareness, spanning tree problem

Figure labels: endpoints A and B connected by Path 1 and Path 2.
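
A minimal sketch of a multi-homed connection with load sharing, assuming two or more independent paths between A and B and some external mechanism that reports path failures. The class and method names are hypothetical. Healthy paths are used round-robin, and a failed path is skipped rather than breaking the connection.

```python
class MultiHomedConnection:
    """One logical connection spread over several paths."""

    def __init__(self, paths):
        self.healthy = {path: True for path in paths}
        self._next = 0

    def mark_failed(self, path):
        self.healthy[path] = False

    def mark_recovered(self, path):
        self.healthy[path] = True

    def pick_path(self):
        """Round-robin over healthy paths; raise only if none is left."""
        paths = list(self.healthy)
        for _ in range(len(paths)):
            candidate = paths[self._next % len(paths)]
            self._next += 1
            if self.healthy[candidate]:
                return candidate
        raise ConnectionError("no healthy path between endpoints")


conn = MultiHomedConnection(["path1", "path2"])
before = [conn.pick_path() for _ in range(4)]   # alternates between both paths
conn.mark_failed("path1")
after = [conn.pick_path() for _ in range(2)]    # continues on path2 only
```

The sketch ignores the hard parts the slide lists, such as making sure the two paths really are disjoint.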

Summary & Call to Action
- Data centers are evolving
  - Transport must evolve too, but that is a difficult proposition
  - TCP is heavily entrenched; change needs an industry wide effort
- Call to action
  - Need to get an industry effort going to define
    - New features & their implementation
    - Deployment & compatibility issues.
  - Change will need a push from data center administrators & planners.

Additional Resources
- Presentation can be downloaded from the IDF web site; when prompted enter:
  - Username: idf
  - Password: fall 2005
- Additional backup slides
- Several relevant papers available at http://kkant.ccwebhost.com/download.html
  - Analysis of collective bandwidth control.
  - SCTP performance in data centers.

Backup

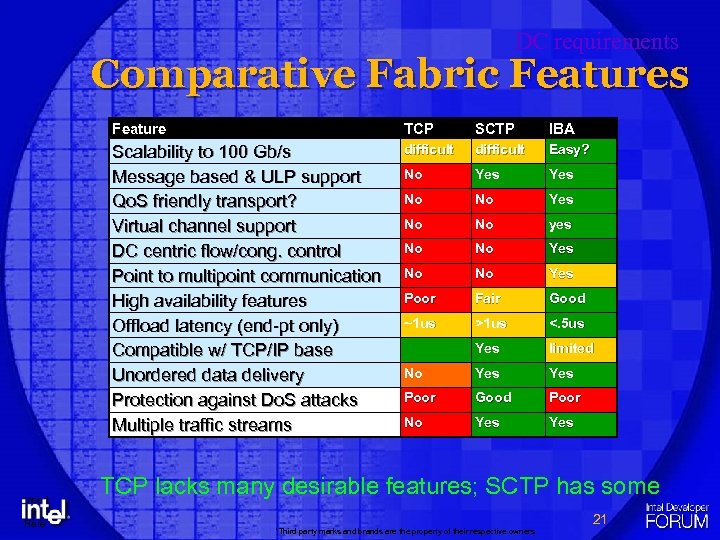

Comparative Fabric Features (DC requirements)

Columns: Feature, TCP, SCTP, IBA

Features:
- Scalability to 100 Gb/s
- Message based & ULP support
- QoS friendly transport?
- Virtual channel support
- DC centric flow/cong. control
- Point to multipoint communication
- High availability features
- Offload latency (end-pt only)
- Compatible w/ TCP/IP base
- Unordered data delivery
- Protection against DoS attacks
- Multiple traffic streams

Cell values, in extracted order: difficult, Easy?, No, Yes, No, No, yes, No, No, Yes, Poor, Fair, Good, ~1 us, >1 us, <.5 us, Yes, limited, No, Yes, Poor, Good, Poor, No, Yes

TCP lacks many desirable features; SCTP has some.

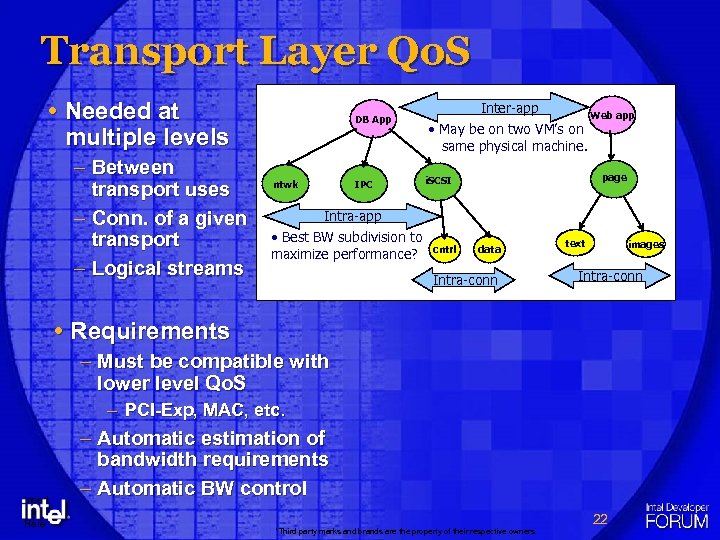

Transport Layer QoS
- Needed at multiple levels
  - Between transport uses
  - Conn. of a given transport
  - Logical streams
- Inter-app: may be on two VMs on the same physical machine.
- Intra-app: best BW subdivision to maximize performance?
- Requirements
  - Must be compatible with lower level QoS (PCI-Exp, MAC, etc.)
  - Automatic estimation of bandwidth requirements
  - Automatic BW control

Figure labels: DB app, web app, page; ntwk, IPC, iSCSI; cntrl, data; text, images; inter-app, intra-app, intra-conn.
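
One way to picture QoS at multiple levels is a weighted tree: bandwidth is split between apps, then between the connections of an app, then between logical streams inside a connection. The sketch below is an assumption-laden illustration. The tree shape only loosely follows the figure labels, and every weight is hypothetical; the slide itself leaves the best subdivision as an open question.

```python
def subdivide(bandwidth, children, prefix=""):
    """Split bandwidth by weight at each level; return {leaf_path: share}.

    children is a list of (name, weight, sub_children) tuples.
    """
    total_weight = sum(weight for _, weight, _ in children)
    shares = {}
    for name, weight, sub_children in children:
        share = bandwidth * weight / total_weight
        path = f"{prefix}/{name}" if prefix else name
        if sub_children:
            shares.update(subdivide(share, sub_children, path))
        else:
            shares[path] = share
    return shares


# Hypothetical weights: inter-app, then intra-app, then intra-conn.
tree = [
    ("db_app", 3, [
        ("ntwk", 1, []),
        ("ipc", 1, []),
        ("iscsi", 2, [("cntrl", 1, []), ("data", 3, [])]),
    ]),
    ("web_app", 1, [("text", 1, []), ("images", 1, [])]),
]
shares = subdivide(1000.0, tree)   # 1000 Mb/s link, an arbitrary example
```

Changing a single weight near the root rebalances everything below it, which is one reason to control bandwidth per application rather than per flow.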

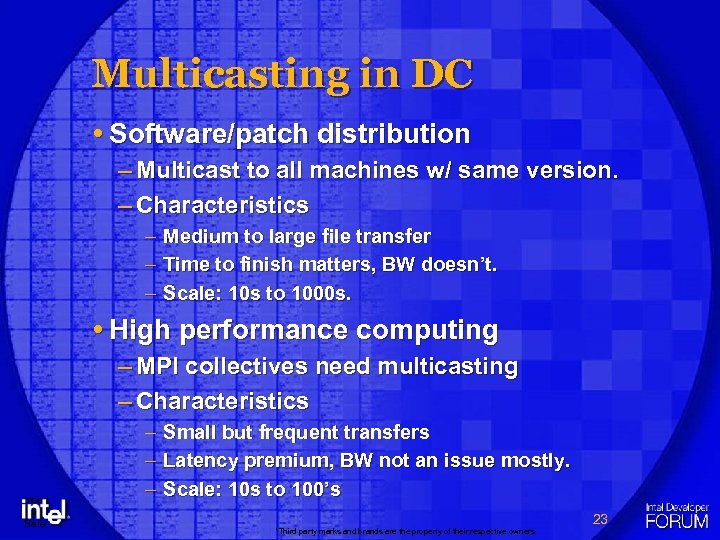

Multicasting in DC
- Software/patch distribution
  - Multicast to all machines w/ same version.
  - Characteristics
    - Medium to large file transfer
    - Time to finish matters, BW doesn’t.
    - Scale: 10s to 1000s.
- High performance computing
  - MPI collectives need multicasting
  - Characteristics
    - Small but frequent transfers
    - Latency at a premium, BW mostly not an issue.
    - Scale: 10s to 100s

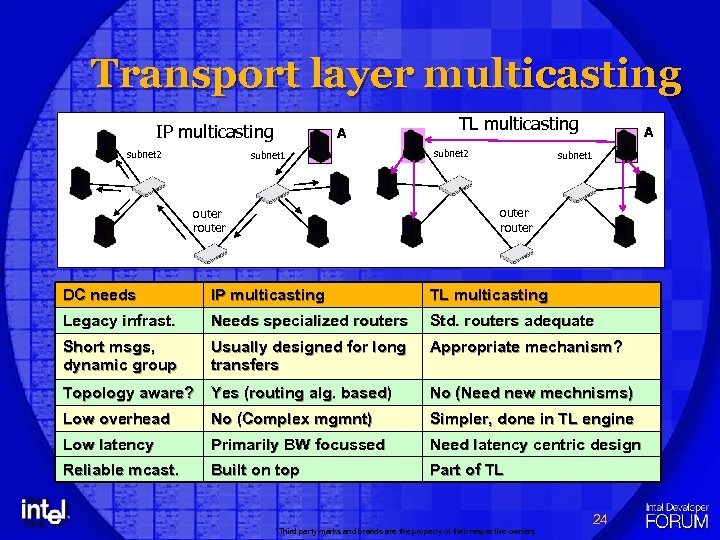

Transport Layer Multicasting

| DC needs | IP multicasting | TL multicasting |
| --- | --- | --- |
| Legacy infrast. | Needs specialized routers | Std. routers adequate |
| Short msgs, dynamic group | Usually designed for long transfers | Appropriate mechanism? |
| Topology aware? | Yes (routing alg. based) | No (need new mechanisms) |
| Low overhead | No (complex mgmnt) | Simpler, done in TL engine |
| Low latency | Primarily BW focused | Need latency centric design |
| Reliable mcast. | Built on top | Part of TL |

Figure labels (both panels): node A, subnet 1, subnet 2, outer router.

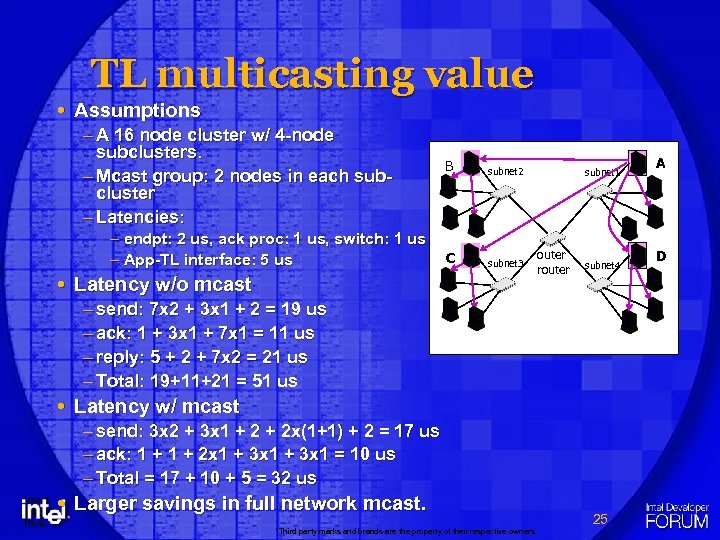

TL Multicasting Value
- Assumptions
  - A 16-node cluster w/ 4-node subclusters.
  - Mcast group: 2 nodes in each subcluster
  - Latencies:
    - endpt: 2 us, ack proc: 1 us, switch: 1 us
    - App-TL interface: 5 us
- Latency w/o mcast
  - send: 7 x 2 + 3 x 1 + 2 = 19 us
  - ack: 1 + 3 x 1 + 7 x 1 = 11 us
  - reply: 5 + 2 + 7 x 2 = 21 us
  - Total: 19 + 11 + 21 = 51 us
- Latency w/ mcast
  - send: 3 x 2 + 3 x 1 + 2 x (1+1) + 2 = 17 us
  - ack: 1 + 2 x 1 + 3 x 1 = 10 us
  - Total = 17 + 10 + 5 = 32 us
- Larger savings in full network mcast.

Figure labels: nodes A, B, C, D; subnet 1, subnet 2, subnet 3, subnet 4; outer router.
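
The arithmetic without multicast can be re-derived from the stated per-step latencies, as sketched below. The mapping of each term to a latency constant is one reading of the slide, with 7 receivers (8 group members minus the sender) and 3 switch hops taken as assumptions. The multicast terms as transcribed sum to 15 us and 6 us rather than the stated 17 us and 10 us, so the sketch takes the slide's 32 us total as given instead of recomputing it.

```python
# Latencies from the slide, in microseconds.
ENDPT_US = 2      # endpoint processing per message
ACK_PROC_US = 1   # processing of one ack
SWITCH_US = 1     # one switch hop
APP_TL_US = 5     # application/transport-layer interface

RECEIVERS = 7     # assumed: 8 group members minus the sender
SWITCH_HOPS = 3   # assumed: hops on the longest path

# Without multicast the sender issues one unicast per receiver, serially.
send_us = RECEIVERS * ENDPT_US + SWITCH_HOPS * SWITCH_US + ENDPT_US
ack_us = ACK_PROC_US + SWITCH_HOPS * SWITCH_US + RECEIVERS * ACK_PROC_US
reply_us = APP_TL_US + ENDPT_US + RECEIVERS * ENDPT_US
total_unicast_us = send_us + ack_us + reply_us

TOTAL_MCAST_US = 32   # taken from the slide as stated, not recomputed

saving_us = total_unicast_us - TOTAL_MCAST_US
saving_pct = 100.0 * saving_us / total_unicast_us

print(f"unicast {total_unicast_us} us, multicast {TOTAL_MCAST_US} us, "
      f"saving {saving_us} us ({saving_pct:.0f}%)")
```

On the slide's own totals, transport layer multicast removes a little over a third of the end-to-end latency for this 8-member group.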

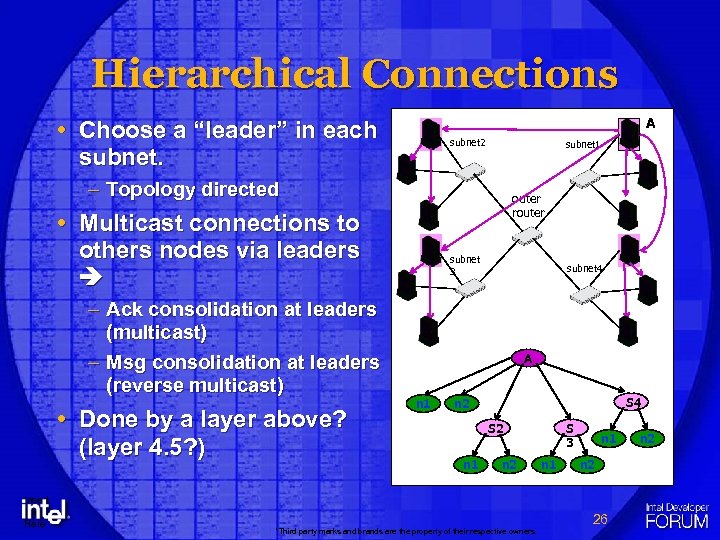

Hierarchical Connections
- Choose a “leader” in each subnet
  - Topology directed
- Multicast connections to other nodes via leaders
  - Ack consolidation at leaders (multicast)
  - Msg consolidation at leaders (reverse multicast)
- Done by a layer above? (layer 4.5?)

Figure labels: sender A; subnet 1, subnet 2, subnet 3, subnet 4; outer router; leaders S2, S3, S4, each with nodes n1 and n2.
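
A small sketch of leader selection and ack consolidation, with several assumptions: group membership per subnet is known, the leader is simply the lowest-named member (the slide only says the choice is topology directed), and a leader reports success to the sender only when every member of its subnet has acked. Node names are hypothetical.

```python
def choose_leaders(members_by_subnet):
    """One leader per subnet; lowest name wins (placeholder policy)."""
    return {subnet: min(nodes)
            for subnet, nodes in members_by_subnet.items() if nodes}


def consolidate_acks(members_by_subnet, acked):
    """Per leader, a single consolidated ack: True only if all members acked."""
    leaders = choose_leaders(members_by_subnet)
    return {leaders[subnet]: all(node in acked for node in nodes)
            for subnet, nodes in members_by_subnet.items() if nodes}


group = {
    "subnet2": ["s2.n1", "s2.n2"],
    "subnet3": ["s3.n1", "s3.n2"],
    "subnet4": ["s4.n1", "s4.n2"],
}
acked = {"s2.n1", "s2.n2", "s3.n1", "s4.n1", "s4.n2"}
report = consolidate_acks(group, acked)
```

The sender now processes three consolidated acks instead of six individual ones, and the `False` entry for `s3.n1` tells it which subnet needs a retransmission.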
