f7bdb433f222ab439f554eeae415d8db.ppt

- Number of slides: 39

TRANSPARENCY AND REPRODUCIBILITY IN SOCIAL SCIENCES
Soazic Elise WANG SONNE, Ph.D. Fellow, UNU-MERIT
May 19th, 2017, Maastricht, The Netherlands

NORMS AND ETHOS IN SCIENCES (1)
• Over the past decades, transparency, openness and reproducibility have increasingly been recognized as key features of research quality and integrity.
• Norms and ethos in scientific research were articulated by Robert Merton 75 years ago (1942):
(i) Universalism (independence)
(ii) Communality (common property)
(iii) Disinterestedness (unselfishness)
(iv) Organized Skepticism (disclosure)

WHAT IS BITSS? (2) The Berkeley Initiative for Transparency in the Social Sciences (BITSS) was established in 2012 by UC Berkeley’s Center for Effective Global Action (CEGA). Mission: Strengthen the quality of social science research and evidence used for policy-making, by enhancing the practices of economists, psychologists, political scientists, and other social scientists

TRANSPARENCY… OPENNESS… BITSS!
ADVOCATE, EDUCATE, CATALYZE
• Identify, fund, and develop tools and resources
• Build standards of openness, integrity, and transparency
• Reward exceptional achievements in the advancement of transparent social science (SSMART), LMR
• Explore solutions, and monitor progress towards transparency
• Understand the problem
• Support researchers in making their research more transparent and open

BUT WHY SHOULD WE REALLY CARE ABOUT REPRODUCIBILITY AND OPENNESS IN SOCIAL SCIENCE RESEARCH?

ACADEMIC RESEARCH MISCONDUCT IN ECONOMICS
Many other irreproducible workflows: Martin Feldstein on Social Security and private savings; Reinhart and Rogoff on debt and GDP growth.

ACADEMIC RESEARCH MISCONDUCT IN SOCIAL PSYCHOLOGY

OVERVIEW OF UNRELIABLE RESEARCH
• Publication bias
• P-hacking
• Non-disclosure/selective reporting
• Failure to replicate
• Lack of transparency/failure to comply with the Mertonian norms: Universalism, Communality, Disinterestedness, Organized skepticism

OVERVIEW OF UNRELIABLE RESEARCH : PUBLICATION BIAS (1) Publication Bias “File drawer problem”

OVERVIEW OF UNRELIABLE RESEARCH : PUBLICATION BIAS (2) – Statistically significant results are more likely to be published, while null results are buried in the file drawer.
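To see how the file drawer distorts a literature, here is a minimal Python sketch (not from the talk, whose workflow examples use Stata and R). It assumes many hypothetical studies that all estimate the same small true effect with normal sampling error, and "publishes" only estimates with |z| > 1.96. The parameter values (`true_effect=0.1`, `se=0.1`) are arbitrary illustrative choices.

```python
import random
import statistics


def simulate_publication_bias(true_effect=0.1, se=0.1, n_studies=10_000, seed=1):
    """Every hypothetical study estimates the same true effect with normal
    sampling error (standard deviation `se`); only estimates with |z| > 1.96,
    i.e. two-sided p < 0.05, make it out of the file drawer."""
    rng = random.Random(seed)
    estimates = [rng.gauss(true_effect, se) for _ in range(n_studies)]
    published = [b for b in estimates if abs(b / se) > 1.96]
    return (
        statistics.mean(estimates),   # average over all studies run
        statistics.mean(published),   # average over published studies only
        len(published) / n_studies,   # share of studies that get published
    )


all_mean, published_mean, share_published = simulate_publication_bias()
print("true effect:                 0.100")
print(f"mean of all estimates:       {all_mean:.3f}")
print(f"mean of published estimates: {published_mean:.3f}")
print(f"share published:             {share_published:.1%}")
```

Every individual study here is unbiased, yet the published studies alone overstate the effect by a wide margin, because only the lucky draws clear the significance bar.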

OVERVIEW OF UNRELIABLE RESEARCH : P-HACKING (1)
P-Hacking
• Also called “data fishing,” “data mining,” or “researcher degrees of freedom.”
“If you torture your data long enough, they will confess to anything.” - Ronald Coase (The University of Virginia, early 1960s)

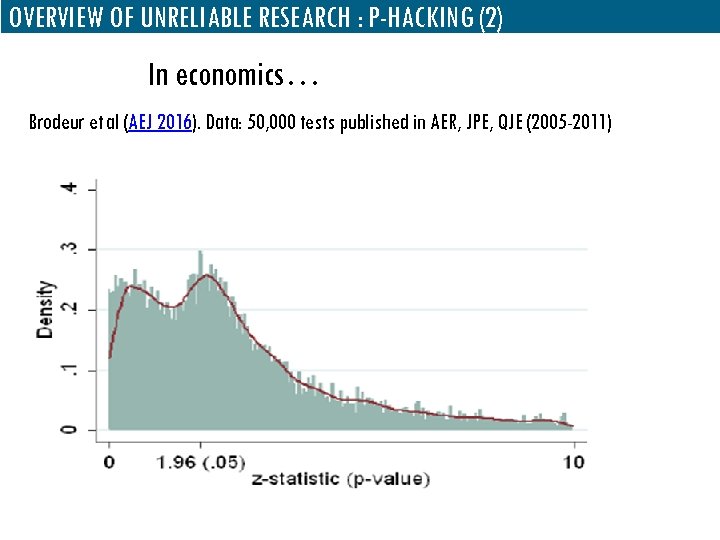

OVERVIEW OF UNRELIABLE RESEARCH : P-HACKING (2) In economics… Brodeur et al. (AEJ, 2016). Data: 50,000 tests published in the AER, JPE and QJE (2005-2011).
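Brodeur et al. examine how reported test statistics are distributed around conventional significance thresholds. A much simpler check in the same spirit is a caliper-style comparison: count |z| values just below and just above 1.96 and ask, with a binomial test, whether suspiciously many land just above. The Python sketch below is not Brodeur et al.'s own method; the window width of 0.2 and the z-statistics in the example are hypothetical.

```python
from math import comb


def caliper_test(z_stats, threshold=1.96, width=0.2):
    """Compare the number of |z| values just below and just above `threshold`.

    Returns (n_below, n_above, p_value), where p_value is the one-sided
    binomial probability of seeing at least n_above values above the threshold
    if each value in the window were equally likely to land on either side.
    """
    below = sum(1 for z in z_stats if threshold - width <= abs(z) < threshold)
    above = sum(1 for z in z_stats if threshold <= abs(z) < threshold + width)
    n = below + above
    p_value = sum(comb(n, k) for k in range(above, n + 1)) / 2 ** n
    return below, above, p_value


# Hypothetical z-statistics, NOT real data from Brodeur et al.
z_stats = [0.8, 1.77, 1.80, 1.85, 1.90, 1.97, 1.98, 2.00,
           2.02, 2.05, 2.10, 2.12, 2.15, 2.50]
below, above, p = caliper_test(z_stats)
print(below, above, round(p, 3))  # 4 8 0.194
```

The equal-odds assumption is only approximate, since the density of |z| usually falls off around 1.96; keeping the window narrow keeps that approximation reasonable. With a handful of values the test has little power; it becomes informative with thousands of statistics, as in the AER/JPE/QJE sample.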

OVERVIEW OF UNRELIABLE RESEARCH : SELECTIVE REPORTING (1)
Selective Reporting
• Cherry-picking results for reporting.
• Franco, Malhotra and Simonovits (2015) find that, of the studies run through TESS (Time-sharing Experiments for the Social Sciences), roughly 60% of papers report fewer outcome variables than are listed in the questionnaire.
• If many relevant outcomes aren’t mentioned in the paper (and aren’t listed elsewhere), how can we be confident that the reported results aren’t just noise rather than true effects?
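The worry in the last bullet can be quantified. If a study measures k outcomes, none of which is truly affected, and only the "significant" ones are reported, the chance of having something to report grows quickly with k. A minimal Python sketch, assuming independent outcomes (real survey outcomes are usually correlated, which makes the inflation smaller than shown here):

```python
import random


def share_with_false_positive(n_outcomes, n_studies=20_000, seed=2):
    """Share of hypothetical studies with at least one 'significant' outcome
    (|z| > 1.96) when every outcome has a true effect of zero.

    Assumes the outcomes' test statistics are independent standard normals.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        # any() stops drawing as soon as one outcome crosses the threshold
        if any(abs(rng.gauss(0, 1)) > 1.96 for _ in range(n_outcomes)):
            hits += 1
    return hits / n_studies


for k in (1, 5, 10):
    simulated = share_with_false_positive(k)
    analytic = 1 - 0.95 ** k  # P(at least one of k independent 5% tests rejects)
    print(f"{k:>2} outcomes: simulated {simulated:.3f}, analytic {analytic:.3f}")
```

With ten unreported outcomes, roughly 40% of studies of a completely ineffective intervention would have at least one "significant" result to write up.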

OVERVIEW OF UNRELIABLE RESEARCH : REPLICATION FAILURE (1)
• “Replication” is often used to mean different things. Here are three different activities that replication can refer to:
• PURE REPLICATION: Verification: checking that the original data and code, running exactly the same analysis, produce the published results;
• STATISTICAL REPLICATION: (i) Reproduction: testing whether the results hold up when the study is conducted in a very similar way but with, e.g., different methods; (ii) Extension: tests of external validity and robustness checks, investigating whether the results hold up when the study is conducted in another place, under different conditions, or in a different dataset, time or setting.
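Pure replication (verification) boils down to a mechanical check: rerun the shared code on the shared data and confirm that each number matches what the paper printed, up to the rounding used in the paper. A minimal Python sketch of that comparison; the data, the estimate (a simple difference in means) and the "published" value are all hypothetical.

```python
import statistics


def matches_published(reproduced, published, decimals):
    """True if `reproduced` rounds to the value printed in the paper, i.e. it
    lies within half a unit of the last reported decimal place (plus a tiny
    allowance for floating-point error)."""
    return abs(reproduced - published) <= 0.5 * 10 ** (-decimals) + 1e-9


# Hypothetical shared data and a hypothetical published estimate
treated = [3.1, 2.8, 3.6, 3.3, 2.9]
control = [1.9, 2.2, 1.7, 2.0, 2.1]
published_diff = 1.16  # reported to 2 decimal places

reproduced_diff = statistics.mean(treated) - statistics.mean(control)
status = "verified" if matches_published(reproduced_diff, published_diff, 2) else "NOT verified"
print(f"reproduced {reproduced_diff:.4f} vs published {published_diff}: {status}")
```

Comparing within the reported precision, rather than demanding exact equality, avoids flagging harmless floating-point or rounding differences as failures.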

OVERVIEW OF UNRELIABLE RESEARCH : REPLICATION FAILURE (2) Well-known replications:
• David Broockman, Joshua Kalla and Peter Aronow (2015):
– Attempt to reproduce LaCour M. and Green D. (2014), "When contact changes minds: An experiment on transmission of support for gay equality"
• Reinhart-Rogoff (2013)
– Spreadsheet errors found by a grad student (Herndon et al. 2013).
• Deworming debate (2015)
– Miguel/Kremer (2004) and Aiken/Davey et al. (2015)
– Big debate within development economics/epidemiology
• Reproducibility Project: Psychology (2015)
– ~40% of studies successfully reproduced (see e.g. Uri Simonsohn).
– But big debate these last couple of months! What does it mean for a replication to “fail”?
• Begley and Ellis (2012):
– Attempt to reproduce “landmark” pre-clinical cancer lab studies at Amgen (6 out of 53 studies reproduced).

OVERVIEW OF UNRELIABLE RESEARCH : LACK OF TRANSPARENCY
• Sharing data, code and surveys (along with clear documentation) allows others to check one’s work.
• Yet relatively few researchers share their data:
• Alsheikh-Ali et al. (2011) reviewed the first 10 research papers published in 2009 in each of the top 50 journals by impact factor. Of the 500 papers, 351 were subject to a data availability policy of some kind: 59% did not adhere to the policy, most commonly by not publicly depositing the data (73%). Overall, only 47 papers (9%) deposited the full primary raw data online.

WHAT ARE THE SOLUTIONS?

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH
Research transparency!
1. Pre-Registration
2. Pre-Analysis Plans (PAPs)
3. Results-neutral publishing
4. Build a strong reproducible workflow
5. Disclosure, open data and materials (code, surveys, readme files, etc.)
6. Go for dynamic documents

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : REGISTRATION (1)
1. Study Pre-registration
• Creating a public record of a study and basic information about it: (i) title, country, status; (ii) abstract; (iii) trial and intervention start and end dates; (iv) list of main outcomes; (v) research design;
• All IPA and J-PAL studies are strongly encouraged to pre-register on the AEA registry (mostly for RCTs) (www.socialscienceregistry.org);
• If not an RCT, use the Open Science Framework (osf.io);
• Benefits: better knowledge of which studies were run and which failed, which improves meta-analysis, decreases publication bias and reduces data mining.
• Debate/concern: does preregistration limit creativity?

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : REGISTRATION (2) • Other registries

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : PAPs (1)
2. Pre-Analysis Plans (PAPs)
• Pre-analysis plans are more detailed write-ups of the study hypotheses, outcomes, and planned analysis (an extensive listing of the econometric specifications to be estimated).
– The goal is to combat data mining by tying the hands of the researcher.
• Structure of a pre-analysis plan: take a look at one of the first pre-analysis plans in economics, by Casey, Glennerster and Miguel (Reshaping Institutions: Evidence on Aid Impacts Using a Preanalysis Plan, Sierra Leone).

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : PAPs (2)
WASH Benefits Kenya: Pre-Specified Analysis Plan
1. Introduction, with the main hypotheses to be tested:
(i) To determine if water, sanitation, and hygiene interventions aid early child development;
(ii) To determine if the combination of water, sanitation, and hygiene interventions is more beneficial (or cost-effective) for early child development than a single intervention alone;
(iii) To determine if the combination of water, sanitation, and hygiene interventions plus nutrient supplements is more beneficial (or cost-effective) than any of the interventions or supplements alone.
2. Analysis methods: (i) unadjusted non-parametric analysis; (ii) basic regression models; (iii) intention to treat (ITT) and treatment on the treated (TOT), take-up.
3. List of variables and regressions:
(i) Primary health outcomes: HAZ, ASQ
(ii) Secondary health outcomes: proportion of children stunted (HAZ < -2); lactulose/mannitol; antibody titers
(iii) Tertiary health outcomes:
• Weight-for-age Z-scores (child's weight)
• Proportion of children underweight (WAZ < -2)
• Proportion of children wasted (WHZ < -2)
• Head circumference Z-scores
• Mid-upper arm circumference Z-scores
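The ITT/TOT distinction in point 2 can be made concrete with the Wald estimator: the ITT is the difference in mean outcomes between assigned arms, and dividing it by the difference in take-up rescales it to those who actually received the intervention. Under the usual instrumental-variable assumptions, and with one-sided non-compliance (control households cannot take up), this estimates the average effect on those treated. A minimal unadjusted Python sketch on hypothetical data; an actual PAP would specify regressions with controls and appropriate standard errors.

```python
from statistics import mean


def itt_and_tot(records):
    """records: (assigned, took_up, outcome) tuples, assigned/took_up coded 0/1.

    Returns (ITT, take-up difference, TOT), with TOT computed by the Wald
    estimator: ITT divided by the difference in take-up between arms.
    """
    def arm_mean(assigned, column):
        return mean(r[column] for r in records if r[0] == assigned)

    itt = arm_mean(1, 2) - arm_mean(0, 2)          # effect of being offered treatment
    takeup_diff = arm_mean(1, 1) - arm_mean(0, 1)  # how much assignment raised take-up
    return itt, takeup_diff, itt / takeup_diff


# Hypothetical data: HAZ outcomes; one assigned household did not take up
records = [
    (1, 1, -1.0), (1, 1, -0.8), (1, 1, -1.2), (1, 0, -1.6),
    (0, 0, -1.5), (0, 0, -1.7), (0, 0, -1.4), (0, 0, -1.6),
]
itt, takeup_diff, tot = itt_and_tot(records)
print(f"ITT {itt:.3f}, take-up difference {takeup_diff:.2f}, TOT {tot:.3f}")
```

With 75% take-up, the TOT is the ITT scaled up by 1/0.75, which is why pre-specifying both (and how take-up is measured) matters.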

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : PAPs (3)
WASH Benefits Kenya: Pre-Specified Analysis Plan
4. Planned control variables:
• Location (Kenyan administrative region used for stratification)
• Field officer
• Time between intervention delivery and survey (due to the nature of the roll-out, there may be some variation in this, but not much)
• Month of survey
• Age of child at time of intervention, by quintiles
• Gender of child
• Age of mother, by quintiles
• Mother’s education level (binary: had any secondary schooling)
• Mother’s Swahili literacy
• Mother’s English literacy
• Number of households within the study compound
• Total number of children under 36 months in the study compound
• Tin roof ownership
5. Planned interactions and distributional analysis
In addition to determining the average treatment effect for our entire sample, we are interested in examining the heterogeneity of the treatment effects. We intend to do this primarily through subgroup analysis. As the study is not deliberately powered to detect interaction effects, we do not expect significant effects, but will investigate them nonetheless. If certain subgroup effects turn out to be significant, we may investigate distributional effects using quantile regressions (see Angrist and Pischke, Mostly Harmless Econometrics).
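The subgroup analysis in point 5 amounts to estimating the treatment effect separately within each value of a pre-specified variable. A minimal unadjusted Python sketch (difference in means per subgroup, no controls), using hypothetical data and a hypothetical `male` variable coded 0/1 for the child's gender:

```python
from collections import defaultdict
from statistics import mean


def subgroup_effects(records, subgroup):
    """Unadjusted treatment effect (treated mean minus control mean) within each
    value of the pre-specified `subgroup` variable.

    records: dicts with keys 'treat' (0/1), 'y' (outcome) and the subgroup variable.
    A subgroup with an empty arm raises statistics.StatisticsError.
    """
    outcomes = defaultdict(lambda: {0: [], 1: []})
    for r in records:
        outcomes[r[subgroup]][r["treat"]].append(r["y"])
    return {g: mean(arms[1]) - mean(arms[0]) for g, arms in outcomes.items()}


# Hypothetical data; 'male' is coded 0 = female, 1 = male
records = [
    {"treat": 1, "male": 1, "y": -0.9}, {"treat": 1, "male": 1, "y": -1.1},
    {"treat": 0, "male": 1, "y": -1.4}, {"treat": 0, "male": 1, "y": -1.6},
    {"treat": 1, "male": 0, "y": -1.2}, {"treat": 1, "male": 0, "y": -1.0},
    {"treat": 0, "male": 0, "y": -1.3}, {"treat": 0, "male": 0, "y": -1.1},
]
effects = subgroup_effects(records, "male")
for group, effect in sorted(effects.items()):
    print(f"male = {group}: effect {effect:.2f}")
```

As the plan itself warns, a study not powered for interactions can easily show differences like these by chance; listing the subgroups in advance is what keeps such comparisons from becoming another route to p-hacking.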

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : Results Sharing
Share all results (including null results!)
• Publication bias leads to a skew towards positive (exciting) results.
– Problem: how do we disseminate null results if journals tend to accept positive results at greater rates?
• One solution: reporting all results in a public registry.

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : Data Sharing (1)
• Why? Making data, code, and other materials publicly available:
– facilitates replication of published results
– allows re-use of data for further studies and meta-analysis
– promotes better quality data/code/metadata

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : Data Sharing (2)
What to share?
1. Datasets
• Recommended: the cleaned study dataset (with Personally Identifiable Information removed!)
– Minimally: the “publication” dataset, i.e., the code/data underlying the publication.
– Ideal: start-to-finish reproducibility.
– Readme files explaining the relation between data and code, as well as any further data documentation.
2. Code
3. Surveys
4. Study-level metadata
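Removing Personally Identifiable Information is best done by a script rather than by hand, so the step is documented and repeatable. A minimal Python sketch that copies a CSV while dropping listed columns; the column names in `PII_FIELDS` are hypothetical. Dropping direct identifiers is only the first step: combinations of indirect identifiers (village, exact age, GPS) can still re-identify respondents and may need coarsening too.

```python
import csv
import io

# Hypothetical direct identifiers; replace with the fields in your own survey.
PII_FIELDS = {"name", "phone", "gps_lat", "gps_lon"}


def strip_pii(in_file, out_file, pii_fields=PII_FIELDS):
    """Copy a CSV, dropping every column listed in `pii_fields`.

    Returns the list of columns that were kept. When using real files, open
    them with newline="" as the csv module documentation recommends.
    """
    reader = csv.DictReader(in_file)
    kept = [f for f in reader.fieldnames if f not in pii_fields]
    # extrasaction="ignore" silently skips the PII keys still present in each row
    writer = csv.DictWriter(out_file, fieldnames=kept, extrasaction="ignore")
    writer.writeheader()
    for row in reader:
        writer.writerow(row)
    return kept


raw = io.StringIO(
    "hhid,name,phone,treat,haz\n"
    "101,Respondent A,0700000000,1,-1.2\n"
    "102,Respondent B,0711111111,0,-1.8\n"
)
clean = io.StringIO()
print(strip_pii(raw, clean))  # ['hhid', 'treat', 'haz']
print(clean.getvalue())
```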

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : Reproducible Workflow (1)
How to build a reproducible workflow?

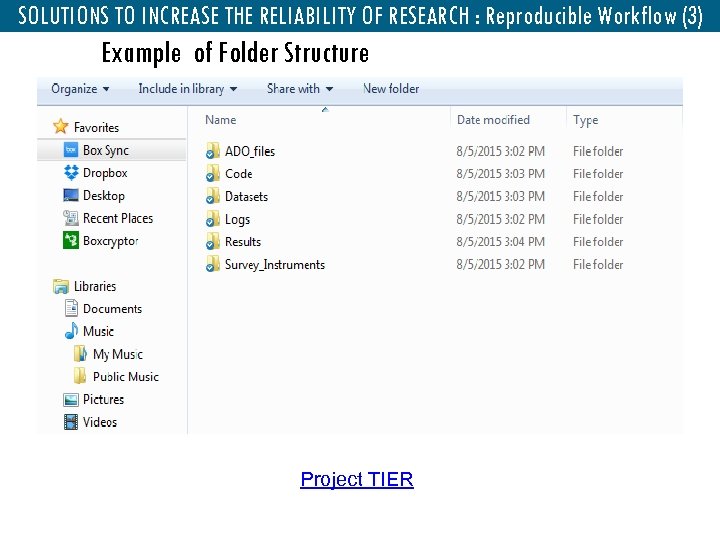

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : Reproducible Workflow (3) Example of folder structure (Project TIER)

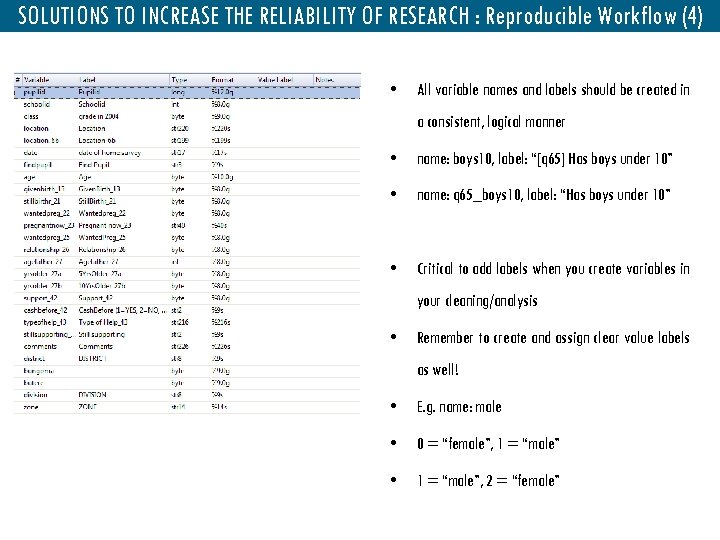

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : Reproducible Workflow (4)
• All variable names and labels should be created in a consistent, logical manner, e.g.:
– name: boys10, label: “[q65] Has boys under 10”
– name: q65_boys10, label: “Has boys under 10”
• It is critical to add labels when you create variables during cleaning/analysis.
• Remember to create and assign clear value labels as well! E.g., for a variable named male:
– 0 = “female”, 1 = “male”
– 1 = “male”, 2 = “female”
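In Stata, labels are attached with `label variable`, `label define` and `label values`. Whatever the software, it helps to check automatically that nothing slipped through unlabeled. A minimal Python sketch of such a check against a hypothetical codebook (variable name, variable label, and value labels, or `None` for variables such as counts that need none):

```python
# Hypothetical codebook: variable name -> (variable label, value labels or None).
CODEBOOK = {
    "q65_boys10": ("Has boys under 10", {0: "no", 1: "yes"}),
    "male": ("Child is male", {0: "female", 1: "male"}),
    "hh_size": ("Number of household members", None),  # count: no value labels needed
}


def check_labels(codebook, data):
    """List variables without a variable label and coded values without a value label."""
    problems = []
    for var, values in data.items():
        if var not in codebook:
            problems.append(f"{var}: no variable label")
            continue
        _, value_labels = codebook[var]
        if value_labels is None:
            continue
        for v in sorted(set(values) - set(value_labels)):
            problems.append(f"{var}: value {v} has no value label")
    return problems


# Hypothetical data, one list of values per variable
data = {
    "q65_boys10": [0, 1, 1],
    "male": [0, 1, 2],       # 2 is an unexplained code
    "hh_size": [4, 6],
    "q66_girls10": [1, 0],   # created without a label
}
for problem in check_labels(CODEBOOK, data):
    print(problem)
```

Running a check like this at the end of the cleaning stage catches stray codes (such as a `2` in a 0/1 variable) before they silently enter the analysis.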

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : Reproducible Workflow (5)
• Create a master do-file: a file that runs ALL the code in your project.
– You might want to have master do-files for specific stages of the project as well, e.g., master_clean, master_analysis, etc.
• In addition, a master do-file can be useful for:
– setting any globals that are used across do-files
– installing user-written commands

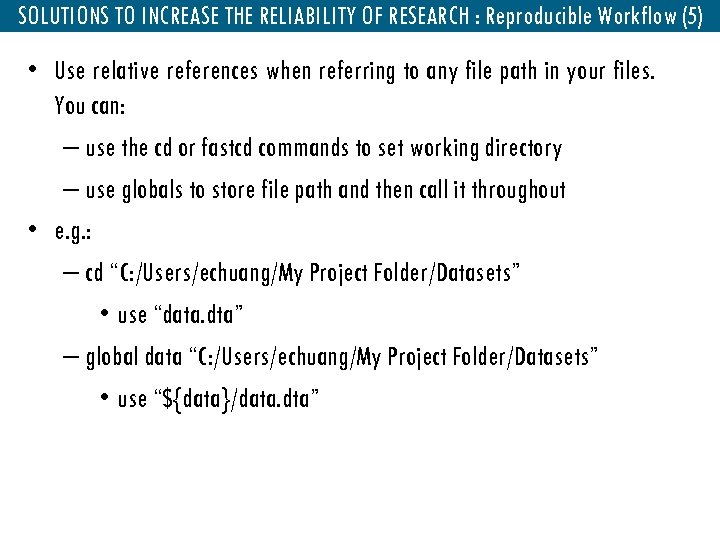
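In Stata, a master do-file is essentially a sequence of `do` commands, one per stage. The same idea in a minimal Python sketch: run each stage script in a fixed order from the project folder and stop at the first failure. The script names in `STAGES` are hypothetical.

```python
import subprocess
import sys
from pathlib import Path

# Hypothetical stage scripts, run in this fixed order every time.
STAGES = ["01_clean.py", "02_construct.py", "03_analysis.py"]


def run_master(project_dir, stages=STAGES):
    """Run every stage script, in order, from the project directory.

    check=True raises subprocess.CalledProcessError as soon as a stage fails,
    so later stages never run on stale or half-built inputs.
    """
    project_dir = Path(project_dir)
    for script in stages:
        print(f"running {script}")
        subprocess.run([sys.executable, script], cwd=project_dir, check=True)
```

Usage: `run_master("path/to/project")`. Because a single entry point rebuilds everything from raw data to results, anyone reproducing the project needs exactly one command.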

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : Reproducible Workflow (5)
• Use relative references whenever you refer to a file path in your files, so that the absolute path appears only once. You can:
– use the cd or fastcd commands to set the working directory
– use globals to store the file path and then call them throughout
• E.g.:
– cd "C:/Users/echuang/My Project Folder/Datasets"
  use "data.dta"
– global data "C:/Users/echuang/My Project Folder/Datasets"
  use "${data}/data.dta"
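The same principle in a minimal Python sketch: store the machine-specific root in one place (the role the Stata global plays above) and build every other path relative to it. The `Output` folder is a hypothetical addition; the root and `Datasets/data.dta` come from the slide's example.

```python
from pathlib import Path


def project_paths(root):
    """Derive every project path from a single root folder, much like storing
    the root in a Stata global: only `root` is specific to one computer."""
    root = Path(root)
    return {
        "data": root / "Datasets",
        "output": root / "Output",  # hypothetical folder name
    }


# The only machine-specific line: change it when the project moves.
paths = project_paths("C:/Users/echuang/My Project Folder")
data_file = paths["data"] / "data.dta"
print(data_file.as_posix())  # C:/Users/echuang/My Project Folder/Datasets/data.dta
```

A co-author on another computer edits the single root line and every path in the project follows.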

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : Reproducible Workflow (6)

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : Reproducible Workflow (7)

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : Dynamic documents
DYNAMIC DOCUMENTS WITH R AND STATA

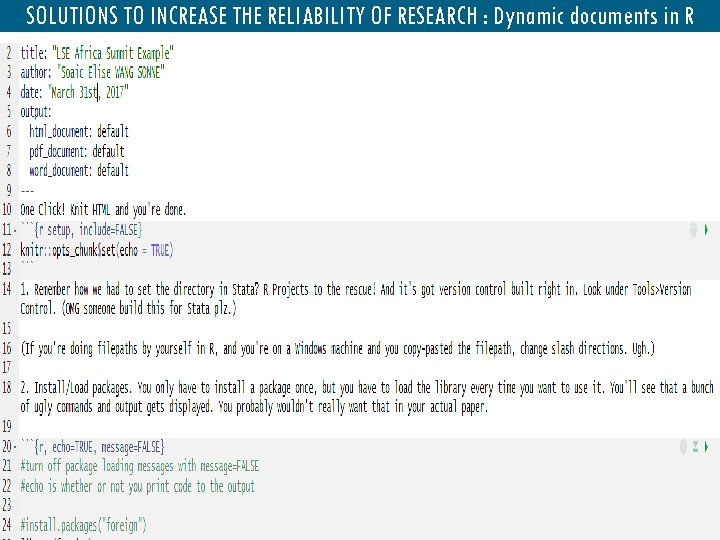

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : Dynamic documents in R

SOLUTIONS TO INCREASE THE RELIABILITY OF RESEARCH : Dynamic documents in Stata
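The core idea of a dynamic document is that the numbers in the text are computed when the document is built, never typed by hand. In R this is what R Markdown does by interleaving code chunks with prose, and similar tools exist for Stata. The toy Python sketch below only illustrates the principle on hypothetical data; it is not a substitute for those tools.

```python
from statistics import mean


def render_summary(treated, control):
    """Write the results sentence from the data every time the document is
    built, so the numbers in the text cannot drift out of sync with the analysis."""
    diff = mean(treated) - mean(control)
    return (
        f"The treated group had a mean outcome of {mean(treated):.2f}, "
        f"compared with {mean(control):.2f} in the control group "
        f"(difference: {diff:.2f})."
    )


# Hypothetical data; rerunning after a data correction updates the text automatically
print(render_summary([-1.0, -0.8, -1.2], [-1.5, -1.7, -1.4]))
```

If a data-entry error is later fixed, rebuilding the document updates every sentence that depends on it, which removes a common source of mismatch between the tables and the text.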

WAY FORWARD (1)
Education
• BITSS Summer Institute with 35-40 participants annually from across the social sciences
– RT2 Summer Institute 2017 (call for applications open; closes TODAY!) (fully funded, in Berkeley!)
• Semester-long course on transparency available online
• Over 150 participants in international workshops

WAY FORWARD (2)
Growing Ecosystem
www.bitss.org @ucbitss

THANK YOU! QUESTIONS/SUGGESTIONS? THANK YOU FOR YOUR KIND ATTENTION
