- Number of slides: 42

Transient Fault Detection and Recovery via Simultaneous Multithreading
Nevroz ŞEN
26/04/2007

AGENDA
- Introduction & Motivation
- SMT, SRT & SRTR
- Fault Detection via SMT (SRT)
- Fault Recovery via SMT (SRTR)
- Conclusion

INTRODUCTION
- Transient faults:
  - Faults that persist for a “short” duration
  - Caused by cosmic rays (e.g., neutrons)
  - Charge and/or discharge internal nodes of logic or SRAM cells
  - High-frequency crosstalk
- Solution:
  - No practical way to absorb cosmic rays
  - Estimated fault rate: 1 fault per 1000 computers per year
- The future is worse:
  - Smaller feature sizes, reduced voltage, higher transistor counts, reduced noise margins

INTRODUCTION
- Fault-tolerant systems use redundancy to improve reliability:
  - Time redundancy: separate executions
  - Space redundancy: separate physical copies of resources
    - DMR/TMR
  - Data redundancy
    - ECC
    - Parity
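
The three kinds of redundancy listed above can be illustrated with a minimal Python sketch. The function names (`dmr_check`, `tmr_vote`, `parity_bit`, `time_redundant`) are hypothetical and chosen only for this illustration; real systems implement these checks in hardware.

```python
from collections import Counter


def dmr_check(a, b):
    """Space redundancy (DMR): two copies can detect a mismatch but not correct it."""
    return a == b


def tmr_vote(a, b, c):
    """Space redundancy (TMR): return the majority value, or fail if all three differ."""
    value, count = Counter([a, b, c]).most_common(1)[0]
    if count < 2:
        raise ValueError("no majority among the three copies")
    return value


def parity_bit(word):
    """Data redundancy: even-parity bit, 1 if the number of set bits is odd."""
    return bin(word).count("1") % 2


def time_redundant(fn, x):
    """Time redundancy: run the same computation twice and compare the results."""
    first, second = fn(x), fn(x)
    if first != second:
        raise ValueError("results differ between executions")
    return first
```

DMR only detects a disagreement, while TMR can also mask a single faulty copy by voting.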

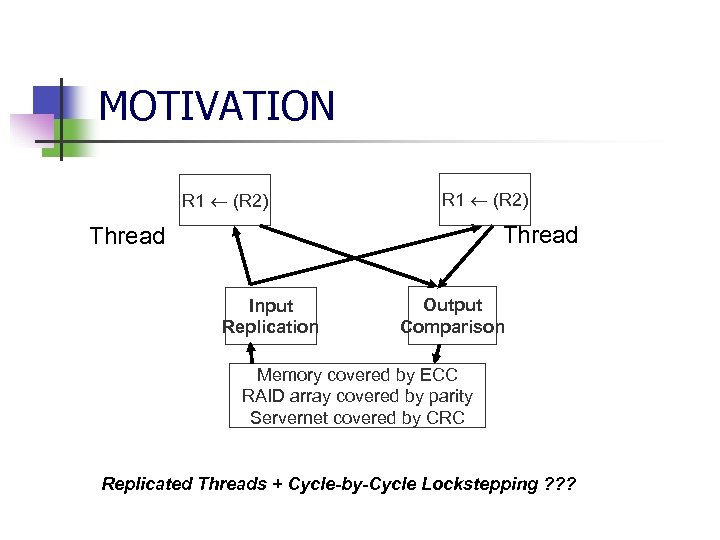

MOTIVATION
- Simultaneous multithreading (SMT) improves processor performance by allowing multiple independent threads to execute simultaneously (in the same cycle) on different functional units
- Use the replication provided by the different threads to run two copies of the same program so that errors can be detected

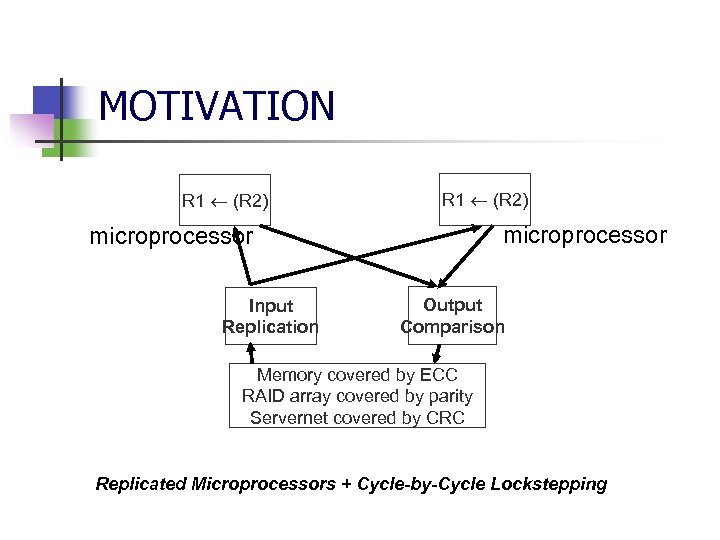

MOTIVATION
Replicated Microprocessors + Cycle-by-Cycle Lockstepping
- Diagram labels: microprocessor executing R1 (R2); Input Replication; Output Comparison
- Memory covered by ECC
- RAID array covered by parity
- Servernet covered by CRC

MOTIVATION
Replicated Threads + Cycle-by-Cycle Lockstepping???
- Diagram labels: thread executing R1 (R2); Input Replication; Output Comparison
- Memory covered by ECC
- RAID array covered by parity
- Servernet covered by CRC

MOTIVATION
- Less hardware compared to replicated microprocessors
  - SMT needs ~5% more hardware than a uniprocessor
  - SRT adds very little hardware overhead to an existing SMT
- Better performance than complete replication
  - Better use of resources
- Lower cost
  - Avoids complete replication
  - Market volume of SMT & SRT

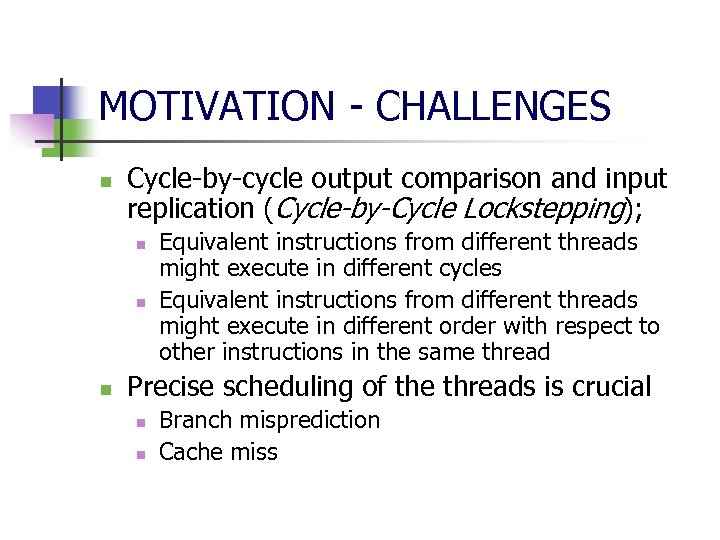

MOTIVATION - CHALLENGES
- Cycle-by-cycle output comparison and input replication (cycle-by-cycle lockstepping):
  - Equivalent instructions from different threads might execute in different cycles
  - Equivalent instructions from different threads might execute in a different order with respect to other instructions in the same thread
- Precise scheduling of the threads is crucial
  - Branch misprediction
  - Cache miss

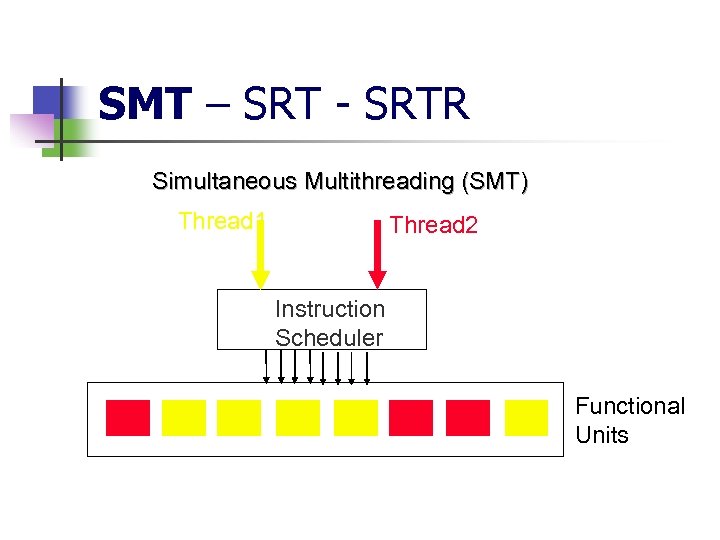

SMT – SRT - SRTR
Simultaneous Multithreading (SMT)
- Diagram labels: Thread 1, Thread 2, Instruction Scheduler, Functional Units

SMT – SRT - SRTR
- SRT: Simultaneous & Redundantly Threaded Processor
  - SRT = SMT + Fault Detection
- SRTR: Simultaneous & Redundantly Threaded Processor with Recovery
  - SRTR = SRT + Fault Recovery

Fault Detection via SMT - SRT
- Sphere of Replication (SoR)
- Output comparison
- Input replication
- Performance optimizations for SRT
- Simulation results

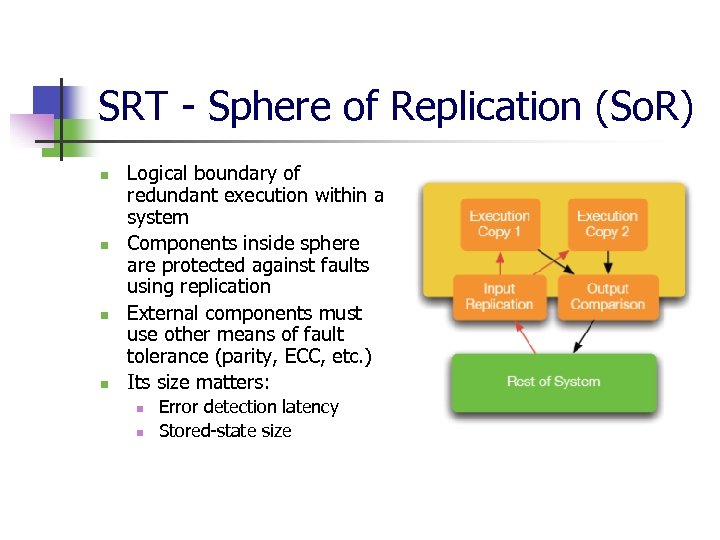

SRT - Sphere of Replication (SoR)
- Logical boundary of redundant execution within a system
- Components inside the sphere are protected against faults using replication
- External components must use other means of fault tolerance (parity, ECC, etc.)
- Its size matters:
  - Error detection latency
  - Stored-state size

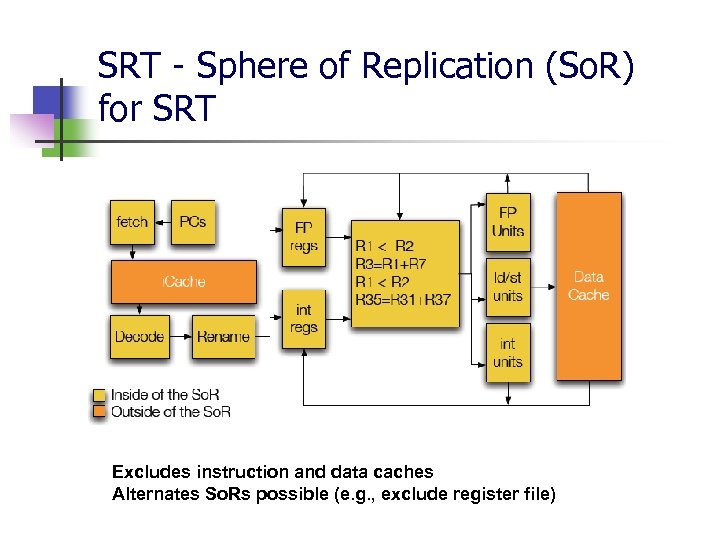

SRT - Sphere of Replication (SoR)
- SoR for SRT
- Excludes instruction and data caches
- Alternate SoRs are possible (e.g., exclude the register file)

OUTPUT COMPARISON
- Compare & validate output before sending it outside the SoR
  - Catch faults before they propagate to the rest of the system
- No need to compare every instruction: an incorrect value caused by a fault propagates through computations and is eventually consumed by a store, so checking only stores suffices.
- Check:
  1. Address and data for stores from redundant threads. Both comparison and validation happen at commit time
  2. Address for uncached loads from redundant threads
  3. Address for cached loads from redundant threads: not required
- Other output comparisons depend on the boundary of the SoR

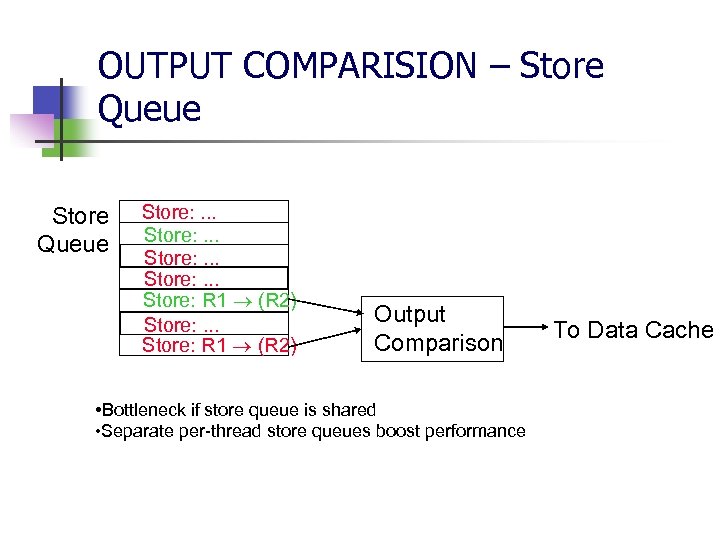

OUTPUT COMPARISON – Store Queue
- Diagram labels: store queue entries (Store: ..., Store: R1 (R2)); Output Comparison; To Data Cache
- Bottleneck if the store queue is shared
- Separate per-thread store queues boost performance
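
Store checking with per-thread store queues can be sketched roughly as follows. This is a simplified software model, not the hardware design: the class `StoreComparator`, the exception `FaultDetected`, and the `released` list (standing in for the path to the data cache) are hypothetical names introduced for illustration, and stores are assumed to commit in program order in both threads.

```python
from collections import deque


class FaultDetected(Exception):
    """Raised when the redundant threads disagree on a store."""


class StoreComparator:
    def __init__(self):
        # One store queue per thread, as the slide suggests for performance.
        self.queues = {"leading": deque(), "trailing": deque()}
        # Stores that passed comparison and may leave the sphere of replication.
        self.released = []

    def commit_store(self, thread, addr, data):
        """Record a committed store, then compare any matched pairs."""
        self.queues[thread].append((addr, data))
        lead, trail = self.queues["leading"], self.queues["trailing"]
        while lead and trail:
            lead_store, trail_store = lead.popleft(), trail.popleft()
            if lead_store != trail_store:  # address or data differs
                raise FaultDetected(f"{lead_store} != {trail_store}")
            self.released.append(lead_store)
```

A store is held back until its counterpart from the other thread arrives, so a corrupted value never reaches the data cache in this model.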

INPUT REPLICATION
- Replicate & deliver the same input (coming from outside the SoR) to the redundant copies. To do this:
- Instructions: assume no self-modification; no check
- Cached load data:
  - Active Load Address Buffer
  - Load Value Queue
- Uncached load data:
  - Synchronize when comparing addresses that leave the SoR
  - When data returns, replicate the value for the two threads
- External interrupts:
  - Stall the leading thread and deliver the interrupt synchronously
  - Record the interrupt delivery point and deliver later

INPUT REPLICATION – Active Load Address Buffer (ALAB)
- Delays a cache block’s replacement or invalidation until after the retirement of the trailing load
- A counter tracks the trailing thread’s outstanding loads
- When a cache block is about to be replaced:
  - The ALAB is searched for an entry matching the block’s address
  - If counter != 0:
    - Do not replace or invalidate until the trailing thread is done
    - Set the pending-invalidate bit
  - Else replace or invalidate
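
The replacement rule above can be sketched as a small Python model. The class and method names are hypothetical, and the sketch assumes that the counter is incremented when the leading thread loads from a block and decremented when the matching trailing load retires; the slide only states that the counter tracks the trailing thread's outstanding loads.

```python
class ALAB:
    def __init__(self):
        # block address -> [outstanding trailing loads, pending-invalidate bit]
        self.entries = {}

    def leading_load(self, block):
        """Assumed increment point: the leading thread loads from this block."""
        entry = self.entries.setdefault(block, [0, False])
        entry[0] += 1

    def request_replace(self, block):
        """Return True if the cache may replace or invalidate the block now."""
        entry = self.entries.get(block)
        if entry is None or entry[0] == 0:
            self.entries.pop(block, None)
            return True
        entry[1] = True  # remember the deferred request
        return False

    def trailing_load_retired(self, block):
        """Return True if a deferred replacement may now proceed."""
        entry = self.entries[block]
        entry[0] -= 1
        if entry[0] == 0 and entry[1]:
            del self.entries[block]
            return True
        return False
```

Deferring the replacement keeps the block unchanged until the trailing thread has read the same value the leading thread saw.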

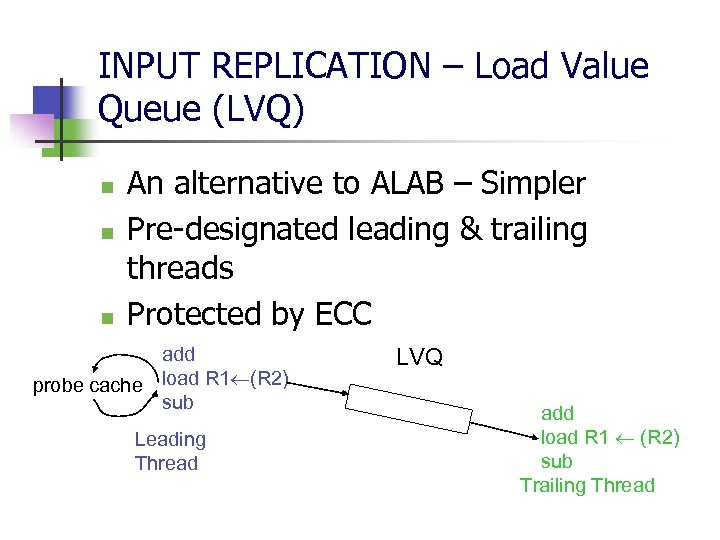

INPUT REPLICATION – Load Value Queue (LVQ)
- An alternative to the ALAB; simpler
- Pre-designated leading & trailing threads
- Protected by ECC
- Diagram: the leading thread (add; load R1 (R2); sub) probes the cache and feeds the LVQ; the trailing thread (add; load R1 (R2); sub) reads from the LVQ

INPUT REPLICATION – Load Value Queue (LVQ)
- Advantages over the ALAB:
  - Reduces the pressure on data cache ports
  - Accelerates detection of faulty addresses
  - Simple design
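
A minimal sketch of the LVQ idea, under stated assumptions: loads are processed in program order in both threads, the cache is modeled as a plain dictionary, and the names `LoadValueQueue` and `FaultDetected` are hypothetical. Only the leading thread probes the cache; the trailing thread takes its value from the queue and checks the address.

```python
from collections import deque


class FaultDetected(Exception):
    """Raised when the two threads compute different load addresses."""


class LoadValueQueue:
    def __init__(self, capacity=64):
        self.capacity = capacity
        self.queue = deque()

    def leading_load(self, addr, cache):
        """Leading thread: probe the cache and record (address, value)."""
        if len(self.queue) >= self.capacity:
            return None  # queue full: the leading thread would stall
        value = cache[addr]
        self.queue.append((addr, value))
        return value

    def trailing_load(self, addr):
        """Trailing thread: read the value from the LVQ, never from the cache."""
        lead_addr, value = self.queue.popleft()
        if lead_addr != addr:
            raise FaultDetected(f"load address {addr:#x} != {lead_addr:#x}")
        return value
```

Because the trailing thread never touches the cache, a later write to the same address cannot make the two threads see different inputs, and an address mismatch is caught at the load itself.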

Performance Optimizations for SRT
- Idea: use one thread to improve cache and branch prediction behavior for the other thread. Two techniques:
  - Slack Fetch
    - Maintains a constant slack of instructions between the threads
    - Prevents the trailing thread from seeing mispredictions and cache misses
  - Branch Outcome Queue (BOQ)
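
Slack fetch can be pictured with a toy fetch policy. This is a deliberately simplified sketch, assuming one instruction is fetched per cycle and that the trailing thread is chosen only when the leading thread is at least `slack` instructions ahead; the function name `simulate_slack_fetch` is hypothetical and a real fetch policy is more involved.

```python
def simulate_slack_fetch(cycles, slack):
    """Fetch one instruction per cycle, keeping the leading thread ahead by `slack`."""
    lead = trail = 0
    gaps = []
    for _ in range(cycles):
        if lead - trail >= slack:
            trail += 1  # leading thread is far enough ahead: fetch for trailing
        else:
            lead += 1   # otherwise give the fetch slot to the leading thread
        gaps.append(lead - trail)
    return lead, trail, gaps
```

After a warm-up of `slack` cycles the gap stays within one instruction of the target, so the trailing thread always runs behind instructions the leading thread has already fetched.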

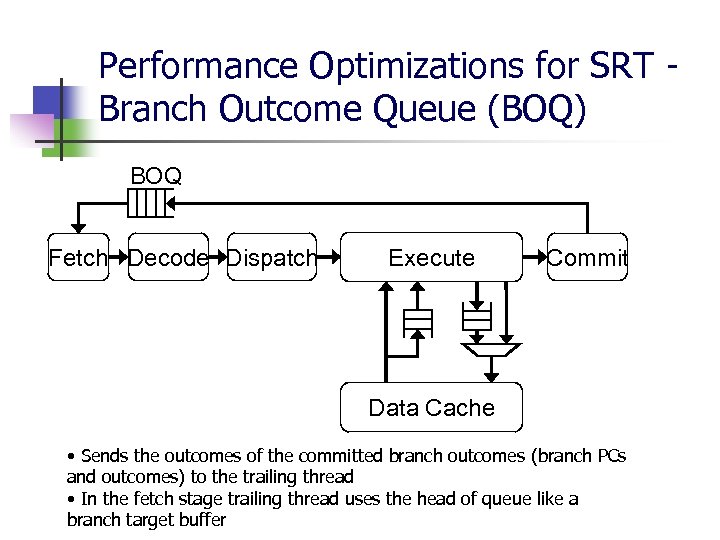

Performance Optimizations for SRT
Branch Outcome Queue (BOQ)
- Diagram labels: BOQ; Fetch, Decode, Dispatch, Execute, Commit; Data Cache
- Sends the leading thread’s committed branch outcomes (branch PCs and outcomes) to the trailing thread
- In the fetch stage, the trailing thread uses the head of the queue like a branch target buffer
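
The BOQ behaves like a FIFO between the leading thread's commit stage and the trailing thread's fetch stage. The sketch below is a software model under assumptions: the names `BranchOutcomeQueue` and `ThreadsDiverged` are hypothetical, and treating a PC mismatch at the head as a detected divergence is a simplification made for this example.

```python
from collections import deque


class ThreadsDiverged(Exception):
    """Raised when the trailing branch PC does not match the queue head."""


class BranchOutcomeQueue:
    def __init__(self, capacity=128):
        self.capacity = capacity
        self.queue = deque()

    def push_committed(self, pc, target):
        """Leading thread, commit stage: record a resolved branch."""
        if len(self.queue) >= self.capacity:
            return False  # queue full: the leading thread would stall
        self.queue.append((pc, target))
        return True

    def predict(self, pc):
        """Trailing thread, fetch stage: use the head entry as the prediction."""
        if not self.queue:
            return None  # no outcome available yet: trailing fetch waits
        head_pc, target = self.queue[0]
        if head_pc != pc:
            raise ThreadsDiverged(f"branch at {pc:#x}, head is {head_pc:#x}")
        self.queue.popleft()
        return target
```

Since the entries are committed outcomes, the trailing thread does not mispredict in the fault-free case.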

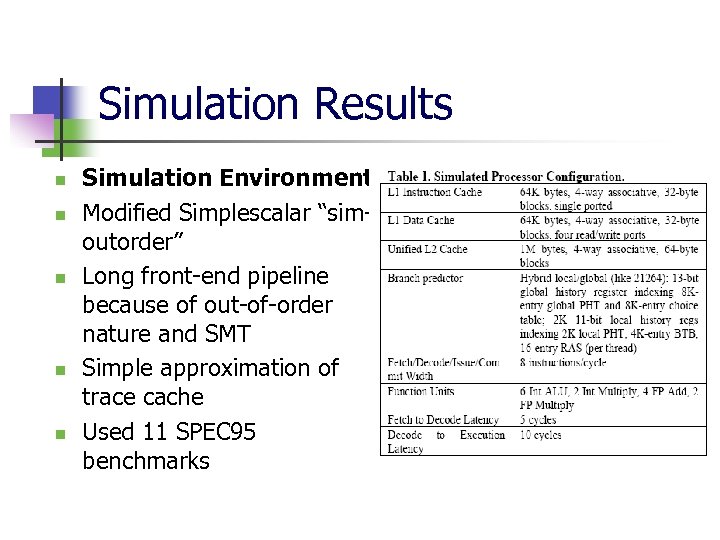

Simulation Results
- Simulation environment:
  - Modified SimpleScalar “sim-outorder”
  - Long front-end pipeline because of out-of-order nature and SMT
  - Simple approximation of a trace cache
  - Used 11 SPEC95 benchmarks

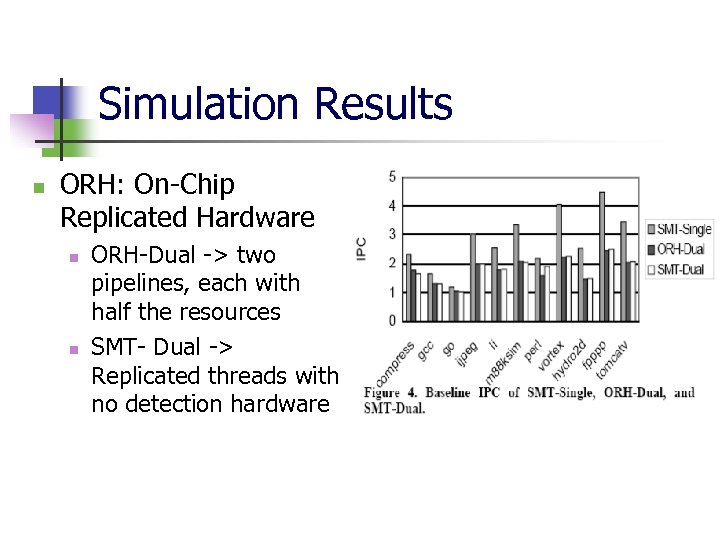

Simulation Results
- ORH: On-Chip Replicated Hardware
  - ORH-Dual -> two pipelines, each with half the resources
  - SMT-Dual -> replicated threads with no detection hardware

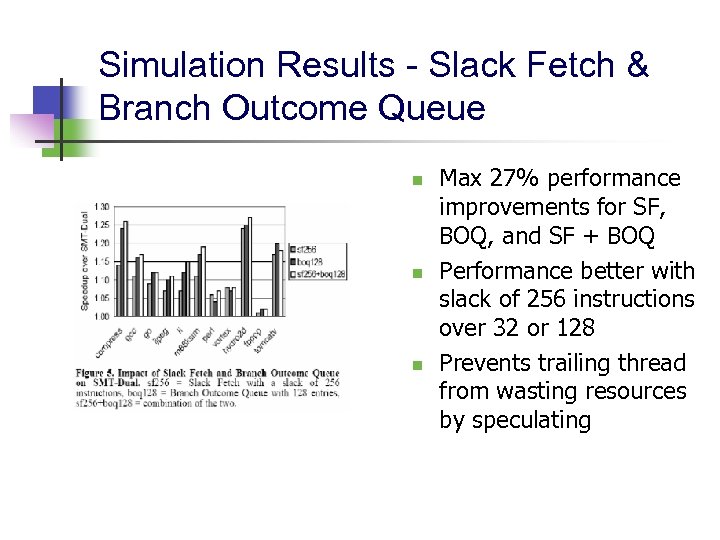

Simulation Results - Slack Fetch & Branch Outcome Queue
- Max 27% performance improvement for SF, BOQ, and SF + BOQ
- Performance is better with a slack of 256 instructions than with 32 or 128
- Prevents the trailing thread from wasting resources by speculating

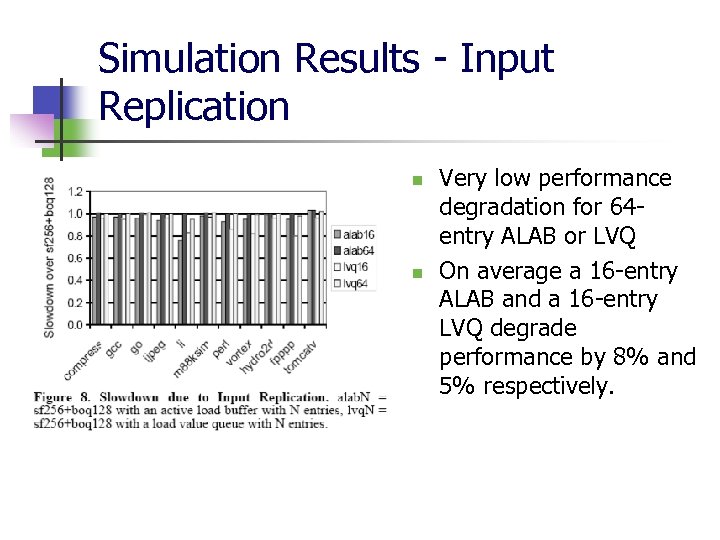

Simulation Results - Input Replication
- Very low performance degradation for a 64-entry ALAB or LVQ
- On average, a 16-entry ALAB and a 16-entry LVQ degrade performance by 8% and 5%, respectively.

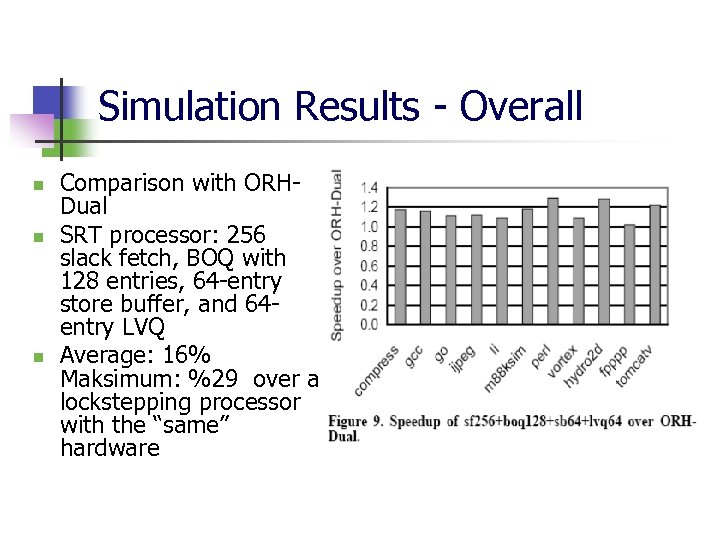

Simulation Results - Overall
- Comparison with ORH-Dual
- SRT processor: 256 slack fetch, BOQ with 128 entries, 64-entry store buffer, and 64-entry LVQ
- Average: 16%, maximum: 29% over a lockstepping processor with the “same” hardware

Fault Recovery via SMT (SRTR)
- What is wrong with SRT: a leading non-store instruction may commit before the check for the fault occurs
  - Relies on the trailing thread to trigger the detection
  - However, an SRT processor works well in a fail-fast architecture
  - A faulty instruction cannot be undone once the instruction commits.

Fault Recovery via SMT (SRTR) - Motivation
- In SRT, a leading instruction may commit before the check for faults occurs, relying on the trailing thread to trigger detection.
- In contrast, SRTR must not allow any leading instruction to commit before checking occurs.
- SRTR uses the time between the completion and the commit of a leading instruction, and checks the results as soon as the trailing instruction completes
- In SPEC95, complete to commit takes about 29 cycles
- This short slack has some implications:
  - The leading thread provides branch predictions
  - The StB, LVQ and BOQ need to handle mispredictions

Fault Recovery via SMT (SRTR) - Motivation
- The leading thread provides the trailing thread with branch predictions instead of outcomes (as in SRT).
- A register value queue (RVQ) stores register values and other information necessary for checking instructions, avoiding bandwidth pressure on the register file.
- Dependence-based checking elision (DBCE) is developed to reduce the number of checks
- Recovery via the traditional rollback ability of modern pipelines

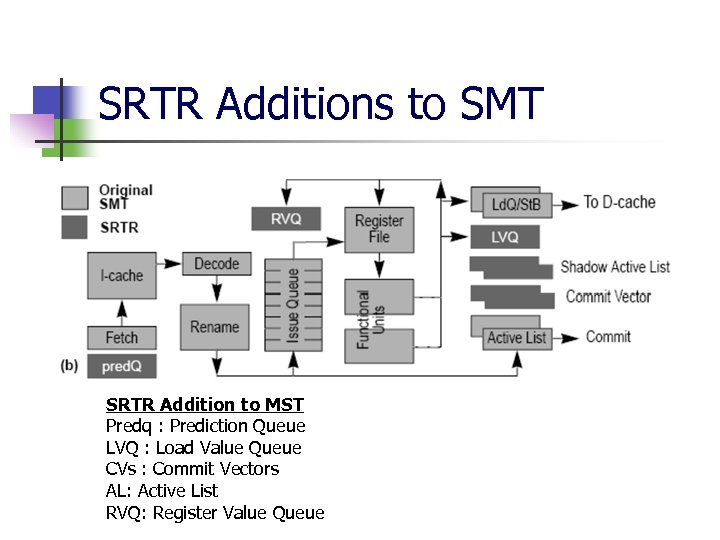

SRTR Additions to SMT
- predQ: Prediction Queue
- LVQ: Load Value Queue
- CVs: Commit Vectors
- AL: Active List
- RVQ: Register Value Queue

SRTR – AL & LVQ
- Leading and trailing instructions occupy the same positions in their ALs (private to each thread)
  - They may enter their ALs and become ready to commit at different times
- The LVQ has to be modified to allow speculative loads
  - The Shadow Active List (SAL) holds pointers to LVQ entries
  - A trailing load might issue before the leading load
  - Branches place the LVQ tail pointer in the SAL
  - The LVQ’s tail pointer has to be rolled back on a misprediction
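
Rolling back the LVQ tail on a misprediction can be sketched as below. This is an illustrative model only: `SpeculativeLVQ` is a hypothetical name, the queue is a Python list, and the value a branch would save in the SAL is represented by the integer returned from the `tail` property.

```python
class SpeculativeLVQ:
    def __init__(self):
        self.entries = []

    @property
    def tail(self):
        """Current tail pointer; a branch would save this value in the SAL."""
        return len(self.entries)

    def push(self, addr, value):
        """Allocate an entry for a (possibly speculative) load; return its index."""
        self.entries.append((addr, value))
        return self.tail - 1

    def rollback(self, saved_tail):
        """On a misprediction, discard entries allocated after the branch."""
        del self.entries[saved_tail:]
```

For example, if a branch saves `tail == 1` and two wrong-path loads follow, `rollback(1)` leaves only the entry that preceded the branch.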

SRTR – PREDQ
- The leading thread places predicted PCs in the predQ
- Similar to the BOQ, but holds predictions instead of outcomes
- Using the predQ, the two threads fetch essentially the same instructions
- On detecting a misprediction, the leading thread clears the predQ
- ECC protected

SRTR – RVQ & CV
- SRTR checks when the trailing instruction completes
- The Register Value Queue is used to store register values for checking, avoiding pressure on the register file
  - RVQ entries are allocated when instructions enter the AL
  - Pointers to the RVQ entries are placed in the SAL to facilitate their search
- If the check succeeds, the entries in the CVs are set to checked-ok and the instructions are committed
- If the check fails, the entries in the CVs are set to failed
  - Rollback is done when the entries reach the head of the AL
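
The check-then-commit flow above can be modeled in a few lines. This sketch makes several simplifying assumptions that are not in the slides: one RVQ entry per active-list slot (so the SAL indirection is omitted), a single commit vector instead of one per thread, and hypothetical names `CV` and `CheckedActiveList`.

```python
from enum import Enum


class CV(Enum):
    NOT_CHECKED = 0
    CHECKED_OK = 1
    FAILED = 2


class CheckedActiveList:
    def __init__(self, size):
        self.rvq = [None] * size            # leading results awaiting the check
        self.cv = [CV.NOT_CHECKED] * size   # commit vector, one state per slot
        self.head = 0                       # oldest uncommitted instruction

    def leading_writeback(self, index, value):
        """Leading instruction deposits its result in the RVQ."""
        self.rvq[index] = value

    def trailing_complete(self, index, value):
        """Trailing instruction completes: compare and record the verdict."""
        ok = self.rvq[index] == value
        self.cv[index] = CV.CHECKED_OK if ok else CV.FAILED
        return ok

    def step_commit(self):
        """Try to commit the instruction at the head of the active list."""
        if self.head >= len(self.cv):
            return "empty"
        state = self.cv[self.head]
        if state is CV.NOT_CHECKED:
            return "wait"
        if state is CV.FAILED:
            return "rollback"
        self.head += 1
        return "commit"
```

Nothing commits before its check has run, which is the property that lets the pipeline's ordinary rollback mechanism undo a faulty instruction.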

SRTR - Pipeline
- After the leading instruction writes its result back, it enters the fault-check stage
- The leading instruction puts its value in the RVQ using the pointer from the SAL.
- The trailing instructions also use the SAL to obtain their RVQ pointers and find their leading counterparts’ values

SRTR – DBCE
- SRTR uses a separate structure, the register value queue (RVQ), to store register values and other information necessary for checking instructions, avoiding bandwidth pressure on the register file.
- Checking every instruction puts bandwidth pressure on the RVQ
- The DBCE (Dependence-Based Checking Elision) scheme reduces the number of checks and, thereby, the RVQ bandwidth demand.

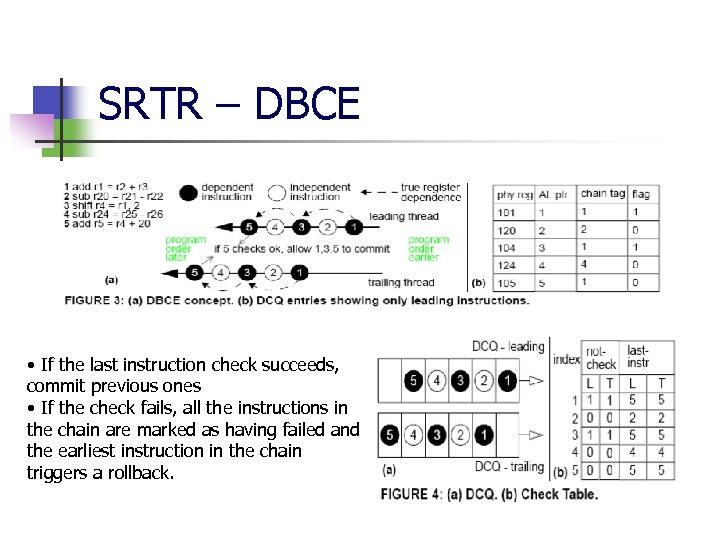

SRTR – DBCE
- Idea:
  - Faults propagate through dependent instructions
  - Exploit register dependence chains so that only the last instruction in a chain uses the RVQ and has its leading and trailing values checked.

SRTR – DBCE
- If the check of the last instruction succeeds, commit the previous ones
- If the check fails, all the instructions in the chain are marked as having failed and the earliest instruction in the chain triggers a rollback.
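
The chain rule can be illustrated with a three-instruction dependence chain. Everything here is a hypothetical example: `run_chain` stands in for one thread executing the chain (with an optional injected bit flip), `dbce_check` compares only the last values, and the sketch assumes no instruction in the chain masks the fault.

```python
def run_chain(x, flip_bit=None):
    """Execute r1 = x + 1; r2 = r1 * 2; r3 = r2 - 3, optionally corrupting r1."""
    r1 = x + 1
    if flip_bit is not None:
        r1 ^= 1 << flip_bit  # inject a transient fault into the first result
    r2 = r1 * 2
    r3 = r2 - 3
    return [r1, r2, r3]


def dbce_check(leading, trailing):
    """Check only the last instruction of the chain and mark the whole chain."""
    ok = leading[-1] == trailing[-1]
    status = "checked-ok" if ok else "failed"
    marks = [status] * len(leading)
    rollback_at = None if ok else 0  # the earliest instruction triggers rollback
    return marks, rollback_at
```

A fault injected into the first instruction changes the last value as well, so one comparison covers the chain and uses a single RVQ check instead of three.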

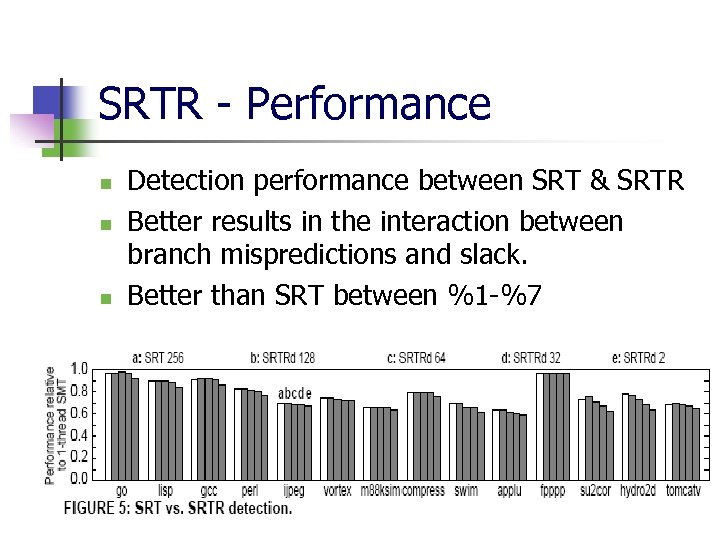

SRTR - Performance
- Detection performance compared between SRT & SRTR
- Better results in the interaction between branch mispredictions and slack.
- Better than SRT by between 1% and 7%

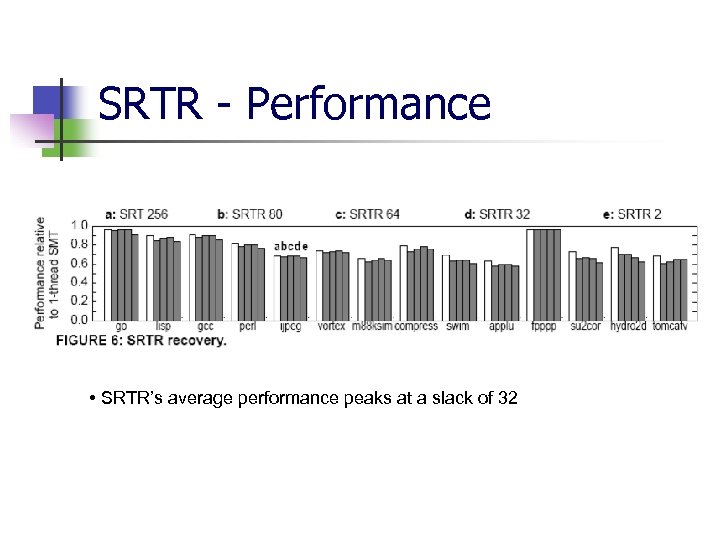

SRTR - Performance
- SRTR’s average performance peaks at a slack of 32

CONCLUSION
- A more efficient way to detect transient faults is presented
- The trailing thread repeats the computation performed by the leading thread, and the values produced by the two threads are compared.
- Defined some concepts: LVQ, ALAB, Slack Fetch and BOQ
- An SRT processor can provide higher performance than an equivalently sized on-chip HW-replicated solution.
- SRT can be extended for fault recovery: SRTR

REFERENCES
- T. N. Vijaykumar, Irith Pomeranz, and Karl Cheng, “Transient Fault Recovery using Simultaneous Multithreading,” Proc. 29th Annual Int’l Symp. on Computer Architecture, May 2002.
- S. K. Reinhardt and S. S. Mukherjee, “Transient-Fault Detection via Simultaneous Multithreading,” Proc. 27th Annual International Symposium on Computer Architecture, pages 25–36, June 2000.
- Eric Rotenberg, “AR-SMT: A Microarchitectural Approach to Fault Tolerance in Microprocessors,” Proceedings of Fault-Tolerant Computing Systems (FTCS), 1999.
- S. S. Mukherjee, M. Kontz, and S. K. Reinhardt, “Detailed Design and Evaluation of Redundant Multithreading Alternatives,” International Symposium on Computer Architecture (ISCA), 2002.
