7eb8a8f1b588dff77283fe0bc02b7011.ppt

- Number of slides: 58

Transfer Learning for Link Prediction

Qiang Yang (楊強), Hong Kong University of Science and Technology (HKUST), Hong Kong, China
http://www.cse.ust.hk/~qyang

We often find it easier …

Transfer Learning? (迁移学习, Chinese for "transfer learning")
- People often transfer knowledge to novel situations:
  - Chess → Checkers
  - C++ → Java
  - Physics → Computer Science
- Transfer learning: the ability of a system to recognize and apply knowledge and skills learned in previous tasks to novel tasks (or new domains).

But direct application will not work
- Machine learning assumes that training and future (test) data:
  - follow the same distribution, and
  - are in the same feature space.

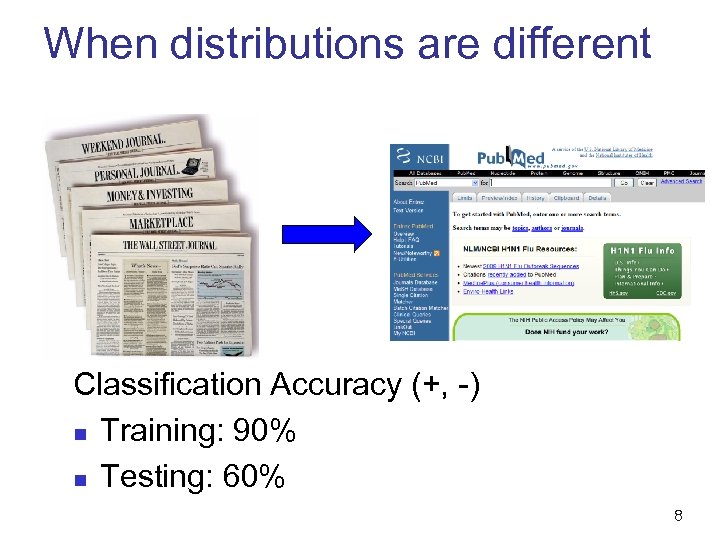

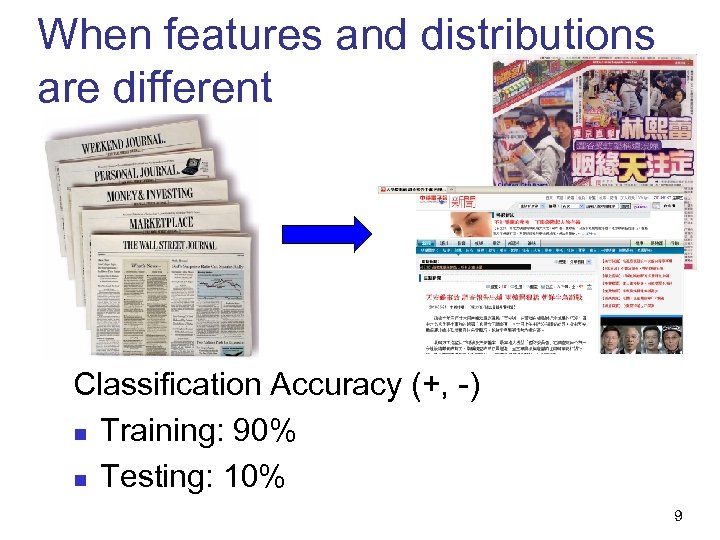

When distributions are different
- Classification accuracy (+, −):
  - Training: 90%
  - Testing: 60%
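To see how a classifier that scores well in training can degrade at test time, here is a small synthetic sketch (every number in it is an illustrative assumption, not the slide's data): a 1-D threshold classifier is fit on two Gaussian classes, then evaluated after both class distributions have drifted. The helper names `sample`, `fit_threshold`, and `accuracy` are hypothetical.

```python
import random

def sample(n, pos_mean, neg_mean, rng):
    """n points per class from two unit-variance Gaussians, labelled +1 / -1."""
    data = [(rng.gauss(pos_mean, 1.0), +1) for _ in range(n)]
    data += [(rng.gauss(neg_mean, 1.0), -1) for _ in range(n)]
    return data

def fit_threshold(data):
    """Place the decision threshold halfway between the two class means."""
    pos = [x for x, y in data if y > 0]
    neg = [x for x, y in data if y < 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

def accuracy(data, threshold):
    """Fraction of points whose predicted sign (x > threshold) matches the label."""
    return sum((1 if x > threshold else -1) == y for x, y in data) / len(data)

rng = random.Random(0)
train = sample(1000, pos_mean=2.0, neg_mean=-2.0, rng=rng)
# Same two classes, but at test time both have drifted to the right.
test = sample(1000, pos_mean=4.0, neg_mean=0.5, rng=rng)
t = fit_threshold(train)
print(f"train accuracy: {accuracy(train, t):.2f}, test accuracy: {accuracy(test, t):.2f}")
```

Training accuracy stays high while test accuracy falls sharply, because the threshold learned on the training distribution now cuts through the shifted negative class; the size of the drop depends entirely on how far the distributions move.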

When features and distributions are different
- Classification accuracy (+, −):
  - Training: 90%
  - Testing: 10%

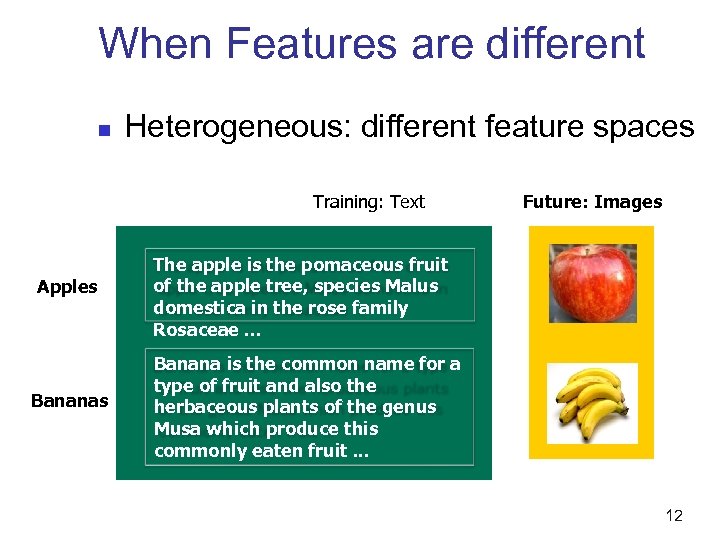

When features are different
- Heterogeneous: different feature spaces
- Training: text
  - Apples: "The apple is the pomaceous fruit of the apple tree, species Malus domestica in the rose family Rosaceae…"
  - Bananas: "Banana is the common name for a type of fruit and also the herbaceous plants of the genus Musa which produce this commonly eaten fruit…"
- Future: images

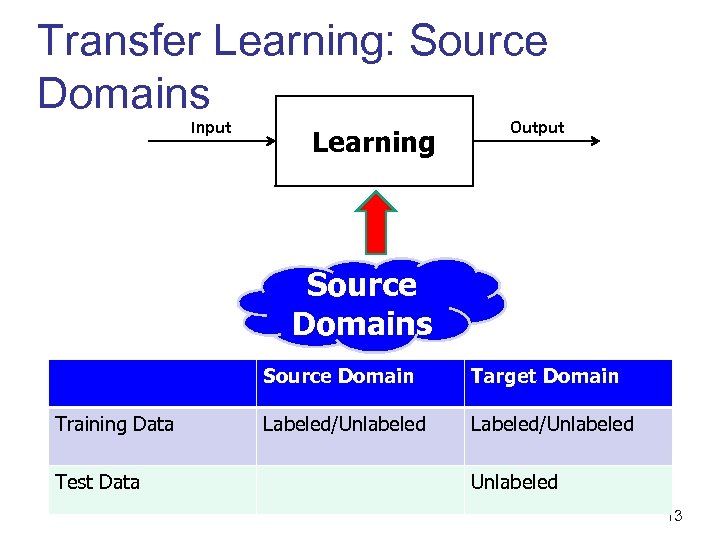

Transfer Learning: Source Domains
[Diagram: input (source-domain training data; target-domain test data, labeled/unlabeled) → learning → output]

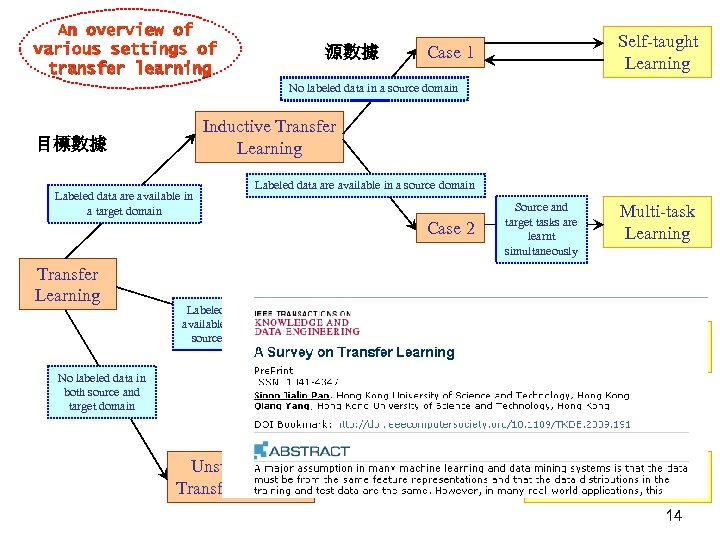

An overview of various settings of transfer learning
- Labeled data are available in a target domain → Inductive Transfer Learning
  - Case 1: no labeled data in a source domain → Self-taught Learning
  - Case 2: labeled data are available in a source domain → Multi-task Learning (source and target tasks are learnt simultaneously)
- Labeled data are available only in a source domain → Transductive Transfer Learning
  - Assumption: different domains but a single task → Domain Adaptation
  - Assumption: a single domain and a single task → Sample Selection Bias / Covariate Shift
- No labeled data in both source and target domains → Unsupervised Transfer Learning

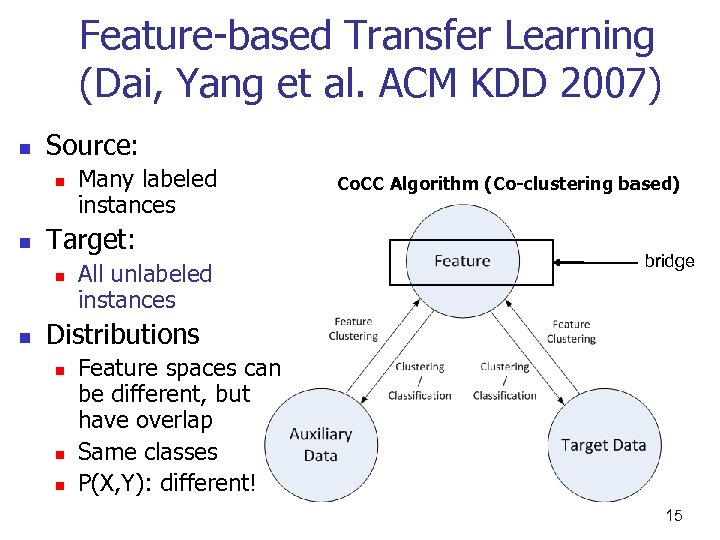

Feature-based Transfer Learning (Dai, Yang et al., ACM KDD 2007)
- Source: many labeled instances
- Target: all unlabeled instances
- CoCC algorithm (co-clustering based) as a bridge
- Distributions:
  - Feature spaces can be different, but have overlap
  - Same classes
  - P(X, Y): different!

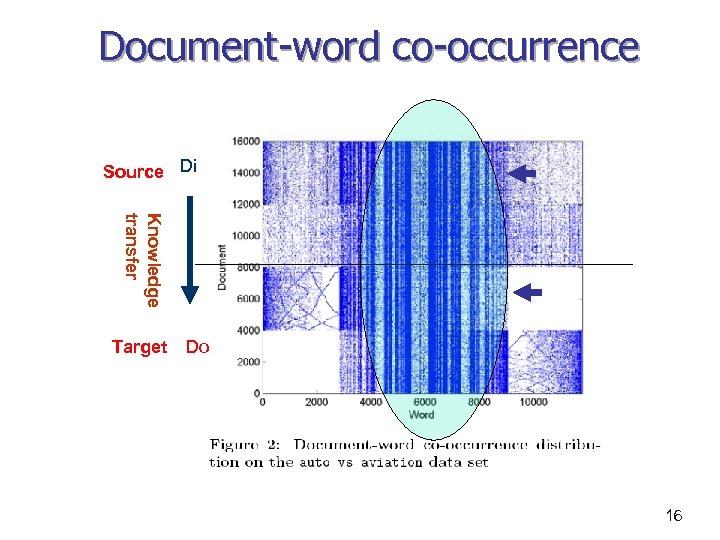

Document-word co-occurrence
[Diagram: Source D_i → knowledge transfer → Target D_o]

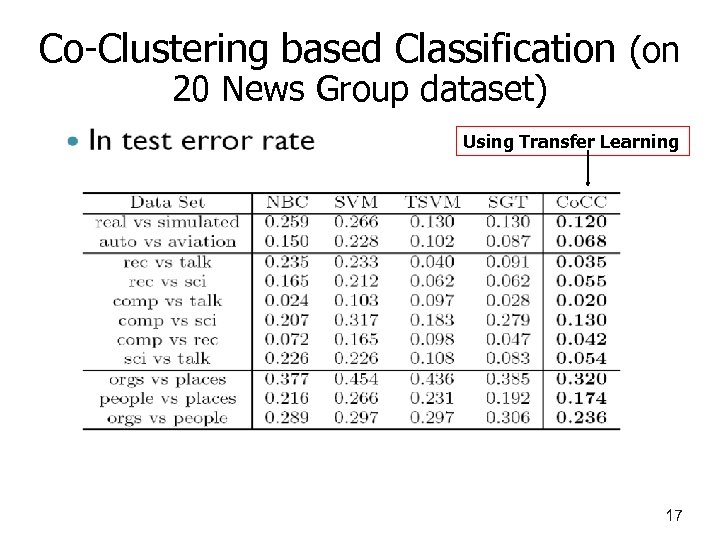

Co-Clustering-Based Classification Using Transfer Learning (on the 20 Newsgroups dataset)

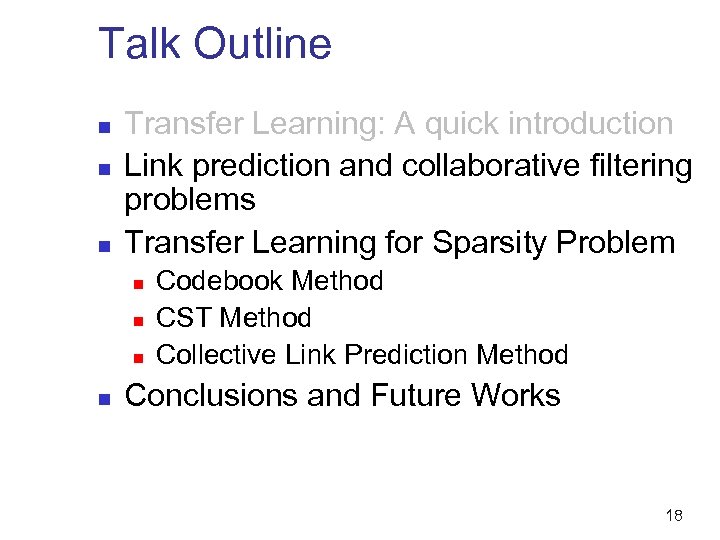

Talk Outline
- Transfer learning: a quick introduction
- Link prediction and collaborative filtering problems
- Transfer learning for the sparsity problem
  - Codebook method
  - CST method
  - Collective link prediction method
- Conclusions and future work

Network Data
- Social networks
  - Facebook, Twitter, Live Messenger, etc.
- Information networks
  - The Web (1.0 with hyperlinks and 2.0 with tags)
  - Citation network, Wikipedia, etc.
- Biological networks
  - Gene network, etc.
- Other networks

![A Real-World Study (Leskovec-Horvitz, WWW '08)](https://present5.com/presentation/7eb8a8f1b588dff77283fe0bc02b7011/image-18.jpg)

A Real-World Study [Leskovec-Horvitz, WWW '08]
- Who talks to whom on MSN Messenger
  - Network: 240M nodes, 1.3 billion edges
- Conclusions:
  - Average path length is 6.6
  - 90% of nodes are reachable in fewer than 8 steps
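Both statistics in this study can be computed with breadth-first search on an unweighted graph. The sketch below is exact and only practical for small graphs; on a network with hundreds of millions of nodes they would have to be estimated, for example by running BFS from a random sample of source nodes. The adjacency-dict input and the function names are assumptions for illustration.

```python
from collections import deque

def bfs_distances(adj, source):
    """Hop count from source to every node reachable from it."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist

def path_stats(adj, max_hops=8):
    """Average shortest-path length over connected ordered pairs (s, t), s != t,
    and the fraction of those pairs that are fewer than max_hops hops apart."""
    total = pairs = close = 0
    for s in adj:
        for t, d in bfs_distances(adj, s).items():
            if t != s:
                total += d
                pairs += 1
                close += d < max_hops
    return total / pairs, close / pairs

# Usage: a path graph a - b - c - d
adj = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}
avg, frac = path_stats(adj, max_hops=2)
print(avg, frac)
```

For the path graph, the 12 ordered pairs have distances summing to 20, so the average is 5/3, and half of the pairs are adjacent (fewer than 2 hops apart).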

Global Network Structures
- Small world
  - Six degrees of separation [Milgram, 1960s]
- Clustering and communities
- Evolutionary patterns
- Long-tail distributions

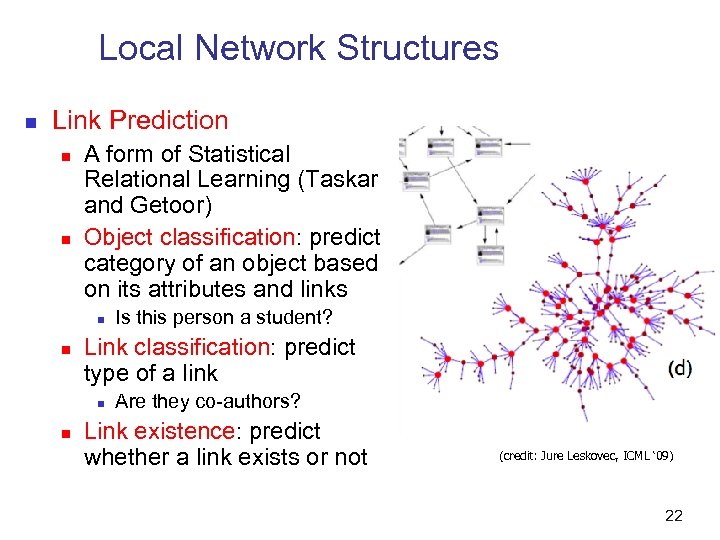

Local Network Structures
- Link prediction: a form of statistical relational learning (Taskar and Getoor)
  - Object classification: predict the category of an object based on its attributes and links (e.g., is this person a student?)
  - Link classification: predict the type of a link (e.g., are they co-authors?)
  - Link existence: predict whether a link exists or not

(Credit: Jure Leskovec, ICML '09)

Link Prediction
- Task: predict missing links in a network
- Main challenge: network data sparsity

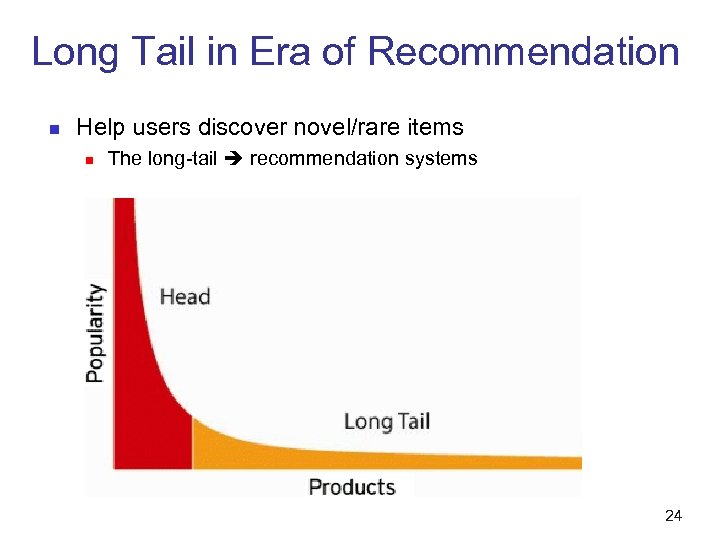

The Long Tail in the Era of Recommendation
- Help users discover novel/rare items
- Long-tail recommendation systems

Product Recommendation (Amazon.com)

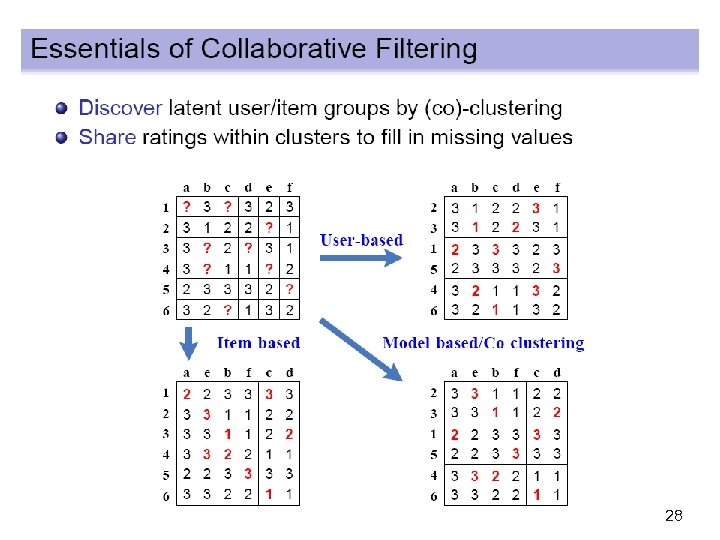

Collaborative Filtering (CF)
- Wisdom of the crowd, word of mouth, …
- Your taste in music/products/movies/… is similar to that of your friends (Maltz & Ehrlich, 1995)
- Key issue: who are your friends?
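One classic answer to "who are your friends?" is to measure user-user similarity with the Pearson correlation coefficient over co-rated items (PCC also appears as a baseline in the codebook experiments). This is a minimal sketch: the neighbourhood size `k`, keeping only positively correlated neighbours, and the mean-centred weighting are common choices assumed here, not details from the talk; `pearson`, `mean_rating`, and `predict` are hypothetical names.

```python
from math import sqrt

def mean_rating(r):
    """Average of one user's ratings (a dict item -> rating)."""
    return sum(r.values()) / len(r)

def pearson(ra, rb):
    """Pearson correlation of two users over their co-rated items; 0.0 if undefined."""
    common = sorted(ra.keys() & rb.keys())
    if len(common) < 2:
        return 0.0
    a = [ra[i] for i in common]
    b = [rb[i] for i in common]
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0
    return cov / sqrt(va * vb)

def predict(user, item, ratings, k=20):
    """Mean-centred, similarity-weighted average over the k most similar
    positively correlated users ("friends") who rated the item."""
    friends = sorted(
        ((pearson(ratings[user], r), v) for v, r in ratings.items()
         if v != user and item in r),
        reverse=True,
    )
    friends = [(s, v) for s, v in friends if s > 0][:k]
    den = sum(s for s, _ in friends)
    if den == 0:
        return mean_rating(ratings[user])
    num = sum(s * (ratings[v][item] - mean_rating(ratings[v])) for s, v in friends)
    return mean_rating(ratings[user]) + num / den

ratings = {
    "alice": {"m1": 5, "m2": 3, "m3": 4},
    "bob":   {"m1": 4, "m2": 2, "m3": 3, "m4": 4},
    "carol": {"m1": 1, "m2": 5, "m3": 2, "m4": 1},
}
print(pearson(ratings["alice"], ratings["bob"]))  # 1.0
print(predict("alice", "m4", ratings))            # 4.75
```

Bob's tastes move exactly with Alice's, so he is her only "friend"; Carol is negatively correlated and is ignored.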

Taobao.com

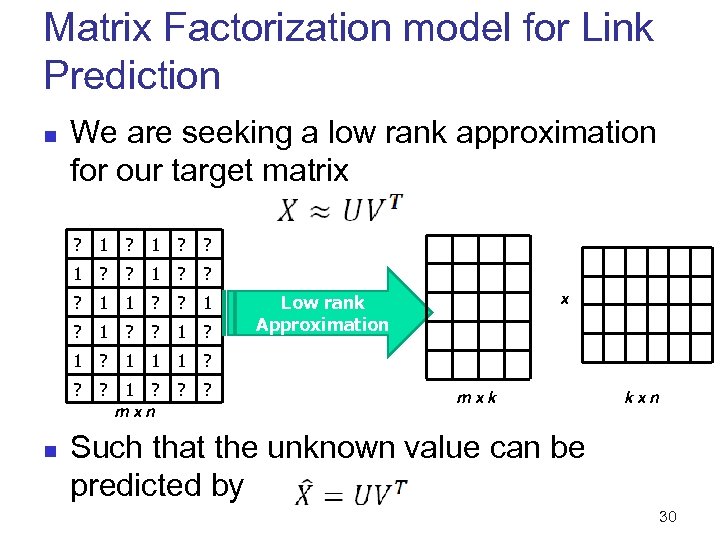

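Matrix Factorization Model for Link Prediction
- We seek a low-rank approximation of the target matrix: the partially observed $m \times n$ matrix $X$ (known links marked 1, unknown entries marked ?) is approximated by the product of an $m \times k$ matrix $U$ and a $k \times n$ matrix $V$, $X \approx UV$, with $k \ll \min(m, n)$,
- such that each unknown value can be predicted by $\hat{x}_{ij} = (UV)_{ij} = \sum_{r=1}^{k} U_{ir} V_{rj}$.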
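A minimal sketch of fitting such a factorization, assuming a squared-error loss on the observed entries only plus an L2 penalty, solved by alternating least squares (one of several possible solvers; the talk does not name one). `mask` marks observed cells, and `k`, `reg`, `iters`, and `als_factorize` are illustrative assumptions.

```python
import numpy as np

def als_factorize(X, mask, k=2, reg=0.1, iters=50, seed=0):
    """Find U (m x k) and V (k x n) so that U @ V matches X on observed cells (mask == 1).
    Each half-step solves a small ridge regression per row or per column."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    U = rng.standard_normal((m, k))
    V = rng.standard_normal((k, n))
    ridge = reg * np.eye(k)
    for _ in range(iters):
        for i in range(m):                     # update row i of U with V fixed
            obs = mask[i] == 1
            Vo = V[:, obs]
            U[i] = np.linalg.solve(Vo @ Vo.T + ridge, Vo @ X[i, obs])
        for j in range(n):                     # update column j of V with U fixed
            obs = mask[:, j] == 1
            Uo = U[obs]
            V[:, j] = np.linalg.solve(Uo.T @ Uo + ridge, Uo.T @ X[obs, j])
    return U, V

# Usage: a rank-2 matrix with four hidden entries
X_true = np.outer([1, 2, 3, 4, 5, 6], [1, 0, 1, 2, 1]) + np.outer([1, 0, 1, 0, 1, 0], [0, 1, 1, 0, 2])
mask = np.ones_like(X_true)
mask[0, 1] = mask[2, 3] = mask[4, 0] = mask[5, 4] = 0
U, V = als_factorize(X_true * mask, mask)
X_hat = U @ V                                  # every entry, including the hidden ones
```

Alternating least squares is used here because each sub-problem has a closed-form solution and the masked objective never increases from one half-step to the next; gradient descent on the same loss would also work.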

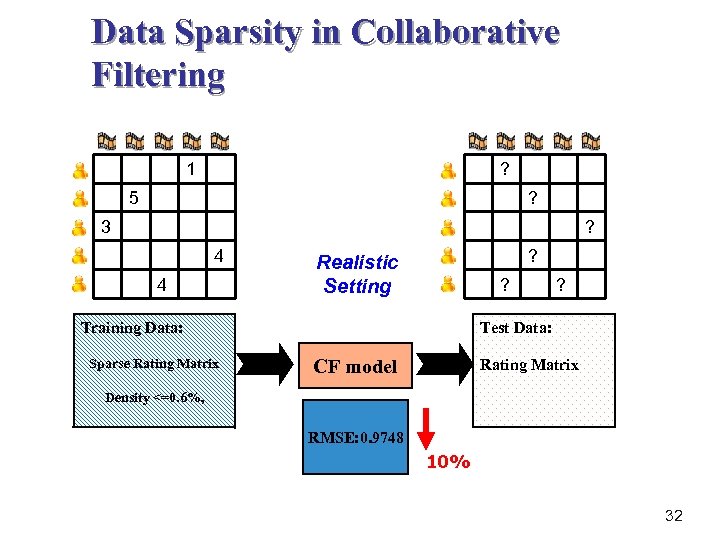

Data Sparsity in Collaborative Filtering
[Diagram: in a realistic setting, a CF model is trained on a sparse rating matrix (density ≤ 0.6%) and evaluated on a test rating matrix; RMSE: 0.9748]

Transfer Learning for Collaborative Filtering?
[Figure: IMDB database; Amazon.com]

Codebook Transfer
- Bin Li, Qiang Yang, Xiangyang Xue. Can Movies and Books Collaborate? Cross-Domain Collaborative Filtering for Sparsity Reduction. In Proceedings of the Twenty-First International Joint Conference on Artificial Intelligence (IJCAI '09), Pasadena, CA, USA, July 11-17, 2009.

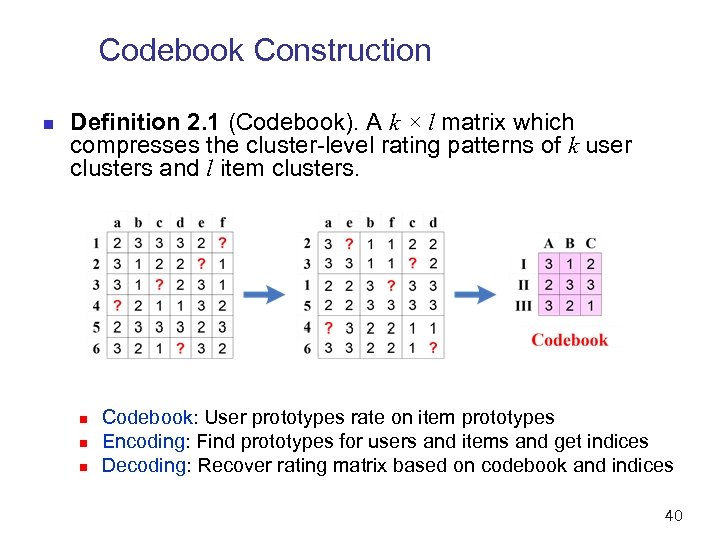

Codebook Construction
- Definition 2.1 (Codebook). A k × l matrix that compresses the cluster-level rating patterns of k user clusters and l item clusters.
  - Codebook: the ratings that user prototypes give to item prototypes
  - Encoding: find prototypes for users and items and get their indices
  - Decoding: recover the rating matrix from the codebook and the indices

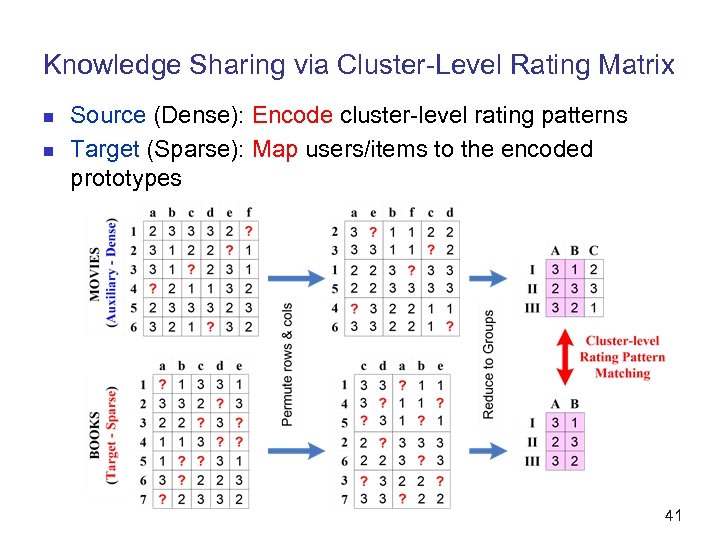

Knowledge Sharing via Cluster-Level Rating Matrix
- Source (dense): encode cluster-level rating patterns
- Target (sparse): map users/items to the encoded prototypes

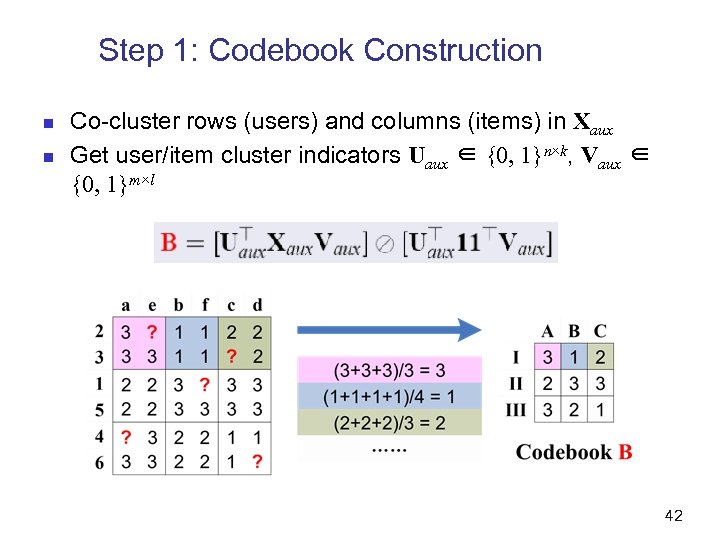

Step 1: Codebook Construction
- Co-cluster the rows (users) and columns (items) of $X_{aux}$
- Get user/item cluster indicators $U_{aux} \in \{0, 1\}^{n \times k}$, $V_{aux} \in \{0, 1\}^{m \times l}$
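Given the co-clustering, one natural way to fill the codebook, consistent with Definition 2.1, is to average the ratings inside each (user cluster, item cluster) block: $B = [U_{aux}^\top X_{aux} V_{aux}] \oslash [U_{aux}^\top \mathbf{1}\mathbf{1}^\top V_{aux}]$, where $\oslash$ is elementwise division. The sketch assumes the cluster labels already come from some co-clustering algorithm (not shown) and adds an optional 0/1 mask `W` in place of $\mathbf{1}\mathbf{1}^\top$ so that missing cells are not averaged in; `one_hot` and `build_codebook` are hypothetical names.

```python
import numpy as np

def one_hot(labels, n_clusters):
    """Cluster label per row -> binary indicator matrix (each row has a single 1)."""
    M = np.zeros((len(labels), n_clusters))
    M[np.arange(len(labels)), labels] = 1
    return M

def build_codebook(X_aux, user_labels, item_labels, k, l, W=None):
    """Codebook B (k x l): mean observed rating in each (user cluster, item cluster) block."""
    if W is None:
        W = np.ones_like(X_aux, dtype=float)   # fully observed auxiliary matrix
    U = one_hot(user_labels, k)                # n x k user cluster indicators (U_aux)
    V = one_hot(item_labels, l)                # m x l item cluster indicators (V_aux)
    sums = U.T @ (W * X_aux) @ V               # total observed rating per block
    counts = U.T @ W @ V                       # number of observed ratings per block
    return np.divide(sums, counts, out=np.zeros_like(sums), where=counts > 0)

X_aux = np.array([[5, 5, 1, 1],
                  [5, 4, 1, 2],
                  [1, 1, 5, 5],
                  [2, 1, 4, 5]])
B = build_codebook(X_aux, user_labels=[0, 0, 1, 1], item_labels=[0, 0, 1, 1], k=2, l=2)
# B == [[4.75, 1.25], [1.25, 4.75]]
```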

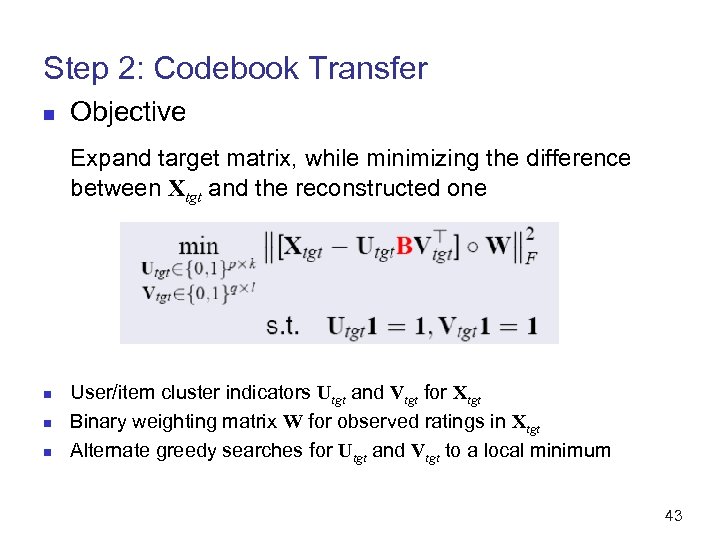

Step 2: Codebook Transfer
- Objective: expand the target matrix while minimizing the difference between $X_{tgt}$ and the reconstructed one
  - User/item cluster indicators $U_{tgt}$ and $V_{tgt}$ for $X_{tgt}$
  - Binary weighting matrix $W$ for the observed ratings in $X_{tgt}$
  - Alternating greedy searches for $U_{tgt}$ and $V_{tgt}$ until a local minimum is reached

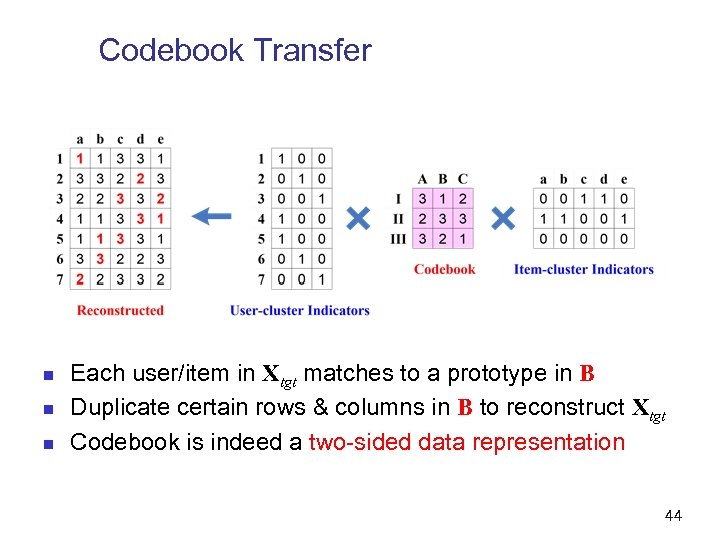

Codebook Transfer
- Each user/item in $X_{tgt}$ is matched to a prototype in $B$
- Duplicate certain rows and columns of $B$ to reconstruct $X_{tgt}$
- The codebook is indeed a two-sided data representation
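Written out from the Step 2 description, the objective is $\min_{U_{tgt}, V_{tgt}} \| [X_{tgt} - U_{tgt} B V_{tgt}^\top] \circ W \|_F^2$, with each user and each item assigned to exactly one prototype, and the missing entries are then filled as $W \circ X_{tgt} + [\mathbf{1} - W] \circ [U_{tgt} B V_{tgt}^\top]$. Below is a minimal sketch of the alternating greedy search under those assumptions: each sweep reassigns every user (item assignments fixed) and then every item (user assignments fixed) to its best-matching row or column of `B`. Storing the indicator matrices as label vectors, the all-zeros initialization, the fixed number of sweeps, and the name `codebook_transfer` are choices made for this sketch.

```python
import numpy as np

def codebook_transfer(X_tgt, W, B, iters=10):
    """Greedy alternating assignment of target users/items to codebook prototypes.
    X_tgt: p x q ratings, W: p x q 0/1 mask of observed ratings, B: k x l codebook.
    Returns the filled matrix and the user/item prototype labels."""
    p, q = X_tgt.shape
    u = np.zeros(p, dtype=int)                 # user -> row of B (U_tgt as labels)
    v = np.zeros(q, dtype=int)                 # item -> column of B (V_tgt as labels)
    for _ in range(iters):
        P = B[:, v]                            # k x q: what each user prototype predicts
        for i in range(p):
            errs = ((P - X_tgt[i]) ** 2 * W[i]).sum(axis=1)
            u[i] = int(np.argmin(errs))
        Q = B[u, :]                            # p x l: what each item prototype predicts
        for j in range(q):
            errs = ((Q - X_tgt[:, j:j + 1]) ** 2 * W[:, j:j + 1]).sum(axis=0)
            v[j] = int(np.argmin(errs))
    recon = B[np.ix_(u, v)]                    # U_tgt B V_tgt^T, duplicating rows/cols of B
    return W * X_tgt + (1 - W) * recon, u, v

B = np.array([[4.75, 1.25], [1.25, 4.75]])     # a toy 2 x 2 codebook
X_tgt = np.array([[5, 4, 0, 1],
                  [4, 0, 1, 0],
                  [0, 1, 5, 4],
                  [1, 0, 0, 5]], dtype=float)  # 0 marks a missing rating here
W = (X_tgt > 0).astype(float)
filled, u, v = codebook_transfer(X_tgt, W, B)
```

Each reassignment can only lower (or keep) the weighted error, so the loop settles into a local minimum, matching the "alternating greedy search" in Step 2; observed ratings are kept as-is and only the gaps come from the codebook.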

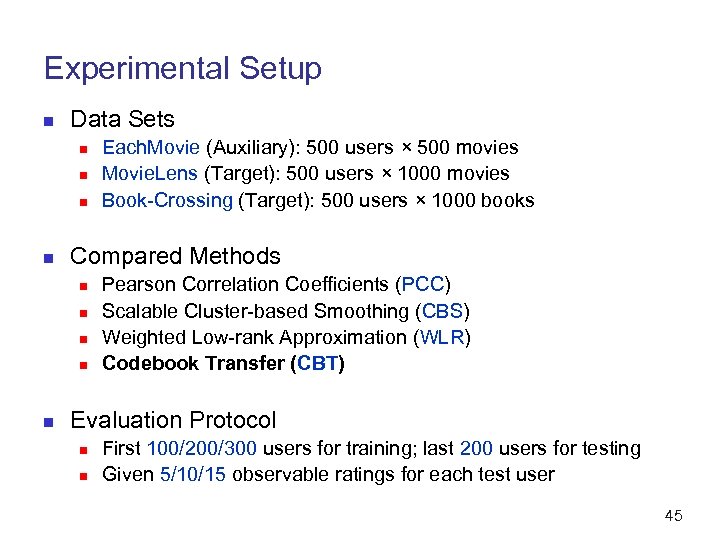

Experimental Setup
- Data sets
  - EachMovie (auxiliary): 500 users × 500 movies
  - MovieLens (target): 500 users × 1000 movies
  - Book-Crossing (target): 500 users × 1000 books
- Compared methods
  - Pearson Correlation Coefficients (PCC)
  - Scalable Cluster-Based Smoothing (CBS)
  - Weighted Low-Rank Approximation (WLR)
  - Codebook Transfer (CBT)
- Evaluation protocol
  - First 100/200/300 users for training; last 200 users for testing
  - Given 5/10/15 observable ratings for each test user
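A minimal sketch of this "given-N" protocol. The user ordering, the random choice of which 5/10/15 ratings are revealed, and the toy data are assumptions; `split_users` and `given_n` are hypothetical names.

```python
import random

def split_users(users, n_train, n_test=200):
    """First n_train users for training, last n_test users for testing."""
    return users[:n_train], users[-n_test:]

def given_n(test_users, ratings, n_given, seed=0):
    """Reveal n_given randomly chosen ratings per test user; hold out the rest."""
    rng = random.Random(seed)
    observed, held_out = {}, {}
    for u in test_users:
        items = sorted(ratings[u])
        rng.shuffle(items)
        observed[u] = {i: ratings[u][i] for i in items[:n_given]}
        held_out[u] = {i: ratings[u][i] for i in items[n_given:]}
    return observed, held_out

users = [f"u{i}" for i in range(500)]
ratings = {u: {f"m{j}": 1 + (i + j) % 5 for j in range(20)}   # toy ratings in [1, 5]
           for i, u in enumerate(users)}
train_users, test_users = split_users(users, n_train=300)
observed, held_out = given_n(test_users, ratings, n_given=10)
```

Because only the last 200 users are ever tested, the 100/200/300 training users never overlap with them; the held-out ratings are what MAE is computed on.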

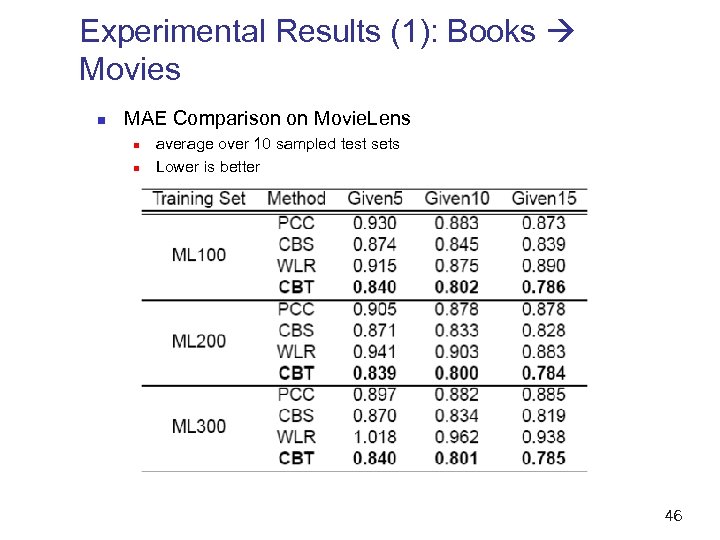

Experimental Results (1): Books Movies
- MAE comparison on MovieLens
  - Average over 10 sampled test sets
  - Lower is better

Limitations of Codebook Transfer
- Same rating range
  - Source and target data must have the same range of ratings [1, 5]
- Homogeneous dimensions
  - User and item dimensions must be similar
- In reality
  - The range of ratings can be 0/1 or [1, 5]
  - User and item dimensions may be very different

Coordinate System Transfer
- Weike Pan, Evan Xiang, Nathan Liu and Qiang Yang. Transfer Learning in Collaborative Filtering for Sparsity Reduction. In Proceedings of the 24th AAAI Conference on Artificial Intelligence (AAAI-10), Atlanta, Georgia, USA, July 11-15, 2010.

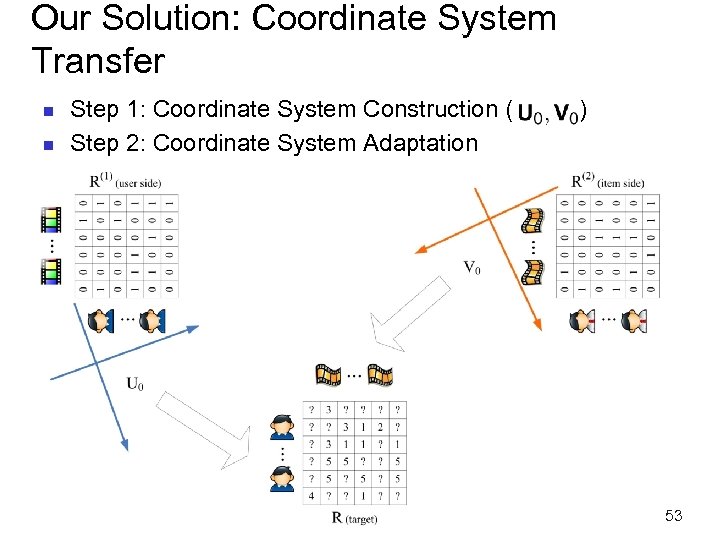

Our Solution: Coordinate System Transfer
- Step 1: Coordinate system construction
- Step 2: Coordinate system adaptation

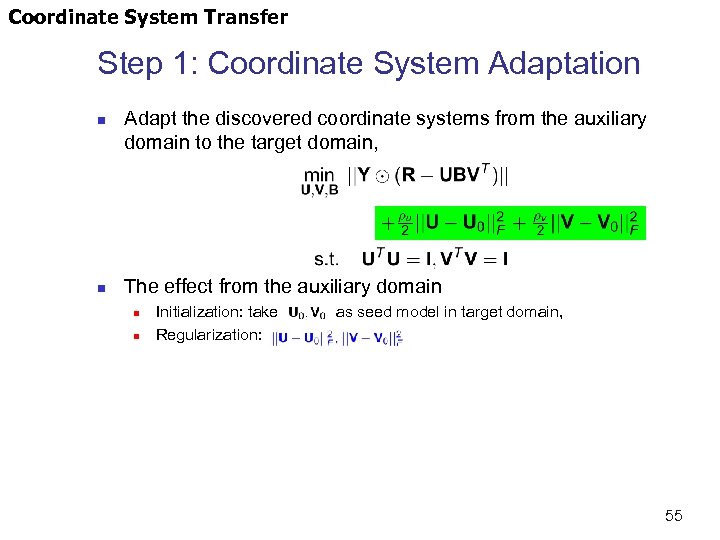

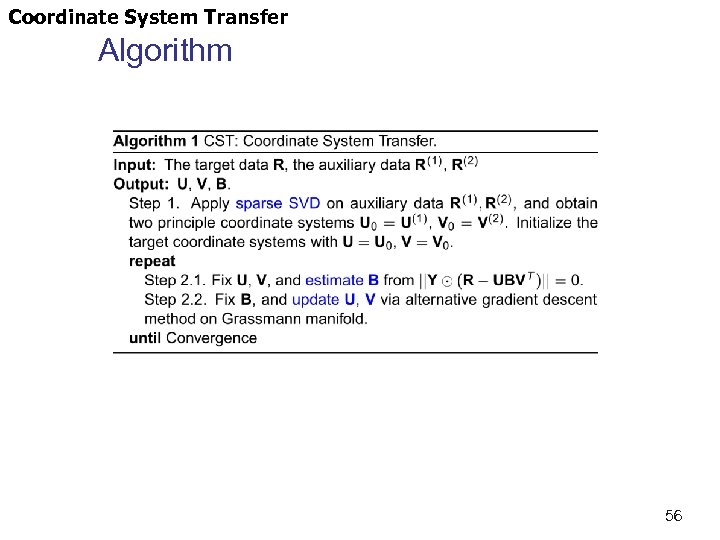

Coordinate System Transfer Step 2: Coordinate System Adaptation
- Adapt the discovered coordinate systems from the auxiliary domain to the target domain
- The effect from the auxiliary domain:
  - Initialization: take the discovered coordinate systems as the seed model in the target domain
  - Regularization

Coordinate System Transfer Algorithm
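The algorithm itself survives only as a figure, so what follows is a hedged sketch of the two steps, not the published algorithm. It assumes (a) a coordinate system is the top-$d$ singular vectors of a dense auxiliary matrix, one auxiliary matrix sharing the target's users (giving $U_0$) and one sharing its items (giving $V_0$); (b) the target is modelled as $R \approx U B V^\top$ on observed entries; and (c) adaptation uses $U_0, V_0$ as the initialization and a penalty $\frac{\rho}{2}(\|U - U_0\|_F^2 + \|V - V_0\|_F^2)$ as the regularization, optimized here by plain gradient descent. The penalty form, `rho`, `beta`, `lr`, the toy data, and the names `coordinate_system` and `adapt` are all assumptions.

```python
import numpy as np

def coordinate_system(M, d):
    """Step 1 (sketch): top-d left and right singular vectors of a dense auxiliary matrix."""
    left, _, right_t = np.linalg.svd(M, full_matrices=False)
    return left[:, :d], right_t[:d].T

def adapt(R, mask, U0, V0, rho=1.0, beta=0.1, lr=0.01, epochs=500):
    """Step 2 (sketch): fit R ~ U B V^T on observed cells, starting at (U0, V0)
    and penalising drift away from them."""
    U, V = U0.copy(), V0.copy()                # initialization: auxiliary coordinates as seed
    B = np.zeros((U0.shape[1], V0.shape[1]))
    for _ in range(epochs):
        E = mask * (U @ B @ V.T - R)           # residual on observed ratings only
        grad_U = E @ V @ B.T + rho * (U - U0)  # regularization towards U0
        grad_V = E.T @ U @ B + rho * (V - V0)  # regularization towards V0
        grad_B = U.T @ E @ V + beta * B
        U -= lr * grad_U
        V -= lr * grad_V
        B -= lr * grad_B
    return U, B, V

rng = np.random.default_rng(0)
Uq, _ = np.linalg.qr(rng.standard_normal((8, 2)))                      # shared user factors
Vq, _ = np.linalg.qr(rng.standard_normal((7, 2)))                      # shared item factors
aux_users = Uq @ np.diag([6.0, 3.0]) @ rng.standard_normal((2, 10))   # same users, other items
aux_items = rng.standard_normal((9, 2)) @ np.diag([6.0, 3.0]) @ Vq.T  # other users, same items
U0, _ = coordinate_system(aux_users, 2)
_, V0 = coordinate_system(aux_items, 2)
R = Uq @ np.diag([5.0, 2.0]) @ Vq.T                   # toy target matrix (real-valued)
mask = (rng.random(R.shape) < 0.5).astype(float)      # roughly half of the cells observed
U, B, V = adapt(R, mask, U0, V0)
```

Starting from the auxiliary coordinates means the target only has to learn the small $d \times d$ matrix $B$ at first, which is where the sparsity reduction comes from; the penalty lets $U$ and $V$ bend towards the target data without drifting far from what the dense domains revealed.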

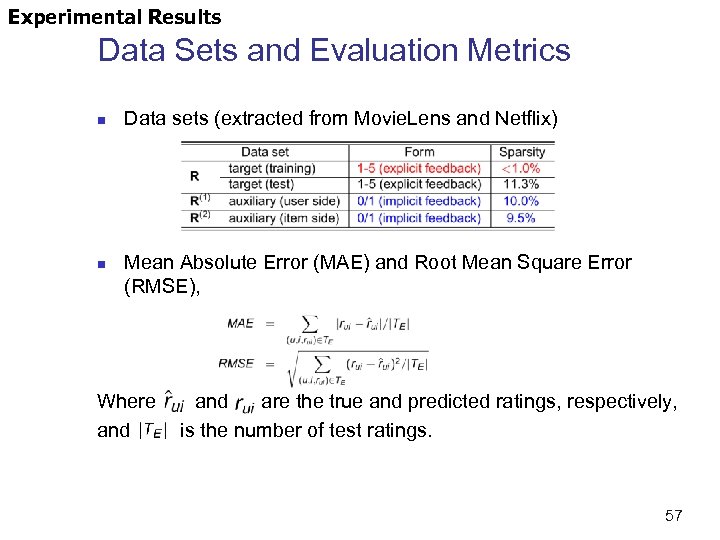

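Experimental Results: Data Sets and Evaluation Metrics
- Data sets (extracted from MovieLens and Netflix)
- Mean Absolute Error (MAE) and Root Mean Square Error (RMSE):
  $$\mathrm{MAE} = \frac{1}{|T_E|} \sum_{(u,i) \in T_E} |r_{ui} - \hat{r}_{ui}|, \qquad \mathrm{RMSE} = \sqrt{\frac{1}{|T_E|} \sum_{(u,i) \in T_E} (r_{ui} - \hat{r}_{ui})^2},$$
  where $r_{ui}$ and $\hat{r}_{ui}$ are the true and predicted ratings, respectively, and $|T_E|$ is the number of test ratings.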
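MAE and RMSE translate directly into code; a minimal sketch, with made-up sample ratings.

```python
from math import sqrt

def mae(true, pred):
    """Mean absolute error over paired test ratings."""
    if len(true) != len(pred) or not true:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(r - p) for r, p in zip(true, pred)) / len(true)

def rmse(true, pred):
    """Root mean square error over paired test ratings."""
    if len(true) != len(pred) or not true:
        raise ValueError("need two non-empty sequences of equal length")
    return sqrt(sum((r - p) ** 2 for r, p in zip(true, pred)) / len(true))

true = [4, 3, 5]
pred = [3.5, 3, 4]
print(mae(true, pred))   # 0.5
print(rmse(true, pred))  # about 0.645
```

RMSE squares each error before averaging, so it penalises large misses more heavily than MAE and is never smaller than MAE on the same data.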

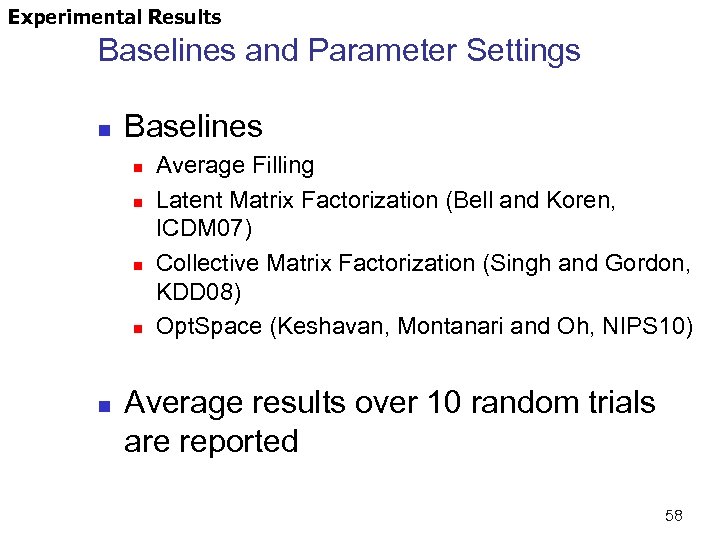

Experimental Results: Baselines and Parameter Settings
- Baselines
  - Average Filling (AF)
  - Latent Matrix Factorization (LFM) (Bell and Koren, ICDM '07)
  - Collective Matrix Factorization (CMF) (Singh and Gordon, KDD '08)
  - OptSpace (Keshavan, Montanari and Oh, NIPS '10)
- Average results over 10 random trials are reported

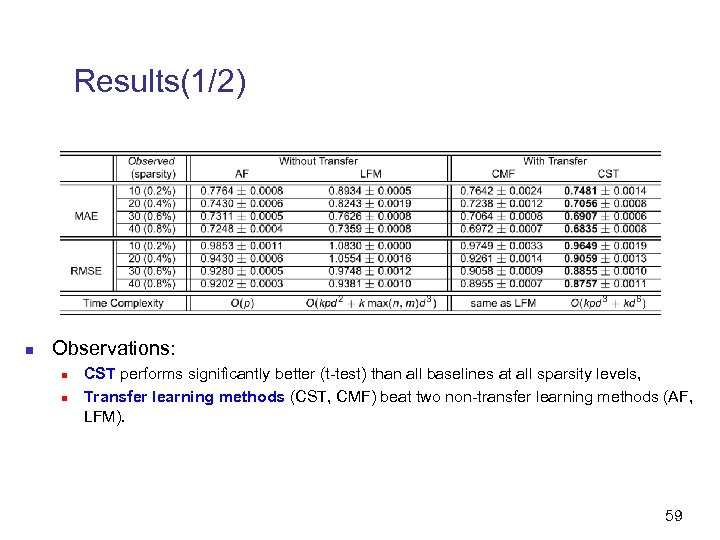

Results (1/2)
- Observations:
  - CST performs significantly better (t-test) than all baselines at all sparsity levels.
  - The transfer learning methods (CST, CMF) beat the two non-transfer learning methods (AF, LFM).

Limitations of CST and CBT
- Different source domains are related to the target domain differently
  - Books to movies
  - Food to movies
- Rating bias
  - Users tend to rate items that they like
  - Thus there are more ratings of 5 than ratings of 2

Our Solution: Collective Link Prediction (CLP)
- Jointly learn multiple domains together
  - Learn the similarity of different domains: consistency between domains indicates similarity
- Introduce a link function to correct the bias

- Bin Cao, Nathan Liu and Qiang Yang. Transfer Learning for Collective Link Prediction in Multiple Heterogeneous Domains. In Proceedings of the 27th International Conference on Machine Learning (ICML 2010), Haifa, Israel, June 2010.

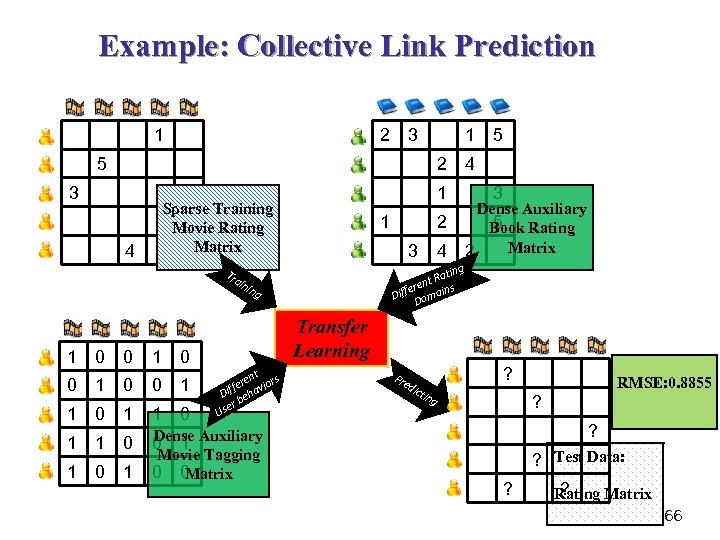

Example: Collective Link Prediction
[Diagram: a sparse training movie rating matrix, a dense auxiliary book rating matrix (different rating domains), and a dense auxiliary binary movie tagging matrix (different user behaviors) are combined by transfer learning during training; predicting the test rating matrix gives RMSE: 0.8855]

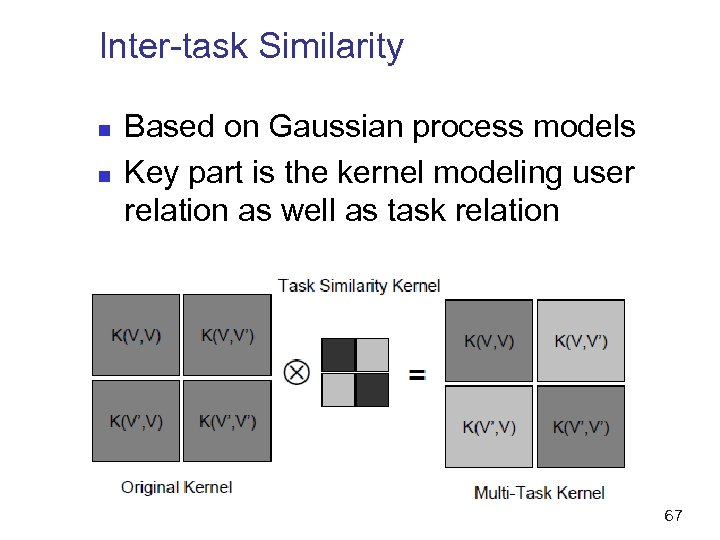

Inter-task Similarity
- Based on Gaussian process models
- The key part is the kernel, which models user relations as well as task relations

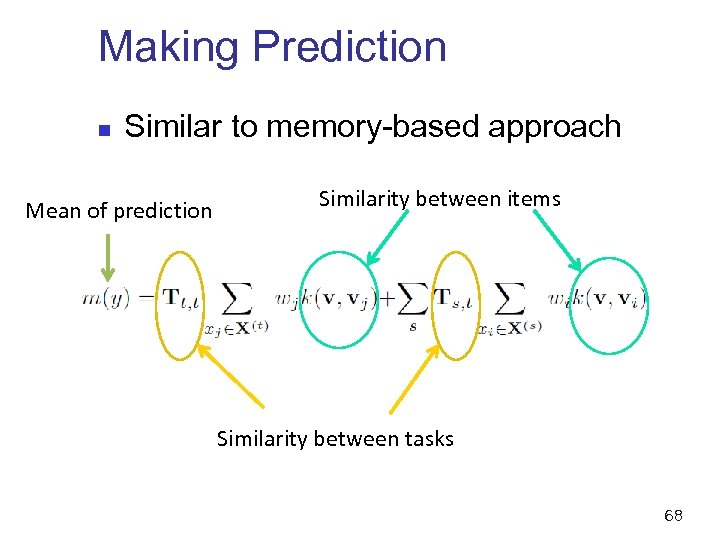

Making Predictions
- Similar to a memory-based approach
[Figure: the mean of the prediction, annotated with the similarity between items and the similarity between tasks]
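As a hedged illustration of how a Gaussian-process prediction combines item and task similarity, assume a product kernel $k((a, s), (b, t)) = K_{task}[s, t]\,K_{item}[a, b]$ and a zero-mean GP with Gaussian noise. The predictive mean is then $\mathbf{k}_*^\top (K + \sigma^2 I)^{-1} \mathbf{y}$, a similarity-weighted combination of observed ratings, which is what makes it resemble a memory-based method. CLP's link function and the learning of the kernels are left out, and `gp_mean` is a hypothetical name.

```python
import numpy as np

def gp_mean(K_item, K_task, train, y, test, noise=0.1):
    """Zero-mean GP posterior mean with a product kernel.
    train/test are lists of (item, task) index pairs; noise is the variance
    added to the diagonal of the training covariance."""
    def cov(P, Q):
        # covariance of (a, s) with (b, t) = task similarity * item similarity
        return np.array([[K_task[s, t] * K_item[a, b] for (b, t) in Q] for (a, s) in P])
    K = cov(train, train) + noise * np.eye(len(train))
    alpha = np.linalg.solve(K, np.asarray(y, dtype=float))
    return cov(test, train) @ alpha            # weighted combination of observed ratings

K_item = np.eye(2)                             # two unrelated items, for clarity
train = [(0, 0), (1, 0)]                       # (item, task): both observed in task 0
y = [2.0, -1.0]
test = [(0, 1), (1, 1)]                        # same items, predicted in task 1
related = gp_mean(K_item, np.array([[1.0, 0.9], [0.9, 1.0]]), train, y, test)
unrelated = gp_mean(K_item, np.eye(2), train, y, test)
```

With correlated tasks, task 0's observations carry over to task 1 (scaled by the 0.9 task similarity); with unrelated tasks the prediction falls back to the prior mean of zero.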

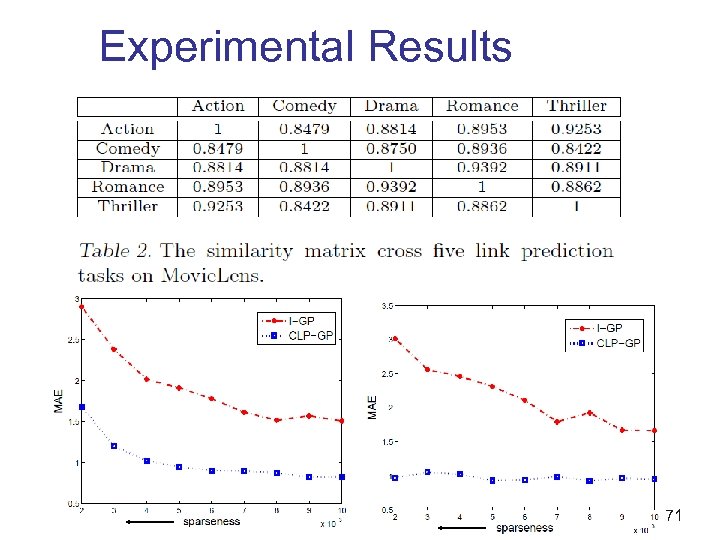

Experimental Results

Conclusions and Future Work
- Transfer learning (舉一反三, "from one example, infer three others")
- Link prediction is an important task in graph/network data mining
- Key challenge: sparsity
- Transfer learning from other domains helps improve performance

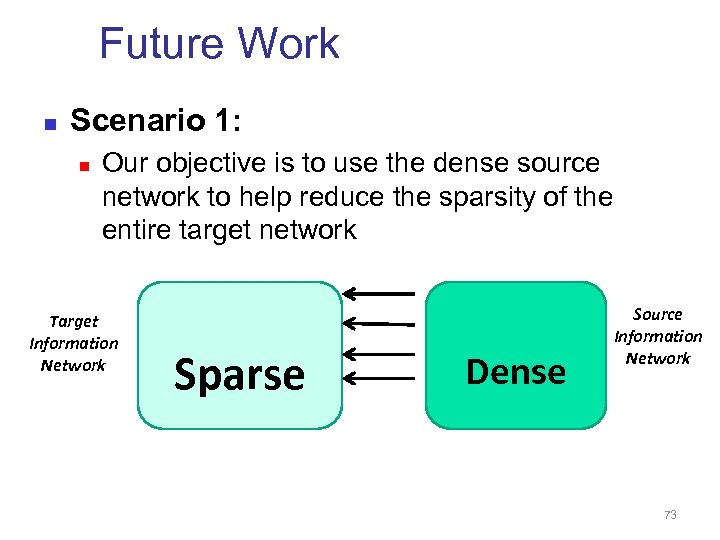

Future Work
- Scenario 1:
  - Our objective is to use the dense source network to help reduce the sparsity of the entire target network
[Diagram: dense source information network; sparse target information network]

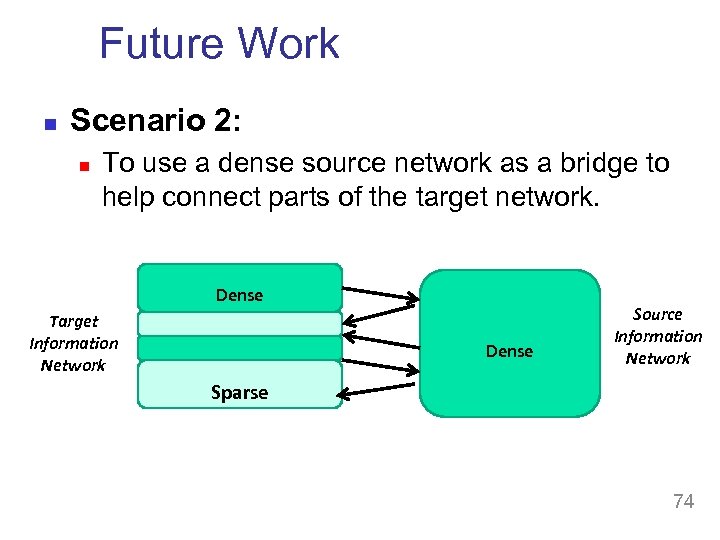

Future Work
- Scenario 2:
  - Use a dense source network as a bridge to help connect parts of the target network
[Diagram: dense parts of the target information network, sparsely connected to each other; dense source information network]

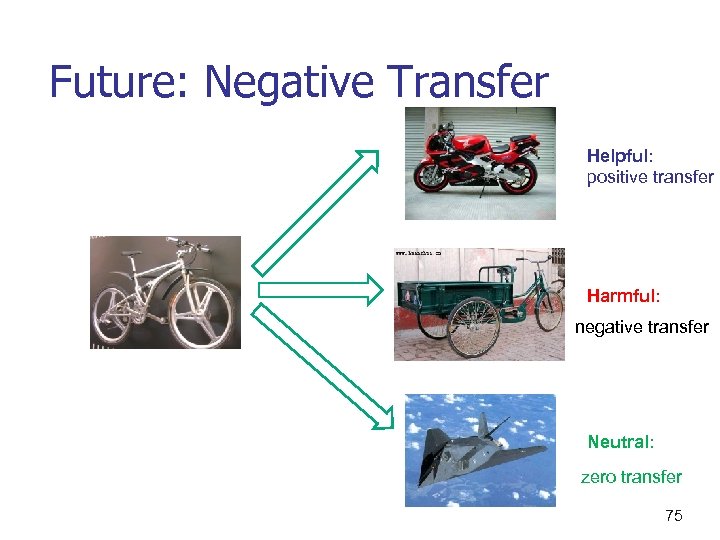

Future: Negative Transfer
- Helpful: positive transfer
- Harmful: negative transfer
- Neutral: zero transfer

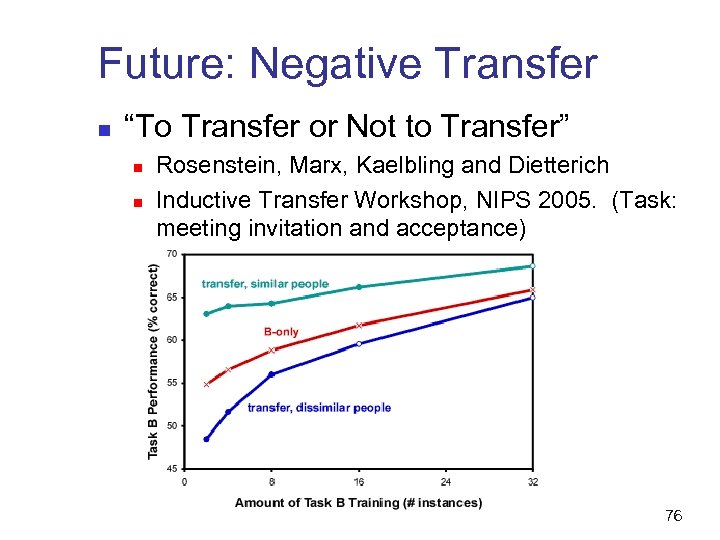

Future: Negative Transfer
- "To Transfer or Not to Transfer"
  - Rosenstein, Marx, Kaelbling and Dietterich, Inductive Transfer Workshop, NIPS 2005
  - (Task: meeting invitation and acceptance)

Acknowledgement
- HKUST:
  - Sinno J. Pan, Huayan Wang, Bin Cao, Evan Wei Xiang, Derek Hao Hu, Nathan Nan Liu, Vincent Wenchen Zheng
- Shanghai Jiaotong University:
  - Wenyuan Dai, Guirong Xue, Yuqiang Chen, Prof. Yong Yu, Xiao Ling, Ou Jin
- Visiting students:
  - Bin Li (Fudan U.), Xiaoxiao Shi (Zhong Shan U.)
