6bb30d7693ade1288238b194e0d0637a.ppt

- Number of slides: 44

Transcription by Beat-Boxing

Elliot Sinyor, MUMT 611, Feb 17, 2005

Presentation

- Introduction
- Background
  - Making “beat-box” sounds
  - Some common methods
- Related work
  - “Query-by-beat-boxing: Music Retrieval for the DJ” (Kapur, Benning, Tzanetakis)
  - “A Drum Pattern Retrieval Method by Voice Percussion” (Nakano, Ogata, Goto, Hiraga)
  - “Towards Automatic Transcription of Expressive Oral Percussive Performances” (Hazan)
- Project for MUMT 605

Introduction

- Ways to input percussion:
  - Electronic drums (Yamaha DD-5, Roland V-Drums)
  - Velocity-sensitive MIDI keyboard
  - Velocity-insensitive computer keyboard
- Vocalized percussion:
  - Common practice: “beat-boxing”, tabla vocal notation
  - Few applications explicitly use vocalized percussion as input

Introduction

- Uses:
  - A method of percussion input, for composition or performance
  - A method of transcription that also captures expressive information
  - A method of retrieving stored percussion samples

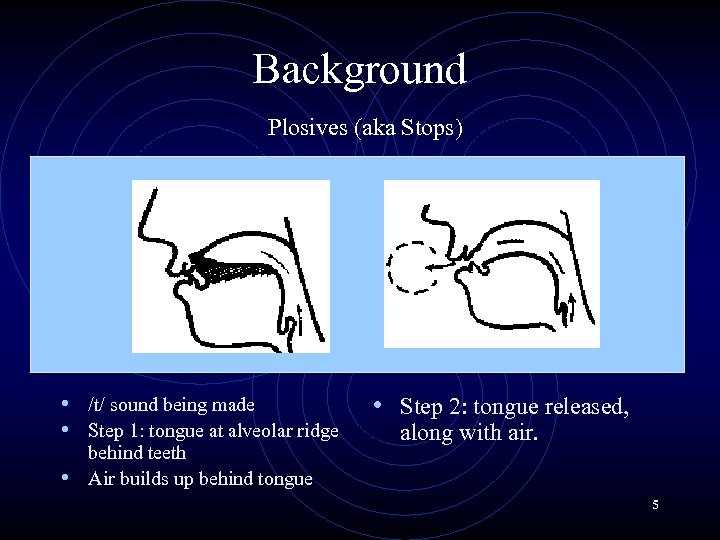

Background: Plosives (a.k.a. Stops)

- /t/ sound being made
- Step 1: the tongue is at the alveolar ridge behind the teeth, and air builds up behind it
- Step 2: the tongue is released, along with the air

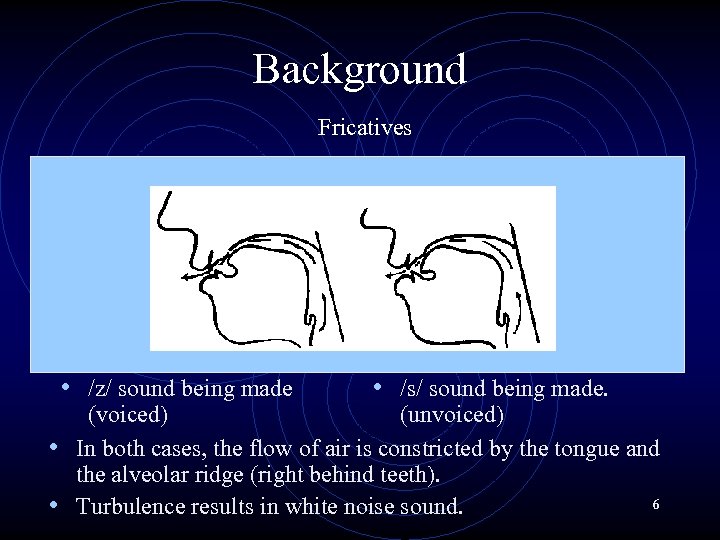

Background: Fricatives

- /z/ sound being made (voiced)
- /s/ sound being made (unvoiced)
- In both cases, the airflow is constricted between the tongue and the alveolar ridge (right behind the teeth).
- The resulting turbulence produces a broadband, noise-like sound.

Background

- Why does this matter?
  - Plosives and fricatives yield short signals (approximately 30 ms)
  - The signals are noisy and non-deterministic
  - They vary greatly from person to person

Common Methods

- Segment the monophonic input stream (onset detection)
  - Distinguish between silence and “beats”
- Analyse features
  - Temporal/spectral features
- Classify each sound based on its features and training data
  - e.g., ANN, minimum-distance criteria
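
The segmentation step can be sketched with a simple noise gate. This is a minimal illustration, not the method of any system discussed here: it assumes non-overlapping frames, and the function names `frame_peaks` and `segment`, the frame size `n`, and the threshold `gate` are all hypothetical. Onset detectors such as the relative difference function give more precise boundaries.

```python
def frame_peaks(x, n):
    """Peak absolute amplitude of consecutive, non-overlapping n-sample frames."""
    return [max(abs(s) for s in x[i:i + n])
            for i in range(0, len(x) - n + 1, n)]


def segment(x, n=100, gate=0.01):
    """Split a monophonic signal into (start, end) sample ranges of "beats".

    A frame whose peak amplitude exceeds `gate` is treated as part of a beat;
    runs of such frames are merged, and everything else counts as silence.
    """
    segments, start = [], None
    for k, peak in enumerate(frame_peaks(x, n)):
        if peak > gate and start is None:
            start = k * n                      # silence -> beat
        elif peak <= gate and start is not None:
            segments.append((start, k * n))    # beat -> silence
            start = None
    if start is not None:                      # beat still active at the end
        segments.append((start, len(x) // n * n))
    return segments


signal = [0.0] * 200 + [0.5, -0.5] * 100 + [0.0] * 200
print(segment(signal))  # [(200, 400)]
```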

![High-level diagram [XXX]](https://present5.com/presentation/6bb30d7693ade1288238b194e0d0637a/image-9.jpg)

High-level diagram [XXX]

Analysis Features

- Some time-domain features:
  - Root mean square (RMS): a measure of the energy level over a frame
  - Relative difference function (RDF): used to determine the perceptual onset
  - Zero-crossing rate (ZCR): a rough indicator of frequency content

Analysis Features

- Some frequency-domain features:
  - Spectral flux: a measure of how much the spectrum changes from one frame to the next
  - Spectral centroid: the “center of gravity” of the spectrum
  - Mel-frequency cepstral coefficients (MFCCs): a compact, perceptually relevant way to model the spectral envelope
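
Spectral flux has several definitions in the literature (some normalize the spectra first, some keep only increases in energy). As a sketch, the version below takes the Euclidean distance between the magnitude spectra of two consecutive frames. It uses a naive DFT rather than an FFT only to stay within the Python standard library, and the function names are illustrative.

```python
import cmath
import math


def magnitude_spectrum(frame):
    """Magnitudes |X[k]|, k = 0..N/2, from a direct O(N^2) DFT (fine for short frames)."""
    n = len(frame)
    return [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n)))
            for k in range(n // 2 + 1)]


def spectral_flux(prev_frame, frame):
    """Euclidean distance between the magnitude spectra of two equal-length frames."""
    prev_mag = magnitude_spectrum(prev_frame)
    mag = magnitude_spectrum(frame)
    return math.sqrt(sum((b - a) ** 2 for a, b in zip(prev_mag, mag)))


silence = [0.0, 0.0, 0.0, 0.0]
click = [1.0, 0.0, 0.0, 0.0]
print(spectral_flux(silence, click))  # sqrt(3), about 1.732
```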


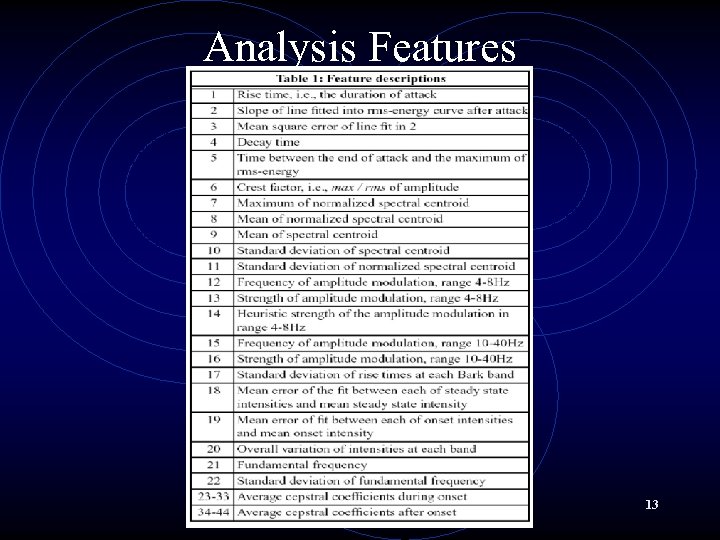

Analysis Features

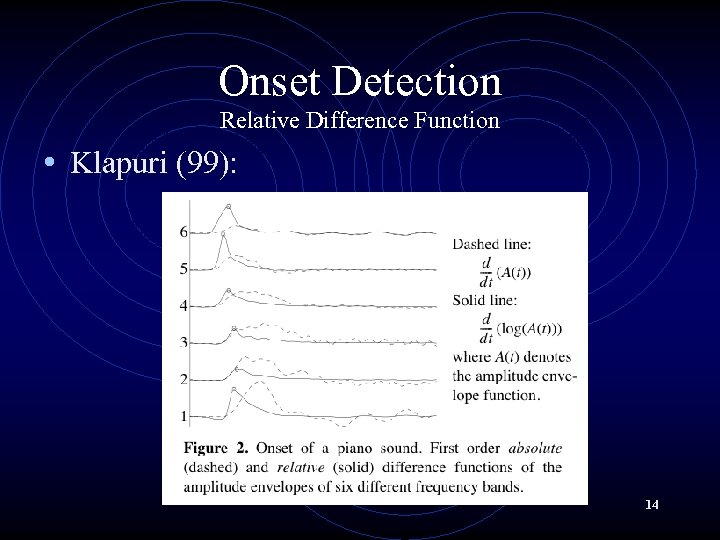

Onset Detection: Relative Difference Function (Klapuri, 1999)
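
For reference, Klapuri's relative difference function is the time derivative of the logarithm of the amplitude envelope $A(t)$:

$$
W(t) = \frac{d}{dt}\log A(t) = \frac{\frac{d}{dt}A(t)}{A(t)}
$$

Dividing by $A(t)$ is what makes the same absolute increase in amplitude register more strongly when the signal is quiet.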

Relative Difference Function

- “This is psychoacoustically relevant, since perceived increase in signal amplitude is in relation to its level, the same amount of increase being more prominent in a quiet signal.”
- Can be used to find the perceptual onset, whereas the physical onset may occur earlier.
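
A discrete, single-band sketch of the RDF follows. It assumes the amplitude envelope has already been computed (for example as frame RMS values) and simply takes the largest RDF value as the perceptual onset; Klapuri's full detector works across several frequency bands and applies thresholds, which this omits. The `eps` guard and the function names are assumptions of this sketch.

```python
import math


def relative_difference(envelope, eps=1e-9):
    """First difference of the log amplitude envelope, a discrete (dA/dt)/A.

    `eps` (an assumption of this sketch) avoids log(0) during silence.
    """
    logs = [math.log(a + eps) for a in envelope]
    return [b - a for a, b in zip(logs, logs[1:])]


def perceptual_onset(envelope, eps=1e-9):
    """Index of the envelope sample that follows the largest RDF value.

    Needs at least two envelope values.
    """
    rdf = relative_difference(envelope, eps)
    return max(range(len(rdf)), key=rdf.__getitem__) + 1


env = [0.01, 0.02, 0.5, 1.0, 0.9]
print(perceptual_onset(env))  # 2: 0.02 -> 0.5 is the largest relative rise
```

A plain first difference of the same envelope would peak one step later, at index 3 (0.5 to 1.0 is the largest absolute rise); the RDF instead weights each rise by the current level.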

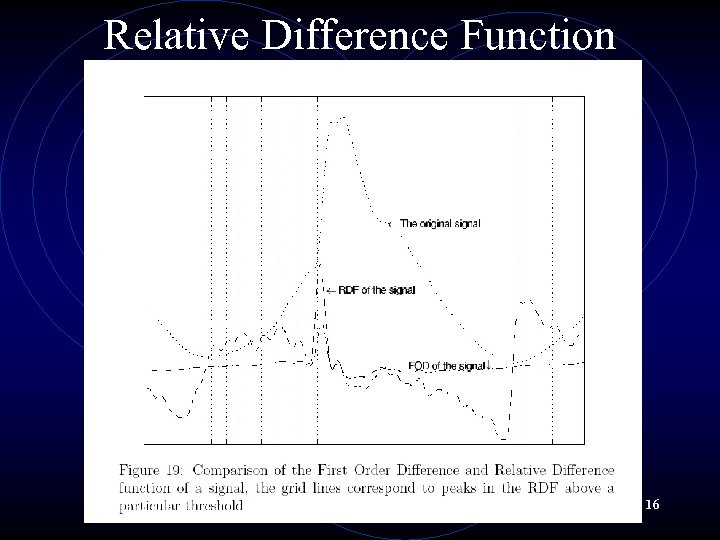

Relative Difference Function 16

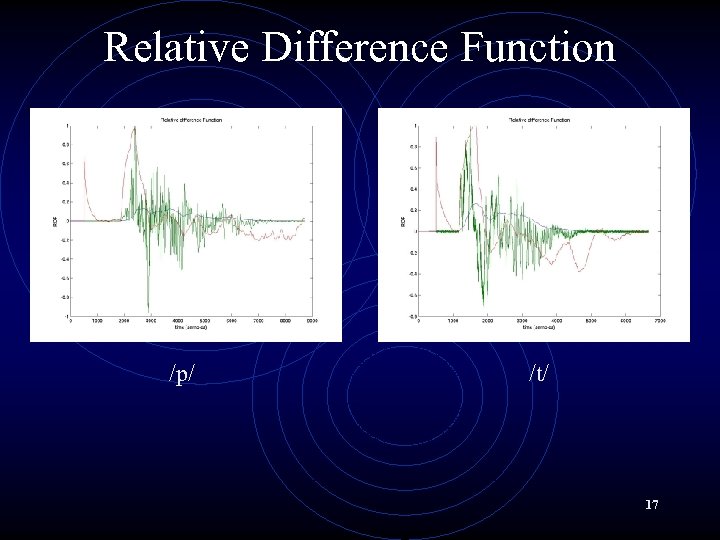

Relative Difference Function /p/ /t/ 17

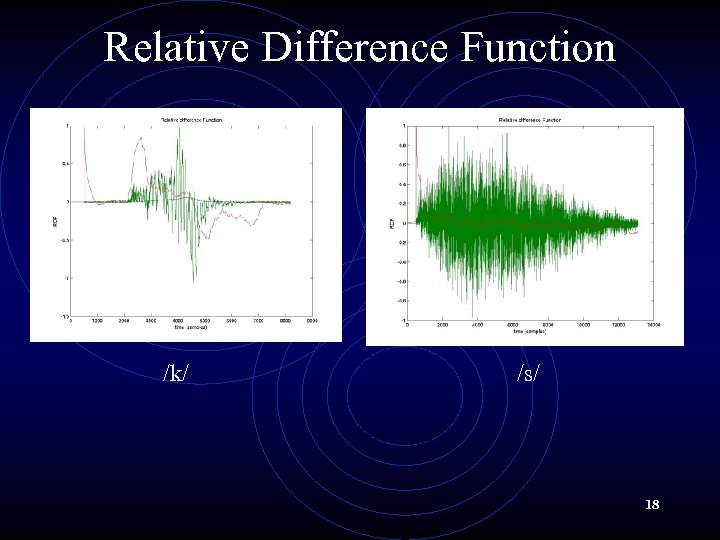

Relative Difference Function /k/ /s/ 18

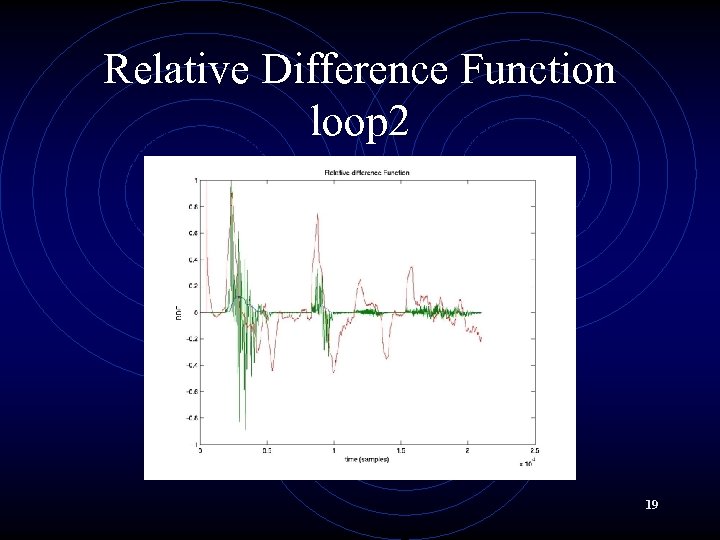

Relative Difference Function loop 2 19

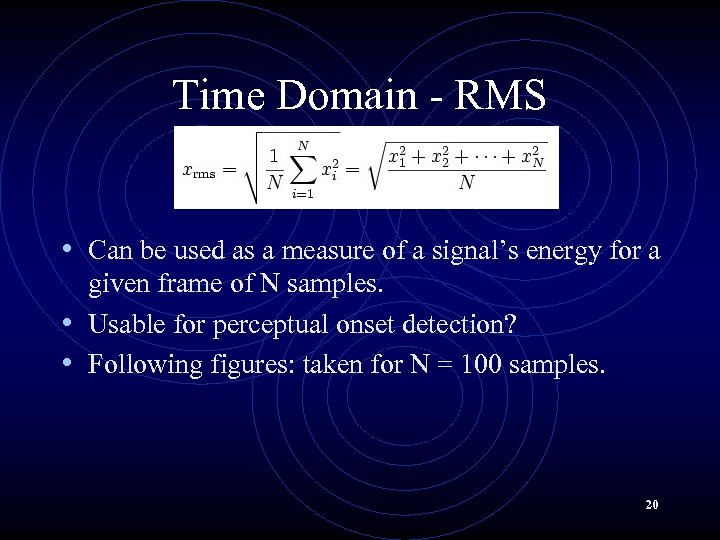

Time Domain: RMS

- Can be used as a measure of a signal’s energy over a frame of N samples
- Usable for perceptual onset detection?
- The following figures were computed with N = 100 samples
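
A frame-wise RMS curve like the ones in the following figures might be computed as below. The frame length `n` plays the role of N; the `hop` parameter (for overlapping frames) and the function names are additions of this sketch.

```python
import math


def rms(frame):
    """Root mean square of one frame: sqrt(sum(x^2) / N)."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def rms_curve(x, n=100, hop=None):
    """RMS of successive n-sample frames; `hop` defaults to n (no overlap)."""
    hop = hop or n
    return [rms(x[i:i + n]) for i in range(0, len(x) - n + 1, hop)]


x = [0.0] * 100 + [1.0, -1.0] * 50
print(rms_curve(x, n=100))  # [0.0, 1.0]
```

A larger N smooths the curve but also blurs the attack of a short plosive: at an assumed 44.1 kHz sampling rate (not stated in the slides), 100 samples span about 2.3 ms and 500 samples about 11.3 ms.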

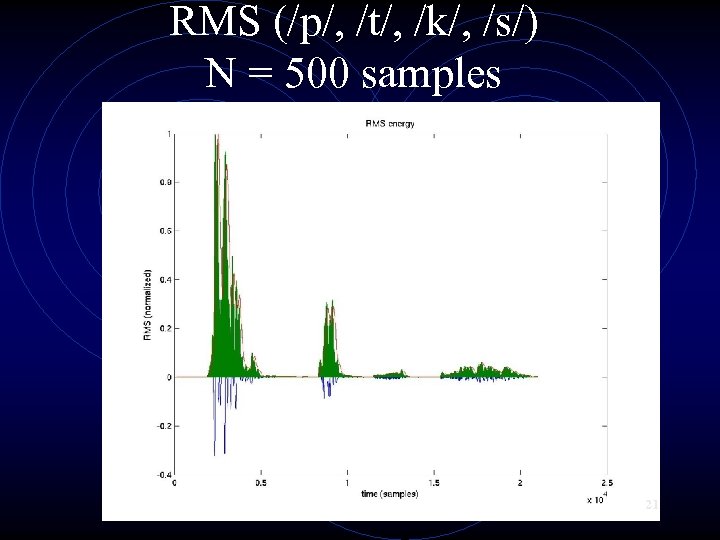

RMS (/p/, /t/, /k/, /s/) N = 500 samples 21

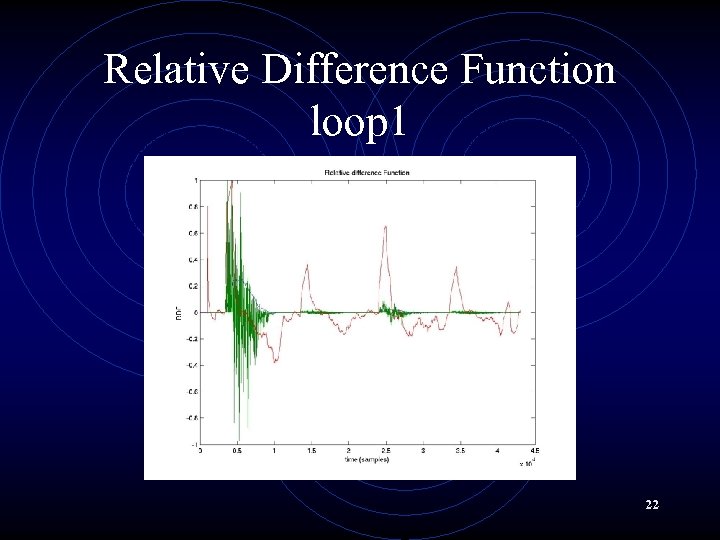

Relative Difference Function loop 1 22

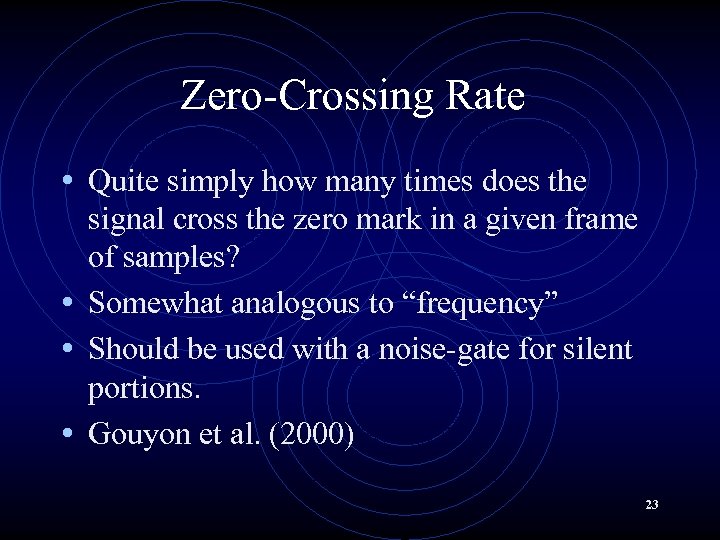

Zero-Crossing Rate

- Simply the number of times the signal crosses zero within a given frame of samples
- Somewhat analogous to “frequency”
- Should be combined with a noise gate so that silent portions are not counted
- Gouyon et al. (2000)
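
The zero-crossing count can be sketched as follows, with a peak-amplitude threshold standing in for the noise gate. The threshold value, the default frame length of 500 samples (matching the loop figures), and the function names are assumptions; dividing the count by the frame length turns it into a rate.

```python
def zero_crossings(frame, gate=0.0):
    """Number of sign changes in a frame, or 0 if the frame is gated as silence.

    `gate` is a hypothetical peak-amplitude threshold standing in for the noise
    gate: frames whose peak does not exceed it count as silence, so low-level
    background noise does not produce spurious crossings.
    """
    if max((abs(s) for s in frame), default=0.0) <= gate:
        return 0
    # Count consecutive sample pairs whose signs differ (zero counts as positive).
    return sum((a >= 0) != (b >= 0) for a, b in zip(frame, frame[1:]))


def zcr_curve(x, n=500, gate=0.01):
    """Zero-crossing count for each non-overlapping n-sample frame."""
    return [zero_crossings(x[i:i + n], gate) for i in range(0, len(x) - n + 1, n)]


print(zero_crossings([0.5, -0.5, 0.5, -0.5]))          # 3
print(zero_crossings([0.001, -0.001] * 4, gate=0.01))  # 0 (gated as silence)
```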

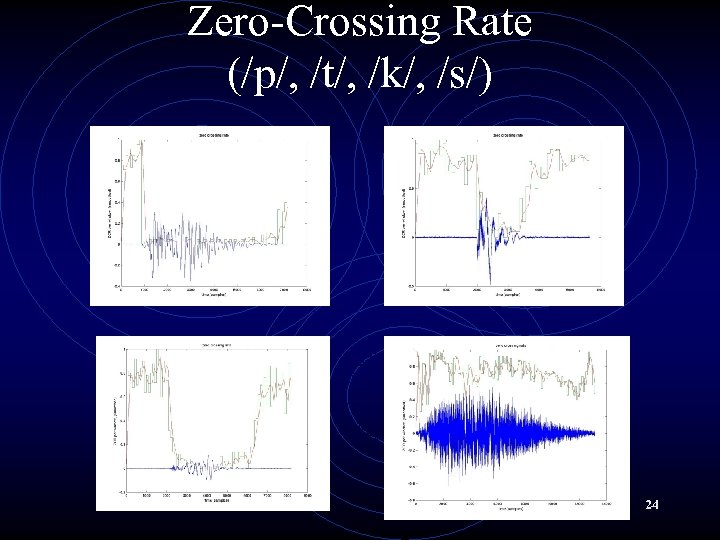

Zero-Crossing Rate (/p/, /t/, /k/, /s/) 24

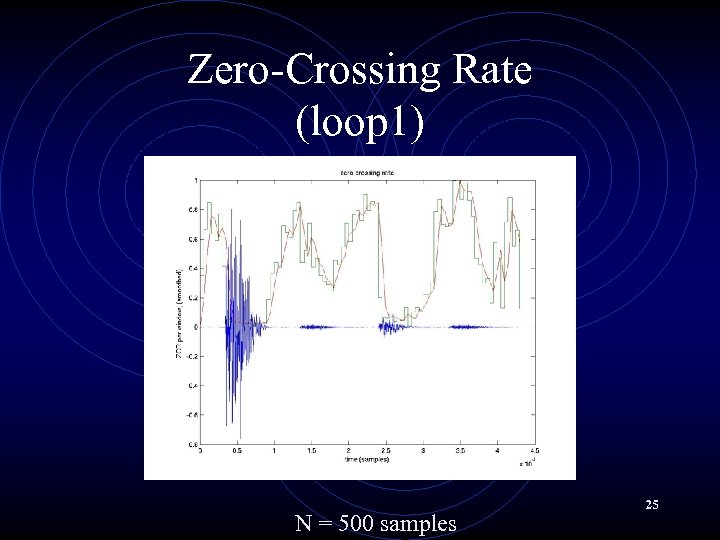

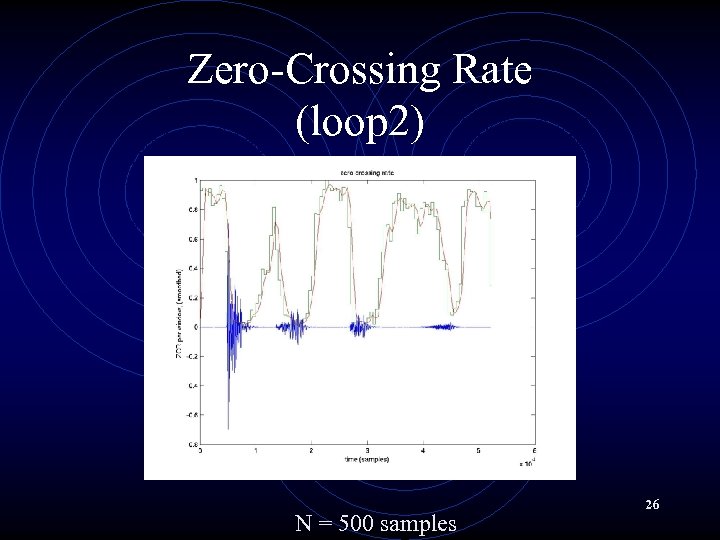

Zero-Crossing Rate (loop 1) N = 500 samples 25

Zero-Crossing Rate (loop 2) N = 500 samples 26

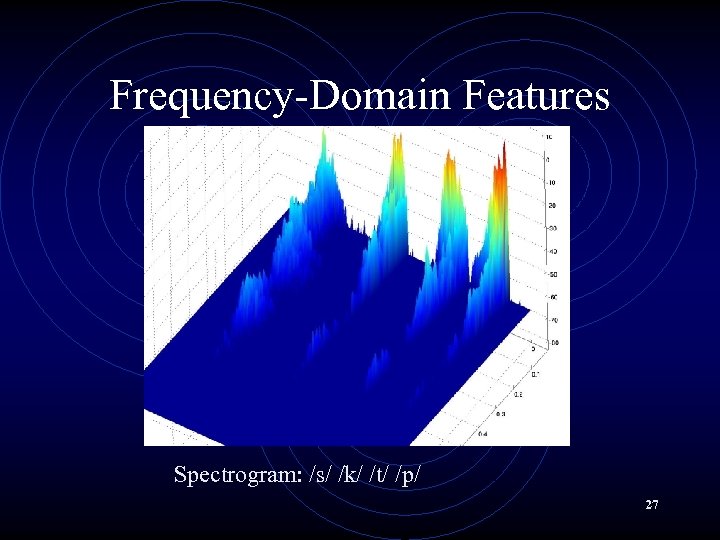

Frequency-Domain Features: Spectrogram (/s/, /k/, /t/, /p/)

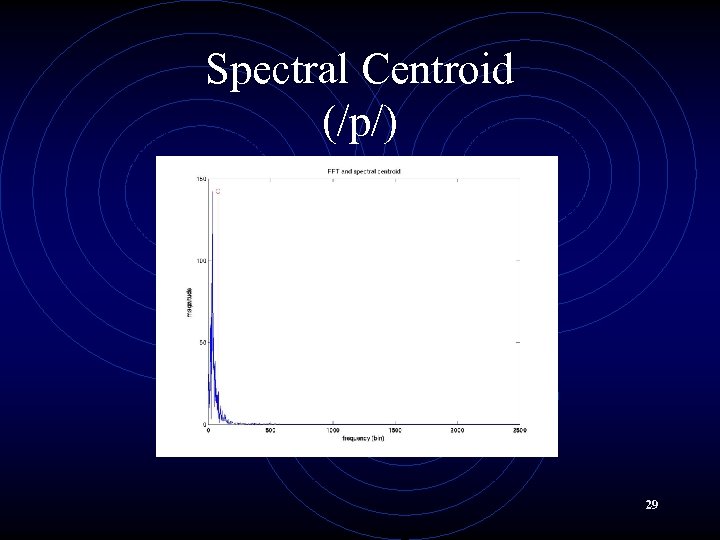

Frequency-Domain Features

- Spectral centroid (i.e., “center of gravity”):
  - For each frame: the sum of the frequencies weighted by their amplitudes, divided by the sum of the amplitudes
  - The balance point (mean) of the spectral distribution
  - Can be used as a rough estimate of “brightness”
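
The definition above translates directly into code. This is a minimal sketch that assumes a single real-valued frame and linear-magnitude weighting (some implementations weight by power instead); the function name and the naive DFT are choices of this illustration, made to stay within the standard library.

```python
import cmath
import math


def spectral_centroid(frame, sample_rate):
    """Amplitude-weighted mean frequency (Hz) of one frame's magnitude spectrum.

    A direct O(N^2) DFT keeps the sketch within the standard library.
    """
    n = len(frame)
    bins = range(n // 2 + 1)                      # 0 Hz up to Nyquist
    mags = [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n)))
            for k in bins]
    freqs = [k * sample_rate / n for k in bins]
    total = sum(mags)
    if total == 0:
        return 0.0                                # silent frame: report 0 Hz
    return sum(f * m for f, m in zip(freqs, mags)) / total


tone = [math.cos(2 * math.pi * 1000 * t / 8000) for t in range(64)]
print(round(spectral_centroid(tone, 8000)))  # 1000
```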

Spectral Centroid (/p/) 29

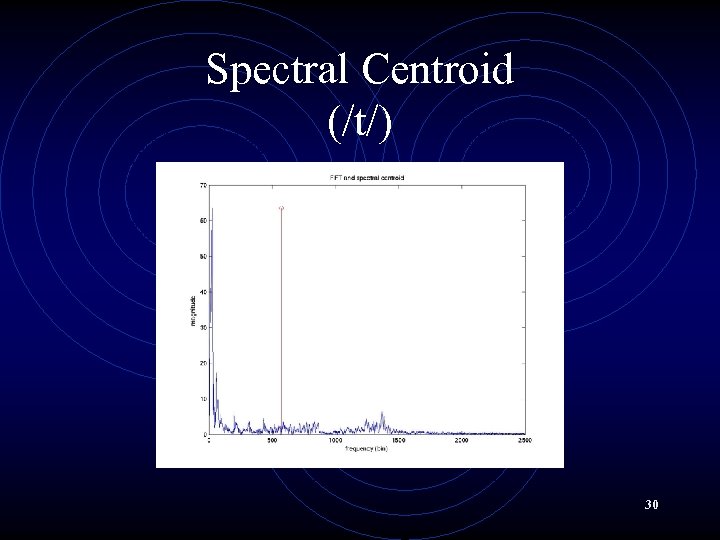

Spectral Centroid (/t/) 30

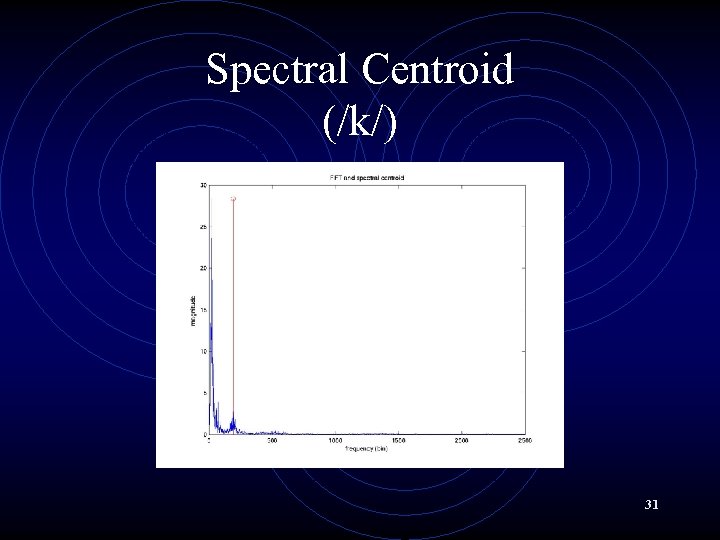

Spectral Centroid (/k/) 31

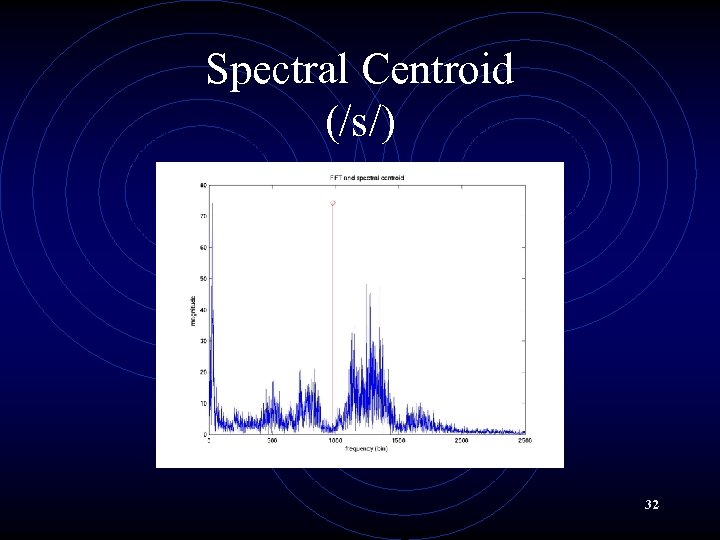

Spectral Centroid (/s/) 32

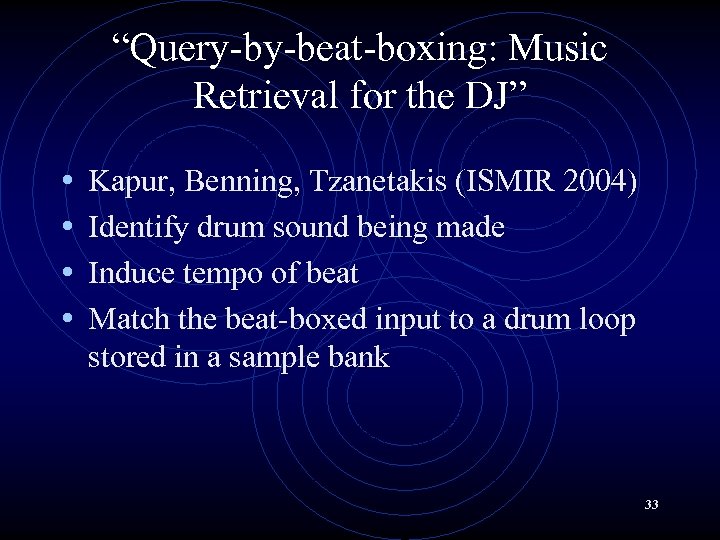

“Query-by-beat-boxing: Music Retrieval for the DJ”

- Kapur, Benning, Tzanetakis (ISMIR 2004)
- Identify the drum sound being made
- Induce the tempo of the beat
- Match the beat-boxed input to a drum loop stored in a sample bank

“Query-by-beat-boxing: Music Retrieval for the DJ”

- Pre-processed targets (drum loops created in Reason)
- Used ZCR, spectral centroid, spectral rolloff, and LPC as features for a neural network (NN)
- Experimented with the features to determine the most reliable feature set

“Query-by-beat-boxing: Music Retrieval for the DJ”

- Bionic BeatBoxing Voice Processor
  - The user provides 4 examples for each class of drum
  - The user beat-boxes along with a click track
  - The input beat is segmented, and each sound is classified by an ANN using ZCR
  - The result can be played back or used as input to MuseScape

“Query-by-beat-boxing: Music Retrieval for the DJ”

- MuseScape
  - The user enters a tempo and style (e.g., Dub, RnB, House)
  - Can use the analyzed beat-boxed loop

“A Drum Pattern Retrieval Method by Voice Percussion”

- Nakano, Ogata, Goto, Hiraga (ISMIR 2004)
- Users vocalize monophonic bass-drum/snare-drum patterns using onomatopoeia
- Inter-onset intervals (IOIs) are compared to stored drum sequences (all in 4/4, one measure long)
- Allows different consonants and vowels to be used for the drum sounds
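
Nakano et al. compare the IOIs of the vocalized pattern with those of stored one-measure drum sequences. The sketch below illustrates only the general idea of IOI matching, not their algorithm: it assumes onset times and the measure length are already known, expresses IOIs as fractions of a measure so that tempo cancels out, and returns the stored pattern at minimum Euclidean distance. The pattern names and values are hypothetical, and `math.dist` requires Python 3.8 or later.

```python
import math


def normalized_iois(onsets, measure_length):
    """Inter-onset intervals expressed as fractions of one measure."""
    return [(b - a) / measure_length for a, b in zip(onsets, onsets[1:])]


def retrieve(query, patterns):
    """Name of the stored pattern whose IOI vector is nearest the query.

    Patterns with a different number of intervals are skipped, a
    simplification of this sketch.
    """
    best, best_dist = None, math.inf
    for name, ioi in patterns.items():
        if len(ioi) != len(query):
            continue
        dist = math.dist(query, ioi)          # Euclidean distance
        if dist < best_dist:
            best, best_dist = name, dist
    return best


# Hypothetical one-measure 4/4 patterns, IOIs as fractions of the measure.
patterns = {
    "straight quarters": [0.25, 0.25, 0.25],
    "syncopated": [0.375, 0.125, 0.25],
}
# Vocalized onsets (seconds) at 120 BPM, where one 4/4 measure lasts 2 s.
query = normalized_iois([0.0, 0.52, 1.0, 1.49], measure_length=2.0)
print(retrieve(query, patterns))  # straight quarters
```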

“A Drum Pattern Retrieval Method by Voice Percussion”

- Typical onomatopoeic expressions of drum sounds (e.g., Don, Ton, Zu) are stored in a pronunciation dictionary
- Each onomatopoeic expression is mapped to a drum sound
- MFCCs are used as the analysis features

“Towards Automatic Transcription of Expressive Oral Percussive Performances”

- Hazan
- Goal: to create a symbolic representation of voice percussion that includes expressive features
- Used 28 features (10 temporal, 18 spectral)
- Tree induction and lazy learning (k-NN) were tested for accuracy
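
Hazan compared tree induction with lazy learning (k-NN). A generic k-nearest-neighbour classifier looks roughly like this. It is not Hazan's configuration (which used 28 features); the two-dimensional feature vectors, the labels, and `k = 3` are hypothetical.

```python
import math
from collections import Counter


def knn_classify(x, training, k=3):
    """Majority label among the k training examples nearest to x (Euclidean).

    `training` is a list of (feature_vector, label) pairs. Neighbours are
    sorted by distance, and Counter.most_common keeps first-encountered order
    on ties, so among tied labels the one with the closest neighbour wins.
    """
    nearest = sorted(training, key=lambda item: math.dist(x, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical 2-D feature vectors (e.g. normalized ZCR and spectral centroid).
training = [
    ((0.10, 0.20), "kick"), ((0.15, 0.10), "kick"), ((0.20, 0.15), "kick"),
    ((0.80, 0.90), "hi-hat"), ((0.90, 0.85), "hi-hat"), ((0.85, 0.80), "hi-hat"),
]
print(knn_classify((0.2, 0.25), training))  # kick
```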

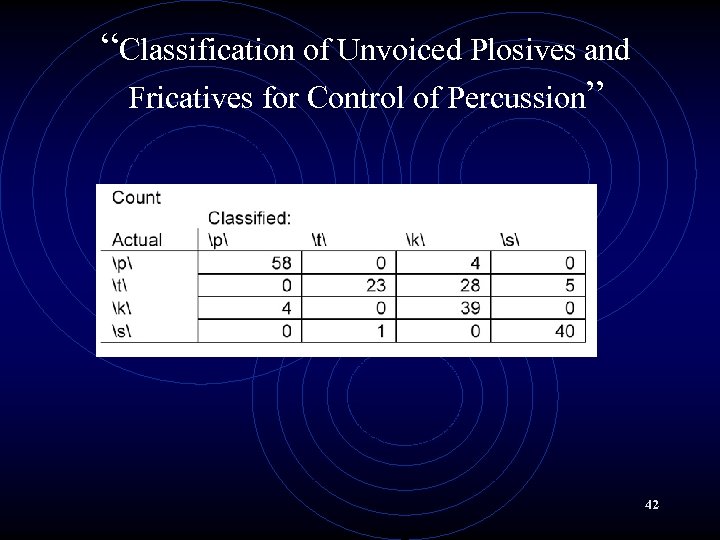

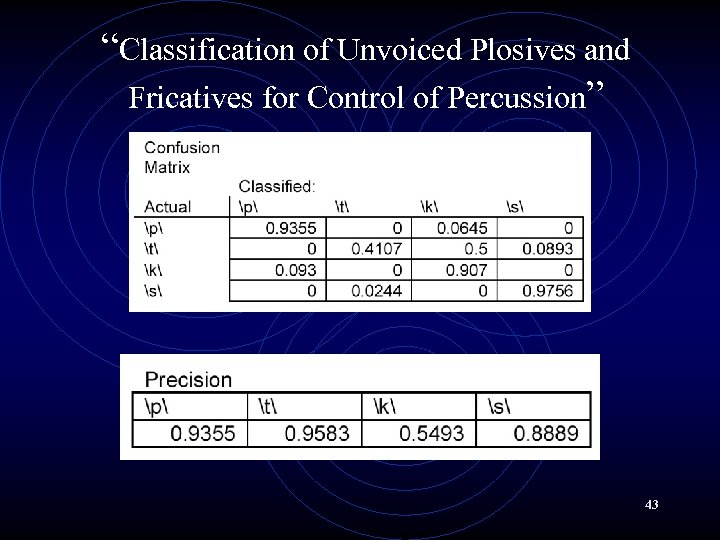

“Classification of Unvoiced Plosives and Fricatives for Control of Percussion”

- Sought to distinguish between the /p/, /t/, /k/, and /s/ sounds
- Used 5 features and a minimum-distance criterion to classify them
- Implemented in MATLAB
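
The minimum-distance criterion can be sketched as a nearest-centroid classifier: average the training examples of each class, then assign a new sound to the class whose mean is nearest. This is an assumption about how the criterion was applied, using Euclidean distance; the project's five features are not listed here, so the two-feature data and the function names below are hypothetical.

```python
import math


def train_centroids(examples):
    """Mean feature vector per class, from {label: [feature_vector, ...]}."""
    return {label: [sum(column) / len(vectors) for column in zip(*vectors)]
            for label, vectors in examples.items()}


def classify(features, centroids):
    """Minimum-distance rule: return the label whose centroid is nearest."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


# Hypothetical training data: two normalized features per example.
examples = {
    "/p/": [[0.10, 0.20], [0.20, 0.10]],
    "/s/": [[0.90, 0.80], [0.80, 0.90]],
}
centroids = train_centroids(examples)
print(classify([0.70, 0.75], centroids))  # /s/
```

Features with very different ranges (a zero-crossing count versus a centroid in hertz, say) should be normalized first, for example to z-scores; otherwise the largest-valued feature dominates the distance.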

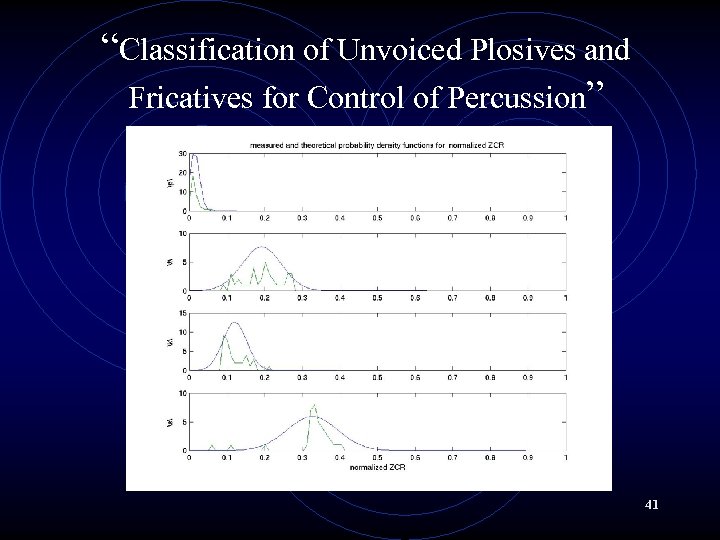

“Classification of Unvoiced Plosives and Fricatives for Control of Percussion”

References

- A. Kapur, M. Benning, and G. Tzanetakis, “Query-by-beat-boxing: music retrieval for the DJ”, Proc. Int. Conf. Music Information Retrieval (ISMIR), Barcelona, Spain, 2004.
- T. Nakano, J. Ogata, M. Goto, and Y. Hiraga, “A drum pattern retrieval method by voice percussion”, Proc. Int. Conf. Music Information Retrieval (ISMIR), Barcelona, Spain, 2004.
- A. Hazan, “Towards automatic transcription of expressive oral percussive performances”, Proc. of the 10th International Conference on Intelligent User Interfaces, San Diego, 2005.
- F. Gouyon, F. Pachet, and O. Delerue, “On the use of zero-crossing rate for an application of classification of percussive sounds”, Proc. of the COST G-6 Conf. on Digital Audio Effects (DAFX-00), Verona, Italy, 2000.
- A. Klapuri, “Sound onset detection by applying psychoacoustic knowledge”, Proc. IEEE Int. Conf. Acoustics, Speech, and Signal Processing (ICASSP), 1999.
