b1435dadc30e95b94d654005e4f02a77.ppt

- Количество слайдов: 52

Transactions The terminology used in this section is that all users (online interactive users or batch programs) issue transactions to the DBMS. A TRANSACTION is an atomic unit of database work specified to the DBMS. Transactions are often called QUERIES when they request only read access (i. e. , QUERIES are READ-ONLY TRANSACTIONS) A transaction is issued using constructs such as reserved words, BEGIN to initiate a transaction (most actual system supply the BEGIN if the user doesn't, e. g. , whenever a new SQL statement is encountered it is assumed to iniate a new transaction) END to end a transaction (usually either COMMIT for successful END and ABORT for unsuccessful END) (most actual system supply this element if the user doesn't, e. g. , If SQL statement execution is successful, Then DBMS supplies COMMIT, else ABORT) READ whenever any data is needed from the DB (e. g. , in an SQL SELECT) WRITE whenever any data needs to be written to the DB (e. g. , in an SQL INSERT or UPDATE) In this set of notes, all others aspects of language, coding, etc. will be considered as unintrepreted aspects. For the purposes of transaction management (Concurrency Control and Recovery) we only need to consider this level of detail.

Transactions The terminology used in this section is that all users (online interactive users or batch programs) issue transactions to the DBMS. A TRANSACTION is an atomic unit of database work specified to the DBMS. Transactions are often called QUERIES when they request only read access (i. e. , QUERIES are READ-ONLY TRANSACTIONS) A transaction is issued using constructs such as reserved words, BEGIN to initiate a transaction (most actual system supply the BEGIN if the user doesn't, e. g. , whenever a new SQL statement is encountered it is assumed to iniate a new transaction) END to end a transaction (usually either COMMIT for successful END and ABORT for unsuccessful END) (most actual system supply this element if the user doesn't, e. g. , If SQL statement execution is successful, Then DBMS supplies COMMIT, else ABORT) READ whenever any data is needed from the DB (e. g. , in an SQL SELECT) WRITE whenever any data needs to be written to the DB (e. g. , in an SQL INSERT or UPDATE) In this set of notes, all others aspects of language, coding, etc. will be considered as unintrepreted aspects. For the purposes of transaction management (Concurrency Control and Recovery) we only need to consider this level of detail.

Transactions cont. ATOMIC unit of work means that the DBMS guarantees that unit will be done to completion or not at all (in which case, the DB and User community will be left just as they were when the request came. i. e. , as if the unit of work never existed) A Transaction is defined by all work specified between a BEGIN statement and the next encountered END (either COMMIT or ABORT) When a transaction arrives at the DBMS, a Transaction Manager (TM) is assigned to it (code segment to act on its behalf). The TM interfaces with other components, e. g. , the Scheduler (SCHED) for permission to access particular data items SCHED is like a policeman, giving permission to access the requested item(s). Its activity is called concurrency control. Once permission is granted for TM to access data items Data Manager (DM) does the actual reads and writes. There are several models for describing this interaction. We will describe two of them, Model-1 and Model-2.

Transactions cont. ATOMIC unit of work means that the DBMS guarantees that unit will be done to completion or not at all (in which case, the DB and User community will be left just as they were when the request came. i. e. , as if the unit of work never existed) A Transaction is defined by all work specified between a BEGIN statement and the next encountered END (either COMMIT or ABORT) When a transaction arrives at the DBMS, a Transaction Manager (TM) is assigned to it (code segment to act on its behalf). The TM interfaces with other components, e. g. , the Scheduler (SCHED) for permission to access particular data items SCHED is like a policeman, giving permission to access the requested item(s). Its activity is called concurrency control. Once permission is granted for TM to access data items Data Manager (DM) does the actual reads and writes. There are several models for describing this interaction. We will describe two of them, Model-1 and Model-2.

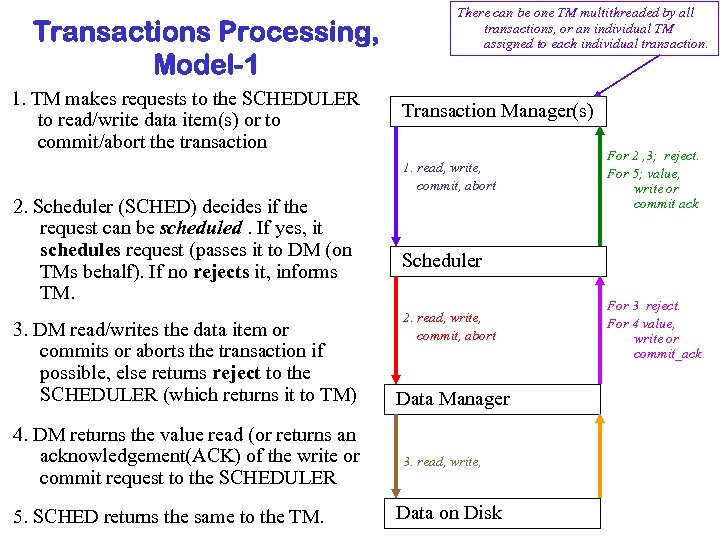

Transactions Processing, Model-1 1. TM makes requests to the SCHEDULER to read/write data item(s) or to commit/abort the transaction There can be one TM multithreaded by all transactions, or an individual TM assigned to each individual transaction. Transaction Manager(s) 1. read, write, commit, abort 2. Scheduler (SCHED) decides if the request can be scheduled. If yes, it schedules request (passes it to DM (on TMs behalf). If no rejects it, informs TM. 3. DM read/writes the data item or commits or aborts the transaction if possible, else returns reject to the SCHEDULER (which returns it to TM) 4. DM returns the value read (or returns an acknowledgement(ACK) of the write or commit request to the SCHEDULER 5. SCHED returns the same to the TM. For 2 , 3; reject. For 5; value, write or commit ack Scheduler 2. read, write, commit, abort Data Manager 3. read, write, Data on Disk For 3 reject. For 4 value, write or commit_ack

Transactions Processing, Model-1 1. TM makes requests to the SCHEDULER to read/write data item(s) or to commit/abort the transaction There can be one TM multithreaded by all transactions, or an individual TM assigned to each individual transaction. Transaction Manager(s) 1. read, write, commit, abort 2. Scheduler (SCHED) decides if the request can be scheduled. If yes, it schedules request (passes it to DM (on TMs behalf). If no rejects it, informs TM. 3. DM read/writes the data item or commits or aborts the transaction if possible, else returns reject to the SCHEDULER (which returns it to TM) 4. DM returns the value read (or returns an acknowledgement(ACK) of the write or commit request to the SCHEDULER 5. SCHED returns the same to the TM. For 2 , 3; reject. For 5; value, write or commit ack Scheduler 2. read, write, commit, abort Data Manager 3. read, write, Data on Disk For 3 reject. For 4 value, write or commit_ack

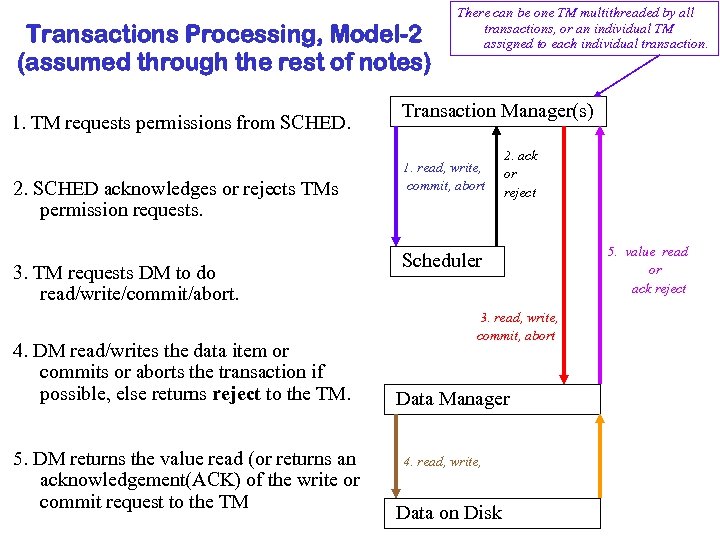

Transactions Processing, Model-2 (assumed through the rest of notes) 1. TM requests permissions from SCHED. 2. SCHED acknowledges or rejects TMs permission requests. 3. TM requests DM to do read/write/commit/abort. 4. DM read/writes the data item or commits or aborts the transaction if possible, else returns reject to the TM. 5. DM returns the value read (or returns an acknowledgement(ACK) of the write or commit request to the TM There can be one TM multithreaded by all transactions, or an individual TM assigned to each individual transaction. Transaction Manager(s) 1. read, write, commit, abort 2. ack or reject Scheduler 3. read, write, commit, abort Data Manager 4. read, write, Data on Disk 5. value read or ack reject

Transactions Processing, Model-2 (assumed through the rest of notes) 1. TM requests permissions from SCHED. 2. SCHED acknowledges or rejects TMs permission requests. 3. TM requests DM to do read/write/commit/abort. 4. DM read/writes the data item or commits or aborts the transaction if possible, else returns reject to the TM. 5. DM returns the value read (or returns an acknowledgement(ACK) of the write or commit request to the TM There can be one TM multithreaded by all transactions, or an individual TM assigned to each individual transaction. Transaction Manager(s) 1. read, write, commit, abort 2. ack or reject Scheduler 3. read, write, commit, abort Data Manager 4. read, write, Data on Disk 5. value read or ack reject

Concurrency Control (the activity of the scheduler, SCHED) We need concurrency control or CC (AKA mutual exclusion) whenever there are shared system resources that cannot be used concurrently. An illegal concurrent use of a shared resource is a conflict, e. g. , printer, or a data item that one user wants to read another wants to change. IN DBMSs the shared resources we will call data items. DATA ITEM GRANULARITY is the level at which we treat CC. The possible Granularity levels are: field level (logical level, very fine granularity) record level (logical level, fine granularity) page level (physical level, medium granularity) file level (logical level, coarse granularity) area level (logical level, quite coarse granularity) database level (logical level, very coarse granularity)

Concurrency Control (the activity of the scheduler, SCHED) We need concurrency control or CC (AKA mutual exclusion) whenever there are shared system resources that cannot be used concurrently. An illegal concurrent use of a shared resource is a conflict, e. g. , printer, or a data item that one user wants to read another wants to change. IN DBMSs the shared resources we will call data items. DATA ITEM GRANULARITY is the level at which we treat CC. The possible Granularity levels are: field level (logical level, very fine granularity) record level (logical level, fine granularity) page level (physical level, medium granularity) file level (logical level, coarse granularity) area level (logical level, quite coarse granularity) database level (logical level, very coarse granularity)

Concurrency Control cont. We will assume, that a data item is a record (i. e. , we assume logical, record-level granularity) This means there are many more shared resources for DBMS to manage than there anywhere else, (e. g. , printers for an O/S to manage), and therefore, CC is a harder problem to solve in a DBMS than anywhere else! A DBMS may have 1, 000 records or more. An O/S may have to manage ~ 50 printers. Ethernet Medium Access Protocol (unswitched) manages ONE shared wire. Although you may have studied mutual exclusion before (e. g. , in an Operating Systems course it is a more complicated problem in DBMS.

Concurrency Control cont. We will assume, that a data item is a record (i. e. , we assume logical, record-level granularity) This means there are many more shared resources for DBMS to manage than there anywhere else, (e. g. , printers for an O/S to manage), and therefore, CC is a harder problem to solve in a DBMS than anywhere else! A DBMS may have 1, 000 records or more. An O/S may have to manage ~ 50 printers. Ethernet Medium Access Protocol (unswitched) manages ONE shared wire. Although you may have studied mutual exclusion before (e. g. , in an Operating Systems course it is a more complicated problem in DBMS.

Concurrency Control cont. In any resource management situation (Operating System(OS), Network Operating System(NOS) or DBMS. . . ) there are "shared resources" and there are "users" SHARED RESOURCE MANAGEMENT: How can the system insure correct access to shared resources among concurrently executing transactions? All answers seem to come from traffic control and managment! (traffic intersections or construction zones or driveup windows). The are in two categories: WAITING POLICY: If a needed resource is unavailable, requester waits until it becomes available (e. g. , intersection red light, Hardees drive up lane). This is how print jobs are managed by an OS Advantages: NO RESTARTING (no unnecessary loss of progress) e. g. , At Hardees, they don't say "Go home! Come back later! Disadvantages: DEADLOCKS may happen unless they are managed. e. g. , at a construction zone, if the two flag women don't coordinate, both traffic lines may start into the construction zones from opposite directions and DEADLOCK in the middle!). Another disadvantage is INCONSISTENT RESPONSE TIMES. At the Hardees window, you may wait an hour or a minute. (Not so important at Hardees (well maybe it is if you're very hungry? ; -), but it is very important at, e. g. , at your local Emergency Room).

Concurrency Control cont. In any resource management situation (Operating System(OS), Network Operating System(NOS) or DBMS. . . ) there are "shared resources" and there are "users" SHARED RESOURCE MANAGEMENT: How can the system insure correct access to shared resources among concurrently executing transactions? All answers seem to come from traffic control and managment! (traffic intersections or construction zones or driveup windows). The are in two categories: WAITING POLICY: If a needed resource is unavailable, requester waits until it becomes available (e. g. , intersection red light, Hardees drive up lane). This is how print jobs are managed by an OS Advantages: NO RESTARTING (no unnecessary loss of progress) e. g. , At Hardees, they don't say "Go home! Come back later! Disadvantages: DEADLOCKS may happen unless they are managed. e. g. , at a construction zone, if the two flag women don't coordinate, both traffic lines may start into the construction zones from opposite directions and DEADLOCK in the middle!). Another disadvantage is INCONSISTENT RESPONSE TIMES. At the Hardees window, you may wait an hour or a minute. (Not so important at Hardees (well maybe it is if you're very hungry? ; -), but it is very important at, e. g. , at your local Emergency Room).

Concurrency Control cont. SHARED RESOURCE MANAGEMENT: How can the system insure correct access to shared resources among concurrently executing transactions? All answers seem to come from traffic control and managment! (traffic intersections or construction zones or driveup windows). The second of the two categories is: RESTART POLICY: If a needed resource is unavailable, then the requester terminates the request and restarts requesting later. e. g. , When someone goes before the parole board: They either get their request or they restart the process later (much later? ; -( In Ethernet (unswitched) CSMA/CD, if node A wants to send a message to node B: 1. Carrier Sense (the "CS" part): the wire is checked for traffic; if it is busy (in use by another sender), A waits (according to some "back-off algorithm") then checks again, etc. until the wire is idle, then SENDs the message. 2. Collision Detection (the "CD" part): listen to bus until you're certain that your message did not collide with another concurrently sent message (the required length of wait time is the traversal_time of wire, since there are terminators (absorbers) at each end). Advantages of restart policies: simple, no deadlock Disadvantages: Lower throughput, lost progress, long delays? , possible livelock.

Concurrency Control cont. SHARED RESOURCE MANAGEMENT: How can the system insure correct access to shared resources among concurrently executing transactions? All answers seem to come from traffic control and managment! (traffic intersections or construction zones or driveup windows). The second of the two categories is: RESTART POLICY: If a needed resource is unavailable, then the requester terminates the request and restarts requesting later. e. g. , When someone goes before the parole board: They either get their request or they restart the process later (much later? ; -( In Ethernet (unswitched) CSMA/CD, if node A wants to send a message to node B: 1. Carrier Sense (the "CS" part): the wire is checked for traffic; if it is busy (in use by another sender), A waits (according to some "back-off algorithm") then checks again, etc. until the wire is idle, then SENDs the message. 2. Collision Detection (the "CD" part): listen to bus until you're certain that your message did not collide with another concurrently sent message (the required length of wait time is the traversal_time of wire, since there are terminators (absorbers) at each end). Advantages of restart policies: simple, no deadlock Disadvantages: Lower throughput, lost progress, long delays? , possible livelock.

Concurrency Control cont. A Transaction = A computation or program taking the database from one consistent state to another (without necessarily preserving consistency at each step of the way). The transaction is an atomic unit of database work, ie, DBMS executes transaction to completion or not at all, GUARANTEED. If only one transaction is allowed to execute at time and if the database starts in a consistent state (obeying all Integrity Constraints or ICs) then it will always end up in a consistent state! The problem is, the above SERIAL EXECUTION is much too inefficient! A DBMS (is supposed to) guarantee the ACIDS PROPERTIES of transactions: ATOMICITY: A transaction is an all-or-nothing proposition. Either a transaction is executed by the DBMS to completion or all of its effects are erased completely. (Transaction = atomic unit of database workload). CONSISTENCY: Correct Transactions take the database from one consistent state to another consistent state. Consistency is defined in terms of consistency constraints or "integrity constraints", e. g. , entity integrity, referential integrity, other integrities. ISOLATION: Each user is given the illusion of being the sole user of the system (by the concurrency control subsystem). DURABILITY: The effects of a transaction are never lost after it is "committed" by the DBMS. (ie, after a COMMIT request is acked by DBMS).

Concurrency Control cont. A Transaction = A computation or program taking the database from one consistent state to another (without necessarily preserving consistency at each step of the way). The transaction is an atomic unit of database work, ie, DBMS executes transaction to completion or not at all, GUARANTEED. If only one transaction is allowed to execute at time and if the database starts in a consistent state (obeying all Integrity Constraints or ICs) then it will always end up in a consistent state! The problem is, the above SERIAL EXECUTION is much too inefficient! A DBMS (is supposed to) guarantee the ACIDS PROPERTIES of transactions: ATOMICITY: A transaction is an all-or-nothing proposition. Either a transaction is executed by the DBMS to completion or all of its effects are erased completely. (Transaction = atomic unit of database workload). CONSISTENCY: Correct Transactions take the database from one consistent state to another consistent state. Consistency is defined in terms of consistency constraints or "integrity constraints", e. g. , entity integrity, referential integrity, other integrities. ISOLATION: Each user is given the illusion of being the sole user of the system (by the concurrency control subsystem). DURABILITY: The effects of a transaction are never lost after it is "committed" by the DBMS. (ie, after a COMMIT request is acked by DBMS).

CC execution types SERIAL EXECUTION insures most of the ACID properties (Consistency and isolation for sure. It also helps in atomicity and durability). i. e. , queue all transactions as they come in (into a FIFO queue? ). Let each transaction execute to completion before the next even starts. Serial execution may produce unacceptable execution delays (i. e. , long response times) and low system utilization. SERIALIZABLE EXECUTION is much, much better! Concurrent execution of multiple transactions is called serializable if the effect of the execution of operations (reads and writes) within the transactions are sequenced in a way that the result is equivalent to some serial execution (i. e. , is as if it was done by a serial execution of transaction operations). Serializability facilitates ATOMICITY, CONSISTENCY and ISOLATION of concurrent, correct transactions, just as well as SERIAL does, but allow much higher system throughput. RECOVERABILITY facilitates DURABILITY (more on this later). An execution is RECOVERABLE if every transactions that commits, commits only after every other transaction it read-from is committed.

CC execution types SERIAL EXECUTION insures most of the ACID properties (Consistency and isolation for sure. It also helps in atomicity and durability). i. e. , queue all transactions as they come in (into a FIFO queue? ). Let each transaction execute to completion before the next even starts. Serial execution may produce unacceptable execution delays (i. e. , long response times) and low system utilization. SERIALIZABLE EXECUTION is much, much better! Concurrent execution of multiple transactions is called serializable if the effect of the execution of operations (reads and writes) within the transactions are sequenced in a way that the result is equivalent to some serial execution (i. e. , is as if it was done by a serial execution of transaction operations). Serializability facilitates ATOMICITY, CONSISTENCY and ISOLATION of concurrent, correct transactions, just as well as SERIAL does, but allow much higher system throughput. RECOVERABILITY facilitates DURABILITY (more on this later). An execution is RECOVERABLE if every transactions that commits, commits only after every other transaction it read-from is committed.

Isolation Levels SQL defines execution types or levels of isolation weaker than SERIALIZABILITY (they do not guarantee ACIDS properties entirely, but they are easier to achieve). REPEATABLE READ ensures that no value read or written by a transaction, T, is changed by any other transaction until T is complete; and that T can read only changes made by committed transactions. READ COMMITTED ensures that no value written by a transaction, T is changed by any other transaction until T is complete; and that T can read only changes made by committed transactions. READ UNCOMMITTED ensures nothing (T can read changes made to an item by an ongoing trans and the item can be further changed while T is in progress. There will be further discussion on these later in these notes. For now, please note there are several suggested paper topics in the topics file concerning isolation levels. But also note that I think these other isolation levels are bunk!

Isolation Levels SQL defines execution types or levels of isolation weaker than SERIALIZABILITY (they do not guarantee ACIDS properties entirely, but they are easier to achieve). REPEATABLE READ ensures that no value read or written by a transaction, T, is changed by any other transaction until T is complete; and that T can read only changes made by committed transactions. READ COMMITTED ensures that no value written by a transaction, T is changed by any other transaction until T is complete; and that T can read only changes made by committed transactions. READ UNCOMMITTED ensures nothing (T can read changes made to an item by an ongoing trans and the item can be further changed while T is in progress. There will be further discussion on these later in these notes. For now, please note there are several suggested paper topics in the topics file concerning isolation levels. But also note that I think these other isolation levels are bunk!

Concurrent Transactions are transactions whose executions overlaps in time (the individual operations (read/write of a particular data item) may be interleaved in time). Again, the only operations we concern ourselves with are BEGIN, READ, WRITE, COMMIT, ABORT. READ and WRITE are the operations that apply to data items. A data item can be a field, record, file, area or DB (logical granules) or page (physical granule). We assume record-level granularity. A read(X) operation, reads current value of the data item, X, into a program variable (which we will also called X for simplicity). Even though we will not concern our selves with these details in this section, read(X) includes the following steps: 1. Find the address of the page containing X. 2. Copy that page to a main memory buffer (unless it is already in memory). 3. Copy the value of the dataitem, X, from the buffer to the program variable, X The write(X) operation, writes the value of the program variable, X, into the database item X. It includes the following steps: 1. Find the address of the page containing X 2. Copy that page to a main memory buffer (unless it is already in memory). 3. Copy the program variable, X, to buffer area for X. 4. Write the buffer back to disk (can be deferred and is governed by DM).

Concurrent Transactions are transactions whose executions overlaps in time (the individual operations (read/write of a particular data item) may be interleaved in time). Again, the only operations we concern ourselves with are BEGIN, READ, WRITE, COMMIT, ABORT. READ and WRITE are the operations that apply to data items. A data item can be a field, record, file, area or DB (logical granules) or page (physical granule). We assume record-level granularity. A read(X) operation, reads current value of the data item, X, into a program variable (which we will also called X for simplicity). Even though we will not concern our selves with these details in this section, read(X) includes the following steps: 1. Find the address of the page containing X. 2. Copy that page to a main memory buffer (unless it is already in memory). 3. Copy the value of the dataitem, X, from the buffer to the program variable, X The write(X) operation, writes the value of the program variable, X, into the database item X. It includes the following steps: 1. Find the address of the page containing X 2. Copy that page to a main memory buffer (unless it is already in memory). 3. Copy the program variable, X, to buffer area for X. 4. Write the buffer back to disk (can be deferred and is governed by DM).

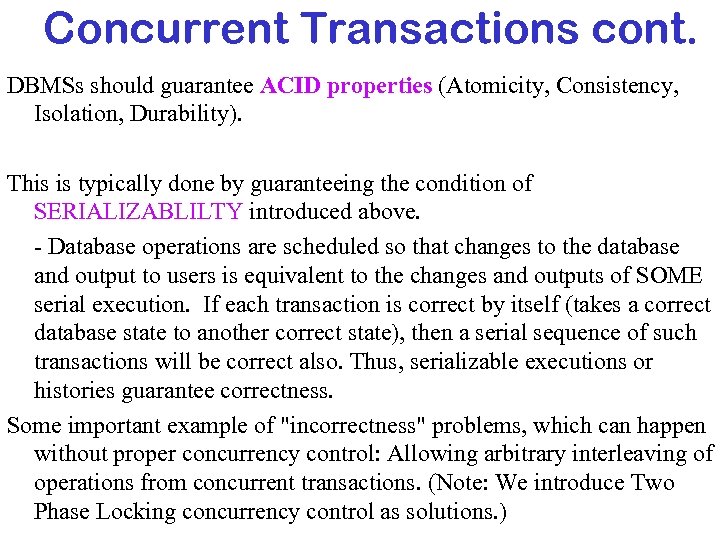

Concurrent Transactions cont. DBMSs should guarantee ACID properties (Atomicity, Consistency, Isolation, Durability). This is typically done by guaranteeing the condition of SERIALIZABLILTY introduced above. - Database operations are scheduled so that changes to the database and output to users is equivalent to the changes and outputs of SOME serial execution. If each transaction is correct by itself (takes a correct database state to another correct state), then a serial sequence of such transactions will be correct also. Thus, serializable executions or histories guarantee correctness. Some important example of "incorrectness" problems, which can happen without proper concurrency control: Allowing arbitrary interleaving of operations from concurrent transactions. (Note: We introduce Two Phase Locking concurrency control as solutions. )

Concurrent Transactions cont. DBMSs should guarantee ACID properties (Atomicity, Consistency, Isolation, Durability). This is typically done by guaranteeing the condition of SERIALIZABLILTY introduced above. - Database operations are scheduled so that changes to the database and output to users is equivalent to the changes and outputs of SOME serial execution. If each transaction is correct by itself (takes a correct database state to another correct state), then a serial sequence of such transactions will be correct also. Thus, serializable executions or histories guarantee correctness. Some important example of "incorrectness" problems, which can happen without proper concurrency control: Allowing arbitrary interleaving of operations from concurrent transactions. (Note: We introduce Two Phase Locking concurrency control as solutions. )

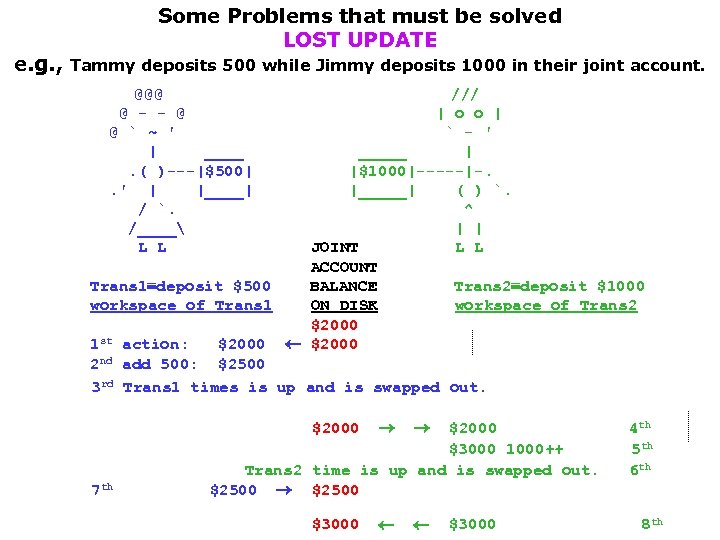

e. g. , Some Problems that must be solved LOST UPDATE Tammy deposits 500 while Jimmy deposits 1000 in their joint account. @@@ @ - - @ @ ` ~ ' | ____. ( )---|$500|. ' | |____| / `. /____ L L /// | o o | ` - ' _____ | |$1000|-----|-. |_____| ( ) `. ^ | | JOINT L L ACCOUNT Trans 1 deposit $500 BALANCE Trans 2 deposit $1000 workspace of Trans 1 ON DISK workspace of Trans 2 $2000 st action: 1 $2000 2 nd add 500: $2500 3 rd Trans 1 times is up and is swapped out. $2000 7 th $3000 $2000 $3000 1000++ Trans 2 time is up and is swapped out. $2500 $3000 4 th 5 th 6 th 8 th

e. g. , Some Problems that must be solved LOST UPDATE Tammy deposits 500 while Jimmy deposits 1000 in their joint account. @@@ @ - - @ @ ` ~ ' | ____. ( )---|$500|. ' | |____| / `. /____ L L /// | o o | ` - ' _____ | |$1000|-----|-. |_____| ( ) `. ^ | | JOINT L L ACCOUNT Trans 1 deposit $500 BALANCE Trans 2 deposit $1000 workspace of Trans 1 ON DISK workspace of Trans 2 $2000 st action: 1 $2000 2 nd add 500: $2500 3 rd Trans 1 times is up and is swapped out. $2000 7 th $3000 $2000 $3000 1000++ Trans 2 time is up and is swapped out. $2500 $3000 4 th 5 th 6 th 8 th

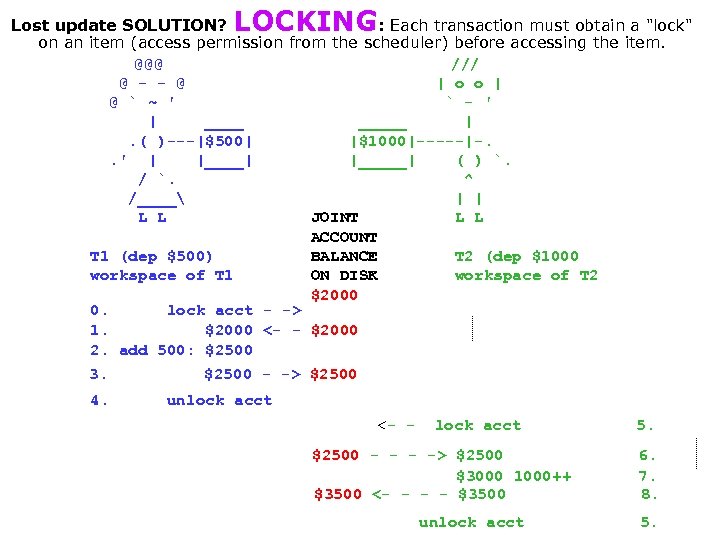

LOCKING Lost update SOLUTION? : Each transaction must obtain a "lock" on an item (access permission from the scheduler) before accessing the item. @@@ /// @ - - @ | o o | @ ` ~ ' ` - ' | _____ |. ( )---|$500| |$1000|-----|-. . ' | |_____| ( ) `. / `. ^ /____ | | L L JOINT L L ACCOUNT T 1 (dep $500) BALANCE T 2 (dep $1000 workspace of T 1 ON DISK workspace of T 2 $2000 0. lock acct - -> 1. $2000 <- - $2000 2. add 500: $2500 3. $2500 - -> $2500 4. unlock acct <- - lock acct $2500 - -> $2500 $3000 1000++ $3500 <- - $3500 unlock acct 5. 6. 7. 8. 5.

LOCKING Lost update SOLUTION? : Each transaction must obtain a "lock" on an item (access permission from the scheduler) before accessing the item. @@@ /// @ - - @ | o o | @ ` ~ ' ` - ' | _____ |. ( )---|$500| |$1000|-----|-. . ' | |_____| ( ) `. / `. ^ /____ | | L L JOINT L L ACCOUNT T 1 (dep $500) BALANCE T 2 (dep $1000 workspace of T 1 ON DISK workspace of T 2 $2000 0. lock acct - -> 1. $2000 <- - $2000 2. add 500: $2500 3. $2500 - -> $2500 4. unlock acct <- - lock acct $2500 - -> $2500 $3000 1000++ $3500 <- - $3500 unlock acct 5. 6. 7. 8. 5.

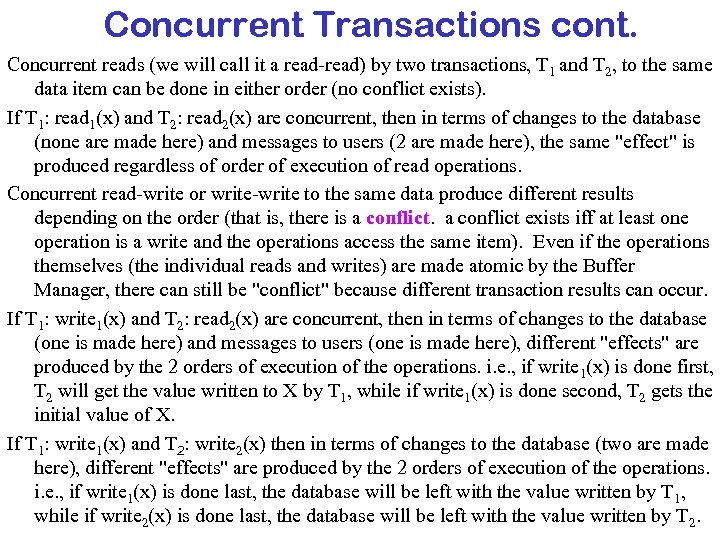

Concurrent Transactions cont. Concurrent reads (we will call it a read-read) by two transactions, T 1 and T 2, to the same data item can be done in either order (no conflict exists). If T 1: read 1(x) and T 2: read 2(x) are concurrent, then in terms of changes to the database (none are made here) and messages to users (2 are made here), the same "effect" is produced regardless of order of execution of read operations. Concurrent read-write or write-write to the same data produce different results depending on the order (that is, there is a conflict exists iff at least one operation is a write and the operations access the same item). Even if the operations themselves (the individual reads and writes) are made atomic by the Buffer Manager, there can still be "conflict" because different transaction results can occur. If T 1: write 1(x) and T 2: read 2(x) are concurrent, then in terms of changes to the database (one is made here) and messages to users (one is made here), different "effects" are produced by the 2 orders of execution of the operations. i. e. , if write 1(x) is done first, T 2 will get the value written to X by T 1, while if write 1(x) is done second, T 2 gets the initial value of X. If T 1: write 1(x) and T 2: write 2(x) then in terms of changes to the database (two are made here), different "effects" are produced by the 2 orders of execution of the operations. i. e. , if write 1(x) is done last, the database will be left with the value written by T 1, while if write 2(x) is done last, the database will be left with the value written by T 2.

Concurrent Transactions cont. Concurrent reads (we will call it a read-read) by two transactions, T 1 and T 2, to the same data item can be done in either order (no conflict exists). If T 1: read 1(x) and T 2: read 2(x) are concurrent, then in terms of changes to the database (none are made here) and messages to users (2 are made here), the same "effect" is produced regardless of order of execution of read operations. Concurrent read-write or write-write to the same data produce different results depending on the order (that is, there is a conflict exists iff at least one operation is a write and the operations access the same item). Even if the operations themselves (the individual reads and writes) are made atomic by the Buffer Manager, there can still be "conflict" because different transaction results can occur. If T 1: write 1(x) and T 2: read 2(x) are concurrent, then in terms of changes to the database (one is made here) and messages to users (one is made here), different "effects" are produced by the 2 orders of execution of the operations. i. e. , if write 1(x) is done first, T 2 will get the value written to X by T 1, while if write 1(x) is done second, T 2 gets the initial value of X. If T 1: write 1(x) and T 2: write 2(x) then in terms of changes to the database (two are made here), different "effects" are produced by the 2 orders of execution of the operations. i. e. , if write 1(x) is done last, the database will be left with the value written by T 1, while if write 2(x) is done last, the database will be left with the value written by T 2.

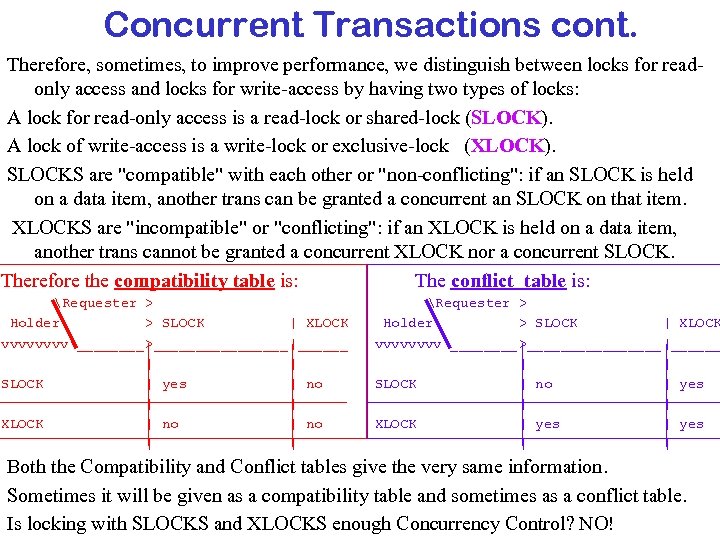

Concurrent Transactions cont. Therefore, sometimes, to improve performance, we distinguish between locks for readonly access and locks for write-access by having two types of locks: A lock for read-only access is a read-lock or shared-lock (SLOCK). A lock of write-access is a write-lock or exclusive-lock (XLOCK). SLOCKS are "compatible" with each other or "non-conflicting": if an SLOCK is held on a data item, another trans can be granted a concurrent an SLOCK on that item. XLOCKS are "incompatible" or "conflicting": if an XLOCK is held on a data item, another trans cannot be granted a concurrent XLOCK nor a concurrent SLOCK. Therefore the compatibility table is: The conflict table is: Requester > Holder > SLOCK | XLOCK vvvv____>________|______ | | SLOCK | yes | no | | XLOCK | no | | Requester > Holder > SLOCK | XLOCK vvvv____>________|______ | | SLOCK | no | yes | | XLOCK | yes | | Both the Compatibility and Conflict tables give the very same information. Sometimes it will be given as a compatibility table and sometimes as a conflict table. Is locking with SLOCKS and XLOCKS enough Concurrency Control? NO!

Concurrent Transactions cont. Therefore, sometimes, to improve performance, we distinguish between locks for readonly access and locks for write-access by having two types of locks: A lock for read-only access is a read-lock or shared-lock (SLOCK). A lock of write-access is a write-lock or exclusive-lock (XLOCK). SLOCKS are "compatible" with each other or "non-conflicting": if an SLOCK is held on a data item, another trans can be granted a concurrent an SLOCK on that item. XLOCKS are "incompatible" or "conflicting": if an XLOCK is held on a data item, another trans cannot be granted a concurrent XLOCK nor a concurrent SLOCK. Therefore the compatibility table is: The conflict table is: Requester > Holder > SLOCK | XLOCK vvvv____>________|______ | | SLOCK | yes | no | | XLOCK | no | | Requester > Holder > SLOCK | XLOCK vvvv____>________|______ | | SLOCK | no | yes | | XLOCK | yes | | Both the Compatibility and Conflict tables give the very same information. Sometimes it will be given as a compatibility table and sometimes as a conflict table. Is locking with SLOCKS and XLOCKS enough Concurrency Control? NO!

Problems that must be solved INCONSISTENT RETREIVAL e. g. , Tammy transfers $100 from savings to checking, concurrently the bank is running an audit on the 2 accounts (summing accounts)

Problems that must be solved INCONSISTENT RETREIVAL e. g. , Tammy transfers $100 from savings to checking, concurrently the bank is running an audit on the 2 accounts (summing accounts)

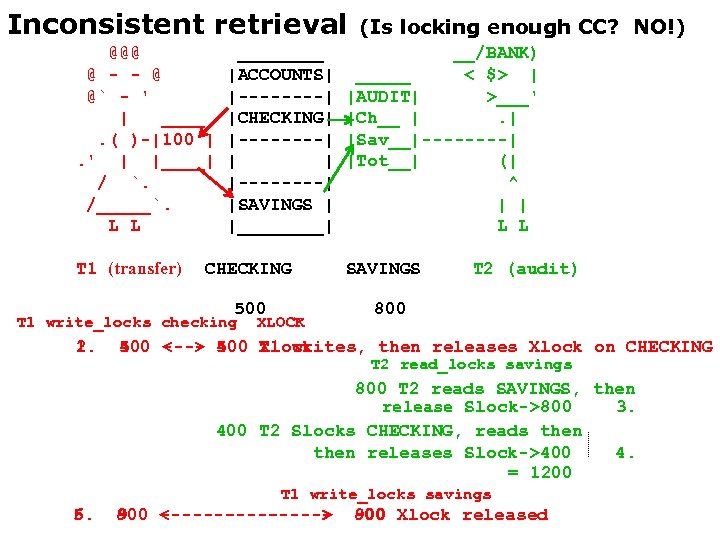

Inconsistent retrieval @@@ @ - - @ @` - ' | ____. ( )-|100 |. ' | |____| / `. /_____`. L L T 1 (transfer) ____ |ACCOUNTS| |----| |CHECKING| |----| |SAVINGS | |____| CHECKING 500 T 1 write_locks checking 2. 1. XLOCK (Is locking enough CC? NO!) __/BANK) _____ < $> | |AUDIT| >___' |Ch__ |. | |Sav__|----| |Tot__| (| ^ | | L L SAVINGS T 2 (audit) 800 400 - - 400 Xlock 500 <--> 500 T 1 writes, then releases Xlock on CHECKING T 2 read_locks savings 800 T 2 reads SAVINGS, then release Slock->800 3. 400 T 2 Slocks CHECKING, reads then releases Slock->400 4. = 1200 T 1 write_locks savings 5. 6. 800 <-------> 900 - 800 Xlock released 900

Inconsistent retrieval @@@ @ - - @ @` - ' | ____. ( )-|100 |. ' | |____| / `. /_____`. L L T 1 (transfer) ____ |ACCOUNTS| |----| |CHECKING| |----| |SAVINGS | |____| CHECKING 500 T 1 write_locks checking 2. 1. XLOCK (Is locking enough CC? NO!) __/BANK) _____ < $> | |AUDIT| >___' |Ch__ |. | |Sav__|----| |Tot__| (| ^ | | L L SAVINGS T 2 (audit) 800 400 - - 400 Xlock 500 <--> 500 T 1 writes, then releases Xlock on CHECKING T 2 read_locks savings 800 T 2 reads SAVINGS, then release Slock->800 3. 400 T 2 Slocks CHECKING, reads then releases Slock->400 4. = 1200 T 1 write_locks savings 5. 6. 800 <-------> 900 - 800 Xlock released 900

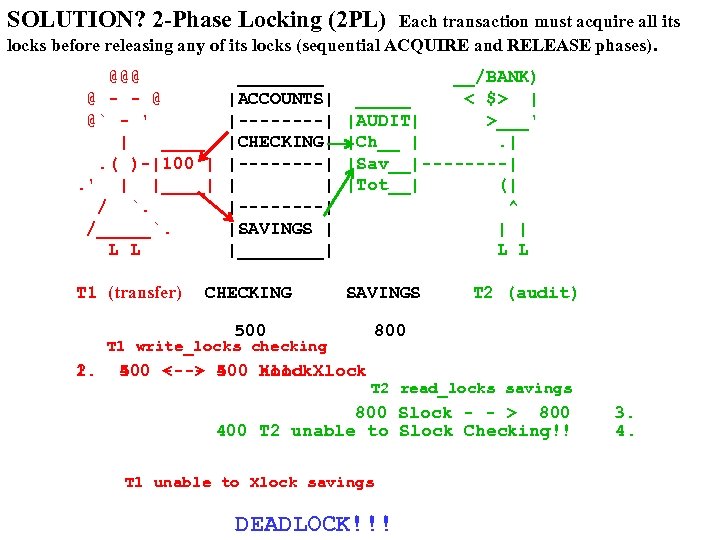

SOLUTION? 2 -Phase Locking (2 PL) Each transaction must acquire all its locks before releasing any of its locks (sequential ACQUIRE and RELEASE phases). @@@ @ - - @ @` - ' | ____. ( )-|100 |. ' | |____| / `. /_____`. L L T 1 (transfer) ____ |ACCOUNTS| |----| |CHECKING| |----| |SAVINGS | |____| CHECKING __/BANK) _____ < $> | |AUDIT| >___' |Ch__ |. | |Sav__|----| |Tot__| (| ^ | | L L SAVINGS 500 T 1 write_locks checking 2. 1. 400 - - 400 Xlock 500 <--> 500 hold Xlock T 2 (audit) 800 T 2 read_locks savings 800 Slock - - > 800 400 T 2 unable to Slock Checking!! T 1 unable to Xlock savings DEADLOCK!!! 3. 4.

SOLUTION? 2 -Phase Locking (2 PL) Each transaction must acquire all its locks before releasing any of its locks (sequential ACQUIRE and RELEASE phases). @@@ @ - - @ @` - ' | ____. ( )-|100 |. ' | |____| / `. /_____`. L L T 1 (transfer) ____ |ACCOUNTS| |----| |CHECKING| |----| |SAVINGS | |____| CHECKING __/BANK) _____ < $> | |AUDIT| >___' |Ch__ |. | |Sav__|----| |Tot__| (| ^ | | L L SAVINGS 500 T 1 write_locks checking 2. 1. 400 - - 400 Xlock 500 <--> 500 hold Xlock T 2 (audit) 800 T 2 read_locks savings 800 Slock - - > 800 400 T 2 unable to Slock Checking!! T 1 unable to Xlock savings DEADLOCK!!! 3. 4.

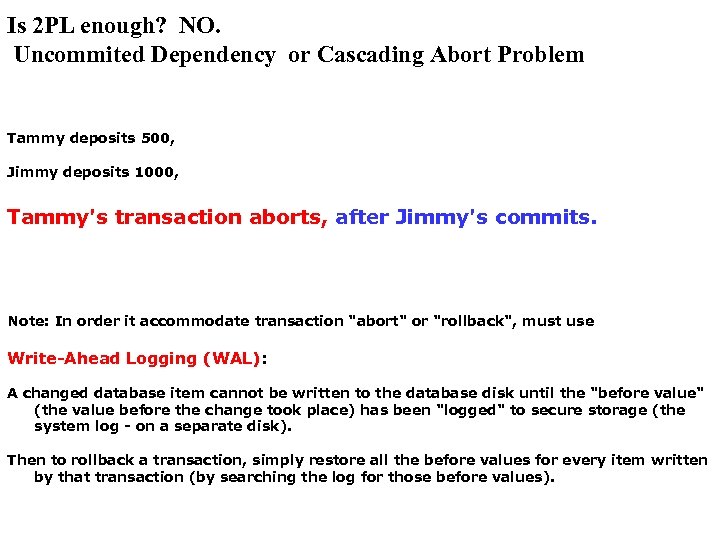

Is 2 PL enough? NO. Uncommited Dependency or Cascading Abort Problem Tammy deposits 500, Jimmy deposits 1000, Tammy's transaction aborts, after Jimmy's commits. Note: In order it accommodate transaction "abort" or "rollback", must use Write-Ahead Logging (WAL): A changed database item cannot be written to the database disk until the "before value" (the value before the change took place) has been "logged" to secure storage (the system log - on a separate disk). Then to rollback a transaction, simply restore all the before values for every item written by that transaction (by searching the log for those before values).

Is 2 PL enough? NO. Uncommited Dependency or Cascading Abort Problem Tammy deposits 500, Jimmy deposits 1000, Tammy's transaction aborts, after Jimmy's commits. Note: In order it accommodate transaction "abort" or "rollback", must use Write-Ahead Logging (WAL): A changed database item cannot be written to the database disk until the "before value" (the value before the change took place) has been "logged" to secure storage (the system log - on a separate disk). Then to rollback a transaction, simply restore all the before values for every item written by that transaction (by searching the log for those before values).

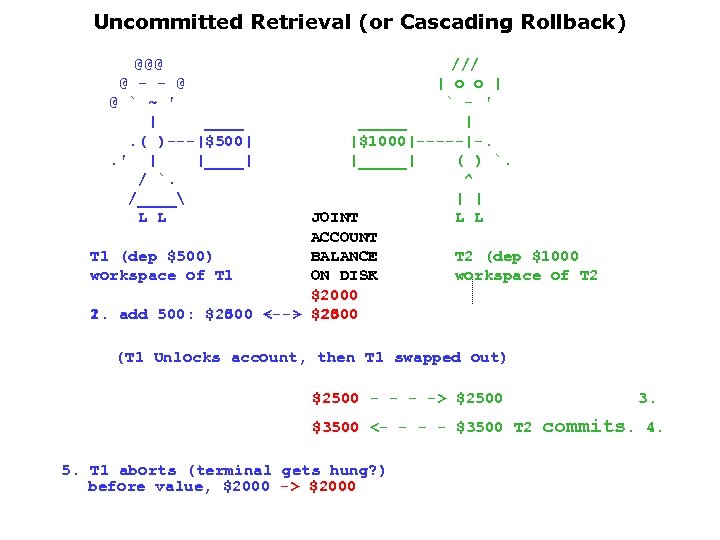

Uncommitted Retrieval (or Cascading Rollback) @@@ @ - - @ @ ` ~ ' | ____. ( )---|$500|. ' | |____| / `. /____ L L /// | o o | ` - ' _____ | |$1000|-----|-. |_____| ( ) `. ^ | | JOINT L L ACCOUNT T 1 (dep $500) BALANCE T 2 (dep $1000 workspace of T 1 ON DISK workspace of T 2 $2000 1. add 500: $2000 <--> $2000 2. $2500 - - $2500 (T 1 Unlocks account, then T 1 swapped out) $2500 - -> $2500 $3500 <- - $3500 T 2 5. T 1 aborts (terminal gets hung? ) before value, $2000 -> $2000 3. commits. 4.

Uncommitted Retrieval (or Cascading Rollback) @@@ @ - - @ @ ` ~ ' | ____. ( )---|$500|. ' | |____| / `. /____ L L /// | o o | ` - ' _____ | |$1000|-----|-. |_____| ( ) `. ^ | | JOINT L L ACCOUNT T 1 (dep $500) BALANCE T 2 (dep $1000 workspace of T 1 ON DISK workspace of T 2 $2000 1. add 500: $2000 <--> $2000 2. $2500 - - $2500 (T 1 Unlocks account, then T 1 swapped out) $2500 - -> $2500 $3500 <- - $3500 T 2 5. T 1 aborts (terminal gets hung? ) before value, $2000 -> $2000 3. commits. 4.

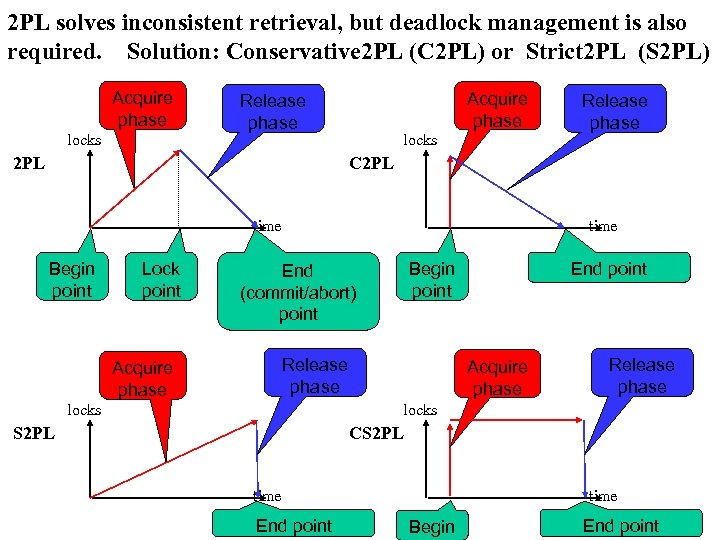

2 PL solves inconsistent retrieval, but deadlock management is also required. Solution: Conservative 2 PL (C 2 PL) or Strict 2 PL (S 2 PL) Acquire phase locks Acquire phase Release phase 2 PL locks Release phase C 2 PL time Begin point Lock point time Release phase Acquire phase locks End point Begin point End (commit/abort) point Acquire phase Release phase locks S 2 PL CS 2 PL time End point time Begin End point

2 PL solves inconsistent retrieval, but deadlock management is also required. Solution: Conservative 2 PL (C 2 PL) or Strict 2 PL (S 2 PL) Acquire phase locks Acquire phase Release phase 2 PL locks Release phase C 2 PL time Begin point Lock point time Release phase Acquire phase locks End point Begin point End (commit/abort) point Acquire phase Release phase locks S 2 PL CS 2 PL time End point time Begin End point

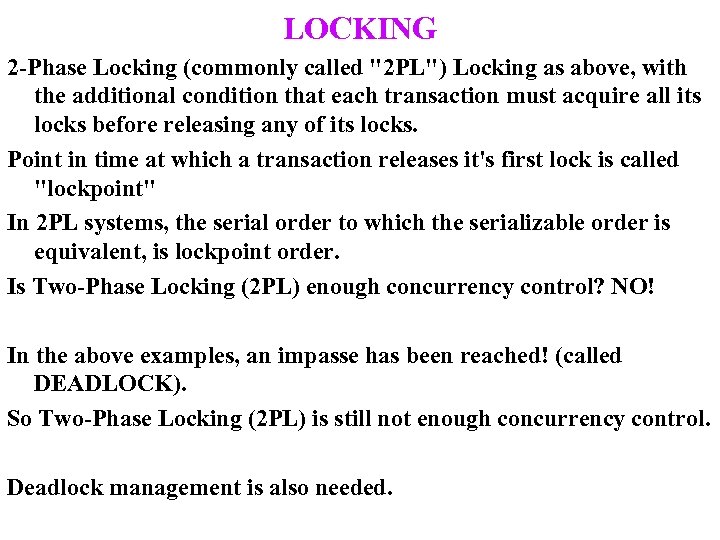

LOCKING 2 -Phase Locking (commonly called "2 PL") Locking as above, with the additional condition that each transaction must acquire all its locks before releasing any of its locks. Point in time at which a transaction releases it's first lock is called "lockpoint" In 2 PL systems, the serial order to which the serializable order is equivalent, is lockpoint order. Is Two-Phase Locking (2 PL) enough concurrency control? NO! In the above examples, an impasse has been reached! (called DEADLOCK). So Two-Phase Locking (2 PL) is still not enough concurrency control. Deadlock management is also needed.

LOCKING 2 -Phase Locking (commonly called "2 PL") Locking as above, with the additional condition that each transaction must acquire all its locks before releasing any of its locks. Point in time at which a transaction releases it's first lock is called "lockpoint" In 2 PL systems, the serial order to which the serializable order is equivalent, is lockpoint order. Is Two-Phase Locking (2 PL) enough concurrency control? NO! In the above examples, an impasse has been reached! (called DEADLOCK). So Two-Phase Locking (2 PL) is still not enough concurrency control. Deadlock management is also needed.

Locking review To review: LOCKING: A TM must acquire a lock (XLOCK to write, SLOCK to read if there are 2 MODES, else, just a LOCK, if there is only 1 MODE) from the SCHEDULER (model-1) or LOCK MANAGER (model-2) before the operation request can be sent to the DATA MANAGER by the SCHEDULER (Model-1 or the LOCK MGR (Model-2) The DATA MANAGER will return the value_read for a READ operation or an Acknowledgement (Ack) for WRITE/COMMIT/ABORT operations. TM request the RELEASE of all locks (to the SCHEDULER/LCOK_MGR RELEASE) on or before the transaction ENDs. TWO PHASE LOCKING (2 PL): For a given transaction, all locks must be acquired before any are releasing any. STRICT TWO PHASE LOCKING (S 2 PL): All locks are RELEASE request are made at transaction END (COMMIT/ABORT). CONSERVATIVE TWO PHASE LOCKING (C 2 PL): All locks are ACQUIRED before any operation request are sent to the DATA MGR.

Locking review To review: LOCKING: A TM must acquire a lock (XLOCK to write, SLOCK to read if there are 2 MODES, else, just a LOCK, if there is only 1 MODE) from the SCHEDULER (model-1) or LOCK MANAGER (model-2) before the operation request can be sent to the DATA MANAGER by the SCHEDULER (Model-1 or the LOCK MGR (Model-2) The DATA MANAGER will return the value_read for a READ operation or an Acknowledgement (Ack) for WRITE/COMMIT/ABORT operations. TM request the RELEASE of all locks (to the SCHEDULER/LCOK_MGR RELEASE) on or before the transaction ENDs. TWO PHASE LOCKING (2 PL): For a given transaction, all locks must be acquired before any are releasing any. STRICT TWO PHASE LOCKING (S 2 PL): All locks are RELEASE request are made at transaction END (COMMIT/ABORT). CONSERVATIVE TWO PHASE LOCKING (C 2 PL): All locks are ACQUIRED before any operation request are sent to the DATA MGR.

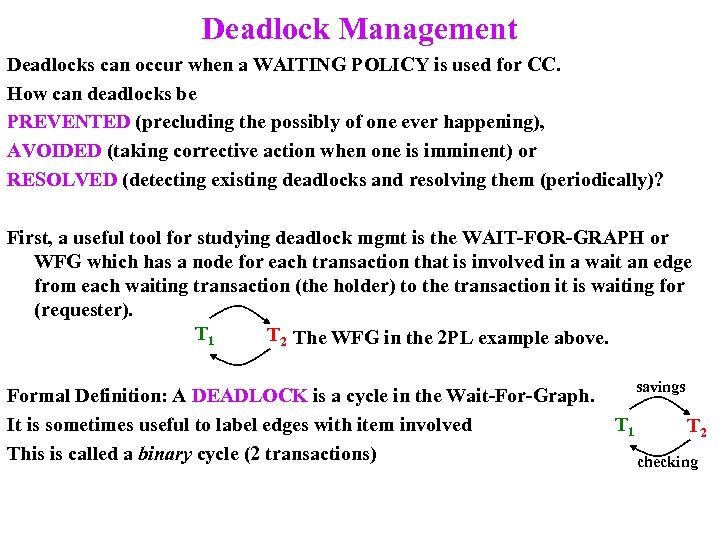

Deadlock Management Deadlocks can occur when a WAITING POLICY is used for CC. How can deadlocks be PREVENTED (precluding the possibly of one ever happening), AVOIDED (taking corrective action when one is imminent) or RESOLVED (detecting existing deadlocks and resolving them (periodically)? First, a useful tool for studying deadlock mgmt is the WAIT-FOR-GRAPH or WFG which has a node for each transaction that is involved in a wait an edge from each waiting transaction (the holder) to the transaction it is waiting for (requester). T 1 T 2 The WFG in the 2 PL example above. Formal Definition: A DEADLOCK is a cycle in the Wait-For-Graph. It is sometimes useful to label edges with item involved This is called a binary cycle (2 transactions) savings T 1 T 2 checking

Deadlock Management Deadlocks can occur when a WAITING POLICY is used for CC. How can deadlocks be PREVENTED (precluding the possibly of one ever happening), AVOIDED (taking corrective action when one is imminent) or RESOLVED (detecting existing deadlocks and resolving them (periodically)? First, a useful tool for studying deadlock mgmt is the WAIT-FOR-GRAPH or WFG which has a node for each transaction that is involved in a wait an edge from each waiting transaction (the holder) to the transaction it is waiting for (requester). T 1 T 2 The WFG in the 2 PL example above. Formal Definition: A DEADLOCK is a cycle in the Wait-For-Graph. It is sometimes useful to label edges with item involved This is called a binary cycle (2 transactions) savings T 1 T 2 checking

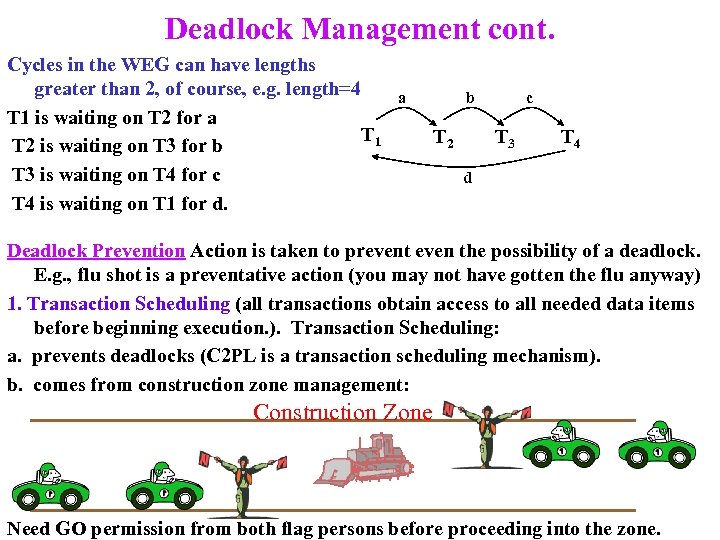

Deadlock Management cont. Cycles in the WEG can have lengths greater than 2, of course, e. g. length=4 T 1 is waiting on T 2 for a T 1 T 2 is waiting on T 3 for b T 3 is waiting on T 4 for c T 4 is waiting on T 1 for d. a b T 2 c T 3 T 4 d Deadlock Prevention Action is taken to prevent even the possibility of a deadlock. E. g. , flu shot is a preventative action (you may not have gotten the flu anyway) 1. Transaction Scheduling (all transactions obtain access to all needed data items before beginning execution. ). Transaction Scheduling: a. prevents deadlocks (C 2 PL is a transaction scheduling mechanism). b. comes from construction zone management: Construction Zone Need GO permission from both flag persons before proceeding into the zone.

Deadlock Management cont. Cycles in the WEG can have lengths greater than 2, of course, e. g. length=4 T 1 is waiting on T 2 for a T 1 T 2 is waiting on T 3 for b T 3 is waiting on T 4 for c T 4 is waiting on T 1 for d. a b T 2 c T 3 T 4 d Deadlock Prevention Action is taken to prevent even the possibility of a deadlock. E. g. , flu shot is a preventative action (you may not have gotten the flu anyway) 1. Transaction Scheduling (all transactions obtain access to all needed data items before beginning execution. ). Transaction Scheduling: a. prevents deadlocks (C 2 PL is a transaction scheduling mechanism). b. comes from construction zone management: Construction Zone Need GO permission from both flag persons before proceeding into the zone.

Deadlock Prevention Action is taken to prevent even the possibility of a deadlock. 2. Serial Execution (prevents deadlock) 3. Wond-Wait and Wait-Die are timestamp-based prevention methods to decide who can wait whenever a conflict arizes. Timestamp = unique ordinal transaction-id or "stamp" (usually start-time i. e. , Do. B (Date of Birth), so we can talk about one transaction being "older than" another, meaning its timestamp is lower (born before the other) ). WOUND_WAIT: When a requesting trans (the requester) finds that the requested data item is held by another trans (the holder): if REQUESTER is OLDER (has lower timestamp), then REQUESTER WOUNDS HOLDER, else REQUESTER WAITS; where WOUND means holder is given a short time to finish with the item, otherwise it must restart (bleeds to death from wound? ) NOTES: WW is a pre-emptive method. The only waits allowed are YOUNGER REQUESTERS waiting for OLDER HOLDERS. Assumes waits are blocking (e. g. , if requester waits, it waits idly), there is never a cycle in WFG. Why not? M Luo, M. S. 87; M Radhakrishnan, M. S. 92; and T Wang, M. S. 96 advanced this protocol as their M. S. theses (and also published their results).

Deadlock Prevention Action is taken to prevent even the possibility of a deadlock. 2. Serial Execution (prevents deadlock) 3. Wond-Wait and Wait-Die are timestamp-based prevention methods to decide who can wait whenever a conflict arizes. Timestamp = unique ordinal transaction-id or "stamp" (usually start-time i. e. , Do. B (Date of Birth), so we can talk about one transaction being "older than" another, meaning its timestamp is lower (born before the other) ). WOUND_WAIT: When a requesting trans (the requester) finds that the requested data item is held by another trans (the holder): if REQUESTER is OLDER (has lower timestamp), then REQUESTER WOUNDS HOLDER, else REQUESTER WAITS; where WOUND means holder is given a short time to finish with the item, otherwise it must restart (bleeds to death from wound? ) NOTES: WW is a pre-emptive method. The only waits allowed are YOUNGER REQUESTERS waiting for OLDER HOLDERS. Assumes waits are blocking (e. g. , if requester waits, it waits idly), there is never a cycle in WFG. Why not? M Luo, M. S. 87; M Radhakrishnan, M. S. 92; and T Wang, M. S. 96 advanced this protocol as their M. S. theses (and also published their results).

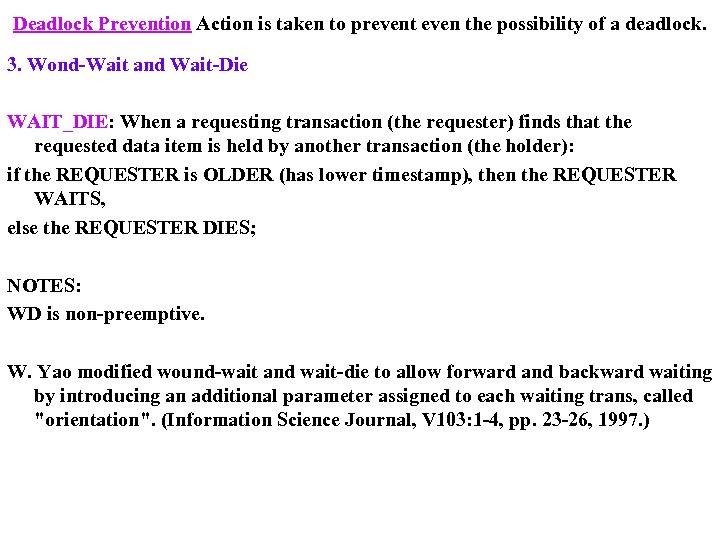

Deadlock Prevention Action is taken to prevent even the possibility of a deadlock. 3. Wond-Wait and Wait-Die WAIT_DIE: When a requesting transaction (the requester) finds that the requested data item is held by another transaction (the holder): if the REQUESTER is OLDER (has lower timestamp), then the REQUESTER WAITS, else the REQUESTER DIES; NOTES: WD is non-preemptive. W. Yao modified wound-wait and wait-die to allow forward and backward waiting by introducing an additional parameter assigned to each waiting trans, called "orientation". (Information Science Journal, V 103: 1 -4, pp. 23 -26, 1997. )

Deadlock Prevention Action is taken to prevent even the possibility of a deadlock. 3. Wond-Wait and Wait-Die WAIT_DIE: When a requesting transaction (the requester) finds that the requested data item is held by another transaction (the holder): if the REQUESTER is OLDER (has lower timestamp), then the REQUESTER WAITS, else the REQUESTER DIES; NOTES: WD is non-preemptive. W. Yao modified wound-wait and wait-die to allow forward and backward waiting by introducing an additional parameter assigned to each waiting trans, called "orientation". (Information Science Journal, V 103: 1 -4, pp. 23 -26, 1997. )

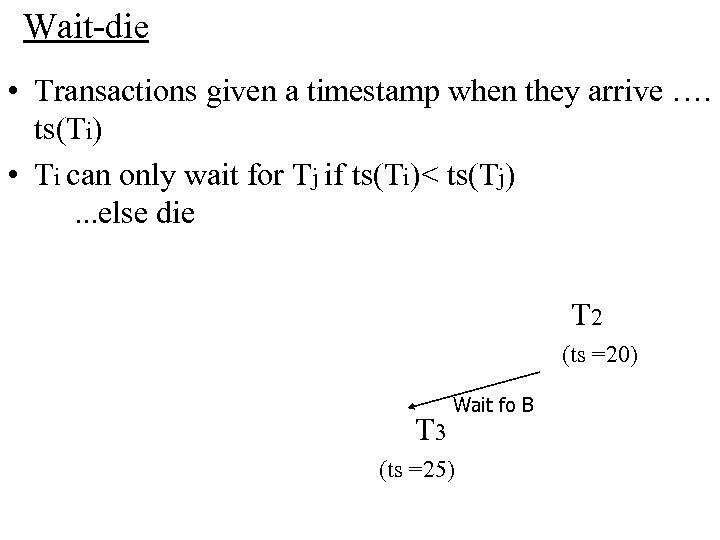

Wait-die • Transactions given a timestamp when they arrive …. ts(Ti) • Ti can only wait for Tj if ts(Ti)< ts(Tj). . . else die T 1 Wait for A (ts =10) T 2 (ts =20) Wait for C? T 3 Wait fo B (ts =25)

Wait-die • Transactions given a timestamp when they arrive …. ts(Ti) • Ti can only wait for Tj if ts(Ti)< ts(Tj). . . else die T 1 Wait for A (ts =10) T 2 (ts =20) Wait for C? T 3 Wait fo B (ts =25)

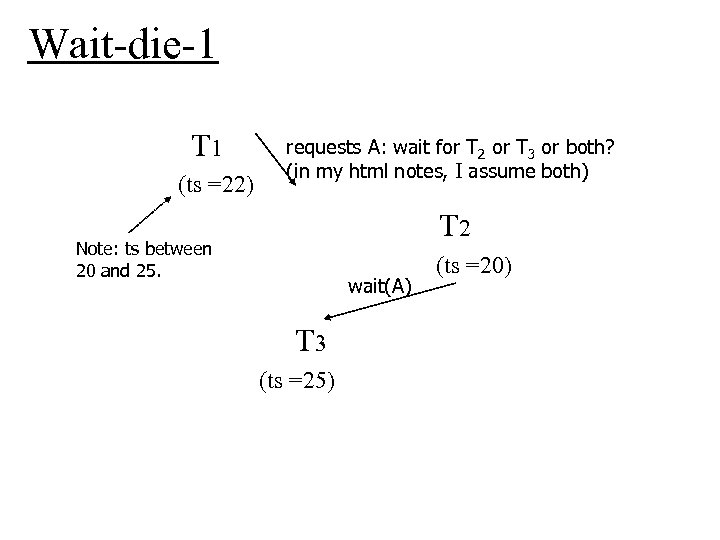

Wait-die-1 T 1 (ts =22) requests A: wait for T 2 or T 3 or both? (in my html notes, I assume both) T 2 Note: ts between 20 and 25. wait(A) T 3 (ts =25) (ts =20)

Wait-die-1 T 1 (ts =22) requests A: wait for T 2 or T 3 or both? (in my html notes, I assume both) T 2 Note: ts between 20 and 25. wait(A) T 3 (ts =25) (ts =20)

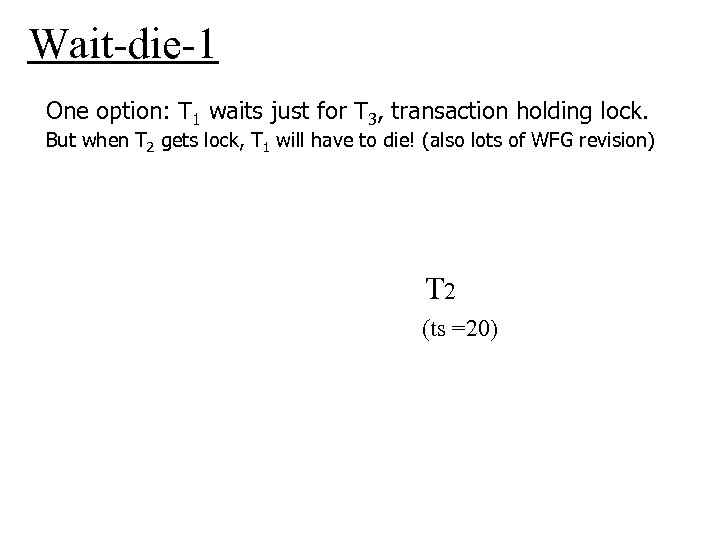

Wait-die-1 One option: T 1 waits just for T 3, transaction holding lock. But when T 2 gets lock, T 1 will have to die! (also lots of WFG revision) T 1 (ts =22) wait(A) T 3 (ts =25) T 2 wait(A) (ts =20)

Wait-die-1 One option: T 1 waits just for T 3, transaction holding lock. But when T 2 gets lock, T 1 will have to die! (also lots of WFG revision) T 1 (ts =22) wait(A) T 3 (ts =25) T 2 wait(A) (ts =20)

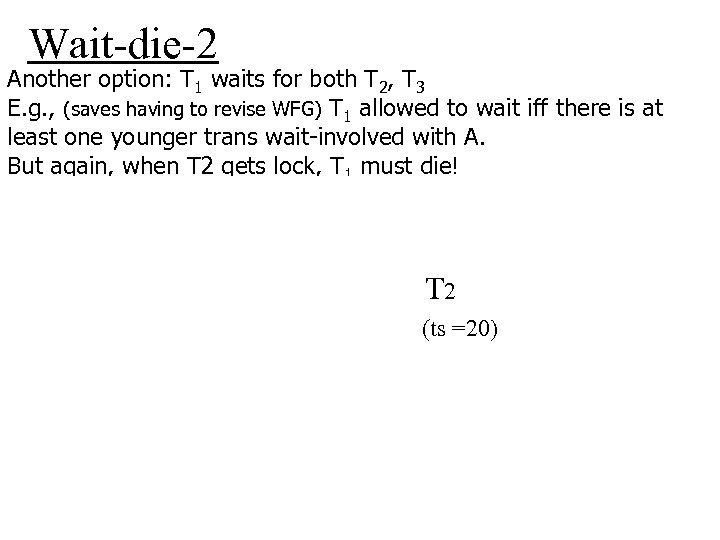

Wait-die-2 Another option: T 1 waits for both T 2, T 3 E. g. , (saves having to revise WFG) T 1 allowed to wait iff there is at least one younger trans wait-involved with A. But again, when T 2 gets lock, T 1 must die! T 1 wait(A) (ts =22) T 2 wait(A) T 3 (ts =25) (ts =20)

Wait-die-2 Another option: T 1 waits for both T 2, T 3 E. g. , (saves having to revise WFG) T 1 allowed to wait iff there is at least one younger trans wait-involved with A. But again, when T 2 gets lock, T 1 must die! T 1 wait(A) (ts =22) T 2 wait(A) T 3 (ts =25) (ts =20)

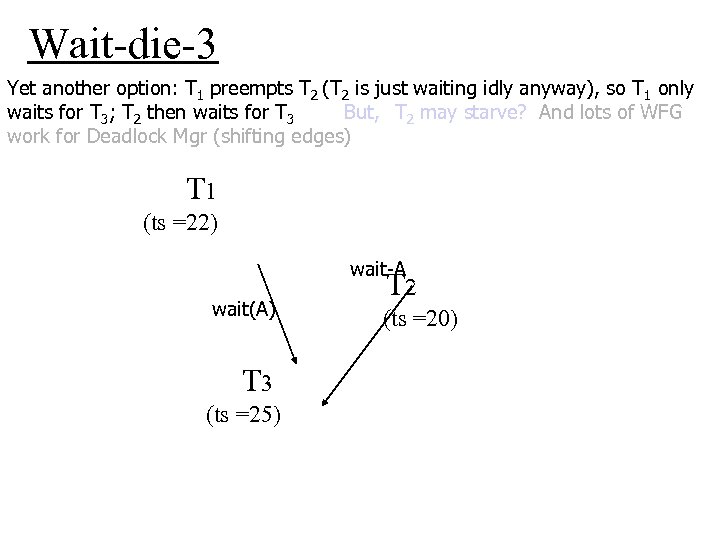

Wait-die-3 Yet another option: T 1 preempts T 2 (T 2 is just waiting idly anyway), so T 1 only waits for T 3; T 2 then waits for T 3 But, T 2 may starve? And lots of WFG work for Deadlock Mgr (shifting edges) T 1 (ts =22) wait-A wait(A) T 3 (ts =25) T 2 (ts =20)

Wait-die-3 Yet another option: T 1 preempts T 2 (T 2 is just waiting idly anyway), so T 1 only waits for T 3; T 2 then waits for T 3 But, T 2 may starve? And lots of WFG work for Deadlock Mgr (shifting edges) T 1 (ts =22) wait-A wait(A) T 3 (ts =25) T 2 (ts =20)

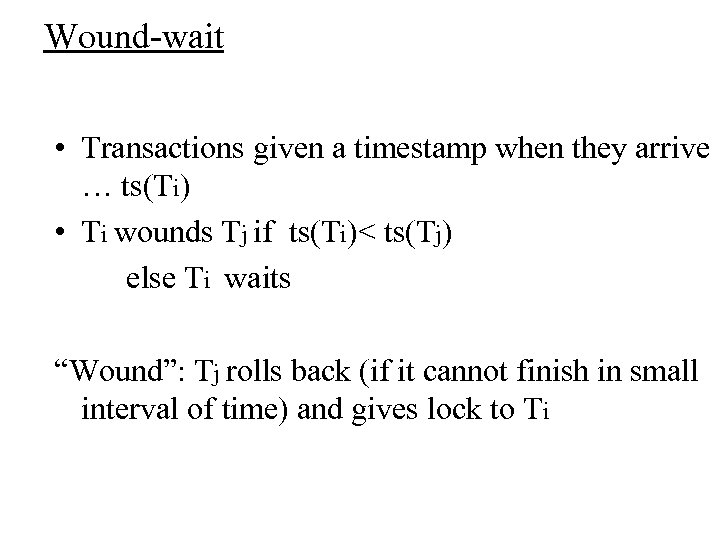

Wound-wait • Transactions given a timestamp when they arrive … ts(Ti) • Ti wounds Tj if ts(Ti)< ts(Tj) else Ti waits “Wound”: Tj rolls back (if it cannot finish in small interval of time) and gives lock to Ti

Wound-wait • Transactions given a timestamp when they arrive … ts(Ti) • Ti wounds Tj if ts(Ti)< ts(Tj) else Ti waits “Wound”: Tj rolls back (if it cannot finish in small interval of time) and gives lock to Ti

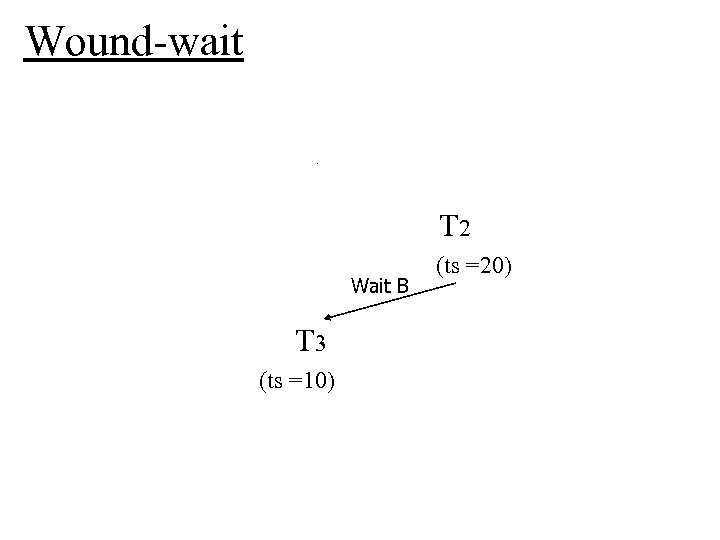

Wound-wait T 1 Wait A (ts =25) T 2 Wait C Wait B T 3 (ts =10) (ts =20)

Wound-wait T 1 Wait A (ts =25) T 2 Wait C Wait B T 3 (ts =10) (ts =20)

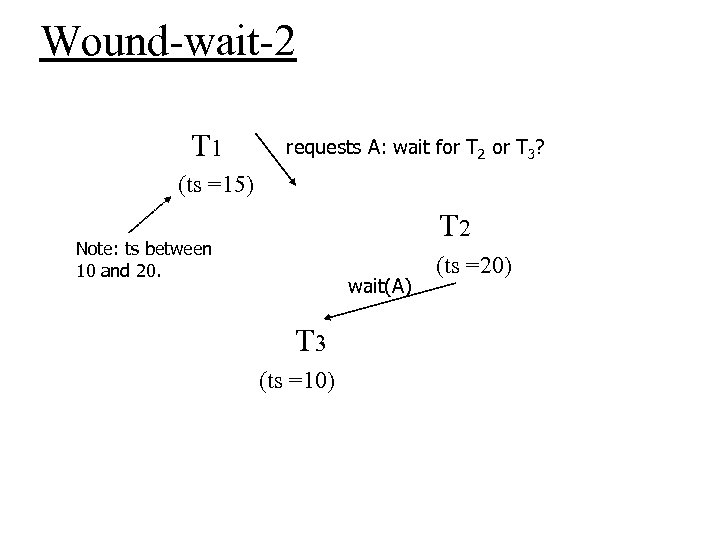

Wound-wait-2 T 1 requests A: wait for T 2 or T 3? (ts =15) T 2 Note: ts between 10 and 20. wait(A) T 3 (ts =10) (ts =20)

Wound-wait-2 T 1 requests A: wait for T 2 or T 3? (ts =15) T 2 Note: ts between 10 and 20. wait(A) T 3 (ts =10) (ts =20)

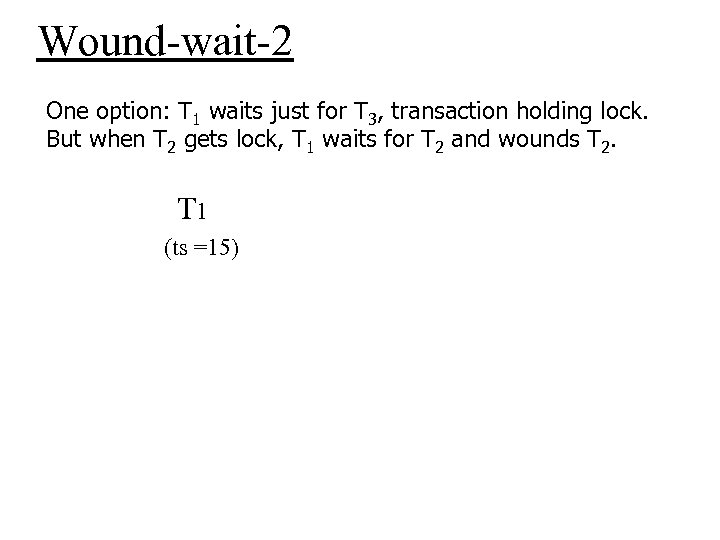

Wound-wait-2 One option: T 1 waits just for T 3, transaction holding lock. But when T 2 gets lock, T 1 waits for T 2 and wounds T 2. T 1 Wait A (ts =15) T 2 wait(A) T 3 (ts =10) (ts =20)

Wound-wait-2 One option: T 1 waits just for T 3, transaction holding lock. But when T 2 gets lock, T 1 waits for T 2 and wounds T 2. T 1 Wait A (ts =15) T 2 wait(A) T 3 (ts =10) (ts =20)

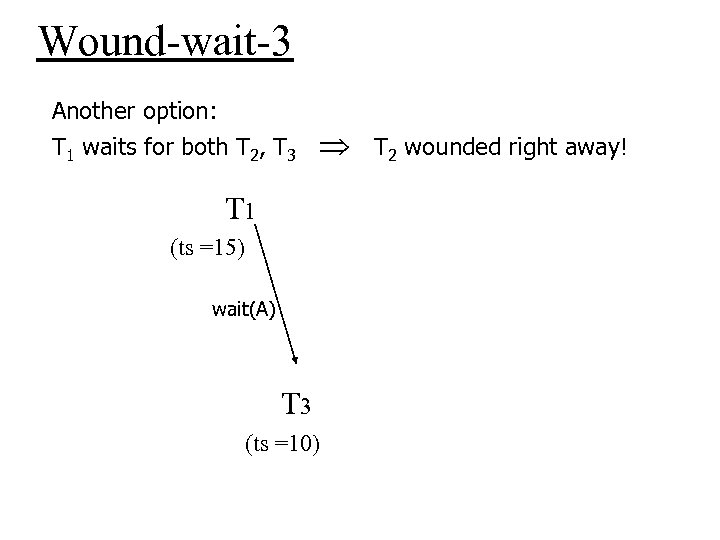

Wound-wait-3 Another option: T 1 waits for both T 2, T 3 T 2 wounded right away! T 1 wait(A) (ts =15) T 2 wait(A) T 3 (ts =10) (ts =20)

Wound-wait-3 Another option: T 1 waits for both T 2, T 3 T 2 wounded right away! T 1 wait(A) (ts =15) T 2 wait(A) T 3 (ts =10) (ts =20)

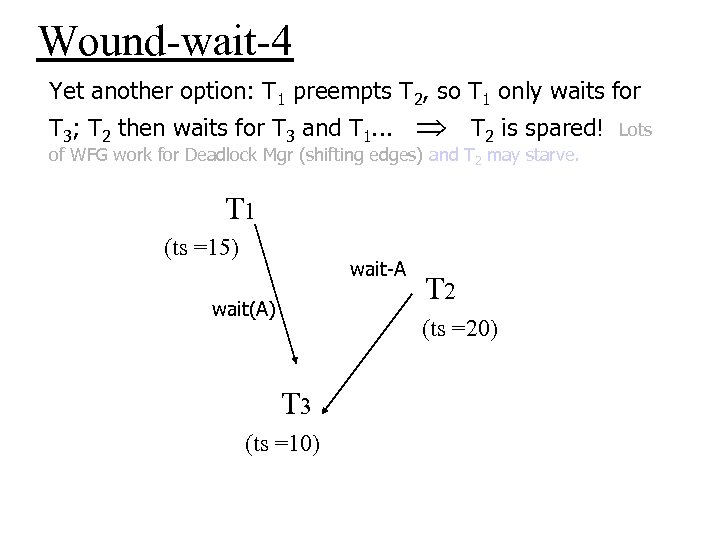

Wound-wait-4 Yet another option: T 1 preempts T 2, so T 1 only waits for T 3; T 2 then waits for T 3 and T 1. . . T 2 is spared! of WFG work for Deadlock Mgr (shifting edges) and T 2 may starve. T 1 (ts =15) wait-A wait(A) T 2 (ts =20) T 3 (ts =10) Lots

Wound-wait-4 Yet another option: T 1 preempts T 2, so T 1 only waits for T 3; T 2 then waits for T 3 and T 1. . . T 2 is spared! of WFG work for Deadlock Mgr (shifting edges) and T 2 may starve. T 1 (ts =15) wait-A wait(A) T 2 (ts =20) T 3 (ts =10) Lots

Deadlock Management Deadlocks can occur when a WAITING POLICY is used for CC. How can deadlocks be PREVENTED (precluding the possibly of one ever happening), AVOIDED (taking corrective action when one is imminent) or RESOLVED (detecting existing deadlocks and resolving them (periodically)? deadlock AVOIDANCE (Avoiding all deadlocks. When one is about to happen, take some action to avoid it. ) 1. Request Denial: Deny any request that would result in deadlock (This requires having and checking a Wait. For. Graph (WFG) for a cycle every time a wait is requested. )

Deadlock Management Deadlocks can occur when a WAITING POLICY is used for CC. How can deadlocks be PREVENTED (precluding the possibly of one ever happening), AVOIDED (taking corrective action when one is imminent) or RESOLVED (detecting existing deadlocks and resolving them (periodically)? deadlock AVOIDANCE (Avoiding all deadlocks. When one is about to happen, take some action to avoid it. ) 1. Request Denial: Deny any request that would result in deadlock (This requires having and checking a Wait. For. Graph (WFG) for a cycle every time a wait is requested. )

Deadlock Management Deadlocks can occur when a WAITING POLICY is used for CC. How can deadlocks be PREVENTED (precluding the possibly of one ever happening), AVOIDED (taking corrective action when one is imminent) or RESOLVED (detecting existing deadlocks and resolving them (periodically)? deadlock detection and resolution techniques All Deadlock detection/resolution protocols use the Wait-For-Graph (WFG). Put an edge in WFG representing each new wait, then periodic analysis WFG for cycles and if one is found, then select a victim transaction to be restarted from each cycle (break the cycle). Victim selection criteria can vary. S ome system use "youngest" others use "oldest", others use "been waiting the longest time" and still others use "been waiting the shortest time". . .

Deadlock Management Deadlocks can occur when a WAITING POLICY is used for CC. How can deadlocks be PREVENTED (precluding the possibly of one ever happening), AVOIDED (taking corrective action when one is imminent) or RESOLVED (detecting existing deadlocks and resolving them (periodically)? deadlock detection and resolution techniques All Deadlock detection/resolution protocols use the Wait-For-Graph (WFG). Put an edge in WFG representing each new wait, then periodic analysis WFG for cycles and if one is found, then select a victim transaction to be restarted from each cycle (break the cycle). Victim selection criteria can vary. S ome system use "youngest" others use "oldest", others use "been waiting the longest time" and still others use "been waiting the shortest time". . .

Deadlock Management RESOLVED (detecting existing deadlocks and resolving them (periodically)? Timeout 1. When a TRANSACTION BEGINs, a timeout clock is set. If transaction is still active when the timeout clock runs down to zero, then transaction is aborted. 2. When a TRANSACTION has to WAIT, a timeout clock is set. If transaction is still waiting when the timeout clock runs down to zero, then transaction is aborted. (reduces timeout clock overhead). Potential improvements probably leap to mind for 2, e. g. , 2. 1 only set timeout clock if the item requested is already in Lock. Table (meaning that there is already a wait in progress for that item). General Notes: Deadlock management is still a very important area of research and there's still much to be done, even though there are many methods described in the literature. One reason: Deadlocks which involves data distributed across a network are a much harder problem than centralized deadlocks. Locking, as a concurrency control method, REQUIRES a CENTRALIZED lock-table object (logically at least) with a SINGLE THREADED lock manager (a monitor or critical section) The Locking protocols presented above are called PESSIMISTIC. OPTIMISTIC locking assumes there will be no conflict and then tests that assumption for validity at COMMIT time. If assumption proved false, entire (completed) transaction is aborted.

Deadlock Management RESOLVED (detecting existing deadlocks and resolving them (periodically)? Timeout 1. When a TRANSACTION BEGINs, a timeout clock is set. If transaction is still active when the timeout clock runs down to zero, then transaction is aborted. 2. When a TRANSACTION has to WAIT, a timeout clock is set. If transaction is still waiting when the timeout clock runs down to zero, then transaction is aborted. (reduces timeout clock overhead). Potential improvements probably leap to mind for 2, e. g. , 2. 1 only set timeout clock if the item requested is already in Lock. Table (meaning that there is already a wait in progress for that item). General Notes: Deadlock management is still a very important area of research and there's still much to be done, even though there are many methods described in the literature. One reason: Deadlocks which involves data distributed across a network are a much harder problem than centralized deadlocks. Locking, as a concurrency control method, REQUIRES a CENTRALIZED lock-table object (logically at least) with a SINGLE THREADED lock manager (a monitor or critical section) The Locking protocols presented above are called PESSIMISTIC. OPTIMISTIC locking assumes there will be no conflict and then tests that assumption for validity at COMMIT time. If assumption proved false, entire (completed) transaction is aborted.

Other Concurrency Control Methods BASIC TIMESTAMP ORDERING (BTO) is a RESTART POLICY (no waiting). Each transaction gets a unique timestamp (ts) (usually arrival time). Note that timestamps were introduced already in the context of deadlock management schemes to accompany Locking Concurrency Control. Now we are going to use timestamps for concurrency control itself! (no deadlock management will be necessary here since the CC method is a "restart" method, not a "waiting" method). BTO SCHEDULING DECISION: When Scheduler receives a READ request, it rejects it iff a YOUNGER trans has written that item. When Scheduler receives a WRITE request, it rejects it iff a YOUNGER transaction has written or read that item. NOTES on BTO: Timestamp is usually "arrival time" but can be ANY linear ordering. When the SCHEDULER rejects a request, the requesting trans restarts. BTO must also schedule accepted operations to DM in ts order also. In order to make the SCHEDULE decisions, scheduler must know the timestamp, ts, of last transaction to write each item and ts of last transaction to read each item. Thus, the system must keep both of these "data-item timestamps" for EVERY data item, x, in the system, namely a data item read timestamp, rts(x), and a data item write timestamp, wts(x). Usually these are kept right with the data item as an part of the data item that only the system can access. That takes a lot of extra space e. g. if there are 10 billion data items (records) in the Data. Base (not uncommon), data-itemtimestamps may take up 160 GB, assuming an 8 byte ts (note that 4 bytes won't do). BTO is a pure RESART policy (uses only restart conflict resolution. BTO CC is deadlock free (since waiting is not used). BTO, however, can experience livelocks (trans continuously restarting for the same reason over and over). BTO results in lower concurrency in central systems (studies have shown) BTO, works better in distributed systems. Why? All the Scheduler has to have in order to make the scheduling decision when a transaction, t asks for a data item, x, is the transaction-timestamp, ts(t), and data-item-writetimestamp, wts(x) (for a read request) and the data-item-read-timestamp, tts(x) (for a write request).

Other Concurrency Control Methods BASIC TIMESTAMP ORDERING (BTO) is a RESTART POLICY (no waiting). Each transaction gets a unique timestamp (ts) (usually arrival time). Note that timestamps were introduced already in the context of deadlock management schemes to accompany Locking Concurrency Control. Now we are going to use timestamps for concurrency control itself! (no deadlock management will be necessary here since the CC method is a "restart" method, not a "waiting" method). BTO SCHEDULING DECISION: When Scheduler receives a READ request, it rejects it iff a YOUNGER trans has written that item. When Scheduler receives a WRITE request, it rejects it iff a YOUNGER transaction has written or read that item. NOTES on BTO: Timestamp is usually "arrival time" but can be ANY linear ordering. When the SCHEDULER rejects a request, the requesting trans restarts. BTO must also schedule accepted operations to DM in ts order also. In order to make the SCHEDULE decisions, scheduler must know the timestamp, ts, of last transaction to write each item and ts of last transaction to read each item. Thus, the system must keep both of these "data-item timestamps" for EVERY data item, x, in the system, namely a data item read timestamp, rts(x), and a data item write timestamp, wts(x). Usually these are kept right with the data item as an part of the data item that only the system can access. That takes a lot of extra space e. g. if there are 10 billion data items (records) in the Data. Base (not uncommon), data-itemtimestamps may take up 160 GB, assuming an 8 byte ts (note that 4 bytes won't do). BTO is a pure RESART policy (uses only restart conflict resolution. BTO CC is deadlock free (since waiting is not used). BTO, however, can experience livelocks (trans continuously restarting for the same reason over and over). BTO results in lower concurrency in central systems (studies have shown) BTO, works better in distributed systems. Why? All the Scheduler has to have in order to make the scheduling decision when a transaction, t asks for a data item, x, is the transaction-timestamp, ts(t), and data-item-writetimestamp, wts(x) (for a read request) and the data-item-read-timestamp, tts(x) (for a write request).

Other Concurrency Control Methods DISTRIBUTED BTO SCHEDULERS NEED NO INFORMATION FROM OTHER SITES ts(t) comes along with the transaction, t (part of its identifier) wts(x) and rts(x) are stored with the data item, x, at that site. Again, one can see, that there is system overhead in BTO since EVERY DATA ITEM has to have a read_timestamp (rts) and a write_timestamp (wts) each could be 8 bytes, so additional 16 bytes of system data for each record. A large database can have billions and even trillions of DATA ITEMS (Records). By contrast, a distributed 2 PL scheduler must maintain Lock Table at some 1 site. Then any request coming from any site for data at any other site would have to be sent across the network from the request-site to the LT-site and then the reply wold have to be sent from the LT site to the data site(s). However, LT is not nearly as large. One further downside to BTO: The BTO Scheduler must submit accepted conflicting operations to DM in ts-order BTO could issue them in a serial manner: Wait to issue next one until previous is ack'ed. That's very inefficient! (serial execution is almost always inefficent) Usually a complex "handshake" protocol is used to optimize this. DO NOT CONFUSE BTO with Wound-Wait or Wait-Die Deadlock Management! Both are timestamp-based, but BTO is Concurrency Control Scheduler, while WW/WD are deadlock prevention methods (to go with a, e. g. , 2 PL scheduler)

Other Concurrency Control Methods DISTRIBUTED BTO SCHEDULERS NEED NO INFORMATION FROM OTHER SITES ts(t) comes along with the transaction, t (part of its identifier) wts(x) and rts(x) are stored with the data item, x, at that site. Again, one can see, that there is system overhead in BTO since EVERY DATA ITEM has to have a read_timestamp (rts) and a write_timestamp (wts) each could be 8 bytes, so additional 16 bytes of system data for each record. A large database can have billions and even trillions of DATA ITEMS (Records). By contrast, a distributed 2 PL scheduler must maintain Lock Table at some 1 site. Then any request coming from any site for data at any other site would have to be sent across the network from the request-site to the LT-site and then the reply wold have to be sent from the LT site to the data site(s). However, LT is not nearly as large. One further downside to BTO: The BTO Scheduler must submit accepted conflicting operations to DM in ts-order BTO could issue them in a serial manner: Wait to issue next one until previous is ack'ed. That's very inefficient! (serial execution is almost always inefficent) Usually a complex "handshake" protocol is used to optimize this. DO NOT CONFUSE BTO with Wound-Wait or Wait-Die Deadlock Management! Both are timestamp-based, but BTO is Concurrency Control Scheduler, while WW/WD are deadlock prevention methods (to go with a, e. g. , 2 PL scheduler)

Other Concurrency Control Methods Optimistic Concurrency Control assumes optimistically, no conflicts will occur. Transactions access data without getting any apriori permissions. But, a Transaction must be VALIDATED when it completes (just prior to COMMIT) to make sure its optimistism was correct. If not, it must abort. VALIDATION (validation must be single threaded - a monitor or mutually excluding): A commiting transaction is "validated" if it is in conflict with no active transaction else it is declared "invalid" and must restarted. So a transaction must list the data items it has accessed and the system must maintain an up-to-date list of "active transactions" with t(ts) and accessed data-item-ids? Basically, an optimistic concurrency control can be thought of as being BTO, in which the "timestamping" is done at its commit time, not at start time, (transaction is validated iff it is not "too late" accessing any of its data) since active transactions are younger than the committing transaction. Validation must be an atomic, single threaded process. Therefore if any active trans has already read a item that the committing trans wants to write (all writes are delayed until validation) it's too late for committing trans to write it in ts order and thus, must be restarted. Note that this is non-prememptive optimistic CC.

Other Concurrency Control Methods Optimistic Concurrency Control assumes optimistically, no conflicts will occur. Transactions access data without getting any apriori permissions. But, a Transaction must be VALIDATED when it completes (just prior to COMMIT) to make sure its optimistism was correct. If not, it must abort. VALIDATION (validation must be single threaded - a monitor or mutually excluding): A commiting transaction is "validated" if it is in conflict with no active transaction else it is declared "invalid" and must restarted. So a transaction must list the data items it has accessed and the system must maintain an up-to-date list of "active transactions" with t(ts) and accessed data-item-ids? Basically, an optimistic concurrency control can be thought of as being BTO, in which the "timestamping" is done at its commit time, not at start time, (transaction is validated iff it is not "too late" accessing any of its data) since active transactions are younger than the committing transaction. Validation must be an atomic, single threaded process. Therefore if any active trans has already read a item that the committing trans wants to write (all writes are delayed until validation) it's too late for committing trans to write it in ts order and thus, must be restarted. Note that this is non-prememptive optimistic CC.

Other Concurrency Control Methods CSMA/CD like CC: Need to write a simple Concurrency Controller (Scheduler) for your boss? This is a very simple and effective SCHEDULER (no critical section coding required) in which cooperating TMs do "self service" 2 PL using the ethernet LAN CSMA/CD protocol CSMA/CD = Carrier Sense Multiple Access with Collision Detect CSMA/CD-Concurrency Control: A cooperating TM, t, seeks access to item, x, it will: 1. Check availability of x (analogous with "carrier sensing") (Is another trans using it in a conflicting mode? ). 2. If x is available, set lock on x (TM does this itself! in a Lock. Table File) else try later (after some backoff random period). 3. Check for collision (with other cooperating trans that might have been setting conflicting locks concurrently (analogous to "collision detecting") 4. If collision, TM removes all lock(s) it set and tries later (after some backoff period). 5. Release all locks after completion (COMMIT or ABORT) (Strict 2 PL). (This is a S 2 PL protocol WITHOUT an active scheduler).

Other Concurrency Control Methods CSMA/CD like CC: Need to write a simple Concurrency Controller (Scheduler) for your boss? This is a very simple and effective SCHEDULER (no critical section coding required) in which cooperating TMs do "self service" 2 PL using the ethernet LAN CSMA/CD protocol CSMA/CD = Carrier Sense Multiple Access with Collision Detect CSMA/CD-Concurrency Control: A cooperating TM, t, seeks access to item, x, it will: 1. Check availability of x (analogous with "carrier sensing") (Is another trans using it in a conflicting mode? ). 2. If x is available, set lock on x (TM does this itself! in a Lock. Table File) else try later (after some backoff random period). 3. Check for collision (with other cooperating trans that might have been setting conflicting locks concurrently (analogous to "collision detecting") 4. If collision, TM removes all lock(s) it set and tries later (after some backoff period). 5. Release all locks after completion (COMMIT or ABORT) (Strict 2 PL). (This is a S 2 PL protocol WITHOUT an active scheduler).

Other Concurrency Control Methods CSMA/CD like CC continued: To make it even simpler, we can dispense with the carrier sense step: CD-Only Method: When a cooperation trans, t, seeks access to a data item, x, it must: 2. Set lock. 3. Check for collisions. 4. If there is a collision, remove all locks and try later (after backoff). 5. Release all locks after completion (COMMIT or ABORT). (This is also a S 2 PL protocol without an active scheduler). In fact, one can write this code in SQL, something like: Assume there is a file acting as the Lock. Table, called LT, such that LT(TID, RID, MODE) where TID is column for the Trans' ID number, RID is column for Record's ID number, and MODE is either "shared" or "exclusive" (S or X). Below shows some of the code for a CD-like CC Method (what additional code would be required for a CSMA/CD like method? ). If T 7 (transaction with TID = 7) needs an XLOCK on the data item with RID = (53, 28), the TM for T 7 issues: BEGIN INSERT INTO LT VALUES ('7', '(53, 28)', 'X'); V = SELECT COUNT(*) FROM LT WHERE RID='(53, 28)'; IF V = 1, THEN COMMT ELSE ABORT (try again later), DELETE FROM LT WHERE TID='7';

Other Concurrency Control Methods CSMA/CD like CC continued: To make it even simpler, we can dispense with the carrier sense step: CD-Only Method: When a cooperation trans, t, seeks access to a data item, x, it must: 2. Set lock. 3. Check for collisions. 4. If there is a collision, remove all locks and try later (after backoff). 5. Release all locks after completion (COMMIT or ABORT). (This is also a S 2 PL protocol without an active scheduler). In fact, one can write this code in SQL, something like: Assume there is a file acting as the Lock. Table, called LT, such that LT(TID, RID, MODE) where TID is column for the Trans' ID number, RID is column for Record's ID number, and MODE is either "shared" or "exclusive" (S or X). Below shows some of the code for a CD-like CC Method (what additional code would be required for a CSMA/CD like method? ). If T 7 (transaction with TID = 7) needs an XLOCK on the data item with RID = (53, 28), the TM for T 7 issues: BEGIN INSERT INTO LT VALUES ('7', '(53, 28)', 'X'); V = SELECT COUNT(*) FROM LT WHERE RID='(53, 28)'; IF V = 1, THEN COMMT ELSE ABORT (try again later), DELETE FROM LT WHERE TID='7';

Other Concurrency Control Methods ROLL (Request Order Link List) Concurrency Control Another CC method that uses Cooperation Transaction Managers and no Scheduler: (Note this technology - together with a later refinement called ROCC, is patent pending concurrency control technology at NDSU. In reverse time order, it can be called ROCC and ROLL Concurrency Control) ROLL: Request Order Linked List Concurrency Control (a further enhancement of this approach, ROCC and MVROCC are patent pending technologies at this time by NDSU). ROLL is a generalized model which includes aspects of locking and timestamp ordering as well as other methods. ROLL is: 1 non-blocking (no idle waiting) 2 restart free and thus livelock free. 3 deadlock free 4 self-service for trans mgrs (no active singlethread scheduler other than an enqueue operation) 5 very parallel (little critical sectioning) 6 ROLL is easily distributed

Other Concurrency Control Methods ROLL (Request Order Link List) Concurrency Control Another CC method that uses Cooperation Transaction Managers and no Scheduler: (Note this technology - together with a later refinement called ROCC, is patent pending concurrency control technology at NDSU. In reverse time order, it can be called ROCC and ROLL Concurrency Control) ROLL: Request Order Linked List Concurrency Control (a further enhancement of this approach, ROCC and MVROCC are patent pending technologies at this time by NDSU). ROLL is a generalized model which includes aspects of locking and timestamp ordering as well as other methods. ROLL is: 1 non-blocking (no idle waiting) 2 restart free and thus livelock free. 3 deadlock free 4 self-service for trans mgrs (no active singlethread scheduler other than an enqueue operation) 5 very parallel (little critical sectioning) 6 ROLL is easily distributed

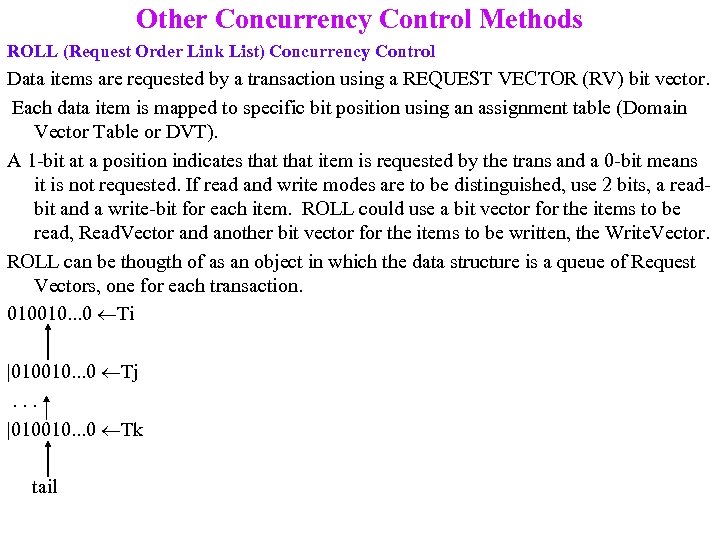

Other Concurrency Control Methods ROLL (Request Order Link List) Concurrency Control Data items are requested by a transaction using a REQUEST VECTOR (RV) bit vector. Each data item is mapped to specific bit position using an assignment table (Domain Vector Table or DVT). A 1 -bit at a position indicates that item is requested by the trans and a 0 -bit means it is not requested. If read and write modes are to be distinguished, use 2 bits, a readbit and a write-bit for each item. ROLL could use a bit vector for the items to be read, Read. Vector and another bit vector for the items to be written, the Write. Vector. ROLL can be thougth of as an object in which the data structure is a queue of Request Vectors, one for each transaction. 010010. . . 0 Ti |010010. . . 0 Tj. . . |010010. . . 0 Tk tail

Other Concurrency Control Methods ROLL (Request Order Link List) Concurrency Control Data items are requested by a transaction using a REQUEST VECTOR (RV) bit vector. Each data item is mapped to specific bit position using an assignment table (Domain Vector Table or DVT). A 1 -bit at a position indicates that item is requested by the trans and a 0 -bit means it is not requested. If read and write modes are to be distinguished, use 2 bits, a readbit and a write-bit for each item. ROLL could use a bit vector for the items to be read, Read. Vector and another bit vector for the items to be written, the Write. Vector. ROLL can be thougth of as an object in which the data structure is a queue of Request Vectors, one for each transaction. 010010. . . 0 Ti |010010. . . 0 Tj. . . |010010. . . 0 Tk tail

Other Concurrency Control Methods ROLL (Request Order Link List) Concurrency Control ROLL has 3 basic methods: POST (allows a transaction to specify its requests) POST is an atomic "enqueue" operation (the only atomicity required the only critical section) CHECK (determines availability). CHECK returns the logical OR of all RVs ahead of requesters POSTED vector in the ROLL. The vector resulting from this OR operation is (called the "Access Vector" or AV and represents a "lock table" for that transaction (specifies which items are available and which are not). If we have a separate Read. ROLL and Write. ROLL, in order to determine what can be read, a trans CHECKs the Write. ROLL only and to determine what can be written, a trans CHECKS both Write. ROLL and Read. ROLL. re. CHECKing can be done any time eg. , when trans finishes data items found available on first CHECK, it would issue another CHECK expecting that some of the previously unavailable items have become available in the interim. ) RELEASE: (releases dataitems to the next requester) RELEASE set some or all of trans' 1 -bits to 0 -bits.