- Number of slides: 26

Trading Agent Competition (TAC)
Jon Lerner, Silas Xu, Wilfred Yeung
CS 286r, 3 March 2004

TAC Overview
- International competition intended to spur research into trading agent design
- First held in July 2000
- Two scenarios: TAC Classic and TAC SCM (Supply Chain Management)

TAC Classic
- Each team is in charge of a virtual travel agent
- Agents try to assemble travel packages for virtual clients
- All clients wish to travel over the same five-day period
- Clients are not all equal: each has different preferences for certain types of travel packages

Travel Packages
- Each package contains flight information, a hotel type, and entertainment tickets
- To gain positive utility from a client, an agent must construct a feasible package. Feasible means:
  - Arrival date strictly less than departure date
  - Same hotel reserved during all intermediate nights
  - At most one entertainment event per night
  - At most one of each type of entertainment ticket
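
The four feasibility rules translate directly into a checker. This is a minimal sketch: the `Package` layout, the integer day/night numbering, and the hotel labels `"good"`/`"cheap"` are hypothetical choices for illustration, not the TAC server's data format.

```python
from dataclasses import dataclass, field


@dataclass
class Package:
    arrival: int    # arrival day
    departure: int  # departure day
    # night -> hotel type, e.g. "good" or "cheap" (hypothetical labels)
    hotel_nights: dict = field(default_factory=dict)
    # (night, entertainment type) pairs
    events: list = field(default_factory=list)


def is_feasible(p: Package) -> bool:
    # Rule 1: arrival date strictly less than departure date.
    if p.arrival >= p.departure:
        return False
    # Rule 2: the same hotel is reserved on every intermediate night.
    hotels = {p.hotel_nights.get(night) for night in range(p.arrival, p.departure)}
    if None in hotels or len(hotels) != 1:
        return False
    # Rule 3: at most one entertainment event per night.
    nights = [night for night, _ in p.events]
    if len(nights) != len(set(nights)):
        return False
    # Rule 4: at most one ticket of each entertainment type.
    kinds = [kind for _, kind in p.events]
    return len(kinds) == len(set(kinds))
```

For example, `is_feasible(Package(1, 3, {1: "good", 2: "good"}, [(1, "museum")]))` returns `True`, while switching night 2 to `"cheap"` makes it `False`.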

Flights
- Clients have preferences for ideal arrival and departure dates
- Infinite supply of flights, sold through continuously clearing auctions
  - Prices are set by a random walk
  - Prices were later set to drift upward to discourage waiting
- No resale or exchange of flights is permitted
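
The slide only says that flight prices follow a random walk that was later given an upward drift. The sketch below simulates such a path under assumed parameters: the step size `spread`, the `drift`, and the price band `floor`/`cap` are hypothetical and are not the official TAC values.

```python
import random


def simulate_flight_prices(start, steps, drift=0.0, spread=10.0,
                           floor=150.0, cap=800.0, seed=None):
    """Simulate one flight's quoted price as a bounded random walk.

    All parameters are hypothetical: each step adds a uniform perturbation
    in [-spread, spread] plus `drift`, so a positive drift makes prices
    rise on average, which discourages waiting.
    """
    rng = random.Random(seed)
    prices = [start]
    for _ in range(steps):
        step = rng.uniform(-spread, spread) + drift
        # Clamp to an assumed price band so the walk stays plausible.
        prices.append(min(cap, max(floor, prices[-1] + step)))
    return prices
```

With the same seed, a positive `drift` reuses the same random steps but pushes the path upward, which is what makes waiting costly.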

Hotels
- Two hotels, high quality and low quality, with 16 rooms per hotel per night
- Sold through ascending, multi-unit, sixteenth-price auctions: one auction for all rooms of a single hotel on a single night
  - Periodically, a randomly chosen auction closes, to encourage agents to bid
- Clients have different values for the high- and low-quality hotels
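
A simplified sketch of how one hotel auction could be cleared: the 16 highest bids win and every winner pays the 16th-highest bid. It assumes each bid is for a single room, that the price is zero when fewer than 16 rooms are bid for, and that ties are broken by submission order; ascending quote updates and random closing times are not modeled, and `sixteenth_price` is a hypothetical name.

```python
def sixteenth_price(bids, units=16):
    """Clear one hotel auction (one hotel, one night).

    `bids` is a list of (agent, price) pairs, one pair per room requested.
    The `units` highest bids win and every winner pays the 16th-highest bid.
    Assumption: with fewer than `units` bids the price is 0.
    """
    # Stable sort: equal prices keep submission order (tie-break assumption).
    ranked = sorted(bids, key=lambda bid: bid[1], reverse=True)
    price = ranked[units - 1][1] if len(ranked) >= units else 0.0
    winners = ranked[:units]
    return price, winners
```

With 20 bids priced 100 through 119, the winners are the bids from 104 up, and all of them pay 104.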

Entertainment
- Three types of entertainment are available
- Clients have a value for each type
- Each agent has an initial endowment of tickets
- Agents buy and sell tickets through continuous double auctions
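
A continuous double auction matches buy and sell orders as they arrive. The class below is an illustrative order book for a single ticket type, assuming unit orders, price-then-time priority, and that a trade executes at the price of the order already resting on the book; `DoubleAuction` and `submit` are hypothetical names, not part of any TAC server API.

```python
import heapq


class DoubleAuction:
    """Minimal continuous double auction for one ticket type (unit orders)."""

    def __init__(self):
        self.bids = []  # max-heap via negated prices: (-price, seq, trader)
        self.asks = []  # min-heap: (price, seq, trader)
        self.seq = 0    # arrival counter: earlier orders win price ties

    def submit(self, trader, side, price):
        """Return (buyer, seller, price) if the order trades at once, else None."""
        self.seq += 1
        if side == "buy":
            if self.asks and self.asks[0][0] <= price:
                ask_price, _, seller = heapq.heappop(self.asks)
                return (trader, seller, ask_price)  # trade at the resting ask
            heapq.heappush(self.bids, (-price, self.seq, trader))
        else:
            if self.bids and -self.bids[0][0] >= price:
                neg_bid, _, buyer = heapq.heappop(self.bids)
                return (buyer, trader, -neg_bid)    # trade at the resting bid
            heapq.heappush(self.asks, (price, self.seq, trader))
        return None
```

For instance, after a sell order at 90 and a buy order at 80 (no trade), a new buy at 95 trades with the seller at 90.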

Agent Themes
- Agents have to decide:
  - When to bid
  - What to bid on
  - How much to bid
- Combinatorial preferences (a client values a package only as a whole), but not combinatorial auctions (each good is sold in its own auction)

Strategies
- What strategies come to mind?
  - What AI techniques might be useful?
  - Simple vs. complicated strategies
- How quickly should you adapt as the game progresses?
  - Use of historical data vs. focus on the current game only
  - Play the game vs. play the players

living agents (Living Systems AG), Winner: TAC 2001
- Makes two assumptions:
  1. Steadily increasing flight prices favor early decisions on flight tickets.
  2. The best-performing teams, in particular, follow strategies that maximize their own utility; they do not take the risk of trying to reduce other teams' utility.
- Simple strategy:
  - Makes substantial use of historical data
  - Barely any monitoring of, or adaptation to, changing conditions
  - Benefits from the other agents' complicated algorithms, which keep prices under control
- Open-loop: "play the players"

living agents: Determining Hotel and Flight Bids
- Assume hotel auctions will clear at historical levels
- Using these as hotel prices, together with initial flight prices and client preferences, determine the optimal client trips
- Immediately place bids based on this optimum:
  - Purchase the corresponding flights immediately
  - Place offers for the required hotels at prices high enough to ensure successful acquisition
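
To make the "determine optimal client trips" step concrete, here is a per-client sketch that enumerates every arrival/departure pair and hotel and keeps the one with the highest utility minus predicted cost. It assumes a TAC-Classic-style utility (1000, minus 100 per day of deviation from the preferred dates, plus a premium for the good hotel), ignores entertainment, and treats clients independently; it is not living agents' actual optimizer, and `best_trip`, `"good"`, and `"cheap"` are hypothetical names.

```python
from itertools import product


def best_trip(pref_arrival, pref_departure, hotel_premium,
              flight_in, flight_out, hotel_price, days=5):
    """Pick the trip with the highest utility minus predicted cost for one client.

    flight_in / flight_out map a day to a predicted flight price;
    hotel_price maps (hotel, night) to a predicted (e.g. historical) clearing price.
    Returns (surplus, arrival, departure, hotel).
    """
    best = None
    for arrival, departure in product(range(1, days), range(2, days + 1)):
        if arrival >= departure:
            continue  # infeasible: must arrive strictly before departing
        for hotel in ("good", "cheap"):  # hypothetical hotel labels
            # Assumed utility: 1000, minus 100 per day of deviation from the
            # preferred dates, plus the client's premium for the good hotel.
            utility = 1000 - 100 * (abs(arrival - pref_arrival)
                                    + abs(departure - pref_departure))
            if hotel == "good":
                utility += hotel_premium
            cost = (flight_in[arrival] + flight_out[departure]
                    + sum(hotel_price[(hotel, night)]
                          for night in range(arrival, departure)))
            candidate = (utility - cost, arrival, departure, hotel)
            if best is None or candidate[0] > best[0]:
                best = candidate
    return best
```

The living agents approach would then buy the chosen flights at once and bid high for the chosen rooms.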

Entertainment Auction
- Immediately makes a fixed decision about which entertainment tickets to try to buy or sell, assuming the historical clearing price of about $80:
  - Opportunistically buy and sell around this point
  - Put in final reservation prices at the seven-minute mark
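
The fixed entertainment decision can be sketched as a comparison of each ticket's value to our clients against the assumed market price of about $80. This is a simplification: each ticket key stands for a single unit, the per-ticket `values` are assumed to come from the trip allocation, and `entertainment_plan` is a hypothetical helper.

```python
HISTORICAL_PRICE = 80.0  # approximate historical clearing price cited above


def entertainment_plan(values, holdings, price=HISTORICAL_PRICE):
    """Decide once, up front, which tickets to buy or sell.

    values:   ticket -> value to our clients if we hold it (assumed given)
    holdings: ticket -> number owned (each ticket key treated as one unit)
    Returns ticket -> "buy", "sell", or "hold".
    """
    plan = {}
    for ticket in set(values) | set(holdings):
        value = values.get(ticket, 0.0)
        owned = holdings.get(ticket, 0) > 0
        if owned and value < price:
            plan[ticket] = "sell"  # the market should pay more than it is worth to us
        elif not owned and value > price:
            plan[ticket] = "buy"   # worth more to a client than the expected price
        else:
            plan[ticket] = "hold"  # no trade expected to help
    return plan
```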

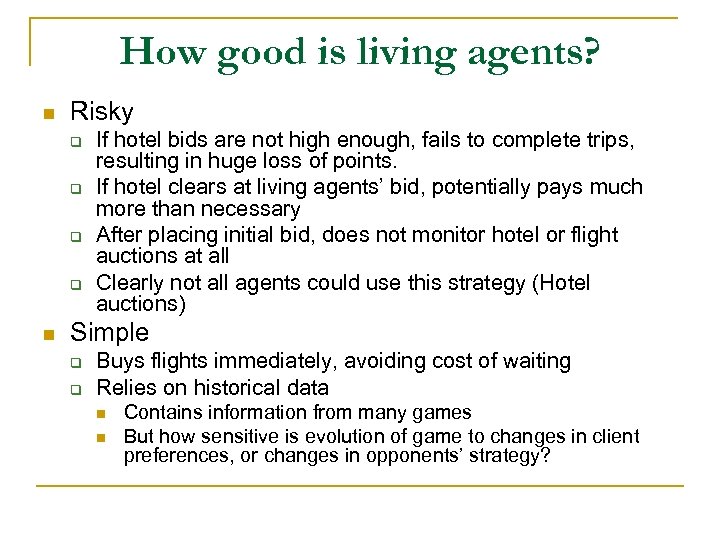

How good is living agents?
- Risky:
  - If hotel bids are not high enough, it fails to complete trips, resulting in a huge loss of points
  - If a hotel auction clears at living agents' own bid, it potentially pays much more than necessary
- After placing its initial bids, it does not monitor the hotel or flight auctions at all
- Clearly, not all agents could use this strategy: if every agent bid this aggressively, the hotel auctions would clear at very high prices
- Simple:
  - Buys flights immediately, avoiding the cost of waiting
  - Relies on historical data:
    - Contains information from many games
    - But how sensitive is the evolution of a game to changes in client preferences, or to changes in opponents' strategies?

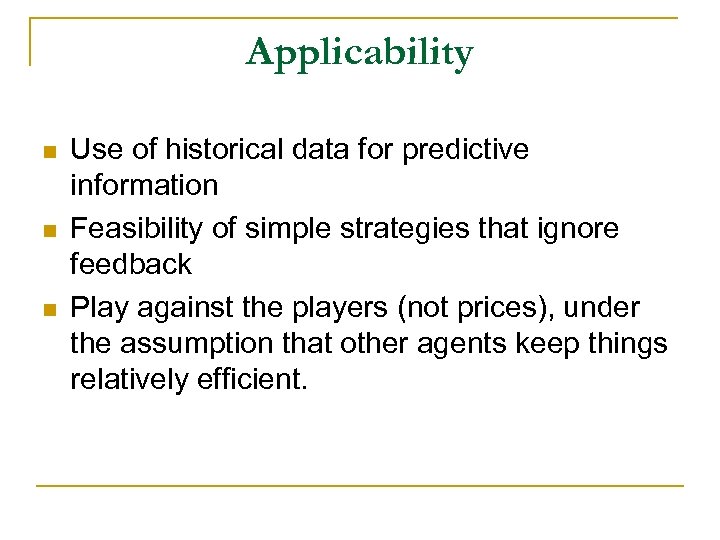

Applicability
- Use of historical data for predictive information
- Feasibility of simple strategies that ignore feedback
- Playing against the players (not the prices), under the assumption that other agents keep things relatively efficient

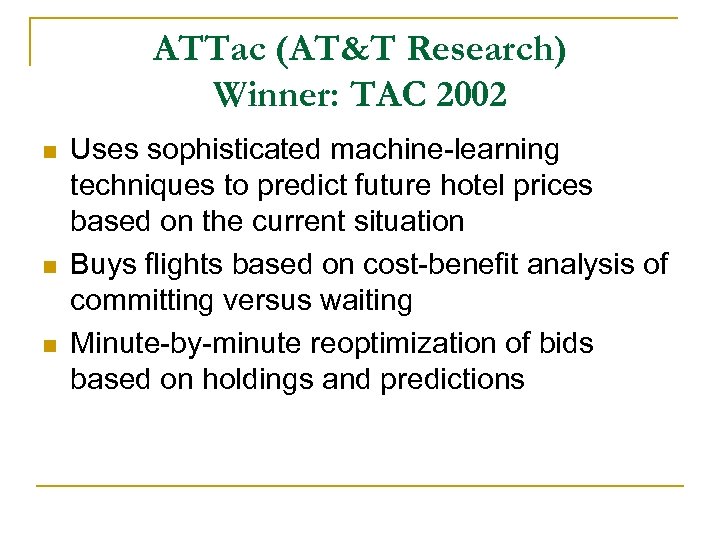

ATTac (AT&T Research), Winner: TAC 2002
- Uses sophisticated machine-learning techniques to predict future hotel prices from the current situation
- Buys flights based on a cost-benefit analysis of committing versus waiting
- Reoptimizes bids minute by minute, based on current holdings and predictions

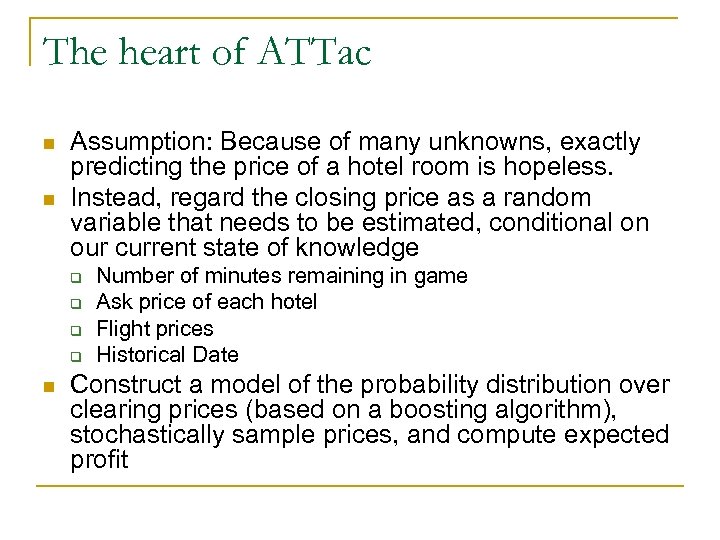

The heart of ATTac
- Assumption: because of the many unknowns, exactly predicting the price of a hotel room is hopeless
- Instead, regard the closing price as a random variable that needs to be estimated, conditional on our current state of knowledge:
  - Number of minutes remaining in the game
  - Ask price of each hotel
  - Flight prices
  - Historical data
- Construct a model of the probability distribution over clearing prices (based on a boosting algorithm), stochastically sample prices, and compute the expected profit
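
The sampling step can be illustrated with a Monte Carlo estimate of expected profit. In this sketch, ATTac's boosted density model is replaced by a hypothetical stand-in (`make_sampler`) that adds independent Gaussian changes to the current ask prices; the trip `utility`, the room identifiers, and the function names are also assumed for illustration.

```python
import random
import statistics


def make_sampler(asks, spread):
    """Hypothetical stand-in for ATTac's learned model: closing price =
    current ask + independent Gaussian change, truncated at zero."""
    def sample(rng):
        return {room: max(0.0, ask + rng.gauss(0.0, spread))
                for room, ask in asks.items()}
    return sample


def expected_profit(utility, rooms, sample_prices, n_samples=1000, seed=0):
    """Monte Carlo estimate of utility minus total hotel cost for a fixed
    set of rooms, averaged over sampled closing prices."""
    rng = random.Random(seed)
    profits = []
    for _ in range(n_samples):
        prices = sample_prices(rng)  # one joint draw: room -> closing price
        profits.append(utility - sum(prices[room] for room in rooms))
    return statistics.mean(profits)
```

Evaluating `expected_profit` for each candidate set of rooms and keeping the largest is one way to compare allocations under price uncertainty.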

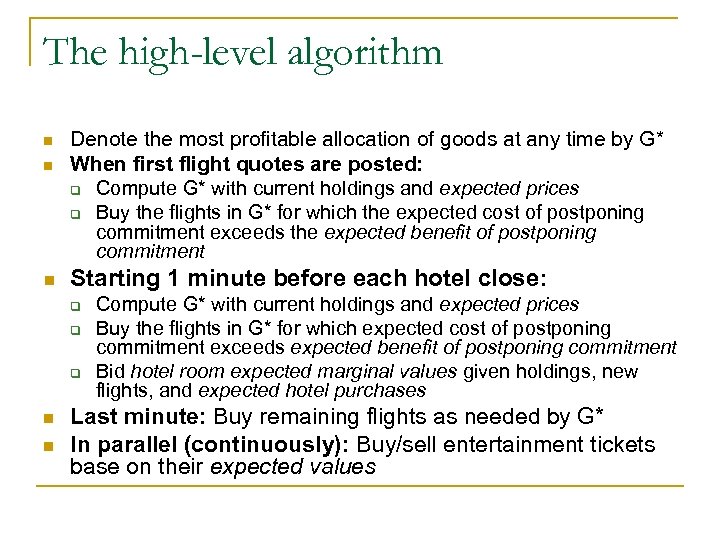

The high-level algorithm
- Denote the most profitable allocation of goods at any time by G*
- When the first flight quotes are posted:
  - Compute G* with current holdings and expected prices
  - Buy the flights in G* for which the expected cost of postponing commitment exceeds the expected benefit of postponing commitment
- Starting 1 minute before each hotel closes:
  - Compute G* with current holdings and expected prices
  - Buy the flights in G* for which the expected cost of postponing commitment exceeds the expected benefit of postponing commitment
  - Bid on hotel rooms at their expected marginal values, given holdings, new flights, and expected hotel purchases
- Last minute: buy the remaining flights needed by G*
- In parallel (continuously): buy and sell entertainment tickets based on their expected values
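
The flight rule "buy when the expected cost of postponing exceeds the expected benefit" can be sketched with a deliberately simple model, which is our assumption rather than ATTac's published procedure: waiting costs the expected price rise if the flight is still needed later, and saves the whole price if a later re-optimization of G* drops the flight. `should_buy_now` and its parameters are hypothetical.

```python
def should_buy_now(price_now, expected_price_later, prob_not_needed):
    """Commit to a flight if waiting is expected to cost more than it saves.

    Hypothetical model:
      cost of waiting    = P(still needed) * expected price rise
      benefit of waiting = P(no longer needed) * price we would have paid now
    """
    cost_of_waiting = (1 - prob_not_needed) * (expected_price_later - price_now)
    benefit_of_waiting = prob_not_needed * price_now
    return cost_of_waiting > benefit_of_waiting
```

A flight that is certainly needed and expected to rise is bought at once; a flight with a real chance of being dropped from G* is worth postponing unless its price is expected to climb steeply.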

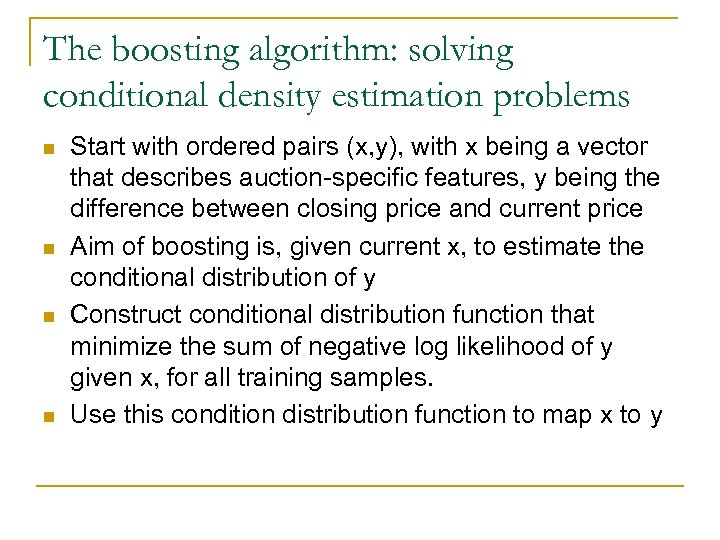

The boosting algorithm: solving conditional density estimation problems
- Start with ordered pairs (x, y), where x is a vector describing auction-specific features and y is the difference between the closing price and the current price
- The aim of boosting is, given the current x, to estimate the conditional distribution of y
- Construct the conditional distribution function that minimizes the sum of the negative log-likelihoods of y given x over all training samples
- Use this conditional distribution function to map x to a distribution over y
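
Written as an optimization over m training pairs (x_i, y_i) (the notation is ours), the fitting criterion in the third bullet reads:

```latex
\hat{p} = \arg\min_{p \in \mathcal{P}} \sum_{i=1}^{m} -\log p(y_i \mid x_i)
```

Here \mathcal{P} is the family of conditional densities the boosting procedure can represent; at bidding time, \hat{p}(\cdot \mid x) for the current features x is the distribution from which price changes are sampled.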

living agents vs. ATTac
- Two very different approaches
- Statistically insignificant difference in scores in TAC 2001

Open and Closed Loop Processes
- Closed-loop: the system feeds information back into itself, examining the world in an effort to validate its world model
  - Appropriate for real-world environments, in which feedback is necessary to validate the agent's actions
- Open-loop: no feedback from the environment to the agent; the output of a process is considered complete once it is executed
  - Appropriate for simulated rather than real environments (in real environments, agents generally do not perform tasks perfectly)
  - Generally more efficient, for the same reason: no effort is spent processing feedback

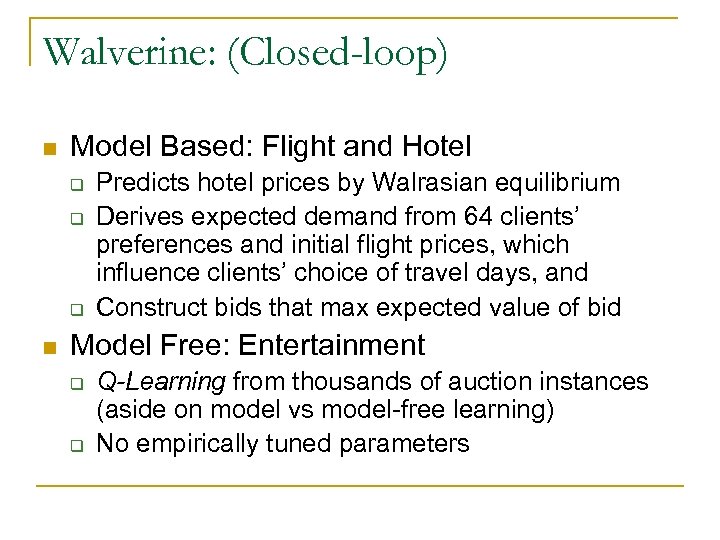

Walverine (closed-loop)
- Model-based: flights and hotels
  - Predicts hotel prices by computing a Walrasian equilibrium
  - Derives expected demand from the 64 clients' preferences and from the initial flight prices, which influence the clients' choice of travel days
  - Constructs bids that maximize the expected value of each bid
- Model-free: entertainment
  - Q-learning from thousands of auction instances (aside on model-based vs. model-free learning)
  - No empirically tuned parameters
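
A Walrasian equilibrium is a price at which demand equals supply. One standard way to search for it is tâtonnement: raise the price when demand exceeds supply and lower it otherwise. The toy below does this for a single hotel night with 16 rooms, assuming a hypothetical smooth demand curve (64 clients whose room values are uniform on [0, 400]); Walverine's actual model spans all hotel auctions and derives demand from client preferences and flight prices.

```python
def tatonnement(demand, supply, price=100.0, rate=2.0, iters=1000, tol=1e-6):
    """Search for a market-clearing price by adjusting it in proportion to
    excess demand (raise when over-demanded, lower when under-demanded)."""
    for _ in range(iters):
        excess = demand(price) - supply
        if abs(excess) < tol:
            break
        price = max(0.0, price + rate * excess)
    return price


def demand(price):
    # Hypothetical demand: 64 clients with room values uniform on [0, 400]
    # each want the room when their value exceeds the price.
    return 64 * max(0.0, 1 - price / 400)
```

`tatonnement(demand, 16)` approaches 300, where 64 × (1 − 300/400) = 16. The step size `rate` matters: too large a value makes the price oscillate instead of converging.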

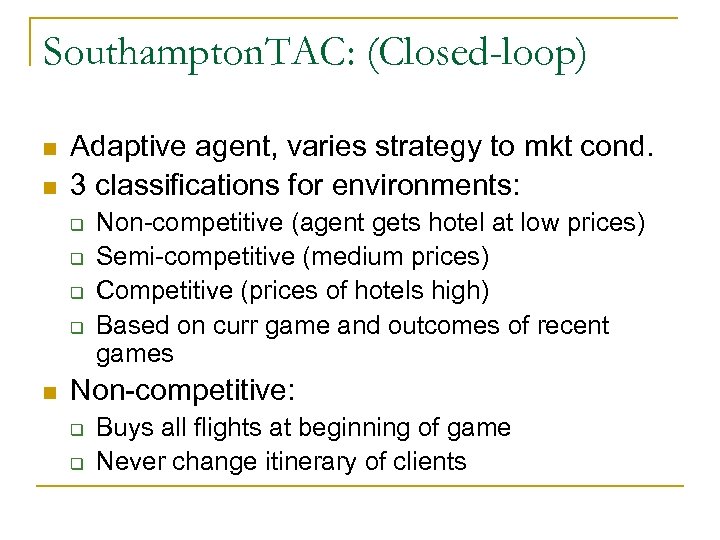

SouthamptonTAC (closed-loop)
- Adaptive agent: varies its strategy according to market conditions
- Three classifications of environment:
  - Non-competitive (the agent gets hotels at low prices)
  - Semi-competitive (medium prices)
  - Competitive (high hotel prices)
- Classification is based on the current game and the outcomes of recent games
- Non-competitive:
  - Buys all flights at the beginning of the game
  - Never changes the clients' itineraries
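
The classification step can be sketched as bucketing a blended average hotel price. The blend of the current game with recent games follows the slide, but the thresholds `low` and `high`, the `weight`, and the use of average hotel price as the single signal are hypothetical choices, not SouthamptonTAC's actual rules.

```python
def classify_game(recent_avg_prices, current_avg_price,
                  low=50.0, high=150.0, weight=0.5):
    """Bucket the market by average hotel price.

    Blends the current game with recent games; `low`, `high`, and
    `weight` are hypothetical values chosen for illustration.
    """
    if recent_avg_prices:
        history = sum(recent_avg_prices) / len(recent_avg_prices)
    else:
        history = current_avg_price  # no history yet: rely on this game
    score = weight * current_avg_price + (1 - weight) * history
    if score < low:
        return "non-competitive"
    if score < high:
        return "semi-competitive"
    return "competitive"
```

Re-running the classifier as prices move during a game gives the "continuously assesses game type" behavior.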

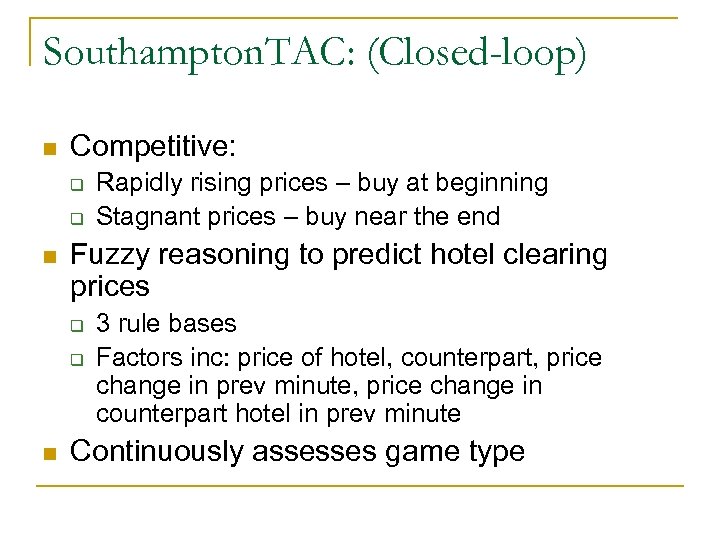

SouthamptonTAC (closed-loop), continued
- Competitive:
  - Rapidly rising prices: buy at the beginning
  - Stagnant prices: buy near the end
- Fuzzy reasoning to predict hotel clearing prices:
  - 3 rule bases
  - Factors include: price of the hotel, price of its counterpart hotel, price change in the previous minute, and price change of the counterpart hotel in the previous minute
- Continuously assesses the game type

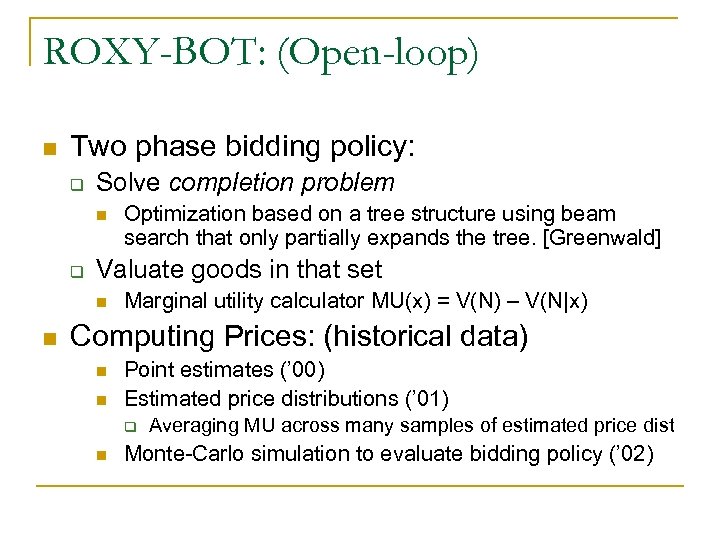

ROXY-BOT (open-loop)
- Two-phase bidding policy:
  - Solve the completion problem
    - Optimization based on a tree structure, using beam search that only partially expands the tree [Greenwald]
  - Value the goods in that set
    - Marginal utility calculator: MU(x) = V(N) − V(N \ x), where N \ x is the set of goods N without x
- Computing prices (historical data):
  - Point estimates ('00)
  - Estimated price distributions ('01)
    - Averaging MU across many samples from the estimated price distributions
  - Monte Carlo simulation to evaluate the bidding policy ('02)
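
The marginal-utility formula can be sketched with a tiny value function standing in for RoxyBot's beam-search completion. The toy `make_value` assumes one client who needs two hotel nights `h1` and `h2` for a trip worth 1000 and buys any missing night at the given price; averaging MU over sampled prices mirrors the '01 approach. All names and numbers are hypothetical.

```python
import random
import statistics

REQUIRED = {"h1", "h2"}  # hypothetical: the two hotel nights one client needs
TRIP_VALUE = 1000.0      # hypothetical utility of completing the trip


def make_value(prices):
    """Toy V(.): best value reachable from the goods held, buying any
    missing required good at `prices` (stands in for the completion solver)."""
    def value(held):
        cost = sum(prices[good] for good in REQUIRED - held)
        return max(0.0, TRIP_VALUE - cost)  # skip the trip if it costs too much
    return value


def marginal_utility(value, goods, x):
    """MU(x) = V(N) - V(N without x)."""
    return value(goods) - value(goods - {x})


def average_mu(goods, x, sample_prices, n_samples=200, seed=0):
    """Average MU(x) over sampled price vectors, as with price distributions."""
    rng = random.Random(seed)
    return statistics.mean(
        marginal_utility(make_value(sample_prices(rng)), goods, x)
        for _ in range(n_samples))
```

In this toy, losing `h1` means re-buying it, so MU(h1) equals its price; with `h1` priced uniformly between 100 and 140, the averaged MU comes out close to 120.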

Whitebear (Winner in '02, open-loop)
- Flights:
  - A: buy everything
  - B: buy only what is absolutely necessary
  - Combination: buy everything except "dangerous" tickets
- Hotels (predictions are simply historical averages):
  - A: bid a small increment above current prices
  - B: bid marginal utility
  - Combination: use A, unless MU is high, in which case use B
- Domain-specific, based on extensive experimentation
- Does not necessarily find the optimal set of goods; no learning
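
The combined hotel rule is a simple switch between the two strategies. This sketch assumes an absolute threshold for "high" MU and a fixed increment; `combined_hotel_bid`, `increment`, and `high_mu` are hypothetical, not Whitebear's tuned values.

```python
def combined_hotel_bid(current_price, marginal_utility,
                       increment=5.0, high_mu=200.0):
    """Strategy A: bid a small increment above the current price.
    Strategy B: bid the good's marginal utility.
    Combination: use A unless MU is high, in which case use B.
    `increment` and `high_mu` are hypothetical values."""
    if marginal_utility > high_mu:
        return marginal_utility           # B: the room matters a lot, bid its full MU
    return current_price + increment      # A: stay slightly ahead of the price
```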

Summary: Open vs. Closed
- All else equal, an open-loop strategy is better:
  - Simple
  - Avoids waiting costs (higher prices)
- Predictability of prices is the determining factor:
  - Perfectly predictable: open-loop
  - Large price variance: closed-loop
    - Open-loop picks its goods at the start and may pay a lot
  - Small price variance: closed-loop is optimal
    - But adds complexity for a potentially small benefit
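
The trade-off can be illustrated with a toy simulation, entirely our construction: two interchangeable goods have uncertain final prices; the open-loop agent commits at the start to the one with the lower expected price, while the closed-loop agent waits, buys whichever turns out cheaper, and pays a hypothetical `waiting_cost`. The uniform price noise and all parameters are assumptions.

```python
import random
import statistics


def compare_loops(mean_a, mean_b, spread, waiting_cost, n=10000, seed=0):
    """Return (mean open-loop cost, mean closed-loop cost) for two
    interchangeable goods whose final prices are uniform around their means."""
    rng = random.Random(seed)
    open_costs, closed_costs = [], []
    for _ in range(n):
        a = mean_a + rng.uniform(-spread, spread)
        b = mean_b + rng.uniform(-spread, spread)
        # Open-loop: commit up front to the good that looks cheaper on average.
        open_costs.append(a if mean_a <= mean_b else b)
        # Closed-loop: observe realized prices, buy the cheaper, pay for waiting.
        closed_costs.append(min(a, b) + waiting_cost)
    return statistics.mean(open_costs), statistics.mean(closed_costs)
```

With `spread=0.0` the open-loop agent simply saves the waiting cost; with a large spread, picking the cheaper realized price outweighs the waiting cost, matching the summary above.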
