e80ff761c1144fde81378d658acef49c.ppt

- Number of slides: 37

Traceback

Pat Burke, Yanos Saravanos

Agenda

- Introduction
- Problem Definition
- Traceback Methods
  - Packet Marking
  - Hash-based
- Conclusion
- References

Why Use Traceback?

- Spam
- DoS
- Insider attacks
- Worms / Viruses
  - Code Red (2001) spreading at 8 hosts/sec
  - Slammer Worm (2003) spreading at 125 hosts/sec

Why Use Traceback?

- Currently very difficult to find spammers and virus authors
  - Easy to spoof IPs
  - No inherent tracing mechanism in IP
- Blaster virus author left clues in code, was eventually caught
- What if we could trace packets back to point of origin?

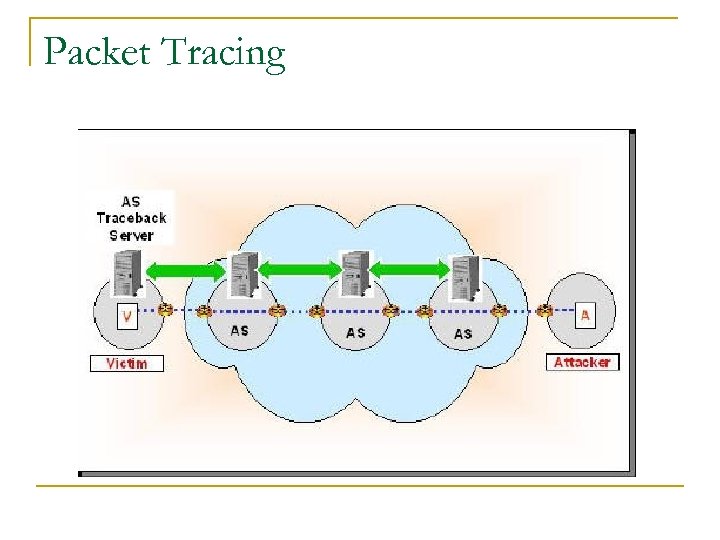

Packet Tracing

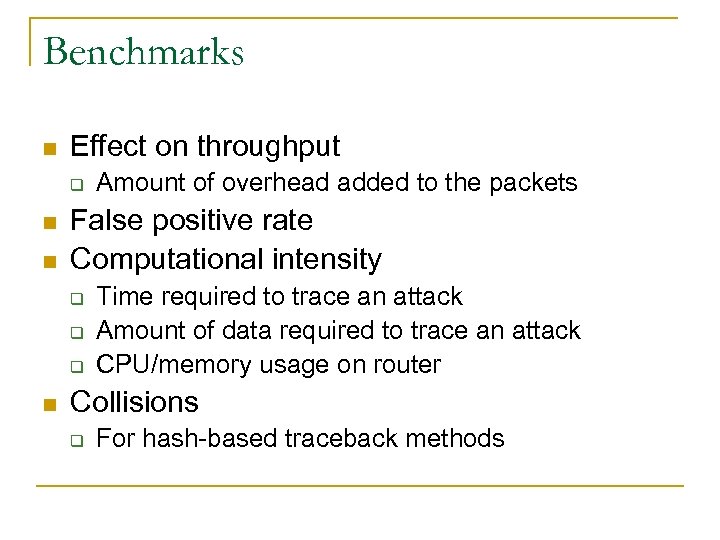

Benchmarks

- Effect on throughput
  - Amount of overhead added to the packets
- False positive rate
- Computational intensity
  - Time required to trace an attack
  - Amount of data required to trace an attack
  - CPU/memory usage on router
- Collisions
  - For hash-based traceback methods

FDPM: Flexible Deterministic Packet Marking

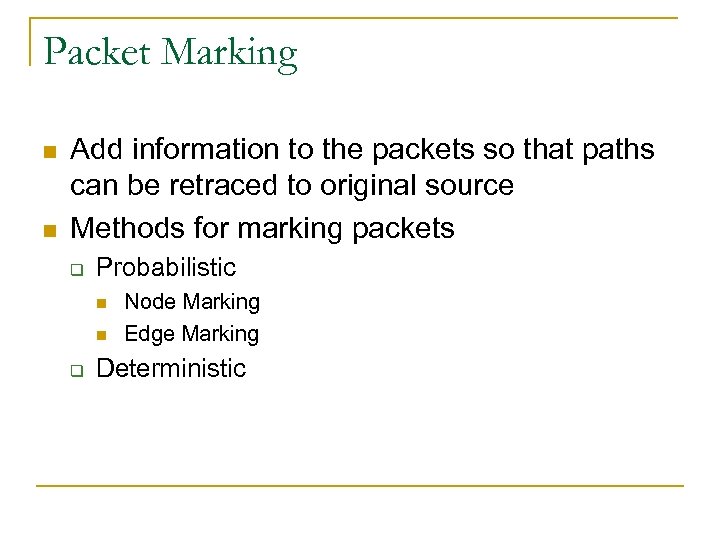

Packet Marking

- Add information to the packets so that paths can be retraced to the original source
- Methods for marking packets
  - Probabilistic
    - Node Marking
    - Edge Marking
  - Deterministic

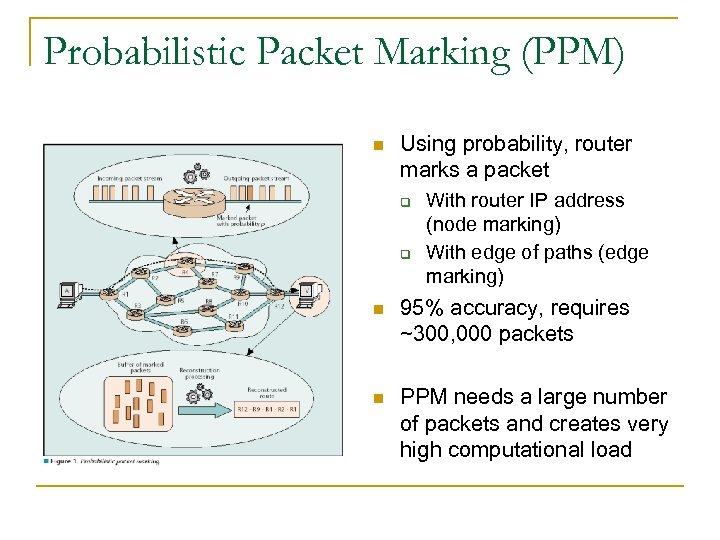

Probabilistic Packet Marking (PPM)

- Each router marks a packet with some probability
  - With router IP address (node marking)
  - With edge of paths (edge marking)
- 95% accuracy requires ~300,000 packets
- PPM needs a large number of packets and creates very high computational load
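The node-marking variant can be illustrated with a small simulation. This is a minimal sketch, assuming a single mark field that each router overwrites with probability `p`; the router names, the value of `p`, and the helper names are hypothetical and not taken from the slides.

```python
import random

def ppm_node_mark(path, p, rng):
    """Send one packet along `path` (router IDs, attacker side first).

    Each router overwrites the single mark field with its own ID with
    probability p, so the victim sees the mark of the last router that
    chose to mark, or None if no router did.
    """
    mark = None
    for router in path:
        if rng.random() < p:
            mark = router
    return mark

def collect_marks(path, p, n_packets, seed=0):
    """Count which router IDs the victim observes over n_packets."""
    rng = random.Random(seed)
    counts = {}
    for _ in range(n_packets):
        mark = ppm_node_mark(path, p, rng)
        if mark is not None:
            counts[mark] = counts.get(mark, 0) + 1
    return counts

path = ["R1", "R2", "R3", "R4"]   # hypothetical attack path, R4 nearest the victim
counts = collect_marks(path, p=0.2, n_packets=10_000)
print(sorted(counts.items()))
```

In this model, routers near the victim dominate the observed marks, while marks from distant routers are often overwritten. That is one way to see why PPM needs many packets before the full path shows up.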

PPM Nodes - Cons

- Large number of false positives
  - DDoS with 25 hosts requires several days and has thousands of false positives
- Slow convergence rate
  - For 95% success, we need 300,000 packets
- Attacker can still inject modified packets into PPM network (mark spoofing)
- This is only for a single attacker

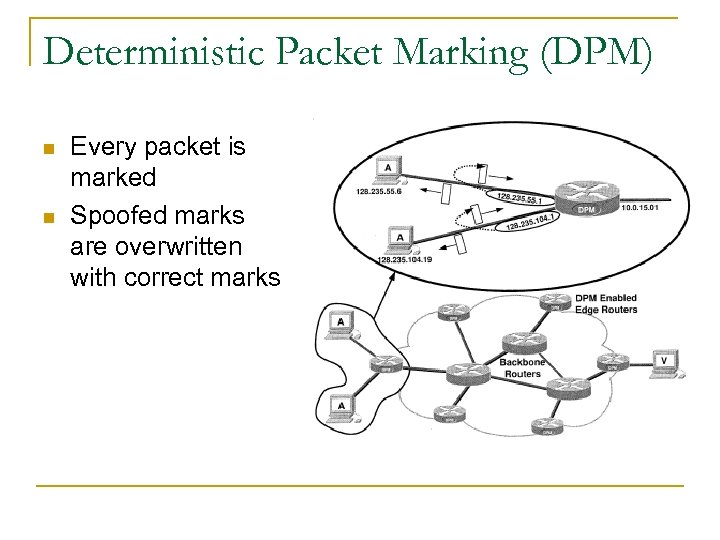

Deterministic Packet Marking (DPM)

- Every packet is marked
- Spoofed marks are overwritten with correct marks

DPM

- Incoming packets are marked
- Outgoing packets are unaltered
- Requires more overhead than PPM
- Less computation required
- Probability of generating ingress IP address: $(1-p)^{d-1}$

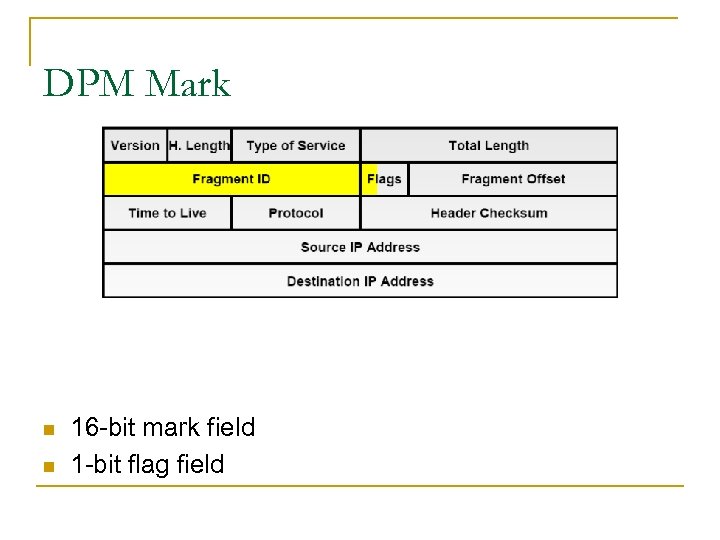

DPM Mark

- 16-bit mark field
- 1-bit flag field

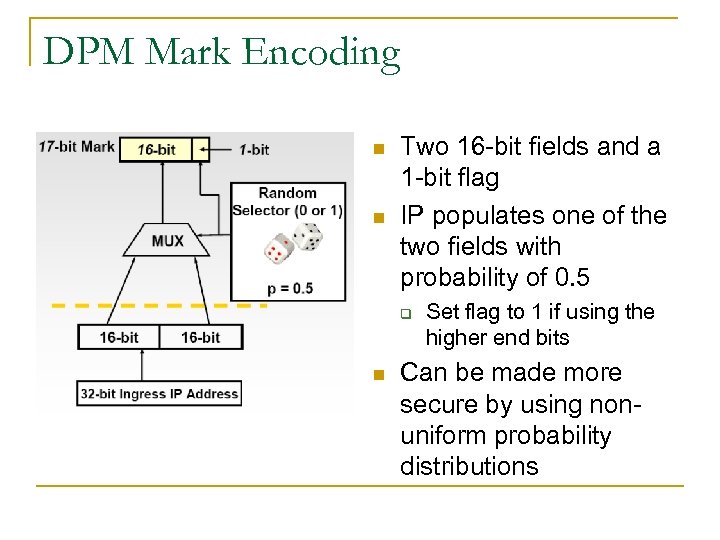

DPM Mark Encoding

- Two 16-bit fields and a 1-bit flag
- IP populates one of the two fields with probability of 0.5
  - Set flag to 1 if using the higher end bits
- Can be made more secure by using nonuniform probability distributions
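The encoding above can be sketched in a few lines. This is a minimal sketch, assuming the two 16-bit fields are the upper and lower halves of the 32-bit ingress address and that each packet carries one half chosen with probability 0.5; the function names and the example address are hypothetical.

```python
import ipaddress
import random

def dpm_mark(ingress_ip, rng):
    """Return (flag, mark) for one packet: a random 16-bit half of the address."""
    addr = int(ipaddress.IPv4Address(ingress_ip))
    if rng.random() < 0.5:
        return 1, (addr >> 16) & 0xFFFF   # flag 1: higher-end 16 bits
    return 0, addr & 0xFFFF               # flag 0: lower-end 16 bits

def dpm_reconstruct(marks):
    """Rebuild the ingress address once both halves have been seen, else None."""
    halves = {}
    for flag, mark in marks:
        halves[flag] = mark
    if 0 in halves and 1 in halves:
        return str(ipaddress.IPv4Address((halves[1] << 16) | halves[0]))
    return None

rng = random.Random(1)
marks = [dpm_mark("192.0.2.77", rng) for _ in range(10)]
print(dpm_reconstruct(marks))
```

The victim only needs one packet of each kind, so reconstruction reduces to remembering the most recent mark per flag value.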

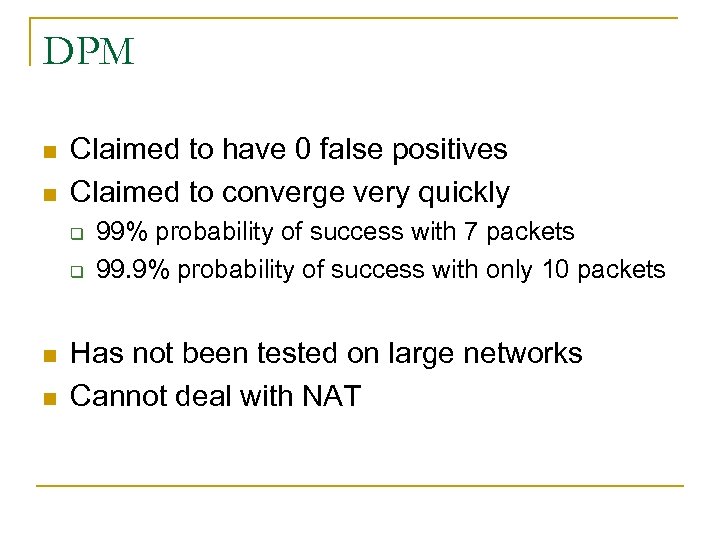

DPM

- Claimed to have 0 false positives
- Claimed to converge very quickly
  - 99% probability of success with 7 packets
  - 99.9% probability of success with only 10 packets
- Has not been tested on large networks
- Cannot deal with NAT
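The convergence claim can be checked roughly with a simple model. This is a sketch under the assumption that each packet independently carries either address half with probability 0.5, so tracing fails only when all n marks are the same half; the function name is hypothetical and the model may differ from the one behind the slide's figures.

```python
def prob_both_halves(n_packets):
    """Probability that n packets carry both address halves at least once."""
    if n_packets < 1:
        return 0.0
    # Failure means all n marks are the same half: 2 * 0.5**n
    return 1.0 - 2 * 0.5 ** n_packets

for n in (2, 7, 10):
    print(n, round(prob_both_halves(n), 4))
```

Under this simplified model the success probability is about 98.4% for 7 packets and about 99.8% for 10, in the same range as the claimed values.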

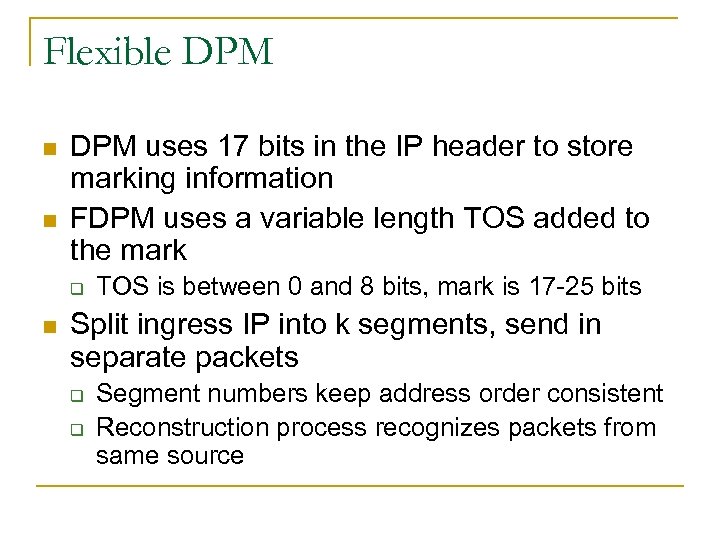

Flexible DPM

- DPM uses 17 bits in the IP header to store marking information
- FDPM uses a variable-length TOS added to the mark
  - TOS is between 0 and 8 bits, mark is 17-25 bits
- Split ingress IP into k segments, send in separate packets
  - Segment numbers keep address order consistent
  - Reconstruction process recognizes packets from same source

FDPM Reconstruction

- Mark Recognition
  - Store reconstruction packets in cache
  - Split IP header into fields to find mark length
- Address Recovery
  - Analyze and store mark in recovery table
  - Different source IPs may have the same digest (hash value) and collisions may occur
    - More than one entry is created
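Segmenting and address recovery can be sketched as follows. This is a minimal sketch, assuming k divides 32, a mark of the form (digest, segment number, segment bits), and a truncated SHA-256 as a stand-in digest; none of these details come from the slides, and the real FDPM mark layout differs.

```python
import hashlib
import ipaddress
from itertools import product

def digest(addr, bits=4):
    """Hypothetical short digest of a 32-bit address (not the paper's hash)."""
    h = hashlib.sha256(addr.to_bytes(4, "big")).digest()
    return h[0] & ((1 << bits) - 1)

def fdpm_marks(ingress_ip, k=4):
    """Split the ingress address into k segments: (digest, segment_no, bits)."""
    addr = int(ipaddress.IPv4Address(ingress_ip))
    seg_len = 32 // k                      # assumes k divides 32
    d = digest(addr)
    marks = []
    for i in range(k):
        shift = 32 - seg_len * (i + 1)     # segment 0 holds the highest bits
        marks.append((d, i, (addr >> shift) & ((1 << seg_len) - 1)))
    return marks

def fdpm_recover(marks, k=4):
    """Group marks by digest, then rebuild every address whose digest matches."""
    seg_len = 32 // k
    table = {}                             # digest -> segment_no -> set of values
    for d, i, value in marks:
        table.setdefault(d, {}).setdefault(i, set()).add(value)
    found = set()
    for d, segs in table.items():
        if len(segs) < k:
            continue                       # some segment not received yet
        for combo in product(*(sorted(segs[i]) for i in range(k))):
            addr = 0
            for value in combo:
                addr = (addr << seg_len) | value
            if digest(addr) == d:          # discard mixes from colliding sources
                found.add(str(ipaddress.IPv4Address(addr)))
    return found

marks = fdpm_marks("198.51.100.7") + fdpm_marks("203.0.113.9")
print(fdpm_recover(marks))
```

When two sources share a digest, the recovery table holds more than one value per segment. The sketch then tries every combination and keeps those whose digest matches, which is one simple way to handle the "more than one entry" case.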

Flow-Based Marking

- Mark packets selectively according to flow properties when router has heavy traffic
  - Reduce load on router while still marking
- Packets are classified according to destination IP address
- Uses flow thresholds
  - Lmax is the threshold where the router’s load is exceeded (called the overload problem)
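One possible reading of selective marking is sketched below. The slides do not give the actual decision rule, so everything here is an assumption: load is counted in packets per interval, `l_max` plays the role of Lmax, and `flow_threshold` is a hypothetical per-destination packet count above which a flow is still marked under overload.

```python
from collections import Counter

class FlowBasedMarker:
    """Toy selective marker. l_max and flow_threshold are assumed parameters."""

    def __init__(self, l_max, flow_threshold):
        self.l_max = l_max                    # packets per interval before overload
        self.flow_threshold = flow_threshold  # per-destination packet count
        self.flows = Counter()                # destination IP -> packets this interval
        self.load = 0

    def should_mark(self, dst_ip):
        """Decide whether to mark one packet addressed to dst_ip."""
        self.load += 1
        self.flows[dst_ip] += 1
        if self.load <= self.l_max:
            return True                       # normal load: mark every packet
        # Overloaded: mark only packets in heavy flows toward one destination
        return self.flows[dst_ip] > self.flow_threshold

    def new_interval(self):
        """Reset counters at the start of each measurement interval."""
        self.flows.clear()
        self.load = 0

marker = FlowBasedMarker(l_max=3, flow_threshold=2)
decisions = [marker.should_mark(dst) for dst in ["v", "v", "v", "x", "v"]]
print(decisions)
```

In this sketch, heavy flows toward a single destination are treated as the likely attack traffic and keep being marked, while light flows are skipped once the router is past its threshold.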

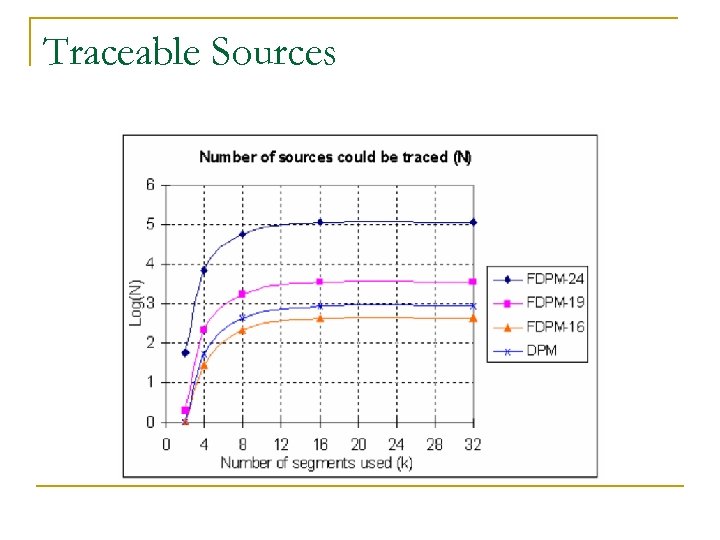

Traceable Sources

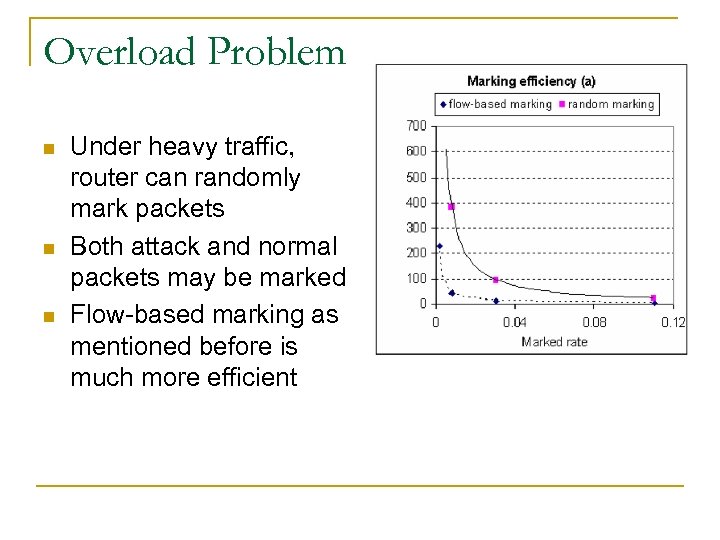

Overload Problem

- Under heavy traffic, router can randomly mark packets
- Both attack and normal packets may be marked
- Flow-based marking as mentioned before is much more efficient

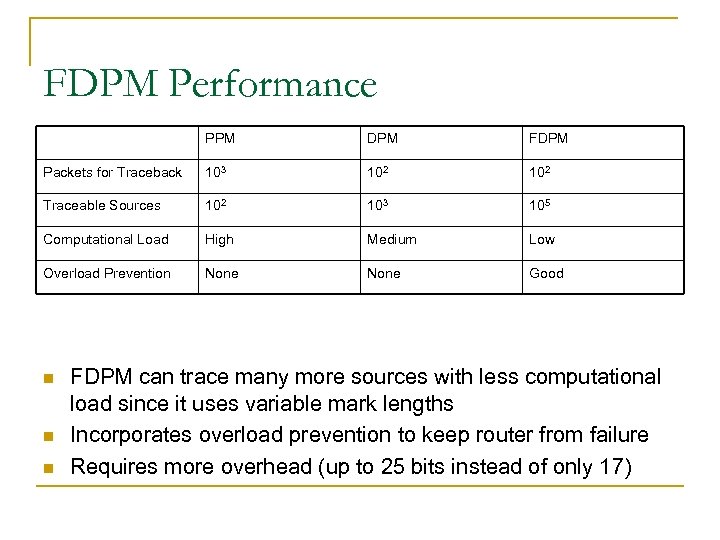

FDPM Performance

| | PPM | DPM | FDPM |
|---|---|---|---|
| Packets for Traceback | $10^3$ | $10^2$ | $10^2$ |
| Traceable Sources | $10^2$ | $10^3$ | $10^5$ |
| Computational Load | High | Medium | Low |
| Overload Prevention | None | None | Good |

- FDPM can trace many more sources with less computational load since it uses variable mark lengths
- Incorporates overload prevention to keep router from failure
- Requires more overhead (up to 25 bits instead of only 17)

HASH-BASED TRACEBACK: Source Path Isolation Engine (SPIE)

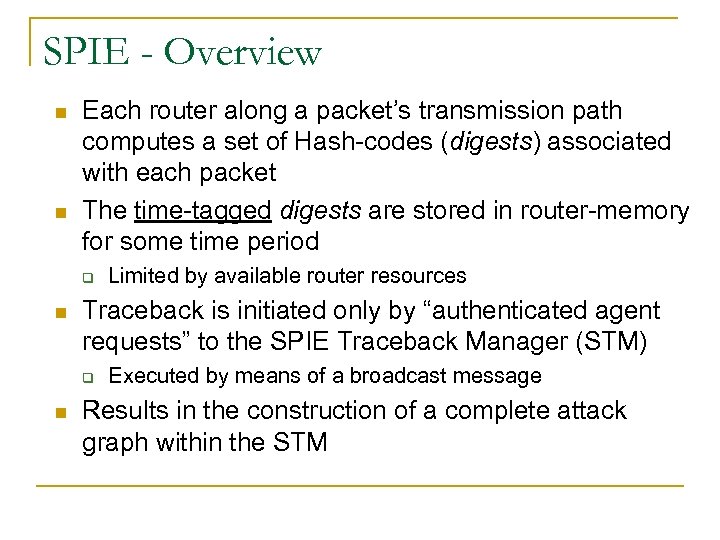

SPIE - Overview

- Each router along a packet’s transmission path computes a set of hash codes (digests) associated with each packet
- The time-tagged digests are stored in router memory for some time period
  - Limited by available router resources
- Traceback is initiated only by “authenticated agent requests” to the SPIE Traceback Manager (STM)
  - Executed by means of a broadcast message
- Results in the construction of a complete attack graph within the STM

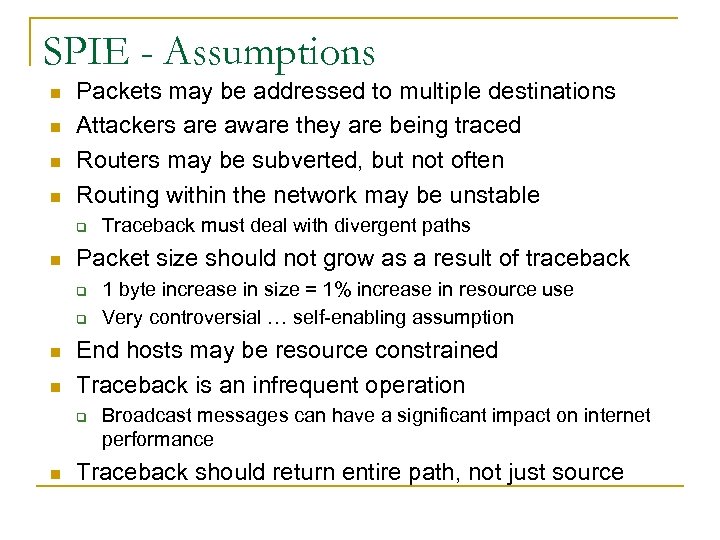

SPIE - Assumptions

- Packets may be addressed to multiple destinations
- Attackers are aware they are being traced
- Routers may be subverted, but not often
- Routing within the network may be unstable
  - Traceback must deal with divergent paths
- Packet size should not grow as a result of traceback
  - 1 byte increase in size = 1% increase in resource use
  - Very controversial … self-enabling assumption
- End hosts may be resource constrained
- Traceback is an infrequent operation
  - Broadcast messages can have a significant impact on internet performance
- Traceback should return entire path, not just source

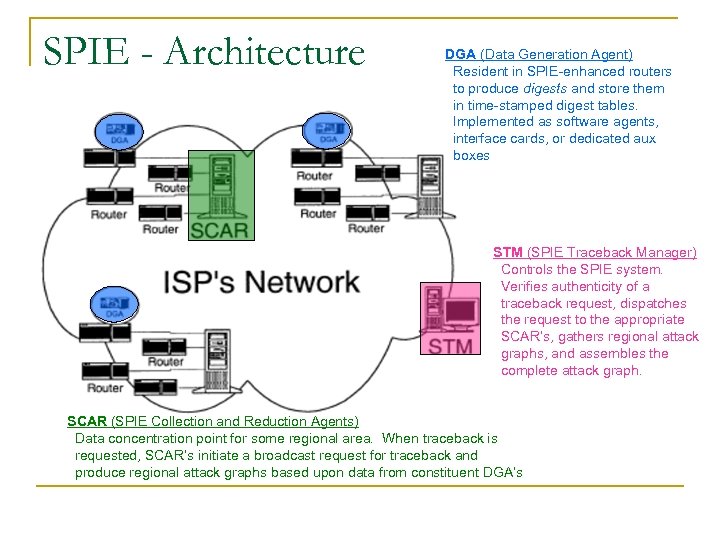

SPIE - Architecture

- DGA (Data Generation Agent): Resident in SPIE-enhanced routers to produce digests and store them in time-stamped digest tables. Implemented as software agents, interface cards, or dedicated aux boxes.
- STM (SPIE Traceback Manager): Controls the SPIE system. Verifies authenticity of a traceback request, dispatches the request to the appropriate SCARs, gathers regional attack graphs, and assembles the complete attack graph.
- SCAR (SPIE Collection and Reduction Agent): Data concentration point for some regional area. When traceback is requested, SCARs initiate a broadcast request for traceback and produce regional attack graphs based upon data from constituent DGAs.

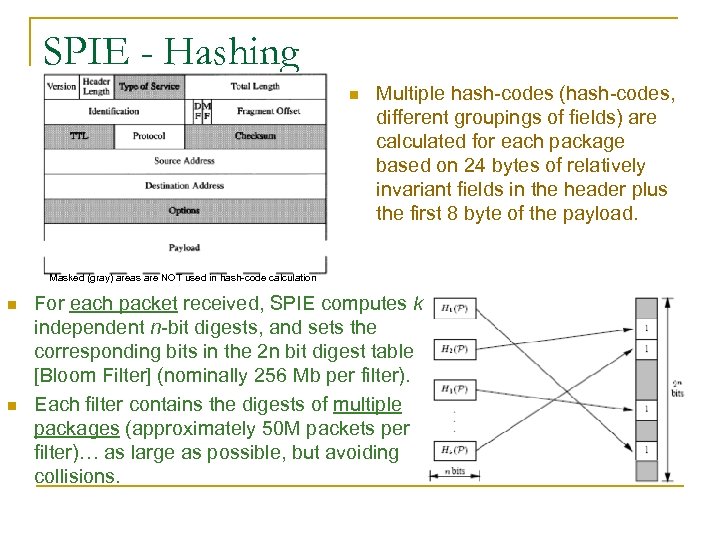

SPIE - Hashing

- Multiple hash codes (over different groupings of fields) are calculated for each packet, based on 24 bytes of relatively invariant fields in the header plus the first 8 bytes of the payload. Masked (gray) areas are NOT used in the hash-code calculation.
- For each packet received, SPIE computes k independent n-bit digests and sets the corresponding bits in the $2^n$-bit digest table [Bloom filter] (nominally 256 Mb per filter).
- Each filter contains the digests of multiple packets (approximately 50 M packets per filter): as large as possible, but avoiding collisions.
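The digest table described above behaves like a standard Bloom filter. This is a minimal sketch, assuming salted SHA-256 as a stand-in for SPIE's k hash functions and a small table (n = 16) for illustration; the class and method names are hypothetical.

```python
import hashlib

class DigestTable:
    """Bloom filter over packet digests: k salted hashes into a 2**n-bit table."""

    def __init__(self, n_bits=16, salts=(b"s0", b"s1", b"s2")):
        self.n_bits = n_bits
        self.salts = salts                       # one salt per hash function (k = len)
        self.bits = bytearray((1 << n_bits) // 8)

    def _positions(self, packet_prefix):
        for salt in self.salts:
            h = hashlib.sha256(salt + packet_prefix).digest()
            # keep the top n bits of the hash as the n-bit digest
            yield int.from_bytes(h[:4], "big") >> (32 - self.n_bits)

    def add(self, packet_prefix):
        """Record a packet by setting the k bits its digests point to."""
        for pos in self._positions(packet_prefix):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, packet_prefix):
        """True if all k bits are set (the packet was probably recorded)."""
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(packet_prefix))

table = DigestTable()
table.add(b"masked-header-and-first-8-payload-bytes")
print(table.might_contain(b"masked-header-and-first-8-payload-bytes"))
```

Only bit positions are stored, never the packet bytes themselves, so a lookup can answer "probably seen" or "definitely not seen" but cannot return packet content.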

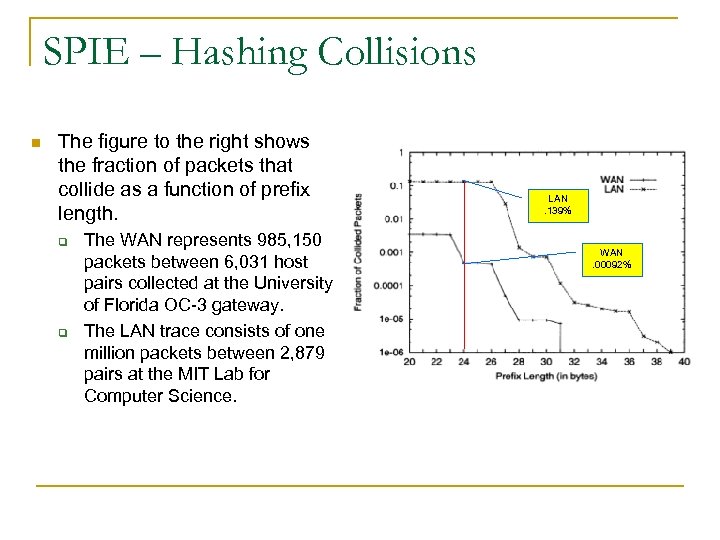

SPIE – Hashing Collisions

- The figure shows the fraction of packets that collide as a function of prefix length.
  - The WAN trace represents 985,150 packets between 6,031 host pairs collected at the University of Florida OC-3 gateway.
  - The LAN trace consists of one million packets between 2,879 host pairs at the MIT Lab for Computer Science.
- Collision rates: LAN 0.139%, WAN 0.00092%

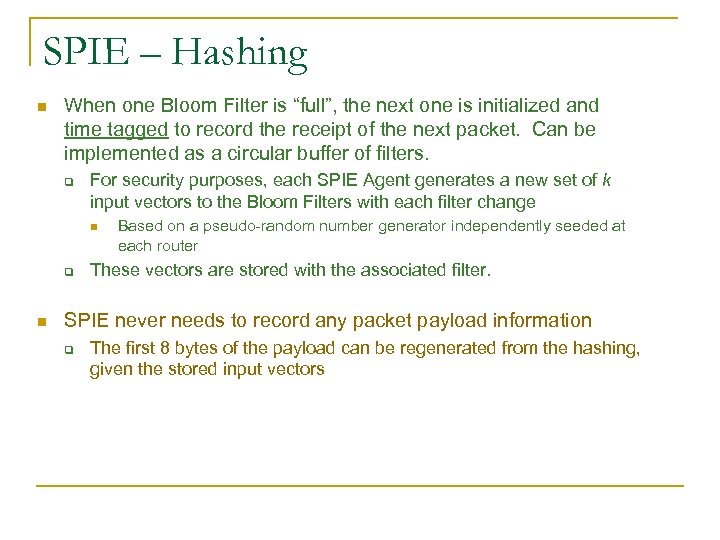

SPIE – Hashing

- When one Bloom filter is “full”, the next one is initialized and time-tagged to record the receipt of the next packet. Can be implemented as a circular buffer of filters.
  - For security purposes, each SPIE agent generates a new set of k input vectors to the Bloom filters with each filter change
    - Based on a pseudo-random number generator independently seeded at each router
  - These vectors are stored with the associated filter.
- SPIE never needs to record any packet payload information
  - The first 8 bytes of the payload can be regenerated from the hashing, given the stored input vectors
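The circular buffer of filters can be sketched with a bounded deque. This is a minimal sketch with several assumptions: `os.urandom` stands in for the per-router pseudo-random generator, a filter counts as "full" after a fixed number of packets (`capacity`), and a Python integer serves as the bit table; all names are hypothetical.

```python
import hashlib
import os
from collections import deque

class PagedDigestTable:
    """Ring of time-tagged Bloom filters, each with its own random salts."""

    def __init__(self, pages=4, capacity=1000, n_bits=16, k=3):
        self.capacity, self.n_bits, self.k = capacity, n_bits, k
        self.pages = deque(maxlen=pages)        # oldest page is dropped automatically
        self._new_page(start_time=0.0)

    def _new_page(self, start_time):
        self.pages.append({
            "start": start_time,                # time tag of this filter
            "salts": [os.urandom(8) for _ in range(self.k)],  # fresh per page
            "bits": 0,                          # Python int used as a bit set
            "count": 0,
        })

    def _mask(self, page, prefix):
        mask = 0
        for salt in page["salts"]:
            h = hashlib.sha256(salt + prefix).digest()
            mask |= 1 << (int.from_bytes(h[:4], "big") >> (32 - self.n_bits))
        return mask

    def record(self, prefix, now):
        """Store one packet digest, rotating to a new page when full."""
        if self.pages[-1]["count"] >= self.capacity:
            self._new_page(start_time=now)
        page = self.pages[-1]
        page["bits"] |= self._mask(page, prefix)
        page["count"] += 1

    def query(self, prefix, when):
        """Check the newest page that started at or before `when`."""
        for page in reversed(self.pages):
            if page["start"] <= when:
                mask = self._mask(page, prefix)
                return (page["bits"] & mask) == mask
        return False                            # that time window has been overwritten
```

Because the salts are stored with each page, a later query can recompute the same bit positions for that page only. Once a page is rotated out, packets from its time window can no longer be traced.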

SPIE – Traceback Processing

- The SPIE Traceback Manager controls the process
  - Cryptographically verifies the authenticity and integrity of the traceback request message
    - Authorized requester
    - Packet ID
    - Victim
    - Approximate time of attack
  - Dispatches the request to the appropriate SPIE Collection and Reduction Agents (SCARs)
    - SCARs poll their assigned Data Generation Agents (DGAs)
      - DGAs poll their assigned routers
  - If the response from the targeted SCARs indicates that other regional SCARs are involved in the trace, the STM sends another direct request
    - This loop continues until all branches terminate
  - Gathers the resulting attack graphs from the SCARs
  - Assembles them into a complete attack graph

SPIE – Traceback Processing (Cont)

- SPIE-enhanced routers hash the data received in the traceback request to determine whether or not the target packet passed through the router
  - Computes k digests using the appropriate input vectors
  - Checks for a “1” in each of the corresponding k locations of the digest table “near” the target time
- If ALL associated bits are set, it is highly likely that the packet was stored.
  - The filter may be saturated with too many packets, which creates a false positive.
    - This is controlled by limiting the number of digests in each filter, depending upon digest table size and the mean volume of packet traffic.
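The link between filter load and false positives can be estimated with the standard Bloom filter approximation $(1 - e^{-kn/m})^k$. This is a rough sketch, assuming "256 Mb" means $2^{28}$ bits and k = 3 hash functions; the slides do not state k, so that value is only illustrative.

```python
import math

def bloom_false_positive(m_bits, n_items, k):
    """Standard approximation (1 - exp(-k*n/m))**k for a Bloom filter."""
    return (1.0 - math.exp(-k * n_items / m_bits)) ** k

m = 256 * 2**20          # 256 Mbit table, as quoted for one filter
for n in (10_000_000, 25_000_000, 50_000_000):
    print(n, round(bloom_false_positive(m, n, k=3), 4))
```

With these assumed values the estimate at 50 M packets is on the order of several percent and drops quickly as fewer digests are stored per filter. This is the trade-off behind limiting the number of digests in each filter.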

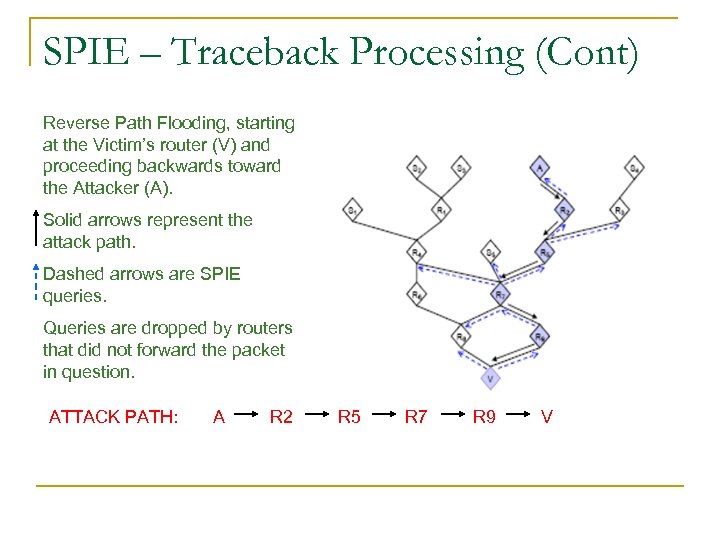

SPIE – Traceback Processing (Cont)

Reverse Path Flooding, starting at the victim’s router (V) and proceeding backwards toward the attacker (A). Solid arrows represent the attack path. Dashed arrows are SPIE queries. Queries are dropped by routers that did not forward the packet in question.

Attack path: A → R2 → R5 → R7 → R9 → V
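Reverse path flooding amounts to a breadth-first walk over upstream neighbors that keeps only routers reporting the packet. This is a minimal sketch: the topology below is hypothetical (only R2, R5, R7, and R9 come from the attack path above), and `saw_packet` stands in for a digest table lookup at each router.

```python
from collections import deque

def reverse_path_flood(victim_router, upstream, saw_packet):
    """Walk upstream from the victim's router, following only routers
    whose digest tables report the packet. Returns the attack graph edges."""
    edges = []
    visited = {victim_router}
    queue = deque([victim_router])
    while queue:
        router = queue.popleft()
        for neighbor in upstream.get(router, []):
            if neighbor in visited:
                continue
            visited.add(neighbor)
            if saw_packet(neighbor):           # otherwise the query is dropped here
                edges.append((neighbor, router))
                queue.append(neighbor)
    return edges

# Hypothetical topology: each router maps to its upstream neighbors.
upstream = {
    "R9": ["R7", "R8"],
    "R7": ["R5", "R6"],
    "R5": ["R2", "R3"],
    "R2": ["R1"],
}
on_path = {"R2", "R5", "R7", "R9"}             # routers that forwarded the packet
edges = reverse_path_flood("R9", upstream, lambda r: r in on_path)
print(edges)  # [('R7', 'R9'), ('R5', 'R7'), ('R2', 'R5')]
```

Routers R8, R6, R3, and R1 are queried but contribute no edges, which mirrors queries being dropped by routers that did not forward the packet.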

SPIE – Metrics

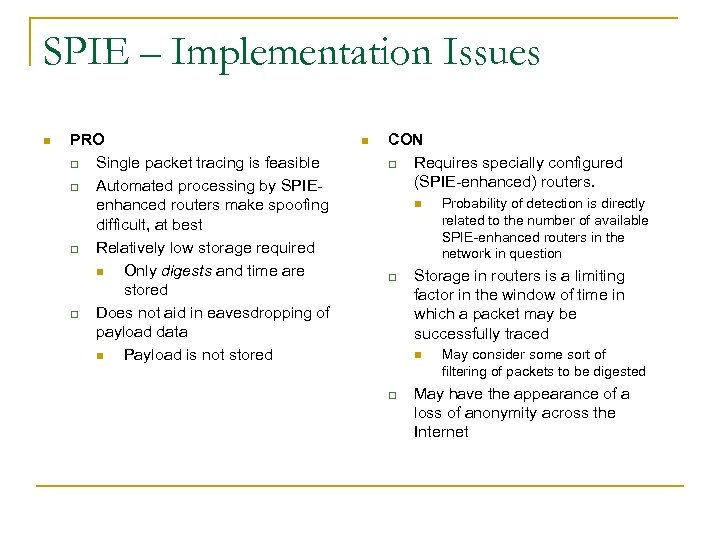

SPIE – Implementation Issues

- PRO
  - Single packet tracing is feasible
  - Automated processing by SPIE-enhanced routers makes spoofing difficult, at best
  - Relatively low storage required
    - Only digests and time are stored
  - Does not aid in eavesdropping of payload data
    - Payload is not stored
- CON
  - Requires specially configured (SPIE-enhanced) routers.
    - Probability of detection is directly related to the number of available SPIE-enhanced routers in the network in question
  - Storage in routers is a limiting factor in the window of time in which a packet may be successfully traced
    - May consider some sort of filtering of packets to be digested
  - May have the appearance of a loss of anonymity across the Internet

Conclusions

- DoS, worms, viruses continuously becoming more dangerous
- Attacks must be shut down quickly and be traceable
- Integrating traceback into next generation Internet is critical

Conclusions

- Flexible Deterministic Packet Marking
  - As fast as regular DPM, faster than PPM
  - Requires more overhead than DPM, but traces more sources with less computational load
- Hash-based Traceback
  - No packet overhead
  - Requires new, more capable routers

Conclusions

- Cooperation is required
  - Routers must be built to handle new tracing protocols
  - ISPs must comply with the protocols
  - Internet is no longer anonymous
- Some issues must still be solved
  - NATs
  - Collisions

References

- Belenky, A., Ansari, N. “IP Traceback with Deterministic Packet Marking”. IEEE Communications Letters, April 2003.
- Savage, S., et al. “Practical Network Support for IP Traceback”. Department of Computer Science, University of Washington.
- Snoeren, A., Partridge, C., et al. “Single-Packet IP Traceback”. IEEE/ACM Transactions on Networking, December 2002.
- Xiang, Y., Zhou, W. “A Defense System Against DDoS Attacks by Large-Scale IP Traceback”. IEEE, 2005.
