- Number of slides: 54

TQS - Teste e Qualidade de Software (Software Testing and Quality)
Test Case Design – Black Box Testing
João Pascoal Faria, jpf@fe.up.pt, www.fe.up.pt/~jpf
Teste e Qualidade de Software, Mestrado em Engenharia Informática, João Pascoal Faria, 2006

Index

- Introduction
- Black box testing techniques

Development of test cases

- Complete testing is impossible.
- Testing cannot guarantee the absence of faults.
- How do we select, from all possible test cases, a subset with a high chance of detecting most faults? With test case design strategies and techniques.
- Why: if we have a good test suite, we can have more confidence in a product that passes that test suite.

Quality attributes of “good” test cases

- Capability to find defects
  - Particularly defects with higher risk
  - Risk = frequency of failure (manifestation to users) × impact of failure
  - Cost (of post-release failure) ≈ risk
- Capability to exercise multiple aspects of the system under test
  - Reduces the number of test cases required and the overall cost
- Low cost
  - Development: specify, design, code
  - Execution
  - Result analysis: pass/fail analysis, defect localization
- Easy to maintain
  - Reduces whole life-cycle cost
  - Maintenance cost ≈ size of test artefacts
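The risk formula can be made concrete with a small sketch. The failure modes, frequencies and impact values below are invented purely for illustration; only the formula itself comes from the slide.

```python
# Hypothetical failure modes: (name, failures per 1000 uses, impact in cost units).
failure_modes = [
    ("typo in help text", 50.0, 1.0),
    ("wrong invoice total", 2.0, 500.0),
    ("crash on empty input", 10.0, 20.0),
]

def risk(frequency, impact):
    """Risk = frequency of failure * impact of failure."""
    return frequency * impact

# Rank failure modes so that test cases can target the riskiest ones first.
ranked = sorted(failure_modes, key=lambda m: risk(m[1], m[2]), reverse=True)
for name, frequency, impact in ranked:
    print(f"{name}: risk = {risk(frequency, impact)}")
```

Note that the most frequent failure is not necessarily the riskiest one: a rare failure with a high impact can dominate the ranking.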

Adequacy criteria

- Criteria to decide whether a given test suite is adequate, i.e., whether it gives us “enough” confidence that “most” of the defects are revealed
  - “enough” and “most” depend on the criticality of the product (or product component)
- In practice, reduced to coverage criteria
  - Requirements / specification coverage
    - At least one test case for each requirement
    - Cover all cases described in an informal specification
    - Cover all statements in a formal specification
  - Model coverage
    - State-transition coverage: cover all states, all transitions, etc.
    - Use-case and scenario coverage: at least one test case for each use-case or scenario
  - Code coverage
    - Cover 100% of methods, cover 90% of instructions, etc.

Adequacy criteria

- “Coverage-based testing strategy based on our finding:
  - For normal operational testing: specification-based, regardless of code coverage
  - For exceptional testing: code coverage is an important metrics for testing capability”

Xia Cai and Michael R. Lyu, “The Effect of Code Coverage on Fault Detection under Different Testing Profiles”, ICSE 2005 Workshop on Advances in Model-Based Software Testing (A-MOST)

How do we know what percentage of defects have been revealed?

- Goals that require this information:
  - “Find and fix 99% of the defects”
  - “Reduce the number of defects to less than 10 defects / KLOC”
- Difficulty: the kind of coverage we can measure (statements covered, requirements covered) is not the kind of coverage we would like to measure (defects found and missed)
- Techniques:
  - Past experience
  - Mutation testing (defect injection)
  - Static analysis of code samples
  - …
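To illustrate mutation testing (defect injection), here is a minimal sketch. The function under test, the three hand-written mutants and both test suites are assumptions made up for this example; real mutation tools generate mutants automatically.

```python
def abs_under_test(x):
    return -x if x < 0 else x

# Hand-written mutants: each injects one small defect into abs_under_test.
MUTANTS = {
    "flipped comparison": lambda x: -x if x > 0 else x,
    "shifted boundary": lambda x: -x if x < -1 else x,
    "dropped negation": lambda x: x,
}

def passes(func, cases):
    """True if func returns the expected output for every (input, expected) pair."""
    return all(func(x) == expected for x, expected in cases)

def mutation_score(cases):
    """Fraction of mutants detected ("killed") by the test cases."""
    killed = sum(1 for mutant in MUTANTS.values() if not passes(mutant, cases))
    return killed / len(MUTANTS)

weak_suite = [(0, 0), (100, 100)]
strong_suite = [(-10, 10), (-1, 1), (0, 0), (100, 100)]

# Both suites pass on the original code, yet they differ in detection capability.
assert passes(abs_under_test, weak_suite) and passes(abs_under_test, strong_suite)
print(mutation_score(weak_suite), mutation_score(strong_suite))
```

The fraction of injected defects that a suite detects gives a rough, indirect indication of the fraction of real defects it is likely to reveal.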

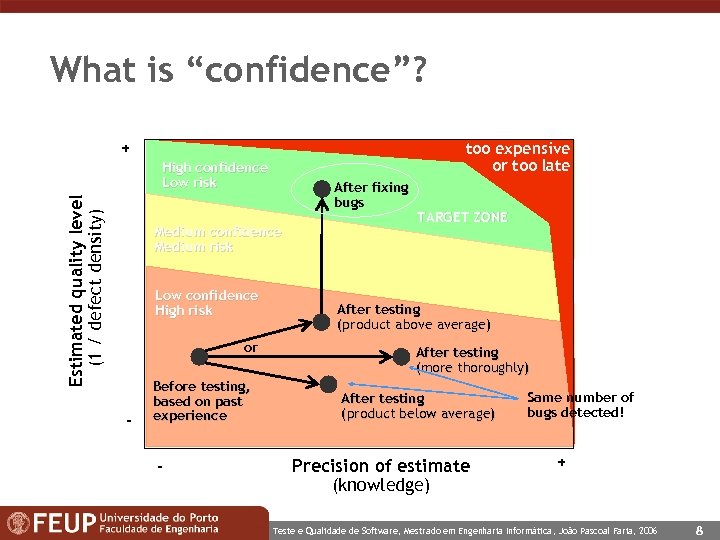

What is “confidence”?

[Figure: vertical axis "Estimated quality level (1 / defect density)"; horizontal axis "Precision of estimate (knowledge)", ranging from low confidence / high risk through medium confidence / medium risk to high confidence / low risk. Labelled points: "Before testing, based on past experience", "After testing (product above average)", "After testing (product below average)", "After testing (more thoroughly)", "After fixing bugs". Annotations: "TARGET ZONE", "too expensive or too late", "Same number of bugs detected!".]

Misperceived product quality

[Figure: axes "Test suite quality" (low to high) and "Product quality" (low to high); regions labelled "Many bugs found", "Some bugs found" and "Very few bugs found"; annotations "You may be here …" and "… and think you are here".]

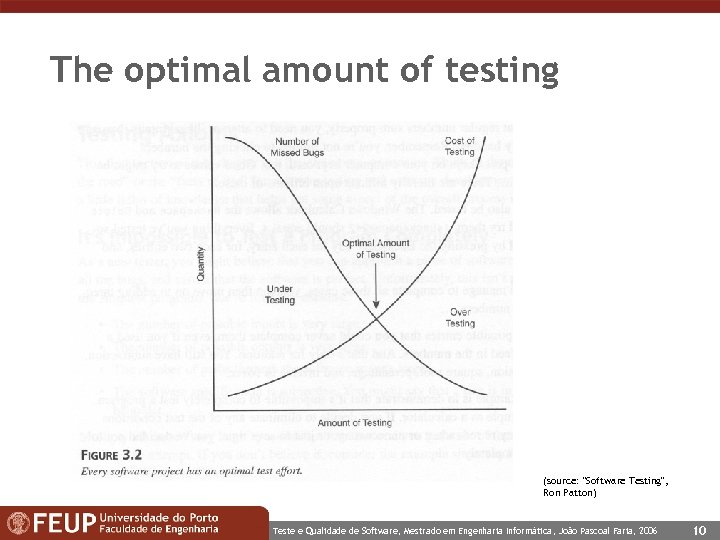

The optimal amount of testing (source: "Software Testing", Ron Patton)

Stop criteria

- Criteria to decide when to stop testing (and fixing bugs)
- I.e., when to stop the test-fix iterations and release the product

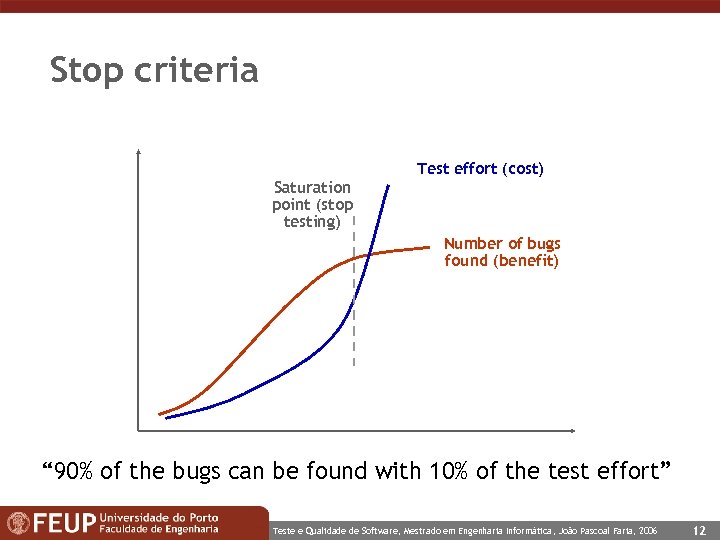

Stop criteria

[Figure: number of bugs found (benefit) plotted against test effort (cost), with a marked "Saturation point (stop testing)".]

- “90% of the bugs can be found with 10% of the test effort”

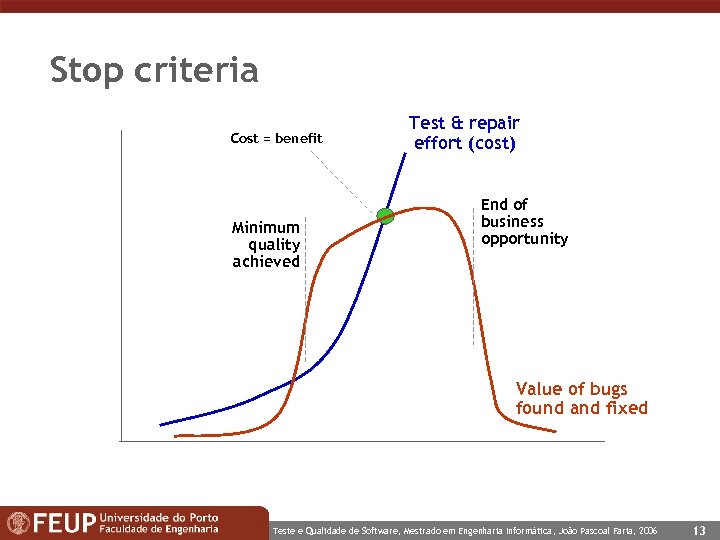

Stop criteria

[Figure labels: "Test & repair effort (cost)", "Value of bugs found and fixed", "Minimum quality achieved", "Cost = benefit", "End of business opportunity".]

Stop criteria

- What bugs should be fixed?
  - If the bugs found are 50% of the total number of bugs, it makes little difference whether 95% or 100% of the bugs found are fixed
  - Some bugs are very difficult to fix
    - Difficult to locate (example: random bugs)
    - Difficult to correct (example: third-party component)
  - Reduce overall risk
    - Fix all high severity bugs
    - Fix (the easiest) 95% of the medium severity bugs
    - Fix (the easiest) 70% of the low severity bugs

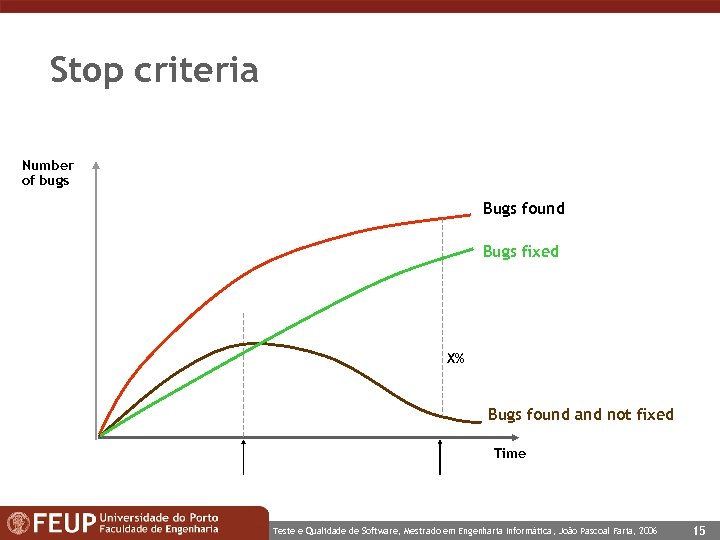

Stop criteria

[Figure: number of bugs over time, with curves "Bugs found" and "Bugs fixed"; the gap is labelled "X% Bugs found and not fixed".]

Improve versus assess

Different test techniques for different purposes:

- Find defects and improve the software under test
  - Defect testing
  - Choose test cases that have a higher probability of finding defects, particularly defects with higher risk, and that provide better hints on how to improve the system
    - Boundary cases
    - Frequent errors
    - Code coverage
    - Stress testing
    - Profiling
  - Focus here
- Assess software quality
  - Statistical testing
  - Estimate quality metrics (reliability, availability, efficiency, usability, …)
  - Test cases that represent typical use cases (based on a usage model/profile)

Test iterations: test to pass and test to fail (source: Ron Patton)

- First test iterations: test-to-pass
  - check if the software fundamentally works
  - with valid inputs
  - without stressing the system
- Subsequent test iterations: test-to-fail
  - try to "break" the system
  - with valid inputs but at the operational limits
  - with invalid inputs

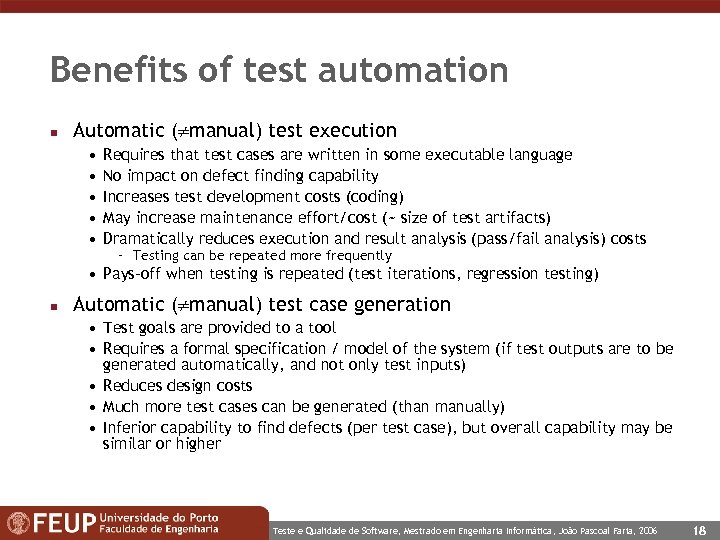

Benefits of test automation

- Automatic (vs. manual) test execution
  - Requires that test cases are written in some executable language
  - No impact on defect-finding capability
  - Increases test development costs (coding)
  - May increase maintenance effort/cost (~ size of test artifacts)
  - Dramatically reduces execution and result analysis (pass/fail analysis) costs
    - Testing can be repeated more frequently
  - Pays off when testing is repeated (test iterations, regression testing)
- Automatic (vs. manual) test case generation
  - Test goals are provided to a tool
  - Requires a formal specification / model of the system (if test outputs are to be generated automatically, and not only test inputs)
  - Reduces design costs
  - Many more test cases can be generated (than manually)
  - Inferior capability to find defects (per test case), but overall capability may be similar or higher

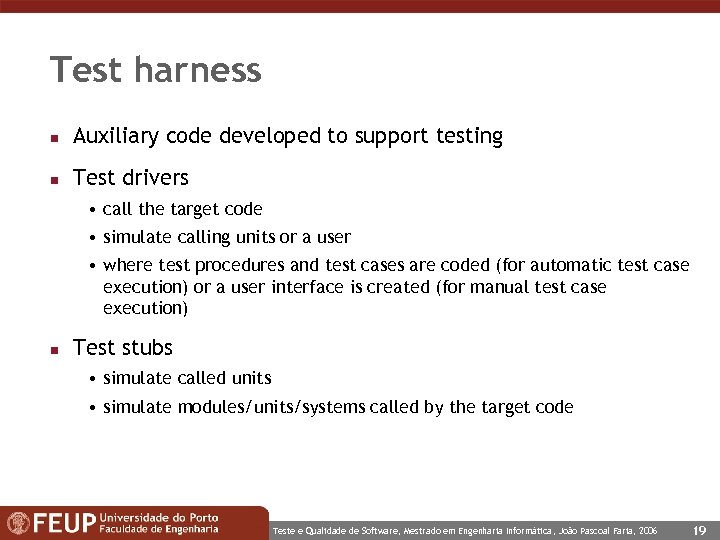

Test harness

- Auxiliary code developed to support testing
- Test drivers
  - call the target code
  - simulate calling units or a user
  - where test procedures and test cases are coded (for automatic test case execution) or a user interface is created (for manual test case execution)
- Test stubs
  - simulate called units
  - simulate modules/units/systems called by the target code
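A minimal sketch of a driver and a stub may help here. The target function `convert` and the exchange-rate service it calls are hypothetical names invented for this example; they are not part of the course material.

```python
class RateServiceStub:
    """Test stub: stands in for a (hypothetical) remote exchange-rate service."""

    def __init__(self, fixed_rate):
        self.fixed_rate = fixed_rate
        self.calls = []  # records how the target code used the stub

    def get_rate(self, currency):
        self.calls.append(currency)
        return self.fixed_rate

def convert(amount, currency, rate_service):
    """Target code (hypothetical): converts an amount using a called unit."""
    return round(amount * rate_service.get_rate(currency), 2)

def run_driver():
    """Test driver: calls the target code with coded test cases and checks results."""
    test_cases = [(10.0, "USD", 1.25, 12.5), (0.0, "USD", 1.25, 0.0)]
    results = []
    for amount, currency, rate, expected in test_cases:
        stub = RateServiceStub(rate)
        actual = convert(amount, currency, stub)
        results.append(actual == expected and stub.calls == [currency])
    return results

print(run_driver())
```

The stub replaces the called unit so that the target code can be tested in isolation, while the driver plays the role of the calling unit.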

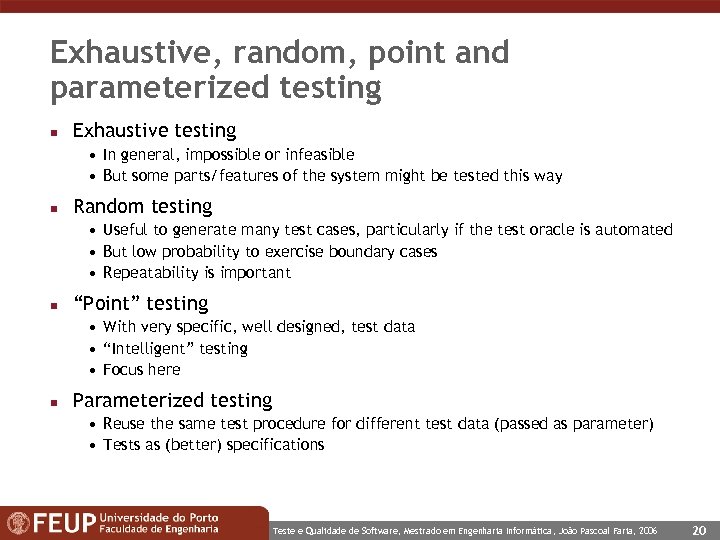

Exhaustive, random, point and parameterized testing

- Exhaustive testing
  - In general, impossible or infeasible
  - But some parts/features of the system might be tested this way
- Random testing
  - Useful to generate many test cases, particularly if the test oracle is automated
  - But low probability of exercising boundary cases
  - Repeatability is important
- “Point” testing
  - With very specific, well-designed test data
  - “Intelligent” testing
  - Focus here
- Parameterized testing
  - Reuse the same test procedure for different test data (passed as parameters)
  - Tests as (better) specifications
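The difference between point, random and parameterized testing can be sketched as follows. The function `my_abs` is a stand-in for real target code, and the seed value and input range are arbitrary choices made for this example.

```python
import random

def my_abs(x):
    """Function under test (a stand-in for the real target code)."""
    return -x if x < 0 else x

def check_abs(x):
    """Parameterized test procedure: same checks, different test data."""
    result = my_abs(x)
    assert result >= 0
    assert result == x or result == -x

# "Point" testing: specific, deliberately chosen data, including the boundary.
for x in (-10, -1, 0, 1, 100):
    check_abs(x)

# Random testing: a fixed seed makes the run repeatable, and the built-in
# abs() serves as an automated oracle.
rng = random.Random(2006)
random_inputs = [rng.randint(-10**6, 10**6) for _ in range(1000)]
for x in random_inputs:
    check_abs(x)
    assert my_abs(x) == abs(x)
```

With a range this wide, the random inputs are unlikely to hit the boundary values -1, 0 and 1, which is why the hand-picked point tests are still needed.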

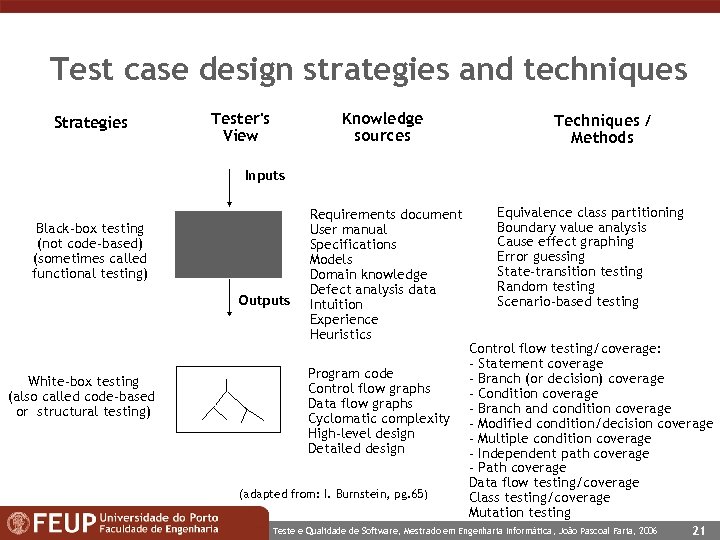

Test case design strategies and techniques (adapted from: I. Burnstein, pg. 65)

- Black-box testing (not code-based; sometimes called functional testing)
  - Tester's view: inputs and outputs
  - Knowledge sources: requirements document, user manual, specifications, models, domain knowledge, defect analysis data, intuition, experience, heuristics
  - Techniques / methods: equivalence class partitioning, boundary value analysis, cause-effect graphing, error guessing, state-transition testing, random testing, scenario-based testing
- White-box testing (also called code-based or structural testing)
  - Knowledge sources: program code, control flow graphs, data flow graphs, cyclomatic complexity, high-level design, detailed design
  - Techniques / methods:
    - Control flow testing/coverage: statement coverage, branch (or decision) coverage, condition coverage, branch and condition coverage, modified condition/decision coverage, multiple condition coverage, independent path coverage, path coverage
    - Data flow testing/coverage
    - Class testing/coverage
    - Mutation testing

Index

- Introduction
- Black box testing techniques

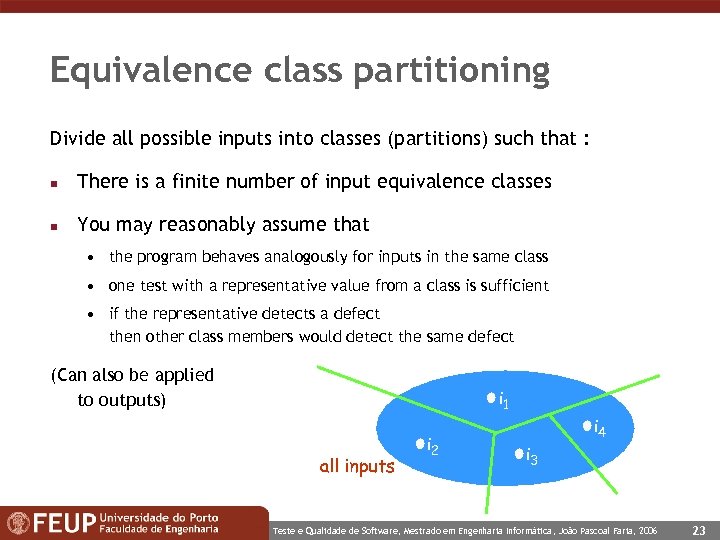

Equivalence class partitioning

Divide all possible inputs into classes (partitions) such that:

- There is a finite number of input equivalence classes
- You may reasonably assume that
  - the program behaves analogously for inputs in the same class
  - one test with a representative value from a class is sufficient
  - if the representative detects a defect, then other class members would detect the same defect

(Can also be applied to outputs)

[Figure: the set of all inputs divided into classes i1, i2, i3, i4]

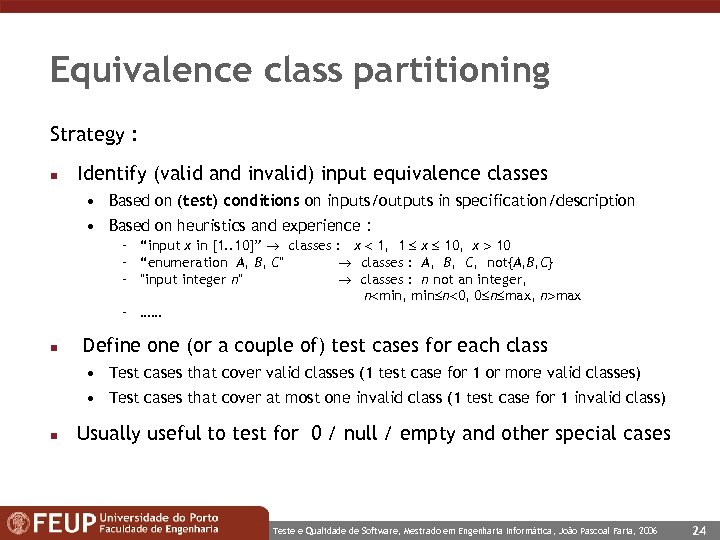

Equivalence class partitioning: strategy

- Identify (valid and invalid) input equivalence classes
  - Based on (test) conditions on inputs/outputs in the specification/description
  - Based on heuristics and experience:
    - “input x in [1..10]”, classes: x < 1, 1 ≤ x ≤ 10, x > 10
    - “enumeration A, B, C”, classes: A, B, C, not {A, B, C}
    - “input integer n”, classes: n not an integer, n < min, min ≤ n < 0, 0 ≤ n ≤ max, n > max
    - …
- Define one (or a couple of) test cases for each class
  - Test cases that cover valid classes (1 test case for 1 or more valid classes)
  - Test cases that cover at most one invalid class (1 test case for 1 invalid class)
- It is usually useful to test for 0 / null / empty and other special cases
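As a minimal sketch of the first heuristic, the function below assigns an input to its equivalence class for the condition “input x in [1..10]”. The function name and class labels are made up for this example, and the extra "not an integer" class is borrowed from the “input integer n” heuristic.

```python
def equivalence_class(x):
    """Map an input to its class for the condition "input x in [1..10]"."""
    if not isinstance(x, int) or isinstance(x, bool):
        return "invalid: not an integer"
    if x < 1:
        return "invalid: x < 1"
    if x > 10:
        return "invalid: x > 10"
    return "valid: 1 <= x <= 10"

# One representative per class; each invalid class gets its own test case.
representatives = [5, -3, 42, "abc"]
classes = {equivalence_class(x) for x in representatives}
print(sorted(classes))
```

Four test inputs are enough to touch every class once, on the assumption that the program treats all members of a class alike.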

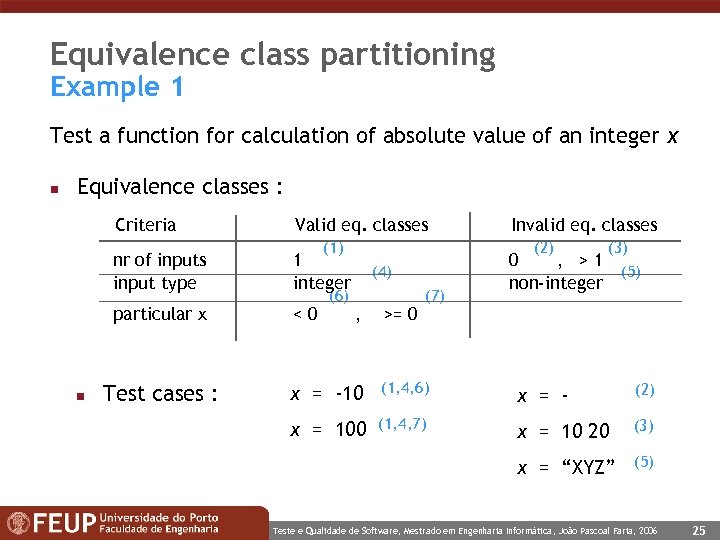

Equivalence class partitioning: Example 1

Test a function that calculates the absolute value of an integer x.

- Equivalence classes:

| Criteria | Valid eq. classes | Invalid eq. classes |
| --- | --- | --- |
| nr of inputs | 1 (1) | 0 (2), > 1 (3) |
| input type | integer (4) | non-integer (5) |
| particular x | < 0 (6), >= 0 (7) | |

- Test cases:

| Input | Classes covered |
| --- | --- |
| x = -10 | (1, 4, 6) |
| x = 100 | (1, 4, 7) |
| x = - (no input) | (2) |
| x = 10 20 | (3) |
| x = “XYZ” | (5) |
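The test cases of this example could be coded roughly as follows. `checked_abs` is a hypothetical wrapper, and reporting invalid inputs by raising `TypeError` is an assumption of this sketch; the class numbers in the comments are the ones used on the slide.

```python
def checked_abs(*args):
    """Hypothetical function under test: accepts exactly one integer."""
    if len(args) != 1:
        raise TypeError("expected exactly one input")
    (x,) = args
    if not isinstance(x, int) or isinstance(x, bool):
        raise TypeError("expected an integer")
    return -x if x < 0 else x

def raises_type_error(*args):
    """Helper: True if checked_abs rejects the given inputs."""
    try:
        checked_abs(*args)
    except TypeError:
        return True
    return False

# Valid test cases, covering classes (1, 4, 6) and (1, 4, 7).
assert checked_abs(-10) == 10
assert checked_abs(100) == 100

# Invalid test cases, one invalid class each: (2), (3) and (5).
assert raises_type_error()
assert raises_type_error(10, 20)
assert raises_type_error("XYZ")
```

Each valid test case covers several valid classes at once, while every invalid class gets a test case of its own so that one rejection cannot mask another.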

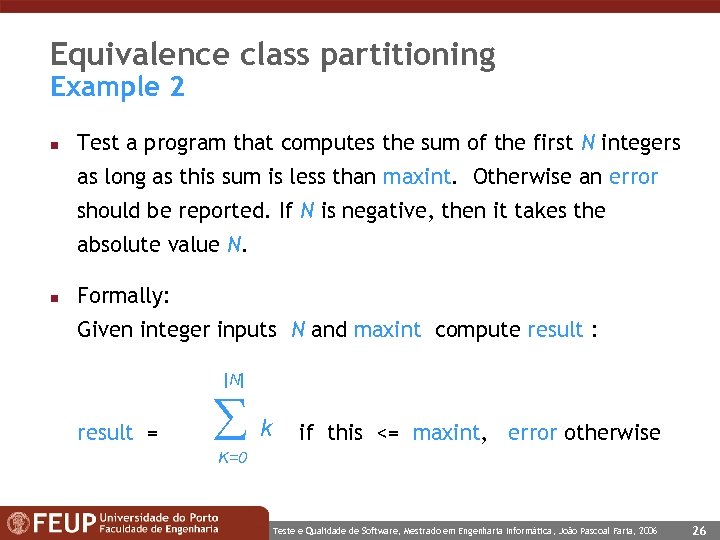

Equivalence class partitioning: Example 2

- Test a program that computes the sum of the first N integers as long as this sum does not exceed maxint. Otherwise an error should be reported. If N is negative, its absolute value |N| is used.
- Formally: given integer inputs N and maxint, compute result:

$$\text{result} = \sum_{k=0}^{|N|} k \quad \text{if this sum} \le \text{maxint}, \quad \text{error otherwise}$$
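A possible program matching this specification is sketched below. The name `bounded_sum`, the argument order, and the choice to report errors by returning the string "error" are assumptions made for this example.

```python
def bounded_sum(n, maxint):
    """Return 0 + 1 + ... + |n|, or "error" if the inputs are not integers
    or the sum exceeds maxint."""
    for value in (n, maxint):
        if not isinstance(value, int) or isinstance(value, bool):
            return "error"
    # Closed form of the sum 0 + 1 + ... + |n|.
    total = abs(n) * (abs(n) + 1) // 2
    return total if total <= maxint else "error"

print(bounded_sum(10, 100))
print(bounded_sum(-10, 100))
print(bounded_sum(10, 10))
```

The closed form |N|(|N|+1)/2 avoids a loop; a loop-based version would be an equally valid instance of the specification.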

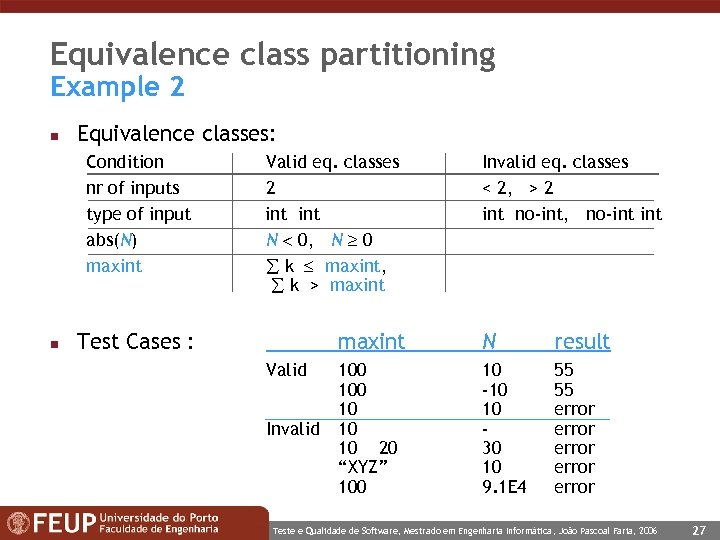

Equivalence class partitioning: Example 2

- Equivalence classes:

| Condition | Valid eq. classes | Invalid eq. classes |
| --- | --- | --- |
| nr of inputs | 2 | < 2, > 2 |
| type of input | int int | int no-int, no-int int |
| abs(N) | N < 0, N ≥ 0 | |
| maxint | Σk ≤ maxint, Σk > maxint | |

- Test cases:

| | maxint | N | result |
| --- | --- | --- | --- |
| Valid | 100 | 10 | 55 |
| | 100 | -10 | 55 |
| | 10 | 10 | error |
| Invalid | 10 | - | error |
| | 10 20 30 | | error |
| | “XYZ” | 10 | error |
| | 100 | 9.1E4 | error |

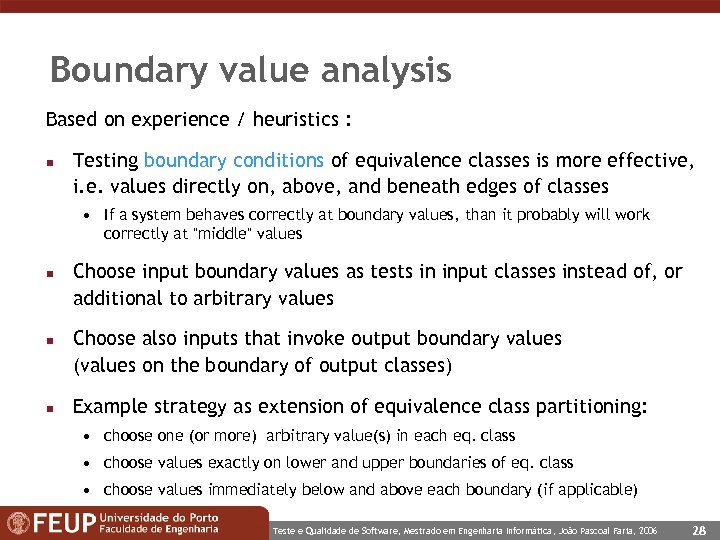

Boundary value analysis

Based on experience / heuristics:

- Testing boundary conditions of equivalence classes is more effective, i.e., values directly on, above, and beneath the edges of classes
  - If a system behaves correctly at boundary values, then it will probably work correctly at "middle" values
- Choose input boundary values as tests in input classes instead of, or in addition to, arbitrary values
- Also choose inputs that invoke output boundary values (values on the boundary of output classes)
- Example strategy, as an extension of equivalence class partitioning:
  - choose one (or more) arbitrary value(s) in each eq. class
  - choose values exactly on the lower and upper boundaries of each eq. class
  - choose values immediately below and above each boundary (if applicable)
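The example strategy can be sketched for an integer equivalence class given as a closed range. The helper name `boundary_values` is invented for this illustration.

```python
def boundary_values(lower, upper):
    """Boundary test inputs for an integer equivalence class [lower, upper]:
    values on each boundary and immediately below and above it."""
    candidates = [lower - 1, lower, lower + 1, upper - 1, upper, upper + 1]
    # Remove duplicates (e.g. for very narrow classes) while keeping order.
    return list(dict.fromkeys(candidates))

print(boundary_values(1, 10))  # [0, 1, 2, 9, 10, 11]
```

For the class 1 ≤ x ≤ 10 this yields six inputs, two of which (0 and 11) fall into the neighbouring invalid classes.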

Boundary value analysis

“Bugs lurk in corners and congregate at boundaries.” [Boris Beizer, "Software testing techniques"]

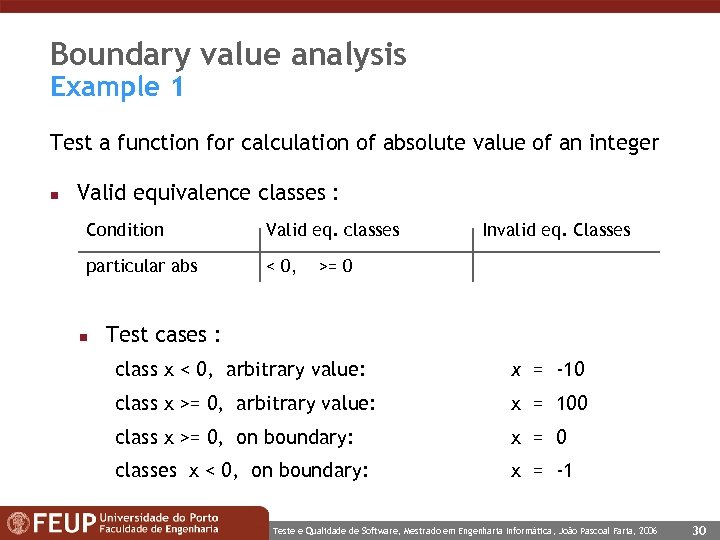

Boundary value analysis: Example 1

Test a function that calculates the absolute value of an integer.

- Valid equivalence classes:

| Condition | Valid eq. classes | Invalid eq. classes |
| --- | --- | --- |
| particular abs | < 0, >= 0 | |

- Test cases:
  - class x < 0, arbitrary value: x = -10
  - class x >= 0, arbitrary value: x = 100
  - class x >= 0, on boundary: x = 0
  - class x < 0, on boundary: x = -1

Boundary value analysis: a self-assessment test 1 [Myers]

“A program reads three integer values. The three values are interpreted as representing the lengths of the sides of a triangle. The program prints a message that states whether the triangle is scalene (all lengths are different), isosceles (two lengths are equal), or equilateral (all lengths are equal).”

Write a set of test cases to test this program.

- Inputs: l1, l2, l3, integer, li > 0, li < lj + lk
- Output: error, scalene, isosceles or equilateral

Boundary value analysis: a self-assessment test 1 [Myers]

Test cases for valid inputs:

1. valid scalene triangle?
2. valid equilateral triangle?
3. valid isosceles triangle?
4. 3 permutations of previous?

Test cases for invalid inputs:

5. side = 0?
6. negative side?
7. one side is sum of others?
8. 3 permutations of previous?
9. one side larger than sum of others?
10. 3 permutations of previous?
11. all sides = 0?
12. non-integer input?
13. wrong number of values?

[Figure: input space with axes l1, l2, l3, with cases 5 and 11 marked.]

“Bugs lurk in corners and congregate at boundaries.”
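The checklist can be turned into executable test cases. The classifier below is one possible implementation written only so that the tests have something to run against; its name, its use of the return value "error", and the concrete side lengths are assumptions of this sketch.

```python
def classify_triangle(*sides):
    """Return "scalene", "isosceles", "equilateral" or "error"."""
    if len(sides) != 3:
        return "error"  # wrong number of values
    if any(not isinstance(s, int) or isinstance(s, bool) for s in sides):
        return "error"  # non-integer input
    a, b, c = sorted(sides)
    if a <= 0 or a + b <= c:
        return "error"  # zero or negative side, or li >= lj + lk
    if a == c:
        return "equilateral"
    if a == b or b == c:
        return "isosceles"
    return "scalene"

# Comments give the checklist item that each test case addresses.
test_cases = [
    ((3, 4, 5), "scalene"),       # 1
    ((2, 2, 2), "equilateral"),   # 2
    ((2, 2, 3), "isosceles"),     # 3
    ((2, 3, 2), "isosceles"),     # 4
    ((3, 2, 2), "isosceles"),     # 4
    ((0, 4, 5), "error"),         # 5
    ((-3, 4, 5), "error"),        # 6
    ((1, 2, 3), "error"),         # 7
    ((3, 1, 2), "error"),         # 8
    ((2, 3, 1), "error"),         # 8
    ((1, 2, 4), "error"),         # 9
    ((4, 1, 2), "error"),         # 10
    ((2, 4, 1), "error"),         # 10
    ((0, 0, 0), "error"),         # 11
    ((2.5, 3, 4), "error"),       # 12
    ((3, 4), "error"),            # 13
]
failures = [(s, e) for s, e in test_cases if classify_triangle(*s) != e]
print(failures)
```

Sorting the sides first means a single comparison covers all three permutations of the triangle inequality, which is exactly the kind of shortcut the permutation test cases are meant to verify.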

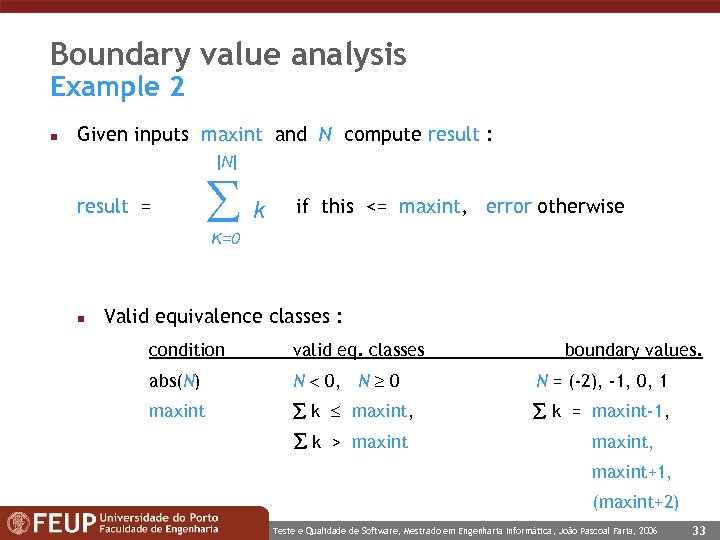

Boundary value analysis: Example 2

- Given inputs maxint and N, compute result:

$$\text{result} = \begin{cases} \sum_{k=0}^{|N|} k & \text{if this} \le \text{maxint} \\ \text{error} & \text{otherwise} \end{cases}$$

- Valid equivalence classes:

| condition | valid eq. classes | boundary values |
|---|---|---|
| abs(N) | N < 0, N ≥ 0 | N = (-2), -1, 0, 1 |
| maxint | Σk ≤ maxint, Σk > maxint | Σk = maxint-1, maxint, maxint+1, (maxint+2) |

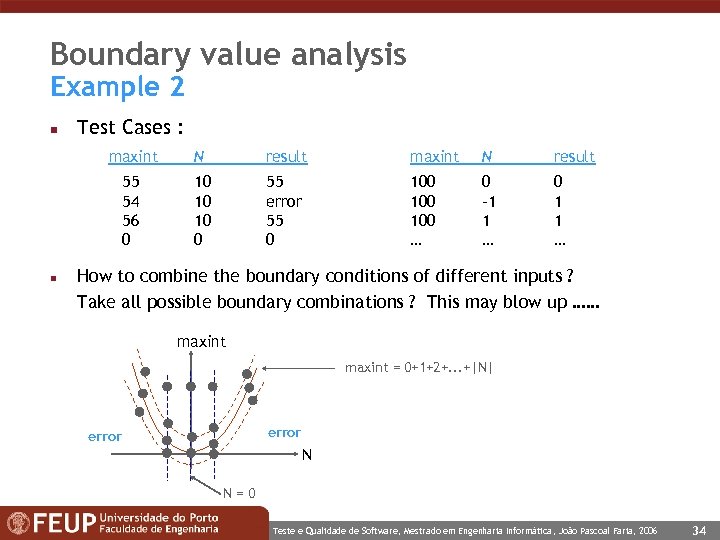

Boundary value analysis: Example 2

- Test cases:

| maxint | N | result |
|---|---|---|
| 55 | 10 | 55 |
| 54 | 10 | error |
| 56 | 10 | 55 |
| 0 | 0 | 0 |
| 100 | 0 | 0 |
| 100 | -1 | 1 |
| 100 | 1 | 1 |
| … | … | … |

How to combine the boundary conditions of different inputs? Take all possible boundary combinations? This may blow up ...

[Figure: N versus maxint, showing the curve maxint = 0+1+2+...+|N|, the error region, and the line N = 0.]
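The boundary test cases of Example 2 can be written as a small executable table. This is a minimal Python sketch under assumptions: the function name `bounded_sum` and the use of the string `"error"` as the error outcome are illustrative choices, not part of the slides.

```python
def bounded_sum(maxint, n):
    """Return 0 + 1 + ... + |n|, or 'error' if the sum exceeds maxint."""
    total = sum(range(abs(n) + 1))
    return total if total <= maxint else "error"


# (maxint, N, expected result): values on and around both boundaries.
boundary_cases = [
    (55, 10, 55),       # sum equals maxint
    (54, 10, "error"),  # sum is maxint + 1
    (56, 10, 55),       # sum is maxint - 1
    (0, 0, 0),          # smallest |N| and smallest usable maxint
    (100, 0, 0),        # N on the boundary between N < 0 and N >= 0
    (100, -1, 1),       # just below the N boundary
    (100, 1, 1),        # just above the N boundary
]

failed = [c for c in boundary_cases if bounded_sum(c[0], c[1]) != c[2]]
```

Note that the cases vary one boundary at a time while keeping the other input at a safe value, which is one way to avoid taking all boundary combinations.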

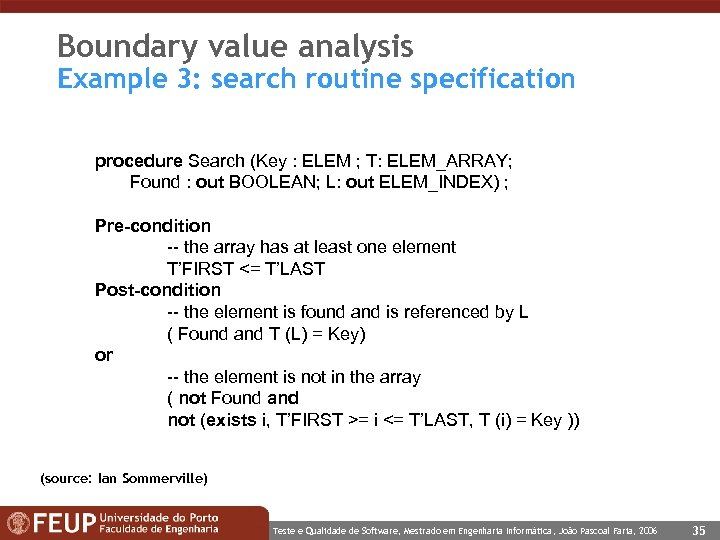

Boundary value analysis: Example 3: search routine specification

    procedure Search (Key : ELEM; T : ELEM_ARRAY; Found : out BOOLEAN; L : out ELEM_INDEX);

    Pre-condition
        -- the array has at least one element
        T'FIRST <= T'LAST

    Post-condition
        -- the element is found and is referenced by L
        (Found and T(L) = Key)
        or
        -- the element is not in the array
        (not Found and not (exists i, T'FIRST <= i <= T'LAST, T(i) = Key))

(source: Ian Sommerville)

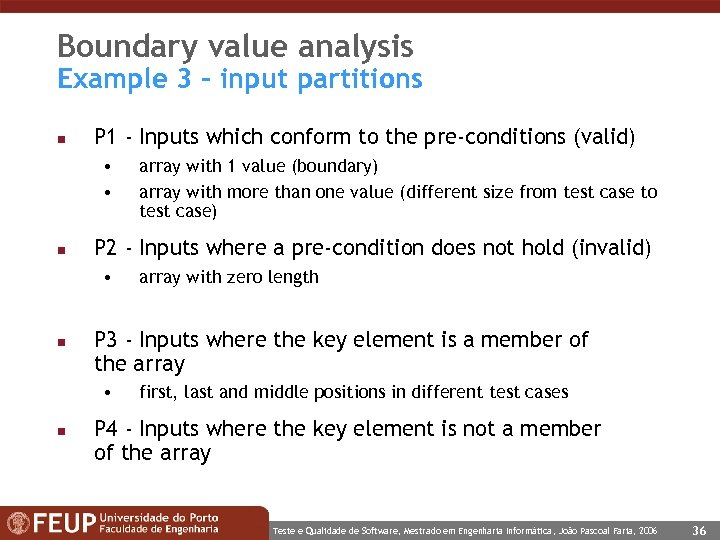

Boundary value analysis: Example 3 - input partitions

- P1 - Inputs which conform to the pre-conditions (valid)
  - array with 1 value (boundary)
  - array with more than one value (different size from test case to test case)
- P2 - Inputs where a pre-condition does not hold (invalid)
  - array with zero length
- P3 - Inputs where the key element is a member of the array
  - first, last and middle positions in different test cases
- P4 - Inputs where the key element is not a member of the array

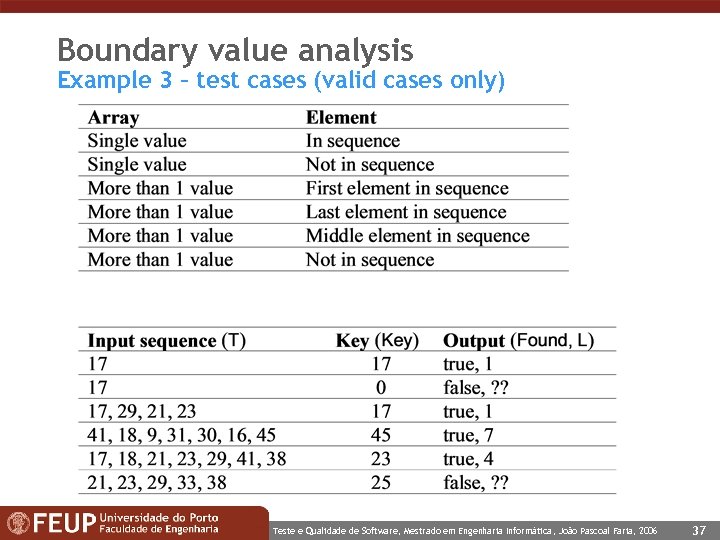

Boundary value analysis: Example 3 – test cases (valid cases only)
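A minimal Python sketch of valid test cases covering partitions P1, P3 and P4. The `search` function, its 1-based index (mimicking T'FIRST = 1) and all array values are assumptions chosen for illustration; they are not taken from the slide.

```python
def search(key, t):
    """Linear search; returns (found, index) with a 1-based index."""
    if len(t) == 0:
        # Pre-condition of the specification: at least one element.
        raise ValueError("pre-condition violated: empty array")
    for i, elem in enumerate(t, start=1):
        if elem == key:
            return True, i
    return False, None


# (array, key, expected): each row targets one partition or boundary.
valid_cases = [
    ([17], 17, (True, 1)),                            # 1 value, key present
    ([17], 0, (False, None)),                         # 1 value, key absent
    ([17, 29, 21, 23], 17, (True, 1)),                # key in first position
    ([41, 18, 9, 31, 30, 16, 45], 45, (True, 7)),     # key in last position
    ([17, 18, 21, 23, 29, 41, 38], 23, (True, 4)),    # key in middle position
    ([21, 23, 29, 33, 38], 25, (False, None)),        # several values, key absent
]

failed = [c for c in valid_cases if search(c[1], c[0]) != c[2]]
```

The arrays deliberately differ in size from case to case, as the partition list suggests; the invalid partition P2 (zero-length array) would be a separate test expecting the `ValueError`.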

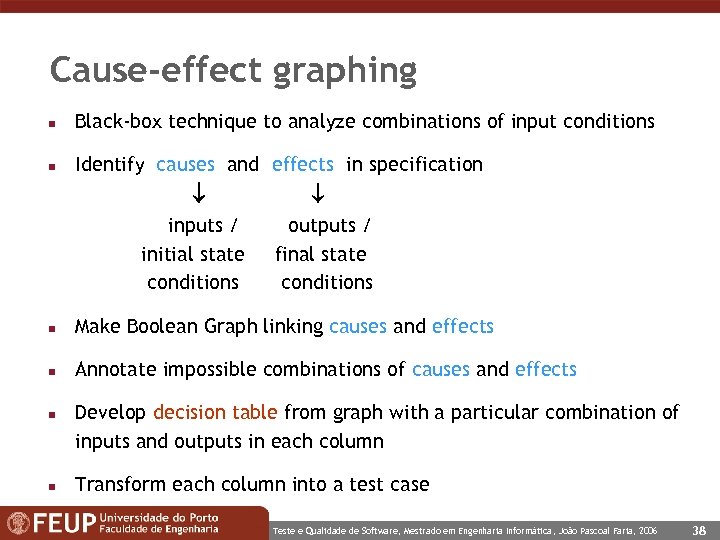

Cause-effect graphing

- Black-box technique to analyze combinations of input conditions
- Identify causes and effects in the specification
  - causes: inputs / initial state conditions
  - effects: outputs / final state conditions
- Make a Boolean graph linking causes and effects
- Annotate impossible combinations of causes and effects
- Develop a decision table from the graph, with a particular combination of inputs and outputs in each column
- Transform each column into a test case

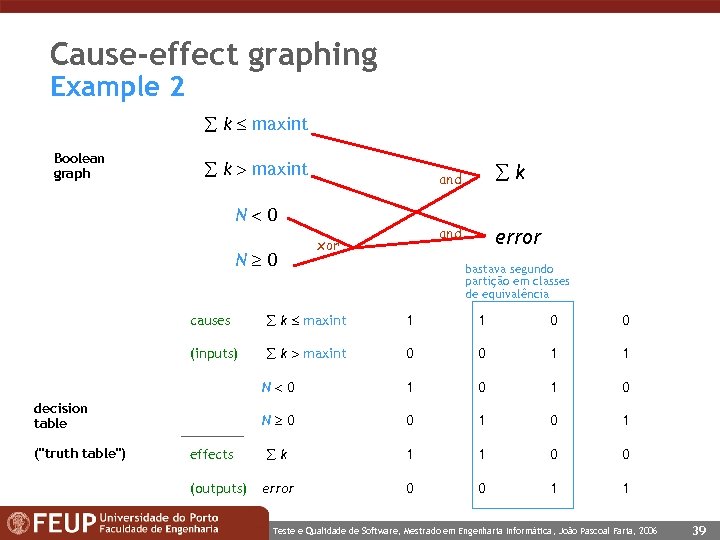

Cause-effect graphing: Example 2

[Boolean graph: the causes Σk ≤ maxint, Σk > maxint and the sign of N are linked through "and" and "xor" nodes to the effects Σk and error.]

(would suffice according to equivalence class partitioning)

Decision table ("truth table"):

- causes (inputs)
  - Σk ≤ maxint: 1 1 0 0
  - Σk > maxint: 0 0 1 1
  - N < 0: 1 0
  - N ≥ 0: 0 1
- effects (outputs)
  - Σk: 1 1 0 0
  - error: 0 0 1 1
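The route from causes to decision table columns can be sketched in Python for Example 2. This is an illustration under assumptions: the cause names, the `feasible` constraint (exactly one cause of each pair holds) and the `effects` function are a reading of the example, not a transcription of the slide's graph.

```python
from itertools import product

CAUSES = ["sum <= maxint", "sum > maxint", "N < 0", "N >= 0"]


def feasible(c):
    """Annotate impossible combinations: each pair is mutually exclusive."""
    return (c["sum <= maxint"] != c["sum > maxint"]) and (c["N < 0"] != c["N >= 0"])


def effects(c):
    """Boolean links from causes to effects (assumed for this sketch)."""
    return {"result": c["sum <= maxint"], "error": c["sum > maxint"]}


def decision_table():
    """Enumerate all cause combinations and keep the feasible columns."""
    columns = []
    for values in product([True, False], repeat=len(CAUSES)):
        causes = dict(zip(CAUSES, values))
        if feasible(causes):
            columns.append((causes, effects(causes)))
    return columns


table = decision_table()  # each column becomes one test case
```

Of the 16 raw combinations only 4 survive the feasibility check, which shows how annotating impossible combinations keeps the table small.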

Cause-effect graphing

- Systematic method for generating test cases representing combinations of conditions
- Unlike equivalence class partitioning, a test case is defined for each possible combination of conditions
- Drawback: combinatorial explosion of the number of possible combinations

Independent effect testing

- With multiple input parameters
- Show that each input parameter affects the outcome (the value of at least one output parameter) independently of the other input parameters
  - Need at most two test cases for each parameter, with different outcomes, different values of that parameter, and equal values of all the other parameters
- Avoids combinatorial explosion of the number of test cases
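A minimal Python sketch of the idea: for each parameter, pair a base test case with one that changes only that parameter, and check that the outcome differs. The function under test (`grant_access`) and its three Boolean parameters are hypothetical.

```python
def grant_access(pin_ok, card_valid, balance_ok):
    """Hypothetical function under test."""
    return pin_ok and card_valid and balance_ok


def independent_effect_pairs(func, base, alternatives):
    """For each parameter, build (base, changed, outcome_differs)."""
    pairs = {}
    for name, alt in alternatives.items():
        changed = dict(base, **{name: alt})  # only this parameter differs
        pairs[name] = (base, changed, func(**base) != func(**changed))
    return pairs


base = {"pin_ok": True, "card_valid": True, "balance_ok": True}
alternatives = {"pin_ok": False, "card_valid": False, "balance_ok": False}
pairs = independent_effect_pairs(grant_access, base, alternatives)

# Distinct test cases needed: the shared base case plus one per parameter.
distinct_cases = 1 + len(pairs)
```

Here 4 test cases show the independent effect of all three parameters, against 8 for every combination of Boolean inputs.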

Domain partitioning

- Partition the domain of each input or output parameter into equivalence classes
  - Boolean type: true, false
  - Enumeration type: value 1, value 2, … (if few values)
  - Integer type: <0, 0, >0
  - Sequence type (array, string, list): empty, not-empty, with/without repetitions, …
  - Set type: empty, not-empty
  - Etc.
- Special case of equivalence class partitioning
  - General case: analyze combinations of parameters

Error guessing

- Just ‘guess’ where the errors are ...
- Intuition and experience of tester
- Ad hoc, not really a technique
- But can be quite effective
- Strategy:
  - Make a list of possible errors or error-prone situations (often related to boundary conditions)
  - Write test cases based on this list

Risk based testing

- More sophisticated ‘error guessing’
- Try to identify critical parts of the program (high risk code sections):
  - parts with unclear specifications
  - developed by junior programmer while his wife was pregnant ...
  - complex code (white box?): measure code complexity with available tools (McCabe, Logiscope, …)
- High-risk code will be more thoroughly tested (or be rewritten immediately ...)

Testing for race conditions

- Also called bad timing and concurrency problems
- Problems that occur in multitasking systems (with multiple threads or processes)
- A kind of boundary analysis related to the dynamic views of a system (state-transition view and process-communication view)
- Examples of situations that may expose race conditions:
  - problems with shared resources:
    - saving and loading the same document at the same time with different programs
    - sharing the same printer, communications port or other peripheral
    - using different programs (or instances of a program) to simultaneously access a common database
  - problems with interruptions:
    - pressing keys or sending mouse clicks while the software is loading or changing states
  - other problems:
    - shutting down or starting two or more instances of the software at the same time
- Knowledge used: dynamic models (state-transition models, process models)

(source: Ron Patton)

Random testing

- Input values are (pseudo) randomly generated
- (-) Need some automatic way to check the outputs (for functional / correctness testing)
  - By comparing the actual output with the output produced by a "trusted" implementation
  - By checking the result with some kind of procedure or expression
    - Sometimes it is much easier to check a result than to actually compute it
    - Example: sorting is O(n log n) to perform but O(n) to check (the final array is sorted and has the same elements as the initial array)
- (+) Many test cases may be generated
- (+) Repeatable (*): pseudo-random generators produce the same sequence of values when started with the same initial value
  - (*) essential to check if a bug was corrected
- (-) May not cover special cases that are discovered by "manual" techniques
  - Combine with "manual" generation of test cases for boundary values
- (+) Particularly adequate for performance testing (it is not necessary to check the correctness of outputs)
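The sorting example can be sketched in Python with a seeded generator and a cheap result checker. The function names, the seed value and the input ranges are arbitrary assumptions for illustration.

```python
import random
from collections import Counter


def is_sorted_permutation(original, result):
    """Oracle: result is in order and has the same elements as original."""
    ordered = all(result[i] <= result[i + 1] for i in range(len(result) - 1))
    return ordered and Counter(original) == Counter(result)


def random_test(sort_func, runs=200, seed=2006):
    """Return the first failing input, or None if every run passes."""
    rng = random.Random(seed)  # same seed, same sequence of test inputs
    for _ in range(runs):
        data = [rng.randint(-100, 100) for _ in range(rng.randint(0, 20))]
        if not is_sorted_permutation(data, sort_func(list(data))):
            return data
    return None


def buggy_sort(xs):
    """Deliberately wrong: silently drops repeated elements."""
    return sorted(set(xs))


ok = random_test(sorted)
first_failure = random_test(buggy_sort)
```

Because the generator is seeded, rerunning `random_test(buggy_sort)` reproduces the same failing input, which is what makes it possible to confirm later that the bug was corrected.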

Requirements based testing

- You have a list (or tree) of requirements (or features or properties)
- Define at least one test case for each requirement
- Build and maintain a (tests to requirements) traceability matrix
- Particularly adequate for system and acceptance testing, but applicable in other situations
- Hints:
  - Write test cases as specifications by examples
  - Write distinctive test cases (examples)
    - Not enough: Requirement 1 or Requirement 2 => Test Case 1 Behaviour
  - Test separately and in combination
  - Write “positive” (examples in favor) and “negative” (examples against) test cases
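A traceability matrix can be as simple as a mapping from test cases to the requirements they exercise. The Python sketch below uses hypothetical identifiers (`R1`, `TC1`, ...) and a hypothetical helper `uncovered_requirements` to report requirements that still lack a test case.

```python
def uncovered_requirements(requirements, traceability):
    """List requirements that no test case traces to."""
    covered = {req for reqs in traceability.values() for req in reqs}
    return [r for r in requirements if r not in covered]


requirements = ["R1", "R2", "R3"]

# Tests-to-requirements traceability matrix (hypothetical content).
traceability = {
    "TC1": ["R1"],
    "TC2": ["R1", "R2"],
}

missing = uncovered_requirements(requirements, traceability)
```

With this data `missing` is `["R3"]`, signalling that the rule "at least one test case for each requirement" is not yet met.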

Model based testing

- Model = visual model
- Semi-formal specifications
- Behavioral UML models
  - Use case models
  - Interaction models (sequence diagrams, collaboration diagrams)
  - State machine (state-transition) models
  - Activity models
    - Same techniques as white box
- Can be used to generate test cases in a more or less automated way

State-transition testing

- Construct a state-transition model (state machine view) of the item to be tested (from the perspective of a user / client)
  - E.g., with a state diagram in UML
- Define test cases to exercise all states and all transitions between states
  - Usually, not all possible paths (sequences of states and transitions), because of combinatorial explosion
  - Each test case describes a sequence of inputs and outputs (including input and output states), and may cover several states and transitions
  - Also test to fail, with unexpected inputs for a particular state
- Particularly useful for testing user interfaces
  - state: a particular form/screen/page or a particular mode (inspect, insert, modify, delete; draw line mode, brush mode, etc.)
  - transition: navigation between forms/screens/pages or transition between modes
- Also useful to test object oriented software
- Particularly useful when a state-transition model already exists as part of the specification
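A minimal Python sketch of transition coverage. The state machine (three editing modes and four events) is hypothetical, as are the names `TRANSITIONS` and `run`; the point is only to show how test cases, written as event sequences, are checked against the set of all transitions.

```python
# (state, event) -> next state; a hypothetical editing form.
TRANSITIONS = {
    ("inspect", "new"): "insert",
    ("inspect", "edit"): "modify",
    ("insert", "save"): "inspect",
    ("insert", "cancel"): "inspect",
    ("modify", "save"): "inspect",
    ("modify", "cancel"): "inspect",
}


def run(events, start="inspect"):
    """Replay events; return the final state and the transitions taken."""
    state, taken = start, []
    for event in events:
        if (state, event) not in TRANSITIONS:
            raise ValueError(f"unexpected event {event!r} in state {state!r}")
        taken.append((state, event))
        state = TRANSITIONS[(state, event)]
    return state, taken


# Two test cases, each covering several states and transitions.
test_cases = [
    ["new", "save", "edit", "save"],
    ["new", "cancel", "edit", "cancel"],
]

covered = set()
for events in test_cases:
    covered.update(run(events)[1])

missing = set(TRANSITIONS) - covered  # empty when all transitions are exercised
```

A "test to fail" case would send an event that is not defined for the current state, for example `run(["save"])`, and expect the `ValueError`.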

Use case and scenario testing

- Particularly adequate for system and integration testing
- Use cases capture functional requirements
- Each use case is described by one or more normal flows of events and zero or more exceptional flows of events (also called scenarios)
- Define at least one test case for each scenario
- Build and maintain a (tests to use cases) traceability matrix
- Example: library (MFES)

Formal specification based testing

- Formal specification = formal model
- Non-executable formal specifications:
  - Constraint language
  - Operations pre/post conditions (restrictions/effects)
  - Can be expressed in OCL (Object Constraint Language)
  - Post-conditions can be used to check outcomes (test oracle)
- Executable formal specifications:
  - Action language
  - Executable test oracle for conformance testing
- Example: “Colocação de professores” (teacher placement)
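A post-condition used as a test oracle can be sketched in Python. The operation chosen here, an integer square root with pre-condition x ≥ 0 and post-condition r² ≤ x < (r+1)², is an assumption for illustration and does not come from the slides; `math.isqrt` is the standard library function (Python 3.8+).

```python
import math


def pre(x):
    """Pre-condition: a non-negative integer."""
    return isinstance(x, int) and x >= 0


def post(x, r):
    """Post-condition: r is the integer square root of x."""
    return r >= 0 and r * r <= x < (r + 1) * (r + 1)


def violations(impl, inputs):
    """Inputs that satisfy the pre-condition but break the post-condition."""
    return [x for x in inputs if pre(x) and not post(x, impl(x))]


def off_by_one(x):
    """Deliberately wrong implementation."""
    return math.isqrt(x) + 1


good = violations(math.isqrt, range(0, 1000))
bad = violations(off_by_one, range(0, 1000))
```

The oracle never computes the expected result itself; it only checks the relation stated in the specification, so the same check works for any implementation of the operation.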

Black box testing: which one?

- Black box testing techniques:
  - Equivalence class partitioning
  - Boundary value analysis
  - Cause-effect graphing
  - Error guessing
  - ...
- Which one to use?
  - None of them is complete
  - All are based on some kind of heuristics
  - They are complementary

Black box testing: which one?

- Always use a combination of techniques
  - When a formal specification is available, try to use it
  - Identify valid and invalid input equivalence classes
  - Identify output equivalence classes
  - Apply boundary value analysis on valid equivalence classes
  - Guess about possible errors
  - Use cause-effect graphing for linking inputs and outputs

References and further reading

- Practical Software Testing, Ilene Burnstein, Springer-Verlag, 2003
- Software Testing, Ron Patton, SAMS, 2001
- The Art of Software Testing, Glenford J. Myers, Wiley & Sons, 1979 (Chapter 4, Test Case Design)
  - Classical
- Software Testing Techniques, Boris Beizer, Van Nostrand Reinhold, 2nd Ed., 1990
  - Bible
- Testing Computer Software, 2nd Edition, Cem Kaner, Jack Falk, Hung Nguyen, John Wiley & Sons, 1999
  - Practical, black box only
- Software Engineering, Ian Sommerville, 6th Edition, Addison-Wesley, 2000
- http://www.swebok.org/
  - Guide to the Software Engineering Body of Knowledge (SWEBOK), IEEE Computer Society
