
- Number of slides: 23

TP#16: oneM2M approach to testing
Source: ETSI
Meeting date: 2015-03-23


Presentation Outline
• Achieving interoperability
  – Conformance versus interoperability testing
• Test specification development
  – Test purposes
  – Conformance tests
  – Interoperability tests


Interoperability is …
• The ultimate aim of standardization in Information and Communication Technology
• The red thread running through the entire standards development process; it is not an isolated issue
  – Not something to be fixed somehow at the end
• Best-practice approach of SDOs
  – Produce base standards and test specifications in parallel, to the required degree
  – Design base standards with interoperability in mind
    • Profiles can help to reduce potential non-interoperability
  – Two complementary forms of testing
    • Conformance testing
    • Interoperability testing (i.e., a more formal form of IOT)
  – Testing provides vital feedback into the standardization work


About Conformance Testing
• Is black-box testing
  – Stimulation and response
[Figure: Test System connected to the Device through a Point of Control and Observation (PCO); the Device is checked against its requirements (Reqs.)]


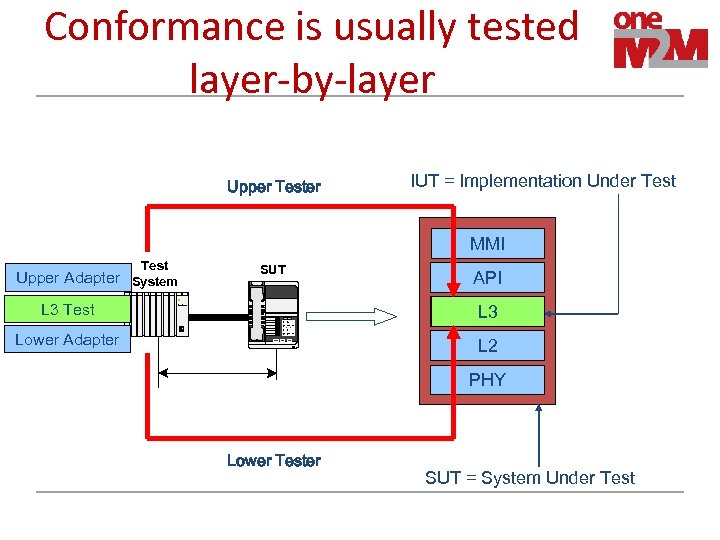

Conformance is usually tested layer-by-layer
[Figure: the Test System's Upper Tester and Lower Tester exercise the IUT (layer L3) from above and below through an Upper Adapter and a Lower Adapter; the SUT stack comprises MMI, API, L3, L2 and PHY. IUT = Implementation Under Test; SUT = System Under Test.]


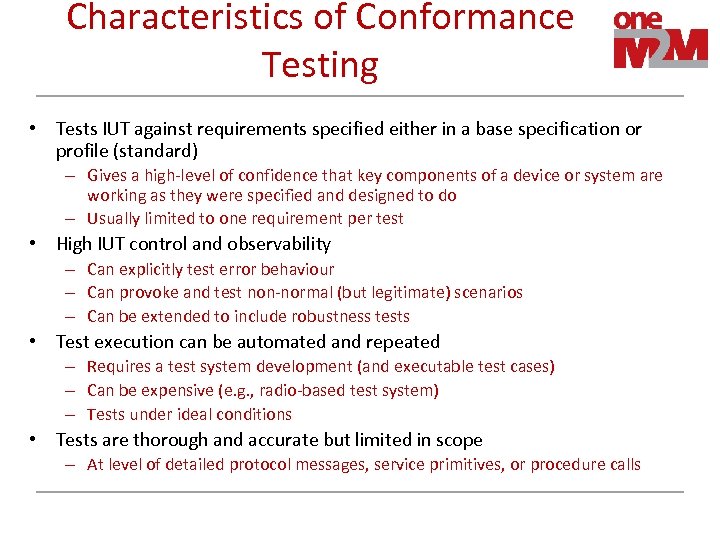
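The layer-by-layer arrangement can be sketched in Python. The IUT is a single protocol layer. The Upper Tester drives it through its service interface, and the Lower Tester observes and injects PDUs below it. The `ToyLayer` protocol, its primitive names and its 1-byte PDU header are hypothetical. They exist only to show where the two testers attach and how stimulus and response are observed on opposite sides of the IUT.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass
class ToyLayer:
    """Hypothetical layer-3 IUT: wraps upper-layer data in a PDU and unwraps PDUs from below."""
    sent_down: List[bytes] = field(default_factory=list)     # PDUs handed to the lower layer
    delivered_up: List[bytes] = field(default_factory=list)  # SDUs handed to the upper layer

    def data_request(self, sdu: bytes) -> None:
        # Service primitive from the upper layer: prepend a 1-byte header (0x01 = DATA).
        self.sent_down.append(b"\x01" + sdu)

    def pdu_indication(self, pdu: bytes) -> None:
        # PDU from the lower layer: deliver well-formed DATA PDUs, silently drop anything else.
        if pdu[:1] == b"\x01":
            self.delivered_up.append(pdu[1:])

class UpperTester:
    """Controls and observes the IUT through its upper (service) interface."""
    def __init__(self, iut: ToyLayer) -> None:
        self.iut = iut

    def request(self, sdu: bytes) -> None:
        self.iut.data_request(sdu)

    def observed(self) -> List[bytes]:
        return list(self.iut.delivered_up)

class LowerTester:
    """Controls and observes the IUT through its lower (PDU) interface."""
    def __init__(self, iut: ToyLayer) -> None:
        self.iut = iut

    def inject(self, pdu: bytes) -> None:
        self.iut.pdu_indication(pdu)

    def observed(self) -> List[bytes]:
        return list(self.iut.sent_down)

def run_checks() -> Tuple[bool, bool, bool]:
    iut = ToyLayer()
    ut, lt = UpperTester(iut), LowerTester(iut)
    ut.request(b"hello")                          # stimulus at the upper interface ...
    down_ok = lt.observed() == [b"\x01hello"]     # ... response observed at the lower one
    lt.inject(b"\x01world")                       # stimulus at the lower interface ...
    up_ok = ut.observed() == [b"world"]           # ... response observed at the upper one
    lt.inject(b"\x7fjunk")                        # invalid PDU: nothing new may go upward
    drop_ok = ut.observed() == [b"world"]
    return down_ok, up_ok, drop_ok

print(run_checks())  # (True, True, True)
```

The IUT is never inspected directly. Every verdict comes from what the testers see at the two interfaces, which is the black-box principle from the previous slide.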

Characteristics of Conformance Testing
• Tests the IUT against requirements specified in a base specification or profile (standard)
  – Gives a high level of confidence that key components of a device or system work as they were specified and designed to
  – Usually limited to one requirement per test
• High IUT control and observability
  – Can explicitly test error behaviour
  – Can provoke and test non-normal (but legitimate) scenarios
  – Can be extended to include robustness tests
• Test execution can be automated and repeated
  – Requires development of a test system (and executable test cases)
  – Can be expensive (e.g., a radio-based test system)
  – Tests under ideal conditions
• Tests are thorough and accurate but limited in scope
  – At the level of detailed protocol messages, service primitives or procedure calls
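Three of these characteristics are illustrated below with a minimal Python harness: one requirement per test, explicit error-behaviour tests, and automated, repeatable execution. The IUT function `parse_length_field`, the requirement IDs `REQ-1`/`REQ-2` and the test names are hypothetical. A real conformance suite would be written in TTCN-3 and run against the device through a test system.

```python
from typing import Callable, Dict

def parse_length_field(pdu: bytes) -> bytes:
    """Hypothetical IUT function: the first byte is the payload length.
    REQ-1: return the payload of a well-formed PDU.
    REQ-2: reject a PDU whose length field exceeds the available bytes."""
    if not pdu:
        raise ValueError("empty PDU")
    length = pdu[0]
    if length > len(pdu) - 1:
        raise ValueError("length field exceeds PDU size")
    return pdu[1:1 + length]

def tc_req1_valid_pdu() -> str:
    # Checks REQ-1 only: normal behaviour.
    return "pass" if parse_length_field(b"\x03abc") == b"abc" else "fail"

def tc_req2_truncated_pdu() -> str:
    # Checks REQ-2 only: deliberately provoked error (length says 5, only 2 bytes follow).
    try:
        parse_length_field(b"\x05ab")
    except ValueError:
        return "pass"
    return "fail"

# One test per requirement; the suite can be re-run unchanged after every IUT change.
SUITE: Dict[str, Callable[[], str]] = {
    "REQ-1": tc_req1_valid_pdu,
    "REQ-2": tc_req2_truncated_pdu,
}

def run_suite() -> Dict[str, str]:
    return {req: tc() for req, tc in SUITE.items()}

print(run_suite())  # {'REQ-1': 'pass', 'REQ-2': 'pass'}
```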


Limitations of Conformance Testing
• Does not prove end-to-end functionality (interoperability) between communicating systems
  – Conformance-tested implementations may still not interoperate
    • This is often a specification problem rather than a testing problem!
    • Need for minimum requirements coverage or profiles
• Tests individual system components
  – But a system is often greater than the sum of its parts!
  – Does not test the user’s ‘perception’ of the system
• Standardised conformance tests do not include proprietary ‘aspects’
  – Difficult to test via proprietary interfaces, e.g., user APIs
  – Can, however, be done by a manufacturer with its own conformance tests for proprietary requirements


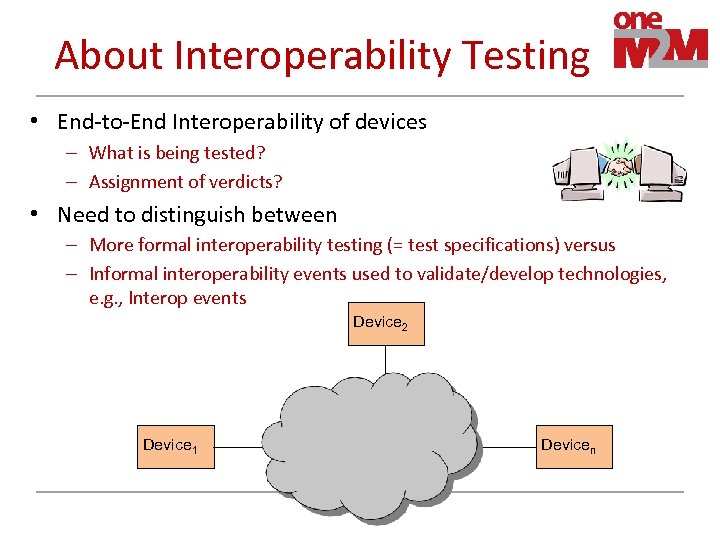

About Interoperability Testing
• End-to-end interoperability of devices
  – What is being tested?
  – Assignment of verdicts?
• Need to distinguish between
  – More formal interoperability testing (= test specifications), versus
  – Informal interoperability events used to validate/develop technologies, e.g., Interop events
[Figure: Device 1, Device 2, …, Device n]


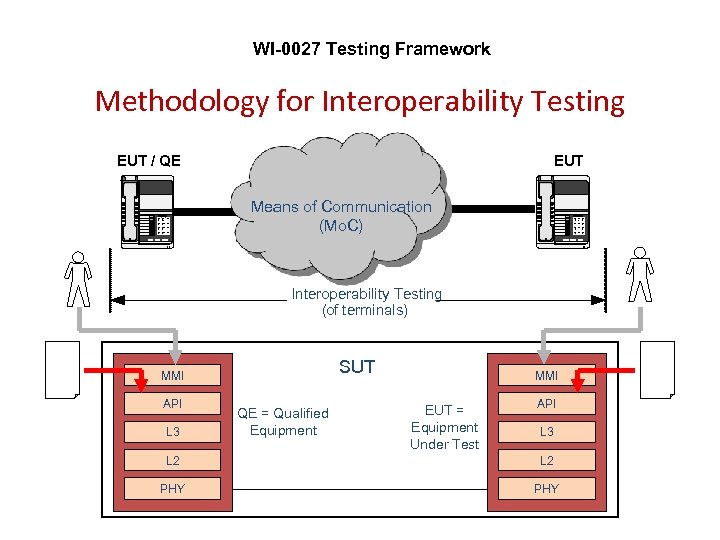

WI-0027 Testing Framework: Methodology for Interoperability Testing
[Figure: Interoperability testing (of terminals): an EUT and an EUT/QE connected through a Means of Communication (MoC), with the SUT and its layers (MMI, API, L3, L2, PHY) marked. QE = Qualified Equipment; EUT = Equipment Under Test.]


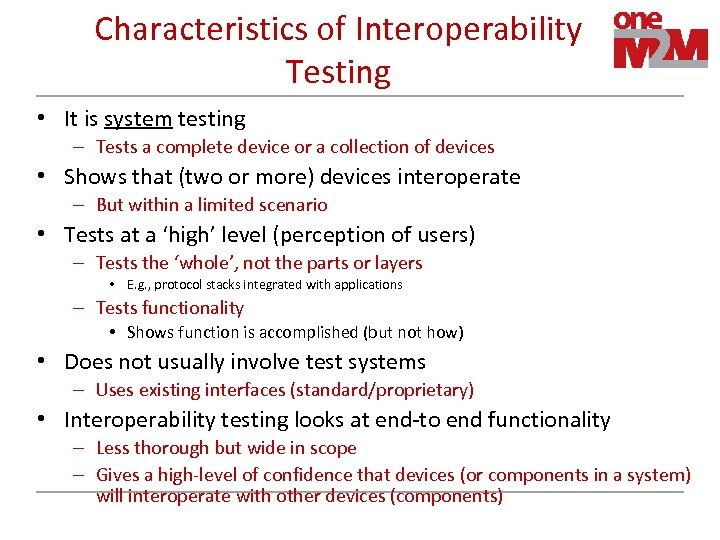

Characteristics of Interoperability Testing
• It is system testing
  – Tests a complete device or a collection of devices
• Shows that (two or more) devices interoperate
  – But only within a limited scenario
• Tests at a ‘high’ level (perception of users)
  – Tests the ‘whole’, not the parts or layers
    • E.g., protocol stacks integrated with applications
  – Tests functionality
    • Shows that a function is accomplished (but not how)
• Does not usually involve test systems
  – Uses existing interfaces (standard/proprietary)
• Interoperability testing looks at end-to-end functionality
  – Less thorough but wide in scope
  – Gives a high level of confidence that devices (or components in a system) will interoperate with other devices (components)


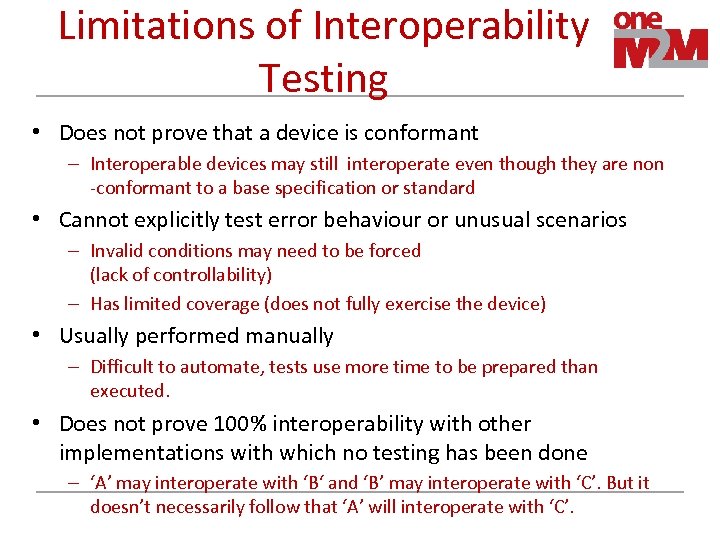

Limitations of Interoperability Testing
• Does not prove that a device is conformant
  – Devices may still interoperate even though they are non-conformant to a base specification or standard
• Cannot explicitly test error behaviour or unusual scenarios
  – Invalid conditions may need to be forced (lack of controllability)
  – Has limited coverage (does not fully exercise the device)
• Usually performed manually
  – Difficult to automate; tests take more time to prepare than to execute
• Does not prove 100% interoperability with other implementations with which no testing has been done
  – ‘A’ may interoperate with ‘B’ and ‘B’ may interoperate with ‘C’, but it does not necessarily follow that ‘A’ will interoperate with ‘C’


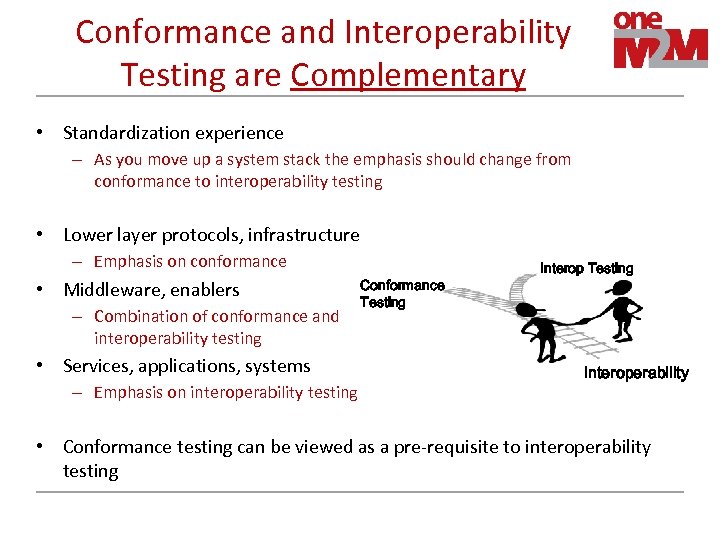
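A deliberately simple model makes the A/B/C point concrete. It is assumed here purely for illustration: two devices interoperate if they share at least one supported option, such as a codec or transport binding. Under that assumption, passing the A-with-B and B-with-C tests says nothing about A with C. The device names and option sets are hypothetical.

```python
from itertools import combinations
from typing import Dict, Set, Tuple

# Hypothetical option sets supported by three implementations.
DEVICES: Dict[str, Set[str]] = {
    "A": {"opt_x"},
    "B": {"opt_x", "opt_y"},
    "C": {"opt_y"},
}

def interoperate(a: str, b: str) -> bool:
    """Toy interoperability relation: at least one commonly supported option."""
    return bool(DEVICES[a] & DEVICES[b])

def pairwise_results() -> Dict[Tuple[str, str], bool]:
    # Every unordered pair is tested once, as in a full interop matrix.
    return {(a, b): interoperate(a, b) for a, b in combinations(sorted(DEVICES), 2)}

results = pairwise_results()
print(results)  # {('A', 'B'): True, ('A', 'C'): False, ('B', 'C'): True}
```

B bridges A and C only because it implements both options. This is one reason profiles that fix the mandatory options help reduce non-interoperability.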

Conformance and Interoperability Testing are Complementary
• Standardization experience
  – As you move up a system stack, the emphasis should change from conformance to interoperability testing
    • Lower-layer protocols, infrastructure: emphasis on conformance testing
    • Middleware, enablers: combination of conformance and interoperability testing
    • Services, applications, systems: emphasis on interoperability testing
[Figure: emphasis shifting from Conformance Testing to Interoperability testing moving up the stack]
• Conformance testing can be viewed as a prerequisite to interoperability testing


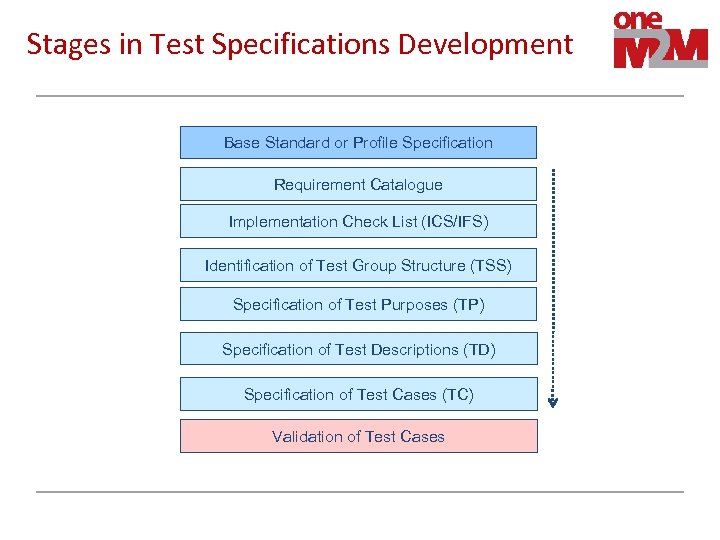

Stages in Test Specifications Development
• Base Standard or Profile Specification
• Requirement Catalogue
• Implementation Check List (ICS/IFS)
• Identification of Test Group Structure (TSS)
• Specification of Test Purposes (TP)
• Specification of Test Descriptions (TD)
• Specification of Test Cases (TC)
• Validation of Test Cases


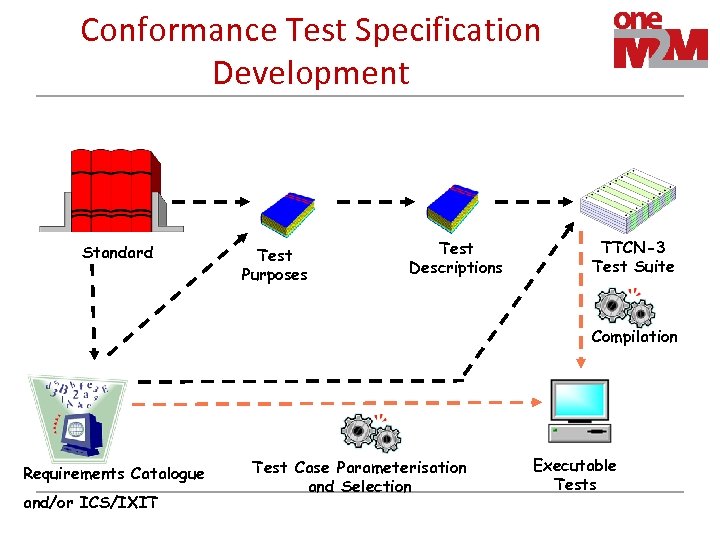
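Each stage refines the one before it. A useful consistency check is therefore that every catalogued requirement is reached by at least one TP, and every TP by at least one test case (or TD). The minimal Python sketch below assumes simple lookup tables. The requirement, TP and TC identifiers are hypothetical.

```python
from typing import Dict, List, Set

# Hypothetical traceability tables produced by successive development stages.
REQUIREMENTS: Set[str] = {"REQ-1", "REQ-2", "REQ-3"}
TP_TO_REQ: Dict[str, str] = {"TP-01": "REQ-1", "TP-02": "REQ-1", "TP-03": "REQ-2"}
TC_TO_TP: Dict[str, str] = {"TC-01": "TP-01", "TC-03": "TP-03"}

def uncovered_requirements() -> List[str]:
    """Requirements in the catalogue that no test purpose refers to."""
    covered = set(TP_TO_REQ.values())
    return sorted(REQUIREMENTS - covered)

def unimplemented_tps() -> List[str]:
    """Test purposes that no test case implements yet."""
    implemented = set(TC_TO_TP.values())
    return sorted(set(TP_TO_REQ) - implemented)

print(uncovered_requirements())  # ['REQ-3']
print(unimplemented_tps())       # ['TP-02']
```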

Conformance Test Specification Development
[Figure: development flow from the Standard through Test Purposes, Test Descriptions and the TTCN-3 Test Suite to Compilation, followed by Test Case Parameterisation and Selection (based on the Requirements Catalogue and/or ICS/IXIT), yielding Executable Tests]


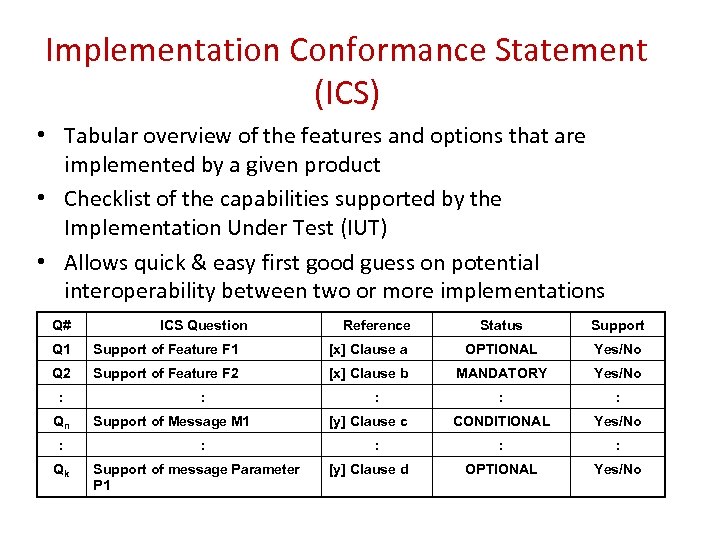
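The Python sketch below separates the two inputs to selection and parameterisation. ICS answers decide which compiled test cases apply to a given IUT, and IXIT values supply run-time parameters such as the SUT address. It assumes each test case carries a boolean selection expression over ICS items, in the spirit of a PICS selection field. The test case names, ICS item names and the parameter name `SUT_ADDRESS` are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Ics = Dict[str, bool]  # ICS/PICS answers: item name -> supported?

@dataclass(frozen=True)
class TestCase:
    name: str
    selected_if: Callable[[Ics], bool]  # selection expression over ICS answers

# Hypothetical suite; item names are illustrative only.
SUITE: List[TestCase] = [
    TestCase("TC_BASIC_01", lambda ics: True),                    # mandatory feature
    TestCase("TC_F1_01", lambda ics: ics.get("PICS_F1", False)),  # optional feature F1
    TestCase("TC_F1_F2_01",
             lambda ics: ics.get("PICS_F1", False) and ics.get("PICS_F2", False)),
]

def select_and_parameterise(ics: Ics, ixit: Dict[str, str]) -> List[Dict[str, str]]:
    """Return one parameterised run configuration per selected test case."""
    return [{"test": tc.name, **ixit} for tc in SUITE if tc.selected_if(ics)]

plan = select_and_parameterise({"PICS_F1": True, "PICS_F2": False},
                               {"SUT_ADDRESS": "192.0.2.10"})
print([p["test"] for p in plan])  # ['TC_BASIC_01', 'TC_F1_01']
```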

Implementation Conformance Statement (ICS)
• Tabular overview of the features and options that are implemented by a given product
• Checklist of the capabilities supported by the Implementation Under Test (IUT)
• Allows a quick and easy first good guess at potential interoperability between two or more implementations

| Q# | ICS Question | Reference | Status | Support |
|---|---|---|---|---|
| Q1 | Support of Feature F1 | [x] Clause a | OPTIONAL | Yes/No |
| Q2 | Support of Feature F2 | [x] Clause b | MANDATORY | Yes/No |
| : | : | : | : | : |
| Qn | Support of Message M1 | [y] Clause c | CONDITIONAL | Yes/No |
| : | : | : | : | : |
| Qk | Support of message Parameter P1 | [y] Clause d | OPTIONAL | Yes/No |


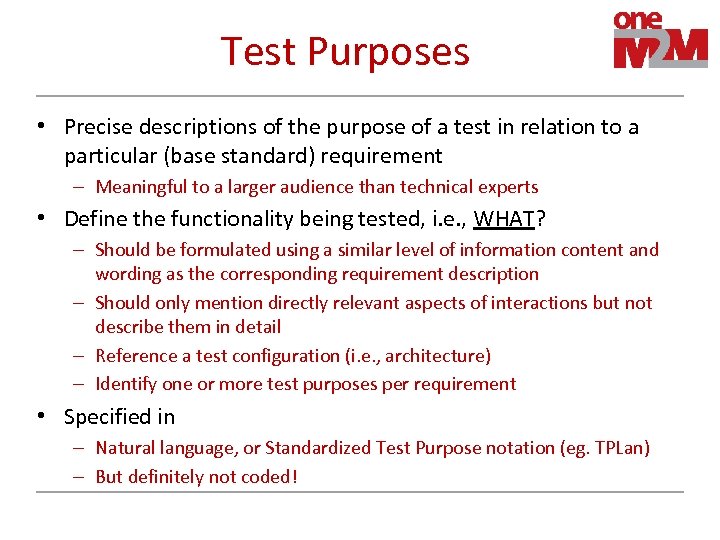
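The "first good guess" can be automated by comparing two ICSs item by item. A question answered differently by the two implementations is a candidate interoperability gap. A MANDATORY item declared unsupported points to a conformance problem instead. The minimal Python sketch below reuses the question IDs of the ICS example (Q1, Q2, Qn, Qk), but the support answers for the two implementations are hypothetical.

```python
from typing import Dict, List, Tuple

IcsEntry = Tuple[str, bool]  # (status, supported?)

# Hypothetical ICS answers from two implementations.
ICS_1: Dict[str, IcsEntry] = {
    "Q1": ("OPTIONAL", True),
    "Q2": ("MANDATORY", True),
    "Qn": ("CONDITIONAL", True),
    "Qk": ("OPTIONAL", False),
}
ICS_2: Dict[str, IcsEntry] = {
    "Q1": ("OPTIONAL", False),
    "Q2": ("MANDATORY", True),
    "Qn": ("CONDITIONAL", True),
    "Qk": ("OPTIONAL", False),
}

def potential_gaps(a: Dict[str, IcsEntry], b: Dict[str, IcsEntry]) -> List[str]:
    """Questions answered differently by the two implementations."""
    return sorted(q for q in a.keys() & b.keys() if a[q][1] != b[q][1])

def mandatory_violations(ics: Dict[str, IcsEntry]) -> List[str]:
    """MANDATORY items declared as unsupported."""
    return sorted(q for q, (status, supported) in ics.items()
                  if status == "MANDATORY" and not supported)

print(potential_gaps(ICS_1, ICS_2))  # ['Q1']
print(mandatory_violations(ICS_2))   # []
```

It remains only a guess. Two ICSs can match perfectly while the implementations still fail to interoperate.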

Test Purposes
• Precise descriptions of the purpose of a test in relation to a particular (base standard) requirement
  – Meaningful to a larger audience than technical experts
• Define the functionality being tested, i.e., WHAT?
  – Should be formulated using a similar level of information content and wording as the corresponding requirement description
  – Should only mention directly relevant aspects of interactions, without describing them in detail
  – Reference a test configuration (i.e., architecture)
  – Identify one or more test purposes per requirement
• Specified in
  – Natural language, or a standardized test purpose notation (e.g., TPLan)
  – But definitely not coded!


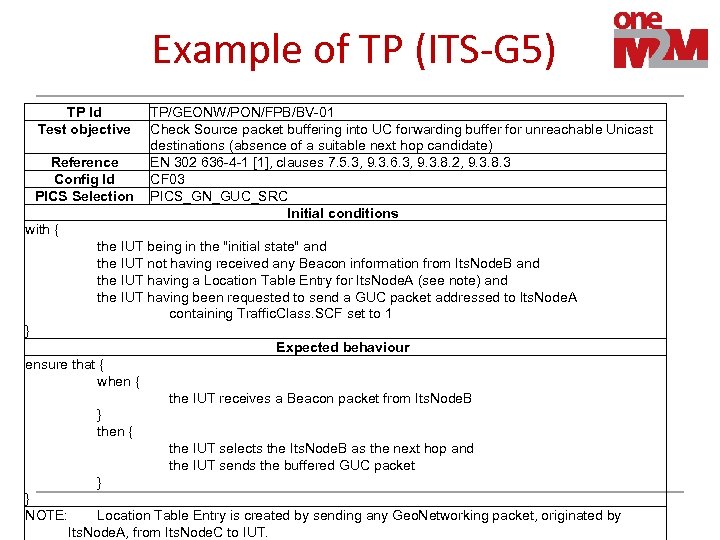

Example of TP (ITS-G5)
• TP Id: TP/GEONW/PON/FPB/BV-01
• Test objective: Check source packet buffering into the UC forwarding buffer for unreachable unicast destinations (absence of a suitable next-hop candidate)
• Reference: EN 302 636-4-1 [1], clauses 7.5.3, 9.3.6.3, 9.3.8.2, 9.3.8.3
• Config Id: CF03
• PICS Selection: PICS_GN_GUC_SRC
• Initial conditions:
  with {
    the IUT being in the "initial state" and
    the IUT not having received any Beacon information from ItsNodeB and
    the IUT having a Location Table Entry for ItsNodeA (see note) and
    the IUT having been requested to send a GUC packet addressed to ItsNodeA
      containing TrafficClass.SCF set to 1
  }
• Expected behaviour:
  ensure that {
    when {
      the IUT receives a Beacon packet from ItsNodeB
    }
    then {
      the IUT selects the ItsNodeB as the next hop and
      the IUT sends the buffered GUC packet
    }
  }
NOTE: The Location Table Entry is created by sending any GeoNetworking packet, originated by ItsNodeA, from ItsNodeC to the IUT.


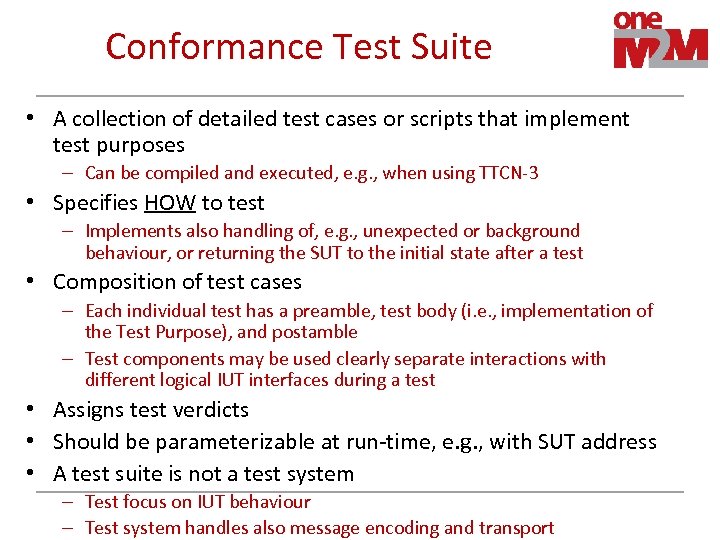
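The deliberately simplified Python model below shows the IUT behaviour this TP targets. A unicast packet with the store-carry-forward (SCF) flag set is buffered while no next hop is known. It is then sent to the first neighbour learned from a Beacon. This toy is not the EN 302 636-4-1 forwarding algorithm. Real next-hop selection (e.g., by geographic progress), Location Table handling and ItsNodeC are not modelled, and all class and method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

@dataclass
class GucPacket:
    destination: str
    scf: bool  # TrafficClass.SCF: buffer instead of dropping when no next hop exists

@dataclass
class ToyGnRouter:
    neighbours: List[str] = field(default_factory=list)
    uc_buffer: List[GucPacket] = field(default_factory=list)
    sent: List[Tuple[str, GucPacket]] = field(default_factory=list)  # (next hop, packet)

    def _next_hop(self) -> Optional[str]:
        # Simplification: any neighbour learned from a Beacon is a suitable next hop.
        return self.neighbours[0] if self.neighbours else None

    def send_guc(self, pkt: GucPacket) -> None:
        hop = self._next_hop()
        if hop is not None:
            self.sent.append((hop, pkt))
        elif pkt.scf:
            self.uc_buffer.append(pkt)  # source packet buffering into the UC buffer

    def receive_beacon(self, sender: str) -> None:
        if sender not in self.neighbours:
            self.neighbours.append(sender)
        hop = self._next_hop()
        # Flush the UC forwarding buffer now that a next hop exists.
        while hop is not None and self.uc_buffer:
            self.sent.append((hop, self.uc_buffer.pop(0)))

# Initial conditions: no Beacon from ItsNodeB yet; GUC packet to ItsNodeA with SCF = 1.
iut = ToyGnRouter()
iut.send_guc(GucPacket(destination="ItsNodeA", scf=True))
buffered_before = len(iut.uc_buffer)
# Expected behaviour: on a Beacon from ItsNodeB, the buffered packet goes to ItsNodeB.
iut.receive_beacon("ItsNodeB")
print(buffered_before, iut.sent)
```

The TP describes only *what* must be observed (the buffered packet leaves via ItsNodeB). A TTCN-3 test case would implement the corresponding stimuli and checks against the real IUT.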

Conformance Test Suite
• A collection of detailed test cases or scripts that implement test purposes
  – Can be compiled and executed, e.g., when using TTCN-3
• Specifies HOW to test
  – Also implements handling of, e.g., unexpected or background behaviour, or returning the SUT to its initial state after a test
• Composition of test cases
  – Each individual test has a preamble, a test body (i.e., the implementation of the test purpose) and a postamble
  – Test components may be used to clearly separate interactions with different logical IUT interfaces during a test
• Assigns test verdicts
• Should be parameterizable at run time, e.g., with the SUT address
• A test suite is not a test system
  – Tests focus on IUT behaviour
  – The test system also handles message encoding and transport


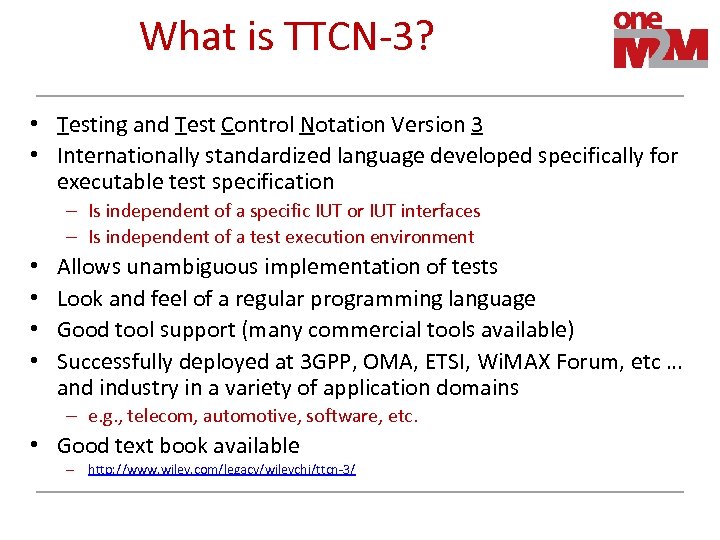
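TTCN-3 verdicts follow an overwriting rule: a test component's verdict can only get worse, in the order none, pass, inconc, fail. A late `pass` therefore never hides an earlier `fail`. The Python sketch below mimics that rule together with the preamble / test body / postamble structure. The `ToySut` class and its methods are hypothetical. The `error` verdict, which TTCN-3 reserves for the test system itself, is not modelled.

```python
from typing import List

# TTCN-3-style verdict ordering: a verdict may only be overwritten by a worse one.
_ORDER = ["none", "pass", "inconc", "fail"]

class VerdictHolder:
    def __init__(self) -> None:
        self.value = "none"

    def setverdict(self, v: str) -> None:
        # Mimics TTCN-3 setverdict: attempts to "improve" the verdict are ignored.
        if _ORDER.index(v) > _ORDER.index(self.value):
            self.value = v

class ToySut:
    """Hypothetical SUT: answers pings only while registered."""
    def __init__(self) -> None:
        self.registered = False

    def register(self) -> bool:
        self.registered = True
        return True

    def ping(self) -> str:
        return "pong" if self.registered else "error"

    def deregister(self) -> None:
        self.registered = False

def run_test_case(sut: ToySut, log: List[str]) -> str:
    verdict = VerdictHolder()
    # Preamble: bring the SUT into the state required by the test purpose.
    if not sut.register():
        verdict.setverdict("inconc")  # initial state not reached: not attributable to the IUT
        return verdict.value
    log.append("preamble done")
    # Test body: the part that implements the test purpose and sets the verdict.
    verdict.setverdict("pass" if sut.ping() == "pong" else "fail")
    log.append("body done")
    # Postamble: return the SUT to its initial state for the next test.
    sut.deregister()
    log.append("postamble done")
    return verdict.value

v = VerdictHolder()
for outcome in ("pass", "fail", "pass"):
    v.setverdict(outcome)
print(v.value)  # fail

sut, log = ToySut(), []
print(run_test_case(sut, log), sut.registered)  # pass False
```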

What is TTCN-3?
• Testing and Test Control Notation Version 3
• Internationally standardized language developed specifically for executable test specification
  – Is independent of a specific IUT or IUT interfaces
  – Is independent of a test execution environment
• Allows unambiguous implementation of tests
• Look and feel of a regular programming language
• Good tool support (many commercial tools available)
• Successfully deployed at 3GPP, OMA, ETSI, WiMAX Forum, etc., and by industry in a variety of application domains
  – e.g., telecom, automotive, software, etc.
• Good textbook available
  – http://www.wiley.com/legacy/wileychi/ttcn-3/


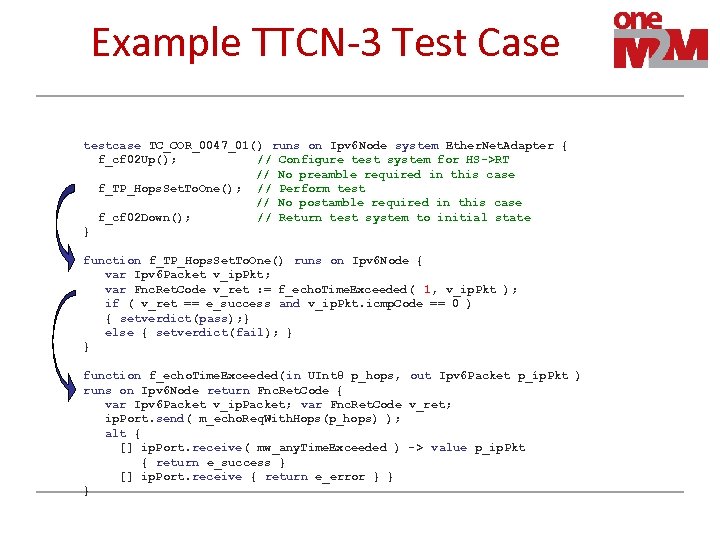

Example TTCN-3 Test Case

```
testcase TC_COR_0047_01() runs on Ipv6Node system EtherNetAdapter {
  f_cf02Up();           // Configure test system for HS->RT
  // No preamble required in this case
  f_TP_HopsSetToOne();  // Perform test
  // No postamble required in this case
  f_cf02Down();         // Return test system to initial state
}

function f_TP_HopsSetToOne() runs on Ipv6Node {
  var Ipv6Packet v_ipPkt;
  var FncRetCode v_ret := f_echoTimeExceeded(1, v_ipPkt);
  if (v_ret == e_success and v_ipPkt.icmpCode == 0) {
    setverdict(pass);
  } else {
    setverdict(fail);
  }
}

function f_echoTimeExceeded(in UInt8 p_hops, out Ipv6Packet p_ipPkt)
runs on Ipv6Node return FncRetCode {
  // Send an Echo Request whose hop limit is set to p_hops
  ipPort.send(m_echoReqWithHops(p_hops));
  alt {
    // Expected: any Time Exceeded message, stored in p_ipPkt
    [] ipPort.receive(mw_anyTimeExceeded) -> value p_ipPkt { return e_success; }
    // Anything else received on ipPort is unexpected
    [] ipPort.receive { return e_error; }
  }
}
```
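For readers less familiar with TTCN-3, the same test logic can be mirrored in Python against a toy router. The test sends an Echo Request with a hop limit of 1 and expects an ICMPv6 Time Exceeded message (type 3) with code 0, "hop limit exceeded in transit" (RFC 4443). The router model, the function names and the dictionary message format are assumptions for illustration. No real packets are sent.

```python
from typing import Dict, Optional

ICMPV6_ECHO_REQUEST = 128
ICMPV6_TIME_EXCEEDED = 3

def toy_router_forward(packet: Dict[str, int]) -> Optional[Dict[str, int]]:
    """Hypothetical router forwarding a packet addressed beyond it: decrement the
    hop limit; if it reaches 0, discard the packet and answer Time Exceeded, code 0."""
    hop_limit = packet["hop_limit"] - 1
    if hop_limit <= 0:
        return {"icmp_type": ICMPV6_TIME_EXCEEDED, "icmp_code": 0}
    return None  # forwarded onward; nothing comes back to the tester in this toy

def f_echo_time_exceeded(hops: int) -> Optional[Dict[str, int]]:
    """Mirror of f_echoTimeExceeded: a Time Exceeded reply plays the role of e_success,
    None plays the role of e_error (no matching message)."""
    reply = toy_router_forward({"icmp_type": ICMPV6_ECHO_REQUEST, "hop_limit": hops})
    if reply is not None and reply["icmp_type"] == ICMPV6_TIME_EXCEEDED:
        return reply
    return None

def tp_hops_set_to_one() -> str:
    """Mirror of f_TP_HopsSetToOne: pass only on Time Exceeded with code 0."""
    reply = f_echo_time_exceeded(1)
    return "pass" if reply is not None and reply["icmp_code"] == 0 else "fail"

print(tp_hops_set_to_one())  # pass
```

The TTCN-3 version differs in one important respect. Matching, encoding and transport are handled by templates (`m_echoReqWithHops`, `mw_anyTimeExceeded`) and the test system adapter, so the test case itself stays focused on IUT behaviour.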


Validation of (Conformance) Tests
• Typical test suite validation requires that
  – Tests compile with several TTCN-3 tools
  – Tests are executed on one or more platforms against various implementations of the standard (usually by oneM2M members)
• Requires very close co-operation with test tool suppliers, test platform providers, equipment manufacturers, etc.
  – Strict and well-defined processes between
    • Test labs (which execute tests[, certify] and provide feedback)
    • and oneM2M WG TST (which updates the tests)
  – Timely availability of stable base specifications, test platforms (tools) and implementations is essential


Interoperability Test Descriptions
• Specify the detailed steps to be followed to achieve the stated test purpose, i.e., HOW?
• State steps clearly and unambiguously, without unreasonable restrictions on the actual method
  – Example:
    • "Answer incoming call", NOT
    • "Pick up telephone handset"
• Written in structured, tabulated natural language so that tests can be performed manually


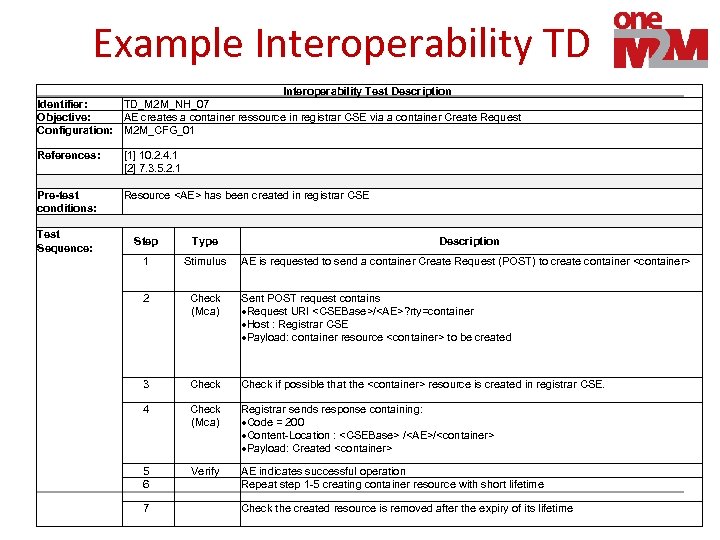
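Interoperability TDs are executed by people, so results are typically recorded per step and the overall verdict is derived from those records. The minimal Python sketch below assumes a hypothetical TD whose "verify" steps are answered yes/no by the operator. The step wording, including "answers incoming call", is illustrative. The rule that an unrecorded check yields `inconc` is also an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class TdStep:
    text: str
    is_check: bool                 # True for "verify ..." steps that carry a result
    result: Optional[bool] = None  # operator's yes/no answer; None = not recorded

def td_verdict(steps: List[TdStep]) -> str:
    checks = [s.result for s in steps if s.is_check]
    if any(r is False for r in checks):
        return "fail"
    if any(r is None for r in checks):
        return "inconc"  # a check was skipped or not recorded
    return "pass"

# Hypothetical TD for a basic call between two EUTs.
steps = [
    TdStep("User A calls User B", is_check=False),
    TdStep("Verify that EUT B indicates an incoming call", is_check=True, result=True),
    TdStep("User B answers incoming call", is_check=False),
    TdStep("Verify that a two-way speech path is established", is_check=True, result=True),
]
print(td_verdict(steps))  # pass
```

The stimulus steps stay method-neutral ("answers incoming call"). Only the checks carry results, which keeps the TD usable across very different EUT user interfaces.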

Example Interoperability TD
Interoperability Test Description
• Identifier: TD_M2M_NH_07
• Objective: AE creates a container resource in the registrar CSE via a container Create Request
• Configuration: M2M_CFG_01
• References: [1] 10.2.4.1, [2] 7.3.5.2.1
• Pre-test conditions: Resource
• Test Sequence:
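In the oneM2M HTTP binding, the TD's objective corresponds to a POST on the parent resource with resource type 3 (container). The sketch below only *builds* such a request with Python's standard `urllib.request`. It does not send it. The CSE address, resource path, originator ID, request ID and container name are all hypothetical. The header names (`X-M2M-Origin`, `X-M2M-RI`) and the `ty` media-type parameter follow later oneM2M HTTP binding specifications (TS-0009) and may differ in detail from the release this TD was written against.

```python
import json
import urllib.request

CONTAINER_TY = 3  # oneM2M resource type code for <container>

def build_container_create(parent_url: str, originator: str, request_id: str,
                           name: str) -> urllib.request.Request:
    """Build (but do not send) a oneM2M Create request for a <container> under parent_url."""
    body = json.dumps({"m2m:cnt": {"rn": name}}).encode("utf-8")
    headers = {
        "X-M2M-Origin": originator,  # ID of the requesting AE
        "X-M2M-RI": request_id,      # request identifier, echoed in the response
        "Content-Type": f"application/json;ty={CONTAINER_TY}",
        "Accept": "application/json",
    }
    return urllib.request.Request(parent_url, data=body, headers=headers, method="POST")

# Hypothetical registrar CSE and AE; nothing is sent over the network here.
req = build_container_create("http://cse.example.com/cse-base/my-ae",
                             "Cmy-ae", "req-0001", "myContainer")
print(req.get_method(), req.full_url)
```

In an interoperability session, the operator would trigger this request from the AE's own user interface rather than from a script. The TD only states what must be observed, e.g., that the container is created in the registrar CSE.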
