431ec9ca6f14f1919389d650313dbe2d.ppt

- Number of slides: 83

Towards Eradicating Phishing Attacks
Stefan Saroiu, University of Toronto

Today’s anti-phishing tools have done little to stop the proliferation of phishing

Many Anti-Phishing Tools Exist

Phishing is Gaining Momentum

Current Anti-Phishing Tools Are Not Effective
- Let’s look at new approaches & new insights!
  - Part 1: a new approach: user-assistance
  - Part 2: the need for a new measurement system

Part 1
iTrustPage: A User-Assisted Anti-Phishing Tool

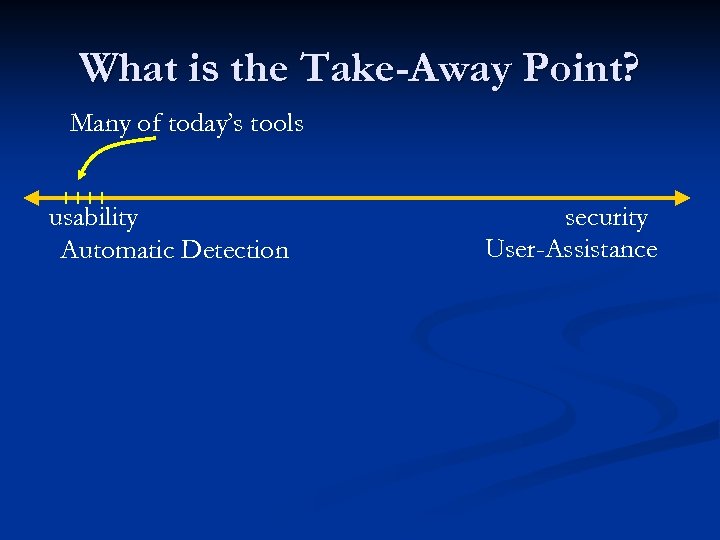

The Problems with Automation
- Many anti-phishing tools use automatic detection
  - Automatic detection makes tools user-friendly
  - But it is subject to false negatives
    - Each false negative puts a user at risk

What are False Negatives & False Positives?
- Example of a false negative:
  - Phishing e-mail not detected by filter heuristics
- Example of a false positive:
  - Legitimate e-mail dropped by filter heuristics
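
A short tally of a filter's decisions against the true labels makes the two error types concrete. This is a minimal Python sketch. The `(is_phishing, flagged)` pair format and the sample data are hypothetical and are not from the study.

```python
from typing import Dict, Iterable, Tuple

def count_outcomes(results: Iterable[Tuple[bool, bool]]) -> Dict[str, int]:
    """Tally filter outcomes from (is_phishing, flagged) pairs."""
    counts = {"fn": 0, "fp": 0, "tp": 0, "tn": 0}
    for is_phishing, flagged in results:
        if is_phishing and not flagged:
            counts["fn"] += 1  # false negative: phishing e-mail got through
        elif not is_phishing and flagged:
            counts["fp"] += 1  # false positive: legitimate e-mail dropped
        elif is_phishing:
            counts["tp"] += 1  # phishing e-mail correctly caught
        else:
            counts["tn"] += 1  # legitimate e-mail correctly delivered
    return counts

# Hypothetical labeled results, not data from the study.
sample = [(True, True), (True, False), (False, False), (False, True), (False, False)]
print(count_outcomes(sample))  # {'fn': 1, 'fp': 1, 'tp': 1, 'tn': 2}
```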

Current Anti-Phishing Tools Are Not Effective
- Most anti-phishing tools use automatic detection
  - Automatic detection makes tools user-friendly
  - But it is subject to false negatives
    - Each false negative puts a user at risk

Can false negatives be eliminated?

Case Study: SpamAssassin, One Way to Stop Phishing
- Methodology
  - Two e-mail corpora:
    - Phishing: 1,423 e-mails (Nov. ’05 to Aug. ’06)
    - Legitimate: 478 e-mails from our Sent Mail folders
  - SpamAssassin version 3.1.8
  - Various levels of aggressiveness

False Negatives Can’t Be Eliminated

Trade-off Between False Negatives and False Positives
- Reducing false negatives increases false positives
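
A score threshold illustrates the trade-off. SpamAssassin flags a message when its total score reaches `required_score` (5.0 by default), so lowering that threshold is one way to make the filter more aggressive. The slides do not say exactly which setting the case study varied. The sketch below uses made-up scores, not the study's data.

```python
from typing import List, Tuple

def error_rates(phish_scores: List[float], legit_scores: List[float],
                threshold: float) -> Tuple[float, float]:
    """Return (false-negative rate, false-positive rate) at a threshold.

    A message is flagged when its score is >= threshold, mirroring how
    SpamAssassin compares a message's total score to required_score.
    """
    fn = sum(1 for s in phish_scores if s < threshold)   # missed phishing
    fp = sum(1 for s in legit_scores if s >= threshold)  # dropped legitimate mail
    return fn / len(phish_scores), fp / len(legit_scores)

# Hypothetical scores, chosen only to illustrate the trade-off.
phish = [1.5, 3.0, 4.2, 6.1, 8.0, 9.5]
legit = [-1.0, 0.5, 1.2, 2.8, 3.5, 4.9]

for t in (5.0, 4.0, 3.0, 2.0):
    fnr, fpr = error_rates(phish, legit, t)
    print(f"threshold={t}: FN rate={fnr:.2f}, FP rate={fpr:.2f}")
```

With these numbers, lowering the threshold from 5.0 to 2.0 cuts the false-negative rate from 0.50 to 0.17. Over the same change, the false-positive rate climbs from 0.00 to 0.50.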

Summary: Automatic Detection
- False negatives put users at risk
- False negatives are hard to eliminate
- Making automatic detection more aggressive increases the rate of false positives
  - This appears to be a fundamental trade-off
- Let’s look at new approaches

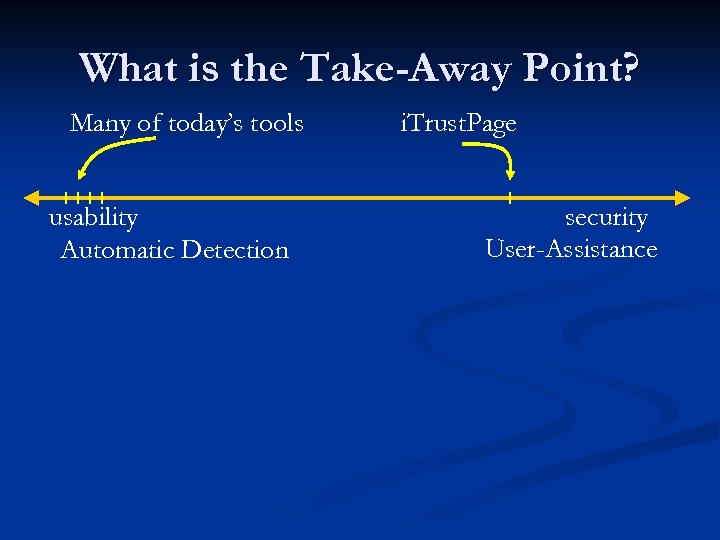

New Approach: User-Assistance
- Involve the user in the decision-making process
  1. Combine with conservative automatic detection
  2. Use detection that is hard for computers but easy for people
- Benefits: false positives are unlikely and more tolerable

Outline
- Motivation
- Design of iTrustPage
- Evaluation of iTrustPage
- Summary of Part 1

Two Observations about Phishing
1. Users intend to visit a legitimate page, but they are misdirected to an illegitimate page
2. If two pages look the same, one is likely phishing the other [Florêncio & Herley, HotSec ’06]
- Idea: use these observations to detect phishing

Involving Users
- Determine “intent”
  - Ask the user to describe the page as if entering search terms
- Determine whether pages “look alike”
  - Ask the user to detect visual similarity between two pages
- Both tasks are hard for computers but easy for people

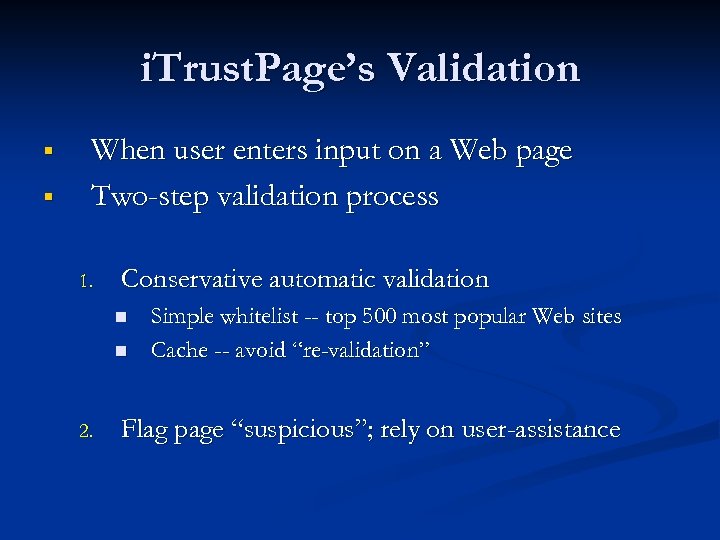

iTrustPage’s Validation
- Triggered when the user enters input on a Web page
- Two-step validation process:
  1. Conservative automatic validation
     - Simple whitelist: top 500 most popular Web sites
     - Cache: avoid “re-validation”
  2. Flag the page as “suspicious”; rely on user-assistance
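
The two-step process can be sketched as follows, assuming that sites are compared by host name with a leading `www.` stripped. The class and method names are hypothetical, not iTrustPage's actual code. So is the three-entry whitelist, which stands in for the top 500 sites.

```python
from urllib.parse import urlparse

def site_of(url: str) -> str:
    """Host name with a leading 'www.' removed (a simplification for this sketch)."""
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host

class Validator:
    def __init__(self, whitelist):
        self.whitelist = {s.lower() for s in whitelist}  # e.g., most popular sites
        self.cache = set()  # sites the user has already validated

    def check(self, url: str) -> str:
        """Step 1: conservative automatic validation; otherwise flag for step 2."""
        site = site_of(url)
        if site in self.whitelist or site in self.cache:
            return "validated"
        return "suspicious"  # step 2: rely on user-assistance

    def remember(self, url: str) -> None:
        """Cache a site after the user validates it, so it is not re-validated."""
        self.cache.add(site_of(url))

# Hypothetical three-entry whitelist standing in for the top 500 sites.
v = Validator({"google.com", "wikipedia.org", "amazon.com"})
print(v.check("https://www.google.com/accounts"))  # validated
print(v.check("http://bank.example/login"))        # suspicious
v.remember("http://bank.example/login")
print(v.check("http://bank.example/transfer"))     # validated
```

Matching on exact sites keeps the automatic step conservative. Anything not already known falls through to the user.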

iTrustPage: Validating Site

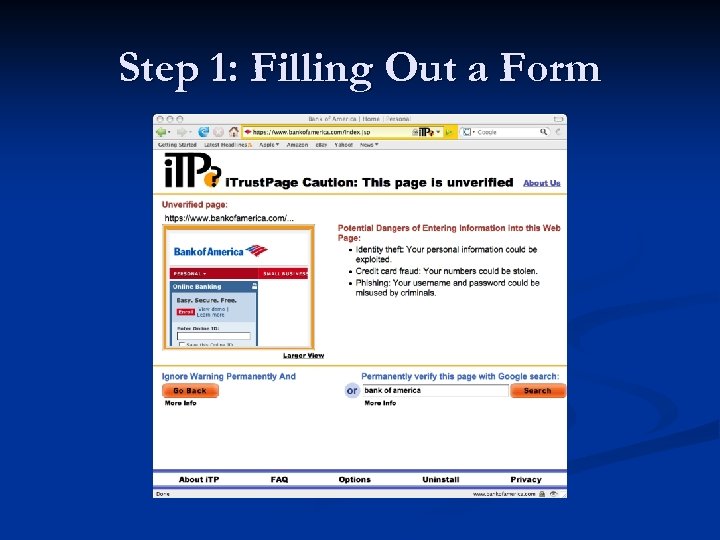

Step 1: Filling Out a Form

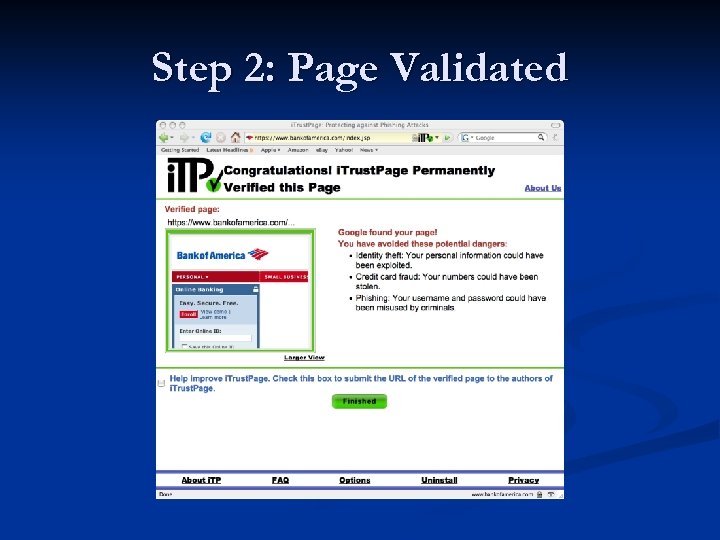

Step 2: Page Validated

iTrustPage: Avoid Phishing Site

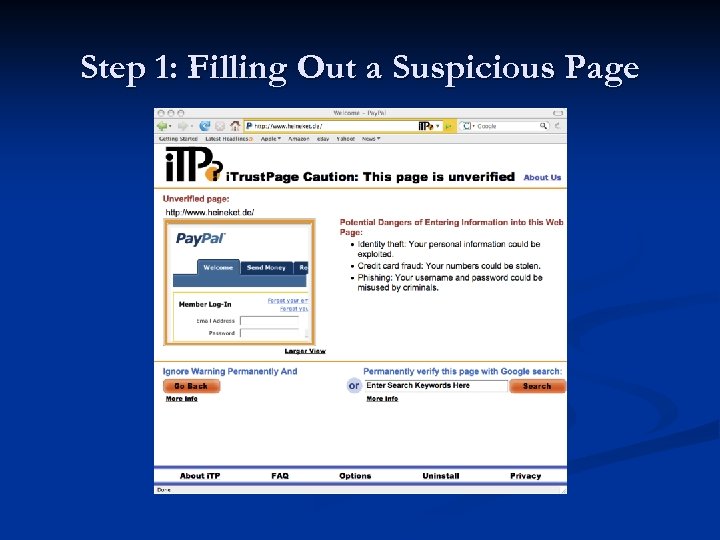

Step 1: Filling Out a Suspicious Page

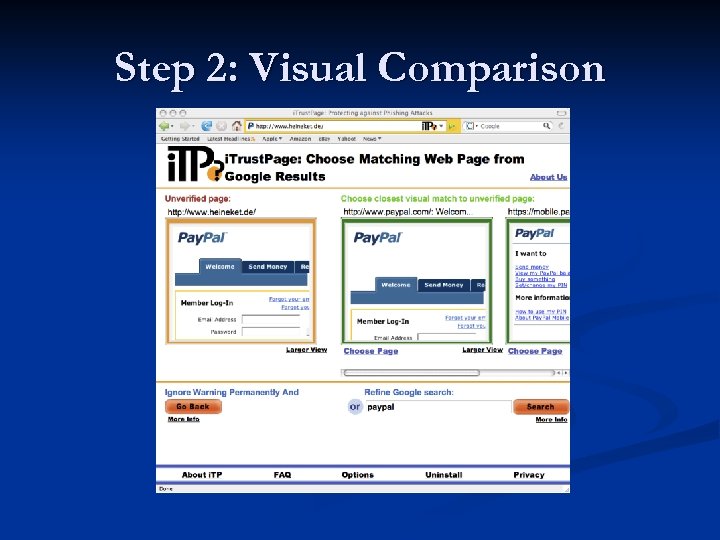

Step 2: Visual Comparison

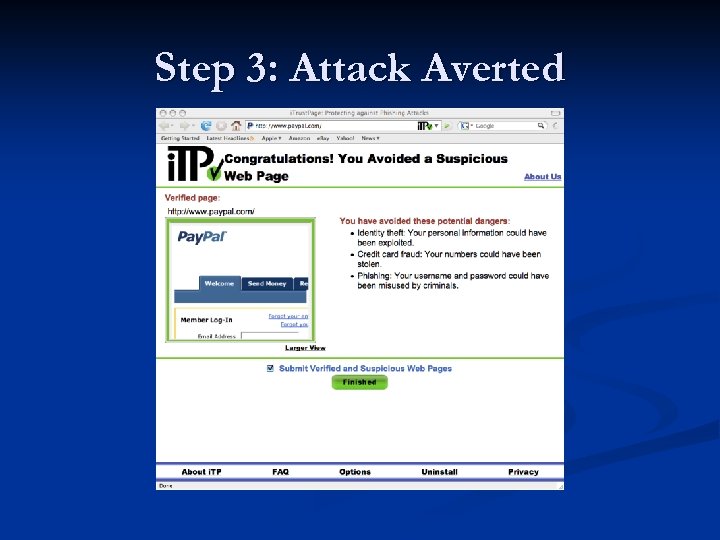

Step 3: Attack Averted

Two Issues: Revise & Bypass
- What if users can’t find the page on Google?
  - Visiting an un-indexed page
  - Wrong/ambiguous search keywords
- iTrustPage supports two options:
  - Revise search terms
  - Bypass the validation process
    - Similar to false negatives in automatic tools
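
The user-assisted step, including the revise and bypass options, can be modeled as a small decision function. This is a hypothetical sketch, not iTrustPage's implementation. It assumes each search round yields either the result the user judged visually similar or `None` (meaning the user revised the terms). It also treats running out of rounds as the user bypassing validation.

```python
from enum import Enum
from typing import List, Optional
from urllib.parse import urlparse

class Outcome(Enum):
    VALIDATED = "validated"
    PHISHING_WARNING = "phishing warning"
    BYPASSED = "bypassed"

def site_of(url: str) -> str:
    """Host name with a leading 'www.' removed (a simplification for this sketch)."""
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host

def user_assisted_check(current_url: str, picks: List[Optional[str]]) -> Outcome:
    """Resolve a suspicious page from the user's choices.

    picks[i] is the search result the user judged to look like the current
    page after search i, or None if nothing matched and they revised the terms.
    """
    for pick in picks:
        if pick is None:
            continue  # revise search terms and search again
        if site_of(pick) == site_of(current_url):
            return Outcome.VALIDATED  # same site: the page is what the user intended
        # A look-alike page on a different site: one is likely phishing the other.
        return Outcome.PHISHING_WARNING
    return Outcome.BYPASSED  # the user gave up; acts like a false negative

print(user_assisted_check("https://bank.example/login", ["https://www.bank.example/"]))
# Outcome.VALIDATED
print(user_assisted_check("http://paypa1.example/login", [None, "https://www.paypal.com/"]))
# Outcome.PHISHING_WARNING
```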

Methodology
- Instrumented code sends anonymized logs:
  - Info about iTrustPage usage
- High-level stats: June 27th, 2007 to August 9th, 2007
  - 5,184 unique installations
  - 2,050 users with 2+ weeks of activity

Evaluation Questions
- How disruptive is iTrustPage?
- Are users willing to help iTrustPage’s validation?
- Did iTrustPage prevent any phishing attacks?
- How many searches until validation?
- How effective are the whitelist and cache?
- How often do users visit pages accepting input?

How disruptive is iTrustPage?

iTrustPage is not disruptive
- Users are interrupted on less than 2% of pages
- After the first day of use, 50+% of users are never interrupted

Are users willing to help iTrustPage’s validation?

Many Users are Willing to Participate
- Half the users are willing to assist the tool in validation

Did iTrustPage prevent any phishing attacks?

An Upper Bound
- Anonymization of logs prevents us from measuring iTrustPage’s effectiveness
- 291 visually similar pages chosen instead
  - 1/3 occurred after two weeks of use

Summary of Evaluation
- Not disruptive; the disruption rate decreases over time
- Half the users are willing to participate in validation
- Pages with input are very common on the Internet
- iTrustPage is easy to use

Summary of Part 1
- An alternative approach to automation:
  - Have the user assist the tool to provide better protection
- Our evaluation has shown our tool’s benefits while avoiding the pitfalls of automated tools
- iTrustPage protects users who always participate in page validation

What is the Take-Away Point?

What is the Take-Away Point?

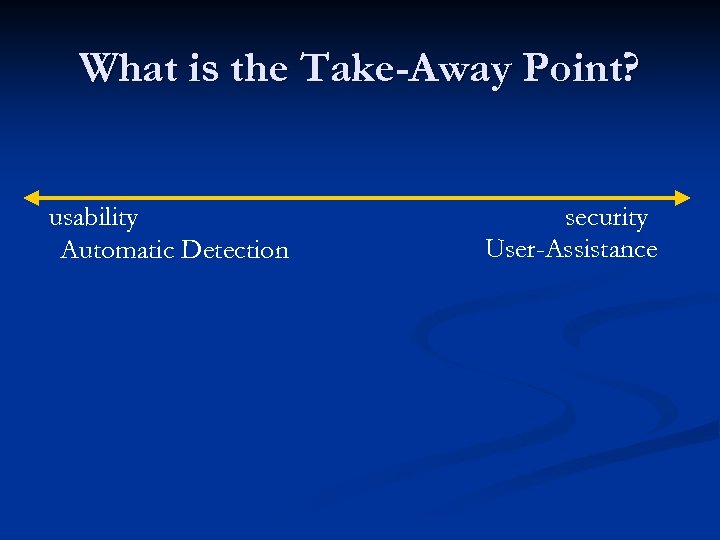

What is the Take-Away Point? usability Automatic Detection security User-Assistance

What is the Take-Away Point? usability Automatic Detection security User-Assistance

What is the Take-Away Point? Many of today’s tools usability Automatic Detection security User-Assistance

What is the Take-Away Point? Many of today’s tools usability Automatic Detection security User-Assistance

What is the Take-Away Point? Many of today’s tools usability Automatic Detection i. Trust. Page security User-Assistance

What is the Take-Away Point? Many of today’s tools usability Automatic Detection i. Trust. Page security User-Assistance

Part 2
Bunker: A System for Gathering Anonymized Traces

Motivation n Two ways to anonymize network traces: n n n Today’s traces require deep packet inspection n n Offline: anonymize trace after raw data is collected Online: anonymize while it is collected Privacy risks make offline anonymization unsuitable Phishing involves sophisticated analysis n Performance needs makes online anon. unsuitable

Motivation n Two ways to anonymize network traces: n n n Today’s traces require deep packet inspection n n Offline: anonymize trace after raw data is collected Online: anonymize while it is collected Privacy risks make offline anonymization unsuitable Phishing involves sophisticated analysis n Performance needs makes online anon. unsuitable

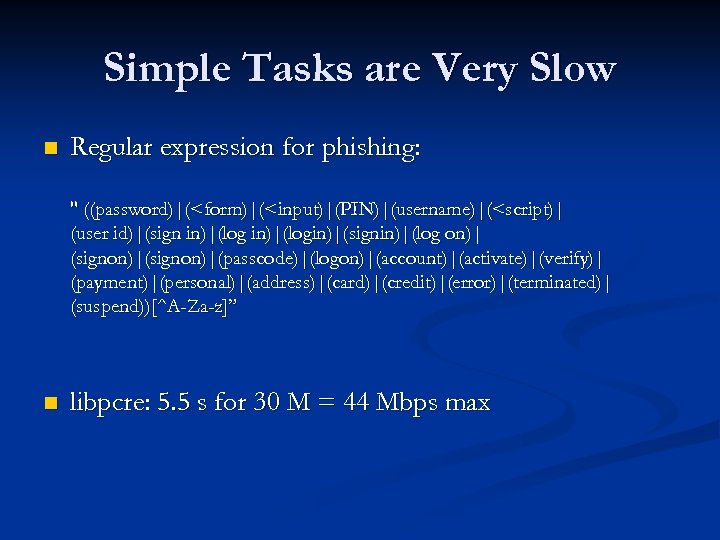

Simple Tasks are Very Slow n Regular expression for phishing: " ((password)|(

Simple Tasks are Very Slow n Regular expression for phishing: " ((password)|(

Motivation n Two ways to anonymize network traces: n n n Today’s traces require deep packet inspection n n Offline: anonymize trace after raw data is collected Online: anonymize while it is collected Privacy risks make offline anonymization unsuitable Phishing involves sophisticated analysis n Performance needs makes online anon. unsuitable

Motivation n Two ways to anonymize network traces: n n n Today’s traces require deep packet inspection n n Offline: anonymize trace after raw data is collected Online: anonymize while it is collected Privacy risks make offline anonymization unsuitable Phishing involves sophisticated analysis n Performance needs makes online anon. unsuitable

Motivation n Two ways to anonymize network traces: n n n Today’s traces require deep packet inspection n n Privacy risks make offline anonymization unsuitable Phishing involves sophisticated analysis n n Offline: anonymize trace after raw data is collected Online: anonymize while it is collected Performance needs makes online anon. unsuitable Need new tool to combine best of both worlds

Motivation n Two ways to anonymize network traces: n n n Today’s traces require deep packet inspection n n Privacy risks make offline anonymization unsuitable Phishing involves sophisticated analysis n n Offline: anonymize trace after raw data is collected Online: anonymize while it is collected Performance needs makes online anon. unsuitable Need new tool to combine best of both worlds

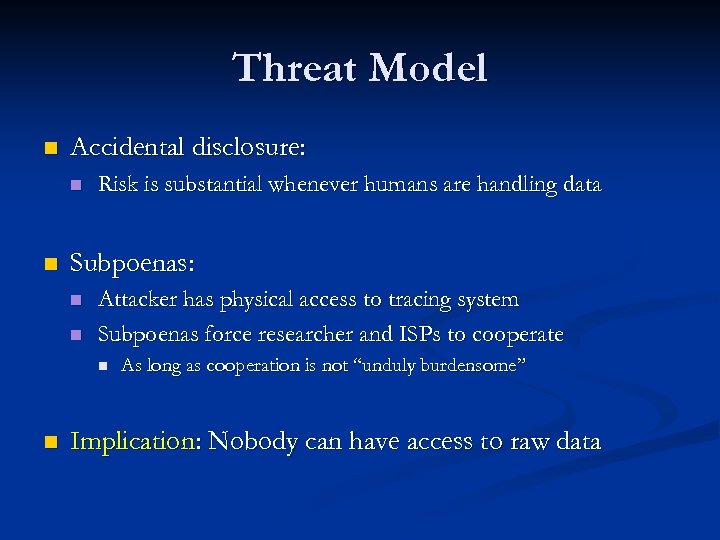

Threat Model
- Accidental disclosure:
  - Risk is substantial whenever humans are handling data
- Subpoenas:
  - Attacker has physical access to the tracing system
  - Subpoenas force researchers and ISPs to cooperate
    - As long as cooperation is not “unduly burdensome”
- Implication: nobody can have access to raw data

Is Developing Bunker Legal?

It Depends on Intent of Use
- Developing Bunker is like developing encryption
  - Must consider the purpose and uses of Bunker
- Developing Bunker for user privacy is legal
- Misusing Bunker to bypass the law is illegal

Our Solution: Bunker
- Combines the best of both worlds
  - Same privacy benefits as online anonymization
  - Same engineering benefits as offline anonymization
- Pre-load analysis and anonymization code
  - Lock it and throw away the key (tamper-resistance)

Outline
- Motivation
- Design of Bunker
- Evaluation of Bunker
- Summary of Part 2

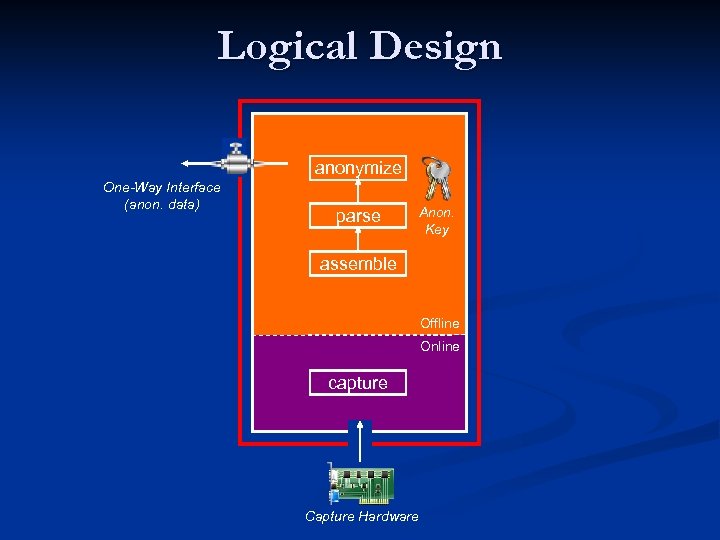

Logical Design
[Diagram: Capture Hardware → capture (online) → assemble → parse → anonymize (with the Anon. Key) → One-Way Interface (anon. data); assemble, parse, and anonymize run offline]
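
The offline half of this pipeline can be sketched in a few lines. The slide does not specify the record format or the anonymization function. Here a keyed HMAC-SHA256 (Python's `hmac` module) stands in for the anonymization step, and the `'src dst url'` record format is hypothetical. Unlike the prefix-preserving scheme cited in the related work [XFA+01], this keyed hash does not preserve subnet structure.

```python
import hashlib
import hmac
import secrets
from typing import Dict, Iterable, Iterator
from urllib.parse import urlparse

def anonymize_ip(ip: str, key: bytes) -> str:
    """Keyed hash: the same IP maps to the same token within a trace,
    but the mapping cannot be recomputed without the key."""
    return hmac.new(key, ip.encode(), hashlib.sha256).hexdigest()[:16]

def parse(record: str) -> Dict[str, str]:
    """Hypothetical record format: 'src_ip dst_ip url' for an assembled HTTP request."""
    src, dst, url = record.split()
    return {"src": src, "dst": dst, "url": url}

def anonymize(records: Iterable[str], key: bytes) -> Iterator[Dict[str, str]]:
    """parse -> anonymize; only these dictionaries would cross the one-way interface."""
    for record in records:
        fields = parse(record)
        yield {
            "src": anonymize_ip(fields["src"], key),
            "dst": anonymize_ip(fields["dst"], key),
            # Keep the host; drop path and query, which may carry personal data.
            "host": urlparse(fields["url"]).hostname or "",
        }

# The key is generated inside the box and never written out ("throw away the key").
anon_key = secrets.token_bytes(32)
trace = ["10.0.0.7 93.184.216.34 http://example.com/login?user=alice"]
for out in anonymize(trace, anon_key):
    print(out["host"])  # example.com
```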

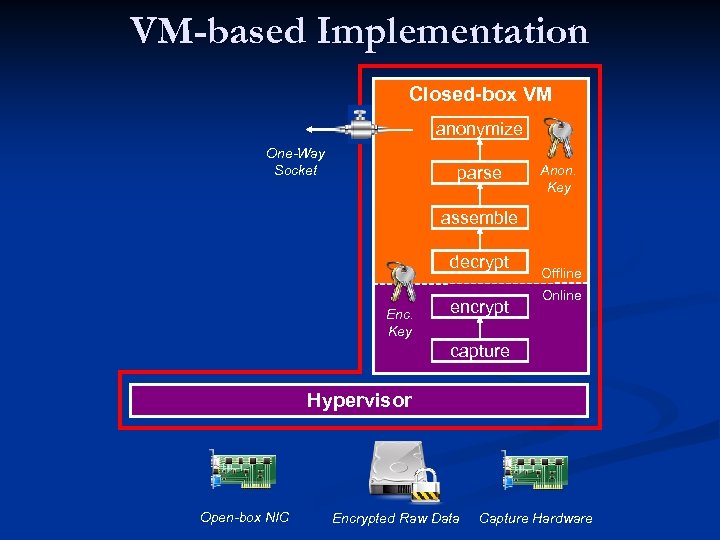

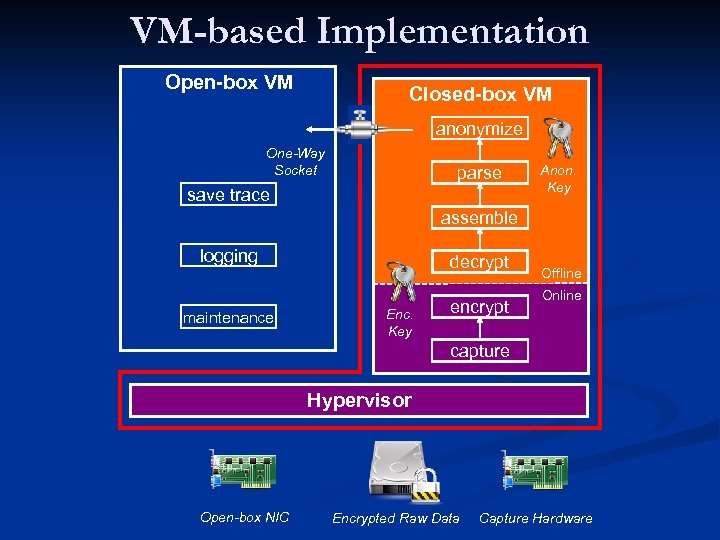

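VM-based Implementation
[Diagram: Capture Hardware and an open-box NIC feed the online capture stage, which encrypts raw data with the Enc. Key and stores it as Encrypted Raw Data. A hypervisor hosts a Closed-box VM (decrypt, assemble, parse, anonymize with the Anon. Key, output through a One-Way Socket) and an Open-box VM (save trace, logging, maintenance)]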
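
In Bunker, the hypervisor enforces the one-way property between VMs. The sketch below only illustrates the shape of a one-way channel within a single Python process, using `multiprocessing.Pipe(duplex=False)`. Its first connection can only receive and its second can only send. The function names and the `None` end-of-trace marker are assumptions of this sketch.

```python
from multiprocessing import Pipe

# Unidirectional pipe: receive_end can only receive, send_end can only send.
receive_end, send_end = Pipe(duplex=False)

def closed_box_emit(anonymized_records) -> None:
    """Closed-box side: push anonymized records out through the one-way channel."""
    for record in anonymized_records:
        send_end.send(record)
    send_end.send(None)  # end-of-trace marker (a convention of this sketch)

def open_box_save() -> list:
    """Open-box side: collect records to save the trace; it cannot send anything back."""
    saved = []
    while (record := receive_end.recv()) is not None:
        saved.append(record)
    return saved

closed_box_emit([{"src": "3f9a1c"}, {"src": "b72e40"}])  # small demo: fits in the pipe buffer
print(open_box_save())  # [{'src': '3f9a1c'}, {'src': 'b72e40'}]
try:
    receive_end.send("dump raw data")  # the open box tries to talk back
except OSError as err:
    print("rejected:", err)
```

Both ends live in one process here, so a very large trace would block once the pipe's buffer fills. A real deployment would run the sender and receiver concurrently.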

Software Engineering Benefits
- One order of magnitude between online and offline
- Development time: Bunker, 2 months; UW/Toronto, years

Summary of Part 2
- Bunker combines:
  - Privacy benefits of online anonymization
  - Software engineering benefits of offline anonymization
- Ideal tool for characterizing phishing

Our Current Use of Bunker
- Few “hard facts” are known about phishing:
  - Banks have no incentive to disclose info
  - Must focus on victims rather than on phishing attacks
- Preliminary study of Hotmail users:
  - How often do people click on links in their e-mails?
  - Do the same people fall victim to phishing?
  - How cautious are people who click on links in e-mails?

Our Contributions
- iTrustPage: a new approach to anti-phishing
- Bunker: a system for gathering anonymized traces

Acknowledgements
- Graduate students at Toronto
  - Andrew Miklas
  - Troy Ronda
- Researchers
  - Alec Wolman (MSR Redmond)
- Faculty
  - Angela Demke Brown (Toronto)

Questions?
- iTrustPage: https://addons.mozilla.org
- http://www.cs.toronto.edu/~stefan

Research Interests
- Building Systems Leveraging Social Networks
  - Exploiting social interactions in mobile systems
  - Rethinking access control for Web 2.0
- Making the Internet more secure
  - Characterizing spread of Bluetooth worms
  - iTrustPage + Bunker
- Characterizing network environments in the wild
  - Characterizing residential broadband networks
  - Evaluating emerging “last-meter” Internet apps

Circumventing iTrustPage
- “Google bomb”: increasing a phishing page’s rank
  - This is not enough to circumvent iTrustPage
- Breaking into a popular site that is already in iTrustPage’s whitelist or cache
- Compromising a user’s browser

Problems with Password Managers
- When a password field is present:
  - Ask the user to select from a list of passwords
  - Remember the password selection for re-visits
- Challenges:
  - Automatic detection of password fields can be “fooled”
  - Such tools increase the amount of confidential info
  - They don’t help users handle phishing
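
A minimal detector shows why automatic detection of password fields can be fooled. It is built on Python's `html.parser` and looks only for `<input type="password">`. Both pages are hypothetical. The evasive one collects the password in a plain text field, which this check misses. A page that builds its form with scripts would be another blind spot.

```python
from html.parser import HTMLParser

class PasswordFieldFinder(HTMLParser):
    """Counts <input type="password"> fields, the kind of check a password manager might use."""

    def __init__(self):
        super().__init__()
        self.password_fields = 0

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values are lowercased here.
        if tag == "input":
            attr_map = {name: (value or "").lower() for name, value in attrs}
            if attr_map.get("type") == "password":
                self.password_fields += 1

def has_password_field(html: str) -> bool:
    finder = PasswordFieldFinder()
    finder.feed(html)
    finder.close()
    return finder.password_fields > 0

honest = '<form><input type="text" name="user"><input type="password" name="pw"></form>'
# Hypothetical phishing form that asks for the password in a plain text box.
evasive = '<form><input type="text" name="user"><input type="text" name="pw"></form>'
print(has_password_field(honest), has_password_field(evasive))  # True False
```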

Downloads Released on Mozilla.org

Most Searches Don’t Need Revision
- Users can find their page the majority of the time

Outcomes of Validation Process
- 1/3 of the time, users choose to bypass validation

Forms and Scripts are Prevalent
- Many Web pages have multiple forms

Whitelist’s Hit Rate
- Hit rate remains flat at 55%

Cache’s Hit Rate
- Hit rate reaches 65% after one week

Our Solution
- Combines the best of both worlds
  - Stronger privacy benefits than online anonymization
  - Same engineering benefits as offline anonymization
- Experimenter must commit to an anonymization process before the trace begins

Illustrating the Arms Race
- SpamAssassin is adapting to phishing attacks
- Attackers are also adapting to SpamAssassin

Offline Anonymization
- Trace anonymized after raw data is collected
  - Privacy risk until raw data is deleted
- Today’s traces require deep packet inspection
  - Headers are insufficient to understand phishing
  - Payload traces pose a serious privacy risk
- Risk to user privacy is too high
  - Two universities rejected offline anonymization

Online Anonymization
- Trace anonymized online
  - Raw data resides in RAM only
- Difficult to meet performance demands
  - Extraction and anonymization must be done at line speed
- Code is frequently buggy and difficult to maintain
  - Low-level languages (e.g., C) + “home-made” parsers
  - Small bugs cause large amounts of data loss
  - Introduces consistent bias against long-lived flows

Motivation
- Two ways to anonymize traces:
  - Offline: trace anonymized after raw data is collected
  - Online: trace anonymized while raw data is collected
- Deep packet inspection killed us with phishing
  - A game changer
- Motivation: try to get the best of both worlds
- Before I tell you about the design, let me elaborate on the security concerns

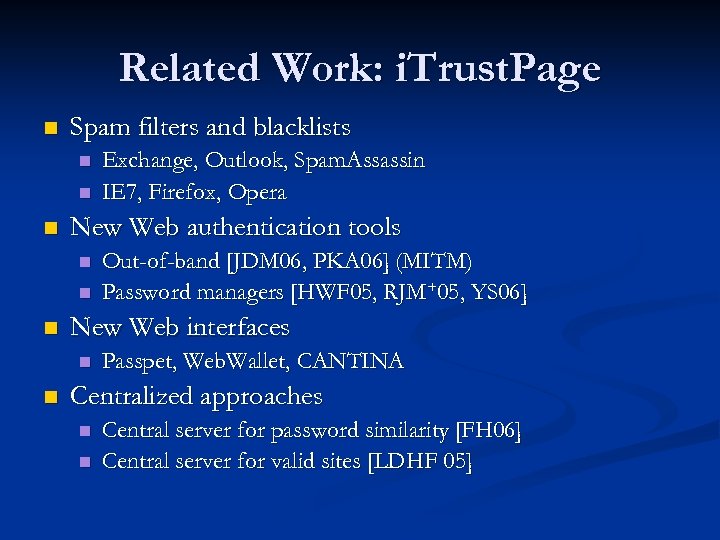

Related Work: iTrustPage
- Spam filters and blacklists
  - Exchange, Outlook, SpamAssassin
  - IE 7, Firefox, Opera
- New Web authentication tools
  - Out-of-band [JDM 06, PKA 06] (MITM)
  - Password managers [HWF 05, RJM+05, YS 06]
- New Web interfaces
  - Passpet, WebWallet, CANTINA
- Centralized approaches
  - Central server for password similarity [FH 06]
  - Central server for valid sites [LDHF 05]

Related Work: User Studies
- Web password habits [FH 07]
  - Huge password management problems
- People fall for simple attacks [DTH 06]
- Warnings are more effective than passive cues [WMG 06]
- Personalized attacks are very successful [JJJM 06]
- Security tools must be intuitive and simple to use [CO 06]

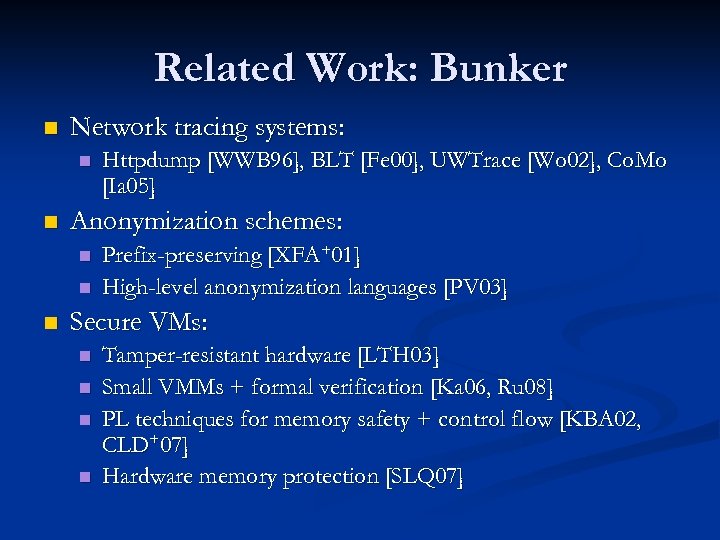

Related Work: Bunker
- Network tracing systems:
  - Httpdump [WWB 96], BLT [Fe 00], UWTrace [Wo 02], CoMo [Ia 05]
- Anonymization schemes:
  - Prefix-preserving [XFA+01]
  - High-level anonymization languages [PV 03]
- Secure VMs:
  - Tamper-resistant hardware [LTH 03]
  - Small VMMs + formal verification [Ka 06, Ru 08]
  - PL techniques for memory safety + control flow [KBA 02, CLD+07]
  - Hardware memory protection [SLQ 07]