- Number of slides: 37

Towards energy efficient HPC: HP Apollo 8000 at Cyfronet, Part I

Patryk Lasoń, Marek Magryś

ACC Cyfronet AGH-UST

- established in 1973
- part of AGH University of Science and Technology in Krakow, PL
- provides free computing resources for scientific institutions
- centre of competence in HPC and Grid Computing
- IT service management expertise (ITIL, ISO 20k)
- member of PIONIER
- operator of Krakow MAN
- home for Zeus

International projects

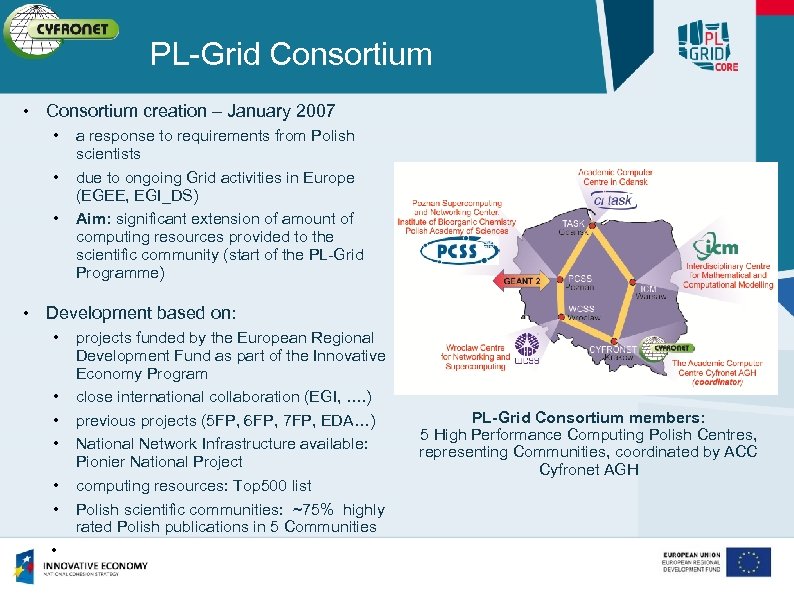

PL-Grid Consortium

- Consortium created in January 2007
  - a response to requirements from Polish scientists
  - due to ongoing Grid activities in Europe (EGEE, EGI_DS)
- Aim: significant extension of the amount of computing resources provided to the scientific community (start of the PL-Grid Programme)
- Development based on:
  - projects funded by the European Regional Development Fund as part of the Innovative Economy Program
  - close international collaboration (EGI, …)
  - previous projects (5 FP, 6 FP, 7 FP, EDA…)
- National Network Infrastructure available: Pionier National Project
- Computing resources: Top500 list
- Polish scientific communities: ~75% of highly rated Polish publications are in 5 Communities
- PL-Grid Consortium members: 5 Polish High Performance Computing centres, representing the Communities, coordinated by ACC Cyfronet AGH

PL-Grid infrastructure

- Polish national IT infrastructure supporting e-Science
  - based upon resources of the most powerful academic resource centres
  - compatible and interoperable with the European Grid
  - offering grid and cloud computing paradigms
  - coordinated by Cyfronet
- Benefits for users
  - one infrastructure instead of 5 separate compute centres
  - unified access to software, compute and storage resources
  - non-trivial quality of service
- Challenges
  - unified monitoring, accounting, security
  - create an environment of cooperation rather than competition
- Federation – the key to success

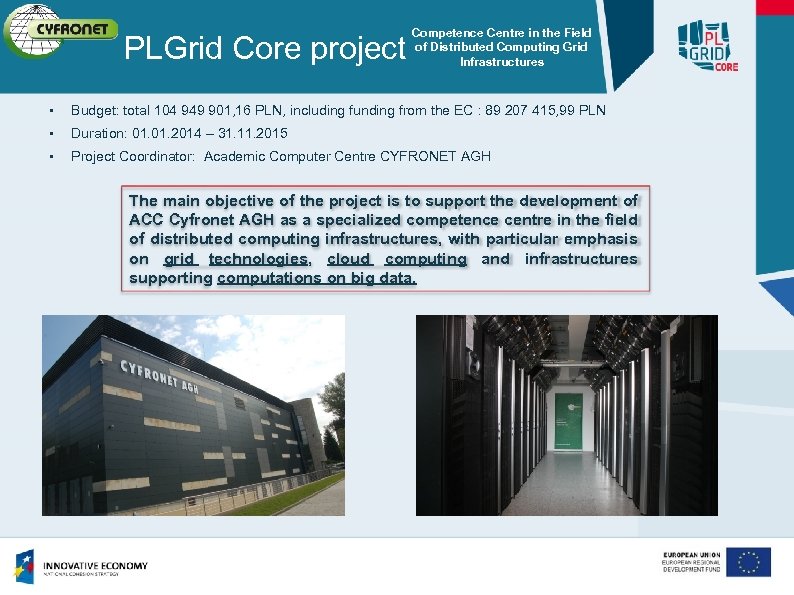

PLGrid Core project: Competence Centre in the Field of Distributed Computing Grid Infrastructures

- Budget: PLN 104,949,901.16 in total, including PLN 89,207,415.99 of EC funding
- Duration: January 2014 to November 2015
- Project Coordinator: Academic Computer Centre CYFRONET AGH

The main objective of the project is to support the development of ACC Cyfronet AGH as a specialized competence centre in the field of distributed computing infrastructures, with particular emphasis on grid technologies, cloud computing and infrastructures supporting computations on big data.

PLGrid Core project – services

- Basic infrastructure services
  - Uniform access to distributed data
  - PaaS Cloud for scientists
  - Applications maintenance environment of MapReduce type
- End-user services
  - Technologies and environments implementing the Open Science paradigm
  - Computing environment for interactive processing of scientific data
  - Platform for development and execution of large-scale applications organized in a workflow
  - Automatic selection of scientific literature
  - Environment supporting data farming mass computations

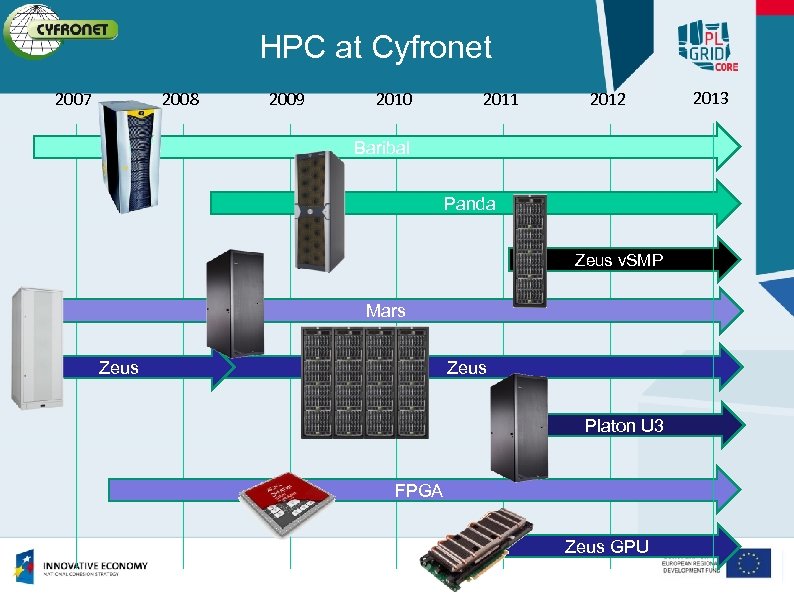

HPC at Cyfronet [timeline, 2007 to 2013]: Baribal, Panda, Zeus vSMP, Mars, Zeus, Platon U3, FPGA, Zeus GPU

ZEUS

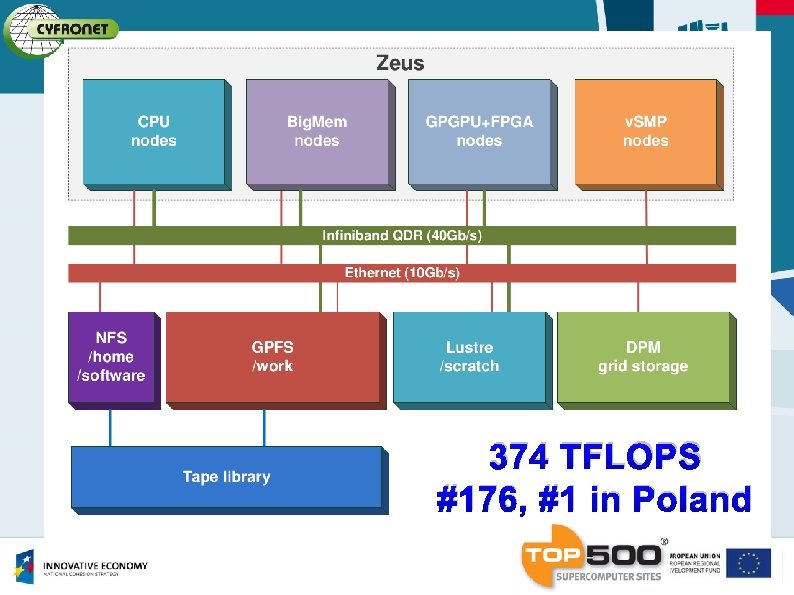

374 TFLOPS, #176 on the Top500 list, #1 in Poland

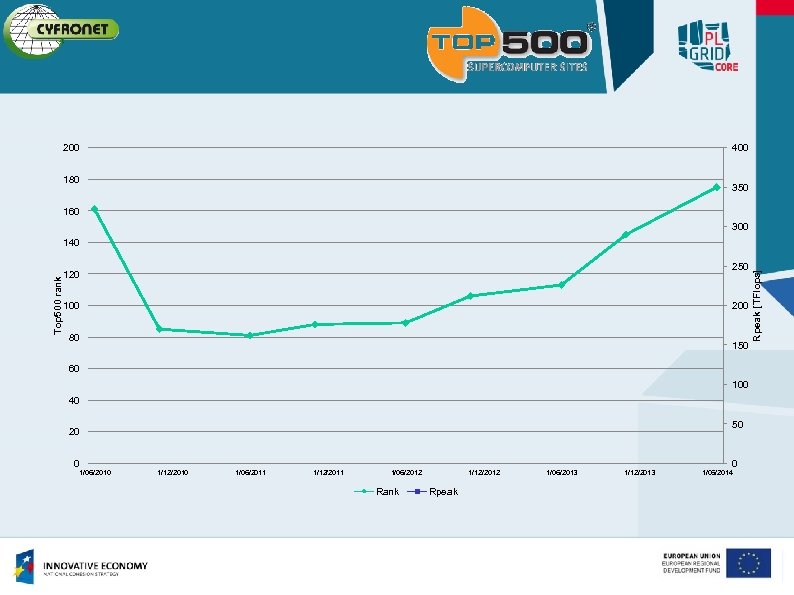

[Chart: Zeus Rpeak (TFlops) and Top500 rank over time, June 2010 to June 2014]

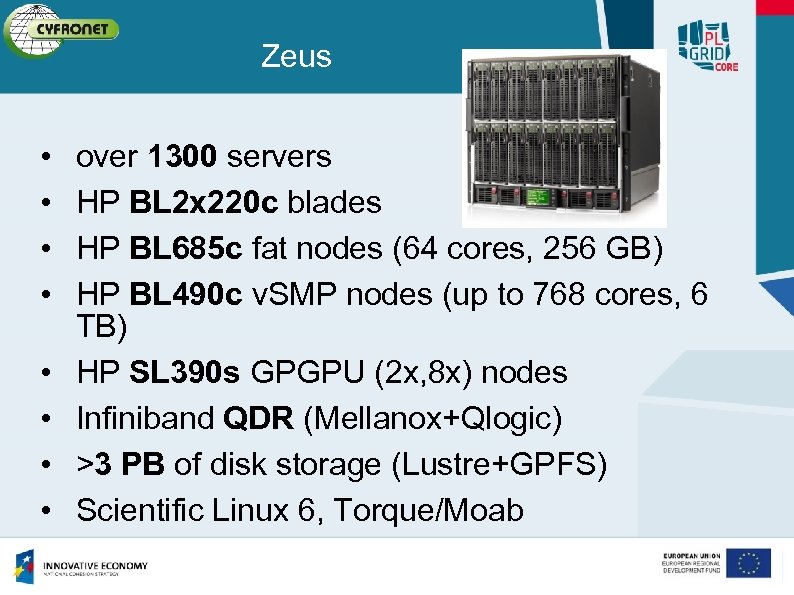

Zeus

- over 1300 servers
  - HP BL2x220c blades
  - HP BL685c fat nodes (64 cores, 256 GB)
  - HP BL490c vSMP nodes (up to 768 cores, 6 TB)
  - HP SL390s GPGPU (2x, 8x) nodes
- InfiniBand QDR (Mellanox+QLogic)
- >3 PB of disk storage (Lustre+GPFS)
- Scientific Linux 6, Torque/Moab

Zeus - statistics • 2400 registered users • >2000 jobs running simultaneously • >22000 jobs per day • 96 000 computing hours in 2013 • jobs lasting from minutes to weeks • jobs from 1 core to 4000 cores

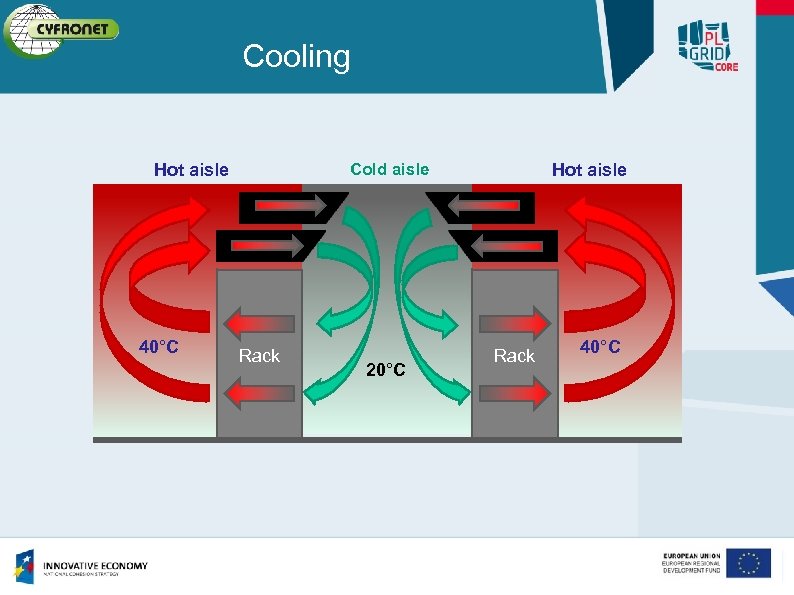

Cooling [diagram: racks take in 20°C air from the cold aisle and exhaust 40°C air into the hot aisles]

Future system

Why upgrade? • Jobs growing • Users hate queuing • New users, new requirements • Technology moving forward • Power bill staying the same

New building

Requirements

- Petascale system
- Lowest TCO
- Energy efficient
- Dense
- Good MTBF
- Hardware:
  - core count
  - memory size
  - network topology
  - storage

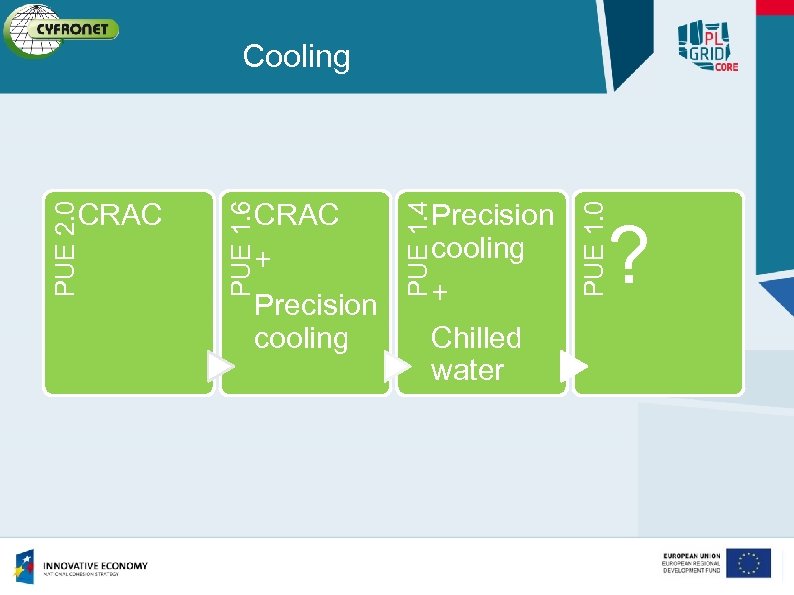

Cooling

- CRAC: PUE 2.0
- CRAC + precision cooling: PUE 1.6
- Precision cooling + chilled water: PUE 1.4
- ?: PUE 1.0
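PUE (Power Usage Effectiveness) is total facility power divided by IT equipment power, so a PUE of 1.0 would mean no power at all is spent on cooling or distribution. The short sketch below turns the slide's PUE values into the overhead they imply; the 500 kW IT load is a hypothetical figure chosen only for illustration.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT power must be positive")
    return total_facility_kw / it_kw


def overhead_kw(pue_value: float, it_kw: float) -> float:
    """Non-IT power (cooling, distribution losses, ...) implied by a PUE value."""
    return (pue_value - 1.0) * it_kw


# Hypothetical IT load, for illustration only (not from the slides).
IT_LOAD_KW = 500.0

# PUE values from the slide.
scenarios = {
    "CRAC": 2.0,
    "CRAC + precision cooling": 1.6,
    "Precision cooling + chilled water": 1.4,
}

for name, value in scenarios.items():
    print(f"{name}: PUE {value}, overhead {overhead_kw(value, IT_LOAD_KW):.0f} kW")
```

For the same IT load, going from CRAC (PUE 2.0) to precision cooling with chilled water (PUE 1.4) cuts the non-IT overhead from 500 kW to 200 kW in this example.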

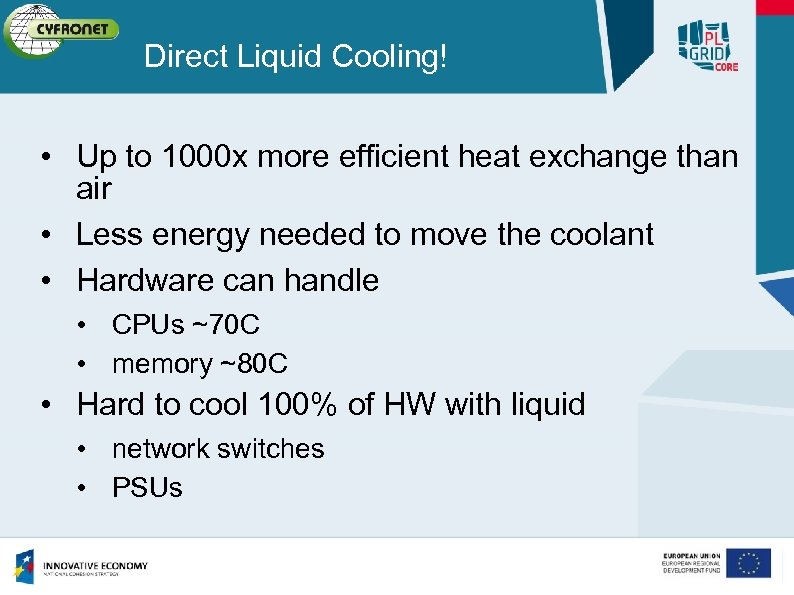

Direct Liquid Cooling!

- Up to 1000x more efficient heat exchange than air
- Less energy needed to move the coolant
- Hardware can handle high temperatures
  - CPUs ~70°C
  - memory ~80°C
- Hard to cool 100% of HW with liquid
  - network switches
  - PSUs
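One way to see why less energy is needed to move a liquid coolant: to carry away heat P with a coolant temperature rise ΔT, the required volumetric flow is V = P / (ρ · c_p · ΔT). The sketch below compares air and water using approximate room-temperature textbook properties; the 1 kW load and 10 K rise are hypothetical. It illustrates volumetric heat capacity only and is not a derivation of the slide's "up to 1000x" heat-exchange figure.

```python
# Approximate properties near room temperature (textbook values, assumed).
AIR_DENSITY = 1.2        # kg/m^3
AIR_CP = 1005.0          # J/(kg*K)
WATER_DENSITY = 997.0    # kg/m^3
WATER_CP = 4180.0        # J/(kg*K)


def volumetric_flow_m3_per_s(heat_w, density, cp, delta_t_k):
    """Flow needed to carry heat_w watts with a coolant temperature rise of delta_t_k."""
    return heat_w / (density * cp * delta_t_k)


HEAT_W = 1000.0   # hypothetical 1 kW heat load
DELTA_T_K = 10.0  # hypothetical 10 K coolant temperature rise

air = volumetric_flow_m3_per_s(HEAT_W, AIR_DENSITY, AIR_CP, DELTA_T_K)
water = volumetric_flow_m3_per_s(HEAT_W, WATER_DENSITY, WATER_CP, DELTA_T_K)

print(f"air:   {air * 1000:.1f} L/s")
print(f"water: {water * 1000:.4f} L/s")
print(f"air needs ~{air / water:.0f}x the volume flow of water")
```

Under these assumptions water needs a few thousand times less volume flow than air for the same heat load, which is why pumping water takes far less energy than blowing air.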

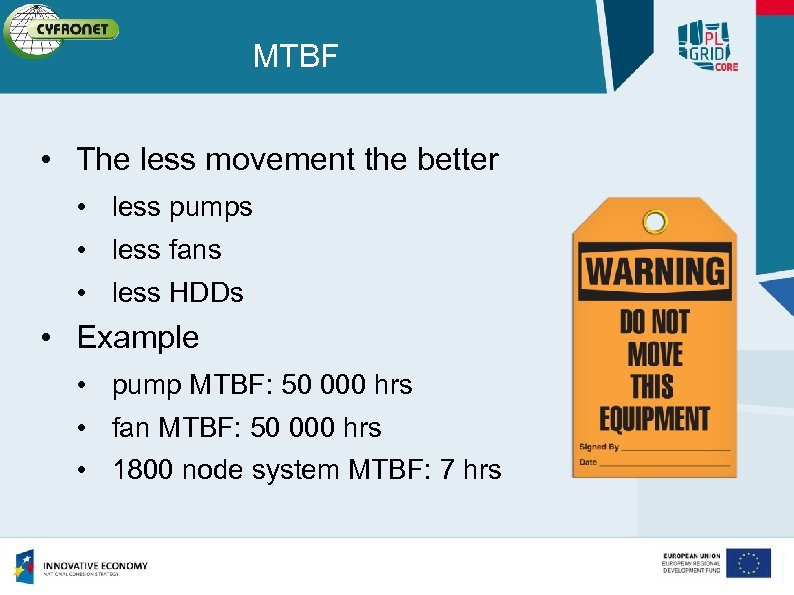

MTBF

- The less movement the better
  - fewer pumps
  - fewer fans
  - fewer HDDs
- Example
  - pump MTBF: 50,000 hrs
  - fan MTBF: 50,000 hrs
  - 1800-node system MTBF: 7 hrs
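If components fail independently with constant failure rates, their rates add, so the system MTBF is 1 / Σ(n_i / MTBF_i). With 50,000 h pumps and fans, an 1800-node system reaches about 7 h only if each node carries roughly four such moving parts. The 2 pumps + 2 fans per node used below is a hypothetical split chosen to reproduce the slide's figure, not the actual node design.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    mtbf_hours: float
    count_per_node: int


def system_mtbf_hours(components, nodes):
    """Series-system MTBF, assuming independent components with constant failure rates."""
    total_rate = sum(nodes * c.count_per_node / c.mtbf_hours for c in components)
    return 1.0 / total_rate


# Hypothetical per-node counts; only the 50 000 h MTBF values come from the slide.
parts = [
    Component("pump", 50_000.0, 2),
    Component("fan", 50_000.0, 2),
]

print(f"{system_mtbf_hours(parts, nodes=1800):.1f} h")
```

In this model, halving the number of moving parts per node halves the total failure rate and therefore doubles the system MTBF, which is the point of "the less movement the better".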

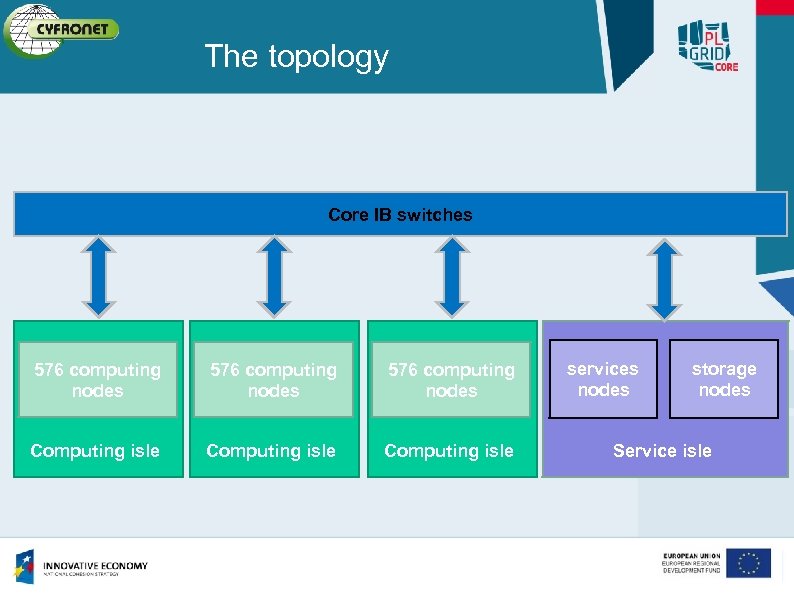

The topology

- Core IB switches
- Computing island: 576 computing nodes
- Service island: service nodes, storage nodes

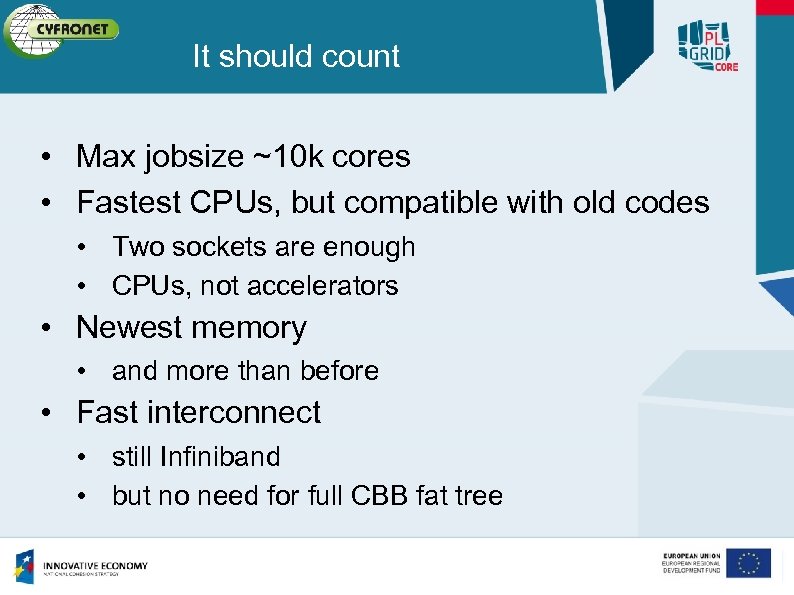

It should count

- Max job size ~10k cores
- Fastest CPUs, but compatible with old codes
- Two sockets are enough
- CPUs, not accelerators
- Newest memory
  - and more than before
- Fast interconnect
  - still InfiniBand
  - but no need for a full CBB (constant bisection bandwidth) fat tree
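Dropping the full CBB requirement means leaf switches may have fewer uplinks than node-facing ports. A minimal sketch of that blocking (oversubscription) ratio and of how many leaf-to-core links it saves follows; the 36-port leaf switch and its port splits are hypothetical and do not describe the actual Prometheus fabric.

```python
import math
from fractions import Fraction


def blocking_ratio(down_ports: int, up_ports: int) -> Fraction:
    """Oversubscription of a leaf switch: node-facing ports per uplink port.

    A ratio of 1 corresponds to full constant bisection bandwidth (CBB);
    anything larger means the tree is blocking.
    """
    if down_ports <= 0 or up_ports <= 0:
        raise ValueError("port counts must be positive")
    return Fraction(down_ports, up_ports)


def core_uplinks(nodes: int, ratio: Fraction) -> int:
    """Leaf-to-core links needed to attach `nodes` nodes at the given ratio."""
    return math.ceil(nodes / ratio)


# Hypothetical 36-port leaf switch configurations (not the real layout).
full_cbb = blocking_ratio(down_ports=18, up_ports=18)
two_to_one = blocking_ratio(down_ports=24, up_ports=12)

# 576 computing nodes, as in the topology diagram.
for label, r in [("full CBB", full_cbb), ("2:1 blocking", two_to_one)]:
    print(f"{label}: ratio {r}:1, {core_uplinks(576, r)} core uplinks")
```

A 2:1 tree halves the leaf-to-core cabling and core switch ports, at the cost of bandwidth for traffic that has to leave a leaf switch.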

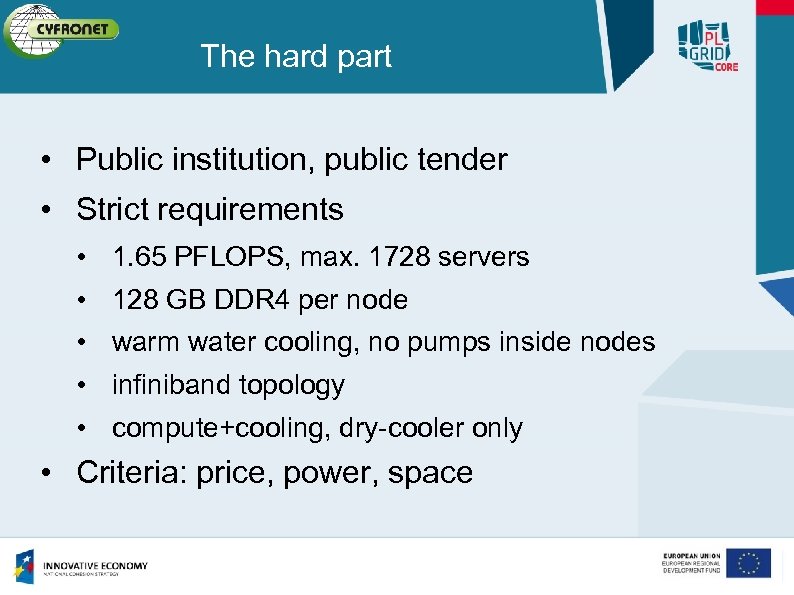

The hard part

- Public institution, public tender
- Strict requirements
  - 1.65 PFLOPS, max. 1728 servers
  - 128 GB DDR4 per node
  - warm water cooling, no pumps inside nodes
  - InfiniBand topology
  - compute + cooling, dry-cooler only
- Criteria: price, power, space

And the winner is… • HP Apollo 8000 • Most energy efficient • The only solution with 100% warm water cooling • Least floor space needed • Lowest TCO

Even more Apollo

- Also focuses on the ‘1’ in PUE!
  - Power distribution
  - Fewer fans
- Detailed monitoring
  - ‘energy to solution’
- Safer maintenance
- Fewer cables
- Prefabricated piping
- Simplified management
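‘Energy to solution’ is the energy a job consumes from start to finish, i.e. the time integral of its power draw. The sketch below integrates sampled power readings with the trapezoidal rule; the sample values and 10-second interval are hypothetical, and reading real data from the Apollo monitoring is not modelled here.

```python
def energy_to_solution_kwh(timestamps_s, power_w):
    """Integrate sampled power (W) over time (s) with the trapezoidal rule; return kWh."""
    if len(timestamps_s) != len(power_w) or len(power_w) < 2:
        raise ValueError("need at least two matching samples")
    joules = 0.0
    for i in range(1, len(power_w)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        joules += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return joules / 3.6e6  # 1 kWh = 3.6e6 J


# Hypothetical power samples for one node, taken every 10 s.
t = [0, 10, 20, 30]
p = [300.0, 350.0, 350.0, 300.0]

print(f"{energy_to_solution_kwh(t, p):.5f} kWh")
```

Summed over all nodes of a job, this metric lets two runs be compared by energy consumed rather than by runtime alone, which is what energy-aware scheduling would need.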

System configuration

- 1.65 PFLOPS (within the top 30 of the current Top500 list)
- 1728 nodes, Intel Haswell E5-2680 v3
- 41472 cores, 13824 per island
- 216 TB DDR4 RAM
- PUE ~1.05, 680 kW total power
- 15 racks, 12.99 m²
- System ready for non-disruptive upgrade
- Scientific Linux 6 or 7
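The headline numbers are mutually consistent, as the short recomputation below shows. It assumes the public E5-2680 v3 specifications (12 cores, 2.5 GHz base clock) and 16 double-precision FLOPs per cycle per Haswell core (two 256-bit FMA units); none of these CPU figures appear on the slides. Memory is counted in binary units (1 TB = 1024 GB), which is an assumption about how the 216 TB figure was derived.

```python
# Slide values.
NODES = 1728
CORES_PER_ISLAND = 13824
MEMORY_GB_PER_NODE = 128      # tender requirement: 128 GB DDR4 per node

# Assumed values (not on the slides).
SOCKETS_PER_NODE = 2          # dual-socket nodes
CORES_PER_SOCKET = 12         # Intel Xeon E5-2680 v3
BASE_CLOCK_HZ = 2.5e9         # E5-2680 v3 base clock
DP_FLOPS_PER_CYCLE = 16       # Haswell AVX2: 2 FMA units x 4 doubles x 2 ops

cores = NODES * SOCKETS_PER_NODE * CORES_PER_SOCKET
islands = cores // CORES_PER_ISLAND
nodes_per_island = NODES // islands
peak_pflops = cores * BASE_CLOCK_HZ * DP_FLOPS_PER_CYCLE / 1e15
memory_tb = NODES * MEMORY_GB_PER_NODE / 1024  # binary units: 1 TB = 1024 GB

print(f"cores: {cores}, islands: {islands}, nodes per island: {nodes_per_island}")
print(f"theoretical peak: {peak_pflops:.3f} PFLOPS")
print(f"memory: {memory_tb:.0f} TB")
```

This gives 41472 cores in 3 islands of 576 nodes, a theoretical peak of about 1.659 PFLOPS at the base clock (consistent with the 1.65 PFLOPS figure) and 216 TB of memory.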

Prometheus

- Created mankind
- Gave fire to the people
- Accelerated innovation
- Defeated Zeus

Deployment plan

- Contract signed on 20 October 2014
- Installation of the primary loop started on 12 November 2014
- First delivery (service island) expected on 24 November 2014
- Apollo piping should arrive before Christmas
- Main delivery in January
- Installation and acceptance in February
- Production work from Q2 2015

Future plans

- Benchmarking and Top500 submission
- Evaluation of Scientific Linux 7
- Moving users from the previous system
- Tuning of applications
- Energy-aware scheduling
- First experience presented at HP-CAST 24

prometheus@cyfronet.pl

More information

- www.cyfronet.krakow.pl/en
- www.plgrid.pl/en
